Power Optimization by Cooling Photovoltaic Plants as a Dynamic Self-adaptive Regulation Problem

Valerian Guivarch, Carole Bernon and Marie-Pierre Gleizes

IRIT, Université de Toulouse, Toulouse, France

Keywords:

Multi-agent System, Control, Photovoltaic energy, Self-adaptation.

Abstract:

This paper presents an approach to controlling the cooling devices of photovoltaic plants in order to optimize energy production using a limited reserve of harvested rainwater. This is a complex problem, given the dynamic environment and the interdependence of parameters such as weather data and the state of the photovoltaic panels. We propose a system composed of autonomous components that cooperate so that efficient control emerges.

1 COOLING PHOTOVOLTAIC PANELS

Solar energy is a very promising solution for energy production, since the sun provides a virtually unlimited source of energy. Many studies have been performed to improve the technology for converting solar energy into electrical energy (Lewis, 2016). A photovoltaic (PV) plant consists of a large number of photovoltaic panels connected in series, producing energy according to the power received from the sun, or irradiance. Studies (Akbarzadeh and Wadowski, 1996) (Skoplaki and Palyvos, 2009) showed that the ability of a PV panel to produce energy depends strongly on its temperature, with a voltage decreasing by one volt per half degree (Shan et al., 2014). So, when the perceived irradiance is very high, the photovoltaic panel heats up and converts it into energy less efficiently than it would at a lower temperature.
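To make the temperature effect concrete, the sketch below uses a common linear approximation of PV output, P = P_STC · (G / 1000) · (1 + γ (T − 25)), where P_STC is the rated power under standard test conditions. This model and the default coefficient γ = −0.4 %/°C (a typical order of magnitude for crystalline silicon) are assumptions for illustration, not values taken from the cited studies or from AmaSun.

```python
def pv_power(p_stc_w: float, irradiance_w_m2: float, cell_temp_c: float,
             gamma_per_c: float = -0.004) -> float:
    """Estimate PV output power with a linear temperature correction.

    Assumed model (illustrative only):
        P = P_STC * (G / 1000) * (1 + gamma * (T_cell - 25))
    p_stc_w:         rated power at standard test conditions (1000 W/m2, 25 C)
    irradiance_w_m2: plane-of-array irradiance G
    cell_temp_c:     cell temperature T_cell in degrees Celsius
    gamma_per_c:     power temperature coefficient (-0.004 = -0.4 %/C, assumed)
    """
    temperature_factor = 1.0 + gamma_per_c * (cell_temp_c - 25.0)
    return p_stc_w * (irradiance_w_m2 / 1000.0) * temperature_factor


# The same panel and irradiance, hot versus cooled by 30 degrees.
hot = pv_power(300.0, 900.0, 65.0)
cooled = pv_power(300.0, 900.0, 35.0)
print(f"hot: {hot:.1f} W, cooled: {cooled:.1f} W")  # hot: 226.8 W, cooled: 259.2 W
```

Under this assumed model, cooling the panel from 65 °C to 35 °C raises the estimate from about 226.8 W to 259.2 W at the same irradiance, which is the kind of gain that motivates spending water on cooling.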

In order to increase the efficiency of the panels and extend their lifetime, researchers converge toward cooling and cleaning solutions (Alami, 2014), (Chandrasekar and Senthilkumar, 2015), (Ebrahimi et al., 2015), (Bahaidarah et al., 2016), (Nižetić et al., 2016), (Sargunanathan et al., 2016). To be efficient, these solutions require an intelligent use of water reserves. With automatic regulation, striking the right balance becomes all the more important: water must be used to improve current energy production, but reserves must also be saved so that water is still available later. This balance depends on several interdependent data: the current water level and the current energy production, but also the current meteorological conditions, weather forecasts, statistics about past meteorological conditions, etc. Consequently, the regulation process for cleaning and cooling panels must answer several questions: How much of the water reserve should be used on the current day? How should it be distributed over the day? How does the weather forecast influence the estimated water reserve over the next days?

Given the non-linearity of this regulation problem, the imprecision of the forecasts, possible changes in the installation (addition or removal of sensors) and the degradation of the photovoltaic panels, making these choices is a complex problem. A system able to learn and change its own behaviour at runtime is therefore necessary.

This paper proposes such an intelligent strategy: AmaSun, a multi-agent system designed to optimize the energy production of a photovoltaic plant with a limited amount of water. The paper is structured as follows: Sections 2 and 3 present the context of this work, Section 4 describes the system designed to regulate the cooling of PV panels, Section 5 evaluates some aspects of this system, and Section 6 concludes with prospects.

2 LIMITATIONS OF STANDARD CONTROL PROCESSES

Controlling systems is a generic problem that can be expressed as finding which modifications must be applied to the inputs in order to obtain the desired effects on the outputs. The best-known types of control are performed by PID, adaptive or intelligent controllers.

Guivarch, V., Bernon, C. and Gleizes, M-P.

Power Optimization by Cooling Photovoltaic Plants as a Dynamic Self-adaptive Regulation Problem.

DOI: 10.5220/0006654502760281

In Proceedings of the 10th International Conference on Agents and Artificial Intelligence (ICAART 2018) - Volume 1, pages 276-281

ISBN: 978-989-758-275-2

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The widely used Proportional-Integral-Derivative (PID) controller computes three terms from the error between the current and the desired state of the controlled system (one proportional to the error, one to its integral over time and one to its derivative), and deduces the next action to apply from their weighted sum (Åström and Hägglund, 2001). PID controllers perform poorly on complex systems because they have difficulty handling several inputs and outputs and dealing with non-linearity.
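For reference, the textbook discrete form of a PID controller can be sketched as follows; the gains and sampling period are arbitrary illustrative values. It maps a single error signal to a single command, which illustrates why several coupled inputs and outputs require additional structure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook discrete PID controller (single input, single output)."""
    kp: float  # proportional gain
    ki: float  # integral gain
    kd: float  # derivative gain
    dt: float  # sampling period
    integral: float = 0.0
    prev_error: Optional[float] = None

    def step(self, setpoint: float, measurement: float) -> float:
        """Return the control action for one sampling period."""
        error = setpoint - measurement
        self.integral += error * self.dt  # rectangular integration
        if self.prev_error is None:
            derivative = 0.0  # no derivative term on the first sample
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


controller = PID(kp=2.0, ki=0.5, kd=0.1, dt=1.0)
print(controller.step(setpoint=10.0, measurement=8.0))  # 5.0
print(controller.step(setpoint=10.0, measurement=9.0))
```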

Model-based approaches such as Model Predictive Control (MPC) (Nikolaou, 2001) use a model able to forecast the behaviour of the system in order to find the optimal control scheme. These approaches handle several inputs but are limited by the mathematical models they rely on. Dual Control Theory uses two types of commands: the actual controls, which drive the system to the desired state, and probes, which observe the system's reactions and refine the controller's knowledge (Feldbaum, 1961). The concepts behind this approach are interesting, but instantiating it for a system such as a PV plant still requires substantial work.

Intelligent control groups together approaches that use Artificial Intelligence methods to enhance existing controllers, among them neural networks (Hamm et al., 2002), fuzzy logic (Lee, 1990), expert systems (Stengel and Ryan, 1991) and Bayesian controllers (Del Castillo, 2007). These methods can easily be combined, but they require a fine-grained description of the system to control, which is unsuitable for a photovoltaic plant because the plant evolves over time.

3 ADAPTIVE MULTI-AGENT SYSTEMS

Given the dynamics that the regulation must take into account (inaccurate weather forecasts, possible addition or removal of sensors, degradation of the PV panels), a system able to learn and change its own behaviour at runtime is required. Some heuristic learning algorithms, such as genetic algorithms, can take these constraints into account but require a large number of iterations to reach a relevant behaviour, so they do not suit our objective of fast learning. Moreover, because the environment evolves, learning must be dynamic, which rules out an offline learning process.

Multi-agent systems are an appropriate technology for dealing with the dynamic and complex nature of such a problem (Jennings and Bussmann, 2003), and since self-adaptation is key to solving it, we focused our study on Adaptive Multi-Agent Systems (AMAS) (Gleizes, 2011). The AMAS approach makes it possible to design a system that solves a complex problem bottom-up: the local functions of the agents composing the system are defined first (bearing in mind that each agent tries to reach its own objective), and the cooperative interactions between these agents then collectively produce an emergent global functionality.

According to the AMAS approach, each agent must maintain, from its local point of view, cooperative interactions with the agents it knows and with the environment of the system (Georgé et al., 2011). If an agent encounters a Non Cooperative Situation (NCS), it has to solve it to return to a cooperative state. The criticality measure, i.e. the distance between the current state of an agent and the state where its goal is reached, also helps an agent to remain in a cooperative state: an agent in an AMAS continuously acts to decrease both its own criticality and the criticality of its neighborhood. AMAS technology has, for instance, been used to solve real-time control problems such as heat engine control (Boes et al., 2013) or game parameter control (Pons and Bernon, 2013).
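As an illustration of criticality-driven cooperation, the sketch below lets an agent pick, among candidate actions, the one whose predicted outcome leaves the most critical agent of its neighborhood (itself included) least critical, breaking ties on the next most critical agent. The `predict` function, the action names and the agent names are hypothetical, and the sketch covers only criticality-based action selection, not the detection and resolution of Non Cooperative Situations.

```python
from typing import Callable, Iterable, Mapping, TypeVar

Action = TypeVar("Action")


def most_cooperative_action(
    actions: Iterable[Action],
    predict: Callable[[Action], Mapping[str, float]],
) -> Action:
    """Choose the action that best relieves the most critical agent.

    predict(action) is a hypothetical model returning the criticality
    expected for each agent of the neighborhood (the deciding agent
    included) if that action is taken. Outcomes are compared on their
    criticalities sorted from highest to lowest, so the worst case is
    minimised first and ties fall back on the next most critical agent.
    """
    return min(actions, key=lambda a: sorted(predict(a).values(), reverse=True))


predicted = {
    "spray": {"self": 1.0, "neighbour": 3.0},
    "wait": {"self": 2.0, "neighbour": 2.5},
}
print(most_cooperative_action(predicted, predicted.__getitem__))  # wait
```

Here spraying would satisfy the deciding agent but leave its neighbour at criticality 3.0, so the cooperative choice is to wait.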

Given the ability of AMAS to take environmental dynamics into account, we consider this approach relevant for designing a system that optimizes the energy production of a photovoltaic plant by using environmental conditions and weather forecasts to determine when to activate the cooling devices.

4 A SELF-ADAPTIVE CONTROLLER FOR OPTIMIZING PV PRODUCTION

This section first defines the general architecture of AmaSun, a self-adaptive multi-agent system that aims at maximizing energy production by controlling when to cool the photovoltaic panels while using water reserves effectively, since only harvested rainwater can be used. The behaviours of the agents involved in this control system are then described.

Figure 1 represents the global architecture of AmaSun and its environment. The AmaSun control system collects data from external modules, such as the database in which the historical meteorological data of previous years are recorded, as well as weather forecasts. It also collects data from local sensors through the DataManager module, including environmental data (temperature, wind power, solar irradiance) and internal data about the photovoltaic plant (panel temperatures, water level in the tank, current energy produced). These local data are collected by sensors located at the level of the photovoltaic panels. The system is also connected to the cooling devices, to which it can send activation commands.

Figure 1: General architecture of AmaSun.

Four types of agents are used in AmaSun to control the cooling: the Energy agent determines whether the current energy production is satisfactory, the Water agent determines the amount of water the system may use, and the Controller agent, helped by a set of Context agents, determines when to activate the cooling devices. Every minute (i.e. every cycle), the system receives a data update from the DataManager, and each agent acts according to these values.

4.1 Energy Agent

The objective of the Energy agent is to maximize the Energy Production (EP), i.e. to minimize the loss of efficiency by reducing the gap between the optimum energy production and the current energy production. Since we treat the optimum energy production not as a theoretical value but as an empirical estimate that depends on the addition or removal of sensors, the decay of the panels, and so on, we call it the Real Optimum Energy Production (ROEP).

If we consider a PV panel with a constant low temperature, the energy production depends only on solar irradiance. Therefore, ROEP, the highest energy value that can be produced with this irradiance, is computed by the linear function $F_{ROEP}$, which applies a ratio $R$ to the irradiance value $Ir$:

$$F_{ROEP}(Ir) = R \cdot Ir$$

Although the Energy agent cannot directly decide when to activate the cooling devices, it can express its criticality level, which drives the Controller agent to spray water when necessary. Because the goal of the Energy agent is to minimize the difference between EP and ROEP, we define its criticality C as this difference: C = ROEP − EP.

The correct behaviour of the system depends on the ability of the Energy agent to estimate ROEP accurately. If C is globally too low, the system will not perform enough cooling activations and the Energy agent will not be able to reach its goal. If C is globally too high, too many activations will be performed in an attempt to decrease it, to the detriment of the other agents. Since C grows with R, these two problems occur when the ratio R used to convert irradiance into ROEP is, respectively, too low or too high. The Energy agent therefore has to detect these situations and adapt the value of R accordingly.

Since EP can theoretically never exceed ROEP, an EP higher than ROEP means that R is too low, so the Energy agent increases R until EP becomes lower than or equal to ROEP. Likewise, since ROEP can theoretically never exceed 100%, a higher ROEP means that R is too high, so the Energy agent decreases R until ROEP falls below 100%.

This cooperative mechanism allows the Energy agent to learn at runtime how to convert the irradiance value into ROEP.
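The two formulas and the correction rule of this section can be sketched as follows. The class name, the initial value of R, the 5% multiplicative step and the expression of energies as percentages of nominal production are hypothetical choices; the text only states that R is increased or decreased until the impossible situation disappears, which this sketch spreads over successive cycles.

```python
from dataclasses import dataclass


@dataclass
class EnergyAgent:
    """Sketch of the Energy agent's runtime learning of the ratio R.

    Energies are percentages of nominal production (an assumption
    inferred from the 100% bound on ROEP).
    """
    r: float = 0.01     # initial ratio R (hypothetical)
    step: float = 0.05  # relative correction of R per cycle (hypothetical)

    def roep(self, irradiance: float) -> float:
        """F_ROEP(Ir) = R * Ir."""
        return self.r * irradiance

    def criticality(self, irradiance: float, ep: float) -> float:
        """C = ROEP - EP."""
        return self.roep(irradiance) - ep

    def adapt(self, irradiance: float, ep: float) -> None:
        """Apply at most one correction of R per cycle."""
        if irradiance <= 0.0:
            return  # no sunlight: nothing to learn about R
        roep = self.roep(irradiance)
        if ep > roep:
            self.r *= 1.0 + self.step  # EP > ROEP is impossible: R too low
        elif roep > 100.0:
            self.r /= 1.0 + self.step  # ROEP > 100% is impossible: R too high


agent = EnergyAgent()
for _ in range(100):  # one call per cycle with the same observation
    agent.adapt(irradiance=800.0, ep=70.0)
print(round(agent.roep(800.0), 2), round(agent.criticality(800.0, 70.0), 2))
```

With a fixed multiplicative step, R can oscillate when EP stays within one step of 100%; a step that shrinks over time would be one way to avoid this.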

4.2 Water Agent

The goal of the Water agent is to make efficient use of the harvested rainwater; it is satisfied when it can supply the water requested by the Controller agent to cool the panels. Two obvious situations show that it is not performing in the best way: the tank is empty when water is required, meaning that too much water was used previously, or the tank is full while it is raining, meaning that more water could have been used previously. However, it is not reasonable to wait until these situations occur to conclude that too much or too little water was used.

Decisions about when to use water efficiently are therefore made cooperatively by the Water and Controller agents: the Water agent determines how much water the system should use over a given period, and the Controller agent determines which policy to apply during that same period in order to use this amount of water as precisely as possible. Since, on average, a constant amount of water is consumed each time a cooling device is activated, the Water agent expresses the amount of water the Controller agent is allowed to use as a number of cooling device activations.
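Since each activation consumes on average a constant amount of water, converting a water budget into an activation count is a simple division. The sketch below assumes volumes in litres and rounds down so the budget is never exceeded; the function name, the units and the rounding choice are assumptions.

```python
def allowed_activations(water_budget_l: float, litres_per_activation: float) -> int:
    """Number of cooling activations that fit in a water budget.

    Assumes every activation consumes the same average volume and rounds
    down so that the budget is never exceeded.
    """
    if litres_per_activation <= 0.0:
        raise ValueError("litres_per_activation must be positive")
    if water_budget_l <= 0.0:
        return 0
    return int(water_budget_l // litres_per_activation)


print(allowed_activations(500.0, 12.0))  # 41
```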

The Water agent is not yet fully implemented in the current version of AmaSun; instead, an empirically chosen number of cooling device activations per day is used. In the future, the Water agent will decide this number based on the weather forecast and the amount of water available.

4.3 Controller Agent

The aim of the Controller agent is to determine the behaviour of the cooling devices over a given period of time (e.g., a day) so as to meet the requirements of both the Water and Energy agents. At the beginning of the day, the Controller agent determines an activation threshold value, AT. During the day, to meet the constraints of the Energy agent, the cooling devices are activated each time the criticality C of the Energy agent exceeds AT. To also satisfy the constraints of the Water agent, AT is chosen according to the number of cooling device activations requested by the Water agent.

However, this activation policy depends strongly on the context of the photovoltaic panels, in particular the temperature, the solar irradiance and, more generally, the weather conditions.

The AT value is determined from the weather forecast for the next day. Consider the number of activations over a period of one hundred cycles, i.e. the percentage of activations Pa over a period of time. This value depends, on the one hand, on AT, a higher AT leading to a lower Pa, and, on the other hand, on the weather of the next day, a bright day leading to a higher Pa than a rainy or dark day. To determine which value of AT will yield the correct Pa for the next day, the Controller agent is associated with a set of Context agents. Each Context agent represents a specific weather condition and owns a function τ(AT) that takes as input an AT value chosen by the Controller agent and returns an estimate of Pa for this value of AT.
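The relation between AT and Pa can be illustrated by replaying a series of Energy-agent criticalities: a cycle counts as an activation when C exceeds AT, and Pa is the share of such cycles. The function name and the criticality values below are made up for illustration.

```python
from typing import Sequence


def activation_percentage(criticalities: Sequence[float], threshold: float) -> float:
    """Pa: percentage of cycles in which C exceeds the activation threshold AT."""
    if not criticalities:
        return 0.0
    activated = sum(1 for c in criticalities if c > threshold)
    return 100.0 * activated / len(criticalities)


day = [0.5, 2.0, 4.0, 6.0, 3.0, 1.0, 7.0, 5.0]  # hypothetical C values, one per cycle
for at in (1.0, 3.0, 5.0):
    print(at, activation_percentage(day, at))  # 75.0, then 50.0, then 25.0
```

A higher AT yields a lower Pa (75%, 50% and 25% here), which is the monotonic relation the Controller agent relies on when choosing AT.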

Using the set of Context agents that represent the weather forecasts, each one involved according to how long its associated weather is forecast to last, the Controller agent can estimate Pa for the next day for any given value of AT by interacting with these Context agents, as explained in the next section. At the beginning of each day, it thus estimates by dichotomy the value of AT that yields the Pa corresponding to the required number of cooling device activations.
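The dichotomy can be sketched as a bisection on AT. This sketch assumes that the estimated Pa for the day is the average of the τ(AT) functions of the relevant Context agents weighted by the number of forecast hours of their weather, that the required Pa is the requested number of activations divided by the number of cycles considered, and that the estimate does not increase with AT. All function names and the two τ functions in the example are hypothetical.

```python
from typing import Callable, Sequence, Tuple

# (tau, hours): tau maps AT to an estimated Pa (%) for one Context agent;
# hours is how long its weather is forecast to last the next day.
Forecast = Sequence[Tuple[Callable[[float], float], float]]


def estimated_pa(forecast: Forecast, at: float) -> float:
    """Duration-weighted average of the Context agents' estimates."""
    total_hours = sum(hours for _, hours in forecast)
    if total_hours <= 0.0:
        return 0.0
    return sum(tau(at) * hours for tau, hours in forecast) / total_hours


def target_pa(requested_activations: int, cycles: int) -> float:
    """Pa (%) that yields the requested number of activations."""
    return 100.0 * requested_activations / cycles


def find_threshold(forecast: Forecast, target: float, lo: float, hi: float,
                   iterations: int = 50) -> float:
    """Bisection on AT, assuming the estimated Pa decreases as AT grows."""
    for _ in range(iterations):
        mid = (lo + hi) / 2.0
        if estimated_pa(forecast, mid) > target:
            lo = mid  # too many activations expected: raise the threshold
        else:
            hi = mid  # too few (or exactly enough): lower the threshold
    return (lo + hi) / 2.0


def sunny(at: float) -> float:   # hypothetical tau of a "sunny" Context agent
    return max(0.0, 60.0 - 2.0 * at)


def cloudy(at: float) -> float:  # hypothetical tau of a "cloudy" Context agent
    return max(0.0, 20.0 - at)


forecast = [(sunny, 8.0), (cloudy, 4.0)]
goal = target_pa(requested_activations=180, cycles=600)  # 30.0 %
print(round(find_threshold(forecast, goal, lo=0.0, hi=30.0), 3))  # 10.0
```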

4.4 Context Agents

There are typically several hundred Context agents in the control system, since each Context agent represents information about a specific weather condition. The goal of such an agent is to estimate the percentage of cooling device activations for its associated weather as a function of the activation threshold AT given by the Controller agent.

A Context agent owns a range of values for each weather variable describing its weather condition: temperature, solar irradiance and wind speed. When the current weather values all fall within these ranges, the Context agent considers itself valid, and invalid otherwise. Similarly, when it is valid for a weather forecast, it will probably be valid the next day, so it signals this to the Controller agent, which takes it into account when computing AT.
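A Context agent's validity test amounts to checking that each observed or forecast value lies within the agent's ranges. In the sketch below, the variable names, units and inclusive bounds are assumptions, and `forecast_hours` is a hypothetical helper counting the forecast hours for which the agent would be valid, one possible way to obtain the duration the Controller agent takes into account.

```python
from dataclasses import dataclass
from typing import Dict, Mapping, Sequence, Tuple


@dataclass
class ContextAgent:
    """Validity test of a Context agent (variable names and units assumed)."""
    ranges: Dict[str, Tuple[float, float]]  # inclusive [low, high] per variable

    def is_valid(self, weather: Mapping[str, float]) -> bool:
        """Valid when every weather variable lies within its range."""
        return all(
            name in weather and low <= weather[name] <= high
            for name, (low, high) in self.ranges.items()
        )

    def forecast_hours(self, hourly_forecast: Sequence[Mapping[str, float]]) -> int:
        """Number of forecast hours for which this agent would be valid."""
        return sum(1 for hour in hourly_forecast if self.is_valid(hour))


agent = ContextAgent({
    "temperature": (20.0, 30.0),   # degrees Celsius
    "irradiance": (600.0, 900.0),  # W/m2
    "wind_speed": (0.0, 5.0),      # m/s
})
print(agent.is_valid({"temperature": 25.0, "irradiance": 750.0, "wind_speed": 2.0}))  # True
```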

A Context agent observes the state of the cooling devices: it counts the total number of cycles during which it is valid and, among them, the number of cycles during which the cooling devices are also activated. It records these counts in a map indexed by the AT value, in other words the ratio of the number of cycles with activated cooling devices, $NB_{activated}[AT]$, to the total number of cycles, $NB_{total}[AT]$. This ratio depends not only on AT but also on the weather associated with the Context agent, since some weather conditions lead to more cooling device activations, so each Context agent has its own map.

When the Controller agent estimates the percentage of activations Pa for a given threshold AT at the beginning of the day, each Context agent must be able to generalize its estimate from its previous observations. To do so, it uses its function τ(AT), a linear regression weighted by the total number of cycles recorded for each value of AT. Every day of operation makes the τ(AT) function more precise. Through this learning process, a Context agent makes its knowledge, such as its τ(AT) function, evolve so as to represent its associated weather more accurately. In turn, the evolution of the number and knowledge of the Context agents improves the knowledge of the Controller agent.
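The map and the weighted regression can be sketched as follows. The weighted least-squares fit follows the description above (a linear regression of the activation percentage on AT, weighted by $NB_{total}[AT]$); the class name `ActivationMap`, the clamping of the estimate to [0, 100] and the default returned before any observation are assumptions added so that τ(AT) always yields a valid percentage.

```python
from collections import defaultdict
from typing import DefaultDict


class ActivationMap:
    """Per-Context-agent map AT -> (NB_activated[AT], NB_total[AT]) and tau(AT)."""

    def __init__(self) -> None:
        self.nb_total: DefaultDict[float, int] = defaultdict(int)
        self.nb_activated: DefaultDict[float, int] = defaultdict(int)

    def observe(self, at: float, cooling_active: bool) -> None:
        """Record one cycle during which the Context agent was valid."""
        self.nb_total[at] += 1
        if cooling_active:
            self.nb_activated[at] += 1

    def tau(self, at: float) -> float:
        """Weighted linear regression of Pa (%) on AT, weights = NB_total[AT]."""
        points = [(a, 100.0 * self.nb_activated.get(a, 0) / n, n)
                  for a, n in self.nb_total.items() if n > 0]
        if not points:
            return 0.0  # no observation yet (hypothetical default)
        w_sum = sum(w for _, _, w in points)
        x_mean = sum(w * x for x, _, w in points) / w_sum
        y_mean = sum(w * y for _, y, w in points) / w_sum
        sxx = sum(w * (x - x_mean) ** 2 for x, _, w in points)
        if sxx == 0.0:
            return y_mean  # a single AT observed: constant estimate
        sxy = sum(w * (x - x_mean) * (y - y_mean) for x, y, w in points)
        estimate = y_mean + (sxy / sxx) * (at - x_mean)
        return min(100.0, max(0.0, estimate))  # keep Pa a valid percentage


m = ActivationMap()
for i in range(100):
    m.observe(10.0, i < 40)  # 40 % of valid cycles activated with AT = 10
    m.observe(20.0, i < 20)  # 20 % of valid cycles activated with AT = 20
print(round(m.tau(15.0), 6))  # 30.0
```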

5 RESULTS

The goal of the Adaptive Multi-Agent System AmaSun is to estimate the amount of rainwater to be used each day, and then to determine, each day, when to activate the cooling devices so as to maximize energy production with this amount of rainwater. However, the behaviour of the Water agent is still under study, so we focus here on evaluating the ability of AmaSun to learn how to maximize energy production with a given amount of rainwater.

AmaSun performs online learning, which means it requires a feedback loop with its environment: it acts and observes the feedback from this environment. For the following evaluations, simulated photovoltaic panels are used so that several months of AmaSun operation can be tested in a few minutes. Real data recorded from an actual PV plant, combined with historical weather data, were used to build a neural network model of the photovoltaic panels with the Weka tool (Holmes et al., 1994). This model takes as input the environmental conditions (solar irradiance, temperature and wind power), the state of the cooling devices (activated or not) and the previous temperature of the PV panels, and outputs the new temperature of the panels.

In this evaluation, the Water agent decides how many cooling device activations must be performed each day based only on the solar irradiance forecast. This simple computation is sufficient to evaluate the ability of AmaSun to perform the number of activations it is asked to perform, independently of whether this number is appropriate. Once the Water agent is also able to decide the best number of activations, AmaSun should be able to find the best cooling control.

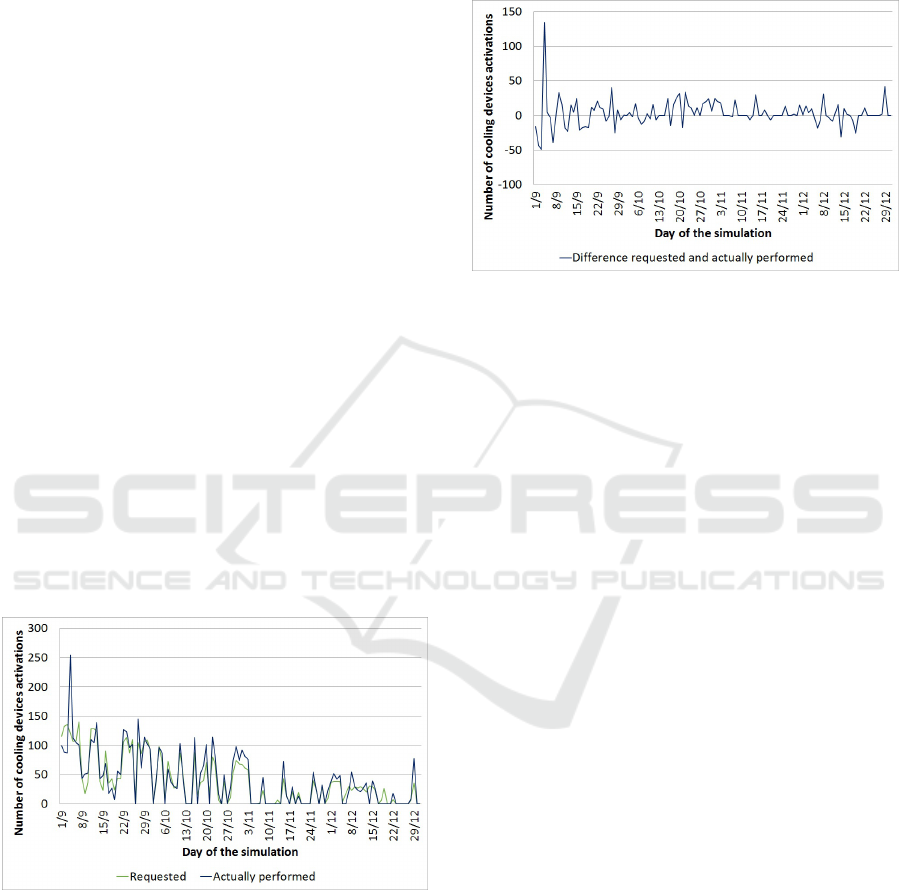

Figure 2: Requested and actually performed numbers of cooling device activations.

Figure 2 shows the number of cooling device activations that AmaSun has to perform during the day (requested, light curve), as determined by the Water agent at the beginning of the day, and the number of activations actually performed by the end of each day (dark curve). The decreasing number of requested activations results only from the decreasing irradiance between the beginning of September and the end of December. What matters is the small difference between the two curves, shown in Figure 3: while still high during the very first days of learning, this difference decreases rapidly.

Figure 3: Difference between the requested and actually performed numbers of cooling device activations.

We performed the same simulation with data from three different real power plants. For each site, we measured the difference between the numbers of requested and actually performed activations, and obtained an average deviation of 18%. We consider this value good because it is obtained without any prior knowledge. At the end of the simulations, an average of 154 Context agents represent the weather conditions encountered. Since AmaSun starts without any Context agent, the ability of the agents to evaluate the value of the threshold AT that yields the right number of activations depends only on the observations made by the system at runtime over the days. Moreover, the data used as input to AmaSun are subject to many disturbances: the sensor data are very noisy, and weather forecasts are partially inaccurate and given hourly, whereas the system works with one cycle per minute.
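The paper does not give the exact formula behind the 18% average deviation. One plausible definition, assumed here, is the mean over days of the absolute difference between performed and requested activations relative to the requested number; the function name and the daily figures below are made up.

```python
from typing import Sequence


def mean_relative_deviation(requested: Sequence[int], performed: Sequence[int]) -> float:
    """Mean of |performed - requested| / requested over days, in percent.

    Days with no requested activation are skipped (assumed convention).
    """
    if len(requested) != len(performed):
        raise ValueError("requested and performed must have the same length")
    ratios = [abs(p - r) / r for r, p in zip(requested, performed) if r > 0]
    if not ratios:
        return 0.0
    return 100.0 * sum(ratios) / len(ratios)


print(round(mean_relative_deviation([100, 50, 80], [90, 60, 80]), 1))  # 10.0
```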

6 CONCLUSION

This paper introduced the problem of using a Multi-Agent System-based controller to increase the energy production of a photovoltaic plant through cooling devices supplied by a limited reserve of rainwater. Because several parameters are interdependent, a classical control function cannot be defined to optimize energy production.

To address this problem, we designed AmaSun, an Adaptive Multi-Agent System that learns, depending on environmental conditions and weather forecasts, the amount of water to use during a period, the optimal energy production for the perceived solar irradiance, and the gap between the current and the estimated optimal energy production that is permitted before the cooling devices are activated, so that the allowed water is used as efficiently as possible.

Preliminary results were given on this last point. To complete AmaSun, our ongoing work studies how to estimate the optimal number of activations depending on weather forecasts and, given the amount of water available, how many activations the system should perform each day. Moreover, to evaluate the impact of AmaSun more thoroughly, we plan to equip half of the photovoltaic panels of a real plant with this control system, while the other half will operate without any cooling device.

ACKNOWLEDGEMENT

This work is part of the SuniAgri research project, funded by the ERDF of the European Union and the French Occitanie Region. We also thank the SUNiBRAIN company, our partner in this project.

REFERENCES

Akbarzadeh, A. and Wadowski, T. (1996). Heat pipe-based cooling systems for photovoltaic cells under concentrated solar radiation. Applied Thermal Engineering, 16(1):81–87.

Alami, A. H. (2014). Effects of evaporative cooling on efficiency of photovoltaic modules. Energy Conversion and Management, 77:668–679.

Åström, K. J. and Hägglund, T. (2001). The future of PID control. Control Engineering Practice, 9(11):1163–1175.

Bahaidarah, H. M., Baloch, A. A., and Gandhidasan, P. (2016). Uniform cooling of photovoltaic panels: a review. Renewable and Sustainable Energy Reviews, 57:1520–1544.

Boes, J., Migeon, F., and Gatto, F. (2013). Self-organizing agents for an adaptive control of heat engines. In ICINCO (1), pages 243–250.

Chandrasekar, M. and Senthilkumar, T. (2015). Experimental demonstration of enhanced solar energy utilization in flat PV (photovoltaic) modules cooled by heat spreaders in conjunction with cotton wick structures. Energy, 90:1401–1410.

Del Castillo, E. (2007). Bayesian process monitoring, control and optimization.

Ebrahimi, M., Rahimi, M., and Rahimi, A. (2015). An experimental study on using natural vaporization for cooling of a photovoltaic solar cell. Int. Com. in Heat and Mass Transfer, 65:22–30.

Feldbaum, A. (1961). Dual control theory. Automn. Remote Control, 22:1–12.

Georgé, J.-P., Gleizes, M.-P., and Camps, V. (2011). Cooperation. In Self-organising Software, pages 193–226. Springer.

Gleizes, M.-P. (2011). Self-adaptive complex systems. In European Workshop on Multi-Agent Systems, pages 114–128. Springer.

Hamm, L., Brorsen, B. W., and Hagan, M. T. (2002). Global optimization of neural network weights. In Proc. Int. Joint Conf. on Neural Networks, volume 2, pages 1228–1233. IEEE.

Holmes, G., Donkin, A., and Witten, I. H. (1994). Weka: A machine learning workbench. In Proc. of the 2nd Conf. on Intelligent Information Systems, pages 357–361. IEEE.

Jennings, N. R. and Bussmann, S. (2003). Agent-based control systems: Why are they suited to engineering complex systems? IEEE Control Systems, 23(3):61–73.

Lee, C.-C. (1990). Fuzzy logic in control systems: fuzzy logic controller. IEEE Trans. on Systems, Man, and Cybernetics, 20(2):404–418.

Lewis, N. S. (2016). Research opportunities to advance solar energy utilization. Science, 351(6271):aad1920.

Nikolaou, M. (2001). Model predictive controllers: A critical synthesis of theory and industrial needs. Advances in Chemical Engineering, 26:131–204.

Nižetić, S., Čoko, D., Yadav, A., and Grubišić-Čabo, F. (2016). Water spray cooling technique applied on a photovoltaic panel: The performance response. Energy Conversion and Management, 108:287–296.

Pons, L. and Bernon, C. (2013). A multi-agent system for autonomous control of game parameters. In Int. Conf. on Systems, Man, and Cybernetics, pages 583–588. IEEE.

Sargunanathan, S., Elango, A., and Mohideen, S. T. (2016). Performance enhancement of solar photovoltaic cells using effective cooling methods: A review. Renewable and Sustainable Energy Reviews, 64:382–393.

Shan, F., Tang, F., Cao, L., and Fang, G. (2014). Comparative simulation analyses on dynamic performances of photovoltaic–thermal solar collectors with different configurations. Energy Conversion and Management, 87:778–786.

Skoplaki, E. and Palyvos, J. A. (2009). On the temperature dependence of photovoltaic module electrical performance: A review of efficiency/power correlations. Solar Energy, 83(5):614–624.

Stengel, R. F. and Ryan, L. (1991). Stochastic robustness of linear time-invariant control systems. IEEE Transactions on Automatic Control, 36(1):82–87.
