Machine Floriography: Sentiment-inspired Flower Predictions over Gated Recurrent Neural Networks

Avi Bleiweiss

BShalem Research, Sunnyvale, U.S.A.

Keywords:

Language of Flowers, Gated Recurrent Neural Networks, Machine Translation, Softmax Regression.

Abstract:

The design of a flower bouquet often comprises a manual step of plant selection that follows an artistic style arrangement. Floral choices for a collection are typically founded on visual aesthetic principles that include shape, line, and color of petals. In this paper, we propose a novel framework that instead classifies sentences that describe sentiments and emotions typically conveyed by flowers, and predicts the bouquet content implicitly. Our work exploits the figurative Language of Flowers that formalizes an expandable list of translation records, each mapping a short-text sentiment sequence to a unique flower type we identify with the bouquet center-of-interest. Records are represented as word embeddings we feed into a gated recurrent neural network, and a discriminative decoder follows to maximize the score of the lead flower and rank complementary flower types based on their posterior probabilities. Already normalized, these scores directly shape the mix weights in the final arrangement and support our intuition of a naturally formed bouquet. Our quantitative evaluation reviews both stand-alone and baseline comparative results.

1 INTRODUCTION

Communicating through the use of flower meanings to express emotions, also known as Floriography, has been a traditional and well-established practice around the world for centuries. Nonetheless, endorsing this coded exchange as a Language of Flowers only gained traction during the Victorian era, and was backed by the publication of a growing body of floral dictionaries that explained the meanings of flowers. Shortly thereafter, the language of flowers was favored as the prime medium to send secret messages otherwise prohibited in public conversations. Floriography was not only about the simple emotion attached to an individual flower, but rather about what was portrayed in a combination of petals and thorns placed in an arranged bouquet. The language has since developed considerably, and today several online resources (Roof and Roof, 2010; Diffenbaugh, 2011) provide updated flower interpretations and the most authentic sentiment transcriptions.

In this research, we investigate the linguistic properties of the language of flowers using unsupervised learning of word vector representations (Mikolov et al., 2013a; Pennington et al., 2014), and model the language after neural machine translation that predicts a definitive flower type given a sentiment phrase as input. Furthermore, we extend the single-target perspective of the language and relate the short-text sentiment sequence to a plurality of flowers that combines a principal, or pivotal, flower with statistically ranked subordinate flowers to form a bouquet.

Recurrent Neural Networks (RNN) recently became a widespread tool for language modeling tasks (Sutskever et al., 2014; Hoang et al., 2016; Tran et al., 2016). In our case study, we feed sequentially concatenated translation records into a shallow RNN architecture that consists of input, hidden, and output layers (Elman, 1990; Mikolov et al., 2010). At every time step, the output probability distribution over the entire language vocabulary renders our framework suitable for an automatic selection of sentiment-aware flower species that requires minimal human counseling. We ran experiments on both a standard RNN that applies a hyperbolic tangent activation function directly and one that applies it through a gated recurrent unit (GRU) (Cho et al., 2014; Chung et al., 2014), and confirmed that the GRU better withstands the vanishing gradient problem (Hochreiter and Schmidhuber, 1997) and improves our recall performance.

The main contribution of our work is an effective neural translation model we apply to a small corpus comprised of extremely short-text sequences, by sharing representation power of context both adjacent in time and closely related in semantic vector space. The rest of this paper is organized as follows. In Section 2, we give a brief review of our compiled version of the Language of Flowers and the use of Word2Vec word embeddings to represent sentiment sentences and flower names. Section 3 then overviews the gated recurrent unit (GRU) extension to a standard RNN, and derives our neural network architecture for predicting bouquet flower candidacy from a sentiment phrase. Section 4 motivates the order of feeding RNN with semantically close sentiment vectors to improve accuracy. In Section 5, we proceed to present our methodology for evaluating system performance end-to-end, and report extensive quantitative results over a range of experiments. A summary and prospective avenues for future work are provided in Section 6.

Bleiweiss, A.

Machine Floriography: Sentiment-inspired Flower Predictions over Gated Recurrent Neural Networks.

DOI: 10.5220/0006583204130421

In Proceedings of the 10th International Conference on Agents and Artificial Intelligence (ICAART 2018) - Volume 2, pages 413-421

ISBN: 978-989-758-275-2

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 LANGUAGE OF FLOWERS

The online floral dictionaries we obtained sort flowers by name and distribute respective meanings in instructive alphabetical chapters (Figure 1). Internally, we represent the language of flowers in a size-$l$ named-member list of variable-length sentiment phrases, each identified with a single pivotal flower $f$, as illustrated in Table 1. To keep the plant names uniquely labeled in our implementation, those composed of multiple words make up a hyphenated compound modifier. In total, we tokenize and lowercase $l = 701$ sentiment-flower pairs of distinct flower types, as we pare down 3,857 unfiltered words to build a succinct vocabulary of 1,386 symbols, after removing stop words and punctuation marks.
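The preprocessing described above can be sketched as follows. This is a minimal illustration in Python, not the authors' R implementation; the stop-word list `STOP_WORDS` and the helper names are hypothetical, and the paper does not state which stop words were removed.

```python
import string

# Hypothetical stop-word list for illustration; the paper does not publish its own.
STOP_WORDS = {"a", "an", "and", "the", "of", "for", "is", "you", "your", "my", "me", "to"}

# Strip punctuation but keep hyphens so compounds such as "knight-errantry" survive.
_PUNCT_TABLE = str.maketrans("", "", string.punctuation.replace("-", ""))

def normalize_flower(name):
    """Lowercase a flower name and join multi-word names into one hyphenated token."""
    return "-".join(name.lower().split())

def tokenize_sentiment(phrase):
    """Lowercase, remove punctuation, and drop stop words from a sentiment phrase."""
    words = phrase.lower().translate(_PUNCT_TABLE).split()
    return [w for w in words if w not in STOP_WORDS]

def build_records(pairs):
    """Return tokenized (sentiment, flower) records and the sorted joint vocabulary."""
    records = [(tokenize_sentiment(s), normalize_flower(f)) for s, f in pairs]
    vocab = sorted({w for tokens, flower in records for w in tokens + [flower]})
    return records, vocab

pairs = [("Endearment, sweet and lovely", "Carnation white"),
         ("You pierce my heart", "Gladiolus")]
records, vocab = build_records(pairs)
```

Keeping flower names and sentiment words in one vocabulary mirrors the paper's setup, where the decoder scores every token and flowers are filtered out of the ranked output afterwards.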

Figure 1: Language of Flowers: distributed flower types

arranged in alphabetically sorted buckets of names. Buckets

U and X are evidently empty.

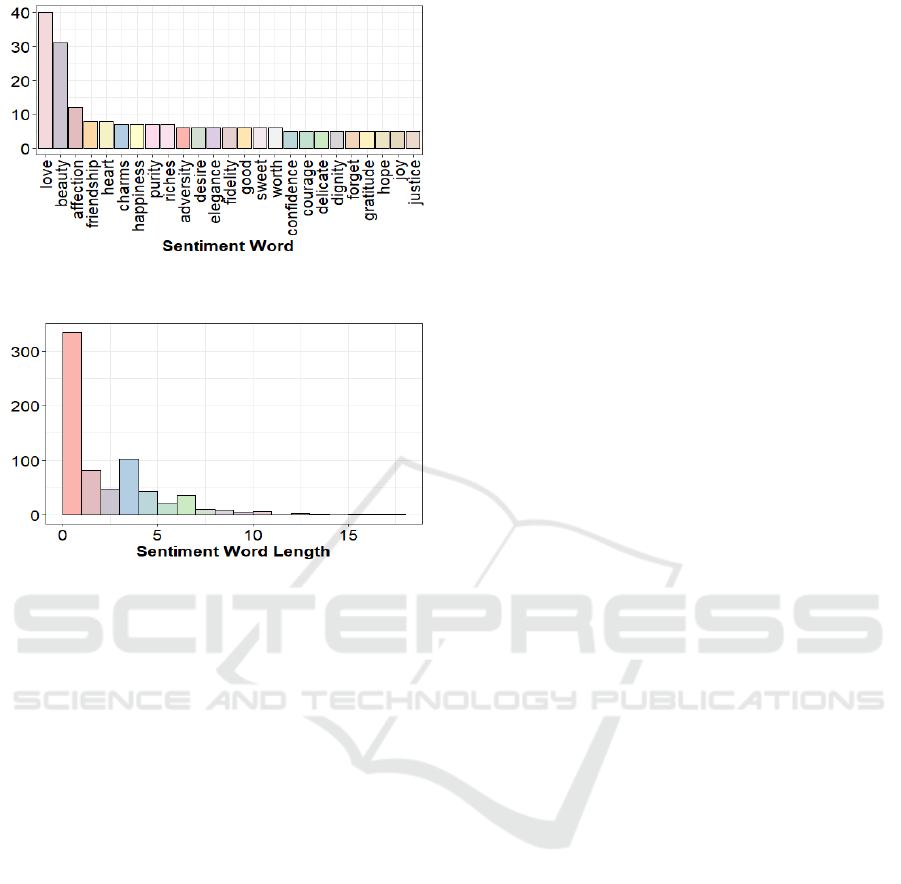

Table 1: Language of Flowers: a sample of sentiment phrases, each identified with a single ground-truth flower type. Flower names of multiple words are hyphenated to form a compound.

| Sentiment Phrase | Pivotal Flower |
|---|---|
| endearment, sweet and lovely | carnation-white |
| you pierce my heart | gladiolus |
| pleasant thoughts, think of me | pansy |
| joy, maternal tenderness | sorrel-wood |
| enchantment, sensibility, pray for me | verbena |

To visualize the distribution of the vocabulary from several different viewpoints, we used R (R Core Team, 2013) to render a word cloud (Figure 2) that depicts the top 150 frequent sentiment labels in the dictionary of the language of flowers. Noticeably, the words of highest occurrence count carry emotional and romantic connotations, like ‘love’, ‘beauty’, ‘affection’, ‘friendship’, and ‘heart’. In Figure 3, we provide term frequencies for each of the top 25 sentiment labels in the vocabulary; the words ‘love’, ‘beauty’, and ‘affection’ occur 40, 31, and 12 times, respectively. Lastly, the distribution of sentiment word lengths is of notable importance to assess flower prediction performance. To this end, Figure 4 highlights 330 single-word, 100 four-word, and 80 two-word long sentiment sentences, with a maximum sequence length of eighteen words.

[Figure 2 image: word cloud of sentiment labels; legible entries include ‘love’, ‘beauty’, ‘affection’, ‘friendship’, ‘heart’, ‘hope’, ‘pleasure’, ‘remembrance’, ‘purity’, and ‘truth’.]

Figure 2: Language of Flowers: word cloud of the top 150 frequent sentiment labels. Font size is proportional to the number of word occurrences in the corpus, with ‘love’, ‘beauty’, and ‘affection’ leading.

Figure 3: Language of Flowers: distribution of the top 25 frequent sentiment labels in the vocabulary.

Figure 4: Language of Flowers: distribution of sentiment word lengths across the entire train set.

Despite the relatively concise vocabulary of size $|V| = 1{,}386$ tokens, rather than use a 1-of-$|V|$ sparse representation of a one-hot vector $\in \mathbb{R}^{|V| \times 1}$, we map one-hot vectors onto a lower-dimensional vector space using the Word2Vec (Mikolov et al., 2013b) embedding technique that encodes semantic word relationships in simple vector algebra. To train word vectors effectively, we enabled negative sampling in both the skip-gram and continuous-bag-of-words (CBOW) neural models, and found the word vector dimension, $d$, a critical hyperparameter to tune in order to yield consistent flower-selection predictions in RNN.
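To make the mapping from a one-hot vector to a dense word vector concrete, the sketch below shows that multiplying a one-hot vector by an embedding matrix is the same as selecting one row of that matrix. The matrix `E` is filled with random values purely for illustration; in the paper the embeddings are learned with Word2Vec, and the token index used here is arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
V, d = 1386, 100                          # vocabulary size and word-vector dimension from the paper
E = rng.normal(scale=0.1, size=(V, d))    # stand-in embedding matrix (random, not trained)

def one_hot(index, size):
    """Return the sparse 1-of-|V| representation of a token index."""
    v = np.zeros(size)
    v[index] = 1.0
    return v

idx = 42                                  # arbitrary token index for the demonstration
dense_via_matmul = one_hot(idx, V) @ E    # |V|-dimensional one-hot projected to d dimensions
dense_via_lookup = E[idx]                 # equivalent row lookup, with no sparse vector needed
```

In practice the row lookup is what an implementation would use, since it avoids materializing the $|V|$-dimensional vector altogether.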

3 FLOWER CANDIDACY

Formally, we represent the language of flowers as a list of translation records, each defining a pair of a source sentiment phrase and a unique target flower. We use the notation $v_{1:k}$ to describe the sequence $(v_1, v_2, \ldots, v_k)$ of $k$ vectors and correspondingly denote a translation pair as $(s_{1:k}, f)$. A sentiment-flower pair is decomposed into a $k$-length sentiment input $s_{1:k}$ that we linearly stream into RNN and an output concatenation $(s_{2:k}, f)$ of $k$ word vectors, which establishes a ground-truth pivotal relation between a flower type and its immediately preceding context. We note that our language corpus incorporates short-text sentiment phrases of length $k$ that ranges from one to eighteen word vectors (Figure 4). To further align our rendition interface with the RNN architectural notation, we let $x_t \in \mathbb{R}^d$ be the $d$-dimensional word vector identified with the $t$-th word in a text sequence, and denote an end-to-end translation record as $(x_1, x_2, \ldots, x_k, x_{k+1})$, or more compactly $x_{1:k+1}$. We use the Gated Recurrent Unit (GRU) (Cho et al., 2014; Chung et al., 2014) variant of RNN that adaptively captures long-term dependencies of different time scales. At each time step $t$, the GRU takes an input word vector $x_t$ and the previous $n$-dimensional hidden state $h_{t-1}$ to produce the next hidden state $h_t$. Conceptually, the forward GRU has four basic functional stages that are governed by the following set of formulas:

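$$
\begin{aligned}
z_t &= \sigma\left(W^{(z)} x_t + U^{(z)} h_{t-1}\right) \\
r_t &= \sigma\left(W^{(r)} x_t + U^{(r)} h_{t-1}\right) \\
\tilde{h}_t &= \tanh\left(r_t \circ U h_{t-1} + W x_t\right) \\
h_t &= (1 - z_t) \circ \tilde{h}_t + z_t \circ h_{t-1}
\end{aligned}
$$

where $W^{(z)}, W^{(r)}, W \in \mathbb{R}^{n \times d}$ and $U^{(z)}, U^{(r)}, U \in \mathbb{R}^{n \times n}$ are weight matrices, and the dimensions $n$ and $d$ are input configurable hyperparameters. The symbols $\sigma()$ and $\tanh()$ refer to the non-linear sigmoid and hyperbolic-tangent functions, and $\circ$ is an element-wise multiplication. A backward fed GRU defines $\overleftarrow{h}_t = \overleftarrow{\mathrm{GRU}}(x_t)$, $t \in [k, 1]$.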
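The four GRU stages can be written out directly in NumPy. The following is a minimal sketch of the forward recurrence and its backward-fed counterpart, assuming small random weights and a zero initial hidden state; the initialization scheme and the function names are illustrative choices, not details taken from the paper, whose implementation is in R.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def init_gru(n, d, rng):
    """Create the six GRU weight matrices; small random values are an assumption."""
    shapes = {"Wz": (n, d), "Wr": (n, d), "W": (n, d),
              "Uz": (n, n), "Ur": (n, n), "U": (n, n)}
    return {name: rng.normal(scale=0.1, size=shape) for name, shape in shapes.items()}

def gru_step(p, x_t, h_prev):
    """One time step: update gate, reset gate, candidate state, new hidden state."""
    z = sigmoid(p["Wz"] @ x_t + p["Uz"] @ h_prev)
    r = sigmoid(p["Wr"] @ x_t + p["Ur"] @ h_prev)
    h_tilde = np.tanh(r * (p["U"] @ h_prev) + p["W"] @ x_t)
    return (1.0 - z) * h_tilde + z * h_prev

def gru_forward(p, xs, h0=None):
    """Stream x_1..x_k and return the hidden states h_1..h_k as a (k, n) array."""
    h = np.zeros(p["U"].shape[0]) if h0 is None else h0
    states = []
    for x_t in xs:
        h = gru_step(p, x_t, h)
        states.append(h)
    return np.stack(states)

def gru_backward(p, xs, h0=None):
    """Feed the sequence in reverse (t = k..1); states are re-aligned to t = 1..k."""
    return gru_forward(p, xs[::-1], h0)[::-1]

rng = np.random.default_rng(0)
params = init_gru(n=18, d=100, rng=rng)   # n and d as listed in Table 2
xs = rng.normal(size=(4, 100))            # a four-word sentiment phrase (random stand-in)
H = gru_forward(params, xs)
```

The optional `h0` argument is there so that the final state of one sentiment sequence can seed the next one, which is how Section 4 describes sharing context between neighboring phrases.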

In modeling the language of flowers, our main objective is to score flower candidacy for a bouquet, by predicting flower type probabilities based on the short-text sentiment sequence retained in each translation record. Serialized sentiment vectors $x_{1:k}$ are streamed to RNN in isolation and independently, but the inherent persistent-memory nature of GRU summarizes at each time step the newly observed word vector in a record sequence, $x_t$, with the cumulative previous context, $h_{t-1}$. Similarities computed as inner-products between each of the input converted word-vectors and the GRU encoded $h_t$ are then forwarded to a softmax discriminative decoder. Hence, the next predicted word is the output probability distribution $\hat{y}_t = \mathrm{softmax}(W^{(S)} h_t)$ over the entire language vocabulary, where $W^{(S)} \in \mathbb{R}^{|V| \times n}$ and $\hat{y}_t \in \mathbb{R}^{|V|}$, and $|V|$ is the cardinality of the vocabulary. During the training of RNN, we attach a higher scoring bias to the ground-truth pivotal flower-word, $x_{k+1}$, that immediately succeeds the last word of a sentiment phrase, $x_k$. In evaluation, to generate a selection for a bouquet of flowers we rank all the posterior probabilities of $\hat{y}_t$ that were predicted for $x_k$, and from the top-$m$ indices we filter out all sentiment word instances, and return to the user the remaining flower tokens.
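The decoding and filtering step just described can be sketched as below: apply the softmax to $W^{(S)} h_k$, take the top-$m$ indices, and keep only flower tokens. The toy vocabulary, the identity decoder matrix, and the function name `select_bouquet` are assumptions made for the example, not values from the paper.

```python
import numpy as np

def softmax(a):
    """Numerically stable softmax over a 1-D array of scores."""
    e = np.exp(a - np.max(a))
    return e / e.sum()

def select_bouquet(W_s, h_k, vocab, flower_tokens, m=10):
    """Rank vocabulary posteriors for the last hidden state and keep flowers in the top m."""
    y_hat = softmax(W_s @ h_k)
    top = np.argsort(y_hat)[::-1][:m]           # indices of the m largest probabilities
    return [(vocab[i], float(y_hat[i])) for i in top if vocab[i] in flower_tokens]

# Toy setup: six tokens, three of which are flowers.
vocab = ["love", "beauty", "pansy", "verbena", "heart", "gladiolus"]
flowers = {"pansy", "verbena", "gladiolus"}
W_s = np.eye(6)                                 # stand-in decoder so the scores equal h_k
h_k = np.array([0.5, 0.1, 2.0, 1.0, 0.3, -1.0])
bouquet = select_bouquet(W_s, h_k, vocab, flowers, m=4)
```

With `m=4` the window holds two flowers and two sentiment words, so the bouquet has two entries, the first being the pivotal flower. The probabilities are returned unchanged, consistent with the paper's remark that the already normalized scores shape the mix weights.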

4 SHARED REPRESENTATION

In their recent work, Lee and Dernoncourt (2016)

show that the chronology of sequences of short-text

Machine Floriography: Sentiment-inspired Flower Predictions over Gated Recurrent Neural Networks

415

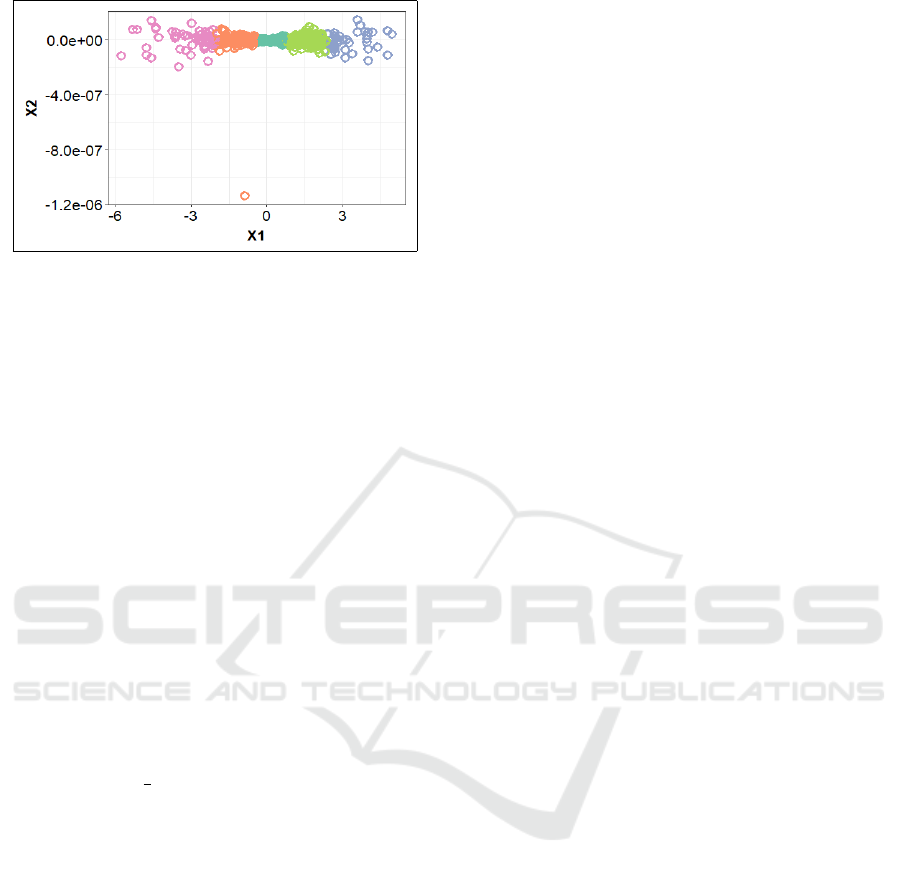

Figure 5: Language of Flowers: combining Multidimensi-

onal Scaling and k-means clustering to visualize sentiment

phrase relatedness in semantic vector space. Shown five

collections with only a few outliers.

representations fed to RNN, improves sentence clas-

sification accuracy. Motivated by their results, we

sought after a sequencing model that schedules sen-

timent sentences to RNN based on plausible seman-

tic relatedness between phrases. However, our online

acquisition of translation records that are enumerated

in alphabetical bins based on flower names (Figure

1), implied a partition type that constrains the more

interesting information about sentiment semantic si-

milarity.

To address this shortcoming, we first had to avoid non-conforming representations in dot-product similarity computations by reshaping the varying dimensionality of sentiment clauses into uniformly sized feature vectors. We chose to leverage a basic convolutional architecture practice and applied mean pooling to each of the encoded sentiment sequences $x_{1:k}$, averaging the word vectors to yield a single vector representation $s = \frac{1}{k} \sum_{t=1}^{k} x_t$, where $s \in \mathbb{R}^d$. From the set of single-vector formatted sentiments $s_{1:l}$, where $l = 701$, we follow by constructing a distance matrix $D \in \mathbb{R}^{l \times l}$, and use Multidimensional Scaling (MDS) (Torgerson, 1958; Hofmann and Buhmann, 1995) to project the large dissimilarity matrix onto a two-dimensional embedding space. By combining MDS with k-means (Kaufman and Rousseeuw, 1990), we produced a visualization of clusters that group semantically close sentiment phrases (Figure 5). Surprisingly, only a few outliers persist and most sentiment sequences gather consistently. The results we report next were obtained by scheduling sentiment sequences to RNN in the order prescribed by their projected coordinates, from left-to-right and bottom-to-top. This is motivated by sharing representation power of similar context, where the final hidden state of the current encoded sentiment is fed as the initial hidden state of the next spatially closest sentiment sequence.
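The scheduling pipeline above (mean pooling, distance matrix, two-dimensional projection, ordering) can be sketched as follows. Several choices here are assumptions: the Euclidean metric for $D$, the classical (Torgerson) variant of MDS, and the reading of "left-to-right and bottom-to-top" as sorting by the first coordinate with ties broken by the second. The k-means clustering used for Figure 5 is omitted.

```python
import numpy as np

def mean_pool(xs):
    """Average a (k, d) array of word vectors into a single d-dimensional vector s."""
    return np.mean(xs, axis=0)

def pairwise_distances(S):
    """Euclidean distance matrix D; the metric is an assumption, the paper only says 'distance'."""
    diff = S[:, None, :] - S[None, :, :]
    return np.linalg.norm(diff, axis=-1)

def classical_mds(D, dims=2):
    """Classical MDS: double-center the squared distances and keep the top eigenvectors."""
    l = D.shape[0]
    J = np.eye(l) - np.ones((l, l)) / l
    B = -0.5 * J @ (D ** 2) @ J
    vals, vecs = np.linalg.eigh(B)
    top = np.argsort(vals)[::-1][:dims]
    return vecs[:, top] * np.sqrt(np.maximum(vals[top], 0.0))

def schedule(coords):
    """Order records left-to-right, breaking ties bottom-to-top (one possible reading)."""
    return np.lexsort((coords[:, 1], coords[:, 0]))

rng = np.random.default_rng(0)
phrases = [rng.normal(size=(k, 100)) for k in (1, 4, 2, 18, 3)]  # random stand-in phrases
S = np.stack([mean_pool(x) for x in phrases])
D = pairwise_distances(S)
coords = classical_mds(D)
order = schedule(coords)   # indices of phrases in the order they would be fed to the RNN
```

Mean pooling is what makes phrases of one to eighteen words comparable, since every phrase ends up as a vector of the same dimension $d$ before distances are computed.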

5 EVALUATION

Our workflow for evaluation is straightforward. First,

the user enters our system a desired count of plant ty-

pes ranging from 1 to m, along with an arbitrary sen-

timent composition of words that are part of the voca-

bulary of the language of flowers (Figure 2). The user

text sequence is transformed to a single word-vector

representation and follows cosine similarity calcula-

tions (Salton et al., 1975; Baeza-Yates and Ribeiro-

Neto, 1999) with each of the trained mean-pooled

sentiment-sentences, s

i

. For the semantically closest

translation pair, we query the pivotal and supporting

flowers and return to the user distinct flower images

and proportional weights that are used for final bou-

quet arrangement. To compare the predictive perfor-

mance of our GRU-based RNN system against, we

chose a baseline that we feed with our mean-pooled

representation of a sentiment sentence, and use soft-

max regression for the unsupervised learning algo-

rithm. We cross validated our baseline on both a

held out development set and an exclusively genera-

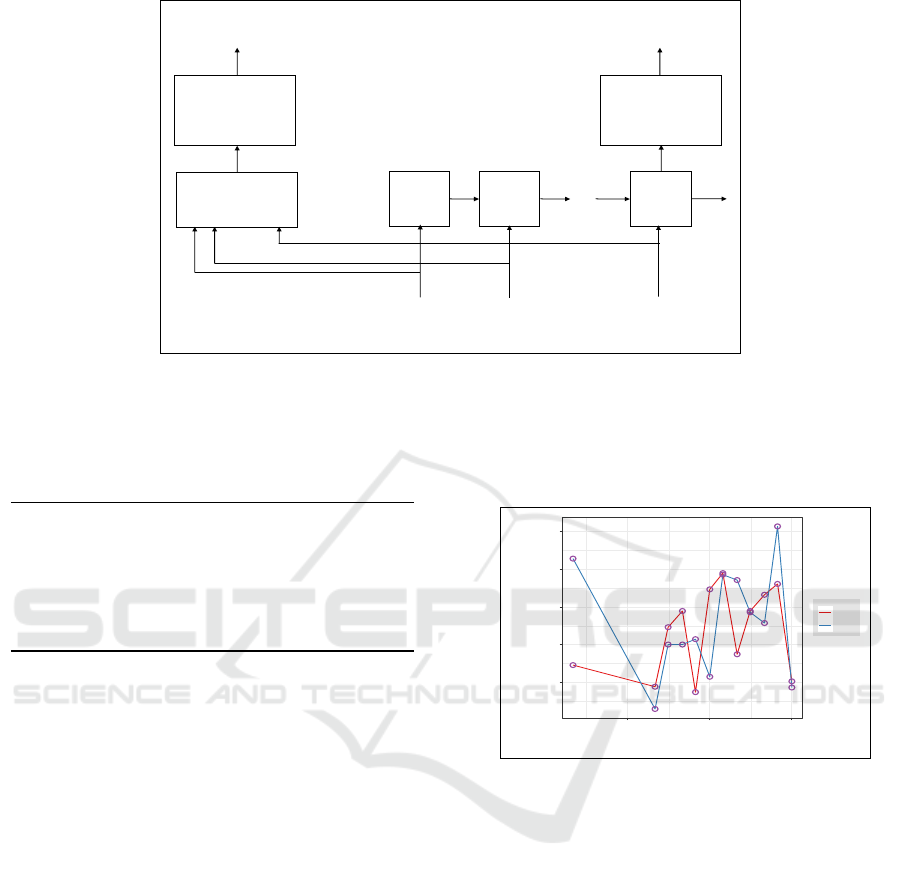

ted test-set. Figure 6 illustrates an architectural over-

view of the pipelines for both the main and baseline

computational paths.
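The lookup of the semantically closest translation record can be sketched as a cosine-similarity search over the mean-pooled sentiment vectors. The two-dimensional toy embeddings and the function name `closest_record` are hypothetical and serve only to keep the example readable.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; returns 0.0 if either has zero norm."""
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0

def closest_record(query_words, embeddings, pooled_sentiments):
    """Mean-pool the user's words and return (index, similarity) of the nearest record."""
    q = np.mean([embeddings[w] for w in query_words], axis=0)
    sims = [cosine_similarity(q, s) for s in pooled_sentiments]
    best = int(np.argmax(sims))
    return best, sims[best]

# Toy 2-D embeddings and two stored records (illustrative values only).
embeddings = {"joy": np.array([1.0, 0.0]),
              "love": np.array([0.0, 1.0]),
              "heart": np.array([0.2, 0.9])}
pooled_sentiments = [np.array([1.0, 0.1]), np.array([0.1, 1.0])]
index, similarity = closest_record(["love", "heart"], embeddings, pooled_sentiments)
```

The returned index identifies the translation record whose pivotal and supporting flowers would then be queried from the trained network.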

5.1 Experimental Setup

To evaluate our system in practice, we have implemented our own versions of a GRU-based RNN module and the Word2Vec embedding technique, both natively in R (R Core Team, 2013) for better integration with our software framework. Considering our self-sustained corpus of irregular context, we chose to collectively initialize our word vectors randomly and learn them purely from the dictionary data, instead of obtaining word vectors pre-trained on large vocabularies of external corpora that miss most of our flower names. Skip-gram and CBOW performed almost identically in terms of flower prediction accuracy; both are challenged by a language that combines short-text sentences with a small number of samples. Our model is trained to maximize the log-likelihood of predicting the ground-truth target flower for the language set of translation records, using mini-batch stochastic gradient descent (SGD) and performing error back-propagation. At every SGD iteration, GRU weight matrices and word vectors are updated using the AdaDelta parameter update rule (Zeiler, 2012).
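For reference, the AdaDelta rule keeps running averages of squared gradients and squared updates and scales each step by their ratio. The sketch below applies it to a toy quadratic objective; the decay `rho=0.95` and constant `eps=1e-6` are commonly used settings assumed here, since the paper does not report the values it used, and the class name is illustrative.

```python
import numpy as np

class AdaDelta:
    """Per-parameter AdaDelta state and update (after Zeiler, 2012)."""

    def __init__(self, shape, rho=0.95, eps=1e-6):
        self.rho, self.eps = rho, eps
        self.avg_sq_grad = np.zeros(shape)   # running average of squared gradients
        self.avg_sq_step = np.zeros(shape)   # running average of squared updates

    def update(self, param, grad):
        self.avg_sq_grad = self.rho * self.avg_sq_grad + (1 - self.rho) * grad ** 2
        step = -np.sqrt(self.avg_sq_step + self.eps) / np.sqrt(self.avg_sq_grad + self.eps) * grad
        self.avg_sq_step = self.rho * self.avg_sq_step + (1 - self.rho) * step ** 2
        return param + step

# Toy objective f(w) = ||w - target||^2, standing in for the negative log-likelihood.
target = np.array([1.0, -2.0, 0.5])
w = np.zeros(3)
opt = AdaDelta(w.shape)
loss_before = float(np.sum((w - target) ** 2))
for _ in range(100):
    grad = 2.0 * (w - target)
    w = opt.update(w, grad)
loss_after = float(np.sum((w - target) ** 2))
```

In the paper's setting one such state would be kept for each GRU weight matrix and for the word vectors, since both are updated at every SGD iteration.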

In Table 2, we list our experimental choices

of hyperparameters that control both the RNN and

Word2Vec subsystems. Given the succinct nature of

text fragments that constitute our sentiment phrase,


Figure 6: Architecture overview of computational pipelines for the mainline feedforward GRU-based RNN (right) and softmax regression baseline (left). [Diagram: sentiment phrase word-vectors $x_1, x_2, \ldots, x_k$ feed a chain of RNN cells with hidden states $h_1, h_2, \ldots, h_{k-1}, h_k$, followed by a softmax activation that yields $y_k$ flower predictions; in the baseline, the same word vectors are mean-pooled into $s$ and passed to softmax regression for flower classification.]

Table 2: Experimental choices of tuned hyperparameters applied to both RNN and Word2Vec modules.

| Hyperparameter | Notation | Value |
|---|---|---|
| Hidden state dimension | $n$ | 18 |
| Word vector dimension | $d$ | 100 |
| Context window | $c_w$ | 5 |
| Negative samples | $n_s$ | 10 |
| Mini-batch size | $b$ | 10 |
| Top ranked probabilities | $m$ | 10 |

we held the context window size $c_w$ at five words symmetrically, and assigned the number of negative samples $n_s$ to its recommended default value for small datasets (Mikolov et al., 2013b). Correspondingly, we allocated a reasonable fraction of our train set size for the SGD mini-batch size $b$. To optimize the score for predicting the flower types, we modified one hyperparameter at a time and kept the others fixed. This culminated in setting the preferred hidden-state dimension $n$ to the maximal word length of a sentiment phrase (Figure 4), and we noticed an accuracy improvement of 6.7 percentage points when increasing the word vector size $d$ from 10 to 100. However, we observed diminishing returns for embeddings larger than one hundred dimensions. Unless stated otherwise, the results we report were obtained using the skip-gram neural model and a unidirectional GRU-based RNN, with $m = 10$.

5.2 Experimental Results

We chose a simple baseline model that replaces RNN with softmax regression for learning, and as feature vectors uses a sentiment representation of mean-pooled word embeddings, $s \in \mathbb{R}^d$. Each short-text sentiment sequence of the train set is assigned one of five categorical labels (Figure 5), as we turn our problem into multi-class flower classification.
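A baseline of this kind can be sketched as L2-regularized softmax regression trained with plain gradient descent on mean-pooled vectors. The synthetic five-class data, the learning rate, the epoch count, and the L2 form of the regularizer are all assumptions made for illustration; the paper does not specify its optimizer or penalty.

```python
import numpy as np

def softmax_rows(A):
    """Row-wise numerically stable softmax."""
    A = A - A.max(axis=1, keepdims=True)
    E = np.exp(A)
    return E / E.sum(axis=1, keepdims=True)

def train_softmax_regression(S, labels, n_classes, reg=1e-3, lr=0.5, epochs=200):
    """Fit a (d, n_classes) weight matrix by gradient descent on the cross-entropy loss."""
    l, d = S.shape
    W = np.zeros((d, n_classes))
    Y = np.eye(n_classes)[labels]                 # one-hot targets
    for _ in range(epochs):
        P = softmax_rows(S @ W)
        grad = S.T @ (P - Y) / l + reg * W        # cross-entropy gradient plus L2 term
        W -= lr * grad
    return W

def predict(W, S):
    """Return the most probable class for each row of S."""
    return np.argmax(S @ W, axis=1)

# Synthetic stand-in for mean-pooled sentiment vectors in five clusters.
rng = np.random.default_rng(0)
labels = np.repeat(np.arange(5), 20)
centers = 2.0 * np.eye(5, 10)
S = centers[labels] + 0.1 * rng.normal(size=(100, 10))
W = train_softmax_regression(S, labels, n_classes=5)
accuracy = float(np.mean(predict(W, S) == labels))
```

The `reg` argument plays the role of the regularization parameter that is swept on a logarithmic scale in the baseline experiments.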

Figure 7: Baseline softmax regression results on an inclusive development set and exclusive test set, showing precision as a function of a logarithmic-scaled regularization parameter. [Plot: precision (vertical axis, 0.15 to 0.35) against regularization (horizontal axis, 1e-06 to 1e+00), with one curve each for the dev and test sets.]

For validation evaluation, we formed a two-way data split of 80/20 by proportionally sampling random sentiment sentences from each of the five training classes into a held-out development set. To construct our test set and allow for the study of our problem domain itself, we leveraged the generative capacity of an RNN-based language model (Sutskever et al., 2011), and created entirely new text sequences that closely resemble the emotional context at the core of the language of flowers. The test collection we built comprises 140 synthetic sentiment sentences of variable length, about 20 percent of the train set size, and is evaluated against the unsplit train set of size $l = 701$. Each of these extended sentiment phrases is paired with one of the existing flowers in the corpus and hence implicitly inherits a ground-truth multi-class label. The pairing of a flower to a generated test


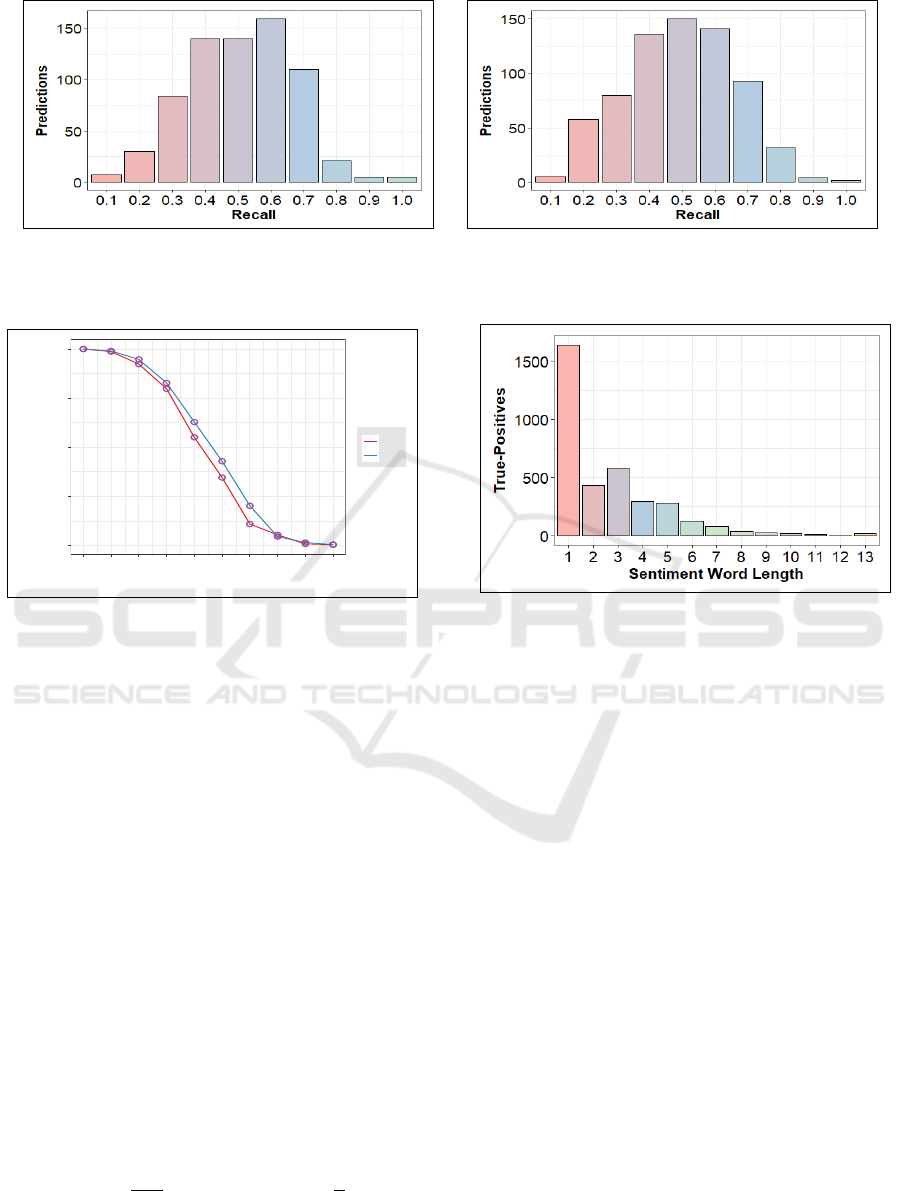

(a) Forward RNN (b) Backward RNN

Figure 8: Distribution of flower predictions that are sampled across the entire train set at discrete recall steps. Contrasting (a) forward $x_{1:k}$ with (b) backward $x_{k:1}$ propagation of a sentiment phrase in RNN.

Figure 9: Comparing standard to GRU-based RNN on percentage recall of flower predictions as a function of increased flower type count $\in (1, 2, \ldots, m = 10)$. Curves present a non-linear decline in performance. [Plot: recall (vertical axis, 0 to 100) against flower types (horizontal axis, 1 to 10), with one curve each for rnn and gru.]

phrase $g$ is obtained by computing the similarity with each of the train set sequences $s_i$, and finding the closest record in $\arg\max_{1 \le i \le l} \mathrm{sim}(g, s_i)$. Figure 7 shows our baseline performance results as a function of a logarithmic-scaled regularization parameter, on both the development and test data sets with an average precision of 0.22 and 0.23, respectively.

In evaluating our GRU-based RNN system, our main goal is to quantitatively assess the quality of flower predictions looked at from differing perspectives. The top-$m$ ranked probabilities presented in the network output layer may index any combination of flower and sentiment token types. We let $t_f$ denote the number of flower tokens and $t_s$ the sentiment token count in this $m$-sized window of descending probabilities. Thus, the performance interpretation of $t_f$ and $t_s$ in our model amounts to the true-positive and false-negative observations made in each flower selection query, respectively. For our flower prediction metric, we follow the recall measure of relevance that is defined as the ratio $\frac{t_f}{t_f + t_s}$, or more compactly $\frac{t_f}{m}$.
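As a worked instance of this metric, the short sketch below counts flower tokens in a top-$m$ window and divides by the window size. The token lists are made up for the example and the function name is hypothetical.

```python
def flower_recall(top_m_tokens, flower_tokens):
    """Recall as defined in the text: flower tokens t_f over the window size m = t_f + t_s."""
    m = len(top_m_tokens)
    t_f = sum(1 for tok in top_m_tokens if tok in flower_tokens)
    return t_f / m if m else 0.0

flowers = {"pansy", "verbena", "gladiolus"}
window = ["pansy", "love", "verbena", "heart", "gladiolus"]   # a top-5 window, t_f = 3, t_s = 2
recall = flower_recall(window, flowers)
```

For this window, three of the five ranked tokens are flowers, so the recall is 3/5.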

Figure 8 provides the distribution of flower predictions in recall bins of 0.1 increments for both forward and backward sentiment-phrase propagation in RNN (Schuster and Paliwal, 1997), and in Figure 9, we translate the discrete data to a continuous recall curve as a function of a non-descending number of flower types in a bouquet. The average recall for the sweet-spot content of one to five flower types is 87.8% for GRU-based, and 81.5% for plain RNN, both outperforming the softmax regression baseline by a factor of 3.8X and 3.5X, respectively. In Figure 10, we present the collective number of true-positives as a function of a non-descending word length of the sentiment text sequence. Flower predictions closely correlate with the word length distribution in the vocabulary (Figure 4), mainly owing to streaming sentiment context to RNN in an order prescribed by spatial proximity in semantic vector space.

Figure 10: Accumulated true-positives of flower predictions as a function of the word length of the sentiment phrase. The data produced matches the vocabulary distribution shown in Figure 4.

High-end bouquets created of tens of flower ty-

pes are considered a rarity, but despite their limited

presence and small floral market-share, they make an

important case for our system evaluation. In Figure

11, we review the distribution of flower predictions

for high-end bouquets with the system hyperparame-

ter m = 50. Distinctly, incidents of unpredictable bou-

quet selections do transpire and display a fairly sym-

ICAART 2018 - 10th International Conference on Agents and Artificial Intelligence

418

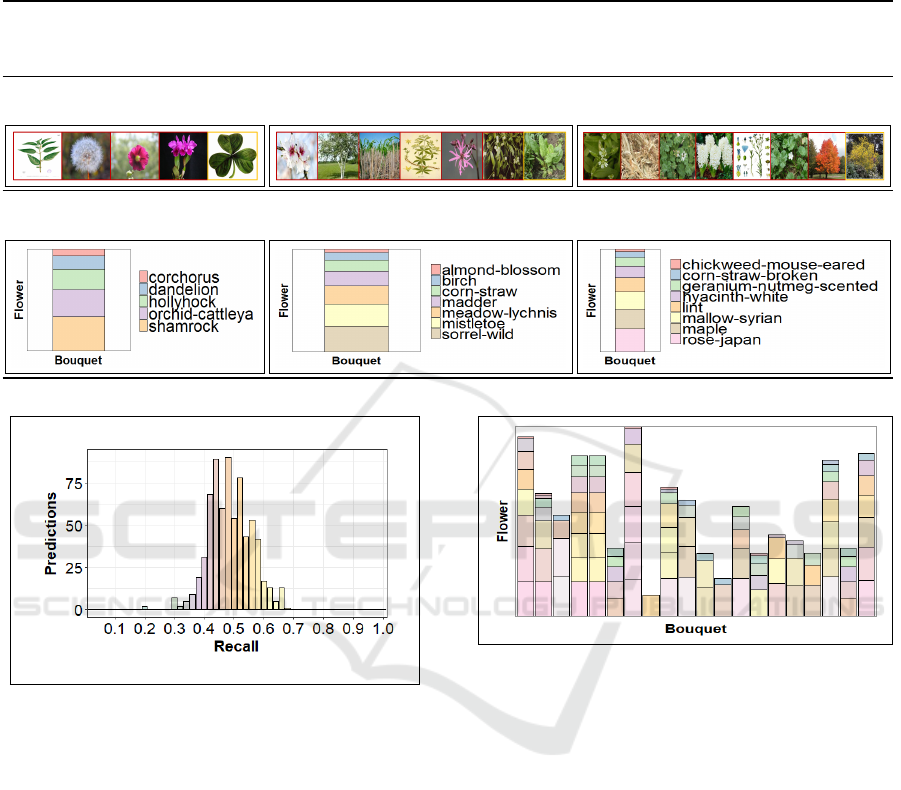

Table 3: Visualization of statistically created flower selections that were prompted by user-supplied sentiment expressions,

shown at the top. We outline side-by-side three bouquets of five, seven, and eight flower candidates, respectively. Depicted

are thumbnail images for both the pivotal flower, on the far right, and top-ranked supporting flowers to its left, along with

individual flower names and their proportional mix weights.

Sentiments

light heartedness wit ill-timed beauty is your only attraction

Flowers

Proportions

Figure 11: Distribution of flower predictions for high-end

bouquets (m = 50). Shown sampled at discrete recall steps

of five flower type bundles, as unpredictable selections

transpire symmetrically.

metrical behavior with sizes ranging from 1 to 14, ex-

cluding a bouquet size of ten plants with two predicti-

ons, and from 35 to 50 assuming no predictions. Con-

versely, for bouquets of mid-range sizes from 20 to

29 species that possess each at least twenty predicti-

ons, the total predictions amounts to 608 and repre-

sent about 86.7 percent of the training set dimension.

We note that unpredictable selection sizes are of very

low probability for mainline bouquets (m ≤ 10), and

for high-end bouquets, to ensure the requested num-

ber of flower types issued by the user is satisfied, our

system supplies the user a precompiled list of prefer-

red bouquet sizes to chose from, for each m.

In Table 3, we present an end-to-end visualization of our approach to statistically generated flower selections from decoded sentiment clauses. Outlined side-by-side are three bouquets of five, seven, and eight flower candidates, respectively. The incoming short-text sentiment sequences are first evaluated for the most similar sentiment phrase retained in one of the language translation records to enter our pipeline, shown at the top. We then depict compositional thumbnail images of the pivotal flower, placed on the far right, and the supplemental flowers to its left, along with individual names of plants and their respective proportional blend weights. Stacked relatively, slices are shown in a non-ascending manner, starting from the principal flower at the bottom and up to the lowest ranked flower. In Figure 12, we highlight a sample distribution of twenty sentiment-perceived bouquets, each with a unique center-of-attention flower. Selections are shown for varying sizes ranging from one to eight plant types.

Figure 12: A random sample distribution of sentiment-sensible bouquets, each shown with proportional weights of an assembled size that ranges from one to eight flower types.


6 CONCLUSIONS

In this work, we have demonstrated the plausible potential in composing a bouquet made of a lead and supporting flowers by attending to a set of unqualified sentiment expressions we stream over RNN. We confirmed that GRU-based RNN improved our flower prediction quality by about ten percentage points compared to a standard RNN, and that feeding RNN with spatiotemporal sentiment context proved particularly beneficial to the performance of short-text sequences. Yet using bidirectional propagation of sentiment word vectors that enter RNN was less intuitive, and contributed only an inconsequential prediction gain. Our proposed simple workflow offers on average high prediction recall to hundreds of mix choices for a mainline bouquet, and sends a more cohesive emotional message that is made of semantically related sentiments.

To the extent of our knowledge, the work we presented is the first to apply computational linguistic modeling to the language of flowers. We contend that floriography is an important NLP discipline to pursue owing to both its rooted historical impact on society and culture, and the prospect to influence areas of critical theory and sentiment analysis. In its current state, the corpus we used in this paper is small and challenging, but we anticipate the language to expand sentiment translation to thousands of flower plants and further merit our statistically reasoned system. A direct progression of our work is to evolve to a task that matches flower-sentiment pairs from unstructured full text and not just from a set of prescribed sentiment phrases, which would have profound practical importance for a much broader scope of application domains that include cryptography and secured communication.

ACKNOWLEDGEMENTS

We would like to thank the anonymous reviewers for

their insightful suggestions and feedback.

REFERENCES

Baeza-Yates, R. and Ribeiro-Neto, B., editors (1999). Modern Information Retrieval. ACM Press Series/Addison Wesley, Essex, UK.

Cho, K., van Merrienboer, B., Bahdanau, D., and Bengio, Y. (2014). On the properties of neural machine translation: Encoder-decoder approaches. CoRR, abs/1409.1259. http://arxiv.org/abs/1409.1259.

Chung, J., Gülçehre, Ç., Cho, K., and Bengio, Y. (2014). Empirical evaluation of gated recurrent neural networks on sequence modeling. CoRR, abs/1412.3555. http://arxiv.org/abs/1412.3555.

Diffenbaugh, V. (2011). Victoria’s dictionary of flowers. http://aboutflowers.com/images/stories/Florist/languageofflowers-flowerdictionary.pdf.

Elman, J. L. (1990). Finding structure in time. Cognitive Science, 14(2):179–211.

Hoang, C. D. V., Cohn, T., and Haffari, G. (2016). Incorporating side information into recurrent neural network language models. In Human Language Technologies: North American Chapter of the Association for Computational Linguistics (NAACL), pages 1250–1255, San Diego, California.

Hochreiter, S. and Schmidhuber, J. (1997). Long short-term memory. Neural Computation, 9(8):1735–1780.

Hofmann, T. and Buhmann, J. (1995). Multidimensional scaling and data clustering. In Advances in Neural Information Processing Systems (NIPS), pages 459–466. MIT Press, Cambridge, MA.

Kaufman, L. and Rousseeuw, P. J., editors (1990). Finding Groups in Data: An Introduction to Cluster Analysis. Wiley, New York, NY.

Lee, J. Y. and Dernoncourt, F. (2016). Sequential short-text classification with recurrent and convolutional neural networks. In Human Language Technologies: North American Chapter of the Association for Computational Linguistics (NAACL), pages 515–520, San Diego, California.

Mikolov, T., Chen, K., Corrado, G. S., and Dean, J. (2013a). Efficient estimation of word representations in vector space. CoRR, abs/1301.3781. http://arxiv.org/abs/1301.3781.

Mikolov, T., Karafiát, M., Burget, L., Černocký, J., and Khudanpur, S. (2010). Recurrent neural network based language model. In International Speech Communication Association (INTERSPEECH), pages 1045–1048, Chiba, Japan.

Mikolov, T., Sutskever, I., Chen, K., Corrado, G. S., and Dean, J. (2013b). Distributed representations of words and phrases and their compositionality. In Advances in Neural Information Processing Systems (NIPS), pages 3111–3119. Curran Associates, Inc., Red Hook, NY.

Pennington, J., Socher, R., and Manning, C. D. (2014). GloVe: Global vectors for word representation. In Empirical Methods in Natural Language Processing (EMNLP), pages 1532–1543, Doha, Qatar.

R Core Team (2013). R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria. http://www.R-project.org/.

Roof, L. and Roof, A. H. (2010). Language of flowers project. http://languageofflowers.com/index.htm.

Salton, G. M., Wong, A., and Yang, C. S. (1975). A vector space model for automatic indexing. Communications of the ACM, 18(11):613–620.

Schuster, M. and Paliwal, K. K. (1997). Bidirectional recurrent neural networks. IEEE Transactions on Signal Processing, 45(11):2673–2681.


Sutskever, I., Martens, J., and Hinton, G. (2011). Generating text with recurrent neural networks. In International Conference on Machine Learning (ICML), pages 1017–1024.

Sutskever, I., Vinyals, O., and Le, Q. V. (2014). Sequence to sequence learning with neural networks. In Advances in Neural Information Processing Systems (NIPS), pages 3104–3112. Curran Associates, Inc., Red Hook, NY.

Torgerson, W. S. (1958). Theory and Methods of Scaling. John Wiley and Sons, New York, NY.

Tran, K. M., Bisazza, A., and Monz, C. (2016). Recurrent memory networks for language modeling. In Human Language Technologies: North American Chapter of the Association for Computational Linguistics (NAACL), pages 321–331, San Diego, California.

Zeiler, M. D. (2012). ADADELTA: an adaptive learning rate method. CoRR, abs/1212.5701. http://arxiv.org/abs/1212.5701.
