Big Brother is Smart Watching You

Privacy Concerns about Health and Fitness Applications

Christoph Stach

Institute for Parallel and Distributed Systems, University of Stuttgart,

Universitätsstraße 38, D-70569 Stuttgart, Germany

Keywords:

Smartbands, Health and Fitness Applications, Privacy Concerns, Privacy Management Platform.

Abstract:

Health and fitness applications for mobile devices are becoming more and more popular. Due to novel wearable metering devices, the so-called Smartbands, these applications are able to capture both health data (e. g., the heart rate) and personal information (e. g., location data) and create a quantified self for their users. However, many of these applications violate the user's privacy and misuse the collected data. It becomes apparent that this threat is inherent in the privacy systems implemented in mobile platforms. Therefore, we apply the Privacy Policy Model (PPM), a fine-grained and modularly expandable permission model, to deal with this problem. We implement our adapted model in a prototype based on the Privacy Management Platform (PMP). Subsequently, we evaluate our model with the help of the prototype and demonstrate its applicability for any application using Smartbands for its data acquisition.

1 INTRODUCTION

With the Internet of Things, the technology landscape has changed lastingly. New devices with various sensors and sufficient processing power to execute small applications, so-called Smart Devices, have captured the market. Due to their capability to interconnect with each other via energy-efficient technologies such as Bluetooth LE, they are able to share their sensor data with other devices over a long period of time. As a consequence of this accumulation of data from highly diverse domains (e. g., location data, health data, or activity data), new types of devices as well as novel use cases for pervasive applications emerge. In the consumer market, Smartbands are currently especially popular, i. e., hardware devices worn on the wrist that are equipped with, among others, GPS and a heartbeat sensor. Such devices are controlled via Smartphone applications and provide the recorded data to these applications. The applications analyze the data, augment it with additional knowledge about the user which is stored on the Smartphone, and gain further insights from it. Since Smartbands are small and comfortable to wear, they do not interfere with their users' activities in any way. So, they can be kept on even when doing sports or while sleeping.

That is why innovative fitness applications that make use of Smartbands are emerging. Such applications are able, e. g., to capture the user's movement patterns in order to determine his or her current activity (Knighten et al., 2015), to track him or her in order to calculate the traveled distance, his or her speed, as well as the nature of the route (this data can be used to calculate the calorie consumption) (Wijaya et al., 2014), and even to analyze his or her sleeping behavior (Pombo and Garcia, 2016). This data is processed on the Smartphone and visualized in a user-friendly manner. However, in order to achieve a quantified self, i. e., a comprehensive mapping of our lifestyle to quantifiable values to assess our daily routines, this data is sent to a central storage where it is further analyzed and provided to other stakeholders, such as physicians (Khorakhun and Bhatti, 2015).

While this technology has the potential to radically change the quality of human life, as an unhealthy lifestyle or looming diseases can be detected at an early stage, it also constitutes a threat to the user's privacy. As Smartbands collect a lot of sensitive data, an attacker could gain insights into a user's daily routines or even his or her health status.

Thus, a lot of research addresses the vulnerability of the involved devices (Smartband, Smartphone, and back-end) or of the data transfer channels in between (Lee et al., 2016). Due to the vulnerabilities detected in this research, the Federal Trade Commission proposed a catalog of measures on how to provide security for these devices (Mayfield and Jagielski, 2015).

Stach, C.

Big Brother is Smart Watching You - Privacy Concerns about Health and Fitness Applications.

DOI: 10.5220/0006537000130023

In Proceedings of the 4th International Conference on Information Systems Security and Privacy (ICISSP 2018), pages 13-23

ISBN: 978-989-758-282-0

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

However, all of these efforts target attacks from the outside. Since a lot of stakeholders are interested in this kind of data, including insurance companies and the advertising industry, the data becomes highly valuable (Funk, 2015), and a lot of "free" applications sell the collected data to third parties (Leontiadis et al., 2012). This brings up a completely different problem: How can the user be informed about the data usage of an application, and how can s/he be enabled to control the data access privileges of an application as well as to anonymize his or her data before providing it to an application (Patel, 2015)?

To this end, this paper makes the following contributions:

• We analyze the state of the art as well as research projects concerning the protection of private data in the context of Smartband applications.

• We adapt a privacy policy model which enables users to control the data usage of Smartband applications in a fine-grained manner. Our approach is based on the Privacy Management Platform (PMP) and its Privacy Policy Model (PPM) (Stach and Mitschang, 2013, Stach and Mitschang, 2014).

• We introduce a prototypical implementation of a privacy mechanism for Smartband applications using our privacy policy model.

• We evaluate our approach and demonstrate its applicability.

The remainder of the paper is structured as follows. In Section 2, the privacy control mechanisms of the currently prevailing mobile platforms (namely Apple's iOS and Google's Android) are discussed, and the prevailing connection standard Bluetooth LE is characterized. Section 3 looks at related work, that is, enhanced privacy control mechanisms for mobile platforms. Our approach for such a mechanism, specifically for Smartbands and similar devices, is introduced in Section 4. Following this, our generic concept is implemented using the PMP in Section 5. Section 6 evaluates our approach and reveals whether it fulfills the requirements for such a privacy control mechanism. Finally, Section 7 concludes this paper and glances at future work.

2 STATE OF THE ART

In the following, we explain why especially the usage of applications for Smartbands and similar Smart Devices, such as health or fitness applications, constitutes a real threat to privacy. To this end, it is necessary to look at the privacy mechanisms implemented in mobile platforms as well as at how a Smartband is connected to a Smartphone.

Privacy Mechanisms in Current Mobile Platforms.

Every relevant mobile platform applies some kind of permission system to protect sensitive data (Felt et al., 2012a). In effect, this means that every application has to declare which data it processes. The system validates for each data access whether the permission can be granted. A permission does not refer to a certain dataset but to a sensor or a potentially dangerous system functionality (Barrera et al., 2010). Every mobile platform implements this concept differently.

An iOS application requires Apple's approval prior to its release. In this process, automated and manual verification methods check whether the permission requests are justified. If the permissions are granted by Apple, the application is signed and released. The user is only informed about permissions which affect his or her personal information (e. g., the contacts).

In contrast, Google does not engage in the permission process at all (Enck et al., 2009). When an Android application is installed, the user is informed about every requested permission and has to grant all of them in order to be able to proceed with the installation (Barrera and Van Oorschot, 2011). Android 6.0 introduced Runtime Permissions. A Runtime Permission is not requested at installation time, but at runtime, when the application accesses data protected by the respective permission.

However, studies show that users are unable to cope with the huge number of different permissions, especially since they do not understand which consequences granting a certain permission has (Felt et al., 2012b). This is why, since Android 6.0, Google divides Android's permissions into two classes: Normal Permissions no longer require the user's approval. Only Dangerous Permissions (which are a superset of the Runtime Permissions) have to be granted. For instance, the ACCESS_FINE_LOCATION (access to the GPS) and BODY_SENSORS (access to heart rate data) permissions belong to this category. Yet, the BLUETOOTH and INTERNET permissions are classified as Normal Permissions.

Figure 1 depicts the consequences of these changes. An application which needs to access GPS data, discover, pair, and connect to Bluetooth devices, as well as open network sockets has to declare the following four permissions in its Manifest: ACCESS_FINE_LOCATION, BLUETOOTH, BLUETOOTH_ADMIN, and INTERNET. On devices running a pre-Marshmallow Android version (< 6.0), the user has to grant all permissions at installation time. The installation dialog, however, informs him or her only about the Dangerous Permissions (see Figure 1a). On devices with a higher Android version, even the Runtime Permissions are hidden (see Figure 1b). Instead, s/he has to grant the permission every time the application tries to access GPS data (see Figure 1c). In any case, the user is not aware of the fact that the application is also able to send this data to any Bluetooth device or the Internet.

ICISSP 2018 - 4th International Conference on Information Systems Security and Privacy

(a) Android 5.1 Installation Dialog (b) Android 6.0 Installation Dialog (c) Request at Runtime

Figure 1: Permission Requests in Different Android Versions.

Transmission Standard of Smart Devices.

Most current Smart Devices use Bluetooth LE to connect to each other, as it has a lower power consumption than Classic Bluetooth and a longer range than NFC. The device vendor defines UUIDs via which other devices are able to request the device's services. For instance, a service of a Smartband could provide access to the heart rate. The vendor also specifies how its device encodes the data. As a consequence, a mobile platform cannot determine what data is transferred between a Smartphone and another Smart Device, since it neither knows the mapping of the UUIDs to services nor can it look into the transmitted data. This is why the permissions refer only to the general usage of Bluetooth connections and not to the type of data which is transmitted.

The same holds for the onward transmission of the data to a server. Here too, an application only has to indicate that it needs access to the Internet, but the user is aware neither of what kind of data the application sends out nor of where the data is sent.
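To make this opacity concrete, the following Java sketch contrasts what the platform sees (a UUID and a byte array) with what a format-aware application sees. For illustration it uses the Bluetooth SIG's standard Heart Rate Measurement characteristic (0x2A37), in which bit 0 of the first (flags) byte selects an 8-bit or a 16-bit little-endian heart rate value; a proprietary Smartband may use a completely different UUID and encoding. The class and method names are hypothetical.

```java
import java.util.Arrays;
import java.util.UUID;

/** Hypothetical decoder: only code that knows the format can interpret the bytes. */
final class HeartRateDecoderSketch {
    // Standard GATT Heart Rate Measurement characteristic (0x2A37) in 128-bit form.
    static final UUID HEART_RATE_MEASUREMENT =
            UUID.fromString("00002a37-0000-1000-8000-00805f9b34fb");

    /** What the platform sees: an identifier and opaque bytes. */
    static String platformView(UUID characteristic, byte[] value) {
        return characteristic + " -> " + Arrays.toString(value);
    }

    /** What a format-aware application sees: beats per minute. */
    static int decodeHeartRate(UUID characteristic, byte[] value) {
        if (!HEART_RATE_MEASUREMENT.equals(characteristic)) {
            throw new IllegalArgumentException("unknown characteristic " + characteristic);
        }
        int flags = value[0] & 0xFF;
        if ((flags & 0x01) == 0) {
            return value[1] & 0xFF;                          // UINT8 heart rate value
        }
        return (value[1] & 0xFF) | ((value[2] & 0xFF) << 8); // UINT16, little-endian
    }
}
```

Changing the UUID or the byte layout breaks `decodeHeartRate` but leaves `platformView` unchanged, which is why a permission bound to "Bluetooth" cannot express anything about the type of data.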

Assume that a Smartband has a built-in GPS and heart rate sensor. It is therefore able to provide both location and health data to applications. To that end, the application only needs the permissions to discover, pair, and connect to Bluetooth devices (BLUETOOTH and BLUETOOTH_ADMIN). Yet, both permissions belong to the Normal Permissions category, i. e., the system automatically grants them and the user is not informed about it. If the application wants to access the very same data from the Smartphone directly, the permissions ACCESS_FINE_LOCATION and BODY_SENSORS are required. Both of them belong to the Dangerous Permissions category, i. e., the user has to grant every access at runtime. This classification is reasonable, as the covered data reveals a lot of private information about the user. The usage of a Smartband bypasses this protective measure completely. Moreover, the application is able to share this information with any external sink without the user's knowledge. It only has to declare the INTERNET permission in its Manifest, which is also a Normal Permission. Therefore, a static permission-based privacy mechanism as implemented in current mobile platforms is unsuitable for health or fitness applications using Smartbands.
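The bypass can be reproduced with a toy model of the Android 6.0 classification described above. This Java sketch is not the Android API; the enum and class names are hypothetical, and only the permission names and their Normal/Dangerous classes are taken from the text.

```java
import java.util.EnumSet;
import java.util.Set;

/** Toy model of the Android 6.0 permission classes; not the Android API. */
enum DeclaredPermission {
    ACCESS_FINE_LOCATION(true),
    BODY_SENSORS(true),
    BLUETOOTH(false),
    BLUETOOTH_ADMIN(false),
    INTERNET(false);

    /** true = Dangerous Permission (user is asked), false = Normal Permission (granted silently). */
    final boolean dangerous;

    DeclaredPermission(boolean dangerous) {
        this.dangerous = dangerous;
    }
}

final class PermissionPromptSketch {
    /** Returns the subset of declared permissions the user is actually asked about. */
    static Set<DeclaredPermission> prompted(Set<DeclaredPermission> manifest) {
        Set<DeclaredPermission> result = EnumSet.noneOf(DeclaredPermission.class);
        for (DeclaredPermission p : manifest) {
            if (p.dangerous) {
                result.add(p);
            }
        }
        return result;
    }
}
```

Declaring BLUETOOTH, BLUETOOTH_ADMIN, and INTERNET yields no prompt at all, although the Smartband delivers location and heart rate data; obtaining the same data directly via ACCESS_FINE_LOCATION and BODY_SENSORS yields two prompts.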

As Android assigns the responsibility for sensitive data to the user, a security vulnerability such as the careless handling of data interchanges with Smartbands has the most serious consequences on this platform. Thus, the remainder of this paper focuses on Android. However, the insights and concepts are applicable to any other mobile platform.

3 RELATED WORK

As the prevailing mobile platforms provide no adequate protection for sensitive data, there are a lot of research projects dealing with better privacy mechanisms for these platforms. In the following, we present a representative sample of these approaches and determine to what extent they are applicable to Smartband applications.

Apex (Nauman et al., 2010) enables the user to add contextual conditions to each Android permission. These conditions specify situations in which a permission is granted. For instance, the user can set a timeframe in which an application gets access to private data or define a maximum number of times a certain data access is allowed. If the condition is not met, a SecurityException is raised and the application crashes. Furthermore, as Apex is based on the existing Android permissions, it is too coarse-grained for the Smartband use case.
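Apex's own policy language is not described here, so the following Java sketch merely illustrates the two example conditions named above (a daily timeframe and a maximum number of accesses) attached to one permission. The class and its behavior are hypothetical; only the SecurityException on a violated condition mirrors the text.

```java
import java.time.LocalTime;

/**
 * Hypothetical Apex-style conditional permission: granted only inside a daily
 * time window and at most maxUses times. Not Apex's actual implementation.
 */
final class ConditionalPermissionSketch {
    private final LocalTime from;
    private final LocalTime until;
    private final int maxUses;
    private int uses = 0;

    ConditionalPermissionSketch(LocalTime from, LocalTime until, int maxUses) {
        this.from = from;
        this.until = until;
        this.maxUses = maxUses;
    }

    /** Throws SecurityException (the crash described above) if a condition fails. */
    void check(LocalTime now) {
        boolean inWindow = !now.isBefore(from) && now.isBefore(until);
        if (!inWindow || uses >= maxUses) {
            throw new SecurityException("contextual condition not met at " + now);
        }
        uses++;
    }
}
```

The sketch also shows the limitation named above: the condition is attached to a whole permission, so it cannot tell heart rate data from location data arriving over the same Bluetooth permission.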


AppFence (Hornyack et al., 2011) analyzes the internal dataflow of applications. When data from a privacy-critical source (e. g., the camera or the microphone) is sent to the Internet, the user is informed. S/he is then able to alter the data before it is sent out, or s/he can enable the flight mode whenever the affected application is started. However, AppFence does not know which data an application reads from a Bluetooth source. Thus, it cannot differentiate whether an application accesses trivial data from headphones (e. g., the name of the manufacturer) or private data from a Smartband (e. g., health data). Moreover, AppFence cannot identify to which address the data is sent.

Aurasium (Xu et al., 2012) introduces an additional sandbox which is injected into every application. This has to be done before the application is installed. The sandbox monitors its embedded application and intercepts each access to system functions. Thereby, Aurasium is not limited to the permissions predefined by Android. Especially for the access to the Internet, Aurasium introduces fine-grained configuration options, e. g., to specify to which servers the application may send data. For every other permission, the user can merely decide whether s/he wants to grant or deny it. Moreover, Aurasium is not extensible. That is, it cannot react to new access modes as introduced by Smartbands, where several data types can be accessed with the same permission. Also, the bytecode injection which is required for every application is costly and violates copyright and related rights.

Data-Sluice (Saracino et al., 2016) considers solely the problem of uncontrolled data transfer to external sinks. Therefore, Data-Sluice monitors any kind of network activity. As soon as an application attempts to open a network socket, the user is informed and can decide whether the access should be allowed or denied. Additionally, Data-Sluice logs every network access and is able to blacklist certain addresses. However, the user is neither informed about which data is sent to the network nor able to limit an application's access to data from any source other than the Internet.

I-ARM Droid (Davis et al., 2012) is the most com-

prehensive approach. The user defines critical code

blocks (i. e., a sequence of commands that accesses or

processes private data) and specifies rewriting rules for

each of them. A generic converter realizes the rewri-

ting at bytecode level. However, this approach is much

too complex for common users. As a consequence,

its derivative RetroSkeleton (Davis and Chen, 2013)

assigns this task to a security expert who creates a con-

figuration according to the user’s demands. Because

of this, frequent changes of the privacy rules are not

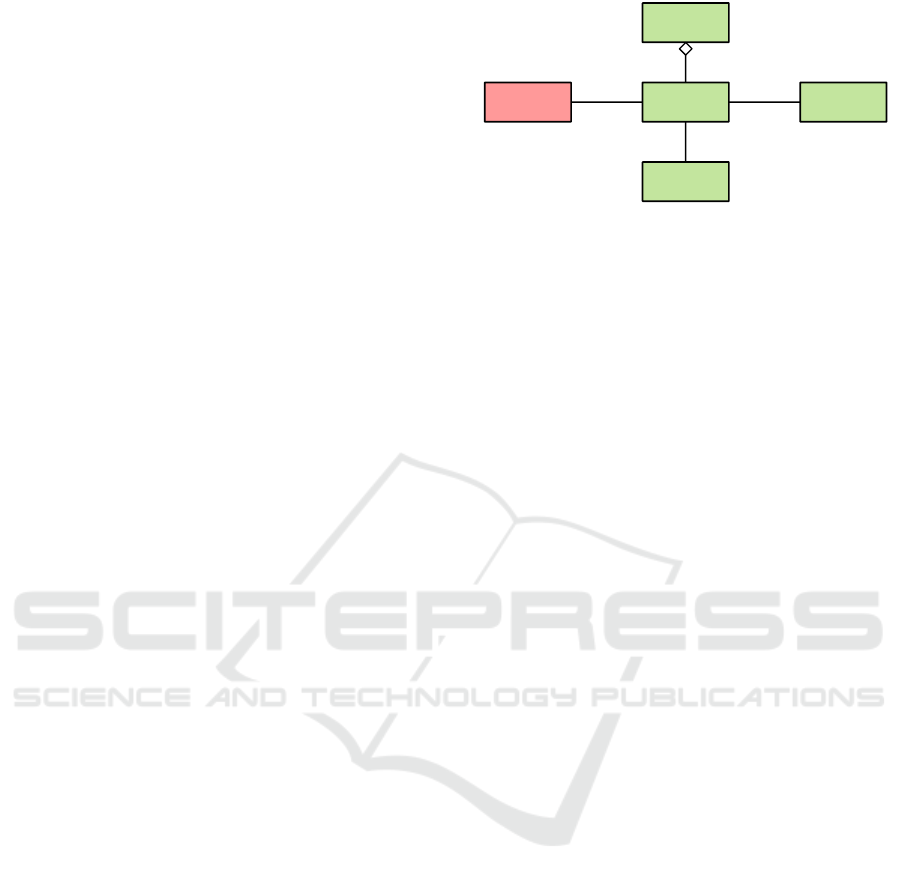

Privacy

Settings

Resource

Group

Application

Privacy

Rule

Privacy

Policy

Services Data

Configuration

Figure 2: Simplified Representation of the Privacy Policy

Model; Untrusted Components are Shaded Red and Trusted

Components are Shaded Green (cf. (Stach and Mitschang,

2013)).

possible—not to mention rule adjustments at runtime.

Additionally, the expert has to know each conceivable

code block that could violate the user’s privacy. In

other words s/he has to know every available Smart-

band, as each vendor defines a specific communication

protocol.

4 A PERMISSION MODEL FOR SMARTBANDS

None of the privacy mechanisms mentioned in the foregoing section is applicable to health or fitness applications using Smartbands, as their permissions are too coarse-grained and they lack the modular expandability needed to support novel device or data types. The Privacy Management Platform (PMP) (Stach and Mitschang, 2013, Stach and Mitschang, 2014, Stach, 2015) provides these features. Furthermore, the PMP facilitates the connection of Smart Devices to Smartphones (Stach et al., 2017b).

Therefore, we extend the PMP by two additional components, the Smartband Resource Group and the Internet Resource Group. With these two components, the PMP enables users to provide the data from Smartbands to applications in a privacy-aware manner and also restricts the transmission of sensitive data to the Internet.

For that purpose, we characterize the Privacy Policy Model (PPM), which is the foundation of the PMP, and describe how we adapt it to the Smartband setting (Section 4.1). Then we outline the operating principle of the PMP (Section 4.2). Lastly, we introduce the concept of our two extensions (Section 4.3 and Section 4.4).

4.1 The Privacy Policy Model

The PPM interrelates applications with data sources or system functions (labeled as Resource Groups). An application describes its Features and specifies which data or system functions are required for their execution. A Resource Group defines an interface via which an application can access its data or execute certain system functions. In Privacy Rules, the user declares which of an application's Features should be deactivated in order to reduce its access to data or system functions. Moreover, s/he can refine each Privacy Rule by adding Privacy Settings, e. g., to downgrade the accuracy of a Resource Group's data. The set of all Privacy Rules forms the Privacy Policy. The model assumes that applications are untrusted components, while Resource Groups are provided by trustworthy parties. Figure 2 shows the PPM as a simplified UML-like class diagram. For more information on the PPM, please refer to the literature (Stach and Mitschang, 2013).

Figure 3: Architecture of Resource Groups (class diagram: a ResourceGroup bundles 1..* Resources, which implement the <<interface>> IResource).
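The relationships of the PPM can be sketched as a small Java data model. This is a hypothetical illustration, not the PMP's code: class names are invented, and the rule that an application without any Privacy Rule gets no access is an assumption derived from treating applications as untrusted.

```java
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** An application Feature and the Resource Groups it needs (hypothetical). */
final class FeatureSketch {
    final String name;
    final Set<String> requiredResourceGroups;

    FeatureSketch(String name, Set<String> requiredResourceGroups) {
        this.name = name;
        this.requiredResourceGroups = requiredResourceGroups;
    }
}

/** A Privacy Rule: deactivated Features plus Privacy Settings refining the rule. */
final class PrivacyRuleSketch {
    final Set<String> deactivatedFeatures;
    final Map<String, String> privacySettings; // e.g. "location.accuracy" -> "500"

    PrivacyRuleSketch(Set<String> deactivatedFeatures, Map<String, String> privacySettings) {
        this.deactivatedFeatures = deactivatedFeatures;
        this.privacySettings = privacySettings;
    }
}

/** The Privacy Policy: the set of all Privacy Rules, here keyed by application. */
final class PrivacyPolicySketch {
    private final Map<String, PrivacyRuleSketch> rules = new HashMap<>();

    void put(String app, PrivacyRuleSketch rule) {
        rules.put(app, rule);
    }

    /** Resource Groups reachable through the app's active Features; no rule means no access. */
    Set<String> accessibleResourceGroups(String app, List<FeatureSketch> features) {
        Set<String> result = new LinkedHashSet<>();
        PrivacyRuleSketch rule = rules.get(app);
        if (rule == null) {
            return result; // untrusted application without a Privacy Rule
        }
        for (FeatureSketch f : features) {
            if (!rule.deactivatedFeatures.contains(f.name)) {
                result.addAll(f.requiredResourceGroups);
            }
        }
        return result;
    }
}
```

Deactivating a Feature removes the Resource Groups only that Feature needed; the Privacy Settings stored in a rule then further restrict the groups that remain.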

In the context of this work, only the Resource Groups are of interest. Figure 3 gives an insight into the architecture of a Resource Group. Each Resource Group defines an interface (IResource) and descriptors specifying which Privacy Settings can be applied to it. The actual implementation of the defined functions is given in Resources. A single Resource Group can bundle many Resources, i. e., many alternative implementation variants of the interface. For instance, a Resource Group Location could provide a single method to query the user's current location. This method is implemented in two variants, once using the GPS and once using the Cell-ID. Depending on the available hardware, the user's settings, etc., the Resource Group selects the proper Resource when an application requests the data. In addition, the Location Resource Group could define a Privacy Setting Accuracy via which the user defines how accurate the location data is, that is, by up to how many meters the provided location may differ from the actual location in order to restrict the application's data access. Naturally, s/he is also able to prohibit access to the Resource Group completely for a certain application.
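One way to honor such an Accuracy setting is to snap positions to a grid whose cell size equals the configured accuracy. The following Java sketch assumes, for brevity, positions in a local metric frame (meters east and north of an origin) rather than latitude and longitude; the class names are hypothetical and the PMP's actual obfuscation method is not specified here.

```java
/** A position in a local metric frame (x east, y north, in meters); an assumption for brevity. */
final class PlanarLocation {
    final double x;
    final double y;

    PlanarLocation(double x, double y) {
        this.x = x;
        this.y = y;
    }

    double distanceTo(PlanarLocation other) {
        return Math.hypot(x - other.x, y - other.y);
    }
}

final class AccuracySettingSketch {
    /**
     * Snaps both coordinates to a grid with cell size accuracyMeters. Each axis moves by at most
     * accuracyMeters / 2, so the provided location is at most accuracyMeters / sqrt(2) away.
     */
    static PlanarLocation apply(PlanarLocation actual, double accuracyMeters) {
        if (accuracyMeters <= 0) {
            return actual; // 0 or less: full precision
        }
        double x = Math.round(actual.x / accuracyMeters) * accuracyMeters;
        double y = Math.round(actual.y / accuracyMeters) * accuracyMeters;
        return new PlanarLocation(x, y);
    }
}
```

Snapping is chosen over adding random noise so that repeated queries from the same spot return the same value; an application therefore cannot average the noise away.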

4.2 The Privacy Management Platform

The PMP is a privacy mechanism which implements the PPM. Due to the model's features mentioned above, the PMP has two characteristics which are highly beneficial for the work at hand: (a) On the one hand, the PMP is expandable by modules. That is, further Resource Groups as well as Resources can be added at runtime. That way, recent device models (by adding Resources) and even completely new kinds of devices or sensors (by adding Resource Groups) can be supported. (b) On the other hand, the PMP supports a fine-grained access control. Each Resource Group defines its own Privacy Settings, which meet the demands of the corresponding device. So, a user is not just able to turn a device or sensor on and off in order to protect his or her private data, but s/he can also add numeric or textual restrictions. For instance, a Resource Group for location information can have a numeric Privacy Setting via which the accuracy of the location data can be reduced, or an Internet Resource Group can use a textual Privacy Setting to specify to which addresses an application is allowed to send data.
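Both characteristics can be illustrated together: a registry to which Resource Groups are added while it is in use, each declaring its own typed Privacy Settings. The following Java sketch is hypothetical; the descriptor kinds (two-valued, numeric, textual) follow the text, while all class, method, and setting names are invented for illustration.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Predicate;

/** Describes one Privacy Setting and which values are valid for it (hypothetical). */
final class SettingDescriptor {
    final String id;
    final Predicate<String> isValid;

    private SettingDescriptor(String id, Predicate<String> isValid) {
        this.id = id;
        this.isValid = isValid;
    }

    static SettingDescriptor twoValued(String id) {
        return new SettingDescriptor(id, v -> "true".equals(v) || "false".equals(v));
    }

    static SettingDescriptor numeric(String id) {
        return new SettingDescriptor(id, v -> {
            try {
                Double.parseDouble(v);
                return true;
            } catch (NumberFormatException | NullPointerException e) {
                return false;
            }
        });
    }

    static SettingDescriptor textual(String id) {
        return new SettingDescriptor(id, v -> v != null);
    }
}

/** Resource Groups can be registered at any time, mirroring module expandability. */
final class ResourceGroupRegistrySketch {
    private final Map<String, Map<String, SettingDescriptor>> groups = new ConcurrentHashMap<>();

    void register(String groupId, SettingDescriptor... settings) {
        Map<String, SettingDescriptor> byId = new HashMap<>();
        for (SettingDescriptor s : settings) {
            byId.put(s.id, s);
        }
        groups.put(groupId, byId);
    }

    /** True only if the group exists, declares the setting, and the value is valid for it. */
    boolean accepts(String groupId, String settingId, String value) {
        Map<String, SettingDescriptor> settings = groups.get(groupId);
        if (settings == null || !settings.containsKey(settingId)) {
            return false;
        }
        return settings.get(settingId).isValid.test(value);
    }
}
```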

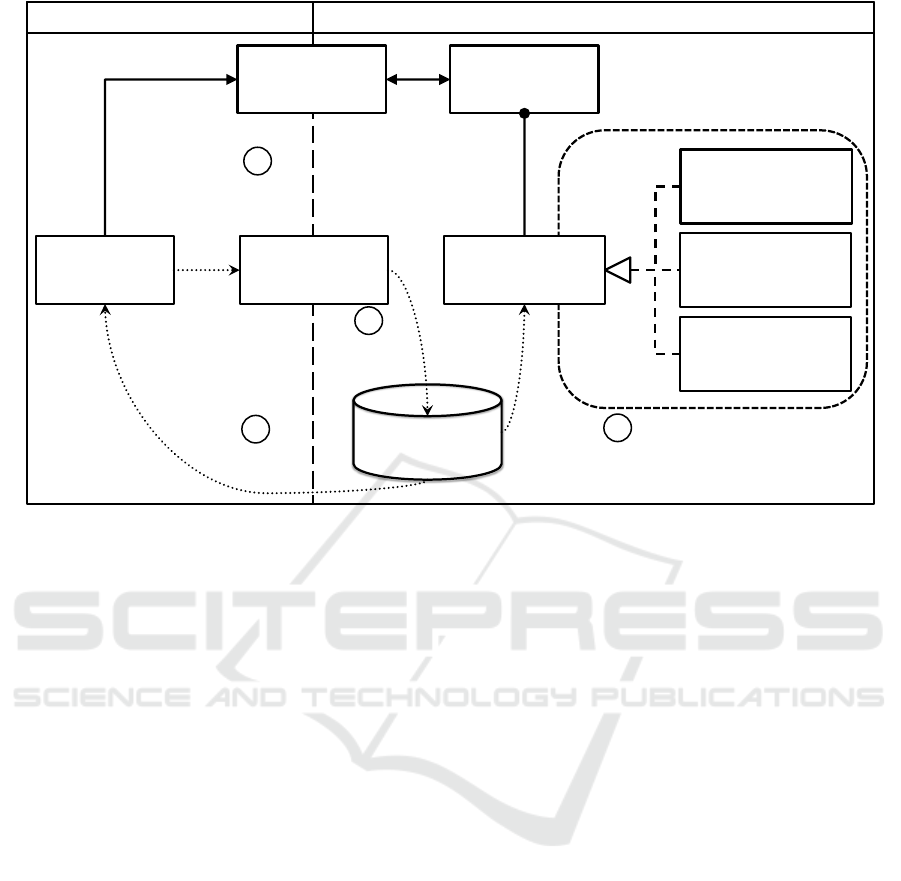

To accomplish these objectives, the PMP is an intermediate layer between the application layer and the actual application platform. For simplification purposes, the PMP can be seen as an interface to the application platform in the context of this work. Figure 4 shows the implementation model of the PMP in a simplified representation. Initially, an application requests access to data sources or system functions, i. e., to a Resource Group, via the PMP API (1). The PMP checks whether this request complies with the Privacy Rules in the Privacy Policy (2). These rules also specify which restrictions (Privacy Settings) apply to the respective application. If the access is granted, a fitting Resource within the requested Resource Group is selected (3). For each Resource, the PMP also offers two fake implementations (Cloak Implementation and Mock Implementation) with highly anonymized or completely randomized data. The proper implementation of the selected Resource is then bound as a Binder¹ to the IBinder interface, and the PMP forwards the corresponding Binder Token to the inquiring application (4).

The actual access to a Resource is realized by the Android Binder Framework. Proxy components specified therein use interprocess communication (IPC) to interchange data with the Stub bound to the implementation of the Resource. An application cannot access a Resource directly without the appropriate Binder Token. This ensures that every data request has to be made via the PMP; as a consequence, the PMP is able to verify for every request whether it complies with the Privacy Policy. As all Resource Groups are implemented as subpackages of the PMP and run in the same process, they are executed in a shared sandbox. That way, the PMP is able to interact with Resource Groups directly.

1 See https://developer.android.com/reference/android/os/Binder.html


Figure 4: Simplified Implementation Model of the Privacy Management Platform (steps: (1) Resource Access, (2) Permission Check, (3) Selection of Implementation Variant, (4) Binder Token).
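Steps (1) to (4) can be traced in a single-process Java simulation. This is a sketch, not the PMP: the real platform hands out a Binder Token and communicates via Android's Binder IPC, whereas here the returned handle object merely stands in for that token. The interface and class names, as well as the Cloak behavior (rounding down to tens) and the Mock value (70 bpm), are assumptions.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.IntSupplier;

/** Stand-in for a Resource interface such as IResource (hypothetical). */
interface HeartRateResource {
    int getHeartRate();
}

enum ImplementationVariant { REAL, CLOAK, MOCK }

/** Single-process simulation of steps (1) to (4); no Binder IPC involved. */
final class PmpFlowSketch {
    private final Map<String, ImplementationVariant> privacyPolicy = new HashMap<>();
    private final IntSupplier sensor;

    PmpFlowSketch(IntSupplier sensor) {
        this.sensor = sensor;
    }

    void setRule(String app, ImplementationVariant variant) {
        privacyPolicy.put(app, variant);
    }

    /** (1) Resource access requested via the PMP API. */
    HeartRateResource requestResource(String app) {
        // (2) Permission check against the Privacy Policy.
        ImplementationVariant variant = privacyPolicy.get(app);
        if (variant == null) {
            throw new SecurityException(app + " has no Privacy Rule for this Resource Group");
        }
        // (3) Selection of the implementation variant; (4) the returned handle plays the
        // role of the Binder Token, so the application never touches the sensor directly.
        switch (variant) {
            case REAL:
                return sensor::getAsInt;
            case CLOAK:
                return () -> sensor.getAsInt() / 10 * 10; // anonymized: rounded down to tens
            default:
                return () -> 70;                          // mock: fixed, plausible value
        }
    }
}
```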

Due to these features, we are able to use the PMP for our approach of a privacy mechanism for Smartband applications. Essentially, two additional Resource Groups are required to achieve this goal: a Resource Group for Smartbands which restricts access to the diverse data types of these devices, and a second Resource Group which restricts the data transfer of Smartband applications to the Internet. The specifications of these Resource Groups are given in the following.

4.3 Smartband Resource Group

The Smartband Resource Group has to provide a uniform interface to any Smartband model (including Smart Watches and related devices). Therefore, the interface is composed as a superset of the data access operations which are supported by most of such devices. This includes access to personal data (e. g., age or name), health-related data (e. g., heart rate or blood sugar level), activity-related data (e. g., acceleration or orientation), and location data. In addition to these reading operations, most Smartbands also have a small display to show short notifications. So, the Smartband Resource Group also defines a writing operation to send messages to this display. However, not every Smartband model supports each of these operations. The Resources implementing the operations for the particular Smartbands have to deal with this problem. They throw an UnsupportedOperationException, which is caught and handled by the PMP (e. g., by passing mock data to the application).
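The fallback described above can be sketched in Java as follows. The interface, the Resource for a Smartband model without a blood sugar sensor, and the guard are hypothetical; only the use of UnsupportedOperationException and the substitution of mock data come from the text.

```java
import java.util.function.IntSupplier;

/** Hypothetical slice of the Smartband Resource Group interface. */
interface SmartbandOps {
    int getHeartRate();  // beats per minute

    int getBloodSugar(); // mg/dL
}

/** Resource for a hypothetical Smartband model without a blood sugar sensor. */
final class BasicBandResource implements SmartbandOps {
    @Override
    public int getHeartRate() {
        return 72; // placeholder for a value read from the device
    }

    @Override
    public int getBloodSugar() {
        throw new UnsupportedOperationException("this model has no blood sugar sensor");
    }
}

final class UnsupportedOperationGuard {
    /** Calls the Resource; if the model lacks the operation, answers with mock data instead. */
    static int readOrMock(IntSupplier operation, int mockValue) {
        try {
            return operation.getAsInt();
        } catch (UnsupportedOperationException e) {
            return mockValue;
        }
    }
}
```

Because the exception is handled centrally, each vendor-specific Resource only has to signal what its model cannot do, and applications keep working against the uniform interface.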

To restrict access to data provided by Smartbands, the Smartband Resource Group defines several fine-grained Privacy Settings. Basically, there is a two-valued Privacy Setting for each data type via which the particular data access is granted or denied. In this way, the user is able to decide which application is allowed to access which data from his or her Smartband. As mentioned above, this is already a major advance in comparison with the state of the art, since Android supports only a single Bluetooth permission for any kind of device and data, let alone the fact that users cannot see whether an application requests this permission at all! Furthermore, the Smartband Resource Group supports additional Privacy Settings for certain data types (e. g., the accuracy of location data can be reduced). Moreover, each data source in the Smartband Resource Group can be replaced by a mock implementation. All mock values are within a realistic range so that applications cannot observe a difference.
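A per-data-type two-valued setting combined with range-bounded mock values might look like the following Java sketch. The names are hypothetical, the value ranges are assumptions chosen for illustration, and the sketch assumes that a denied or unset data type is answered with mock data rather than with an error.

```java
import java.util.EnumMap;
import java.util.Map;
import java.util.Random;

/** Data types with an assumed realistic value range used for mock values. */
enum BandDataType {
    HEART_RATE(55, 100),   // bpm, assumed resting range
    BLOOD_SUGAR(70, 140),  // mg/dL, assumed everyday range
    STEPS_PER_MINUTE(0, 130);

    final int min;
    final int max;

    BandDataType(int min, int max) {
        this.min = min;
        this.max = max;
    }
}

/** Per-application, per-data-type two-valued Privacy Settings (hypothetical). */
final class BandPrivacySettings {
    private final Map<BandDataType, Boolean> granted = new EnumMap<>(BandDataType.class);
    private final Random random;

    BandPrivacySettings(long seed) {
        this.random = new Random(seed);
    }

    void set(BandDataType type, boolean allowed) {
        granted.put(type, allowed);
    }

    /** Real value if granted; otherwise a mock value drawn uniformly from the realistic range. */
    int read(BandDataType type, int realValue) {
        if (granted.getOrDefault(type, false)) {
            return realValue;
        }
        return type.min + random.nextInt(type.max - type.min + 1);
    }
}
```

Uniform values are the simplest choice; mock values that also evolve smoothly over time would be even harder for an application to distinguish from real sensor readings.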

Moreover, Smartbands providing access to location data can be integrated into the existing Location Resource Group (see (Stach, 2013)) as additional Resources. So, the PMP is able to switch between the available Resources when needed (e. g., in case the Smartband's location data is more precise than that of the Smartphone).
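Switching to the most precise available Resource can be expressed as a simple selection over the Resources of the Location Resource Group. In this Java sketch, the class names and the expected error values are hypothetical; how the PMP actually estimates precision is not specified in the text.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

/** A Location Resource with its current availability and expected error (hypothetical values). */
final class LocationResourceInfo {
    final String name;
    final boolean available;
    final double expectedErrorMeters;

    LocationResourceInfo(String name, boolean available, double expectedErrorMeters) {
        this.name = name;
        this.available = available;
        this.expectedErrorMeters = expectedErrorMeters;
    }
}

final class LocationResourceSelector {
    /** Picks the available Resource with the smallest expected error, if any is available. */
    static Optional<LocationResourceInfo> mostPrecise(List<LocationResourceInfo> resources) {
        return resources.stream()
                .filter(r -> r.available)
                .min(Comparator.comparingDouble(r -> r.expectedErrorMeters));
    }
}
```

When the Smartband disconnects, the same call falls back to the Smartphone's own Resources without the application noticing.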


4.4 Internet Resource Group

The Internet Resource Group provides a simplified interface to send data to and receive data from a network resource. Essentially, both functions have two parameters: the address of the destination device and the actual payload. The payload is also used to store the response from the network resource. This simplified interface is adequate in the context of Smartband applications. In order to support applications requiring extensive interactions with network resources, the interface can be extended by more generic I/O functions (e. g., to support several network protocols).
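The two-parameter interface, with the payload doubling as the response buffer, can be sketched in Java with a mutable payload holder. The interface and class names are hypothetical, and the in-memory "network" exists only so that the interface can be exercised without real sockets.

```java
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;
import java.util.function.UnaryOperator;

/** Mutable payload: holds the request and, after receive(), the response. */
final class Payload {
    byte[] data;

    Payload(byte[] data) {
        this.data = data;
    }
}

/** Hypothetical shape of the simplified Internet Resource Group interface. */
interface InternetOps {
    void send(String address, Payload payload);

    void receive(String address, Payload payload);
}

/** In-memory stand-in for the network, used only to exercise the interface. */
final class InMemoryInternet implements InternetOps {
    private final Map<String, UnaryOperator<byte[]>> endpoints = new HashMap<>();
    private final Map<String, byte[]> sent = new HashMap<>();

    void addEndpoint(String address, UnaryOperator<byte[]> handler) {
        endpoints.put(address, handler);
    }

    @Override
    public void send(String address, Payload payload) {
        sent.put(address, payload.data.clone());
    }

    @Override
    public void receive(String address, Payload payload) {
        UnaryOperator<byte[]> handler = endpoints.get(address);
        if (handler == null) {
            throw new IllegalArgumentException("unknown address: " + address);
        }
        payload.data = handler.apply(payload.data); // the response overwrites the payload
    }

    byte[] lastSentTo(String address) {
        return sent.get(address);
    }

    static byte[] utf8(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }
}
```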

Analogously to the Smartband Resource Group, the Internet Resource Group also defines two-valued Privacy Settings for both I/O functions. Thereby, the user is able to specify for each application separately whether it is allowed to send data to and/or receive data from the Internet. In addition, the admissible destination addresses can be restricted. In theory, this can be realized by a textual Privacy Setting via which the user declares admissible addresses. However, the user's attention is a finite resource, and such a fine-grained address-wise restriction overstrains him or her (Böhme and Grossklags, 2011). On this account, the Internet Resource Group categorizes addresses by various domains, such as the health domain or a domain for location-related information. The category "public" does not restrict the admissible destination addresses at all. In this way, the user is able to comprehend which domain is reasonable for a certain kind of application. However, the Resource Group can also be extended by the textual Privacy Settings described above if required, e. g., because expert users might ask for a more fine-grained access control.
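The combination of the two-valued I/O settings with domain categories can be sketched as follows. How the PMP maps concrete hosts to domains is not described, so the host-to-category map in this Java sketch is an assumption, as are all names; the "public" category lifting every address restriction follows the text.

```java
import java.util.Map;
import java.util.Set;

/** Address categories; PUBLIC means "no restriction" as described above. */
enum AddressDomain { HEALTH, LOCATION, PUBLIC }

/** Hypothetical per-application Internet Privacy Settings. */
final class InternetSettingsSketch {
    private final boolean sendData;
    private final boolean receiveData;
    private final AddressDomain admissibleDomain;
    private final Map<String, Set<AddressDomain>> hostCategories; // assumed to be maintained by the PMP

    InternetSettingsSketch(boolean sendData, boolean receiveData,
                           AddressDomain admissibleDomain,
                           Map<String, Set<AddressDomain>> hostCategories) {
        this.sendData = sendData;
        this.receiveData = receiveData;
        this.admissibleDomain = admissibleDomain;
        this.hostCategories = hostCategories;
    }

    boolean maySendTo(String host) {
        return sendData && isAdmissible(host);
    }

    boolean mayReceiveFrom(String host) {
        return receiveData && isAdmissible(host);
    }

    private boolean isAdmissible(String host) {
        if (admissibleDomain == AddressDomain.PUBLIC) {
            return true; // "public": destination addresses are not restricted
        }
        return hostCategories.getOrDefault(host, Set.of()).contains(admissibleDomain);
    }
}
```

Uncategorized hosts are rejected unless the application is set to "public", which keeps the default on the restrictive side.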

5 PROTOTYPICAL IMPLEMENTATION

In order to verify the applicability of our approach, we implemented a simple fitness application in addition to the two Resource Groups. The fitness application creates a local user profile, including, inter alia, his or her age, height, and weight. Whenever the user works out, data from the Smartband's motion sensors (e. g., to determine his or her activity) and health data (e. g., his or her heart rate) are captured. This data is augmented by location data from the Smartband to track the user's favorite workout locations. To share this data with others (e. g., with an insurance company to document a healthy lifestyle) or to create a quantified self, the data can be uploaded to an online account.

interface SmartbandResource {
    // access to personal data
    int getAge();
    // ...

    // access to workout data
    int getHeartRate();
    // ...

    // access to location data
    Location getLocation();
    // ...
}

Listing 1: Interface Definition for the Smartband Resource Group in AIDL (excerpt).

To this end, the fitness application defines five Features which can be individually deactivated by the PMP. When the application is installed, the PMP lists all of these Features and the user can make a selection (see Figure 5a). For instance, a user might want to use the application to capture his or her workout progress in a local profile, while the application should neither track the workout locations nor upload the profile to a server. This selection characterizes which service quality the user expects from the application. In order to find out which permissions are required for each Feature, the user can have the PMP show additional information.

Applications access data via the interface of the respective Resource Group. This interface is described in the Android Interface Definition Language (AIDL). Listing 1 shows an excerpt of such a definition for the Smartband Resource Group².

Beyond that, the user can also adjust Privacy Rules from a Resource Group's point of view. To that end, all Resource Groups requested by the particular application are listed together with the Privacy Settings defined therein (see Figure 5b). Two-valued Privacy Settings such as “Send Data” can be turned on and off directly by clicking on them. For textual and numerical Privacy Settings such as “Location Accuracy”, the user can enter new values in an input mask with a text box. Enumeration Privacy Settings such as “Admissible Destination Address” open an input mask with a selection box (see Figure 5c). If the selected Privacy Settings are too restrictive for a certain Feature, the PMP deactivates the Feature and informs the user.
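The deactivation rule in the last sentence can be sketched as follows. This is a minimal Java illustration under stated assumptions, not the PMP implementation: Privacy Settings are held as strings keyed by hypothetical identifiers (e.g., "sendData", "locationAccuracy"), each Feature's requirement is a hypothetical predicate over these values, and a deactivated Feature stays inactive until the user re-enables it.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

// Minimal sketch: a Feature is deactivated as soon as the Privacy Settings become too restrictive for it.
final class FeatureGuard {
    // Current Privacy Setting values keyed by identifier, e.g. "sendData" -> "true".
    private final Map<String, String> settings = new HashMap<>();
    // Hypothetical requirement of each Feature over the current settings.
    private final Map<String, Predicate<Map<String, String>>> requirements = new LinkedHashMap<>();
    private final Map<String, Boolean> active = new LinkedHashMap<>();

    void initSetting(String id, String value) {
        settings.put(id, value);
    }

    void addFeature(String feature, Predicate<Map<String, String>> requirement) {
        requirements.put(feature, requirement);
        active.put(feature, requirement.test(settings));
    }

    boolean isActive(String feature) {
        return active.getOrDefault(feature, false);
    }

    // Applies a user change and returns the Features that had to be deactivated,
    // so that the user can be informed. Features are never reactivated automatically.
    List<String> changeSetting(String id, String value) {
        settings.put(id, value);
        List<String> deactivated = new ArrayList<>();
        for (Map.Entry<String, Predicate<Map<String, String>>> entry : requirements.entrySet()) {
            String feature = entry.getKey();
            if (active.get(feature) && !entry.getValue().test(settings)) {
                active.put(feature, false);
                deactivated.add(feature);
            }
        }
        return deactivated;
    }
}
```

For example, if a location-tracking Feature hypothetically requires locationAccuracy <= 100 (meters), raising that setting to 1000 makes changeSetting return the Feature's name, which the PMP would then report to the user.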

The Privacy Settings are defined in XML within a so-called Resource Group Information Set (RGIS). Similar to Android's App Manifest, this file contains the metadata about the Resource Group that the PMP requires. Listing 2 shows an excerpt of the Privacy Settings definition in the RGIS for the Internet Resource Group.

² The data type Location is not supported by AIDL. Additional type definition files are required.


<?xml version="1.0" encoding="UTF-8"?>
<resourceGroupInformationSet>
  <resourceGroupInformation identifier="internet">
    <name>Internet</name>
    <description>Manages any network connections.</description>
  </resourceGroupInformation>
  <privacySettings>
    <privacySetting identifier="sendData" validValueDescription="'true', 'false'">
      <name>Send Data</name>
      <description>Allows apps to send data to the Internet.</description>
    </privacySetting>
    <privacySetting identifier="destinationAddress" validValueDescription="'PRIVATE', 'HEALTH', 'LOCATION', 'PUBLIC'">
      <name>Destination Address</name>
      <description>Restricts the admissible destination addresses.</description>
    </privacySetting>
    ...
  </privacySettings>
</resourceGroupInformationSet>

Listing 2: Resource Group Information Set for the Internet Resource Group (excerpt).

As the listing shows, each Privacy Setting essentially consists of a unique identifier, a range of valid values, and a human-readable name and description. The PMP derives its configuration dialogs, such as the Privacy Settings dialog (see Figure 5b), from the RGIS.
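To illustrate how configuration dialogs could be derived from an RGIS, the following Java sketch parses the privacySetting elements of Listing 2 with the JDK's DOM parser and assigns each setting an input widget. The mapping from validValueDescription to widget type (exactly 'true' and 'false' gives a toggle, any other value list gives a selection box, a missing list gives a text box) is an assumption based on the dialog behavior described above, not the PMP's actual rule; RgisReader and PrivacySettingInfo are hypothetical names.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// One Privacy Setting as declared in an RGIS, plus the widget chosen for its dialog.
record PrivacySettingInfo(String identifier, String name, List<String> validValues, String widget) {}

final class RgisReader {
    // Parses all <privacySetting> elements of an RGIS document.
    static List<PrivacySettingInfo> read(String xml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
            .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        NodeList nodes = doc.getElementsByTagName("privacySetting");
        List<PrivacySettingInfo> result = new ArrayList<>();
        for (int i = 0; i < nodes.getLength(); i++) {
            Element setting = (Element) nodes.item(i);
            List<String> values = parseValues(setting.getAttribute("validValueDescription"));
            result.add(new PrivacySettingInfo(
                setting.getAttribute("identifier").trim(),
                childText(setting, "name"),
                values,
                widgetFor(values)));
        }
        return result;
    }

    // "'true', 'false'" -> [true, false]; a missing (empty) attribute yields an empty list.
    static List<String> parseValues(String description) {
        List<String> values = new ArrayList<>();
        for (String part : description.split(",")) {
            String value = part.trim().replace("'", "");
            if (!value.isEmpty()) values.add(value);
        }
        return values;
    }

    // Assumed mapping: two-valued -> toggle, enumeration -> selection box, otherwise text box.
    static String widgetFor(List<String> values) {
        if (values.size() == 2 && values.contains("true") && values.contains("false")) return "toggle";
        if (!values.isEmpty()) return "selection box";
        return "text box";
    }

    // Text of the first descendant element with the given tag, or "" if there is none.
    private static String childText(Element parent, String tag) {
        NodeList matches = parent.getElementsByTagName(tag);
        return matches.getLength() == 0 ? "" : matches.item(0).getTextContent().trim();
    }
}
```

Applied to Listing 2, "Send Data" would become a toggle and "Destination Address" a selection box with the four listed values, which matches the behavior of the dialogs in Figure 5b and 5c.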

While the Feature selection is better suited to normal users, the direct configuration of the Privacy Settings is meant for fine-tuning by expert users. According to the activated Features and the configuration of the Privacy Settings, the PMP adapts the application's program flow, binds the required Resources, and performs the requested anonymization operations. The user can adjust all settings at runtime, e. g., to activate additional Features. Neither applications nor Resource Groups have to deal with any of these data or program flow changes.
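The runtime adaptation described above can be sketched as a wrapper that sits between the application and a Resource and re-reads the relevant Privacy Setting on every call. In this hypothetical Java example, GeoPoint stands in for the Location type of Listing 1, the “Location Accuracy” setting is assumed to be encoded as the number of decimal places kept per coordinate, and rounding serves as a simple stand-in for the PMP's anonymization operations.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Plain location value; a stand-in for the Location type in Listing 1.
record GeoPoint(double latitude, double longitude) {}

// Hypothetical device-specific Resource delivering raw locations.
interface LocationSource {
    GeoPoint getLocation();
}

// Sketch of a PMP-side wrapper around a Resource. It reads the current setting on
// every call, so a change the user makes at runtime takes effect immediately while
// the application code calling getLocation() stays unchanged.
final class AccuracyLimitedSource implements LocationSource {
    private final LocationSource raw;
    // Assumed encoding of a "Location Accuracy" setting: decimal places kept per coordinate.
    private final AtomicInteger decimals;

    AccuracyLimitedSource(LocationSource raw, AtomicInteger decimals) {
        this.raw = raw;
        this.decimals = decimals;
    }

    @Override
    public GeoPoint getLocation() {
        GeoPoint p = raw.getLocation();
        double factor = Math.pow(10, decimals.get());
        // Rounding coordinates is one simple anonymization; the PMP's actual operations may differ.
        return new GeoPoint(Math.round(p.latitude() * factor) / factor,
                            Math.round(p.longitude() * factor) / factor);
    }
}
```

Lowering the setting from four to one decimal place at runtime changes the values the application receives (e. g., a latitude of 48.7451 becomes 48.7) without any change to the application code.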

6 ASSESSMENT

As recent studies show, mobile platforms face novel challenges concerning the privacy-aware processing of data from Smartbands (Funk, 2015; Patel, 2015). Since Android permissions are based on the technical functions of a Smartphone, there is only a single generic BLUETOOTH permission, which restricts access to any kind of Bluetooth device, including headphones, Smartbands, and even medical devices.

In contrast, our approach introduces a more data-oriented permission model. In this way, the user can specifically select which data or functions of a Smartband an application may access.
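The difference between the two permission models can be reduced to the following Java sketch. The data types and method names are hypothetical; the point is only that the function-oriented check ignores which data is requested, whereas the data-oriented check decides per data type.

```java
import java.util.Set;

// Data types exposed by a Smartband Resource Group (hypothetical, loosely following Listing 1).
enum SmartbandData { PERSONAL, HEART_RATE, MOTION, LOCATION }

final class PermissionModels {
    // Function-oriented model: a single BLUETOOTH grant covers every device and
    // every data type, so the requested data type is deliberately ignored.
    static boolean functionOriented(boolean bluetoothGranted, SmartbandData requested) {
        return bluetoothGranted;
    }

    // Data-oriented model: the user grants individual data types to an application.
    static boolean dataOriented(Set<SmartbandData> grantedToApp, SmartbandData requested) {
        return grantedToApp.contains(requested);
    }
}
```

Granting, e. g., java.util.EnumSet.of(SmartbandData.HEART_RATE, SmartbandData.MOTION) lets a workout application read heart rate and motion data while requests for LOCATION are denied, a distinction that a single BLUETOOTH grant cannot express.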

Moreover, the PPM, which is the basis of our model, supports not only two-valued permission settings (grant and deny) but also numerical or textual constraints. Thereby, it enables fine-grained access control, which is essential for devices such as Smartbands that handle many different kinds of sensitive data. Lastly, our model is extensible. That is, new devices can be added as Resources at runtime and are immediately available to any application. In conclusion, with these three key features (data orientation, fine-grained access control, and extensibility), our approach addresses the privacy challenges of Smartband applications outlined above.

In addition, our approach also provides a solution to another major challenge in the context of Smartband applications: the low interoperability of devices. In effect, each device uses its own proprietary data format for data interchange with an application (Chan et al., 2012). As a result, each application supports only a limited number of Smartbands. With our Smartband Resource Group, an application developer only has to program against its unified interface, and the PMP selects the appropriate Resource, which handles the data interchange.
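A minimal Java sketch of this idea follows, assuming two hypothetical vendors with different proprietary formats (a raw byte versus a text message) and a hypothetical device-model string as the selection key. The application only sees the Smartband interface; registering a Resource for a new device at runtime also illustrates the extensibility discussed above. This is not the PMP's actual binding mechanism.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

// Unified interface the application programs against (reduced from Listing 1).
interface Smartband {
    int getHeartRate();
}

// Hypothetical vendor A: heart rate in bpm as the first unsigned byte of its last payload.
final class VendorABand implements Smartband {
    private final byte[] payload;
    VendorABand(byte[] payload) { this.payload = payload; }
    @Override public int getHeartRate() { return payload[0] & 0xFF; }
}

// Hypothetical vendor B: heart rate in a text message such as "HR=72".
final class VendorBBand implements Smartband {
    private final String message;
    VendorBBand(String message) { this.message = message; }
    @Override public int getHeartRate() {
        return Integer.parseInt(message.substring(message.indexOf('=') + 1).trim());
    }
}

// Sketch of the Resource Group side: Resources for new devices can be registered
// at runtime and are then usable by every application via the same interface.
final class SmartbandResourceGroup {
    // Keyed by a hypothetical model identifier reported when a device connects.
    private final Map<String, Smartband> resources = new LinkedHashMap<>();

    void register(String deviceModel, Smartband resource) {
        resources.put(deviceModel, resource);
    }

    // Selects the Resource that handles the connected device's proprietary format.
    Optional<Smartband> select(String connectedModel) {
        return Optional.ofNullable(resources.get(connectedModel));
    }
}
```

An application calling select(...).get().getHeartRate() receives a heart rate in beats per minute regardless of which vendor's format the connected device uses.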

Evaluation results of other Resource Groups show that the PMP causes an acceptable overhead with regard to the overall runtime of an application, the average CPU usage, the total battery drain, and the memory usage (Stach and Mitschang, 2016). Likewise, the usability of the PMP meets the users' expectations (Stach and Mitschang, 2014). Since our approach is based on the PMP, both properties also apply to it. Therefore, the PMP is particularly useful in a health context (Stach et al., 2018), as early prototypes of health applications have shown (Stach, 2016).

However, our approach can only protect the user's privacy as long as his or her data is processed on the Smartphone. Once the data has been sent out, the user is no longer in control. Since many applications rely on online services for data processing (e. g., Wieland et al., 2016; Steimle et al., 2017), dealing with this problem is part of future work. In the following, we give an outlook on a solution to this problem.

ICISSP 2018 - 4th International Conference on Information Systems Security and Privacy


Figure 5: PMP-based Permission Configuration. (a) Feature Selection, (b) Privacy Settings, (c) Internet Access Restriction.

7 CONCLUSION AND OUTLOOK

As the Internet of Things gains in importance, new devices with various sensors are coming onto the market. As a result, diverse measuring instruments for home use are available to end users. Smartbands and Smart Watches in particular, i. e., wrist-worn hardware devices equipped with, among others, GPS and a heart rate sensor, are becoming more and more popular. Since these devices can interconnect with other devices, Smartphone applications are able to make use of the captured data (e. g., innovative fitness applications can create a quantified self by analyzing this data). However, without an appropriate protection mechanism, such applications constitute a threat to the user's privacy, as Smartbands have access to a lot of sensitive data.

The privacy mechanisms of current mobile platforms, namely Android and iOS, are not tailored to the use of Smartbands and therefore provide no protection at all in this context. We thus introduce a novel privacy mechanism specifically designed for this use case. Our approach is based on the Privacy Policy Model (PPM) and implemented for the Privacy Management Platform (PMP) (Stach and Mitschang, 2013; Stach and Mitschang, 2014). In this way, we are able to provide fine-grained access control for each of a Smartband's data types. Moreover, the user can restrict network access by selecting the valid addresses to which an application is allowed to connect.

Evaluation results show that our approach meets the requirements of such a privacy mechanism. However, this is just a first protective measure, as modern Smartphone applications commonly serve as data sources for comprehensive stream processing systems that perform the actual computation. These systems have access to a wide range of sources and are therefore able to derive a great deal of knowledge. Even if a user restricts access to a certain type of data on his or her device, a stream processing system might be able to retrieve this data from another source. Therefore, the privacy rules of each application also have to be applied to affiliated services that process the application's data.
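One conceivable direction, sketched here in Java purely as an illustration, is to ship the originating application's privacy rules along with each data item and enforce them at the entry of the stream processing system. TaggedRecord, PolicyAwareOperator, and the field names are hypothetical; the sketch does not address the harder problem that a system may obtain the same data from another source, nor is it taken from the PATRON project.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;

// A data item sent to a backend together with the privacy rules of the sending app.
record TaggedRecord(Map<String, Object> fields, Set<String> permittedFields) {}

// Hypothetical operator at the entry of a stream processing system: it applies the
// originating app's rules before any further processing, dropping every field
// those rules do not permit.
final class PolicyAwareOperator {
    static Map<String, Object> enforce(TaggedRecord item) {
        Map<String, Object> allowed = new HashMap<>();
        for (Map.Entry<String, Object> field : item.fields().entrySet()) {
            if (item.permittedFields().contains(field.getKey())) {
                allowed.put(field.getKey(), field.getValue());
            }
        }
        return allowed;
    }
}
```

A record carrying a heart rate and a location, but whose rules permit only "heartRate", leaves the operator with the heart rate alone.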

Future work has to investigate how the PPM-based rules can be applied to a privacy mechanism for stream processing systems, such as the one developed in the PATRON research project³ (Stach et al., 2017a).

³ See http://patronresearch.de/


ACKNOWLEDGEMENTS

This paper is part of the PATRON research project, which is commissioned by the Baden-Württemberg Stiftung gGmbH. The authors would like to thank the BW-Stiftung for funding this research.

REFERENCES

Barrera, D., Kayacik, H. G., van Oorschot, P. C., and Somayaji, A. (2010). A Methodology for Empirical Analysis of Permission-based Security Models and Its Application to Android. In Proceedings of the 17th ACM Conference on Computer and Communications Security, CCS '10, pages 73–84.

Barrera, D. and Van Oorschot, P. (2011). Secure Software Installation on Smartphones. IEEE Security and Privacy, 9(3):42–48.

Böhme, R. and Grossklags, J. (2011). The Security Cost of Cheap User Interaction. In Proceedings of the 2011 New Security Paradigms Workshop, NSPW '11, pages 67–82.

Chan, M., Estève, D., Fourniols, J.-Y., Escriba, C., and Campo, E. (2012). Smart Wearable Systems: Current Status and Future Challenges. Artificial Intelligence in Medicine, 56(3):137–156.

Davis, B. and Chen, H. (2013). RetroSkeleton: Retrofitting Android Apps. In Proceedings of the 11th Annual International Conference on Mobile Systems, Applications, and Services, MobiSys '13, pages 181–192.

Davis, B., Sanders, B., Khodaverdian, A., and Chen, H. (2012). I-ARM-Droid: A Rewriting Framework for In-App Reference Monitors for Android Applications. In Proceedings of the 2012 IEEE Conference on Mobile Security Technologies, MoST '12, pages 28:1–28:9.

Enck, W., Ongtang, M., and McDaniel, P. (2009). Understanding Android Security. IEEE Security and Privacy, 7(1):50–57.

Felt, A. P., Egelman, S., Finifter, M., Akhawe, D., and Wagner, D. (2012a). How to Ask for Permission. In Proceedings of the 7th USENIX Conference on Hot Topics in Security, HotSec '12, pages 1–6.

Felt, A. P., Ha, E., Egelman, S., Haney, A., Chin, E., and Wagner, D. (2012b). Android Permissions: User Attention, Comprehension, and Behavior. In Proceedings of the Eighth Symposium on Usable Privacy and Security, SOUPS '12, pages 3:1–3:14.

Funk, C. (2015). IoT Research - Smartbands. Technical report, Kaspersky Lab.

Hornyack, P., Han, S., Jung, J., Schechter, S., and Wetherall, D. (2011). These Aren't the Droids You're Looking for: Retrofitting Android to Protect Data from Imperious Applications. In Proceedings of the 18th ACM Conference on Computer and Communications Security, CCS '11, pages 639–652.

Khorakhun, C. and Bhatti, S. N. (2015). mHealth through quantified-self: A user study. In Proceedings of the 2015 17th International Conference on E-health Networking, Application & Services, HealthCom '15, pages 329–335.

Knighten, J., McMillan, S., Chambers, T., and Payton, J. (2015). Recognizing Social Gestures with a Wrist-Worn SmartBand. In Proceedings of the 2015 IEEE International Conference on Pervasive Computing and Communication Workshops, PerCom Workshops '15, pages 544–549.

Lee, M., Lee, K., Shim, J., Cho, S.-j., and Choi, J. (2016). Security Threat on Wearable Services: Empirical Study using a Commercial Smartband. In Proceedings of the IEEE International Conference on Consumer Electronics-Asia, ICCE-Asia '16, pages 1–5.

Leontiadis, I., Efstratiou, C., Picone, M., and Mascolo, C. (2012). Don't kill my ads!: Balancing Privacy in an Ad-Supported Mobile Application Market. In Proceedings of the Twelfth Workshop on Mobile Computing Systems & Applications, HotMobile '12, pages 2:1–2:6.

Mayfield, J. and Jagielski, K. (2015). FTC Report on Internet of Things Urges Companies to Adopt Best Practices to Address Consumer Privacy and Security Risks. Technical report, Federal Trade Commission.

Nauman, M., Khan, S., and Zhang, X. (2010). Apex: Extending Android Permission Model and Enforcement with User-defined Runtime Constraints. In Proceedings of the 5th ACM Symposium on Information, Computer and Communications Security, ASIACCS '10, pages 328–332.

Patel, M. (2015). The Security and Privacy of Wearable Health and Fitness Devices. Technical report, IBM SecurityIntelligence.

Pombo, N. and Garcia, N. M. (2016). ubiSleep: An Ubiquitous Sensor System for Sleep Monitoring. In Proceedings of the 2016 IEEE 12th International Conference on Wireless and Mobile Computing, Networking and Communications, WiMob '16, pages 1–4.

Saracino, A., Martinelli, F., Alboreto, G., and Dini, G. (2016). Data-Sluice: Fine-grained traffic control for Android application. In Proceedings of the 2016 IEEE Symposium on Computers and Communication, ISCC '16, pages 702–709.

Stach, C. (2013). How to Assure Privacy on Android Phones and Devices? In Proceedings of the 2013 IEEE 14th International Conference on Mobile Data Management, MDM '13, pages 350–352.

Stach, C. (2015). How to Deal with Third Party Apps in a Privacy System – The PMP Gatekeeper. In Proceedings of the 2015 IEEE 16th International Conference on Mobile Data Management, MDM '15, pages 167–172.

Stach, C. (2016). Secure Candy Castle – A Prototype for Privacy-Aware mHealth Apps. In Proceedings of the 2016 IEEE 17th International Conference on Mobile Data Management, MDM '16, pages 361–364.

Stach, C., Dürr, F., Mindermann, K., Palanisamy, S. M., Tariq, M. A., Mitschang, B., and Wagner, S. (2017a). PATRON – Datenschutz in Datenstromverarbeitungssystemen. In Informatik 2017: Digitale Kulturen, Tagungsband der 47. Jahrestagung der Gesellschaft für Informatik e.V. (GI), 25.9-29.9.2017, Chemnitz, volume 275 of LNI, pages 1085–1096. (in German).

Stach, C. and Mitschang, B. (2013). Privacy Management for Mobile Platforms – A Review of Concepts and Approaches. In Proceedings of the 2013 IEEE 14th International Conference on Mobile Data Management, MDM '13, pages 305–313.

Stach, C. and Mitschang, B. (2014). Design and Implementation of the Privacy Management Platform. In Proceedings of the 2014 IEEE 15th International Conference on Mobile Data Management, MDM '14, pages 69–72.

Stach, C. and Mitschang, B. (2016). The Secure Data Container: An Approach to Harmonize Data Sharing with Information Security. In Proceedings of the 2016 IEEE 17th International Conference on Mobile Data Management, MDM '16, pages 292–297.

Stach, C., Steimle, F., and Franco da Silva, A. C. (2017b). TIROL: The Extensible Interconnectivity Layer for mHealth Applications. In Proceedings of the 23rd International Conference on Information and Software Technologies, ICIST '17, pages 190–202.

Stach, C., Steimle, F., and Mitschang, B. (2018). The Privacy Management Platform: An Enabler for Device Interoperability and Information Security in mHealth Applications. In Proceedings of the 11th International Conference on Health Informatics, HEALTHINF '18. (to appear).

Steimle, F., Wieland, M., Mitschang, B., Wagner, S., and Leymann, F. (2017). Extended Provisioning, Security and Analysis Techniques for the ECHO Health Data Management System. Computing, 99(2):183–201.

Wieland, M., Hirmer, P., Steimle, F., Gröger, C., Mitschang, B., Rehder, E., Lucke, D., Abdul Rahman, O., and Bauernhansl, T. (2016). Towards a Rule-based Manufacturing Integration Assistant. Procedia CIRP, 57(Supplement C):213–218.

Wijaya, R., Setijadi, A., Mengko, T. L., and Mengko, R. K. L. (2014). Heart Rate Data Collecting Using Smart Watch. In Proceedings of the 2014 IEEE 4th International Conference on System Engineering and Technology, ICSET '14, pages 1–3.

Xu, R., Saïdi, H., and Anderson, R. (2012). Aurasium: Practical Policy Enforcement for Android Applications. In Proceedings of the 21st USENIX Security Symposium, Security '12, pages 539–552.
