Improvement of Privacy Prevented Person Tracking System

using Artificial Fiber Pattern

Hiroki Urakawa, Kitahiro Kaneda and Keiichi Iwamura

Tokyo University of Science, 6-3-1 Niijuku, Katsushika-ku, Tokyo, 125-8585, Japan

Keywords: Data Hiding, Surveillance Camera, Deep Learning, Artificial Fiber Pattern, Object Detection, Image

Reconstruction.

Abstract: Owing to the low equipment cost, the number of surveillance cameras installed has increased significantly;

however, most of them are not being used effectively. These cameras can be used for various purposes, such

as marketing, if behavior tracking is possible from the obtained images. Previously, we proposed a method of

tracking by embedding information in “Artificial Fiber Pattern.” However, the body shape of the wearer and

wrinkles of the clothes affect the accuracy of the results. To overcome this drawback, in this study, we

combined PIFuHD, a technology that generates a full three-dimensional model from a single image of a

person, with the modeling and calculation of the body shape of the subject to verify the conditions under

which the body shape of the wearer and wrinkles in the clothes affect the accuracy. Consequently, we achieved

precision improvement by removing data that met unsuitable conditions.

1 INTRODUCTION

Recently, the price of cameras has dropped, and

consequently, countless surveillance cameras have

been installed at various locations in cities. An

enormous amount of data is obtained from

surveillance cameras, and in the era of information

technology, it is expected to have a great value

beyond the original purpose of installation, which

includes "crime prevention" and "criminal

investigation".

Surveillance cameras, which are in constant

operation at various locations, are effective devices

for use in human flow analysis. The purpose of this

study is to use the data obtained from surveillance

cameras effectively for "crime prevention" and "trend investigation for marketing, events, advertisement, etc."

In our previous research (Kaneda et al., 2008; Kaneda et al., 2010), behavioral tracking

using surveillance cameras was performed by

embedding information in specific patterns in

clothing. Existing methods for embedding

information in patterns include QR codes and

barcodes; however, these methods are visually

uncomfortable and are not suitable for clothing that

requires a good design. Therefore, we use "Artificial

Fiber Pattern," which is less visually distracting and

more resistant to noise. In addition, by automating the

system using general object detection technology

based on deep learning, a human-dynamics

monitoring system can be realized that obtains time

and location information with high accuracy and low

cost. However, as the patterns are embedded in

clothes, the patterned area is deformed by the wearer's

body shape and wrinkles in the clothes, thus

significantly degrading the accuracy of the system.

The advantage of the Artificial Fiber Pattern over

conventional person tracking methods (e.g., tracking

by face recognition) is its superior privacy protection.

While tracking by face recognition requires the

system to retain the privacy information of the

individual's face, the Artificial Fiber Pattern does not.

In this study, we modeled the three-dimensional

(3D) shape of the wearer using machine learning,

estimated the deformation of the embedded area of

the pattern, and verified whether the detection accuracy can be improved by rejecting areas and frames that meet the conditions under which the accuracy is reduced.


Urakawa, H., Kaneda, K. and Iwamura, K.

Improvement of Privacy Prevented Person Tracking System using Artificial Fiber Pattern.

DOI: 10.5220/0011315400003289

In Proceedings of the 19th International Conference on Signal Processing and Multimedia Applications (SIGMAP 2022), pages 78-85

ISBN: 978-989-758-591-3; ISSN: 2184-9471

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 CONVENTIONAL RESEARCH

2.1 Background

Numerous studies have been conducted on the

embedding of information in printed materials, which

can be categorized into two main types, visible and

invisible. The visible method is represented by QR

codes and barcodes, whereas the invisible method is

represented by digital watermarks. The visible

method has the advantage of a large and stable

amount of information that can be embedded;

however, it has the disadvantage of a large subjective

sense of discomfort and a low degree of freedom in

design. Conversely, the invisible method has the

advantage of less subjective discomfort but has the

disadvantage of limited embedding content and small

embedding capacity. There is a trade-off between

discomfort and information volume, and no

embedding method satisfies both.

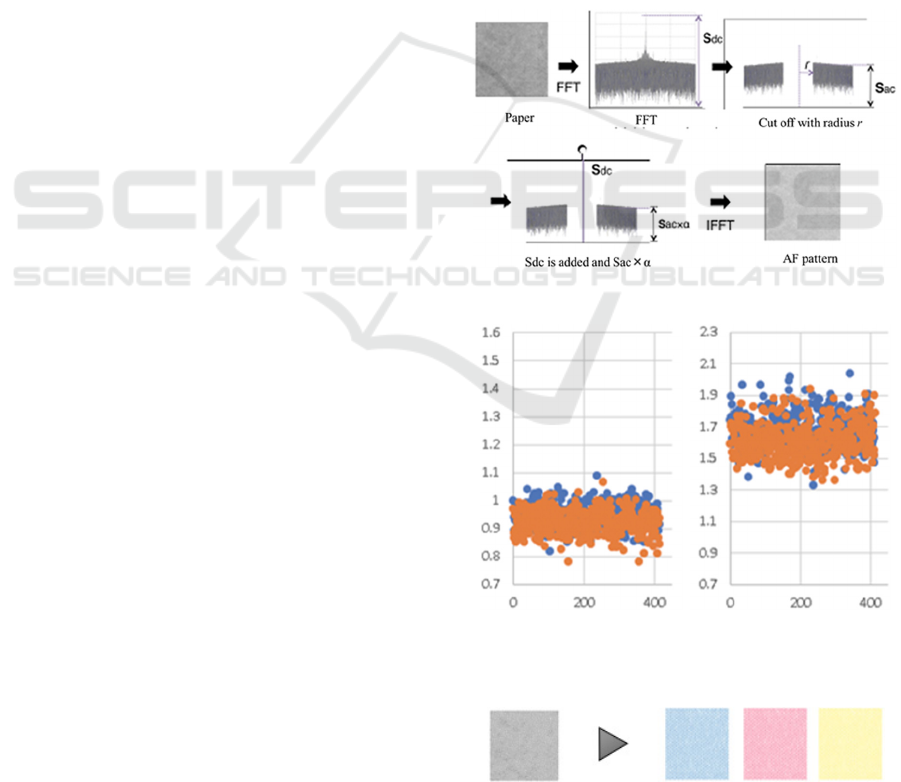

Therefore, we devised a method of embedding

information that allows us to recognize changes in the

content and has less subjective discomfort; we

defined it as an artificial fiber pattern. The

information was embedded by updating the frequency

domain based on the pattern originally contained in

the embedding target. Consequently, subjective discomfort is reduced and image quality is improved compared with conventional artificial patterns, and, owing to the low visibility, the effects of geometric deformation, printing, and paper shape change are decreased. Specifically, as shown in

Fig. 1, the embedded frequency components are

varied to the extent that the change in content can be

recognized, but with less subjective discomfort.

At the time of extraction, the embedded pattern information obtained by the camera or scanner was compared with the neighboring embedded information using an intensity ratio. The intensity ratio is close to 1 if the adjacent embedded information and the target artificial fiber pattern are the same, and it is far from 1 if they are different, as shown in Fig. 2. The information was extracted by comparing the intensity ratio with an appropriately set threshold. Details are given in (Inui et al., 2019; Tomita et al., 2017; Iwamura et al., 2017; Noguchi et al., 2018; Tomita et al., 2018; Urakawa et al., 2021).

2.2 Artificial Fiber Pattern using

Designs

In the literature (Kaneda et al., 2008; Kaneda et al., 2010), artificial fiber patterns are generated by

coloring various paper patterns in cyan, magenta, and

yellow to embed information in everyday patterns

(Fig. 3 and 4). The amount of extracted information

is (11 bits embedded in a sheet of A4 size paper) ×

(10 shots) = 110 bits.

2.3 Embedding the Artificial Fiber Pattern into the Fabric

Extraction rate in a previous study

as adopted as the

prototype pattern, and the artificial fiber pattern was

generated using the procedure described in Section 2

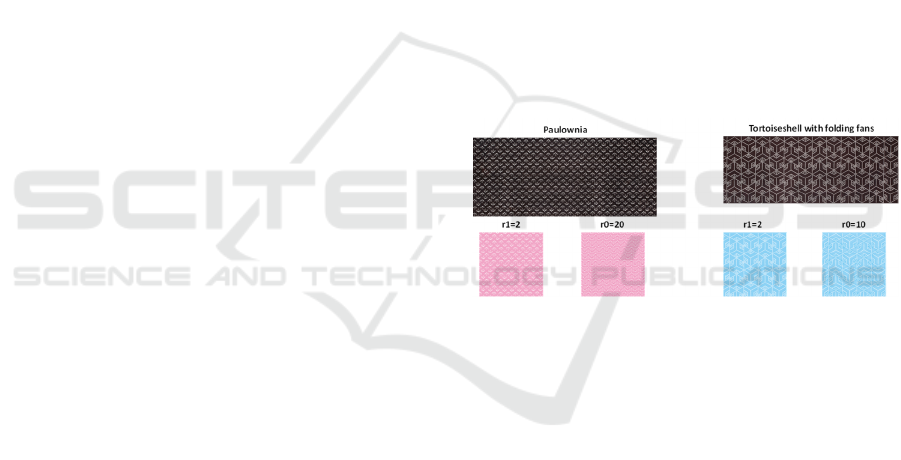

(Fig. 3). The generated artificial fiber pattern was

converted to cyan and printed on "3M Japan

Corporation Cloth Prefabricated Type Glue-less" for

the experiment. An example of the processed image

after photographing the printed artificial fiber pattern

is shown in Fig. 5.

Figure 1: Procedure for generating Artificial Fiber Pattern.

Figure 2: Distribution of intensity values (vertical axis: intensity ratio; horizontal axis: frame number). Left: different pattern. Right: same pattern.

Figure 3: Colorized patterns.


In the experiment, we used a Canon Web View

Livescope VB-H43 (Full HD video recording:

1920×1080) as a surveillance camera to capture the

artificial fiber pattern (11 bits) from a distance of

1.5 m and verified the extraction accuracy for 54

consecutive frames.

As a result, 86.3% of the information was extracted overall, and the extraction rate reached 100% for frames with large edge values. The edge

values of each image and the number of errors at that

time are summarized in Table 1.

2.4 YOLOv5

Because processing speed is an important factor in the

human motion monitoring system that we aim to

develop, we used You Only Look Once (YOLO), a

fast general object detection method, to identify

clothes and extract pattern regions of artificial fibers.

In previous research, YOLO version 3 (YOLOv3)

was used for clothing identification and the extraction

of difficult-to-see pattern regions. In this study, we

upgraded to YOLOv5, a newer version of YOLO.

Fig. 6 shows that YOLOv5 has higher accuracy than YOLOv3 and other methods; the figure is sourced from (Huang et al., 2021) and (Bochkovskiy et al., 2020). To achieve real-time monitoring, the processing throughput must consistently exceed the frame rate of the recording. In this study, YOLOv5 was chosen to achieve both low load and high accuracy.

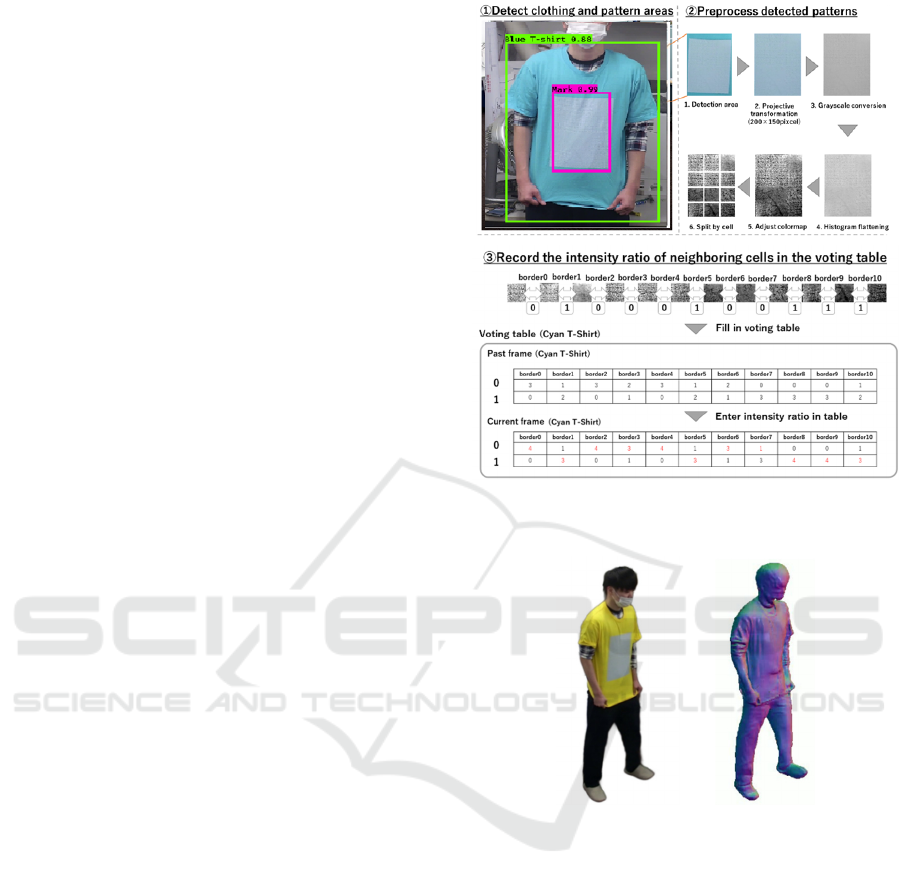

Fig. 7 shows the flow of the information extraction. First, YOLOv5 detects the clothing and the artificial fiber pattern regions. The extracted artificial fiber pattern area is pre-processed by projective transformation, grayscale conversion, histogram equalization, and color map adjustment, and is then divided into cells. Subsequently, the intensity ratio of each pair of adjacent cells is calculated, and the bit obtained by comparing the ratio with the threshold is recorded on a voting table. The pattern is determined by the majority vote among the voting results of all frames. As depicted in Fig. 7, the value is "0" if the adjacent cells are the same, and "1" if the adjacent cells are different.
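The thresholding and voting steps described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names, the tolerance `tol`, the use of one mean intensity per cell, and the order in which boundaries are enumerated are all assumptions made for the example.

```python
def boundary_bits(cells, tol=0.2):
    """Decode one frame.

    cells: 2D list of per-cell intensities (hypothetical input; the source
    does not specify how the intensity of a cell is measured).
    Returns one bit per pair of adjacent cells: 0 if the intensity ratio is
    close to 1 (same pattern), 1 otherwise (different pattern).
    Horizontal neighbours are listed first, then vertical neighbours
    (an assumed ordering).
    """
    rows, cols = len(cells), len(cells[0])
    pairs = []
    for r in range(rows):
        for c in range(cols - 1):
            pairs.append((cells[r][c], cells[r][c + 1]))
    for r in range(rows - 1):
        for c in range(cols):
            pairs.append((cells[r][c], cells[r + 1][c]))
    bits = []
    for a, b in pairs:
        ratio = a / max(b, 1e-9)  # guard against division by zero
        bits.append(1 if abs(ratio - 1.0) > tol else 0)
    return bits


def majority_vote(frames_bits):
    """Combine the per-frame bits (the voting table) by strict majority."""
    n_frames = len(frames_bits)
    n_bits = len(frames_bits[0])
    result = []
    for i in range(n_bits):
        ones = sum(frame[i] for frame in frames_bits)
        result.append(1 if 2 * ones > n_frames else 0)
    return result
```

For a 3 × 4 grid of cells this enumeration yields 17 boundaries; a tie in the vote is resolved to "0" here, which is an arbitrary choice for the sketch.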

3 PROPOSED METHOD

3.1 Overview

In our previous research, we built a person tracking

system using surveillance cameras by automating the

reading of an artificial fiber pattern in video.

However, when using this system outdoors, its

accuracy was strongly affected by the wearer's body

shape and movement because the patterns were

embedded in clothing.

Therefore, in this study, we improved the system

such that the influence of the wearer's body shape and

movement is reduced.

To estimate and quantify body shape and movement, we created 3D data using PIFuHD, a system that can accurately model body shape from still images. We then evaluated these data with our method, which quantifies the degree of deflection due to body shape and the degree of wrinkling.

We calculated the body shape and wrinkle values for multiple videos of people wearing the patterns and compared them with the accuracy of the conventional artificial fiber pattern reading system, thereby determining the range of values that affects reading accuracy.

Based on these results, if the body shape or wrinkle values exceed the reference values, the frame is excluded from the judgment, thereby reducing the number of false judgments.

Figure 4: Sample of patterns.

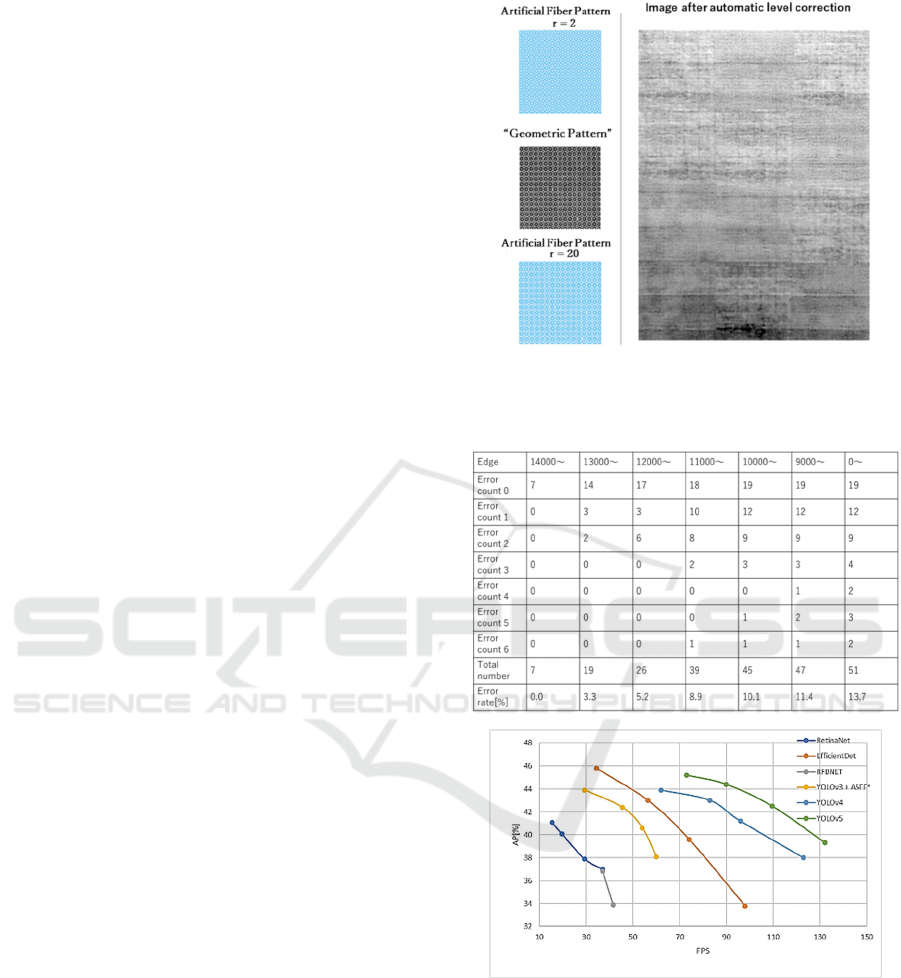

3.2 PIFuHD

PIFuHD is the successor to PIFu, a modeling

technique that estimates the 3D shape of a person

from a single image and enables modeling with a

higher resolution than conventional techniques.

PIFu and PIFuHD are described in detail in (Saito et al., 2019) and (Saito et al., 2020), respectively.

Fig. 8 shows the 3D modeling of the actual image of the subject wearing the artificial fiber pattern using PIFuHD. It can be seen that not only the posture

and body shape of the subject but also the wrinkles

and other details of the clothing are reproduced.

3.3 Effect of Body Shape and Wrinkles

on Accuracy

As mentioned earlier, because the artificial fiber

pattern is embedded in clothes, recognition accuracy

is degraded by the wearer's body shape and wrinkles.


We examined the impact of these factors on the accuracy. For body shape, we calculated the distance between the cloth and the plane connecting the four corners of the artificial fiber pattern in the modeling data. This value is known as the deflection value.

3.4 Determination of Body Shape by

Modeling Data

Obesity and chest size can be considered as body

types that affect the shape of a garment. For a normal

body shape, each point on the garment should be on

the same plane. However, changes in the shape of the

garment according to the body shape will greatly

deflect the cloth, and the points will not exist on the

same plane.

Therefore, we quantified the extent to which the cloth was deflected by the body shape by measuring the average distance between each point of the cloth and the plane connecting the four corners of the artificial fiber pattern. We call this the deflection value.
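A minimal sketch of such a deflection value is given below. It assumes the cloth points and the four pattern corners are available as 3D coordinates from the model; because four corners are not guaranteed to be exactly coplanar, the plane is taken here through the corner centroid with a normal computed by Newell's method, which is a choice made for this example and not stated in the source. The function names are hypothetical, and the scale of the result depends on the units of the model.

```python
import math


def newell_normal(corners):
    """Unit normal of the (possibly non-planar) corner polygon.

    corners must be listed in order around the perimeter.
    """
    nx = ny = nz = 0.0
    n = len(corners)
    for i in range(n):
        x1, y1, z1 = corners[i]
        x2, y2, z2 = corners[(i + 1) % n]
        nx += (y1 - y2) * (z1 + z2)
        ny += (z1 - z2) * (x1 + x2)
        nz += (x1 - x2) * (y1 + y2)
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        raise ValueError("degenerate corner polygon")
    return (nx / length, ny / length, nz / length)


def deflection_value(points, corners):
    """Average distance between the cloth points and the corner plane."""
    normal = newell_normal(corners)
    count = len(corners)
    centroid = tuple(sum(c[k] for c in corners) / count for k in range(3))
    total = 0.0
    for p in points:
        # Unsigned point-to-plane distance along the unit normal.
        total += abs(sum((p[k] - centroid[k]) * normal[k] for k in range(3)))
    return total / len(points)
```

A flat cloth gives a value near zero, and the value grows as the cloth bulges away from the corner plane.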

3.4.1 Method to Determine Wrinkles

Unlike the change due to body shape, the change in

position due to the presence of wrinkles is minor;

therefore, it is difficult to make a judgment based on

the coordinates of each point on the modeling data.

In this study, wrinkles were therefore determined by calculating the Gaussian curvature at each point on the modeling data.
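The source does not state which discrete estimator was used on the PIFuHD mesh. As one common possibility, the sketch below uses the angle-deficit approximation on a triangle mesh: for an interior vertex, 2π minus the sum of the triangle angles meeting at that vertex. The function names are hypothetical, the value is not normalized by vertex area, and it is not meaningful for vertices on the mesh boundary.

```python
import math


def corner_angle(a, b, c):
    """Angle at vertex a of the triangle (a, b, c), in radians."""
    u = [b[k] - a[k] for k in range(3)]
    w = [c[k] - a[k] for k in range(3)]
    dot = sum(u[k] * w[k] for k in range(3))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_w = math.sqrt(sum(x * x for x in w))
    cos_angle = max(-1.0, min(1.0, dot / (norm_u * norm_w)))
    return math.acos(cos_angle)


def angle_deficit(vertices, faces):
    """Per-vertex angle deficit (an estimate of integrated Gaussian curvature).

    vertices: list of (x, y, z); faces: list of vertex-index triples.
    """
    angle_sums = [0.0] * len(vertices)
    for face in faces:
        for k in range(3):
            i, j, l = face[k], face[(k + 1) % 3], face[(k + 2) % 3]
            angle_sums[i] += corner_angle(vertices[i], vertices[j], vertices[l])
    return [2.0 * math.pi - s for s in angle_sums]
```

A flat neighbourhood gives a deficit of zero, a cone-like bump gives a positive value, and a saddle gives a negative value.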

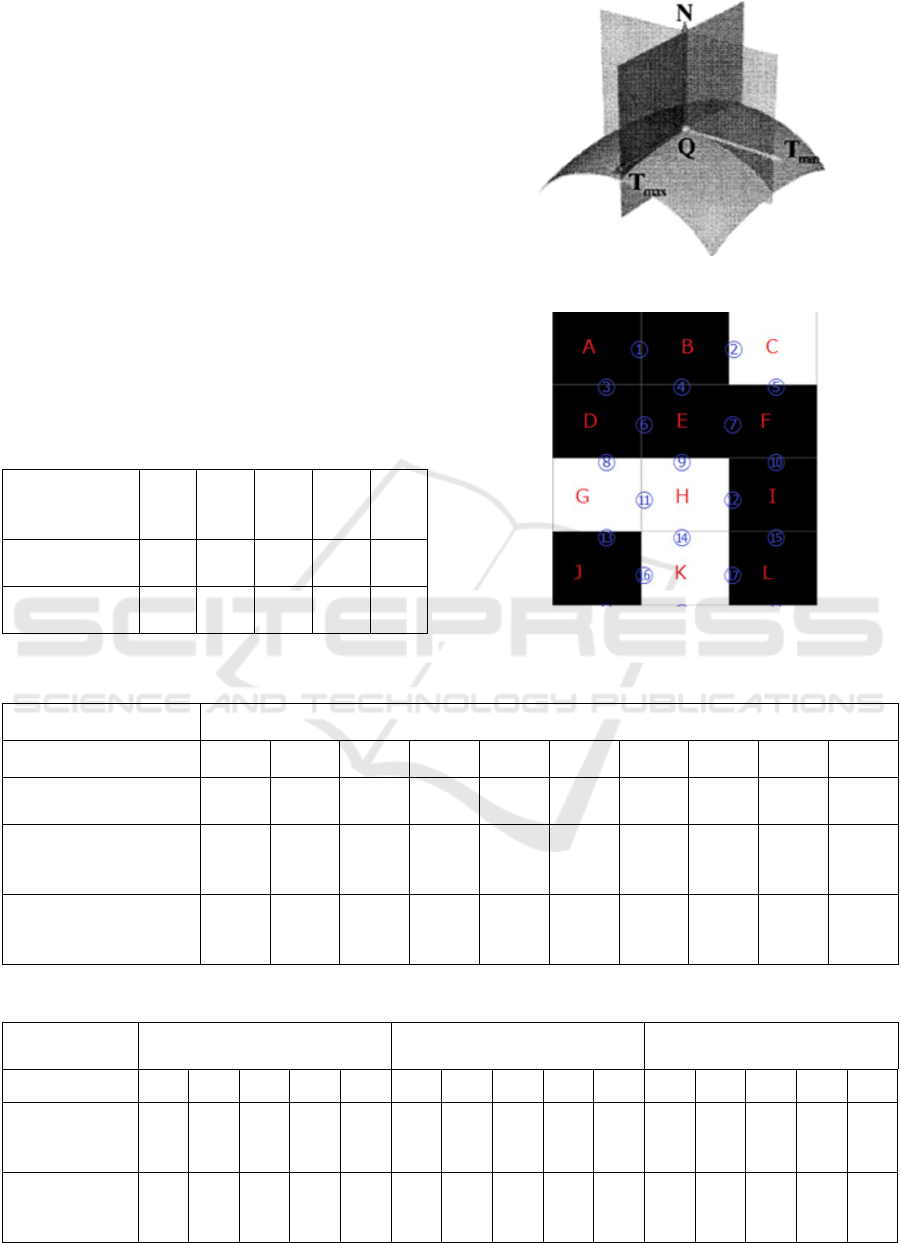

3.4.2 Gaussian Curvature

An example of a surface at an arbitrary point Q is shown in Fig. 9, which is sourced from (Okaniwa and Maekawa, 2010). Consider the normal plane spanned by the unit normal vector N and a unit tangent vector T at Q. The curvature at Q of the intersection curve between this normal plane and the surface is called the normal curvature. Because the unit tangent vector T can be chosen in infinitely many directions, there are infinitely many normal curvatures. The smallest is called the minimum principal curvature, and the largest is called the maximum principal curvature; the product of these two is called the Gaussian curvature.

The larger the absolute value of the Gaussian curvature, the more curved the surface is at that point.
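In symbols, the definition can be written as follows, where the notation is introduced here only for illustration and does not appear in the source: the normal curvature at Q in the tangent direction T is written as κ_n(T), the principal curvatures as κ_min and κ_max, and the Gaussian curvature as K.

```latex
\kappa_{\min} = \min_{T}\, \kappa_n(T), \qquad
\kappa_{\max} = \max_{T}\, \kappa_n(T), \qquad
K = \kappa_{\min}\, \kappa_{\max}
```

K is positive where the surface bends the same way in both principal directions (a bump or a dent) and negative at a saddle, which is why the absolute value |K| is used as the measure of bending.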

Figure 5: Image after automatic level correction.

Table 1: Relationship between edge number and error

number.

Figure 6: Performance comparison of object detection

methods.

3.4.3 Estimation of the Size of Wrinkles

First, by calculating the average of the absolute values of the Gaussian curvature in the region, we can estimate the degree of wrinkling in the region.

Thereafter, the area of wrinkles in the region can be

estimated by counting the number of points where the

absolute value of the Gaussian curvature exceeds a

certain value.
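Both indices can be computed from the per-point Gaussian curvatures of a region, as in the minimal sketch below. The function name and the example threshold are assumptions; the source does not fix a value for the "certain value".

```python
def wrinkle_statistics(curvatures, threshold):
    """Return (mean |K|, number of points with |K| > threshold).

    curvatures: Gaussian curvature values of the points in one region.
    threshold: hypothetical cut-off on |K| used for the area estimate.
    """
    magnitudes = [abs(k) for k in curvatures]
    mean_abs = sum(magnitudes) / len(magnitudes)
    count_above = sum(1 for m in magnitudes if m > threshold)
    return mean_abs, count_above
```

The first value reflects how strongly the region is bent on average, and the second approximates the wrinkled area when the points are sampled roughly uniformly.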


3.4.4 Determination of Wrinkle Position by

Modeling Data

Unlike changes due to body shape, wrinkles are

expected to appear locally only on a part of the

pattern. If we exclude from the judgment only the boundaries that face a wrinkled pattern, the information contained in the remaining pattern can be extracted accurately.

Fig. 10 shows the layout of the artificial fiber pattern used in this study, which consisted of 12 patterns arranged in three horizontal rows and four vertical columns. Considering the deflection of the fabric due to the body shape, the first row is taken as 1/18 to 5/18, the second row as 7/18 to 11/18, and the third row as 13/18 to 17/18 of the pattern area; likewise, the first column is taken as 1/24 to 5/24, the second column as 7/24 to 11/24, the third column as 13/24 to 17/24, and the fourth column as 19/24 to 23/24. The wrinkles in each corresponding area are judged.
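The fractional ranges quoted above follow a regular rule: each band is split into six equal parts and the outer sixth on each side is skipped. The sketch below reproduces them and converts them to pixel boxes. Interpreting the /18 fractions as positions along the height and the /24 fractions as positions along the width is an assumption, as are the function names.

```python
from fractions import Fraction


def band_ranges(n_bands):
    """(start, end) of each band as a fraction of the pattern extent."""
    denom = 6 * n_bands
    return [(Fraction(6 * i + 1, denom), Fraction(6 * i + 5, denom))
            for i in range(n_bands)]


def cell_boxes(width, height, n_rows=3, n_cols=4):
    """Pixel boxes (left, top, right, bottom) of the cells, row by row."""
    boxes = []
    for top, bottom in band_ranges(n_rows):
        for left, right in band_ranges(n_cols):
            boxes.append((int(left * width), int(top * height),
                          int(right * width), int(bottom * height)))
    return boxes
```

Skipping the margins keeps each evaluated area away from the cell borders, where deflection of the fabric is most likely to shift the pattern.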

4 EXPERIMENT

4.1 Recording Conditions of the

Surveillance Camera

The subjects wore yellow T-shirts embedded with an

artificial fiber pattern and stood at a distance of 3 m

from a surveillance camera installed in the Iwamura

Laboratory.

4.2 Dataset

We prepared 4300 images of three T-shirts (cyan, magenta, and yellow) embedded with the Artificial Fiber Pattern and trained YOLOv5 on them.

4.3 Experimental Environment

1) Surveillance camera: Canon Web View Livescope VB-H43 (Full HD video recording: 1920×1080)

2) Cloth media: Printstar T-shirt, Yellow

3) Printer for printing: Brother GT-381

4) CPU: AMD Ryzen 5900x

5) OS: Windows 10 Pro

6) GPU: GeForce RTX 3080

7) Software used: Python 3.8

8) Artificial Fiber Pattern: 2 inch square, r=2, α=0.65; 2 inch square, r=20, α=0.65

Figure 7: Information extraction flow of the proposed

method.

Figure 8: Modeling by PIFuHD.

4.4 Results

4.4.1 Verification of Appropriate Deflection

Values

We reproduced the obese state of the abdomen by

placing a cylindrical rolled cushion between the

abdomen and T-shirt embedded with the artificial

fiber pattern. Four videos were captured for each of

the five patterns of the abdominal circumference (73,

85, 95, 105, and 115 cm), and the average deflection

values for each pattern and the number of videos that

were below 70% in accuracy were compared.

The results are shown in Table 2, which suggests a correlation between abdominal circumference, deflection values, and the number of misjudgments. In

particular, the average deflection value increased


significantly from 85 to 95 cm in the abdominal

circumference, resulting in a corresponding increase

in the number of misjudgments.

4.4.2 Derivation of Appropriate Gaussian

Curvature

As described in Section 3.4.3, there are two possible criteria for judging wrinkles based on the Gaussian curvature: the average of the absolute values of the Gaussian curvature and the number of points where the absolute value of the Gaussian curvature exceeds a certain value.

The wrinkles in the clothes were reproduced by

inserting a bubble wrap (petit-pouch cushion) in the

abdomen while wearing a T-shirt in which an

artificial fiber pattern was embedded, and 10 patterns

of videos were taken by changing the amount and

position of the bubble wrap. Because the boundaries between different patterns (②, ⑤, ⑧, ⑨, ⑫, ⑬, ⑯, ⑰) are not easily affected by wrinkles, we examined the Gaussian curvature of the patterns facing each boundary between identical patterns, which are easily affected by wrinkles: (1), (2), (3), (4), (6), (7), (10), (11), (14), and (15). Note that if only one of the two patterns facing

each boundary is affected by wrinkles, the Gaussian

curvature of the unaffected pattern will be low and

that of the affected pattern will be high. The verification results are presented in Table 3.

As shown in Table 3, the judgment is not affected when the mean Gaussian curvature is less than 0.07.

Moreover, the judgment can be affected when the Gaussian curvature is 0.14 or more. However, this range is too wide, and it is impossible to determine the constant value needed to estimate the wrinkle area.

4.4.3 Accuracy Change by Body Shape and

Wrinkle Detection

Based on the data obtained in Sections 4.4.1 and 4.4.2, a person whose average deflection value over all frames exceeds 500 is treated as undeterminable, as is any boundary facing a pattern region whose average Gaussian curvature over all frames exceeds 0.1.
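The rejection rule can be sketched as below, using the two limits quoted in the text (500 for the deflection value and 0.1 for the Gaussian curvature). The data layout, the identifiers, and the function name are assumptions made for illustration.

```python
from statistics import mean

DEFLECTION_LIMIT = 500.0   # average deflection value above which the person is rejected
CURVATURE_LIMIT = 0.1      # average Gaussian curvature above which a pattern region is rejected


def excluded_boundaries(deflections, cell_curvatures, boundaries):
    """Return the set of boundary ids that are treated as undeterminable.

    deflections: deflection value of each frame.
    cell_curvatures: {cell id: per-frame mean |K| of that pattern region}.
    boundaries: {boundary id: (cell id, cell id)} for adjacent regions.
    """
    # Body-shape check: the whole person is rejected, so every boundary is.
    if mean(deflections) > DEFLECTION_LIMIT:
        return set(boundaries)
    # Wrinkle check: reject only boundaries that face a wrinkled region.
    wrinkled = {cell for cell, values in cell_curvatures.items()
                if mean(values) > CURVATURE_LIMIT}
    return {bid for bid, (a, b) in boundaries.items()
            if a in wrinkled or b in wrinkled}
```

Boundaries that are not returned are decoded as usual, so a local wrinkle removes only the bits next to it.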

A total of 15 videos were taken of a subject wearing a T-shirt embedded with an artificial fiber pattern: five with a cushion held against the abdomen, five with bubble wrap inserted, and five with nothing in between.

Table 4 shows a comparison of the accuracy between the conventional and proposed methods for the boundary with the lowest accuracy. × means that a condition that lowers reading accuracy was detected and the case was excluded from the decision. Table 4 shows that only the cases with the lowest accuracy are excluded.

4.4.4 Discussion and Consideration

The results in Table 4 show that this method was able to reject only the videos that were not read correctly (videos 3, 4, and 5 with the cushion and videos 3, 4, and 5 with the bubble wrap). In other words, a kind of error detection function is implemented, which leads to improved accuracy. In addition, the accuracy for video 2 with the bubble wrap (79%) was better than that of the conventional method (66%). This is because only the problematic area was removed from the judgment, indicating that the local wrinkles could be identified. At present, it is only possible to avoid judging the relevant areas, but further improvement in accuracy is expected by combining the method with error-correcting codes.

In addition, the comparison of the area where wrinkles exist, as mentioned in Section 3.4.3, could

not be successfully realized. This is because the

accuracy was greatly reduced even when there were a

small number of wrinkles. However, if we use the

absolute value of the Gaussian curvature as an index,

we can clearly distinguish the area where the

accuracy decreases.

5 SUMMARY

In this study, we modeled the 3D shape of the wearer

using PIFuHD, estimated the deformation of the

embedded region of the pattern, and verified whether the detection accuracy could be improved by rejecting regions and frames that meet the conditions for accuracy loss.

We assumed that the deformation of the embedded

area was caused by two factors: "deflection of clothes

due to body shape" and "wrinkles". For the former, we

attempted to show the degree of deformation by

calculating the distance between each point and the

plane connecting the four corners of the embedding

area, taking advantage of the fact that each point in the

model data moved away from the same plane as it was

deflected. In the latter case, because the amount of

change in the position of each point owing to wrinkles

is not very large, it is difficult to measure the degree of

wrinkles directly using the coordinates. We attempted

to express the degree of wrinkles based on the amount

of curvature and the number of points that were bent to

a certain degree.


Thus, by calculating the distance between the plane and each point, the deflection of clothes due to body shape was quantified, and by avoiding judgments of people whose clothes are deflected so much that accuracy is affected, we succeeded in reducing misjudgments. Regarding the wrinkle determination using Gaussian curvature, the points that were bent more than a certain level were identified, so that the regions of the artificial fiber pattern in which wrinkles occur can be located. In future, we plan to further improve the accuracy by improving the illumination resistance and introducing error-correcting codes, and to increase the number of bits that can be embedded to include additional information.

Table 2: Relationship between deflection value and accuracy.

| Abdominal circumference [cm] | 72 | 85 | 95 | 105 | 115 |
|---|---|---|---|---|---|
| Deflection value average | 52 | 243 | 2174 | 3821 | 4592 |
| Number of misjudgments | 0 | 0 | 2 | 4 | 4 |

Figure 9: Illustration of Gaussian curvature.

Figure 10: Placement of Artificial Fiber Pattern.

Table 3: Calculation of the appropriate Gaussian curvature.

| Movie number | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Misjudgment number | 0 | 0 | 2 | 2 | 3 | 4 | 6 | 10 | 10 | 10 |
| Minimum Gaussian curvature when misjudged [%] | × | × | 0.15 | 0.28 | 0.14 | 0.24 | 0.18 | 0.43 | 0.49 | 0.53 |
| Maximum Gaussian curvature when judged correctly [%] | 0.03 | 0.04 | 0.03 | 0.03 | 0.06 | × | × | none | none | × |

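Table 4: Overall accuracy change. Columns give the video number (1 to 5) under each condition: Normal, With cushion, and With air bubble packing material.

| Method | Normal 1 | Normal 2 | Normal 3 | Normal 4 | Normal 5 | Cushion 1 | Cushion 2 | Cushion 3 | Cushion 4 | Cushion 5 | Bubble 1 | Bubble 2 | Bubble 3 | Bubble 4 | Bubble 5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Previous method [%] | 93 | 89 | 86 | 86 | 82 | 84 | 68 | 54 | 22 | 6.7 | 78 | 66 | 12 | 0 | 0 |
| Proposed method [%] | 93 | 89 | 86 | 86 | 82 | 86 | 68 | × | × | × | 78 | 79 | × | × | × |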


REFERENCES

Kaneda, K., Hirano, K., Iwamura, K. and Hangai, S. (2008) “Information Hiding Method utilizing Low Visible Natural Fiber Pattern for Printed Document,” 2008 International Conference on Intelligent Information Hiding and Multimedia Signal Processing, IIHMSP2008-IS05-007.

Kaneda, K., Hirano, K., Iwamura, K. and Hangai, S. (2010) “An Improvement of Robustness against Physical Attacks and Equipment Independence in Information Hiding based on the Artificial Fiber Pattern,” WAIS-2010.

Inui, T., Kaneda, K., Iwamura, K. and Echizen, I. (2014) “Improved proposal of information hiding technology by utilizing an artificial fiber pattern for an augmented reality system,” Proceedings of the Fourth IIEEJ International Workshop on Image Electronics and Visual Computing.

Tomita, W., Kaneda, K. and Iwamura, K. (2017) “Expanding Information Hiding Scheme Using Artificial Fiber (AF) Pattern into Katagami Patterns with a Low-Resolution Web Camera,” Proceedings of the Fifth IIEEJ International Workshop on Image Electronics and Visual Computing 2017.

Iwamura, K. and Kaneda, K. (2017) “Printed Document Protection Using Artificial Fiber Pattern and Its Application,” Journal of Printing Science and Technology (JPST), 54-2, pp. 103-111.

Noguchi, N., Kaneda, K. and Iwamura, K. (2018) “New approach for embedding secret information into fiber materials,” GCCE, October 2018.

Tomita, W., Noguchi, N., Kaneda, K., Iwamura, K. and Echizen, I. (2018) “Artificial Fiber Pattern ni yoru moyou heno umekomi syuhou no kentou” (A Method for Embedding Information in Patterns Using Artificial Patterns), The Institute of Electrical Engineers of Japan, 13-4, pp. 435-440.

Urakawa, H., Iwamura, K. and Kaneda, K. (2021) “Improving a tracking accuracy using Artificial Fiber Patterns by applying a new image processing method,” IEICE, vol. 121, no. 29, EMM2021-4, pp. 19-24.

Huang, X., Wang, X., Lv, W., Bai, X., Long, X., Deng, K., Dang, Q., Han, S., Liu, Q., Hu, X., Yu, D., Ma, Y. and Yoshie, O. (2021) “PP-YOLOv2: A Practical Object Detector,” arXiv:2104.10419 [cs.CV].

Bochkovskiy, A., Wang, C. and Liao, H. M. (2020) “YOLOv4: Optimal Speed and Accuracy of Object Detection,” arXiv:2004.10934 [cs.CV].

Saito, S., Huang, Z., Natsume, R., Morishima, S., Li, H. and Kanazawa, A. (2019) “PIFu: Pixel-Aligned Implicit Function for High-Resolution Clothed Human Digitization,” 2019 IEEE/CVF International Conference on Computer Vision (ICCV).

Saito, S., Simon, T., Saragih, J. and Joo, H. (2020) “PIFuHD: Multi-Level Pixel-Aligned Implicit Function for High-Resolution 3D Human Digitization,” CVPR 2020 (Oral Presentation).

Okaniwa, S. and Maekawa, S. (2010) “Estimation of Principal Curvatures and Principal Directions for Triangular Meshes,” sekkeikougaku sistemubumon kouenkai kouen ronbunsyuu 2010.20(0), 3307-1__3307-5_, 2010, The Japan Society of Mechanical Engineers.
