Test Case Backward Generation for Communicating Systems from Event Logs

Sébastien Salva and Jarod Sue

LIMOS - UMR CNRS 6158, Clermont Auvergne University, UCA, France

Keywords:

Communicating Systems, Test Case Generation, Test Case Mutation.

Abstract:

This paper is concerned with generating test cases for communicating systems. Instead of assuming that a complete and up-to-date specification is provided, we assume having an event log collected from an implementation. Event logs are indeed increasingly used to help IT personnel understand and monitor system behaviours or performance. We propose an approach that extracts sessions and business knowledge from an event log and generates an initial set of test cases in the form of abstract test models. The test architecture is adaptable and taken into consideration during this generation. Then, the approach applies 11 test case mutation operators to mimic possible failures. These operators, which are specialised to communicating systems, perform slight modifications that affect the event sequences or the data, or that inject unexpected events. Executable test cases are finally derived from the test models.

1 INTRODUCTION

This paper focuses on testing communicating systems, i.e. systems made up of concurrent components, e.g., Web services or Internet of Things (IoT) components, which interact through a message-passing protocol, e.g. HTTP. Testing such systems is known to be hard because of the difficulty of controlling or monitoring many concurrent components that interact with one another simultaneously. Several works proposed test architectures, which include points of observation (PO), to collect information from the implementation, and points of control and observation (PCO), to collect information but also to control the interactions with the implementation. Others proposed to apply test strategies, or to perform Model-based Testing (MbT), e.g., (Ulrich and König, 1999; van der Bijl et al., 2004; Cao et al., 2009; Torens and Ebrecht, 2010; Kanso et al., 2010; Aouadi et al., 2015; Hierons, 2001). Despite these significant contributions, generating test cases for this kind of system is still difficult and time-consuming. With most of the approaches proposed in the literature, a complex specification must be written and verified; then a model-based testing approach is applied to generate test cases with respect to a static test architecture.

In industry, however, models are often neglected. Writing models is indeed known to be a hard and error-prone task, and keeping them up to date is also difficult, especially over the long term. Instead of using specifications, we propose to rely on event logs collected from a system under test, which we denote SUT. Although no specification is given, some approaches might still be considered to generate test cases:

• a basic record and replay technique: from an event log, it is possible to extract sessions, i.e. sequences of correlated events exchanged among different components. These sessions could then be converted into executable test cases to evaluate SUT again. Unfortunately, this technique does not work well for communicating systems, as SUT may include non-deterministic components, or components that are not testable (not observable or not controllable). Test cases must be adapted w.r.t. these properties;

Test cases must be adapted w.r.t. these properties;

• model learning followed by test case generation: model learning is a research field gathering algorithms specialised in the construction of models by inference. On the one hand, active model learning algorithms interact with SUT by means of tests to get sequences of reactions encoded with models. Few approaches are specialised to communicating systems, e.g., (Petrenko and Avellaneda, 2019), and these are time-consuming and cannot produce large models. On the other hand, passive model learning approaches, e.g., (Mariani and Pastore, 2008; Beschastnikh et al., 2014; Salva and Blot, 2020), are able to generalise behaviours retrieved from large event logs and to encode them into models. But this generalisation might lead to the generation of incorrect test cases, and hence to false positives. Once models are retrieved, classical MbT approaches can be applied to produce test cases. These approaches (re)generate test data along with concrete execution paths to construct test cases, which is again a time-consuming activity.

Salva, S. and Sue, J.
Test Case Backward Generation for Communicating Systems from Event Logs.
DOI: 10.5220/0011309200003266
In Proceedings of the 17th International Conference on Software Technologies (ICSOFT 2022), pages 213-220
ISBN: 978-989-758-588-3; ISSN: 2184-2833
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

This paper presents another approach to generating test cases for communicating systems from event logs, devised with the previous concerns in mind. The resulting test cases aim at experimenting with the whole system. In short, our approach analyses event logs to extract business knowledge, reverse-engineers abstract test models (but no specification) from them, mutates these models with specialised mutation operators, and finally generates executable test cases. The test architecture, i.e. the number of PCOs and POs along with their capabilities (what they are able to observe), is adaptable and taken into consideration during test case generation. Test case mutation (Köroglu and 0001, 2018; Paiva et al., 2020) is an approach that builds new test cases by performing slight modifications on existing test cases, in order to later check whether the system under test is resilient to errors, security attacks, etc.

In other words, we combine some advantages of passive model learning (extraction of sessions from event logs, knowledge extraction) with test case generation (test selection, generation of test cases w.r.t. the PCOs and POs) and test case mutation.

The paper is organised as follows: we provide some definitions used throughout the paper in Section 2. Our approach is presented in Section 3 with a motivating example; we present two algorithms that build test cases from event logs, and 11 test case mutation operators specialised for communicating systems. Section 4 summarises our contributions and draws some perspectives for future work.

2 DEFINITIONS

We denote by $E$ the set of events of the form $e(\alpha)$, with $e$ a label and $\alpha$ an assignment of parameters in $P$ to values in the set of values $V$. We write $x := *$ for the assignment of the parameter $x$ to an arbitrary element of $V$ that is not of interest. The concatenation of two event sequences $\sigma_1, \sigma_2 \in E^*$ is denoted $\sigma_1.\sigma_2$, and $\varepsilon$ denotes the empty sequence. For the sake of readability, we also write $\sigma_1 \in \sigma_2$ when $\sigma_1$ is an (ordered) subsequence of the sequence $\sigma_2$. $prefix(\sigma)$ denotes the set of initial segments of $\sigma$.

We also use the following notations on events to

make our algorithms more readable:

• $from(e(\alpha)) = c$ denotes the source $c$ of an event;

• $to(e(\alpha)) = c$ denotes its destination $c$;

• $isreq(e(\alpha))$ and $isresp(e(\alpha))$ are boolean expressions stating whether the event is a request or a response.
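To make the notations above concrete, here is a minimal Python sketch. The `Event` class, the helper `event` and the function `frm` (renamed because `from` is a Python keyword) are hypothetical; telling requests from responses by a leading "/" in the label is an assumption borrowed from the event names of Figure 5, not part of the definitions.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Event:
    """Hypothetical encoding of e(alpha): a label and a parameter assignment."""
    label: str
    alpha: Tuple[Tuple[str, str], ...]  # sorted (parameter, value) pairs

    def param(self, name: str) -> str:
        return dict(self.alpha)[name]


def event(label: str, src: str, dst: str, **params: str) -> Event:
    """Build e(alpha) with from := src, to := dst and the other parameters."""
    alpha = {**params, "from": src, "to": dst}
    return Event(label, tuple(sorted(alpha.items())))


def frm(e: Event) -> str:  # from(e(alpha)); "from" is a Python keyword
    return e.param("from")


def to(e: Event) -> str:
    return e.param("to")


def isreq(e: Event) -> bool:
    # Assumption: request labels start with "/", as in Figure 5.
    return e.label.startswith("/")


def isresp(e: Event) -> bool:
    # Assumption: every communication event that is not a request is a response.
    return not isreq(e)


def prefixes(sigma: Sequence[Event]) -> List[Tuple[Event, ...]]:
    """prefix(sigma): all initial segments, from epsilon to sigma itself."""
    return [tuple(sigma[:n]) for n in range(len(sigma) + 1)]


def is_subsequence(s1: Sequence[Event], s2: Sequence[Event]) -> bool:
    """s1 "in" s2: the events of s1 occur in s2 in the same order."""
    remaining = iter(s2)
    return all(any(x == y for y in remaining) for x in s1)
```

The shared iterator in `is_subsequence` is what enforces the ordering: each match consumes the events of `s2` up to it.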

We formulate a test case as a deterministic Input Output Labelled Transition System (IOLTS) having a tree form, whose sink states are labelled by the test verdicts pass, fail or inconclusive. Its transitions are labelled by events in $E \cup \{\theta\}$, with $\theta$ a special label expressing the absence of reaction (Phillips, 1987).

Definition 1 (Test Case). A test case is a deterministic IOLTS $\langle Q, q_0, \Sigma, \rightarrow\rangle$ where:

• $Q$ is a finite set of states; $Q$ contains three special states: pass, fail and inconclusive;

• $q_0$ is the initial state;

• $\Sigma \subseteq E$ is the finite set of events. $\Sigma_I \subseteq \Sigma$ is the finite set of input events, beginning with "?", and $\Sigma_O \subseteq \Sigma$ is the finite set of output events, beginning with "!", with $\Sigma_O \cap \Sigma_I = \emptyset$;

• $\rightarrow \subseteq Q \times (\Sigma \cup \{\theta\}) \times Q$ is a finite set of transitions. A transition $(q, e(\alpha), q')$ is also denoted $q \xrightarrow{e(\alpha)} q'$.
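A possible encoding of Definition 1, given only as an illustration under our own assumptions: transitions are stored in a dictionary keyed by (state, label), which makes the IOLTS deterministic by construction, and `well_formed` checks the tree shape while letting the three verdict states act as shared sinks. The names `TestCase`, `add` and `well_formed` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

VERDICTS = {"pass", "fail", "inconclusive"}
THETA = "θ"  # special label: absence of reaction


@dataclass
class TestCase:
    """Hypothetical encoding of Definition 1 as a deterministic IOLTS tree."""
    q0: str = "q0"
    # (state, label) -> target state; one dict key per (state, label) pair
    # makes the transition relation deterministic by construction.
    trans: Dict[Tuple[str, str], str] = field(default_factory=dict)

    def add(self, q: str, label: str, target: str) -> None:
        if label != THETA and label[:1] not in ("?", "!"):
            raise ValueError("events must start with '?' (input) or '!' (output)")
        if (q, label) in self.trans:
            raise ValueError("non-deterministic transition")
        self.trans[(q, label)] = target

    def well_formed(self) -> bool:
        """Verdicts are sinks, q0 has no parent, other states have one parent."""
        parents: Dict[str, int] = {}
        for (q, _label), target in self.trans.items():
            if q in VERDICTS:
                return False
            parents[target] = parents.get(target, 0) + 1
        if self.q0 in parents:
            return False
        return all(n == 1 for q, n in parents.items() if q not in VERDICTS)
```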

3 TEST CASE GENERATION AND MUTATION

The ability of our approach to generate test cases from an event log produced by a communicating system SUT depends on the following realistic assumptions:

• A1 Event Log: the communications among the components can be monitored by POs using different techniques, e.g., wireless sniffers or server logs. These POs may have different observation capabilities, which are known and defined with a relation $PO : Component \times E \rightarrow \{true, false\}$. The resulting event logs are collected in a synchronous environment made up of synchronous communications. They include timestamps showing when the events occurred;

• A2 Event Content: components produce communication events or non-communication events. Both include parameter assignments that identify the source and the destination of each event. For non-communication events, both the source and the destination refer to the component that produced the event. Besides, a communication event can be identified either as a request or as a response;

• A3 Component Collaboration: workflows of events are correlated by means of parameter assignments;

• A4 Component Controllability: we assume knowing the set of components that can be experimented, which is denoted PCO.

ICSOFT 2022 - 17th International Conference on Software Technologies

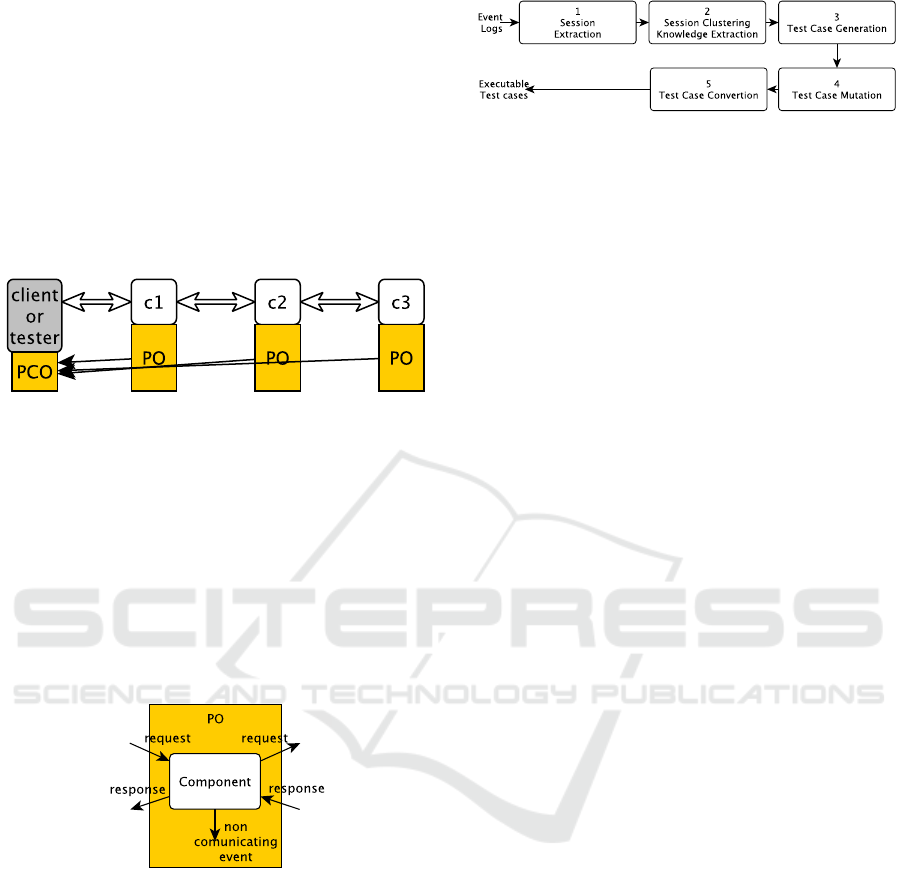

Figure 1: Example of system made up of 3 components; the test architecture has 3 POs and 1 PCO.

Let us illustrate these notions of POs and their capabilities with the system and test architecture of Figure 1. Suppose that, for each of the components $c_1$, $c_2$ and $c_3$, we can observe any received request and the associated response. For $c_1$, this can be formulated by $PO(c_1, e(\alpha)) : (isreq(e(\alpha)) \wedge to(e(\alpha)) == c_1) \vee (isresp(e(\alpha)) \wedge from(e(\alpha)) == c_1)$. Suppose now that nothing can be observed for $c_2$ (there is no point of observation); we then have $PO(c_2, e(\alpha)) : false$.

Figure 2: Classical event types that can be considered for measuring PO observability.

The relation PO can also be used indirectly to evaluate the observation capability of every component. For a component $c$, we define this measure as follows: $obs(c) = \sum_{e(\alpha) \in E} (PO(c, e(\alpha)) == true)$, i.e. the number of events that the PO of $c$ observes. It is worth noting that dealing with all the potential events is not required; it is usually sufficient to consider the five event types illustrated in Figure 2.
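The PO relation of the example and the measure obs(c) can be sketched as follows in Python. The predicates `req_resp_po` and `no_po` encode the two kinds of PO discussed above; since E is generally infinite, `obs` counts over a finite sample of events, in the spirit of the event types of Figure 2. All names are hypothetical.

```python
from typing import Callable, Dict, List, NamedTuple


class Ev(NamedTuple):
    label: str
    src: str      # from(e(alpha))
    dst: str      # to(e(alpha))
    is_req: bool  # isreq(e(alpha)); otherwise a response


PO = Callable[[str, Ev], bool]


def req_resp_po(c: str, e: Ev) -> bool:
    """PO(c, e): requests received by c and responses sent by c."""
    return (e.is_req and e.dst == c) or (not e.is_req and e.src == c)


def no_po(c: str, e: Ev) -> bool:
    """PO(c, e) : false, i.e. no point of observation on c."""
    return False


def obs(c: str, pos: Dict[str, PO], events: List[Ev]) -> int:
    """obs(c): number of events of the (finite) sample that c's PO observes."""
    return sum(1 for e in events if pos[c](c, e))
```

With the six events of the Figure 5 sessions, the API and Pay POs each observe two events, while a component without PO observes none.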

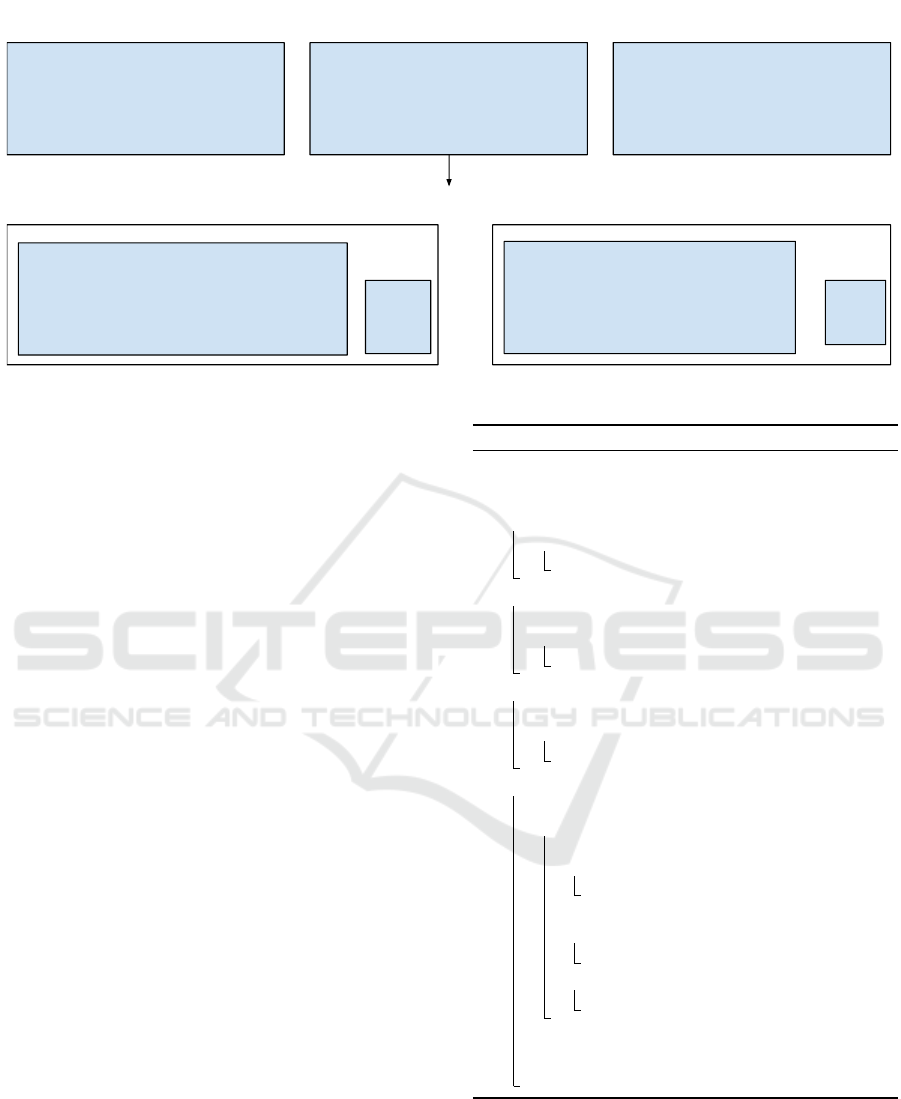

Figure 3 illustrates the successive steps of the test case generation. The event log is first segmented into sessions by means of A3. As many sessions may express the same behaviours of SUT, Step 2 groups the sessions expressing similar behaviours and traverses them to extract knowledge, e.g., the fact that an event represents an error or a failure, or that an event sequence represents a token generation. This knowledge, expressed in the form of labels, is used for test case generation and mutation. This step returns a set of sequences of elements $\langle e(\alpha), l\rangle$, with $e(\alpha)$ an event whose parameter values are hidden and $l$ a list of labels expressing knowledge. These sequences are called abstract traces.

Figure 3: Approach Overview.

Step 3 generates a first test case set from abstract traces. It selects abstract traces so as to keep in priority those that should trigger crashes and errors, and those covering the most components and events. This step also transforms the selected abstract traces to insert the notion of input and output and to take the capabilities of the POs into account. Test cases, given in the form of IOLTS trees, are then generated.

The next step applies 11 mutation operators, which modify events or data, to the initial test case set. These operators mostly produce test cases dedicated to testing the robustness or security of SUT. Finally, every test case tree is converted into an executable test case. These steps are detailed below.

3.1 Session Extraction

Recovering sessions from an event log is quite straightforward when the parameters used to identify sessions, namely a correlation set, are known in advance. When this is not the case, we proposed in (Salva et al., 2021) an algorithm (and a tool) that retrieves sessions by exploring the space of correlation sets that can be derived from an event log. This algorithm can return the best session set that meets a predefined set of quality attributes defined by the user, or return several solutions sorted from the best to the lowest quality.

The tool returns a set $S$ of sessions along with a correlation set $Corr(\sigma)$ for every session $\sigma \in S$. All the assignments $p := v$ found in $\sigma$ whose parameters are also used in $Corr(\sigma)$ are replaced by $p := *$.
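When the correlation set is known in advance, session extraction and the masking of correlated values reduce to grouping and rewriting, as in this sketch. It does not reproduce the correlation-set exploration of (Salva et al., 2021); the dictionary encoding of events, the timestamp key `ts` and the function names are assumptions.

```python
from typing import Dict, List, Tuple

Event = Dict[str, object]  # hypothetical: {"ts": int, "label": str, param: value, ...}


def extract_sessions(log: List[Event], corr: Tuple[str, ...]) -> Dict[tuple, List[Event]]:
    """Group time-ordered events that share the same values for the correlation set."""
    sessions: Dict[tuple, List[Event]] = {}
    for e in sorted(log, key=lambda e: e["ts"]):
        key = tuple(e.get(p) for p in corr)
        sessions.setdefault(key, []).append(e)
    return sessions


def mask(session: List[Event], corr: Tuple[str, ...]) -> List[Event]:
    """Replace every assignment p := v with p in Corr(sigma) by p := *."""
    return [{p: ("*" if p in corr else v) for p, v in e.items()} for e in session]
```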

3.2 Session Clustering and Knowledge Extraction

This step takes as input the session set $S$ and builds a set of abstract traces of the form $\langle e_1(\alpha_1), l_1\rangle \cdots \langle e_k(\alpha_k), l_k\rangle$ such that the parameter values are replaced by "*", except for the parameters from and to. $l_1, \ldots, l_k$ are label lists expressing some business knowledge about the sessions, e.g., "error" or "crash".

Definition 2 (Abstract Traces). Let $L$ be a set of labels. An abstract trace is a sequence $\langle e_1(\alpha_1), l_1\rangle \cdots \langle e_k(\alpha_k), l_k\rangle \in (E \times 2^L)^*$ such that, for $1 \le i \le k$, $e_i(\alpha_i) \in E$, every parameter in $P \setminus \{from, to\}$ is assigned to "*", and $l_i \subseteq L$ is a set of labels.

To build abstract traces, we begin by partitioning the session set $S$ into classes of equivalent sessions. Two sessions are equivalent if they share the same sequence of abstract events. Given an event $e(\alpha)$, an abstract event $e(\alpha')$ simply results from replacing the parameter values by "*", except for the parameters from and to. The equivalence relation between two sessions is defined by means of a projection, which performs this event abstraction:

Definition 3. Two event sequences $\sigma_1, \sigma_2 \in E^*$ are equivalent, denoted $\sigma_1 \mathrel{\widehat{\sim}} \sigma_2$, iff $proj_{\{from, to\}}(\sigma_1) = proj_{\{from, to\}}(\sigma_2)$, with $proj_Q : E^* \rightarrow E^*$ the projection $e_1(\alpha'_1) \ldots e_k(\alpha'_k) = proj_Q(e_1(\alpha_1) \ldots e_k(\alpha_k))$ and

$$\alpha'_i = \{x := * \mid x := v \in \alpha_i \wedge x \notin Q\} \cup \{x := v \mid x := v \in \alpha_i \wedge x \in Q\}$$
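Definition 3 translates almost directly into code: project every session on {from, to} and use the projection as a dictionary key, so that each key gathers one class of equivalent sessions. The tuple encoding of events and the names `proj` and `partition` are illustrative assumptions.

```python
from typing import Dict, FrozenSet, List, Tuple

# Hypothetical encoding: an event is (label, sorted (parameter, value) pairs).
Event = Tuple[str, Tuple[Tuple[str, str], ...]]
Session = List[Event]


def proj(sigma: Session, q: FrozenSet[str]) -> Tuple[Event, ...]:
    """proj_Q: keep x := v when x is in Q, otherwise rewrite it as x := *."""
    return tuple(
        (label, tuple((x, v if x in q else "*") for x, v in alpha))
        for label, alpha in sigma
    )


def partition(sessions: List[Session]) -> Dict[Tuple[Event, ...], List[Session]]:
    """Classes of equivalent sessions: same projection on {from, to}."""
    q = frozenset({"from", "to"})
    classes: Dict[Tuple[Event, ...], List[Session]] = {}
    for s in sessions:
        classes.setdefault(proj(s, q), []).append(s)
    return classes
```

Each key of the result is exactly the sequence of abstract events shared by its class, which is the starting point of the abstract trace of that class.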

Now, given a class of equivalent sessions $cl = \{\sigma_1, \ldots, \sigma_m\}$, we analyse the events and parameter values to extract knowledge by means of an expert system, i.e. an inference engine that applies a set of rules to a base of facts. In our context, the base of facts is a session set. The rules encode expert knowledge about communicating systems and build abstract traces. It is worth noting that an expert system saves time, as it can be reused on several communicating systems.

We represent inference rules with the format When conditions on facts Then actions on facts (the format used by the Drools inference engine¹). To ensure that this step is performed in finite time and in a deterministic way, the inference rules have to meet these hypotheses:

• Finite Complexity: a rule can only be applied a limited number of times to the same knowledge base;

• Soundness: the inference rules are Modus Ponens (simple implications that lead to sound facts if the original facts are true).

We devised inference rules that analyse event content or event sequences to recognise crashes, errors, the authentication of users, and the generation of access tokens, which temporarily provide access to specific users' data. Figure 4 exemplifies a rule recognising a system crash from an HTTP status of 500 or above. It creates an abstract event carrying the new label "crash".

rule "LabelCrash 1"
when
    $ev: Event(paramStatus >= 500);
then
    insert(new Aevent($ev, L("crash")));
end

Figure 4: Inference rule example.

¹ https://www.drools.org/
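The sketch below is a deliberately simplified stand-in for the Drools engine, not its API: each hypothetical rule inspects one event (the fact) and returns the labels it infers. Because these rules read only the event itself, a single pass yields the same result as repeated firing; rules over event sequences, such as token generation, would need a real forward-chaining engine. `label_crash` mirrors Figure 4, while `label_token` and the `access_token` parameter are invented for illustration.

```python
from typing import Callable, Dict, List, Set, Tuple

Fact = Dict[str, str]            # hypothetical: one event with its parameters
Rule = Callable[[Fact], Set[str]]


def label_crash(e: Fact) -> Set[str]:
    """Mirrors Figure 4: when the HTTP status is >= 500, then label "crash"."""
    status = e.get("status", "")
    return {"crash"} if status.isdigit() and int(status) >= 500 else set()


def label_token(e: Fact) -> Set[str]:
    """Hypothetical rule: an event carrying an access token is labelled "token"."""
    return {"token"} if "access_token" in e else set()


def infer(session: List[Fact], rules: List[Rule]) -> List[Tuple[Fact, Set[str]]]:
    """Build <event, labels> pairs by firing every rule on every event."""
    return [(e, set().union(*(rule(e) for rule in rules))) for e in session]
```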

Once the equivalence class $cl = \{\sigma_1, \ldots, \sigma_m\}$ has been analysed by the expert system, we obtain one abstract trace of the form $\langle e_1(\alpha'_1), l_1\rangle \cdots \langle e_k(\alpha'_k), l_k\rangle$. From the equivalence classes of sessions $cl_1, \ldots, cl_n$, we obtain a set of $n$ abstract traces, which is denoted ATraces.

Figure 5 illustrates an example of 3 sessions extracted from an event log, itself produced by 3 components API, Products and Pay, using the architecture of Figure 1. The first two sessions are equivalent, as they share the same sequence of event labels and parameters, with different data. Our approach builds two clusters of equivalent sessions, $cl(t_1)$ and $cl(t_2)$, and two abstract traces, $t_1$ and $t_2$. $t_2$ includes one new label "crash", as the expert system has detected that its last event expresses a crash of the component Pay.

3.3 Test Case Generation

Test cases are generated in the form of IOLTS trees whose final states are labelled by a verdict. The IOLTS formalism allows us to synthesise generic test cases from which concrete test scripts, written in various languages, can be derived. Besides, transformations or mutations are easier to define on IOLTS test cases.

Test cases are built using the set PCO and the relation PO, so as to take the controllability and observability of the components into account. At this point, a user may decide to adapt the system's POs with regard to the available tooling. As the initial event log has been collected from every component $c$ in accordance with a certain level of observability given by $obs(c)$, the user may only lower this level, by redefining $PO(c, e(\alpha))$.

Session S1 (class cl(t1)):
/order(from:=Cl,to:=API,m:=POST,body:=1,key:=*)
/supply(from:=API,to:=Products,m:=POST,body:=1,key:=*)
/payment(from:=Products,to:=Pay,m:=GET,key:=*)
ok(from:=Pay,to:=Products,status:=200,key:=*)
ok(from:=Products,to:=API,status:=200,key:=*)
ok(from:=API,to:=Cl,status:=200,key:=*)

Session S2 (class cl(t1)):
/order(from:=Cl,to:=API,m:=POST,body:=1,key:=*)
/supply(from:=API,to:=Products,m:=POST,body:=1,key:=*)
/payment(from:=Products,to:=Pay,m:=GET,key:=*)
ok(from:=Pay,to:=Products,status:=200,key:=*)
ok(from:=Products,to:=API,status:=200,key:=*)
ok(from:=API,to:=Cl,status:=200,key:=*)

Session S3 (class cl(t2)):
/order(from:=Cl,to:=API,m:=POST,body:=1,key:=3)
/supply(from:=API,to:=Products,m:=POST,body:=1,key:=*)
/payment(from:=Products,to:=Pay,m:=GET,key:=*)
error(from:=Pay,to:=Products,status:=500,key:=*)

Abstract trace t1:
⟨/order(from:=Cl,to:=API,m:=*,body:=*,key:=*), {}⟩
⟨/supply(from:=API,to:=Products,m:=*,body:=*,key:=*), {}⟩
⟨/payment(from:=Products,to:=Pay,m:=*,key:=*), {}⟩
⟨ok(from:=Pay,to:=Products,status:=*,key:=*), {}⟩
⟨ok(from:=Products,to:=API,status:=*,key:=*), {}⟩
⟨ok(from:=API,to:=Cl,status:=*,key:=*), {}⟩

Abstract trace t2:
⟨/order(from:=Cl,to:=API,m:=*,body:=*,key:=*), {}⟩
⟨/supply(from:=API,to:=Products,m:=*,body:=*,key:=*), {}⟩
⟨/payment(from:=Products,to:=Pay,m:=*,key:=*), {}⟩
⟨error(from:=Pay,to:=Products,status:=*,key:=*), {crash}⟩

Figure 5: Example of sessions and abstract traces.

The test case generation is implemented by Algorithms 1 and 2. Algorithm 1 takes as input a set of abstract traces ATraces and returns the set SelectedATraces. As the set ATraces may be large, the algorithm performs a selection according to 3 criteria (lines 1-11):

1. Crash/Error Coverage: it selects in priority all the abstract traces in which at least one event expresses a crash or an error;

2. Component Coverage: it completes them with abstract traces such that every component of SUT is covered by the tests. This is performed with the relation $<_c$, which ranks first the abstract traces whose events are produced by the most components not yet referenced in SelectedATraces: $t_1 <_c t_2$ iff $|from(\{t_1\}) \setminus from(SelectedATraces)| \geq |from(\{t_2\}) \setminus from(SelectedATraces)|$, with $from(\mathcal{E}) = \bigcup_{\sigma \in \mathcal{E}} \{from(e(\alpha)) \mid \langle e(\alpha), l\rangle \in \sigma\}$;

3. Event Coverage: it also selects other abstract traces until the ratio of selected events over the total number of events reaches a given threshold. These traces are selected with the relation $<_e$, which ranks first the abstract traces having the most events not yet used in SelectedATraces: $t_1 <_e t_2$ iff $|event(\{t_1\}) \setminus event(SelectedATraces)| \geq |event(\{t_2\}) \setminus event(SelectedATraces)|$, with $event(\mathcal{E}) = \bigcup_{\sigma \in \mathcal{E}} \{e(\alpha) \mid \langle e(\alpha), l\rangle \in \sigma\}$.
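The three criteria can be read as a greedy selection, sketched below in Python under simplifying assumptions: an abstract event is a hypothetical tuple (label, from, to, labels), the PCO test and the InOut transformation of Algorithm 1 are left out, and every trace taken is removed from the remaining ones, which guarantees termination. `max` returns the first best trace, which plays the role of the orders $<_c$ and $<_e$.

```python
from typing import FrozenSet, List, Set, Tuple

# Hypothetical encoding of an abstract event: (label, from, to, labels).
AEvent = Tuple[str, str, str, FrozenSet[str]]
ATrace = Tuple[AEvent, ...]


def froms(traces: List[ATrace]) -> Set[str]:
    return {src for t in traces for (_, src, _, _) in t}


def events(traces: List[ATrace]) -> Set[Tuple[str, str, str]]:
    return {(lab, src, dst) for t in traces for (lab, src, dst, _) in t}


def select(atraces: List[ATrace], threshold: float) -> List[ATrace]:
    remaining, selected = list(atraces), []

    def take(t: ATrace) -> None:
        selected.append(t)
        remaining.remove(t)

    # 1. Crash/Error Coverage
    for t in list(remaining):
        if any(labels & {"crash", "error"} for (_, _, _, labels) in t):
            take(t)
    # 2. Component Coverage: greedy choice w.r.t. <_c
    while remaining and not froms(atraces) <= froms(selected):
        take(max(remaining, key=lambda t: len(froms([t]) - froms(selected))))
    # 3. Event Coverage: greedy choice w.r.t. <_e until the threshold is met
    total = len(events(atraces)) or 1
    while remaining and len(events(selected)) / total < threshold:
        take(max(remaining, key=lambda t: len(events([t]) - events(selected))))
    return selected
```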

Then, Algorithm 1 adapts the selected abstract traces with respect to PCO and PO. It only keeps the abstract traces whose first event is addressed to a component $c$ that can be experimented ($c \in PCO$). Besides, the algorithm calls the procedure InOut, which traverses the events of an abstract trace $t$ to insert the notion of input and output. Meanwhile, the procedure filters out the events that are unobservable with respect to the relation PO: an event $e_i(\alpha_i)$ such that $PO(from(e_i(\alpha_i)), e_i(\alpha_i)) \vee PO(to(e_i(\alpha_i)), e_i(\alpha_i))$ is false is not kept to build a test case (line 17).

Algorithm 1.
input: ATraces
output: SelectedATraces
1 foreach $t \in ATraces$ such that $\exists\, 1 \le i \le k : \text{"error"} \in l_i \vee \text{"crash"} \in l_i$ do
2   if $to(e_1(\alpha_1)) \in PCO$ then
3     InOut(t);
4 while $from(SelectedATraces) \neq from(ATraces)$ do
5   Order the traces in ATraces w.r.t. $<_c$ and take the first trace $t$;
6   if $to(e_1(\alpha_1)) \in PCO$ then
7     InOut(t);
8 while $|event(SelectedATraces)| / |event(ATraces)| < threshold$ do
9   Order the traces in ATraces w.r.t. $<_e$ and take the first trace $t$;
10  if $to(e_1(\alpha_1)) \in PCO$ then
11    InOut(t);
12 Procedure InOut($t = \langle e_1(\alpha_1), l_1\rangle \cdots \langle e_k(\alpha_k), l_k\rangle$) is
13   $t_2 := \varepsilon$; $cl(t_2) := cl(t)$;
14   for $1 \le i \le k$ do
15     if $isreq(e_i(\alpha_i)) \wedge to(e_i(\alpha_i)) == to(e_1(\alpha_1)) \wedge from(e_i(\alpha_i)) == from(e_1(\alpha_1))$ then
16       $t_2 := t_2.\langle ?e_i(\alpha_i), l_i\rangle$;
17     else if $PO(from(e_i(\alpha_i)), e_i(\alpha_i)) \vee PO(to(e_i(\alpha_i)), e_i(\alpha_i))$ then
18       $t_2 := t_2.\langle !e_i(\alpha_i), l_i\rangle$;
19     else if $isresp(e_i(\alpha_i)) \wedge from(e_i(\alpha_i)) == c$ then
20       $t_2 := t_2.\langle !e_i(\alpha_i), l_i\rangle$;
21   Update $cl(t_2)$ w.r.t. $t_2$;
22   $SelectedATraces := SelectedATraces \cup \{t_2\}$;
23   $ATraces := ATraces \setminus \{t\}$;
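The following Python sketch mirrors the InOut procedure under two assumptions of ours: the component c of line 19 is taken to be the experimented component $to(e_1(\alpha_1))$, and the tests of lines 17 and 19 are only tried when the previous ones fail. PO is passed as a predicate; the tuple encoding and the names are hypothetical.

```python
from typing import Callable, FrozenSet, List, Tuple

# Hypothetical abstract event: (label, from, to, is_req, labels).
AEvent = Tuple[str, str, str, bool, FrozenSet[str]]
PO = Callable[[str, AEvent], bool]


def in_out(t: List[AEvent], po: PO) -> List[Tuple[str, FrozenSet[str]]]:
    """Sketch of InOut: tag stimuli with '?', observations with '!', drop the rest."""
    _, first_src, first_dst, _, _ = t[0]
    c = first_dst  # assumption: c is the experimented component to(e1)
    t2 = []
    for e in t:
        label, src, dst, is_req, labels = e
        if is_req and src == first_src and dst == first_dst:
            t2.append(("?" + label, labels))      # request sent by the tester
        elif po(src, e) or po(dst, e):
            t2.append(("!" + label, labels))      # observable through a PO
        elif not is_req and src == c:
            t2.append(("!" + label, labels))      # response returned to the tester
    return t2
```

With a PO on API and Pay but none on Products, the trace $t_1$ of Figure 5 keeps ?/order, !/payment and two !ok events, which matches the removals described for Figure 6.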

Algorithm 2 takes the set SelectedATraces and produces IOLTS test cases. Given an abstract trace $t$, the algorithm selects one event sequence of the class $cl(t)$ and builds an initial test case $tc$ composed of parameter values (lines 2-4). The IOLTS $tc$ is derived by means of the operator $lts : (E \times 2^L)^* \times E^* \times \{fail, pass\} \rightarrow IOLTS$, which takes an abstract trace, its related event sequence and a verdict $v$, and returns an IOLTS $\langle Q, q_0, \Sigma, \rightarrow\rangle$ defined by the rule

$$\langle e_1(\alpha'_1), l_1\rangle \cdots \langle e_k(\alpha'_k), l_k\rangle,\ e_1(\alpha_1) \ldots e_k(\alpha_k),\ v \vdash q_0 \xrightarrow{e_1(\alpha_1), l_1} q_1 \ldots q_{k-1} \xrightarrow{e_k(\alpha_k), l_k} v$$

The verdict $v$ of $tc$ is established from the labels found in the abstract trace: $v(\langle e_1(\alpha_1), l_1\rangle \cdots \langle e_k(\alpha_k), l_k\rangle)$ is fail iff $\exists\, 1 \le i \le k : \text{"crash"} \in l_i$; otherwise, it is pass.
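Here is one way to code the verdict function v and the operator lts, assuming a test case is stored as a dictionary from (state, event) to (next state, labels). The direction and the labels come from the abstract trace, the concrete parameter values from the chosen session; the string encoding of events is an assumption made for brevity.

```python
from typing import Dict, FrozenSet, List, Tuple

Labelled = Tuple[str, FrozenSet[str]]  # hypothetical: ("?label" or "!label", labels)


def verdict(atrace: List[Labelled]) -> str:
    """v(t) = fail iff some event of t carries the label "crash", pass otherwise."""
    return "fail" if any("crash" in labels for _, labels in atrace) else "pass"


def lts(atrace: List[Labelled], sigma: List[str], v: str
        ) -> Dict[Tuple[str, str], Tuple[str, FrozenSet[str]]]:
    """Chain q0 -e1,l1-> q1 ... q_{k-1} -ek,lk-> v over the concrete events of sigma.

    The direction ('?' or '!') and the labels come from the abstract trace;
    the parameter values come from the session sigma.
    """
    if not sigma or len(atrace) != len(sigma):
        raise ValueError("expects a non-empty session matching the abstract trace")
    trans = {}
    for i, ((abstract, labels), concrete) in enumerate(zip(atrace, sigma)):
        target = v if i == len(sigma) - 1 else f"q{i + 1}"
        trans[(f"q{i}", abstract[0] + concrete)] = (target, labels)
    return trans
```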

Algorithm 2 then checks whether other events may be observed after experimenting SUT with an arbitrary event sequence of $prefix(traces(tc))$ (lines 5-9). Indeed, after executing the events of a sequence in $prefix(traces(tc))$, depending on the internal states of SUT, one might observe the same output event with different parameter assignments, different output events, or no reaction at all (formulated with the label $\theta$). Given an event sequence $\sigma$ collected from SUT, we formulate this notion of observation after the execution of $\sigma_p \in \sigma$ with:

$$out(\sigma, \sigma_p) = \begin{cases} \{!e(\alpha)\} & \text{if } \sigma_p.!e(\alpha) \in \sigma \\ \{\theta\} & \text{otherwise} \end{cases}$$

We can now say that SUT may produce two different observations after being experimented with the event sequence $\sigma_p$ iff there exist two sessions $\sigma_1$ and $\sigma_2$ such that $\sigma_p \in prefix(\sigma_1) \cap prefix(\sigma_2)$ and $out(\sigma_1, \sigma_p) \neq out(\sigma_2, \sigma_p)$. Algorithm 2 hence traverses every abstract trace $t_2$ and every session $\sigma_2$ in $cl(t_2)$ to check whether observations different from those of the current test case $tc$ are possible (line 5). If this happens, an IOLTS $tc_2$ is built from $\sigma_2$, and $tc$ is completed with $tc_2$ by means of the parallel synchronisation operator $\parallel$.
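The sketch below illustrates out and the search for a common prefix after which two sessions diverge. Two assumptions are ours: the condition $\sigma_p.!e(\alpha) \in \sigma$ is read as "is a prefix of σ", and the synchronisation $tc \parallel tc_2$ of two deterministic trees is approximated by their prefix-tree union (`merge`). Events are plain strings carrying their "?" or "!" direction, and all names are hypothetical.

```python
from typing import Dict, List, Optional, Set, Union

THETA = "θ"
Tree = Dict[str, Union["Tree", str]]  # event -> subtree, or verdict at a leaf


def out(sigma: List[str], sigma_p: List[str]) -> Set[str]:
    """{!e} if sigma continues sigma_p with the output !e, {theta} otherwise."""
    n = len(sigma_p)
    if sigma[:n] == sigma_p and n < len(sigma) and sigma[n].startswith("!"):
        return {sigma[n]}
    return {THETA}


def divergence(s1: List[str], s2: List[str]) -> Optional[List[str]]:
    """Shortest common prefix after which s1 and s2 lead to different observations."""
    for n in range(min(len(s1), len(s2)) + 1):
        if s1[:n] != s2[:n]:
            return None  # no longer a common prefix
        if out(s1, s1[:n]) != out(s2, s1[:n]):
            return s1[:n]
    return None


def as_tree(sigma: List[str], verdict: str) -> Tree:
    tree: Union[Tree, str] = verdict
    for e in reversed(sigma):
        tree = {e: tree}
    return tree  # sigma is assumed non-empty


def merge(t1: Tree, t2: Tree) -> Tree:
    """Prefix-tree union, our reading of tc || tc2 on deterministic trees."""
    result = dict(t1)
    for e, sub in t2.items():
        if isinstance(result.get(e), dict) and isinstance(sub, dict):
            result[e] = merge(result[e], sub)
        else:
            result.setdefault(e, sub)  # on a conflict, the branch of t1 is kept
    return result
```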

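Furthermore, as event logs do not necessarily encode all the behaviours of SUT, the test case $tc$ is completed (line 10) with the operator $compl : IOLTS \rightarrow IOLTS$ defined by these rules:

$$r_1: q_1 \xrightarrow{?e(\alpha), l} q_2,\ q_2 \in \{pass, fail\} \vdash q_1 \xrightarrow{?e(\alpha), l} q_{11} \xrightarrow{\theta} q_2,\ q_{11} \xrightarrow{!*} fail,\ q_1 \xrightarrow{!*} fail$$

$$r_2: q_1 \xrightarrow{?e(\alpha), l} q_2,\ q_2 \notin \{pass, fail\} \vdash q_1 \xrightarrow{?e(\alpha), l} q_2,\ q_1 \xrightarrow{!*} fail$$

$$r_3: q_1 \xrightarrow{!e(\alpha), l} q_2 \vdash q_1 \xrightarrow{!e(\alpha), l} q_2,\ q_1 \xrightarrow{!*} inconclusive$$

$$r_4: q_1 \xrightarrow{!e(\alpha), l} q_2,\ q_1 \xrightarrow{?e(\alpha), l} q_3 \notin\, \rightarrow\ \vdash q_1 \xrightarrow{\theta} fail$$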

The inference rule $r_1$ means that, when the test case $tc$ ends with an input event, a transition labelled by $\theta$ and leading to the verdict state is added, to express that the absence of reaction is expected. Two transitions to fail are added to express that the observation of any other output event (label !*) is incorrect. Similarly, $r_2$ targets the other transitions labelled by input events. $r_3$ completes the test case when an output event is expected: it adds a new transition $q_1 \xrightarrow{!*} inconclusive$, modelling that we cannot conclude whether the behaviour is correct when any other unexpected output event is observed from $q_1$. $r_4$ complements the previous rule when there are only outgoing transitions labelled by output events from $q_1$: it adds a transition to fail, modelling that the observation of no reaction is faulty.
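The rules r1 to r4 can be applied bottom-up on a tree, as in this hedged Python sketch. It assumes that each state has either input or output outgoing transitions, never both, so that r2 and r3 never compete for the same !* transition; the nested-dictionary encoding of trees and the function name are also ours.

```python
from typing import Dict, Union

THETA = "θ"
Tree = Dict[str, Union["Tree", str]]  # event -> subtree, or verdict at a leaf


def compl(tree: Union[Tree, str]) -> Union[Tree, str]:
    """Apply r1-r4 bottom-up to a test case tree (sketch)."""
    if not isinstance(tree, dict):
        return tree  # verdict leaf
    done: Tree = {}
    for e, sub in tree.items():
        sub = compl(sub)
        if e.startswith("?") and isinstance(sub, str) and sub in {"pass", "fail"}:
            sub = {THETA: sub, "!*": "fail"}  # r1: q1 -?e-> q11 -θ-> verdict
        done[e] = sub
    has_in = any(e.startswith("?") for e in tree)
    has_out = any(e.startswith("!") for e in tree)
    if has_out:
        done["!*"] = "inconclusive"           # r3: any other output
        if not has_in:
            done[THETA] = "fail"              # r4: no reaction is faulty
    elif has_in:
        done["!*"] = "fail"                   # r1 / r2: unexpected output
    return done
```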

Algorithm 2.
input: ATraces (the set SelectedATraces returned by Algorithm 1)
output: TC
1 foreach $t = \langle e_1(\alpha_1), l_1\rangle \cdots \langle e_k(\alpha_k), l_k\rangle \in ATraces$ do
2   Choose an arbitrary $\sigma \in cl(t)$;
3   $cl(t) := cl(t) \setminus \{\sigma\}$;
4   $tc := lts(t, \sigma, v(t))$;
5   foreach $t_2 \in ATraces$, $\sigma_2 \in cl(t_2)$ such that $\exists \sigma_p \in \bigcup_{\sigma' \in traces(tc)} prefix(\sigma') \cap prefix(\sigma_2) : out(\sigma', \sigma_p) \neq out(\sigma_2, \sigma_p)$ do
6     $tc_2 := lts(t_2, \sigma_2, v(t_2))$;
7     $cl(t_2) := cl(t_2) \setminus \{\sigma_2\}$;
8     $tc := tc \parallel tc_2$;
9     $ATraces := ATraces \setminus \{t_2\}$;
10  $tc := compl(tc)$;
11  complete the input events of $tc$;
12  $TC := TC \cup \{tc\}$;
13  $ATraces := ATraces \setminus \{t\}$;

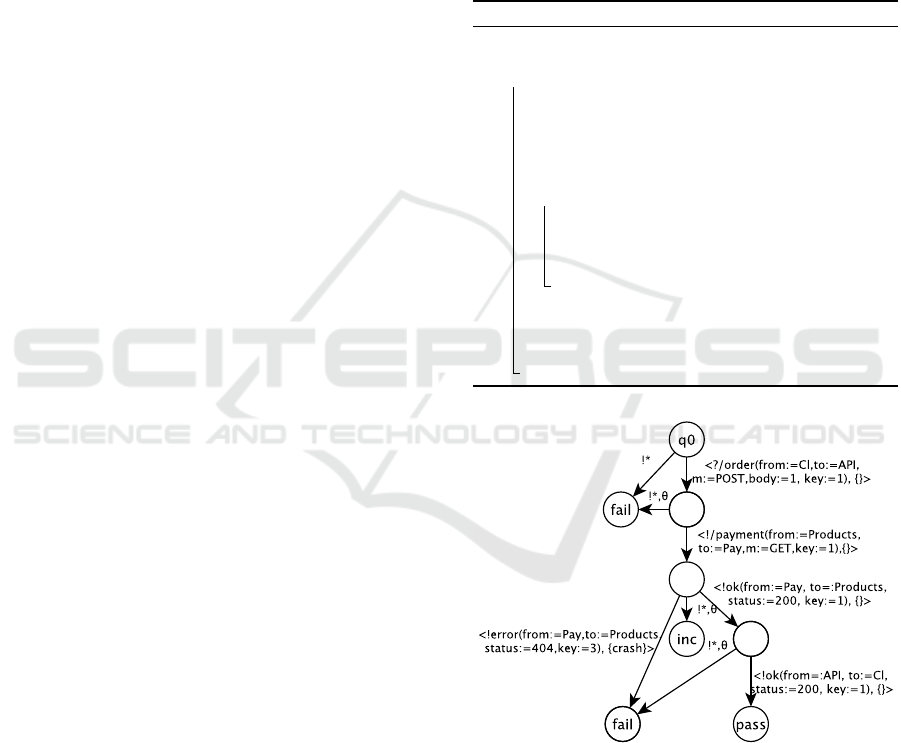

Figure 6: Test case example.

Figure 6 illustrates a test case obtained from the abstract traces of Figure 5 after removing the PO of the component "Products" ($PO(\text{"Products"}, e(\alpha)) : false$). The event /supply() and its response ok() were hence removed from the test case to comply with the new test architecture.


Table 1: Mutation operators and short description.

| Name | Def. | Desc.: all the test cases that can be derived from tc by | Expected |
|---|---|---|---|
| Event Duplication | $\forall (?e(\alpha), L) \in \Sigma_I$, $add(tc, (?e(\alpha), L), (?e(\alpha), L))$ | adding just before $?e(\alpha)$ a copy of the event followed by its related response | at least the response, no error, no crash |
| Event Swapping | $\forall (?e_1(\alpha_1), L_1), (?e_2(\alpha_2), L_2) \in \Sigma_I$, $swap(tc, (?e_1(\alpha_1), L_1), (?e_2(\alpha_2), L_2))$ | swapping two different input events and their related responses along with all their nested output events | no crash |
| Event Removal | $\forall (?e(\alpha), L) \in \Sigma_I$, $del(tc, (?e(\alpha), L))$ | deleting an input event and its related response along with all the nested output events; tc must still hold at least one input event | no crash |
| HTTP Verb Change | $\forall (?e(\alpha), L) \in \Sigma_I$, $Verb(tc, (?e(\alpha), L))$ | changing the HTTP verb of the input event, if available | no crash |
| Data Alteration | $\forall (?e(\alpha), L) \in \Sigma_I$, $dataAlt(\alpha \setminus \{from := c_1, to := c_2, session\ id := id_1, token := id_2\})$ | randomly changing the data | no crash |
| Delay Addition | $\forall (?e(\alpha), L) \in \Sigma_I$, $add(tc, (?e(\alpha), L), \theta)$ | adding before every input event a delay during which no reaction should be observed | no error, no crash |
| Token Removal | $\forall (?e(\alpha), L) \in \Sigma_I, \text{"token"} \in L$ : $delToken(tc, \alpha)$ | deleting the token in the input event | error, no crash |
| Token Alteration | $\forall (?e(\alpha), L) \in \Sigma_I, \text{"token"} \in L$ : $tokenAlt(tc, \alpha)$ | replacing a token by another one | error, no crash |
| Session id Alteration | $\forall (?e(\alpha), L) \in \Sigma_I$ : $sessionidAlt(tc, \alpha)$ | replacing a session id by another one | error, no crash |
| Session Closure | $closingSession(tc)$ | adding a long delay and an input event at the end of every branch of the test case to check whether the session is terminated | error, no crash |
| Stress Testing | $\forall (?e(\alpha), L) \in \Sigma_I$, $add^{100}(tc, (?e(\alpha), L))$ | adding just before $?e(\alpha)$ 100 copies of the event with overwhelming data | no error, no crash |
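To illustrate how some of these operators act on a linear test case, here is a Python sketch of HTTP Verb Change, Delay Addition and Token Removal. The step encoding, the candidate verb list `VERBS` and all function names are assumptions; each function returns one mutant per applicable input event, following the ∀ of the Def. column, and leaves the expected verdicts of Table 1 to be set separately.

```python
import copy
from typing import Dict, List

Step = Dict[str, object]  # hypothetical: {"dir": "?"|"!"|"θ", "label", "params", "labels"}
THETA_STEP: Step = {"dir": "θ", "label": "θ", "params": {}, "labels": set()}
VERBS = ["GET", "POST", "PUT", "DELETE", "PATCH"]  # assumption: candidate verbs


def input_positions(tc: List[Step]) -> List[int]:
    return [i for i, s in enumerate(tc) if s["dir"] == "?"]


def verb_change(tc: List[Step]) -> List[List[Step]]:
    """HTTP Verb Change: one mutant per input event and alternative verb."""
    mutants = []
    for i in input_positions(tc):
        verb = tc[i]["params"].get("m")
        for other in VERBS:
            if verb is not None and other != verb:
                m = copy.deepcopy(tc)
                m[i]["params"]["m"] = other
                mutants.append(m)
    return mutants


def delay_addition(tc: List[Step]) -> List[List[Step]]:
    """Delay Addition: a theta step (no reaction expected) before an input event."""
    mutants = []
    for i in input_positions(tc):
        m = copy.deepcopy(tc)
        m.insert(i, copy.deepcopy(THETA_STEP))
        mutants.append(m)
    return mutants


def token_removal(tc: List[Step]) -> List[List[Step]]:
    """Token Removal: delete the token of an input event labelled "token"."""
    mutants = []
    for i in input_positions(tc):
        if "token" in tc[i]["labels"] and "token" in tc[i]["params"]:
            m = copy.deepcopy(tc)
            del m[i]["params"]["token"]
            mutants.append(m)
    return mutants
```

Deep copies keep the original test case untouched, so the operators can be chained on TC without interference.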

3.4 Test Case Mutation

We now have an initial set TC of test cases, which mimic the behaviours encoded in the event log w.r.t. the observability and controllability of the components. This test case set is then completed to experiment SUT with further executions. To generate additional test cases, we apply 11 mutation operators to TC, whose purpose is to perform slight test case modifications that mimic possible failures. These modifications may affect the sequence of events (Event Duplication, Swapping, Removal), change data (HTTP Verb Change, Data Alteration, Token Removal, Token Alteration, Session id Alteration), or add unexpected events (Delay Addition, Session Closure, Stress Testing).

The list of mutation operators, along with short descriptions, is given in Table 1. Several operators refer to the notion of nested events, which occur between a request $q_1 \xrightarrow{?req(\alpha), l} q_2$ and its response $q'_1 \xrightarrow{!resp(\alpha'), l'} q'_2$. These nested events have to be taken into account during test case mutation. For instance, if the Event Removal operator deletes $?req(\alpha)$ and its response from a test case, it also needs to delete the potential nested requests and responses $!e_1(\alpha_1), \ldots, !e_k(\alpha_k)$ such that $to(?req(\alpha)) = from(!e_1(\alpha_1))$, $to(!e_i(\alpha_i)) = from(!e_{i+1}(\alpha_{i+1}))$ $(1 \le i < k)$, and $to(!e_k(\alpha_k)) = from(!resp(\alpha'))$.
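A simplified sketch of Event Removal on a linear test case follows. Instead of checking the chain condition above, it assumes that the nested events are exactly those lying between the request and its response, which holds when the tester waits for each response before sending the next request. The `Step` type and the function names are hypothetical.

```python
from typing import List, NamedTuple, Optional


class Step(NamedTuple):
    kind: str     # "?" stimulus from the tester, "!" observation
    label: str
    src: str      # from(.)
    dst: str      # to(.)
    is_req: bool


def response_index(tc: List[Step], i: int) -> Optional[int]:
    """First later response going from to(req) back to from(req)."""
    req = tc[i]
    for j in range(i + 1, len(tc)):
        s = tc[j]
        if not s.is_req and s.src == req.dst and s.dst == req.src:
            return j
    return None


def event_removal(tc: List[Step], i: int) -> Optional[List[Step]]:
    """Delete the input request tc[i], its response and the events nested between."""
    if tc[i].kind != "?" or not tc[i].is_req:
        raise ValueError("Event Removal targets input requests")
    j = response_index(tc, i)
    end = i if j is None else j
    mutant = tc[:i] + tc[end + 1:]
    # Table 1: the mutant must still hold at least one input event.
    return mutant if any(s.kind == "?" for s in mutant) else None
```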

Figure 7: Test case example obtained with the mutation operator "HTTP Verb Change".

If we take the example of Figure 6 again and apply the operator "HTTP Verb Change", we obtain the test case of Figure 7. The verb POST was replaced by DELETE in the request /order; any output event not expressing a crash is allowed.

Finally, executable test cases are generated from the IOLTS trees. Some input events may still have assignments of the form $p := *$; these refer to parameters that belong to a correlation set. The first input event of a test case $tc$ is completed with the data found in $Corr(\sigma)$ and translated into source code. Every other input event of $tc$ is also translated, but the generated source code refers here to the correlation values recovered from the output events preceding this input.
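The resolution of the remaining p := * assignments can be sketched as follows, with hypothetical names throughout. The first input takes its values from Corr(σ); for later inputs, the sketch emits a placeholder `out[k].p`, an invented notation standing for the code that would read p at run time from the last preceding output k carrying it. The actual Citrus code generated by the prototype is not shown here.

```python
from typing import Dict, List

Step = Dict[str, object]  # hypothetical: {"dir": "?"|"!", "label": str, "params": dict}


def concretise(tc: List[Step], corr: Dict[str, str]) -> List[Step]:
    """Resolve p := * in input events before translating them to code.

    First input: values recorded in Corr(sigma). Later inputs: a reference
    "out[k].p" (hypothetical notation) to the value that the generated code
    should read at run time from the last preceding output k carrying p.
    """
    last_output: Dict[str, int] = {}  # parameter -> index of last output with it
    first_input = True
    result = []
    for k, step in enumerate(tc):
        params = dict(step["params"])
        if step["dir"] == "?":
            for p, v in list(params.items()):
                if v != "*":
                    continue
                if first_input and p in corr:
                    params[p] = corr[p]
                elif not first_input and p in last_output:
                    params[p] = f"out[{last_output[p]}].{p}"
            first_input = False
        else:
            for p in params:
                last_output[p] = k
        result.append({**step, "params": params})
    return result
```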


4 CONCLUSION

In this paper, we proposed a solution to generate test cases for communicating systems from event logs. Instead of a basic "record and replay" technique or an approach combining model learning and MbT, we presented algorithms that extract knowledge by means of an expert system and generate an initial test suite made up of IOLTS test cases. In doing so, we aim to extract test verdicts, save computation time, and avoid the imprecision of models produced by passive model learning techniques. In addition, we proposed 11 test case mutation operators to expand the initial test suite.

We have implemented this approach in a tool prototype. Due to lack of space, we only briefly summarise its features here. The tool is specialised for Web service compositions. It takes event logs as inputs and generates sessions by means of the tool presented in (Salva et al., 2021). Sessions are then analysed with the Drools expert system. Finally, our tool produces test cases written with the Citrus framework². A complete evaluation will be presented in future work.

REFERENCES

Aouadi, M. H. E., Toumi, K., and Cavalli, A. R. (2015). An active testing tool for security testing of distributed systems. In 10th International Conference on Availability, Reliability and Security, ARES 2015, Toulouse, France, August 24-27, 2015, pages 735–740. IEEE Computer Society.

Beschastnikh, I., Brun, Y., Ernst, M. D., and Krishnamurthy, A. (2014). Inferring models of concurrent systems from logs of their behavior with CSight. In Proceedings of the 36th International Conference on Software Engineering, ICSE 2014, pages 468–479, New York, NY, USA. ACM.

Cao, D., Felix, P., Castanet, R., and Berrada, I. (2009). Testing Service Composition Using TGSE tool. In IEEE 3rd International Workshop on Web Services Testing (WS-Testing 2009), Los Angeles, United States. IEEE Computer Society Press.

Hierons, R. (2001). Testing a distributed system: generating minimal synchronised test sequences that detect output-shifting faults. Information and Software Technology, 43(9):551–560.

Kanso, B., Aiguier, M., Boulanger, F., and Touil, A. (2010). Testing of Abstract Components. In ICTAC 2010 - International Conference on Theoretical Aspect of Computing, pages 184–198, Brazil.

² https://citrusframework.org

Köroglu, Y. and Sen, A. (2018). TCM: Test Case Mutation to Improve Crash Detection in Android. In Proceedings of the 21st International Conference on Fundamental Approaches to Software Engineering, pages 264–280. Springer.

Mariani, L. and Pastore, F. (2008). Automated identification of failure causes in system logs. In Software Reliability Engineering, 2008. ISSRE 2008. 19th International Symposium on, pages 117–126.

Paiva, A., Restivo, A., and Almeida, S. (2020). Test case

generation based on mutations over user execution

traces. Software Quality Journal, 28.

Petrenko, A. and Avellaneda, F. (2019). Learning communicating state machines. In Tests and Proofs, pages 112–128, Berlin, Heidelberg. Springer-Verlag.

Phillips, I. C. C. (1987). Refusal testing. Theor. Comput.

Sci., 50:241–284.

Salva, S. and Blot, E. (2020). CkTail: Model learning of communicating systems. In Ali, R., Kaindl, H., and Maciaszek, L. A., editors, Proceedings of the 15th International Conference on Evaluation of Novel Approaches to Software Engineering, ENASE 2020, Prague, Czech Republic, May 5-6, 2020, pages 27–38. SCITEPRESS.

Salva, S., Provot, L., and Sue, J. (2021). Conversation extraction from event logs. In Cucchiara, R., Fred, A. L. N., and Filipe, J., editors, Proceedings of the 13th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management, IC3K 2021, Volume 1: KDIR, Online Streaming, October 25-27, 2021, pages 155–163. SCITEPRESS.

Torens, C. and Ebrecht, L. (2010). RemoteTest: A framework for testing distributed systems. In 2010 Fifth International Conference on Software Engineering Advances, pages 441–446.

Ulrich, A. and König, H. (1999). Architectures for Testing Distributed Systems, pages 93–108. Springer US, Boston, MA.

van der Bijl, M., Rensink, A., and Tretmans, J. (2004).

Compositional testing with ioco. In Petrenko, A. and

Ulrich, A., editors, Formal Approaches to Software

Testing, pages 86–100, Berlin, Heidelberg. Springer

Berlin Heidelberg.

ICSOFT 2022 - 17th International Conference on Software Technologies
