Autonomous Loading of a Washing Machine with a Single-arm Robot

Hassan Shehawy (a), Andrea Maria Zanchettin (b) and Paolo Rocco (c)

Politecnico di Milano, 20133 Milan, Italy

Keywords:

Robotics, Deformable Objects, Image Clustering, Computer Vision.

Abstract:

The perception and autonomous manipulation of clothes by robots is an ongoing research topic that is attracting many contributions. In this work, we consider the application of handling garments for laundry. We present a framework for loading a washing machine with clothes initially placed inside a box. The framework is modular, to account for the sub-problems associated with the full process. We extend our grasping point estimation algorithm by finding multiple grasping points and defining a score to select one. Active contours segmentation is also added to the algorithm for more robust clustering of the image. A model of the washing machine is used to create a motion plan for the robot to place the clothes inside the drum. A new module is added to detect items that have fallen outside the drum, so that a corresponding corrective action can be planned. We use ROS, depth and 2D cameras and the Doosan A0509 robot for the experiments.

1 INTRODUCTION

Robots have been used with clothes in many projects for some time now, and several works have been published covering a wide variety of applications. For example, in (Yamazaki et al., 2010) a robot was used for tidying a room, which included the manipulation of clothes, whereas in (Yamazaki et al., 2014) the authors used a robot to assist in dressing. The variation is not only in the application but also in the configuration of the system. For folding clothes, for instance, a dual-arm robot was used in (Stria et al., 2014) while a single-arm one was used in (Petrík et al., 2017). The approaches used for perception, which included the recognition of clothes and/or their state, are also varied. Computer vision was used in many works, either with a 2D camera as in (Yamazaki et al., 2011) or with a depth camera as in (Sun et al., 2018). Haptics was also used, as in (Clegg et al., 2017), and hybrid methods have been used as well in (Yuan et al., 2018) and (Kampouris et al., 2016).

Finding a grasping point is a fundamental step prior to the manipulation of clothes, and different methods have been used to detect one. In (Willimon et al., 2011b), a set of possible grasping points was calculated and an arbitrary one was selected, while in other works features such as wrinkles (Willimon et al., 2011a) and edges (Ramisa et al., 2011) were extracted. Modeling the grasping process was part of some works, as in (Gil et al., 2016), while other works did not consider it (Mira et al., 2015).

(a) https://orcid.org/0000-0002-8369-8963

(b) https://orcid.org/0000-0002-1866-7482

(c) https://orcid.org/0000-0001-6716-434X

Even though many works have been published or are ongoing, there are still many open challenges to consider. In this paper, we present a full pipeline for loading a washing machine with clothes placed inside a laundry basket. We use a single-arm robot for manipulation, and a 2D camera and an RGB-D camera for perception. Figure 1 shows the robot placing garments inside the washing machine.

To consider the full process of loading the washing machine, we created a modular approach that accounts for the different problems associated with the process. We extend our grasping point estimation algorithm (Shehawy et al., 2021) by finding curves in the image that may bound garments, possibly extracting multiple grasping points and defining thresholds for picking one. We use Active Contours to segment the image for more robust clustering. With the knowledge of the machine's CAD model, a direction and a set of waypoints are used to create the motion plan for the loading process. Another module has been developed to detect whether garments fall out of the drum, in which case a corrective action is taken to put them back inside the drum. The contribution of this paper is the proposed framework, which realizes the autonomous loading of a washing machine. It includes our extended algorithm for finding a grasping point in a more robust way using active contours.

The robot has successfully grasped an item from the laundry basket and is midway to placing it inside the washing machine.

Figure 1: Robot loading clothes inside a washing machine.

Shehawy, H., Zanchettin, A. and Rocco, P.

Autonomous Loading of a Washing Machine with a Single-arm Robot.

DOI: 10.5220/0011276200003271

In Proceedings of the 19th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2022), pages 443-450

ISBN: 978-989-758-585-2; ISSN: 2184-2809

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The paper is organized as follows. The next section explains the framework, which includes finding a set of grasping points and selecting one according to predefined criteria, creating the motion plan, and checking the status of the loaded garments. Section 3 discusses the experiments and the practical aspects of the system, including the design of the ROS nodes and the gripper, and Section 4 concludes the paper.

2 METHOD

A dedicated framework for loading the washing machine has been developed and is presented in this section. The first part is finding a grasping point; to this end, we focus on finding a continuous area in the image of the loaded basket and use its centroid as the grasping point. To account for possible failures in the process, we add two modules for detecting and correcting such situations. The first one checks whether an item is partially outside the washing machine after being placed inside the drum. The second one checks the floor to see whether any item has fallen during the loading of the washing machine.

2.1 Grasping Point

In this work, the Active Contours method is used for segmentation, and the centroids of the clusters (which identify different clothes) are all considered as candidate grasping points. A set of weights is introduced to assess the points and select one. Before the segmentation, we apply the SW-filter presented in our previous work (Shehawy et al., 2021).

2.1.1 Pre-segmentation

Prior to the segmentation, each pixel in position (x, y)

is given the value:

$$I(x, y) = \sqrt{P_L\, L(x, y)^2 + P_a\, a^*(x, y)^2 + P_b\, b^*(x, y)^2}$$

where $L(x, y)$, $a^*(x, y)$ and $b^*(x, y)$ are obtained by converting the image from the RGB to the L*a*b* color space (Ganesan et al., 2010). $P_L$, $P_a$ and $P_b$ are parameters that balance the weights of luminance and color in the images. The results were very sensitive to the selection of these parameters, which were set to $P_L = 4$, $P_a = 1.5$ and $P_b = 1.5$.
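The weighting can be sketched in NumPy as follows. This is a minimal illustration, assuming the image has already been converted to L*a*b* (for example with scikit-image's `rgb2lab`, which returns L in [0, 100]); the function name `weighted_lab_intensity` is hypothetical and the default weights are the values reported above.

```python
import numpy as np

def weighted_lab_intensity(lab, p_l=4.0, p_a=1.5, p_b=1.5):
    """Per-pixel value I(x, y) = sqrt(P_L*L^2 + P_a*a*^2 + P_b*b*^2).

    `lab` is an H x W x 3 array holding the L, a* and b* channels.
    """
    lab = np.asarray(lab, dtype=float)
    l, a, b = lab[..., 0], lab[..., 1], lab[..., 2]
    # Weighted Euclidean norm of the colour vector at each pixel
    return np.sqrt(p_l * l**2 + p_a * a**2 + p_b * b**2)
```

Because the weights sit inside the square root, raising $P_L$ relative to $P_a$ and $P_b$ makes the subsequent segmentation rely more on brightness than on chroma.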

2.1.2 Clustering using Active Contours

Active Contours methods rely on the concept of an energy functional that is minimized to find a curve of interest inside an image (Kass et al., 1988). The functional is a combination of two components: one of them controls the smoothness of the curve and the other brings the curve closer to the boundary. The curve is assumed to form a contour around the objects in the image. It can be represented implicitly as

$$C = \{(x, y) \mid u(t, x, y) = 0\}$$

and its evolution can be described by the PDE:

$$\frac{\partial u}{\partial t} = F\,|\nabla u|$$

where $F$ represents the speed of the evolution and may be chosen as the curvature. In this case, the PDE becomes:

$$\frac{\partial u}{\partial t} = \operatorname{div}\left(\frac{\nabla u}{|\nabla u|}\right)|\nabla u|$$

where $\operatorname{div}(\nabla u / |\nabla u|)$ stands for the divergence of the normalized gradient, which is the curvature calculated on the level sets of $u$.
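To make the curvature term concrete, the sketch below evaluates $\operatorname{div}(\nabla u / |\nabla u|)$ with central finite differences and takes one explicit step of the curvature flow. It assumes a NumPy array `u` sampled on a unit grid (rows along y, columns along x); the helper names and the step size `dt` are hypothetical. The paper itself does not solve the PDE this way but adopts the morphological scheme of (Marquez-Neila et al., 2014).

```python
import numpy as np

def level_set_curvature(u, eps=1e-8):
    """Curvature div(grad u / |grad u|) of the level sets of u."""
    u_y, u_x = np.gradient(u)              # axis 0 is y (rows), axis 1 is x (cols)
    norm = np.sqrt(u_x**2 + u_y**2) + eps  # eps avoids division by zero in flat areas
    n_x, n_y = u_x / norm, u_y / norm      # unit normal field
    return np.gradient(n_x, axis=1) + np.gradient(n_y, axis=0)

def curvature_flow_step(u, dt=0.1):
    """One explicit Euler step of du/dt = curvature * |grad u|."""
    u_y, u_x = np.gradient(u)
    return u + dt * level_set_curvature(u) * np.sqrt(u_x**2 + u_y**2)
```

For a distance function $u = r$, the level set of radius $r$ has curvature $1/r$, which the finite differences reproduce closely away from the origin. With $u$ negative inside the contour, the positive curvature raises $u$ on the boundary, so a circle shrinks, as expected for curvature flow.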

This approach has been extended in multiple works, such as the Active Contours Without Edges (ACWE) (Chan and Vese, 2001) and the Geodesic Active Contours (GAC) (Caselles et al., 1995). We use the GAC method here, where the curve evolution based on the energy functional introduced in (Caselles et al., 1995) is defined as:

$$\frac{\partial u}{\partial t} = g(I)\,|\nabla u|\,\operatorname{div}\left(\frac{\nabla u}{|\nabla u|}\right) + \nabla g(I) \cdot \nabla u$$

where $g(I)$ is the function that dictates the regions of interest in the image; it is usually chosen as an edge indicator. In our work we used this form:

$$g(I) = \frac{1}{\sqrt{1 + \alpha\,|\nabla \hat{I}|}}$$

where $\hat{I}$ is a smoothed image obtained by applying a Gaussian filter (with standard deviation $\sigma$) to the image $I$, and $\alpha$ is an empirical parameter. With this form, $g$ is close to 1 in flat regions and decreases towards 0 on strong edges, where the curve should stop.
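The edge-stopping function can be computed with NumPy alone, as in the sketch below. The separable Gaussian filter with reflective padding is an implementation choice made here for self-containment, and the values `alpha=100` and `sigma=1` are hypothetical defaults, since the text does not report the values used. scikit-image's `inverse_gaussian_gradient` computes the same expression.

```python
import numpy as np

def gaussian_kernel_1d(sigma):
    """Normalized 1D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(3 * sigma + 0.5))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x**2 / (2 * sigma**2))
    return k / k.sum()

def gaussian_smooth(img, sigma):
    """Separable Gaussian filter with reflective padding (same output shape)."""
    k = gaussian_kernel_1d(sigma)
    pad = len(k) // 2
    padded = np.pad(np.asarray(img, dtype=float), pad, mode="reflect")
    # Filter along rows, then along columns; 'valid' removes the padding again
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)

def edge_stopping(img, alpha=100.0, sigma=1.0):
    """g(I) = 1 / sqrt(1 + alpha * |grad(I_hat)|), with I_hat the smoothed image."""
    smooth = gaussian_smooth(img, sigma)
    gy, gx = np.gradient(smooth)
    return 1.0 / np.sqrt(1.0 + alpha * np.sqrt(gx**2 + gy**2))
```

On a step image, `g` stays at 1 far from the step and drops well below 0.5 on it, which is what lets the contour settle on garment boundaries.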

To overcome the problems associated with a wrong initial guess $u_0$ of the curve, or with the curve passing through noisy points that can stop its evolution, a balloon force is added to the functional as described in (Cohen, 1991), and the curve evolution becomes:

$$\frac{\partial u}{\partial t} = g(I)\,|\nabla u|\,\operatorname{div}\left(\frac{\nabla u}{|\nabla u|}\right) + \nabla g(I) \cdot \nabla u + \upsilon\, g(I)\,|\nabla u|$$

where $\upsilon \in \mathbb{R}$ is the parameter for the balloon force.
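A single explicit update of this equation can be sketched as follows, term by term. This is an illustrative finite-difference version, assuming arrays on a unit grid and a hypothetical step size `dt`; it is not the scheme used in the paper, which relies on morphological operators instead.

```python
import numpy as np

def gac_step(u, g, balloon=0.0, dt=0.1, eps=1e-8):
    """One explicit Euler step of
    du/dt = g |grad u| div(grad u / |grad u|) + grad g . grad u + balloon * g |grad u|.
    Arrays are indexed [row, col] = [y, x].
    """
    u_y, u_x = np.gradient(u)
    grad_norm = np.sqrt(u_x**2 + u_y**2)
    # Curvature: divergence of the normalized gradient
    n_x, n_y = u_x / (grad_norm + eps), u_y / (grad_norm + eps)
    curvature = np.gradient(n_x, axis=1) + np.gradient(n_y, axis=0)
    g_y, g_x = np.gradient(g)
    du = (g * grad_norm * curvature        # geodesic curvature term
          + g_x * u_x + g_y * u_y          # advection along grad g
          + balloon * g * grad_norm)       # balloon force
    return u + dt * du
```

With $u$ negative inside the contour, as in this sketch, a positive $\upsilon$ raises $u$ on the boundary and therefore deflates the contour; flipping the sign of $\upsilon$ (or of $u$) inflates it instead.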

Replacing differential operators with morphological ones reduces the numerical and computational complexity associated with solving PDEs, as introduced in (Marquez-Neila et al., 2014), which presents a full framework for implementing ACWE and GAC using morphological operations. To reduce the time spent in this step, we adopt this technique to implement the GAC method.
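The morphological scheme can be sketched as below. This is a simplified NumPy re-implementation written for illustration, not the authors' code: it assumes a binary level set `u` (True inside the contour) and an edge map `g` such as the $g(I)$ given above, and applies in each iteration a balloon step (dilation or erosion where $g$ is high), an image-attachment step driven by the sign of $\nabla g \cdot \nabla u$, and curvature smoothing with the line-based SI and IS operators. The percentile-based default threshold is an assumption, similar in spirit to the "auto" option of scikit-image's `morphological_geodesic_active_contour`, which is a full implementation of the same method.

```python
import numpy as np

# The four 3-pixel line segments through the centre (horizontal, vertical, two diagonals).
_LINES = [((0, 1), (0, -1)), ((1, 0), (-1, 0)), ((1, 1), (-1, -1)), ((1, -1), (-1, 1))]
_NEIGHBOURS = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]

def _shift(u, dy, dx):
    """v[y, x] = u[y + dy, x + dx]; pixels outside the image read as False."""
    h, w = u.shape
    p = np.pad(u, 1, mode="constant", constant_values=False)
    return p[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]

def _dilate(u):
    return np.any([_shift(u, dy, dx) for dy, dx in _NEIGHBOURS], axis=0)

def _erode(u):
    return np.all([_shift(u, dy, dx) for dy, dx in _NEIGHBOURS], axis=0)

def _sup_inf(u):
    # SI: union over directions of the erosion by each line segment
    return np.any([u & _shift(u, *a) & _shift(u, *b) for a, b in _LINES], axis=0)

def _inf_sup(u):
    # IS: intersection over directions of the dilation by each line segment
    return np.all([u | _shift(u, *a) | _shift(u, *b) for a, b in _LINES], axis=0)

def morph_gac(g, u0, iterations=50, balloon=1, threshold=None, smoothing=1):
    """Simplified morphological GAC on a binary level set (True = inside)."""
    g = np.asarray(g, dtype=float)
    u = np.asarray(u0, dtype=bool).copy()
    if threshold is None:
        threshold = np.percentile(g, 40)   # heuristic default (assumption)
    g_y, g_x = np.gradient(g)
    balloon_mask = g > threshold / abs(balloon) if balloon != 0 else None
    si_first = False
    for _ in range(iterations):
        # 1) Balloon force: dilate (balloon > 0) or erode (balloon < 0) where g is high.
        if balloon != 0:
            grown = _dilate(u) if balloon > 0 else _erode(u)
            u[balloon_mask] = grown[balloon_mask]
        # 2) Image attachment: the sign of grad(g) . grad(u) decides the update.
        u_y, u_x = np.gradient(u.astype(float))
        attach = g_x * u_x + g_y * u_y
        u[attach > 0] = True
        u[attach < 0] = False
        # 3) Curvature smoothing: alternate SI o IS and IS o SI.
        for _ in range(smoothing):
            u = _inf_sup(_sup_inf(u)) if si_first else _sup_inf(_inf_sup(u))
            si_first = not si_first
    return u
```

Starting from a small disk (the kind of initial level set described in the next subsection) with a positive balloon force, the contour inflates until it reaches a region of low $g$ and does not cross it.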

2.1.3 Post-segmenting

An initial level set of a circle with a small radius was

used and running the segmentation with that initial

curve at different points could cluster the different

garments in the image. These clusters can be com-

bined together into one segmented image as shown in

Figure 2 where three garments are successfully seg-

mented. To complete the segmentation step and ad-

dress some possible problems, we perform this extra

step: for every pair of clusters (C

i

and C

j

), the in-

tersection (C

i j

= C

i

∩C

j

) is calculated and a ratio η

is defined as an intersection threshold (set at 0.95).

Then the number of elements in the clusters and their

intersection is computed and:

• if n(C

i j

)/n(C

i

) > η and n(C

i j

)/n(C

j

) > η, they

are combined into one cluster.

• if n(C

i j

)/n(C

i

) > η and n(C

i j

)/n(C

j

) < η, the me-

dian values of the clusters are computed. If the

difference between them is small, the two clusters

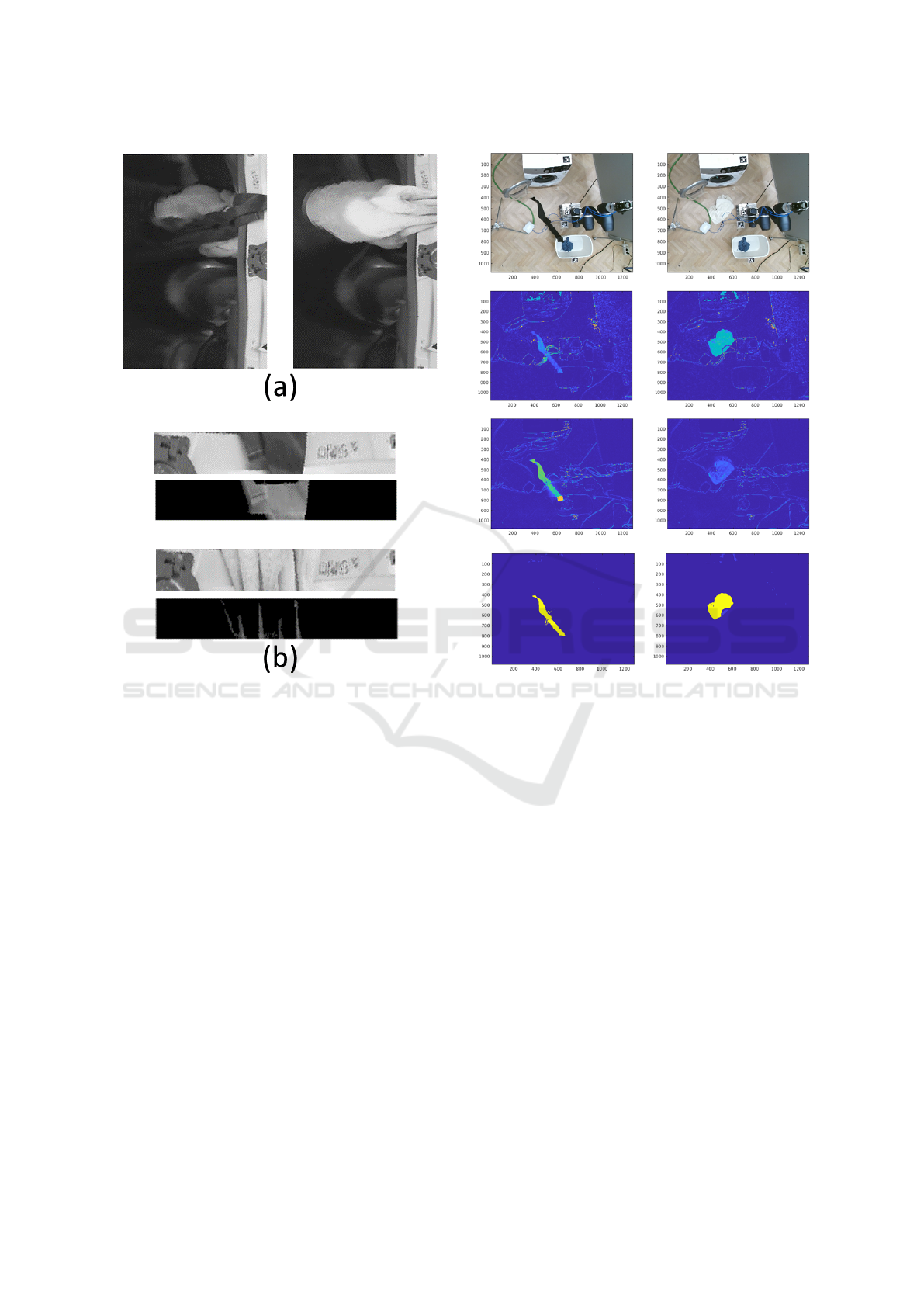

Input image of clothes (left) and the result of applying our SW-

filter (right) are in first row. Running MGAC clustering at different

points gave the results in second and third (left) rows while the

combination of the clusters is shown in the third row (right).

Figure 2: Image Clustering using MGAC.

are combined into one. An example of this case

is shown in Figure 3 (upper row). Otherwise, we

have two clusters; one of them is the smaller clus-

ter and the other is the difference between the two

clusters. An example of this case is shown in Fig-

ure 3 (lower row).
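The pairwise rules can be sketched as follows, assuming each cluster is a boolean mask over the image and that the medians are taken over the pre-segmentation image $I$. The text does not quantify a "small" median difference, so `median_tol` is a hypothetical parameter; handling the mirrored case by swapping the clusters, and keeping both clusters when neither ratio exceeds $\eta$, are also assumptions.

```python
import numpy as np

def resolve_pair(ci, cj, values, eta=0.95, median_tol=10.0):
    """Apply the intersection rules to two boolean cluster masks.

    Returns the list of resulting cluster masks.
    """
    n_ij = (ci & cj).sum()
    r_i, r_j = n_ij / ci.sum(), n_ij / cj.sum()
    if r_i > eta and r_j > eta:
        return [ci | cj]                      # near-identical clusters: merge
    if r_j > eta >= r_i:                      # mirrored case: make ci the contained one
        ci, cj, r_i, r_j = cj, ci, r_j, r_i
    if r_i > eta:                             # ci lies (almost) inside cj
        if abs(np.median(values[ci]) - np.median(values[cj])) < median_tol:
            return [ci | cj]                  # same garment: merge
        return [ci, cj & ~ci]                 # smaller cluster + difference
    return [ci, cj]                           # little overlap: keep both (assumption)
```

The second branch corresponds to Figure 3: a nested cluster with a similar median is absorbed (upper row), while one with a clearly different median becomes an independent cluster (lower row).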

2.1.4 Weights of Grasping Points

The main criterion for selecting a grasping point is the area of the cluster. However, two more criteria are added to account for other factors in selecting the point:

• Normal distances from the horizontal and vertical edges of the basket, $w_{eh}$ and $w_{ev}$. These weights are corrected by scaling the distance to the longer edge by a factor (we chose it as the ratio of the longer to the shorter edge). This is illustrated in Figure 4 (left).

• Euclidean distance from the center of the basket, $w_{rc}$. We take the reciprocal of the distance as the weight, so that the closer the point is to the center, the higher its weight. This is illustrated in Figure 4 (right).

The final weight is the sum of the normalized weights $w_{rc}$, $w_{eh}$ and $w_{ev}$. The detected clusters are sorted according to their area and the largest one is considered for grasping. However, if multiple clusters have an area greater than 40 cm², we use the weight defined here to select a point.

Two examples of the intersection of two clusters where analysis is needed. In the upper row, the smaller cluster (right) is indeed part of the bigger one (left). In the lower row, this is not the case and it is an independent cluster.

Figure 3: MGAC Segmentation Intersection.

Distance from the edges (left) is one criterion for a grasping point, while distance from the center (right) is another one.

Figure 4: Weights of Grasping Points.
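The selection rule can be sketched in plain Python, assuming cluster areas already in cm² and centroids expressed in cm in a basket-aligned frame with its origin at a corner. Using the nearest edge in each direction, normalizing each criterion by its maximum over the candidates, and the function name are assumptions, since the text does not specify them.

```python
import math

def select_grasp_point(clusters, basket_w, basket_h, area_threshold=40.0):
    """Pick a grasping point from (area_cm2, (x, y)) candidates.

    x runs along the edges of length basket_w, y along those of length basket_h.
    """
    ranked = sorted(clusters, key=lambda c: c[0], reverse=True)
    large = [c for c in ranked if c[0] > area_threshold]
    if len(large) <= 1:
        return ranked[0][1]                   # area alone decides
    factor = max(basket_w, basket_h) / min(basket_w, basket_h)
    cx, cy = basket_w / 2.0, basket_h / 2.0
    raw = []
    for _, (x, y) in large:
        d_ev = min(x, basket_w - x)           # distance to the vertical edges
        d_eh = min(y, basket_h - y)           # distance to the horizontal edges
        # Scale the distance to the longer pair of edges by longer/shorter.
        if basket_w >= basket_h:
            d_eh *= factor                    # horizontal edges are the longer ones
        else:
            d_ev *= factor
        w_rc = 1.0 / (math.hypot(x - cx, y - cy) + 1e-6)  # reciprocal centre distance
        raw.append((d_eh, d_ev, w_rc))
    # Normalize each criterion by its maximum over the candidates, then sum.
    maxima = [max(col) or 1.0 for col in zip(*raw)]
    scores = [sum(v / m for v, m in zip(row, maxima)) for row in raw]
    best = max(range(len(large)), key=scores.__getitem__)
    return large[best][1]
```

For example, in a 60 × 40 cm basket with two candidates above 40 cm², a centred cluster wins over a larger one near a corner; with only one large candidate, the area criterion decides on its own.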

2.2 Checking for Items Partly Outside the Drum

The first inspection checks whether the loading process was indeed successful in placing the item completely inside the drum, making sure that no part of it is still outside. For this, we detect the drum opening by finding two circles in the image using the Hough Transform (Yuen et al., 1990). To ensure that the correct circles are detected, we place a fiducial marker (AprilTag (Wang and Olson, 2016)) at a known position relative to the drum center. A region of interest (ROI) is created as the ring-shaped region between the smaller and the larger circle. Figure 5 shows two examples of this process, where the two circles and the region of interest are highlighted.
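A minimal NumPy sketch of the ROI is given below, assuming the two circles have already been detected (for instance with OpenCV's `cv2.HoughCircles`) and, for simplicity, that they are concentric around the drum center; the function name is hypothetical.

```python
import numpy as np

def annulus_mask(shape, center, r_inner, r_outer):
    """Boolean mask of the ring between two concentric circles.

    `center` is (x_c, y_c) in pixel coordinates; `shape` is (rows, cols).
    """
    rows, cols = np.indices(shape)
    x_c, y_c = center
    r = np.hypot(cols - x_c, rows - y_c)   # distance of each pixel from the center
    return (r >= r_inner) & (r <= r_outer)
```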

To check this region, we convert the image from Cartesian to log-polar coordinates using the following equations:

$$\rho = \log\left(\sqrt{(x - x_c)^2 + (y - y_c)^2}\right)$$

and

$$\theta = \tan^{-1}\left(\frac{y - y_c}{x - x_c}\right)$$

where $x_c$ and $y_c$ are the center coordinates in Cartesian space. In the new space, the curved area of interest becomes a rectangular patch in the image. This requires the correct positioning of the center of the transformation, which is set as the center of the drum. Figure 6 (a) shows the output of this transformation applied to the images in Figure 5.

A Region Of Interest (ROI) is created to check whether an item is partially outside the washing machine. Two examples are shown here, with a dark color (right) and a more challenging bright color (left).

Figure 5: Inspection of the outer part of the drum.
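The log-polar transform can be sketched with NumPy by sampling the inverse mapping $x = x_c + e^{\rho}\cos\theta$, $y = y_c + e^{\rho}\sin\theta$ on a regular $(\rho, \theta)$ grid, which turns the annular ROI into a rectangle. Sweeping $\theta$ over the full $[0, 2\pi)$ range corresponds to the two-argument arctangent rather than the single-argument $\tan^{-1}$. The function name, the output size and the nearest-neighbour sampling are assumptions; OpenCV's `cv2.warpPolar` with the `WARP_POLAR_LOG` flag offers a library implementation.

```python
import numpy as np

def log_polar_unwrap(img, center, r_min, r_max, n_rho=64, n_theta=360):
    """Resample the ring r_min <= r <= r_max into an n_rho x n_theta patch.

    Row i corresponds to rho = log(r), sampled uniformly between log(r_min)
    and log(r_max); column j to theta in [0, 2*pi). Nearest-neighbour sampling.
    """
    x_c, y_c = center
    rho = np.linspace(np.log(r_min), np.log(r_max), n_rho)
    theta = np.linspace(0.0, 2.0 * np.pi, n_theta, endpoint=False)
    r = np.exp(rho)[:, None]                      # inverse of rho = log(r)
    xs = x_c + r * np.cos(theta)[None, :]
    ys = y_c + r * np.sin(theta)[None, :]
    cols = np.clip(np.rint(xs).astype(int), 0, img.shape[1] - 1)
    rows = np.clip(np.rint(ys).astype(int), 0, img.shape[0] - 1)
    return img[rows, cols]
```

A disk centred on the transformation center maps to a band of full rows in the output, which is why the drum rim becomes easy to scan row by row once the center is placed correctly.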

Prior to the loading process, an image $I_{\circ d}$ of the washing machine with an empty drum is captured and used as a reference. For a new image $I$, the difference $|I - I_{\circ d}|$ is calculated, and pixels of this difference image with values below a threshold (set at 50) are ignored. Figure 6 (b) shows the ROI and difference images for the setup in Figure 5.
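The thresholded difference can be sketched as follows. The threshold of 50 comes from the text; taking the largest channel difference for colour input, and the decision rule based on a minimum number of changed pixels inside the ROI (`min_pixels`), are hypothetical choices for illustration.

```python
import numpy as np

def drum_change_mask(img, ref, threshold=50):
    """Pixels whose absolute difference from the empty-drum reference is >= threshold."""
    # Work in a signed type so that uint8 subtraction does not wrap around.
    diff = np.abs(img.astype(np.int16) - ref.astype(np.int16))
    if diff.ndim == 3:
        diff = diff.max(axis=2)      # colour input: largest channel difference (assumption)
    return diff >= threshold         # values below the threshold are ignored

def item_partly_outside(img, ref, roi, threshold=50, min_pixels=200):
    """Hypothetical decision rule: enough changed pixels inside the ROI."""
    return int((drum_change_mask(img, ref, threshold) & roi).sum()) >= min_pixels
```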

2.3 Checking for Items Fallen on the Floor

We use the same idea here, with an image $I_{\circ f}$ of the floor taken before the loading process. However, for a new image $I$, the difference $|I - I_{\circ f}|$ is computed here in the HSV color space (Sural et al., 2002). To account for possible variations in the colors of the garments, we calculate the difference in both the hue and the value channels. In some cases, the difference in the hue channel is very small and does not lead to the identification of fallen items; in other cases, the same happens in the value channel. Therefore, we consider the larger of the two differences. Figure 7 shows an example with two items, where the hue difference gave better results in one case and the value difference in the other. We apply morphological closing on the difference image and then find the largest connected component, which represents the item on the floor, as shown in Figure 7.
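The floor check can be sketched with NumPy alone, assuming 8-bit RGB images. The threshold on the combined difference is not reported in the text, so `threshold` is a hypothetical value, and the 3 × 3 structuring element and 8-connectivity are also assumptions; OpenCV (`cv2.cvtColor`, `cv2.morphologyEx`, `cv2.connectedComponentsWithStats`) provides the same operations.

```python
from collections import deque
import numpy as np

def hue_value(rgb):
    """Hue in [0, 1) and value in [0, 1] for an H x W x 3 uint8 RGB image."""
    rgb = rgb.astype(float) / 255.0
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    v = rgb.max(axis=-1)
    c = v - rgb.min(axis=-1)
    safe_c = np.where(c > 0, c, 1.0)
    h = np.where(v == r, ((g - b) / safe_c) % 6.0, 0.0)
    h = np.where(v == g, (b - r) / safe_c + 2.0, h)
    h = np.where(v == b, (r - g) / safe_c + 4.0, h)
    return np.where(c > 0, h / 6.0, 0.0), v   # grey pixels get hue 0

def _shift(mask, dy, dx, fill):
    h, w = mask.shape
    p = np.pad(mask, 1, mode="constant", constant_values=fill)
    return p[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]

def close3x3(mask):
    """Morphological closing (dilation then erosion) with a 3 x 3 square."""
    offs = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    dil = np.any([_shift(mask, dy, dx, False) for dy, dx in offs], axis=0)
    return np.all([_shift(dil, dy, dx, True) for dy, dx in offs], axis=0)

def largest_component(mask):
    """Largest 8-connected component of a boolean mask (breadth-first search)."""
    h, w = mask.shape
    seen = np.zeros_like(mask, dtype=bool)
    best = []
    for sy, sx in zip(*np.nonzero(mask)):
        if seen[sy, sx]:
            continue
        seen[sy, sx] = True
        comp, queue = [], deque([(sy, sx)])
        while queue:
            y, x = queue.popleft()
            comp.append((y, x))
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
        if len(comp) > len(best):
            best = comp
    out = np.zeros_like(mask, dtype=bool)
    for y, x in best:
        out[y, x] = True
    return out

def fallen_item_mask(img, ref, threshold=0.15):
    """Largest region where the hue or value difference to the reference is large."""
    h1, v1 = hue_value(img)
    h0, v0 = hue_value(ref)
    dh = np.abs(h1 - h0)
    dh = np.minimum(dh, 1.0 - dh)        # hue is circular
    diff = np.maximum(dh, np.abs(v1 - v0))
    return largest_component(close3x3(diff > threshold))
```

On a grey floor, a red garment has the same hue (0) as the background, so only the value channel reveals it; keeping the larger of the two differences covers this case as well as the opposite one.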


Images from Figure 5 are transformed to log-polar space in (a). In (b), both the ROI and difference images are shown. The ROI image is the disk between the inner and outer circles of the drum. The difference image is obtained from an initial image taken before the loading process. For dark objects, detection is easier because the contrast against the white surface is higher (as in the upper two images). For bright ones, it is more challenging as the contrast is lower; however, the wrinkles help in recognizing the garment (as in the lower two images).

Figure 6: Analyzing the drum images.

2.4 Planning Robot Movements

For efficient grasping, the fingers need to create some wrinkles or creases when they close. This is more likely and more effective when the gripper reaches the clothes vertically. To achieve this, we define a point shifted upwards from the grasping point by 10 cm and move the arm from it to the grasping point. After the gripper closes, the arm does not move immediately to the washing machine but first moves above the basket, so that a grasped item falls back into the basket if it slips.
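The approach-and-lift sequence can be written as a short list of Cartesian waypoints, for example to hand to a MoveIt Cartesian path planner. This is a sketch assuming a base frame with z pointing up and coordinates in metres; only the 10 cm offset comes from the text, while `basket_top_z`, `lift_clearance` and the function name are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]

def grasp_waypoints(grasp: Point, basket_top_z: float,
                    approach_offset: float = 0.10,
                    lift_clearance: float = 0.15) -> List[Point]:
    """Waypoints (metres, base frame with z up) for a vertical top-down grasp.

    1. pre-grasp point 10 cm above the grasping point,
    2. the grasping point itself (the gripper closes here),
    3. a point straight above the basket, so a slipping item falls back in.
    """
    x, y, z = grasp
    pre_grasp = (x, y, z + approach_offset)
    lift = (x, y, max(z + approach_offset, basket_top_z + lift_clearance))
    return [pre_grasp, grasp, lift]
```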

Images of the floor captured after the loading process to check for any fallen item(s). Raw images are shown in the first row. The second and third rows show the difference with the images of the floor taken before the loading process, in the hue channel of the HSV and in the saturation channel. The fourth row shows the extraction of the largest component, which corresponds to the fallen garment.

Figure 7: Floor Inspection.

When the arm is placing an item inside the drum, a path is designed to make the robot push down the clothes already within the drum in a coherent way. With the washing machine CAD model, the drum center can be located using an AprilTag with a known position relative to the washing machine. Figure 8 (left) shows the washing machine model, the drum axis and the AprilTag. A point is defined with respect to the drum center as a pre-entry point for the robot; Figure 8 (middle) shows this point. The path is generated from that point to the drum, taking into account collision avoidance with the drum. Figure 8 (right) shows the robot inside the drum.
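Following this description and the caption of Figure 8 (the tag's x-axis gives the direction of the drum axis), the drum center and the two key points of the path can be sketched as below. The CAD offset, the two distances and the convention that the x-axis points out of the drum towards the robot are assumptions for illustration.

```python
import numpy as np

def drum_waypoints(tag_position, tag_rotation, center_offset,
                   pre_entry_distance=0.25, insertion_depth=0.15):
    """Drum center, pre-entry point and inside point from an AprilTag pose.

    tag_rotation is the 3x3 rotation of the tag frame in the robot base frame;
    center_offset is the drum center expressed in the tag frame (from CAD).
    The tag x-axis is taken as the drum axis, pointing out of the drum.
    """
    p = np.asarray(tag_position, dtype=float)
    R = np.asarray(tag_rotation, dtype=float)
    center = p + R @ np.asarray(center_offset, dtype=float)
    axis = R[:, 0] / np.linalg.norm(R[:, 0])   # tag x-axis in the base frame
    pre_entry = center + pre_entry_distance * axis
    inside = center - insertion_depth * axis
    return center, pre_entry, inside
```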

If an item is detected as partially outside the drum, the robot moves to the detected item, grasps it, moves up towards the drum center and then inside the drum. Figure 9 shows an example sequence of placing an item back into the drum.


The detection of the AprilTag placed on top of the washing machine allows finding the location of the center of the drum, given the washing machine CAD model. The direction of the AprilTag x-axis (in red) also dictates the direction of the drum central axis (left). The actual pre-entry point (middle) and the point inside the drum (right) are shown for the robot with the grasped item.

Figure 8: Washing Machine Drum Axis.

After detecting that an item is partially outside the drum, the robot moves the item back into the drum.

Figure 9: Robot moving item inside the drum.

3 EXPERIMENTS

We use the Doosan A0509 collaborative robot, a Kinect V2 depth camera and a Microsoft LifeCam camera. Figure 10 shows the system components as set up in our simulation environment. To implement the framework, we used ROS Melodic (Quigley et al., 2009) and the PCL library (Rusu and Cousins, 2011) for handling the point cloud captured from the Kinect camera. Trajectories were generated using MoveIt (Coleman et al., 2014) and sent to the Doosan motion controller for execution. The dimensions of the laundry basket and the CAD model of the washing machine are assumed to be known. This information was included in our URDF model, but their location and orientation can be adjusted based on the AprilTags attached to the basket and the washing machine.

All system components (including the washing machine, laundry basket, robot and cameras) in the simulation inside rviz.

Figure 10: Simulation Setup.

Clothes were randomly thrown into the basket, and the robot could successfully grasp the items and place them inside the drum. We had 8 garments in total, varying in size, color and texture. They were put in random configurations 5 times and the loading pipeline was tested. We had 34 loading attempts, where a loading attempt is defined as a complete execution of the pipeline, starting from identifying a grasping point and ending with releasing the garment inside the drum. Out of the 34 loading attempts we had:

• 18 completely successful attempts. Complete success is defined as placing the garment completely inside the drum.

• 11 partially successful attempts. Partial success is defined as placing the garment inside the drum while part of it remains outside the drum.

• 5 failed attempts. A failed attempt corresponds to the cases when garments fall on the floor.
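As a quick consistency check, the three categories add up to the 34 attempts, and their shares can be computed from the reported counts as follows (the percentages are derived here, not reported in the text).

```python
# Outcome counts reported for the 34 loading attempts
outcomes = {"complete": 18, "partial": 11, "failed": 5}
total = sum(outcomes.values())
# Share of each outcome in percent, rounded to one decimal
rates = {k: round(100.0 * v / total, 1) for k, v in outcomes.items()}
```

This gives roughly 52.9 % complete successes, 32.4 % partial successes and 14.7 % failures.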

The partially successful and failed attempts were mostly related to large items such as trousers or big towels. However, with the two modules for checking the drum and the floor, these garments could eventually be placed inside the drum.

We created two ROS packages: one for perception and the other for planning and executing the movements. The perception package included the following nodes:

• ROI-Creation Nodes: extract the clothes inside the laundry basket and the drum opening. These nodes publish the cropped images.

• Inspection Nodes: one checks the drum for an item that is partly out and another checks the floor. These nodes publish a boolean message (whether or not there is an item to grasp).

• Grasping-Points Nodes: find a grasping point in the laundry basket, on the drum edge and on the floor (if items were detected). These nodes publish a 3D point in the end-effector frame.

The planning package included the following nodes:

• Loading Node: subscribes to the grasping point message and creates a motion plan for grasping clothes, moving upwards above the basket and then into the drum, passing through the pre-entry point.

• Corrections Nodes: subscribe to the boolean messages of the inspection nodes. If a correction is needed, they move the robot to a grasping point and either put the partially-out item back into the drum or put the fallen item from the floor into the washing machine.
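The interplay of these nodes can be summarized as a small control loop. The sketch below is plain Python rather than ROS code: each callable stands in for a topic or service (for example a `std_msgs/Bool` from an inspection node or a `geometry_msgs/PointStamped` from a grasping-point node), and the class name, the action labels and the cap on repeated corrections are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Point = Tuple[float, float, float]

@dataclass
class LoadingPipeline:
    """Hypothetical orchestration of the perception and planning nodes."""
    basket_grasp: Callable[[], Optional[Point]]      # Grasping-Points node (basket)
    drum_item_out: Callable[[], bool]                # Inspection node (drum)
    drum_edge_grasp: Callable[[], Optional[Point]]   # Grasping-Points node (drum edge)
    floor_item: Callable[[], bool]                   # Inspection node (floor)
    floor_grasp: Callable[[], Optional[Point]]       # Grasping-Points node (floor)
    execute: Callable[[str, Point], None]            # Loading / Corrections nodes
    max_corrections: int = 3

    def _correct(self, detected, find_grasp, action, log):
        # Repeat a correction while the inspection still reports a problem.
        for _ in range(self.max_corrections):
            if not detected():
                return
            point = find_grasp()
            if point is None:
                return
            self.execute(action, point)
            log.append(action)

    def run(self) -> List[str]:
        log: List[str] = []
        # Load items until no grasping point is found in the basket.
        while (point := self.basket_grasp()) is not None:
            self.execute("load", point)
            log.append("load")
            self._correct(self.drum_item_out, self.drum_edge_grasp, "push_back", log)
            self._correct(self.floor_item, self.floor_grasp, "pick_from_floor", log)
        return log
```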

We use the Camozzi CGPS pneumatic gripper for grasping the clothes. However, its maximum aperture is very small (2 cm) and does not give satisfactory results, as it only works with small clothes with rough surfaces, where there is enough friction force. In the general case, wrinkles have to be formed inside the gripper to obtain a reliable grasp. To achieve this, we designed the gripper shown in Figure 11, which uses linkages to convert linear motion into rotary motion and thus extends the maximum aperture.

A demonstration of the loading sequence and recovery modules can be viewed here: https://www.youtube.com/watch?v=rK4GwbaqT8Q

4 CONCLUSIONS

Using our proposed framework, it was possible to autonomously load a washing machine with clothes placed inside a basket. Our framework is modular in its design to allow for the recovery of failures when needed. Active Contours showed robust clustering of the image without prior knowledge of the number or colors of the items in the basket, or even an estimate of their number. Despite the incomplete clustering of the image in some cases, clusters were created and a grasping point was found. More complete clustering could be achieved by adding more points for the initial curve. However, this would add to the runtime and complexity of the work, and such a complete clustering of the image is not needed, as the main objective is to find at least one continuous area to consider for grasping, which could be achieved using fewer points. The grasped item(s) could be placed inside the drum and, when this failed, the recovery modules for drum and floor checking ensured the completion of the loading. Most of the failures were associated with big or heavy items that either slipped from the robot or were not completely placed inside the drum. A deeper grasp could be achieved by shifting the grasping point downwards by a few centimeters. This resulted in more successes thanks to a firmer grip, but also increased the probability of grasping other item(s) lying below, which could cause items to fall on the ground. Again, this kind of failure could be recovered by our floor inspection module.

Fingers attached to the pneumatic gripper with this mechanism created better grasping and grip.

Figure 11: Gripper in open/close positions.

Possible extensions of this work could rely on machine learning to detect a grasping point and use Dynamical Movement Primitives (DMP) to learn a trajectory for the loading process. A multi-fingered gripper could also be used for more robust and firm grasping.

REFERENCES

Caselles, V., Kimmel, R., and Sapiro, G. (1995). Geodesic active contours. In IEEE International Conference on Computer Vision, pages 694–699.


Chan, T. and Vese, L. (2001). Active contours without edges. IEEE Transactions on Image Processing, 10(2), pages 266–277.

Clegg, A., Yu, W., Erickson, Z., Tan, J., Liu, C., and Turk, G. (2017). Learning to navigate cloth using haptics. In IEEE International Conference on Intelligent Robots and Systems, pages 2799–2805. Institute of Electrical and Electronics Engineers Inc.

Cohen, L. D. (1991). On active contour models and balloons. CVGIP: Image Understanding, vol. 53, no. 2, pages 211–218.

Coleman, D., Sucan, I., Chitta, S., and Correll, N. (2014). Reducing the barrier to entry of complex robotic software: a moveit! case study. pages 1–14.

Ganesan, P., Rajini, V., and Rajkumar, R. (2010). Segmentation and edge detection of color images using cielab color space and edge detectors. In International Conference on "Emerging Trends in Robotics and Communication Technologies", INTERACT-2010. IEEE.

Gil, P., Mateo, C. M., Delgado, Á., and Torres, F. (2016). Visual/Tactile sensing to monitor grasps with robot-hand for planar elastic objects. 47th International Symposium on Robotics, ISR 2016, 2016:439–445.

Kampouris, C., Mariolis, I., Peleka, G., Skartados, E., Kargakos, A., Triantafyllou, D., and Malassiotis, S. (2016). Multi-sensorial and explorative recognition of garments and their material properties in unconstrained environment. In IEEE International Conference on Robotics and Automation (ICRA), pages 1656–1663. IEEE.

Kass, M., Witkin, A., and Terzopoulos, D. (1988). Snakes: Active contour models. International Journal of Computer Vision, 1(4), pages 321–331.

Marquez-Neila, P., Baumela, L., and Alvarez, L. (2014). A morphological approach to curvature-based evolution of curves and surfaces. In IEEE Transactions on Pattern Analysis and Machine Intelligence, 36(1), pages 2–17.

Mira, D., Delgado, A., Mateo, C. M., Puente, S. T., Candelas, F. A., and Torres, F. (2015). Study of dexterous robotic grasping for deformable objects manipulation. 2015 23rd Mediterranean Conference on Control and Automation, MED 2015 - Conference Proceedings, pages 262–266.

Petrík, V., Smutný, V., Krsek, P., and Hlaváč, V. (2017). Single arm robotic garment folding path generation. Advanced Robotics, 31(23-24):1325–1337.

Quigley, M., Gerkey, B., Conley, K., Faust, J., Foote, T., Leibs, J., Berger, E., Wheeler, R., and Ng, A. (2009). Ros: an open-source robot operating system. In IEEE Intl. Conf. on Robotics and Automation (ICRA) Workshop on Open Source Robotics.

Ramisa, A., Alenya, G., Moreno-Noguer, F., and Torras, C. (2011). Determining where to grasp cloth using depth information. Frontiers in Artificial Intelligence and Applications, 232:199–207.

Rusu, R. B. and Cousins, S. (2011). 3d is here: Point cloud library (pcl). In IEEE International Conference on Robotics and Automation, pages 19–22.

Shehawy, H., Rocco, P., and Zanchettin, A. (2021). Estimating a garment grasping point for robot. In 20th International Conference on Advanced Robotics (ICAR). IEEE.

Stria, J., Prusa, D., Hlavac, V., Wagner, L., Petrik, V., Krsek, P., and Smutny, V. (2014). Garment perception and its folding using a dual-arm robot. IEEE International Conference on Intelligent Robots and Systems, (September):61–67.

Sun, L., Aragon-Camarasa, G., Rogers, S., and Siebert, J. P. (2018). Autonomous Clothes Manipulation Using a Hierarchical Vision Architecture. IEEE Access, 6(October):76646–76662.

Sural, S., Qian, G., and Pramanik, S. (2002). Segmentation and histogram generation using the hsv color space for image retrieval. In IEEE International Conference on Image Processing, pages 589–592.

Wang, J. and Olson, E. (2016). Apriltag 2: Efficient and robust fiducial detection. In IEEE International Conference on Intelligent Robots and Systems, pages 4193–4198.

Willimon, B., Birchfield, S., and Walker, I. (2011a). Classification of clothing using interactive perception. In IEEE International Conference on Robotics and Automation, pages 1862–1868.

Willimon, B., Birchfield, S., and Walker, I. (2011b). Model for unfolding laundry using interactive perception. In IEEE International Conference on Intelligent Robots and Systems, pages 4871–4876. IEEE.

Yamazaki, K., Nagahama, K., and Inaba, M. (2011). Daily clothes observation from visible surfaces based on wrinkle and cloth-overlap detection. Proceedings of the 12th IAPR Conference on Machine Vision Applications, MVA 2011, pages 275–278.

Yamazaki, K., Oya, R., Nagahama, K., Okada, K., and Inaba, M. (2014). Bottom dressing by a life-sized humanoid robot provided failure detection and recovery functions. 2014 IEEE/SICE International Symposium on System Integration, SII 2014, pages 564–570.

Yamazaki, K., Ueda, R., Nozawa, S., Mori, Y., Maki, T., Hatao, N., Okada, K., and Inaba, M. (2010). System integration of a daily assistive robot and its application to tidying and cleaning rooms. IEEE/RSJ 2010 International Conference on Intelligent Robots and Systems, IROS 2010 - Conference Proceedings, pages 1365–1371.

Yuan, W., Mo, Y., Wang, S., and Adelson, E. H. (2018). Active clothing material perception using tactile sensing and deep learning. In IEEE International Conference on Robotics and Automation (ICRA), pages 4842–4849. IEEE.

Yuen, H., Princen, J., Illingworth, J., and Kittler, J. (1990). Comparative study of hough transform methods for circle finding. Image and Vision Computing, 8(1):71–77.
