Toward Autonomous Mobile Robot Navigation in Early-Stage Crop Growth

Luis Emmiᵃ, Jesus Herrera-Diazᵇ and Pablo Gonzalez-de-Santosᶜ

Centre for Automation and Robotics (UPM-CSIC), Arganda del Rey, Madrid 28500, Spain

Keywords: Early-stage Crop-growth, Autonomous Navigation, Row following, Time-of-Flight Camera, Deep Learning.

Abstract: This paper presents a general procedure for enabling autonomous row following in crops during early-stage

growth, without relying on absolute localization systems. A model based on deep learning techniques (object

detection for wide-row crops and segmentation for narrow-row crops) was applied to accurately detect both

types of crops. Tests were performed using a manually operated mobile platform equipped with an RGB camera and a time-of-flight (ToF) camera. Data were acquired during different time periods and weather conditions, in maize and wheat fields. The results showed successful crop detection and enable the future development of a fully autonomous navigation system in cultivated fields during the early stage of crop growth.

1 INTRODUCTION

Autonomous vehicles for agriculture have drawn the

attention of farmers in recent decades and the activity

for developing robust, safe and eco-friendly

autonomous vehicles has increased significantly

(Gonzalez-de-Santos et al., 2020). However,

navigation is still a current challenge for autonomous

robotic systems (Sarmento et al., 2021) because

agricultural fields are unstructured, dynamic and

diverse environments where weather conditions,

luminosity, and stages of crop growth change

continuously.

Conventional localization and perception

technologies, such as the Global Navigation Satellite

System (GNSS), 2D and 3D LIDAR, and stereo

cameras, have proven their usefulness in ensuring

autonomous navigation in fields (Shalal et al., 2013).

However, they rely heavily on user intervention to ensure accurate mapping and conditioning of the working environment, and they are not able, by themselves, to provide a robust navigation system capable of ensuring full autonomy in these demanding environments. Precise mapping

(including crop location), setting up the working area,

luminosity variability, GNSS correction signal failure, communications latency, and GNSS-denied zones are currently some of the major challenges in autonomous navigation.

a https://orcid.org/0000-0003-4030-1038

b https://orcid.org/0000-0002-8516-0550

c https://orcid.org/0000-0002-0219-3155

Weed management is one of the operations that

has generated the most solutions in agriculture

(Oliveira et al., 2021). Machine vision and GNSS-

based mapping have been the preferred technologies

to distinguish weeds from crops and deliver precision

treatment (Mavridou et al., 2019). Site-specific weed

management techniques have gained considerable

popularity in the last few years, particularly those

based on high-power laser sources, which offer a more sustainable and eco-friendly alternative to other techniques (Rakhmatulin & Andreasen, 2020). These technologies have been shown to be

successful when weeds (and therefore crops) are in an

early stage of growth. Crop row following has been a

widely discussed topic in the literature (Bonadies &

Gadsden, 2019). However, most of the studies and

applications solve the problem when the crop is

already in a mature growth stage or in crop types with

an appropriate morphology for LIDAR-based

methods, such as vineyards (Emmi et al., 2021). The

early stage of plant growth, together with the unevenness in ground height and the presence of weeds, makes conventional perception systems unable to identify the crop properly, thus preventing their use for autonomous row guidance.

Emmi, L., Herrera-Diaz, J. and Gonzalez-de-Santos, P.

Toward Autonomous Mobile Robot Navigation in Early-Stage Crop Growth.

DOI: 10.5220/0011265600003271

In Proceedings of the 19th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2022), pages 411-418

ISBN: 978-989-758-585-2; ISSN: 2184-2809

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

This paper presents an approach for developing

smart perception systems to enable autonomous

robots to navigate in cultivated fields in an early stage

of crop growth without relying on absolute

localization systems, such as GNSS.

2 RELATED WORK

Autonomous navigation in crop fields mainly consists of following the crop lines and, at the end of each pass, making a U-turn to return to the field. The identification and following of crop rows are subjects that still attract considerable interest. Diverse strategies are found in the literature to solve these problems, and they follow a quite general procedure: (i)

single crops or crop rows are detected; (ii) the crop row

central point or the equation of the line is extracted; and

(iii) the path to be followed by the mobile system is

planned and executed. The techniques commonly used

for identifying the crop rows are mainly based on a

combination of binary segmentation, greenness

identification (Woebbecke et al., 1995), morphological

operations, Otsu’s method (Otsu, 1979), and line detection techniques, such as the Hough transform (Hough, 1962). Many studies have made use of these

techniques across different types of crops. For

example, Jiang & Zhao, (2010) applied these

techniques to identify the crop lines in a soybean field.

Romeo et al., (2012) developed an algorithm based on

green pixel accumulation for extracting crop lines in a

maize field that outperformed the Hough

transformation methods.
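The classical greenness-plus-thresholding step described above can be sketched as follows. This is a minimal illustration, not the implementation used in any of the cited studies: it assumes the excess-green index ExG = 2g − r − b computed on chromatic coordinates and a histogram-based Otsu cut, and the function names (`excess_green`, `otsu_threshold`, `vegetation_mask`) and the bin count are hypothetical.

```python
def excess_green(r, g, b):
    """Excess-green index on chromatic coordinates: ExG = 2g - r - b."""
    total = r + g + b
    if total == 0:
        return 0.0
    return (2 * g - r - b) / total


def otsu_threshold(values, bins=64):
    """Pick the histogram cut that maximizes the between-class variance."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    centers = [lo + (i + 0.5) * width for i in range(bins)]
    total = len(values)
    sum_all = sum(c * h for c, h in zip(centers, hist))
    best_var, best_cut = -1.0, lo
    w0, sum0 = 0, 0.0
    for i in range(bins - 1):
        w0 += hist[i]
        sum0 += centers[i] * hist[i]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_cut = var_between, lo + (i + 1) * width
    return best_cut


def vegetation_mask(image):
    """image: rows of (r, g, b) tuples; returns rows of booleans (True = green)."""
    exg = [[excess_green(*px) for px in row] for row in image]
    cut = otsu_threshold([v for row in exg for v in row])
    return [[v > cut for v in row] for row in exg]
```

A mask of this kind marks any green pixel, weeds included, which is the limitation of greenness-based methods noted later in this section.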

To increase the accuracy and robustness of these

techniques, Jiang et al., (2015) proposed a method

based on least squares and multiple regions of interest

(ROIs), where the data were split into horizontal

strips. They compared their proposal with the

standard Hough transform on soybean, wheat and

maize crops. Following the same strategy, Zhang et

al., (2018) applied a multi-ROI approach in a maize

field. As a novelty, they employed a double thresholding approach, using the Otsu method in combination with particle swarm optimization to improve the differentiation between weeds and crops.

There are also several studies that combined the

absolute navigation systems and computer vision

techniques previously mentioned. For example,

Bakker et al., (2011) employed an RTK-DGPS

system to navigate in a sugar beet field, and

Kanagasingham et al., (2020) proposed a combined

navigation strategy for a rice field weeding robot.

For vineyards and orchards in general, it is quite

common to use LIDAR-based systems in

combination with IMU data and odometers (Lan et

al., 2018) or color cameras (Benet et al., 2017) for

crop row following. The latest technological

advances have made it possible to incorporate other

technologies to obtain 3D information from the

environment, as is the case with infrared-based

cameras. Among these cameras, there is a growing interest in time-of-flight (ToF) cameras, which provide a point cloud of the environment in a manner similar to LIDAR. Gai et al.,

(2021) used this type of camera for navigation under

a canopy, where the GNSS signal may be denied.

Currently, as the above work stated, ToF cameras are

beginning to be used with great interest in outdoor

environments due to improvements in their light

sensors and wider vertical field-of-view (FoV)

capability than what is available with LIDAR sensors.

There are many research studies that use classical

techniques for in-field navigation, but these

techniques usually need to adjust certain system

parameters for navigating in new environments and

situations, which limits their generalizability. In

addition, for methods based on green detection, the

presence of weeds may be a major problem.

In recent years, techniques based on artificial

intelligence (AI) have gained much interest. Two

different techniques can be distinguished: (i) object

detection, which uses bounding boxes to identify the

classes, and (ii) segmentation, which is based on pixel

classification. Their selection depends on the type of

crop to be identified: (i) wide row crops (maize, sugar

beet) where the object detection is preferred and (ii)

narrow row crops (wheat, rice) where segmentation-

based classification is more suitable. Normally,

artificial intelligence-based techniques are used to

identify the crop, and then, some combination of the

aforementioned techniques, such as the Hough

transform or RANSAC (Fischler & Bolles, 1981), are

used to extract the crop lines. Ponnambalam et al.,

(2020) used a SegNet (Badrinarayanan et al., 2017)

with ResNet50 (He et al., 2015) convolution neural

network (CNN) in combination with a multi-ROI

strategy to segment and extract the row crops in

strawberry fields. Simon & Min, (2020) compared the

results of a method based on a neural network with

the classical method based on the Hough transform in

a maize field. The deep learning method obtained

higher accuracy and more robustness. Emmi et al.,

(2021) used a YOLOv3 (Redmon & Farhadi, 2018)

network for object detection in broccoli, cabbage and

vineyard trunks. de Silva et al., (2021) tested the

performance of a deep learning model based on U-Net (Ronneberger et al., 2015) in a sugar beet field

under different scenarios, such as shadows, presence

of weeds, gaps in the crop row, intense sunlight

conditions, and different stages of crop growth. There

is also a special interest in AI on the edge, i.e., on users' devices. For this purpose, MobileNet (Howard et al., 2017) networks are the most suitable due to their efficiency and speed, at the cost of generally poorer accuracy.

In the search for new alternatives to weed

management, strategies based on high-power lasers

have emerged. This type of solution has been shown to be effective when plants are small (Rakhmatulin & Andreasen, 2020). These types of strategies have

given way to alternatives for row following, where

the abovementioned examples may not achieve

accurate results and the robustness of the models may

be inadequate. To generalize the problem, it is necessary to define what an early-stage crop is. There

are different nomenclatures to define the different

growth stages of different crops, but these definitions

are normally specific to each type of crop. For the

sake of this development, maize and wheat are

selected as examples of crops sown in wide rows and

narrow rows, respectively, which coincide with this

case study. The growth stage in maize will be identified using the classification made by Zhao et al. (2012), while the classification made by Zadoks et al. (1974) will be used for wheat. In this paper,

early growth stage crops will be considered as those

from the moment the perception system is able to

detect them until the moment of growth when the

weeding system based on high-power laser sources is

no longer efficient for the elimination of weeds,

assuming that crops and weeds grow at the same rate.

This stage corresponds to approximately the V2 stage

for maize (Zhao et al., 2012) and approximately the

12-seedling stage for wheat (Zadoks et al., 1974).

The literature on autonomous navigation in the

early growth stage crops is rather scarce, although

there are some significant studies. For maize, Wei et

al., (2022) built what they defined as the dataset row

anchor selection classification method (RASCM) for

tracking crop rows. García-Santillán et al., (2018)

developed a method for extracting curved and straight

crop rows based on greenness identification, double

thresholding and morphological operations that was

also tested in what one can consider early-stage maize

crops. Winterhalter et al., (2018), assuming that crop

rows are parallel and equidistant, proposed a method

based on an adaptation of the Hough transform that

was able to detect crop rows in early-stage sugar beet

crops. Finally, Ahmadi et al., (2021) developed a

method based on greenness identification and Otsu’s

method for multicrop row detection relying only on

on-board cameras. This proposal was tested in early-

stage sugar beet.

Therefore, this paper presents a strategy that

integrates several of the technologies mentioned

above, such as artificial intelligence for crop

detection, in conjunction with emerging perception

systems such as ToF cameras, to obtain a highly

accurate depth map and locate the detected crop with

respect to the mobile platform. The present work aims

to pave the way for the development of a system able

to autonomously navigate in cultivated fields in an

early stage of crop growth in a robust and efficient

way, with the capability to scale the system to

incorporate new crops or to be able to operate in

unforeseen environments.

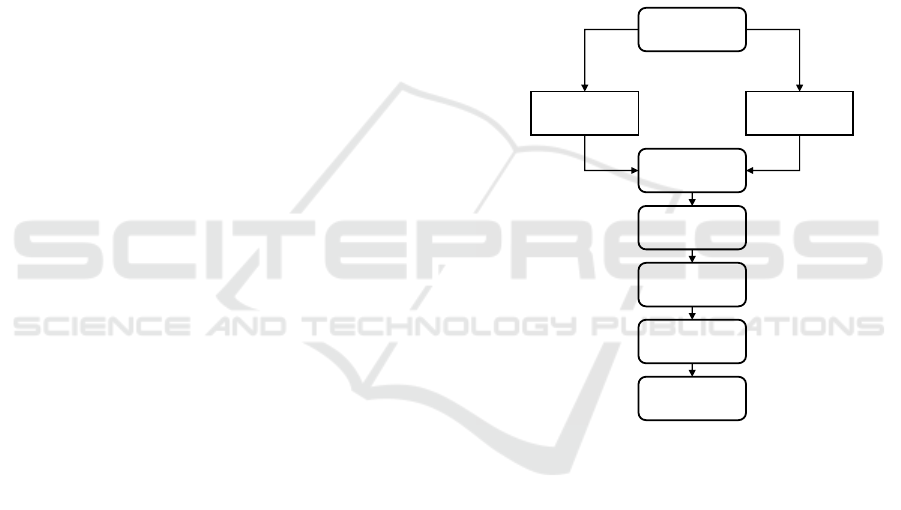

Figure 1: Diagram of the presented methodology.

3 MATERIALS AND METHODS

Figure 1 presents a general procedure for enabling

autonomous navigation in crops in an early growth

stage. First, data are acquired by the perception

systems, which consist of RGB and ToF cameras.

Then, depending on the type of crop, a different

approach is followed. For wide-row crops, object

detection is used, and for narrow-row crops,

segmentation is applied to identify the crops in the

RGB images, making use, in both cases, of deep

learning models. Once a crop is identified, a match

between the ToF point cloud and the output of the

respective deep learning model is made, obtaining the

relative distance between the detected crops and the

autonomous vehicle. Next, a filtering process is


utilized to remove the outliers and the noise of the

matched point cloud, mostly produced by the

sunlight. Next, RANSAC is applied to obtain the

ground plane, the background points are removed,

and the points that correspond to the crops are

projected onto the plane. Later, a clustering algorithm

based on DBSCAN (Ester et al., 1996), using the

present and past points, is employed to obtain the crop

rows. Finally, the RANSAC algorithm is again

applied to compute the directions of each cluster, and

the final path that the mobile platform must follow is

calculated.
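The last two steps of the procedure above (clustering the ground-projected crop points into rows, then fitting a direction to each cluster) can be sketched in pure Python. This is a simplified illustration under stated assumptions, not the authors' implementation: points are already projected onto the ground plane as 2D coordinates in metres, only the current points are used (no accumulation of past points), and the parameter values (`eps`, `min_pts`, `tol`) and function names are hypothetical.

```python
import math
import random


def dbscan(points, eps, min_pts):
    """Label each 2D point with a cluster id (0, 1, ...) or -1 for noise."""
    labels = [None] * len(points)

    def neighbors(i):
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # provisional noise; may become a border point later
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nbrs = neighbors(j)
            if len(nbrs) >= min_pts:
                queue.extend(nbrs)  # j is a core point, keep expanding
    return labels


def ransac_line(points, n_iter=200, tol=0.05, seed=0):
    """Return (point_on_line, unit_direction, inliers) for the best 2-point line."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_iter):
        (x1, y1), (x2, y2) = rng.sample(points, 2)
        norm = math.hypot(x2 - x1, y2 - y1)
        if norm == 0:
            continue
        dx, dy = (x2 - x1) / norm, (y2 - y1) / norm
        # Perpendicular distance of each point to the candidate line.
        inliers = [p for p in points
                   if abs((p[0] - x1) * dy - (p[1] - y1) * dx) <= tol]
        if best is None or len(inliers) > len(best[2]):
            best = ((x1, y1), (dx, dy), inliers)
    return best


def extract_rows(points, eps=0.3, min_pts=3):
    """Cluster points into rows and fit one line per cluster."""
    labels = dbscan(points, eps, min_pts)
    rows = {}
    for cid in sorted(set(labels) - {-1}):
        members = [p for p, lab in zip(points, labels) if lab == cid]
        rows[cid] = ransac_line(members)
    return rows
```

For example, two synthetic rows 0.75 m apart with plants every 0.2 m are separated with `eps=0.3`, because the in-row spacing falls below `eps` while the inter-row spacing does not; in practice these values would have to be tuned to the sowing pattern.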

For crop identification, depending on the type of

crop, a different deep learning architecture was used.

In the case of maize, the YOLOv4 (Bochkovskiy et

al., 2020) model was employed to detect the plants.

For wheat, several combinations between the

segmentation models PSPNet (Zhao et al., 2017), U-

Net (Ronneberger et al., 2015), and SegNet

(Badrinarayanan et al., 2017) and the base models

ResNet50 (He et al., 2015), VGG16, MobileNet

(Howard et al., 2017) and CNN were tested (see Table

1). Their performance characteristics and

comparisons of the results of the different models will

be discussed in the results section.

Table 1: Segmentation models.

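| No. | Base Model | Segmentation Model |
|---|---|---|
| 1 | CNN | PSPNet |
| 2 | VGG16 | PSPNet |
| 3 | ResNet50 | PSPNet |
| 4 | CNN | U-Net |
| 5 | VGG16 | U-Net |
| 6 | ResNet50 | U-Net |
| 7 | MobileNet | U-Net |
| 8 | CNN | SegNet |
| 9 | VGG16 | SegNet |
| 10 | ResNet50 | SegNet |
| 11 | MobileNet | SegNet |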

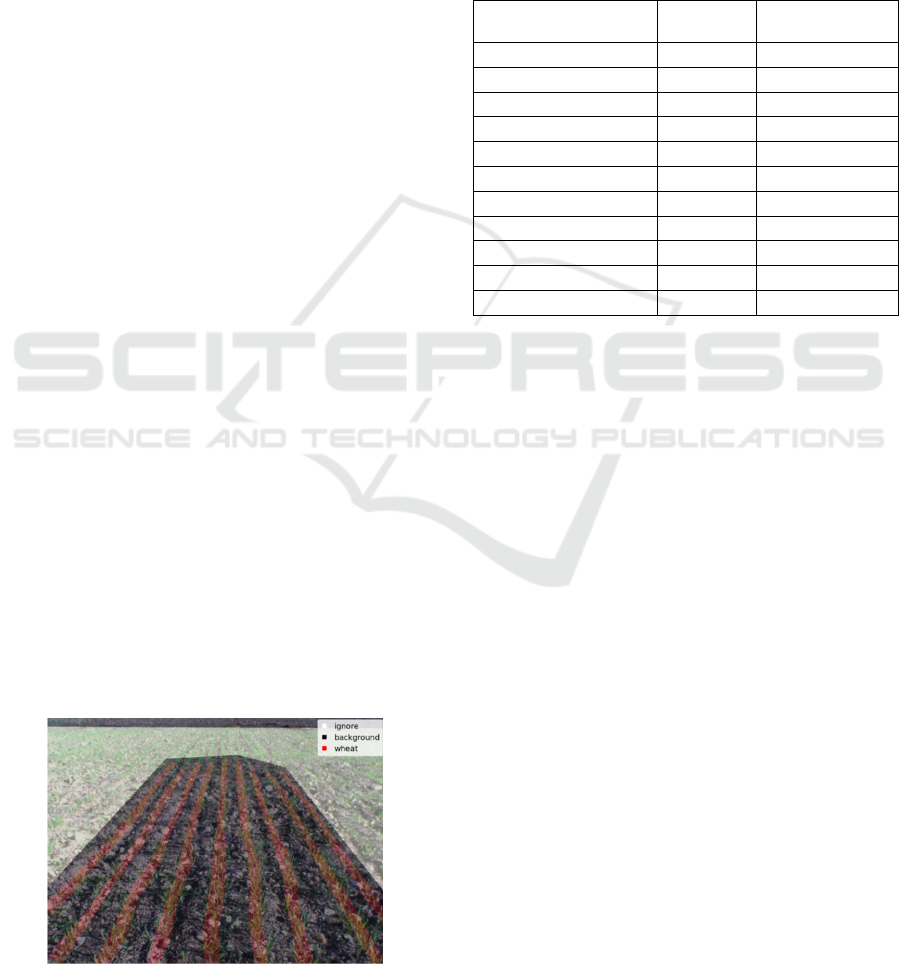

Figure 2: Example of the maize experimental fields.

Figure 3: Example of the wheat experimental fields.

The presented methodology was validated under

the European project named Sustainable Weed

Management in Agriculture with Laser-Based

Autonomous Tools (WeLASER). The WeLASER

project is a consortium of ten partners from Spain,

Germany, Denmark, France, Poland, Belgium, Italy

and the Netherlands. WeLASER aims to develop

precision weeding equipment based on applying

lethal doses of energy to weed meristems using a

high-power laser source with the main objective of

eliminating the use of herbicides while improving

productivity. The prototype consists of an

autonomous robot with an artificial intelligence vision

system that will differentiate between weeds and

crops. It will then detect the meristems of the weeds

and apply the laser to kill the plants. All the systems

will be coordinated by a smart controller based on the

Internet of Things (IoT) and cloud computing

techniques. The target crops will be wheat, maize, and

sugar beet (WeLASER, 2022).

Figure 4: CAROB robotic platform and perception system.


To validate the presented algorithm, data were

acquired in experimental fields of maize (see Fig. 2)

and wheat (see Fig. 3). The dimensions of each field

were 20 m × 60 m.

The CAROB robotic platform that was developed

by AgreenCulture (2022) was used for data

acquisition, where the perception system was

installed (see Fig. 4). The perception system consisted of a TRI016S-CC RGB camera equipped with the SV-0614V lens (resolution: 1.6 MP; FoV: 54.6° × 42.3°) and an HLT003S-001 ToF camera (resolution: 0.3 MP; FoV: 69° × 51°), both of which were acquired from Lucid Vision Labs (2022).

The data were acquired by manually operating the

mobile platform during different time periods and

weather conditions in the same season. To build the maize and wheat datasets, 450 and 125 images were labeled, respectively. Data augmentation techniques, such as rotation, image cropping, blurring and brightness changes, among others, were used to increase the size of the datasets. In both cases, 80% of

the data was destined for the training group, 10% for

the validation group and 10% for the test group. Part

of the dataset used in this work has been published in

an open-access repository, for both maize

(https://doi.org/10.20350/digitalCSIC/14566) and

wheat (https://doi.org/10.20350/digitalCSIC/14567).
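The 80/10/10 partition described above can be sketched as follows. This is only an illustration: the text does not state whether the split was applied before or after augmentation, nor how the images were shuffled, so the seed, the integer rounding rule and the function name `split_dataset` are assumptions.

```python
import random


def split_dataset(items, train_pct=80, val_pct=10, seed=42):
    """Shuffle and split items into train/validation/test lists.

    Percentages are integers; the test set receives whatever remains,
    so every item lands in exactly one group.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * train_pct // 100
    n_val = len(shuffled) * val_pct // 100
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


# Applied to the labeled image counts reported in the text.
maize_train, maize_val, maize_test = split_dataset(range(450))
wheat_train, wheat_val, wheat_test = split_dataset(range(125))
```

With these rounding choices, the 450 maize images divide into 360/45/45 and the 125 wheat images into 100/12/13.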

As shown in Fig. 5, the way in which the labeling process is performed can lead to misleading errors. In the dataset images, the crops that are located in more distant regions or that are not clearly recognizable have not been labeled. Consequently, when the model was validated, detections in these regions that corresponded to real but unlabeled crops were counted as false positives, which lowered the measured performance below the real performance of the model. To mitigate this common problem, ignoring masks were used, specifying that the model should not consider those parts of the images where the differences between crops and the background may be ambiguous.
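The effect of an ignoring mask on the evaluation can be illustrated with a small sketch. It assumes that ambiguous pixels are marked in the ground truth with a reserved label value (255 here, a hypothetical choice) and that such pixels are simply skipped when counting true positives, false positives and false negatives; the source does not specify how the masks were encoded.

```python
IGNORE = 255  # hypothetical label value marking ambiguous, unlabeled regions


def masked_confusion(pred, truth, positive=1, ignore=IGNORE):
    """Count (TP, FP, FN) for the positive class, skipping ignored pixels."""
    tp = fp = fn = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if t == ignore:
                continue  # ambiguous pixel: contributes to no count
            if p == positive and t == positive:
                tp += 1
            elif p == positive:
                fp += 1
            elif t == positive:
                fn += 1
    return tp, fp, fn


# The right-hand column is a distant region where crops were not labeled.
truth = [[1, 0, IGNORE],
         [0, 1, IGNORE]]
pred = [[1, 0, 1],
        [1, 0, 1]]
with_mask = masked_confusion(pred, truth)                 # (1, 1, 1)
without_mask = masked_confusion(pred, truth, ignore=None)  # (1, 3, 1)
```

Without the mask, the two predictions in the unlabeled column are counted as false positives even though they may correspond to real crops.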

Figure 5: Example of a labeled wheat image.

The segmentation models were implemented with

Keras (https://github.com/fchollet/keras) using

TensorFlow (Abadi et al., 2016) as the backend

software tool, while the YOLOv4 model used the Darknet (Redmon, 2013) framework. The training process was performed on a Quadro RTX 6000 graphics card with 24 GB of GDDR6 memory, while the inference process was evaluated on a GeForce GTX 1650.

Table 2: Performance of the segmentation models.

| Model | IoU | Training time per epoch [s] |
|---|---|---|
| MobileNet SegNet | 0.6815 | 124 |
| MobileNet U-Net | 0.7347 | 124 |
| ResNet50 PSPNet | 0.7370 | 180 |
| ResNet50 SegNet | 0.7578 | 164 |
| ResNet50 U-Net | 0.7406 | 183 |
| VGG16 PSPNet | 0.7343 | 227 |
| VGG16 SegNet | 0.7461 | 196 |
| VGG16 U-Net | 0.6982 | 208 |
| CNN PSPNet | 0.7321 | 171 |
| CNN SegNet | 0.7364 | 156 |
| CNN U-Net | 0.7339 | 155 |

4 RESULTS

To assess the overall performance of the presented

methodology, it is first necessary to evaluate the crop

identification models. For the wheat crop, the

different models listed in Table 1 have been

compared. All models were trained for the same

initial number of epochs, although an early stopping

technique based on validation loss was applied to

avoid overfitting. The comparison between the

different segmentation models is summarized in

Table 2. As the dataset was imbalanced, the metric chosen to evaluate the performance of the models was the frequency-weighted intersection over union (IoU). The model with the best performance was ResNet50-SegNet. However, in applications in which the models are to be used in real time, it is necessary to consider the inference time in addition to the performance of the model. In this case, the differences between the inference times of the considered models were not notable, taking into account that, in general, the inference time is smaller than the training time. Hence, ResNet50-SegNet was selected as the final model. As expected, models based on MobileNet are considerably faster, but at the cost of generally poorer performance.
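For reference, the frequency-weighted IoU is commonly defined as the per-class IoU weighted by the share of ground-truth pixels belonging to each class. The sketch below follows that common definition; whether the evaluation in this work used exactly this formulation is an assumption, and the function name is hypothetical.

```python
def frequency_weighted_iou(pred, truth, classes):
    """Sum over classes of (ground-truth pixel share) * (class IoU)."""
    flat_p = [p for row in pred for p in row]
    flat_t = [t for row in truth for t in row]
    total = len(flat_t)
    score = 0.0
    for c in classes:
        inter = sum(1 for p, t in zip(flat_p, flat_t) if p == c and t == c)
        n_truth = sum(1 for t in flat_t if t == c)
        n_pred = sum(1 for p in flat_p if p == c)
        union = n_truth + n_pred - inter
        if union > 0:
            score += (n_truth / total) * (inter / union)
    return score


# Imbalanced toy example: three background pixels (0), one crop pixel (1).
truth = [[0, 0, 0, 1]]
pred = [[0, 0, 1, 1]]
fw_iou = frequency_weighted_iou(pred, truth, classes=[0, 1])
```

In this toy case the background IoU is 2/3 with weight 3/4 and the crop IoU is 1/2 with weight 1/4, so the frequency-weighted value is 0.625, whereas the unweighted mean of the two IoUs would be about 0.583.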


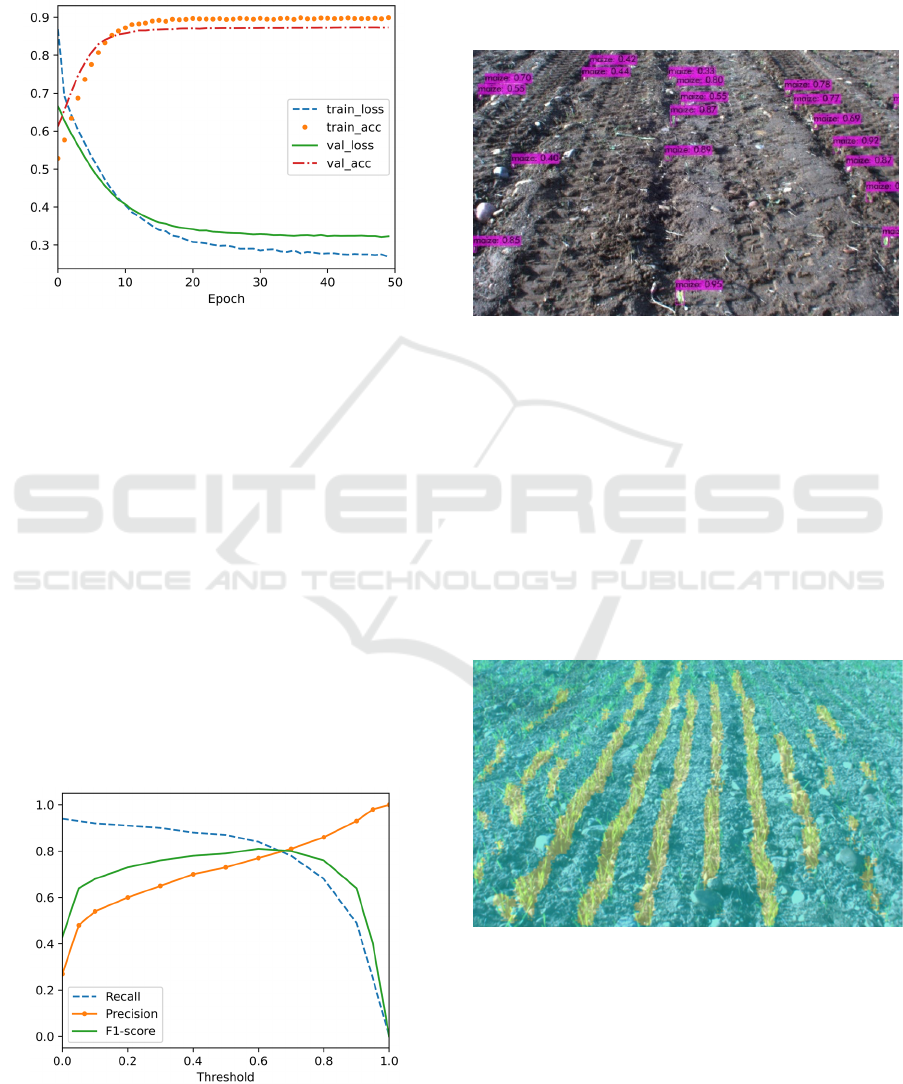

An example of the typical training curves is

presented in Fig. 6, for the training with the

ResNet50-SegNet network, where the loss curves for

both training (train_loss) and validation (val_loss) are

presented.

Figure 6: Example of training curves. Y-axis normalized to

compare loss curves and precision curves.

It can be seen in these curves that the network has not been overfitted and that the validation samples are representative. Moreover, a point is reached from which the training loss continues to decrease while the validation loss remains the same. Furthermore, Fig. 6 also presents the accuracy curves for both training (train_acc) and validation (val_acc), which show a proper fit of the model.

On the other hand, regarding object detection in

maize, a YOLOv4 model was selected for crop

identification. Average precision (AP) was the metric

chosen to assess the performance of the model, and

its values for IoU thresholds of 0.25, 0.5, and 0.75

were 0.9168, 0.8478 and 0.1496, respectively. In

addition, the precision and recall metrics have been

calculated for different thresholds, and the

comparative curves are presented in Fig. 7.
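The role of the IoU threshold in the AP values above can be made concrete with a short sketch of the box-overlap computation. The box format (x_min, y_min, x_max, y_max) and the function name are assumptions for illustration; they are not taken from the Darknet implementation.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# A detection covering the lower half of a labeled plant box.
label = (0.0, 0.0, 10.0, 10.0)
detection = (0.0, 0.0, 10.0, 5.0)
overlap = box_iou(label, detection)
matches = {th: overlap >= th for th in (0.25, 0.5, 0.75)}
```

Here the overlap is 0.5, so the same detection counts as correct at the 0.25 and 0.5 thresholds and as incorrect at 0.75. A detection that locates a plant well enough for row following can therefore still be rejected by a strict threshold.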

Figure 7: Recall, precision and F1-score for different

threshold values. Y-axis normalized.

The threshold is chosen depending on whether the recall or precision is desired to be higher, i.e., whether a higher number of false positives (FP) or false negatives (FN) is more acceptable. A trade-off between

precision and recall is selected based on the F1-score,

which is maximal for threshold values of

approximately 0.6.
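Selecting the threshold by maximizing the F1-score can be sketched as below. The sketch assumes that the threshold in Fig. 7 is the detection confidence threshold and that each detection has already been marked as a true or false positive; the detection list is synthetic toy data, not the results reported here, and the function names are hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) from raw counts, guarding empty cases."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def best_threshold(detections, n_truth, thresholds):
    """detections: list of (confidence, is_true_positive) pairs.

    Returns the (threshold, F1) pair with the highest F1-score.
    """
    best = None
    for th in thresholds:
        kept = [ok for conf, ok in detections if conf >= th]
        tp = sum(kept)
        fp = len(kept) - tp
        fn = n_truth - tp
        f1 = precision_recall_f1(tp, fp, fn)[2]
        if best is None or f1 > best[1]:
            best = (th, f1)
    return best


# Synthetic detections for four labeled plants.
toy = [(0.9, True), (0.8, True), (0.7, True), (0.5, False),
       (0.4, True), (0.3, False), (0.2, False)]
chosen = best_threshold(toy, n_truth=4, thresholds=[0.2, 0.4, 0.6, 0.8])
```

Raising the threshold removes false positives (higher precision) but eventually discards true detections (lower recall); the F1-score peaks where the two effects balance.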

Figure 8: Output of the object detection model (maize).

In both cases, the models are capable of properly

detecting the crops (see Fig. 8 and Fig. 9), thus

enabling crop line extraction. It is worth mentioning

that for autonomous navigation, the detection of all

crops in a single image is not an essential

requirement, given that strategies such as point

accumulation or particle filters can be applied to

reconstruct the crop line by taking information from

various epochs. In the absence of ground truth of the

row crops, a quantitative evaluation of the error

obtained by the process of crop line extraction is not

feasible.

Figure 9: Output of the segmentation model (wheat).

One could consider that the error will be similar

to the error obtained by other studies that have used a

similar procedure for line extraction, such as the work

presented by Emmi et al., (2021). Although the error

cannot be quantified (because at the time of the tests

the position of each crop was not available), it can be

ICINCO 2022 - 19th International Conference on Informatics in Control, Automation and Robotics

416

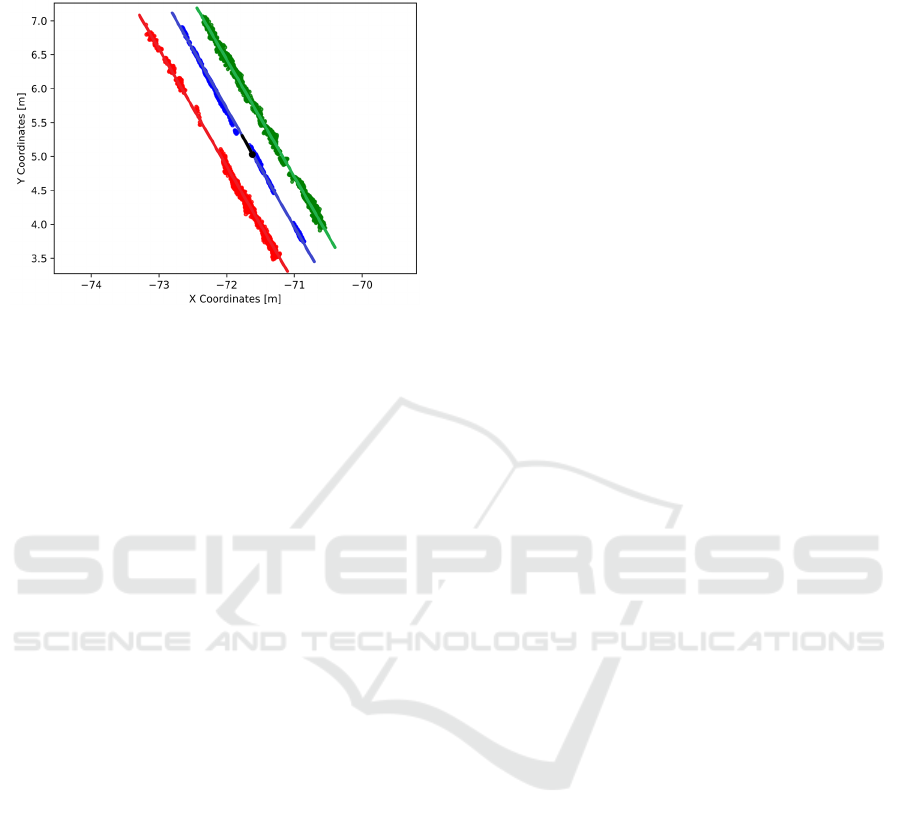

clearly established that the presented methodology is

able to identify the crop lines properly (see Fig. 10).

Figure 10: Clustering and lines extraction (maize).

Figure 10 presents the result of applying the methodology presented in Fig. 1. By using a line extraction method such as RANSAC, it is possible to extract the crop lines for the maize field; the position and direction of the mobile platform are represented in black in a Cartesian coordinate frame. Finally, the results have shown the effectiveness of the presented methodology for autonomous navigation in early-stage crop growth.

5 CONCLUSIONS

A general procedure for crop-row identification in

early-stage growth has been presented. This

methodology seeks not to depend on global

localization systems, which will enable robust

autonomous row following in both wide-row crops and

narrow-row crops. The methodology is based on crop

identification using state-of-the-art deep learning

models, validated in maize and wheat at an early

growth stage, although the methodology can be

extended to many more crops. This approach

demonstrates that it is possible to integrate in a single

methodology the identification and classification of

diverse wide-row and narrow-row crops to estimate the

row lines for later navigation while eliminating the

outliers. The presented method has been validated

using offline data gathered by a robotic platform during

different real working conditions. The results show the

robustness and effectiveness of the methodology in

identifying the crops, and later obtaining the

characterization of the crop lines, even considering

their early stage of growth. The presented approach

will enable the future development of a fully

autonomous navigation system for weed management

using high-power laser technology. Future work will

aim to expand the identification capacity for other

crops, such as sugar beet, which is one of the target

crops of the WeLASER project, as well as to validate

the presented methodology in real time.

ACKNOWLEDGEMENTS

This article is part of the WeLASER project that has

received funding from the European Union’s Horizon

2020 research and innovation programme under grant

agreement No 101000256.

REFERENCES

Abadi, M., Barham, P., Chen, J., Chen, Z., Davis, A., Dean,

J., Devin, M., Ghemawat, S., Irving, G., Isard, M., &

others. (2016). TensorFlow: A System for Large-Scale

Machine Learning. OSDI, 16, 265–283.

AgreenCulture. (2022). AgreenCulture.

https://www.agreenculture.net/

Ahmadi, A., Halstead, M., & McCool, C. (2021). Towards

Autonomous Crop-Agnostic Visual Navigation in Arable

Fields. ArXiv:2109.11936 [Cs].

Badrinarayanan, V., Kendall, A., & Cipolla, R. (2017).

SegNet: A Deep Convolutional Encoder-Decoder

Architecture for Image Segmentation. IEEE Transactions

on Pattern Analysis and Machine Intelligence, 39(12),

2481–2495.

Bakker, T., van Asselt, K., Bontsema, J., Müller, J., & van

Straten, G. (2011). Autonomous navigation using a robot

platform in a sugar beet field. Biosystems Engineering,

109(4), 357–368.

Benet, B., Lenain, R., & Rousseau, V. (2017). Development

of a sensor fusion method for crop row tracking

operations. Advances in Animal Biosciences, 8(2), 583–

589.

Bochkovskiy, A., Wang, C. Y., & Liao, H. Y. M. (2020).

Yolov4: Optimal speed and accuracy of object detection.

arXiv preprint arXiv:2004.10934.

Bonadies, S., & Gadsden, S. A. (2019). An overview of

autonomous crop row navigation strategies for

unmanned ground vehicles. Engineering in Agriculture,

Environment and Food, 12(1), 24–31.

de Silva, R., Cielniak, G., & Gao, J. (2021). Towards

agricultural autonomy: Crop row detection under varying

field conditions using deep learning. ArXiv:2109.08247

[Cs].

Emmi, L., Le Flécher, E., Cadenat, V., & Devy, M. (2021).

A hybrid representation of the environment to improve

autonomous navigation of mobile robots in agriculture.

Precision Agriculture, 22(2), 524–549.

Ester, M., Kriegel, H.-P., Sander, J., & Xu, X. (1996). A

Density-Based Algorithm for Discovering Clusters in


Large Spatial Databases with Noise. Proceedings of the

Second International Conference on Knowledge

Discovery and Data Mining, 226–231.

Fischler, M. A., & Bolles, R. C. (1981). Random sample

consensus: A paradigm for model fitting with

applications to image analysis and automated

cartography. Communications of the ACM, 24(6), 381–

395.

Gai, J., Xiang, L., & Tang, L. (2021). Using a depth camera

for crop row detection and mapping for under-canopy

navigation of agricultural robotic vehicle. Computers and

Electronics in Agriculture, 188, 106301.

García-Santillán, I., Guerrero, J. M., Montalvo, M., &

Pajares, G. (2018). Curved and straight crop row

detection by accumulation of green pixels from images

in maize fields. Precision Agriculture, 19(1), 18–41.

Gonzalez-de-Santos, P., Fernández, R., Sepúlveda, D.,

Navas, E., Emmi, L., & Armada, M. (2020). Field Robots

for Intelligent Farms—Inhering Features from Industry.

Agronomy, 10(11), 1638.

He, K., Zhang, X., Ren, S., & Sun, J. (2015). Deep Residual

Learning for Image Recognition. ArXiv:1512.03385

[Cs].

Hough, P. V. C. (1962). Method and means for recognizing

complex patterns. (US Patent Office Patent No.

3069654).

Howard, A. G., Zhu, M., Chen, B., Kalenichenko, D., Wang,

W., Weyand, T., Andreetto, M., & Adam, H. (2017).

MobileNets: Efficient Convolutional Neural Networks

for Mobile Vision Applications. ArXiv:1704.04861 [Cs].

Jiang, G. & Zhao, C. (2010). A vision system based crop rows

for agricultural mobile robot. 2010 International

Conference on Computer Application and System

Modeling (ICCASM 2010), V11-142-V11-145.

Jiang, G., Wang, Z., & Liu, H. (2015). Automatic detection

of crop rows based on multi-ROIs. Expert Systems with

Applications, 42(5), 2429–2441.

Kanagasingham, S., Ekpanyapong, M., & Chaihan, R.

(2020). Integrating machine vision-based row guidance

with GPS and compass-based routing to achieve

autonomous navigation for a rice field weeding robot.

Precision Agriculture, 21(4), 831–855.

Lan, Y., Geng, L., Li, W., Ran, W., Yin, X., & Yi, L. (2018).

Development of a robot with 3D perception for accurate

row following in vineyard. International Journal of

Precision Agricultural Aviation, 1(1), 14–21.

Lucid Vision Labs. (2022). Lucid Vision Labs.

https://thinklucid.com/

Mavridou, E., Vrochidou, E., Papakostas, G. A., Pachidis, T.,

& Kaburlasos, V. G. (2019). Machine Vision Systems in

Precision Agriculture for Crop Farming. Journal of

Imaging, 5(12), 89.

Oliveira, L. F. P., Moreira, A. P., & Silva, M. F. (2021).

Advances in Agriculture Robotics: A State-of-the-Art

Review and Challenges Ahead. Robotics, 10(2), 52.

Otsu, N. (1979). A Threshold Selection Method from Gray-

Level Histograms. IEEE Transactions on Systems, Man,

and Cybernetics, 9(1), 62–66.

Ponnambalam, V. R., Bakken, M., Moore, R. J. D., Glenn

Omholt Gjevestad, J., & Johan From, P. (2020).

Autonomous Crop Row Guidance Using Adaptive

Multi-ROI in Strawberry Fields. Sensors, 20(18), 5249.

Rakhmatulin, I., & Andreasen, C. (2020). A Concept of a

Compact and Inexpensive Device for Controlling Weeds

with Laser Beams. Agronomy, 10(10), 1616.

Redmon, J. (2013). Darknet: Open Source Neural Networks

in C. http://pjreddie.com/darknet/

Redmon, J., & Farhadi, A. (2018). YOLOv3: An Incremental

Improvement. ArXiv:1804.02767 [Cs].

Romeo, J., Pajares, G., Montalvo, M., Guerrero, J. M.,

Guijarro, M., & Ribeiro, A. (2012). Crop Row Detection

in Maize Fields Inspired on the Human Visual

Perception. The Scientific World Journal, 2012, 1–10.

Ronneberger, O., Fischer, P., & Brox, T. (2015). U-Net:

Convolutional Networks for Biomedical Image

Segmentation. ArXiv:1505.04597 [Cs].

Sarmento, J., Silva Aguiar, A., Neves dos Santos, F., &

Sousa, A. J. (2021). Autonomous Robot Visual-Only

Guidance in Agriculture Using Vanishing Point

Estimation. In G. Marreiros, F. S. Melo, N. Lau, H. Lopes

Cardoso, & L. P. Reis (Eds.), Progress in Artificial

Intelligence (Vol. 12981, pp. 3–15). Springer

International Publishing.

Shalal, N., Low, T., McCarthy, C., & Hancock, N. (2013). A

review of autonomous navigation systems in agricultural

environments.

Simon, N. A., & Min, C. H. (2020). Neural Network Based Corn Field Furrow Detection for Autonomous Navigation in Agriculture Vehicles. 2020 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS), 1–6.

Wei, C., Li, H., Shi, J., Zhao, G., Feng, H., & Quan, L. (2022). Row anchor selection classification method for early-stage crop row-following. Computers and Electronics in Agriculture, 192, 106577.

WeLASER. (2022). WeLASER Project: Eco-Innovative

weeding with laser. https://welaser-project.eu/

Winterhalter, W., Fleckenstein, F. V., Dornhege, C., &

Burgard, W. (2018). Crop Row Detection on Tiny Plants

With the Pattern Hough Transform. IEEE Robotics and

Automation Letters, 3(4), 3394–3401.

Woebbecke, D. M., Meyer, G. E., Von Bargen, K., & Mortensen, D. A. (1995). Color Indices for Weed Identification

Under Various Soil, Residue, and Lighting Conditions.

Transactions of the ASAE, 38(1), 259–269.

Zadoks, J. C., Chang, T. T., & Konzak, C. F. (1974). A

decimal code for the growth stages of cereals. Weed

Research, 14(6), 415–421.

Zhang, X., Li, X., Zhang, B., Zhou, J., Tian, G., Xiong, Y., &

Gu, B. (2018). Automated robust crop-row detection in

maize fields based on position clustering algorithm and

shortest path method. Computers and Electronics in

Agriculture, 154, 165–175.

Zhao, H., Shi, J., Qi, X., Wang, X., & Jia, J. (2017). Pyramid

Scene Parsing Network. ArXiv:1612.01105 [Cs].

Zhao, X., Tong, C., Pang, X., Wang, Z., Guo, Y., Du, F., &

Wu, R. (2012). Functional mapping of ontogeny in

flowering plants. Briefings in Bioinformatics, 13(3),

317–328.
