An Innovative Partitioning Technology for Coupled Software Modules

Bernhard Peters 1,a, Xavier Besseron 1,b, Alice Peyraut 1, Miriam Mehl 2,c and Benjamin Ueckermann 2,d

1 Institute of Computational Engineering, University of Luxembourg, 6, rue Coudenhove-Kalergi, 1359 Luxembourg, Luxembourg

2 Institute for Parallel and Distributed Systems, University of Stuttgart, Universitätsstraße 38, 70569 Stuttgart, Germany

Keywords:

Multi-physics, Software Coupling Technology, High Performance Computing.

Abstract:

Multi-physics simulation by coupling various software modules is paramount to unveiling the underlying physics and thus leads to an improved design of equipment and a more efficient operation. These simulations generally have to be carried out on small to massively parallel computers, for which highly efficient partitioning techniques are required. An innovative partitioning technology is presented that relies on a co-located partitioning of overlapping simulation domains, meaning that the overlapping areas of each simulation domain are located on one node. Thus, communication between modules is significantly reduced compared to an allocation of overlapping simulation domains on different nodes. A co-located partitioning reduces both memory and inter-process communication.

1 INTRODUCTION

Particulate materials and their thermal processing play an extremely important role in the worldwide manufacturing industry, in sectors as diverse as the pharmaceutical, processing and chemical industries, mining, construction and agricultural machinery, metal manufacturing, additive manufacturing, and renewable energy production. Particulate materials are intermediates or products of approximately 60% of the chemical industry (Ingram and Cameron, 2008).

These applications require a coupling between discrete particles, represented by the Discrete Element Method (DEM), and a fluid phase, described by Computational Fluid Dynamics (CFD), through heat, mass and momentum exchange, and thus constitute a multi-physics and multi-scale coupled application. Over the last decades, sophisticated methods and tools for surface coupling of separate parallel codes have been developed, in particular for continuum mechanical fluid-structure interaction (FSI) (Götz et al., 2010; Shunji et al., 2014; Mehl et al., 2016). Atomistic scales have been successfully coupled to continuum scales, both in concurrent (Tadmor et al., 1996; Xiao and Belytschko, 2004; Fish et al., 2007; Knap and Ortiz, 2001; Miller and Tadmor, 2002; Belytschko and Xiao, 2003; Wagner and Liu, 2003) and hierarchical (Fish, 2009; Luo et al., 2009; Bensoussan et al., 1978; Sanchez-Palencia, 1980; Li et al., 2008) modes. For very small particles, the Lagrangian point particle method (LPP) (Balachandar and Eaton, 2010) is a valid approach, while different methods have been proposed for "relatively" large particles (Deb and Tafti, 2013; Farzaneh et al., 2011; Sun and Xiao, 2015; Wu et al., 2018; Capecelatro and Desjardins, 2013). However, these methods have mainly been applied in pure fluid dynamics applications and still struggle with heat transfer (Liu et al., 2019), not to mention mass transfer, as first-principle transfer properties. Hence, coupling CFD and DEM at large scale has not been achieved and represents a challenge (Belytschko and Xiao, 2003; Yang et al., 2019) due to the highly dynamic data dependencies and the fact that particles and fluid are coupled in the whole domain instead of only across a lower-dimensional surface as in FSI.

a https://orcid.org/0000-0002-7260-3623

b https://orcid.org/0000-0002-7783-2185

c https://orcid.org/0000-0003-4594-6142

d https://orcid.org/0000-0002-1314-9969

The use of coupling libraries is a straight-forward

and promising approach to achieve robust and stable

model component and software coupling in general.

However, generic coupling libraries fail to address the

problems induced by the CFD-DEM volume coupling

efficiently:


Peters, B., Besseron, X., Peyraut, A., Mehl, M. and Ueckermann, B.

An Innovative Partitioning Technology for Coupled Software Modules.

DOI: 10.5220/0011135200003274

In Proceedings of the 12th International Conference on Simulation and Modeling Methodologies, Technologies and Applications (SIMULTECH 2022), pages 186-193

ISBN: 978-989-758-578-4; ISSN: 2184-2841

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

LIME (Belcourt et al., 2011) has its dominant applications in nuclear reactor modeling;

CASL (Kothe, 2012) and MOOSE (Gaston et al.,

2009) apply the Jacobian-free Newton Krylov (JFNK)

method to handle multi-physics applications in a

tightly coupled mode; OASIS (Valcke, 2013) and

MCT (Larson et al., 2005) are dedicated to cli-

mate modeling including earth, ocean or atmo-

sphere; the Functional Mock-up Interface (FMI) sys-

tem (Blochwitz, 2014) has been initiated by Daimler

AG to exchange simulation models between Original

Equipment Manufacturers (OEMs) and suppliers, but

does not support explicit parallelism; MpCCI (Joppich and Kürschner, 2006) is a commercial tool to

couple various simulation software, but its central-

ized design represents a bottleneck for communica-

tion. Similarly, ADVENTURE (Shunji et al., 2014)

and EMPIRE (emp, ) have limited parallel scalability.

However, high performance computing (HPC) is

a critical component to resolve the underlying multi-

physical characteristics accurately within short com-

putational times. Recent efforts achieved a scale-up

of CFD-DEM coupled simulations to many hundreds

of cores, but experience a considerable communica-

tion overhead. While some interfaces are available

for coupling Fluent and EDEM (Spogis, 2008) and

LAMMPS with OpenFOAM (Phuc et al., 2016; Foun-

dation, ), they offer very limited parallelization capa-

bilities. A parallelized coupling for pharmaceutical

applications has been undertaken between FIRE and

XPS as proprietary software (XPS, ), but is restricted

to running XPS on GPU and FIRE on CPU architectures, respectively. In (Gopalakrishnan and Tafti, 2013), the authors developed a DEM solver within their multiphase flow (CFD) solver MFIX. Their scalability study for up to 256 cores indicates an increase of communication overhead by 160% between 64 and 256 cores. (Sun and Xiao, 2016) show results for SediFoam, an ad-hoc coupling between OpenFOAM (for CFD) and LAMMPS (for DEM). Their approach is also limited by the communication overhead, which grows by 50% between executions on 128 and 512 cores. These performance issues are attributed to the large amount of data that needs to be exchanged between the CFD and DEM simulation domains due to the volume coupling.

Available massively parallel coupling libraries

are OpenPALM, CWIPI and preCICE. OpenPALM,

CWIPI (Buis et al., 2006; Piacentini et al., 2011) and

MUI (Tang et al., 2015) have been used successfully

for volume coupling (Boulet et al., 2018; de Labor-

derie et al., 2018). However, very few adapters for

single physics software are publicly available. pre-

CICE (Gatzhammer et al., 2010; Bungartz et al.,

2016) implements fully parallel coupling numerics

and point-to-point communication. While preCICE

was primarily designed for surface coupling as used

in fluid-structure interaction (FSI) (Mehl et al., 2016),

recent work has shown successful results for volume

coupling (Arya, 2020; Besseron et al., 2020).

A common drawback of the existing approaches

for partitioned volume coupling is that they are based

on the existing domain partitioning within each sin-

gle solver. This results in the above-mentioned huge

amount of communication as inter-solver communi-

cation is not considered in the target function of the

respective partitioning methods. To eliminate this is-

sue, approaches for common domain partitioning over

the entire domain of coupled solvers are required,

and should be combined with sophisticated schedul-

ing and load balancing methods within and across the

computing nodes.

First ideas to address the issue of joint partition-

ing over several solvers based on graph partition-

ers, but limited to surface coupling, have been pre-

sented in (Predari, 2016; Predari and Esnard, 2014).

Therefore, this contribution describes the so-called

co-located partitioning approach for a volume-based

coupling meaning that an exchange takes place within

the entire simulation domain as opposed to bound-

aries only. Co-located partitions result from a par-

titioning of the entire simulation domain composed

of the single-physics simulation domains. Consequently, the simulation domains of each module overlap on one node, which reduces both memory and inter-process communication.

2 METHODOLOGY

Nowadays, software for both continuum and dis-

crete mechanics has reached a degree of maturity that

makes it a valuable tool for science and engineer-

ing in the respective domains. In order to address

complex simulations in a discrete-continuous envi-

ronment, two major approaches exist for coupling

model components:

• In the monolithic approach, the equations describ-

ing multi-physics phenomena are combined in a

large overall system of equations and solved by a

single targeted solver. This requires implementing

a new simulation code for each combination. Very

often a new numerical solver has to be developed

involving a dedicated pre-conditioner for the respective large and, in general, ill-conditioned system.

• In a coupled approach, appropriately tailored

solvers for each physical domain are linked to


an overall simulation environment via suitable

communication, data mapping and numerical cou-

pling algorithms. This inherently encompasses a

large degree of flexibility by coupling a variety

of solvers. Furthermore, a more modular soft-

ware development is retained, that allows apply-

ing established and highly efficient solvers for

each physics addressed.

As outlined above, a coupling of "best-of-class" software modules is preferred over implementing additional features in an already available module. This work focuses on the coupling of three software modules: XDEM, which represents the extended discrete element method; OpenFoam, which describes the fluid dynamics; and deal.II (Arndt et al., 2021), a finite element code. Based on first principles, the data exchange between the modules covers momentum, heat and mass transfer. A common feature of the coupling strategies considered is the large overlap between the individual domains shown in Figures 5 and 8. In both cases the particle domain also covers the CFD domain, so that an exchange of momentum takes place not only on boundaries as in FSI but within the entire CFD and DEM simulation area. In particular, the dam break case with approx. $2.35 \cdot 10^{6}$ particles and $10^{7}$ CFD cells requires an intensive exchange of data between the CFD and particle domain.

In order to evaluate the momentum exchange

i.e. drag forces acting on particles, fluid density, vis-

cosity, specific heat, conductivity, and velocity com-

ponents have to be transmitted to the particle do-

main commonly referred to as scalar, vector and ten-

sor fields. Thus, each particle generates a momen-

tum source for the flow fields that requires a trans-

fer of the implicit and explicit part of the momentum

source in conjunction with the void fraction. If in ad-

dition, heat and mass transfer have to be taken into ac-

count, fluid temperature and composition referring to

species mass fractions also have to be provided. Ex-

changing this huge amount of data on massively par-

allel systems may lead to an unwanted side effect that

communication between nodes may turn out as bot-

tleneck for scalability as reported by (Gopalakrishnan

and Tafti, 2013) and (Sun and Xiao, 2016). This im-

mense communication overhead results from a ”sim-

ple” coupling of modules for which a data transfer has

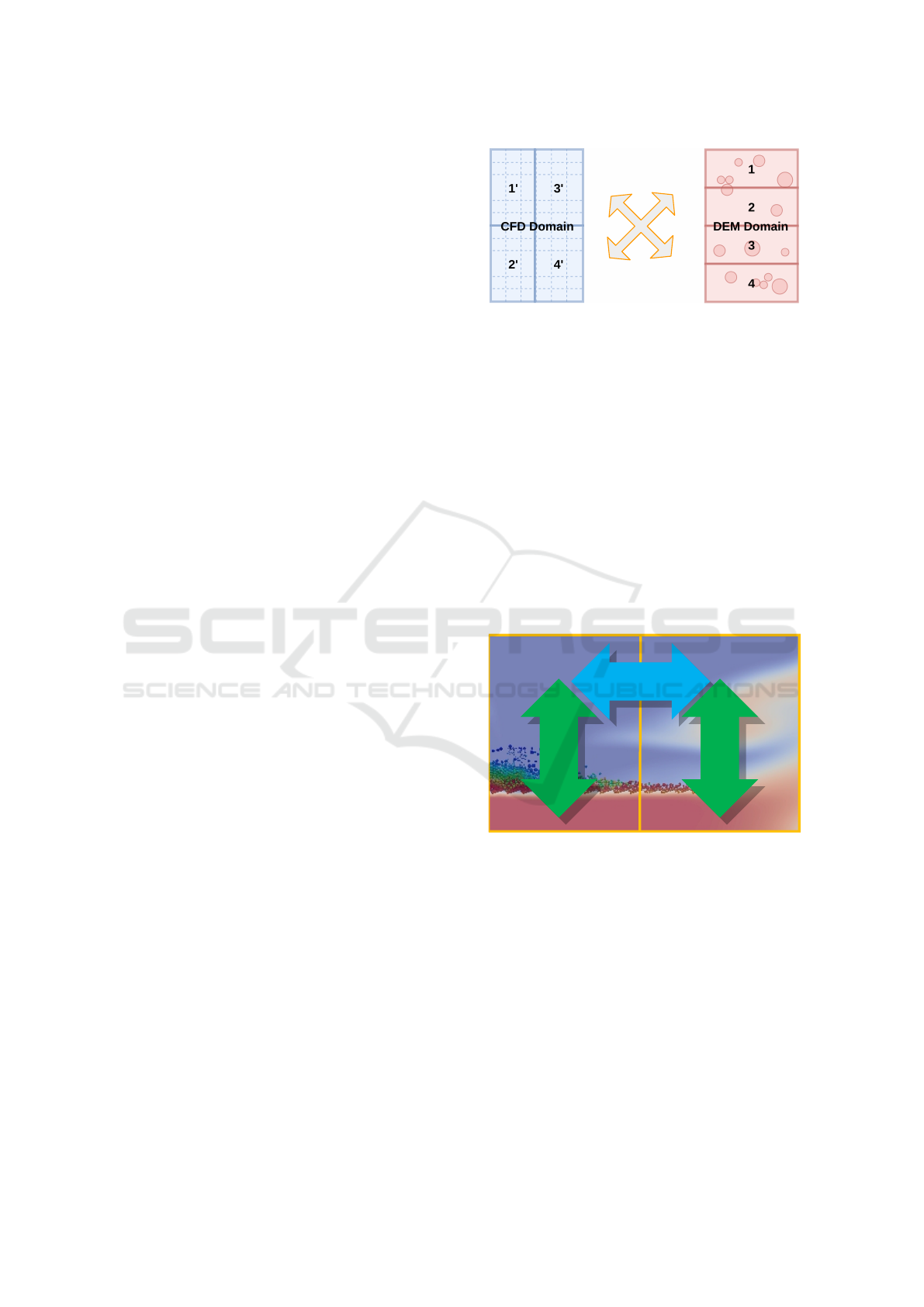

not been taken into account as is sketched in Figure 1.

Figure 1: A "simple" coupling between a CFD and DEM module usually results in a large communication overhead due to individual solver strategies.

Almost every CFD and DEM solver comes with parallelisation capabilities that allow running large cases on massively parallel systems. For this purpose, each software platform provides sophisticated partitioning techniques tailored to the particular application, allowing an efficient throughput on HPC machines. In order to reach the best performance and scalability, each software module follows its own strategy, which most of the time does not consider communication between individual modules. Hence, a constellation as shown in Figure 1 occurs, in which each solver applies its individual partitioning independently of the others; this generates a large communication overhead and might even lead to unpredictable or unphysical behaviour. However, the co-located partitioning technique (Pozzetti et al., 2018) offers a remedy to the unfavourable communication overhead and is based on the principles depicted in Figures 2 and 3.

Partition 1

Partition 2

Inter-physics

Communication

Inter-partition

Communication

Inter-physics

Communication

Figure 2: Distinction between inter-physics and inter-

partition communication ina co-located partitioning.

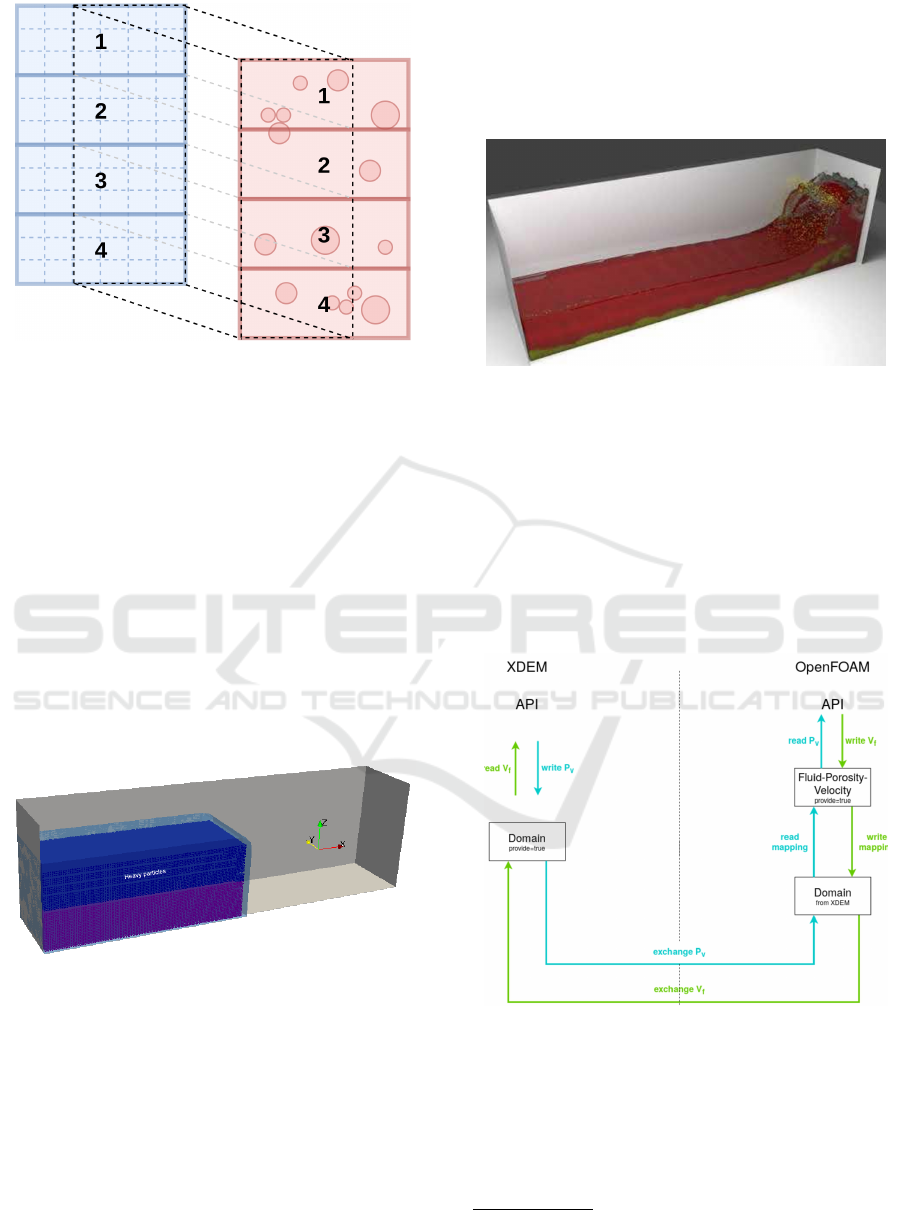

The simulation domains in Figure 2 include a flow and the motion of particles due to drag forces. An inter-physics communication takes place between the particles and the flow, meaning that the physical quantities detailed above have to be exchanged to represent the physics between particles and flow. Consequently, the partitions of the particle module and flow module have to overlap as much as possible, as depicted in Figure 3, so that the inter-physics communication is kept within the same node. In an ideal arrangement, the particle and flow partitions are identical.

Figure 3: Largely overlapping partitions for a particle and CFD domain, thus reducing communication significantly compared to a "simple" partitioning based on individual solver strategies shown in Figure 1.

Hence, the vast amount of data exchange occurs within the node for co-located partitions, and only a minimum of data has to be communicated between the partitions for both particles and flow, which leads to a significant reduction of the otherwise large communication overhead.
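The effect can be illustrated with a small, purely hypothetical experiment; it is a sketch under stated assumptions and not the partitioner used by the authors. Particles are scattered uniformly in a unit square, the CFD mesh is cut into equal slabs along x, and the particle solver is partitioned either independently (slabs along y, an assumed stand-in for "individual solver strategies") or co-located (the same slabs along x). The helper names `slab_owner` and `remote_fraction` are illustrative only.

```python
import random


def slab_owner(coordinate, n_ranks, length=1.0):
    """Rank that owns `coordinate` when [0, length) is cut into equal slabs."""
    return min(int(coordinate / length * n_ranks), n_ranks - 1)


def remote_fraction(points, cfd_owner, dem_owner):
    """Fraction of particles whose surrounding fluid data lives on another rank."""
    remote = sum(1 for p in points if cfd_owner(p) != dem_owner(p))
    return remote / len(points)


n_ranks = 4
rng = random.Random(0)  # fixed seed, illustrative data only
points = [(rng.random(), rng.random()) for _ in range(10_000)]


def cfd_by_x(p):
    """CFD partitioning: slabs along x."""
    return slab_owner(p[0], n_ranks)


def dem_by_y(p):
    """Independent DEM partitioning (assumption): slabs along y."""
    return slab_owner(p[1], n_ranks)


independent = remote_fraction(points, cfd_by_x, dem_by_y)
colocated = remote_fraction(points, cfd_by_x, cfd_by_x)  # same partition for both

print(f"independent partitioning: {independent:.2%} of particles need remote fluid data")
print(f"co-located partitioning:  {colocated:.2%} of particles need remote fluid data")
```

In this toy setting the co-located case needs no inter-physics communication across ranks at all, whereas the independent case sends most of it over the network. Real partitioners additionally have to balance load, so perfectly identical partitions are an idealisation.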

3 APPLICATIONS AND RESULTS

The co-located partitioning technology (Pozzetti et al., 2019) was applied to a dam break with debris, i.e. particles, as depicted in Figure 4, which represents the initial conditions.

Figure 4: Dam break as a benchmark including $10^{7}$ CFD cells and $2.35 \cdot 10^{6}$ particles (diagram labels: container, column of water, light particles).

A column of water contains particles, of which approximately half are lighter than water while the remaining particles are heavier than water. Initially, the heavier particles are located above the lighter particles, so that an intensive mixing and therefore relocation of particles takes place due to buoyancy forces. Thus, the heavy particles sink to the bottom while the light particles float and gather on the surface. This rearrangement of particles is superseded by the breaking water, which carries particles along due to drag forces. A snapshot of the configuration in motion is shown in Figure 5, in which particles undergo a rapid motion and the water exhibits the typical breaking wave pattern at the right wall.

Figure 5: Snapshot of a water column enriched with parti-

cles breaking at a wall.

The above-mentioned results were obtained by coupling the Extended Discrete Element Method (XDEM) (Peters, 2013)¹ and OpenFoam² as CFD solver. For detailed information on the simulation platforms, the reader is referred to the respective internet pages (see footnotes) and the links provided therein. The data exchange included the aforementioned variables and is schematically depicted in Figure 6.

Figure 6: Data exchange between XDEM and OpenFoam

labelled as 4-way-coupling.

¹ https://luxdem.uni.lu

² www.openfoamwiki.net

Data between the CFD and particle domain is communicated in both directions, with the blue line indicating data travelling from the CFD domain to the particle domain. It includes the fluid properties required to evaluate the drag forces acting on particles. These drag forces represent a momentum source for the Navier-Stokes equations solved in OpenFoam and are transferred to the CFD domain in the opposite direction, represented by green lines. These quantities are all available on a co-located partition through direct memory access and therefore do not require any communication effort. In a "simple" coupling, this information is more than likely to reside on another node, which would then require additional and non-negligible communication that is avoided altogether by the proposed co-located partitioning. Only a minimum of communication is necessary between the partitions, covering both fluid and particle properties. Hence, good scalability is achieved, as shown in Figure 7.
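As a minimal sketch of what is transferred back to the fluid solver for one CFD cell, assume a linear drag law $F_i = \beta_i (u_f - u_{p,i})$ per particle; this drag law, the coefficient `beta` and all names below are assumptions for illustration and are not taken from XDEM or OpenFoam. The reaction on the fluid per unit cell volume then splits into an explicit part, $\sum_i \beta_i u_{p,i} / V$, and an implicit coefficient, $\sum_i \beta_i / V$, that multiplies the unknown fluid velocity, while the void fraction follows from the particle volumes.

```python
import math
from dataclasses import dataclass


@dataclass
class Particle:
    diameter: float   # m
    velocity: tuple   # (ux, uy, uz) in m/s
    beta: float       # hypothetical linear drag coefficient in kg/s


def cell_coupling_terms(particles, cell_volume):
    """Return (void fraction, implicit coefficient, explicit source) for one cell."""
    solid_volume = sum(math.pi / 6.0 * p.diameter ** 3 for p in particles)
    void_fraction = 1.0 - solid_volume / cell_volume
    implicit_coeff = sum(p.beta for p in particles) / cell_volume
    explicit_source = tuple(
        sum(p.beta * p.velocity[k] for p in particles) / cell_volume
        for k in range(3)
    )
    return void_fraction, implicit_coeff, explicit_source


def momentum_source(fluid_velocity, implicit_coeff, explicit_source):
    """Momentum source seen by the fluid: explicit part minus implicit part."""
    return tuple(
        explicit_source[k] - implicit_coeff * fluid_velocity[k] for k in range(3)
    )


# Illustrative values only: one particle in a cell of 1 m^3.
cell_particles = [Particle(diameter=0.1, velocity=(1.0, 0.0, 0.0), beta=2.0)]
eps, k_imp, s_exp = cell_coupling_terms(cell_particles, cell_volume=1.0)
source = momentum_source((3.0, 0.0, 0.0), k_imp, s_exp)
print(eps, k_imp, s_exp, source)
```

Splitting the source this way lets a fluid solver treat the velocity-dependent part implicitly, which is a common choice for stability; whether the coupled modules discussed here do exactly this is not stated in the text.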

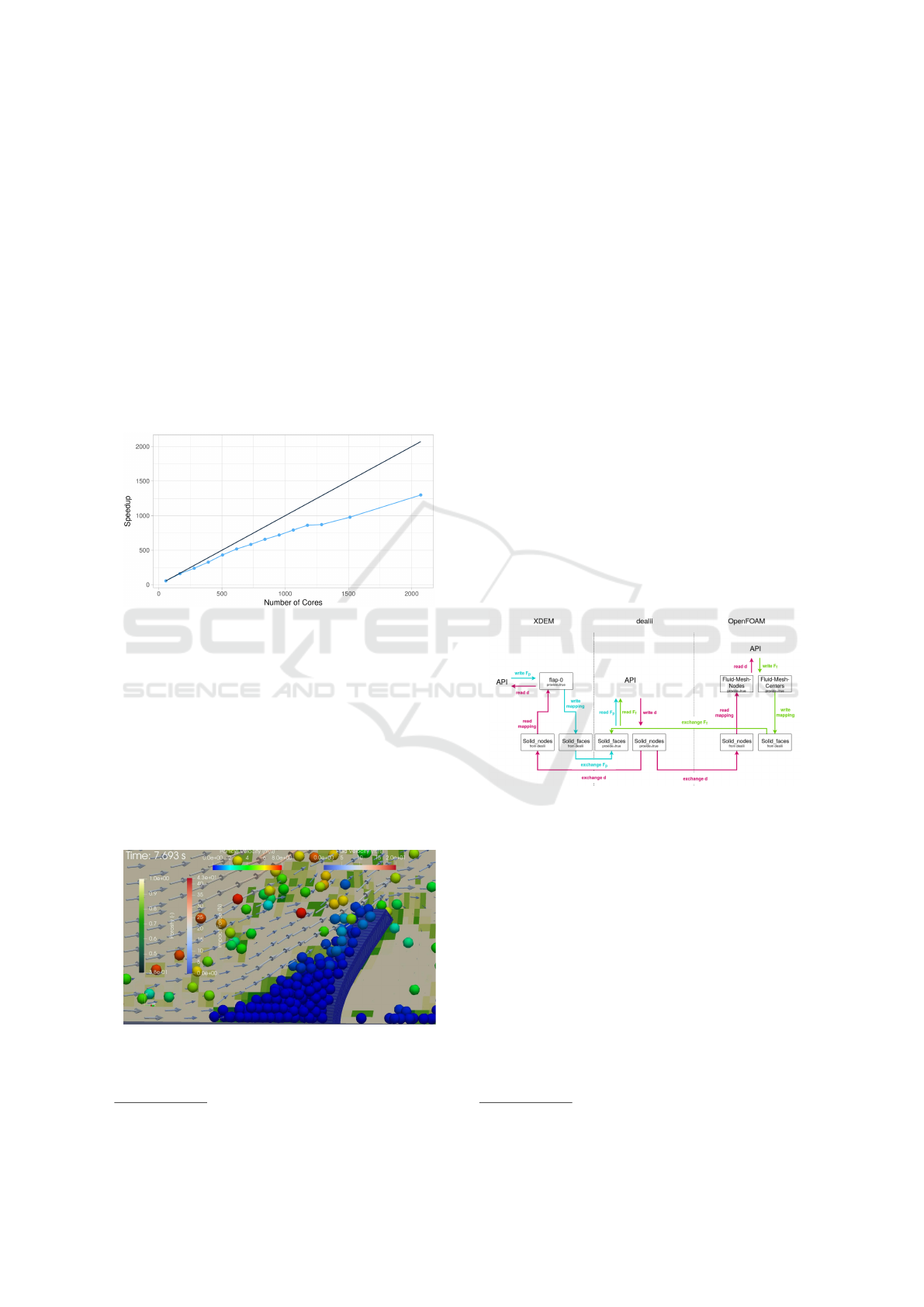

Figure 7: Speed-up versus number of cores for dam break

benchmark.

For this purpose, the dam break case was executed on the HPC cluster³ of the University of Luxembourg, which allowed using up to approx. 2000 cores. Under these initial conditions, a scalability of already 63 % was achieved, which amounts to only 2.3 % of communication overhead. This technology is equally applicable to more complex coupling scenarios, as shown in Figure 8, in which three domains, namely fluid flow, motion of particles and deformation of structures, are considered.

Figure 8: Predicted results of particle motion, flow and

deformation by the modules of XDEM, OpenFoam and

deal.II, respectively.

³ https://hpc.uni.lu/

Similarly to the dam break case, the particle domain includes particles both heavier and lighter than the fluid, thus introducing buoyancy effects in addition to drag forces. Both the particle and the fluid simulation domains interact with a flap through forces. The fluid drag exerts forces on the flap, which in turn causes the fluid to develop a recirculation zone behind the flap. Similarly, the particles generate impact forces on contacting the flap, so that the flap deforms considerably under the combined fluid and particle forces. Particles in contact with the wall are stopped and slide down the wall surface due to dominating gravity. Hence, particles pile up in front of the wall and thus have a further impact on wall deformation and fluid flow. Similarly, particles reaching the wake region of the flow behind the flap form a pile due to gravity and negligible drag forces at much reduced flow velocities. Compared to the previous dam break benchmark, a coupling of three solver domains requires a more sophisticated coupling and communication of data structures and properties. Hence, in addition to the data exchange between OpenFoam and XDEM in Figure 6, both modules exchange data, i.e. forces, with the finite element module deal.II, as depicted in Figure 9.

Figure 9: Data exchange between the modules of XDEM,

OpenFoam and deal.II labelled as 6-way-coupling.

deal.II⁴ is included as an additional simulation module alongside OpenFoam and XDEM. It is a finite element solver and predicts the deformation of the flap due to impact forces from the fluid and the particles. In addition to the aforementioned data exchange between the fluid and particle domain (blue and green lines in Figure 6), both the fluid and particle domains interact with the structural domain, i.e. the flap, and introduce further communication lines between the modules (red lines). In order to evaluate an impact between the particles and the flap structure, geometry data is exchanged that allows determining the point of contact between a particle and the flap. The contact force resulting from the impact is predicted in conjunction with the exchanged material properties of both the particle and the flap, e.g. Young's modulus and Poisson's ratio for simple linear Hooke-like material behaviour. The predicted impact force leads to deformation of the flap and, together with the other contact forces between particles and gravity, governs the trajectory of particles. Similarly, the fluid pressure on the flap's surface generates a surface load contributing to further deformation of the flap.

⁴ www.dealii.org

Hence, a well-coordinated and efficient data transfer between simulation domains allows investigating complex applications that are multi-physics and multi-scale in space and time. An analysis of predicted coupled results unveils the underlying physics acting inside and between the domains. It provides an insight of unprecedented quality and is the key to an improved design of equipment and more efficient operation. The communication pattern, however, grows rapidly with each added module and therefore requires an additional framework to coordinate the communication lines correctly between the solvers. Additionally, data has to be exchanged at consistent times during iterations so that conservation principles are obeyed and physical laws are satisfied.
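One way to get a feeling for this growth is to count directed exchange channels. The sketch below assumes, purely for illustration, that every pair of modules exchanges data in both directions; this need not hold for every coupling scenario. Under that assumption, n modules require n(n-1) channels. The fourth module name is a hypothetical placeholder.

```python
from itertools import permutations


def exchange_channels(modules):
    """All directed (sender, receiver) pairs between distinct modules."""
    return list(permutations(modules, 2))


three = exchange_channels(["XDEM", "OpenFoam", "deal.II"])
four = exchange_channels(["XDEM", "OpenFoam", "deal.II", "hypothetical-EM-solver"])

for sender, receiver in three:
    print(f"{sender} -> {receiver}")
print(len(three), "channels for three modules;", len(four), "for four")
```

Each channel additionally needs its own data mapping and a consistent exchange time, which is why a coordinating framework becomes attractive as modules are added.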

4 CONCLUSIONS

The demand for multi-physics applications, be it multi-phase reacting flows as addressed in the current contribution or, in addition, fluid dynamics under electro-magnetic influence such as magneto-hydrodynamics or electro-/magneto-rheological fluids (ERFs/MRFs), has grown substantially. A multi-physics simulation environment is achieved through two major concepts:

• Monolithic concept: The equations describing

multi-physics phenomena are solved simultane-

ously by a single solver producing a complete so-

lution.

• Staggered concept: The equations describing

multi-physics phenomena are solved sequentially

by appropriately tailored and distinct solvers,

passing the results of one analysis as a load to the

next.

The former concept requires a solver that includes a combination of all physical problems involved and therefore requires a large implementation effort. Moreover, there exist scenarios for which it is difficult to arrange the coefficients of the combined differential equations in one matrix. A staggered concept, as a coupling between a number of solvers representing individual aspects of physics, offers distinctive advantages over a monolithic concept. It inherently encompasses a large degree of flexibility by coupling an almost arbitrary number of solvers. Furthermore, a more modular software development is retained that allows far more specific solver techniques adequate to the problems addressed. However, partitioned simulations require stable and accurate coupling algorithms that convince by their pervasive character.
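The staggered concept can be sketched with two toy "solvers", each owning one unknown and taking the other's latest result as input. The linear relations below are invented for illustration and stand in for full single-physics solvers; the loop repeats the sequential hand-over until the exchanged values stop changing.

```python
def solve_a(y):
    """Toy solver A: computes its unknown x from B's latest result y."""
    return 0.5 * y + 1.0


def solve_b(x):
    """Toy solver B: computes its unknown y from A's latest result x."""
    return 0.5 * x + 2.0


def staggered_coupling(x0=0.0, y0=0.0, tol=1e-10, max_iter=100):
    """Run A then B in sequence until the coupled values converge."""
    x, y = x0, y0
    for iteration in range(1, max_iter + 1):
        x_new = solve_a(y)        # A uses the previous result of B
        y_new = solve_b(x_new)    # B uses the fresh result of A
        change = max(abs(x_new - x), abs(y_new - y))
        x, y = x_new, y_new
        if change < tol:
            return x, y, iteration
    raise RuntimeError("coupling iteration did not converge")


x, y, n_iter = staggered_coupling()
print(x, y, n_iter)
```

A monolithic treatment of the same toy problem would instead solve both equations as one 2x2 linear system. The staggered loop converges here because the coupling is weak; strongly coupled problems may need relaxation or more elaborate coupling numerics.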

The exchange of data between different solvers requires a careful coordination and a complex feedback loop so that the coupled analysis converges to an accurate solution. This is performed by coupling algorithms between the Discrete Particle Method, the Finite Volume Method, e.g. for Computational Fluid Dynamics, and the Finite Element Method, e.g. for structural engineering, for which two fundamental concepts are employed:

• An exchange of data at the boundaries between

discrete and continuous domains which represents

at the point of contact a transfer of forces or fluxes

such as heat or electrical charge.

• An exchange of data from particles submerged in

the continuous phases into the continuous domain

by volumetric sources.

The former defines additional boundary condi-

tions, while the latter coupling appears as source

terms in the relevant partial differential equations.

Hence, applying appropriate boundary conditions and

volumetric sources for the discrete and continuous

domain furnishes a consistent and effective coupling

mechanism.

However, the latter exchange of data crucially impacts parallel performance. A data exchange over the entire simulation domain not only constitutes a huge amount of data transfer, it also generates a large communication effort. In order to reduce the communication to a minimum, a co-located partition strategy for coupling a number of solver modules has been proposed. This strategy places the partitions of the solvers on the same node so that the inter-physics exchange of data between the solvers occurs locally without inter-node communication. The latter is reduced to the minimum necessary to maintain the required communication between nodes.

Hence, inter-physics communication that otherwise has to travel between nodes is reduced significantly, which is a fundamental step toward large-scale computation in a high performance computing environment with hundreds of computing processes. Consequently, rather than carrying out the partitioning of the simulation domains by each solver independently and following its own requirements for performance, an overall partitioning strategy has to be applied. This includes dedicated partitioning algorithms that identify a trade-off between communication requirements within the simulation domains and load-balancing so that the overall execution time is minimised.
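Such a trade-off can be expressed with a very simple cost model, given here only as a hedged sketch: the time of one step is taken as that of the slowest rank, and each rank's time as its compute load plus a penalty per unit of communicated data. The model, its two cost factors and the candidate numbers are hypothetical and not measured values.

```python
def estimated_step_time(loads, comm_volumes, time_per_work=1.0, time_per_unit=0.01):
    """Step time of the slowest rank: compute cost plus communication cost."""
    return max(
        time_per_work * work + time_per_unit * comm
        for work, comm in zip(loads, comm_volumes)
    )


def best_partition(candidates, **cost_factors):
    """Name of the candidate partitioning with the smallest estimated step time."""
    return min(
        candidates,
        key=lambda name: estimated_step_time(*candidates[name], **cost_factors),
    )


# Illustrative candidates: (work per rank, communicated data per rank).
candidates = {
    "balanced_but_remote": ([100, 100], [5000, 5000]),
    "co_located": ([110, 90], [500, 500]),
}

choice = best_partition(candidates)
print(choice, estimated_step_time(*candidates[choice]))
```

With these made-up numbers the slightly imbalanced co-located candidate wins because its communication cost is much lower; with a cheaper network (a smaller `time_per_unit`) the balance could tip the other way.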

ACKNOWLEDGEMENTS

The authors would like to express their gratitude

to the administration, in particular Dr. S. Var-

rette, for the support while using the HPC facili-

ties of the University of Luxembourg. We thank

the Deutsche Forschungsgemeinschaft (DFG, Ger-

man Research Foundation) for supporting this work

by funding – EXC2075 – 390740016 under Ger-

many’s Excellence Strategy. Furthermore, we ac-

knowledge the support by the Stuttgart Center for

Simulation Science (SimTech).

REFERENCES

Enhanced multiphysics interface research engine.

https://github.com/DrStS/EMPIRE-Core. Accessed:

2019-03-14.

RCPE/AVL/CATRA: New Software for the Pharmaceutical

Industry. https://www.avl.com/-/rcpe-avl-catra-new-

software-for-the-pharmaceutical-industry. Accessed:

2022-01-11.

Arndt, D., Bangerth, W., Davydov, D., Heister, T., Heltai,

L., Kronbichler, M., Maier, M., Pelteret, J.-P., Tur-

cksin, B., and Wells, D. (2021). The deal.ii finite el-

ement library: Design, features, and insights. Com-

puters & Mathematics with Applications, 81:407–422.

Development and Application of Open-source Soft-

ware for Problems with Numerical PDEs.

Arya, N. (2020). Volume coupling using preCICE for an

aeroacoustic simulation. preCICE Workshop 2020.

https://www.youtube.com/watch?v=TrZD9SnG2Ts.

Balachandar, S. and Eaton, J. K. (2010). Turbulent dis-

persed multiphase flow. Annu. Rev. Fluid Mech.,

42:111 – 133.

Belcourt, N., Bartlett, R. A., Pawlowski, R., Schmidt, R., and Hooper, R. (2011). A theory manual for multi-physics code coupling in LIME. Sandia National Laboratories.

Belytschko, T. and Xiao, S. P. (2003). Coupling methods

for continuum model with molecular model. Inter-

national Journal for Multiscale Computational Engi-

neering, 1(1).

Bensoussan, A., Lions, J., and Papanicolaou, G. (1978).

Asymptotic Analysis for Periodic Structures. Stud-

ies in mathematics and its applications. North-Holland

Publishing Company.

Besseron, X., Rousset, A., Peyraut, A., and Peters,

B. (2020). 6-way coupling of DEM+CFD+FEM

with preCICE. preCICE Workshop 2020.

https://www.youtube.com/watch?v=3FP8y1Zjqns.

Blochwitz, T. (2014). Functional mock-up interface for

model exchange and co-simulation.

Boulet, L., Bénard, P., Lartigue, G., Moureau, V., Didorally, S., Chauvet, N., and Duchaine, F. (2018). Modeling of conjugate heat transfer in a kerosene/air spray flame used for aeronautical fire resistance tests. Flow, Turbulence and Combustion, 101(2):579–602.

Buis, S., Piacentini, A., and Declat, D. (2006). Palm:

A computational framework for assembling high-

performance computing applications. Concur-

rency and Computation: Practice and Experience,

18(2):231–245.

Bungartz, H.-J., Lindner, F., Gatzhammer, B., Mehl, M.,

Scheufele, K., Shukaev, A., and Uekermann, B.

(2016). precice – a fully parallel library for multi-

physics surface coupling. Computers & Fluids,

141:250–258. Advances in Fluid-Structure Interac-

tion.

Capecelatro, J. and Desjardins, O. (2013). An eu-

ler–lagrange strategy for simulating particle-laden

flows. Journal of Computational Physics, 238:1–31.

de Laborderie, J., Duchaine, F., Gicquel, L., Vermorel, O.,

Wang, G., and Moreau, S. (2018). Numerical analysis

of a high-order unstructured overset grid method for

compressible les of turbomachinery. Journal of Com-

putational Physics, 363:371 – 398.

Deb, S. and Tafti, D. K. (2013). A novel two-grid formu-

lation for fluid–particle systems using the discrete el-

ement method. Powder Technology, 246:601–616.

Farzaneh, M., Sasic, S., Almstedt, A.-E., Johnsson, F., and Pallarès, D. (2011). A novel multigrid technique for lagrangian modeling of fuel mixing in fluidized beds. Chemical Engineering Science, 66(22):5628–5637.

Fish, J. (2009). Multiscale Methods: Bridging the Scales

in Science and Engineering. Oxford University Press,

Inc., New York, NY, USA.

Fish, J., Nuggehally, M. A., Shephard, M. S., Picu, C. R.,

Badia, S., Parks, M. L., and Gunzburger, M. (2007).

Concurrent AtC coupling based on a blend of the con-

tinuum stress and the atomistic force. Computer Meth-

ods in Applied Mechanics and Engineering, (45–48).

Foundation, T. O. Openfoam v6 user guide.

https://cfd.direct/openfoam/user-guide. Accessed:

2019-03-14.

Gaston, D., Newman, C., Hansen, G., and Lebrun-Grandié, D. (2009). Moose: A parallel computational framework for coupled systems of nonlinear equations. Nuclear Engineering and Design, 239(10):1768 – 1778.

Gatzhammer, B., Mehl, M., and Neckel, T. (2010). A cou-

pling environment for partitioned multiphysics simu-

lations applied to fluid-structure interaction scenarios.

Procedia Comput. Sci., 1(1).

Gopalakrishnan, P. and Tafti, D. (2013). Development of

parallel dem for the open source code mfix. Powder

Technology, 235:33 – 41.

Götz, J., Iglberger, K., Stürmer, M., and Rüde, U. (2010). Direct numerical simulation of particulate flows on 294912 processor cores. In 2010 ACM/IEEE International Conference for High Performance Computing, Networking, Storage and Analysis, pages 1–11.

Ingram, G. D. and Cameron, I. T. (2008). Challenges in

multiscale modelling and its application to granulation

systems.

Joppich, W. and Kürschner, M. (2006). MpCCI - a tool for the simulation of coupled applications. Concurrency and Computation: Practice and Experience, 18(2):183–192.

Knap, J. and Ortiz, M. (2001). An analysis of the quasicon-

tinuum method. Journal of the Mechanics and Physics

of Solids. The JW Hutchinson and JR Rice 60th An-

niversary Issue.

Kothe, D. (2012). Consortium for advanced simulation of

light water reactors (CASL). http://www.casl.gov.

Larson, J., Jacob, R., and Ong, E. (2005). The model

coupling toolkit: A new Fortran90 toolkit for building multiphysics parallel coupled models. International Journal of High Performance Computing Applications, 19(3):277–292.

Li, A., Li, R., and Fish, J. (2008). Generalized mathematical

homogenization: From theory to practice. Computer

Methods in Applied Mechanics and Engineering, 197(41):3225–3248. Recent Advances in Computational Study of Nanostructures.

Liu, K., Lakhote, M., and Balachandar, S. (2019). Self-

induced temperature correction for inter-phase heat

transfer in Euler-Lagrange point-particle simulation.

Journal of Computational Physics, 396:596–615.

Luo, X., Stylianopoulos, T., Barocas, V. H., and Shephard,

M. S. (2009). Multiscale computation for bioartifi-

cial soft tissues with complex geometries. Engineer-

ing with Computers, 25(1):87–96.

Mehl, M., Uekermann, B., Bijl, H., Blom, D., Gatzhammer,

B., and van Zuijlen, A. (2016). Parallel coupling nu-

merics for partitioned fluid–structure interaction sim-

ulations. Comp. & Mathematics with Applications.

Miller, R. E. and Tadmor, E. (2002). The quasicontin-

uum method: Overview, applications and current di-

rections. Journal of Computer-Aided Materials De-

sign, 9(3):203–239.

Peters, B. (2013). The extended discrete element method

(XDEM) for multi-physics applications. Scholarly

Journal of Engineering Research, 2:1–20.

Phuc, P. V., Chiba, S., and Minami, K. (2016). Large scale

transient CFD simulations for buildings using Open-

FOAM on a world’s top-class supercomputer. In 4th

OpenFOAM User Conference 2016.

Piacentini, A., Morel, T., and Thevenin, A. (2011). O-

PALM: An open source dynamic parallel coupler. In

Coupled problems 2011, Kos Island, Greece.

Pozzetti, G., Besseron, X., Rousset, A., and Peters, B.

(2018). A co-located partitions strategy for parallel

CFD–DEM couplings. Advanced Powder Technology,

29(12):3220–3232.

Pozzetti, G., Jasak, H., Besseron, X., Rousset, A., and Peters, B. (2019). A parallel dual-grid multiscale approach to CFD–DEM couplings. Journal of Computational Physics, 378:708–722.

Predari, M. (2016). Load Balancing for Parallel Coupled

Simulations. Theses, Université de Bordeaux, LaBRI; Inria Bordeaux Sud-Ouest.

Predari, M. and Esnard, A. (2014). Coupling-Aware Graph

Partitioning Algorithms: Preliminary Study. In IEEE

Int. Conf. on High Performance Computing (HiPC

2014), Goa, India.

Sanchez-Palencia, E. (1980). Non-Homogeneous Media

and Vibration Theory. 0075-8450. Springer-Verlag

Berlin Heidelberg, 1st edition.

Shunji, K., Satsuki, M., Hiroshi, K., Tomonori, Y., and Shi-

nobu, Y. (2014). A parallel iterative partitioned cou-

pling analysis system for large-scale acoustic fluid–

structure interactions. Computational Mechanics,

53(6):1299–1310.

Spogis, N. (2008). Multiphase modeling using EDEM–

CFD coupling for FLUENT. CFD OIL.

Sun, R. and Xiao, H. (2015). Diffusion-based coarse grain-

ing in hybrid continuum–discrete solvers: Theoretical

formulation and a priori tests. International Journal

of Multiphase Flow, 77:142–157.

Sun, R. and Xiao, H. (2016). SediFoam: A general-purpose, open-source CFD–DEM solver for particle-laden flow with emphasis on sediment transport. Computers & Geosciences, 89:207–219.

Tadmor, E. B., Ortiz, M., and Phillips, R. (1996). Quasi-

continuum analysis of defects in solids. Philos. Mag.

A.

Tang, Y.-H., Kudo, S., Bian, X., Li, Z., and Karni-

adakis, G. E. (2015). Multiscale universal interface:

A concurrent framework for coupling heterogeneous

solvers. Journal of Computational Physics, 297:13–

31.

Valcke, S. (2013). The OASIS3 coupler: a European climate

modelling community software. Geoscientific Model

Development, 6(2):373–388.

Wagner, G. J. and Liu, W. K. (2003). Coupling of atom-

istic and continuum simulations using a bridging scale

decomposition. Journal of Computational Physics,

190(1):249–274.

Wu, H., Gui, N., Yang, X., Tu, J., and Jiang, S. (2018). A

smoothed void fraction method for CFD-DEM simulation of packed pebble beds with particle thermal radiation. International Journal of Heat and Mass Transfer, 118:275–288.

Xiao, S. and Belytschko, T. (2004). A bridging domain

method for coupling continua with molecular dynamics. Computer Methods in Applied Mechanics and Engineering, 193(17–20):1645–1669. Multiple Scale

Methods for Nanoscale Mechanics and Materials.

Yang, Q., Cheng, K., Wang, Y., and Ahmad, M. (2019).

Improvement of semi-resolved CFD-DEM model for

seepage-induced fine-particle migration: Eliminate

limitation on mesh refinement. Computers and

Geotechnics, 110:1–18.
