Attention! Transformer with Sentiment on Cryptocurrencies Price Prediction

Huali Zhao, Martin Crane and Marija Bezbradica

School of Computing, Dublin City University, Dublin, Ireland

Keywords:

Transformer, Attention, Sentiment Analysis, Cryptocurrency Prediction.

Abstract:

Cryptocurrencies have attracted considerable attention as an investment tool in recent years, and research on cryptocurrency price prediction has grown as prices have surged. Classical models and recurrent neural networks have been applied to forecast these time series. However, there remains limited research on how well the Transformer forecasts cryptocurrency price data. This paper investigates the forecasting capability of the Transformer model on Bitcoin (BTC) and Ethereum (ETH) price data, which are highly volatile time series. A long short-term memory (LSTM) model is used for performance comparison. The results show that LSTM performs better than the Transformer on both BTC and ETH price prediction. Furthermore, we investigate whether sentiment analysis can improve the models’ performance in forecasting future prices. Twitter data and the Valence Aware Dictionary and sEntiment Reasoner (VADER) are used to obtain sentiment scores. The results show that sentiment analysis improves the Transformer model’s performance on BTC price but not on ETH price. For the LSTM model, sentiment analysis does not help the prediction results. Finally, this paper also shows that transfer learning can improve the Transformer’s prediction ability on ETH price data.

1 INTRODUCTION

As a digital currency backed by cryptographic technology, cryptocurrency has secured a place in investment portfolios (PWC, 2021). In 2020, the total assets under management of crypto hedge funds globally increased to nearly US$3.8 billion from US$2 billion the previous year. Around a fifth of hedge funds (21%) are investing in digital assets, and more than 85% of those hedge funds intend to deploy more capital into the asset class by the end of 2021 (PWC, 2021). Along with increasing interest in cryptocurrencies, the amount of research on cryptocurrency price prediction is growing (Serafini et al., 2021) (Kilimci, 2020) (Raju and Tarif, 2020) (Prajapati, 2020) (Guerra et al., 2020) (Pano and Kashef, 2020). Statistical models and Recurrent Neural Network (RNN) models have been applied to Bitcoin (BTC) price data (Ji et al., 2019) (Georgoula et al., 2015) (Yi et al., 2018) (Yenidogan et al., 2018). In existing studies, the classic ARIMA model and various RNN models are the most common choices for predicting future BTC prices.

In addition to applying models to time series data, how public sentiment drives cryptocurrency prices is another popular topic. Studies have found a strong correlation between public sentiment and BTC price trends (Georgoula et al., 2015) (Serafini et al., 2021) (Kilimci, 2020) (Chen et al., 2020) (Raju and Tarif, 2020) (Prajapati, 2020).

However, among the existing cryptocurrency price prediction research, little work involves the Transformer (Vaswani et al., 2017) model. Both the long short-term memory (LSTM) model and the Transformer are powerful and efficient in Natural Language Processing (NLP). LSTM is also popular in the time series forecasting field, where the Transformer is still new. In this paper, we study how well the Transformer model performs on BTC and ETH price data. We compare the predictive performance of the LSTM and Transformer models using 6 years of BTC price data and 5 years of ETH price data. We also investigate whether sentiment data collected from Twitter can improve the Transformer and LSTM predictions.

This paper is organized as follows. In Section 2, we review related work on time series forecasting using various models along with sentiment analysis. Section 3 describes how the data are collected and prepared for this study. Section 4 gives an overview of the Transformer and how it is used in this study. Results and findings are presented in Section 5, with conclusions in Section 6.

Zhao, H., Crane, M. and Bezbradica, M.

Attention! Transformer with Sentiment on Cryptocurrencies Price Prediction.

DOI: 10.5220/0011103400003197

In Proceedings of the 7th International Conference on Complexity, Future Information Systems and Risk (COMPLEXIS 2022), pages 98-104

ISBN: 978-989-758-565-4; ISSN: 2184-5034

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 RELATED WORK

Time series modeling is widely used across domains such as geography (Hu et al., 2018) and economics (Nyoni, 2018). Applying various deep neural networks (DNN) for forecasting and pattern recognition on BTC price has been popular in recent years. Ji et al. (Ji et al., 2019) compared the forecasting capability of DNN, LSTM (Hochreiter and Schmidhuber, 1997), convolutional neural networks (CNN), and deep residual networks (ResNet) on BTC price. Although there is no overall winner, LSTM slightly outperforms the others in forecasting future BTC prices, while CNN outperforms the others in predicting the direction of price movement. Facebook has created a regression model called PROPHET, which is optimized for business forecasting tasks (Ben Letham, 2017). Yenidogan et al. (Yenidogan et al., 2018) demonstrated the success of this model in forecasting future BTC prices by comparing PROPHET with the ARIMA model. The results show that PROPHET outperforms ARIMA by 26% on $R^2$.

Sentiment has been shown to be a factor that affects future BTC prices. Guerra et al. (Guerra et al., 2020) demonstrated the correlation between BTC price and web sentiment (Twitter sentiment, Wikipedia search queries and Google search queries) using a Support Vector Machine (SVM) model. By combining the Fuzzy Transform with Google Trends data to forecast BTC price, the authors showed that web search data can help short-term BTC price prediction. Serafini et al. (Serafini et al., 2021) composed a dataset containing the daily BTC weighted price, BTC volume, Twitter sentiment and tweet volume, and applied an Auto-Regressive Integrated Moving Average with eXogenous input (ARIMAX) model and an LSTM-based RNN model to the data. They found that the linear ARIMAX model performs better than the LSTM-based RNN model on BTC price prediction. They also found that tweet sentiment, rather than tweet volume, is the most significant factor in predicting BTC price. Raju and Tarif’s research (Raju and Tarif, 2020) also used sentiment analysis, with sentiment data collected from two sources: Twitter and Reddit. By applying both the LSTM and ARIMA models to a dataset composed of BTC price data and sentiment data, the authors found that LSTM performed better on the BTC price forecasting regression task. The study also indicated that combining sentiment data from different sources can improve the prediction results. Prajapati’s research (Prajapati, 2020) compared the performance of CNN, Gated Recurrent Unit (GRU) and LSTM models on a dataset composed of BTC’s open, high, low, close and volume, Litecoin’s close and volume, ETH’s close and volume, and sentiment data from Google News and Reddit. The results show that LSTM gives the lowest root mean squared error (RMSE) in predicting BTC price.

Instead of the regression problem, Kilimci (Kilimci, 2020) focused on classifying the direction of BTC price movement. The study compared deep learning architectures (CNN, LSTM and RNN) and word embedding models (Word2Vec, GloVe and FastText) on predicting the direction of BTC price movement using Twitter sentiment data. The results show that the word embedding model FastText (Joulin et al., 2017) (Mikolov et al., 2019) achieves the best result, with 89.13% accuracy. The performance order was FastText > LSTM > CNN > RNN ≈ GloVe > Word2Vec.

Like FastText, the Transformer (Vaswani et al., 2017) is popular for NLP tasks. The Transformer is based on the multi-head attention mechanism (Vaswani et al., 2017), which allows the model to capture relationships between past tokens and the current token in NLP tasks. A time series can be treated as a sentence: each time point is a position in the sentence, and the data at that time point can be considered the word in that position. Under this assumption, the Transformer with multi-head attention can be used as a time series forecasting tool.

Li et al. (Li et al., 2019) supported this assumption. The authors implemented a model with a dual attention layer to predict public sentiment at the next time point toward three P2P companies: Yucheng Group, Kuailu Group and Zhongjin Group. Each data point in the time series was composed of the microblog post content (0-140 Chinese words), the author, the publication time, the number of fans, and the user category. LSTM, SVM, CNN and a model composed of two LSTM layers with SVM were compared with the proposed model. The results show that the proposed model with a dual attention layer performed best. The study also suggested that the Transformer can capture long-term dependencies not captured by LSTM (Li et al., 2019). The authors also proposed convolutional self-attention and sparse attention to further improve the Transformer’s performance by incorporating local context and reducing memory cost.


Inspired by the above studies, and considering the lack of studies on the Transformer’s ability to predict future cryptocurrency prices, this research explores the Transformer’s forecasting ability on BTC and ETH time series data with sentiment analysis.

3 DATA

3.1 Data Collection

For this study, 6 years of BTC price data (2015/01/01 to 2021/04/27) and 5 years of ETH price data (2016/05/09 to 2021/04/27) are collected from CoinAPI¹. The downloaded raw data contain the following fields: time period start, time period end, time open, time close, price open, price high, price low, price close, volume traded, and trades count. Each day has 8 data points at 3-hour intervals. In total, there are 18,454 BTC data points and 14,200 ETH data points.

To gather daily sentiment toward BTC and ETH from Twitter, tweets are scraped using the snscrape tool². For each day in the date range (2015/01/01 to 2021/04/27 for BTC, 2016/05/09 to 2021/04/27 for ETH), 10,000 tweets are scraped. A scraped tweet contains the tweet text, tweet id, user name, tweet language, the date and time the tweet was posted, the number of likes, and the number of retweets.

3.2 Data Preparation

3.2.1 Sentiment Analysis

In Pano and Kashef’s study (Pano and Kashef, 2020), 13 text preprocessing strategies and 4 sentiment analysis methods are compared. Applying these techniques to tweets scraped using Tweepy³, Pano and Kashef found that, for text preprocessing, splitting sentences or removing Twitter-specific tags can improve the correlation of sentiment scores with Bitcoin prices. To obtain sentiment scores, the authors employed the Valence Aware Dictionary and sEntiment Reasoner (VADER), a lexicon and rule-based sentiment analysis tool that is specifically attuned to sentiments expressed in social media⁴.

¹ https://www.coinapi.io/

² https://github.com/JustAnotherArchivist/snscrape

³ http://www.tweepy.org/

⁴ https://github.com/cjhutto/vaderSentiment

Following the above approach, in this study the sentiment score of a tweet scraped from Twitter is obtained in two steps: 1) the tweet text is cleaned by removing Twitter handles, URLs, and special characters; 2) VADER assigns a sentiment polarity score to the cleaned tweet. The polarity score is either 1 (positive), 0 (neutral) or -1 (negative). To get the sentiment score for a specific day, 10,000 tweets are scraped for that day, and the 100 most liked and most retweeted tweets are selected for sentiment analysis using VADER. The final sentiment score for the day is the average of the polarity scores of the 100 selected tweets.
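The two-step scoring and daily averaging described above can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors’ code: the cleaning regexes, ranking tweets by likes plus retweets, and mapping VADER’s compound score to 1/0/-1 with the ±0.05 thresholds suggested in the VADER documentation are all assumptions, and `compound_fn` is a hypothetical hook for whichever scorer is used.

```python
import re
from typing import Callable, Dict, List

URL_RE = re.compile(r"https?://\S+|www\.\S+")
HANDLE_RE = re.compile(r"@\w+")
SPECIAL_RE = re.compile(r"[^A-Za-z0-9\s]")  # assumption: keep only ASCII letters, digits, whitespace


def clean_tweet(text: str) -> str:
    """Step 1: remove URLs, Twitter handles and special characters."""
    text = URL_RE.sub(" ", text)
    text = HANDLE_RE.sub(" ", text)
    text = SPECIAL_RE.sub("", text)
    return " ".join(text.split())  # collapse repeated whitespace


def polarity(compound: float, threshold: float = 0.05) -> int:
    """Step 2: map a VADER-style compound score in [-1, 1] to 1, 0 or -1."""
    if compound >= threshold:
        return 1
    if compound <= -threshold:
        return -1
    return 0


def daily_sentiment(tweets: List[Dict], compound_fn: Callable[[str], float],
                    top_n: int = 100) -> float:
    """Average polarity of the day's top_n tweets, ranked by likes + retweets (assumed ranking)."""
    ranked = sorted(tweets, key=lambda t: t["likes"] + t["retweets"], reverse=True)[:top_n]
    if not ranked:
        return 0.0  # assumption: treat a day with no tweets as neutral
    scores = [polarity(compound_fn(clean_tweet(t["text"]))) for t in ranked]
    return sum(scores) / len(scores)
```

With the vaderSentiment package installed, `compound_fn` could be `lambda s: analyzer.polarity_scores(s)["compound"]`, where `analyzer = SentimentIntensityAnalyzer()` is imported from `vaderSentiment.vaderSentiment`. Stripping punctuation also removes cues such as exclamation marks that VADER uses to adjust intensity, so the cleaning step is a trade-off.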

3.2.2 Final Dataset for The Experiment

From the raw BTC and ETH price data, the following

columns are extracted respectively: time period start,

open, high, low, close (OHLC) and volume traded.

To normalize the data, MinMaxScaler from sklearn

library is applied. The calculated sentiment score for

each day is joined into the dataset to create the final

dataset for this research. The overall data processing

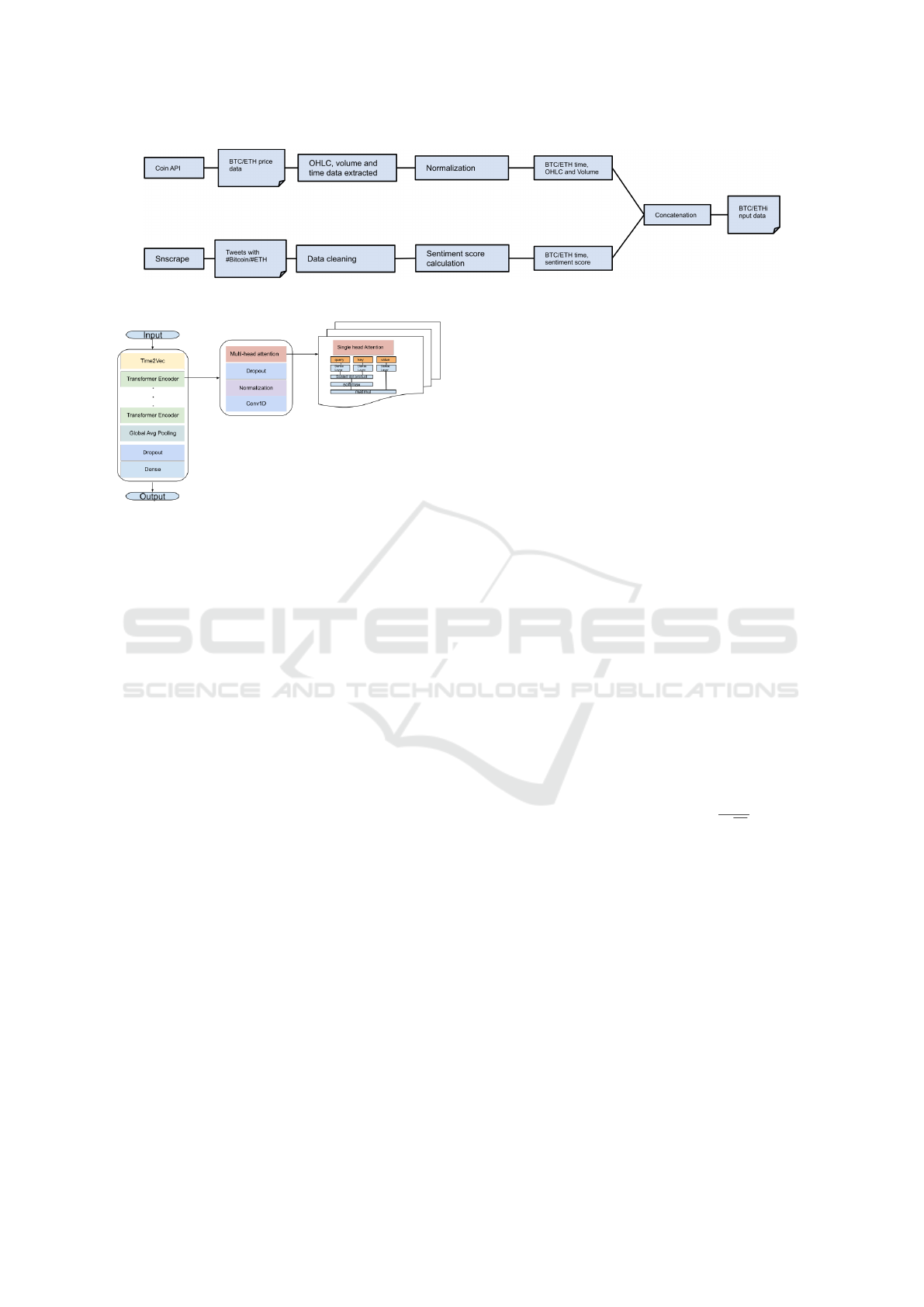

procedure illustrated in Figure 1.
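A minimal NumPy sketch of the normalization and join step is shown below, assuming ISO-formatted timestamp strings for the time period start and a `{date: score}` dictionary of daily sentiment (both hypothetical formats). It reproduces the column-wise [0, 1] scaling that sklearn’s `MinMaxScaler` performs by default, then attaches each day’s score to every 3-hour row of that day.

```python
import numpy as np


def minmax_fit(X):
    """Column-wise minimum and range, as MinMaxScaler(feature_range=(0, 1)) computes."""
    X = np.asarray(X, dtype=float)
    lo = X.min(axis=0)
    span = X.max(axis=0) - lo
    span[span == 0] = 1.0  # constant column: avoid division by zero
    return lo, span


def minmax_transform(X, lo, span):
    """Scale each column to [0, 1] using statistics from minmax_fit."""
    return (np.asarray(X, dtype=float) - lo) / span


def join_daily_sentiment(timestamps, features, daily_score, default=0.0):
    """Append the sentiment score of each row's calendar day as an extra column.

    timestamps:  ISO strings such as '2021-04-27T03:00:00' (assumed format).
    daily_score: dict mapping 'YYYY-MM-DD' to that day's sentiment score.
    default:     value for days without a score (an assumption).
    """
    days = [ts[:10] for ts in timestamps]
    sentiment = np.array([daily_score.get(d, default) for d in days], dtype=float)
    return np.hstack([np.asarray(features, dtype=float), sentiment[:, None]])
```

Which rows the scaler is fitted on is left to the caller; fitting on the training rows only is a common way to avoid leaking the test-set range into training.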

The processed dataset is split into training and test datasets with a ratio of 80:20. The sliding window method is applied so that the model can learn the relationships between consecutive data points in the time series. The window size is set to 240 data points (240 three-hour intervals, i.e. 30 days), and the corresponding output is the 241st data point.
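The split and sliding-window construction can be sketched with NumPy as below. This is an illustrative sketch: the chronological (unshuffled) split, splitting before windowing, and predicting the close column (`target_col=3`, assuming columns ordered open, high, low, close, ...) are assumptions rather than details given in the text.

```python
import numpy as np


def chronological_split(data, train_frac=0.8):
    """Split a time-ordered array without shuffling (80:20 by default)."""
    cut = int(len(data) * train_frac)
    return data[:cut], data[cut:]


def make_windows(data, window=240, target_col=3):
    """Turn a (T, F) array into (T - window) input windows and next-step targets.

    Each input is `window` consecutive rows; its target is column `target_col`
    of the following row, i.e. the 241st point when window == 240.
    """
    data = np.asarray(data, dtype=float)
    n = len(data) - window
    if n <= 0:
        raise ValueError("series is shorter than the window")
    X = np.stack([data[i:i + window] for i in range(n)])  # (n, window, F)
    y = data[window:, target_col]                          # (n,)
    return X, y
```

Each split must contain more than 240 rows to yield at least one window; at 3-hour resolution a window covers 30 days of history.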

4 METHODOLOGY

4.1 Overview

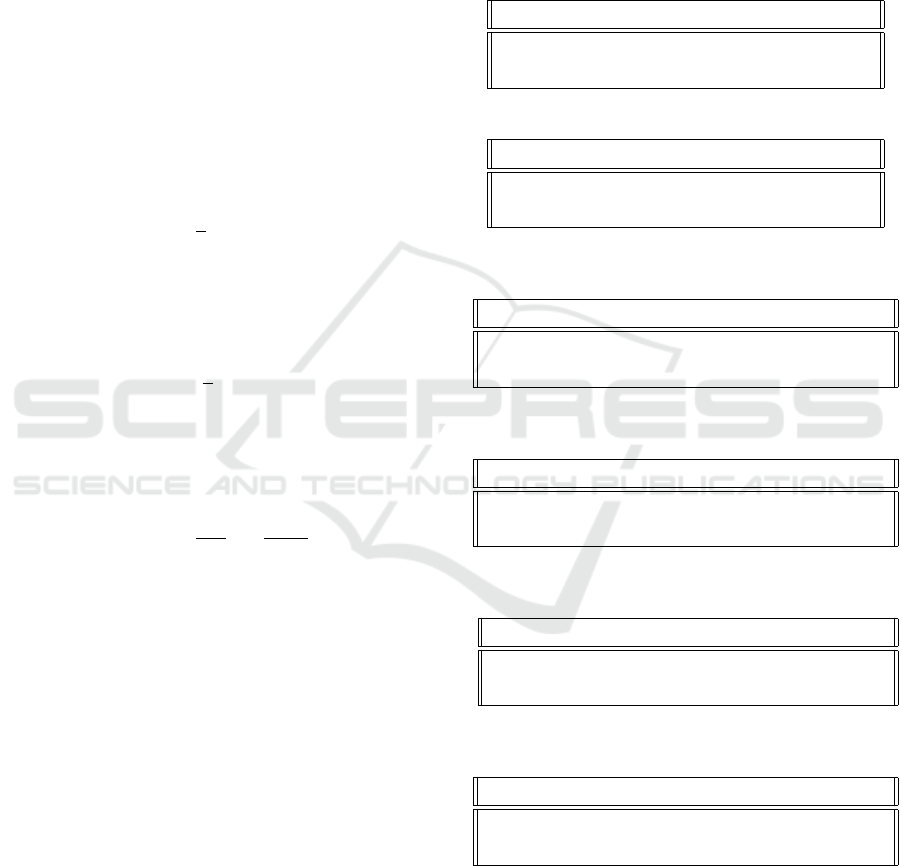

The design of the Transformer is shown in Figure 2. The architecture starts with a Time2Vec layer that adds time embedding features to the input matrix. This is followed by a stack of 6 Transformer encoder layers, each containing a multi-head attention layer. Every multi-head attention layer is composed of 8 single-head attention layers. The final output is produced by passing the output of the stacked Transformer encoder layers through a global average pooling layer, a dropout layer and a dense layer.
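The final stage described above (global average pooling, dropout, dense) can be illustrated with a small NumPy forward pass for one encoded window. This is a sketch, not the paper’s implementation: the encoder stack itself is omitted, the weights are placeholders rather than trained values, the dropout rate is a hypothetical value, and a linear output activation is assumed.

```python
import numpy as np


def output_head(encoded, W, b, dropout_rate=0.1, training=False, rng=None):
    """Map encoder output of shape (T, d) to a prediction of shape (out,).

    encoded: output of the stacked encoder layers for one window.
    W, b:    dense-layer weights (d, out) and bias (out,).
    """
    pooled = encoded.mean(axis=0)  # global average pooling over the T time steps
    if training and dropout_rate > 0:
        if rng is None:
            rng = np.random.default_rng()
        keep = rng.random(pooled.shape) >= dropout_rate
        pooled = pooled * keep / (1.0 - dropout_rate)  # inverted dropout
    return pooled @ W + b  # dense layer with (assumed) linear activation
```

At inference time dropout is a no-op, so the prediction is simply a linear function of the time-averaged encoder output.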

4.2 Model Design

4.2.1 Time Embedding - Position Encoding for Time Series

Positional encoding is required for the Transformer to understand the absolute or relative position of input words when the model is applied to NLP tasks. With time series inputs, time is arguably as essential as word position is for NLP: the model needs to understand both the periodic and non-periodic information in the dataset. Inspired by positional encoding, Kazemi et al. (Kazemi et al., 2019) created a vector representation of time called Time2Vec. This method is described as “a model-agnostic vector representation for time” in Kazemi’s paper, and it can potentially be used as an extra layer in any architecture (Kazemi et al., 2019). The idea behind Time2Vec is that a time representation should capture both periodic and non-periodic patterns and should be invariant to time rescaling. Mathematically, Time2Vec can be represented by Equation 1 (Kazemi et al., 2019). Here, $\omega_i \tau + \phi_i$ is a linear function that represents the non-periodic components of the time series, where $\omega_i$ is the slope and $\phi_i$ is the intercept. $f(\omega_i \tau + \phi_i)$ is the periodic part, where $f$ is a sine function that helps capture periodic behaviors without the need for feature engineering (Kazemi et al., 2019).

Figure 1: How data is scraped and processed.

Figure 2: The overall system design of the Transformer for cryptocurrency price prediction.

$$
\mathrm{t2v}(\tau)[i] =
\begin{cases}
\omega_i \tau + \phi_i, & \text{if } i = 0 \\
f(\omega_i \tau + \phi_i), & \text{if } 1 \le i \le k
\end{cases}
\tag{1}
$$

When the input data pass through this Time2Vec layer, two sets of features are calculated: periodic and non-periodic. These features are concatenated with the input data to produce a new input matrix that is fed to the Transformer model.
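Equation 1 and the concatenation step can be sketched in NumPy as follows. In the trained model $\omega$ and $\phi$ are learned parameters; here they are passed in directly. Taking $\tau$ as the mean of the input features at each time step is an assumption (the text does not say what $\tau$ is); with $k = 1$ the layer yields exactly two features, one non-periodic and one periodic, as described above.

```python
import numpy as np


def time2vec(tau, omega, phi):
    """Equation 1 for a 1-D array of time values tau.

    omega, phi: arrays of length k + 1 (slopes and intercepts).
    Returns shape (len(tau), k + 1): column 0 is the linear term,
    columns 1..k are sin(omega_i * tau + phi_i).
    """
    tau = np.asarray(tau, dtype=float)[:, None]                       # (T, 1)
    z = tau * np.asarray(omega)[None, :] + np.asarray(phi)[None, :]  # (T, k + 1)
    features = np.sin(z)        # periodic part, f = sin
    features[:, 0] = z[:, 0]    # i = 0: non-periodic linear part
    return features


def add_time_features(window, omega, phi):
    """Concatenate Time2Vec features onto a (T, F) input window -> (T, F + k + 1)."""
    tau = window.mean(axis=1)  # assumption: tau = mean of the features at each step
    return np.concatenate([window, time2vec(tau, omega, phi)], axis=1)
```

Because the sine terms are bounded and the linear term is not, the two features let later layers separate repeating behavior from trend.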

4.2.2 Single-head Attention - Transformer’s Self & Scaled Dot-Product Attention

An attention function can be described as mapping a query and a set of key-value pairs to an output, where the query, keys, values, and output are all vectors (Vaswani et al., 2017). The query is calculated from the current token, and the keys and values are calculated from past tokens. The query is compared with the keys to obtain attention weights for the values, and these weights sum to 1.

The attention mechanism first creates three vectors, Q (query), K (key) and V (value), for each word embedding by multiplying the input embedding $x$ with three matrices $W^Q$, $W^K$ and $W^V$ (Equations 2, 3 and 4). It then calculates a score for each word in the input sentence as the dot product of its query with the keys, divides the scores by the square root of the key dimension $d_k$, applies softmax to the scaled scores, and finally multiplies the V vectors by the softmax weights (Equation 5). Scaling the dot products helps produce more stable gradients, and the softmax function ensures that the weights at each input sequence position add up to 1.

$$Q = xW^Q \tag{2}$$

$$K = xW^K \tag{3}$$

$$V = xW^V \tag{4}$$

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V \tag{5}$$
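Equations 2 to 5 translate directly into a few lines of NumPy. The sketch below handles a single unbatched sequence with no masking; the weight matrices passed in stand for the learned $W^Q$, $W^K$ and $W^V$ and are random placeholders in the example.

```python
import numpy as np


def softmax(z, axis=-1):
    """Numerically stable softmax: subtracting the row max does not change the result."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V):
    """Equation 5 for Q of shape (T_q, d_k), K of shape (T_k, d_k), V of shape (T_k, d_v)."""
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # (T_q, T_k) scaled dot products
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights


def single_head_attention(x, W_q, W_k, W_v):
    """Self-attention over one input matrix x of shape (T, d_model)."""
    Q, K, V = x @ W_q, x @ W_k, x @ W_v  # Equations 2-4
    return scaled_dot_product_attention(Q, K, V)
```

A handy sanity check: when all keys are identical, every score in a row is equal, so the softmax weights are uniform and the output is simply the mean of the value vectors.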

4.2.3 Multi-head Attention

Multi-head attention concatenates the outputs of $h$ single-head attention layers. Q, K and V are linearly projected $h$ times, and an attention function is applied to each projected version of Q, K and V in parallel. The $h$ outputs are concatenated and linearly projected to obtain the final value (Equations 6 and 7). Multi-head attention increases the model’s ability to focus on different parts of the input sequence by jointly attending to information from different representation subspaces.


$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)W^O \tag{6}$$

where

$$\mathrm{head}_i = \mathrm{Attention}(QW_i^Q, KW_i^K, VW_i^V) \tag{7}$$
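A NumPy sketch of Equations 6 and 7 for self-attention ($Q = K = V = x$), using $h = 8$ heads as in the proposed model. The per-head size `d_head` and the random initialization in the hypothetical `init_params` helper are illustrative assumptions; a trained layer would learn these matrices.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def attention(Q, K, V):
    """Scaled dot-product attention (Equation 5)."""
    return softmax(Q @ K.T / np.sqrt(K.shape[-1])) @ V


def multi_head_attention(Q, K, V, W_q, W_k, W_v, W_o):
    """Equations 6-7. W_q, W_k, W_v are lists of h per-head projection matrices."""
    heads = [attention(Q @ wq, K @ wk, V @ wv)   # head_i (Equation 7)
             for wq, wk, wv in zip(W_q, W_k, W_v)]
    return np.concatenate(heads, axis=-1) @ W_o  # Concat(...) W^O (Equation 6)


def init_params(d_model, h, d_head, rng):
    """Random placeholder weights: h projections each for Q, K, V plus the output matrix."""
    def proj():
        return [rng.normal(scale=d_model ** -0.5, size=(d_model, d_head)) for _ in range(h)]
    W_o = rng.normal(scale=(h * d_head) ** -0.5, size=(h * d_head, d_model))
    return proj(), proj(), proj(), W_o
```

With a single head and an identity output projection, the function reduces exactly to single-head attention, which makes the relationship between Equations 5 and 6 easy to check.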

5 RESULTS

5.1 Metrics

Mean Squared Error (MSE), Mean Absolute Error (MAE) and Mean Absolute Percentage Error (MAPE) are selected as metrics for model accuracy.

MSE is the average of the squared differences between predicted and actual values, i.e. the mean of the squared residuals (Equation 8). Because errors are squared, a single large outlier can change its value drastically.

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}(y_i - \hat{y}_i)^2 \tag{8}$$

MAE is the average of the absolute differences between predicted and actual values (Equation 9), i.e. the average magnitude of the errors produced by a model. It is less sensitive to outliers than MSE.

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\lvert y_i - \hat{y}_i \rvert \tag{9}$$

MAPE expresses the distance between estimation and reality as a percentage (Equation 10). Compared with MAE, each error is normalized by the actual value, so MAPE is undefined when an actual value is 0.

$$\mathrm{MAPE} = \frac{100}{n}\sum_{i=1}^{n}\left\lvert \frac{y_i - \hat{y}_i}{y_i} \right\rvert \tag{10}$$
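Equations 8 to 10 can be written as the NumPy functions below. This is a sketch; raising an error when an actual value is 0 is one possible way to handle MAPE’s undefined case, not something the text prescribes.

```python
import numpy as np


def mse(y, y_hat):
    """Equation 8: mean of squared residuals."""
    y, y_hat = np.asarray(y, dtype=float), np.asarray(y_hat, dtype=float)
    return float(np.mean((y - y_hat) ** 2))


def mae(y, y_hat):
    """Equation 9: mean of absolute residuals."""
    y, y_hat = np.asarray(y, dtype=float), np.asarray(y_hat, dtype=float)
    return float(np.mean(np.abs(y - y_hat)))


def mape(y, y_hat):
    """Equation 10: mean absolute error relative to the actual value, in percent."""
    y, y_hat = np.asarray(y, dtype=float), np.asarray(y_hat, dtype=float)
    if np.any(y == 0):
        raise ValueError("MAPE is undefined when an actual value is 0")
    return float(100.0 * np.mean(np.abs((y - y_hat) / y)))
```

For example, with actual values [1, 2, 4] and predictions [1, 1, 5], MSE and MAE are both 2/3 and MAPE is 25%. Changing the last prediction to 14 raises MSE to about 33.7 but MAE only to 11/3, which illustrates MSE’s sensitivity to outliers.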

5.2 LSTM vs Transformer on BTC and ETH Prices without Sentiment Data

As Tables 1 and 2 show, the proposed Transformer model does not match LSTM on the processed BTC and ETH price data: LSTM achieves lower MSE, MAPE and MAE on both datasets. Like the research of Ji (Ji et al., 2019), Mohan (Mohan et al., 2019), and Raju (Raju and Tarif, 2020), this study also finds LSTM to be a strong model for regression problems.

5.3 Will Sentiment Scores Improve Transformer and LSTM’s Performance?

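After adding sentiment scores to the BTC data, the proposed Transformer model improves by 0.001 on MSE, 0.1228 on MAPE and 0.01465 on MAE (Table 3). Adding sentiment scores to the ETH data does not yield the same improvement: MSE and MAE increase slightly while MAPE decreases only marginally (Table 4). For the LSTM model, adding sentiment scores does not give a consistent improvement on either dataset (Tables 5 and 6). On BTC, MSE is almost unchanged while MAPE and MAE worsen slightly; on ETH, MAPE and MAE decrease but MSE rises from 0.00126 to 0.00586, which suggests that the sentiment scores introduce more outliers into the ETH future price predictions.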

Table 1: Transformer vs LSTM on BTC OHLC price.

| Model | MSE | MAPE | MAE |
|---|---|---|---|
| Transformer | 0.00137 | 0.18096 | 0.02900 |
| LSTM | 0.00033 | 0.04343 | 0.01310 |

Table 2: Transformer vs LSTM on ETH OHLC price.

| Model | MSE | MAPE | MAE |
|---|---|---|---|
| Transformer | 0.15987 | 0.66011 | 0.33890 |
| LSTM | 0.00126 | 0.06651 | 0.02931 |

Table 3: Transformer’s performance before and after adding sentiment scores in BTC data.

| Data | MSE | MAPE | MAE |
|---|---|---|---|
| BTC | 0.00137 | 0.18096 | 0.02900 |
| BTC+sentiment | 0.00037 | 0.05816 | 0.01435 |

Table 4: Transformer’s performance before and after adding sentiment scores in ETH data.

| Data | MSE | MAPE | MAE |
|---|---|---|---|
| ETH | 0.15987 | 0.66011 | 0.33890 |
| ETH+sentiment | 0.16289 | 0.65803 | 0.34170 |

Table 5: LSTM performance before and after adding sentiment scores in BTC data.

| Data | MSE | MAPE | MAE |
|---|---|---|---|
| BTC | 0.00033 | 0.04343 | 0.01310 |
| BTC+sentiment | 0.00032 | 0.04613 | 0.01346 |

Table 6: LSTM performance before and after adding sentiment scores in ETH data.

| Data | MSE | MAPE | MAE |
|---|---|---|---|
| ETH | 0.00126 | 0.06651 | 0.02931 |
| ETH+sentiment | 0.00586 | 0.03316 | 0.01656 |

5.4 Transfer Learning

An interesting finding in this study is that applying the Transformer model trained on BTC data to predict future ETH prices achieves better results than applying the Transformer model trained on ETH data only. Tables 7 and 8 show the large improvement in all three metrics. As Figure 3 shows, ETH price movement follows a very similar trend to BTC price movement. The calculated Spearman’s correlation between the BTC close price and the ETH close price is 0.732, which means that the two close prices are strongly correlated. As leading cryptocurrencies in the market, ETH and BTC have datasets of a similar nature, so predicting their future prices can be considered related problems. Therefore, the knowledge the Transformer model gains when learning from BTC data can be applied to predicting ETH price. In addition, because less ETH data is available, the ETH dataset has 4,254 fewer data points than the BTC dataset (14,200 vs. 18,454), so the Transformer model can learn more context from the BTC dataset.

Figure 3: Normalized BTC and ETH price.
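Spearman’s correlation is the Pearson correlation of the ranks of the two series. The NumPy sketch below computes it with average ranks for ties; it is illustrative only, and `scipy.stats.spearmanr` provides the same statistic if SciPy is available. The aligned BTC and ETH close-price arrays it would be applied to are not reproduced here.

```python
import numpy as np


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    a = np.asarray(values, dtype=float)
    order = np.argsort(a, kind="mergesort")
    sorted_a = a[order]
    ranks = np.empty(len(a), dtype=float)
    i = 0
    while i < len(a):
        j = i
        while j + 1 < len(a) and sorted_a[j + 1] == sorted_a[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2 + 1  # average of ranks i+1 .. j+1
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rank correlation coefficient of two equal-length series."""
    return float(np.corrcoef(average_ranks(x), average_ranks(y))[0, 1])
```

Because it depends only on ranks, a coefficient such as the reported 0.732 measures how monotonically the two price levels are related, regardless of whether the relationship is linear.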

Table 7: Transformer model on ETH OHLC price.

| Model | MSE | MAPE | MAE |
|---|---|---|---|
| Trained with ETH data | 0.15987 | 0.66011 | 0.33890 |
| Trained with BTC data | 0.00081 | 0.06093 | 0.02157 |

Table 8: Transformer model on ETH time series with sentiment scores.

| Model | MSE | MAPE | MAE |
|---|---|---|---|
| Trained with ETH data | 0.16289 | 0.65803 | 0.34170 |
| Trained with BTC data | 0.00567 | 0.06697 | 0.02412 |

6 CONCLUSION

In this study, we have implemented a Transformer model with stacked Transformer encoder layers. In contrast with the standard Transformer model applied in NLP, the proposed model has a Time2Vec layer to implement time embedding. Each Transformer encoder layer contains one multi-head attention layer formed by concatenating 8 single-head attention layers. We applied the proposed model to the problem of BTC and ETH price prediction and compared its forecasting ability with that of an LSTM model. The results show that the LSTM model outperforms the proposed model in predicting BTC and ETH future prices, with or without sentiment score data. With sentiment scores added to the BTC dataset, there is a clear improvement in the proposed model’s prediction results; for ETH future price prediction, there is no improvement. Applying the LSTM model to both datasets with sentiment scores yields no consistent performance improvement but produces more outliers on the ETH dataset. An interesting finding on the proposed model is that the model trained with BTC data gives better prediction results on ETH future price than the model trained with ETH data.

Future work can start from the following aspects. First, sentiment data collection. The sentiment data collected in this study are the latest 10,000 tweets of each day, and the popularity of the tweets is considered when calculating the sentiment score. Sentiment from more influential sources, such as finance websites or newspapers, could differ from what is collected from Twitter; in future studies, sentiment from CoinDesk and Bloomberg might provide a different angle on how people view price movements. Second, sentiment analysis. Instead of using sentiment scores as a feature, the news text or tweet text can be converted to a feature vector, and sentiment analysis can be carried out by a model layer that takes in the text. Third, model performance could be improved by introducing temporal pattern attention (Shih et al., 2018) or sparse attention (Li et al., 2019).

REFERENCES

Ben Letham, S. J. T. (2017). Prophet: forecasting at scale.

Chen, C., Liu, L., and Zhao, N. (2020). Fear sentiment, uncertainty, and Bitcoin price dynamics: The case of COVID-19. Emerging Markets Finance and Trade.

Georgoula, I., Pournarakis, D., Bilanakos, C., Sotiropoulos, D. N., and Giaglis, G. M. (2015). Using Time-Series and Sentiment Analysis to Detect the Determinants of Bitcoin Prices. SSRN Electronic Journal.

Guerra, M. L., Sorini, L., and Stefanini, L. (2020). Bitcoin Analysis and Forecasting through Fuzzy Transform. Axioms, 9(4):139.

Hochreiter, S. and Schmidhuber, J. (1997). Long Short-Term Memory. Neural Computation, 9(8):1735–1780.

Hu, C., Qiang, W., Hui, L., Shengqi, J., Nan, L., and Zhengzheng, L. (2018). Deep learning with a long short-term memory networks approach for rainfall-runoff simulation. Water (Switzerland), 10(11):1–16.

Ji, S., Kim, J., and Im, H. (2019). A comparative study of bitcoin price prediction using deep learning. Mathematics, 7(10).

Joulin, A., Grave, E., Bojanowski, P., and Mikolov, T. (2017). Bag of tricks for efficient text classification. 15th Conference of the European Chapter of the Association for Computational Linguistics, EACL 2017 - Proceedings of Conference, 2:427–431.

Kazemi, S. M., Goel, R., Eghbali, S., Ramanan, J., Sahota, J., Thakur, S., Wu, S., Smyth, C., Poupart, P., and Brubaker, M. (2019). Time2Vec: Learning a Vector Representation of Time.

Kilimci, Z. H. (2020). Sentiment analysis based direction prediction in bitcoin using deep learning algorithms and word embedding models. International Journal of Intelligent Systems and Applications in Engineering, 8(2):60–65.

Li, L., Wu, Y., Zhang, Y., and Zhao, T. (2019). Time+User dual attention based sentiment prediction for multiple social network texts with time series. IEEE Access, 7:17644–17653.

Mikolov, T., Grave, E., Bojanowski, P., Puhrsch, C., and Joulin, A. (2019). Advances in pre-training distributed word representations. LREC 2018 - 11th International Conference on Language Resources and Evaluation, (1):52–55.

Mohan, S., Mullapudi, S., Sammeta, S., Vijayvergia, P., and Anastasiu, D. C. (2019). Stock price prediction using news sentiment analysis. Proceedings - 5th IEEE International Conference on Big Data Service and Applications, BigDataService 2019, Workshop on Big Data in Water Resources, Environment, and Hydraulic Engineering and Workshop on Medical, Healthcare, Using Big Data Technologies, pages 205–208.

Nyoni, T. (2018). Modeling and forecasting inflation in Kenya: recent insights from ARIMA and GARCH analysis. Technical report.

Pano, T. and Kashef, R. (2020). A Complete VADER-Based Sentiment Analysis of Bitcoin (BTC) Tweets during the Era of COVID-19. Big Data and Cognitive Computing, 4(4):33.

Prajapati, P. (2020). Predictive analysis of Bitcoin price considering social sentiments. Technical report.

PWC (2021). 3rd Annual Global Crypto Hedge Fund Report 2021.

Raju, S. M. and Tarif, A. M. (2020). Real-Time Prediction of BITCOIN Price using Machine Learning Techniques and Public Sentiment Analysis. Technical report.

Serafini, G., Yi, P., Zhang, Q., Brambilla, M., Wang, J., Hu, Y., and Li, B. (2021). Sentiment-Driven Price Prediction of the Bitcoin based on Statistical and Deep Learning Approaches.

Shih, S.-Y., Sun, F.-K., and Lee, H.-y. (2018). Temporal Pattern Attention for Multivariate Time Series Forecasting.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, Ł., and Polosukhin, I. (2017). Attention Is All You Need. Technical report.

Yenidogan, I., Cayir, A., Kozan, O., Dag, T., and Arslan, C. (2018). Bitcoin Forecasting Using ARIMA and PROPHET. UBMK 2018 - 3rd International Conference on Computer Science and Engineering, (February 2019):621–624.

Yi, S., Xu, Z., and Wang, G. J. (2018). Volatility connectedness in the cryptocurrency market: Is Bitcoin a dominant cryptocurrency? International Review of Financial Analysis, 60(May):98–114.