UAV Path Planning based on Road Extraction

Chang Liu (1,a) and Tamás Szirányi (1,2,b)

1 Department of Networked Systems and Services, Budapest University of Technology and Economics, Műegyetem rkp. 3, Budapest, Hungary

2 Machine Perception Research Laboratory of Institute for Computer Science and Control (SZTAKI), Kende u. 13-17, Budapest, Hungary

Keywords: Image Segmentation, Road Extraction, Weighted Path Planning, A Star Algorithm, UAV, SAR.

Abstract: With the development of science and technology, UAVs are increasingly being used to serve humans, especially in wilderness environments, owing to their portability and their ability to reach places that are beyond human reach. In this paper, we present a technique for drones to help humans intelligently plan routes in a field environment. First, our approach relies on road extraction techniques from image segmentation, using the state-of-the-art D-LinkNet to extract roads from images captured by UAVs in real time. Second, the extracted road information is analyzed: main roads and secondary roads are distinguished according to their width and the real-time road conditions on the ground, and different weights are assigned to them. Finally, the A-star algorithm computes a weighted route plan between the user-defined starting and ending points to obtain the optimal route. Simulations on publicly available datasets show that the method works well in providing optimal intelligent routes in real time for people in the field.

1 INTRODUCTION

With the development of computer vision technology, drone vision is increasingly used in many areas of human life and makes daily activities more convenient. For example, in agriculture, drones can help farmers estimate the yield and size of citrus fruits (Apolo, 2020); in medicine, fleets of drones can deliver medical items (Ghelichi, 2021); and in disaster relief, drones can detect fires (Moumgiakmas, 2021) and floods (Rizk, 2022). In wilderness rescue, one of the biggest advantages of drones is their flexibility: they can easily reach places that are inaccessible to humans, including rescuers, which makes GPU-equipped drone vision a viable option for rescue in difficult field environments, especially where there is no internet. Drone technology opens up many possibilities, but the performance of the onboard GPU and the battery life, which are closely related, must also be taken into account, among other things (Galkin, 2019). The wilderness may have poorly developed networks and roads in poor condition, and some roads may not even be included in Google Maps (Ciepłuch, 2010); we therefore consider using flexible drone vision to provide intelligent route planning for people in the wilderness. Besides being flexible, drones capture images at a higher resolution than satellites, and these images are more practical in everyday life because they are more accessible and more timely for users than satellite images. As shown in our previous work (Liu, 2021), drones can interact well with humans in the wild, recognize some hand gestures, and thus communicate more easily.

a ORCID: https://orcid.org/0000-0001-6610-5348

b ORCID: https://orcid.org/0000-0003-2989-0214

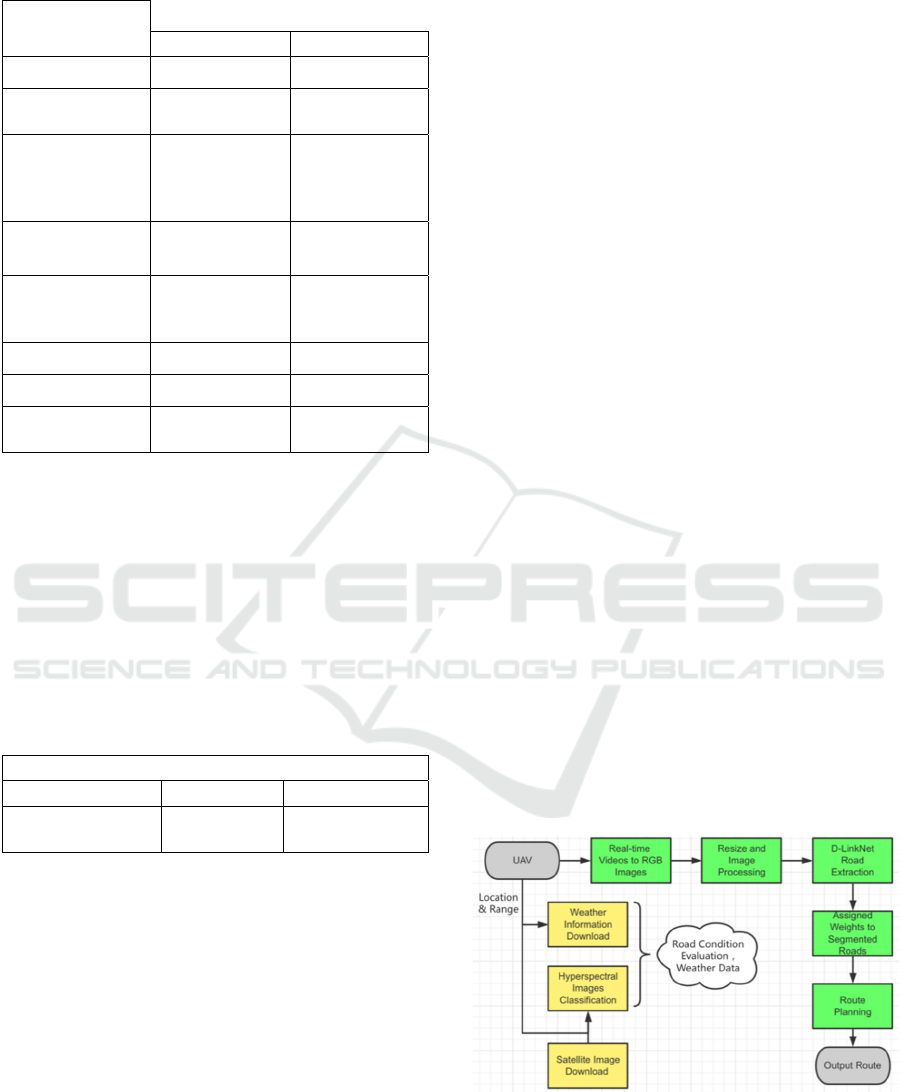

Liu, C. and Szirányi, T.
UAV Path Planning based on Road Extraction.
DOI: 10.5220/0011089900003209
In Proceedings of the 2nd International Conference on Image Processing and Vision Engineering (IMPROVE 2022), pages 202-210
ISBN: 978-989-758-563-0; ISSN: 2795-4943
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

This work proposes a method for UAVs to plan intelligent routes in real time for people in the wild. The intended usage scenarios and an overview diagram are shown in Figure 1. The method extracts map images from the real-time video sequence captured by the UAV and converts them into three-channel images of fixed resolution through image processing. These images are the input to the road extraction stage, in which D-LinkNet (Zhou, 2018) outputs the extracted roads; finally, different weights are assigned to the roads through road information analysis for intelligent route planning. By combining weather information with road information, the proposed roads can be weighted so that people avoid roads in bad condition (e.g. muddy after rain, snow-covered after snowfall, or sandy after a sandstorm) and choose roads in better condition. This is the motivation for assigning weights to roads according to their condition. Road information mainly includes the road width, the road surface material, and the environmental pollution covering the road surface (flood, mud, rubble), which can be estimated from satellite hyperspectral data and the weather conditions. Generally speaking, on rainy days large, spacious roads are in better condition than small, narrow roads, and roads with an asphalt surface are in better condition than soil roads. Hyperspectral image classification appears to offer a solution for detecting road material (Hong, 2020). Hyperspectral images can also be used to classify roads and determine their condition (Mohammadi, 2012). Fusion of satellite hyperspectral images with UAV RGB images has been used to detect the constituent materials of the road surface (Jenerowicz, 2017), and such fusion techniques have also been applied very successfully in other areas (Maimaitijiang, 2020). From the weather stations on the map, we can obtain the amount of precipitation in the area and, based on that amount, determine whether soil roads are muddy, flooded, snow-covered, or sandy, or whether roads are contaminated with spilled soil. The work in (Kim, 2021) provides a viable solution for predicting road surface conditions in rainy weather using artificial neural networks. Soil properties (Ben-Dor, 2002) can also be obtained by imaging spectroscopy (Ben-Dor, 2009). Together, these works provide a firm basis for the road weighting process.

Figure 1: Intended usage scenarios and overview diagram.

Another point worth mentioning is that in the field the network connection can be poor. Even if the user has downloaded a map of the area in advance, for the reasons mentioned above some passable roads in the field may not be included in the map, and the roads it does contain carry no condition-based weights, so an intelligent route cannot be planned from that map in real time. The user can, however, provide us with the departure location and the destination; we input these two coordinate points on the map into the A-star algorithm (Cui, 2012) and use the road weights to perform the route search. An optimal route along roads in good condition can then be quickly returned to the user. Where there is no road, paths can be generated as tracks through the terrain, estimating their usability from UAV-based scanning and track searching, together with a hyperspectral soil evaluation of these paths from earlier satellite data.

2 BACKGROUND

2.1 Related Dataset and Assumptions

We test our proposed approach to intelligent route planning on the DeepGlobe Road Extraction dataset (Demir, 2018), which is publicly available and consists of 6226 training images, 1243 validation images, and 1101 test images. Each RGB image has a resolution of 1024 × 1024 pixels. Roads in this dataset are labeled as foreground and all other objects as background. The imagery has a 50 cm pixel resolution and was collected by DigitalGlobe's satellite (PGC, 2018). This means that each image corresponds to a ground extent of 512 m × 512 m, i.e. an area of 262,144 m². Specific information on the satellites used for data collection is shown in Table 1, including altitude, sensor resolution, and dynamic range. In addition to WorldView-1, which was used to collect the above public dataset, Table 1 lists WorldView-3, as it provides 31 cm panchromatic resolution and 1.24 m multispectral resolution, which can be used to estimate pavement soil information.
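The footprint arithmetic above can be checked in a few lines. This is a minimal sketch: the function name `ground_footprint` is hypothetical, and it assumes a constant ground sample distance (GSD) across the image, i.e. nadir imagery over flat terrain.

```python
def ground_footprint(width_px, height_px, gsd_m):
    """Return (width_m, height_m, area_m2) covered by an image with a constant GSD.

    Assumes nadir imagery over flat terrain, so every pixel covers gsd_m x gsd_m metres.
    """
    width_m = width_px * gsd_m
    height_m = height_px * gsd_m
    return width_m, height_m, width_m * height_m


# DeepGlobe tile: 1024 x 1024 pixels at 50 cm GSD
print(ground_footprint(1024, 1024, 0.5))  # (512.0, 512.0, 262144.0)

# A native eBee frame (4608 x 3456 pixels at 5 cm GSD) covers roughly 230 m x 173 m
print(ground_footprint(4608, 3456, 0.05))
```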


Table 1: DigitalGlobe Satellite (PGC, 2018).

| Satellite specifications | WorldView-1 | WorldView-3 |
|---|---|---|
| Launched | 2007 | 2014 |
| Operational altitude | 496 km | 617 km |
| Spectral characteristics | Panchromatic | Panchromatic + 8 multispectral + 8 SWIR + 12 CAVIS |
| Sensor resolution | 50 cm GSD at nadir | 31 cm GSD at nadir |
| Dynamic range | 11 bits per pixel | 11 bits per pixel; 14 bits per pixel (SWIR) |
| Swath width | 17.7 km at nadir | 13.1 km at nadir |
| Capacity | 1.3 million km² per day | 680,000 km² per day |
| Stereo collection | Yes | Yes |

The dataset mentioned above was collected by satellite. Considering practical applicability and implementation for people in real life, we also tested our method on an open dataset provided by senseFly (SenseFly, 2009). This example dataset covers a small Swiss village called Merlischachen, and the imagery was collected during a single eBee Classic drone flight. The dataset contains 297 images of 4608 × 3456 × 3 pixels; further details are given in Table 2.

Table 2: eBee Classic drone dataset (SenseFly, 2009).

| Ground resolution | Coverage | Flight height |
|---|---|---|
| 5 cm (1.96 in)/px | 0.57 sq. km (0.22 sq. mi) | 162 m (531.4 ft) |

The hypothetical scenarios set for this study are as follows:

- The user is in a wild, uninhabited environment, ideally after rain or other bad weather.
- Even with a poor or no internet connection, the user can provide the drone with the preferred starting and ending coordinates via a previously downloaded map. This can be done through a WiFi connection or by using hand gestures (Liu, 2021).
- The drone has sufficient onboard range and a sufficiently charged battery.
- There is no fog or other condition that obstructs the drone's view.

2.2 Proposed System

The overall flow chart of this system is shown in Figure 2. The input is the real-time video sequence captured by the camera of a UAV flying at high altitude. The captured video sequence is split into individual frames, and each RGB frame is converted by image processing into a three-channel image with a resolution of 1024 × 1024, which completes the data preparation. Next, the processed images are fed into D-LinkNet for road extraction, which produces an image in which roads are labeled as foreground and all other objects as background.

By combining this result with the original RGB image and other supporting data, we weight the extracted roads, mainly considering the width of the road, its connectivity, and its surface material. Roads in good condition are marked green, meaning that they are in better condition than the rest and that people can walk on them faster after rain. The remaining roads, which are not given green priority, stay white. Most of the white roads are very narrow and become muddy, flooded, snow-covered, or sandy after bad weather, which makes them unsuitable for walking. As this paper lays the groundwork for our ongoing research, this section mainly presents ideas and feasible solutions; the road weighting step will be implemented in detail in future work.

Finally, following the widely used A-star algorithm (Cui, 2012), we assign different cost values to the green and white pixels and use A-star to compute the shortest and/or fastest path from the starting point to the endpoint, providing the best route for people in the field in any weather conditions, for example after a muddy rain.

Figure 2: Flowchart of the proposed system.


3 METHODOLOGY

Figure 3 shows the key steps of this work. After the video sequence has been cut into images and processed during data preparation, the input RGB drone image is passed through D-LinkNet (Zhou, 2018) to obtain the extracted road map. Two results are then shown: the right side has green priority roads in good condition, while the left side has only white normal roads. In the results, blue circles mark the starting point and yellow circles mark the destination, as labeled on the enlarged image.

Figure 3: Key steps and comparison of results.

3.1 Data Preparation and Road Extraction Analysis

When weather information indicates that bad weather has passed, leaving some roads in the field muddy, flooded, snow-covered, or sandy, GPU-equipped drones can capture video in real time from high altitude to provide route planning assistance to people on the ground below. The video sequence captured in real time is split into individual RGB frames, which are then processed (e.g. resized) to obtain a three-channel image of the current ground state with a resolution of 1024 × 1024. This data preparation stage lays the foundation for the subsequent road extraction and road condition analysis. Its main purpose is unification: different UAVs capture images at different resolutions, so the data processing step brings all images to a common size. For example, images in dataset 2 (the eBee Classic dataset) are converted from 4608 × 3456 × 3 to 1024 × 1024 × 3 and then passed as input to the subsequent stages of the system.
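The resizing step can be sketched as follows. This is a hypothetical pure-Python illustration using nearest-neighbour sampling on nested lists, not the authors' implementation; in practice a library routine such as OpenCV's `cv2.resize` would be used instead. The helper `effective_gsd` (also hypothetical) makes explicit a side effect of forcing every frame to 1024 × 1024: a 4608 × 3456 eBee frame at 5 cm/px is scaled by different factors along the two axes, so the ground distance per output pixel is no longer the same in x and y.

```python
def resize_nearest(img, out_w, out_h):
    """Nearest-neighbour resize of an image stored as rows of pixels (img[y][x])."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def effective_gsd(in_w, in_h, gsd_m, out_w=1024, out_h=1024):
    """Ground distance (m) covered by one output pixel along x and y after resizing."""
    return gsd_m * in_w / out_w, gsd_m * in_h / out_h


# A 2-row x 3-column toy "frame" of RGB tuples, upsampled to 4 rows x 6 columns.
frame = [[(0, 0, 0), (255, 0, 0), (0, 255, 0)],
         [(0, 0, 255), (255, 255, 255), (128, 128, 128)]]
small = resize_nearest(frame, 6, 4)
print(len(small), len(small[0]))  # 4 6

# eBee frame at 5 cm/px: roughly 22.5 cm/px along x and 16.9 cm/px along y after resizing
print(effective_gsd(4608, 3456, 0.05))
```

If equal scaling in both directions matters for the later width estimates, cropping or padding to a square before resizing would be one way to keep the aspect ratio.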

Figure 4 shows the processed RGB image that is input to the road extraction network. D-LinkNet performs road segmentation well, labeling roads as foreground and everything else as background. The greyscale output is combined with the original color image for road analysis, in which we mainly consider the width of the road, its connectivity, and its surface material: generally speaking, a spacious, well-connected asphalt road is more suitable for pedestrians or vehicles after muddy, flooded, snowy, or sandy weather. Conversely, a narrow, poorly connected soil road can become muddy after heavy rainfall, or covered by snow or sand, so that pedestrians or drivers find it difficult to walk or drive on it. It is therefore important to choose roads that are in good condition after bad weather, to save time and make travel easier. However, road sections in bad condition can still be considered if they connect to other parts of the road network and shorten the path with acceptable difficulty.

Figure 4: Road extraction, analysis, and marking of green priority roads.

It is important to note that D-LinkNet segmentation is not 100% accurate, so the road extraction results differ somewhat from the actual roads in the original image. For road material detection, researchers (Hong, 2020) have been able to identify asphalt and soil using hyperspectral image classification techniques, although on a different dataset from ours. Hyperspectral images can also be used to classify roads and determine their condition (Mohammadi, 2012). Last but not least, weather information is also important: relevant real-time and historical weather information can be obtained from weather radar. This weather information is downloaded by the scouting UAV before it sets off into the wild. It provides the amount of precipitation in the area, whose magnitude directly affects the condition of soil roads; this information, together with the fusion of hyperspectral imagery and drone imagery, will be added to our research in future work. By combining these elements, we can assign weights to the roads extracted from the map in a comprehensive way. For now, we compare only the original RGB image with the segmented grey road image: roads are given priority based on two factors, road connectivity and width, and labeled green, while the rest remain white.
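The width-and-connectivity rule for marking green roads is described only qualitatively. One possible reading is sketched below under stated assumptions, not as the authors' procedure: the segmentation output is a binary mask, connected road components are found with an 8-connected breadth-first search, a component's width is approximated by averaging, over its pixels, the shorter of the horizontal and vertical road runs through each pixel, and components that are both large and wide enough become green. The label encoding (0 background, 1 white, 2 green) and the thresholds `min_pixels` and `min_width` are hypothetical values, not parameters from the paper.

```python
from collections import deque

BACKGROUND, WHITE, GREEN = 0, 1, 2  # hypothetical label encoding


def run_length(mask, y, x, dy, dx):
    """Number of consecutive road pixels through (y, x) along direction (dy, dx)."""
    h, w = len(mask), len(mask[0])
    n = 1
    for sign in (1, -1):
        cy, cx = y + sign * dy, x + sign * dx
        while 0 <= cy < h and 0 <= cx < w and mask[cy][cx]:
            n += 1
            cy, cx = cy + sign * dy, cx + sign * dx
    return n


def label_priority_roads(mask, min_pixels=20, min_width=2.0):
    """Turn a binary road mask into BACKGROUND / WHITE / GREEN labels per pixel."""
    h, w = len(mask), len(mask[0])
    labels = [[BACKGROUND] * w for _ in range(h)]
    seen = [[False] * w for _ in range(h)]
    for sy in range(h):
        for sx in range(w):
            if not mask[sy][sx] or seen[sy][sx]:
                continue
            # Breadth-first search over 8-connected road pixels: one road component.
            component, queue = [], deque([(sy, sx)])
            seen[sy][sx] = True
            while queue:
                y, x = queue.popleft()
                component.append((y, x))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            # Width proxy: shorter of the horizontal and vertical runs, averaged over the component.
            width = sum(min(run_length(mask, y, x, 0, 1), run_length(mask, y, x, 1, 0))
                        for y, x in component) / len(component)
            label = GREEN if len(component) >= min_pixels and width >= min_width else WHITE
            for y, x in component:
                labels[y][x] = label
    return labels


# Toy mask: a 3-pixel-wide road (rows 0-2) and a separate 1-pixel-wide track (row 5).
mask = [[1] * 10 for _ in range(3)] + [[0] * 10 for _ in range(2)] + [[1] * 10]
labels = label_priority_roads(mask)
print(labels[0][0], labels[3][0], labels[5][0])  # 2 0 1
```

The run-length width proxy is crude for diagonal roads; a distance transform of the mask would be a more robust width estimate.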


3.2 A Star Algorithm and Weighted Route Planning

The A-star algorithm (Cui, 2012) is a heuristic search algorithm for global path planning. It has been successfully implemented and tested as a path planning algorithm for mobile robots (Kuswadi, 2018). The algorithm combines heuristic search with shortest-path search. It is a best-first algorithm because each cell in the configuration space is evaluated by the value

$$f(n) = g(n) + h(n) \tag{1}$$

where $n$ is the current node, $g(n)$ is the cost from the starting point to $n$, and $h(n)$ is the estimated cost from $n$ to the ending point.

We define a white node as a pixel on a non-priority road and a green node as a pixel on a priority road. Figure 5 shows how g(n) values are assigned in different pixel configurations. When a node is surrounded by white pixels, i.e. its neighbors are accessible but have no priority, the four neighbors above, below, left, and right are each assigned a value of 100, and the four diagonal neighbors a value of 140. When the node is surrounded by green pixels, i.e. priority roads, the corresponding values are reduced by a factor of 100 (to 1 and 1.4). When both kinds of neighbors are present, the algorithm prefers the green neighbor with the smaller cost, since it seeks the minimum f-value. In Figure 5, the left plot shows a pixel on a non-priority road surrounded by non-priority road pixels; the middle plot shows a pixel on a priority road surrounded by priority pixels; and the right plot shows the critical case at the junction of a priority road and a non-priority road, where, for illustration, the two top-right pixels are labeled as priority road and the rest as non-priority road. This is where the pixel-level assignment of values in the g(n) function comes into play.

Figure 5: Assignment of different g(n) cost values to different pixel points.
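The pixel-level cost assignment illustrated in Figure 5 can be written as a small lookup. This is a sketch under assumptions: the cost of a move is taken from the label of the neighbor being entered, the labels 1 (white) and 2 (green) are hypothetical, and the choice of which two top-right pixels are green in the critical case is ours.

```python
WHITE, GREEN = 1, 2  # hypothetical labels: non-priority and priority road pixels

# (straight, diagonal) step costs from the text: white 100/140, green 1/1.4
STEP_COST = {WHITE: (100, 140), GREEN: (1, 1.4)}


def neighbourhood_costs(patch):
    """Step cost added to g(n) when moving from the centre of a 3x3 patch to each neighbour.

    patch[r][c] holds the label of each neighbour; the centre entry is ignored.
    """
    costs = [[None] * 3 for _ in range(3)]
    for r in range(3):
        for c in range(3):
            if (r, c) == (1, 1):
                continue  # the current node itself
            straight, diagonal = STEP_COST[patch[r][c]]
            costs[r][c] = diagonal if r != 1 and c != 1 else straight
    return costs


# Critical case: the two top-right neighbours are priority road, the rest are not.
patch = [[WHITE, GREEN, GREEN],
         [WHITE, WHITE, WHITE],
         [WHITE, WHITE, WHITE]]
for row in neighbourhood_costs(patch):
    print(row)
# [140, 1, 1.4]
# [100, None, 100]
# [140, 100, 140]
```

Because the search minimizes f, the green neighbor directly above (cost 1) is the cheapest next step in this configuration.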

There are several well-known heuristic functions h(n) that can be used (Heuristics, 2019); the most common are the Euclidean distance $h_E$, the Manhattan distance $h_M$, and the diagonal distance $h_D$. In this work, we use the diagonal distance $h_D$ with a weighted modification:

$$d_x = |x_n - x_g| \tag{2}$$

$$d_y = |y_n - y_g| \tag{3}$$

$$h_D(n) = d_1\,(d_x + d_y) + (d_2 - 2d_1)\,\min(d_x, d_y) \tag{4}$$

$$d_1 = 1,\quad d_2 = 1.4 \quad \text{for a green cell} \tag{5}$$

$$d_1 = 100,\quad d_2 = 140 \quad \text{for a white cell} \tag{6}$$

where $(x_n, y_n)$ is the coordinate of the current node $n$ and $(x_g, y_g)$ is the coordinate of the end node. For a green cell, Equation (4) is the octile distance.
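The three heuristics and the weighted diagonal distance of Equation (4) can be compared on a small example. This is a minimal sketch; the function names are hypothetical, and the coordinates (0, 0) and (3, 4) are an arbitrary illustration rather than data from the experiments.

```python
import math


def h_euclidean(n, goal):
    """Straight-line distance between pixels n and goal, given as (x, y)."""
    return math.hypot(n[0] - goal[0], n[1] - goal[1])


def h_manhattan(n, goal):
    """Sum of the horizontal and vertical offsets."""
    return abs(n[0] - goal[0]) + abs(n[1] - goal[1])


def h_diagonal(n, goal, d1=1.0, d2=1.4):
    """Weighted diagonal distance, Equation (4): d1*(dx + dy) + (d2 - 2*d1)*min(dx, dy)."""
    dx, dy = abs(n[0] - goal[0]), abs(n[1] - goal[1])
    return d1 * (dx + dy) + (d2 - 2 * d1) * min(dx, dy)


n, goal = (0, 0), (3, 4)
print(h_euclidean(n, goal))                 # 5.0
print(h_manhattan(n, goal))                 # 7
print(round(h_diagonal(n, goal), 6))        # 5.2  (green cell: d1=1, d2=1.4)
print(h_diagonal(n, goal, d1=100, d2=140))  # 520  (white cell: d1=100, d2=140)
```

With the white-cell weights the estimate is 100 times larger. If a green road still lies between the node and the goal, that estimate can exceed the true remaining cost, and A-star then loses its guarantee of returning the minimum-cost path; keeping h(n) at the smallest step weights (d1 = 1, d2 = 1.4) avoids this.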

The search proceeds as follows. The eight neighbors of the starting point are put into the open list, and any unreachable neighbor is removed. The cost function of Equation (1) is evaluated for the nodes in the open list, the node with the smallest f-value is chosen as the next node, and it is moved to the closed list; its reachable neighbors are then added to the open list, and a neighbor's cost is updated whenever a cheaper path to it is found. This continues until the current node is the end position. Finally, tracing the chosen nodes back from the end gives the path from start to end with the smallest f-value, which is the optimal path.
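The search loop described above can be sketched as a standard A-star over the labeled pixel grid. This is a minimal sketch, not the authors' code. It assumes the hypothetical labels 0 (background, impassable), 1 (white) and 2 (green), takes the cost of each move from the pixel being entered (100/140 for white, 1/1.4 for green), and, unlike Equations (5) and (6), uses the green weights in h(n) everywhere. Because no step is cheaper than those weights, this h(n) never overestimates, so the first time the goal is taken from the open list its g-value is the minimum cost.

```python
import heapq
import math

BACKGROUND, WHITE, GREEN = 0, 1, 2  # hypothetical pixel labels
# (straight, diagonal) cost of entering a pixel, following the values in the text
STEP_COST = {WHITE: (100.0, 140.0), GREEN: (1.0, 1.4)}
NEIGHBOURS = [(-1, -1), (0, -1), (1, -1), (-1, 0), (1, 0), (-1, 1), (0, 1), (1, 1)]


def octile(a, b, d1=1.0, d2=1.4):
    """Diagonal (octile) distance between pixels a and b, given as (x, y)."""
    dx, dy = abs(a[0] - b[0]), abs(a[1] - b[1])
    return d1 * (dx + dy) + (d2 - 2 * d1) * min(dx, dy)


def astar(grid, start, goal):
    """A-star over grid[y][x] labels; returns (path as list of (x, y), cost) or (None, inf)."""
    height, width = len(grid), len(grid[0])
    best_g = {start: 0.0}
    parent = {start: None}
    open_list = [(octile(start, goal), 0.0, start)]  # heap ordered by f = g + h
    closed = set()
    while open_list:
        _, g, node = heapq.heappop(open_list)
        if node in closed:
            continue  # stale entry superseded by a cheaper one
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1], g
        closed.add(node)
        for dx, dy in NEIGHBOURS:
            x, y = node[0] + dx, node[1] + dy
            if not (0 <= x < width and 0 <= y < height):
                continue
            if grid[y][x] == BACKGROUND or (x, y) in closed:
                continue  # unreachable or already expanded
            straight, diagonal = STEP_COST[grid[y][x]]
            new_g = g + (diagonal if dx and dy else straight)
            if new_g < best_g.get((x, y), math.inf):
                best_g[(x, y)] = new_g
                parent[(x, y)] = node
                heapq.heappush(open_list, (new_g + octile((x, y), goal), new_g, (x, y)))
    return None, math.inf


# Toy map: a green (priority) row above a white row; start and goal on the white row.
grid = [[GREEN] * 5, [WHITE] * 5]
path, cost = astar(grid, start=(0, 1), goal=(4, 1))
print(path)  # [(0, 1), (1, 0), (2, 0), (3, 0), (4, 0), (4, 1)]
```

On this toy map the route moves onto the green row for most of its length and drops back to the white goal pixel only at the end: longer in pixels, but cheaper in cost than the direct white route (400).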

4 EXPERIMENTS

We tested the main part of this work on the publicly available DeepGlobe Road Extraction dataset (Demir, 2018) and the senseFly dataset (SenseFly, 2009), and we also measured the time required by each phase. Three experiments were carried out on the DeepGlobe Road Extraction dataset; the results are listed in Table 3, and the three tests are shown in Figure 6, Figure 7, and Figure 8. In these figures, blue circles indicate the starting point, yellow circles indicate the destination, and the route is drawn in red. Because the route is only one pixel wide, we crop a portion of the map and zoom in on the results. For better visibility, we thicken the route by also coloring the eight neighboring pixels of each route pixel red.
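Painting the eight neighbors of every route pixel amounts to a 3 × 3 dilation of the route. A minimal sketch follows; the function name is hypothetical, and coordinates are assumed to be (x, y) pixel positions clipped to the image bounds.

```python
def thicken_route(path, width, height):
    """Return the set of pixels to paint: each route pixel plus its 8 neighbours, clipped to the image."""
    painted = set()
    for x, y in path:
        for dx in (-1, 0, 1):
            for dy in (-1, 0, 1):
                nx, ny = x + dx, y + dy
                if 0 <= nx < width and 0 <= ny < height:
                    painted.add((nx, ny))
    return painted


print(len(thicken_route([(5, 5)], 1024, 1024)))          # 9
print(len(thicken_route([(0, 0)], 1024, 1024)))          # 4 (corner pixel is clipped)
print(len(thicken_route([(5, 5), (6, 5)], 1024, 1024)))  # 12
```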

In the data preparation phase, the time required to convert the live video sequence captured by the UAV's onboard camera into the input images for road extraction depends directly on the duration of the video. In general, for a 17-second video, data processing takes 1.25 seconds, and road extraction by D-LinkNet takes about 5 seconds. Road priority assignment follows; in the future it will be automated based on the research of others (Hong, 2020). We were able to extract the two classes they classified well, namely asphalt and soil in the Pavia University dataset (Zhu, 2021), and road priority will be assigned based on the results of road material classification and the road conditions. We will also combine weather information with soil information from the fusion of satellite and drone imagery to carry out automatic road weighting. The final route planning takes around 20 seconds, depending on the locations of the starting and ending points.

Table 3: Testing results on the DeepGlobe Road Extraction dataset.

| Comparison | Starting point | Ending point | f-value |
|---|---|---|---|
| Fig 6 (left) | (663,673) | (975,445) | 36110 |
| Fig 6 (right) | (663,673) | (975,445) | 24436 |
| Fig 7 (left) | (719,545) | (566,127) | 4733 |
| Fig 7 (right) | (719,545) | (566,127) | 1004 |
| Fig 8 (left) | (450,440) | (890,264) | 36230 |
| Fig 8 (right) | (450,440) | (890,264) | 36230 |

In Figure 6, the starting point is at (663, 673) and the ending point is at (975, 445). For the map without priority road assignment, the final f-value from the starting point to the ending point computed by the A-star algorithm is 36110. Note that this f-value is only the cost computed under a specific parameter setting and does not represent the real length of the route, although the two are positively correlated. On the right-hand side of Figure 6, where the map contains green roads, i.e. roads with priority, the route is clearly longer, but the f-value decreases to 24,436, which is 32.3% lower than on the left-hand side. A smaller f-value means that the user can reach the destination faster.
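The percentage quoted above is the relative drop in the final f-value. As a quick check (the helper name is hypothetical):

```python
def relative_reduction(f_without, f_with):
    """Percentage by which the f-value drops when priority roads are used."""
    return 100.0 * (f_without - f_with) / f_without


print(round(relative_reduction(36110, 24436), 1))  # 32.3  (Figure 6)
```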

Figure 6: Test result 1 on the DeepGlobe Road Extraction dataset (the right half comes with road priority, while the left half does not).

Figure 7 shows the same experiment on another map, with the starting location at (719,545) and the ending location at (566,127). The range of this experiment is smaller than that of test 1 in Figure 6, meaning that the user's destination is nearby. In Figure 7, the f-value without road priority is 4733 and the f-value with road priority is 1044, which is 78% lower, greatly helping the user choose the best route.

Figure 7: Test result 2 on the DeepGlobe Road Extraction dataset (the right half comes with road priority, while the left half does not).

Figure 8 shows another representative case: when the destination the user wants to reach and the available priority path lie in opposite directions, the priority path does not come into play, i.e. the two results are the same. In Figure 8, the starting point is at (450,440) and the endpoint is at (890,264), and the f-value is the same in both cases. Similarly, if the user is on a road in poor condition but the destination is close, or even in sight, the user will certainly not take a detour to a road in good condition to reach it. The final decision depends on the smallest f-value.

Figure 8: Test result 3 on the DeepGlobe Road Extraction dataset (the right half comes with road priority, while the left half does not).

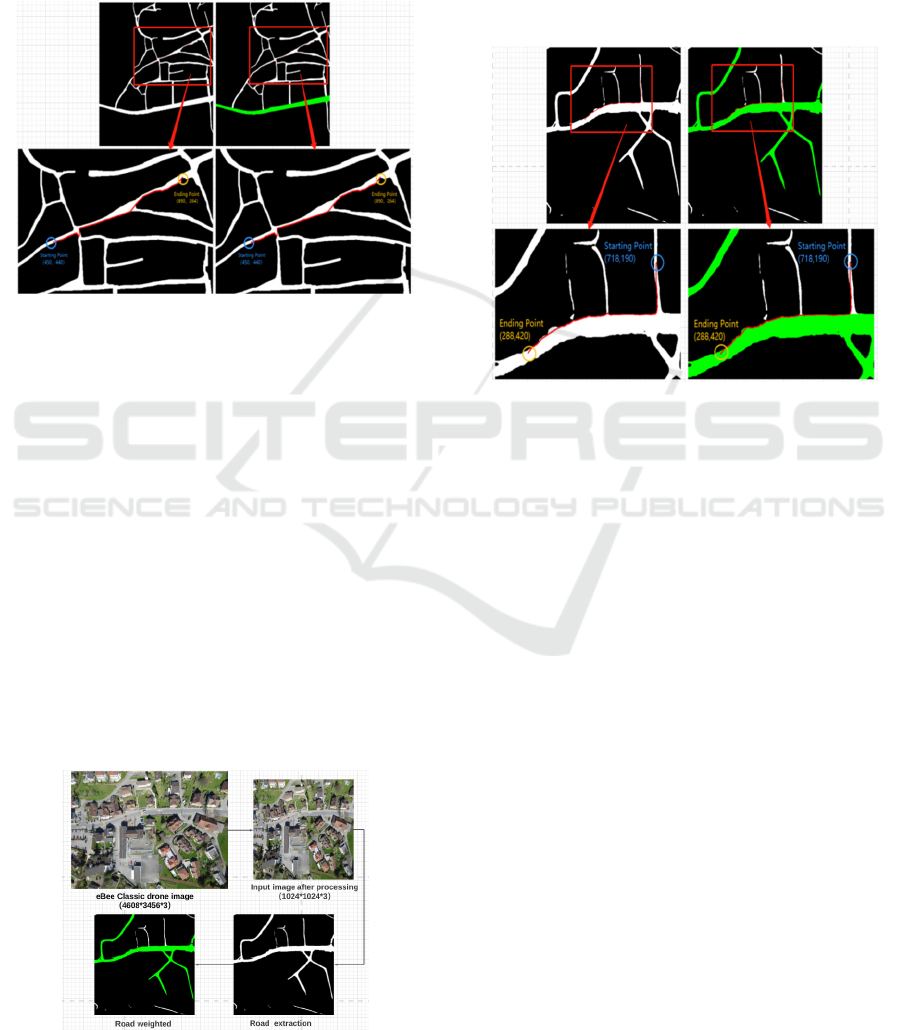

As the system is intended mainly for UAVs rather than satellites, we also tested it on dataset 2; the results are shown in Figure 9 and Figure 10. Figure 9 illustrates the change in the size of the drone image from 4608 × 3456 × 3 to 1024 × 1024 × 3 after basic image processing. D-LinkNet road extraction then yields the greyscale image containing the roads, and finally the road weights are assigned. Because this dataset shows a small village where, unlike in the satellite images above, asphalt roads predominate, most of the roads are given priority. The actual target environment is a sparsely populated wilderness, where the roads are in worse condition and the covered area is larger than this.

Figure 9: Test result on eBee Classic drone dataset.

Figure 10 shows the results of route planning, with the starting point at (718,190) and the ending point at (288,420). The left half shows the route planned in real time when no road is given priority, with a final f-value of 45250. In contrast, the right half of Figure 10 shows the route planned when green roads are given priority; the f-value in this case is 560, an improvement of 98%.

Figure 10: Test result on eBee Classic drone dataset (route planning).

5 DISCUSSION AND CONCLUSION

This paper presents a project that provides users with optimal route planning based on state-of-the-art road extraction techniques, which is of interest in the field after heavy rain, heavy snowfall, or a strong sandstorm has contaminated the road surface. Satellite hyperspectral information can describe road conditions such as the soil in the close neighborhood, vegetation hiding the road, and 3D information for floods. Drones offer a great deal of flexibility, and GPU-equipped drones can fly in the field in real time to assist users in that environment via WiFi or human gesture recognition. The images captured by UAVs also have a high resolution, and drones communicate with people more easily than satellites. In this paper, we tested our method both on publicly available satellite images and on smaller publicly available UAV images, and both achieved the desired results. The first step is to split the live video sequence captured by the UAV into fixed-size three-channel RGB images. Next, each image is fed into D-LinkNet for road extraction, yielding a grey image in which roads are labeled white and the background black. Finally, the roads are weighted after road analysis, where the road information refers to width, connectivity, and surface material; roads with green pixels have priority. The A-star algorithm was used for route planning, and the results were compared between the map image with priority roads and the map image without them.

This work also has some limitations due to its many assumptions. For example, the environment to which it applies is ideally the wild after bad weather, when some roads are very muddy, flooded, snow-covered, or sandy and unsuitable for walking. Next, we need to automate the road weighting process. First, based on the weather information provided by the weather stations on the map, the amount of precipitation can be assessed; precipitation directly affects the condition of soil roads in a field environment and is one of the factors to consider. Second, using mature hyperspectral classification technology, we can fuse satellite hyperspectral images with recent UAV RGB images to extract asphalt and soil indices, which form the second basis for weighting. Finally, we can integrate the length and width information of the segmented roads to achieve automated road weighting. In the future, we will also compare the impact of different h(n) functions on route planning and make some improvements to the algorithm. Ultimately, the system also needs to be tested in the real world with GPU-equipped drones rather than only on publicly available datasets.

ACKNOWLEDGMENTS

This work was carried out at the Institute for Computer Science and Control (SZTAKI), Hungary, and the authors would like to thank their colleague László Spórás for technical support. This research was funded by the Stipendium Hungaricum scholarship and the China Scholarship Council. The research was supported by the Hungarian Ministry of Innovation and Technology and the National Research, Development and Innovation Office within the framework of the National Lab for Autonomous Systems.

REFERENCES

Apolo-Apolo, O. E., J. Martínez-Guanter, G. Egea, P. Raja, and M. Pérez-Ruiz. (2020) "Deep learning techniques for estimation of the yield and size of citrus fruits using a UAV." European Journal of Agronomy 115 126030.

Ghelichi, Zabih, Monica Gentili, and Pitu B. Mirchandani. (2021) "Logistics for a fleet of drones for medical item delivery: A case study for Louisville, KY." Computers & Operations Research 135 105443.

Moumgiakmas, Seraphim S., Gerasimos G. Samatas, and George A. Papakostas. (2021) "Computer vision for fire detection on UAVs—From software to hardware." Future Internet 13, no. 8 200.

Rizk, Hamada, Yukako Nishimura, Hirozumi Yamaguchi, and Teruo Higashino. (2022) "Drone-Based Water Level Detection in Flood Disasters." International Journal of Environmental Research and Public Health 19, no. 1 237.

Galkin, Boris, Jacek Kibilda, and Luiz A. DaSilva. (2019) "UAVs as mobile infrastructure: Addressing battery lifetime." IEEE Communications Magazine 57, no. 6 132-137.

Ciepłuch, Błażej, Ricky Jacob, Peter Mooney, and Adam C. Winstanley. (2010) "Comparison of the accuracy of OpenStreetMap for Ireland with Google Maps and Bing Maps." In Proceedings of the Ninth International Symposium on Spatial Accuracy Assessment in Natural Resources and Environmental Sciences, 20-23 July 2010, p. 337. University of Leicester.

Liu, Chang, and Tamás Szirányi. (2021) "Real-Time Human Detection and Gesture Recognition for On-Board UAV Rescue." Sensors 21, no. 6 2180.

Zhou, Lichen, Chuang Zhang, and Ming Wu. (2018) "D-LinkNet: LinkNet with pretrained encoder and dilated convolution for high resolution satellite imagery road extraction." In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 182-186.

Hong, Danfeng, Lianru Gao, Jing Yao, Bing Zhang, Antonio Plaza, and Jocelyn Chanussot. (2020) "Graph convolutional networks for hyperspectral image classification." IEEE Transactions on Geoscience and Remote Sensing.

Mohammadi, M. (2012) "Road classification and condition determination using hyperspectral imagery." Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 39 B7.

Jenerowicz, Agnieszka, Katarzyna Siok, Malgorzata Woroszkiewicz, and Agata Orych. (2017) "The fusion of satellite and UAV data: simulation of high spatial resolution band." In Remote Sensing for Agriculture, Ecosystems, and Hydrology XIX, vol. 10421, p. 104211Z. International Society for Optics and Photonics.

Maimaitijiang, Maitiniyazi, Vasit Sagan, Paheding Sidike, Ahmad M. Daloye, Hasanjan Erkbol, and Felix B. Fritschi. (2020) "Crop Monitoring Using Satellite/UAV Data Fusion and Machine Learning." Remote Sensing 12, no. 9 1357.

Kim, Sangyoup, Jonghak Lee, and Taekwan Yoon. (2021) "Road surface conditions forecasting in rainy weather using artificial neural networks." Safety Science 140 105302.

Ben-Dor, E. (2002) "Quantitative remote sensing of soil properties." 173-243.

Ben-Dor, E., Sabine Chabrillat, J. Al M. Demattê, G. R. Taylor, J. Hill, M. L. Whiting, and S. Sommer. (2009) "Using imaging spectroscopy to study soil properties." Remote Sensing of Environment 113 S38-S55.

Cui, Shi-Gang, Hui Wang, and Li Yang. (2012) "A simulation study of A-star algorithm for robot path planning." In 16th International Conference on Mechatronics Technology, pp. 506-510.

Demir, Ilke, Krzysztof Koperski, David Lindenbaum, Guan Pang, Jing Huang, Saikat Basu, Forest Hughes, Devis Tuia, and Ramesh Raskar. (2018) "DeepGlobe 2018: A challenge to parse the earth through satellite images." In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 172-181.

PGC. "DigitalGlobe Satellite Constellation – Polar Geospatial Center." www.pgc.umn.edu, 20 Mar. 2018, www.pgc.umn.edu/guides/commercial-imagery/digitalglobe-satellite-constellation/. Accessed 27 Jan. 2022.

"Discover a Wide Range of Drone Datasets." SenseFly, www.sensefly.com/education/datasets/?dataset=1419&sensors%5B%5D=24. Accessed 25 Jan. 2022.

Kuswadi, Son, Jeffri Wahyu Santoso, M. Nasyir Tamara, and Mohammad Nuh. (2018) "Application SLAM and path planning using A-star algorithm for mobile robot in indoor disaster area." In 2018 International Electronics Symposium on Engineering Technology and Applications (IES-ETA), pp. 270-274. IEEE.

"Heuristics." Stanford.edu, 2019, theory.stanford.edu/~amitp/GameProgramming/Heuristics.html.

Zhu, Qiqi, Weihuan Deng, Zhuo Zheng, Yanfei Zhong, Qingfeng Guan, Weihua Lin, Liangpei Zhang, and Deren Li. (2021) "A Spectral-Spatial-Dependent Global Learning Framework for Insufficient and Imbalanced Hyperspectral Image Classification." IEEE Transactions on Cybernetics.
