The Future of Higher Education Is Social and Personalized!

Experience Report and Perspectives

Sven Strickroth (a) and François Bry (b)

Institute for Informatics, Ludwig-Maximilian University of Munich, Germany

Keywords:

Technology-enhanced Learning, Peer Review, Automatic Feedback, Student Activation, Learning Analytics, CSCL.

Abstract:

This position paper is devoted to learning and teaching technologies aimed at alleviating problems and addressing challenges faced by learners or teachers in mass classes. It reports on practical experiments run over recent terms at the Institute for Informatics of Ludwig-Maximilian University of Munich, Germany. Furthermore, perspectives for higher education opened by technology are discussed: digital social learning and/or experimentation spaces and technology-based personalization. It is further argued that such approaches provide specific advantages that are desirable beyond the teaching of mass classes.

1 INTRODUCTION

Higher education in most countries of continental Europe and other world regions is nowadays characterized by mass classes, that is, courses attended by one to several hundred students. According to constructivist learning theory and connectivism (Goldie, 2016), learning is an inherently social process. However, social interactions and discussions become rather limited in mass teaching. Mass classes leave frontal lecturing as practically the only option, which often results in rather inactive students and high dropout rates. Furthermore, mass classes often limit the feedback that teachers and tutors can provide and make the grading of examinations lengthy and therefore challenging. This position paper is devoted to learning and teaching technologies aimed at alleviating the aforementioned problems and addressing the outlined challenges of mass teaching.

This position paper reports on experiences gained at the Institute for Informatics of Ludwig-Maximilian University of Munich, Germany, through deploying both established and novel Technology-Enhanced Learning (TEL) methods to alleviate many disadvantages of mass teaching for students and teachers alike in three dimensions: first, approaches to activate students in mass classes, such as learning-specific backchannels and peer teaching; second, approaches to crowdsource teaching tasks, such as giving feedback, correcting submitted solutions, and supporting tutors in collaborative feedback provisioning; third, automated feedback provisioning and examination pre-corrections.

(a) https://orcid.org/0000-0002-9647-300X

(b) https://orcid.org/0000-0002-0532-6005

Furthermore, this article reports on experiences with applying data science in education investigated at the same institute: improving learners’ self-regulated learning by nudging them toward peer review, by reporting on their learning activities, and by predicting their examination performance from its correlation with their learning activities; and, finally, detecting systemic errors among learners through human computation and collaboration.

The research questions addressed in this paper are: (1) How can students be activated and interactivity be increased in mass classes? (2) How can large numbers of students be leveraged (e.g., to crowdsource teaching tasks)? (3) How can teaching tasks such as feedback provisioning and correcting be (semi-)automated? (4) How can students be nudged toward active learning?

Finally, perspectives for exploiting TEL in higher education are presented: the need for social learning spaces, personalization, and spaces for experimentation and discussion.

The contributions of this position paper are an overview of deployed approaches to tackling the aforementioned challenges and perspectives for TEL-based social and personalized learning in higher education.

Strickroth, S. and Bry, F.

The Future of Higher Education Is Social and Personalized! Experience Report and Perspectives.

DOI: 10.5220/0011087700003182

In Proceedings of the 14th International Conference on Computer Supported Education (CSEDU 2022) - Volume 1, pages 389-396

ISBN: 978-989-758-562-3; ISSN: 2184-5026

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 ENABLING INTERACTION IN MASS CLASSES

One of the main issues with mass lectures is their limited interactivity. In this section, experiences with two approaches are outlined: (1) learning-specific backchannels from the students to the teacher and (2) TEL approaches to activate students during lectures.

One of the goals in developing the tool Backstage (Gehlen-Baum et al., 2012) was to provide a connected and interacting community dedicated to a single course. The reason for not using existing social media, such as Twitter, was to avoid distraction by off-topic content and to exploit novel learning-specific features. The first version of Backstage offered a digital backchannel connecting lecture participants (students and teachers alike). Backstage provided a forum-like structure with which students could ask questions, answer their peers’ questions, comment on the lecture, or annotate lecture slides. Posts by students could be upvoted and downvoted by students and teachers, and lecture participants could categorize them as “question”, “answer”, “remark”, or “off-topic”. This tagging has proven effective at preventing off-topic use of the backchannel through social control by the student community itself. Backstage has been shown to foster interactivity and awareness in large-class lectures and to encourage lecture-relevant communication in different courses (Bry and Pohl, 2017). Compared to a simple chat such as those provided by video-conferencing systems like Zoom, Backstage’s backchannel is much more effective at focusing communication on lecture-relevant content and at creating social interactions, such as several students progressively refining a question to a teacher or answering questions posed by fellow students.
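The voting and tagging mechanism described above can be pictured with a small data model. The following Python sketch is hypothetical and is not Backstage’s implementation: the `Post` class, the one-vote-per-participant rule, the rule that the label chosen by most participants wins, and the `visible_feed` helper that hides posts the community has labeled “off-topic” are all assumptions made for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional

CATEGORIES = {"question", "answer", "remark", "off-topic"}


@dataclass
class Post:
    author: str
    text: str
    votes: dict[str, int] = field(default_factory=dict)   # participant -> +1 (up) or -1 (down)
    labels: dict[str, str] = field(default_factory=dict)  # participant -> chosen category

    def vote(self, participant: str, up: bool) -> None:
        # Assumption: one vote per participant; voting again overwrites the old vote.
        self.votes[participant] = 1 if up else -1

    def tag(self, participant: str, category: str) -> None:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        self.labels[participant] = category

    @property
    def score(self) -> int:
        return sum(self.votes.values())

    @property
    def category(self) -> Optional[str]:
        # Assumption: the category chosen by most participants wins.
        if not self.labels:
            return None
        return Counter(self.labels.values()).most_common(1)[0][0]


def visible_feed(posts: list[Post]) -> list[Post]:
    """Hide posts the community tagged as off-topic; show the best-rated first."""
    on_topic = [p for p in posts if p.category != "off-topic"]
    return sorted(on_topic, key=lambda p: p.score, reverse=True)
```

Because the categorization comes from the participants themselves, hiding off-topic posts in this sketch is a form of the social control by the student community mentioned above rather than a moderation task for the teacher.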

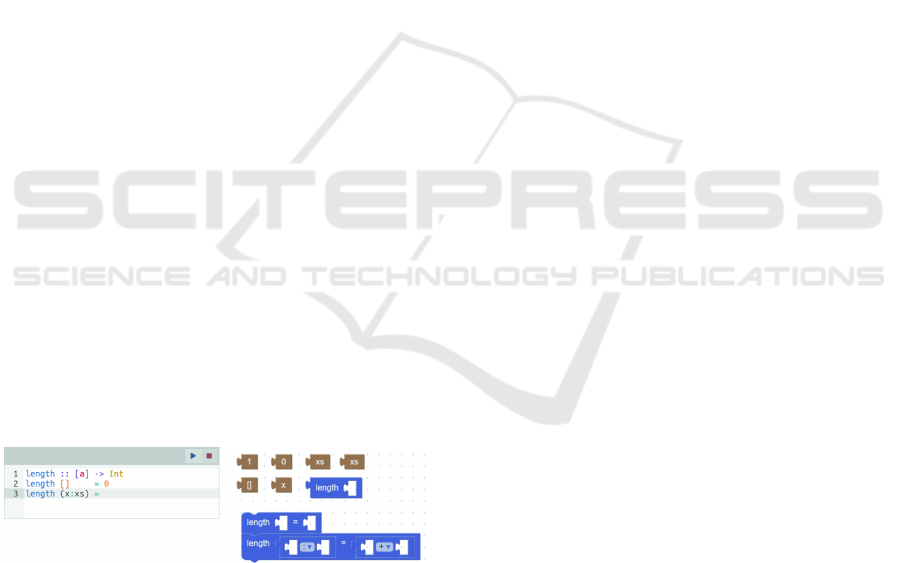

Figure 1: Domain-specific puzzle-like question; taken from (Mader and Bry, 2019a), Fig. 4, p. 213.

A second version of Backstage (Mader and Bry, 2019a) extended the system with an audience response system (ARS) supporting sophisticated quizzes. Backstage’s ARS is aimed at activating students both during and outside lectures. Backstage is a web-based system and can therefore be used by students on their smartphones or laptops. Unlike simple ARS, it supports various quiz types, ranging from simple multiple-choice questions through the identification of hotspots in images (useful, e.g., in medical education or geography) to domain-specific question types such as constructing logic proofs and puzzle-like programming tasks (cf. Figure 1). Furthermore, Backstage’s quizzes adapt to the students’ needs and knowledge. Backstage also supports “phased quizzes”, which can run over a longer period of time so as to support self-study. On the one hand, Backstage’s quizzes give teachers immediate feedback on the students’ understanding and competencies. On the other hand, they also activate students and give them instant feedback. Like other ARS, Backstage can have positive effects on attendance and engagement, as has been shown for ARS in general (Kay and LeSage, 2009; Oigara and Keengwe, 2013), and it can also be used to initiate and foster (offline) peer discussion in class (Mazur and Somers, 1999).

3 CROWDSOURCING TEACHING TASKS

The main issue with mass teaching is that the number of students is increasing, but the number of teachers and tutors cannot be increased accordingly due to limited resources. This directly limits the provision of (timely) feedback, which, according to Hattie, is one of the most important factors for learning success (Hattie and Timperley, 2007). However, a large class also makes it possible to leverage manpower by crowdsourcing certain teaching tasks.

A first approach to coping with an insufficient number of teachers is peer review. Peer review is a learning activity in which students evaluate and deliver written feedback on the work of their peers (Nicol, 2010). Peer review and peer feedback are often used in essay writing (Cho and Schunn, 2007); however, they can be used for any task whose solutions require some creativity. Peer review can be run asynchronously on homework or as homework, synchronously in class, as a pre-correction for the teacher, or as the final feedback for the students. Peer review and peer feedback have been successfully tested with the systems Backstage (Heller and Bry, 2019b; Mader and Bry, 2019d) and GATE (Strickroth et al., 2011), designed and deployed by the authors in different scenarios such as introductory programming courses (teaching Haskell and Java). The authors’ experience with programming assignments is that students who are good at spotting their peers’ errors are also good at identifying correct submissions and are therefore unlikely to give false feedback (Heller and Bry, 2019b). However, about 22% of the feedback given turned out to be partially incorrect, so further research on how to support students is necessary. In general, peer review has proven to be a powerful method, as it allows students to receive timely and extensive feedback; furthermore, multiple reviews by different peers have been shown to be better than a single review by an expert (Cho and Schunn, 2007). Peer feedback and review also have other advantages, which are discussed in Section 5.
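Distributing reviews so that every submission receives several peer reviews is a small assignment problem. The Python sketch below shows one common scheme, a shuffled circular assignment; it is an illustration under stated assumptions (every student submits, and each student reviews exactly `k` peers), not the algorithm used in Backstage or GATE, and `assign_reviews` is a hypothetical name.

```python
import random
from typing import Optional


def assign_reviews(students: list[str], k: int, seed: Optional[int] = None) -> dict[str, list[str]]:
    """Map each reviewer to the k peers whose submissions they review.

    Students are placed on a randomly shuffled circle and each one reviews the
    next k students on it. Hence nobody reviews their own work, every reviewer
    gets exactly k submissions, and every submission gets exactly k reviews.
    """
    if not 0 < k < len(students):
        raise ValueError("need 0 < k < number of students")
    circle = list(students)
    random.Random(seed).shuffle(circle)  # seeded so an assignment can be reproduced
    n = len(circle)
    return {
        circle[i]: [circle[(i + offset) % n] for offset in range(1, k + 1)]
        for i in range(n)
    }
```

The shuffle keeps reviewer/author pairs unpredictable for the students, while the circular offsets guarantee a balanced review workload.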

Another approach to providing feedback has been tested with a prototype system for reducing the teachers’ and tutors’ workload when correcting homework (Heller and Bry, 2021). This approach relies on the facts that (1) mass teaching also requires multiple tutors who correct submitted solutions and (2) students often make similar mistakes (e.g., due to common misconceptions). In order to support teachers, the proposed software uses text processing, collaborative filtering, and teacher collaboration in a wiki-like environment, and suggests feedback fragments while the tutors are typing. The approach has demonstrated its feasibility and effectiveness in multiple case studies (Heller and Bry, 2021).
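To make the idea of suggesting feedback fragments while typing more concrete, the following Python sketch ranks fragments from a pool shared by all tutors by how often they have been used. It is a deliberately simplified stand-in: it uses plain prefix matching and usage counts instead of the text processing and collaborative filtering of the actual prototype (Heller and Bry, 2021), and the `FeedbackPool` class is hypothetical.

```python
from collections import Counter


class FeedbackPool:
    """Hypothetical pool of feedback fragments shared by all tutors of a course."""

    def __init__(self) -> None:
        self.uses: Counter = Counter()  # fragment -> how often tutors have used it

    def record(self, fragment: str) -> None:
        """Called whenever a tutor sends feedback containing this fragment."""
        self.uses[fragment.strip()] += 1

    def suggest(self, typed: str, limit: int = 3) -> list[str]:
        """Fragments starting with what the tutor has typed so far, most used first."""
        prefix = typed.strip().lower()
        if not prefix:
            return []
        matches = [f for f in self.uses if f.lower().startswith(prefix)]
        matches.sort(key=lambda f: (-self.uses[f], f))  # ties broken alphabetically
        return matches[:limit]
```

Because the pool is shared, a fragment written once by one tutor for a common misconception becomes available to every other tutor correcting the same assignment.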

4 AUTOMATED FEEDBACK AND COMPUTER-ASSISTED CORRECTION

Learning requires assessment (Kirkwood and Price, 2008). One can distinguish between assessment for learning (often referred to as “formative assessment”) and assessment of learning (often referred to as “summative assessment”). This section reports on experiences with two formative assessment approaches (automated feedback generation and in-class feedback for the teacher) and one summative assessment approach using computer-assisted pre-corrections, which have been tested in two programming courses (in Java and Haskell, respectively).

Learning requires that students do their homework, regardless of whether their solutions turn out to be correct or incorrect. In addition, learning is improved by both immediate and delayed feedback (Narciss, 2008). Hence, an approach combining both types of feedback has been tested.

It is worth stressing that both immediate and delayed feedback are hardly possible in large classes without the help of technology. For programming tasks, the approach tested consisted of two automated checks that every student could request twice before the final submission deadline. This limitation to two requests aimed at preventing misuse such as gaming the system (Baker et al., 2008). The first check was a syntax check using a compiler; the second was a unit test. An earlier study with the GATE system has shown that even simple feedback such as knowledge of result/response (Narciss, 2008), i.e., whether a program compiles or whether a unit test passes or fails, significantly reduced errors in students’ final submissions, even when each test could be requested only once (Strickroth et al., 2011). Additionally, the test results were presented to the tutors for each submission as an aid for their manual inspection, which the tutors rated as very helpful. In both courses, the correct solutions were discussed in dedicated sessions.
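The request-limited checks described above can be sketched as follows. The sketch is written in Python for illustration, whereas the courses used Java and Haskell compilers and unit tests. It assumes that the quota of two requests applies per student and per check; Python’s built-in `compile` stands in for the compiler, the `add` function is a hypothetical assignment, and running submissions with `exec` is only acceptable in a toy example, since a real system would have to sandbox student code.

```python
from collections import defaultdict
from typing import Callable

MAX_REQUESTS = 2  # assumption: two requests per student and per check before the deadline


class QuotaExceeded(Exception):
    """Raised when a student has used up all requests for a check."""


class CheckService:
    def __init__(self, checks: dict[str, Callable[[str], bool]], max_requests: int = MAX_REQUESTS):
        self.checks = checks
        self.max_requests = max_requests
        self.used = defaultdict(int)  # (student, check name) -> requests so far

    def request(self, student: str, check: str, source: str) -> bool:
        """Run one check for one student; only pass/fail is reported back."""
        key = (student, check)
        if self.used[key] >= self.max_requests:
            raise QuotaExceeded(f"{student} has no '{check}' requests left")
        self.used[key] += 1
        return self.checks[check](source)


def syntax_check(source: str) -> bool:
    """Stand-in for the compiler check: does the submission parse?"""
    try:
        compile(source, "<submission>", "exec")
        return True
    except SyntaxError:
        return False


def unit_test(source: str) -> bool:
    """Stand-in unit test for a hypothetical assignment: implement add(a, b)."""
    namespace: dict = {}
    try:
        exec(source, namespace)  # toy example only: real systems must sandbox student code
        add = namespace["add"]
        return add(2, 3) == 5 and add(-1, 1) == 0
    except Exception:
        return False
```

Both checks return only pass or fail, which corresponds to knowledge of result/response feedback; the per-key counter is what enforces the request limit.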

Such automated evaluations can also be used in synchronous settings, where a challenge in large classes is identifying the students who need help most. This is particularly the case in synchronous programming labs: it turns out that a teacher and a few tutors walking along the rows of seats are not sufficient to help the students. Most students have questions and expect feedback. Therefore, teachers often do not recognize the students who struggle most, or do not recognize them early enough. To address this issue, a learning analytics dashboard was developed for Backstage that provides teachers with a real-time overview of the progress of all students (Mader and Bry, 2019c). This dashboard allowed the teacher to easily identify the students who struggled most. The results of a comparative study of settings with and without this dashboard are promising (Mader and Bry, 2019c).
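How such a dashboard might order students for the teacher can be illustrated with a simple scoring heuristic. The Python sketch below is hypothetical: the three progress signals, their weights, and the `needs_help_ranking` function are illustrative assumptions, not the indicators used in the Backstage dashboard (Mader and Bry, 2019c).

```python
from dataclasses import dataclass


@dataclass
class Progress:
    student: str
    solved: int           # exercises solved so far in this lab session
    failed_attempts: int  # failed automated checks so far
    minutes_idle: float   # minutes since the student last made progress


# Hypothetical weights; a real dashboard would have to calibrate or learn them.
W_UNSOLVED, W_FAILED, W_IDLE = 2.0, 0.5, 0.1


def help_priority(p: Progress, total_exercises: int) -> float:
    """Higher values mean the student more likely needs help right now."""
    unsolved_share = 1 - p.solved / total_exercises
    return W_UNSOLVED * unsolved_share + W_FAILED * p.failed_attempts + W_IDLE * p.minutes_idle


def needs_help_ranking(progress: list[Progress], total_exercises: int, top: int = 3) -> list[str]:
    """Names of the `top` students with the highest help priority."""
    ranked = sorted(progress, key=lambda p: help_priority(p, total_exercises), reverse=True)
    return [p.student for p in ranked[:top]]
```

Recomputing the ranking whenever a new check result arrives would yield the kind of real-time overview described above.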

Finally, this section reports on recent experiments to support mass examinations in two different programming courses (with Haskell and Java, respectively). Correction and grading should be completed within a specific time frame so that students learn about their performance early enough and, if necessary, can register and study for a second-chance examination. Traditional pen-and-paper examinations have several drawbacks: first, handwritten text is rarely easy to read; second, open-ended questions are complicated to grade and are also prone to lengthy discussions during the inspection of the graded examination (in Germany, students have the right to inspect their graded examination and to raise objections). Therefore, a fully digital, decentralized open-book examination was conducted using the GATE system. The questions were designed as fill-in-the-gap tasks with one or a few correct solutions, or as multiple-choice questions. A side effect was that the examination was more realistic and competency-oriented, as students did not need to write programs on paper with a pen but could use a compiler and test their solutions. Another design choice was to use a binary marking scheme without partial marks. This way, the submitted solutions could be evaluated automatically in real time, which also gave the teachers a real-time view of the students’ progress during the examination. A final human verification of every answer marked by the software as “probably incorrect” ensured a fair and thorough correction. The automatic pre-correction ensured that tutors needed to inspect only a fraction of the submitted solutions, which significantly reduced the time needed for correction. In a first investigation, no significant difference from traditional examinations was observed regarding pass/fail rates or grades achieved.
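The combination of binary marking and human verification can be sketched as a pre-correction step that awards a mark only to answers matching an accepted solution and queues every other answer for a tutor. The Python sketch below is an illustration under assumptions, not GATE’s implementation: the whitespace-only normalization (case is kept because identifiers in Java and Haskell are case-sensitive), the data layout, and the `pre_correct` function are hypothetical.

```python
import re


def normalize(answer: str) -> str:
    """Collapse runs of whitespace; case is kept because identifiers are case-sensitive."""
    return re.sub(r"\s+", " ", answer.strip())


def pre_correct(answers: dict[str, str], accepted: dict[str, set[str]]) -> tuple[dict[str, int], list[str]]:
    """Binary pre-correction of one examination.

    answers:  question id -> the student's answer
    accepted: question id -> accepted solutions (one to a few per gap)
    Returns (marks, review_queue): marks are 1 or 0 (no partial marks); the
    review queue lists the questions whose answers are "probably incorrect"
    and must be checked by a tutor before a 0 becomes final.
    """
    marks: dict[str, int] = {}
    review_queue: list[str] = []
    for question, answer in answers.items():
        ok = normalize(answer) in {normalize(a) for a in accepted[question]}
        marks[question] = 1 if ok else 0
        if not ok:
            review_queue.append(question)
    return marks, review_queue
```

Only answers in the review queue need human attention, which is where the reduction in correction time comes from; a tutor who finds a valid but unforeseen answer there could add it to the accepted set.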

5 NUDGING STUDENTS TO ACTIVE LEARNING

In this section, three approaches to nudging students to engage actively in learning are presented. These go beyond common ways of nudging students to do their homework, such as making homework a prerequisite for taking the examination or basing the grade on both homework and examination. Neither of these traditional approaches is always possible due to examination regulations.

Peer review can be used not only to crowdsource teaching tasks (cf. Section 3) but also to provide feedback that improves the learning process by activating students (Li et al., 2019; Zheng et al., 2019). However, an important prerequisite for using peer review is that students actually write feedback and do not just try to get feedback from others. A low participation rate poses a significant threat to the entire approach, as students may receive little to no feedback.

In a first experiment with Backstage in a Bachelor’s introductory programming course, a low participation rate was observed; however, most students indicated that receiving and delivering peer reviews was “mostly helpful” to their learning, and they remarked positively on seeing and thinking about different solutions (Heller and Bry, 2019b).

In a second experiment, currently underway using GATE, a restriction was introduced to motivate students to submit their solutions and deliver feedback regularly: skipping twice results in exclusion from further peer feedback. Students’ responses to peer feedback are promising: participation in peer-reviewed assignments is higher than in assignments that are not peer-reviewed. This suggests that reviewing different peers’ solutions and receiving feedback from peers may be a motivating factor for doing the assignments. An in-depth analysis of the collected data is underway.
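The participation rule can be expressed as a small state machine per student. The Python sketch below assumes, since this is not specified above, that skips are counted in total rather than consecutively and that a round counts as skipped when a student either submits no solution or does not deliver the assigned review; `PeerReviewParticipant` and `active_reviewers` are hypothetical names.

```python
from dataclasses import dataclass

MAX_SKIPS = 2  # "skipping twice results in exclusion from further peer feedback"


@dataclass
class PeerReviewParticipant:
    name: str
    skips: int = 0
    excluded: bool = False

    def record_round(self, submitted: bool, reviewed: bool) -> None:
        """Update the participant's state after one peer review round."""
        if self.excluded:
            return  # excluded students no longer take part in peer review
        # Assumption: a round is skipped if either the submission or the review is missing.
        if not (submitted and reviewed):
            self.skips += 1
            if self.skips >= MAX_SKIPS:
                self.excluded = True


def active_reviewers(participants: list[PeerReviewParticipant]) -> list[str]:
    """Names of students who may still be assigned submissions to review."""
    return [p.name for p in participants if not p.excluded]
```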

Audience response systems can not only improve interactivity in class but also nudge students to participate actively in a lecture and reflect on specific problems. Studies suggest that the use of ARS can lead to higher retention rates and also has positive effects on overall class examination scores, especially for students whose performance is in the lowest quartile (Hoyt et al., 2010; Kay and LeSage, 2009). Using Backstage, a team-based social competition with quizzes aimed at boosting participation was tested (Mader and Bry, 2019b). A first evaluation in a small class demonstrated the effectiveness of the approach, and a second evaluation suggests that, for use in large classes, teams have to be formed in a specific way (Mader and Bry, 2019b).

Finally, this section reports on a case study that uses learning analytics to encourage students not to skip homework and to increase their participation in class (Heller and Bry, 2019a). In a Bachelor course on theoretical computer science, students were given individual predictions of their withdrawal from, or “skipping” of, assignments and of their examination performance (called “examination fitness”). The evaluation shows that this course had the lowest skipping rates compared with the same course in the three previous years; for two of these years, the difference is also statistically significant (Heller and Bry, 2019a). Attitudes toward such predictions were also investigated: the students did not find any of the predictions discouraging, nor did they report being motivated by them to do more homework. However, the examination fitness prediction was perceived as more interesting than the skipping prediction.
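The paragraph above does not describe how the predictions were computed. As a purely illustrative assumption, a skipping prediction could be derived from the data of a previous run of a course with a one-feature logistic regression, fitted below by batch gradient descent in plain Python; the feature (share of the first assignments submitted), the toy data in the usage, and the function names are hypothetical and are not taken from Heller and Bry (2019a).

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs: list[float], ys: list[int], lr: float = 0.5, epochs: int = 2000) -> tuple[float, float]:
    """Fit p(skip | x) = sigmoid(w * x + b) by batch gradient descent on the log-loss.

    xs: one feature per student of a previous course run
        (hypothetical: share of the first assignments the student submitted)
    ys: 1 if the student later skipped assignments, else 0
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # derivative of the log-loss w.r.t. the logit
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def skip_risk(w: float, b: float, x: float) -> float:
    """Predicted probability that a current student with feature x skips assignments."""
    return sigmoid(w * x + b)
```

A model used with real students would need more features, regularization, and validation on held-out course runs, and it raises the privacy and bias questions discussed in Section 6.2.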

6 PERSPECTIVES

While TEL is often used to address only pressing classroom organization and management issues (e.g., managing examination registrations and distributing PDF files) (Markova et al., 2017; Henderson et al., 2017), the experience reported in the previous sections shows that TEL can also be used to support social aspects of learning, such as computer-supported collaborative learning, co-regulation, and peer teaching. In this section, the authors present their vision for TEL-based social and personalized learning in higher education, which is relevant not only to mass teaching.


6.1 Social Learning Spaces

Good learning, and therefore good teaching, requires an intensive, active exchange among students as well as between students and teachers. Such an exchange, however, is the first thing to disappear in mass teaching, e.g., when there are hundreds of students in a course. Not all students dare to raise issues or ask questions in front of large audiences. Moreover, it can be difficult to find learning partners when one sits next to a different student in every lecture.

Social media specialized for learning can be used to create social learning spaces that would otherwise hardly be possible. As described in the previous sections, such systems allow students to ask and, more importantly, structure questions and possible answers. Structuring can be further improved by allowing students to vote for questions they share. This way, the teacher does not have to deal with a dozen separate questions but can gain a quick overview and focus on the most urgent ones. Similar approaches could also be used in asynchronous scenarios in which students share a digital workspace, such as a wiki, where they can collaboratively collect and optimize their questions and answers or provide peer feedback. In this way, TEL makes it possible to mitigate the communication bottleneck.

The last example shows that it is possible to turn mass teaching into an advantage: a large number of students makes it possible to involve them and take advantage of their heterogeneity by forming a community of practice (Wenger et al., 2002). The students have a common goal and can work collaboratively to solve difficult problems. The greater heterogeneity of the student group is also likely to lead to more questions, and, again, collaboration can lead to better questions and to students supporting each other (e.g., through peer reviews or by collaboratively collecting, developing, and optimizing learning materials, for instance in a wiki). Technology also enables collaboration among students at different locations who are not at the same university or even on the same continent.

The challenge, on both the pedagogical and the technical side, is to design such a community and to foster exchanges among students as well as between students and teachers. Furthermore, many more social aspects can be supported, such as finding other students who share the same interests or struggle with the same issues.

There are two further issues to address. First, given the speed at which STEM (Science, Technology, Engineering, and Mathematics) is evolving, lifelong learning is becoming increasingly important. Therefore, universities should go beyond alumni portals, where graduates have to register manually, and create social spaces that allow active exchange with their (former) students for learning and collaboration.

Second, social spaces for learning and collaboration are also needed for teachers, spanning more than a single department, university, or even country. Professional social media can enable teachers with similar interests to connect, share experience, and collaboratively develop teaching materials (Bothmann et al., 2021; Strickroth et al., 2015). For example, specialized systems and repositories that allow teachers to peer review and collaboratively optimize examination questions should be considered. Such approaches are not possible without the use of technology.

6.2 Personalization

Personalization is probably best achieved by teachers who are in direct contact with their students (cf. previous section). However, such direct interaction is not always possible, e.g., with a student-to-teacher ratio of over 800 for professors and over 70 for teaching assistants (Heller and Bry, 2019b). Here, automation and support through technology can not only free up time that can then be used for more interaction with students but also provide new insights, on both a small and a large scale, that would hardly be possible without technology.

For learning, students build their own digital personal learning environment from systems provided by the university, such as learning management systems or the systems described in the previous sections, and from systems they select for specific tasks. Every interaction with these systems, such as solving (manually or automatically generated) assignments, generates data traces in the underlying systems. Not only do students generate data when they interact with the systems, but so do teachers when they assess student contributions. This data is often not used systematically, and if it is, it is only used within a single system.

The data collected can be useful in two ways:

First, students’ submitted solutions, previous attempts, and attached metadata such as marks, grades, or feedback given by teachers can be used for personalization on a small scale: learning analytics can be applied to the data available in a system to adapt to the needs and knowledge levels of specific students or to help tutors give timely feedback. There is a wide spectrum of possible personalization approaches, including generating feedback (e.g., in real time, based on similar submissions or shared misconceptions), generating questions based on detected misconceptions or knowledge gaps, and using data from previous runs of a course as a basis for predictions in subsequent runs in order to warn and support students at risk. Pre-corrections and learning analytics can also be used to support tutors, or their collaboration, while they inspect student solutions. Additionally, one can analyze how students interact with the system and with each other and identify usage and learning patterns that can help to adjust the teaching method and to optimize the learning environment, including the software used.

Second, students’ life-cycle data, such as their participation in exams and their grades in previously attended courses, can be harnessed using data science techniques. On the one hand, this makes it possible to identify students at risk and provide them with personalized warnings and recommendations for their studies. On the other hand, it can also be used to identify difficult courses and better prepare students for them.

Besides these advantages, there are also challenges: such learning analytics approaches have rarely been used in Europe, possibly because of a widespread fear among European higher education teachers of violating privacy regulations. Local university or statewide initiatives are needed to establish trusted learning analytics that engage all stakeholders (cf. Drachsler and Greller, 2016). A good starting point could be a single study program; the approach could then be extended to other study programs, and plans could be developed for exchanging data across institutions (e.g., from school to university). In addition to privacy, solutions are also needed to avoid possible bias in the data (Riazy and Simbeck, 2019) and to train teachers and students in interpreting and making use of the analyses (Slade and Prinsloo, 2013).

6.3 Space for Experimenting and Discussions

Finally, spaces for experimenting with innovative approaches to teaching are desirable. This needs to be considered on two different levels:

First, a change in teaching culture is needed (at least in continental Europe). Teaching is often not discussed among teachers outside the communities that are already interested in it. There are also inhibitions to experimentation, such as data protection regulations that are perceived as too complicated. The COVID-19 pandemic triggered many experiments; however, teachers should not fall back completely into old habits. Rather, teachers should learn from their attempts to deal with teaching during the pandemic and use them as a foundation for further discussion and experimentation. There is enormous pedagogical potential. Teachers also need to be more creative in devising scenarios that are impossible or hardly possible without technology, such as making use of the heterogeneity of a large class. Again, an exchange between teachers and Technology-Enhanced Learning researchers is necessary so that they can join forces to develop new ideas (cf. Section 6.1). A side effect, or even a specific goal, could be that technology enables people with disabilities, or people who are otherwise constrained in time or location, such as single parents, to take part in courses or learning scenarios.

Second, (flexible) technological infrastructures are needed! This is a rather technical point of view, but it is as important as the first point. On the one hand, an infrastructure is required so that even non-tech-savvy teachers can set up and use software that fits their needs, runs within the university, and complies with privacy regulations (Strickroth et al., 2021). On the other hand, there will be prototypes that evolve over time and prove to be effective. A challenge here is to bring these into production and to transfer their operation to data centers, as researchers cannot operate technology for a whole university (Bußler et al., 2021; Kiy et al., 2017).

7 SUMMARY AND CONCLUSIONS

In practice, TEL is often used to address only pressing classroom organization and management issues. However, the experience reported in this position paper shows that TEL can be used not only to support social aspects of learning but also to turn certain aspects of mass teaching into an advantage.

The paper discussed experiences with different approaches for enabling interaction in mass classes, crowdsourcing teaching tasks, automated feedback and computer-assisted correction, and nudging students to active learning. Finally, the paper presented perspectives that technology opens for higher education: digital social learning and/or experimentation spaces and technology-based personalization. The experiments presented in this paper are first steps towards this vision.

The presented results also outline further directions for researching and optimizing the described approaches, such as how to better support students in peer reviews of programming assignments. The employed technologies are research prototypes. Limitations and drawbacks are discussed in the respective papers and cannot be presented in detail here due to page limitations.

Almost all studies deal with computer science contexts and, therefore, employ domain-specific approaches, such as unit tests for automatically evaluating programming assignments, that are not available in other domains. However, the authors argue that comparable automatic tests can be used in other STEM contexts. For other domains such as languages, heuristics based on metrics or emerging machine learning approaches might be applicable as an aid for semi-automated grading or formative feedback (e.g., Stab and Gurevych, 2017). Nevertheless, such approaches need to be investigated further. ARS and peer review have already been used in various contexts (e.g., Keough, 2012; van Popta et al., 2017).

Most of the approaches described are not limited to mass teaching but can also be used in smaller classes. Related disciplines or practices for school contexts with about 30 learners are classroom orchestration (cf. Dillenbourg, 2013) and learning engineering (cf. Baker et al., 2021), which cannot be discussed in detail here due to page limitations. Note, however, that the use of technology should not replace the human component in learning and teaching but should enable or complement it. That is, technology can provide timely personalized feedback to students, allow students at different locations to collaborate, enable students with disabilities or students constrained in time or location to learn and interact with each other, free up time by automating certain (often recurring and/or tedious) tasks, or provide insights into learning processes that are hardly possible otherwise. TEL offers enormous social and pedagogical potential that needs to be explored...

ACKNOWLEDGEMENTS

The authors are grateful to the more than 10,000 students who, over the last decade, actively participated in testing and discussing the approaches to learning and teaching reported in this article. The authors are also grateful to their colleagues and (doctoral) students, especially Dr. Niels Heller and Dr. Sebastian Mader, for their feedback, advice, and contributions to the research reported in this article. The authors also thank the reviewers for their valuable feedback.

REFERENCES

Baker, R., Walonoski, J., Heffernan, N., Roll, I., Corbett, A., and Koedinger, K. (2008). Why students engage in “gaming the system” behavior in interactive learning environments. Journal of Interactive Learning Research, 19(2):185–224.

Baker, R. S., Boser, U., and Snow, E. L. (2021). Learning engineering: A view on where the field is at, where it’s going, and the research needed. Available online: https://www.upenn.edu/learninganalytics/ryanbaker/TMB_Learning%20Engineering_v12rsb.pdf.

Bothmann, L., Strickroth, S., Casalicchio, G., Rügamer, D., Lindauer, M., Scheipl, F., and Bischl, B. (2021). Developing open source educational resources for machine learning and data science. arXiv:2107.14330v2.

Bry, F. and Pohl, A. Y.-S. (2017). Large class teaching with Backstage. Journal of Applied Research in Higher Education (Special Issue on reviewing the performance and impact of social media tools in higher education), 9(1):105–128.

Bußler, D., Lucke, U., Strickroth, S., and Weihmann, L. (2021). Managing the transition of educational technology from a research project to productive use. In SE-SE2021 - Software Engineering 2021 Satellite Events - Workshops and Tools & Demos. Proceedings of the Software Engineering 2021 Satellite Events, volume 2814 of CEUR Workshop Proceedings. CEUR-WS.org.

Cho, K. and Schunn, C. D. (2007). Scaffolded writing and rewriting in the discipline: A web-based reciprocal peer review system. Computers & Education, 48(3):409–426.

Dillenbourg, P. (2013). Design for classroom orchestration. Computers & Education, 69:485–492.

Drachsler, H. and Greller, W. (2016). Privacy and analytics: it’s a DELICATE issue: a checklist for trusted learning analytics. In Proceedings of the sixth international conference on learning analytics & knowledge, pages 89–98.

Gehlen-Baum, V., Pohl, A., Weinberger, A., and Bry, F. (2012). Backstage – designing a backchannel for large lectures. In Proceedings of the European Conference on Technology Enhanced Learning, Saarbrücken, Germany (18-21 September 2012).

Goldie, J. G. S. (2016). Connectivism: A knowledge learning theory for the digital age? Medical Teacher, 38(10):1064–1069.

Hattie, J. and Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1):81–112.

Heller, N. and Bry, F. (2019a). Nudging by predicting: A case study. In Proceedings of the 11th International Conference on Computer Supported Education - Volume 2: CSEDU, pages 236–243. SciTePress.

Heller, N. and Bry, F. (2019b). Organizing peer correction in tertiary STEM education: An approach and its evaluation. International Journal of Engineering Pedagogy (iJEP), 9(4):16–32.

Heller, N. and Bry, F. (2021). Chapter 14 - human computation for learning and teaching or collaborative tracking of learners’ misconceptions. In Caballé, S., Demetriadis, S. N., Gómez-Sánchez, E., Papadopoulos, P. M., and Weinberger, A., editors, Intelligent Systems and Learning Data Analytics in Online Education, Intelligent Data-Centric Systems, pages 323–343. Academic Press.


Henderson, M., Selwyn, N., and Aston, R. (2017). What works and why? Student perceptions of ‘useful’ digital technology in university teaching and learning. Studies in Higher Education, 42(8):1567–1579.

Hoyt, A., McNulty, J. A., Gruener, G., Chandrasekhar, A., Espiritu, B., Ensminger, D., Price Jr, R., and Naheedy, R. (2010). An audience response system may influence student performance on anatomy examination questions. Anatomical Sciences Education, 3(6):295–299.

Kay, R. H. and LeSage, A. (2009). Examining the benefits and challenges of using audience response systems: A review of the literature. Computers & Education, 53(3):819–827.

Keough, S. M. (2012). Clickers in the classroom: A review and a replication. Journal of Management Education, 36(6):822–847.

Kirkwood, A. and Price, L. (2008). Assessment and student learning: a fundamental relationship and the role of information and communication technologies. Open Learning: The Journal of Open, Distance and e-Learning, 23(1):5–16.

Kiy, A., List, C., and Lucke, U. (2017). A virtual environment and infrastructure to ensure future readiness of data centers. European Journal of Higher Education IT (EJHEIT), (2017-1).

Li, H., Xiong, Y., Hunter, C. V., Guo, X., and Tywoniw, R. (2019). Does peer assessment promote student learning? A meta-analysis. Assessment & Evaluation in Higher Education, 45(2):193–211.

Mader, S. and Bry, F. (2019a). Audience response systems reimagined. In Herzog, M. A., Kubincová, Z., Han, P., and Temperini, M., editors, Advances in Web-Based Learning – ICWL 2019, pages 203–216, Cham. Springer International Publishing.

Mader, S. and Bry, F. (2019b). Fun and engagement in lecture halls through social gamification. International Journal of Engineering Pedagogy, 9(2):117–136.

Mader, S. and Bry, F. (2019c). Phased classroom instruction: A case study on teaching programming languages. In Proceedings of the 11th International Conference on Computer Supported Education - Volume 1: CSEDU, pages 241–251. SciTePress.

Mader, S. and Bry, F. (2019d). Towards an annotation system for collaborative peer review. In International Conference in Methodologies and Intelligent Systems for Technology Enhanced Learning, pages 1–10. Springer.

Markova, T., Glazkova, I., and Zaborova, E. (2017). Quality issues of online distance learning. Procedia-Social and Behavioral Sciences, 237:685–691.

Mazur, E. and Somers, M. D. (1999). Peer instruction: A user’s manual. American Journal of Physics, 67(4):359–360.

Narciss, S. (2008). Feedback strategies for interactive learning tasks. Handbook of research on educational communications and technology, 3:125–144.

Nicol, D. (2010). From monologue to dialogue: improving written feedback processes in mass higher education. Assessment & Evaluation in Higher Education, 35(5):501–517.

Oigara, J. and Keengwe, J. (2013). Students’ perceptions of clickers as an instructional tool to promote active learning. Education and Information Technologies, 18(1):15–28.

Riazy, S. and Simbeck, K. (2019). Predictive algorithms in learning analytics and their fairness. In Pinkwart, N. and Konert, J., editors, DELFI 2019, pages 223–228, Bonn. Gesellschaft für Informatik e.V.

Slade, S. and Prinsloo, P. (2013). Learning analytics: Ethical issues and dilemmas. American Behavioral Scientist, 57(10):1510–1529.

Stab, C. and Gurevych, I. (2017). Recognizing insufficiently supported arguments in argumentative essays. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 1, Long Papers, pages 980–990.

Strickroth, S., Bußler, D., and Lucke, U. (2021). Container-based dynamic infrastructure for education on-demand. In Kienle, A., Harrer, A., Haake, J. M., and Lingnau, A., editors, DELFI 2021 – Die 19. Fachtagung Bildungstechnologien, Lecture Notes in Informatics (LNI), pages 205–216, Bonn. Gesellschaft für Informatik e.V.

Strickroth, S., Olivier, H., and Pinkwart, N. (2011). Das GATE-System: Qualitätssteigerung durch Selbsttests für Studenten bei der Onlineabgabe von Übungsaufgaben? In Rohland, H., Kienle, A., and Friedrich, S., editors, Tagungsband der 9. e-Learning Fachtagung Informatik (DeLFI), GI Lecture Notes in Informatics, pages 115–126, Bonn, Germany. Gesellschaft für Informatik e.V.

Strickroth, S., Striewe, M., Müller, O., Priss, U., Becker, S., Rod, O., Garmann, R., Bott, O. J., and Pinkwart, N. (2015). ProFormA: An XML-based exchange format for programming tasks. eleed, 11(1).

van Popta, E., Kral, M., Camp, G., Martens, R. L., and Simons, P. R.-J. (2017). Exploring the value of peer feedback in online learning for the provider. Educational Research Review, 20:24–34.

Wenger, E., McDermott, R., and Snyder, W. M. (2002). Cultivating Communities of Practice. Harvard Business School Press, Boston, USA.

Zheng, L., Zhang, X., and Cui, P. (2019). The role of technology-facilitated peer assessment and supporting strategies: a meta-analysis. Assessment & Evaluation in Higher Education, 45(3):372–386.

CSEDU 2022 - 14th International Conference on Computer Supported Education
