Latency-aware Privacy-preserving Service Migration in Federated Edges

Paulo Souza¹ᵃ, Ângelo Crestani¹ᵇ, Felipe Rubin¹ᶜ, Tiago Ferreto¹ᵈ and Fábio Rossi²ᵉ

¹ Pontifical Catholic University of Rio Grande do Sul, Porto Alegre, Brazil

² Federal Institute Farroupilha, Alegrete, Brazil

Keywords: Edge Computing, Microservices, Edge Federation, Infrastructure Providers, Service Migration.

Abstract:

Edge computing has been in the spotlight for bringing data processing close to data sources to support the requirements of latency-sensitive and bandwidth-hungry applications. Previous investigations have explored the coordination between multiple edge infrastructure providers to maximize profit while delivering enhanced application performance and availability. However, existing solutions overlook potential conflicts between the data protection policies implemented by infrastructure providers and the security requirements of privacy-sensitive applications (e.g., databases and payment gateways). Therefore, this paper presents Argos, a heuristic algorithm that migrates applications according to their latency and privacy requirements in federated edges. Experimental results demonstrate that Argos can reduce latency and privacy violations by 16.98% and 7.95%, respectively, compared to a state-of-the-art approach.

1 INTRODUCTION

Edge computing extends the cloud by decentralizing computing resources from large data centers to the network’s edge (Satyanarayanan et al., 2009). In practice, servers and other devices with processing capabilities are placed near data sources, reducing the communication delay imposed by the physical distance between end devices and the computing resources that process their data (Satyanarayanan, 2017).

Unlike traditional cloud deployments, edge computing sites comprise computing resources geographically distributed across the environment, possibly managed by different providers in a federated manner (Mor et al., 2019). This heterogeneous nature favors distributed software architectures such as microservices over traditional monoliths, as microservices enable multiple edge servers to jointly handle the demand of resource-intensive applications (Mahmud et al., 2020a). At the same time, resource wastage can be avoided by scaling microservices up and down individually based on their demand, which is desirable given the resource scarcity of the edge infrastructure.

ᵃ https://orcid.org/0000-0003-4945-3329

ᵇ https://orcid.org/0000-0002-1806-7241

ᶜ https://orcid.org/0000-0003-1612-078X

ᵈ https://orcid.org/0000-0001-8485-529X

ᵉ https://orcid.org/0000-0002-2450-1024

The heterogeneous nature of edge computing environments and the dynamics of moving users increase the complexity of deciding where to allocate microservices. From a performance perspective, microservices should remain close enough to their users to ensure low latency while avoiding network saturation, which implies that migrations should take place according to users’ mobility (Ahmed et al., 2015). While deciding when and where to migrate microservices based on users’ mobility is already challenging, these allocation decisions become even harder in infrastructures with multiple providers, which raise concerns about the trustworthiness of edge servers.

There has been considerable prior work on resource allocation in federated edge computing scenarios considering applications’ privacy (Wang et al., 2020; He et al., 2019; Xu et al., 2019). Despite their contributions, migrating microservices according to both performance and privacy goals remains an open research topic. By studying the state of the art, we identified two significant shortcomings in existing migration strategies focused on enforcing the privacy of applications running on edge computing infrastructures:

• Most strategies equalize the level of trust of entire regions while making allocation decisions, overlooking the existence of servers managed by different providers in the same region with possibly different levels of trust.

• Existing solutions assume the same privacy requirements for all microservices of an application, neglecting that microservices with different responsibilities may have distinct privacy requirements (e.g., database microservices may demand more privacy than front-end microservices).

Souza, P., Crestani, Â., Rubin, F., Ferreto, T. and Rossi, F. Latency-aware Privacy-preserving Service Migration in Federated Edges. DOI: 10.5220/0011084500003200. In Proceedings of the 12th International Conference on Cloud Computing and Services Science (CLOSER 2022), pages 288-295. ISBN: 978-989-758-570-8; ISSN: 2184-5042. Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved.

This work presents Argos, a heuristic algorithm that coordinates the privacy requirements of application microservices and the levels of trustworthiness of edge servers while performing migrations based on users’ mobility. In summary, we make the following contributions:

• We propose a novel heuristic, called Argos, for migrating services in edge computing that strengthens the privacy of applications by considering the privacy requirements of each application microservice and the level of trust of the user accessing the application in the providers that manage edge servers.

• We quantitatively demonstrate the effectiveness of Argos through a set of experiments showing that our solution can reduce violations of maximum latency and privacy requirements by 16.98% and 7.95%, respectively, compared to a state-of-the-art approach.

The remainder of this paper is organized as follows. In Section 2, we present a theoretical background on resource allocation in edge computing environments. In Section 3, we review the related work. Section 4 details the system model. Section 5 presents Argos, our proposal for enhancing the privacy of applications in federated edges. Section 6 describes a set of experiments used to validate Argos. Finally, Section 7 presents our final remarks.

2 BACKGROUND

The emergence of mobile and IoT applications (e.g., augmented and virtual reality, online gaming, and media streaming) has increased the demand for low-latency resources and locality-aware content distribution in recent years (Satyanarayanan, 2017). Since the limited computational power and battery capacity of clients’ devices (mobile and IoT) cannot meet these applications’ requirements, offloading computation-intensive tasks to remote processing units such as cloud data centers becomes necessary. However, despite the resiliency and seemingly unlimited resources of cloud computing, the inherent latency of long-distance transmissions cannot meet the ever-increasing performance requirements of applications (Satyanarayanan, 2017).

Edge computing tackles the latency issues of mobile and IoT applications by bringing data processing to the network’s edge, in proximity to end-user devices (Satyanarayanan et al., 2009). In contrast to centralized cloud data centers, edge servers are dispersed across the environment, possibly connected to the available power grid, allowing nearby devices to offload resource-intensive tasks with reduced latency. This improves performance while reducing the battery consumption of end-user devices.

To deliver services and process users’ requests, edge infrastructure providers rely on virtualization techniques such as virtual machines (VMs) and containers (He et al., 2018). The virtualization of physical resources enables infrastructure providers to design and implement resource allocation strategies tailored to energy efficiency, network performance, monetary costs, and performance contracts such as Service Level Agreements (SLAs). However, the resources available from a single provider are limited, especially in edge scenarios, which have fewer resources than their cloud counterparts (Li et al., 2021). A viable approach to mitigating capacity limitations is to allocate resources from multiple providers. In this context, edge federations employ strategies that incorporate resources from many providers, benefiting both providers and their clients (Anglano et al., 2020).

Whereas clients benefit from increased availability and scalability, infrastructure providers can reduce operational costs by combining owned and leased resources to meet their clients’ demands. The advantages of a federation of edge providers are even more evident considering industry trends such as microservice-based applications, which comprise several loosely-coupled modules (also known as microservices), each with different performance, security, and privacy requirements. As microservices work independently, they can be distributed across resources from different providers to enhance the use of the infrastructure (Faticanti et al., 2020).

While the emergence of decoupled software architectures brings several benefits, it also yields resource allocation challenges for infrastructure providers. In addition to allocation problems inherited from the cloud, edge computing has unique privacy and security issues. Organizations in the same federation have different policies that affect allocation decisions. For instance, proactive content caching based on users’ locations and usage patterns can improve performance but might not be acceptable to privacy-sensitive users (Zhu et al., 2021). Accordingly, there is a need for resource management strategies that drive allocation decisions not only by performance goals but also by providers’ data protection policies and microservices’ privacy requirements, avoiding potential security flaws in federated edge computing infrastructures.

3 RELATED WORK

This section discusses existing work in two areas that intersect the scope of our study. Section 3.1 reviews studies that consider the privacy requirements of edge applications. Section 3.2 presents studies focused on provisioning resources in federated edges. Finally, Section 3.3 compares our study to the related work.

3.1 Privacy-aware Service Allocation

As edge environments are typically regarded as groups of highly distributed nodes interconnected by network infrastructures less robust than their cloud counterparts (Aral and Brandić, 2020), proper security mechanisms are needed to ensure that network transmissions remain reliable. In this context, Xu et al. (Xu et al., 2019) present allocation strategies to ensure the privacy of applications’ data transmitted over the edge network infrastructure. The proposed method splits and shuffles the application’s data before transferring it through the network. Consequently, data leakage is less likely if some data chunks are compromised.

In typical edge computing scenarios with mobile users, latency-sensitive applications are migrated as users move across the map to keep latency as low as possible. In this case, if attackers manage to identify the target host of applications being migrated, they can infer the location of nearby users on the map. To tackle this problem, Wang et al. (Wang et al., 2020) present a migration strategy that avoids migrating applications to hosts either too far from or too close to their users. Thus, applications’ latency remains low while users’ locations remain protected.

As edge servers usually present resource constraints, applications can be moved to cloud servers when users do not request them for a while. In such a scenario, Qian et al. (Qian et al., 2019) argue that the decision to migrate services from the cloud to the edge and vice versa depends on users’ preferences for the services, which can be learned by analyzing their historical usage data. However, the authors point out the risk of data leakage from transferring historical usage data across the network for external processing. Therefore, they propose a federated learning scheme that enables users to collect and analyze their own usage data and send updated offloading parameters to edge servers, which then determine the services’ locations accordingly.

Li et al. (Li et al., 2020) demonstrate the security challenges of offloading and transmitting data across edge network infrastructures, where attackers can estimate users’ locations based on their communication patterns with a given server. Consequently, the authors argue that always offloading applications to the closest server may not be the optimal privacy-preserving decision. Accordingly, they present an offloading strategy that finds the best trade-off between users’ privacy, the communication delay between users and their applications, and the infrastructure’s energy consumption cost.

3.2 Resource Allocation in Federated Edges

In federated edges, an infrastructure provider can lease resources from other providers if it lacks the resources to host all applications. In such situations, one of the main goals is finding the provisioning scheme that uses the least amount of external resources so that the allocation cost is as low as possible. To address this scenario, Faticanti et al. (Faticanti et al., 2020) present a placement strategy that allocates microservice-based applications on federated edges using topological sorting schemes, which define the order in which microservices are allocated by their position in their application’s workflow.

For infrastructure providers that own cloud and edge resources, choosing the right place to host applications is key to balancing allocation cost and application performance. Whereas allocating applications at the edge guarantees low latency at high cost, as edge resources are limited, cloud resources are abundant but incur higher latencies. In such a scenario, Mahmud et al. (Mahmud et al., 2020b) present an allocation strategy that defines whether applications should be hosted on edge or cloud resources to increase the profit of infrastructure providers while delivering satisfactory performance levels to end users.

Whereas low-occupation periods are concerning for infrastructure providers, as the allocation cost can exceed the price paid by users, demand peaks may overload the infrastructure, leading to revenue losses. In such a scenario, Anglano et al. (Anglano et al., 2020) present an allocation strategy in which infrastructure providers cooperate to maximize their profit by sharing resources to process time-varying workloads.

As the popularity of mobile and IoT applications grows significantly, resource-constrained edge infrastructures may lack the resources to accommodate all applications simultaneously. In such scenarios, determining proper placement and scheduling for applications is critical to ensure user satisfaction and minimize allocation costs. With that goal, Li et al. (Li et al., 2021) present a placement and scheduling framework called JCPS, which leverages the cooperation between multiple edge clouds to host applications based on their deadlines and allocation costs.

3.3 Our Contributions

As discussed in previous sections, considerable prior work has targeted the privacy concerns of edge applications and the heterogeneity of federated edge infrastructures. However, existing solutions present some significant shortcomings, discussed as follows.

First, most strategies assume the same privacy requirement for entire applications, overlooking that applications can be composed of multiple independent components with distinct privacy requirements. Second, existing solutions equalize the level of trust of entire regions, overlooking that each region can have servers managed by different providers with possibly distinct levels of trust.

Our solution, called Argos, represents a first step toward performing service migrations according to users’ mobility while considering service privacy requirements and users’ level of trust in infrastructure providers. Table 1 summarizes the main differences between Argos and the related work.

Table 1: Comparison between Argos and related studies.

| Work | Edge Type | Privacy Awareness | Allocation Decision |
|---|---|---|---|
| (Xu et al., 2019) | Private | ✓ | Migration |
| (Wang et al., 2020) | Private | ✓ | Migration |
| (Qian et al., 2019) | Private | ✓ | Placement |
| (Li et al., 2020) | Private | ✓ | Migration |
| (Faticanti et al., 2020) | Federated | ✗ | Placement |
| (Mahmud et al., 2020b) | Federated | ✗ | Placement |
| (Anglano et al., 2020) | Federated | ✗ | Migration |
| (Li et al., 2021) | Federated | ✗ | Migration |
| Argos (This Work) | Federated | ✓ | Migration |

4 SYSTEM MODEL

This section presents our system model. First, we describe the edge scenario considered in our modeling. Then, we formulate the application provisioning process. Table 2 summarizes the notations.

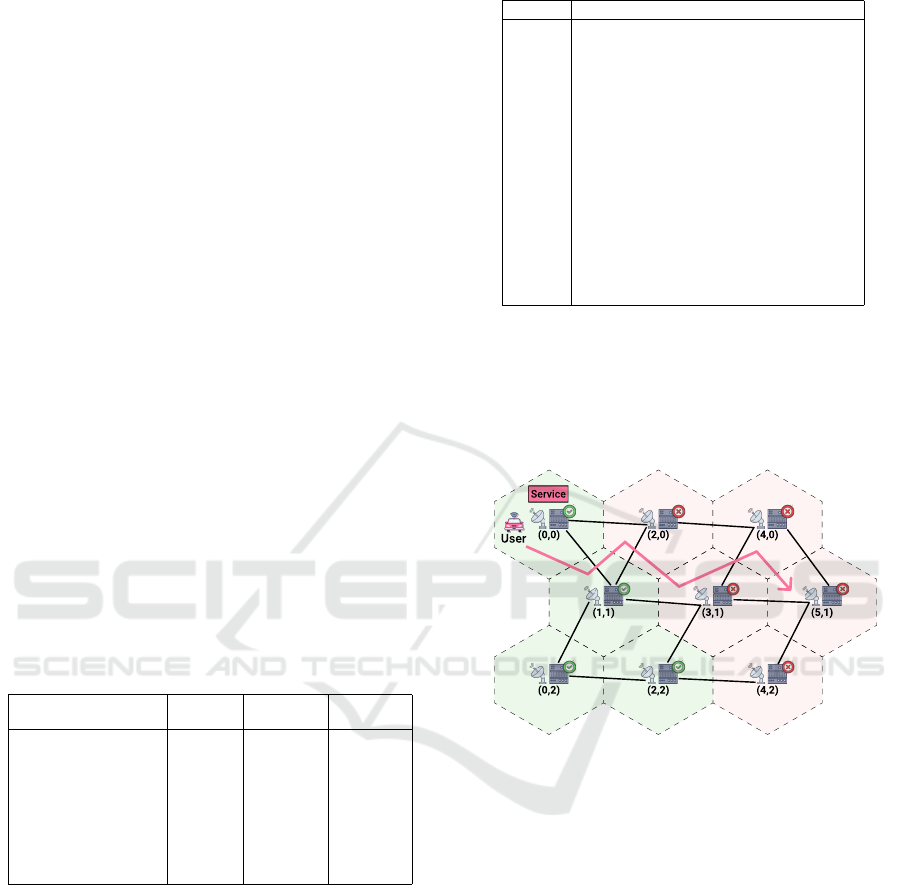

We represent the environment as in Figure 1, adopting the model by Aral et al. (Aral et al., 2021), which divides the map into hexagonal cells. In such a scenario, the edge infrastructure comprises a set of links $L$ interconnecting base stations $B$ equipped with edge servers $E$, positioned at each map cell. Whereas base stations provide wireless connectivity to a set of users $U$, edge servers accommodate the set of user applications $A$. We model a base station as $B_f = (w_f)$, where $w_f$ denotes $B_f$’s wireless latency, and a network link as $L_u = (d_u, b_u)$, where attributes $d_u$ and $b_u$ represent $L_u$’s latency and bandwidth, respectively.

Table 2: List of notations used in the paper.

| Symbol | Description |
|---|---|
| $w_f$ | Wireless delay of base station $B_f$ |
| $d_u$ | Delay of link $L_u$ |
| $b_u$ | Bandwidth capacity of link $L_u$ |
| $c_i$ | Capacity of edge server $E_i$ |
| $o_i$ | Demand of edge server $E_i$ |
| $p_i$ | Provider that manages edge server $E_i$ |
| $r_i$ | Base station equipped with edge server $E_i$ |
| $\wp_e$ | Infrastructure providers trusted by user $U_e$ |
| $\alpha_e$ | Application accessed by user $U_e$ |
| $\beta_e$ | Base station to which user $U_e$ is connected |
| $l_j$ | Latency of application $A_j$ |
| $t_j$ | Latency threshold of application $A_j$ |
| $s_j$ | List of services that compose application $A_j$ |
| $\rho_k$ | Capacity demand of service $S_k$ |
| $\mu_k$ | Privacy requirement of service $S_k$ |
| $x_{o,i,k}$ | Matrix that represents the service placement |

Figure 1: Sample scenario with a mobile user where migrations need to be performed based on the service latency and the user’s level of trust in infrastructure providers.

We assume that the edge infrastructure is federated. Accordingly, the set of edge servers $E$ is managed by a group of infrastructure providers $P$. We model an edge server as $E_i = (c_i, o_i, p_i, r_i)$. While attributes $c_i$ and $o_i$ represent $E_i$’s capacity and occupation, respectively, $p_i$ references the infrastructure provider that manages $E_i$, and $r_i$ references the base station to which $E_i$ is connected.

As infrastructure providers may adopt different data protection policies, we assume users trust a specific group of providers to host their services. Although not mandatory, it is desirable to host the services accessed by a user $U_e$ on edge servers managed by infrastructure providers that $U_e$ trusts. We model a user as $U_e = (\wp_e, \alpha_e, \beta_e)$, where $\wp_e$ denotes the list of infrastructure providers $U_e$ trusts, $\alpha_e$ references the application accessed by $U_e$, and $\beta_e$ references the base station to which $U_e$ is connected. In our scenario, we consider a one-to-one relationship between users and applications in the edge infrastructure.

We model applications as directed acyclic graphs composed of a set of services. An application is denoted as $A_j = (l_j, t_j, s_j)$. While attributes $l_j$ and $t_j$ represent $A_j$’s latency at a time step $T_o \in T$ and $A_j$’s maximum latency threshold, respectively, $s_j$ references the list of services that compose $A_j$. We assume that application services have specific responsibilities (e.g., one microservice might be responsible for the database, another for the front end, and so on). Thus, different services within an application may have different capacity and privacy requirements depending on the tasks they perform.

A service is modeled as $S_k = (\rho_k, \mu_k)$, where attributes $\rho_k$ and $\mu_k$ denote the amount of resources and the degree of privacy required by $S_k$, respectively. More specifically, $\mu_k = 1$ if $S_k$ has a special privacy requirement and $\mu_k = 0$ otherwise. In such a scenario, privacy violations occur when services with special privacy requirements (i.e., services with $\mu_k = 1$) are hosted by edge servers managed by infrastructure providers not trusted by the services’ users. The placement of services on edge servers at a given time step $T_o \in T$ is given by $x_{o,i,k}$, where:

$$
x_{o,i,k} =
\begin{cases}
1 & \text{if } E_i \text{ hosts } S_k \text{ at time step } T_o,\\
0 & \text{otherwise.}
\end{cases}
$$
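The following Python sketch makes the placement variable and the privacy-violation condition concrete for a single time step $T_o$. It is a minimal illustration, not code from Argos: the classes `EdgeServer` and `Service` and the function `count_privacy_violations` are hypothetical names, and $x_{o,i,k}$ is assumed to be stored sparsely as a mapping from each service to its current host (only the entries equal to 1).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EdgeServer:
    server_id: int
    provider: str  # p_i: infrastructure provider that manages the server


@dataclass(frozen=True)
class Service:
    service_id: int
    demand: int  # rho_k: capacity demand
    privacy: int  # mu_k: 1 = special privacy requirement, 0 otherwise
    trusted_providers: frozenset  # providers trusted by the service's user


def count_privacy_violations(placement: dict, services: list) -> int:
    """Count services with mu_k = 1 hosted by a provider their user does not trust.

    `placement` maps a service id to its current host, i.e., it stores only the
    (i, k) pairs with x_{o,i,k} = 1 at the current time step (hypothetical layout).
    """
    return sum(
        1
        for s in services
        if s.privacy == 1 and placement[s.service_id].provider not in s.trusted_providers
    )


e1, e2 = EdgeServer(1, "P1"), EdgeServer(2, "P2")
trusted = frozenset({"P1"})
services = [
    Service(1, demand=20, privacy=1, trusted_providers=trusted),  # hosted on P2: violation
    Service(2, demand=10, privacy=0, trusted_providers=trusted),  # hosted on P2: no special requirement
    Service(3, demand=30, privacy=1, trusted_providers=trusted),  # hosted on P1: trusted
]
placement = {1: e2, 2: e2, 3: e1}
print(count_privacy_violations(placement, services))  # 1
```

Only service 1 counts: service 2 is on an untrusted provider but has $\mu_k = 0$, and service 3 sits on a trusted provider.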

We assume the absence of shared storage in the edge infrastructure. Therefore, migrating a service $S_k$ to an edge server $E_i$ implies transferring $S_k$’s capacity demand from $S_k$’s current host to $E_i$ through a set of links $\Omega(S_k, E_i)$ that interconnect the edge servers’ base stations. We define $\Omega(S_k, E_i)$ with Dijkstra’s shortest path algorithm (Dijkstra et al., 1959), using link bandwidths as path weights. The migration time of $S_k$ to $E_i$ is given by $\phi(S_k, E_i)$, as denoted in Equation 1. Since links in the network infrastructure may have heterogeneous configurations, the lowest bandwidth among the links in $\Omega(S_k, E_i)$ is considered the available bandwidth for performing the migration.

$$\phi(S_k, E_i) = \frac{\rho_k}{\min\{\, b_u \mid u \in \Omega(S_k, E_i) \,\}} \tag{1}$$
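A minimal sketch of Equation 1, assuming an undirected topology stored as nested dictionaries (a hypothetical representation). Following the text, the path $\Omega(S_k, E_i)$ is found with Dijkstra’s algorithm using link bandwidth as the edge weight, and the bottleneck (minimum) bandwidth along that path determines the migration time. Helper names such as `add_link`, `dijkstra_path`, and `migration_time` are illustrative only, and units are those of the dataset.

```python
import heapq


def add_link(graph, a, b, delay, bandwidth):
    """Add an undirected link L_u = (d_u, b_u) between base stations a and b."""
    graph.setdefault(a, {})[b] = {"delay": delay, "bandwidth": bandwidth}
    graph.setdefault(b, {})[a] = {"delay": delay, "bandwidth": bandwidth}


def dijkstra_path(graph, source, target, weight):
    """Return the nodes of the minimum-weight path, using link attribute `weight`."""
    dist = {source: 0}
    prev = {}
    heap = [(0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == target:
            break
        if d > dist[node]:
            continue  # stale heap entry
        for neighbor, attrs in graph[node].items():
            candidate = d + attrs[weight]
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                prev[neighbor] = node
                heapq.heappush(heap, (candidate, neighbor))
    if target not in dist:
        raise ValueError(f"{target} is unreachable from {source}")
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1]


def migration_time(graph, demand, current_bs, target_bs):
    """phi(S_k, E_i) = rho_k / min{b_u | u in Omega(S_k, E_i)} (Equation 1)."""
    if current_bs == target_bs:
        return 0.0  # assumption: no transfer is needed within the same base station
    path = dijkstra_path(graph, current_bs, target_bs, weight="bandwidth")
    bottleneck = min(graph[a][b]["bandwidth"] for a, b in zip(path, path[1:]))
    return demand / bottleneck


topology = {}
add_link(topology, "B1", "B2", delay=4, bandwidth=4)
add_link(topology, "B2", "B3", delay=8, bandwidth=2)
print(migration_time(topology, demand=20, current_bs="B1", target_bs="B3"))  # 10.0
```

With the two links above, migrating a service with $\rho_k = 20$ from B1 to B3 is limited by the link of bandwidth 2, giving $20 / 2 = 10$.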

We model the latency of an application $A_j$ in Equation 2, considering the wireless latency of the base station of $A_j$’s user (denoted as $B_f$) and the aggregated latency of a set of network links $\kappa$ used to route data from $B_f$ across each of $A_j$’s services in the infrastructure. If all of $A_j$’s services are hosted by $B_f$’s edge server, $|\kappa| = 0$, meaning that no links are needed to route $A_j$’s data through the network. We define the set of links $\kappa$ through Dijkstra’s shortest path algorithm, using link latencies as path weights (Dijkstra et al., 1959). We assume that SLA violations occur whenever $A_j$’s latency $l_j$ surpasses $A_j$’s latency threshold $t_j$.

$$l_j = w_f + \sum_{u=1}^{|\kappa|} d_u \tag{2}$$
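The sketch below evaluates Equation 2 for one application. The text does not specify the order in which data visits the services, so it assumes a chain: from the user’s base station $B_f$ to the host of the first service, then on to each subsequent service’s host, with every hop routed by Dijkstra over link delays $d_u$. The dictionary-based topology and all function names are hypothetical.

```python
import heapq


def add_link(graph, a, b, delay):
    """Add an undirected link with delay d_u between base stations a and b."""
    graph.setdefault(a, {})[b] = delay
    graph.setdefault(b, {})[a] = delay


def shortest_delay(graph, source, target):
    """Dijkstra with link delays as weights; returns the summed delay of the path."""
    dist = {source: 0}
    heap = [(0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == target:
            return d  # 0 when source == target (no links used)
        if d > dist[node]:
            continue  # stale heap entry
        for neighbor, delay in graph.get(node, {}).items():
            if d + delay < dist.get(neighbor, float("inf")):
                dist[neighbor] = d + delay
                heapq.heappush(heap, (d + delay, neighbor))
    raise ValueError(f"{target} is unreachable from {source}")


def application_latency(graph, wireless_delay, user_bs, service_hosts):
    """l_j = w_f + sum of d_u over the links in kappa (Equation 2).

    Assumption: data goes from the user's base station to the first service's
    host, then from each service's host to the next service's host.
    """
    latency = wireless_delay[user_bs]
    current = user_bs
    for host_bs in service_hosts:
        latency += shortest_delay(graph, current, host_bs)
        current = host_bs
    return latency


def sla_violated(latency, threshold):
    """An SLA violation occurs when l_j exceeds t_j."""
    return latency > threshold


topology = {}
add_link(topology, "B1", "B2", delay=4)
add_link(topology, "B2", "B3", delay=8)
wireless = {"B1": 10, "B2": 10, "B3": 10}
print(application_latency(topology, wireless, "B1", ["B1", "B1"]))  # 10
print(application_latency(topology, wireless, "B1", ["B1", "B3"]))  # 22
```

When all services sit on $B_f$’s server, every hop contributes zero delay and $l_j = w_f$, matching the $|\kappa| = 0$ case described above.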

In such a scenario, our goal is to migrate services in the edge infrastructure as users move across the map to minimize the number of latency and privacy violations. Latency violations are minimized by hosting application services on edge servers close enough to the application users. Privacy violations are minimized by placing as many application services with special privacy requirements as possible on edge servers managed by infrastructure providers trusted by the applications’ users.

5 ARGOS DESIGN

This section presents Argos, our migration strategy that considers user mobility and service privacy requirements on federated edges. Algorithm 1 describes Argos, which is executed at each time step $T_o \in T$.

Algorithm 1: Argos migration algorithm.

1  $\eta = (A_j \in A \mid l_j > t_j)$ sorted by Eq. 3 (asc.)
2  foreach application $\eta_j \in \eta$ do
3      $\lambda$ = services that compose $\eta_j$ sorted by privacy requirement (desc.) and demand (desc.)
4      $\xi$ = list of servers trusted by $\eta_j$’s user sorted by latency
5      $\chi$ = list of servers not trusted by $\eta_j$’s user sorted by latency
6      $\psi = \xi \cup \chi$ (servers in $\xi$ first, followed by those in $\chi$)
7      foreach service $\lambda_k \in \lambda$ do
8          foreach edge server $\psi_i \in \psi$ do
9              if $x_{o,i,k} = 1$ then
10                 break
11             else
12                 if $c_i - o_i \geq \rho_k$ then
13                     Migrate service $\lambda_k$ to edge server $\psi_i$
14                     break
15                 end
16             end
17         end
18     end
19 end

Argos initially gets the list of applications whose latency exceeds their latency thresholds (Algorithm 1, line 1) and sorts these applications based on their latency and latency thresholds. More specifically, each application $A_j$ receives a weight $\partial_j$ (Equation 3) that reflects how much its actual latency exceeds its latency threshold. Since $l_j > t_j$ for every selected application, $\partial_j$ is negative, so sorting in ascending order places the applications with the largest excess first. Accordingly, Argos avoids unnecessary migrations by migrating only the applications presenting latency bottlenecks while prioritizing applications with the most critical latency issues (i.e., those whose latency most exceeds their latency threshold).

$$\partial_j = t_j - l_j \tag{3}$$
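The selection and ordering in Algorithm 1, line 1, can be sketched as follows. The `Application` class and the sample values are hypothetical; the sort key is $\partial_j$ from Equation 3, applied only to applications with $l_j > t_j$.

```python
from dataclasses import dataclass


@dataclass
class Application:
    name: str
    latency: float  # l_j at the current time step
    threshold: float  # t_j


def weight(app):
    """Equation 3: negative whenever the application violates its threshold."""
    return app.threshold - app.latency


def applications_to_migrate(apps):
    """Keep only violating applications and sort them by weight (ascending)."""
    violating = [a for a in apps if a.latency > a.threshold]
    return sorted(violating, key=weight)


apps = [
    Application("A1", latency=70, threshold=60),  # exceeds by 10
    Application("A2", latency=150, threshold=120),  # exceeds by 30
    Application("A3", latency=50, threshold=60),  # within its threshold
    Application("A4", latency=65, threshold=60),  # exceeds by 5
]
print([a.name for a in applications_to_migrate(apps)])  # ['A2', 'A1', 'A4']
```

A3 is never considered, which is how Argos avoids migrating applications without latency bottlenecks.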

After selecting and sorting applications with latency issues, Argos sorts the services of each of these applications based on their privacy requirement and demand (Algorithm 1, line 3). The first sorting parameter (services’ privacy requirement) prevents services without special requirements from saturating all edge servers managed by trusted infrastructure providers while services with special privacy requirements end up hosted on edge servers from untrusted infrastructure providers. The second sorting parameter (demand) prioritizes services with higher demand to ensure better use of edge server resources.
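To illustrate why the privacy requirement is the first sort key, the following sketch compares ordering by demand alone with the privacy-then-demand ordering described above. Server and service names are hypothetical, and placement is a simplified first-fit over a trusted-first server list. With a single trusted server that can fit only one of the two services, the demand-only order lets the front end take the trusted slot and pushes the privacy-sensitive database onto an untrusted server.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    demand: int  # rho_k
    privacy: int  # mu_k


def first_fit(services, servers):
    """Place each service on the first server (trusted servers listed first) with room."""
    free = {name: capacity for name, capacity, _ in servers}
    placement = {}
    for service in services:
        for name, _, _ in servers:
            if free[name] >= service.demand:
                free[name] -= service.demand
                placement[service.name] = name
                break
    return placement


def privacy_violations(services, placement, servers):
    """Count placed services with mu_k = 1 that ended up on an untrusted server."""
    trusted = {name for name, _, is_trusted in servers if is_trusted}
    return sum(
        1
        for s in services
        if s.privacy == 1 and s.name in placement and placement[s.name] not in trusted
    )


# (name, free capacity, trusted by the user?), trusted servers first.
servers = [("trusted-1", 50, True), ("untrusted-1", 200, False)]
services = [Service("front-end", 50, 0), Service("database", 40, 1)]

by_demand = sorted(services, key=lambda s: -s.demand)
argos_order = sorted(services, key=lambda s: (-s.privacy, -s.demand))

print(privacy_violations(services, first_fit(by_demand, servers), servers))  # 1
print(privacy_violations(services, first_fit(argos_order, servers), servers))  # 0
```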

Once Argos arranges the services of an application, it sorts edge servers based on the trust of the application’s user in their infrastructure providers and on their delay (Algorithm 1, lines 4–6). Regarding the edge servers’ delay, we estimate what the application’s delay would be if each edge server were chosen to host one or more of the application’s services (according to Equation 2). Then, Argos iterates over the sorted list of edge servers, choosing the first edge server with enough capacity as the new host of each application service; if the service’s current host is reached first, the service stays where it is (Algorithm 1, lines 7–18).
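Putting the pieces together, the following Python sketch mirrors one execution of Algorithm 1 under simplifying assumptions: application latencies are given as inputs rather than recomputed after each migration, and the per-server delay estimate from Equation 2 is supplied by the caller through a hypothetical `delay_of(app, server)` function. Class and function names are illustrative and do not come from the Argos repository.

```python
from dataclasses import dataclass


@dataclass(eq=False)
class EdgeServer:
    name: str
    capacity: int  # c_i
    occupation: int  # o_i
    provider: str  # p_i


@dataclass(eq=False)
class Service:
    name: str
    demand: int  # rho_k
    privacy: int  # mu_k
    host: EdgeServer  # the server E_i with x_{o,i,k} = 1


@dataclass(eq=False)
class Application:
    name: str
    latency: float  # l_j
    threshold: float  # t_j
    services: list  # s_j
    trusted_providers: set  # providers trusted by the application's user


def migrate(service, target):
    service.host.occupation -= service.demand
    target.occupation += service.demand
    service.host = target


def argos_step(applications, servers, delay_of):
    """One time step of the migration loop; returns the number of migrations."""
    migrations = 0
    # Line 1: violating applications, most critical first (Eq. 3, ascending).
    violating = sorted((a for a in applications if a.latency > a.threshold),
                       key=lambda a: a.threshold - a.latency)
    for app in violating:
        # Line 3: special privacy requirement first, then larger demand first.
        services = sorted(app.services, key=lambda s: (-s.privacy, -s.demand))
        # Lines 4-6: trusted servers by delay, followed by untrusted servers by delay.
        trusted = [e for e in servers if e.provider in app.trusted_providers]
        untrusted = [e for e in servers if e.provider not in app.trusted_providers]
        candidates = (sorted(trusted, key=lambda e: delay_of(app, e))
                      + sorted(untrusted, key=lambda e: delay_of(app, e)))
        # Lines 7-18: stop at the current host or at the first server with room.
        for service in services:
            for server in candidates:
                if service.host is server:
                    break
                if server.capacity - server.occupation >= service.demand:
                    migrate(service, server)
                    migrations += 1
                    break
    return migrations


e1 = EdgeServer("E1", 100, 90, "P1")  # trusted, nearby, almost full
e2 = EdgeServer("E2", 100, 50, "P2")  # untrusted, current host of both services
e3 = EdgeServer("E3", 100, 0, "P1")  # trusted, with free capacity
db = Service("db", demand=30, privacy=1, host=e2)
ui = Service("ui", demand=20, privacy=0, host=e2)
app = Application("A1", latency=80, threshold=60, services=[ui, db], trusted_providers={"P1"})
delays = {"E1": 10, "E2": 20, "E3": 15}  # hypothetical Eq. 2 estimates per server

moved = argos_step([app], [e1, e2, e3], lambda a, e: delays[e.name])
print(moved, db.host.name, ui.host.name)  # 2 E3 E3
```

In the example, the nearest trusted server E1 lacks room, so both services move to E3, the next trusted server. A second call performs no migrations because each service reaches its current host before any other server with enough free capacity.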

6 PERFORMANCE EVALUATION

6.1 Methodology

The dataset used in our experiments is described as follows. Unless stated otherwise, parameters in the dataset follow a uniform distribution. We consider a federated edge computing infrastructure comprising 100 base stations with wireless delay = 10, 60 of which are equipped with an edge server (we randomly distribute the 60 edge servers across 60 base stations). We assume that edge servers are managed by two infrastructure providers (each provider managing 30 servers). Edge servers have capacity $\in \{100, 200\}$. The network topology is defined according to the Barabási-Albert model (Barabási and Albert, 1999), where links have delays $\in \{4, 8\}$ and bandwidths $\in \{2, 4\}$. In our scenario, 120 users each access an application composed of two services. Users move across the map according to the Pathway mobility model (Bai and Helmy, 2004). Each user in the scenario trusts one of the infrastructure providers. Applications have latency thresholds $\in \{60, 120\}$. The 240 services have capacity demands $\in \{10, 20, 30, 40, 50\}$. We assume that 120 of the 240 services have special privacy requirements. The initial service placement is defined according to the First-Fit heuristic described in Algorithm 2.
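The entity counts and value ranges above can be sampled as in the sketch below, which uses uniform choices from Python’s standard `random` module. It only approximates the setup: the Barabási-Albert topology, the Pathway mobility model, and the link attributes are omitted, and both the selection of the 120 privacy-sensitive services (random shuffling) and each user’s trusted provider (uniform choice) are assumptions. Dictionary keys such as `trusted_provider` are hypothetical.

```python
import random


def build_scenario(seed=0):
    """Sample the entities described in Section 6.1 (topology and mobility omitted)."""
    rng = random.Random(seed)
    base_stations = [{"id": f, "wireless_delay": 10} for f in range(100)]
    # 60 distinct base stations receive one edge server each; 30 servers per provider.
    hosts = rng.sample(range(100), 60)
    servers = [
        {"id": i, "base_station": bs, "provider": "P1" if i < 30 else "P2",
         "capacity": rng.choice([100, 200])}
        for i, bs in enumerate(hosts)
    ]
    # Assumption: exactly half of the 240 services are privacy-sensitive, chosen at random.
    privacy_flags = [1] * 120 + [0] * 120
    rng.shuffle(privacy_flags)
    users, services = [], []
    for e in range(120):
        users.append({"id": e, "trusted_provider": rng.choice(["P1", "P2"]),
                      "latency_threshold": rng.choice([60, 120])})
        for _ in range(2):  # each application has two services
            services.append({"user": e, "demand": rng.choice([10, 20, 30, 40, 50]),
                             "privacy": privacy_flags[len(services)]})
    return base_stations, servers, users, services


stations, servers, users, services = build_scenario()
print(len(servers), len(users), len(services), sum(s["privacy"] for s in services))  # 60 120 240 120
```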

Algorithm 2: Initial service placement scheme.

$S' \leftarrow$ list of services in $S$ arranged randomly
$E' \leftarrow$ list of edge servers in $E$
foreach service $S'_k \in S'$ do
    foreach edge server $E'_i \in E'$ do
        if $c_i - o_i \geq \rho_k$ then
            Host service $S'_k$ on edge server $E'_i$
            break
        end
    end
end
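Algorithm 2 translates directly into the following sketch. The input format (tuples for services, dictionaries for servers) is an assumption, and services that fit on no server are simply left unplaced, a case Algorithm 2 does not address.

```python
import random


def initial_placement(services, servers, rng):
    """First-Fit: shuffle services, then put each on the first server with room.

    services: list of (name, demand); servers: list of dicts with "name",
    "capacity" and "occupation". Returns {service name: server name}.
    """
    shuffled = list(services)
    rng.shuffle(shuffled)  # S': services arranged randomly
    placement = {}
    for name, demand in shuffled:
        for server in servers:  # E': servers in list order
            if server["capacity"] - server["occupation"] >= demand:
                server["occupation"] += demand
                placement[name] = server["name"]
                break
    return placement


servers = [{"name": "E1", "capacity": 100, "occupation": 0},
           {"name": "E2", "capacity": 200, "occupation": 0}]
services = [(f"S{k}", 50) for k in range(6)]
placement = initial_placement(services, servers, random.Random(42))
print([s["occupation"] for s in servers])  # [100, 200]
```

Because all six services have the same demand here, the first two in the shuffled order fill E1 and the remaining four fill E2, regardless of the seed.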

We compare Argos against two naive strategies called Never Follow and Follow User, presented by Yao et al. (Yao et al., 2015), and against the strategy proposed by Faticanti et al. (Faticanti et al., 2020), described in Section 3. Whereas Never Follow performs no migrations, Follow User migrates services every time users move around the map, regardless of applications’ latency. We chose to compare Argos against Faticanti’s strategy as it allocates resources in federated edge computing scenarios while minimizing the number of services hosted on specific infrastructure providers. As Faticanti’s strategy was designed to decide the initial placement of services, we adapted it to migrate services whenever the latency of applications exceeds their latency threshold, as Argos does.

We evaluate the selected strategies regarding SLA violations, privacy violations, and number of migrations. Whereas SLA violations refer to the number of applications whose latency exceeds their latency thresholds, privacy violations refer to the number of services hosted by edge servers managed by infrastructure providers not trusted by the services’ users. The results presented next are the sum of latency and privacy violations over all simulation time steps. The source code of our simulator and the dataset are available at our GitHub repository¹.

6.2 Results and Discussion

6.2.1 SLA Violations

Figure 2(a) shows the number of SLA violations during the execution of the evaluated strategies. As expected, the two naive strategies presented the worst results. Never Follow had the worst result because it performs no migrations, and Follow User had the second-worst result because it performs migrations excessively, benefiting some applications but harming others.

¹ https://github.com/paulosevero/argos

[Bar charts, x-axis: Algorithm (Never Follow, Follow User, Faticanti et al., Argos). (a) SLA Violations: 166, 70, 53, 44. (b) Privacy Violations: 1457, 1564, 1320, 1215. (c) Number of Migrations: 0, 4535, 50, 79.]

Figure 2: Simulation results regarding the number of SLA violations, privacy violations, and migrations.

Argos and Faticanti’s strategy obtained the best and second-best results, respectively. The main difference between them lies in the order of migrations. While Faticanti’s strategy migrates services according to their position in their application’s topology (migrating the first service of each application, then the second service of each application, and so on), Argos migrates the services of one application at a time, prioritizing applications with more severe latency bottlenecks.

Accordingly, when users are close to each other, Faticanti’s topological sort fills the resources of nearby edge servers with the first services of each of these users’ applications, placing the last services on more distant edge servers once the closer ones are full. Consequently, it penalizes applications with tighter SLAs instead of prioritizing them when occupying nearby edge servers, as Argos does.
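The effect of migration order can be illustrated with two hypothetical applications whose services compete for a nearby server with room for only two services. For a like-for-like comparison, both orderings below visit applications by $\partial_j$ (Equation 3). The sketch models only the ordering described in this section, not the full strategy of Faticanti et al.

```python
from itertools import chain, zip_longest


def by_priority(apps):
    """apps: list of (name, weight, services); weight is t_j - l_j (Eq. 3)."""
    return sorted(apps, key=lambda app: app[1])


def position_by_position(apps):
    """First service of every application, then the second ones, and so on."""
    gap = object()  # filler for applications with fewer services
    columns = zip_longest(*(services for _, _, services in by_priority(apps)), fillvalue=gap)
    return [s for s in chain.from_iterable(columns) if s is not gap]


def application_at_a_time(apps):
    """All services of the most critical application, then the next application."""
    return [s for _, _, services in by_priority(apps) for s in services]


apps = [("B", -5, ["b1", "b2"]), ("A", -30, ["a1", "a2"])]
nearby_slots = 2  # hypothetical: the nearby server fits only two services
print(position_by_position(apps)[:nearby_slots])  # ['a1', 'b1']
print(application_at_a_time(apps)[:nearby_slots])  # ['a1', 'a2']
```

With position-by-position ordering, the most critical application A keeps one service on a distant server, whereas the application-at-a-time order places both of A’s services nearby.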

6.2.2 Privacy Violations

Figure 2(b) presents the number of privacy violations of the evaluated strategies. Follow User and Never Follow obtained the worst results because they overlook the existence of different infrastructure providers in the environment. Compared to them, Faticanti’s strategy reduced the number of privacy violations by 15.6% and 9.4%, respectively, by prioritizing the migration of services to edge servers managed by infrastructure providers that the services’ users trust.
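The reported reductions follow directly from the bar values in Figure 2(b). The short Python check below recomputes them, including the 7.95% reduction of Argos relative to Faticanti’s strategy discussed next; the dictionary values are read from the figure, and `reduction` is a helper introduced only for this check.

```python
# Privacy violations per strategy, as read from Figure 2(b)
violations = {
    "Never Follow": 1457,
    "Follow User": 1564,
    "Faticanti et al.": 1320,
    "Argos": 1215,
}


def reduction(baseline: int, value: int) -> float:
    """Relative reduction of `value` with respect to `baseline`, in percent."""
    return 100 * (baseline - value) / baseline


print(f"{reduction(violations['Follow User'], violations['Faticanti et al.']):.1f}")   # 15.6
print(f"{reduction(violations['Never Follow'], violations['Faticanti et al.']):.1f}")  # 9.4
print(f"{reduction(violations['Faticanti et al.'], violations['Argos']):.2f}")         # 7.95
```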

Argos obtained the best result among the evaluated strategies, reducing the number of privacy violations by 7.95% compared to Faticanti’s strategy, which presented the second-best result. Unlike Faticanti’s strategy, which migrates as many services as possible to edge servers trusted by their users, Argos reserves the available space on trusted edge servers for services with special privacy requirements. As a result, Argos makes better use of the resources managed by trusted infrastructure providers, which matters most when few trusted edge servers are located near users.
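The benefit of reserving trusted capacity can be illustrated with a small Python sketch. It assumes, hypothetically, that each trusted edge server slot hosts one service and that a privacy violation occurs when a privacy-sensitive service runs on an untrusted provider’s server; the `Service` class, `assign`, and `privacy_violations` are illustrative names, not part of Argos.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    privacy_sensitive: bool  # hypothetical flag, e.g. a database or payment gateway


def assign(services: list[Service], trusted_slots: int) -> dict[str, str]:
    """Hand out trusted-server slots in the given order; the rest go to untrusted servers."""
    placement = {}
    for service in services:
        if trusted_slots > 0:
            placement[service.name] = "trusted"
            trusted_slots -= 1
        else:
            placement[service.name] = "untrusted"
    return placement


def privacy_violations(services: list[Service], placement: dict[str, str]) -> int:
    """Assumed definition: a privacy-sensitive service hosted on an untrusted server."""
    return sum(
        1 for s in services if s.privacy_sensitive and placement[s.name] == "untrusted"
    )


services = [
    Service("frontend", False),
    Service("cache", False),
    Service("database", True),
    Service("payment-gateway", True),
]

# Fill trusted slots in arrival order, regardless of privacy requirements
as_many_as_possible = assign(services, trusted_slots=2)
# Privacy-sensitive services claim trusted slots first (sorted() is stable)
sensitive_first = assign(sorted(services, key=lambda s: not s.privacy_sensitive), trusted_slots=2)

print(privacy_violations(services, as_many_as_possible))  # 2
print(privacy_violations(services, sensitive_first))      # 0
```

With only two trusted slots, filling them in arrival order leaves both privacy-sensitive services on untrusted servers, whereas giving those services priority avoids both violations.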

6.2.3 Service Migrations

Figure 2(c) shows the number of migrations. While Never Follow performed no migrations, Follow User performed the most, relocating services 151 times per step on average. Compared to Follow User, Argos reduced the number of migrations by 98%, as it migrates only the services of applications suffering from latency issues. However, Faticanti’s strategy performed 36.7% fewer migrations than Argos.
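The migration percentages can be checked the same way against the bar values in Figure 2(c). Note that the 36.7% figure is relative to Argos’s count: with 79 migrations for Argos and 50 for Faticanti’s strategy, Faticanti’s strategy performs 36.7% fewer migrations, which is equivalent to Argos performing 58% more. `relative_change` is a helper introduced only for this check.

```python
# Total migrations per strategy, as read from Figure 2(c)
migrations = {"Never Follow": 0, "Follow User": 4535, "Faticanti et al.": 50, "Argos": 79}


def relative_change(reference: int, value: int) -> float:
    """Percentage change of `value` relative to `reference` (negative means fewer)."""
    return 100 * (value - reference) / reference


print(f"{relative_change(migrations['Follow User'], migrations['Argos']):.1f}")       # -98.3
print(f"{relative_change(migrations['Argos'], migrations['Faticanti et al.']):.1f}")  # -36.7
print(f"{relative_change(migrations['Faticanti et al.'], migrations['Argos']):.1f}")  # 58.0
```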

While Argos migrates all the services of an application once it identifies that the application’s latency is excessively high, Faticanti’s strategy migrates an application’s services one at a time according to their topological order. Thus, Faticanti’s strategy avoids unnecessary migrations when only some of an application’s services need to be migrated to mitigate latency issues.
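This trade-off can be sketched with a simplified, hypothetical latency model in which an application’s latency is the sum of per-service contributions and migrating a service replaces its contribution with a lower one. Under these assumptions, the per-service strategy stops as soon as the SLA is met, whereas the whole-application strategy migrates every service; the function names and latency values are illustrative only and do not come from the paper’s simulator.

```python
def per_service_migrations(current: list[float], migrated: list[float], sla: float) -> int:
    """Migrate services one at a time in topological order, stopping as soon as
    the application's end-to-end latency meets its SLA."""
    latency = sum(current)
    count = 0
    for before, after in zip(current, migrated):
        if latency <= sla:
            break
        latency += after - before
        count += 1
    return count


def whole_application_migrations(current: list[float], sla: float) -> int:
    """Migrate every service of the application once its latency exceeds the SLA."""
    return len(current) if sum(current) > sla else 0


# Per-service latency contributions (ms) before and after migration: hypothetical values
current = [30.0, 10.0, 10.0]
migrated = [5.0, 5.0, 5.0]
print(per_service_migrations(current, migrated, sla=30.0))  # 1
print(whole_application_migrations(current, sla=30.0))      # 3
```

Here, migrating only the first service already brings the latency from 50 ms down to 25 ms, within the 30 ms SLA, so the per-service strategy performs one migration instead of three.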

7 CONCLUSIONS

As edge computing becomes more popular, infrastructure providers are expected to emerge that rent edge resources on a pay-as-you-go basis, as in the cloud, allowing organizations of all sizes to benefit from edge computing without having to manage their own infrastructure (Anglano et al., 2020).

Previous studies pursue different goals when allocating resources in federated edges, such as maximizing profit by managing the cooperation among infrastructure providers. However, existing solutions overlook that providers may implement distinct data protection policies, which limits the resources suitable for hosting services with strict privacy requirements, such as databases and payment gateways.

To address these limitations of the state of the art, this paper presents Argos, a heuristic algorithm that migrates microservice-based applications according to users’ mobility in federated edges while considering services’ privacy requirements and users’ trust levels in infrastructure providers. Experimental results demonstrate that Argos outperforms state-of-the-art approaches, reducing SLA violations by 16.98% and privacy violations by 7.95% compared to the strongest baseline (Faticanti et al., 2020). As future work, we intend to extend our heuristic to minimize



the allocation cost of privacy-sensitive microservice-based applications on federated edges.

ACKNOWLEDGEMENTS

This work was supported by the PDTI Program, funded by Dell Computadores do Brasil Ltda (Law 8.248/91). The authors acknowledge the High-Performance Computing Laboratory of the Pontifical Catholic University of Rio Grande do Sul for providing resources for this project.

REFERENCES

Ahmed, E., Gani, A., Khan, M. K., Buyya, R., and Khan, S. U. (2015). Seamless application execution in mobile cloud computing: Motivation, taxonomy, and open challenges. Journal of Network and Computer Applications, 52:154–172.

Anglano, C., Canonico, M., Castagno, P., Guazzone, M., and Sereno, M. (2020). Profit-aware coalition formation in fog computing providers: A game-theoretic approach. Concurrency and Computation: Practice and Experience, 32(21):e5220.

Aral, A. and Brandić, I. (2020). Learning spatiotemporal failure dependencies for resilient edge computing services. IEEE Transactions on Parallel and Distributed Systems, 32(7):1578–1590.

Aral, A., Demaio, V., and Brandić, I. (2021). Ares: Reliable and sustainable edge provisioning for wireless sensor networks. IEEE Transactions on Sustainable Computing, pages 1–12.

Bai, F. and Helmy, A. (2004). A survey of mobility models. Wireless Adhoc Networks. University of Southern California, USA, 206:147.

Barabási, A.-L. and Albert, R. (1999). Emergence of scaling in random networks. Science, 286(5439):509–512.

Dijkstra, E. W. (1959). A note on two problems in connexion with graphs. Numerische Mathematik, 1(1):269–271.

Faticanti, F., Savi, M., De Pellegrini, F., Kochovski, P., Stankovski, V., and Siracusa, D. (2020). Deployment of application microservices in multi-domain federated fog environments. In International Conference on Omni-layer Intelligent Systems, pages 1–6. IEEE.

He, T., Khamfroush, H., Wang, S., La Porta, T., and Stein, S. (2018). It’s hard to share: Joint service placement and request scheduling in edge clouds with sharable and non-sharable resources. In International Conference on Distributed Computing Systems, pages 365–375. IEEE.

He, X., Jin, R., and Dai, H. (2019). PEACE: Privacy-preserving and cost-efficient task offloading for mobile-edge computing. IEEE Transactions on Wireless Communications, 19(3):1814–1824.

Li, T., Liu, H., Liang, J., Zhang, H., Geng, L., and Liu, Y. (2020). Privacy-aware online task offloading for mobile-edge computing. In International Conference on Wireless Algorithms, Systems, and Applications, pages 244–255. Springer.

Li, Y., Dai, W., Gan, X., Jin, H., Fu, L., Ma, H., and Wang, X. (2021). Cooperative service placement and scheduling in edge clouds: A deadline-driven approach. IEEE Transactions on Mobile Computing.

Mahmud, R., Ramamohanarao, K., and Buyya, R. (2020a). Application management in fog computing environments: A taxonomy, review and future directions. ACM Computing Surveys, 53(4):1–43.

Mahmud, R., Srirama, S. N., Ramamohanarao, K., and Buyya, R. (2020b). Profit-aware application placement for integrated fog–cloud computing environments. Journal of Parallel and Distributed Computing, 135:177–190.

Mor, N., Pratt, R., Allman, E., Lutz, K., and Kubiatowicz, J. (2019). Global data plane: A federated vision for secure data in edge computing. In International Conference on Distributed Computing Systems, pages 1652–1663. IEEE.

Qian, Y., Hu, L., Chen, J., Guan, X., Hassan, M. M., and Alelaiwi, A. (2019). Privacy-aware service placement for mobile edge computing via federated learning. Information Sciences, 505:562–570.

Satyanarayanan, M. (2017). The emergence of edge computing. Computer, 50(1):30–39.

Satyanarayanan, M., Bahl, P., Caceres, R., and Davies, N. (2009). The case for VM-based cloudlets in mobile computing. IEEE Pervasive Computing, 8(4):14–23.

Wang, W., Ge, S., and Zhou, X. (2020). Location-privacy-aware service migration in mobile edge computing. In Wireless Communications and Networking Conference, pages 1–6. IEEE.

Xu, X., He, C., Xu, Z., Qi, L., Wan, S., and Bhuiyan, M. Z. A. (2019). Joint optimization of offloading utility and privacy for edge computing enabled IoT. IEEE Internet of Things Journal, 7(4):2622–2629.

Yao, H., Bai, C., Zeng, D., Liang, Q., and Fan, Y. (2015). Migrate or not? Exploring virtual machine migration in roadside cloudlet-based vehicular cloud. Concurrency and Computation: Practice and Experience, 27(18):5780–5792.

Zhu, D., Li, T., Liu, H., Sun, J., Geng, L., and Liu, Y. (2021). Privacy-aware online task offloading for mobile-edge computing. Wireless Communications and Mobile Computing, 2021.
