Towards a Work Task Simulation Supporting Training of Work Design Skills during Qualification-based Learning

Ramona Srbecky¹, Michael Winterhagen¹, Benjamin Wallenborn³, Matthias Then³, Binh Vu¹, Wieland Fraas², Jan Dettmers² and Matthias Hemmje¹

¹ Department of Multimedia and Internet Applications, FernUniversität Hagen, Germany

² Department of Work and Organizational Psychology, FernUniversität Hagen, Germany

³ Zentrum für Digitalisierung und IT (ZDI), FernUniversität Hagen, Germany

Keywords: QBLM, Qualifications-based Learning, Learning Analytics, Work Task Simulation, Work Design,

Serious/Applied Gaming, ACT-R, Applied Games, Game-based Learning, Simulation-based Learning,

Competence-based Knowledge Space Theory.

Abstract: This paper describes a novel approach towards integrating work task simulation-based training of skills related

to configuring relevant features for work design with the Qualifications-Based Learning Model (QBLM)

approach. To achieve this, nine psychologically relevant work design characteristics from work content,

workflow/organization, and social relations can be manipulated in the simulated work training tasks and their

training context. The concretization of these work design characteristics requires extensive psychological

testing and fine-tuning of the parameters for simulating the respective working conditions. For this purpose,

Kirkpatrick's evaluation model from 1998 will be used. Therefore, the existing approach of QBLM will be

used to develop an Applied Game for a simulation of work tasks. The existing tools and systems for QBLM

will be extended by a QBLM-oriented gaming and learning analytics framework and the approach of QBLM-

based Structural Didactical Templates. Besides the relevant state of the art, the conceptual modelling for the

approach as well as a first set of visual prototypes of the system image will be presented following a user-centered design methodology. Furthermore, a cognitive walkthrough of the visual prototype will be

performed to support a first formative evaluation. The paper concludes with a summary and the remaining

challenges of the approach.

1 INTRODUCTION

A central content focus of the study module "Work

and Organizational Psychology," in the bachelor's

degree program in Psychology at the University of

Hagen (FeU) is job design (LG AuO, 2021), which

deals with the effect of work on the working person.

The teaching of the theoretical basics of psychological work design, which is mainly done by reading and discussing relevant theories and research results, unfortunately mostly lacks the experience of practical job design training during these studies. Such experience can only be gained by going through a simulated training situation, trying out job design skills, and experiencing the effects of different forms of job design. Direct confrontation, one's own experience, and trying out one's own job design skills on the one hand, as well as intensive reflection on what is experienced during such a work design simulation on the other hand, are essential prerequisites for the acquisition of action competencies (Kolb, 1984), i.e., job design skills as

they are also demanded within the framework of the

recommendations of the German Psychological

Society for the design of psychology studies (cf.

Erdfelder et al., 2021; Spinath et al., 2018).

According to (Ulich, 2005), the main tasks of

work psychology consist of analysis, evaluation, and

design of work activities and systems according to

defined human criteria. Accordingly, theories and

models are taught in the study of work psychology

that explain and predict the effect of specific

characteristics of work (characteristics of work

content, work processes, or social interactions,

(GDA, 2018)) on people, their work performance,

their motivation, and their health (e.g., action

regulation theory, job demand-control model, JDR

model, effort-reward imbalance; cf. Lehrbrief Modul AF A Grundlagen und Arbeitspsychologie: p. 66, p. 126f, p. 132ff.). The topic has gained relevance due to an increased social focus on psychological stress at work, which has also been reflected in consideration of the subject in the Occupational Health and Safety Act. In addition, a growing field of work for (work) psychologists has emerged in this area.

Srbecky, R., Winterhagen, M., Wallenborn, B., Then, M., Vu, B., Fraas, W., Dettmers, J. and Hemmje, M.
Towards a Work Task Simulation Supporting Training of Work Design Skills during Qualification-based Learning.
DOI: 10.5220/0011072800003182
In Proceedings of the 14th International Conference on Computer Supported Education (CSEDU 2022) - Volume 2, pages 534-542
ISBN: 978-989-758-562-3; ISSN: 2184-5026
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The primary learning and training objective of the

planned didactic innovation is acquiring

qualifications based on competencies and skills in

work psychology in the sense of analyzing,

evaluating, and designing work tasks according to

defined human criteria (Ulich, 2005). In addition,

going through the corresponding job design

simulation task and the subsequent reflection should

lead to a deeper and better understanding of the

differentiation between structural and behavioral

prevention, which is central in occupational health

psychology, as well as condition-related and person-

related interventions (Lohman-Haislah, 2012).

Through minor adjustments, other learning objectives

can also be focused on (e.g., employees' leadership,

communication organization, and information flow).

Methodological qualifications based on

corresponding competencies and skills are also

developed through a systematic work analysis, which

the students have to carry out following a work task

they have experienced themselves. The development of digital technologies in the form of so-called Serious/Applied Gaming (SG/AG) (Marr, 2010) allows the use of computer-based simulations to complete work task trainings virtually and thereby gain experiences that are typically only possible in practical real-world training activities. These simulated, i.e., virtual, experiences are at least similar to those in real life and allow reflection on unexpected or surprising results.

Several Problem Statements (PS) can be derived

from the objectives and motivation mentioned above.

PS1 is that currently, the Qualifications-Based

Learning Model (QBLM) (Then, 2020; Wallenborn,

2018) cannot support the assessment, i.e.,

measurement and mapping of the learning objectives

and learning successes of the game/simulation

sequences within an integrated Applied Learning

Game (ALG). The Learning Management System

(LMS) used at the FeU is Moodle (Moodle.org,

2021). This LMS already offers digital learning content at the FeU. Therefore, the already existing LMS will be used in this work (Srbecky, 2021b). A didactical structural template supporting QBLM can be used as a starting point to measure the success of

achieving learning objectives regarding competencies

together with the success of training skills on

different proficiency levels in a game-based

simulation and training activity. PS2 is that

professionally relevant action competencies, i.e.

skills at certain professionally relevant proficiency

levels can so far only be acquired in the context of

practical experiences after the theoretical studies.

Still, at this point, there is a lack of appropriate

supervision to reflect on the experiences adequately

and to classify them in the scientific state of

knowledge correctly. Especially the distance study

programme at the FeU (FuH, 2021) is confronted with

special challenges regarding Competence/

Qualification (CQ) orientation (cf. Erdfelder et al.,

2021). In this context, the term CQ stands for both competences and qualifications (Then, 2020).

Accordingly, didactic methods must be found and

created here in particular, which allow students to a)

gain experience of different ways of working, b)

enable the independent design of work

characteristics, and c) reflect on these experiences

and their classification in theoretical models already

during their (distance) studies. PS3 is that currently,

it is impossible to assess the gained factual

knowledge, i.e., competencies, and action

knowledge, i.e., professional skills at certain

proficiency levels in a work task simulation regarding

the achievement of a relevant professional action CQ

in the sense of a QBLM-based CQ (Wallenborn,

2018; Then, 2019). The CQs gained through digital

innovation will be recorded and attested, thus

obtaining study evidence. PS4 is that there is

currently no possibility to determine the users' free

text input regarding the fulfilment of the task and the

achievement of a CQ. In the simulation context, the

users have to complete various tasks and orders. For

this purpose, the players in the work task simulation

have to answer messages from potential fellow

players. When answering the messages, it is to be

determined to what extent the answer fulfils the tasks

and orders of the message. PS5 is that the tasks given

in the applied game are static and hardcoded into the

game. To adapt certain tasks, an authoring tool for the

simulation and training tasks for applied games is

needed. Previous publications (Srbecky, Frangenberg, et al., 2021a, 2021b) stated that in-game tasks currently cannot be modified and assigned to game scenes and CQs without significant effort. The PSs mentioned above result in the

following Research Questions (RQs). RQ1: "How

can QBLM-based structural course-patterns be

extended with didactical structural patterns to support

measuring learning objectives and success within the

work simulation?", RQ2: "How can professionally

relevant action competencies be gained during studies

using SG/AG technologies to simulate practical

training and work experience?", RQ3: "How can the

factual domain knowledge and action-oriented

professional skills on different proficiency levels be

measured during the simulation, based on the

learners’ behaviour during the theoretical learning

and practical training, to assess whether the learners

have achieved certain CQs?", RQ4: "Can an

algorithm be implemented which automatically

analyses the free texts entered by the players during reflection on their experience regarding the fulfilment of orders and the corresponding tasks?", and RQ5: "Can an authoring environment for tasks in an applied

game be developed with which it is possible to create,

edit, and map training tasks to certain task simulation

game scenes and corresponding CQs?"

Based on the research methodology of [Ncp90],

the following Research Objectives (ROs) were

derived from the RQs. RO1 is assigned to the

Observation Phase (OP). This phase identifies a

suitable CQ model to map the learning outcomes to

QBLM CQs. Also, suitable systems and tools are

identified. RO2 is assigned to the Theory Building

Phase (TBP). A concept is designed that shows what

system components and interfaces are needed. The

System Development Phase (SDP) moves the concept

into a prototype and is assigned to RO3. RO4 is assigned to the Evaluation Phase (EP), in which the result of the SDP is evaluated in the context of a Cognitive Walkthrough (CW) (Wilson, 2013). In this phase, all RQs are evaluated.

The remainder of this paper is structured

according to the ROs. This means that in the State-of-

the-Art section, the OP is described. In the

Conceptual Design section, the TBP is described, and

the SDP is presented in the Proof-of-Concept implementation section. The EP is presented in the Evaluation section. Finally, the paper concludes with a summary and indications of future developments.

2 STATE OF THE ART

The previous section has already mentioned some

research projects and software systems related to the

research goals. In the following, the most important

are described in more detail.

Different approaches to simulating work tasks exist in experimental studies in work psychology.

Here, isolated single work tasks are simulated (e.g.,

the simulation of a computer store with the task of

assembling ordered hardware packages within a

certain cost; Hertel et al., 2003). Building on the job

demand-control model (Karasek, 1979),

systematically defined task characteristics (time

pressure, scope of action) are manipulated, and the

effect is tested (Häusser et al., 2011). Although the described simulation works well in laboratory settings, more complex, lifelike work tasks are needed for (online) teaching, both to keep motivation high among students and to demonstrate more complex features of job design, or at least to better reflect the central features of job design (GDA, 2018) at work.

The development of the planned digital teaching innovation can rely on extensive previous experience and pre-existing tools and methods of the Department of Multimedia and Internet Applications (MMIA) of the FeU (LG MMIA, 2021), both conceptually and didactically and regarding the technical implementation. The MMIA department already has applied game environments as well as simulation and training environments at its disposal, which have been developed in the projects Realising an Applied Gaming Eco-system (RAGE) (European Commission, 2020) and Immerse2Learn (Immerse2Learn, 2021). In the

Immerse2Learn project (Immerse2Learn, 2021), the

game environments were used to integrate simulation

and training environments for vocational and

industrial training applications into a Moodle LMS

(Moodle.org, 2021). Tools were also developed in the

project RAGE to integrate the planned applied game

environment with the Moodle environment. In

addition, the RAGE project created a course authoring

environment (Course Authoring Toolkit, CAT)

(Wallenborn, 2018). The CAT can be used to provide

instruction to participants in the form of a course. This

uses the QBLM approach (Wallenborn, 2018; Then,

2019), which allows learning environments to be

designed dynamically so that learners' prior knowledge

can be addressed, as it supports both a CQ-Profile

(CQP) for the learners and a CQP for the instructional

materials (Then, 2020). This would be used mainly in the instruction for the task simulation: the participants are prepared and instructed individually for the task, e.g., by separately training and coaching them in the different working tools and contexts.

Furthermore, a pattern-based course-author

support approach based on course patterns and

Didactical Structural Templates was elaborated in

Immerse2Learn. This means that basic patterns of

courses or also basic patterns of didactical structures

within courses and applied games can be offered to

course authors and game developers (Winterhagen,

Heutelbeck, et al., 2020; Winterhagen, Hoang,

Lersch, et al., 2020; Winterhagen, Hoang,

Wallenborn, et al., 2020; Winterhagen, Salman, et al.,

2020; Hoang 2020; Lersch 2020). This mechanism

will allow instructors to design and integrate the

course environments and work simulation

environments based on course patterns and Didactical

Structural Templates within the present project.

In recent years the research interest in Learning

Analytics has increased (Wagner, 2012). As shown in (Freire, 2016), one of the most widely used and precise descriptions of Learning Analytics is the definition from the first Learning Analytics and Knowledge (LAK) Conference in 2011: "Learning Analytics is

the measurement, collection, analysis, and reporting

of data about learners and their contexts, for purposes

of understanding and optimizing learning and the

environments in which it occurs" (SoLAR, 2011).

The given definition and the combination of Adaptive Control of Thought-Rational (ACT-R) theory (Anderson, 2000) and Competence-based Knowledge Space Theory (CbKST) (Albert, 1999) recommended in (Albert, 2007) will build the foundation for the design of a framework (Greching, 2010) for Learning Analytics at the MMIA department. This framework will automate

the measurement and mapping of learners' outcomes

to CQs (Srbecky, Frangenberg, et al., 2021a, 2021b;

Srbecky, Krapf, et al., 2021a, 2021b; Srbecky, Then,

et al., 2021). Based on the definition of Learning

Analytics from (SoLAR, 2011), the Learning

Analytics mechanism will measure learners' follow-

up/simulated training success and map it to training

outcomes and corresponding qualifications in terms

of factual knowledge (competencies) and action

knowledge (skills and proficiency levels) on the topic

area of job design. The factual knowledge and action

knowledge refer to the definitions of declarative and

procedural knowledge of the ACT-R theory

(Anderson, 2000). Action knowledge refers to the

procedural knowledge that indicates how something

should be executed. This is the knowledge about the

appropriate execution of an action (Schönpflug,

2008). The factual knowledge refers to the declarative

knowledge of the ACT-R theory (Urhahne, 2019). To accomplish a task or solve a problem, an interplay of both kinds of knowledge is needed (Albert, 2007).

As shown in (Freire, 2016), an extension of Gaming Analytics with Learning Analytics leads to a better understanding of the actual learning of the players. In this context, Gaming Analytics refers to the definition of (Seif El-Nasr et al. 2013): "Gaming analytics is the application of

analytics to game development and research. The

goal of game analytics is to support decision making,

at operational, tactical and strategic levels and within

all levels of organization - design, art, programming,

marketing, user research, etc." (Seif El-Nasr et al.

2013). Various data can be collected in the context of

gaming analytics. According to (Freire, 2016), this

data can be divided into the two categories of

technical data of a game and user and experience data

of a game. As technical data, (Freire, 2016) mentions data such as the code itself or the reported bugs. Data about memory usage or system performance are also covered by the term technical data according to (Freire, 2016). The user and experience data can be described more precisely as game metrics (Freire, 2016). According to (Seif El-Nasr et al. 2013), game metrics "are quantitative measures of attributes of objects." The raw player behavior data tracked in the game can be transformed into game metrics such as "total playtime or daily active users" (Seif El-Nasr et al. 2013).
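The transformation of raw behavior data into game metrics can be illustrated with a minimal Python sketch. The session records, the field layout, and both function names are hypothetical and serve only as an illustration; they are not taken from the RAGE components or from any existing tracker.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical raw behaviour events: (player_id, session_start, session_end).
raw_sessions = [
    ("p1", "2022-03-01T10:00:00", "2022-03-01T10:45:00"),
    ("p2", "2022-03-01T11:00:00", "2022-03-01T11:30:00"),
    ("p1", "2022-03-02T09:00:00", "2022-03-02T09:20:00"),
]

def total_playtime_minutes(sessions):
    """Sum the session durations per player, in minutes."""
    totals = defaultdict(float)
    for player, start, end in sessions:
        delta = datetime.fromisoformat(end) - datetime.fromisoformat(start)
        totals[player] += delta.total_seconds() / 60
    return dict(totals)

def daily_active_users(sessions):
    """Count the distinct players who started a session on each day."""
    players_per_day = defaultdict(set)
    for player, start, _end in sessions:
        day = datetime.fromisoformat(start).date().isoformat()
        players_per_day[day].add(player)
    return {day: len(players) for day, players in players_per_day.items()}

playtime = total_playtime_minutes(raw_sessions)
dau = daily_active_users(raw_sessions)
```

Both metrics are plain aggregations over the tracked events, which is why they can be computed without any knowledge of the learning content.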

For the combination of Gaming Analytics and Learning Analytics, the so-called Game Learning Analytics, (Freire, 2016) therefore suggests that "the educational goals of Learning Analytics and the tools and technologies from Game Analytics should be combined" (Freire, 2016). For the purposes of this paper, the

procedural knowledge should be measured and

evaluated using Gaming Analytics. Learning Analytics

should be combined with the outcomes and results of

Gaming Analytics to analyze declarative knowledge.

This is exactly where the planned digital teaching

innovation comes in. Building on existing task

simulations, a complex, generalizable work task

context that is relevant to many domains of work is to

be simulated. This is to be achieved digitally within an

AG environment that enables students to experience

and reflect on content-related, organization-related,

workflow-related, and social features of job design.

They should be enabled to reflect on their experiences for themselves after completing the simulated training tasks in a playful manner. This reflection should also enable them to develop new work design configuration proposals, to test their own design variants, and to analyze the respective effects. The digital task

simulation will be based on the idea of the pre-existing

"computer store task" (Hertel et al., 2003) but

integrated into a social context and extended to include

interaction with colleagues and later superiors.

Furthermore, the task simulation is to be supplemented by additional tasks, such as research tasks and the processing of colleague inquiries, and made more realistic through additional complexity. In addition to the processing of

customer orders, inquiries from supposedly

cooperating colleagues are to be considered, and thus a

complex structure of target work context criteria is to

be achieved.

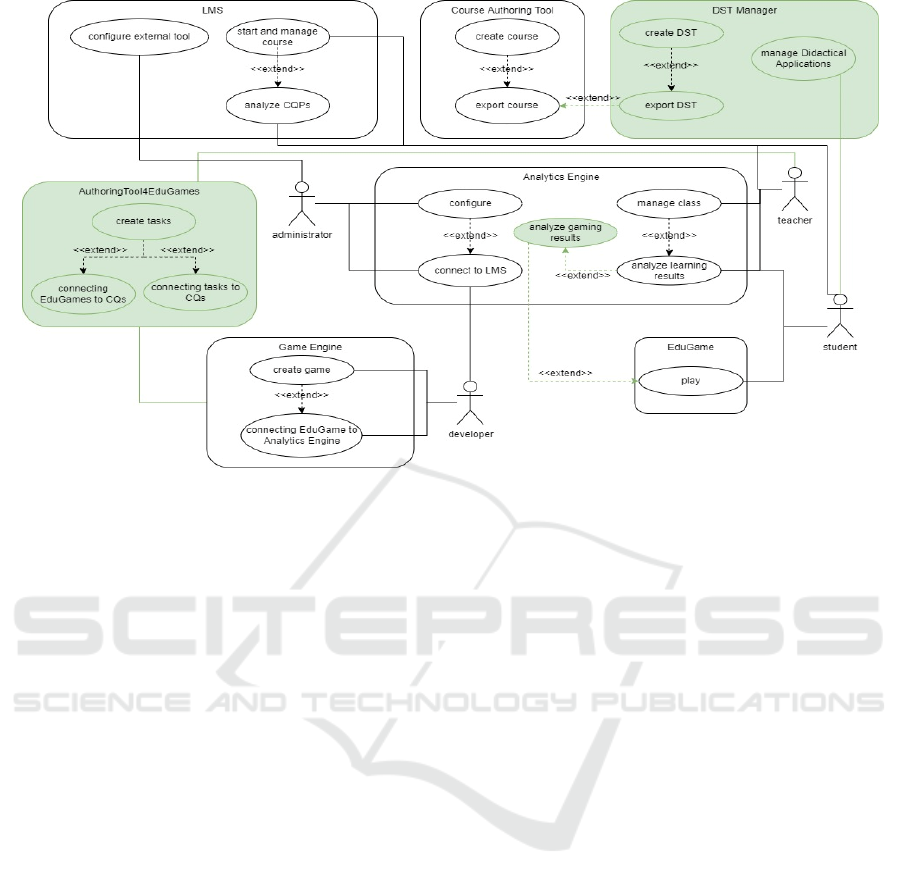

Figure 1: Use cases for the system.

3 CONCEPTUAL DESIGN

In the following section, the technical and didactical

concepts of our approach will be derived.

3.1 Overall Technical Concept and Use Cases

In the following section, the use cases for the system

(see figure 1) will be described and explained. To

create the course, at first, a so-called Didactical

Structural Template (DST) has to be created. This

new DST will be implemented by means of a course

or a course template, which can be edited with the

CAT. To support this approach in a QBLM-based way, a QBLM-capable course pattern is used, which first converts the learning contents into course units. The corresponding Moodle course then checks to what extent the learning contents have been understood, i.e., to what extent the prerequisites for participation in the actual work simulation have been met. This can, e.g., be achieved through self-testing

tasks in the form of automated QBLM assessment

functions (pre-testing). These initial basic QBLM

CQs can then already be stored in the students' QBLM

CQP with the help of the QBLM approach and the

initial CQs can afterwards be used for later progress

evaluation. Classically, the assessments could also be

carried out through a Moodle learning quiz (Moodle

docs, 2021).
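The pre-testing step described above can be sketched in a few lines of Python. The class `CQProfile`, the CQ identifiers, the proficiency level 1, and the pass mark of 0.6 are assumptions made purely for illustration; they do not reflect the actual QBLM data model or the Moodle API.

```python
from dataclasses import dataclass, field

@dataclass
class CQProfile:
    """Hypothetical stand-in for a learner's QBLM CQ-Profile (CQP)."""
    learner_id: str
    achieved: dict = field(default_factory=dict)  # CQ id -> proficiency level

def record_pretest(profile, pretest_scores, pass_mark=0.6):
    """Store every CQ whose pre-test score reaches the (assumed) pass mark."""
    for cq_id, score in pretest_scores.items():
        if score >= pass_mark:
            # Level 1 marks an initial, basic CQ kept for later progress evaluation.
            profile.achieved.setdefault(cq_id, 1)
    return profile

def may_enter_simulation(profile, prerequisite_cqs):
    """The simulation unlocks only once all prerequisite CQs are in the CQP."""
    return all(cq in profile.achieved for cq in prerequisite_cqs)

profile = CQProfile("student-1")
record_pretest(
    profile,
    {"job-demand-control-model": 0.8, "action-regulation-theory": 0.4},
)
unlocked = may_enter_simulation(
    profile, ["job-demand-control-model", "action-regulation-theory"]
)
```

In this sketch the learner has to repeat the self-test for the second topic before the work simulation becomes available.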

Before playing the applied game, the teachers, as

shown in figure 1, need to create the work simulation

tasks and their specific job design parameter

configuration in an authoring tool for applied games.

Here the teachers need to be enabled to create the

tasks for the applied game and map the tasks to the

game scenes including the respective job design

parameter configuration. Also, the CQs from the CQ

framework should be mapped to the tasks. In addition, an export function for exporting the tasks and the corresponding mapping should be created. This functionality will be needed to analyze the achievement of the CQs. Finally, import and export functionality for the game scene files is needed to map the tasks to the applied game scenes.
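One possible shape of such an export is sketched below in Python. The record `TaskMapping`, its field names, and the use of JSON as the exchange format are assumptions for illustration only; the authoring tool itself is not yet specified at this level of detail.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class TaskMapping:
    """Hypothetical authoring-tool record linking a task to a scene and CQs."""
    task_id: str
    description: str
    scene_id: str
    cq_ids: list
    job_design_parameters: dict  # e.g. {"time_pressure": "high"}

def export_mappings(mappings):
    """Serialise all task mappings to a JSON string for later CQ analysis."""
    return json.dumps([asdict(m) for m in mappings], indent=2, sort_keys=True)

def import_mappings(payload):
    """Rebuild TaskMapping objects from an exported JSON string."""
    return [TaskMapping(**item) for item in json.loads(payload)]

mappings = [
    TaskMapping(
        task_id="task-1",
        description="Create an offer for a hardware package",
        scene_id="virtual-desktop",
        cq_ids=["cq-work-analysis"],
        job_design_parameters={"time_pressure": "high", "work_interruptions": "low"},
    )
]
exported = export_mappings(mappings)
restored = import_mappings(exported)
```

Keeping the task, the scene, the CQs, and the job design parameters in one record means that the analytics side only needs the exported file to relate a measured result to a CQ.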

Once the students have acquired the relevant

theoretical knowledge with the study manuals, they

can start playing the work task simulation to

experience the effects of their work design and

corresponding parameter configuration by

themselves. Afterwards, students can re-start the

actual ALG at any time in the sense of an additional

training "exercise" or training task "submission".

Within this automated ALG, a QBLM-based

Didactical Structural Template needs to be used,

which extends the QBLM course pattern of the

Moodle course by measuring the learning objectives

and learning successes of the game/simulation

sequences within the ALG. In particular, the active

parts of the ALG (reflection of the professional job

design, systematic work analyses, and generation of

alternative work design configuration solutions) can

be used as the "exercise outcome". This result is

automatically reported back to the Moodle system so

that it can automatically decide whether the

"exercise/final task" has been passed and can be

continued in the course, or the "exercise/final task"

needs to be repeated to achieve a different/better

outcome. As a prerequisite for the analysis of the data,

a CQ framework has to be defined and maintained in

the CAT. After this, appropriate measurement values

for the game metrics have to be defined and

implemented for the game. The gained action knowledge should be evaluated with the help of the game metrics and gaming analytics. Potential metrics are the number of processed customer requests, the duration of processing a request, the number of words, etc. The metrics will be defined and explained in more detail in later publications. The analytics components from the RAGE project (European Commission, 2020) will be used and further evaluated to measure the game metrics. To measure the game metrics for the fulfillment of customer requests, an algorithm needs to be implemented that can analyze the free-text answers based on sample solutions. After the game is played, players have to reflect on the measured game metrics. Here, the factual knowledge is measured using learning analytics. The players need to explain how their

results came about by applying the theoretical

knowledge they gained before playing the applied

game. These explanations are free-text answers, which should be analyzed automatically regarding the achievement of CQs. If a CQ has been achieved, it should then be transferred to and entered in the CQP.
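The comparison of a free-text answer with a sample solution is not yet specified. As a deliberately simple stand-in, the following Python sketch uses plain word overlap; the threshold of 0.5, the CQ identifier, and the representation of the CQP as a set are assumptions for illustration, and a real implementation would likely need a more robust text-analysis method.

```python
import re

def tokens(text):
    """Lower-case word set; a deliberately simple normalisation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def coverage(answer, sample_solution):
    """Share of the sample solution's words that also occur in the answer."""
    expected = tokens(sample_solution)
    if not expected:
        return 0.0
    return len(expected & tokens(answer)) / len(expected)

def update_cqp(cqp, cq_id, answer, sample_solution, threshold=0.5):
    """Enter the CQ into the profile when coverage reaches the assumed threshold."""
    if coverage(answer, sample_solution) >= threshold:
        cqp.add(cq_id)
    return cqp

sample = "time pressure and frequent interruptions reduced performance"
answer = "My performance dropped because of time pressure and interruptions."
cqp = update_cqp(set(), "cq-work-analysis", answer, sample)
```

Word overlap ignores synonyms and negation, so it can only serve as a first baseline against which a later algorithm is compared.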

3.2 Didactical Elements

A central element of the planned learning game is the

students' own experience of well and poorly designed

work tasks in terms of the specific manifestation of

psychologically relevant work characteristics (e.g.,

psychological stress). For this purpose, the students

will process one or more specifically designed work

tasks which are digitally simulated. Psychological

stresses, such as interruptions, time pressure,

variability, or social support, are systematically

varied, and their effects are thus made tangible.

Furthermore, reflection on one's own experience and the effect of the processed work tasks, reflection on causes (person-related and condition-related), and the independent generation and testing of design solutions are promoted by targeted open questions.

These questions are to be answered utilizing the

previously gained theoretical knowledge. For this

purpose, the measured values are to be explained

based on theoretical knowledge. Finally, the students

can experience the exact effect of the different design

solutions regarding work performance, motivation,

and stress based on their own work example and

receive processed feedback.

In the end, an explanation of the overall process

is provided for all simulation elements. It explains

how specific psychological stresses manifested

themselves in concrete terms in the simulated work

situation and what alternative concrete designs would

have been possible. In this way, the understanding is

sharpened that the design of working conditions is not

unchangeable, but that there is almost always scope

for design, which must be found in the practice of job

design (e.g., in risk assessment). In addition to the

pure recording of the characteristics of specific work

features, the students also have to reflect and evaluate

the results and independently develop suggestions for

improvement. Psychologically relevant task features

(e.g., time pressure, work interruptions, information

overload, social support, feedback, task variability)

can be systematically manipulated from the outside.

After the processing, the feedback on the results, the players' own reflection on individual and condition-related causes for specific results, and the debriefing with a systematic analysis of the work situation and the independent derivation of solution suggestions for a better work design take place.
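The systematic manipulation of task features can be pictured as a small configuration object. The sketch below, in Python, covers only the six features named as examples above; the three-step scale and the names `WorkDesignConfig` and `vary` are assumptions, since the concrete parameterization is still subject to psychological testing.

```python
from dataclasses import dataclass, fields

LEVELS = ("low", "medium", "high")  # assumed three-step scale

@dataclass(frozen=True)
class WorkDesignConfig:
    """Hypothetical parameter set for one simulated work task."""
    time_pressure: str = "medium"
    work_interruptions: str = "medium"
    information_overload: str = "medium"
    social_support: str = "medium"
    feedback: str = "medium"
    task_variability: str = "medium"

    def __post_init__(self):
        # Reject any level outside the assumed scale.
        for f in fields(self):
            value = getattr(self, f.name)
            if value not in LEVELS:
                raise ValueError(f"{f.name}: unknown level {value!r}")

def vary(config, **changes):
    """Return a copy with selected features changed, leaving the rest fixed."""
    current = {f.name: getattr(config, f.name) for f in fields(config)}
    current.update(changes)
    return WorkDesignConfig(**current)

baseline = WorkDesignConfig()
stressful = vary(baseline, time_pressure="high", social_support="low")
```

Changing one or two features while holding the others constant is what allows the effect of a single work characteristic to be experienced and discussed in the debriefing.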

4 IMPLEMENTATION

The previously mentioned software components and

systems are used and extended to realize the

conceptual design. The basic gameplay and the

corresponding mock-ups for the graphical user

interfaces are shown below. Future publications will

describe the detailed realization of the software

components and interfaces in more detail.

The simulated task is a typical processing task in

which customer inquiries are fulfilled to the fullest

possible satisfaction by transforming the

requirements described in the customer's inquiry into

concrete offers. An inquiry, e.g., a request for a hardware package, consists of items such as a computer, monitor, and printer with specific requirements, price expectations, and delivery time specifications. To process the request and create a suitable offer, the players must research certain information. For this purpose, they first research relevant information in a file directory with lists and descriptions for an offer and, second, ask specialized colleagues for specific information.

In addition to creating their own offer, the players

have to answer parallel inquiries from colleagues who

are supposed teammates with the same tasks about

information only available to the players. The

supposed colleagues need to process their customer

orders. The goal is to process as many orders as

possible, to achieve a high level of customer

satisfaction by fulfilling the requirements as best as

possible, and to achieve a high level of colleague

satisfaction by processing the corresponding requests

quickly. For processing, the players have a desktop with the following basic tools at their disposal: an email program for receiving customer inquiries and sending offers, a messenger/chat tool for communication with colleagues, a file explorer/virtual library with information on various products, and an editor to create offers.

To ensure sufficient qualification and the same

starting point of the players for the actual task

simulation, the players are first familiarized with the

operation of the basic tools and then trained in the

processing of the actual work tasks of the work

simulation environment in a more comprehensive

tutorial. This is followed by a pre-test, in which the skills in the operation of the basic tools and the actual processing of the task are tested. In addition, the current stress level (fatigue) is recorded as the baseline for determining the stress level later on. The start screen of the applied game environment of the work simulation contains the

virtual desktop with the basic tools email program,

messenger, virtual library, and editor, as well as a

clock combined with info panel (number of processed

orders, number of waiting customer orders, colleague

requests, etc.). New customer requests can be read by

opening the email program, and their processing can

be started. For processing, information searches in the

virtual library and inquiries to colleagues via

messenger are necessary. Based on all the

information obtained in this way, an offer as suitable

as possible can then be created in the editor and sent

to the customer. At the same time as the players are

processing their own customer orders, inquiries from

colleagues who need information for processing their

customer inquiries are sent via messenger. The player

has to answer these requests by obtaining the desired

information in the virtual library and sending it to

colleagues. During the game phase of about 45 minutes, customer orders and requests from colleagues arrive at different times and must be processed as quickly as possible and in accordance with the requirements. The actual work task should basically

be feasible without any stress. Nevertheless, task

performance is influenced by several systematically

variable work characteristics like psychological

stresses, which thus determine either positively or

negatively the effect of the work task on the players.

Finally, the characteristics of the tasks presented here

are initially set at random and can later be

systematically varied by the players.
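The arrival of customer orders and colleague requests during the game phase could be sketched as follows. This is only a minimal illustration and not the planned implementation: the names `Event` and `build_schedule`, the event counts, and the uniformly random arrival times are assumptions. Only the duration of about 45 minutes and the two event types are taken from the description above.

```python
import random
from dataclasses import dataclass

GAME_PHASE_MINUTES = 45  # duration of the game phase stated in the text


@dataclass(frozen=True)
class Event:
    minute: float  # arrival time in minutes after the start of the game phase
    kind: str      # "customer_order" or "colleague_request"


def build_schedule(n_orders, n_requests, seed=None):
    """Return the events of one game phase, sorted by arrival time.

    Arrival times are drawn uniformly at random (an assumption); the
    description only states that events arrive at different times.
    """
    rng = random.Random(seed)
    kinds = ["customer_order"] * n_orders + ["colleague_request"] * n_requests
    events = [Event(rng.uniform(0, GAME_PHASE_MINUTES), kind) for kind in kinds]
    return sorted(events, key=lambda e: e.minute)


# Hypothetical event counts, for illustration only.
schedule = build_schedule(n_orders=6, n_requests=4, seed=1)
for event in schedule:
    print(f"{event.minute:5.1f} min  {event.kind}")
```

A fixed seed makes a schedule reproducible, which would allow different players to be compared under identical arrival patterns.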

After completing the task simulation, the players fill out a short questionnaire on strain (fatigue) and motivation (work engagement). This is followed by feedback on the results regarding work performance (number of orders processed, customer satisfaction, colleague satisfaction), shown as bar charts with comparison bars (norm values), and regarding stress (compared to the baseline before the task) and motivation. The players then reflect on their own results, their own experience, the effects of the work task, and the person-related and condition-related causes of certain results by answering specific open questions in a text field.
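The data behind such a feedback screen could be prepared as in the following sketch. The function name `build_feedback`, the measure names, the numeric scales, and all values are hypothetical; the sketch only illustrates the comparison of each performance measure with a norm value and of the stress level with the baseline recorded before the task.

```python
def build_feedback(performance, norms, stress_before, stress_after):
    """Compare each performance measure with its norm value and the
    post-task stress level with the pre-task baseline."""
    bars = {
        name: {"player": value, "norm": norms[name], "diff": value - norms[name]}
        for name, value in performance.items()
    }
    return {"performance": bars, "stress_change": stress_after - stress_before}


# Hypothetical values, for illustration only.
feedback = build_feedback(
    performance={
        "orders_processed": 7,
        "customer_satisfaction": 4,
        "colleague_satisfaction": 3,
    },
    norms={
        "orders_processed": 6,
        "customer_satisfaction": 4,
        "colleague_satisfaction": 4,
    },
    stress_before=2,
    stress_after=5,
)
print(feedback["performance"]["orders_processed"])
print(feedback["stress_change"])
```

Each entry under `performance` holds the pair of values needed for one bar with its comparison bar.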

5 EVALUATIONS

Before the system is implemented, the visual mock-ups will be evaluated in a Cognitive Walkthrough (CW).

An initial evaluation of the Proof-of-Concept implementation will also be carried out as a CW (Wilson, 2013) by domain experts in the field of Computer Science education. The primary goal of this evaluation is to estimate the productive capacity of the implementation and to guide future development.

In addition, nine psychologically relevant work characteristics from work content, workflow/organization, and social relations can be manipulated in the task. The concretization of these changes requires extensive psychological testing and fine-tuning of the parameters for simulating the working conditions. For the feature "work interruptions", for example: how many work interruptions must occur before the agents speak of a "high intensity of work interruptions"? Answering such questions requires repeated testing with subsequent evaluation and adjustment of the task simulation with regard to the effect of the task features.
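The calibration question for the feature "work interruptions" can be illustrated with a small sketch. It assumes that pilot tests yield, for each tested number of interruptions, a list of ratings of the perceived interruption intensity. The rating scale from 1 to 5, the cut-off value, the pilot data, and the function name `smallest_high_intensity_count` are hypothetical and serve only to make the idea concrete.

```python
from statistics import mean


def smallest_high_intensity_count(ratings_by_count, cutoff):
    """Return the smallest number of interruptions whose mean perceived
    intensity rating reaches the cutoff, or None if no condition does."""
    for count in sorted(ratings_by_count):
        if mean(ratings_by_count[count]) >= cutoff:
            return count
    return None


# Hypothetical pilot data: interruptions per game phase -> ratings (1 to 5).
pilot = {
    2: [1, 2, 2, 1],
    5: [3, 3, 2, 4],
    8: [4, 5, 4, 4],
    12: [5, 5, 4, 5],
}
print(smallest_high_intensity_count(pilot, cutoff=4))
```

In practice, the choice of the cut-off and of the rating instrument would itself be part of the psychological testing described above.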

Even more critical, however, are the tests of the actual effectiveness of the task simulation with regard to the learning objectives. For this purpose, following the evaluation model of Kirkpatrick (1998), an evaluation will be carried out on three of the four levels:

The participants' reactions will be evaluated with

a short questionnaire about the simulation experience

CSEDU 2022 - 14th International Conference on Computer Supported Education


at the first level. The questionnaire addresses the following questions: How do students experience the task? Was the simulation interesting and stimulating? Do the students think they learned something important? At the second level, the so-called learning level, a knowledge test on job design with a randomized wait-control group will be designed. For this purpose, students will be randomly assigned to the control group and later compared against the other students. At the third level, the behaviour level, a transfer test will cover typical applied job design and job analysis problems as well as the assessment of work analysis tools. An evaluation at the fourth level of Kirkpatrick's evaluation scheme (outcomes), e.g., better grades in the final exam or later career success, is not possible for both ethical and practical reasons (no control group, no access to students after the end of the study, and contamination of the target criteria by numerous other factors). In addition to the effectiveness evaluation, a formative evaluation will take place via a qualitative approach (guideline-based interviews with participants) to optimize individual elements of the task simulation.
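The random assignment to the wait-control group at the learning level could be implemented along the following lines. This is a minimal sketch under stated assumptions: the function name `assign_groups`, the student identifiers, and the split into two groups of equal size are hypothetical, since the group sizes are not specified here.

```python
import random


def assign_groups(student_ids, seed=None):
    """Randomly split students into a training group and a wait-control group."""
    rng = random.Random(seed)
    shuffled = list(student_ids)  # copy, so the caller's list is not modified
    rng.shuffle(shuffled)
    half = len(shuffled) // 2     # equal split is an assumption
    return {"training": shuffled[:half], "wait_control": shuffled[half:]}


# Hypothetical student identifiers, for illustration only.
students = [f"s{i:02d}" for i in range(1, 11)]
groups = assign_groups(students, seed=42)
print(groups)
```

Recording the seed together with the study data would make the assignment reproducible.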

6 CONCLUSIONS

This paper introduces a novel approach to a work task simulation that considers relevant features for job design and the QBLM approach. Future work includes the implementation and evaluation of the described systems. In addition, algorithms and concepts for assessing the CQs based on the learning game outcomes and the behaviour of the learners in the game will be designed, implemented, and evaluated. Furthermore, a literature review and evaluations for RQ5 need to be performed. Further evaluations regarding the usage of RAGE Analytics as the Analytics Engine will also be performed. This involves checking whether RAGE Analytics provides all the functionalities necessary for the required tasks. The remaining challenges are the development, integration, and evaluation of the components and systems in the tool landscape of FeU for productive usage, since QBLM-based approaches are currently integrated only into a development and research environment. In addition, the analytics framework and tools need to be developed and integrated. Finally, the approach of the didactical structural templates needs to be integrated and evaluated. To this end, the Applied Game needs to be implemented and evaluated.

REFERENCES

Albert, D., Lukas, J. (1999). Knowledge Spaces: Theories,

Empirical Research, and Applications, Psychology

Press, Mahwah, NJ: Erlbaum.

Albert, D., Hockemeyer, C., Mayer, B., & Steiner, C. M.

(2007). Cognitive Structural Modelling of Skills for

Technology-Enhanced Learning. In J. Spector, D.

Sampson, T. Okamoto, Kinshuk, S. Cerri, M. Ueono &

A. Kashihara (Eds.), Proceedings of the 7th IEEE

International Conference on Advanced Learning

Technologies (ICALT). Niigata, Japan (pp. 322-324).

IEEE Computer Society.

Anderson, J.R., & Schunn, C.D. (2000). Implications of the

ACT-R Learning Theory: No Magic Bullets. In R.

Glaser (ed.), Advances in instructional psychology

(Vol. 5). Mahwah, NJ: Erlbaum.

Dettmers, J. (2020). AF-A Arbeits- und

Organisationspsychologie: Grundlagen und Methoden.

Hagen: FernUniversität in Hagen, Fakultät für

Psychologie.

Dettmers, J., & Krause, A. (2020). Der Fragebogen zur

Gefährdungsbeurteilung psychischer Belastungen

(FGBU). Zeitschrift für Arbeits- und

Organisationspsychologie A&O.

Erdfelder, E., Antoni, C. H., Bermeitinger, C., Bühner, M., Elsner, B., Fydrich, T., Vaterrodt, B. (2021). Präsenzveranstaltungen: Unverzichtbarer Kernbestandteil einer qualitativ hochwertigen universitären Psychologieausbildung. Psychologische Rundschau, 72(1), 19–26.

European Commission (2020). CORDIS EU research

results – Realising an Applied Gaming Eco-System,

[online] shorturl.at/yETW0.

FuH (2021). Startseite FuH, [online] shorturl.at/blABJ.

Freire, M., Serrano-Laguna, Á., Iglesias, B. M., Martínez-

Ortiz, I., Moreno-Ger, P., & Fernández-Manjón, B.

(2016). Game Learning Analytics: Learning Analytics

for Serious Games. In Learning, Design, and

Technology (pp. 1–29). Cham: Springer International

Publishing.

GDA (2018). Leitlinie Beratung und Überwachung bei

psychischer Belastung am Arbeitsplatz. Berlin:

Geschäftsstelle der Nationalen Arbeitsschutzkonferenz.

shorturl.at/hozV7.

Grechenig, T., Bernhart, M., Breiteneder, R., Kappel, K. (2010). Softwaretechnik, PEARSON Studium, München.

Häusser, J. A., Mojzisch, A., & Schulz-Hardt, S. (2011).

Endocrinological and psychological responses to job

stressors: An experimental test of the job demand-

control model. Psychoneuroendocrinology, 36(7),

1021–1031.

Hertel, G., Deter, C., & Konradt, U. (2003). Motivation Gains in Computer-Supported Groups. Journal of Applied Social Psychology, 33(10), 2080–2105.

Hoang, M. D. (2020). Management of Structural Templates

in association with Competence-Based Learning and

the LMS Moodle.

Immerse2Learn (2021). Projekt „Immerse2Learn“, [online]

https://immerse2learn.de/.


Karasek, R. A. (1979). Job Demands, Job Decision

Latitude, and Mental Strain: Implications for Job

Redesign. Administrative Science Quarterly, 24(2),

285.

Kirkpatrick, D. L. (1998). Evaluating training programs: The four levels (2nd ed.). Berrett-Koehler Publishers.

Kolb, D.A. (1985). Experiential learning. Experience as the

source of learning and development. Upper Saddle

River, NJ: Prentice-Hall.

LG AuO (2021). Arbeits- und Organisationspsychologie, [online] shorturl.at/lCRZ9.

LG MMIA (2021). MMIA, [online] shorturl.at/ivyE9.

Lersch, H. (2020). Implement an extension of the KM-EP

Course Authoring Tool (CAT) to associate

competencies with learning activities and an interface

to export competencies to the LMS Moodle.

Lohmann-Haislah, A. (2012). Psychische Belastungen was tun? Verhältnisprävention geht vor Verhaltensprävention. BAuA: Aktuell, 2, 6-7. Bonifatius.

Marr, A. C. (2010). Serious Games für die Informations-

und Wissensvermittlung - Bibliotheken auf neuen

Wegen. Deutschland: Dinges & Frick.

Moodle docs (2021). Moodle- Quiz activity, [online]

https://docs.moodle.org/311/en/Quiz_activity.

Moodle.org (2021). Moodle- Der Einstieg ist leicht,

[online] https://moodle.org/?lang=de.

Schönpflug, U., Hoy, A. W. (2008). Pädagogische

Psychologie. Deutschland: Pearson Deutschland.

Seif El-Nasr, M., Drachen, A., & Canossa, A. (Eds.).

(2013). Game Analytics - Maximizing the Value of

Player Data. London: Springer-Verlag.

SoLAR (2011). What is learning analytics? [online]

shorturl.at/joBW4.

Spinath, B., Antoni, C., Bühner, M., Elsner, B., Erdfelder,

E., Fydrich, T., Vaterrodt, B. (2018). Empfehlungen zur

Qualitätssicherung in Studium und Lehre.

Psychologische Rundschau, 69(3), 183–192.

Srbecky, R., Frangenberg, M., Wallenborn, B., Then, M.,

Perez-Colado, I. J., Alonso-Fernandez, C., Fernandez-

Manjon, B., & Hemmje, M. (2021a). Supporting the

Mapping of Educational Game Events with

Competency Models considering Qualifications-Based

Learning. CERC 2021 Proceedings.

Srbecky, R., Frangenberg, M., Wallenborn, B., Then, M., Perez-Colado, I. J., Alonso-Fernandez, C., Fernandez-Manjon, B., & Hemmje, M. (2021b). Supporting Learning Analytics in Educational Games in consideration of Qualifications-Based Learning. In A. Kienle, A. Harrer, J. M. Haake, & A. Lingnau (Eds.), DELFI 2021 (pp. 187–192). Bonn: Gesellschaft für Informatik e.V.

Srbecky, R., Krapf, M., Wallenborn, B., Then, M., &

Hemmje, M. (2021a). Prototypical Implementation of

an Applied Game with a Game-Based Learning

Framework. ECGBL 2021 - Proceedings of the 15th

European Conference on Game Based Learning.

Srbecky, R., Krapf, M., Wallenborn, B., Then, M. &

Hemmje, M. (2021b). Realization of a Framework for

Game-based Learning Units Using a Unity

Environment. ECGBL 2021 - Proceedings of the 15th

European Conference on Game Based Learning.

Srbecky, R., Then, M., Wallenborn, B., Hemmje, M. (2021).

Towards Learning Analytics In Higher Educational

Platforms In Consideration of Qualification-based

Learning. Edulearn21 Proceedings, doi:

10.21125/edulearn.2021.1046.

Then, M., Hoang, M., Hemmje, M. (2019). A Moodle-

based software solution for Qualifications-Based

Learning (QBL). Hagen, FernUniversität in Hagen.

Then, M. (2020). Supporting Qualifications-Based

Learning (QBL) in a Higher Education Institution's IT-

Infrastructure. Hagen, FernUniversität in Hagen.

Ulich, E. (2005). Arbeitspsychologie (6., überarb. u. erw.

Aufl.). Stuttgart: Schäffer-Poeschel.

Urhahne, D., Dresel, M., Fischer, F. (2019). Psychologie

für den Lehrberuf, Springer-Verlag GmbH

Deutschland, ein Teil von Springer Nature, Springer,

Berlin, Heidelberg.

Wagner, E., Ice, P. (2012). Data Changes Everything:

Delivering on the Promise of Learning Analytics in

Higher Education. Educause Review. [online]

shorturl.at/bfqGR.

Wallenborn, B. (2018). Entwicklung einer innovativen

Autorenumgebung für die universitäre Fernlehre.

University Library Hagen.

Wilson, C. (2013). User Interface Inspection Methods: A

User-Centered Design Method, Elsevier Science,

Netherlands.

Winterhagen, M., Heutelbeck, D., Wallenborn, B., &

Hemmje, M. (2020). Integration of Pattern-Based

Learning Management Systems with Immersive

Learning Content. CERC2020 Proceedings. (13), 211–

221.

Winterhagen, M., Hoang, M. D., Lersch, H., Fischman, F.,

Then, M., Wallenborn, B., Hemmje, M. (2020).

Supporting Structural Templates For Multiple Learning

Systems With Standardized Qualifications. In L.

Gómez Chova, A. López Martínez, & I. Candel Torres

(Eds.), EDULEARN Proceedings, EDULEARN20

Proceedings (pp. 2280–2289). IATED.

Winterhagen, M., Hoang, M. D., Wallenborn, B.,

Heutelbeck, D., & Hemmje, M. L. (2021). Supporting

Qualification Based Didactical Structural Templates for

Multiple Learning Platforms. In H. R. Arabnia, L.

Deligiannidis, F. G. Tinetti, & Q.-N. Tran (Eds.),

Springer eBook Collection. Advances in Software

Engineering, Education, and e-Learning: Proceedings

from FECS'20, FCS'20, SERP'20, and EEE'20 (1st ed.,

pp. 793–808). Cham: Springer International Publishing;

Imprint Springer.

Winterhagen, M., Salman, M., Then, M., Wallenborn,

B., Neuber, T., Heutelbeck, D., Hemmje, M. (2020).

LTI-Connections Between Learning Management

Systems and Gaming Platforms. Journal of Information

Technology Research, 13(4), 47–62.

https://doi.org/10.4018/JITR.2020100104.
