Real-time Statistical Log Anomaly Detection with Continuous AIOps

Learning

Lu An

a

, An-Jie Tu, Xiaotong Liu and Rama Akkiraju

IBM Watson, 555 Bailey Ave, San Jose, U.S.A.

Keywords:

AI for IT Operations, Log Anomaly Detection, Online Statistical Learning, Error Entity Extraction, Continu-

ous Model Updating.

Abstract:

Anomaly detection from logs is a fundamental Information Technology Operations (ITOps) management task.

It aims to detect anomalous system behaviours and find signals that can provide clues to the reasons and the

anatomy of a system’s failure. Applying advanced, explainable Artificial Intelligence (AI) models throughout

the entire ITOps is critical to confidently assess, diagnose and resolve such system failures. In this paper, we

describe a new online log anomaly detection algorithm which helps significantly reduce the time-to-value of

Log Anomaly Detection. This algorithm is able to continuously update the Log Anomaly Detection model

at run-time and automatically avoid potential biased model caused by contaminated log data. The methods

described here have shown 60% improvement on average F1-scores from experiments for multiple datasets

comparing to the existing method in the product pipeline, which demonstrates the efficacy of our proposed

methods.

1 INTRODUCTION

The exploding growth of Information Technology

(IT) systems and services make the systems and ap-

plications become increasingly more complex to op-

erate, manage and monitor. By utilizing log process-

ing, machine learning and other advanced analytics

technologies, Artificial Intelligence for IT Operations

(AIOps) (Lerner, 2017) provides a promising solution

to enhance the reliability of the IT operations. Today,

most planet-scale service operators employ their own

AIOps to collect logs, traces and telemetry data, and

analyze the collected data to enhance their offerings

(Levin et al., 2019). One of the critical tasks in AIOps

is the anomaly detection which is the essential step to

detect anomalous system behaviours and find signals

that can provide clues to the reasons and the anatomy

of a system’s failure (Goldberg and Shan, 2015; Gu

et al., 2017; Chandola et al., 2009).

As system logs are records of the system states

and events at various critical points and log data is

universally available in nearly all IT systems, it is a

valuable resource for the AIOps to process, analyze

and perform anomaly detection algorithms. We call

the anomaly detection methods utilizing logs as data

source as Log Anomaly Detection (LAD). The tradi-

a

https://orcid.org/0000-0003-4050-3625

tional LAD methods were mostly manual operations

and rule-based methods, while such methods were

no long suitable for the large-scale IT systems with

sophisticated system incidents. In the recent years,

with the development of AI technologies, machine

learning based anomaly detection methods have re-

ceived more and more attention. For instance, some

works utilized unsupervised clustering-based meth-

ods (Givental et al., 2021a; Givental et al., 2021b) to

detect outliers. Though such methods do not require

labeled data for training, the anomaly detection per-

formance is not guaranteed and unstable. Moreover,

it is hard to apply such methods onto streaming log

data as the log patterns are changing over time.

Another popular and widely used LAD approach

is to first collect enough labeled training data during

the system’s normal operation period and adopt log

templates-based method for feature engineering, and

then employ Principal Component Analysis (PCA)

based methods to learn normal log patterns from

labeled training data and find anomalous log pat-

terns during inference streaming log data (Liu et al.,

2020; Liu et al., 2021). Even though the PCA-based

method is successful in certain scenarios, it still suf-

fers from some limitation in practice. Firstly, log tem-

plates learning often requires customers to provide

one week’s worth of training logs without incidents

An, L., Tu, A., Liu, X. and Akkiraju, R.

Real-time Statistical Log Anomaly Detection with Continuous AIOps Learning.

DOI: 10.5220/0011069200003200

In Proceedings of the 12th International Conference on Cloud Computing and Services Science (CLOSER 2022), pages 223-230

ISBN: 978-989-758-570-8; ISSN: 2184-5042

Copyright

c

2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

223

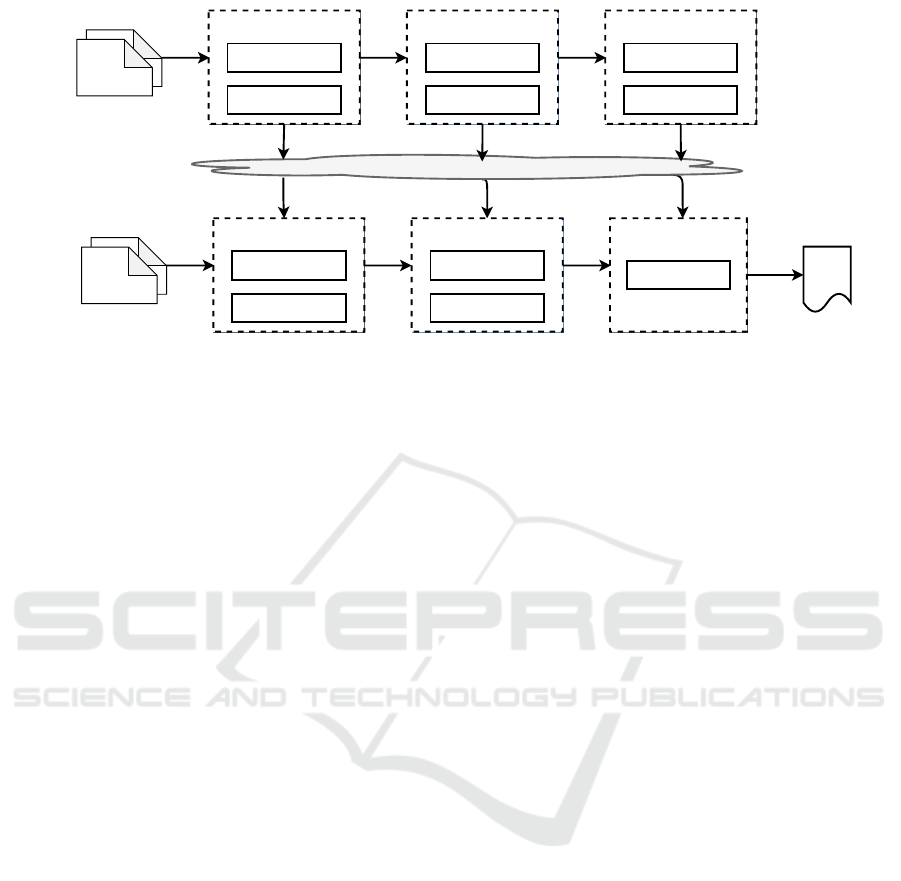

Aggregation

Encoding

Feature Engineering

Templates Learning

Embedding Extraction

Log Parsing

Normal Patterns

Anomaly Thresholds

PCA Model Training

Sampled

Historical

Logs

Data Lake - AI Models

Aggregation

Encoding

Trained Feature Extractor

Templates Learning

Embedding Extraction

Trained Log Parser

Anomaly Detection

PCA Model Inference

Streaming

Logs

Log Anomaly Training

Log Anomaly Detection

Alerts

Figure 1: LAD pipeline for PCA-based Method.

which may need some time to collect thus training

data is not available on Day 0. Moreover, the cus-

tomers sometimes may not know if the training logs

they provided are pure normal logs. Secondly, the log

templates learning process takes hours or days to fin-

ish, depending on the size of the datasets.

In this paper, we propose a Real-Time Statisti-

cal Model (RSM) based LAD method, which aims

to reduce the training time and achieve faster “time-

to-value” while performing excellent online anomaly

detection. The major contributions of this new algo-

rithm include:

(i) We introduce a fast error entity extraction method

to extract different types of error entities including er-

ror codes and exceptions at run-time. In addition, this

method is able to quickly categorize if an incoming

log contains any faults.

(ii) Instead of utilizing log templates for feature engi-

neering, the RSM algorithm adopts the extracted error

entities to build feature count vectors to learn normal

log patterns.

(iii) The proposed RSM method is able to keep the

model up-to-date by kick-starting the accumulative

retraining periodically, so that the model is able to

continuously improve itself by learning from more

and more log data.

(iv) We introduce an automatic skipping mechanism

in the model updating which can help avoid biased

model generated by contaminated log data.

The remainder of the paper is organized as fol-

lows: Section 2 outlines the log anomaly detection

system. Section 3 describes the technical details of

the RSM method. Section 4 shows the experiment re-

sults of the proposed RSM method on multiple bench-

mark datasets. Finally, Section 5 concludes the paper

and summarizes the future directions of the work.

2 LAD SYSTEM DESCRIPTION

In this section, we introduce the major steps of the

LAD pipeline and how the new RSM algorithm af-

fected the steps of log anomaly detection compared

with the PCA-based method.

2.1 PCA-based Method

The PCA-based method is illustrated in Fig. 1 and it

mainly includes the following steps:

1) Log Anomaly Training: We first ingested histori-

cal normal log data from log aggregators or streaming

data from Apache Kafka. Then the data preparation

component will apply data preprocessing to generate

a clean, normalized log data and upload them into the

cluster for further log template training. A tree-based

templates learning algorithm is then applied onto the

selected training logs to generate log templates, and

these templates are used for feature engineering and

building template/embedding count vectors.

After such count vectors are generated, they will

be aggregated and encoded for a PCA model training

where the model learns the normal patterns and the

anomaly threshold from the training logs. After the

model training, such models will be stored in the

cluster for future inference.

2) Log Anomaly Detection: During the inference,

the data preparation component first utilizes the

trained log templates generated in the training step to

perform feature engineering on the streaming infer-

ence log data. Such template/embedding count vec-

tors are aggregated and encoded and then sent to the

log anomaly detector with other metadata.

CLOSER 2022 - 12th International Conference on Cloud Computing and Services Science

224

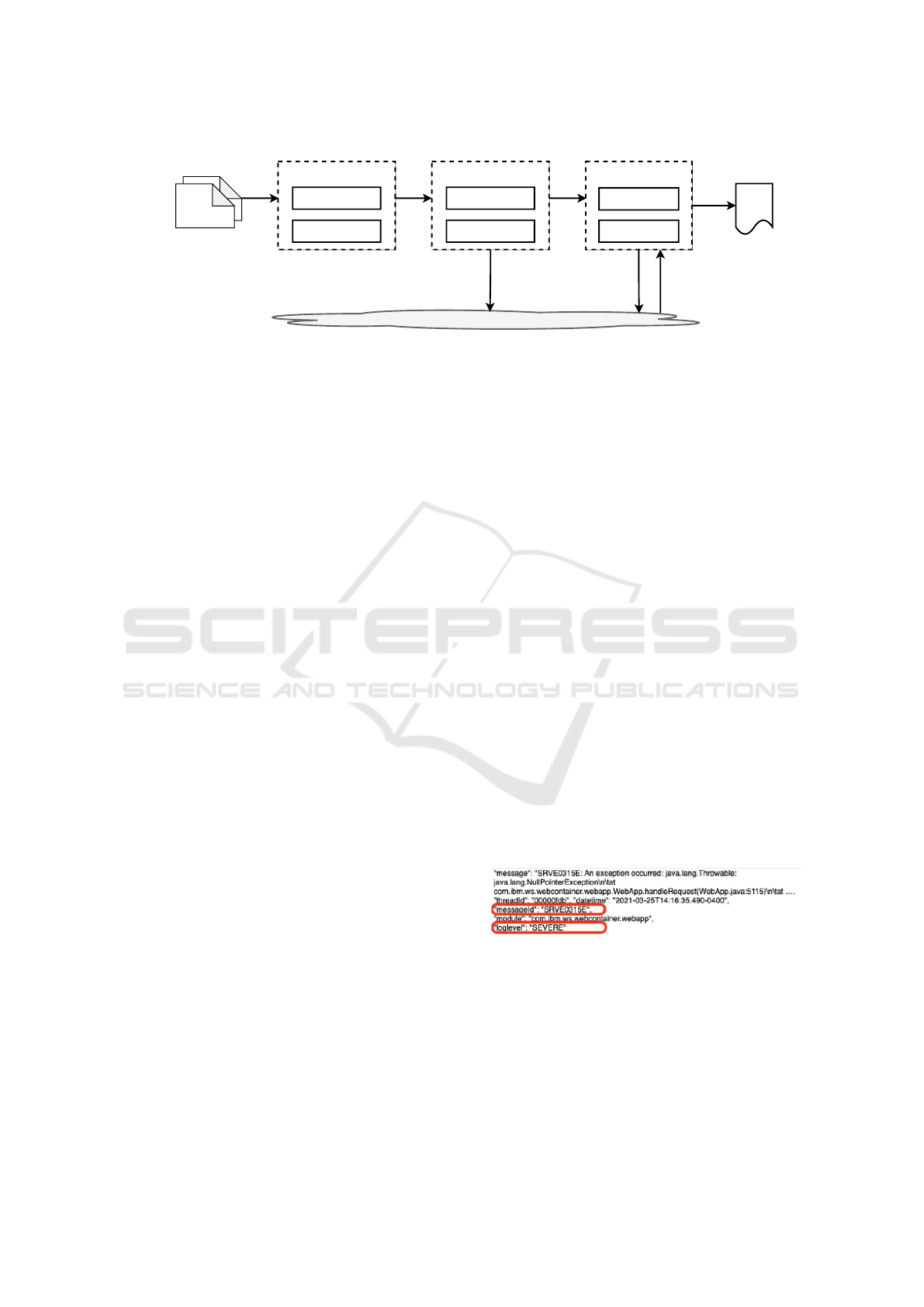

Data Lake - Historical Log Data & AI Models

Aggregation

Encoding

Feature Engineering

RSM entity Extraction

Embedding Extraction

Data Preperation

Model Update

RSM Inference

Anomaly Detection

Streaming

Logs

Log Anomaly Detection

Alerts

Save encoded

historical data

Retrieve

Historical data

&

Update RSM

models

Load latest

RSM models

Figure 2: LAD pipeline for RSM-based Method.

Log anomaly detector then retrieves the relative

PCA models from the cluster and applies them onto

the encoded inference data. Those inference logs with

PCA scores exceeding the trained threshold will be

tagged as anomalies.

2.2 RSM-based Method

By introducing the RSM-based method into the

product pipeline, the training step is eliminated and

the major phases of the LAD pipeline become as

follows and are shown in Fig. 2:

1) Data Preparation: In RSM-based method, data

preparation component will directly apply entity ex-

traction algorithm onto the streaming inference data.

Instead of utilizing log templates, these extracted

entities will be aggregated and encoded to generate

feature count vectors. Along with other metadata,

the encoded feature count vectors will be stored into

the clusters and sent out to log anomaly detector

simultaneously for inference.

2) Model Updating: After a preset time period,

the LAD is able to retrieve all the encoded log data

from the cluster ingested during the last time period

and compute the statistical distribution for all the

entities. Such statistical distribution information for

both entity and embedding count vectors are stored

as RSM models in the cluster for future anomaly

detection. The model updating is scheduled to

happen periodically unless contaminated log data

was detected in the last period. Moreover, the model

updating is accumulative so that the latest model

always represents all the historical data the LAD has

seen.

3) Log Anomaly Detection: Once the initial RSM

models are available, the log anomaly detector is able

to load the latest models from the cluster and utilize

them to perform statistical testing and determine if the

inference logs contain significantly different entities

or embedding distributions than the normal patterns.

If yes, the inference logs will be tagged as anomalies

and generate alerts.

From the above description of PCA-based and

RSM-based methods, we can notice that the log

anomaly training step in PCA-based method will need

customers to provide some normal training log data.

On the one hand, one week log data is typically

required to guarantee a good quality of log train-

ing. On the other hand, larger size of dataset may

cause long time template learning and model training.

While in the RSM-based method, the online learn-

ing method utilizes entity extraction instead of log

templates learning so that the time-consuming train-

ing step is eliminated which can significantly reduce

“time-to-value” of the LAD pipeline.

3 THE DESIGN OF RSM-BASED

METHOD

In this section, we will discuss the technical key-

points of the Real-Time Statistical Model based log

anomaly detection method proposed in this work in

details.

3.1 Log Entity Extraction

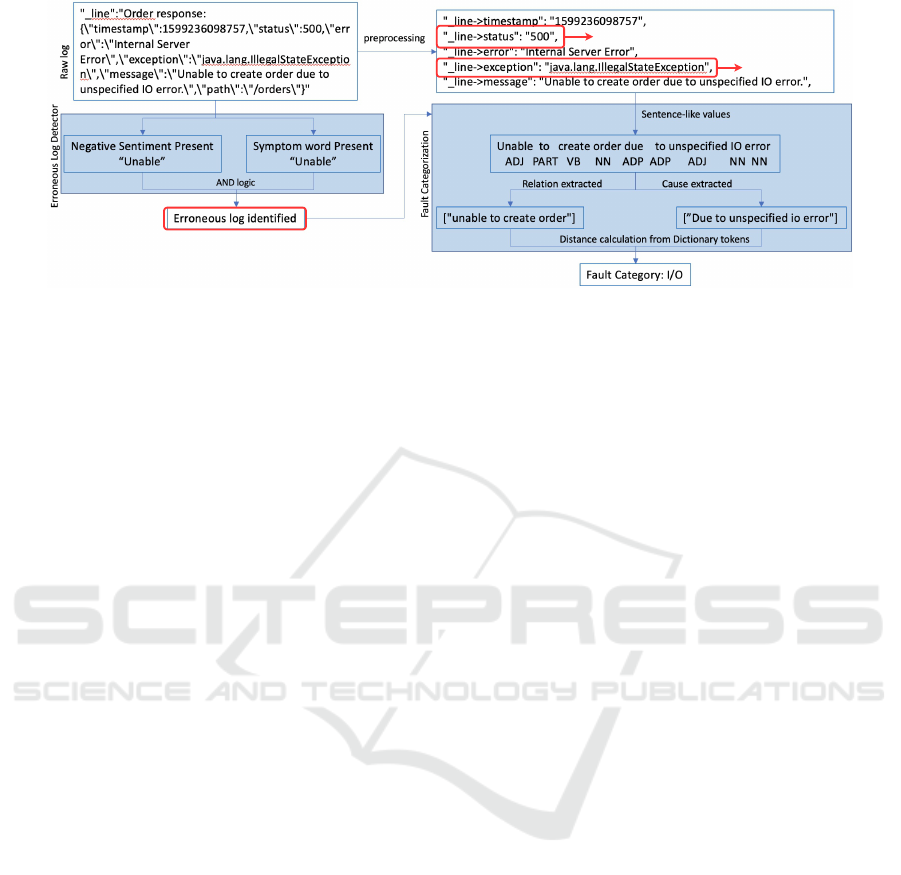

Figure 3: A log example from WebSphere Application

Server.

The RSM-based LAD product pipeline utilizes log

entities for feature engineering. Such log entities

could be the domain information from specific types

of logs, e.g. the logs from IBM WebSphere Applica-

tion Server (IBM, 2022). In addition, the log entities

could be specific errors, such as HTTP error response

code or specific exceptions which can be the cause or

Real-time Statistical Log Anomaly Detection with Continuous AIOps Learning

225

Error Code

Exception type

Figure 4: An example of error entity extraction for general logs.

symptoms for system incidents. During the data pre-

processing phase, the data preparation component in

the LAD pipeline is responsible for extracting such

log entities in real-time and then aggregating and en-

coding them to form the feature count vectors for fur-

ther anomaly detection.

3.1.1 WebSphere Logs

The IBM WebSphere Application Server is a flexible,

security-rich Java server runtime environment for en-

terprise applications. Each WebSphere log contains

a designated message ID or log level, or both. Fig. 3

shows an example of a WebSphere log which contains

a specific message ID “SRVE0315E” and the log level

“SEVERE”. Based on the prior domain knowledge of

the WebSphere, such message ID’s and log levels are

indicators of specific types of abnormal system be-

haviors. The task of the data preparation component

is to identify if an incoming log is from a Websphere

Application Server and then extract any shown mes-

sage ID’s and log levels for feature engineering.

3.1.2 General Logs

For all other non-WebSphere types of logs, during

the log data preparation stage, we utilize the SystemT

framework (Krishnamurthy et al., 2009) to define the

rules for log entity extraction (Mohapatra et al., 2018;

Aggarwal et al., 2021; Aggarwal and Nagar, 2021).

First, the sentiment analysis is performed on the log

as log messages usually contain missing or faulty at-

tributes while encountering any system errors. In or-

der to satisfy the run-time analysis requirement, we

adopt a dictionary-based approach as opposed to a

full-blown machine learning approach. The nega-

tive sentiment dictionary used in our product pipeline

was built based on our technical domain leveraging

on open-source sentiment dictionaries such as Vader

(Hutto and Gilbert, 2014) and SentiWordNet (Bac-

cianella et al., 2010). We have discarded some nouns

candidates and added some words denoting negation

because in log data, negative sentiments are mostly

associated with actions which mostly comprise of

verbs, adverbs or adjectives.

In addition, the relation and cause extraction are

performed to extract any detected error codes and

exceptions. Relation extraction includes the follow-

ing steps: (1) Dependency parsing; (2) Verb filtra-

tion and Clause/Predicate selection; (3) Extension

of Noun phrase; (4) Negative sentiment; (5) Rela-

tion clause/phrase generation. For cause extraction,

we consider the following four rules: (1) Presence

of Causative Verbs; (2) Presence of Phrasal Verbs;

(3) Presence of prepositional Adjective or Adverbial

Phrases; (4) Absence of Noun Phrase.

With the above sentiment analysis, relation and

cause extraction tools, we are able to extract error

codes, exceptions’ types from the logs if present, and

also identify if a log message indicates erroneous sys-

tem behaviour based on symptoms or negative sen-

timent dictionaries. Fig. 4 shows an example of the

entity extraction for general logs. From Fig. 4, we can

observe that the extracted error code is “500” and the

exception type is “java.lang.IllegalStateException”

and this log message is identified as an erroneous log

based on the sentiment and symptoms.

3.2 Feature Encoding

By employing the above proposed log entity extrac-

tion method, the data preparation component is able

to extract message ID’s and log levels for WebSphere

logs and extract error entities for general erroneous

logs. Those general logs without any error entities

will be tagged as “normal” logs. We use these ex-

tracted entities to build the feature count vectors.

To build the feature count vectors, we first group

any logs that occurred in a preset time period T to-

CLOSER 2022 - 12th International Conference on Cloud Computing and Services Science

226

gether, e.g. every 10 seconds, thus all logs in one time

period forms a time window. Assuming there are M

logs in the time window t, labeled as L

1

, L

2

, · ·· , L

M

,

and there are total of N different possible entities that

can be extracted out for all the logs in time window t.

If the n

th

entity is extracted from the m

th

log L

m

, then

we denoted e

n

m

= 1, otherwise e

n

m

= 0. Thus, the fea-

ture count vector for this time window t is constructed

as X

t

= [x

1

, x

2

, · ·· , x

N

] where the count of the n

th

fea-

ture is given by:

x

n

=

M

∑

m=1

e

n

m

. (1)

3.3 RSM Model Update

After the customer connects streaming logs to the sys-

tem, LAD will start running with an empty statisti-

cal baseline log anomaly detection model. The RSM

model updating happens periodically, e.g. every 30

minutes. The Data lake shown in Fig. 2 stores the en-

coded historical log data and the corresponding fea-

ture count vectors happened during the last period.

Thus, the first RSM model should be ready in 30 min-

utes based on the historical data within the previous

30 minutes.

RSM is a statistical-based log anomaly detection

method, where the RSM model contains all the ex-

tracted entities’ and embedding vectors’ statistical

metrics, such as mean value, standard deviation (std),

co-variance, sample size, etc. Given a batch of en-

coded and time-windowed logs [X

1

, X

2

, · ·· , X

T

] up-

loaded by the data preparation components during the

last period and a previous RSM model M

n−1

, we are

able to compute and update all the above statistical

metrics which form a new RSM model M

n

, which re-

flects the latest statistical distribution during normal

operation period.

Another key feature of the RSM model updating

is the automatic skipping mechanism. Before the

model updating, the LAD will automatically check

the anomaly-to-windowed-log ratio, which is defined

as the ratio of number of detected anomalies to the

number of total windowed logs ingested in the last pe-

riod. If the anomaly-to-windowed-log ratio exceeds a

preset threshold, then the LAD will tag the last period

as an incident period and skip the model update tem-

porarily for this round to avoid potential biased RSM

models. With this automatic skipping mechanism, the

RSM model is able to remember only the statistical

distribution for logs in normal operation period, with-

out manual human interference.

3.4 RSM Anomaly Detection

RSM anomaly detection is comprised of two inde-

pendent anomaly detection methods: an entity-based

detection method and an embedding-based detection

method. The entity-based method uses the statistical

metrics from extracted entities shown in Section 3.1,

while the embedding-based detection method uses

the statistical metrics from extracted embeddings (Liu

et al., 2020). The inference results from the two indi-

vidual detection methods are aggregated together to

produce a single output that forms the RSM anomaly

detection’s final inference result.

3.4.1 Entity-based Detection

In a RSM model M , using historical data, we store

the sample mean µ

e

n

, the sample standard deviation,

σ

e

n

, the sample size N

e

n

, and the indicator variable

I

e

n

for the n

th

extracted entity e

n

. I

e

n

= 1 if e

n

cor-

responds to an error entity otherwise I

e

n

= 0. Given

a time-windowed log X

t

, let x

n

be the corresponding

counts for entity e

n

in that given time-window, then

time-windowed log X

t

is flagged as an anomaly if it

is the case that

1 − Φ(

µ

e

n

− x

n

σ

e

n

) < ε | I

e

n

= 1 (2)

for any entity e

n

and for some threshold ε, e.g. 0.05.

Φ denotes the CDF for the standard normal distribu-

tion.

3.4.2 Embedding-based Detection

The RSM model M will also store an sample em-

bedding mean vector M

n

= [µ

1

, µ

2

·· · , µ

n

] for some

dimension n , e.g. 20, and a n × n covariance matrix

Σ with element (i, j) representing the covariance be-

tween dimension i and dimension j. These two met-

rics are also computed via the historical data that the

RSM model observes. An incoming time-windowed

log X

t

will contain a n dimension embedding vector,

X

t,emb

, that corresponds to that time-window’s em-

bedding, we denote the Mahalanobis distance of X

t

,

MD(X

t

) as

MD(X

t

) =

q

(X

t,emb

− M

n

)Σ

−1

(X

t,emb

− M

n

)

|

. (3)

Under the assumption that the underlying n dimen-

sion embedding vector is a multivariate normal ran-

dom variable, then it follows that

MD(X

t

) ∼

q

χ

2

n

, (4)

with χ

2

n

being a chi squared distribution with n de-

grees of freedom. We flag time-windowed log X

t

as

Real-time Statistical Log Anomaly Detection with Continuous AIOps Learning

227

an anomaly if MD(X

t

) exceeds a certain threshold,

e.g. the square root of the 95 percentile of a χ

2

n

distri-

bution.

3.4.3 Aggregation Rule

Let our entity-based detection method’s inference

result on time-windowed log X

t

be denoted as

RSM

entity

(X

t

). RSM

entity

(X

t

) = 1 if X

t

is flagged as

an anomaly by the entity-based model and 0 other-

wise. Correspondingly, let our embedding-based de-

tection method’s inference result on X

t

be denoted as

RSM

embedding

(X

t

) and also be equal to 1 if flagged as

anomaly by the embedding-based model and 0 other-

wise. We aggregate the two models’ results to create

our RSM model’s inference result on X

t

, RSM(X

t

) to

be

RSM(X

t

) = RSM

entity

(X

t

) ∨ RSM

embedding

(X

t

). (5)

4 EXPERIMENT RESULTS

In our experiments, We compare the anomaly detec-

tion performance of the LAD product pipeline be-

fore and after integrating the proposed RSM-based

method with multiple datasets. The previous version

of the LAD product pipeline adopted the PCA-based

method only. By integrating the proposed RSM-

based method, the LAD product pipeline is able to

turn on/off either PCA-based or RSM-based methods

through system configuration. Thus, in this section,

we will show evaluation results for multiple datasets

with the following three system configuration: (1)

PCA-based method only (2) RSM-based method only

(3) PCA-based & RSM-based methods.

4.1 Datasets

• Sockshop: The sockshop application is a user-

facing part of an online shop that sells socks,

which contains many microservice components

including management of the user account, cat-

alog, cart, orders, payment, shipping, etc. The

training data for PCA-based method was collected

when the application was running in normal oper-

ation with simulated user flows for one week. The

testing data was collected by running the system

in normal operation first for at least 30 minutes

and then manually ingested different types of sys-

tem incidents, with recording the timestamps of

abnormal periods to generate ground truth labels.

• Quote of the Day (QotD): The QotD applica-

tion is another simulated micro-service applica-

tion which randomly selects a quote from a fa-

mous person. The application contains many

micro-services including rating, image, author,

etc., which manage the different attributes of the

quotes. Similarly, we first collect one week nor-

mal data for PCA-based log training. The testing

data was collected in the similar way: normal logs

first, followed by abnormal logs caused by manu-

ally ingested system errors.

• IBM Watson AI service (WA): In contrast to the

above two simulated systems, we also evaluate the

LAD performance using the logs generated by the

IBM Watson AI service which is a real system

with 48 cloud micro-services and 15 applications,

ranging from distributed systems, supercomput-

ers, operating systems to server applications. The

training data and testing data for WA were col-

lected via a data collection method similar to what

was shown previously for the other two datasets.

However, the WA system is much more complex,

which results in a much larger size of logs than

Sockshop and QotD datasets.

4.2 Metrics

Log anomaly detection is one type of binary classifi-

cation problems, where the positives are the anoma-

lous log data and the negatives are those normal

log data. However, unlike other binary classification

problems, the log data for anomaly detection is very

imbalanced as the positives are usually far way less

than the negatives. The reason is that exceptions or

incidents are rare cases for the mature systems com-

pared to the normal operations. Thus, for such imbal-

anced testing log data, we evaluate the LAD perfor-

mance with the following metrics:

• True Negative Rate (TNR):

T NR =

T N

N

(6)

• False Positive Rate (FPR):

FPR =

FP

N

(7)

• Recall or True Positive Rate (TPR)

Recall =

T P

N

(8)

• F1-score

F1 =

2T P

2T P + FP + FN

(9)

CLOSER 2022 - 12th International Conference on Cloud Computing and Services Science

228

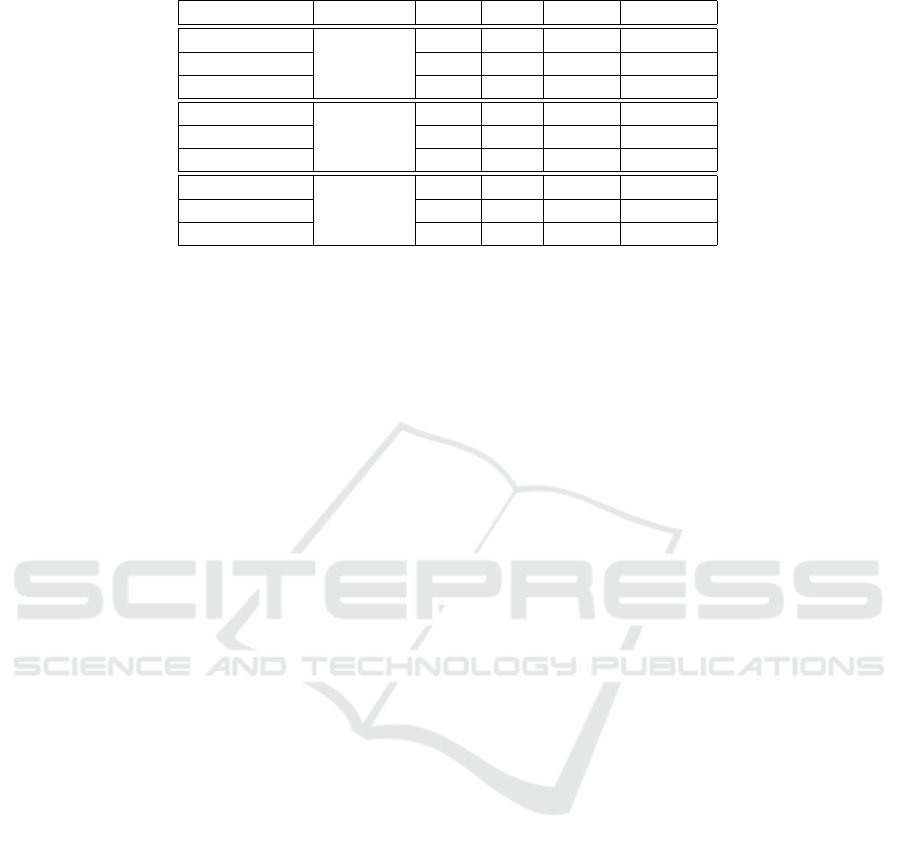

Table 1: The experiment results for three datasets with different LAD methods.

LAD method Datasets TNR FPR Recall F1-score

PCA-based 0.73 0.27 0.5 0.04

RSM-based

Sockshop

1 0 0.17 0.29

PCA & RSM 0.99 0.01 0.67 0.73

PCA-based 0.99 0.01 1 0.99

RSM-based

QotD

0.99 0.01 1 0.99

PCA & RSM 0.99 0.01 1 0.99

PCA-based 0.98 0.02 0.29 0.33

RSM-based

WA

0.84 0.16 0.66 0.35

PCA & RSM 1 0 0.35 0.52

4.3 Results

Table 1 shows the experiment results comparison for

the three datasets with different LAD methods. First,

we observe that the PCA-based LAD method per-

forms very well on QotD dataset due to the relatively

simpler log structure. However, PCA-based method

performs bad on the Sockshop and WA dataset where

the log structure is more comprehensive and informa-

tion or parameters inside the log are more fluctuat-

ing. From Table 1, we observe that compared with the

PCA-based LAD method, the proposed RSM-based

method can improve the F1-score on both Sockshop

and WA datasets without downgrading the LAD per-

formance on QotD dataset. In addition, the LAD

product pipeline allows both models to be enabled to

further improve the detection accuracy. We can ob-

serve that by enabling both methods, the False Posi-

tive Rate (FPR) has significantly reduced for all three

datasets while the Recall is improved, thus leading to

an overall improvement on the F1-score. Comparing

to the PCA-based method, we can observe the aver-

age F1-score over all three dataset is improved around

60% when both PCA-based and RSM-based methods

are enabled in the LAD product pipeline.

5 CONCLUSION AND FUTURE

WORK

Training data is not always available or sufficient, and

identifying if the training data is contaminated, or not,

may need a lot of human effort. It is important to in-

troduce an online algorithm in the LAD which can

provide predictive insights without off-line training

data and learn a system’s normal behaviours gradu-

ally. In the meantime, it is vital for the LAD sys-

tem to be smart enough to automatically identify in-

cident periods and avoid potentially biased models.

All such features are included by enabling the RSM-

based log anomaly detection method in our current

product pipeline. Experiments on multiple datasets

demonstrate the efficacy of the proposed method.

While we made significant progress in delivering a

more robust and reliable LAD pipeline, the journey of

continuous improvement of our LAD pipeline never

stops. Here are a few things we are actively working

on for future iterations.

• Enrich ensembled models for different LAD algo-

rithms to work hand in hand to further enhance the

accuracy of the model.

• Expose precision-recall tradeoff knobs to improve

the transparency of LAD algorithms to gain trust

of AI.

• Improve log comprehension so better representa-

tion can be learned from a mixture of formats.

• Seek and leverage user feedback to fine-tune indi-

vidual LAD models and prediction aggregation.

• Customize our models to handle seasonality of log

volumes and maintenance windows better.

• Differentiate anomalies, alerts and incidents to tell

a meaningful incident story.

• Correlating alerts to golden signals, service level

objectives, and error budgets for better separating

alerts from incidents and for better incident pre-

diction.

REFERENCES

Aggarwal, P., Bansal, S., Mohapatra, P., and Kumar, A.

(2021). Mining domain-specific component-action

links for technical support documents. In 8th ACM

IKDD CODS and 26th COMAD, CODS COMAD

2021, page 323–331, New York, NY, USA. Associ-

ation for Computing Machinery.

Aggarwal, P. and Nagar, S. (2021). Fault localization in

cloud systems using golden signals. Management,

21:4.

Baccianella, S., Esuli, A., and Sebastiani, F. (2010). Sen-

tiWordNet 3.0: An enhanced lexical resource for sen-

timent analysis and opinion mining. In Proceedings

Real-time Statistical Log Anomaly Detection with Continuous AIOps Learning

229

of the Seventh International Conference on Language

Resources and Evaluation (LREC’10). European Lan-

guage Resources Association (ELRA).

Chandola, V., Banerjee, A., and Kumar, V. (2009).

Anomaly detection: A survey. ACM Comput. Surv.,

41(3).

Givental, G., Bhatia, A., and An, L. (2021a). Hybrid ma-

chine learning to detect anomalies. https://patents.

google.com/patent/US20210281592A1/. Pub. No.:

US 2021/0281592 A1.

Givental, G., Bhatia, A., and An, L. (2021b). Modifi-

cation of machine learning model ensembles based

on user feedback. https://patents.google.com/patent/

US20210279644A1/. Pub. No.: US 2021/0279644

A1.

Goldberg, D. and Shan, Y. (2015). The importance of

features for statistical anomaly detection. In 7th

{USENIX} Workshop on Hot Topics in Cloud Com-

puting (HotCloud 15).

Gu, T., Dolan-Gavitt, B., and Garg, S. (2017). Bad-

nets: Identifying vulnerabilities in the machine

learning model supply chain. arXiv preprint

arXiv:1708.06733.

Hutto, C. and Gilbert, E. (2014). Vader: A parsimonious

rule-based model for sentiment analysis of social me-

dia text. In Proceedings of the International AAAI

Conference on Web and Social Media, pages 216–225.

IBM (2022). IBM WebSphere Application Server. https:

//www.ibm.com/cloud/websphere-application-server.

Krishnamurthy, R., Li, Y., Raghavan, S., Reiss, F.,

Vaithyanathan, S., and Zhu, H. (2009). Systemt: a

system for declarative information extraction. ACM

SIGMOD Record, 37(4):7–13.

Lerner, A. (2017). AIOps platforms. https://blogs.gartner.

com/andrew-lerner/2017/08/09/aiops-platforms/.

Levin, A., Garion, S., Kolodner, E. K., Lorenz, D. H.,

Barabash, K., Kugler, M., and McShane, N. (2019).

Aiops for a cloud object storage service. In 2019

IEEE International Congress on Big Data (BigDat-

aCongress), pages 165–169.

Liu, X., Tong, Y., Xu, A., and Akkiraju, R. (2020). Us-

ing language models to pre-train features for opti-

mizing information technology operations manage-

ment tasks. In International Conference on Service-

Oriented Computing, pages 150–161. Springer.

Liu, X., Tong, Y., Xu, A., and Akkiraju, R. (2021). Pre-

dicting information technology outages from hetero-

geneous logs. In IEEE International Conference On

Service-Oriented System Engineering.

Mohapatra, P., Deng, Y., Gupta, A., Dasgupta, G., Paradkar,

A., Mahindru, R., Rosu, D., Tao, S., and Aggarwal, P.

(2018). Domain knowledge driven key term extraction

for it services. In Pahl, C., Vukovic, M., Yin, J., and

Yu, Q., editors, Service-Oriented Computing, pages

489–504, Cham. Springer International Publishing.

CLOSER 2022 - 12th International Conference on Cloud Computing and Services Science

230