Development, Implementation and Acceptance of an AI-based Tutoring System: A Research-Led Methodology

Tobias Schmohl (ORCID: https://orcid.org/0000-0002-7043-5582), Kathrin Schelling, Stefanie Go, Katrin Jana Thaler and Alice Watanabe

Institute of Science Dialogue, OWL University of Applied Sciences and Arts, Campusallee 12, 32657 Lemgo, Germany

Keywords: Artificial Intelligence in Higher Education, Design-based Research, Intelligent Tutoring System, Participatory Technology Design, Scoping Review.

Abstract: This Design-Based Research (DBR) project aims to develop an intelligent tutoring system (ITS) for higher education. The system will collect teaching and learning materials in audio and video formats (e.g., podcasts, lecture recordings, screencasts, and explainer videos) and store them on a learning experience platform (LXP). The ITS will then process these materials with speech recognition, generating data that will in turn power further applications: Using artificial intelligence (AI), the platform will allow users to search the materials and will compile them automatically according to criteria such as lesson subject, language, medium, or required prior knowledge. By the end of the last DBR cycle, the ITS will also provide a more active form of support: It will automatically generate exercises based on predefined patterns and teaching materials, allowing learners to check their learning progress independently. To match the ITS's features closely to the needs and learning habits of students in higher education, the development of this AI-based tutoring system is accompanied by an interdisciplinary team that will continuously re-evaluate and adapt the concept over the course of several DBR cycles. Our goal is to derive implications for the system's technical development by collecting and evaluating educational research data (mixed-methods design; primary and secondary research methods).

1 INTRODUCTION

As the digital transformation of higher education progresses, more and more teaching/learning materials (TLM) are made available online, both as open access resources and within universities' learning management systems (LMS). These materials allow students to create learning environments best suited to their specific interests and needs. Wherever, whenever, and whatever they want to learn: Thanks to the constantly growing number of online materials, they can now study or review content at their own pace.

When it comes to audio and video recordings, however, finding materials that deal with the exact topic a student has chosen to focus on may still prove surprisingly challenging, even for today's tech-savvy students. On the one hand, search engines, open educational repositories (e.g., databases like cccoer.org or oercommons.org), and LMS (e.g., Blackboard, Canvas, or Moodle) still rely on manually created metadata. If this metadata does not contain a comprehensive list of keywords covering all of the topics presented in a recording, students will often fail to find appropriate learning materials. On the other hand, the platforms hosting the recordings rarely provide more than rudimentary assistance to users who are researching topics in the context of self-study. For example, students looking for a definition of "singular value decomposition" might find a promising mathematics lecture online. However, a 90-minute lecture on linear algebra might dedicate only a few sentences to singular value decomposition, leaving students to sift manually through the entire recording to find the passage that provides the information they need.

Considering the importance of efficient self-study in higher education, it would be desirable for audio and video TLM to support a faster, more intuitive mode of research. Ideally, students would directly find the fifteen minutes of a recording that deal with their topic. But what if a more sophisticated search were not the only feature of an LMS that supported self-study? What if students could filter the materials by topic and by learning objective? And what if they could receive recommendations on further materials for in-depth study, including exercises tailored to their prior knowledge? The more precisely TLM matched individual learning processes, the more easily students could focus on the content.

Schmohl, T., Schelling, K., Go, S., Thaler, K. and Watanabe, A.
Development, Implementation and Acceptance of an AI-based Tutoring System: A Research-Led Methodology.
DOI: 10.5220/0011068500003182
In Proceedings of the 14th International Conference on Computer Supported Education (CSEDU 2022) - Volume 2, pages 179-186
ISBN: 978-989-758-562-3; ISSN: 2184-5026
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Cue modern technology: "AI-based tools and services have a high potential to support students, faculty members and administrators throughout the student lifecycle" (Zawacki-Richter et al., 2019, p. 20). Applied to the problem of finding audio and video TLM online, AI makes it possible to optimise search processes and to support students adaptively by providing individual feedback. In this way, intelligent tutoring systems (ITS) can help students review their lessons, prepare for exams, or acquire entirely new skills through self-study.

The ideas are certainly out there, but the reality in higher education leaves much to be desired. To date, no German university uses intelligent search functions to help students identify recordings they might want to use as instructional materials. And although there is a growing demand for AI-based applications in higher education, which in Germany is currently backed by an equally growing number of research grants (Bundesministerium für Bildung und Forschung, 2020, 2021; de Witt et al., 2020), none of the systems developed thus far has used instructional design to shape its framework, which would help ensure that the AI-based generation of exercises closely matches students' habits and needs (e.g., Zawacki-Richter et al., 2019).

2 CONCEPTUAL DESIGN

The project described in this article aims to create an ITS called HAnS (short for "Hochschul-Assistenz-System", i.e., "assistance system for higher education"), which is meant to support students from different disciplines in their quest for self-directed digital learning. Developed and implemented collectively from 2021 to 2025 by twelve cooperating German universities and research institutes, it will exemplify the benefits of AI and Big Data in higher education and, ideally, serve to drive innovation within the field of technology-based learning.

The system itself builds upon existing learning materials and addresses three educational and/or technical potentials: (1) automatic transcription and indexing of audio-visual educational resources (e.g., lecture recordings, instructional videos, screencasts, and podcasts), (2) personalised search and recommendation of learning materials, and (3) dynamic generation and gamification of individual learning offers. These three potentials will be combined to create the framework of an ITS that both students and teachers can use to improve the effectiveness of self-study in higher education.

The developmental goals of the project are the science-driven design and integration of a learning experience platform, including components for natural language processing, speech recognition, and indexing. To train the ITS, we use authentic audio-visual TLM provided by teachers from various German universities. The system adaptively assembles these materials based on user information and on educational guidelines embedded in the system to generate dossiers on specific topics and individual exercises for self-study.
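The project description does not specify how transcripts are indexed. As a minimal sketch, assuming that speech recognition returns a list of timestamped segments (the `Segment` format and all function names below are hypothetical, not part of HAnS), an inverted index over those segments is already enough to point a student straight to the passage of a long lecture that mentions "singular value decomposition":

```python
import re
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """One timestamped chunk of a speech-recognition transcript (hypothetical format)."""
    recording_id: str
    start_s: float  # segment start, in seconds
    end_s: float    # segment end, in seconds
    text: str


def tokenize(text: str) -> list[str]:
    # Lower-cased word tokens; a real system would also lemmatise terms.
    return re.findall(r"\w+", text.lower())


def build_index(segments: list[Segment]) -> dict[str, set[int]]:
    """Inverted index mapping each token to the positions of segments containing it."""
    index: dict[str, set[int]] = defaultdict(set)
    for pos, seg in enumerate(segments):
        for token in tokenize(seg.text):
            index[token].add(pos)
    return index


def find_segments(query: str, segments: list[Segment],
                  index: dict[str, set[int]]) -> list[Segment]:
    """Return the segments that contain every query token, in transcript order."""
    tokens = tokenize(query)
    if not tokens:
        return []
    hits = set.intersection(*(index.get(t, set()) for t in tokens))
    return [segments[pos] for pos in sorted(hits)]


segments = [
    Segment("linalg-01", 0.0, 30.0, "Welcome to today's lecture on linear algebra."),
    Segment("linalg-01", 2710.0, 2740.0,
            "The singular value decomposition factorises any matrix."),
    Segment("linalg-01", 2740.0, 2770.0,
            "Each singular value measures how strongly a direction is stretched."),
]
index = build_index(segments)
for seg in find_segments("singular value decomposition", segments, index):
    print(seg.recording_id, seg.start_s, seg.end_s)  # → linalg-01 2710.0 2740.0
```

Exact token matching keeps the idea visible. A production system would more plausibly rank segments by relevance and would have to tolerate speech-recognition errors.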

In keeping with its design-based research framework, the project pursues three processual goals: the creation of the AI-based system, its iterative evaluation, and its adaptation. Accordingly, the integration of the ITS prototype into existing learning ecosystems will be accompanied by educational research, continuous testing, and formative evaluation.

Usage goals, in turn, concern the interactions between users and the ITS. As the HAnS system becomes part of everyday teaching and learning processes at the twelve universities involved in the project, we expect the sheer number of interactions to improve the AI significantly.

2.1 Agile Development Guided by Educational Research and Formative Evaluation

The technical development of the ITS will be guided and continuously evaluated by a group of researchers specialising in higher education. Their analyses serve several purposes. During the first stages of development, they will provide a more thorough understanding of the initial situation: How have AI-based technologies been applied to post-secondary education so far? Which theoretical models were used to create their frameworks, and did they involve instructional design? And how have these applications affected teaching and learning processes? To gain an overview of projects and concepts that have already been published, we will conduct a scoping review (Levac et al., 2010) of the research on the application of AI in higher education.

Scoping reviews are still considered a relatively new approach to examining the state of research. Focusing on the scope of information available on a given topic, they provide a comprehensive overview of the existing literature (Peters et al., 2020; Munn et al., 2018). Unlike systematic literature reviews, however, they include neither an evaluation of the results nor a critical analysis of the methodology used in the gathered literature. Instead, scoping reviews provide a way to map a field of research quickly yet thoroughly. The wide range of results inherent to this approach proves especially useful when few studies deal with the exact topic and methodology of a project, forcing researchers to collect and compare findings from different fields.

As a starting point for a joint DBR project to which several teams will contribute their expertise, the scoping review has three distinct advantages. Firstly, its methodology allows the research team in charge of this preparatory study to collate data from various academic fields and to leave the evaluation of the results to the specialists taking on the different aspects of the design during later stages. Secondly, scoping reviews are particularly well suited for mapping quickly evolving fields of research such as AI in higher education: "[A] systematic review might typically focus on a well-defined question where appropriate study designs can be identified in advance, whilst a scoping study tends to address broader topics where many different study designs might be applicable" (Arksey and O'Malley, 2005, p. 20). Thirdly, the open-ended structure of the scoping review can be adapted to match the iterative structure of DBR development cycles. If new areas of research become relevant to the project during later cycles, more topics and keywords can easily be added to expand the scoping review.
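To illustrate how new topics and keywords could be added between cycles, the following sketch assembles a Boolean search string from concept groups. Synonyms are joined with OR and concepts with AND. The concept names and keywords are invented for illustration. Real bibliographic databases differ in their exact query syntax, so the resulting string would need adapting for each database searched.

```python
def quote(term: str) -> str:
    # Phrase-quote multi-word terms so they are searched as one unit.
    return f'"{term}"' if " " in term else term


def build_query(concepts: dict[str, list[str]]) -> str:
    """OR the synonyms inside each concept group, then AND the groups together."""
    groups = [
        "(" + " OR ".join(quote(term) for term in synonyms) + ")"
        for synonyms in concepts.values()
        if synonyms  # skip empty groups
    ]
    return " AND ".join(groups)


# Hypothetical concept groups for a first review cycle.
concepts = {
    "technology": ["artificial intelligence", "intelligent tutoring system"],
    "setting": ["higher education", "university"],
}
print(build_query(concepts))
# → ("artificial intelligence" OR "intelligent tutoring system") AND ("higher education" OR university)

# A later DBR cycle adds a concept group; the query grows without being rewritten by hand.
concepts["media"] = ["lecture recording", "podcast", "screencast"]
print(build_query(concepts))
```

Keeping the concept groups as data rather than as a hand-edited string makes each expansion of the review traceable from one cycle to the next.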

In order to test the HAnS system as development progresses, we will initially implement prototypes of the learning materials, evaluate them formatively, and test them experimentally under controlled conditions with small groups of learners. This way, the system improves with each cycle. At the same time, constant educational analysis ensures that data protection, transparency, and ethics serve as crucial guiding factors for the development and implementation of the tutoring system. Students and teachers will be included explicitly in this process as future users, so their concerns and hopes can be comprehensively addressed as development progresses. Once the automatic modules for the creation of exercises and for monitoring users' achievement of learning objectives have reached a satisfactory level of maturity, a summative evaluation will follow.

What sets HAnS apart from other ITS is its focus on audio and video recordings. Automatic transcription helps users identify learning materials that deal with specific topics. However, an improved search function is only the first step towards the intended learning experience platform. HAnS also uses the transcripts to create exercises automatically that will help users review the information provided in the recordings. This might, for example, allow students to prepare for exams by revisiting online lectures and using quizzes generated by the AI to check whether they remember the technical terms introduced in these lectures.
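The paper does not describe the exercise generator itself. One simple way to turn a transcript into the kind of term-recall quiz described above is to blank out glossary terms in individual sentences, producing cloze items. The sketch assumes a teacher-supplied glossary and a naive punctuation-based sentence splitter. Both of these, like the function name `make_cloze`, are illustrative assumptions.

```python
import re
from typing import Optional


def make_cloze(sentence: str, terms: list[str]) -> Optional[tuple[str, str]]:
    """Blank out the first glossary term found in a sentence; None if there is none."""
    # Try longer terms first so "singular value decomposition" beats "singular value".
    for term in sorted(terms, key=len, reverse=True):
        match = re.search(rf"\b{re.escape(term)}\b", sentence, flags=re.IGNORECASE)
        if match:
            gap = sentence[:match.start()] + "_____" + sentence[match.end():]
            return gap, match.group(0)
    return None


transcript = (
    "A matrix can be factorised in several ways. "
    "The singular value decomposition writes any matrix as a product of three matrices. "
    "Eigenvalues only exist for square matrices."
)
glossary = ["singular value decomposition", "singular value", "eigenvalues"]  # hypothetical

# Naive sentence split on ., ! or ? followed by whitespace.
sentences = re.split(r"(?<=[.!?])\s+", transcript.strip())
quiz = [item for s in sentences if (item := make_cloze(s, glossary))]
for gap, answer in quiz:
    print(gap, "->", answer)
```

Sentences without a glossary term are simply skipped. The first sentence therefore yields no item, and the quiz contains two.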

Overall, HAnS aims to provide a quick and efficient way to structure self-directed learning throughout higher education. To ensure that students can use the ITS from their first semester until their final exam, the system must accommodate different learning objectives (e.g., gaining new knowledge vs. re-activating or expanding existing knowledge) and skill levels. Therefore, the system will supplement its multiple-choice tasks, cloze tests, and question/answer catalogues with recommendations for further study. On top of this, related learning materials will be pointed out to users as links to recordings available via HAnS or as automatically created cross-references to external sources.

Furthermore, the AI will generate a ranking of individual sections taken from different video or audio files linked to specific metadata. This contributes to a more nuanced search function, allowing students to filter the learning materials for specific content (e.g., related academic fields, recommended semester, or theory vs. application) and to choose materials in accordance with their personal study preferences (e.g., text-based, numerical, or graphical visualisation of the concepts explained in the recordings). We expect this tailoring of learning materials to students' objectives, needs, and preferences to improve academic performance significantly once the AI-based recommendation feature reaches a sufficient degree of maturity.
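Here is a minimal sketch of metadata filtering followed by ranking. It assumes that each section carries a flat dictionary of metadata (the keys `discipline` and `focus` are hypothetical). Raw counts of single-word query terms stand in for the AI-based relevance model the project envisages.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Section:
    """A section cut from an audio or video file, with hypothetical metadata."""
    title: str
    transcript: str
    metadata: dict[str, str] = field(default_factory=dict)


def score(section: Section, query_terms: list[str]) -> int:
    # Stand-in relevance: how often the (single-word) query terms occur.
    words = re.findall(r"\w+", section.transcript.lower())
    return sum(words.count(term.lower()) for term in query_terms)


def search(sections: list[Section], query_terms: list[str], **filters: str) -> list[Section]:
    """Keep sections matching every metadata filter, then rank by descending score."""
    candidates = [
        s for s in sections
        if all(s.metadata.get(key) == value for key, value in filters.items())
    ]
    scored = [(score(s, query_terms), s) for s in candidates]
    scored = [pair for pair in scored if pair[0] > 0]  # drop irrelevant sections
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep input order
    return [s for _, s in scored]


sections = [
    Section("SVD, theory",
            "the decomposition of a matrix into singular vectors and singular values",
            {"discipline": "mathematics", "focus": "theory"}),
    Section("SVD in practice",
            "we apply the decomposition to compress an image matrix",
            {"discipline": "mathematics", "focus": "application"}),
    Section("Image compression",
            "image formats and compression without any decomposition",
            {"discipline": "computer science", "focus": "application"}),
]
for s in search(sections, ["decomposition", "matrix"], focus="application"):
    print(s.title)  # prints "SVD in practice", then "Image compression"
```

Filtering before scoring mirrors the two-step interaction described above. Students first narrow the pool by their preferences, and only the remaining sections compete for rank.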

Through users' constant interaction with the ITS, innovative learning materials are created and continuously adapted to the current state of educational technology. Students will, for example, be able to rate whether they have reached their learning objectives and leave feedback for the teachers who created the learning materials. At the same time, users can add their own recommendations for further study on a particular topic to the HAnS database. This feedback loop will help us assess the quality of the materials and the accuracy of the educational design framework.

The algorithms for the personalised search and for the individualised generation of exercises and recommendations are continuously and automatically adapted through a collaborative evaluation process, and both will become more customised through user interaction. HAnS workshops for students and teachers will accelerate this part of the development process: The more users interact with the AI, the faster the ITS can grow into a system that offers spot-on individual support. Easy access to the system must therefore also be one of the main concerns guiding the development of the HAnS interface. Successful implementation of the ITS at the twelve universities participating in the project requires an AI-based tutoring system that can be connected to different LMS. For this reason, compatibility with a variety of systems will be one of the basic features of the software, and it may later serve as the cornerstone for the expansion and transfer of HAnS to other virtual learning environments and institutional contexts.

2.2 Design-based Research Methodology

The HAnS project combines agile technological development with the equally agile methodology of design-based research (DBR). As a framework, DBR allows researchers to generate theoretical insights through a hands-on approach (Design-Based Research Collective, 2003; McKenney and Reeves, 2012; Reimann, 2013; Bakker and van Eerde, 2015). Applied to the learning sciences, this usually means that researchers identify a specific issue within a learning context and create an intervention to solve it. They then put their solution to the test, documenting and evaluating the results so they can be used as the starting point for another development cycle. Refined over the course of several DBR cycles, the intervention comes closer and closer to an ideal solution, and in the meantime it also provides researchers with new insights and data (Jahn, 2017). Thanks to this two-pronged approach to teaching and learning, "[d]esign-based research is increasingly used as a research approach that succeeds in advancing current teaching-learning research and pedagogical practice in equal measure through theory-based design processes" (Knogler and Lewalter, 2014, p. 2; cf. also Hasselhorn et al., 2014).

Our ITS is meant to solve a core problem of digital self-study: Students have to possess advanced research skills and invest a lot of time to find learning materials that suit their interests and needs. This applies particularly to audio and video recordings. As an intervention, we will create an ITS that supplies students with well-indexed learning materials and individually generated exercises.

The development of HAnS follows the approach to DBR described by Easterday et al. (2018), which adapts iterative structures used in software development for research purposes. By synchronising the workflows of research and technical development, the specialist groups can coordinate their tasks and create synergy between the different departments of this interdisciplinary project. The procedure is iterative and cyclical, i.e., there will be multiple alternations between exploration, design, and evaluation. Educational research will monitor the development of HAnS and adapt the system to potential user groups' preferences, habits, and needs. Continuous evaluation will allow the more research-oriented groups within the project team to derive design recommendations, which we will then use to shape the next iteration of the prototype.

The development of HAnS comprises three survey phases. Survey phase I evaluates the conclusiveness and feasibility of the project, survey phase II assesses the initial local benefit and theoretical soundness of the assistance system, and survey phase III evaluates both the verifiable effectiveness of the system and its guiding principles, which may then be generalised and applied to other learning contexts. As the system reaches higher levels of maturity, the test scenarios and methods used to gauge the effectiveness of the ITS will also have to change.

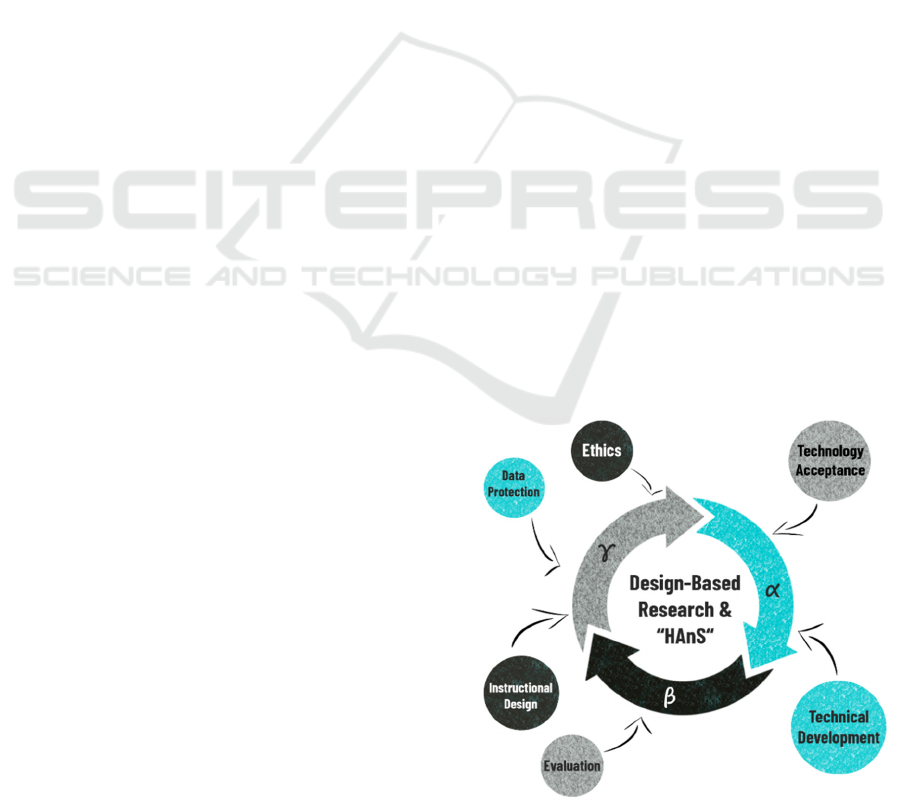

Within each of these three phases, three DBR

cycles will take place (α cycle, β cycle, ɣ cycle). Since

complex interventions such as HAnS usually

comprise several components (e.g., automated

practice tasks, learning level checks, or feedback

processes), there will also be several so-called micro

cycles as each of those components is created within

Figure 1: DBR cycle of “HAnS”.

CSEDU 2022 - 14th International Conference on Computer Supported Education

182

its own, smaller DBR cycle running parallel to the

main development cycles.

The α cycle focuses on mapping students' study conditions and learning requirements as well as the personal, social, and cultural contexts that affect (digital) self-study. Additionally, we will conduct decoding interviews with teachers according to the guidelines developed by Riegler and Palfreymann (2019) to establish which intended learning outcomes (ILO) and subject-specific requirements teachers anticipate when they create learning materials for higher education. By comparing these ILO with students' actual learning outcomes (ALO), we aim to identify so-called bottlenecks, i.e., challenging learning situations in which students might profit from additional support and explanations that the ITS could provide in lieu of absent teachers (Riegler and Palfreymann, 2019).
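Decoding interviews are qualitative, so the following is only a schematic illustration under a strong simplifying assumption. Suppose ILO and ALO were both expressed as the proportion of learners who reach each outcome. Candidate bottlenecks could then be flagged as the outcomes with the largest shortfall. The outcome labels, the values, and the 0.2 threshold are all invented for illustration.

```python
def find_bottlenecks(intended: dict[str, float], achieved: dict[str, float],
                     threshold: float = 0.2) -> list[tuple[str, float]]:
    """Outcomes whose achieved level falls short of the intended level by more than
    `threshold`, largest shortfall first. Levels are proportions in [0, 1]."""
    gaps = []
    for outcome, target in intended.items():
        # Assumption: an outcome without ALO data counts as not achieved.
        actual = achieved.get(outcome, 0.0)
        shortfall = target - actual
        if shortfall > threshold:
            gaps.append((outcome, round(shortfall, 2)))
    return sorted(gaps, key=lambda item: item[1], reverse=True)


# Invented example: share of learners expected (ILO) vs. observed (ALO) to reach each outcome.
ilo = {"define SVD": 0.9, "compute SVD by hand": 0.8, "interpret singular values": 0.7}
alo = {"define SVD": 0.85, "compute SVD by hand": 0.45, "interpret singular values": 0.4}
print(find_bottlenecks(ilo, alo))
# → [('compute SVD by hand', 0.35), ('interpret singular values', 0.3)]
```

Such a ranking could at most suggest where to look. Explaining *why* learners struggle at a given step remains the task of the qualitative interviews.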

In the β cycle, the focus shifts from the success factors of digital self-study to the ITS prototype in use. Here, we assess the quality of the learning materials, students' decisions for or against the AI-based tutoring system, and their interaction with the assistant. This includes students' subjective interpretation of their experience with HAnS and their wishes regarding design and functionality. Within the same cycle, we will again interview teachers, focusing this time on the selection and evaluation of their teaching materials. We will also interview both user groups about their acceptance of relevant project components.

In the γ cycle, we will determine the effects of the ITS on students' knowledge, supra-disciplinary cognitive effects, and key competencies necessary for self-organised learning by way of an impact analysis covering both the ALO and the underlying mechanisms responsible for the learning environment's impact. From this evaluation, we will derive design recommendations for effective learning. In addition, the third DBR cycle also contains a "bottom-up ethics" approach to users' perspectives on the ITS. We will systematically evaluate learners' and teachers' opinions on the AI-based tutoring system to incorporate their hopes and concerns into the next iteration of the prototype.

2.3 Empirical Methods

The following empirical mixed-methods approaches are used within the DBR framework to guide the HAnS project through its development cycles:

We will use impact analyses with a quasi-experimental (waiting) control group design to identify predictors of success and conditions for the transfer of the HAnS concept to other subjects and framework conditions (scaling). From the results, we will derive statements on possible adaptations and the generalisability of the system. The data for these analyses will be collected from teachers and learners. To guide the inquiry, we will develop impact models with both groups. Within the framework of the impact analyses, the question of impact mechanisms will also be addressed through "process tracing" (Beach, 2017).
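In a waiting control group design, the control group receives the intervention only after the comparison period. During that period, the two groups can therefore be compared on their learning gains. The sketch below computes a bare-bones estimate (mean gain of the HAnS group minus mean gain of the waiting group) using invented scores. It ignores significance testing and the selection effects that a quasi-experimental design has to address, and it is not the project's analysis plan.

```python
from statistics import mean


def mean_gain(pre: list[float], post: list[float]) -> float:
    """Average pre-to-post change, with scores paired per learner."""
    if len(pre) != len(post):
        raise ValueError("pre and post scores must be paired per learner")
    return mean(after - before for before, after in zip(pre, post))


def gain_difference(treat_pre: list[float], treat_post: list[float],
                    wait_pre: list[float], wait_post: list[float]) -> float:
    """Mean gain of the HAnS group minus mean gain of the waiting control group."""
    return mean_gain(treat_pre, treat_post) - mean_gain(wait_pre, wait_post)


# Invented test scores (0-100) for three learners per group.
hans_pre, hans_post = [50, 60, 55], [70, 72, 68]   # gains 20, 12, 13 -> mean 15
wait_pre, wait_post = [52, 58, 57], [58, 63, 61]   # gains 6, 5, 4 -> mean 5
print(gain_difference(hans_pre, hans_post, wait_pre, wait_post))
```

With these numbers, the estimated advantage of the HAnS group is 10 points. Subtracting the waiting group's gain removes improvement that would have happened anyway over the same period.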

Longitudinally structured, quantitative online surveys will evaluate students' ALO and the achievement of learning objectives as seen by teachers and learners (triangulation). Besides identifying changes in learning behaviour and academic success over time, the longitudinal design of these studies will also allow us to contrast survey results of students and teachers from different academic disciplines. This will provide additional information on the effectiveness of the prototype and, more importantly, on the potential of HAnS as a learning tool in particular fields of study. Central dimensions and indicators for these surveys therefore include target group characteristics; the media and content of the learning materials; planning and implementation, including the usage situation and learning location; and reflection methods.

Parameterisation aims to derive more reliable data from the subjective information provided by HAnS users and developers. For this purpose, we will compare the self-reports collected as part of the longitudinal surveys with objective parameters or methods of analysis, such as frequency analysis, interaction analysis, causal modelling, sentiment analysis (via text mining), or topic analysis of the text material (via latent Dirichlet allocation). The data basis is the HAnS data protocol, i.e., the record of developers' and users' interactions with the different iterations of the prototype.
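The following sketch shows one way to compare self-reports with an objective parameter. It counts each learner's logged interactions (a simple frequency analysis) and correlates these counts with self-reported usage intensity using Pearson's r. The log format, learner IDs, and values are hypothetical, since the actual structure of the HAnS data protocol is not described here.

```python
from collections import Counter
from math import sqrt


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two equally long series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired series of length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (sx * sy)


# Hypothetical protocol excerpt: (learner ID, logged event).
log = [("s1", "search"), ("s1", "quiz"), ("s2", "search"),
       ("s3", "search"), ("s3", "quiz"), ("s3", "quiz")]
# Hypothetical survey answers: self-reported usage intensity on a 1-5 scale.
self_reported = {"s1": 3, "s2": 1, "s3": 4}

logged = Counter(learner for learner, _event in log)  # frequency analysis
learners = sorted(self_reported)
r = pearson([self_reported[learner] for learner in learners],
            [logged[learner] for learner in learners])
print(f"r = {r:.2f}")  # → r = 0.98
```

A high correlation would suggest that self-reports track actual use. A low one would flag the self-report items for closer inspection in the next cycle.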

An evaluation of already existing digital teaching materials from different disciplines will provide a baseline for the development of the prototype. In later stages, we will evaluate the ITS through a representative survey with probabilistic sampling, based on purposive case type selection, qualitative sampling plans, and descriptive data with a view to teacher and learner perspectives. At the beginning of the project, however, we will evaluate how audio and video recordings are used as learning materials without any AI-based support. The criteria used in this analysis (such as the use of additional media, students' motivation, and the ILO teachers associate with certain learning materials) will later be used to compare the effectiveness of HAnS to that of learning materials provided without the AI-based features of the ITS.

We will apply a reconstructive documentary analysis to records of online group discussions in which students and teachers share their opinions and knowledge regarding the potentials and challenges of AI in higher education. This analysis aims to identify the explicit and implicit value systems guiding potential HAnS users. Considering the project's duration, these group discussions can also help counteract the onset of tunnel vision in later research and development cycles. By comparing their own expert knowledge of the AI-based tutoring system with the application-oriented perspective of students and teachers, our developers and research teams will gain a deeper understanding of what potential users expect from an ITS such as HAnS.

Ethnographic case studies will further address students' use of the AI-based tutoring system. Ethnographic workplace studies covering computer labs at universities and students' private learning spaces, document analyses of the learning units, and subsequent interviews with students will be used to record learning practices and individual user experiences with the implemented AI-based learning materials.

3 DISCUSSION

HAnS aims to expand the horizon of ITS projects in higher education by creating a comprehensive and, above all, fully functional intelligent learning aid that will be implemented at twelve German universities. To create and evaluate a system as complex as this, we will draw on the combined expertise of twelve groups of specialists from different academic disciplines, ranging from IT professionals and experts on ethics to researchers from the educational sciences. Of course, coordinating such a large and heterogeneous research team presents a challenge. In order to integrate evaluation, research design, methodology, and data, the shared workflows will have to be structured systematically. For this reason, we have decided to use an innovative methodology: By combining several relatively new, agile approaches, we can apply models from the educational sciences and partial surveys in a way that allows research and evaluation to keep pace with agile software development.

With DBR as its cornerstone, this methodology allows us to combine the different evaluation methods in which the teams specialise into a shared framework of agile research. The iterative cycles of a DBR project establish a reciprocal link between the evaluation results, the results of the didactic analyses, and the progress of technological development. By processing the findings from the partial analyses of users' needs, wants, and interactions with the prototype, which the participating universities will contribute, we can derive recommendations for the iterative re-design and adaptation of the ITS. In order to gain a critical perspective on our own findings, we will also create a data feedback loop that presents the results of qualitative and quantitative research to the investigated user groups, creating additional evidence for the plausibility of our interpretations. Communicative Member Checks (Koelsch, 2013) will add another layer of transactional and transformational validity to the results.

The iterative DBR design is framed by a scoping review that will compile and present relevant models and concepts of educational theory. These will guide both the empirical methods and the design of an educational framework for HAnS. Since the expert groups must work in parallel to complete interlocking DBR cycles, we have chosen the scoping review as a method for mapping the existing literature. Consecutive partial reviews would cause the DBR cycles to stagger, whereas a scoping approach allows us to compile large amounts of research quickly and, consequently, to start the first cycle without needing one team to prepare its first contribution to the project months in advance. Instead, we can use the scoping review to form causal models for the first impact analyses as well as to define the study groups and subjects for the evaluation. If necessary, we can still expand our review during later stages, adapting the scope of theoretical research to the results of the empirical studies and the progress of the prototype.

Finally, we must consider that in DBR projects, the context is part of the intervention. Consequently, context variables are not "confounding variables": Instead, they are indispensable for gaining insight. Generalisations are not based on visible activities but on connections between interventions, contextual conditions, and effects, about which one makes corresponding assumptions to guide the design, testing, and evaluation process (Wozniak, 2015, p. 602). Our goal is to create highly transparent documentation of the entire DBR process so that HAnS will be developed not only as a particular ITS but also as a template for intelligent learning support software embedded in a learning experience platform that is easy to transfer to other learning and teaching contexts.


4 CONCLUSIONS

The particular design challenge of the HAnS project is to develop a digital learning space that takes into account the individual educational requirements and the different cognitive practices of students in higher education. To create an AI-based ITS that generates individualised learning materials, we will have to assess existing courses as well as students' and teachers' situations, skills, and opinions. On top of that, we will also have to find ways to identify locally functioning partial solutions that can be used as starting points for more generalised design principles. From theory formation through application to verification, we intend to cover all of these stages within a DBR framework, which allows us to use a problem-solving strategy that is both agile and holistic, drawing inspiration and expertise from the various specialisations present within our team of twelve expert groups.

As a result of this agile approach, we expect to derive design principles that can be implemented directly, as an exemplar, in our AI-based tutoring system HAnS, but that can also provide guidance for future projects: Ideally, our design principles will be easy to transfer and adapt to new cross-institutional learning architectures and to the educational research that will shape them.

REFERENCES

Arksey, H. & O’Malley, L. (2005). Scoping studies:

towards a methodological framework. International

Journal of Social Research Methodology, (8), 19–32.

https://doi.org/10.1080/1364557032000119616

Bakker, A. & van Eerde, D. (2015). An introduction to

design-based research with an example from statistics

education. In A. Bikner-Ahsbahs, C. Knipping & N.

Presmeg (Eds.), Approaches to qualitative research in

mathematics education. Advances in Mathematics

Education. Springer, Dordrecht.

Beach, D. (2017). Process-Tracing Methods in Social Science. Oxford Research Encyclopedia of Politics. https://doi.org/10.1093/acrefore/9780190228637.013.176

Bundesministerium für Bildung und Forschung (2020). Künstliche Intelligenz [Artificial intelligence]. https://www.bmbf.de/de/kuenstliche-intelligenz-5965.html

Bundesministerium für Bildung und Forschung (2021). Richtlinie zur Bund-Länder-Initiative zur Förderung der Künstlichen Intelligenz in der Hochschulbildung [Guideline for the federal–state initiative to promote artificial intelligence in higher education]. https://www.bmbf.de/foerderungen/bekanntmachung-3409.html

Design-Based Research Collective (2003). Design-based research: An Emerging Paradigm for Educational Inquiry. Educational Researcher, 32(1), 5–8. https://doi.org/10.3102/0013189X032001005

de Witt, C., Rampelt, F. & Pinkwart, N. (2020). Künstliche Intelligenz in der Hochschulbildung [Artificial intelligence in higher education]. Whitepaper. Berlin: KI-Campus. https://doi.org/10.5281/zenodo.4063722

Easterday, M. W., Rees Lewis, D. G. & Gerber, E. M. (2018). The logic of design research. Learning: Research and Practice, 4(2), 131–160. https://doi.org/10.1080/23735082.2017.1286367

Hasselhorn, M., Köller, O., Maaz, K. & Zimmer, K. (2014). Implementation wirksamer Handlungskonzepte im Bildungsbereich als Forschungsaufgabe [Implementing effective action concepts in education as a research task]. Psychologische Rundschau, 65(3), 140–149. https://doi.org/10.1026/0033-3042/a000216

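Jahn, D. (2017). Entwicklungsforschung aus einer handlungstheoretischen Perspektive: Was Design Based Research von Hannah Arendt lernen könnte [Development research from an action-theoretical perspective: What design-based research could learn from Hannah Arendt]. EDeR – Educational Design Research, 1(2), 1–17. https://doi.org/10.15460/eder.1.2.1144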

Knogler, M. & Lewalter, D. (2014). Design-Based Research im naturwissenschaftlichen Unterricht. Das motivationsfördernde Potenzial situierter Lernumgebungen im Fokus [Design-based research in science education: A focus on the motivational potential of situated learning environments]. Psychologie in Erziehung und Unterricht, 61, 2–14. https://doi.org/10.2378/peu2014.art02d

Koelsch, L. E. (2013). Reconceptualizing the Member Check Interview. International Journal of Qualitative Methods, 12(1), 168–179. https://doi.org/10.1177/160940691301200105

Levac, D., Colquhoun, H. & O’Brien, K. K. (2010). Scoping studies: advancing the methodology. Implementation Science, 5, Article 69. https://doi.org/10.1186/1748-5908-5-69

McKenney, S. & Reeves, T. (2012). Conducting Educational Design Research. London: Routledge.

Munn, Z., Peters, M. D. J., Stern, C., Tufanaru, C., McArthur, A. & Aromataris, E. (2018). Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC Medical Research Methodology, 18, Article 143. https://doi.org/10.1186/s12874-018-0611-x

Peters, M. D. J., Marnie, C., Tricco, A. C., Pollock, D., Munn, Z., Alexander, L., McInerney, P., Godfrey, C. M. & Khalil, H. (2020). Updated methodological guidance for the conduct of scoping reviews. JBI Evidence Synthesis, 18(10), 2119–2126. https://doi.org/10.11124/JBIES-20-00167

Reimann, P. (2013). Design-based Research – Designing as Research. In R. Luckin, S. Puntambekar, P. Goodyear, B. L. Grabowski, J. Underwood & N. Winters (Eds.), Handbook of Design in Educational Technology. New York: Routledge.

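Riegler, P. & Palfreyman, N. (2019). Decoding the Disciplines: Entwicklung effektiver Lernaktivitäten durch fachbezogene Lerngespräche [Decoding the Disciplines: Developing effective learning activities through subject-specific learning conversations]. In B. Meissner, C. Walter, B. Zinger, J. Haubner & F. Waldherr (Eds.), Tagungsband zum 4. Symposium zur Hochschullehre in den MINT-Fächern [Proceedings of the 4th Symposium on University Teaching in the STEM Subjects]. Nürnberg: Technische Hochschule Nürnberg Georg Simon Ohm & Zentrum für Hochschuldidaktik (DiZ).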

Sandoval, W. (2014). Conjecture Mapping: An Approach to Systematic Educational Design. Journal of the Learning Sciences, 23(1), 18–36. https://doi.org/10.1080/10508406.2013.778204

Wozniak, H. (2015). Conjecture mapping to optimize the design-based research process. Australasian Journal of Educational Technology, 31(5), 597–612. https://doi.org/10.14742/ajet.2505

Zawacki-Richter, O., Marin, V. I., Bond, M. & Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education – where are the educators? International Journal of Educational Technology in Higher Education, 16, Article 39. https://doi.org/10.1186/s41239-019-0171-0
