Guidelines’ Parametrization to Assess AAL Ecosystems' Usability

Carlos Romeiro² and Pedro Araújo¹,²

¹ Instituto de Telecomunicações (IT-UBI), Universidade da Beira Interior, Covilhã, Portugal

² Department of Computing, Beira Interior University, Covilhã, Portugal

Keywords: Usability, Ambient Assisted Living, User Interaction, Automation, Heuristics Analysis.

Abstract: With the aging of the population, healthcare services worldwide are faced with new economic, technical, and

demographic challenges. Indeed, an effort has been made to develop viable alternatives capable of mitigating

current services’ bottlenecks and of assisting/improving end-user’s life quality. Through a combination of

information and communication technologies, specialized ecosystems have been developed; however,

multiple challenges (ecosystems autonomy, robustness, security, integration, human-computer interactions

and usability) have arisen, compromising their adoption and acceptance among the main stakeholders.

Dealing with the technical related flaws has led to a shift in the focus of the development process from the

end-user towards the ecosystem’s technological impairments. Although many issues, namely usability, have

been reported, solutions are still lacking. This article proposes a set of metrics based on the parametrization

of literature guidelines, with the aim of providing a consistent and accurate way of using the heuristic

methodology not only to evaluate the ecosystem’s usability compliance level, but also to create the building

blocks required to include automation mechanisms.

1 INTRODUCTION

The age pyramid has been shifting in both western

and eastern civilizations. The decrease in birth-rates

and the overall improvement of health care services,

combined with higher life expectancy, have led to the

current population distribution tendency on a

worldwide scale (Eurostat, 2019).

This phenomenon poses new challenges and

opportunities in several sectors, especially in the health

sector, where there have been growing demands to

ensure the elderly’s wellbeing. These demands,

combined with resources shortage and lack of a

patient-oriented approach, compromise both the

efficiency and availability of these core services. As

a consequence, the scientific and industrial

communities have attempted to develop an ICT based

solution able to improve the user’s quality of life,

ensure his/her autonomy, optimize the economic

sustainability of medical assistance services and

address the healthcare services specific needs – the

Ambient Assisted Living Ecosystems (Curran, 2014).

Despite their improvement, multiple challenges should be tackled in order to make their widespread adoption feasible and secure. These challenges relate to multiple topics, such as security, usability, autonomy and data management, among others (Curran, 2014; A. V. Gundlapalli, M.-C. Jaulent, 2018; Van Den Broek, G., Cavallo, F., Wehrmann, 2010; Peek et al., 2014; Greenhalgh et al., 2013; Duarte et al., 2018; Mkpa et al., 2019; Ismail & Shehab, 2019; Vimarlund & Wass, 2014).

Concerning the system’s usability, multiple

studies have attempted to identify the key factors

compromising it, and indeed several approaches have

been proposed to aid in its multiple context analysis

(Macis et al., 2019; Martins & Cerqueira, 2018;

Hallewell Haslwanter, Neureiter, et al., 2018;

Hallewell Haslwanter, Fitzpatrick, et al., 2018;

Holthe et al., 2018). To tackle this issue, the authors’ proposition was to empower product manufacturers by providing them with a simple and feasible way of evaluating and monitoring the product’s usability. Of all the available methodologies, the one eligible to be executed in an enterprise setup, due to its inherent cost and speed of execution, was the heuristic-based one. However, it also presents limitations that compromise its adoption, namely the accuracy of its results and its restricted applicability.

Considering the challenges presented, this article

provides a parametrization of the usability guidelines

depicted in literature. Our aim is to: 1) reduce the subjectivity level typically found in heuristic-based methodologies, 2) improve their overall accuracy and results consistency, 3) extend their accessibility/applicability to non-usability experts and 4) minimize the effort typically related to their automation.

Romeiro, C. and Araújo, P.
Guidelines’ Parametrization to Assess AAL Ecosystems’ Usability.
DOI: 10.5220/0011043800003179
In Proceedings of the 24th International Conference on Enterprise Information Systems (ICEIS 2022) - Volume 2, pages 309-316
ISBN: 978-989-758-569-2; ISSN: 2184-4992
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

A thorough search in literature was conducted in

order to identify what can be learned from the best

practices depicted, how they can be applied in a

practical scenario and how the inclusion of

automation can be a feasible option in an enterprise

context.

2 MULTIDIMENSIONALITY OF USABILITY

Usability is a multidimensional property that reflects

the scope in which a product/service is expected to be

used (Application, 2016; ISO 9241-11, 1998; Cruz et

al., 2015). Typically, both User Experience and

Usability are mixed during the product analysis

phase, given their broad scope and definition.

However, these properties have different purposes:

while User Experience focuses on the analysis of how

behavioural, social or environmental factors

influence the user’s product perception (Saeed et al.,

2015; Martins et al., 2015a; Quiñones et al., 2018),

Usability focuses on the efficiency, effectiveness and satisfaction with which the user is able to accomplish a certain goal during the product’s interaction process

(Quiñones et al., 2018). To ensure the product

usability from an early stage, it is mandatory to define

a set of guidelines to be adopted during the interface’s

implementation phase and methodologies to identify

usability bottlenecks.

2.1 Guidelines

The search for a set of golden rules that could assist

the team during the development cycle was explored

from an early stage.

In 1990 the authors Jakob Nielsen and Rolf

Molich proposed 10 heuristic principles (Molich &

Nielsen, 1990). In 1996 the author Jill Gerhardt-

Powals proposed 10 cognitive principles (Powalsa,

1996) focused on a holistic analysis of the usability

evaluation process. In 1998 the author Ben

Shneiderman proposed a set of 8 golden rules

(Shneiderman, 2010). In 2000 the authors Susan

Weinschenk and Dean Barker combined Jakob

Nielsen’s principles with vendor specific guidelines

to achieve a set of 20 principles (Science, 2016) that

intended to bridge the gap between the defined

principles and the typical environments in which they

were to be applied.

2.2 Methodologies

Regarded as an intrinsic part of the design and

development lifecycle, the usability methodologies’

main role is the identification and mitigation of

usability bottlenecks (Martins et al., 2015b).

From the multiple methodologies available –

enquiries, inspection and test-based - this article

focuses on an inspection-based methodology –

heuristic methodology. However, before applying

any guideline breakdown, it is important to identify

the main benefits and drawbacks, in order to address

what motivated the selection of such methodology in

the first place.

In terms of benefits, the heuristic methodology is

a quick and low cost approach that provides feedback

to the designers in an early development stage,

without the direct intervention of end-users. This

approach uses literature guidelines to evaluate the

interface and this assists the designers in identifying

correction measures to solve usability bottlenecks

detected. Regarding drawbacks, the ones most frequently highlighted are: 1) the efficiency and viability of the approach depend on the experts’ know-how regarding usability guidelines and best practices; 2) the inability to evaluate usability to its full extent – indeed, the approach’s evaluation scope does not include user-related metrics, such as the user’s satisfaction; 3) the uncertainty regarding the reliability of the end results, which can be tackled by including a significant number of specialists with the proper know-how in the development cycle; and 4) costs/expenses (Molich & Nielsen, n.d.; Federal Aviation Administration, n.d.).

The dependency on expert’s know-how and the

execution restrictions identified in the heuristic

approach are challenges that the proposed

parametrization intends to mitigate, so as to ensure

that its execution is accessible to any non-usability

expert. However, it should be noted that applying a set of well-defined metrics to manually evaluate an interface’s compliance level with the guidelines is a time-consuming task. Since parametrization is a first step towards defining business rules to be consumed by a yet-to-be-defined tool, it is reasonable to explore the use of automation mechanisms to handle such a procedure.


3 HEURISTIC AUTOMATION

The use of automation in the heuristic methodology is a topic already explored in the literature, and it brings several benefits, such as: a reduction in evaluation costs, maximization of the interface’s test coverage, provision of mechanisms to accurately assess the gap between the actual and the expected results and to predict the side effects of design changes, and independence from usability experts’ know-how (Bakaev, Mamysheva, et al., 2017; Ivory & Hearst, 2001; Quade, 2015).

Nonetheless, the inclusion of automation neither

discards the need for manual testing, nor provides

mechanisms capable of evaluating usability to its full

extent (Ivory & Hearst, 2001). There are user related

metrics which are out of the scope of the heuristic

methodology approach, namely the user’s satisfaction

level - unmeasurable by currently available automatic

mechanisms.

Note that the advantages that automation inherently brings to the applicability of the heuristic methodology motivated the scientific community to explore and create tools to assist developers and end-users in the usability evaluation process. The direct result of such analysis was the definition of four tool categories, each one with its unique characteristics:

interaction-based – focused on the use of users’

interactions to evaluate the interface’s usability

(Bakaev, Mamysheva, et al., 2017; Bakaev,

Khvorostov, et al., 2017; Type & Chapter, 2021;

Limaylla Lunarejo et al., 2020; Paternò et al., 2017),

metric-based – focused on the definition of metrics used

to quantify the interface’s compliance level with

usability guidelines defined in literature (Bakaev,

Mamysheva, et al., 2017; Bakaev, Khvorostov, et al.,

2017; Type & Chapter, 2021), model-based – focused

on the definition of interaction models through the

use of Artificial Intelligence mechanisms to evaluate

the interface (Bakaev, Mamysheva, et al., 2017;

Bakaev, Khvorostov, et al., 2017; Type & Chapter,

2021; Todi et al., 2021), and the hybrid-based

(Bakaev, Mamysheva, et al., 2017; Bakaev,

Khvorostov, et al., 2017).

According to the environment in which these

solutions are integrated, a tendency towards the type

of categories adopted can be noticed.

¹ https://dynomapper.com/

² https://achecker.ca/checker/index.php

³ https://developer.android.com/training/testing/ui-automator#ui-automator-viewer

3.1 Enterprise Context

In an enterprise context, the available options are

metric-based standalone tools that corroborate the

interface’s compliance level with the accessibility

guidelines. The time required to manually check each individual guideline, as well as government policies on compliance with accessibility guidelines (European Commission, 2010; Pădure & Independentei, 2019), were the two reasons that further fostered the development of several tools for web and mobile applications between 2010 and 2020 (Dynomapper¹, AChecker², UI Automator Viewer³ and WCAG Accessibility Checklist⁴, among others).

3.2 Academic Context

In an academic context, the solutions developed

focused on the evaluation of the multiple features

which define usability. An analysis of 96 scientific

articles ranging from 1997 to 2021 provides an

overview of the trends in the usability automation

domain (41% interaction-based, 26% model-based,

25% metric-based and 2% hybrid-based proposals).

The approach explored in this article follows the hybrid-based category, combining a metric-based approach with a model-based approach. Within the former, metrics are defined to assert the interface’s compliance level with the defined guidelines; within the latter, the compliance level of the actions executed within the interface is checked using the interaction models created and trained. By combining the characteristics of both approaches, we obtain a heuristic methodology capable of checking the interface’s components and actions without requiring the direct intervention of either external experts or end-users.

4 HEURISTICS OPTIMIZATION

4.1 Principles’ Breakdown

Considering the highlighted principles and with the objective of identifying aspects in common, a parallelism between each author’s specific set and the principles/definitions unique to each subset has been established. The generated output provided the insights required to minimize the subjectivity in the principles’ analysis and consequently define the parametrization building blocks.

⁴ https://apps.apple.com/us/app/wcag-accessibility-checklist/id1130086539

The guidelines considered within the parametrization scope were the following: 1) Jakob Nielsen’s principles, 2) Shneiderman’s golden rules and 3) Weinschenk and Barker’s cognitive principles. Each principle was grouped according to its scope within the interface, a segmentation that took into account the interface’s main building blocks: the components and the actions (Galitz, 2002). As a direct result, the analysis was divided into three scopes: component oriented (CO), action oriented (AO) and section oriented (SeO).
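As an illustration only, the grouping described above could be represented as a small data structure. The following is a minimal sketch, not the authors' implementation: the names `Scope`, `Parameter`, `PARAMETERS` and `by_scope` are hypothetical, and the three sample rows are abridged from Table 1.

```python
from dataclasses import dataclass
from enum import Enum


class Scope(Enum):
    """Interface scope a parametrized practice applies to."""
    CO = "component oriented"
    AO = "action oriented"
    SEO = "section oriented"


@dataclass(frozen=True)
class Parameter:
    """One checkable practice derived from a usability principle."""
    guideline: str  # guideline set the principle belongs to
    principle: str  # principle being parametrized
    scope: Scope    # CO, AO or SeO
    practice: str   # concrete practice to look for in the interface


# Three sample rows, abridged from Table 1 (illustrative, not exhaustive).
PARAMETERS = [
    Parameter("Nielsen and Molich", "Error prevention", Scope.CO,
              "Providing defaults"),
    Parameter("Nielsen and Molich", "Error prevention", Scope.AO,
              "Providing a confirmation dialog"),
    Parameter("Nielsen and Molich", "Error prevention", Scope.SEO,
              "Automatically saving the user's work"),
]


def by_scope(parameters, scope):
    """Return the parameters that apply to the given scope."""
    return [p for p in parameters if p.scope is scope]
```

Keeping the scope as an explicit field means an evaluator (or a future tool) can select only the checks relevant to the element under inspection, e.g. `by_scope(PARAMETERS, Scope.CO)` when looking at a single component.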

For each guideline, the respective parametrization

is presented in Table 1, Table 2 and Table 3.

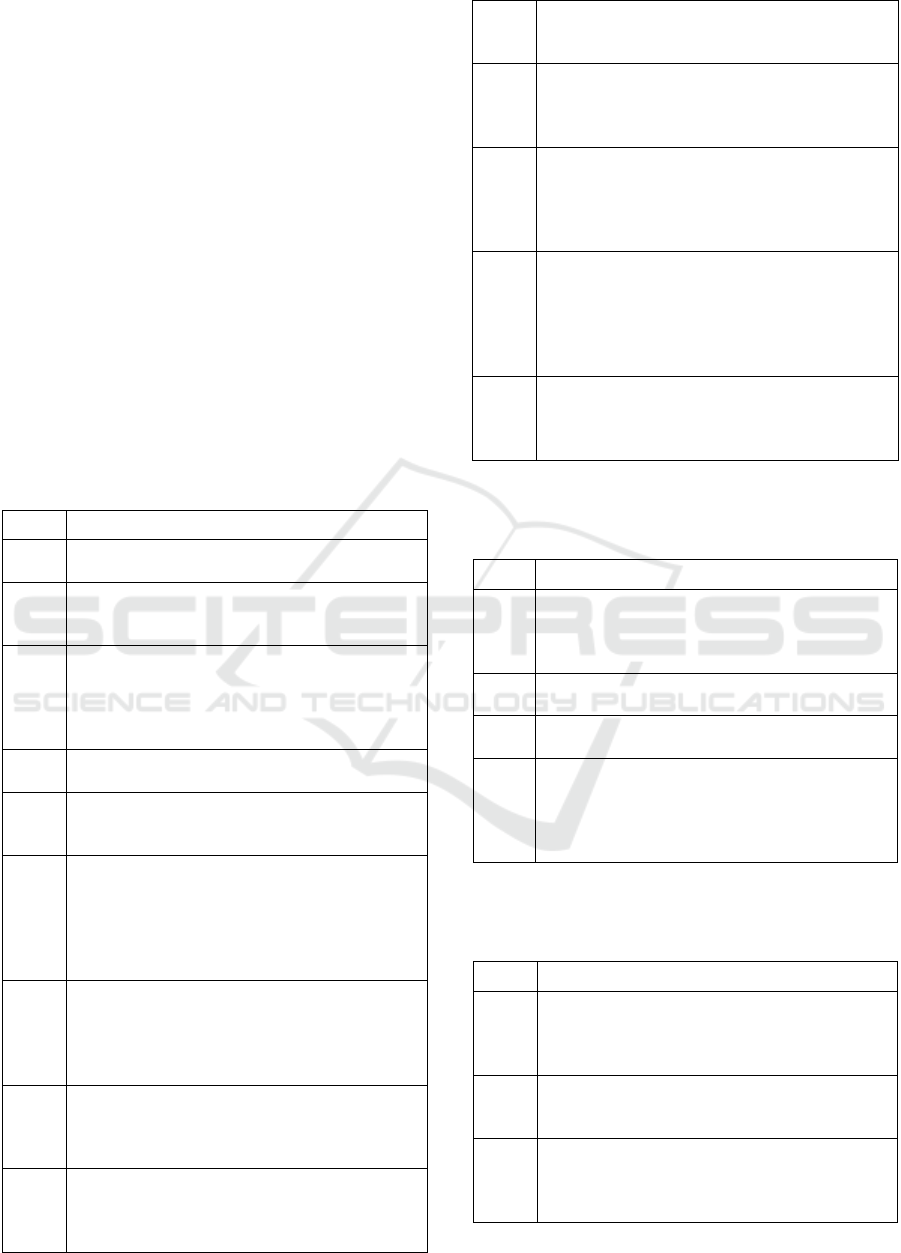

Table 1: Jakob Nielsen and Rolf Molich’s principles parametrization (Galitz, 2002; Harley, 2018; Kaley, 2018; Nielsen, 1999; Laubheimer, 2015; Budiu, 2014; Moran, 2015; Nielsen, 2001).

| Type | Parameters |
|---|---|
| CO | Visibility of system status - Providing feedback for each unique interactive state. |
| AO | Visibility of system status - Providing a progress bar indicator and a closure dialogue when applicable. |
| AO | Match between system and real world - Including an icon within the component that provides a real-world visual representation of the component’s purpose; Excluding system terminology from component’s text content. |
| CO | User control and freedom - Ensuring action reversibility. |
| AO | Consistency and standards - Ensuring the compliance of the components structure, look and feel properties with pre-set values. |
| CO | Error prevention - Restricting the user’s input; Providing defaults; Disabling a control when mandatory data is missing; Presenting warning messages reporting any unconformities regarding the input provided before action closure. |
| AO | Error prevention - Providing a confirmation dialog; Including a resolution within the error messages presented to the user; Providing an option to cancel the action execution in any given time. |
| SeO | Error prevention - Including a mechanism that automatically saves the user’s work when an abnormal event that prevents the interface stability occurs. |
| CO | Recognition rather than recall - Including hints that identify the data type required, tooltips with the description of the component’s action, labels/icons that clarify the component’s action purpose; Ensuring components’ consistency to help the user recollect the component’s purpose through its aesthetic. |
| CO | Flexibility and efficiency of use - Providing short keys to navigate across the interface components and interact with them accordingly. |
| CO | Aesthetic and minimalist design - Avoiding the use of highlights, shadows, glossy effects, and 3D effects; Including colour contrasts that consider the accessibility guidelines defined for the interface type created. |
| AO | Help users recognize, diagnose, and recover from errors - Providing a non-technical error message that includes the reason which led to the abnormal event and some advice on how to recover from it; Ensuring that error messages provided do not exceed the 20 words limit. |
| SeO | Help and documentation - Providing, in the global navigation menu, a dedicated option where the user can access the interface’s official documentation. |
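Two of the parameters in Table 1 lend themselves to a direct binary check: the 20-word limit on error messages and the colour contrast requirement. The sketch below is illustrative only. The function names are hypothetical, and the contrast check assumes the WCAG 2.x contrast-ratio definition with the 4.5:1 level AA threshold for normal text; the article itself only refers to "the accessibility guidelines defined for the interface type", so a different guideline may apply in practice.

```python
def error_message_within_limit(message: str, max_words: int = 20) -> bool:
    """Binary metric: the error message does not exceed the word limit."""
    return len(message.split()) <= max_words


def _linear(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb) -> float:
    """Relative luminance of an (R, G, B) colour as defined by WCAG 2.x."""
    r, g, b = (_linear(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background) -> float:
    """Contrast ratio between two colours, from 1 (none) to 21 (maximum)."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def has_sufficient_contrast(foreground, background, minimum: float = 4.5) -> bool:
    """Binary metric: contrast meets the assumed threshold (WCAG AA, normal text)."""
    return contrast_ratio(foreground, background) >= minimum
```

For instance, `has_sufficient_contrast((0, 0, 0), (255, 255, 255))` evaluates to `True`, while light grey text on a white background fails the check. Each function returns a plain boolean, which is what makes the result independent of the evaluator's judgement.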

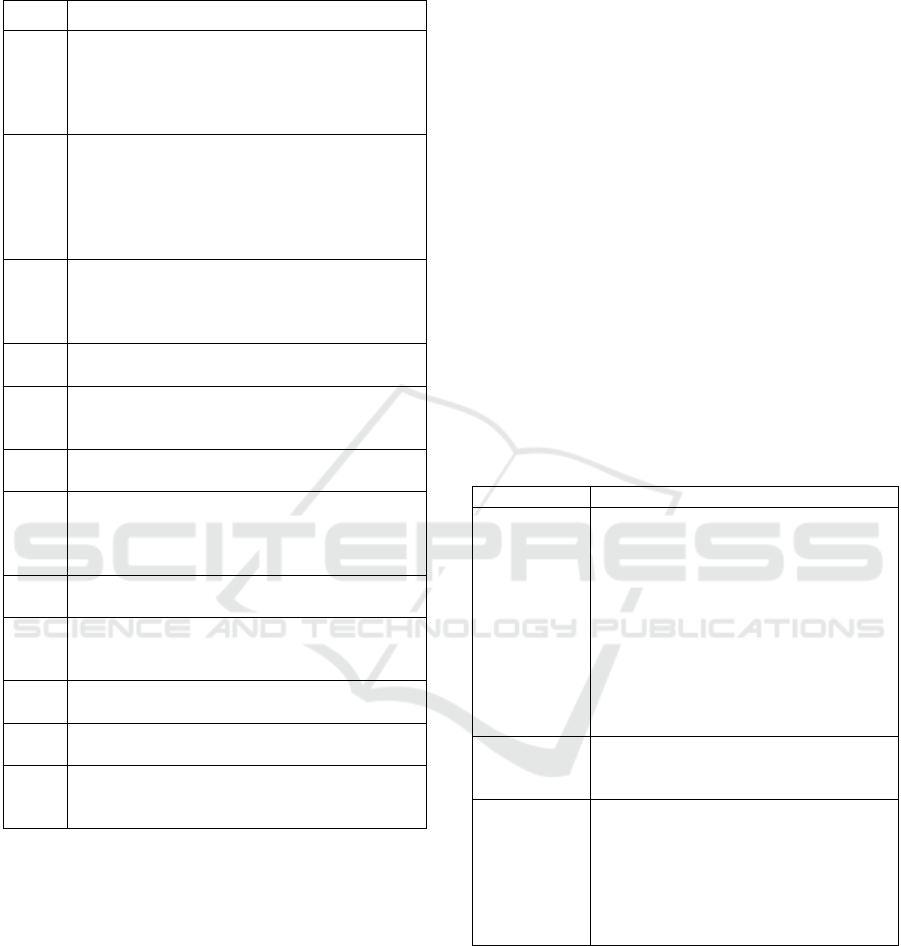

Table 2: Shneiderman’s golden rules parametrization (Mazumder & Das, 2014; Rozanski & Haake, 2017; Shneiderman et al., 2017).

| Type | Parameters |
|---|---|
| AO | Offer informative feedback - Providing a task’s completion rate and a progress bar for time consuming operations; Including a clear indication of the current section. |
| AO | Design dialog to yield closure - Providing a closure dialogue. |
| AO | Support internal locus of control - Providing a confirmation dialogue. |
| SeO | Support internal locus of control - Including a clear indication of the user’s position and the interface navigation hierarchy through breadcrumbs; Providing a global navigation menu. |

Table 3: Weinschenk and Barker’s cognitive principles parametrization (Nayebi et al., 2013; Nielsen, 2010; Rempel, 2015; Kvasnicová et al., 2015).

| Type | Parameters |
|---|---|
| AO | User Control - Ensuring action reversibility; Providing a task’s completion rate and a confirmation dialogue during the actions execution. |
| SeO | User Control - Including a clear indication of the current section; Providing a global navigation menu. |
| AO | Human Limitations - Ensuring interface response time lower than 10s; Providing stateful components capable of giving feedback to the user. |
| SeO | Human Limitations - Providing text content in a simple and direct manner; Avoiding flourished font families and redundant hyperlinks; Avoiding the use of unrelated images within the section’s context. |
| CO | Linguistic Clarity - Including hints that identify the data type required and tooltips/labels with the description of the component’s action purpose; Avoiding the use of foreign words or acronyms in the text content provided; Avoiding spelling errors. |
| CO | Aesthetic Integrity - Ensuring aesthetic similarity, proximity, and continuity across components from the same family or used to perform a similar action. |
| CO | Simplicity - Providing default in the multiple-choice fields. |
| SeO | Simplicity - Providing mechanisms to display in a gradual fashion the interface functionalities, from a basic to an advanced setting. |
| CO | Predictability - Ensuring the component’s consistency. |
| SeO | Predictability - Including a clear indication of the user’s position and the interface navigation hierarchy through breadcrumbs; Providing a global navigation menu. |
| SeO | Interpretation - Including mechanisms to predict the user’s intents. |
| SeO | Technical Clarity - Presenting trustworthy information according to the domain being modelled by the interface. |
| SeO | Flexibility - Providing mechanisms which allow the user to change the interface look and feel. |
| AO | Precision - Ensuring that results/feedback provided matches user’s expectations. |
| AO | Forgiveness - Providing mechanisms that allow for reversion/recovery from any action executed within the interface. |
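The "Human Limitations" parameter on response time (lower than 10s) is an example of an action-oriented check that can be expressed as a timed execution. The following is a minimal sketch under the assumption that an interface action can be triggered as a plain Python callable; `responds_within` is a hypothetical helper, not part of any tool described here.

```python
import time


def responds_within(action, limit_s: float = 10.0) -> bool:
    """Binary metric: the action completes in less than `limit_s` seconds.

    `action` is any zero-argument callable that triggers the interface
    action and returns once the interface has responded.
    """
    start = time.perf_counter()
    action()
    elapsed = time.perf_counter() - start
    return elapsed < limit_s
```

In a real evaluation the callable would wrap a UI driver call; a single measurement is also sensitive to load, so repeating the check and keeping the worst case may be a more conservative choice.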

Note that there were principles in the Shneiderman

and Weinschenk and Barker’s principle subset that

have not been described, since they are conceptually

similar to principles whose evaluation process had

already been discussed. Principles such as 1) “Strive

for consistency”, 2) “Seek universal usability”, 3)

“Prevent errors”, 4) “Permit easy reversal of actions”

and 5) “Reduce short-term memory load” share the

same evaluation process described for their

counterparts in the Jakob Nielsen and Rolf Molich’s

set (respectively “Consistency and standards”,

“Flexibility and efficiency of use”, “Error

prevention”, “User control and freedom” and

“Recognition rather than recall”).

4.2 Real Environment Applicability

The parametrization defined provides a rule set to assert the interface’s compliance level with the major guidelines depicted in literature. However, applying it in a real environment is necessary to identify the challenges behind its quantification in a real use case. For this purpose, two e-health applications were used: an academic prototype and an enterprise solution available in the market.

The analysis evaluated the unique principles

within the guideline subsets selected for each

interface.

4.2.1 Academic Prototype

The evaluation of the academic prototype considered

the 106 actions and 356 components available in the

15 screens of the entire interface, and covered the

Jakob Nielsen and Rolf Molich, Shneiderman and

Weinschenk and Barker guidelines. The end-results

are presented in Table 4.

Table 4: Academic prototype evaluation results.

| Subset | Evaluation |
|---|---|
| Jakob Nielsen | Visibility of system status – 69%; Match between system and the real world – 89%; User control and freedom – 73%; Consistency and standards – 90%; Error prevention – 35%; Recognition rather than recall – 76%; Flexibility and efficiency of use – 85%; Aesthetic and minimalist design – 84%; Help users recognize, diagnose, and recover from errors – 79%; Help and documentation – 50%. |
| Shneiderman | Offer informative feedback – 83%; Design dialog to yield closure – 74%; Support internal locus of control – 60%. |
| Weinschenk and Barker | User Control – 83%; Human Limitations – 63%; Linguistic Clarity – 100%; Aesthetic Integrity – 100%; Simplicity – 78%; Predictability – 92%; Interpretation – 0%; Technical Clarity – 83%; Flexibility – 100%; and Precision – 89%. |

According to the results obtained, a total of 1781 usability smells were detected. Among the principles evaluated, the lowest scores (<70%) were related to “Error Prevention” in the Jakob Nielsen subset, and to “Human Limitations” and “Interpretation” in the Weinschenk and Barker subset.

For the Jakob Nielsen subset, in terms of “Error Prevention”, the main bottlenecks identified are related to the lack of an autocomplete mechanism in any of the components that receive an input from the user, the lack of a mechanism that automatically saves the user’s work, the lack of error messages providing clear indications of the type of nonconformities detected in the user’s input, and the lack of mechanisms capable of disabling the action-related controls when the view requirements are not met.

For the Weinschenk and Barker subset, in terms of “Human Limitations”, the components’ inability to store their previous state should be emphasized, since storing it would make the user aware of his/her previous interactions without forcing the use of his/her memory. Regarding the “Interpretation” principle, the main bottleneck detected was related to the lack of a mechanism in the system capable of predicting the user’s intentions or input while the interaction process is taking place.

4.2.2 Enterprise Application

SmartAL⁵ is the enterprise application selected to be part of the parameter evaluation effort. The evaluation took into account 523 actions and 1918 components from a total of 103 screens of the entire interface. Regarding the principles covered, the Jakob Nielsen and Rolf Molich subset was chosen due to its technical depth, since it already provided a proper overview of the interface state. The end-results are presented in Table 5.

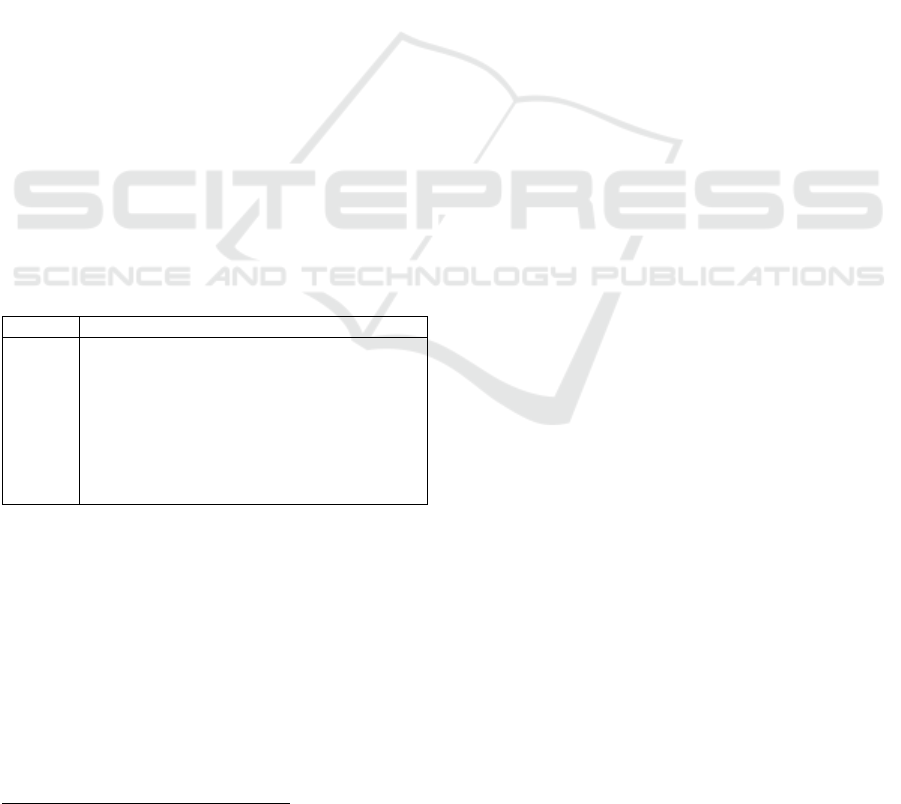

Table 5: SmartAL evaluation results.

| Subset | Evaluation |
|---|---|
| Jakob Nielsen | Visibility of system status – 52%; Match between system and the real world – 74%; User control and freedom – 59%; Error prevention – 23%; Recognition rather than recall – 73%; Flexibility and efficiency of use – 0%; Help users recognize, diagnose, and recover from errors – 30%; Help and documentation – 49%. |

The analysis performed identified a total of 2844 usability smells. Among the principles evaluated, the scores below the defined minimum quality threshold (70%) were related to “Visibility of system status”, “User control and freedom”, “Error prevention”, “Aesthetic and minimalist design”, “Help users recognize, diagnose, and recover from errors” and “Help and documentation” in the Jakob Nielsen subset.

⁵ https://www.alticelabs.com/site/smartal/
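The percentages reported for each principle and the 70% quality threshold suggest a simple aggregation over binary check results. The sketch below is one possible reading, not the authors' procedure: it assumes that a principle's compliance level is the share of its binary checks that passed and that each failed check counts as one usability smell. The function names and the sample data are hypothetical.

```python
from collections import defaultdict

THRESHOLD = 70.0  # minimum quality threshold, in percent


def compliance_by_principle(results):
    """Map each principle to the percentage of its binary checks that passed.

    `results` is a list of (principle, passed) pairs, one per check.
    """
    passed = defaultdict(int)
    total = defaultdict(int)
    for principle, ok in results:
        total[principle] += 1
        passed[principle] += int(ok)
    return {p: 100.0 * passed[p] / total[p] for p in total}


def below_threshold(scores, threshold=THRESHOLD):
    """Principles whose compliance level falls below the threshold."""
    return sorted(p for p, score in scores.items() if score < threshold)


def count_smells(results):
    """Number of failed checks (assumed here to be one smell each)."""
    return sum(1 for _, ok in results if not ok)


# Hypothetical check results, for illustration only.
sample = [
    ("Error prevention", True), ("Error prevention", False),
    ("Error prevention", False), ("Error prevention", False),
    ("Help and documentation", True), ("Help and documentation", True),
    ("Help and documentation", True), ("Help and documentation", False),
]
scores = compliance_by_principle(sample)
flagged = below_threshold(scores)
```

With this sample, "Error prevention" reaches 25% and is flagged, whereas "Help and documentation" reaches 75% and is not. Other aggregations (for example, weighting checks by severity) would fit the same interface.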

5 CONCLUSIONS

The parametrization proposed compiled the knowledge depicted in the literature to provide an objective approach to interpreting the usability guidelines to be applied in a heuristic evaluation process. Its main differentiating factor is related to the metrics definition process. Each guideline was explored thoroughly to identify ideal practices that enforce such principles, that is, practices that are typically applied to address usability bottlenecks within each guideline’s scope. By isolating the typical approaches used, it was possible to define binary metrics that make it possible to check the interface’s compliance level with the guidelines parametrized in this study, thus maximizing the accuracy and consistency of the evaluation results, and the overall accessibility of the heuristic methodology to users without expert usability know-how.

6 FUTURE WORK

The next iterations will focus on checking how the results obtained compare with the end-users’ feedback, information that is required to assert the metrics’ validity and accuracy in the detection of critical usability issues. Additionally, the automation of the current manual evaluation process is another topic to be tackled to ensure the process’s applicability and feasibility in an enterprise environment. Therefore, an effort will be made to identify which of the metrics defined are eligible to be automated, in order to create a tool capable of taking an interface, running a static analysis, identifying possible bottlenecks and suggesting optimizations based on the metrics defined.
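To give a rough idea of what such a static analysis might look like for one metric, the sketch below scans an HTML fragment for input fields that expose no hint to the user. It is a hypothetical illustration using only Python's standard `html.parser` module: the class and function names are invented, and mapping the "hints/tooltips" parameter to the `placeholder` and `title` attributes is an assumption that applies to web interfaces only.

```python
from html.parser import HTMLParser


class InputHintChecker(HTMLParser):
    """Collects <input> elements that expose neither a placeholder nor a title."""

    # Input types that do not receive typed data from the user.
    IGNORED_TYPES = {"hidden", "submit", "button", "reset"}

    def __init__(self):
        super().__init__()
        self.checked = 0        # number of inputs inspected
        self.missing_hint = []  # names of inputs without any hint

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        attributes = dict(attrs)
        if attributes.get("type") in self.IGNORED_TYPES:
            return
        self.checked += 1
        if not (attributes.get("placeholder") or attributes.get("title")):
            self.missing_hint.append(attributes.get("name") or "<unnamed>")


def find_inputs_without_hint(html: str):
    """Return (inputs inspected, names of inputs lacking a hint)."""
    checker = InputHintChecker()
    checker.feed(html)
    checker.close()
    return checker.checked, checker.missing_hint
```

Each entry in the returned list would correspond to one potential bottleneck, and a suggested optimization could simply be "add a hint describing the expected data type" for that component.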

ACKNOWLEDGEMENTS

This work was funded by FCT/MCTES through national funds and when applicable co-funded by EU funds under the project UIDB/50008/2020.

REFERENCES

Application, A. (2016). Mobile Application Usability

Research Case Study of a Video Recording and

Annotation Application.


A.V. Gundlapalli, M.-C. Jaulent, D. Z. (Ed.). (2018).

MEDINFO 2017: Precision Healthcare Through

Informatics: Proceedings of the 16th World Congress on

Medical and Health Informatics.

Bakaev, M., Khvorostov, V., & Laricheva, T. (2017).

Assessing Subjective Quality of Web Interaction with

Neural Network as Context of Use Model.

Communications in Computer and Information Science,

745, 185–195.

Bakaev, M., Mamysheva, T., & Gaedke, M. (2017). Current

trends in automating usability evaluation of websites:

Can you manage what you can’t measure? Proceedings -

2016 11th International Forum on Strategic Technology,

IFOST 2016, June, 510–514.

Budiu, R. (2014). Memory Recognition and Recall in User Interfaces. https://www.nngroup.com/articles/recognition-and-recall/

Cruz, Y. P., Collazos, C. A., & Granollers, T. (2015). The

Thin Red Line Between Usability and User Experiences.

Proceedings of the XVI International Conference on

Human Computer Interaction.

Curran, K. (2014). Recent Advances in Ambient Intelligence and Context-Aware Computing (1st ed.). IGI Global.

Duarte, P. A. S., Barreto, F. M., Aguilar, P. A. C., Boudy, J.,

Andrade, R. M. C., & Viana, W. (2018). AAL Platforms

challenges in IoT era: A tertiary study. 2018 13th System

of Systems Engineering Conference, SoSE 2018, 106–

113.

European Commission. (2010). A Digital Agenda for

Europe. Communication, 5(245 final/2), 42.

Eurostat. (2019). Increase in the share of the population aged 65 years or over between 2008 and 2018. https://ec.europa.eu/eurostat/statistics-explained/index.php/Population_structure_and_ageing

Federal Aviation Administration. (n.d.). Heuristic Evaluation. Retrieved May 2, 2016, from http://www.hf.faa.gov/workbenchtools/default.aspx?rPage=Tooldetails&subCatId=13&toolID=78

Galitz, W. O. (2002). The Essential Guide to User Interface

Design: An Introduction to GUI Design.

Greenhalgh, T., Wherton, J., Sugarhood, P., Hinder, S.,

Procter, R., & Stones, R. (2013). What matters to older

people with assisted living needs? A phenomenological

analysis of the use and non-use of telehealth and telecare.

Social Science and Medicine, 93, 86–94.

Hallewell Haslwanter, J. D., Fitzpatrick, G., & Miesenberger,

K. (2018). Key factors in the engineering process for

systems for aging in place contributing to low usability

and success. Journal of Enabling Technologies, 12(4),

186–196.

Hallewell Haslwanter, J. D., Neureiter, K., & Garschall, M.

(2018). User-centered design in AAL: Usage, knowledge

of and perceived suitability of methods. Universal Access

in the Information Society, 0(0), 0.

Harley, A. (2018). Visibility of System Status (Usability Heuristic #1). https://www.nngroup.com/articles/visibility-system-status/

Holthe, T., Halvorsrud, L., Karterud, D., Hoel, K. A., &

Lund, A. (2018). Usability and acceptability of

technology for community-dwelling older adults with

mild cognitive impairment and dementia: A systematic

literature review. Clinical Interventions in Aging, 13,

863–886.

Ismail, A., & Shehab, A. (2019). Security in Smart Cities:

Models, Applications, and Challenges (Issue November).

ISO 9241-11. (1998). Ergonomic requirements for office work with visual display terminals (VDTs) -- Part 11: Guidance on usability. http://www.iso.org/iso/catalogue_detail.htm?csnumber=16883

Ivory, M. Y., & Hearst, M. A. (2001). The state of the art in

automating usability evaluation of user interfaces. ACM

Computing Surveys, 33(4), 470–516.

Kaley, A. (2018). Match Between the System and the Real World: The 2nd Usability Heuristic Explained. https://www.nngroup.com/articles/match-system-real-world/

Kvasnicová, T., Kremeňová, I., & Fabuš, J. (2015). The use

of heuristic method to assess the usability of university

website. Congress Proceedings EUNIS.

Laubheimer, P. (2015). Preventing User Errors: Avoiding Unconscious Slips. https://www.nngroup.com/articles/slips/

Limaylla Lunarejo, M. I., Santos Neto, P. de A. dos, Avelino,

G., & Britto, R. de S. (2020). Automatic Detection of

Usability Smells in Web Applications Running in Mobile

Devices. XVI Brazilian Symposium on Information

Systems.

Macis, S., Loi, D., Ulgheri, A., Pani, D., Solinas, G., La

Manna, S., Cestone, V., Guerri, D., & Raffo, L. (2019).

Design and usability assessment of a multi-device SOA-

based telecare framework for the elderly. IEEE Journal

of Biomedical and Health Informatics, PP(c), 1–1.

Martins, A. I., & Cerqueira, M. (2018). Ambient Assisted

Living – A Multi-method Data Collection Approach to

Evaluate the Usability of AAL Solutions. 65–74.

Martins, A. I., Rosa, A. F., Queirós, A., Silva, A., & Rocha,

N. P. (2015a). European Portuguese Validation of the

System Usability Scale (SUS). Procedia Computer

Science, 67(Dsai), 293–300.

Martins, A. I., Rosa, A. F., Queirós, A., Silva, A., & Rocha,

N. P. (2015b). European Portuguese Validation of the

System Usability Scale (SUS). Proceedings of the 6th

International Conference on Software Development and

Technologies for Enhancing Accessibility and Fighting

Info-Exclusion, 293–300.

Mazumder, F. K., & Das, U. K. (2014). Usability Guidelines

for usable user interface. International Journal of

Research in Engineering and Technology, 3(9), 2319–

2322.

Mkpa, A., Chin, J., & Winckles, A. (2019). Holistic

Blockchain Approach to Foster Trust, Privacy and

Security in IoT based Ambient Assisted Living

Environment. January.

Molich, R., & Nielsen, J. (n.d.). Heuristic Evaluations and

Expert Reviews. Retrieved May 2, 2016, from

http://www.usability.gov/how-to-and-tools/methods/heuristic-evaluation.html

Molich, R., & Nielsen, J. (1990, March). Improving a

human-computer dialogue. Communications of the ACM, 33(3), 338–348.

Moran, K. (2015). The Characteristics of Minimalism in Web

Design. https://www.nngroup.com/articles/characteristics-minimalism/

Nayebi, F., Desharnais, J. M., & Abran, A. (2013). An expert-

based framework for evaluating iOS application

usability. Proceedings - Joint Conference of the 23rd

International Workshop on Software Measurement and

the 8th International Conference on Software Process

and Product Measurement, IWSM-MENSURA 2013,

January 2014, 147–155.

Nielsen, J. (1999). Do Interface Standards Stifle Design

Creativity? https://www.nngroup.com/articles/do-interface-standards-stifle-design-creativity/

Nielsen, J. (2001). Error Message Guidelines.

https://www.nngroup.com/articles/error-message-guidelines/

Nielsen, J. (2010). Website Response Times.

https://www.nngroup.com/articles/website-response-times/

Pădure, M., & Pribeanu, C. (2019). Exploring the

differences between five accessibility evaluation tools.

87–90.

Paternò, F., Schiavone, A. G., & Conte, A. (2017).

Customizable automatic detection of bad usability smells

in mobile accessed web applications. Proceedings of the

19th International Conference on Human-Computer

Interaction with Mobile Devices and Services,

MobileHCI 2017.

Peek, S. T. M., Wouters, E. J. M., van Hoof, J., Luijkx, K. G.,

Boeije, H. R., & Vrijhoef, H. J. M. (2014). Factors

influencing acceptance of technology for aging in place:

A systematic review. International Journal of Medical

Informatics, 83(4), 235–248.

Gerhardt-Powals, J. (1996). Cognitive engineering principles for

enhancing human‐computer performance. International

Journal of Human-Computer Interaction, 8(2), 189–211.

Quade, M. (2015). Automation in model-based usability

evaluation of adaptive user interfaces by simulating user

interaction.

Quiñones, D., Rusu, C., & Rusu, V. (2018). A methodology

to develop usability/user experience heuristics.

Computer Standards and Interfaces, 59, 109–129.

Rempel, G. (2015). Defining standards for web page

performance in business applications. ICPE 2015 -

Proceedings of the 6th ACM/SPEC International

Conference on Performance Engineering, 245–252.

Rozanski, E. P., & Haake, A. R. (2017). Human–computer

interaction. In Systems, Controls, Embedded Systems,

Energy, and Machines.

Saeed, S., Bajwa, I. S., & Mahmood, Z. (2015). Human

factors in software development and design. Human

Factors in Software Development and Design, i, 1–354.

Science, I. (2016). Post-Deployment Usability

Opportunities: Gaining User Insight From UX-Related

Support Cases [Final thesis]. Institutionen för

datavetenskap.

Shneiderman, B. (2010). The Eight Golden Rules of Interface

Design. https://www.cs.umd.edu/users/ben/goldenrules.html

Shneiderman, B., Plaisant, C., Cohen, M., & Jacobs, S.

(2017). Designing the User Interface: Strategies for

Effective Human-Computer Interaction. Pearson

Education.

Todi, K., Bailly, G., Leiva, L., & Oulasvirta, A. (2021).

Adapting User Interfaces with Model-based

Reinforcement Learning. 1–13.

Type, I., & Chapter, B. (2021). Evaluation of Software

Usability. International Encyclopedia of Ergonomics

and Human Factors - 3 Volume Set, 1978–1981.

Van Den Broek, G., Cavallo, F., & Wehrmann, C. (2010).

AALIANCE Ambient Assisted Living Roadmap. IOS

Press.

Vimarlund, V., & Wass, S. (2014). Big data, smart homes and

ambient assisted living. Yearbook of Medical

Informatics, 9(1), 143–149.

ICEIS 2022 - 24th International Conference on Enterprise Information Systems
