An Unified Testing Process Framework for Small and Medium Size Tech Enterprise with Incorporation of CMMI-SVC to Improve Maturity of Software Testing Process

Md. Tarek Hasan¹, Somania Nur Mahal¹, Nabil Mohammad Abu Bakar¹, Md. Mehedee Hasan¹, Noushin Islam¹, Farzana Sadia² and Mahady Hasan¹

¹ Department of Computer Science and Engineering, Independent University Bangladesh, Dhaka, Bangladesh

² Department of Software Engineering, Daffodil International University, Dhaka, Bangladesh

Keywords: Software Testing, Testing Services, CMMI-SVC, Test Maturity.

Abstract: Software testing services provide a quality assurance approach for evaluating and improving the quality of software. Various obstacles can arise in the context of software testing services, however, and the quality of testing services often falls short of expectations. The main motive of this paper is to improve the practice areas of the software testing process so that small software companies can raise their maturity levels. We conducted surveys and collected data from 11 small software companies in Bangladesh to assess their current testing services. The survey results revealed gaps in their CMMI-SVC practice areas and pinpointed practice areas with potential for improvement. This paper presents an improved framework that shows how to tailor key practice areas of CMMI-SVC to the testing process. The proposed framework is based on a unified testing model. By following the proposed steps, any software company can enhance its maturity level.

1 INTRODUCTION

Software testing plays a significant part in ensuring the effective performance of software applications. At the same time, it can become costly at a later stage of development (Wen-Hong, Liu & Xin, 2012).

According to studies, very small software businesses and start-ups struggle with risk management, because low budgets and limited manpower leave little time and money for risk analysis (Sharma & Dadhich, 2020). To minimize risk, testing teams should employ competent personnel to test with internal data and make the test data available for the client's use (Silva, Soares, Peres, de Azevedo, Pinto, & de Lemos Meira, 2014).

CMMI-SVC provides a view of the impact of implementing a service-delivery process. A standard process model of this kind can facilitate a common understanding of advanced technology (Kusakabe, 2015). “The CenPRA testing cycle” can serve as a basis for the technical aspects of testing, so that organizations can resort to the CMMI model to enhance their testing process (Bueno, Crespo, & Jino, 2006).

A variety of issues can arise in the field of software testing services. It is therefore important for both providers and customers to assess the quality and maturity of test services and thus improve them. CMMI for Services can be used as guidance for organizations, with effective process areas (PAs) (Raksawat & Charoenporn, 2021). Our purpose is to connect CMMI-SVC process areas with testing best practices in order to achieve at least maturity level 2.

In our country, many organizations supplement their in-house software testing activities with firms that are devoted to providing software testing services (Raksawat & Charoenporn, 2021). If needed, they can hand over their product testing to such firms and concentrate on software development activities with their own experts. This arrangement may make software quality more predictable, help meet deadlines and free up time to concentrate on development (Raksawat & Charoenporn, 2021).

Today, testing is among the most challenging activities carried out by organizations, yet they lack knowledge about testing services. According to our survey, almost 52.62% of companies follow the quality assurance approach and 43.56% follow validation and verification. Only 34.65% of the companies partially maintain the quality and process performance objectives for the work.

Hasan, M., Mahal, S., Bakar, N., Hasan, M., Islam, N., Sadia, F. and Hasan, M. An Unified Testing Process Framework for Small and Medium Size Tech Enterprise with Incorporation of CMMI-SVC to Improve Maturity of Software Testing Process. DOI: 10.5220/0011037400003176. In Proceedings of the 17th International Conference on Evaluation of Novel Approaches to Software Engineering (ENASE 2022), pages 327-334. ISBN: 978-989-758-568-5; ISSN: 2184-4895. Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

To understand the challenges of testing services, we conducted an online survey with 11 small software firms (SMEs). The main roles surveyed were Project Manager, Test Manager, CTO, System Analyst, Tester, Software Engineer and Developer. We prepared a questionnaire for them whose questions were based on software testing best practice areas mapped to CMMI-SVC process areas.

The key questions of this research are given below.

Q1. How do organizations maintain their quality assurance activities?

Q2. What is their approach to delivering services in accordance with service agreements?

The prime objective of this paper is to identify the major testing service challenges of current practice and to provide guidelines that help firms enhance their maturity level. To that end, we reviewed other research papers and then looked for gaps by surveying 11 small software firms. We found that most of the companies do not follow the testing process areas properly: some of them partially maintain the quality and process performance objectives for the work, while the rest are unable to follow them because they are start-ups.

To address this problem, we propose a framework that takes budget limitations, resources and the testing timeline into account and eventually raises the maturity level to 2 and above.

2 RESEARCH BACKGROUND

Software testing plays a vital role in delivering a complete bug-free product. Various studies have been conducted all over the world to identify different practices and issues related to CMMI-SVC.

The major goal of this paper is to improve the practice areas of the software testing process so that small and medium software organizations can achieve higher degrees of maturity. To that end, we concentrated on research from the last ten years. Those studies aimed to improve services, performance and customer satisfaction, and they suggest following certain terms to improve processes (Kundu, Manohar, & Bairi, 2011).

Several test process improvement frameworks are available, but they are too vast and complex for smaller firms to use. Small and medium software companies nevertheless need to follow a minimum set of test practices.

One study proposes a minimal test practice framework that was originally developed in a case study at a small and emerging company in Sweden. In creating the framework, its authors were also concerned with avoiding the negative effects of differing perspectives and process distortion by including the entire organization in the test practices and their improvement. The framework was evaluated through actual use within the same company, and observations were recorded during the first year of use. The authors suggest that a potential extension of the study would be to implement the framework in other organizations in comparable situations, and that it would be interesting to see how the structure is modified to accommodate new scenarios (Daniel Karlstr, Per Runeson & Sara Nord, 2010).

Some researchers have developed their own testing processes, such as CenPRA, SPI and AgileQA-RM, from the process improvement perspective of the CMMI model (Silva, Soares, Peres, de Azevedo, Pinto, & de Lemos Meira, 2014), (Chunli & Rongbin, 2016), (Bueno, Crespo, & Jino, 2006). Software testing needs to identify the best-used models and integrate them into process activities for improvement (Wen-Hong, Liu, & Xin, 2012), while considering risk factors in order to reduce risk and manage it efficiently (Sharma & Dadhich, 2020). Other work has shown that different test phases can help detect defects in the process (Garousi, Arkan, Urul, Karapıçak, & Felderer, 2020), has focused on existing problems, and has discussed how CMMI helps improve quality control (Chunli & Rongbin, 2016). Process areas have also been analysed through their related components, sharing a common understanding and using new technology to compare maturity models (Hashmi, Lane, Karastoyanova, & Richardson, 2010). In contrast, we discuss the existing processes of companies and identify which practice areas of the software testing process small and medium software organizations should address in order to achieve higher degrees of maturity, or at least follow a minimum process. The goal of our survey is to suggest a unified testing model for small and medium software companies to improve their processes.


3 RESEARCH DESIGN AND METHODOLOGY

The primary aim of this study is to improve the practice areas of the software testing service so that small software firms will be able to reach maturity level 3 and above.

As we are concerned with software testing services, we follow key questions Q1 and Q2 stated in the introduction. Using these research questions, we analyse the current procedures followed by small software organizations.

Previous research focused on improving services, performance and customer satisfaction and suggested following certain terms to improve processes (Kundu, Manohar & Bairi, 2011). Researchers also analysed process areas through their related components and facilitated a common understanding by using new technology (Hashmi, Lane, Karastoyanova, & Richardson, 2010).

Overall, the related work focuses on improving performance in terms of CMMI-SVC. We, in contrast, discuss the existing processes that small software firms follow and provide suggestions to improve their services, performance and customer satisfaction. In line with our objectives, which are to identify the major testing process challenges of current practice and to evaluate the maturity of small software firms in Bangladesh, we first reviewed other research papers to get a clear picture of the topic. We then looked for gaps by surveying 11 tech SMEs. We prepared a survey questionnaire and sent it to the lead tester of each SME. The 11 tech SMEs are classified by the following metrics: age, size, project based or service based, number of employees and location (city). Analysing the data shows which processes or methods the companies currently follow and where the gaps in their activities lie. Finally, we propose a framework that addresses these shortcomings, takes budget limitations, resources and the testing timeline into account, and eventually raises the maturity level to 2 and above.


To evaluate the existing testing practices, we collected data through both online and offline surveys. To evaluate the data, we used two methods: factor analysis and reliability analysis.

Factor analysis is used to assess observable variables such as performance on specific practice areas. It is also useful for summarizing a large number of observations into a smaller number of factors. At the early stage of the survey, we set the following 3-point scale for each question: 1-No (Not followed), 2-Partial (Partially followed), 3-Yes (Followed). We then calculated the average value of each practice area to assess the maturity of the organizations and to understand the gaps in the process areas (PAs) they follow.
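The averaging step described above can be sketched as follows. This is only a minimal illustration: it assumes each response is stored as a mapping from a question ID to a score on the 1-3 scale and that a second mapping assigns each question to a practice area. The question IDs, the mapping and the sample responses are hypothetical and are not the survey data.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical mapping of question IDs to CMMI-SVC practice areas.
QUESTION_AREA = {"Q1": "PQA", "Q2": "PQA", "Q3": "PLAN"}

# Hypothetical responses on the 3-point scale: 1 = No, 2 = Partial, 3 = Yes.
responses = [
    {"Q1": 3, "Q2": 2, "Q3": 1},
    {"Q1": 3, "Q2": 3, "Q3": 2},
]


def average_by_area(responses, question_area):
    """Return the mean score per practice area over all answers."""
    scores = defaultdict(list)
    for response in responses:
        for question, score in response.items():
            scores[question_area[question]].append(score)
    return {area: mean(values) for area, values in scores.items()}


area_averages = average_by_area(responses, QUESTION_AREA)
```

A low average for a practice area then points to a gap in that area.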

After collecting the data, we performed reliability analysis, which concerns the consistency of measurement. This method can be used to evaluate the survey questionnaire. The mean, median and mode are three ways of calculating the average. We use the scale for each question to assess the survey responses, in which each respondent reports their activities, that is, whether or not they conduct testing services.
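As a small illustration of the three averages mentioned above, the sketch below applies Python's standard `statistics` module to the answers given to a single question. The answer list is hypothetical and only serves to show how the three measures can differ on the same data.

```python
from statistics import mean, median, mode

# Hypothetical answers to one question: 1 = No, 2 = Partial, 3 = Yes.
answers = [3, 3, 2, 1, 3, 2]

summary = {
    "mean": mean(answers),      # arithmetic average of all answers
    "median": median(answers),  # middle value of the sorted answers
    "mode": mode(answers),      # most frequently given answer
}
```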

4 FINDINGS

4.1 Trend Followed by Companies

Eleven tech SMEs were chosen for our research and classified by the following metrics: age, size, project based or service based, number of employees and location. We received 24 responses from respondents in different roles at the 11 companies to a set of 23 questions covering different practice areas of CMMI-SVC. We considered 11 practice areas for our research: PLAN, PQA, IRP, MPM, WMC, RDM, PR, VV, II, ROM and EST.

To learn the trends among small software companies, we conducted a survey and calculated the average value of each question, which is based on the practice areas of CMMI-SVC. Table 1 shows the percentages for each process area, which reflect the trends among small software companies.

Table 1 gives the average value of each process area that a small company usually follows for testing services. Almost 52.62% of companies follow the quality assurance approach, and 34.65% of the companies partially maintain the quality and process performance objectives for the work. From this we can understand the trends and the reasons behind the fluctuating maturity levels. The percentage of LI decreases as the maturity level goes up, whereas PI shows an upward trend with the level. NI has a significant value for levels 0 and 2.


Table 1: Percentage of following process areas.

| Name of process area | Followed | Partially followed | Not followed |
|---|---|---|---|
| PQA | 52.62% | 22.88% | 27.98% |
| WMC | 76.9% | 7.7% | 15.4% |
| IRP | 46.2% | 7.7% | 46.2% |
| MPM | 34.65% | 34.65% | 30.75% |
| PR | 61.5% | 38.5% | 0% |
| VV | 43.56% | 0% | 33.3% |
| RDM | 69.2% | 0% | 30.8% |
| PLAN | 36.4% | 27.3% | 36.4% |
| II | 30.8% | 34.65% | 38.42% |
| ROM | 53.8% | 7.7% | 38.5% |
| EST | 30.8% | 30.8% | 30.7% |

For companies that are not following any testing process, our proposed solution provides guidance on how to follow and improve testing processes.

4.2 Reliability Analysis

Reliability analysis refers to the properties of measurement scales and the items that compose them (Gemino, Horner Reich, & Serrador, 2021). Using reliability analysis, we can verify whether our questionnaire is related to the practice areas. Our survey questionnaire comprises 23 questions based on 11 practice areas of CMMI-SVC. To measure the reliability of the questionnaire, we used Cronbach's alpha, a coefficient of reliability. Cronbach's alpha is a model of internal consistency based on the average inter-item correlation.

In Table 2, Cronbach's alpha is based on the average inter-item correlation, and the number of items refers to the 23 survey questions. As a general rule, the acceptable range for the reliability coefficient α is 0.6-1.0 (Gemino, Horner Reich, & Serrador, 2021). We obtained an alpha of 0.943, which indicates a highly satisfactory level of reliability for the survey questionnaire.

Table 2: Reliability statistics.

| Cronbach's Alpha | Cronbach's Alpha Based on Standardized Items | No. of items |
|---|---|---|
| 0.943 | 0.940 | 23 |
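Cronbach's alpha can be computed directly from raw item scores as α = k/(k−1) · (1 − Σ sᵢ² / s_T²), where k is the number of items, sᵢ² is the variance of item i and s_T² is the variance of the respondents' total scores. The following is a minimal sketch of that standard formula, not the statistics package output reported in the paper; the function name and the sample scores are hypothetical.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents' item scores.

    rows[r][i] is the score respondent r gave to item i.
    Sample variances are used for both items and totals.
    """
    k = len(rows[0])          # number of items
    items = list(zip(*rows))  # transpose: one tuple of scores per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical scores of four respondents on three items (1-3 scale).
sample_scores = [[3, 2, 3], [2, 2, 1], [1, 1, 1], [3, 3, 3]]
alpha = cronbach_alpha(sample_scores)
```

Values close to 1 indicate that the items vary together, which is what a high internal consistency means.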

4.3 SCAMPI

SCAMPI is a standard method for evaluating each process area of CMMI (Rahmani, Sami, & Khalili, 2016). It consists of a series of activities including interviews, document checks, and analysis of the results of questionnaires and surveys. The weighting is shown in Table 3.

Table 3: SCAMPI weighting.

| Abbreviation | Criteria | Weight |
|---|---|---|
| NI | Not Implemented | 0 |
| PI | Partially Implemented | 1 |
| LI | Largely Implemented | 2 |

4.4 Questionnaire Result

The survey questionnaire was distributed to 11 tech SMEs. The key roles in this survey were Project Manager, CTO, Test Manager, System Analyst, Tester, Software Engineer and Developer. The questionnaire responses were assessed using the SCAMPI method. We scored each question and calculated the percentage of the SCAMPI value in order to assess the maturity level. Because most of the companies are new, we focused on maturity levels 1, 2 and 3.
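One way to turn the SCAMPI weights of Table 3 into a percentage per company is sketched below. The paper does not spell out its exact formula, so this rests on an assumption: every question counts equally and the percentage is the achieved weight divided by the maximum attainable weight (2 per question). The function name and the sample ratings are hypothetical.

```python
# SCAMPI weights from Table 3.
WEIGHTS = {"NI": 0, "PI": 1, "LI": 2}


def scampi_percentage(ratings):
    """Percentage of the maximum attainable SCAMPI score.

    Assumption: every question counts equally and the maximum per
    question is the LI weight (2).
    """
    achieved = sum(WEIGHTS[rating] for rating in ratings)
    maximum = WEIGHTS["LI"] * len(ratings)
    return 100 * achieved / maximum


# Hypothetical ratings of one company for the questions of one maturity level.
company_ratings = ["LI", "PI", "NI", "LI"]
percentage = scampi_percentage(company_ratings)
```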

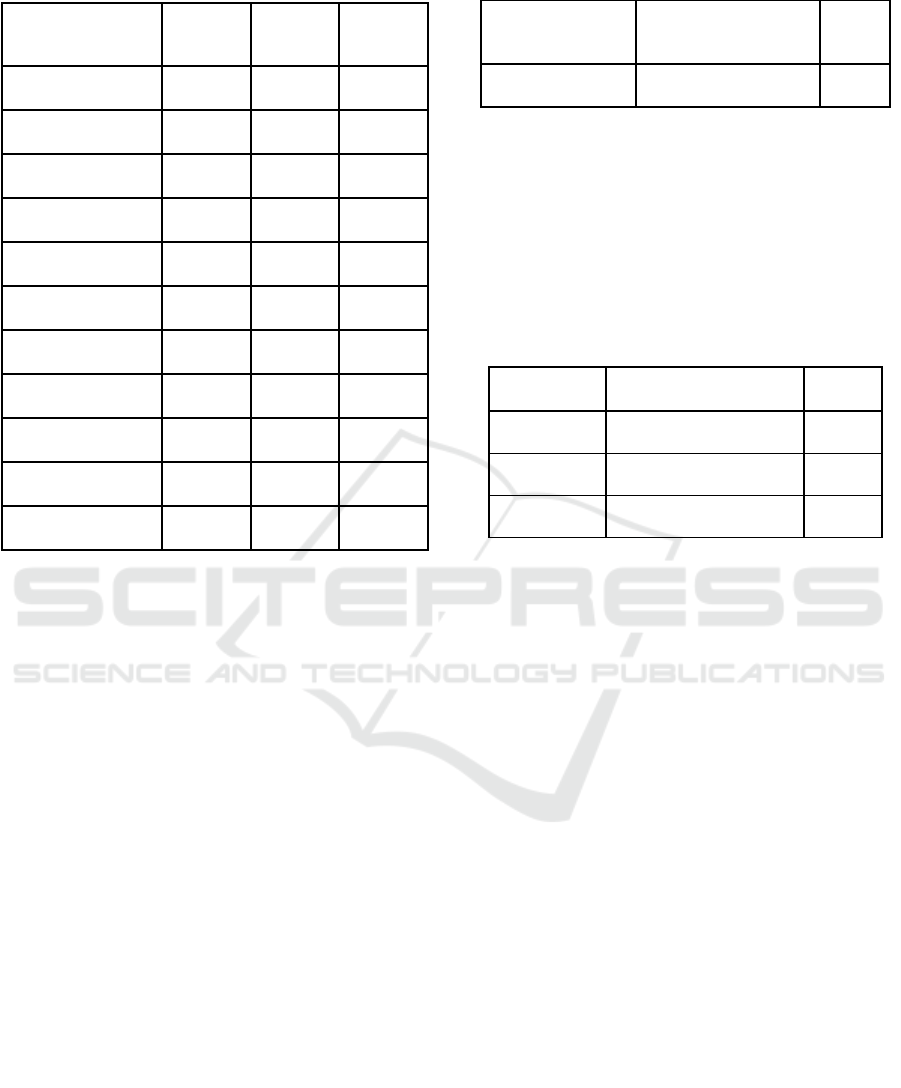

Figure 1 shows the SCAMPI score based on the practice areas of level 1. Software firms ‘E’, ‘F’, ‘G’, ‘K’ and ‘A’ achieve 65%-100% of level 1, while ‘D’, ‘I’, ‘H’, ‘J’ and ‘C’ achieve 30%-60%. Companies ‘C’ and ‘D’ have lower scores than the other companies and need to improve their practice areas. Figure 1 also shows the trends in the practice areas followed by small software firms.

Figure 1: Score and percentage of SCAMPI value based on CMMI-SVC level 1.

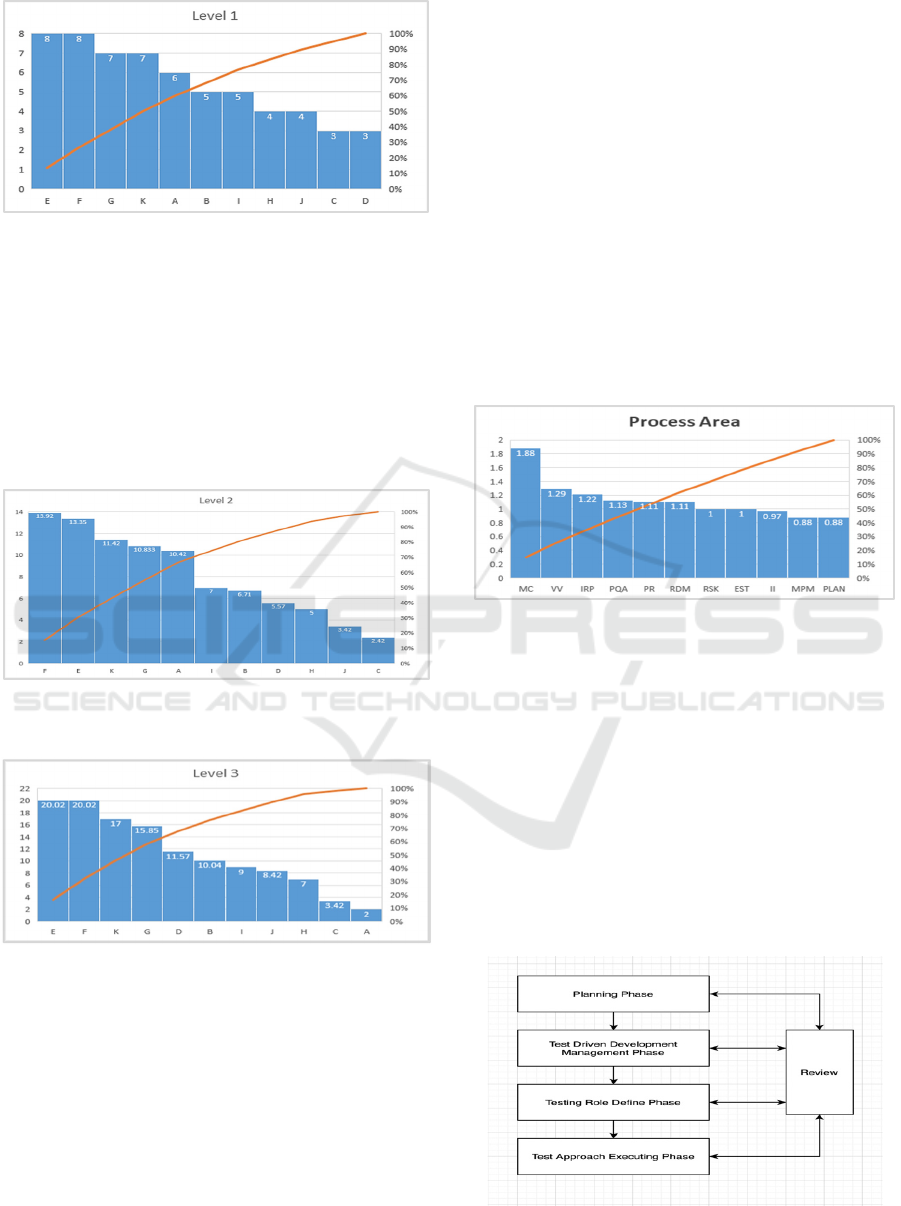

Figure 2 shows the score of each company based on the practice areas of CMMI level 2. Companies ‘E’, ‘F’, ‘G’ and ‘K’ achieve approximately 70%-95% of level 2, while ‘D’, ‘B’, ‘I’ and ‘J’ achieve roughly 30%-45%. Because of their low SCAMPI scores, companies ‘D’, ‘H’, ‘C’ and ‘J’ need to pay more attention to the practice areas of CMMI level 2.

Figure 2: Score and percentage of SCAMPI value based on CMMI-SVC level 2.

Figure 3: Score and percentage of SCAMPI value based on CMMI-SVC level 3.

The SCAMPI scores for level 3 are analysed in Figure 3. Companies ‘E’, ‘F’, ‘G’ and ‘K’ achieve only 65%-90% of maturity level 3, and ‘A’, ‘C’, ‘H’, ‘J’, ‘I’, ‘B’ and ‘G’ achieve only 10%-60% of level 3, because they are unable to follow the practice areas of CMMI-SVC level 3. They therefore need to pay more attention to enhancing their testing services.

4.5 Questionnaire Result

In this section, we visualize the overall trend among the tech SMEs to understand which process areas they need to improve. To do so, we calculate the average value of the questions for each process area.

Figure 4 shows the trend across the companies analysed. Our survey questionnaire was based on 11 practice areas of CMMI-SVC. From Figure 4, we can see that most of the small software firms follow WMC for software testing. However, the average values of PLAN, MPM and II are below 50% (below an average value of 1), which indicates weaknesses in those practice areas. Most SMEs thus face difficulties in providing testing services because of a lack of planning in their projects.

Figure 4: Visualization of overall trends of each process area.

5 PROPOSED SOLUTION

After analysing the data, we propose a framework that considers budget limitations, resources and the testing timeline and eventually raises the maturity level to 2 and above. The framework for small and medium software companies is designed to improve the practice areas of CMMI-SVC for standardized software testing services along with software testing best practices.

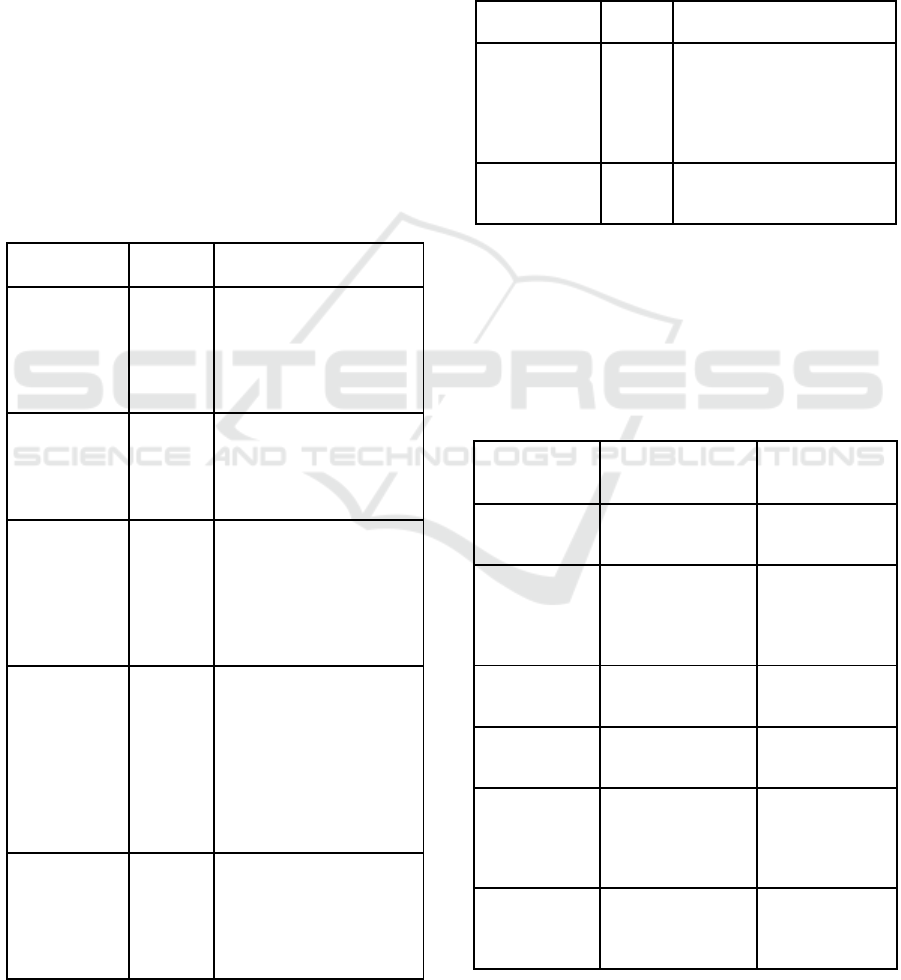

Figure 5: Proposed framework.


The proposed framework divides the testing process into phases that are interrelated. Our approach is to review each phase, which creates a loop and leads to a longer-lasting solution. The composition of the proposed framework is shown in Figure 5. The phases are described below.

5.1 Planning Phase

In the planning phase we define the objectives, that is, what we are going to test, on a priority basis. We also decide who is going to test what and how, following standards and using tools. Each planned objective must satisfy the key attributes listed in Table 4.

Table 4: Prioritization of List of Testing Objectives of Start-ups.

| Objective | Priority | Key attributes |
|---|---|---|
| Plan the test policy and prepare policy document | High | a. Test standards; b. Testing tools; c. Testing improvements; d. Test budget estimation; e. Risk analysis |
| Prepare Quality Management Plan | Medium | a. Quality objectives; b. Ensure key deliverables; c. Roles & responsibilities; d. Testing tools |
| Define test strategies and align QA with product business objectives | Medium | a. Test scope; b. Setting industry standards; c. Time constraints; d. Budget constraints |
| Prepare test plan | High | a. Test items; b. Setting pass & fail criteria; c. Test approaches; d. Schedule; e. Risks; f. Responsibilities; g. Deliverables |
| Prepare test cases | High | a. Feature test; b. Description; c. Test steps; d. Test data; e. Result data |
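The key attributes listed for the "prepare test cases" objective in Table 4 can be captured in a simple record. The sketch below is one possible representation, not a format prescribed by the framework: the class name, the field names and the split of "result data" into an expected and an actual result are assumptions made for illustration, and the login example is hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class CaseRecord:
    """One test case with the key attributes listed in Table 4."""
    feature: str             # a. feature under test
    description: str         # b. what the test checks
    steps: List[str]         # c. ordered test steps
    test_data: Dict[str, str]  # d. input data
    expected_result: str     # e. result data expected from the run
    actual_result: str = ""  # filled in after execution (assumed field)

    def passed(self) -> bool:
        """A case passes when the observed result matches the expectation."""
        return self.actual_result == self.expected_result


# Hypothetical example of a filled-in test case.
login_case = CaseRecord(
    feature="Login",
    description="Valid credentials open the dashboard",
    steps=["Open login page", "Enter credentials", "Submit"],
    test_data={"user": "demo", "password": "secret"},
    expected_result="dashboard shown",
)
login_case.actual_result = "dashboard shown"
```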

5.2 Test Driven Development Management Phase

This phase is based on two main objectives: test-driven development and pair programming. Because this is the development phase, code should be tested and reviewed as much as possible to minimize rework and improve the process, which saves both time and money.

Table 5: Phase of test-driven development management.

| Objective | Priority | Key attributes |
|---|---|---|
| Employ Test-Driven Development | High | a. High quality, optimization of development costs; b. Simplification of code; c. Executable documentation |
| Employ Pair Programming | Low | a. High quality code; b. Knowledge sharing |
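The test-driven development objective can be illustrated with a short test-first cycle. In this sketch the tests in `ApplyDiscountTest` would be written first and fail until `apply_discount` is implemented; the tests then double as executable documentation of the intended behaviour. The function, its rules and the test values are hypothetical and chosen only to keep the example small.

```python
import unittest


def apply_discount(price, percent):
    """Return price reduced by percent; written after the tests below."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price * (100 - percent) / 100


class ApplyDiscountTest(unittest.TestCase):
    """In TDD these tests are written first and initially fail."""

    def test_reduces_price(self):
        self.assertEqual(apply_discount(200, 25), 150)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(200, 120)


# Run the suite programmatically so the outcome can be inspected.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```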

5.3 Testing Role Define Phase

In the role definition phase, jobs should be distributed as precisely as possible. When resources are limited, responsibilities are shared so that a single role can look after multiple responsibilities.

Table 6: Testing role define phase.

| Standard role | Core responsibilities | Start-ups shared resources |
|---|---|---|
| Software test engineer | Test overall system | Tester, Developer |
| Test analyst | Identify test conditions and features to develop test scenarios | Test lead, Tester, Developer |
| Test automation engineer | Develop scripts to run automated test | Tester, Developer |
| Software developer in test | Develop tool to support testing | Tester, Developer |
| Test architect | Design complex test infrastructure, select tools for implementation | Lead tester, Tester |
| Test architect | Prepare test strategy, control testing process and team members | Lead tester, Project manager |
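To see how much each person in a start-up ends up covering, the role sharing of Table 6 can be inverted. The sketch below does this for a subset of the rows; the dictionary layout and the function name are assumptions made for illustration.

```python
from collections import defaultdict

# Standard roles and the start-up staff who share them (subset of Table 6).
SHARED_ROLES = {
    "Software test engineer": ["Tester", "Developer"],
    "Test analyst": ["Test lead", "Tester", "Developer"],
    "Test automation engineer": ["Tester", "Developer"],
    "Software developer in test": ["Tester", "Developer"],
}


def roles_per_person(shared_roles):
    """Invert the mapping: which standard roles does each person cover?"""
    covered = defaultdict(list)
    for role, people in shared_roles.items():
        for person in people:
            covered[person].append(role)
    return dict(covered)


workload = roles_per_person(SHARED_ROLES)
```

A person who appears under many standard roles is a likely bottleneck when resources are shared.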


5.4 Testing Approaches Execution Phase

With the planned objectives and role-based resources in place, the tasks can be executed to achieve specific goals. From Table 7, tasks are picked by priority, each aiming at its specified goal.

Table 7: Phase of testing approaches.

| Key tasks | Priority | Achievable goal |
|---|---|---|
| Arranging a testing plan from the early stages of development | High | Identify and fix bugs and glitches as soon as possible |
| Reviewing requirements | High | Engage testers with stakeholders to review & analyse requirements |
| Testing regularly | Low | a. Doing smaller tests more regularly throughout the development stages; b. Creating a continuous feedback flow allows for quick validation and improvement of the system |
| Team collaboration | Medium | Tightly collaborate to achieve broad skill sets. Involve testers in the development process and developers in testing activities, creating a product with testability in mind. |
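Picking tasks by priority, as described for this phase, amounts to a simple ordering. The sketch below sorts the key tasks of Table 7 by their priority; the task names are shortened, and the numeric ranking of the priority labels is an assumption made for illustration.

```python
# Key tasks and priorities from Table 7 (task names shortened).
TASKS = [
    ("Testing regularly", "Low"),
    ("Team collaboration", "Medium"),
    ("Arranging a testing plan early", "High"),
    ("Reviewing requirements", "High"),
]

# Assumed ranking: a lower number is handled first.
PRIORITY_RANK = {"High": 0, "Medium": 1, "Low": 2}


def by_priority(tasks):
    """Sort tasks so that high-priority ones come first (stable sort)."""
    return sorted(tasks, key=lambda task: PRIORITY_RANK[task[1]])


ordered = [name for name, _ in by_priority(TASKS)]
```

Because Python's sort is stable, tasks with the same priority keep their original relative order.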

5.5 Review Phase

Each of the phases mentioned above goes through this review phase. A review must be scheduled for each phase so that the phase and its outcome are examined and the process is enriched. In this phase we thus review all other phases.

Table 8: Task of review phase.

| Key tasks | Priority | Achievable goal |
|---|---|---|
| Conduct review meeting | Medium | a. Present the product to the rest of the reviewers; b. All members need to accept the product, recommend changes, and discuss timeframes |
| Walkthrough meeting | High | a. Reviewers examine the code of the product in question, its design, and documented requirements; b. Detect bugs in the code; c. Q & A with developer |
| Inspection session | High | a. Determine the additional properties of the product according to the requirements; b. Expand initial standards; c. Check whether previous bugs are still present |

6 CONCLUSION

The main goal of the study is to raise the maturity of small and medium software firms from a testing service perspective. To that end, we conducted a survey on software testing best practice areas mapped to CMMI-SVC process areas. Our research is based on software testing and a few specific practice areas such as PPQA, REQM, SD, WMC, WP, IRP and QPM.

Different research methodologies have proved successful for assessing the processes and practice areas that firms follow. We examined the firms' existing practices and processes and related them to standard practices and processes in order to suggest ways to achieve a better maturity level, better performance and higher client satisfaction.

We received 24 responses from 11 tech SMEs. As a result, we can understand their gaps and activities regarding software testing services. Through result analysis, we elaborate on the practices of CMMI-SVC based on software testing. Finally, we propose a framework that considers budget limitations, resources and the testing timeline and eventually raises the maturity level to 2 and above.

For future work, we suggest focusing on the practice areas that are less followed but have a bigger impact, in order to close the gaps. From the trend we can learn how and why the percentages of followed practices fluctuate. Last but not least, NI has a significant percentage, which means that those practice areas are not being followed. We must therefore investigate why those practices are not followed at all, identify the common aspects, and guide firms so that they follow them at least partially. We will conduct another survey to validate the proposed plan and focus on a more comparative evaluation.

This is a continuous improvement process for any organization, but standard processes and existing processes have to run in parallel. To improve a particular process or practice, one has to dive deep into that specific domain and identify all the tasks related to it. The future work should have a significant impact on performance improvement and on enhancing the firms' maturity level.

REFERENCES

Bueno, P. M., Crespo, A. N., & Jino, M. (2006, June). Analysis of an Artifact Oriented test process model and of testing aspects of CMMI. In International Conference on Product Focused Software Process Improvement (pp. 263-277). Springer, Berlin, Heidelberg.

Chunli, S., & Rongbin, W. (2016, August). Research on Software Project Quality Management Based on CMMI. In 2016 International Conference on Robots & Intelligent System (ICRIS) (pp. 381-383). IEEE.

Garousi, V., Arkan, S., Urul, G., Karapıçak, Ç. M., & Felderer, M. (2020). Assessing the maturity of software testing services using CMMI-SVC: An industrial case study. arXiv preprint arXiv:2005.12570.

Garzás, J., & Paulk, M. C. (2013). A case study of software process improvement with CMMI-DEV and Scrum in Spanish companies. Journal of Software: Evolution and Process, 25(12), 1325-1333.

Gemino, A., Horner Reich, B., & Serrador, P. M. (2021). Agile, traditional, and hybrid approaches to project success: is hybrid a poor second choice? Project Management Journal, 52(2), 161-175.

Hashmi, S. I., Lane, S., Karastoyanova, D., & Richardson, I. (2010). A CMMI Based Configuration Management Framework to Manage the Quality of Service Based Applications.

Kundu, G. K., Manohar, B. M., & Bairi, J. (2011). A comparison of lean and CMMI for services (CMMI-SVC v1.2) best practices. Asian Journal on Quality.

Kusakabe, S. (2015, July). Analyzing Key Process Areas in Process Improvement Model for Service Provider Organization, CMMI-SVC. In 2015 IIAI 4th International Congress on Advanced Applied Informatics (pp. 103-108). IEEE.

Qinhua, L. I. N. (2015). Research on Software Testing Technology and Methodology based on Revised and Modified Capability Maturity Model: A Novel Approach.

Peters, G. J. (2014). The alpha and the omega of scale reliability and validity: why and how to abandon Cronbach’s alpha and the route towards more comprehensive assessment of scale quality.

Raksawat, C., & Charoenporn, P. (2021). Software Testing System Development Based on ISO 29119. Journal of Advances in Information Technology, 12(2).

Rahmani, H., Sami, A., & Khalili, A. (2016). CIP-UQIM: A unified model for quality improvement in software SME's based on CMMI level 2 and 3. Information and Software Technology, 71, 27-57.

Sharma, R., & Dadhich, R. (2020). Analyzing CMMI RSKM with small software industries at level-1. Journal of Discrete Mathematical Sciences and Cryptography, 23(1), 249-261.

Silva, F. S., Soares, F. S. F., Peres, A. L., de Azevedo, I. M., Pinto, P. P., & de Lemos Meira, S. R. (2014, September). A reference model for agile quality assurance: combining agile methodologies and maturity models. In 2014 9th International Conference on the Quality of Information and Communications Technology (pp. 139-144). IEEE.

Singh, D. (2016). Software Testing Using CMM Level 5. International Journal of Computer Science Trends and Technology, 233-242.

Wen-Hong, L., & Xin, W. (2012, August). The software quality evaluation method based on software testing. In 2012 International conference on Computer Science and Service System (pp. 1467-1471). IEEE.
