Individualizing Learning Pathways with Adaptive Learning Strategies: Design, Implementation and Scale

Ana Donevska-Todorova (a), Katrin Dziergwa (b) and Katharina Simbeck (c)

University of Applied Sciences HTW Berlin, Treskowallee 8, 10318 Berlin, Germany

Keywords: Individualized Learning Paths (ILP), Adaptive Learning Strategies, Feedback Adaptations, Adaptive Educational Systems, Learning Management Systems (LMS), Microlearning, Task Design, Task Sequence, Design Research (DR), University Education, Applied Mathematics, e-Learning, COVID-19 Pandemic.

Abstract: Individual undergraduate learners have heterogeneous knowledge backgrounds and undergo diverse learning experiences during their university studies. Consequently, designs of virtual learning environments should adjust to learners’ needs and competencies, especially in the current pandemic crisis. This paper discusses pedagogical aspects of personalized and self-regulated learning and focuses on the design, implementation, and scale of e-content and e-activities for individualized learning pathways (ILP). Characteristics of ILP, such as shape, length, and turning points, enabled through adaptive features of existing Learning Management Systems (LMS) have seldom been discussed in the literature. We tackle this issue from a didactical perspective of microlearning with regard to three adaptive learning strategies: 1) Feedback Adaptations, 2) Task Design, and 3) Task Sequence Design. Within the first phase of a complete initial Design Research (DR) cycle, we have collected and analysed data that enable us to generate, cluster, and label queries and differentiated items for each of the three strategies. Furthermore, we offer a visualization of possible ILP illustrated with contextual examples of productive, technology-based task and feedback designs applicable and scalable in higher education settings.

1 INTRODUCTION

The large number of students and the diversity of their background knowledge challenge university leaders and teaching staff to provide learning opportunities that are varied and flexible in content, time, and space. During the emergency remote teaching phase of the COVID-19 pandemic outbreak, academic educators were compelled to create e-learning content and digital activities for heterogeneous groups of students. The pace of change and the digitalization accelerated by the pandemic led to responses that were rapid but not always of high quality for learners in asynchronous distance or hybrid learning contexts. Many of the e-learning contents produced now seem sporadic, unstructured, and isolated from one another. The unprecedented demand for automated tasks and digitally generated activities such as e-tests for autonomous learning has grown so quickly that it far exceeds the current supply.

(a) https://orcid.org/0000-0003-1755-7182

(b) https://orcid.org/0000-0000-0000-0000

(c) https://orcid.org/0000-0001-6792-461X

To approach this research problem, we dedicate ourselves to (re-)creating, implementing, and scaling curricular e-elements of university courses that will enable students to gain content-specific and personal competencies at their own pace. In doing so, we consider the development of learning opportunities at a macro level (e.g., as by Morze, Varchenko-Trotsenko, Terletska, & Smyrnova-Trybulska, 2021), within the frame of a course curriculum or several course curricula, and at a micro level, within a task, a task sequence, and an e-activity. This distinction enables a concrete and detailed description of the subject-specific and didactical features of ILP. Within the constraints of this paper, we focus on design and adaptivity aspects related to microlearning. Microlearning refers to learner engagement with a low time investment and consists of micro-content and micro-activities (Lindner, 2006) that can be distributed across LMS and Web 2.0 technologies (Grevtseva, Willems, & Adachi, 2017, p. 132).

Donevska-Todorova, A., Dziergwa, K. and Simbeck, K.

Individualizing Learning Pathways with Adaptive Learning Strategies: Design, Implementation and Scale.

DOI: 10.5220/0010995100003182

In Proceedings of the 14th International Conference on Computer Supported Education (CSEDU 2022) - Volume 2, pages 575-585

ISBN: 978-989-758-562-3; ISSN: 2184-5026

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

First, the paper specifies the terminology identified in the literature regarding various types of learning, such as personalized learning, adaptive learning, and individualized learning. It then explains adaptive learning strategies that can secure opportunities for learning along individually chosen paths. This literature review suggests that there is a growing body of research justifying the need for individualized approaches, but there is little evidence of what individualized digital learning trajectories (may) look like in practice, what their characteristics are, and what didactical potential they have.

The paper further addresses the question of which adaptive learning strategies and what kinds of tasks can be designed and applied to support the individualization of students’ learning pathways on a micro level, and it outlines the results of a pre-study in the first cycle of a Design Research methodological approach.

In summary, this paper contributes to the design and research body on adaptive microlearning with existing Learning Management Systems (LMS) in the following way. It considers three adaptive learning strategies for individualizing students’ learning trajectories:

- Feedback Adaptations,
- Task Design, and
- Task Sequence Design.

Moreover, it shows how we have:

- created item responses for various types of feedback adaptations for tasks and task sequence designs encouraging ILP, analysed in relation to the relevant literature,
- generated, clustered, and labelled queries and differentiated entries for task design supporting ILP,
- described requirements and characteristics of ILP and their visualization,
- offered contextualized and implemented examples of productive, technology-based feedback and task designs for ILP in higher education,
- suggested ways to scale and sustainably re-design micro-content and micro-activities for ILP in LMS.

2 PERSONALIZED, ADAPTIVE, AND INDIVIDUALIZED LEARNING

The modern learner in higher education needs dynamic learning content and educational activities that can be adjusted to an individual rhythm of learning. Personalized learning, as outlined by the Organisation for Economic Co-operation and Development (OECD, 2006), is characterized by changes in several aspects, such as assessment that provides individual feedback related to learning objectives, teaching and learning strategies that address individual needs, curriculum adaptations, and student-centred approaches (Shemshack & Spector, 2020).

Personalized learning is also defined from the instructor’s perspective as an approach that optimizes pieces of content, their sequencing, and the engagement with this content according to the requirements, interests, and self-initiative of each learner, following learning objectives (U.S. Department of Education, Office of Educational Technology, 2017). “Personalized learning considers students’ interests, needs, readiness, and motivation and adapts to their progress by situating the learner at the centre of the learning process” (Shemshack & Spector, 2020, p. 5). Most current research acknowledges the role of technology in supporting the personalization of learning processes. In this regard, adaptive learning, which seeks to identify each learner’s strengths and weaknesses and to regulate the individual’s learning process accordingly with digital tools, is perceived as appropriate for increasing the chances of success. Further, a recent systematic literature review for Information Systems Research distinguishes between personalized learning, adaptive learning, individualized instruction, and customized learning (Shemshack & Spector, 2020).

On the one hand, individualization can be considered a component of personalized learning; on the other hand, the term can be used in place of personalized learning. Individualized learning permits instruction tailored to the learner’s unique needs (Cavanagh, 2014; Lockspeiser & Kaul, 2016). While in ubiquitous learning environments users shape their own educational paths completely and freely according to their personal interests, institutionalized learning follows sets of prerequisites, formal regulations, and curricula.

In differentiated and individualized instruction, students receive real-time individualized feedback through an instructional and didactical design that allows them to take some control over their own learning.

CSEDU 2022 - 14th International Conference on Computer Supported Education

One way to enable adaptive learning is to create a learner model and a multi-agent system that defines intelligent interactive agents, which can investigate the learner’s traces, estimate numerous indicators, and suggest the best-fitting adaptations for the individual (Ajroud, Tnazefti-Kerkeni, & Talon, 2021). Nonetheless, an adaptive multimedia system that was developed using empirically determined thresholds for its adaptation algorithm and that provides adaptive support in real time proved successful in improving transfer for stronger learners but was neither effective nor harmful for weaker learners (Scheiter et al., 2019). Another way to create possibilities for adaptive learning is through adaptive tutoring systems that adapt to the learning styles of their users, students, or tutors, based on the Felder-Silverman model (e.g., Boussaha & Drissi, 2021). Other authors have reported benefits of adaptive e-learning systems that draw on users’ personal information, such as gender, age, educational level, background data, learning styles, and preferences, to avoid the ‘one-size-fits-all’ teaching approach (e.g., Al-Azawei & Badii, 2014). A review of the existing Adaptive Learning Systems for the Formation of Individual Educational Trajectory considers several rating criteria, such as area of application, type of adaptation, functional persistence, integration within an existing LMS, use of contemporary generation technologies, and recognition of natural language and courseware characteristics (Osadcha, Osadchyi, Semerikov, Chemerys, & Chorna, 2020).

However, evidence-based research remains insufficient, as adaptive learning appears to be an evolving research field (Liu, McKelroy, Corliss, & Carrigan, 2017). Furthermore, there is a need for research studies that identify appropriate combinations of different types of media and their influence on the shapes and lengths of ILP. Our aim is not to develop an intelligent tutoring system or an adaptive educational hypermedia system through algorithms (e.g., Vanitha & Krishnan, 2019) or neural networks (e.g., Saito & Watanobe, 2020). It is rather to create and research adaptive micro-content and adaptive micro-activities that can facilitate competence growth for individual learners using an existing LMS, in line with learning theories. As a relatively widespread LMS at higher education institutions, Moodle is suitable for such development and research.

3 ADAPTIVE LEARNING STRATEGIES

Adaptive e-learning is associated with robust pedagogical affordances because it fosters multifaceted student-centred approaches. Adaptive presentation techniques that enhance learning outcomes in web-based learning environments in higher education have already been discussed in the literature, e.g., by Elmabaredy, Elkholy, & Tolba (2020). Further, Towle & Halm (2005) discussed three adaptive strategies, related to synchronous vs. asynchronous learning, rule-example vs. example-rule, and feedback adaptation, and concluded that some of these strategies proved insufficient when implemented with students. Of these three adaptive strategies, we focus on developing and implementing feedback adaptation to enable ILP, aiming to address the needs of all students, including low-achieving students and those with lower content knowledge.

3.1 Adaptive Feedback

Appropriate and timely feedback is important for students’ competence gain and growth. It helps learners manage and monitor their own learning process and take control of their individual educational decisions (Gutl, Lankmayr, Weinhofer, & Hofler, 2011). Besides different sources of feedback, such as AI-generated feedback, instructor feedback, or peer feedback, there is also a variety of feedback types. Direct, authentic, and individualized feedback from an instructor is valuable but considerably time-consuming; tailored feedback can also be provided by an automated feedback system. Whereas manual feedback given by the instructor is usually delayed and may be poorly timed, automated feedback, which is continuously improving due to advances in machine learning and natural language processing, is provided in real time. What type of feedback is most efficient in supporting the development of appropriate student trajectories in terms of length and duration? Some authors suggest that feedback plays a significant role as an integral part of an adaptive system and that emotions and personality should be considered in its construction (Fatahi, 2019). Rather than choosing one exact type of feedback, we argue that a suitable combination of several types, for example behavioural and cognitive feedback, can be the most beneficial. Cognitive feedback is corrective, epistemic, and suggestive and supports self-regulated learning. Corrective and epistemic feedback relate to descriptive learning analytics, whereas suggestive feedback relates to prescriptive analytics. Behavioural feedback should be instantaneous, automated, and equally valuable for monitoring, preparation, and adjustments (Alasalmi, 2021, p. 136).

Individualizing Learning Pathways with Adaptive Learning Strategies: Design, Implementation and Scale

Further, generic feedback is context-independent, whereas contextualized feedback depends on the context. While the general (overall) feedback is displayed immediately upon task completion and is independent of the given solution, the specific feedback depends on the 'correctness' of the given answer. Accordingly, the general feedback aims to provide hints or links that could lead to further information for clarification if the task or question has not been understood well enough. We elaborate these distinctions with examples from our sample course in sub-section 5.1 of this paper. The literature further differentiates between self-referenced and reward-based feedback (Maier, 2021), or distinguishes feedback by its complexity: feedback can be simple or detailed (elaborated) (Makhlouf & Mine, 2021). Complex feedback provides guidance towards the solution, whereas simple feedback affords short facts about the accuracy of the result (Belicová, Lacsný, & Teleki, 2018).
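The distinction between general feedback (shown with every submission, independent of the answer) and specific feedback (dependent on correctness), and between simple and elaborated feedback, can be sketched as a small selection function. This is a minimal Python sketch under our own assumptions: the `Task` class, the `feedback_for` function, and the message texts are hypothetical and do not correspond to Moodle's internal API.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """Hypothetical task model: a body, one correct solution, and per-distractor feedback."""
    body: str
    solution: str
    general_feedback: str  # shown with every submission, independent of the answer
    specific_feedback: dict[str, str] = field(default_factory=dict)  # keyed by distractor


def feedback_for(task: Task, answer: str, elaborated: bool = True) -> list[str]:
    """Return the feedback messages shown after one submission."""
    messages = []
    if answer == task.solution:
        # Simple feedback: a short fact about the accuracy of the result.
        messages.append("Correct.")
    else:
        messages.append("Not correct yet.")
        # Elaborated (specific) feedback: guidance that depends on the chosen distractor.
        if elaborated and answer in task.specific_feedback:
            messages.append(task.specific_feedback[answer])
    # General feedback is appended regardless of correctness.
    messages.append(task.general_feedback)
    return messages
```

Switching `elaborated` off reduces the response to simple feedback plus the general feedback, which is one way to combine feedback types per task rather than choosing a single type.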

3.2 Task Design for ILP Microlearning Environments

The current intensified use of Moodle-based quizzes may lead to an enormous quantity of asynchronous activities and a hyper-production of tasks. Automatically generated task items are promising and comparable to those generated by humans (Gutl, Lankmayr, Weinhofer, & Hofler, 2011). Automated processes for generating content-specific test items are useful for educational measurement (Gierl & Lai, 2013). On the one hand, macros and scripts allow the automated generation of a vast number of tasks, which relieves instructors of otherwise extensive and time-consuming work. On the other hand, there is a risk that such manufactured tasks are too fragile to support the gain and growth of specific subject-related competencies in their completeness. This trend appears likely to keep educators, researchers, and designers busy, because the need for such learning offers will continue to grow. To respond to this need, the next question that deserves attention is how to secure the quality of task designs. Here, the quality of task designs does not refer only to the task types, whether single- or multiple-choice, textual or numerical, or open questions, or to the linguistic complexity of the items; it also brings the curricular purposes of the task designs and their didactical potential into focus (Donevska-Todorova, Trgalová, Schreiber, & Rojano, 2021). Attempts and standards for generating quality task items aligned with the Common Core State Standards already exist, for example, in K-12 mathematics education (e.g., Gierl, Lai, Hogan, & Matovinovic, 2015). This paper presents adaptive strategies for individualized learning through quality designs that value curricular goals in university education.

3.3 Requirements for Quality Task Design for ILPs on a Micro Level

Limitations of some task types, e.g., MCQs, have been identified in the literature from a pedagogical standpoint. Nevertheless, when MCQs are implemented within a framework that includes a set of feedback principles, they support self-regulated learning (Nicol, 2007). This is also evident when MCQs are authored and evaluated by students (Bottomley & Denny, 2011), because collaborative peer activities in the LMS contribute to individual progress in learning (Donevska-Todorova & Turgut, 2022).

Other types of tasks require complex mathematical formulas and symbolic language for their design and use. For such tasks, Maxima-based STACK assessment tools are applied.

Further in this sub-section, we compare tasks for

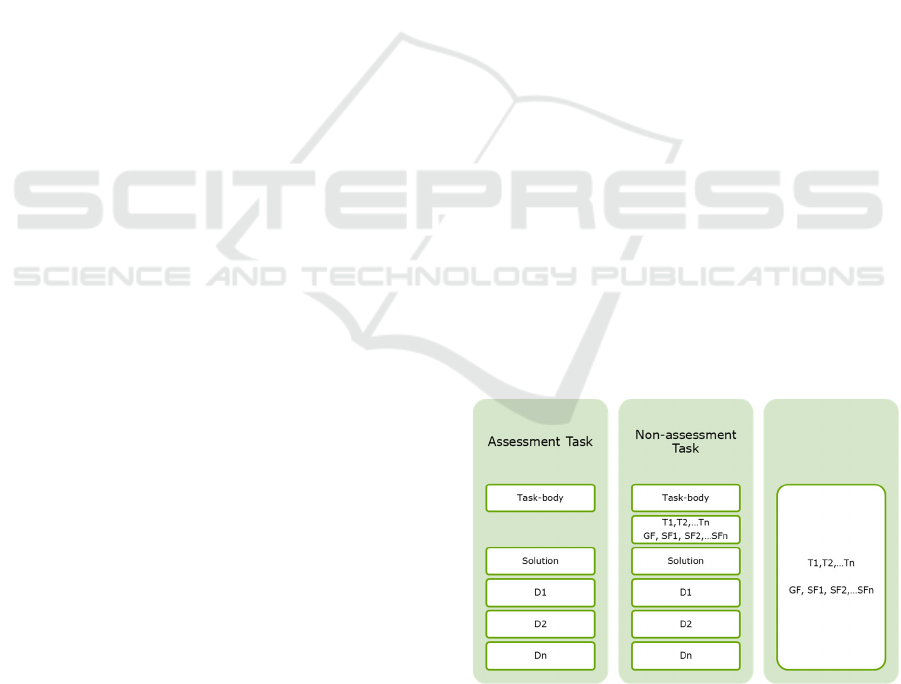

purposes of e-assessment and digital non-assessment

tasks (Figure 1). Identified differences aim at the

design of new tasks or interventions in the design of

existing tasks for quizzes in LMS, e.g., Moodle.

Figure 1: Comparison of tasks for e-assessment and digital

non-assessment task designs in e-learning environments

with LMS.

Assessment tasks (Figure 1, left) consist of a task body and a solution. Any entry other than the solution is a distractor. In closed task types, e.g., SCQs, MCQs, drag-and-drop, and fill-in-the-blanks, the number of distractors is finite and defined by the task designer. In an assessment situation, usually only one attempt at submitting the solution is allowed. In comparison, digital non-assessment tasks have more constituents and are more complex to create (Figure 1, right). They have distinctive characteristics and a broader spectrum of aims: supporting exercise and skills training, developing problem-solving strategies, advancing other competencies, and so forth. For this type of task, it is therefore interesting to consider which learning opportunities can be designed between two stages: undertaking a particular task and receiving the automated correct solution. This gap is particularly important when the student’s solution is incorrect, and it is the issue we tackle here. We argue that it is worth allowing students multiple attempts at solving the task. Moreover, it is valuable to invest time and effort in creating hints as part of the task design that can support students along their individualized learning process. Such hints have the potential to sustain students’ motivation and prevent early dropouts. It is particularly important that these hints be appropriate, specific, and content-related.
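The structural difference between the two task kinds in Figure 1, and the role of hints in the gap between a wrong answer and the correct solution, can be sketched as follows. This is a minimal sketch: the class names, the `attempt_log` function, and the default of three attempts for practice tasks are our own illustrative assumptions, not a Moodle data model.

```python
from dataclasses import dataclass, field


@dataclass
class AssessmentTask:
    """Hypothetical model of an e-assessment task (Figure 1, left)."""
    body: str
    solution: str
    distractors: list[str]  # finite set, defined by the task designer
    max_attempts: int = 1   # usually a single submission in an assessment


@dataclass
class PracticeTask(AssessmentTask):
    """Hypothetical model of a digital non-assessment task (Figure 1, right)."""
    max_attempts: int = 3   # multiple attempts are allowed (assumed value)
    hints: list[str] = field(default_factory=list)  # one content-related hint per failed attempt


def attempt_log(task: AssessmentTask, answers: list[str]) -> list[str]:
    """Replay a student's answers and record what happens after each one."""
    hints = getattr(task, "hints", [])  # assessment tasks carry no hints
    log = []
    for i, answer in enumerate(answers[: task.max_attempts]):
        if answer == task.solution:
            log.append("solved")
            break
        # The gap between a wrong answer and the correct solution:
        # a practice task fills it with a hint, an assessment task does not.
        log.append(hints[i] if i < len(hints) else "incorrect")
    return log
```

For the same wrong first answer, the assessment task simply ends with "incorrect", whereas the practice task returns a hint and lets the student continue.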

To support self-directed learning, besides accessibility options that permit learning anytime and anywhere, alignment with the curriculum, and accuracy of the content, the designs should meet the following requirements:

(1) provide task items for answers/solutions and distractors (where applicable and as shown in Figure 1) that contribute to learners’ competence growth according to a competence model and curricular goals,

(2) afford overall and specific feedback of diverse types (as discussed in sub-section 3.1),

(3) offer user-friendly displays for easy navigation (provided by the Moodle interface unique to the university, e.g., toolbar menus, colour, etc.),

(4) be scalable and support sustainability (discussed below in sub-section 5.2).

Some LMSs, e.g., Moodle, have built-in options regarding the first requirement: competency frameworks can first be established at a global level by administrators and then linked by instructors with lesson plans and activities in one or more courses. Such an approach allows students to receive reports about their competency growth across a span of modules throughout their studies.

The third requirement relates to user experience and to the potential of the digital learning environment to support affective aspects of learning, such as motivation and the prevention of boredom.

3.4 Design of Task Sequences for ILP

Once a non-assessment task (as shown in Figure 1)

fulfills the above quality requirements, it may be

considered for implementation in a task sequence

(Figure 2) that aims to support individual training or

exercising.

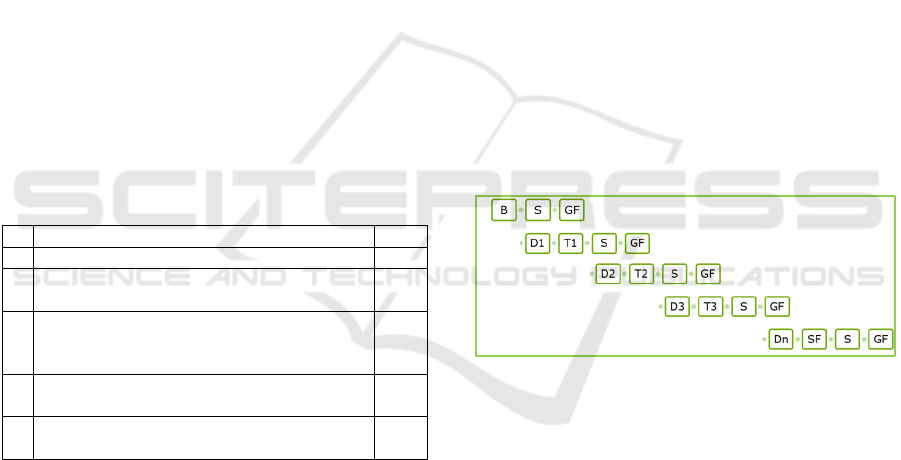

Figure 2: Adaptive task sequencing within a self-regulated activity, e.g., a quiz in an LMS with automated double randomization.

The order in which the tasks of the sequence appear is randomized in every new trial with a default option provided by the LMS. Additional randomization occurs within a task, e.g., in “fill in the blanks” tasks, multiple true/false questions, MCQs, etc., through randomization of the distractors. This double randomization yields altered presentations of the same subject-specific content through automated combinations (Ramos De Melo et al., 2014). In our case, the number of attempts per task sequence is set to unlimited, because the sequences aim to support learning rather than assessment.
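The two randomization levels can be illustrated outside the LMS. The sketch below is hypothetical (the function `double_randomize` and the (body, options) representation are assumptions, and it only mimics the effect of the LMS's shuffle settings): it shuffles the order of tasks and, separately, the options within each task, without altering the underlying content.

```python
import random


def double_randomize(tasks: list[tuple[str, list[str]]],
                     rng: random.Random) -> list[tuple[str, list[str]]]:
    """Return a freshly randomized trial of a task sequence.

    tasks: (task body, answer options) pairs; the input list is not modified.
    """
    order = list(tasks)
    rng.shuffle(order)  # level 1: order of the tasks in the sequence
    trial = []
    for body, options in order:
        shuffled = list(options)
        rng.shuffle(shuffled)  # level 2: order of the options/distractors within a task
        trial.append((body, shuffled))
    return trial
```

Passing a seeded `random.Random` makes a trial reproducible, which can help when checking a quiz design; an unseeded generator gives a new arrangement for every trial.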

Refined task sequences that have been developed, tested, and evaluated through the iterations of a complete DR cycle (Psillos & Kariotoglou, 2015) may be offered to students for self-assessment.

4 RESEARCH QUESTIONS AND METHODOLOGY

Drawing on the theoretical background on adaptive and individualized microlearning presented in section 2 and on the literature about the current state of research on adaptive strategies discussed in section 3, this paper considers the following research question:

RQ: How can adaptive strategies such as feedback adaptations, task design, and task sequence design in the LMS Moodle affect the individualization of microlearning pathways of undergraduate students?

The complexity of the research question with respect to subject-specific, pedagogical, and technical aspects requires an iterative methodological approach that secures development, implementation, and evaluation elements. Therefore, this work is based on the principles of Design Research (DR) (Kelly, Lesh, & Baek, 2008) involving mixed methods. This paper explains a pre-study, the first of a total of seven phases of a complete initial DR cycle. The pre-study is followed by a pilot study in the second phase, which is also briefly outlined in the following sub-section.

4.1 Data Collection and Data Analysis

In phase one of the complete DR cycle, a pre-study

took place in the first half of the winter semester

2021/22 at the University of Applied Sciences HTW

Berlin in Germany.

Besides findings from a literature review and theoretical grounding, the pre-study relies on two sources for data collection: the university’s internal system for teaching, learning, and research (LSF) and the LMS Moodle. Four of the eleven courses in the LSF data pool were selected for the analysis (Table 1). In addition, a Moodle course was established for the design and trial of new activities and question banks.

Table 1: Data Collection in the Pre-study: Courses for Investment and Financing in the winter semester 2021/22 in the university LSF and the LMS Moodle.

| # | Item | Count |
|---|------|-------|
| 1 | Number of courses in LSF | 11 |
| 2 | Selected Moodle courses for pre-study | 4 |
| 3 | Additional Moodle course for the aims of the pre-study | 1 |
| 4 | Question bank with categories and labels according to competencies, task types and levels of difficulty | 1 |
| 5 | Generated task queries (task text, task solution and distractors) | 134 |
| 6 | Generated item responses for various types of feedback adaptations | 74 |

All generated items are categorized and labelled

according to three criteria: subject-specific

competences, type of the task and level of difficulty

of the task. This categorization enables easier

structuring of the question bank and randomization of

the tasks in the task sequences.
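How labels on the three criteria support structuring and randomization can be shown with a small filter-and-sample routine. In Moodle, a comparable effect can be obtained with random questions drawn from a question-bank category; the Python sketch below only models the idea, and the `Item` fields, the 1-3 difficulty scale, the competence names, and the `draw` function are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    """Hypothetical question-bank entry labelled by the three criteria."""
    item_id: str
    competence: str  # subject-specific competence
    task_type: str   # e.g. "numerical" or "multichoice"
    difficulty: int  # assumed scale: 1 (basic) to 3 (advanced)


def draw(bank: list[Item], rng: random.Random, k: int, **labels) -> list[Item]:
    """Draw k distinct items whose labels match every given criterion.

    Raises ValueError if fewer than k items match.
    """
    pool = [item for item in bank
            if all(getattr(item, key) == value for key, value in labels.items())]
    return rng.sample(pool, k)
```

For example, `draw(bank, rng, 2, competence="present value", task_type="numerical")` fills two slots of a task sequence with different numerical items for the same competence on every trial.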

In the second phase of the complete DR cycle, a pilot experimental trial is planned for the second half of the winter semester 2021/22 at the University of Applied Sciences HTW Berlin within one module. In this phase, data collection and data analysis are two-step processes with two goals.

The first data set, collected through a questionnaire for university educators, serves to create a competence model based on the revised Bloom’s taxonomy and developed specifically for the module. Based on the participants’ answers and the competence model, Moodle-based task items and activities for microlearning can then be (re)designed.

The second data set will be collected via the Moodle course. It aims to capture reactions and comments on the quality of the design prototype, including the feedback adaptations, described in the previous two sections of this paper.

The (re)creation and iterative experimental testing of the tasks and activities will continue through the remaining five phases of the DR cycle.

5 RESULTS AND DISCUSSION

Learning possibilities in the LMS Moodle at our university are grouped as resources and activities. Likewise, in the processes of design and implementation, we distinguish between these two groups of learning opportunities. Here, we ‘zoom in’ on a single task design for a Moodle quiz activity, with embedded feedback variations that enable the individualization of learning trajectories (Figure 3).

Figure 3: Feedback adaptations and micro-level sequencing in hypothetical individual learning pathways (ILP).

Instead of presenting the development of the various ILPs as a typical algorithmic if-then loop or cycle, the visualization in Figure 3 outlines the effects of feedback on the length and shape of the individual learning trajectories. Each micro-ILP begins with a task body (notated B in Figure 3; called question text in Moodle) and either ends directly with the correct solution (S in Figure 3), immediately accompanied by both behavioural and overall feedback (GF in Figure 3), or continues with a distractor (Di, i = 1, 2, …, n in Figure 3) supplemented with specific cognitive feedback (Ti, i = 1, 2, …, n in Figure 3). Thus, the shortest possible ILP has three steps, B-S-GF, whereas the length of the longest individual path depends on the student’s choices and on n, the maximum number of allowed trials, which equals the number of feedback items embedded in the task design. In our design, n is set to 3. The student is therefore allowed to undertake the same task in a single task sequence up to three times, and each time he/she enters a wrong answer Di, immediate feedback Ti of one of the types described in sub-section 3.1 follows. Meanwhile, every new entry following feedback reduces the full score for the task by one third. This encourages students to decide deliberately on the further steps they prefer to take. In this way, the student is prompted to take responsibility for his/her own decision making, which fosters self-regulation of learning processes and, as a result, the development of personal competencies in addition to content-specific competencies and knowledge growth. This is in line with the recommendation that “technology must not take away control from the learner, but instead provide stimuli to increase competencies for self-directed learning” (Gutl, Lankmayr, Weinhofer, & Hofler, 2011, p. 323). This suggests a didactical adaptation of the individual’s profile in the LMS as a mechanism that strengthens the differentiation of ILPs.
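The path notation of Figure 3 and the scoring rule (n = 3 trials, each entry after feedback costing one third of the full score) can be replayed in a few lines. This is a minimal sketch under stated assumptions: the function `micro_ilp` is hypothetical, and the handling of a student who uses up all n trials (solution shown, no points left) is our assumption rather than part of the described design.

```python
from fractions import Fraction


def micro_ilp(answers: list[str], solution: str, n: int = 3,
              penalty: Fraction = Fraction(1, 3)) -> tuple[list[str], Fraction]:
    """Return the micro-ILP path and the score for one task.

    Path notation follows Figure 3: B (task body), Di/Ti (i-th wrong entry and its
    feedback), S (correct solution), GF (general feedback). Answers beyond the n
    allowed trials are ignored.
    """
    path = ["B"]
    for i, answer in enumerate(answers[:n], start=1):
        if answer == solution:
            path += ["S", "GF"]
            # Each entry after feedback costs one `penalty` share of the full score.
            return path, max(Fraction(0), 1 - penalty * (i - 1))
        path.append(f"D{i}/T{i}")
    # Assumption: once all n trials are used up, the solution is shown and no points remain.
    path.append("S")
    return path, Fraction(0)
```

The shortest path, B-S-GF, has three steps and earns the full score; each incorrect entry lengthens the path by one step and lowers the attainable score by one third, which is the trade-off the student weighs when deciding on further steps.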

In the next sub-section, we present small, well-planned portions of content, created with the LMS Moodle at our university, that support cognitive activation and motivational continuity while learners engage with these units.

5.1 Contextual Examples of Task Designs and the Feedback Adaptation for ILP

To illustrate the task designs, including feedback adaptations that support ILP, we provide three contextualized examples from Financial Mathematics and investment decisions, created with the LMS Moodle at our university.

The question bank of the created tasks includes single-choice, multiple-choice, fill-in-the-blanks, and open-ended textual and numerical questions. The sample can easily be adapted to support numerous variations of the tasks and the feedback. The question bank is structured, and the tasks are categorized and labelled according to the curricular competencies of the module, as described in sub-section 4.1.

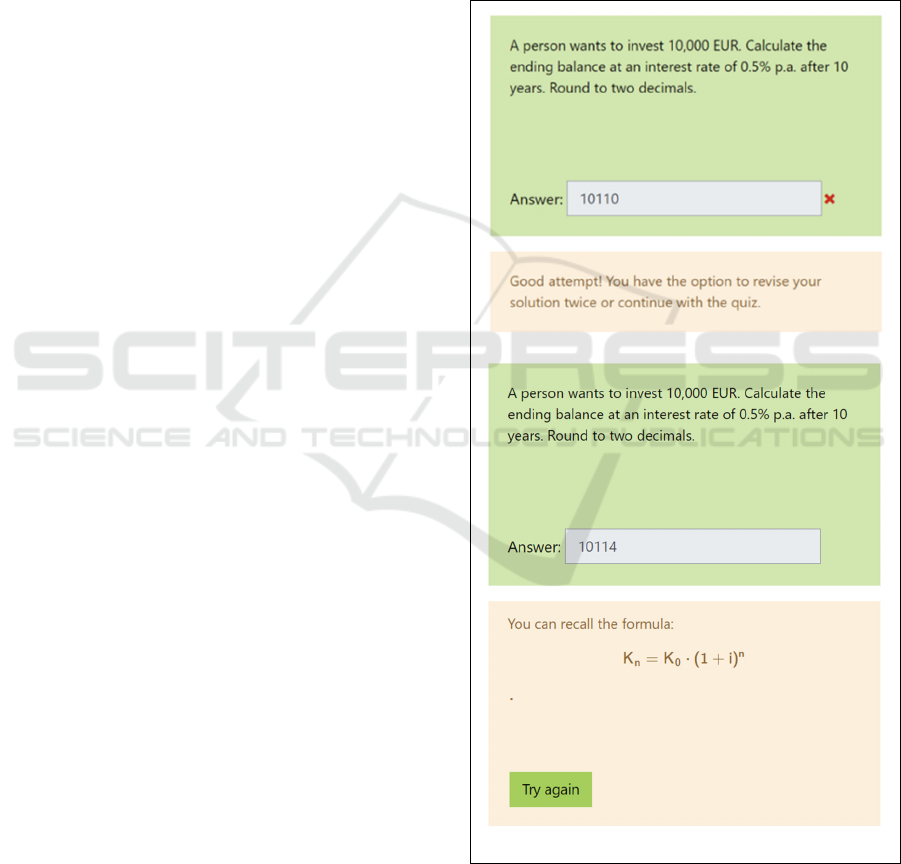

In sub-section 3.3 (Figure 1), we explained that our attention is on the ‘hidden’ activities and adaptive feedback that occur when a student gives an incorrect answer to a non-assessment task. To exemplify this, the first example showcases a task design (Figure 4a) with a short open numerical answer and immediate general behavioural feedback (as discussed in sub-section 3.1) that appears with an incorrect solution. The feedback is shown in the orange rectangle in Figure 4a. Below it, Figure 4b shows cognitive feedback containing a mathematical formula, inserted in Moodle with LaTeX, as a hint. A tolerance interval for rounding errors is also defined in the Moodle task. This interactive combination of feedback and hints provides meaningful, helpful information and guidance for the students. The correct solution appears only in the final attempt, i.e., when n = 3, according to the description of the ILP in Figure 3.

Figure 4: a) Preview of the immediate general behavioural feedback in an open numeric task design appearing with an incorrect solution. b) Preview of cognitive feedback containing a mathematical formula with LaTeX.
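The first example combines a formula hint with a tolerance interval for rounding. As a hedged illustration, the sketch below assumes a hypothetical compound-interest task (future value K_n = K_0 (1 + i)^n, which is not the task shown in Figure 4) and an assumed absolute tolerance of 0.01. In Moodle, the numerical question type lets the designer set an accepted error for each answer; the Python functions here are hypothetical and merely mimic that check.

```python
def future_value(principal: float, rate: float, years: int) -> float:
    """Hypothetical task content: compound interest K_n = K_0 * (1 + i) ** n."""
    return principal * (1 + rate) ** years


def is_accepted(answer: float, expected: float, tolerance: float = 0.01) -> bool:
    """Accept an answer if it lies within the tolerance interval around the expected value."""
    return abs(answer - expected) <= tolerance
```

With K_0 = 1000, i = 0.05 and n = 2, the expected value is 1102.50, so an entry of 1102.505 is accepted, while 1102.60 is rejected and would trigger the next feedback in the path.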


The second example (Figure 5, a) to d)) displays a “drag and drop into text” task with six choices, combined feedback, and multiple trials. The combined feedback consists of cognitive corrective and cognitive epistemic feedback. The example illustrates a possible ILP in which the student reaches the correct solution and the overall feedback in the second attempt. The ILP thus takes the form B - D1/F1 - D2/F2 – S/GF, in relation to the micro-level sequencing shown in Figure 3 in the previous section and to the research question about the effects of the feedback adaptations on the formation and length of an ILP (in section 4).

Figure 5: Preview of an ILP: B - D1/F1 - D2/F2 – S/GF.
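The path notation can be generated mechanically from the number of trials a learner needs. The sketch below assumes, as a reading of the notation rather than a definition taken from Figure 3, that B marks the beginning, Di/Fi the i-th trial with its feedback, and S/GF the solution with general feedback; the function names are hypothetical and plain hyphens are used as separators throughout.

```python
def ilp_notation(num_trials: int) -> str:
    """Render an ILP as B - D1/F1 - ... - Dk/Fk - S/GF for k trials (assumed reading)."""
    if num_trials < 1:
        raise ValueError("an ILP needs at least one trial")
    steps = ["B"]
    steps += [f"D{i}/F{i}" for i in range(1, num_trials + 1)]
    steps.append("S/GF")
    return " - ".join(steps)


def ilp_length(num_trials: int) -> int:
    """Number of steps in the path: beginning + trials + solution/general feedback."""
    return num_trials + 2


print(ilp_notation(2))  # B - D1/F1 - D2/F2 - S/GF
```

Under this reading, each additional trial adds exactly one step, which makes the effect of feedback adaptations on the length of an ILP directly countable.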

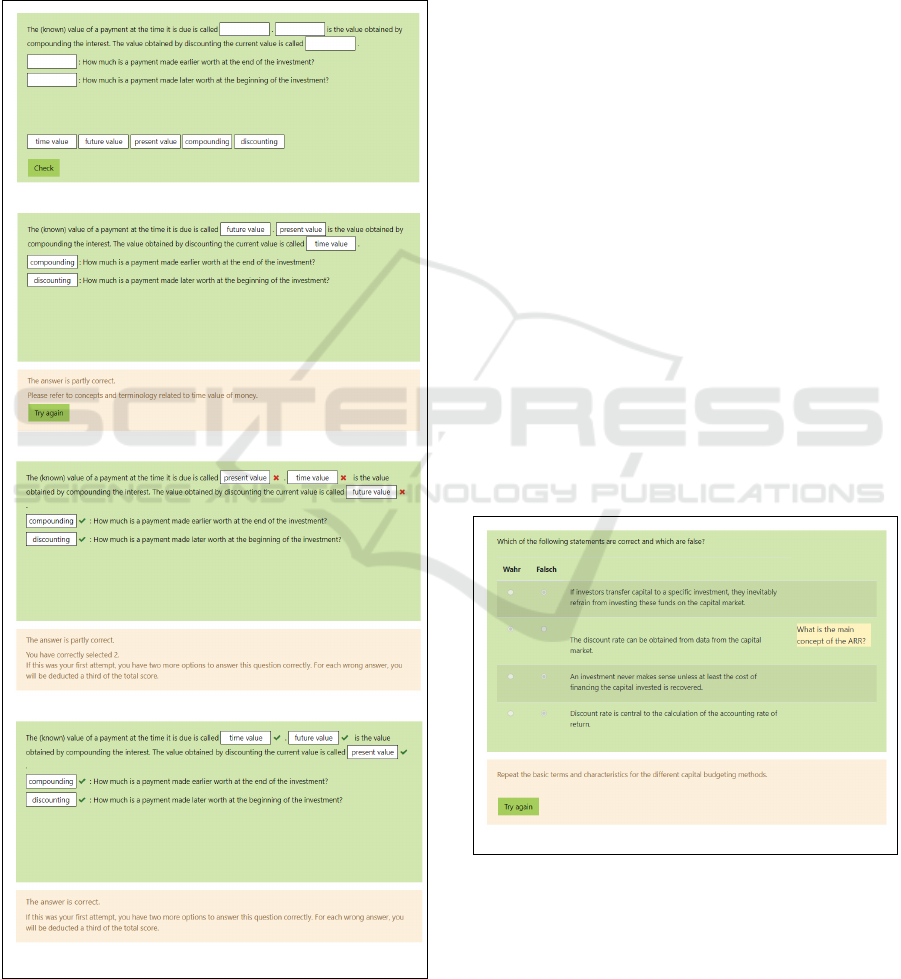

The third example demonstrates a design for a True/False task (Figure 6) with two types of feedback: cognitive epistemic feedback (top right, in yellow) and cognitive suggestive feedback (below, in orange), as discussed in sub-section 3.1. Based on the feedback, the student can decide in which direction his or her individual path continues. The epistemic feedback is formulated as a question; by following it, the student is prompted to make a decision based on reflection on his or her own knowledge, leading to reconsideration or consolidation. If the student succeeds in recalling the relevant knowledge, which is a subject-specific competence (defined in the competence model mentioned in section 4), the student can move forward in the ILP. Alternatively, the suggestive feedback guides the student to reread or repeat already familiar basic concepts, that is, to redo some of the previous steps in the ILP. It thus suggests a redirection to a resource instead of a new trial of the task or a new task in the sequence. In this way, the two feedback items propose different follow-up activities, differing in type and degree of complexity; they refine and shape the ILP and determine its length in the microlearning process. This relates to the research question posed in section 4 about the influence of the task design and the feedback adaptations on the student’s ILP.
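The branching described above can be expressed as a small decision function. This is a hypothetical model for illustration only: the `Route` values, the functions `next_step` and `walk`, and the assumption that after an incorrect answer the learner either follows the suggestive feedback (redirect to a resource) or reflects on the epistemic question and tries again are simplifications introduced here.

```python
from enum import Enum
from typing import Iterable, List, Tuple


class Route(Enum):
    FORWARD = "continue with the next task"
    RETRY = "new trial of the same task"
    RESOURCE = "revisit a learning resource"


def next_step(answer_correct: bool, follows_suggestion: bool) -> Route:
    """Decide where the path continues after a trial."""
    if answer_correct:
        return Route.FORWARD    # recall succeeded: move forward in the ILP
    if follows_suggestion:
        return Route.RESOURCE   # suggestive feedback: redo earlier steps
    return Route.RETRY          # epistemic feedback: reflect and try again


def walk(events: Iterable[Tuple[bool, bool]]) -> List[str]:
    """Build a path from (answer_correct, follows_suggestion) events until the learner moves on."""
    path = ["task"]
    for answer_correct, follows_suggestion in events:
        route = next_step(answer_correct, follows_suggestion)
        path.append(route.name)
        if route is Route.FORWARD:
            break
    return path


print(walk([(False, True), (True, False)]))
print(walk([(False, False), (False, False), (True, False)]))
```

The two printed paths differ in shape and length although both end in FORWARD, mirroring how the two feedback items lead to differently shaped ILPs.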

Figure 6: Preview of specific cognitive epistemic and suggestive feedback in a single-solution true/false task.

Looking back at these three tasks and their accompanying adaptive feedback, we can briefly evaluate whether they meet the requirements of quality task design for ILP on a micro-level, as discussed in sub-section 3.3. Each of the tasks provides task items for

CSEDU 2022 - 14th International Conference on Computer Supported Education


answers/solutions and distractors and affords overall and specific feedback of diverse types. Therefore, the tasks fulfil the first two criteria. The visual appearance of the task items and the corresponding feedback, their arrangement, and their colours follow a uniform, university-specific design, which fulfils the third criterion. The fourth criterion for the quality of the task design relates to the scalability of the tasks and is discussed in the next sub-section.

Let us now summarize the above discussion with regard to the research question. The adaptive strategies affect the ILP in the following ways:

- The adaptive feedback acts as a turning point in decision making and thereby shapes the ILP.
- The adaptive feedback influences the number of steps that individual learners take; it thereby not only optimizes the length of the ILP but also promotes the duration and continuity of engagement.
- Besides the standard task text/body, a quality task design involving precise distractors and embedded feedback (which assessment tasks do not include) can support training and contribute to deeper understanding along an ILP.

In addition to the randomization options provided by the LMS, task sequences are adjustable, allowing students to make decisions and to take personal responsibility for their ILP.
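A minimal sketch of combining randomization with a student-adjustable sequence, assuming that the LMS shuffles the tasks and the student may then pull one chosen task to the front. The function `build_sequence` and the task identifiers are hypothetical, and Python's `random.Random` only stands in for whatever randomization the LMS actually applies.

```python
import random
from typing import List, Optional, Sequence


def build_sequence(task_ids: Sequence[str], rng: random.Random,
                   student_first: Optional[str] = None) -> List[str]:
    """Shuffle the tasks, then move the task chosen by the student to the front."""
    sequence = list(task_ids)            # copy so the caller's list is not modified
    rng.shuffle(sequence)
    if student_first is not None:
        sequence.remove(student_first)   # raises ValueError if the task is unknown
        sequence.insert(0, student_first)
    return sequence


print(build_sequence(["t1", "t2", "t3", "t4"], random.Random(42), student_first="t3"))
```

Seeding the generator makes a given shuffled order reproducible, while the optional `student_first` argument models the student's own decision about where the path starts.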

5.2 Further Considerations for Re-design, Evaluation, and Scale

In the previous sub-section, we discussed possible designs and exemplified the contextual implementation of micro-content and micro-activities that enable individual knowledge building and exploit the benefits of adaptive microlearning in higher education settings, in line with the approach suggested by Gherman, Turcu C.E., and Turcu C.O. (2021). Yet, personalized and adaptive learning are not without limitations (Tan, Soler, Pivot, Zhang, & Wang, 2020). We consider a two-part process for reviewing and evaluating the designed tasks and feedback adaptations (Gierl & Lai, 2016) vital for their quality; this process will be undertaken during a later phase of the DR cycle. Further, transferring the applied adaptive strategies to other courses is not straightforward. Some issues of item generation and scale with regard to sustainability are mentioned by Soares, Lopes, & Nunes (2019). We currently consider two ways to scale: through competency frameworks, at either an administrative or a course level, and through automation with pivot tables and additional modifications. With competency frameworks, students’ achievements can be digitally traced and reported in support of outcome-based education across many courses over the long term, during the whole study programme, which is valuable for their future careers. Furthermore, we point out that these results can be extrapolated beyond educational coursework, because these aspects may also apply to professional working environments. Lastly, novel mobile touch devices, such as smartphones, may require a re-design of the adaptive strategies or technical interventions in the LMS, in line with Papadakis, Kalogiannakis, Sifaki, and Vidakis (2018).
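Scaling via competency frameworks presupposes that achievements can be aggregated per student and competency, much like a pivot table. The following sketch computes the share of achieved tasks per student and competency using only the standard library; the record format, the function `competency_pivot`, and the labels are hypothetical and do not correspond to an export format of Moodle's competency frameworks.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def competency_pivot(records: Iterable[Tuple[str, str, bool]]) -> Dict[str, Dict[str, float]]:
    """Pivot (student, competency, achieved) records into achievement shares per cell."""
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))  # [achieved, attempted]
    for student, competency, achieved in records:
        cell = counts[student][competency]
        cell[0] += int(achieved)
        cell[1] += 1
    return {
        student: {comp: hits / total for comp, (hits, total) in comps.items()}
        for student, comps in counts.items()
    }


records = [("s1", "C1", True), ("s1", "C1", False), ("s1", "C2", True), ("s2", "C1", True)]
print(competency_pivot(records))
```

Because the aggregation only depends on competency labels, the same records could in principle be pooled across several courses of a study programme.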

6 CONCLUSIONS

Providing subject-specific content in the LMS in the usual weekly structure is no longer equally effective for students and does not meet their needs. This paper emphasizes the importance of shifting the educational focus from content delivery towards a didactically sound adaptive design of micro-content and micro-activities in innovative tertiary education (discussed in section 3). Individualized learning pathways are made didactically possible by adaptive learning strategies, such as feedback adaptations, task design, and task sequence design, which were technically implemented through the intelligent features of the LMS Moodle for modern educational delivery and illustrated with contextual examples (in section 5). The proposed fine-grained learning activities were designed in a pre-study based on a literature review and on learning theory about competencies in higher education. Their further (re)design, testing, and evaluation will follow a complete DR cycle including iterative design experiments (methodology presented in section 4). The challenges of detecting, recognizing, and supporting the full diversity of individual learning styles, changing learning progress, and context-dependent accessibility of learning open avenues for further research.

ACKNOWLEDGEMENTS

The research work presented in this paper is

undertaken at the University of Applied Sciences,

Hochschule für Technik und Wirtschaft Berlin,


Germany, within the project “Curriculum Innovation Hub”, funded by Stiftung Innovation in der Hochschullehre.

REFERENCES

Ajroud, H. B., Tnazefti-Kerkeni, I., & Talon, B. (2021). ADOPT: A Trace based Adaptive System. In CSEDU (2) (pp. 233-239).

Alasalmi, T. (2021). Students’ Expectations on Learning Analytics: Learning Platform Features Supporting Self-regulated Learning. In CSEDU (2) (pp. 131-140).

Al-Azawei, A., & Badii, A. (2014). State of the art of Learning Style Based Adaptive Educational Hypermedia Systems (LS-BAEHSS). International Journal of Computer Science & Information Technology, 6(3), 1-19.

Belicová, S., Lacsný, B., & Teleki, A. (2018). E-Homework with Feedback on the Topic of Vector Sum of Forces and Vectors in the E-Learning Environment Moodle and its Analysis. In EDULEARN18 Proceedings (pp. 8271-8274).

Bottomley, S., & Denny, P. (2011). A participatory learning approach to biochemistry using student authored and evaluated multiple-choice questions. https://doi.org/10.1002/bmb.20526

Boussaha, K., & Drissi, S. (2021). Collaborative Tutoring

System Adaptive for Tutor's Learning Styles based on

Felder Silverman Model. In CSEDU (2) (pp. 200-207).

Cavanagh, S. (2014). What is “personalized learning”?

Educators seek clarity. Education Week, 34(9), S2–S4.

Donevska-Todorova, A., Trgalová, J., Schreiber, C., &

Rojano, T. (2021). Quality of task design in technology-

enhanced resources for teaching and learning

mathematics. In Mathematics Education in the Digital

Age: Learning, Practice and Theory (pp. 23-41).

Routledge.

Donevska-Todorova, A., & Turgut, M. (2022). Epistemic Potentials and Challenges with Digital Collaborative Concept Maps in Undergraduate Linear Algebra. In The Proceedings of the Twelfth Congress of the European Society for Research in Mathematics Education TWG14 (CERME12, February 2 – 5, 2022). Bolzano, Italy (in press).

Elmabaredy, A., Elkholy, E., & Tolba, A. A. (2020). Web-

based adaptive presentation techniques to enhance

learning outcomes in higher education. Research and

Practice in Technology Enhanced Learning, 15(1), 1-

18.

Fatahi, S. (2019). An experimental study on an adaptive e-

learning environment based on learner’s personality

and emotion. Education and Information Technologies,

24(4), 2225–2241. https://doi.org/10.1007/s10639-019-09868-5

Gherman, O., Turcu, C.E., & Turcu, C.O. (2021). An Approach to Adaptive Microlearning in Higher Education. In INTED2021 Proceedings (pp. 7049-7056).

Gierl, M. J., & Lai, H. (2013). Instructional topics in

educational measurement (ITEMS) module: Using

automated processes to generate test items. Educational

Measurement: Issues and Practice, 32(3), 36-50.

Gierl, M. J., Lai, H., Hogan, J. B., & Matovinovic, D.

(2015). A method for generating educational test items

that are aligned to the common core state standards.

Journal of Applied Testing Technology, 16(1), 1-18.

Gierl, M. J., & Lai, H. (2016). A process for reviewing and

evaluating generated test items. Educational

Measurement: Issues and Practice, 35(4), 6-20.

https://doi.org/10.1111/emip.12129.

Grevtseva, Y., Willems, J., & Adachi, C. (2017, July).

Social media as a tool for microlearning in the context

of higher education. In Proceedings of European

Conference on Social Media (pp. 131-139).

Gutl, C., Lankmayr, K., Weinhofer, J., & Hofler, M. (2011).

Enhanced Automatic Question Creator--EAQC:

Concept, Development and Evaluation of an Automatic

Test Item Creation Tool to Foster Modern e-Education.

Electronic Journal of e-Learning, 9(1), 23-38.

Kelly, A. E., Lesh, R. A., & Baek, J. Y. (Eds.) (2008).

Handbook of design research methods in education:

Innovations in science, technology, engineering, and

mathematics education. New York: Routledge.

Lindner, M. (2006). Use these tools, your mind will follow.

Learning in immersive micromedia and

microknowledge environments. In: The Next

Generation: Research Proceedings of the 13th ALT-C

Conference (pp. 41–49).

Liu, M., McKelroy, E., Corliss, S. B., & Carrigan, J. (2017).

Investigating the effect of an adaptive learning

intervention on students’ learning. Educational

Technology Research and Development, 65(6), 1605–

1625. https://doi.org/10.1007/s11423-017-9542-1.

Lockspeiser, T. M., & Kaul, P. (2016). Using

individualized learning plans to facilitate learner-

centered teaching. Journal of Pediatric and Adolescent

Gynecology, 29(3), 214–217.

https://doi.org/10.1016/j.jpag.2015.10.020.

Maier, U. (2021). Self-referenced vs. reward-based

feedback messages in online courses with formative

mastery assessments: A randomized controlled trial in

secondary classrooms. Computers & Education, 174,

104306.

Makhlouf, J., & Mine, T. (2021). Mining Students’ Comments to Build an Automated Feedback System. In Proceedings of the 13th International Conference on Computer Supported Education (CSEDU 2021) - Volume 1 (pp. 15-25). https://doi.org/10.5220/0010372200150025

Morze, N., Varchenko-Trotsenko, L., Terletska, T., &

Smyrnova-Trybulska, E. (2021). Implementation of

adaptive learning at higher education institutions by

means of Moodle LMS. In Journal of Physics:

Conference Series (Vol. 1840, No. 1, p. 012062). IOP

Publishing.

Nicol, D. (2007). E-assessment by design: using multiple-choice tests to good effect. https://doi.org/10.1080/03098770601167922


Organisation for Economic Co-operation and Development

(OECD). (2006). Are students ready for a technology-

rich world? What PISA studies tell us. Retrieved from

http://www.oecd.org on 25.11.2021.

Osadcha, K., Osadchyi, V., Semerikov, S., Chemerys, H.,

& Chorna, A. (2020). The review of the adaptive

learning systems for the formation of individual

educational trajectory. CEUR Workshop Proceedings.

Papadakis, S., Kalogiannakis, M., Sifaki, E., & Vidakis, N.

(2018). Evaluating Moodle use via Smart Mobile

Phones. A case study in a Greek University. EAI

Endorsed Transactions on Creative Technologies,

5(16).

Psillos, D., & Kariotoglou, P. (Eds.). (2015). Iterative

design of teaching-learning sequences: introducing the

science of materials in European schools. Springer.

Ramos De Melo, F., Flôres, E. L., Diniz De Carvalho, S.,

Gonçalves De Teixeira, R. A., Batista Loja, L. F., & De

Sousa Gomide, R. (2014). Computational organization

of didactic contents for personalized virtual learning

environments. Computers & Education, 79, 126–137.

https://doi.org/10.1016/j.compedu.2014.07.012.

Saito, T., & Watanobe, Y. (2020). Learning path recommendation system for programming education based on neural networks. International Journal of Distance Education Technologies (IJDET), 18(1), 36–64.

Scheiter, K., Schubert, C., Schüler, A., Schmidt, H.,

Zimmermann, G., Wassermann, B., Krebs, M.-C. &

Eder, T. (2019). Adaptive multimedia: Using gaze-

contingent instructional guidance to provide

personalized processing support. Computers &

Education, 139, 31-47.

https://doi.org/10.1016/j.compedu.2019.05.005.

Shemshack, A., & Spector, J. M. (2020). A systematic

literature review of personalized learning terms. Smart

Learning Environments, 7(1), 1-20.

Soares, F., Lopes, A.P., & Nunes, M.P. (2019). Teaching and Learning Through Adaptive Strategies – A Case in Higher Education. In EDULEARN19 Proceedings (pp. 170-179).

Tan, Q., Soler, R., Pivot, F., Zhang, X., & Wang, H. (2020). Introspection of Personalized and Adaptive Learning. In INTED2020 Proceedings (pp. 8054-8061).

Towle, B., & Halm, M. (2005). Designing Adaptive Learning Environments with Learning Design. In R. Koper & C. Tattersall (Eds.), Learning Design. Springer, Berlin, Heidelberg. https://doi.org/10.1007/3-540-27360-3_12

U.S. Department of Education, Office of Educational Technology (2017). Reimagining the role of technology in education: 2017 national education technology plan update. Retrieved from https://tech.ed.gov/files/2017/01/NETP17.pdf on 25.11.2021.

Vanitha, V., & Krishnan, P. (2019). A modified ant colony algorithm for personalized learning path construction. Journal of Intelligent & Fuzzy Systems, 37(5), 6785–6800.
