Training AI to Recognize Realizable Gauss Diagrams: The Same Instances Confound AI and Human Mathematicians

Abdullah Khan (1, a), Alexei Lisitsa (2, b) and Alexei Vernitski (1, c)

1 Department of Mathematical Sciences, University of Essex, Essex, U.K.

2 Department of Computer Science, University of Liverpool, Liverpool, U.K.

Keywords:

Computational Topology, Gauss Diagrams, Realizable Diagrams, Machine Learning.

Abstract:

Recent research in computational topology found sets of counterexamples demonstrating that several recent

mathematical articles purporting to describe a mathematical concept of realizable Gauss diagrams contain a

mistake. In this study we propose several ways of encoding Gauss diagrams as binary matrices, and train

several classical ML models to recognise whether a Gauss diagram is realizable or unrealizable. We test their

accuracy in general, on the one hand, and on the counterexamples, on the other hand. Intriguingly, accuracy

is good in general and surprisingly bad on the counterexamples. Thus, although human mathematicians and

AI perceive Gauss diagrams completely differently, they tend to make the same mistake when describing

realizable Gauss diagrams.

1 INTRODUCTION

The concept of realizable Gauss diagrams belongs to the mathematical area of topology and, more specifically, the study of closed planar curves. For a closed planar curve, such as the one shown in (Fig. 1, a), its Gauss code (or Gauss word) can be obtained by labelling all intersection points with different symbols (or numbers) and then travelling all the way along the curve and writing down the labels encountered on the way. For example, one of the Gauss codes of the curve shown in (Fig. 1, a) is 123123. It is easy to see that the Gauss code of a curve with n intersection points has length 2n and is a double occurrence word, that is, each symbol occurs exactly twice in it. With any double occurrence word w one can associate its chord diagram; it consists of a circle with all symbols of w placed clockwise around the circle, and chords which link the points labelled by the same symbol, as in (Fig. 1, b).

If a double occurrence word and its corresponding

chord diagram can be obtained from a planar curve,

both the word and the diagram are called realizable.

Not every Gauss diagram is realizable; for example,

the diagrams in (Fig. 2) and (Fig. 3) are not realizable.

a https://orcid.org/0000-0002-3056-008x

b https://orcid.org/0000-0002-3820-643x

c https://orcid.org/0000-0003-0179-9099

Figure 1: a) a planar curve; b) its Gauss diagram; c) its interlacement graph. The corresponding Gauss word is 123123.

Khan, A., Lisitsa, A. and Vernitski, A.
Training AI to Recognize Realizable Gauss Diagrams: The Same Instances Confound AI and Human Mathematicians.
DOI: 10.5220/0010992700003116
In Proceedings of the 14th International Conference on Agents and Artificial Intelligence (ICAART 2022) - Volume 3, pages 990-995
ISBN: 978-989-758-547-0; ISSN: 2184-433X
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In the 1840s Gauss (Gauss, 1900) asked which chord diagrams are realizable, and gave a necessary but not sufficient condition: in a realizable diagram every chord intersects an even number of other chords. We will refer to the chord diagrams satisfying this condition as Gauss diagrams. A full characterisation of realizability was first provided in the 1930s by Dehn (Dehn, 1936). Dozens of variants and reformulations of the criteria and algorithms for checking realizability have appeared since then, see e.g. (Marx, 1969; Francis, 1969; Lovász and Marx, 1976; Rosenstiehl, 1976; Rosenstiehl and Tarjan, 1984; Dowker and Thistlethwaite, 1983; Kurlin, 2008; Shtylla et al., 2009). It was shown in (Rosenstiehl, 1976) that the realizability of a Gauss diagram can be decided using only its interlacement graph. An interlacement graph of a chord diagram is an undirected graph whose vertices are the chords of the diagram, and in which there is an edge between two vertices if the chords corresponding to these vertices intersect. For example, (Fig. 1, c) is the interlacement graph of (Fig. 1, b). In the experiments in this paper we deal only with prime Gauss diagrams, that is, those whose interlacement graph is connected (as the name suggests, if a Gauss diagram is not prime then it can be decomposed into parts which are prime Gauss diagrams, so if one wants to check whether the Gauss diagram is realizable, it can be done individually for each part).
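The notions introduced so far (double occurrence word, interlacement graph, Gauss's parity condition, primality) can be illustrated with a minimal Python sketch. The function names are hypothetical and are not taken from Gauss-lintel; the sketch only restates the definitions above and does not decide realizability.

```python
from itertools import combinations


def chord_positions(word):
    """Map each symbol of a double occurrence word to its two positions."""
    positions = {}
    for index, symbol in enumerate(word):
        positions.setdefault(symbol, []).append(index)
    if any(len(p) != 2 for p in positions.values()):
        raise ValueError("every symbol must occur exactly twice")
    return positions


def interlacement_graph(word):
    """Return the interlacement graph as {chord: set of intersecting chords}."""
    positions = chord_positions(word)
    graph = {symbol: set() for symbol in positions}
    for a, b in combinations(positions, 2):
        (a1, a2), (b1, b2) = positions[a], positions[b]
        # Chords a and b intersect exactly when one endpoint of b,
        # but not both, lies between the two endpoints of a.
        if (a1 < b1 < a2) != (a1 < b2 < a2):
            graph[a].add(b)
            graph[b].add(a)
    return graph


def satisfies_gauss_condition(word):
    """Gauss's necessary condition: every chord meets an even number of chords."""
    return all(len(nbrs) % 2 == 0 for nbrs in interlacement_graph(word).values())


def is_prime(word):
    """A diagram is prime when its interlacement graph is connected."""
    graph = interlacement_graph(word)
    if not graph:
        return False
    start = next(iter(graph))
    seen, stack = {start}, [start]
    while stack:
        vertex = stack.pop()
        for neighbour in graph[vertex] - seen:
            seen.add(neighbour)
            stack.append(neighbour)
    return len(seen) == len(graph)


trefoil = "123123"
print(interlacement_graph(trefoil))
print(satisfies_gauss_condition(trefoil), is_prime(trefoil))
```

For the word 123123 every pair of chords intersects, so the graph is a triangle; the word 1212 fails the parity condition, and 1122 satisfies it but is not prime.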

In recent studies (Grinblat and Lopatkin, 2018; Grinblat and Lopatkin, 2020; Biryukov, 2019) very simple realizability conditions were formulated, expressible in terms of the interlacement graphs. However, it was later shown in (Khan et al., 2021b; Khan et al., 2021a) that these conditions are necessary but not sufficient, and explicit counterexamples were found. This is not the only case of a mistake being found in mathematical publications, but it has created a unique opportunity: the counterexamples are numerous and reasonably small, and can therefore be included in datasets for machine learning.

In this paper we approach the classical concept of Gauss realizability from a new angle, that of machine learning. Specifically, we explore whether realizability can be learned by a classical model, the multi-layer perceptron, using four different encodings of Gauss diagrams. We show that the encodings which we denote by IG and SM yield the highest accuracy of trained models. We further show that the accuracy of trained models drops dramatically when tested on those diagrams from (Khan et al., 2021b; Khan et al., 2021a) which are counterexamples to the realizability conditions in (Grinblat and Lopatkin, 2018; Biryukov, 2019; Grinblat and Lopatkin, 2020). Thus, although human mathematicians and AI perceive Gauss diagrams completely differently, it seems that they experience difficulties on the same family of Gauss diagrams.

2 STUDY DESIGN

2.1 Encodings of Gauss Diagrams

Before we can use machine learning to recognize

properties of Gauss diagrams, we need to represent

Gauss diagrams using suitable encodings. We have

identified four natural encodings for Gauss diagrams,

which we denote OH, SM, PM, IG. They are de-

scribed below. A priori it is not clear which encoding

will perform best in machine learning and be most ac-

curate.

Note that from the mathematical point of view,

each of these encodings is a binary matrix, but for

the purposes of machine learning, in our experiments

we concatenate all rows of this matrix into a one-

dimensional binary array.

As you will see, the size of the four encodings is

not the same. Let us list the sizes here together, for

convenience.

• OH: $n \times 2n = 2n^2$

• SM: $2n \times 2n = 4n^2$

• PM: $n \times n = n^2$

• IG: $n \times n = n^2$

2.1.1 One-hot Encoding of Gauss Words (OH)

Given a Gauss diagram with n chords, label its chords

with elements of {1, . . . , n} and represent it as a Gauss

word w (recall that a Gauss word is built by travelling

around the Gauss diagram and recording what chords

are observed). Then encode the symbols of the Gauss

word using one-hot encoding (see e.g. (Zheng and

Casari, 2018)). This means that we build a binary matrix OH of size $n \times 2n$ such that $OH_{ij} = 1$ (or 0) if the $j$-th symbol in w is (is not) $i$.
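As an illustration, the OH definition can be written as a short Python sketch. The function names are hypothetical; the word is assumed to be given as a list of integers from 1 to n.

```python
def one_hot_encoding(word, n):
    """Return the n x 2n matrix OH with OH[i][j] = 1 iff the j-th symbol of word is i+1."""
    return [[1 if symbol == i else 0 for symbol in word] for i in range(1, n + 1)]


def flatten(matrix):
    """Concatenate all rows into one binary array, as done before training."""
    return [bit for row in matrix for bit in row]


trefoil = [1, 2, 3, 1, 2, 3]
oh = one_hot_encoding(trefoil, 3)
for row in oh:
    print(row)
print(flatten(oh))
```

Each column of OH contains exactly one 1 and each row exactly two, which reflects the fact that w is a double occurrence word.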

2.1.2 Sparse Matrix Encoding (SM)

Here again, first use a Gauss word w to represent a

given Gauss diagram, but then w is encoded differently. Build a binary matrix SM of size $2n \times 2n$ such that $SM_{ij} = 1$ (or 0) if the $i$-th symbol in w is equal to (is not equal to) the $j$-th symbol in w.
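A minimal Python sketch of the SM definition follows; the function name is hypothetical.

```python
def sparse_matrix_encoding(word):
    """Return the 2n x 2n matrix SM with SM[i][j] = 1 iff word[i] == word[j]."""
    return [[1 if a == b else 0 for b in word] for a in word]


trefoil = [1, 2, 3, 1, 2, 3]
for row in sparse_matrix_encoding(trefoil):
    print(row)
```

By construction the matrix is symmetric with ones on the diagonal, and every row contains exactly two ones, so only 4n of its 4n^2 entries are non-zero.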

2.1.3 Permutation Matrix Encoding (PM)

Again, given a Gauss diagram, represent it by a Gauss

word w. When we use this encoding, we assume

that the diagram is a Gauss diagram, that is, satisfies

Gauss’s condition stated in Section 1, and not merely

a chord diagram. Then each symbol {1, . . . , n} oc-

curring in w occurs exactly once at an odd-numbered

position in w and exactly once at an even-numbered

position in w. Build a binary matrix PM of size $n \times n$ such that $PM_{ij} = 1$ (or 0) if the symbol at the $i$-th odd position in w (that is, the symbol at position $2i - 1$) is equal to (is not equal to) the symbol at the $j$-th even position in w (that is, the symbol at position $2j$). Equivalently, one can say that matrix PM is produced from the $2n \times 2n$ matrix SM of the SM encoding by deleting all even-numbered rows and odd-numbered columns in SM. Yet another way to think of this matrix is to notice that all symbols {1, . . . , n} occur at odd-numbered positions in w, and all symbols {1, . . . , n} occur at even-numbered positions in w; however, the order in which they appear is different; the matrix PM is the permutation matrix changing one of these orders into the other.
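The two equivalent descriptions of PM can be compared in a small Python sketch. The function names are hypothetical; Python lists are 0-based, so the odd-numbered positions of w are the slice `word[0::2]` and the even-numbered positions are `word[1::2]`.

```python
def permutation_matrix_encoding(word):
    """Return PM with PM[i][j] = 1 iff the i-th odd symbol equals the j-th even symbol."""
    odd_symbols = word[0::2]   # positions 1, 3, 5, ... in 1-based numbering
    even_symbols = word[1::2]  # positions 2, 4, 6, ... in 1-based numbering
    if len(set(odd_symbols)) != len(odd_symbols) or sorted(odd_symbols) != sorted(even_symbols):
        raise ValueError("word does not satisfy Gauss's parity condition")
    return [[1 if o == e else 0 for e in even_symbols] for o in odd_symbols]


def pm_from_sm(word):
    """Same matrix, obtained from SM by keeping odd-numbered rows and even-numbered columns."""
    sm = [[1 if a == b else 0 for b in word] for a in word]
    return [row[1::2] for row in sm[0::2]]


trefoil = [1, 2, 3, 1, 2, 3]
print(permutation_matrix_encoding(trefoil))
print(pm_from_sm(trefoil) == permutation_matrix_encoding(trefoil))
```

The check in `permutation_matrix_encoding` rejects words such as 1212, in which a symbol occupies two positions of the same parity.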

2.1.4 Interlacement Graph Encoding (IG)

The adjacency matrix of the interlacement graph of a

Gauss diagram is used as an encoding. For a diagram

with n chords its size is $n^2$. A practical way of constructing this matrix is this: given a Gauss diagram, represent it by a Gauss word w; then build a binary matrix IG of size $n \times n$ such that $IG_{ij} = 1$ (or 0) if in w there is an odd (even) number of occurrences of symbol $i$ between the two occurrences of symbol $j$.
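The practical construction described in this subsection can be sketched in Python as follows. The function name is hypothetical, and the sketch is computed directly from the textual definition (counting occurrences of one symbol between the two occurrences of another).

```python
def interlacement_graph_encoding(word, n):
    """Return IG with IG[i][j] = 1 iff symbol i+1 occurs an odd number of
    times between the two occurrences of symbol j+1 in word."""
    first, last = {}, {}
    for position, symbol in enumerate(word):
        if symbol in first:
            last[symbol] = position
        else:
            first[symbol] = position
    matrix = [[0] * n for _ in range(n)]
    for j in range(1, n + 1):
        between = word[first[j] + 1:last[j]]  # symbols strictly between the two j's
        for i in range(1, n + 1):
            matrix[i - 1][j - 1] = between.count(i) % 2
    return matrix


trefoil = [1, 2, 3, 1, 2, 3]
for row in interlacement_graph_encoding(trefoil, 3):
    print(row)
```

Since every symbol occurs exactly twice, an odd count means exactly one occurrence, which is the case precisely when the two chords intersect; the resulting matrix is therefore symmetric with a zero diagonal. For the word 123123 every pair of chords intersects, so the sketch returns the adjacency matrix of a triangle.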

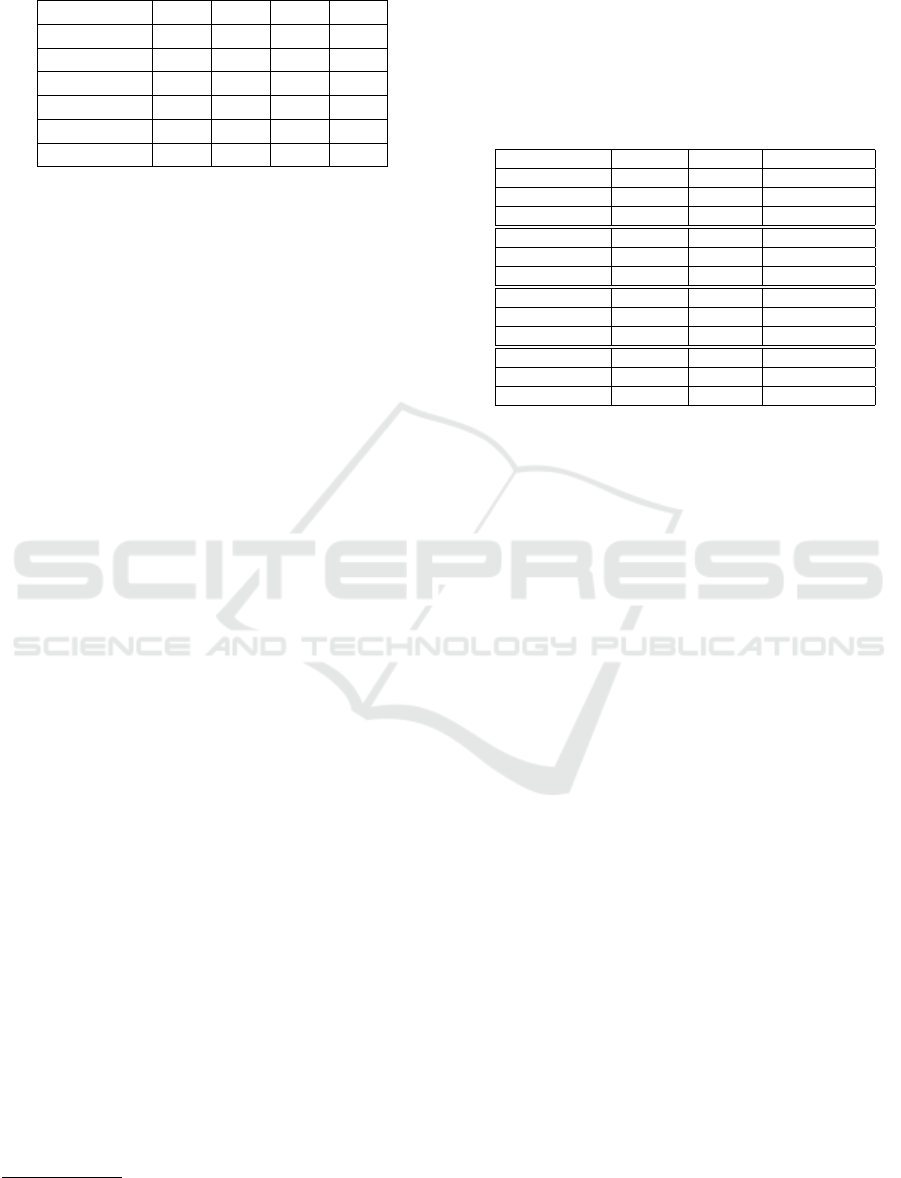

Table 1: Different encodings of the “trefoil” planar curve shown in (Fig. 1, a): i) one-hot; ii) sparse matrix; iii) permutation matrix; iv) interlacement graph. Incidentally iii) and iv) are the same for this example. In general PM and IG encodings are different.

i)
1 0 0 1 0 0
0 1 0 0 1 0
0 0 1 0 0 1

ii)
1 0 0 1 0 0
0 1 0 0 1 0
0 0 1 0 0 1
1 0 0 1 0 0
0 1 0 0 1 0
0 0 1 0 0 1

iii)
0 1 0
0 0 1
1 0 0

iv)
0 1 0
0 0 1
1 0 0

2.2 Machine Learning Model

We consider the problem of supervised learning of a binary classifier for the realizability property of Gauss diagrams. While there are many machine learning approaches which could be applied to this task, we confine ourselves to the classical model of the multi-layer perceptron (Rosenblatt, 1958; Rosenblatt, 1961; Rumelhart et al., 1986) and its implementation in the WEKA Workbench for Data Mining (Witten et al., 2016). The multi-layer perceptron (MLP) is a kind of feedforward neural network model which supports supervised learning using backpropagation (Rumelhart et al., 1986). It is one of the oldest and best-studied models of machine learning, and is also known to be a universal approximator (Cybenko, 1989; Hornik et al., 1989). The MLP implementation in WEKA supports the sigmoid activation function (Han and Moraga, 1995). In the initial experiments we have used the default settings of MLP in the WEKA Workbench:

L = 0.3 (learning rate)

M = 0.2 (momentum rate)

N = 500 (number of epochs to train)

V = 0 (percentage size of validation set to use to terminate training)

S = 0 (seed for Random Number Generator)

E = 20 (threshold for number of consecutive errors to use to terminate training)

H = a (one hidden layer with (attribs + classes) / 2 nodes)
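To make the setting H = a concrete, the following Python sketch computes the size of the hidden layer for each encoding from the encoding sizes listed in Section 2.1 and the two classes (realizable, non-realizable). The names are hypothetical, and rounding down when (attribs + classes) is odd is an assumption of this sketch.

```python
# Number of binary attributes per encoding for a diagram with n chords (Section 2.1).
ENCODING_SIZES = {
    "OH": lambda n: 2 * n * n,
    "SM": lambda n: 4 * n * n,
    "PM": lambda n: n * n,
    "IG": lambda n: n * n,
}
NUM_CLASSES = 2  # realizable / non-realizable


def hidden_nodes(encoding, n):
    """Hidden layer size for H = a, i.e. (attribs + classes) / 2 (rounded down here)."""
    attribs = ENCODING_SIZES[encoding](n)
    return (attribs + NUM_CLASSES) // 2


for encoding in ("OH", "SM", "PM", "IG"):
    print(encoding, [hidden_nodes(encoding, n) for n in (8, 9, 10, 11, 12)])
```

Under this assumption, for n = 12 the hidden layer has 145 nodes for OH, 289 for SM and 73 for PM and IG, so the network for SM is by far the largest.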

2.3 Data Sets

We have used the open source tool Gauss-lintel (Khan et al., 2021c) to generate various datasets. For that purpose the tool was extended to handle new types of encodings. The main encoding used in Gauss-lintel is permutation based (Khan et al., 2021d), so its translation to PM is trivial, while the OH, SM and IG encodings introduced in Section 2.1 were implemented additionally by translation from PM.

Originally Gauss-lintel was used for exhaustive

generation of classes of Gauss diagrams satisfying

different properties. For the purpose of this work

it was extended by the procedure for generation

of random Gauss diagrams using built-in predicate

random_permutation(+List, -Permutation) in

SWI-Prolog (Wielemaker et al., 2012).
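The following Python sketch illustrates one way in which a random permutation can be turned into a random Gauss diagram. It is not the Gauss-lintel procedure, which is written in Prolog; the function name and the convention of placing the symbols 1, ..., n at the odd positions in increasing order are assumptions of this sketch. Any word in which each symbol occupies one odd and one even position satisfies Gauss's parity condition, so the output is a Gauss diagram, but it still has to be checked for primality and labelled as realizable or non-realizable by a separate algorithm.

```python
import random


def random_gauss_word(n, rng=None):
    """Interleave 1..n (odd positions) with a random permutation (even positions)."""
    rng = rng or random.Random()
    even_symbols = list(range(1, n + 1))
    rng.shuffle(even_symbols)  # plays the role of random_permutation/2
    word = []
    for odd_symbol, even_symbol in zip(range(1, n + 1), even_symbols):
        word.extend([odd_symbol, even_symbol])
    return word


word = random_gauss_word(9, random.Random(0))
print(word)
```

With this convention the even-position symbols, read from left to right, are exactly the permutation encoded by the PM matrix of the word.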

The datasets were generated in Attribute-Relation

File Format (ARFF) acceptable by WEKA. We use

notation like IG-9-1000x2 to denote a dataset of

Gauss diagrams with 9 chords in IG encoding con-

taining 1000 random realizable and 1000 random

non-realizable Gauss diagrams.
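As an illustration of the dataset format, the sketch below writes flattened binary encodings to ARFF text. The attribute names (`b0`, `b1`, ...), the class labels and the relation name are hypothetical choices made for this sketch; the layout of the datasets actually used in the study may differ.

```python
def to_arff(relation, rows):
    """Build ARFF text from rows of (flat list of bits, class label)."""
    width = len(rows[0][0])
    lines = [f"@relation {relation}", ""]
    # One nominal {0,1} attribute per entry of the flattened binary matrix.
    lines += [f"@attribute b{k} {{0,1}}" for k in range(width)]
    lines.append("@attribute class {realizable,unrealizable}")
    lines += ["", "@data"]
    for bits, label in rows:
        lines.append(",".join(str(bit) for bit in bits) + "," + label)
    return "\n".join(lines) + "\n"


# Flattened interlacement matrix of the word 123123 (a triangle), which is realizable.
rows = [([0, 1, 1, 1, 0, 1, 1, 1, 0], "realizable")]
print(to_arff("IG-3-demo", rows))
```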

3 EXPERIMENTS AND

DISCUSSION

We have performed two types of experiments, reported in the following subsections.

3.1 Encodings Comparison

We have trained MLP binary classifier models for the

sets of random Gauss diagrams of various sizes for all

four encodings. We have used 80%/20% random split

of the datasets into training/testing datasets.

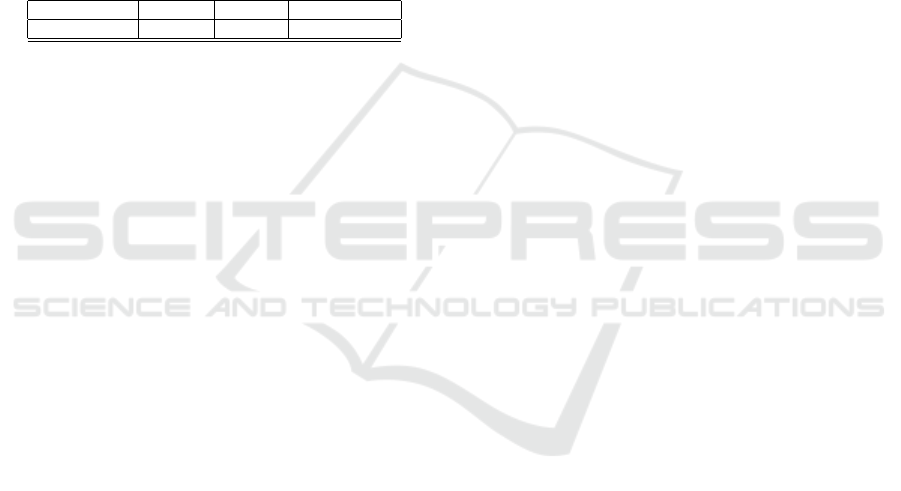

The results are summarized in the following table.

Table 2: The precision of MLP models trained with different Gauss diagram encodings.

| Dataset | OH | SM | PM | IG |
|---|---|---|---|---|
| 8-1000x2 | 0.88 | 0.87 | 0.85 | 0.91 |
| 9-1000x2 | 0.77 | 0.78 | 0.76 | 0.84 |
| 10-1000x2 | 0.72 | 0.74 | 0.70 | 0.75 |
| 10-2000x2 | 0.76 | 0.80 | 0.75 | 0.80 |
| 11-2000x2 | 0.67 | 0.77 | 0.74 | 0.76 |
| 12-2000x2 | 0.67 | 0.77 | 0.71 | 0.75 |

The table presents only weighted average precision; more detailed summaries of all experiments, including TP rate, FP rate, recall, F-measure and confusion matrices, can be found online together with all datasets used in this study¹. The reported results suggest that all encodings yield similar precision of learned models. For the smaller sizes 8-10 the IG encoding is the best or, on 10-2000x2, tied with SM for the best, while starting from size 11 the SM encoding slightly outperforms IG. One speculative explanation of the IG performance might be that the properties of interlacement graphs, which are themselves abstracted codes of the diagrams, determine realizability of the corresponding diagrams. So having “direct access” to the graph properties/features might be beneficial for machine learning of realizability. As to the SM encoding, its good performance might be attributed to the fact that it has the largest size of all encodings ($4n^2$ for $n$-crossing diagrams) and hence the largest hidden layer of neurons in the corresponding perceptron. This advantage of SM comes, though, at the cost of a much longer training time due to the increased size of the neural network.

The exploration of the wider class of ML models

and more rigorous account of the speculative expla-

nations are the topics for our further research here.

3.2 Models Behaviour on Special

Counterexamples Datasets

In (Khan et al., 2021a), using a computational approach and the Gauss-lintel tool, special sets of Gauss diagrams have been identified. They satisfy all criteria from (Grinblat and Lopatkin, 2018; Grinblat and Lopatkin, 2020; Biryukov, 2019) to be realizable, but are not in fact realizable. Thus these counterexamples invalidate the mentioned criteria and, informally speaking, have non-trivial reasons to be non-realizable. There are exactly 1, 6, 36 and 235 such diagrams of sizes 9, 10, 11 and 12, respectively. The diagrams of sizes 9 and 10, as well as the realizability conditions from (Biryukov, 2019), can be seen in Appendix A. In the second series of experiments we compared the accuracy of learned MLP models when tested on random sets of diagrams (using an 80%/20% split as before) and on these special sets.

¹ https://doi.org/10.5281/zenodo.5797950

The results are summarised in the following table.

Table 3: The accuracy of MLP models when tested on random (R) and on special (S) sets of diagrams. The numbers of diagrams misclassified in the latter case are shown in the Misclassified column.

| Dataset | R testing | S testing | Misclassified |
|---|---|---|---|
| IG-10-2000x2 | 0.80 | 0.00 | 6 out of 6 |
| IG-11-2000x2 | 0.76 | 0.22 | 28 out of 36 |
| IG-12-2000x2 | 0.75 | 0.07 | 218 out of 235 |
| OH-10-2000x2 | 0.76 | 0.50 | 3 out of 6 |
| OH-11-2000x2 | 0.67 | 0.19 | 29 out of 36 |
| OH-12-2000x2 | 0.67 | 0.41 | 139 out of 235 |
| SM-10-2000x2 | 0.80 | 0.50 | 3 out of 6 |
| SM-11-2000x2 | 0.77 | 0.31 | 25 out of 36 |
| SM-12-2000x2 | 0.77 | 0.19 | 190 out of 235 |
| PM-10-2000x2 | 0.75 | 0.00 | 6 out of 6 |
| PM-11-2000x2 | 0.74 | 0.11 | 32 out of 36 |
| PM-12-2000x2 | 0.71 | 0.20 | 189 out of 235 |
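The S testing column is simply the fraction of special diagrams that were classified correctly. The short Python sketch below recomputes it from the Misclassified column of Table 3 and confirms that the two columns agree up to rounding to two decimal places; the variable and function names are hypothetical.

```python
# (dataset, reported S testing accuracy, misclassified, total), copied from Table 3.
TABLE_3 = [
    ("IG-10-2000x2", 0.00, 6, 6),
    ("IG-11-2000x2", 0.22, 28, 36),
    ("IG-12-2000x2", 0.07, 218, 235),
    ("OH-10-2000x2", 0.50, 3, 6),
    ("OH-11-2000x2", 0.19, 29, 36),
    ("OH-12-2000x2", 0.41, 139, 235),
    ("SM-10-2000x2", 0.50, 3, 6),
    ("SM-11-2000x2", 0.31, 25, 36),
    ("SM-12-2000x2", 0.19, 190, 235),
    ("PM-10-2000x2", 0.00, 6, 6),
    ("PM-11-2000x2", 0.11, 32, 36),
    ("PM-12-2000x2", 0.20, 189, 235),
]


def accuracy_on_special(misclassified, total):
    """Fraction of special diagrams classified correctly."""
    return (total - misclassified) / total


def inconsistent_rows(rows, tolerance=0.005):
    """Names of rows whose reported accuracy differs from the recomputed one."""
    return [
        name
        for name, reported, misclassified, total in rows
        if abs(accuracy_on_special(misclassified, total) - reported) > tolerance
    ]


for name, reported, misclassified, total in TABLE_3:
    print(name, reported, round(accuracy_on_special(misclassified, total), 4))
print(inconsistent_rows(TABLE_3))
```

Since all special diagrams are non-realizable, a classifier that always answered "non-realizable" would score 1.00 on S, which puts the values in the table in perspective.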

A quite surprising outcome of these experiments is that ML struggles to classify correctly the diagrams from these special sets, thereby confirming the inherent difficulty of recognizing them. Human mathematicians have made mistakes on these diagrams, and ML appears to be following humans. A speculative explanation of such behaviour of ML might be based on the observations that 1) these special diagrams are rare and 2) the majority of the diagrams have “simpler reasons” for non-realizability. So, if trained on random diagrams, ML can learn the simpler conditions covering the majority of the diagrams, but might have no chance to learn the more complicated conditions for the rare special diagrams because it does not see such diagrams during the training process.

To provide some empirical evidence for such reasoning we have performed one more experiment. We have split the set $S$ of 235 special diagrams of size 12 into two subsets $S_1$ and $S_2$ of sizes 100 and 135, respectively. $S_1$ is then used to replace 100 non-realizable diagrams in the dataset 12-2000x2. Then we used the modified dataset 12-2000x2M for training. The resulting model was tested on random diagrams (using an 80/20 split) and on diagrams from $S_2$. The first testing returned average precision 0.73 (a slight drop from the original 0.75 for the unmodified dataset), but the testing on $S_2$ yielded a rise of precision to 0.62 from the original 0.07 (for the unmodified dataset and testing on $S$); the baseline values 0.75 and 0.07 are those of the IG-12-2000x2 row of Table 3. So, it appears that seeing the special diagrams during training indeed helps to increase considerably the chances of correct classification of other diagrams from this set.

We believe that a more rigorous account of such explanations can be given by applying the recent approach to the learnability of relational properties using model counting, proposed in (Usman et al., 2020). This is a topic of our ongoing research.

One of the limitations of the presented study is that only one ML model has been used. We have quickly tested all classifiers implemented in WEKA, with default settings, on the instances of the realizability problem above, and the preliminary results suggest that only Random Forest (RF) (Breiman, 2001) yields learned models with precision comparable to MLP. Interestingly, in the observed cases RF also struggles to recognise special diagrams.

Table 4: The accuracy of the Random Forest model when tested on random (R) and on special (S) sets of diagrams. The numbers of diagrams misclassified in the latter case are shown in the Misclassified column.

| Dataset | R testing | S testing | Misclassified |
|---|---|---|---|
| IG-12-2000x2 | 0.84 | 0.03 | 227 out of 235 |

Systematic exploration of the experiments with al-

ternative ML models will be presented in the extended

version of this paper.

4 CONCLUSION

In this paper we have presented our initial experiments with machine learning applied to a classical problem of computational topology, namely the recognition of realizable Gauss diagrams. We have experimented with four different encodings and identified the encodings enabling the highest precision of learned models. We have discovered an interesting phenomenon: the performance of trained ML models drops dramatically when they are tested on special, recently discovered sets of diagrams which are counterexamples to the published realizability criteria. We proposed some speculative explanations and outlined further research directions to obtain a more rigorous account of the observed phenomena.

ACKNOWLEDGMENTS

This work was supported by the Leverhulme Trust

Research Project Grant RPG-2019-313.

REFERENCES

Biryukov, O. N. (2019). Parity conditions for realizability

of Gauss diagrams. Journal of Knot Theory and Its

Ramifications, 28(01):1950015.

Breiman, L. (2001). Random forests. Machine Learning,

45(1):5–32.

Cybenko, G. (1989). Approximation by superpositions of a

sigmoidal function. Mathematics of Control, Signals,

and Systems (MCSS), 2(4):303–314.

Dehn, M. (1936). Über kombinatorische Topologie. Acta

Math., 67:123–168.

Dowker, C. and Thistlethwaite, M. B. (1983). Classification

of knot projections. Topology and its Applications,

16(1):19 – 31.

Francis, G. K. (1969). Null genus realizability criterion for

abstract intersection sequences. Journal of Combina-

torial Theory, 7(4):331 – 341.

Gauss, C. (1900). Werke.

Grinblat, A. and Lopatkin, V. (2018). On realizability

of Gauss diagrams and constructions of meanders.

arxiv:1808.08542.

Grinblat, A. and Lopatkin, V. (2020). On realiz-

abilty of Gauss diagrams and constructions of mean-

ders. Journal of Knot Theory and Its Ramifications,

29(05):2050031.

Han, J. and Moraga, C. (1995). The influence of the sigmoid

function parameters on the speed of backpropagation

learning. In J., M. and F., S., editors, From Natural to

Artificial Neural Computation, LNCS, vol 930, pages

195–201. Springer Berlin Heidelberg.

Hornik, K., Stinchcombe, M., and White, H. (1989). Multi-

layer feedforward networks are universal approxima-

tors. Neural Networks, 2(5):359–366.

Khan, A., Lisitsa, A., Lopatkin, V., and Vernitski,

A. (2021a). Circle graphs (chord interlacement

graphs) of Gauss diagrams: Descriptions of re-

alizable gauss diagrams, algorithms, enumeration.

arxiv:2108.02873.

Khan, A., Lisitsa, A., and Vernitski, A. (2021b). Experi-

mental mathematics approach to Gauss diagrams real-

izability. arxiv:2103.02102.

Khan, A., Lisitsa, A., and Vernitski, A. (2021c). Gauss-

lint algorithms suite for Gauss diagrams generation

and analysis. Zenodo, 10.5281/zenodo.4574590,

https://doi.org/10.5281/zenodo.4574590.

Khan, A., Lisitsa, A., and Vernitski, A. (2021d). Gauss-

lintel, an algorithm suite for exploring chord dia-

grams. In Kamareddine, F. and Sacerdoti Coen,

C., editors, Intelligent Computer Mathematics, pages

197–202, Cham. Springer International Publishing.

Kurlin, V. (2008). Gauss paragraphs of classical links and a

characterization of virtual link groups. Mathematical

Proceedings of the Cambridge Philosophical Society,

145(1):129–140.

Lovász, L. and Marx, M. L. (1976). A forbidden substructure characterization of Gauss codes. Bull. Amer. Math. Soc., 82(1):121–122.

Marx, M. L. (1969). The Gauss realizability problem.

Proceedings of the American Mathematical Society,

22(3):610–613.

Rosenblatt, F. (1958). The perceptron: A probabilistic

model for information storage and organization in the

brain. Psychological Review, 65(6):386–408.


Rosenblatt, F. (1961). Principles of Neurodynamics: Per-

ceptrons and the Theory of Brain Mechanisms. Spar-

tan Books.

Rosenstiehl, P. (1976). Solution algébrique du problème de Gauss sur la permutation des points d’intersection d’une ou plusieurs courbes fermées du plan. C.R. Acad. Sci., t. 283, série A:551–553.

Rosenstiehl, P. and Tarjan, R. E. (1984). Gauss codes, pla-

nar hamiltonian graphs, and stack-sortable permuta-

tions. J. Algorithms, 5(3):375–390.

Rumelhart, D. E., Hinton, G. E., and Williams, R. J. (1986).

Learning internal representations by error propaga-

tion. In Rumelhart, D. E. and Mcclelland, J. L., edi-

tors, Parallel Distributed Processing: Explorations in

the Microstructure of Cognition, Volume 1: Founda-

tions, pages 318–362. MIT Press, Cambridge, MA.

Shtylla, B., Traldi, L., and Zulli, L. (2009). On the real-

ization of double occurrence words. Discret. Math.,

309(6):1769–1773.

Usman, M., Wang, W., Vasic, M., Wang, K., Vikalo, H.,

and Khurshid, S. (2020). A study of the learnability of

relational properties: model counting meets machine

learning (mcml). Proceedings of the 41st ACM SIG-

PLAN Conference on Programming Language Design

and Implementation.

Wielemaker, J., Schrijvers, T., Triska, M., and Lager, T.

(2012). SWI-Prolog. Theory and Practice of Logic

Programming, 12(1-2):67–96.

Witten, I. H., Frank, E., Hall, M. A., and Pal, C. J. (2016).

The WEKA Workbench. Online Appendix for Data

Mining, Fourth Edition: Practical Machine Learning

Tools and Techniques. Morgan Kaufmann Publishers

Inc., San Francisco, CA, USA, 4th edition.

Zheng, A. and Casari, A. (2018). Feature Engineering for Machine Learning. O’Reilly Media, Inc.

APPENDIX A

We present the realizability conditions for prime Gauss diagrams from (Biryukov, 2019), equivalent to those from (Grinblat and Lopatkin, 2018; Grinblat and Lopatkin, 2020) and formulated in terms of interlacement graphs. According to these conditions, a prime Gauss diagram g is realizable if and only if in its interlacement graph:

• each vertex has even degree (original Gauss condition);

• each pair of non-neighbouring vertices has an even number of common neighbours (possibly, zero);

• for any three pairwise connected vertices a, b, c ∈ V the sum of the number of vertices adjacent to a, but not adjacent to b nor c, and the number of vertices adjacent to b and c, but not adjacent to a, is even.
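The three conditions above can be checked mechanically on an interlacement graph, as in the following Python sketch. The function names are hypothetical, and two details are assumptions of this sketch rather than statements from (Biryukov, 2019): the third condition is checked for each choice of the distinguished vertex a within a triangle, and the vertices a, b, c themselves are excluded from the counts. Passing this check does not establish realizability, because the counterexamples discussed in this paper also pass it.

```python
from itertools import combinations


def interlacement_graph(word):
    """Return {chord: set of intersecting chords} for a double occurrence word."""
    positions = {}
    for index, symbol in enumerate(word):
        positions.setdefault(symbol, []).append(index)
    graph = {symbol: set() for symbol in positions}
    for a, b in combinations(positions, 2):
        (a1, a2), (b1, b2) = positions[a], positions[b]
        if (a1 < b1 < a2) != (a1 < b2 < a2):
            graph[a].add(b)
            graph[b].add(a)
    return graph


def satisfies_conditions(graph):
    """Check the three parity conditions listed above on an interlacement graph."""
    vertices = list(graph)
    # Condition 1: every vertex has even degree.
    if any(len(graph[v]) % 2 for v in vertices):
        return False
    # Condition 2: non-neighbouring vertices share an even number of neighbours.
    for u, v in combinations(vertices, 2):
        if v not in graph[u] and len(graph[u] & graph[v]) % 2:
            return False
    # Condition 3: parity condition for every triangle a, b, c.
    for triple in combinations(vertices, 3):
        if not all(y in graph[x] for x, y in combinations(triple, 2)):
            continue
        others = set(vertices) - set(triple)  # assumption: a, b, c are not counted
        for a in triple:
            b, c = (v for v in triple if v != a)
            only_a = {v for v in others if v in graph[a] - graph[b] - graph[c]}
            b_and_c = {v for v in others if v in (graph[b] & graph[c]) - graph[a]}
            if (len(only_a) + len(b_and_c)) % 2:
                return False
    return True


print(satisfies_conditions(interlacement_graph("123123")))
print(satisfies_conditions(interlacement_graph("1234512345")))
print(satisfies_conditions(interlacement_graph("1212")))
```

The words 123123 and 1234512345 have complete interlacement graphs and pass all three conditions, while 1212 already fails the first one.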

The following figures present counterexamples to

these conditions (n=9,10), that is diagrams which sat-

isfy the conditions, but are not realizable.

Figure 2: A counterexample diagram, n=9 (Khan et al.,

2021b).

Figure 3: All counterexamples for n=10 (Khan et al.,

2021b).
