Mobile Outdoor AR Application for Precise Visualization of Wind

Turbines using Digital Surface Models

Simon Burkard^a and Frank Fuchs-Kittowski^b

Institute of Environmental Computer Science, Hochschule für Technik und Wirtschaft (HTW) Berlin,

University of Applied Sciences, Wilhelminenhofstr. 75a, 12459 Berlin, Germany

Keywords: Mobile Outdoor Augmented Reality, Geospatial Data, Digital Surface Model, Visualization, Wind Turbine.

Abstract: Realistic visualizations illustrating the visual impact of planned large structures and buildings in the landscape

are challenging and often difficult to create. In this paper, a mobile outdoor augmented reality application is

presented that enables realistic and immediate on-site visualization of planned wind turbines at their intended

geographic location superimposed on the live camera image of mobile devices. For this purpose, a manual

localization procedure is described that uses 3D geospatial models (e.g., digital surface models) displayed in

the camera view to enable precise global orientation and positioning of the mobile device resulting in very

realistic AR visualizations. In addition, the functions, implementation details and evaluation results of the

mobile application are presented.

1 INTRODUCTION

Geospatial data in Geographic Information Systems

(GIS) are an important basis for planning, monitoring

and controlling work processes in business and public

administration. Mobile Augmented Reality (mAR)

offers a new Graphical User Interface (GUI)

paradigm (Höllerer & Feiner, 2001) for even more

intuitive and versatile geospatial data display and

manipulation in the immediate application context

(Hugues et al., 2011): with mAR, geospatial data are

superimposed directly into the camera image of a

mobile device, overlaying the real environment, e.g.,

to display previous factual states, target values, or

planned actions in the live image during planning and

acquisition tasks (Langlotz et al., 2012; Schall et al.,

2009). Due to this true-to-life representation of

geospatial data directly in the camera view of the real

environment, there is an enormous potential to make

work processes with geospatial data in the field

easier, more efficient, and more effective (Fuchs-

Kittowski & Burkard, 2019).

Mobile AR visualizations are therefore also a

suitable tool for flexibly showing the visual impact of

planned large structures and buildings (e.g., skyscrapers, bridges, power lines) in the landscape on site. Compared to traditional forms of visualization (e.g., paper-based photo montage), AR visualizations allow a direct view of the impact of such buildings on landscape aesthetics: on site, immediately, and from multiple angles.

a https://orcid.org/0000-0001-6038-0891

b https://orcid.org/0000-0002-5445-3764

This paper presents an outdoor augmented reality

application that enables accurate and realistic three-

dimensional visualization of planned wind turbines

(WT) in real time on site in the camera image of the

mobile device in the user's immediate environment.

The application is intended to support landscape

planners and wind turbine project planners in their

work process as well as to contribute to improving

public information and acceptance. The AR display

offers an advantage over current visualization

techniques, as low-threshold information about the

impact of planned wind turbines can be obtained by

any person and is not limited to pre-defined view

positions and number of images.

A great challenge for building realistic mobile AR scenarios is the precise global localization of the mobile AR display. This is necessary for accurately displaying the virtual 3D model of the wind turbine on-screen at its actual planned geographic location. To address global localization, this application uses widely available 3D geospatial data, including digital surface and terrain models as well as digital 3D building data. This 3D data is processed into small-scale 3D tiles and displayed in a live AR camera view. Using two common mobile touch gestures (drag and pinch-zoom), the generated virtual models can be interactively aligned to match the actual perception of the real environment, eventually enabling robust and precise global localization.

Burkard, S. and Fuchs-Kittowski, F.

Mobile Outdoor AR Application for Precise Visualization of Wind Turbines using Digital Surface Models.

DOI: 10.5220/0010989600003185

In Proceedings of the 8th International Conference on Geographical Information Systems Theory, Applications and Management (GISTAM 2022), pages 15-24

ISBN: 978-989-758-571-5; ISSN: 2184-500X

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Therefore, the two key contributions of this paper

are: 1) The presentation of a flexible user-aided

localization technique using 3D geospatial data, e.g.

digital surface models, and 2) the presentation of a

mobile application for AR-based visualization of

planned wind turbines illustrating the functions of the

app based on the designed user interface, results of

the implementation and the evaluation of the app.

The paper is structured as follows: In Section 2, the application scope of the app is outlined and the development of the app is motivated. Section 3 presents the state of the art in research and technology for the visualization of wind turbines as well as for the realistic representation of virtual objects in outdoor environments using mobile augmented reality. Then, in Section 4, the

architecture of the mAR app system is presented and

important design decisions regarding geospatial data

integration, AR calibration, and realistic visualization

are described. In Section 5, the functions of the app

are described based on the design of the user

interface. Finally, key aspects for the implementation

(Section 6) and evaluation (Section 7) of the app are

presented. The paper ends with a summary and an

outlook on further research in Section 8.

2 SCOPE AND MOTIVATION OF

THE mAR APP

In order to achieve climate protection goals, a steady

expansion of renewable energy plants and thus also

the planning and construction of new wind turbines

are part of possible climate change strategies.

Although a majority of the population in Germany

finds the increased use and expansion of renewable

energies important (Agentur, 2019), large sections of

the local population are often rather dismissive of

concrete plans for new wind turbines. Reasons for this

negative attitude include uncertainty and fear of

impending adverse effects from acoustic emissions

(noise) or visual emissions (lighting, shadows cast) or

from feared changes to the landscape (Hübner et al.,

2019). However, the actual impacts of new facilities

(e.g., landscape impacts) often remain unclear to

many non-experts and may also be influenced by

misconceptions or faulty representations. Measures to

achieve a higher understanding of such projects

within the local population are therefore necessary.

An important tool is therefore a realistic and

objective representation of the effects of planned

construction projects. In addition to traditional

visualization methods (static photo montage/

simulation/construction sketch), mobile augmented

reality (mAR) technology offers an innovative and

novel method to make planned projects mobile and

tangible on site in the real landscape. In this way, a

mAR application could provide a realistic picture of

the impact of new wind turbines on the landscape

from any position within the planning phase of new

wind turbines. To avoid possible influence by erroneous reporting or faulty representations, the developed mAR application should be used as early as possible in the planning phase of new wind turbines.

Furthermore, with the help of such an application,

additional functions (evaluation, communication,

feedback) could be realized and further information

(technical data of the plants, etc.) could be

communicated in order to enable a transparent design

of the planning process. The main users of the

application would be residents near planned wind

farms as well as municipalities or municipal

authorities and engineering and planning offices.

3 STATE OF RESEARCH AND

RELATED WORK

3.1 Visualization of Planned Wind

Turbines

Photorealistic visualizations of construction projects

have so far usually been created in advance with the

help of "special software" on a PC, for example by

photomontages, e.g. with Photoshop (a virtual model

is retouched into a real landscape photograph), or by

3D simulations in virtual landscape models, either by

visualization tools within established planning

software (e.g. 3D animator of the planning software

"WindPro") or by specially developed software

solutions (e.g. 3D analysis in the Energy Atlas of

Bavaria (Nefzger, 2018)). While such visualizations

provide a relatively realistic view of planned

construction projects, the creation of these graphics

usually has to be done by "experts" in advance.

Furthermore, the visualization is limited to certain


previously defined viewpoints (e.g., in the case of

photomontage) or purely virtual environments (e.g.,

in the Energy Atlas of Bavaria).

3.2 Mobile Augmented Reality for the

Outdoor Area

With the help of mAR applications, visualization can

be done "on site" using commercially available

smartphones; the integration of the virtual content

takes place within the real landscape view at any

location on site, so that an even more realistic

impression of possible landscape changes caused by

the construction project on site is possible.

AR applications and developer SDKs for realistic

AR display of virtual objects or information at close

range ("indoor") are established and work quite

reliably and robustly, for example for displaying

virtual furniture in one's own home (e.g., the app

IKEA Place). The idea of mAR-based representation of virtual objects outdoors (mobile outdoor AR) has also been analyzed and implemented in several other projects. For example, several applications for mobile outdoor AR visualizations can now be found in the environmental field (see, e.g., Burkard et al., 2021; Rambach et al., 2021). Many of these applications visualize virtual

content outdoors but within a known, small-scale

environment, e.g., for AR-based visualization of

flood hazards (Haynes et al., 2016) or 3D

visualization of historic (Panou et al., 2018) or

planned buildings (Zollmann et al., 2014). Precise registration of the mobile device within the known, local environment, a prerequisite for correct placement of virtual AR content in the camera image, can be achieved by relying on artificial markers or natural reference images (e.g., house facades) known in advance (Haynes et al., 2016; Panou et al., 2018) or on 3D models (3D point clouds) of the environment created in advance (Zollmann et al., 2014).

In contrast, the realistic representation of

information at a specific geographical location within

a large-scale environment (e.g., planned wind

turbines) is particularly challenging: This requires not

only local tracking of the mobile camera for a stable

AR representation, but also precise localization with

respect to a global geo-coordinate system (geo-

localization; global registration).

3.3 Methods for Global AR

Localization and Registration

Applications for outdoor mAR rendering of

geospatial objects often do not use image-based

localization methods for global registration, but

simplified positioning methods primarily based on

GPS signal and digital compass (location-based AR).

However, these methods are too inaccurate to enable

precise 3D visualizations (Schmid & Langerenken,

2014). Therefore, a realistic visualization of the

impact of new wind turbines is not possible with such

AR technology. While accuracy can be increased by

using external D-GNSS receivers for precise

positioning, using such external sensors for mobile

AR visualizations would be complex and costly

(Schall et al., 2013).
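
To make the accuracy problem concrete, the following minimal sketch estimates how far a virtual object is displaced by a compass (heading) error. The numbers used in the usage note (a 5° heading error, a turbine 2 km away, a 60° horizontal field of view) are illustrative assumptions, not measurements from this paper, and the function names are hypothetical.

```python
import math


def lateral_offset_m(distance_m: float, heading_error_deg: float) -> float:
    """Sideways displacement (in meters) of a virtual object at the given
    distance when the device heading is wrong by heading_error_deg."""
    return distance_m * math.tan(math.radians(heading_error_deg))


def offset_in_pixels(heading_error_deg: float,
                     horizontal_fov_deg: float,
                     image_width_px: int) -> float:
    """Approximate on-screen shift of the object, assuming an ideal pinhole
    camera with the given horizontal field of view."""
    focal_px = (image_width_px / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)
    return focal_px * math.tan(math.radians(heading_error_deg))


if __name__ == "__main__":
    # Illustrative values only (assumptions, not taken from the paper).
    print(round(lateral_offset_m(2000.0, 5.0), 1), "m sideways")
    print(round(offset_in_pixels(5.0, 60.0, 1920)), "px on screen")
```

Under these assumptions, a heading error of a few degrees already shifts a distant turbine by well over a hundred meters in the scene, which illustrates why purely sensor-based registration is insufficient for this use case.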

Image-based Global Localization Methods

(SLAM-based) represent a more elaborate but precise

approach to realistically display virtual geospatial

objects in the outdoor real world at correct

geographical positions. However, this would require

a high-resolution georeferenced 3D point cloud of the

entire environment to be available or created in

advance (Zamir et al., 2018; Kim et al., 2018). Due to

the high effort required to create, store, and deploy

such 3D models for large areas, these AR positioning

approaches have so far only been used and explored

in selected urban areas (e.g., Google Maps Live

View).

Alternatively, already available georeferenced

data (e.g., 3D terrain models, 3D city models) can be

used for global registration of the mobile device, e.g.,

for automatic image-based (SLAM-based) global

localization using 3D building models from

OpenStreetMap datasets (Liu et al., 2019). Other

approaches use digital terrain models for global

image-based registration, e.g., by automatically

matching the horizon silhouette (Baatz et al., 2012) or

automatic image tracking of prominent terrain points

(Brejcha et al., 2020). However, the functionality of

these automatic image-based tracking approaches is

limited, in part because these image recognition

approaches only work accurately in mountainous,

prominent environments with distant views or are

constrained by other general conditions, e.g., the

presence of planar house facades.

In contrast to such automatic image-based

registration strategies, few approaches are found that

use user-driven forms of AR-based interaction to

register mobile devices in global reference systems.

For example, Kilimann et al. implemented a method

to align virtual AR markers with highly visible,

manually defined landmarks while correcting for a

single rotation angle of the global camera orientation

(Kilimann et al., 2019). As an alternative to point-

based reference markers in the environment, available

3D geospatial models can also be reshaped and

incorporated to allow manual geolocation using these


models. Combined with image-based tracking

methods (SLAM) at close range, realistic AR

representations, e.g. visualizations of virtual wind

turbines, can be created. This approach is also part of

current research and was developed as part of the

implementation of the app presented here. Similar to

the method presented here are the manual, touch-

based AR registration approaches presented by

Gazcón et al. (2018) and the method

used in the commercially available PeakFinderAR

application (Soldati, 2021). However, compared to

our system, both systems are limited to mountainous

environments with coarse terrain models and do not

use additional local image-based tracking for greater

stability.

3.4 Systems for AR Visualization of

Wind Turbines

For the mAR-based visualization of planned wind

turbines, no practical systems are available on the

market so far.

A first app for AR visualization of wind turbines

was already available in the UK in 2012 by the

company LinkNode (Hoult, 2012). Although this app

performs localization solely based on the internal

localization sensor technology of the mobile device

and the visualization is therefore quite inaccurate, the

potential of such an mAR application could be

demonstrated, as significant improvements in the

assessment possibilities of the impacts of planned

wind turbines by laypersons could be shown

(Szymanek & Simmons, 2015).

In addition, only two other prototype

implementations are now known from research

projects: First, an implementation by LandPlan OS

GmbH (research project "MoDal-MR",

https://www.landplanos.de/forschung.html;

Kilimann et al., 2019), in which global device

registration is performed by manual calibration using

point-like reference markers (e.g., church steeples) in

the landscape that have to be defined manually. Second, a prototypical visualization tool exists

from the project "Linthwind" of Echtzeit GmbH and

ZHAW Switzerland

(https://echtzeit.swiss/index.html#projects_AR). In

this case, a manual global device registration is

performed based on previously defined mountain

peaks of the environment. Both systems are prototype

applications with basic functionalities whose

practical suitability has not been investigated in more

detail.

4 GEOSPATIAL DATA

INTEGRATION AND

LOCALIZATION

The main goal of the proposed localization method is

to use 3D geospatial models as a virtual aid for

efficient user-assisted global registration.

Accurate determination of global camera position

and orientation (global camera registration) is not

only a requirement for this mAR app (see requirement

R3 in Section 5), but a key requirement for accurate

AR visualizations in mobile outdoor AR applications

in general. In the system presented here, a novel user-assisted registration method developed by the authors of this paper (Burkard & Fuchs-Kittowski, 2020) is used, which relies on georeferenced data to accurately register mobile devices with respect to a global geographic reference system. In this method,

the calibration of the device pose is based on visible

objects, e.g. on the terrain. Digital terrain, 3D

building and surface models are integrated and used

for this purpose. The user manually moves - via two

common mobile touch gestures (drag-touch and

pinch-zoom gesture) - the projected model of the

environment (e.g. terrain model) on the screen so that

it matches the actual real-world view in the live

camera video. This user-controlled shift of the virtual

environment thus results in a correction of the global

device position and orientation.

This section describes the underlying technical

design of the mAR application for visualizing

planned wind turbines. For this purpose, the

architecture of the mAR app system is presented and

important design decisions regarding the geospatial

data integration as well as the geospatial data-based

AR registration and calibration are explained.

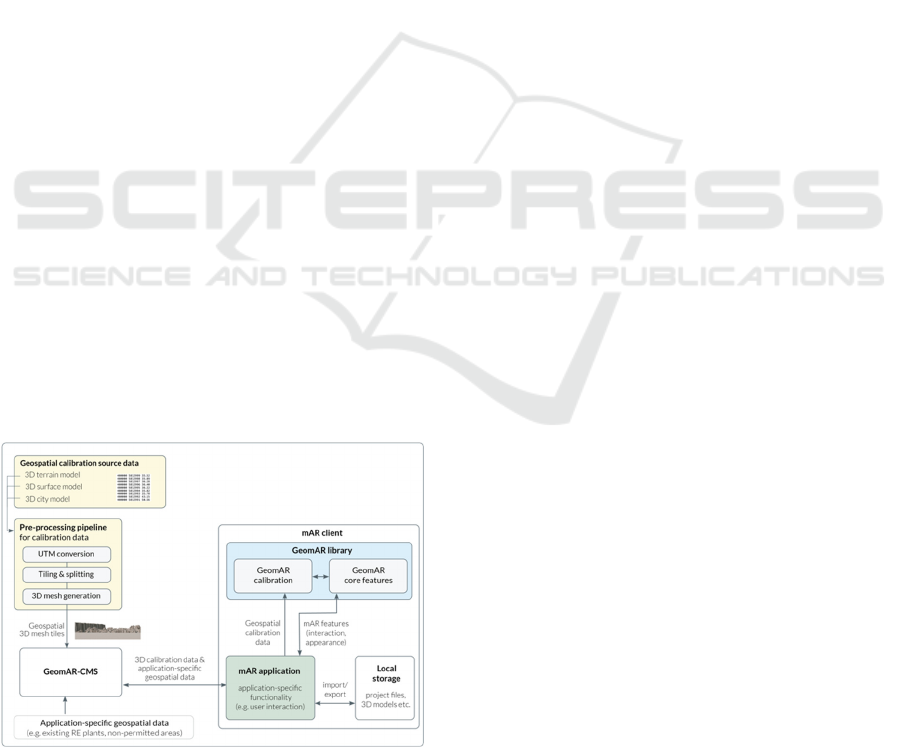

4.1 Architecture

The technical architecture of the designed mAR

application is roughly sketched in Figure 1. The

architecture consists of the following three main

components:

1) mAR Client: The mobile AR client comprises all components of the mobile AR application on the mobile device. These include:

• mAR Application: This application-specific

component implements all the required user

interface (UI) functionalities to operate and

control the mobile mAR application (see GUI

design in Section 5). It also controls the server

communication for retrieving and

administering the required geospatial data. To


realize these functions, the application

component uses several application-

independent components (Local Storage,

GeoCMS as well as GeoAR Library).

• Local Storage: Previously stored AR views,

3D models of the wind turbines as well as

temporarily stored calibration data are stored

locally within the AR client and can be loaded

from there into the mAR application.

• GeoAR Library: This client-side library

provides all necessary GeoAR core

functionalities (placement and manipulation of

virtual wind turbine models) as well as

functions for device calibration (geo-

localization). The technology used for device

calibration is presented in detail in section 4.3.

2) GeomAR-CMS: The main task of this server-

based component is the storage and administration of

geospatial data needed to run the mAR application.

For this purpose, this server component provides:

• on the one hand, interfaces to add, modify, and

retrieve application-specific geospatial data

(e.g., wind turbine data) as well as geospatial

data needed for device calibration (e.g., 3D

terrain models) (REST API and GUI front-end

for administration), and

• on the other hand, a database for persistent

storage of geospatial data.

3) Pre-processing Pipeline for Geospatial Data:

Since 3D geospatial data for device calibration is

often not initially available in formats suitable for

immediate server-based storage and AR integration,

the overall system also includes an offline component

to convert the 3D geospatial data into smaller-scale

3D tiles that can be efficiently displayed as a 3D

model (3D mesh) in the mobile AR client (see Section

4.2).

Figure 1: Architecture and interfaces of the mAR app.

4.2 Geospatial Data Pipeline

The component for converting 3D geospatial data into

AR-enabled 3D models for manual AR calibration is

designed to support three different types of 3D

geospatial data that virtually represent the user's

outdoor environment, each with different properties

and resolutions:

• Digital Surface Model (DSM): 3D point cloud

commonly acquired by LiDAR systems to

model the earth's surface including immobile

objects (vegetation, buildings).

• Digital Terrain Model (DTM): Description of

the earth's surface excluding vegetation and

man-made features.

• Digital 3D City Model: three-dimensional

description of building outlines (3D building

models).

Both the type of geospatial data used for calibration

and the extent of the area covered by the virtual

models can be determined by the user based on the

spatial nature of the environment (e.g., rural vs.

urban) and the availability of each geospatial model

(e.g., 3D city model in urban areas and 3D terrain

model in mountainous areas with distant views).

Usually, 3D data is provided in text-based form

by GIS data providers. To convert them into 3D

models suitable for AR display within the mobile AR

client, several conversion steps are required:

UTM Conversion: First, the source data is

transformed into a Universal Transverse Mercator

(UTM) coordinate system that uses a metric grid

(meter).
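
As a small illustration of this step, the sketch below only selects the UTM zone and the matching WGS84/UTM EPSG code for a given location. The coordinate transformation itself would be left to a GIS library. The function names are hypothetical, and the special zone exceptions around Norway and Svalbard are deliberately ignored.

```python
import math


def utm_zone(lon_deg: float) -> int:
    """Standard 6-degree UTM zone number (1..60) for a longitude in degrees."""
    return int(math.floor((lon_deg + 180.0) / 6.0)) % 60 + 1


def utm_epsg(lat_deg: float, lon_deg: float) -> int:
    """EPSG code of the WGS84 / UTM zone covering the given location.
    326xx denotes the northern hemisphere, 327xx the southern hemisphere."""
    base = 32600 if lat_deg >= 0 else 32700
    return base + utm_zone(lon_deg)


if __name__ == "__main__":
    print(utm_epsg(52.52, 13.40))  # Berlin
    print(utm_epsg(48.37, 10.90))  # Augsburg
```

For the two test areas mentioned in this paper, this yields zones 33 (Berlin) and 32 (Augsburg); data providers may deliver ETRS89-based UTM codes instead, which differ in the EPSG number but use the same zone layout.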

Tiling & Splitting: For more efficient data

handling, the source files are then split into smaller

parts with defined square dimensions. This way,

when rendering the virtual user environment, the

mobile client only needs to load and process tiles with

smaller file sizes. This processing step employs a

customized naïve tiling approach without

incorporating open standards for 3D tiles.
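
A naive tiling of this kind can be sketched as follows, assuming the points are already given in metric UTM coordinates. The tile edge length of 500 m and all names are assumptions chosen for illustration; the paper does not state the tile size actually used.

```python
import math
from collections import defaultdict


def tile_index(easting: float, northing: float, tile_size_m: float) -> tuple:
    """Column/row index of the square tile that contains the point."""
    return (math.floor(easting / tile_size_m),
            math.floor(northing / tile_size_m))


def split_into_tiles(points, tile_size_m: float = 500.0) -> dict:
    """Group (easting, northing, height) points by their square tile.
    tile_size_m is a hypothetical default, not a value from the paper."""
    tiles = defaultdict(list)
    for easting, northing, height in points:
        tiles[tile_index(easting, northing, tile_size_m)].append(
            (easting, northing, height))
    return dict(tiles)


if __name__ == "__main__":
    sample = [(1000.0, 2000.0, 5.0), (1499.9, 2499.9, 6.0), (1500.0, 2000.0, 7.0)]
    for index, pts in split_into_tiles(sample).items():
        print(index, len(pts))
```

Because the tile index follows directly from the coordinates, the client can compute which tiles surround the user without consulting an additional spatial index.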

3D Mesh Generation: Using incremental

Delaunay triangulation (Heckbert & Garland, 1997),

the generated 3D tiles are finally transformed into

optimized Triangulated Irregular Network (TIN)

surface meshes with different levels of detail.

Wavefront OBJ is used as the target file format for the

textureless 3D meshes.
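
The target format can be illustrated with a deliberately simplified sketch. Note that it does not implement incremental Delaunay triangulation or TIN simplification: it swaps in a plain regular-grid triangulation (two triangles per grid cell) purely to show what a textureless Wavefront OBJ surface mesh looks like. The function name and the input layout (a row-major height grid) are assumptions.

```python
def grid_to_obj(heights, cell_size_m: float) -> str:
    """Convert a row-major height grid into a textureless Wavefront OBJ mesh.
    Simplification: regular-grid triangulation, not a Delaunay-based TIN."""
    rows, cols = len(heights), len(heights[0])
    lines = []

    # One vertex per grid node: x = column, y = row, z = height.
    for r in range(rows):
        for c in range(cols):
            lines.append(
                f"v {c * cell_size_m:.2f} {r * cell_size_m:.2f} {heights[r][c]:.2f}")

    def vertex_id(r: int, c: int) -> int:
        # OBJ vertex indices are 1-based.
        return r * cols + c + 1

    # Two counter-clockwise triangles (seen from above) per grid cell.
    for r in range(rows - 1):
        for c in range(cols - 1):
            a, b = vertex_id(r, c), vertex_id(r, c + 1)
            d, e = vertex_id(r + 1, c), vertex_id(r + 1, c + 1)
            lines.append(f"f {a} {b} {e}")
            lines.append(f"f {a} {e} {d}")

    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(grid_to_obj([[0, 1], [2, 3]], 1.0))
```

A TIN produced by Delaunay-based simplification would use the same `v` and `f` records but far fewer triangles in flat areas, which is what makes it attractive for mobile rendering.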

Storage in GeomAR-CMS: These obj files are

finally stored in the server-based management system

(GeomAR-CMS) together with the associated

metadata (position, size and type of 3D tile). From

there, they can be provided to the mobile AR client

on demand.
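
How the stored metadata (position, size and type of a tile) could drive on-demand delivery is sketched below. The record layout, the field names and the `tiles_near` helper are hypothetical and do not describe the actual GeomAR-CMS interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TileMeta:
    """Hypothetical metadata record for one stored 3D tile."""
    tile_type: str       # e.g. "DSM", "DTM" or "CITY"
    min_easting: float   # lower-left corner in UTM meters
    min_northing: float
    size_m: float        # edge length of the square tile
    obj_path: str        # location of the OBJ file


def tiles_near(tiles, easting: float, northing: float,
               radius_m: float, tile_type: str) -> list:
    """Return all tiles of the requested type whose square footprint
    intersects a circle of radius_m around the user position."""
    result = []
    for tile in tiles:
        if tile.tile_type != tile_type:
            continue
        # Closest point of the tile footprint to the user position.
        nearest_e = min(max(easting, tile.min_easting),
                        tile.min_easting + tile.size_m)
        nearest_n = min(max(northing, tile.min_northing),
                        tile.min_northing + tile.size_m)
        if (nearest_e - easting) ** 2 + (nearest_n - northing) ** 2 <= radius_m ** 2:
            result.append(tile)
    return result


if __name__ == "__main__":
    catalog = [
        TileMeta("DSM", 0.0, 0.0, 500.0, "dsm_0_0.obj"),
        TileMeta("DSM", 500.0, 0.0, 500.0, "dsm_1_0.obj"),
        TileMeta("DTM", 0.0, 0.0, 500.0, "dtm_0_0.obj"),
    ]
    print([t.obj_path for t in tiles_near(catalog, 250.0, 250.0, 300.0, "DSM")])
```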


4.3 AR Registration and Calibration

Accurate determination of global camera position and

orientation (global camera registration) is a key

requirement for accurate AR visualizations in outdoor

mobile AR applications. In the system presented here,

a novel user-assisted registration method was

developed that uses georeferenced 3D data to

accurately register mobile devices with respect to a

global geo-reference system (geo-coordinate system).

Before starting this user-driven calibration

process, suitable 3D geospatial models of the user

environment are first loaded as calibration data from

the GeomAR-CMS. An initial rough estimation of the

device position and orientation is possible using the

localization sensors (GNSS receiver for rough

position determination and IMU sensor for rough

determination of the device orientation) installed in

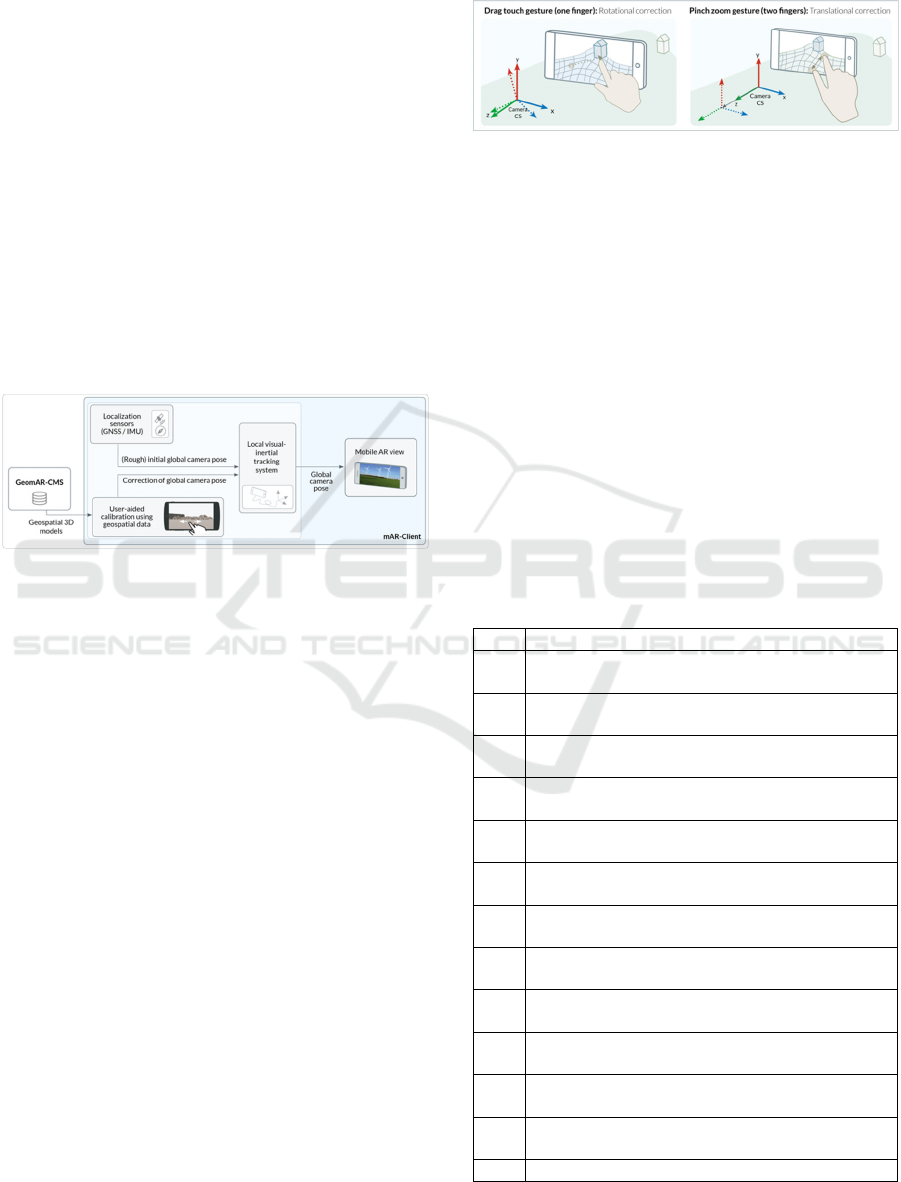

the mobile device (see Figure 2).

Figure 2: Real-time registration and tracking system.

Thus, the 3D geospatial models can be displayed

as a rough - but usually still very flawed - virtual 3D

projection of the user's environment in the camera

image, e.g., as a rough virtual representation of the

terrain surface structure or the 3D structures of nearby

buildings. Then, the user manually moves the

projected 3D environment on the screen to match the

actual real-world view in the live camera video. Using

two common mobile touch gestures (drag-touch and

pinch-zoom gestures), the generated virtual models

can be interactively aligned to match the actual

perception of the real environment (see Figure 3):

• A drag-touch gesture (one finger) can be used

to move the virtual geospatial model on the

screen, resulting in a rotation correction of the

global camera orientation.

• A pinch-zoom gesture (two fingers) can be

used to scale the virtual geospatial model,

resulting in a correction of the global camera

position.

This user-controlled shift of the virtual environment

thus leads to a correction of the global device position

and orientation. In addition, a state-of-the-art image-

based tracking system (visual inertial odometry

system; VIO tracking) continuously tracks device

movements in the local space in the background. This

ensures a consistently stable AR projection of the

virtual user environment without drift effects.
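
The two gesture corrections described above can be sketched as simple pose updates. The exact mapping from touch input to pose correction is not specified in this paper, so everything below is an assumption: a linear small-angle mapping from drag distance to rotation via the camera field of view, and a pinch that moves the camera along its viewing direction so that a reference point at `reference_distance_m` appears scaled by the pinch factor. All names and sign conventions are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class DevicePose:
    easting: float    # UTM meters
    northing: float   # UTM meters
    yaw_deg: float    # heading, clockwise from north
    pitch_deg: float  # positive means looking upward


def apply_drag(pose: DevicePose, dx_px: float, dy_px: float,
               image_w_px: int, image_h_px: int,
               hfov_deg: float, vfov_deg: float) -> DevicePose:
    """One-finger drag: rotate the camera so the model follows the finger.
    Dragging the model to the right turns the camera to the left; dragging
    it down (screen y grows downward) tilts the camera upward."""
    yaw = (pose.yaw_deg - dx_px / image_w_px * hfov_deg) % 360.0
    pitch = pose.pitch_deg + dy_px / image_h_px * vfov_deg
    return DevicePose(pose.easting, pose.northing, yaw, pitch)


def apply_pinch(pose: DevicePose, scale: float,
                reference_distance_m: float) -> DevicePose:
    """Two-finger pinch: shift the camera along its heading so that a point
    at reference_distance_m appears 'scale' times larger."""
    if scale <= 0:
        raise ValueError("scale must be positive")
    forward = reference_distance_m * (1.0 - 1.0 / scale)
    yaw = math.radians(pose.yaw_deg)
    return DevicePose(pose.easting + forward * math.sin(yaw),
                      pose.northing + forward * math.cos(yaw),
                      pose.yaw_deg, pose.pitch_deg)


if __name__ == "__main__":
    start = DevicePose(0.0, 0.0, 90.0, 0.0)
    print(apply_drag(start, 192, 0, 1920, 1080, 60.0, 40.0))
    print(apply_pinch(start, 2.0, 100.0))
```

In such a design the corrected global pose would be combined with the relative motion reported by the VIO tracker, so that the calibration remains valid while the user moves.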

Figure 3: User interaction gestures for aligning the virtual

geospatial data models to correct global camera orientation

(left) and position (right).

5 MOBILE AR-APPLICATION

FOR THE VISUALIZATION OF

WIND TURBINES

The following section will describe the functionalities

and interface design of the mAR app for 3D

visualization of wind turbines in the landscape. The

functionalities and graphical user interface were

developed based on requirements identified in

discussions and workshops with representatives of

user groups. A structured overview of the collected

requirements is presented in Table 1.

Table 1: Functional requirements for a mAR app for visualization of planned wind energy plants.

| No. | Requirement |
|-----|-------------|
| R1 | Placement of planned wind turbines on map and in camera image. |
| R2 | mAR visualization of planned wind turbines as a 3D model. |
| R3 | Precise and correct placement of AR content in camera image. |
| R4 | mAR visualization of wind turbine rotor movement in 3D model (animation). |
| R5 | Simultaneous mAR visualization of multiple planned WTs. |
| R6 | mAR visualization of meta data of planned wind turbines. |
| R7 | Modification of the appearance of the planned wind turbine (model type and height). |
| R8 | Modification of the orientation and location of the planned wind turbines. |
| R9 | Consideration of non-permitted areas when placing wind turbines. |
| R10 | mAR visualization of planned wind turbines as POI markers. |
| R11 | mAR visualization of already existing renewable energy plants as POI markers. |
| R12 | Local import/export of the current mAR planning configuration. |
| R13 | Video recording of the current AR view. |
| R14 | Mobile AR hardware without special sensor technology (commercially available smartphones/tablets). |
| R15 | Server-based data provision and online capability. |

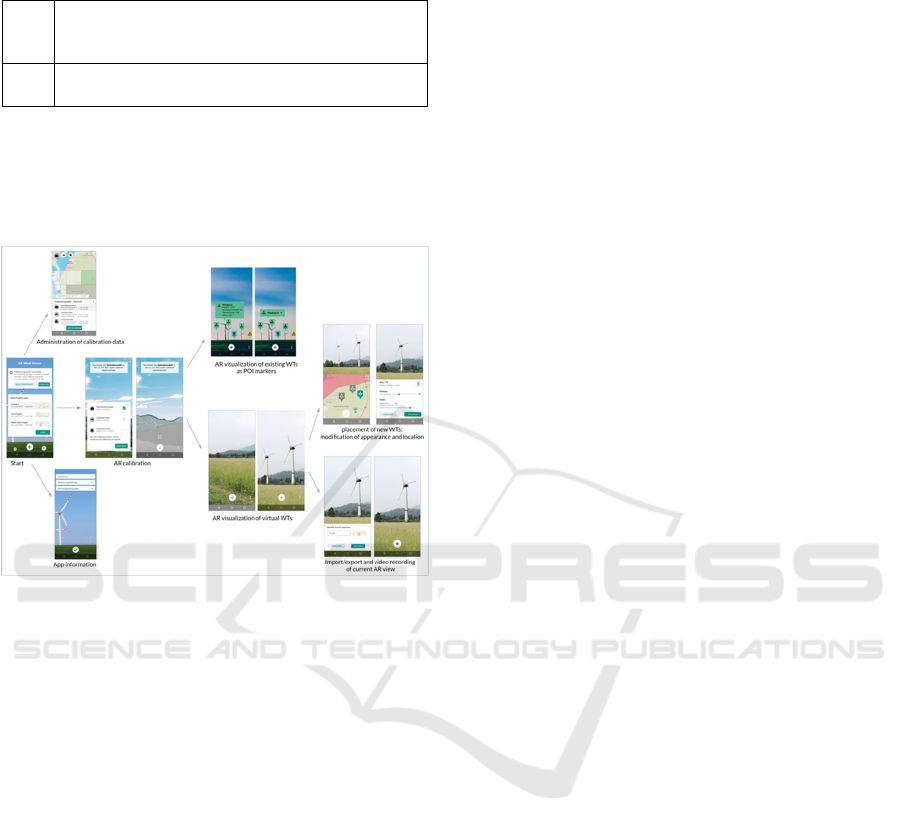

With the designed graphical user interface (GUI) of

the mAR application for visualization of planned

wind turbines, all essential functional requirements

were implemented. The screen designs as well as their interrelationships are shown in Figure 4.

Figure 4: Graphical user interface (GUI) designs for a

mobile app for mAR visualization of wind turbines.

They are briefly described in the following:

Start Screen: This is the first screen after

launching the application. It provides a quick

selection of the last saved AR visualizations (AR

projects), the option to open the calibration data

management and view app information, and the

option to start the main AR functions (visualizing

virtual wind turbines as well as AR information about

existing renewable energy plants).

Calibration Data Management: Before AR

rendering of virtual content is possible, AR

calibration data of the environment (e.g., 3D

geospatial models) must be loaded. A dedicated

screen provides expert options for custom display,

loading, and activation of individual calibration data.

AR Calibration: As a prerequisite for precise AR visualizations, a manual calibration needs to be

performed to precisely register the AR display

globally. During calibration, the AR view is to be

aligned via user interaction so that the virtual view

and the real view match. This is done by moving the

virtual calibration objects (3D geospatial models).

The user can also open a settings menu in the AR

calibration view to determine the type of calibration

data to be displayed. After manual alignment is

complete, the user confirms the calibration, which

launches the main AR view.

View Virtual Wind Turbine Models in the

Landscape (AR Main View): On this main screen,

one or more wind turbines are displayed using AR

visualization at specified geographical positions in

the camera view. Depending on the distance, the

display is either a 3D model or a POI. From this main

view, further functionalities related to AR

visualization of wind turbines in the landscape can be

started via button.
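
The distance-dependent choice between a 3D model and a POI marker can be sketched as a simple threshold test. The threshold of 10 km and all names are assumptions for illustration; the paper does not state the distance at which the display switches.

```python
import math


def display_mode(device_en: tuple, turbine_en: tuple,
                 max_model_distance_m: float = 10000.0) -> str:
    """Choose the AR representation for one turbine from its horizontal
    distance to the device (both given as UTM easting/northing in meters).
    max_model_distance_m is a hypothetical threshold."""
    distance = math.hypot(turbine_en[0] - device_en[0],
                          turbine_en[1] - device_en[1])
    return "3D_MODEL" if distance <= max_model_distance_m else "POI"


if __name__ == "__main__":
    print(display_mode((0.0, 0.0), (3000.0, 4000.0)))    # 5 km away
    print(display_mode((0.0, 0.0), (30000.0, 40000.0)))  # 50 km away
```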

Adding a New Virtual Wind Turbine to the AR

View: In the AR main view, a new wind turbine model can be added via a button. Clicking the "New wind turbine" button automatically adds a standard wind turbine model to the AR view, directly in the user's field of view.

Changing the Location (Position) of the Wind

Turbine Model: Via a map view, the position of the

placed virtual wind turbines can be adjusted

manually. Unsuitable areas are marked accordingly

on the map via map overlay. By moving a wind

turbine icon in the map view, the global position of

the virtual model changes. This is immediately

reflected in the AR view.

Changing the Appearance of the Wind

Turbine Model: The user has the possibility to

change the size (height), the orientation (rotation) as

well as the model of the virtually placed wind turbines

in order to view or compare the effects of wind

turbines with different appearance or height. For this

purpose, the user selects a placed wind turbine model

in the AR view or the map view, so that a wind turbine

settings menu appears. There, a 3D wind turbine

model can be selected from a list of predefined 3D

models and the size (hub height) and orientation can

be specified.

Loading and Saving AR Projects: The current

AR configuration, i.e. the wind turbine models

(location and appearance) currently placed in the AR

view, can be saved as a project file in order to restore

and view this AR configuration at another time and/or

location.
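
Saving and restoring such a configuration can be sketched as a round trip through a JSON project file. The file layout, the field names and the sample values are hypothetical; the paper does not describe the actual project file format.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class PlacedTurbine:
    """Hypothetical record for one wind turbine placed in the AR view."""
    model_id: str        # which predefined 3D model is used
    easting: float       # UTM meters
    northing: float      # UTM meters
    hub_height_m: float
    rotation_deg: float


def save_project(turbines) -> str:
    """Serialize the current AR configuration to a JSON string."""
    return json.dumps({"version": 1,
                       "turbines": [asdict(t) for t in turbines]}, indent=2)


def load_project(text: str) -> list:
    """Restore the placed turbines from a JSON project string."""
    data = json.loads(text)
    return [PlacedTurbine(**entry) for entry in data["turbines"]]


if __name__ == "__main__":
    # Sample values are made up for illustration.
    project = [PlacedTurbine("standard", 392000.0, 5820000.0, 140.0, 270.0)]
    restored = load_project(save_project(project))
    print(restored == project)
```

Storing global coordinates rather than screen positions is what allows a saved configuration to be viewed again from a different location.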

View Existing Renewable Energy Plants as AR

POI Representation: Via a button on the start screen, the user can view meta-information about relevant renewable energy plants in the surrounding area as a POI-AR representation to get an impression of the availability and type of renewable energy plants nearby. When clicking

on an AR POI marker, the marker view expands with

additional meta-information about the selected plant.

Clicking on a "list button" opens a (non-AR-based)

list view of renewable energy plants in the

surrounding area.
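
Selecting the plants "in the surrounding area" requires a distance and direction from the user to each plant. The following sketch uses the haversine formula and the initial great-circle bearing on a spherical Earth; the function names, the dictionary keys, and the sample plants are assumptions made for illustration only.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


def distance_and_bearing(lat1, lon1, lat2, lon2):
    """Return (distance in metres, initial bearing in degrees clockwise from north)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lam = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lam / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))
    y = math.sin(d_lam) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(d_lam)
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return distance, bearing


def pois_in_range(user_lat, user_lon, pois, max_distance_m):
    """Return (poi, distance, bearing) tuples within range, nearest first."""
    hits = []
    for poi in pois:
        distance, bearing = distance_and_bearing(user_lat, user_lon, poi["lat"], poi["lon"])
        if distance <= max_distance_m:
            hits.append((poi, distance, bearing))
    return sorted(hits, key=lambda item: item[1])


# Hypothetical plants around a user standing at (52.0, 13.0).
plants = [
    {"name": "far plant", "lat": 52.5, "lon": 13.0},
    {"name": "near plant", "lat": 52.01, "lon": 13.0},
]
nearby = pois_in_range(52.0, 13.0, plants, max_distance_m=5000.0)
```

The same result list could feed both the AR markers (using the bearing) and the non-AR list view (using the distance ordering).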

6 IMPLEMENTATION

The presented components for the realization of the

mobile application were implemented for usage on

commercially available smartphones and tablets

within predefined test areas (the state of Berlin and the

Augsburg region). Users of the application thus receive an

impression of the potential impact of newly planned

wind turbines on the landscape within these areas.

Figure 5: Technologies used in the implementation of the

mAR system.

The app was implemented as a native Android

application that runs on commercially available

mobile devices with built-in IMU and GNSS sensors

and uses the Google ARCore SDK as a local VIO

tracking system. The AR content is rendered into

the camera image with OpenGL via

the Sceneform SDK. The raw data of the 3D

geospatial models for AR calibration were provided

by the Bavarian Surveying Administration (LDBV)

for testing purposes as part of the research work.

Alternatively, the - often freely available - raw data

of other state surveying offices can be integrated in

the same way.

Figure 6: Screenshots from the mAR app - manage

calibration data, move 3D geospatial models, display wind

turbine as AR visualization, place wind turbine, modify

appearance of wind turbine.

The geospatial data pipeline was realized based on

open-source tools for geospatial data processing, in

particular based on the GDAL library. These tools

were encapsulated in Docker containers and

automated using Python scripts. The GeomAR-CMS

for geospatial data management, storage, and

provisioning was implemented based on the

GeoServer software and using a Ruby-on-Rails web

framework. Figure 5 shows an overview of the

technologies used in the system. Screenshots from the

implemented application can be seen in Figure 6.
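
The combination of GDAL, Docker and Python scripts can be illustrated with a small sketch of a single pipeline step. It only builds a `docker run ... gdalwarp` command that reprojects and clips a surface-model raster. The container image tag, the mount point `/data`, the file names, the bounding box and the target reference system are assumptions for this example; the actual pipeline steps are not detailed in this section.

```python
import shlex

# Assumed image: the GDAL project publishes images under ghcr.io/osgeo/gdal.
GDAL_IMAGE = "ghcr.io/osgeo/gdal:ubuntu-small-latest"


def build_clip_command(host_dir, src_name, dst_name, bbox, target_srs="EPSG:25832"):
    """Build a command that reprojects a raster and clips it to a bounding box.

    bbox is (xmin, ymin, xmax, ymax) in the target reference system,
    which is how gdalwarp interprets -te by default.
    """
    xmin, ymin, xmax, ymax = bbox
    return [
        "docker", "run", "--rm",
        "-v", f"{host_dir}:/data",          # mount the host directory into the container
        GDAL_IMAGE,
        "gdalwarp",
        "-t_srs", target_srs,               # target spatial reference system
        "-te", str(xmin), str(ymin), str(xmax), str(ymax),
        "-r", "bilinear",                   # resampling method
        "-of", "GTiff",                     # output format
        f"/data/{src_name}", f"/data/{dst_name}",
    ]


# Hypothetical tile and extent; the command is printed, not executed.
cmd = build_clip_command("/tmp/dsm", "dsm_tile.tif", "dsm_clip.tif",
                         (640000, 5350000, 642000, 5352000))
print(shlex.join(cmd))
```

Passing the resulting list to `subprocess.run(cmd, check=True)` would execute the step on a machine with Docker installed; keeping command construction separate makes each step easy to inspect and test.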

7 EVALUATION

The functionality of the mAR app as well as the GUI

were developed from the very beginning in

cooperation with representatives of the target user

group. In initial tests with potential end users, the app

was evaluated positively. In particular, the realistic

visualization as well as the correct, robust positioning

of the wind turbines were emphasized. The high degree

of realism results from the positionally accurate

integration of 3D environment models, which allows

possible occlusions of the wind turbines by vegetation,

terrain or buildings to be taken into account correctly.
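
Why an accurately positioned surface model matters for occlusion can be shown with a simplified line-of-sight test along a single height profile. This is an illustration only: in a rendered AR scene, occlusion is typically resolved per pixel by the graphics pipeline, and the sketch below ignores Earth curvature. The function name and all sample heights are hypothetical.

```python
def is_point_visible(observer_height_m, target_height_m, target_distance_m, profile):
    """Check whether a target point is visible from the observer.

    All heights are absolute elevations in the same vertical datum.
    profile is an iterable of (distance_m, surface_height_m) samples taken
    from a surface model along the line between observer and target.
    """
    for distance_m, surface_height_m in profile:
        if not 0.0 < distance_m < target_distance_m:
            continue  # ignore samples outside the sight line
        fraction = distance_m / target_distance_m
        sight_line_height = observer_height_m + fraction * (target_height_m - observer_height_m)
        if surface_height_m > sight_line_height:
            return False  # the surface rises above the sight line here
    return True


# Hypothetical scene: observer eye at 100 m, a 150 m high ridge 1 km away,
# turbine 2 km away with its base at 110 m and its hub at 250 m.
ridge = [(1000.0, 150.0)]
hub_visible = is_point_visible(100.0, 250.0, 2000.0, ridge)   # True
base_visible = is_point_visible(100.0, 110.0, 2000.0, ridge)  # False
```

In this example the ridge hides the tower base but not the hub, which is the kind of partial occlusion that a misplaced surface model would render incorrectly.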

The additional manual effort for the user required

for calibration, i.e., correcting the position and

orientation of the wind turbines, was rated as

acceptable. This manual effort does not make the app

as easy to use as users would like, but due to the

inaccuracies of the sensors on commercially available

mobile devices, such additional calibration effort is

mandatory to ensure correct visualization. While this

introduces a potential source of error through incorrect use, similar

user errors can occur with other visualization methods

(e.g., photomontages). The experimental results have

nevertheless shown that the user-driven calibration

approach - combined with a robust local VIO tracking

system - can achieve efficient and accurate global

registration of mobile devices in various outdoor

environments and with reasonable user effort,

determining the device’s orientation with less than

one degree deviation.
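
To put the reported orientation accuracy into perspective, the lateral displacement caused by a given heading error grows with the distance to the virtual object. The short sketch below computes this displacement with basic trigonometry; the function name and the chosen distances are assumptions for illustration and are not values from the evaluation.

```python
import math


def lateral_offset_m(distance_m, heading_error_deg):
    """Sideways displacement of an object at distance_m for a given heading error."""
    return distance_m * math.tan(math.radians(heading_error_deg))


# A heading error of one degree at hypothetical viewing distances.
for distance in (500.0, 1000.0, 3000.0):
    print(f"{distance:.0f} m -> {lateral_offset_m(distance, 1.0):.1f} m")
# At 1000 m, one degree corresponds to about 17.5 m of lateral displacement.
```

Since wind turbines are usually viewed from hundreds of metres or more, even small orientation errors become visible, which is why sub-degree accuracy is relevant here.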

Our approach also enables fast global registration, as

the pre-loaded virtual environment models can ideally

be shifted to the correct on-screen position within a

few seconds. This process is therefore not only simpler

and more straightforward, but also faster than

other similar manual calibration techniques, e.g.,

the approach developed by Kilimann et al. (2019),

where the user has to manually select suitable

landmarks as reference points on a map before

moving them in the camera image to the correct

position.

GISTAM 2022 - 8th International Conference on Geographical Information Systems Theory, Applications and Management


8 SUMMARY AND OUTLOOK

The objective of this paper was the development of an

mAR application for the visualization of

planned wind turbines. For this purpose, the functions

of the mAR app were designed, implemented and

evaluated. In particular, the underlying technical

concepts of the geospatial data pipeline for the

generation of AR-suitable 3D geospatial models and

the global registration approach for correct

positioning of the mAR objects in the camera image

were explained.

The major advantage of a mAR app - especially

compared to classical visualization processes - lies in

an easier and immediate visualization on-site in the

real application context. Using the example of the

visualization of planned wind turbines, this more

flexible visualization technique could be clearly

demonstrated. However, this advantage could also be

used for further mAR applications in the renewable

energy sector, e.g., for the mAR representation of

planned power lines or photovoltaic systems.

Limitations of the proposed solution arise from

the complexity of the geospatial data incorporation as

well as the partial lack of availability of such data. In

order to make the geospatial data available for large-

scale areas, large infrastructure has to be available to

manage and provide these data. In this work, the

application has only been tested in a small test area as

the focus was on the technical feasibility and the

implementation of the functional requirements.

In order to achieve greater practicality and appeal

to broader user groups, the next step would also need

to focus more on usability - especially for non-

experts. In this context, a more intensive user

evaluation is planned in the next step to identify and

implement optimization approaches for better

usability and to make the calibration process easier

and more user-friendly. These possible further

developments also include the idea of integrating and

testing additional types of geospatial 3D models, e.g.,

textured colored surface and city models.

ACKNOWLEDGEMENTS

The authors gratefully acknowledge financial support

from the German Federal Ministry of Education and

Research (BMBF) within the research project

"mARGo" (grant number 01IS17090B) and the

German Federal Ministry for Economic Affairs and

Energy (BMWi) within the research project

"AR4WIND" (grant number 03EE3046B). In

addition, the authors would like to express their

sincere thanks to the Bavarian State Office for the

Environment and the Bavarian State Ministry of

Economic Affairs, Regional Development and

Energy for their very fruitful cooperation and support,

as well as to the Bavarian Surveying Administration

for providing the 3D geospatial data used for the AR

calibration.

REFERENCES

Agentur für erneuerbare Energien (2019). Akzeptanz-

Umfrage 2019 - Wichtig für den Kampf gegen

den Klimawandel: Bürger*innen wollen mehr

Erneuerbare Energien. https://www.unendlich-viel-

energie.de/themen/akzeptanz-erneuerbarer/akzeptanz-

umfrage/akzeptanzumfrage-2019.

Baatz, G., Saurer, O., Köser, K., & Pollefeys, M. (2012).

Large Scale Visual Geo-Localization of Images in

Mountainous Terrain. In A. Fitzgibbon, S. Lazebnik, P.

Perona, Y. Sato & C. Schmid (eds.), Computer Vision

– ECCV 2012. Lecture Notes in Computer Science,

Vol. 7573 (pp. 517-530). Berlin, Heidelberg: Springer.

https://doi.org/10.1007/978-3-642-33709-3_37

Brejcha, J., Lukáč, M., Hold-Geoffroy, Y., Wang, O., &

Čadík, M. (2020). LandscapeAR: Large Scale Outdoor

Augmented Reality by Matching Photographs with

Terrain Models Using Learned Descriptors. In A.

Vedaldi, H. Bischof, T. Brox, & J.M. Frahm (eds.)

Computer Vision – ECCV 2020. Lecture Notes in

Computer Science, Vol. 12374 (pp. 295-312). Cham:

Springer. https://doi.org/10.1007/978-3-030-58526-

6_18

Burkard, S., Fuchs-Kittowski, F., Abecker, A., Heise, F.,

Miller, R., Runte, K., & Hosenfeld, F. (2021).

Grundbegriffe, Anwendungsbeispiele und

Nutzungspotenziale von geodatenbasierter mobiler

Augmented Reality. In U. Freitag, F. Fuchs-Kittowski,

A. Abecker, & F. Hosenfeld (eds.),

Umweltinformationssysteme - Wie verändert die

Digitalisierung unsere Gesellschaft? (pp. 243-260).

Wiesbaden: Springer Vieweg. https://doi.org/10.1007/

978-3-658-30889-6_15

Fuchs-Kittowski, F., & Burkard, S. (2019). Potential

Analysis for the Identification of Application Scenarios

for Mobile Augmented Reality Technologies - with an

Example from Water Management. In R. Schaldach,

KH. Simon, J. Weismüller, & V. Wohlgemuth (eds.),

Environmental Informatics - Computational

Sustainability: ICT methods to achieve the UN

Sustainable Development Goals (pp. 372-380).

Aachen: Shaker.

Gazcón, N.F., Nagel, JMT., Bjerg, EA., & Castro, SM.

(2018). Fieldwork in Geosciences assisted by ARGeo:

A mobile augmented reality system. Computers &

Geosciences, 121, 30–38. https://doi.org/10.1016/

j.cageo.2018.09.004

Haynes, P., Hehl-Lange, S., & Lange, E. (2018). Mobile

Augmented Reality for Flood Visualisation.

Environmental Modelling and Software, 109, 380-389.

https://doi.org/10.1016/j.envsoft.2018.05.012

Heckbert, P.S., & Garland, M. (1997). Survey of polygonal

surface simplification algorithms. Technical report,

Pittsburgh: Carnegie-Mellon University.

Höllerer, T., & Feiner, S. (2004). Mobile augmented reality.

In H. Karimi & A. Hammad (eds.): Telegeoinformatics:

Location-based computing and services (pp. 221-260).

London: Taylor & Francis Books Ltd.

Hoult, C. (2012). Geospatial Data for Augmented Reality.

In AGI GeoCommunity '12: Sharing the Power of

Place, Association for Geographic Information,

https://citeseerx.ist.psu.edu/viewdoc/download?doi=10

.1.1.367.9092&rep=rep1&type=pdf

Hugues, O., Cieutat, J.-M., & Guitton, P. (2011). GIS and

augmented reality: State of the art and issues. In B. Furht

(ed.), Handbook of augmented reality (pp. 721-740),

New York: Springer. https://doi.org/10.1007/978-1-

4614-0064-6_33

Hübner, G., Pohl, J., Warode, J., Gotchev, B., Nanz, P.,

Ohlhorst, D., Krug, M., Salecki, S., & Peters, W.

(2019). Naturverträgliche Energiewende. Akzeptanz

und Erfahrungen vor Ort. Bonn: Bundesamt für

Naturschutz (BfN).

Kilimann, J.E., Heitkamp, D., & Lensing, P. (2019). An

Augmented Reality Application for Mobile

Visualization of GIS-Referenced Landscape Planning

Projects. In 17th International Conference on Virtual-

Reality Continuum and its Applications in Industry

(VRCAI 2019). (article 23, pp. 1-5). New York: ACM,

https://doi.org/10.1145/3359997.3365712

Kim, K., Billinghurst, M., Bruder, G., Duh, H., & Welch,

G. (2018). Revisiting Trends in Augmented Reality

Research: A Review of the 2nd Decade of ISMAR

(2008–2017). IEEE Transactions on Visualization and

Computer Graphics, 24(11), 2947-2962.

https://doi.org/10.1109/TVCG.2018.2868591

Langlotz, T., Mooslechner, S., Zollmann, S., Degendorfer,

C., Reitmayr, G., & Schmalstieg, D. (2012). Sketching

up the world: In-situ authoring for mobile augmented

reality. Personal and Ubiquitous Computing, 16, 623–

630.

Liu, R., Zhang, J., Chen, S., & Arth, C. (2019). Towards

SLAM-Based Outdoor Localization using Poor

GPS and 2.5D Building Models. IEEE

International Symposium on Mixed and Augmented

Reality (ISMAR2019), (1-7). https://doi.org/10.1109/

ISMAR.2019.00016

Nefzger, A. (2018). 3D-Visualisierung von

Windenergieanlagen in der Landschaft –

Webanwendung „3D-Analyse“. In U. Freitag, F. Fuchs-

Kittowski, F. Hosenfeld, A. Abecker, & A. Reineke

(eds.), Umweltinformationssysteme 2018 -

Umweltbeobachtung: Nah und Fern (pp. 159 – 177).

Nürnberg: CEUR-WS.org, Vol. 2197. http://ceur-

ws.org/Vol-2197/paper12.pdf.

Panou, C., Ragia, L., Dimelli, D., & Mania, K. (2018).

Outdoors Mobile Augmented Reality Application

Visualizing 3D Reconstructed Historical Monuments.

In 4th International Conference on Geographical

Information Systems Theory, Applications and

Management (GISTAM), (59-67) Scitepress.

https://doi.org/10.5220/0006701800590067

Rambach, J.R., Lilligreen, G., Schäfer, A., Bankanal, R.,

Wiebel, A., & Stricker, D. (2021). A Survey on

Applications of Augmented, Mixed and Virtual Reality

for Nature and Environment. In 23rd International

Conference on Human-Computer Interaction (HCII-

2021), Springer. https://arxiv.org/pdf/2008.12024.pdf

Schall, G., Mendez, E., Kruijff, E., Veas, E., Junghanns, S.,

Reitinger, B., & Schmalstieg, D. (2009). Handheld

augmented reality for underground infrastructure

visualization. Personal and Ubiquitous Computing, 13,

281–291.

Schall, G., Zollmann, S., & Reitmayr, G. (2013). Smart

Vidente: advances in mobile augmented reality for

interactive visualization of underground infrastructure.

Personal and Ubiquitous Computing, 17(7), 1533–

1549. https://doi.org/10.1007/s00779-012-0599-x

Schmid, F., & Langerenken, D. (2014). Augmented reality

and GIS: On the possibilities and limits of markerless

AR. In Huerta, J., Schade, S., & Granell, C. (eds.):

Connecting a Digital Europe through Location and

Place. 17th AGILE International Conference on

Geographic Information Science. https://agile-

online.org/conference_paper/cds/agile_2014/agile2014

_87.pdf

Szymanek, L., & Simmons, D. R. (2015). The effectiveness

of augmented reality in enhancing the experience of

visual impact assessment for wind turbine

development. In 38th European Conference on

Visual Perception. Glasgow. https://doi.org/10.7490/

f1000research.1110764.1

Soldati, F. (2021). PeakFinder AR.

http://www.peakfinder.org/mobile

Zamir, A.R., Hakeem, A., Van Gool, L., Shah, M., &

Szeliski, R. (2018). Large-Scale Visual Geo-

Localization. Advances in Computer Vision and Pattern

Recognition, Basel: Springer.

Zollmann, S., Hoppe, C., Kluckner, S., Poglitsch, C.,

Bischof, H., & Reitmayr, G. (2014). Augmented

Reality for Construction Site Monitoring and

Documentation. Proceedings of the IEEE, 102(2), 137-

154. https://doi.org/10.1109/JPROC.2013.2294314
