Small Objects Manipulation in Immersive Virtual Reality

Eros Viola^a, Fabio Solari^b and Manuela Chessa^c

Dept. of Informatics, Bioengineering, Robotics, and Systems Engineering, University of Genoa, Italy

Keywords:

Interaction in VR, Controllers, Leap Motion, VR Gloves, Assembling Task.

Abstract:

In this pilot study, we analyze how users approach the manipulation of small virtual objects using different technologies: the HTC Vive controllers, the Leap Motion, and the Manus Prime haptic gloves. The aim of the study is to quantitatively assess the effectiveness of the three devices in a pick-and-place and simple manipulation task, specifically the assembly of a three-dimensional object composed of several mechanical parts of different shapes and sizes. Twelve subjects performed the proposed experiment in a within-subjects study. We measured the total time to complete the entire task, the partial times, and the errors (the number of objects lost), to understand which actions are the most difficult. Moreover, we assessed the user experience with the User Experience Questionnaire (UEQ) and the System Usability Scale (SUS). Both timing measurements and user experience reveal the weaknesses of the gloves, which suffer from problems in correctly tracking the thumb and, consequently, in allowing grasping actions. Controllers are still a good compromise, though some fine tasks could not be correctly performed. The vision-based solution of the Leap Motion is appreciated by users, and it is stable enough to perform the given task.

1 INTRODUCTION

The field of Virtual Reality (VR) has taken a huge step forward in recent years thanks to the improvement of VR technologies and the availability of low-cost head-mounted displays (HMDs). With this widespread success, there is a growing demand for embodiment and for new interaction techniques for immersive simulation that use the latest available technologies. Indeed, controllers are today the standard tools for interacting in the virtual world, but their use is still a subject of discussion: on the one hand, we are used to them and they provide a stable and effective interaction with objects; on the other hand, they represent a physical link between the real and virtual worlds that could break the illusion of being in VR. Also, they do not provide naturalistic interaction, since the user needs to press a button to perform an action in VR. Other technologies have been studied to find a valid alternative. One example is the Leap Motion^1, a non-wearable hand tracking device with several limitations, such as occlusion, sensitivity to environmental conditions, and a limited field of view (Weichert et al., 2013; Potter et al., 2013). Much more cumbersome solutions, such as gloves with haptic feedback (e.g. the Manus Prime haptic gloves^2), have been developed; they have a high purchase cost but ensure high tracking quality and realistic hand models. The newest solutions include gloves with force feedback, such as Dexmo^3 or the Teslasuit gloves^4.

^a https://orcid.org/0000-0001-7447-7918

^b https://orcid.org/0000-0002-8111-0409

^c https://orcid.org/0000-0003-3098-5894

^1 https://www.ultraleap.com/

Several types of interaction can be performed in a VR environment (e.g. navigation, travel, selection, manipulation, and system control (Frohlich et al., 2006)). In this paper we focus on manipulation, which corresponds to modifying an object's position, orientation, scale, or shape. In particular, we address the problem of manipulating small objects, which is one of the steps necessary to perform manual assembly tasks in industrial contexts (Hoedt et al., 2017; Eriksson et al., 2020), or to perform complex actions in medical and surgical simulation systems (Girau et al., 2019; Mao et al., 2021).

Manipulation of small virtual objects recalls the concept of dexterous manipulation (DM), an area of robotics in which the fingers cooperate with the aim of grasping and manipulating an object (Okamura et al., 2000). In this context, one challenge is to accurately capture the motion and configuration of the human hand and the fine control of the fingertips needed to perform stable grasps and in-hand manipulation (Mizera et al., 2019).

^2 https://www.manus-vr.com/

^3 https://www.dextarobotics.com/

^4 https://teslasuit.io/

Viola, E., Solari, F. and Chessa, M. Small Objects Manipulation in Immersive Virtual Reality. DOI: 10.5220/0010905200003124. In Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2022) - Volume 2: HUCAPP, pages 233-240. ISBN: 978-989-758-555-5; ISSN: 2184-4321. Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In this study, we compare three straightforward approaches: the HTC Vive controllers, the Leap Motion, and the Manus Prime haptic gloves. Even though the HTC Vive controllers do not provide finger tracking as the other two technologies do, we decided to include them since they are among the most widely used types of controllers. The considered task consists of picking up several small virtual objects (models of Meccano^5 components) and positioning them to create a given three-dimensional structure. We measure the total time to complete the task, the partial times (corresponding to picking and correctly positioning the single objects), and the errors, defined as the number of objects involuntarily lost by the users during the task. Moreover, user experience is assessed with the User Experience Questionnaire, the System Usability Scale, and open-ended questions to collect the users' impressions. The considered task has different layers of complexity, ranging from a standard pick-and-place task to more complex manipulation actions, like rotating a washer around a screw. The goal of this study is to analyze the strengths and weaknesses of the three solutions in a quantitative way, opening a further discussion towards the implementation of systems where natural and ecological interaction and manipulation are possible.

2 RELATED WORKS

To manipulate objects in VR in a way similar to what happens in the real world, it is necessary to capture and track the position of the hands' joints in real time and with the highest possible accuracy. This can become a challenging task when manipulation involves small objects, since positioning errors can become large relative to the size of the objects.

Many methods in the literature are kinematic gesture-based approaches, which rely on predefined gestures to trigger actions. Common gestures include circle, swipe, pinch, screen tap, and key tap gestures. One popular example is the Microsoft HoloLens (Avila and Bailey, 2016). While this approach is valid for certain VR interactions and environments, it does not simulate a physically accurate pinching or grasping interaction with an object, and is thus not sufficient for direct object manipulation.

Heuristic-based approaches rely on a priori information about the hands and objects, so object interaction is only possible for a set of predetermined object-hand configurations. This significantly limits the practical application of such an approach in unconstrained environments with unknown objects, where this a priori information is not available (Oprea et al., 2019).

^5 https://www.meccano.com/

It is worth noting that in the real world, our ability to interact with objects is due to the presence of friction between the surfaces of the objects and our hands. Physics-based approaches simulate the forces involved in object grasping to obtain more naturalistic hand interaction in VR. This kind of approach is very accurate but usually computationally expensive, which limits its applicability in VR, where real-time performance is required. Recently, an efficient physics-based approach was proposed in (Höll et al., 2018).

When dealing with interactions in VR, several studies can be found that focus on different aspects of this fundamental action. Some focus on the importance of the size of the hands (Lin et al., 2019) and how it affects the interaction, also considering hand appearance (Van Veldhuizen and Yang, 2021) and finger configurations (Sorli et al., 2021). Others focus on the feedback that can be provided to the user, such as audio and visual feedback, as in (Blaga et al., 2020), which shows how visual thermal feedback influences grasp aperture, grasp location, and grasp type. Furthermore, haptic and force feedback can be provided using gloves or by developing new techniques and technologies (Yoon et al., 2020). Others also focus on normal-sized objects, considering the differences between real and virtual grasping and the level of presence in the environment, in some cases also adding a self-avatar of the user (Viola et al., 2021).

A parallel line of research studies the effect of the hand representation on the level of presence and on the embodiment of the VR user, usually re-implementing in several ways the so-called rubber hand illusion experiment. In (Argelaguet et al., 2016; Lougiakis et al., 2020), the authors pointed out that different hand models can have an impact on both the sense of ownership and performance. For this reason, we decided to use the same robotic hand model for each technology, so that the hand representation does not influence the proposed task.

Considering the methods and devices to track the

users’ hands and fingers, there are works in the lit-

erature aiming to compare the different technological

solutions.

In (Masurovsky et al., 2020; Gusai et al., 2017;

Fahmi et al., 2020; Viola et al., 2021) performed a

HUCAPP 2022 - 6th International Conference on Human Computer Interaction Theory and Applications

234

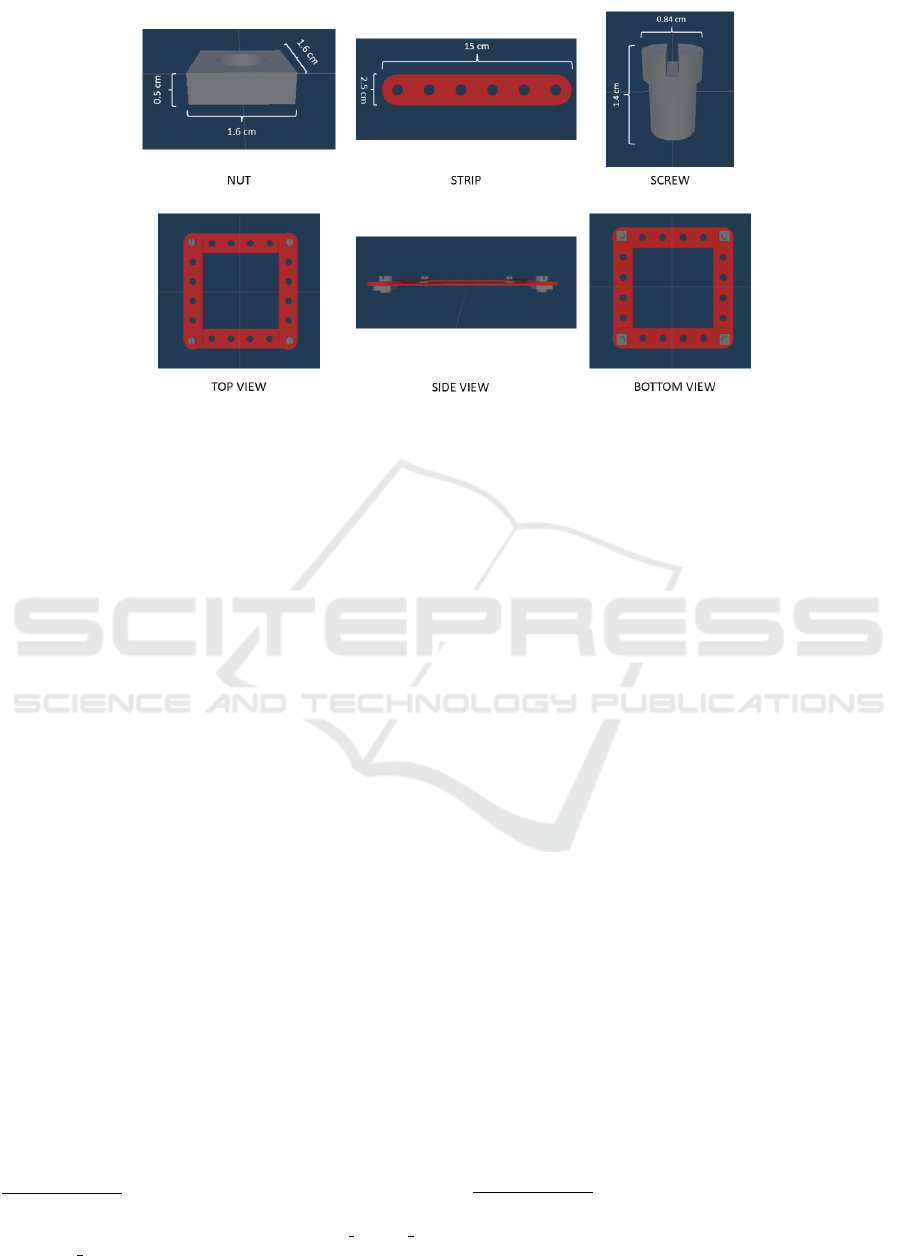

Figure 1: Top: the Meccano parts. Bottom: the final object.

comparative analysis of consumer devices, by consid-

ering grasping and pick-and-place tasks. Neither of

these studies considered the manipulation and the in-

teraction with small objects.

3 MATERIAL AND METHODS

3.1 Hardware Components

The device used for displaying Virtual Reality is the HTC Vive Pro^6. The headset has a refresh rate of 90 Hz and a 110-degree field of view. It uses two OLED panels, one per eye, each with a display resolution of 1440 × 1600 pixels (2880 × 1600 pixels combined). To manage the data flow from the different devices simultaneously, we used a PC equipped with an NVIDIA GeForce RTX 3080 graphics card, an AMD Ryzen 9 5900X processor, 32 GB of RAM, and Microsoft Windows 10 Home 64-bit as the operating system.

For the detection and tracking of the hands and the fingers, we used the Vive controllers, the Leap Motion, and the Manus Prime haptic gloves.

• The Vive controllers use the Lighthouse tracking system with 24 sensors, and have a multi-function trackpad and a dual-stage trigger. In this experiment, we do not use their haptic feedback.

• The Leap Motion^7 is a non-wearable device that acquires images of the hands with two cameras and fits the data to a model of the hands. It then computes the 3D position of each finger and of the center of the hand. It has a field of view of 140° × 120°. As with any vision-based device, it has some limitations, such as occlusions and noise due to illumination and image processing. In our setup, we attached the Leap Motion device to the headset with a 3D-printed support designed for the HTC Vive Pro^8. The SDK version is Orion 4.1.0, and the Unity plugin version is 4.8.0.

• The Manus Prime haptic gloves^9 track the users' hands and fingers by combining the measurements of an HTC Vive tracker (attached to the back of the hand) with the inertial measurements of sensors attached to the fingers. The gloves can provide haptic feedback through linear resonant actuators on the fingertips, but we did not use it in our setup.

^6 https://www.vive.com/us/product/vive-pro-full-kit/

^7 https://www.ultraleap.com/datasheets/Leap_Motion_Controller_Datasheet.pdf

3.2 Software Components

The virtual environment has been implemented in Unity 2019 with the following plugins, used to implement the interaction with the virtual objects: the SteamVR plugin, so that our software is compatible with all the supported HMDs; the Virtual Reality Toolkit (VRTK); the Leap Motion Unity module; and the Manus plugin for Unity. The same 3D model of a robotic hand has been used for all three methods to remove possible influences of the predefined hand models of each technology.

^8 https://www.thingiverse.com/thing:3119186

^9 https://www.unipos.net/download/ManusVR-Prime.pdf


3.3 The Interaction Task

The task consists of building a square using the provided strips, bolts, and nuts. They are part of a standard Meccano set; their shapes and real sizes are shown in Figure 1 (top). The final square to be assembled is shown in Figure 1 (bottom). The Meccano set was chosen because it offers the freedom to create either simple or complex objects from small parts.

All the virtual objects have two Unity components attached: a Rigidbody that simulates real-world physics (i.e. Use Gravity is set to true and Is Kinematic is set to false) and a box collider that matches the object's shape and is set as a trigger.

To pick and place the objects, we use the same hand model for all the interaction devices. It is worth noting that the virtual hands also provide the visual feedback of the interaction for the HTC Vive controllers.

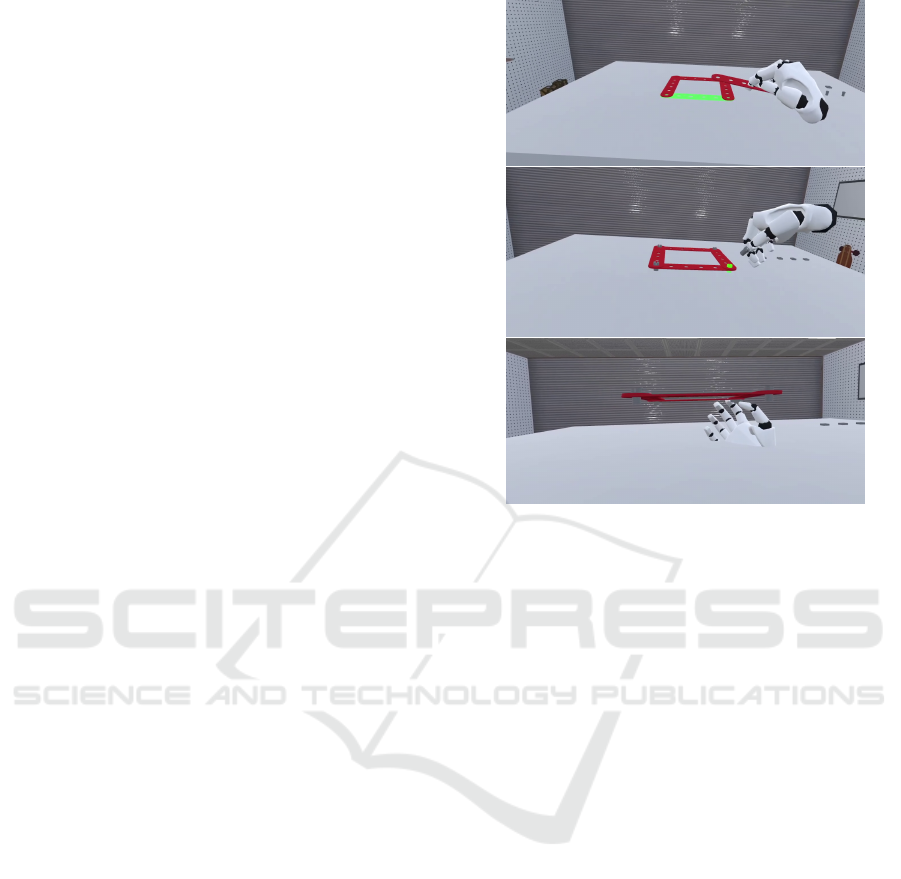

When an object is in its final position, which is highlighted during grasping by a green light in the shape of the object (see Fig. 2), we constrain the rigid body's position and rotation and set Is Kinematic to true. In each scene, both strips and bolts use a lerp (linear interpolation) function to slowly move to the correct position and rotation when they are close enough to the green area in Fig. 2. Since the rotation of the nuts involves the use of the fingers, and is not easy to implement with the HTC Vive controllers, we implemented it in the following way:

• HTC Vive Controllers: We use gestures to detect whether the controller is rotating counterclockwise or clockwise in order to move the nut to the correct position. This is the common way of implementing this type of interaction in industrial applications.

• Leap Motion/Manus Gloves: The user rotates the nut with the index finger through colliders. We then detect whether the nut is rotating counterclockwise or clockwise and move it up or down accordingly.
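The snapping and nut-rotation logic described in this section can be sketched independently of Unity. The following Python is only a minimal illustration under stated assumptions, not the implementation used in the experiment: the snap radius, the interpolation factor, the thread pitch, the sign convention (counterclockwise is positive), and all function names are hypothetical and introduced here for clarity.

```python
import math

SNAP_RADIUS = 0.05    # assumed distance (m) within which a part is pulled to its target
LERP_FACTOR = 0.1     # assumed fraction of the remaining distance covered per frame
THREAD_PITCH = 0.002  # assumed axial travel (m) of the nut per full turn


def lerp(a, b, t):
    """Linear interpolation between two 3D points a and b with weight t."""
    return tuple(ai + (bi - ai) * t for ai, bi in zip(a, b))


def step_towards_target(position, target):
    """Move a part one frame closer to its target if it is within the snap radius."""
    if math.dist(position, target) > SNAP_RADIUS:
        return position  # too far away: leave the part under user control
    return lerp(position, target, LERP_FACTOR)


def signed_turn(prev_angle, curr_angle):
    """Smallest signed angle (radians) from prev to curr; positive is counterclockwise."""
    delta = curr_angle - prev_angle
    return math.atan2(math.sin(delta), math.cos(delta))


def nut_travel(prev_angle, curr_angle):
    """Axial displacement of the nut produced by one frame of rotation."""
    return signed_turn(prev_angle, curr_angle) / (2 * math.pi) * THREAD_PITCH
```

Calling `step_towards_target` once per frame yields the slow approach to the highlighted position, while the sign of `nut_travel` distinguishes the two rotation directions; which direction corresponds to moving the nut up or down is a design choice not specified in the text.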

4 EXPERIMENT

4.1 Participants

To compare the three devices in the proposed task, we performed a within-subjects experimental session and collected data from 12 subjects (10 males, 2 females). The participants, aged from 24 to 54 (30.25 ± 8.65 years) and with normal or corrected-to-normal vision, all had low to medium experience with VR and the technologies used. Each subject performed all the experimental conditions in a randomized order to limit learning or habituation effects.

Figure 2: Three snapshots of the assembling task. Top: positioning of a strip. Center: positioning of a screw. Bottom: positioning of a nut. The final positions are highlighted in green.
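The randomized ordering of conditions described in Section 4.1 could be generated as in the following sketch. It is an assumption about one reasonable procedure, not a description of how the order was actually drawn; the function name `device_order` and the seeding scheme are hypothetical.

```python
import random

DEVICES = ["Vive Controllers", "Leap Motion", "Manus Gloves"]


def device_order(participant_id, seed=0):
    """Return a reproducible random ordering of the three devices for one participant."""
    # Seeding with a string keeps each participant's order stable across runs.
    rng = random.Random(f"{seed}-{participant_id}")
    order = DEVICES[:]
    rng.shuffle(order)
    return order


if __name__ == "__main__":
    for pid in range(1, 13):
        print(pid, device_order(pid))
```

With 12 participants and 6 possible orders, an alternative design would be full counterbalancing, assigning each order to exactly two participants; the text only states that the order was randomized.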

4.2 Procedure

The experiment is performed as follows. Before starting, the experimenter shows how to properly wear the HMD and how to wear or use the given hand tracking device, and explains the task. The user has to complete the assembly task, which consists of grabbing and interacting with Meccano pieces (strips, bolts, and nuts) with the aim of building a square. The simulation starts when the user presses a button on the table in front of them. At this point, the user should grasp the pieces that appear and put them in the highlighted areas. The task ends when all the pieces are correctly positioned. At the end of the assembly task, the user removes the HMD and is asked to fill in the questionnaires.

4.3 Measurements

During the task, both the total time to completion (TTC) and the partial time for each piece were recorded. The number of times a piece fell from the user's hands was also recorded and used as the number of errors.


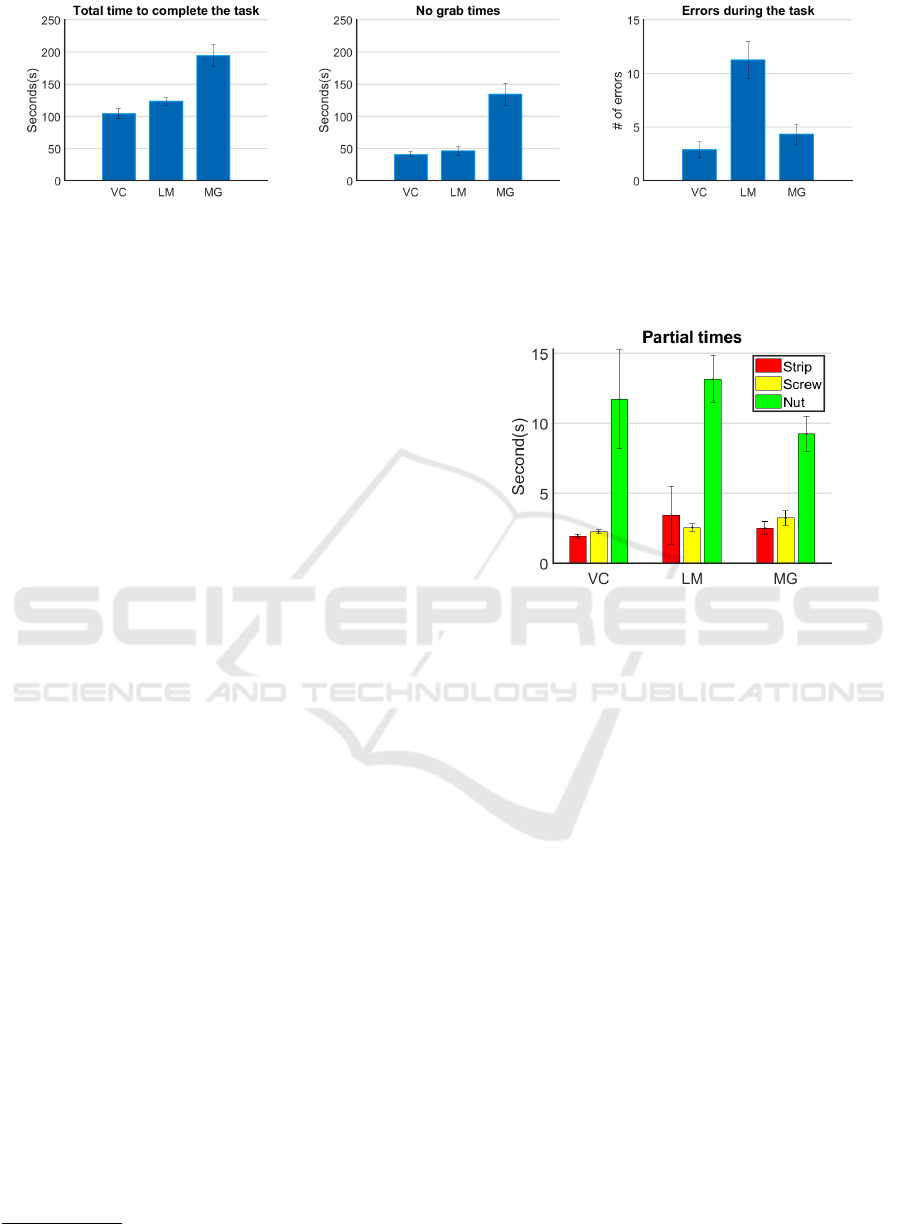

(a) (b) (c)

Figure 3: (a) Time (seconds) to complete the task. (b) Time (seconds) without a grabbed object. (c) The number of errors.

Values are averaged across participants and the standard error of the mean is shown. VC: Vive Controllers, LM: Leap Motion,

MG: Manus Gloves.
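The quantities plotted in Figure 3 are per-device means with the standard error of the mean (SEM). As a minimal sketch of that aggregation, assuming the SEM is computed from the sample standard deviation (the text does not state which estimator was used), a hypothetical helper `mean_and_sem` could look like this:

```python
import math
import statistics


def mean_and_sem(values):
    """Return (mean, standard error of the mean) for a list of per-participant values."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    # SEM = sample standard deviation divided by the square root of the sample size.
    return statistics.mean(values), statistics.stdev(values) / math.sqrt(n)
```

The same function would apply unchanged to completion times, times without a grabbed object, and error counts, with one value per participant and device.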

After each trial with a device, the users fill in the User Experience Questionnaire (UEQ) (Laugwitz et al., 2008) and the System Usability Scale (SUS) (Lewis, 2018).

The UEQ consists of 26 statements, to be evaluated by the users with a score from 1 to 7. The statements are then analyzed with respect to six domains: attractiveness, perspicuity, efficiency, dependability, stimulation, and novelty. In this paper, we used the tools provided by the developers and available on the official website^10.

The SUS is a 10-item questionnaire with five response options per item, ranging from Strongly disagree to Strongly agree. The score is computed by converting each answer to a contribution between 0 and 4 (the answer minus 1 for positively worded items, 5 minus the answer for negatively worded items), summing the contributions, and multiplying the sum by 2.5, which yields a value between 0 and 100. The literature reports an average SUS score of 68, so systems that obtain a score higher than 68 are above average. In particular, systems above 80 are highly recommended, while systems under 51 must be carefully checked before use.
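The SUS scoring rule can be made concrete with a short sketch. It assumes the standard SUS layout, in which odd-numbered items are positively worded and even-numbered items are negatively worded, and answers coded from 1 (Strongly disagree) to 5 (Strongly agree); the function name `sus_score` is introduced here for illustration.

```python
def sus_score(responses):
    """Compute the SUS score (0-100) from ten answers on a 1-5 scale, item 1 first."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for index, r in enumerate(responses):
        if index % 2 == 0:
            total += r - 1   # items 1, 3, 5, 7, 9: positively worded
        else:
            total += 5 - r   # items 2, 4, 6, 8, 10: negatively worded
    return total * 2.5
```

A per-device score is then obtained by averaging `sus_score` over the participants.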

5 RESULTS

Figure 3(a) shows the total time to complete the task with the three devices. The Vive Controllers (VC) and the Leap Motion (LM) have similar performance, while with the Manus Gloves (MG) users need almost twice as long to complete the task (a t-test confirmed the statistical difference, with p < 0.01). It is interesting to note in Figure 3(b) that the difference arises because most of the time in the MG condition is spent without grabbing any object. This is confirmed by the observation of the experiment: with the Manus Gloves, users experienced many problems in grabbing the objects. This is due to a specific malfunction of the gloves that may occur during the experiment: it is often impossible to close the thumb and perform a real pinch action. For this reason, people spent a large amount of time trying to grab the objects. Once the objects are grabbed, positioning and all the other actions are very easy to complete.

^10 https://www.ueq-online.org/

Figure 4: Partial time (in seconds, averaged across participants, with standard error of the mean) needed to put each of the three considered objects in the correct position. VC: Vive Controllers, LM: Leap Motion, MG: Manus Gloves.
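The comparison of completion times in this section relies on a t-test whose variant is not specified in the text. Since the design is within-subjects, a paired-samples test is a natural candidate; the sketch below assumes that choice and computes only the t statistic, to be compared against a tabulated critical value. The names `paired_t` and `T_CRIT_DF11_P01` are hypothetical.

```python
import math
import statistics

# Two-tailed critical value of Student's t for 11 degrees of freedom at alpha = 0.01
# (12 participants give 11 degrees of freedom in a paired test).
T_CRIT_DF11_P01 = 3.106


def paired_t(a, b):
    """Return (t statistic, degrees of freedom) of a paired-samples t-test of a vs. b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long samples with at least two pairs")
    diffs = [x - y for x, y in zip(a, b)]
    sd = statistics.stdev(diffs)
    if sd == 0:
        raise ValueError("all paired differences are identical; t is undefined")
    n = len(diffs)
    return statistics.mean(diffs) / (sd / math.sqrt(n)), n - 1
```

With one completion time per participant and device, `abs(t) > T_CRIT_DF11_P01` would correspond to p < 0.01 for 12 participants under the assumptions above.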

Considering the errors, i.e. the number of objects lost by the users during the action (Figure 3(c)), the performance of the Vive Controllers and of the Manus Gloves is similar. Indeed, they use the same tracking technology, and errors are mainly due to tracking instability, which mainly affects the Leap Motion.

The experiment has been designed to consider the

manipulation of three objects with different features.

The positioning of the strip is a simple pick-and-

place task, performed with a quite big object. The

three devices have similar performance (see Fig. 4 red

bars). Also the positioning of the screw is a pick-

and-place task, though the objects are considerably

smaller. Again, the three devices perform similarly

(see Fig. 4 yellow bars). The correct positioning of

the nut requires more time (see Fig. 4 green bars),

and for this specific task performances of the Manus

Gloves are better than the other devices. In all the

Small Objects Manipulation in Immersive Virtual Reality

237

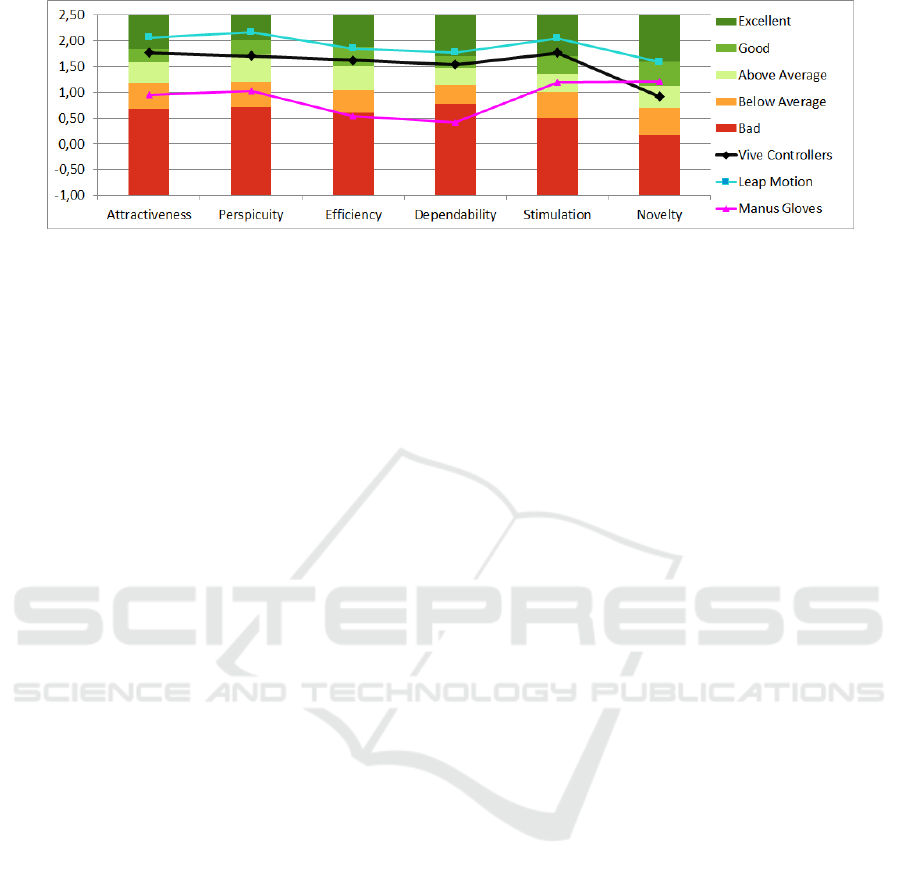

Figure 5: User experience evaluation with the UEQ questionnaire for the three considered devices. Mean values, averaged

across all the participants, are plotted on the visualization scales provided by the official website.

cases, variability among users is very high, indicating

a subjectivity in using the devices and performing the

task.

The user experience evaluation with the UEQ reported good results in terms of attractiveness, perspicuity, efficiency, dependability, and stimulation for the Vive Controllers. For the same aspects, the Leap Motion obtained an excellent evaluation. Users also rated the Leap Motion better in terms of novelty. The Manus Gloves are in general below average in all the evaluation domains, except for stimulation and novelty. Figure 5 shows the mean values, averaged across all the participants, plotted on the visualization scales provided by the official UEQ website.

Similar results are obtained with the SUS. Both the Vive Controllers and the Leap Motion are above the average threshold of 68: the controllers obtained a score of 77 and the Leap Motion a score of 84. The Manus Gloves obtained a score of 54, which is below the threshold.

6 CONCLUSION

In this paper, we have analyzed three consumer devices for tracking the users' hands and fingers, and thus for interacting in VR environments, by considering an assembly task with small objects.

This work is motivated by the fact that one of the main application domains of immersive VR is training, e.g. in industrial contexts.

The 12 participants were all able to complete the required task, but the analysis of the timings shows that the Manus Gloves require almost twice as much time. Further analysis shows that the main problem of the gloves is that the thumbs are not tracked correctly, which hampers grasping actions. This was also reported by almost all the users in a post-experiment interview, and it affects the user experience evaluation performed with the UEQ and the SUS. It is worth noting that commercial gloves are available in several sizes and need an ad hoc calibration for each user (Caeiro-Rodríguez et al., 2021). We performed the calibration for each user, but our gloves are of a standard size, so the fit may not be ideal for all users. Actions not involving the use of the thumb were performed correctly and quickly (e.g. the assembly of the nuts). The Vive Controllers have similar performance to the Leap Motion in terms of timing (total time, time without a grabbed object, and partial times). The vision-based technique of the Leap Motion makes the device less robust in terms of the number of errors: when the tracking is too noisy, objects can fall. Nevertheless, users appreciate this device.

The lesson learned from this experiment is that none of the considered devices seems to allow us to interact in an ecological way in virtual environments. Indeed, the task was simplified by adding visual hints (highlighting the final position of the objects in green) and by moving the objects to their final position when they get close to it. It would be interesting to remove such hints and to investigate interaction in more complex tasks and with smaller objects.

It is worth noting that researchers are now pursuing physically-based approaches, thus improving the grasping of small and complex objects in VR (Delrieu et al., 2020).

Finally, the naturalness of actions in VR should be analyzed with more complex metrics, e.g. by looking at the users' behaviour, as in (Ragusa et al., 2021).

ACKNOWLEDGEMENTS

This work has been partially supported by the Interreg Alcotra projects PRO-SOL We-Pro (n. 4298) and CLIP E-Santé (n. 4793).


REFERENCES

Argelaguet, F., Hoyet, L., Trico, M., and Lecuyer, A. (2016). The role of interaction in virtual embodiment: Effects of the virtual hand representation. In 2016 IEEE Virtual Reality (VR), pages 3–10.

Avila, L. and Bailey, M. (2016). Augment your reality. IEEE computer graphics and applications, 36(1):6–7.

Blaga, A. D., Frutos-Pascual, M., Creed, C., and Williams, I. (2020). Too hot to handle: An evaluation of the effect of thermal visual representation on user grasping interaction in virtual reality. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, pages 1–16.

Caeiro-Rodríguez, M., Otero-González, I., Mikic-Fonte, F. A., and Llamas-Nistal, M. (2021). A systematic review of commercial smart gloves: Current status and applications. Sensors, 21(8):2667.

Delrieu, T., Weistroffer, V., and Gazeau, J. P. (2020). Precise and realistic grasping and manipulation in virtual reality without force feedback. In 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pages 266–274. IEEE.

Eriksson, Y., Sjölinder, M., Wallberg, A., and Söderberg, J. (2020). VR for assembly tasks in the manufacturing industry–interaction and behaviour. In Proceedings of the Design Society: DESIGN Conference, volume 1, pages 1697–1706. Cambridge University Press.

Fahmi, F., Tanjung, K., Nainggolan, F., Siregar, B., Mubarakah, N., and Zarlis, M. (2020). Comparison study of user experience between virtual reality controllers, leap motion controllers, and senso glove for anatomy learning systems in a virtual reality environment. In IOP Conference Series: Materials Science and Engineering, volume 851, page 012024. IOP Publishing.

Frohlich, B., Hochstrate, J., Kulik, A., and Huckauf, A. (2006). On 3d input devices. IEEE computer graphics and applications, 26(2):15–19.

Girau, E., Mura, F., Bazurro, S., Casadio, M., Chirico, M., Solari, F., and Chessa, M. (2019). A mixed reality system for the simulation of emergency and first-aid scenarios. In 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), pages 5690–5695. IEEE.

Gusai, E., Bassano, C., Solari, F., and Chessa, M. (2017). Interaction in an immersive collaborative virtual reality environment: a comparison between Leap Motion and HTC controllers. In International Conference on Image Analysis and Processing, pages 290–300. Springer.

Hoedt, S., Claeys, A., Van Landeghem, H., and Cottyn, J. (2017). The evaluation of an elementary virtual training system for manual assembly. International Journal of Production Research, 55(24):7496–7508.

Höll, M., Oberweger, M., Arth, C., and Lepetit, V. (2018). Efficient physics-based implementation for realistic hand-object interaction in virtual reality. In 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pages 175–182. IEEE.

Laugwitz, B., Held, T., and Schrepp, M. (2008). Construction and evaluation of a user experience questionnaire. In Symposium of the Austrian HCI and usability engineering group, pages 63–76. Springer.

Lewis, J. R. (2018). The system usability scale: Past, present, and future. International Journal of Human–Computer Interaction, 34(7):577–590.

Lin, L., Normoyle, A., Adkins, A., Sun, Y., Robb, A., Ye, Y., Di Luca, M., and Jörg, S. (2019). The effect of hand size and interaction modality on the virtual hand illusion. In 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pages 510–518.

Lougiakis, C., Katifori, A., Roussou, M., and Ioannidis, I.-P. (2020). Effects of virtual hand representation on interaction and embodiment in hmd-based virtual environments using controllers. In 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pages 510–518. IEEE.

Mao, R. Q., Lan, L., Kay, J., Lohre, R., Ayeni, O. R., Goel, D. P., et al. (2021). Immersive virtual reality for surgical training: a systematic review. Journal of Surgical Research, 268:40–58.

Masurovsky, A., Chojecki, P., Runde, D., Lafci, M., Przewozny, D., and Gaebler, M. (2020). Controller-free hand tracking for grab-and-place tasks in immersive virtual reality: Design elements and their empirical study. Multimodal Technologies and Interaction, 4(4):91.

Mizera, C., Delrieu, T., Weistroffer, V., Andriot, C., Decatoire, A., and Gazeau, J.-P. (2019). Evaluation of hand-tracking systems in teleoperation and virtual dexterous manipulation. IEEE Sensors Journal, 20(3):1642–1655.

Okamura, A. M., Smaby, N., and Cutkosky, M. R. (2000). An overview of dexterous manipulation. In Proceedings 2000 ICRA. Millennium Conference. IEEE International Conference on Robotics and Automation. Symposia Proceedings (Cat. No. 00CH37065), volume 1, pages 255–262. IEEE.

Oprea, S., Martinez-Gonzalez, P., Garcia-Garcia, A., Castro-Vargas, J. A., Orts-Escolano, S., and Garcia-Rodriguez, J. (2019). A visually realistic grasping system for object manipulation and interaction in virtual reality environments. Computers & Graphics, 83:77–86.

Potter, L. E., Araullo, J., and Carter, L. (2013). The leap motion controller: a view on sign language. In Proceedings of the 25th Australian computer-human interaction conference: augmentation, application, innovation, collaboration, pages 175–178.

Ragusa, F., Furnari, A., Livatino, S., and Farinella, G. M. (2021). The Meccano dataset: Understanding human-object interactions from egocentric videos in an industrial-like domain. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pages 1569–1578.

Sorli, S., Casas, D., Verschoor, M., Tajadura-Jiménez, A., and Otaduy, M. A. (2021). Fine virtual manipulation with hands of different sizes. In Proc. of the International Symposium on Mixed and Augmented Reality (ISMAR).


Van Veldhuizen, M. and Yang, X. (2021). The effect of semi-transparent and interpenetrable hands on object manipulation in virtual reality. IEEE Access, 9:17572–17583.

Viola, E., Solari, F., and Chessa, M. (2021). Self representation and interaction in immersive virtual reality. In VISIGRAPP (2: HUCAPP), pages 237–244.

Weichert, F., Bachmann, D., Rudak, B., and Fisseler, D. (2013). Analysis of the accuracy and robustness of the leap motion controller. Sensors, 13(5):6380–6393.

Yoon, Y., Moon, D., and Chin, S. (2020). Fine tactile representation of materials for virtual reality. Journal of Sensors, 2020.
