Underwater Image Enhancement by the Retinex Inspired Contrast Enhancer STRESS

Michela Lecca^a

Fondazione Bruno Kessler, Digital Industry Center, via Sommarive 18, 38123 Trento, Italy

Keywords: Underwater Images, Image Enhancement, Retinex.

Abstract:

Underwater images are often affected by undesired effects, such as noise, color casts and poor detail visibility, which hamper the understanding of the image content. This work proposes to improve the quality of such images by means of STRESS, a Retinex-inspired contrast enhancer originally designed to process general, real-world pictures. STRESS, which is based on local spatial color processing inspired by the human vision mechanism, is tested here and compared with other approaches on the public underwater image dataset UIEB. The experiments show that, in general, STRESS remarkably increases the quality of the input image while preserving its local structure. The images enhanced by STRESS are released for free to enable visual inspection, further analysis and comparisons.

1 INTRODUCTION

Underwater imaging is an important support for navigation and for many activities of marine exploration, such as rescue missions, ecological monitoring, marine life studies and inspections of man-made structures (Manzanilla et al., 2019), (Bonin-Font et al., 2008), (Selby et al., 2011), (Lu et al., 2017), (Pedersen et al., 2019), (Matos et al., 2016), (McLellan, 2015), (Sheehan et al., 2020), (Ho et al., 2020), (Yin, 2021). All these tasks require images with good visibility of details and content, but unfortunately underwater images are usually affected by low light, non-uniform illumination, a green-bluish color cast, haze and noise. These issues are mainly due to three physical phenomena affecting the transmission of light through water. First, in water the red component is absorbed faster than the green and blue ones, which generates the green-bluish color that characterizes underwater images. Second, there is background light scattering, i.e. the diffusion of light from sources other than the object of interest, including suspended particles that often generate noise. Third, there is forward light scattering, i.e. the deviation of light from its original direction of propagation, which attenuates the light energy and further reduces the visibility of the details and content of the observed scene.

^a https://orcid.org/0000-0001-7961-0212

Many image enhancement techniques have been developed, and two recent, interesting reviews are presented in (Li et al., 2019a), (Wang et al., 2019). In (Li et al., 2019a), these techniques are divided into two groups, namely the IFM-free image enhancement methods and the IFM-based image restoration methods, where 'IFM' stands for image formation model. The methods of the first class enhance underwater images without considering any physical model of image formation in the marine environment. They usually process the input image by reworking its color distribution and contrast. Some examples of these methods are the works in (Hummel, 1977), (Zuiderveld, 1994), (Iqbal et al., 2010), (Huang et al., 2018), (Vasamsetti et al., 2017), (Jamadandi and Mudenagudi, 2019), (Fabbri et al., 2018). In contrast, the methods of the second class rely on the physical principles of the underwater imaging process. Basically, they assume that the image signal is determined by two energy components, related respectively to the light directly transmitted from the observed scene to the camera and to the background light scattering. Some examples of these methods are the works in (Chao and Wang, 2010), (Serikawa and Lu, 2014), (Zhao et al., 2015), (Li et al., 2016a), (Li et al., 2016b), (Wang et al., 2017), (Shi et al., 2018), (Barbosa et al., 2018). However, despite the many efforts on underwater image enhancement, this task is not yet solved and remains an active research field today.
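For reference, the two energy components mentioned above are often written in the following simplified form. This is a commonly used textbook formulation, given here only as an illustration and not taken from any specific work cited above. The observed intensity of channel c at pixel x mixes the scene radiance $J_c(x)$, attenuated by a transmission map $t_c(x) \in [0, 1]$, with a background (veiling) light $A_c$:

$$I_c(x) = J_c(x)\, t_c(x) + A_c \bigl(1 - t_c(x)\bigr)$$

The first term models the light directly transmitted from the scene to the camera and the second one the background scattering. IFM-based methods estimate $t_c$ and $A_c$ and invert this relation to recover $J_c$, whereas IFM-free methods work on the observed intensities without modeling them this way.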

This work investigates the use of the Retinex-inspired, real-world image enhancer STRESS (Kolås et al., 2011) as an IFM-free tool for improving the quality of underwater pictures. STRESS basically implements a local equalization of the red, green and blue channels. For each channel and for each pixel x, STRESS randomly samples a set of pixels around x and stretches the channel intensity distribution around x between two values selected from the sampled pixels. These values vary from pixel to pixel and form two surfaces, called envelopes, that bound the channel. Stretching the channel between its envelopes increases the contrast, granting a better visibility of the image details and content. The name STRESS is an acronym of the expression 'Spatio-Temporal Retinex-inspired Envelope with Stochastic Sampling', which refers to the main characteristics of this algorithm and to the fact that it was originally applied to the enhancement of time sequences. Developed as a contrast enhancer for general, real-world images, STRESS is tested here as an IFM-free underwater image enhancer on the popular public dataset UIEB (Li et al., 2019b), which contains 890 marine images with corresponding high-quality references. The experiments show that STRESS remarkably improves the quality of the input image, increasing its contrast and dynamic range while preserving its original structure as much as possible. Moreover, compared with other algorithms of the same class, STRESS exhibits similar or better performance.

Lecca, M. Underwater Image Enhancement by the Retinex Inspired Contrast Enhancer STRESS. DOI: 10.5220/0010895700003124. In Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2022) - Volume 4: VISAPP, pages 627-634. ISBN: 978-989-758-555-5; ISSN: 2184-4321. Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved.

The paper is organized as follows: Section 2 describes STRESS; Section 3 explains how STRESS is evaluated in the framework of underwater image enhancement; Section 4 reports the results, while Section 5 draws some conclusions and outlines future work.

2 STRESS

STRESS is a spatial color algorithm inspired by Retinex theory (Land et al., 1971), (Land, 1964).

Figure 1: An example of simultaneous contrast: the same triangle is displayed against two different backgrounds, but it appears brighter on the left than on the right. This is because the color of a point as perceived by humans is influenced by the surrounding colors.

Retinex was developed by Land and McCann between the 1960s and the early 1970s as a model to predict how humans see colors. Based on a body of empirical evidence, Retinex highlighted that the human vision system and cameras work differently. In fact, when looking at a point of a scene, humans and cameras may report very different colors, as the simultaneous contrast phenomenon proves (see Figure 1). This is because the color of a point as perceived by the human vision system depends not only on the photometric properties of that point, but also on the surrounding colors. This fact led Land and McCann to reproduce the human color sensation from a digital image by an algorithm, namely the Retinex algorithm, which reworks the color of each pixel based on the spatial distribution of the colors of its neighbors. Local color processing is performed independently on the red, green and blue channels of the input image. This is in line with the empirical evidence that humans acquire and process the short, medium and long wavelengths of light separately. The Retinex algorithm has been widely studied because its local spatial color analysis can be used to improve the visibility of image details and content, as well as to attenuate or even remove possible color casts due to the light. Many variants of this processing mechanism have been developed and others are still under development, proposing different approaches to combine local spatial and color information with the final purpose of enhancing the input image.

VISAPP 2022 - 17th International Conference on Computer Vision Theory and Applications

STRESS arises from the Milano Retinex family (Rizzi and Bonanomi, 2017), (Lecca, 2020), a set of algorithms grounded on Retinex principles and mainly used for image enhancement. These algorithms differ from each other in the definition of the spatial support over which the local spatial color distribution is computed, in the way the spatial and channel intensity information are combined to improve the input image, and consequently in the final enhancement level. Precisely, STRESS is a variant of the Milano Retinex algorithm Random Spray Retinex (RSR) (Provenzi et al., 2007). RSR takes a color image as input and works separately on its color channels. For each channel I of the input image and for each pixel x of I with channel intensity I(x), RSR computes N random sprays, i.e. N sets $S_1(x), \dots, S_N(x)$ of k pixels randomly selected with a radial distribution around x. RSR extracts from each $S_i(x)$ the maximum intensity value $M_i$ and maps the intensity I(x) onto a new value L(x), called the lightness of I at x and defined as:

$$L(x) = \frac{1}{N} \sum_{i=1}^{N} \frac{I(x)}{M_i} \tag{1}$$

where I is suitably preprocessed to prevent division by zero. The number k models the locality of the color processing: the larger k, the more points are sampled around x, and the more of them fall close to x. Considering more than one spray (i.e. N > 1) helps mitigate the chromatic noise possibly due to the random sampling. The final enhanced image is obtained by rescaling the values of each channel lightness over {0, ..., 255} and packing the rescaled lightnesses into an RGB image. Rescaling the value I(x) by the maximum value over each spray stretches the local distribution of the channel intensity, increasing the channel brightness and contrast and, according to (Lecca, 2014), reducing possible chromatic dominants of the light.
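The RSR mapping of Eq. (1) can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the implementation of (Provenzi et al., 2007): spray points are drawn with uniform radius (up to the image diagonal) and uniform angle, out-of-image points are clipped to the border, the pixel x is included in each spray so that every ratio is at most 1, the channel is offset by 1 to prevent division by zero, and the lightness is mapped linearly onto {0, ..., 255}. The function names `sample_sprays`, `rsr_channel` and `rsr` are hypothetical.

```python
import numpy as np


def sample_sprays(h, w, k, radius, rng):
    """Draw k random positions around every pixel (one spray per pixel).

    Assumption: radius and angle are drawn uniformly, which concentrates the
    samples near the centre (a radial distribution); positions falling outside
    the image are clipped to the border.
    """
    r = rng.uniform(0.0, radius, size=(k, h, w))
    theta = rng.uniform(0.0, 2.0 * np.pi, size=(k, h, w))
    ys, xs = np.mgrid[0:h, 0:w]
    py = np.clip(np.rint(ys + r * np.sin(theta)), 0, h - 1).astype(int)
    px = np.clip(np.rint(xs + r * np.cos(theta)), 0, w - 1).astype(int)
    return py, px


def rsr_channel(channel, n_sprays=10, k=250, seed=0):
    """Lightness L(x) of Eq. (1) for one channel with values in 0..255."""
    rng = np.random.default_rng(seed)
    # Offset by 1 so that no spray maximum is zero (division-by-zero guard).
    I = channel.astype(np.float64) + 1.0
    h, w = I.shape
    radius = np.hypot(h, w)  # assumption: spray radius = image diagonal
    L = np.zeros_like(I)
    for _ in range(n_sprays):
        py, px = sample_sprays(h, w, k, radius, rng)
        # M_i: spray maximum; x itself is included so that I(x) / M_i <= 1.
        M = np.maximum(I[py, px].max(axis=0), I)
        L += I / M
    return L / n_sprays


def rsr(image, n_sprays=10, k=250, seed=0):
    """Apply RSR channel-wise to an H x W x 3 uint8 image."""
    out = np.empty(image.shape, dtype=np.uint8)
    for c in range(image.shape[2]):
        L = rsr_channel(image[..., c], n_sprays, k, seed + c)
        # L lies in (0, 1]; map it linearly onto {0, ..., 255}.
        out[..., c] = np.clip(np.rint(255.0 * L), 0, 255).astype(np.uint8)
    return out
```

For example, `rsr(img, n_sprays=10, k=250)` processes an H x W x 3 uint8 array with the parameters used in Section 4. Drawing all k samples for every pixel at once keeps the code short, but the (k, H, W) index arrays grow with the image size, so large images would need to be processed in tiles.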

The STRESS algorithm originated from RSR with the aim of further improving the image contrast. Like RSR, STRESS processes the channels of the input image separately and, for each channel I, it implements the pixel-wise random sampling scheme of RSR. Unlike RSR, STRESS extracts both the minimum and the maximum intensity of each spray sampled on I and uses these values to define two surfaces $E_{low}$ and $E_{up}$, called envelopes, that bound the channel. The channel intensities are then stretched pixel-wise between the corresponding values of the lower and upper envelopes. This operation stretches the local distribution of I, thus increasing the contrast of the image and improving the visibility of the image details.

Mathematically, given a pixel x of I and the N sprays $S_i(x)$ (i = 1, ..., N), let $E^i_{low}(x)$ and $E^i_{up}(x)$ be the minimum and maximum intensities over the spray $S_i(x)$, and let $R_i(x)$ be their distance, i.e.:

$$E^i_{low}(x) = \min\{I(y) : y \in S_i(x)\}, \tag{2}$$

$$E^i_{up}(x) = \max\{I(y) : y \in S_i(x)\}, \tag{3}$$

$$R_i(x) = E^i_{up}(x) - E^i_{low}(x). \tag{4}$$

STRESS maps the value I(x) onto a new value $v_i(x)$, obtained by stretching I(x) between $E^i_{low}(x)$ and $E^i_{up}(x)$ when these are different, and set to 0.5 (i.e. the middle of the intensity range, normalized to [0, 1]) otherwise:

$$v_i(x) = \begin{cases} \dfrac{1}{2} & \text{if } R_i(x) = 0 \\[4pt] \dfrac{I(x) - E^i_{low}(x)}{R_i(x)} & \text{otherwise} \end{cases} \tag{5}$$

As in RSR, multiple sprays are considered to reduce undesired effects of the random sampling. Specifically, the values $R_i(x)$ and $v_i(x)$ are averaged over the N sprays:

$$R(x) = \frac{1}{N} \sum_{i=1}^{N} R_i(x), \qquad v(x) = \frac{1}{N} \sum_{i=1}^{N} v_i(x). \tag{6}$$

The envelopes containing the channel I are computed as follows:

$$E_{low}(x) = I(x) - R(x)\, v(x), \tag{7}$$

$$E_{up}(x) = E_{low}(x) + R(x), \tag{8}$$

and the value I(x) is mapped onto the value STRESS(x), defined as:

$$\mathrm{STRESS}(x) = \begin{cases} \dfrac{1}{2} & \text{if } E_{up}(x) = E_{low}(x) \\[4pt] \dfrac{I(x) - E_{low}(x)}{E_{up}(x) - E_{low}(x)} & \text{otherwise} \end{cases} \tag{9}$$
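Substituting Eqs. (7) and (8) into Eq. (9) makes the role of the envelopes explicit. Whenever $R(x) > 0$, Eq. (8) gives $E_{up}(x) - E_{low}(x) = R(x)$ and Eq. (7) gives $I(x) - E_{low}(x) = R(x)\, v(x)$, so the output reduces to the averaged stretched value of Eq. (6):

$$\mathrm{STRESS}(x) = \frac{I(x) - E_{low}(x)}{E_{up}(x) - E_{low}(x)} = \frac{R(x)\, v(x)}{R(x)} = v(x).$$

If instead $R(x) = 0$, then every $R_i(x) = 0$ (each $R_i(x) \ge 0$ by Eqs. (2)-(4)), hence every $v_i(x) = \tfrac{1}{2}$ by Eq. (5), and again $\mathrm{STRESS}(x) = \tfrac{1}{2} = v(x)$.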

Figure 2 shows an image and its enhancement by STRESS, along with an example of a random spray computed around the barycenter of the red channel pixels and the lower and upper envelopes of the red channel.
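Eqs. (2)-(9) translate almost line by line into array code. The NumPy sketch below is an illustration under stated assumptions, not the reference implementation of (Kolås et al., 2011): sprays are drawn with uniform radius (up to the image diagonal) and uniform angle, the centre pixel is included in every spray, out-of-image samples are clipped to the border, and channel values are normalized to [0, 1]. The function names `sample_sprays`, `stress_channel` and `stress` are hypothetical; the defaults N = 10 and k = 250 match the setting used in Section 4.

```python
import numpy as np


def sample_sprays(h, w, k, radius, rng):
    """k random positions around every pixel, radius and angle drawn
    uniformly (assumed radial distribution), clipped to the image."""
    r = rng.uniform(0.0, radius, size=(k, h, w))
    theta = rng.uniform(0.0, 2.0 * np.pi, size=(k, h, w))
    ys, xs = np.mgrid[0:h, 0:w]
    py = np.clip(np.rint(ys + r * np.sin(theta)), 0, h - 1).astype(int)
    px = np.clip(np.rint(xs + r * np.cos(theta)), 0, w - 1).astype(int)
    return py, px


def stress_channel(channel, n_sprays=10, k=250, seed=0):
    """Return STRESS(x) (Eq. 9) and the envelopes (Eqs. 7-8) for one channel."""
    rng = np.random.default_rng(seed)
    I = channel.astype(np.float64) / 255.0  # intensities normalized to [0, 1]
    h, w = I.shape
    radius = np.hypot(h, w)  # assumption: spray radius = image diagonal
    R_sum = np.zeros_like(I)
    v_sum = np.zeros_like(I)
    for _ in range(n_sprays):
        py, px = sample_sprays(h, w, k, radius, rng)
        samples = I[py, px]
        # Eqs. (2)-(3); x itself is included in the spray (assumption).
        e_low = np.minimum(samples.min(axis=0), I)
        e_up = np.maximum(samples.max(axis=0), I)
        R_i = e_up - e_low                                     # Eq. (4)
        safe = np.where(R_i > 0, R_i, 1.0)                     # avoid 0/0
        v_i = np.where(R_i > 0, (I - e_low) / safe, 0.5)       # Eq. (5)
        R_sum += R_i
        v_sum += v_i
    R = R_sum / n_sprays                                       # Eq. (6)
    v = v_sum / n_sprays
    E_low = I - R * v                                          # Eq. (7)
    E_up = E_low + R                                           # Eq. (8)
    span = E_up - E_low
    safe_span = np.where(span > 0, span, 1.0)
    out = np.where(span > 0, (I - E_low) / safe_span, 0.5)     # Eq. (9)
    return out, E_low, E_up


def stress(image, n_sprays=10, k=250, seed=0):
    """Apply STRESS channel-wise to an H x W x 3 uint8 image."""
    out = np.empty(image.shape, dtype=np.uint8)
    for c in range(image.shape[2]):
        s, _, _ = stress_channel(image[..., c], n_sprays, k, seed + c)
        out[..., c] = np.clip(np.rint(255.0 * s), 0, 255).astype(np.uint8)
    return out
```

Returning the envelopes alongside the output makes it possible to visualize $E_{low}$ and $E_{up}$ as in Figure 2. Up to floating-point rounding, the returned channel value equals v(x), as Eqs. (7)-(9) imply.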

3 EVALUATION

The performance of STRESS as an IFM-free underwater image enhancer is evaluated on the public dataset UIEB^1. This dataset contains 890 real-world images, some of them captured with the camera oriented from the seabed towards the water surface, others acquired in the opposite direction. The UIEB scenes have been taken under different illuminations, including natural and artificial lights as well as combinations of them. The images present different issues for enhancement, e.g. low light, veil, noise and green-bluish color cast. For each image J, the dataset also contains the reference of J, i.e. a 'ground-truth' version of J with good quality. This reference has been selected by 50 volunteers from a set of versions of J enhanced by different algorithms. Some examples of images from UIEB are shown in Figure 3.

Assessing the quality of an image is a hard task on which there is not yet full agreement (Pedersen and Hardeberg, 2012), and in general a single measure does not suffice to capture all the features contributing to image quality (Barricelli et al., 2020). Therefore, the performance of STRESS has been evaluated here by analyzing both no-reference and full-reference measures.

The no-reference measures considered here are:

1. the mean brightness of J (B): B is the mean intensity of the monochromatic image BJ obtained by averaging the three color channels of J pixel by pixel;

2. the mean multi-resolution contrast (C) (Rizzi et al., 2004): C is the mean value of the pixel-wise contrasts c computed on multiple rescaled versions of BJ; in particular, the contrast c(x) of a pixel x of any rescaled version of BJ is defined as the average of the absolute differences between the intensity at x and the intensities of the pixels located in a 3×3 window centered at x;

3. the histogram flatness (f): f quantifies how far the intensity distribution of BJ is from a flat (maximum-entropy) one, and is defined as the L1 distance between the probability density function of the intensity of BJ and the uniform probability density function;

4. the human vision system inspired metric UIQM (Panetta et al., 2015): this measure accounts for three features highly relevant to the human vision system to assess the readability of an underwater image, i.e. the colorfulness, the sharpness and the contrast of the image. UIQM has been designed to correlate with the human perception of the quality of an underwater image;

5. the underwater color image quality evaluation metric UCIQE (Yang and Sowmya, 2015): this measure, related to human perception, accounts for the chroma, the saturation and the contrast of the input image.

^1 https://li-chongyi.github.io/proj_benchmark.html

Figure 2: On top: an image, its enhancement by STRESS and its red channel, where the red circles belong to a random spray centered at the barycenter of the channel. On bottom: the lower and upper envelopes of the red channel computed using 10 sprays, each with 250 pixels.

The values of B, C and f computed on the input image are compared with those computed on the output image. In general, an enhancer is expected to increase the brightness and the contrast while decreasing f, i.e. making the intensity histogram flatter. The values of UIQM and UCIQE are expected to increase with image quality, i.e. they should be high for images of good quality and low otherwise.
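The three no-reference measures B, C and f can be sketched in NumPy as follows. This is a hedged illustration, not the code used for Table 1: the number of pyramid levels, the 2×2 averaging used for rescaling, the exclusion of border pixels and of x itself from the 3×3 average, and the rounding of BJ to 256 integer levels are all assumptions. The normalization of f behind the scale reported in Table 1 is not specified in the text, so absolute values may differ. All function names are hypothetical.

```python
import numpy as np


def brightness_image(rgb):
    """BJ: pixel-wise mean of the three channels of J."""
    return rgb.astype(np.float64).mean(axis=2)


def mean_brightness(rgb):
    """B: mean intensity of BJ."""
    return float(brightness_image(rgb).mean())


def local_contrast(gray):
    """Mean over interior pixels of c(x): the average absolute difference
    between x and its 8 neighbours in the 3x3 window."""
    h, w = gray.shape
    centre = gray[1:-1, 1:-1]
    total = np.zeros_like(centre)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy == 0 and dx == 0:
                continue
            total += np.abs(centre - gray[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx])
    return float((total / 8.0).mean())


def multires_contrast(rgb, levels=4):
    """C: mean of the per-level contrasts over a 2x-downsampling pyramid
    (number of levels and 2x2 block averaging are assumptions)."""
    gray = brightness_image(rgb)
    values = []
    for _ in range(levels):
        if min(gray.shape) < 3:
            break
        values.append(local_contrast(gray))
        h, w = (gray.shape[0] // 2) * 2, (gray.shape[1] // 2) * 2
        gray = gray[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))
    return float(np.mean(values))


def histogram_flatness(rgb):
    """f: L1 distance between the 256-bin pdf of BJ and the uniform pdf."""
    levels = np.clip(np.rint(brightness_image(rgb)), 0, 255).astype(int)
    pdf = np.bincount(levels.ravel(), minlength=256) / levels.size
    return float(np.abs(pdf - 1.0 / 256).sum())
```

With this definition f is 0 for a perfectly flat histogram and approaches 2 for an image with a single gray level, which is why lower values of f indicate a better-spread intensity distribution.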

The full-reference metrics considered here are:

1. the mean square error (MSE): this is the mean of the squared pixel-wise differences (i.e. the squared L2 distance normalized by the number of values) between the reference image and the processed one, and thus captures color distortions possibly introduced by the enhancement;

2. the peak signal-to-noise ratio (PSNR): PSNR also measures the differences between the reference and the processed image, but on a logarithmic scale;

3. the structural similarity (SSIM) (Wang et al., 2004): this measure captures local distortions of the image structure by accounting for the local statistics, including the covariance, of the image channel intensities in image sub-windows;

4. the percentage of enhanced images with red-distorted pixels (SP) and the percentage of red-distorted pixels in these images (MSP): in general, the red component of underwater images has low values and low variance with respect to the other components. Stretching the variability range of the red channel in order to increase the information it contains may generate saturation. This means that the red values of some pixels are moved towards their maximum value, i.e. 255, introducing artifacts and making the image look unnatural. Such color distortions are evaluated here by detecting the pixels in the enhanced image such that (a) their red (R), green (G) and blue (B) components are close to pure red, i.e.:

$$R > 200 \;\wedge\; R > 10G \;\wedge\; R > 10B, \tag{10}$$

and (b) the inequalities in (10) do not hold for the corresponding pixels in the reference image. Mathematically, let p be a pixel in the enhanced image satisfying the inequalities of formula (10) and let p′ be its corresponding pixel in the reference. If the color of p′ does not satisfy the conditions in (10), then p is considered red-distorted. SP measures the percentage of enhanced images containing red-distorted pixels, while MSP is the percentage of red-distorted pixels in these images.

These measures compare the enhanced image with the reference. The lower the MSE and the higher the PSNR and the SSIM, the closer the enhanced image is to the reference. The higher SP, the larger the portion of the test set affected by red distortions, and thus the worse the enhancer performance on the test set; the higher MSP, the larger the quantity of red-distorted pixels in the affected images, and thus the worse their quality.
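The full-reference measures MSE and PSNR and the red-distortion statistics SP and MSP can be sketched as below. The sketch assumes 8-bit RGB arrays of identical size, computes MSE and PSNR over all pixels and channels, and interprets MSP as the mean, over the affected images, of the per-image percentage of red-distorted pixels; these choices and the function names are assumptions, not the code used for Table 1. SSIM is omitted here; scikit-image provides an implementation in `skimage.metrics.structural_similarity`.

```python
import numpy as np


def mse(ref, out):
    """Mean squared difference over all pixels and channels."""
    d = ref.astype(np.float64) - out.astype(np.float64)
    return float(np.mean(d * d))


def psnr(ref, out, peak=255.0):
    """PSNR in dB; infinite for identical images."""
    e = mse(ref, out)
    return float("inf") if e == 0 else float(10.0 * np.log10(peak ** 2 / e))


def reddish_mask(rgb):
    """Pixels satisfying Eq. (10): R > 200, R > 10G and R > 10B.
    Channels are cast to int64 because 10 * G would overflow uint8."""
    r, g, b = (rgb[..., i].astype(np.int64) for i in range(3))
    return (r > 200) & (r > 10 * g) & (r > 10 * b)


def red_distorted_fraction(enhanced, reference):
    """Percentage of pixels that satisfy Eq. (10) in the enhanced image
    but not in the reference."""
    mask = reddish_mask(enhanced) & ~reddish_mask(reference)
    return 100.0 * mask.mean()


def sp_msp(pairs):
    """pairs: list of (enhanced, reference) images.
    SP: percentage of images with red-distorted pixels.
    MSP (assumption): mean of the per-image percentages over those images."""
    per_image = [red_distorted_fraction(e, r) for e, r in pairs]
    affected = [p for p in per_image if p > 0]
    sp = 100.0 * len(affected) / len(per_image)
    msp = float(np.mean(affected)) if affected else 0.0
    return sp, msp
```

For instance, a pixel (250, 10, 5) satisfies Eq. (10), while (250, 30, 5) does not, since 250 is not greater than 10 · 30.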

4 RESULTS

The images of UIEB have been processed by STRESS, with the number of sprays per pixel N set to 10 and each spray containing k = 250 pixels. The images enhanced by STRESS are freely available^2, provided that this paper is cited, to enable visual inspection, further analysis and comparison. The performance of STRESS is compared with that of seven IFM-free underwater image enhancers, i.e.: the histogram equalization (HE) (Hummel, 1977), the contrast-limited adaptive histogram equalization (CLAHE) (Zuiderveld, 1994), the unsupervised von Kries-based color correction via histogram stretching (UCM) (Iqbal et al., 2010), the relative global histogram stretching for underwater images working in the CIE Lab color space (RGHS) (Huang et al., 2018), the gamma correction algorithm (GC), the optimized version of RSR called Light-RSR (Banić and Lončarić, 2013), and the algorithm SuPeR (Lecca and Messelodi, 2019) from the Milano Retinex family. HE and CLAHE are popular algorithms that process the color histogram of the input image, globally and locally respectively, in order to increase the image brightness and contrast. UCM corrects the image color and contrast by diminishing the blue intensity, increasing the red one, and reworking intensity and saturation in the HSI color space. RGHS increases the contrast by equalizing the green and blue components, redistributing the channel histograms and applying a bilateral filter to remove noise. GC increases the brightness and contrast of low-light images by raising the normalized channel intensities to the power 1/γ, with γ usually set to 2.2. Light-RSR is an optimized version of RSR that uses a single spray per pixel (N = 1) and attenuates the resulting chromatic noise by box filtering. In this way, Light-RSR enhances the image similarly to RSR but with a much lower computation time. SuPeR is another fast Milano Retinex algorithm, which improves the image by rescaling the channel intensities of any pixel x by a factor inversely proportional to the average of a set of intensities selected from tiles defined over the image and weighted by their distance from x.

^2 https://drive.google.com/file/d/1XGjmhBaeuKucYSE3v6ekYPjkQgYlFEEX/view?usp=sharing

All these algorithms have been run on UIEB by using implementations publicly available online. For Light-RSR, k is set to 250; for SuPeR, the number of tiles per image is fixed to 25; for the other algorithms, the default parameters provided in their code have been used. Figure 3 shows some examples of enhancement of UIEB images by these algorithms.
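Two of the baselines, HE and GC, are simple enough to sketch in a few lines of NumPy. These are textbook formulations given for illustration, under the assumption that they are applied to each RGB channel independently; they are not the implementations used to produce Table 1, and the function names are hypothetical.

```python
import numpy as np


def histogram_equalization(channel):
    """Global HE of one uint8 channel via its cumulative histogram."""
    hist = np.bincount(channel.ravel(), minlength=256)
    cdf = np.cumsum(hist).astype(np.float64)
    cdf_min = cdf[cdf > 0][0]
    if cdf[-1] == cdf_min:  # constant channel: nothing to equalize
        return channel.copy()
    # Map the cumulative distribution linearly onto 0..255 (lookup table).
    lut = np.rint((cdf - cdf_min) / (cdf[-1] - cdf_min) * 255.0)
    return np.clip(lut, 0, 255).astype(np.uint8)[channel]


def gamma_correction(image, gamma=2.2):
    """Brighten by raising normalized intensities to the power 1/gamma."""
    x = image.astype(np.float64) / 255.0
    return np.rint(255.0 * x ** (1.0 / gamma)).astype(np.uint8)


def apply_per_channel(fn, image):
    """Apply a single-channel enhancer to each RGB channel independently."""
    return np.stack([fn(image[..., c]) for c in range(image.shape[2])], axis=2)
```

Because 1/γ < 1 for γ = 2.2, every normalized intensity in (0, 1) is mapped to a larger value, which is consistent with GC raising the mean brightness B in Table 1 while leaving the contrast almost unchanged.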

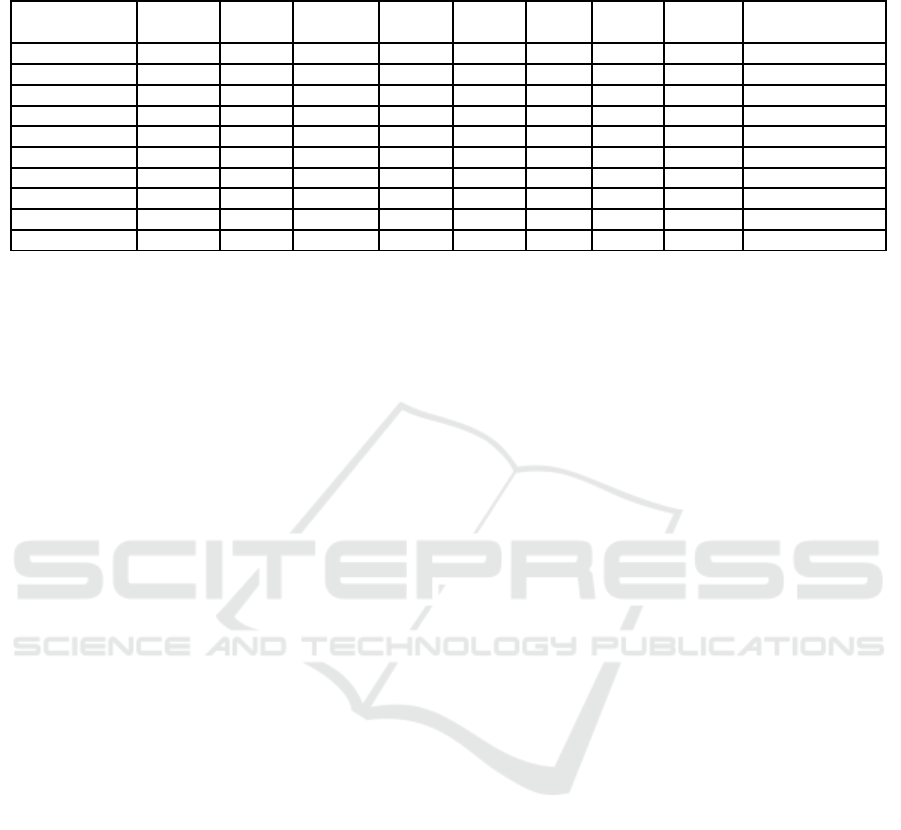

Table 1 reports the performance of STRESS in comparison with the other enhancers. The values of the different measures described in the previous section are averaged over the images of UIEB and are also computed for the original and reference images where this makes sense. On average, STRESS remarkably increases the image contrast, making it very close to that of the UIEB references, while the brightness remains close to that of the input images. STRESS also decreases the value of f, making it close to that of the references, while it returns the lowest (highest, resp.) value of MSE (PSNR, resp.) and a quite high value of SSIM, meaning that the colors and the local structure of the enhanced images are close to those of the corresponding references. Moreover, STRESS returns quite high values of UIQM and UCIQE, close to those computed on the references. This indicates that STRESS generally provides natural-looking images. Nevertheless, as already observed in (Li et al., 2019b), in some cases the values of UIQM and UCIQE do not correctly reproduce the human perception of the naturalness of underwater images. In fact, the algorithm HE often over-enhances the image, returning very bright pictures with many red-distorted pixels (see SP), but reports the highest values of UIQM and UCIQE, exceeding even those measured on the references. This unexpected behaviour is ascribed in (Li et al., 2019b) to the fact that human perception in underwater environments has not yet been fully studied, and this adversely affects the modeling of metrics for the assessment of underwater images. A qualitative visual inspection of the results shows that HE and UCM are the worst algorithms in terms of red-distorted pixels and noise, while STRESS produces red-distorted pixels in 5.73% of the images, where on average 0.0158% of the pixels are red-distorted. The highest values of MSP are measured on images with very low-light regions or with a very narrow red distribution (see for instance the last example in Figure 3).

On average, STRESS performs similarly to UCM and RGHS in terms of brightness, to CLAHE, RGHS and UCM in terms of contrast and flatness, to CLAHE and UCM in terms of UIQM, to RGHS and UCM in terms of UCIQE, and to CLAHE and Light-RSR in terms of MSP. These observations, based on the quantitative analysis of the values in Table 1, are in line with the visual inspection of the images enhanced by these algorithms (see Figure 3 for an example).

Figure 3: Examples of underwater image enhancement by different algorithms.

5 CONCLUSIONS

This work presents the use of STRESS as an underwater image enhancer, tests it and compares it with other methods on the public dataset UIEB, making the results freely available to enable visual inspection, further analysis and comparison. The experiments show that STRESS, originally devised as a real-world image enhancer and inspired by the local spatial color processing performed by the human vision system, effectively improves the contrast and the dynamic range of underwater images, while also preserving their local structure and fidelity to the references. The quality of the enhanced images is in general good and in line with, or even better than, that of other algorithms that are not based on physical models of underwater imaging but are used for this task. Nevertheless, the experiments also highlight one main issue that should be addressed in future work to guarantee better performance, i.e. the generation of red-distorted pixels that affect the quality of the enhanced images. However, this problem is critical also for other algorithms, as shown by the results obtained by HE and UCM. In this respect, integrating some physical information into STRESS may help to overcome this problem, although red distortions have also been observed for some IFM-based approaches, e.g. (Li et al., 2016b). In this framework, future work will theoretically and practically analyze and compare IFM-based methods against STRESS.

Table 1: Image quality measures for different image enhancers applied on UIEB. INPUT and REFERENCE report respectively the values of B, C, f, UIQM and UCIQE computed on the images and references of UIEB; the INPUT row also reports MSE, PSNR and SSIM of the input images with respect to the references. SP and MSP are percentages.

| Algorithm | B | C | f [×10^-3] | MSE [×10^3] | PSNR | SSIM | UIQM | UCIQE | (SP, MSP) |
|---|---|---|---|---|---|---|---|---|---|
| REFERENCE | 111.572 | 19.896 | 2.428 | - | - | - | 0.960 | 5.339 | - |
| INPUT | 106.405 | 11.869 | 3.936 | 1.768 | 17.355 | 0.773 | 0.542 | 4.189 | - |
| HE | 126.280 | 23.618 | 1.188 | 1.827 | 16.627 | 0.775 | 1.266 | 7.075 | (28.764, 0.3535) |
| CLAHE | 112.466 | 20.276 | 2.777 | 1.175 | 18.551 | 0.849 | 0.940 | 4.862 | (1.124, 0.0122) |
| GC | 136.915 | 11.756 | 3.982 | 2.423 | 15.519 | 0.753 | 0.702 | 3.687 | (0, 0) |
| RGHS | 104.232 | 17.476 | 2.617 | 1.152 | 19.222 | 0.825 | 0.768 | 5.775 | (0.449, 0.0334) |
| UCM | 104.406 | 18.366 | 2.708 | 1.662 | 17.464 | 0.799 | 0.934 | 6.044 | (17.30, 0.3429) |
| LIGHT-RSR | 132.524 | 14.869 | 3.321 | 1.997 | 17.339 | 0.808 | 0.816 | 4.596 | (1.124, 0.0141) |
| SuPeR | 133.517 | 13.678 | 3.565 | 2.159 | 16.804 | 0.783 | 0.797 | 4.219 | (0.225, 0.0006) |
| STRESS | 105.747 | 18.449 | 2.559 | 0.792 | 21.300 | 0.804 | 0.942 | 5.816 | (5.730, 0.0158) |

REFERENCES

Banić, N. and Lončarić, S. (2013). Light random sprays retinex: exploiting the noisy illumination estimation. IEEE Signal Processing Letters, 20(12):1240–1243.

Barbosa, W. V., Amaral, H. G., Rocha, T. L., and Nascimento, E. R. (2018). Visual-quality-driven learning for underwater vision enhancement. In 2018 25th IEEE Int. Conference on Image Processing (ICIP), Athens, Greece, pages 3933–3937. IEEE.

Barricelli, B. R., Casiraghi, E., Lecca, M., Plutino, A., and Rizzi, A. (2020). A cockpit of multiple measures for assessing film restoration quality. Pattern Recognition Letters, 131:178–184.

Bonin-Font, F., Ortiz, A., and Oliver, G. (2008). Visual navigation for mobile robots: A survey. Journal of Intelligent and Robotic Systems, 53(3):263–296.

Chao, L. and Wang, M. (2010). Removal of water scattering. In 2010 2nd Int. Conference on Computer Engineering and Technology, Chengdu, China, volume 2, pages V2–35. IEEE.

Fabbri, C., Islam, M. J., and Sattar, J. (2018). Enhancing underwater imagery using generative adversarial networks. In 2018 IEEE Int. Conference on Robotics and Automation (ICRA), Brisbane, Australia, pages 7159–7165. IEEE.

Ho, M., El-Borgi, S., Patil, D., and Song, G. (2020). Inspection and monitoring systems subsea pipelines: A review paper. Structural Health Monitoring, 19(2):606–645.

Huang, D., Wang, Y., Song, W., Sequeira, J., and Mavromatis, S. (2018). Shallow-water image enhancement using relative global histogram stretching based on adaptive parameter acquisition. In Int. Conference on Multimedia Modeling, Bangkok, Thailand, pages 453–465. Springer.

Hummel, R. (1977). Image enhancement by histogram transformation. Computer Graphics and Image Processing, 6(2):184–195.

Iqbal, K., Odetayo, M., James, A., Salam, R. A., and Talib, A. Z. H. (2010). Enhancing the low quality images using unsupervised colour correction method. In 2010 IEEE Int. Conference on Systems, Man and Cybernetics, Istanbul, Turkey, pages 1703–1709. IEEE.

Jamadandi, A. and Mudenagudi, U. (2019). Exemplar-based underwater image enhancement augmented by wavelet corrected transforms. In Proc. of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, pages 11–17.

Kolås, Ø., Farup, I., and Rizzi, A. (2011). Spatio-temporal Retinex-inspired envelope with stochastic sampling: A framework for spatial color algorithms. Journal of Imaging Science and Technology, 55(4):1–10.

Land, E. (1964). The Retinex. American Scientist, 52(2):247–264.

Land, E. H. and McCann, J. J. (1971). Lightness and Retinex theory. Journal of the Optical Society of America, 61(1):1–11.

Lecca, M. (2014). On the von Kries Model: Estimation, Dependence on Light and Device, and Applications, pages 95–135. Springer Netherlands, Dordrecht.

Lecca, M. (2020). Generalized equation for real-world image enhancement by Milano Retinex family. J. Opt. Soc. Am. A, 37(5):849–858.

Lecca, M. and Messelodi, S. (2019). SuPeR: Milano Retinex implementation exploiting a regular image grid. J. Opt. Soc. Am. A, 36(8):1423–1432.

Li, C., Guo, C., Ren, W., Cong, R., Hou, J., Kwong, S., and Tao, D. (2019a). An underwater image enhancement benchmark dataset and beyond. IEEE Transactions on Image Processing, 29:4376–4389.

Li, C., Guo, C., Ren, W., Cong, R., Hou, J., Kwong, S., and Tao, D. (2019b). An underwater image enhancement benchmark dataset and beyond. IEEE Transactions on Image Processing, 29:4376–4389.

Li, C., Guo, J., Pang, Y., Chen, S., and Wang, J. (2016a). Single underwater image restoration by blue-green channels dehazing and red channel correction. In IEEE Int. Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, pages 1731–1735.

Li, C.-Y., Guo, J.-C., Cong, R.-M., Pang, Y.-W., and Wang, B. (2016b). Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Transactions on Image Processing, 25(12):5664–5677.

Lu, H., Li, Y., and Serikawa, S. (2017). Computer vision for ocean observing. In Artificial Intelligence and Computer Vision, pages 1–16. Springer.

Manzanilla, A., Reyes, S., Garcia, M., Mercado, D., and Lozano, R. (2019). Autonomous navigation for unmanned underwater vehicles: Real-time experiments using computer vision. IEEE Robotics and Automation Letters, 4(2):1351–1356.

Matos, A., Martins, A., Dias, A., Ferreira, B., Almeida, J. M., Ferreira, H., Amaral, G., Figueiredo, A., Almeida, R., and Silva, F. (2016). Multiple robot operations for maritime search and rescue in eurathlon 2015 competition. In OCEANS 2016-Shanghai, pages 1–7. IEEE.

McLellan, B. C. (2015). Sustainability assessment of deep ocean resources. Procedia Environmental Sciences, 28:502–508. The 5th Sustainable Future for Human Security (SustaiN 2014).

Panetta, K., Gao, C., and Agaian, S. (2015). Human-visual-system-inspired underwater image quality measures. IEEE Journal of Oceanic Engineering, 41(3):541–551.

Pedersen, M., Bruslund Haurum, J., Gade, R., and Moeslund, T. B. (2019). Detection of marine animals in a new underwater dataset with varying visibility. In Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, pages 18–26.

Pedersen, M. and Hardeberg, J. Y. (2012). Full-reference image quality metrics: Classification and evaluation. Foundations and Trends in Computer Graphics and Vision, 7(1):1–80.

Provenzi, E., Fierro, M., Rizzi, A., De Carli, L., Gadia, D., and Marini, D. (2007). Random Spray Retinex: A new Retinex implementation to investigate the local properties of the model. IEEE Transactions on Image Processing, 16(1):162–171.

Rizzi, A., Algeri, T., Medeghini, G., and Marini, D. (2004). A proposal for contrast measure in digital images. In Conference on Colour in Graphics, Imaging, and Vision, Aachen, Germany, volume 2004, pages 187–192. Society for Imaging Science and Technology.

Rizzi, A. and Bonanomi, C. (2017). Milano Retinex family. Journal of Electronic Imaging, 26(3):031207.

Selby, W., Corke, P., and Rus, D. (2011). Autonomous aerial navigation and tracking of marine animals. In Proc. of the Australian Conference on Robotics and Automation (ACRA), Melbourne, Australia.

Serikawa, S. and Lu, H. (2014). Underwater image dehazing using joint trilateral filter. Computers & Electrical Engineering, 40(1):41–50.

Sheehan, E. V., Bridger, D., Nancollas, S. J., and Pittman, S. J. (2020). Pelagicam: a novel underwater imaging system with computer vision for semi-automated monitoring of mobile marine fauna at offshore structures. Environmental Monitoring and Assessment, 192(1):1–13.

Shi, X., Ueno, K., Oshikiri, T., Sun, Q., Sasaki, K., and Misawa, H. (2018). Enhanced water splitting under modal strong coupling conditions. Nature Nanotechnology, 13(10):953–958.

Vasamsetti, S., Mittal, N., Neelapu, B. C., and Sardana, H. K. (2017). Wavelet based perspective on variational enhancement technique for underwater imagery. Ocean Engineering, 141:88–100.

Wang, N., Zheng, H., and Zheng, B. (2017). Underwater image restoration via maximum attenuation identification. IEEE Access, 5:18941–18952.

Wang, Y., Song, W., Fortino, G., Qi, L.-Z., Zhang, W., and Liotta, A. (2019). An experimental-based review of image enhancement and image restoration methods for underwater imaging. IEEE Access, 7:140233–140251.

Wang, Z., Bovik, A. C., Sheikh, H. R., and Simoncelli, E. P. (2004). Image quality assessment: from error visibility to structural similarity. IEEE Transactions on Image Processing, 13(4):600–612.

Yang, M. and Sowmya, A. (2015). An underwater color image quality evaluation metric. IEEE Transactions on Image Processing, 24(12):6062–6071.

Yin, F. (2021). Inspection robot for submarine pipeline based on machine vision. Journal of Physics: Conference Series, 1952:022034.

Zhao, X., Jin, T., and Qu, S. (2015). Deriving inherent optical properties from background color and underwater image enhancement. Ocean Engineering, 94:163–172.

Zuiderveld, K. (1994). Contrast limited adaptive histogram equalization. Graphics Gems, pages 474–485.
