Towards a Formal Framework for Social Robots with Theory of Mind

Filippos Gouidis¹, Alexandros Vassiliades¹,², Nena Basina¹ and Theodore Patkos¹

¹ Institute of Computer Science, Foundation for Research and Technology, Hellas, Greece
² School of Informatics, Aristotle University of Thessaloniki, Greece

Keywords:

Social Robotics, Theory of Mind, Epistemic Reasoning, Reasoning about Action, Event Calculus.

Abstract:

A key factor of success for future social robotics entities will be their ability to operate in tight collaboration with non-expert human users in open environments. Apart from physical skills, these entities will have to exhibit intelligent behavior, both to understand the dynamics of the domain they inhabit and to interpret human intuition and needs. In this paper, we discuss work in progress towards developing a formal framework for endowing intelligent autonomous agents with advanced cognitive skills that are central to human-machine interaction, such as Theory of Mind. We argue that this line of work can lay the ground for both theoretical and practical research, and we present a number of areas where such a framework can have an essential impact on future social and intelligent systems.

1 INTRODUCTION

Modeling the behavior and the mental state of others is an essential cognitive ability of humans, central to their social interactions. From a very young age, people unconsciously generate meta-representations of what others believe, in addition to their own beliefs, and use these comparative mental models when they attempt to make sense of or predict the behavior of others (Apperly, 2012). The processes involved in recognizing that people have different mental states, goals and plans, and in inferring others' mental states, are collectively known as Theory of Mind (ToM).

ToM is also crucial for developing autonomous systems that operate in tight collaboration with humans, as it allows them to anticipate human needs and intentions and to respond proactively to future actions. From the Artificial Intelligence (AI) standpoint, the symbiosis of intelligent agents, such as social and companion robots, with humans introduces a multitude of challenges. At their core lies the modeling of how the world works, of what knowledge humans consider commonsense, and of what their own abilities (physical or mental) and the abilities of others are (Marcus and Davis, 2019); in the language of cognitive psychologists, the agents need to be equipped with a rich cognitive model.

Figure 1: A scene observed from different angles generates diverse beliefs about the existence and position of objects. (a) Top view; (b) Observer's perspective.

Motivation

In this paper, we aim to highlight the importance of endowing social agents with ToM, considering scenarios of everyday life. We also present work in progress towards developing a formal, generic framework for generating agents that can reason about knowledge and causality, using a formalization that is both expressive and efficient in terms of computational complexity.

Consider the following toy setting, which motivates the analysis that follows. Figure 1a shows a desk in a meeting room with laptops and various items scattered around, such as pens and mugs. The persons working in the office, as well as an assistant robot, may change their position around the desk. Let us assume that, from a given moment on, all entities only have a sideways, not a top-down, view of the desk (Figure 1b). Evidently, for the person sitting in front of an open laptop, any item behind the screen is occluded. The robot, positioned at a different angle, should be able to make simple inferences, such as which objects are visible to each person given their current positions, as well as more complex inferences, such as whether the position of occluded objects is known due to the previous positions of the persons around the desk. The robot should also update the different mental states appropriately, based on both the physical (ontic) actions that take place, such as someone picking up the mobile phone, and the epistemic actions, such as announcements or distractions. For instance, a person concentrating on a presentation may not notice certain actions, leading to potentially erroneous beliefs.

Gouidis, F., Vassiliades, A., Basina, N. and Patkos, T.
Towards a Formal Framework for Social Robots with Theory of Mind.
DOI: 10.5220/0010893300003116
In Proceedings of the 14th International Conference on Agents and Artificial Intelligence (ICAART 2022) - Volume 3, pages 689-696
ISBN: 978-989-758-547-0; ISSN: 2184-433X
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

While the goal of endowing agents with at least basic ToM capabilities, rich cognitive models and the capacity to make commonsense inferences is not new to the field of AI, existing social-cognitive agents either lack such skills or rely on ad hoc solutions that are difficult to generalize or verify. In (Chen et al., 2021), for instance, a deep neural network is developed to predict the long-term behavior of an actor with ToM using raw video data; the explainability of the outcome and the verifiability of the process are rather limited, though. Classical AI, based on symbolic methods, long ago devised expressive formalisms that enable an agent to make epistemic inferences about its own mental state (1st-order beliefs) and about the mental state of others (2nd-order beliefs) in causal domains (e.g., see (D’Asaro et al., 2020; Schwering et al., 2015; Ma et al., 2013; Shapiro et al., 2011; Ditmarsch et al., 2007; Liu and Levesque, 2005; Davis and Morgenstern, 2005; Scherl, 2003)). The majority of such formalisms are based on the possible-worlds model, which, although elegant in generating expressive epistemic statements, is well known for its high computational complexity, as well as for certain logical irregularities, such as the logical omniscience problem. Other approaches, as in (Suchan et al., 2018), do model beliefs in formal languages, but adopt a domain-dependent modeling, making it difficult to prove generic properties, e.g., about nested beliefs or action ramifications.

Contribution and Impact

The aim of this study is of both theoretical and practical interest. Our main contribution is a formal and declarative implementation of a theory for reasoning about action, knowledge and time in dynamic domains, which does not rely on the possible-worlds semantics. We deliver an axiomatization that has a number of advantages in comparison to existing frameworks. First, the theory supports epistemic reasoning about a multitude of commonsense phenomena, such as direct and indirect effects of actions, default knowledge and inertia. Second, our implementation enables approximate epistemic reasoning, in order to tackle issues related to high computational complexity. Last, we develop a means to automatically transform non-epistemic domain axiomatizations into a formal encoding with well-defined properties that enables reasoning with belief, thus simplifying the task of the knowledge engineer when modeling the dynamics of causal domains.

We argue that such a system can impact various aspects of practical research in fields related to social robotics and computer vision, especially the interpretation of scenes that involve human-machine interaction. Omitting the technical details, we discuss cases that show how an agent with ToM can prove beneficial in a range of situations, from intuitive communication and advanced decision making to the analysis of human-object interaction videos.

Next, we introduce the main formalisms that form the basis of our framework (Section 2) and present our methodology and initial implementation results (Section 3). Section 4 showcases a number of areas where such a framework can have an impact. The paper concludes in Section 5 with remarks on the directions of future research that lie ahead.

2 BACKGROUND

Our framework builds on and extends two formalisms: a discrete time, non-epistemic dialect of the Event Calculus, capable of modeling a multitude of commonsense phenomena, and an epistemic extension of this dialect that does not rely on the possible-worlds semantics.¹

2.1 Non-epistemic Notions

Reasoning about actions, change and causality has been an active field of research since the early days of AI. Among the various formalisms that have been proposed is the Event Calculus (EC) (Kowalski and Sergot, 1986; Miller and Shanahan, 2002), a well-established technique for reasoning about causal and narrative information in dynamic environments. It is a many-sorted first-order language for reasoning about action and change, which explicitly represents temporal knowledge. It also relies on a non-monotonic treatment of events.

¹ Epistemic logics represent knowledge, i.e., facts that are true, while doxastic logics are used for reasoning about potentially erroneous beliefs of agents. Although our main goal is to model an agent's belief state, we occasionally refer to knowledge for convenience, as is common in the relevant literature, but without necessarily being restricted to epistemic logics exclusively.

Table 1: Event Calculus Types of Formulae.

| Group | Symbol | Formula type | Form / example |
|---|---|---|---|
| Domain signature | F, E, T | Fluents, Events and Timepoints | e.g., $f, f_i, e, e_i, \mathbb{N}_0$ |
| Axioms | DEC | Domain-independent Axioms | See (Mueller, 2015) |
| Axioms | $\Sigma$ | Positive Effect Axioms | $\bigwedge[(\neg)\mathit{holdsAt}(f_i, T)] \Rightarrow \mathit{initiates}(e, f, T)$ |
| Axioms | $\Sigma$ | Negative Effect Axioms | $\bigwedge[(\neg)\mathit{holdsAt}(f_i, T)] \Rightarrow \mathit{terminates}(e, f, T)$ |
| Axioms | $\Delta_2$ | Trigger Axioms | $\bigwedge[(\neg)\mathit{holdsAt}(f_i, T)] \wedge \bigwedge[(\neg)\mathit{happens}(e_j, T)] \Rightarrow \mathit{happens}(e, T)$ |
| Axioms | $\Gamma$ | Initial State and Observations | $\mathit{holdsAt}(f, 0), \neg\mathit{holdsAt}(f_1, 1), \ldots$ |
| Axioms | $\Delta_1$ | Event Occurrences | $\mathit{happens}(e, 0), \mathit{happens}(e_1, 3), \ldots$ |

Many EC dialects have been proposed over the years; for our purposes, we use the non-epistemic discrete time Event Calculus dialect (DEC), axiomatized in (Mueller, 2015). Formally, DEC defines a sort E of events indicating changes in the environment, a sort F of fluents denoting time-varying properties and a sort T of timepoints, used to implement a linear time structure. The calculus applies the principle of inertia for fluents in order to solve the frame problem; inertia captures the property that things tend to persist over time unless affected by some event. For instance, the fluent faces(Agent, Orientation) indicates the point of view of an agent, while the event turnsTowards(Agent, Orientation) denotes a change in orientation.²

A set of predicates expresses which fluents hold when (holdsAt ⊆ F × T), which events happen (happens ⊆ E × T), what their effects are (initiates, terminates, releases ⊆ E × F × T) and whether a fluent is subject to the law of inertia or released from it (releasedAt ⊆ F × T). For example, initiates(e, f, T) means that if action e happens at some timepoint T, it gives cause for fluent f to be true at timepoint T + 1.

The commonsense notions of persistence and causality are captured in a set of domain-independent axioms, referred to as DEC, that define the influence of events on fluents and the enforcement of inertia for the holdsAt and releasedAt predicates. In brief, DEC states that a fluent that is not released from inertia has a particular truth value at a particular time if, at the previous timepoint, either it was given a cause to take that value or it already had that value and no event gave it a cause to change.

² Variables start with an upper-case letter and are implicitly universally quantified, unless otherwise stated. Predicates and constants start with a lower-case letter.
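The inertia principle can be illustrated with a minimal Python sketch; it is not the authors' ASP encoding of DEC. It computes the fluents holding at T + 1 from those holding at T: a fluent holds if some event occurring at T initiates it, or if it already held and no event occurring at T terminates it. The function names, the set-of-tuples representation and the toy picksUp domain are hypothetical; releases/releasedAt are ignored, and we assume no event both initiates and terminates the same fluent.

```python
from typing import Callable, Iterable, Set, Tuple

Fluent = Tuple[str, ...]   # e.g. ("onDesk", "phone")
Event = Tuple[str, ...]    # e.g. ("picksUp", "human", "phone")
EffectFn = Callable[[Event, Set[Fluent]], Set[Fluent]]


def step(holding: Set[Fluent], events: Iterable[Event],
         initiates: EffectFn, terminates: EffectFn) -> Set[Fluent]:
    """Fluents holding at T+1, given those holding at T and the events at T."""
    started: Set[Fluent] = set()
    stopped: Set[Fluent] = set()
    for e in events:
        # Effects may depend on preconditions, evaluated on the state at T.
        started |= initiates(e, holding)
        stopped |= terminates(e, holding)
    # Inertia: every fluent persists unless terminated; initiated ones are added.
    return (holding - stopped) | started


# Hypothetical toy domain: picking up an object that lies on the desk.
def desk_initiates(e: Event, state: Set[Fluent]) -> Set[Fluent]:
    # picksUp(Agent, Obj) initiates holding(Agent, Obj) if Obj is on the desk.
    if e[0] == "picksUp" and ("onDesk", e[2]) in state:
        return {("holding", e[1], e[2])}
    return set()


def desk_terminates(e: Event, state: Set[Fluent]) -> Set[Fluent]:
    # ...and terminates onDesk(Obj) under the same precondition.
    if e[0] == "picksUp" and ("onDesk", e[2]) in state:
        return {("onDesk", e[2])}
    return set()
```

With an empty event list, step returns its input unchanged, which is exactly the persistence that DEC enforces through its inertia axioms.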

In addition to the domain-independent axioms, a particular domain axiomatization describes the commonsense domain of interest (the Σ and Δ₂ sets of axioms), observations of world properties at various times (Γ axioms) and a narrative of known world events (Δ₁ axioms) (see Table 1). Action occurrences, as well as their effects, may be context-dependent, i.e., they may depend on preconditions. For instance, the domain effect axiom

$$\mathit{holdsAt}(\mathit{faces}(A, O), T) \Rightarrow \mathit{terminates}(\mathit{turnsTowards}(A, O_{new}), \mathit{faces}(A, O), T) \wedge \mathit{initiates}(\mathit{turnsTowards}(A, O_{new}), \mathit{faces}(A, O_{new}), T)$$

implements the change in orientation of an agent when the event turnsTowards occurs.
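A minimal Python sketch of this effect axiom, mirroring its precondition: if agent A faces O at T, turnsTowards(A, O_new) terminates faces(A, O) and initiates faces(A, O_new). The tuple encoding and function names are hypothetical. We also assume that termination is skipped when O equals O_new, so that the two effects never clash and turning towards the current orientation leaves the state unchanged.

```python
from typing import Set, Tuple

Fluent = Tuple[str, str, str]  # ("faces", agent, orientation)
Event = Tuple[str, str, str]   # ("turnsTowards", agent, new_orientation)


def initiates(event: Event, state: Set[Fluent]) -> Set[Fluent]:
    kind, agent, new = event
    # Precondition holdsAt(faces(A, O), T) for some orientation O.
    if kind == "turnsTowards" and any(f[0] == "faces" and f[1] == agent for f in state):
        return {("faces", agent, new)}
    return set()


def terminates(event: Event, state: Set[Fluent]) -> Set[Fluent]:
    kind, agent, new = event
    if kind != "turnsTowards":
        return set()
    # Terminate the current orientation, unless it already equals the new one.
    return {f for f in state if f[0] == "faces" and f[1] == agent and f[2] != new}


def next_state(event: Event, state: Set[Fluent]) -> Set[Fluent]:
    """State at T+1 for a single event at T; every other fluent persists."""
    return (state - terminates(event, state)) | initiates(event, state)


state = {("faces", "raspie", "north"), ("faces", "human", "south")}
after = next_state(("turnsTowards", "raspie", "east"), state)
```

An agent with no faces fluent at all (the precondition fails) is simply unaffected by the event.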

2.2 Epistemic Notions

To support reasoning about the mental state of agents, theories like DEC need to be extended with epistemic modalities (e.g., knows, believes), in order to represent the properties of both ontic and epistemic fluents and events. Epistemic extensions enable the reasoning agent to make inferences even when the state of preconditions is unknown at the time an action occurs. Lately, a number of epistemic EC dialects have been proposed, most of which rely on the possible-worlds semantics to assign meaning to the epistemic notions, e.g., (Ma et al., 2013; D’Asaro et al., 2020). These semantics provide intuitive and highly expressive models, but come at a cost: the computational complexity is exponential in the number of unknown parameters, while certain counter-intuitive assumptions, such as logical omniscience, need to be tolerated. Moreover, although nested beliefs can in principle be supported, most existing implementations of these formalisms are limited to 1st-order epistemic statements.


Table 2: The ASP modules that constitute the epistemic EC reasoner.

| Axiom type | Non-epistemic | 1st-order ToM | 2nd-order ToM |
|---|---|---|---|
| Domain-independent | DEC | Core DECKT; Hidden Causal Dependencies | 2nd-order DECKT |
| Domain-dependent | Domain Axiomatization | Meta-domain Axiomatization | Meta-domain Axiomatization |
| Domain-dependent | Initial State | Initial State | Initial State |
| Domain-dependent | Observations | Observations | Observations |

The Discrete time Event Calculus Knowledge Theory (DECKT), on the other hand, first proposed in (Patkos and Plexousakis, 2009), is an epistemic extension of DEC that adopts a deductive approach to modeling knowledge. Rather than producing knowledge by contrasting the truth values of fluents that belong to different possible worlds, DECKT defines a set of meta-axioms that, in brief, capture the following: i) when an action occurs, if all preconditions of an effect axiom triggered by this action are known, the effect will also become known; ii) if at least one precondition is known not to hold, no belief change regarding the effect will occur; iii) in all other cases, i.e., when at least one precondition is unknown but none is known not to hold, the state of the effect will become unknown too; at the same time, a causal dependency, called a hidden causal dependency (HCD), will be created between the unknown precondition(s) and the effect. The idea behind HCDs is that if it turns out that the unknown preconditions did indeed hold, then so should the effect, provided that no action affected these fluents in between. DECKT also axiomatizes the conditions under which such causal dependencies are expanded or eliminated, considering the interplay of the effects of events as time progresses.
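The three cases can be sketched in Python as follows. This is a hypothetical simplification, not the DECKT axiomatization: knowledge is a map from fluents to True, False or None (unknown), HCDs are stored as plain tuples, HCD expansion is not modeled, and learn() assumes, as the text requires, that no action affected the sensed precondition since the event. The fluent names penOnDesk and holdingPen are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class EffectAxiom:
    preconditions: List[str]  # fluents that must hold for the effect to occur
    effect: str               # the affected fluent
    positive: bool            # True: initiates the effect, False: terminates it


@dataclass
class KnowledgeState:
    # True / False = known to hold / known not to hold; missing or None = unknown.
    kb: Dict[str, Optional[bool]] = field(default_factory=dict)
    # Hidden causal dependencies: (unknown preconditions, effect, polarity).
    hcds: List[Tuple[Tuple[str, ...], str, bool]] = field(default_factory=list)

    def value(self, fluent: str) -> Optional[bool]:
        return self.kb.get(fluent)

    def on_event(self, ax: EffectAxiom) -> None:
        vals = [self.value(p) for p in ax.preconditions]
        if all(v is True for v in vals):        # i) all known: effect known
            self.kb[ax.effect] = ax.positive
        elif any(v is False for v in vals):     # ii) one known false: no change
            pass
        else:                                   # iii) some unknown: effect unknown + HCD
            self.kb[ax.effect] = None
            unknown = tuple(p for p, v in zip(ax.preconditions, vals) if v is None)
            self.hcds.append((unknown, ax.effect, ax.positive))

    def learn(self, fluent: str, value: bool) -> None:
        """Sense a precondition (assumed unchanged since the event); resolve HCDs."""
        self.kb[fluent] = value
        remaining = []
        for precs, effect, positive in self.hcds:
            vals = [self.value(p) for p in precs]
            if all(v is True for v in vals):
                self.kb[effect] = positive      # preconditions held, so did the effect
            elif not any(v is False for v in vals):
                remaining.append((precs, effect, positive))  # still pending
            # otherwise the dependency can never fire and is dropped
        self.hcds = remaining
```

For example, an agent unsure whether the pen is on the desk sees someone pick it up: holdingPen becomes unknown and an HCD is recorded; once the agent learns penOnDesk was true, holdingPen becomes known.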

The theory is sound and complete with respect to possible-worlds theories under specific assumptions, e.g., deterministic domains. The explicit treatment of epistemic fluents as ordinary domain fluents introduces advantages, as we explain next. Yet, there are certain limitations, which we wish to overcome with our current work. First, to the best of our knowledge, the only implementation of DECKT to date is a rule-based system (see (Patkos et al., 2016)) with procedural, rather than declarative, semantics; in this work, we deliver an encoding in the language of Answer Set Programming (ASP), based on the formal stable model semantics. Second, DECKT only models knowledge, without any support for nested knowledge statements; our implementation offers the ability to expand the formalism with nested statements. This encoding also lays the ground for modeling belief, rather than knowledge. Third, our implementation of DECKT helps perform approximate epistemic reasoning, a task that is not trivial for possible-worlds-based implementations, offering sound but potentially incomplete inferences to alleviate computational complexity issues. Last, as we show next, we also axiomatize epistemic events, such as notices, which the original theory does not support.

3 METHODOLOGY

3.1 The Cognitive Model

The constituent parts of our approximate epistemic EC reasoner are presented in Table 2. The logical program is broken down into modules (rulesets), each of which corresponds to a particular set of axioms with well-specified properties.³ All axiomatizations have been encoded in the Answer Set Programming (ASP) language (Gelfond and Lifschitz, 1988; Marek and Truszczynski, 1999). ASP is a declarative problem-solving paradigm oriented towards complex combinatorial search problems. A domain is represented as a set of logical rules, whose models, called answer sets, correspond to solutions of a reasoning task. Sets of such rules, or answer set programs, come with intuitive, well-defined semantics rooted in research on knowledge representation, in particular non-monotonic reasoning. Our system implements a translation of all the EC theories into ASP rules, which are then executed by the state-of-the-art Clingo ASP reasoner.⁴

³ Code URL: https://socola.ics.forth.gr/tools/
⁴ Clingo URL: https://potassco.org/

As shown in Table 2, there are three sets of modules: one for non-epistemic reasoning, one for 1st-order epistemic inferencing and a third one for 2nd-order, nested epistemic statements. Each set contains a domain-independent axiomatization, needed for implementing the appropriate commonsense behavior regardless of the domain of interest. For the first set, this module is the encoding of the DEC set of axioms. The second set splits DECKT into the core DECKT set and the HCD axioms, whereas in the third set, the 2nd-order DECKT module is an adaptation of the DECKT axioms appropriate for nested statements. For instance, the following two encodings specify how knowledge is generated when all preconditions of an effect axiom are known to the agent:

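    % Positive effect axiom: an observer who notices the event and believes
    % all of its preconditions comes to believe the effect.
    initiates(notices(Observer, Event),
              believes(Observer, Effect), T) :-
        axiomEvent(ID, Event),
        happens(notices(Observer, Event), T),
        allPrecBelievedTrue(ID, Observer, T),
        axiomEffectPos(ID, Effect).

    % Negative effect axiom: under the same conditions, the observer
    % comes to believe that the effect does not hold.
    initiates(notices(Observer, Event),
              believesNot(Observer, Effect), T) :-
        axiomEvent(ID, Event),
        happens(notices(Observer, Event), T),
        allPrecBelievedTrue(ID, Observer, T),
        axiomEffectNeg(ID, Effect).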

Informally, the rules state that when an observer notices the occurrence of an event that may cause a certain effect, and she also believes that all preconditions for that effect hold, then after the event she will believe the effect to be true, for positive effect axioms (first rule), or false, for negative effect axioms (second rule). A unique ID is assigned to each effect axiom, which rules such as the above use to generate domain-independent epistemic inferences (this also explains why DECKT is considered a meta-theory).

Similar rules specify how the mental state of agents should change when only partial information about the preconditions is available. Note that these rules do not assume that the beliefs are correct; false initial beliefs or events not observed by the agents may lead to erroneous conclusions. The axiomatization only ensures sound belief inference given a specific state of mind.

As already mentioned, these rules are generic and apply to any effect axiom, regardless of the domain. The actual domain axiomatization, i.e., the part that defines the dynamics of a specific environment inhabited by the agents and humans, is captured by a different module that encodes rules such as:

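    % Turning towards a new direction Dir terminates the agent's current
    % orientation DirInitial, but only when the two directions differ.
    terminates(turnsTowards(Agent, Dir),
               faces(Agent, DirInitial), T) :-
        holdsAt(faces(Agent, DirInitial), T),
        orientation(Dir),
        DirInitial != Dir,
        time(T).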

In order for the epistemic modules to use such non-epistemic domain axiomatizations, i.e., in order for DECKT to apply its meta-axiomatization approach, we developed a parser that automatically generates, for each domain axiom, a set of rules that specify the constituent parts of this axiom. The parser assigns a unique identifier to each effect axiom and defines meta-predicates that capture its preconditions, the event that triggers it and its effect. Care needs to be taken during this decomposition to correctly maintain the binding of variables between the different parts of the original axiom. This is one of the main contributions of this work, as it relieves the knowledge engineer from having to model complex epistemic rules. In practice, this means that non-epistemic EC theories can now be translated for epistemic reasoning with no additional manual modeling effort. For the time being, our implementation only translates effect axioms, but we are currently expanding the types of axioms supported.
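The decomposition step can be sketched in Python as follows. This is a hypothetical illustration, not the authors' parser: the input is an effect axiom that has already been parsed into parts, the meta-predicate names axiomEvent, axiomEffectPos and axiomEffectNeg are taken from the ASP rules above, while axiomPrec and the identifier scheme ax<n>(Vars) are our own assumptions. Making the identifier a term over all of the axiom's variables is one simple way to keep the variable bindings shared across the generated parts. We further assume that the static guards type every variable, so the example adds agent(Agent) and orientation(DirInitial), which the original rule does not contain, to keep the generated rules safe; timepoint literals are dropped.

```python
import re
from dataclasses import dataclass
from typing import List

# ASP variables start with an upper-case letter.
VARIABLE = re.compile(r"\b[A-Z][A-Za-z0-9_]*\b")


@dataclass
class EffectAxiom:
    kind: str                 # "initiates" or "terminates"
    event: str                # e.g. "turnsTowards(Agent,Dir)"
    effect: str               # e.g. "faces(Agent,DirInitial)"
    preconditions: List[str]  # fluents appearing inside holdsAt(..., T)
    guards: List[str]         # static body literals, without the timepoint T


def decompose(ax: EffectAxiom, n: int) -> List[str]:
    """Emit meta-rules describing axiom number n. The identifier carries all
    variables of the axiom, so every part stays bound to the same instance."""
    parts = [ax.event, ax.effect, *ax.preconditions, *ax.guards]
    variables = sorted({v for p in parts for v in VARIABLE.findall(p)})
    ident = f"ax{n}({','.join(variables)})" if variables else f"ax{n}"
    body = f" :- {', '.join(ax.guards)}." if ax.guards else "."
    sign = "Pos" if ax.kind == "initiates" else "Neg"
    rules = [f"axiomEvent({ident}, {ax.event}){body}",
             f"axiomEffect{sign}({ident}, {ax.effect}){body}"]
    rules += [f"axiomPrec({ident}, {p}){body}" for p in ax.preconditions]
    return rules


turn = EffectAxiom("terminates", "turnsTowards(Agent,Dir)", "faces(Agent,DirInitial)",
                   ["faces(Agent,DirInitial)"],
                   ["agent(Agent)", "orientation(Dir)", "orientation(DirInitial)",
                    "DirInitial != Dir"])
for rule in decompose(turn, 1):
    print(rule)
```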

A final note about our methodology in building the epistemic reasoner concerns its modularity. Some of the modules are mandatory in order for the inferences to be sound. Others, though, can be omitted, according to the type of reasoning one wishes to perform. For instance, DEC and core DECKT are sufficient for 1st-order statement inference; omitting the HCD axioms, which are computationally intensive, does not affect soundness, but may lead to partial conclusions (fluents that could be inferred to be true or false will remain unknown). As a result, this modularity of the encoding helps support approximate reasoning. Note that such flexibility is not easily accomplished with possible-worlds-based theories, as it is not always straightforward to decide which worlds to maintain and which to drop in order to reduce complexity without losing soundness of inference.
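The trade-off can be illustrated with a minimal Python sketch, a hypothetical simplification rather than the ASP modules themselves, that evaluates a single effect axiom with and without the HCD step. Without it, an effect whose preconditions were unknown at event time stays unknown even after the agent later senses that they held: the answer is never wrong, only less informative. The parameter names and the penOnDesk fluent are invented for illustration.

```python
from typing import Dict, Optional


def effect_belief(precs: Dict[str, Optional[bool]], positive: bool,
                  learned_later: Dict[str, bool], use_hcd: bool,
                  prior: Optional[bool] = None) -> Optional[bool]:
    """Belief about the effect of one effect axiom after its event occurred.

    precs: precondition values known when the event occurred (None = unknown).
    learned_later: precondition values sensed afterwards, assuming nothing
    changed them in between. Returns True/False if known, None if unknown."""
    values = list(precs.values())
    if all(v is True for v in values):
        return positive                   # core DECKT, case i
    if any(v is False for v in values):
        return prior                      # core DECKT, case ii: no change
    if not use_hcd:
        return None                       # HCD module omitted: stays unknown
    unknown = [p for p, v in precs.items() if v is None]
    if all(learned_later.get(p) is True for p in unknown):
        return positive                   # the hidden causal dependency fires
    return None
```

Dropping the HCD module thus trades completeness for speed while every returned True/False remains correct.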

3.2 Implementation

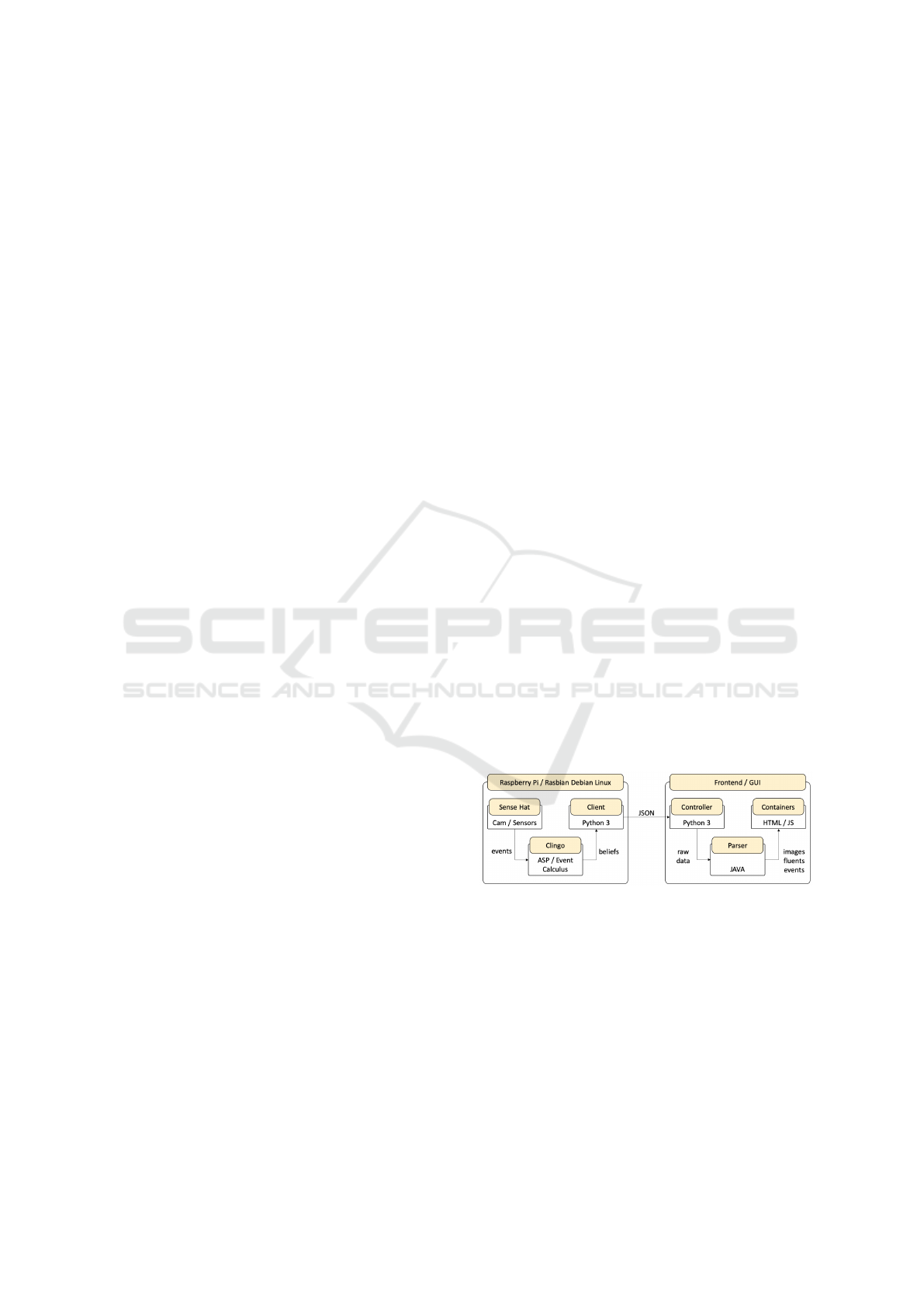

Figure 2: The system architecture.

To test our cognitive model, we are implementing a system that can be used as the basis for experimenting with diverse scenarios (Figure 2). The system comprises a Raspberry Pi computing environment (named Raspie from now on) that plays the role of a social robot operating in the environment. We used a Raspberry Pi 4 Model B 8GB, equipped with various sensors, such as a camera, a gyroscope and an accelerometer. We also installed the Clingo 5.5 ASP reasoner on board, so that all epistemic inferencing needed to support ToM behavior is executed locally at run-time.


Figure 3: The frontend displays different world views: the actual world state, the human's beliefs, Raspie's beliefs and Raspie's beliefs about what the human believes.

In addition to Raspie, we assume that a human user is positioned behind the desk. Any event, such as a change in the location of Raspie or the human user, triggers the reasoner, which generates new beliefs about where each entity is, what can be observed by each entity, which objects are known to each entity to be on the table, what their spatial relations are, etc.
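The paper does not detail how visibility is computed. As a purely hypothetical geometric stand-in, the Python sketch below treats the laptop screen as a 2D segment and regards an object as visible to a viewer if the line of sight between them does not properly cross that segment. All coordinates and names are invented for illustration, and touching or collinear cases are ignored.

```python
from typing import Dict, Set, Tuple

Point = Tuple[float, float]


def orient(a: Point, b: Point, c: Point) -> float:
    """Twice the signed area of triangle abc (> 0: counter-clockwise)."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def properly_cross(p1: Point, p2: Point, q1: Point, q2: Point) -> bool:
    """True if segments p1p2 and q1q2 intersect at a single interior point."""
    return (orient(q1, q2, p1) * orient(q1, q2, p2) < 0
            and orient(p1, p2, q1) * orient(p1, p2, q2) < 0)


def visible(viewer: Point, objects: Dict[str, Point],
            screen: Tuple[Point, Point]) -> Set[str]:
    """Objects whose line of sight from the viewer is not blocked by the screen."""
    return {name for name, pos in objects.items()
            if not properly_cross(viewer, pos, screen[0], screen[1])}


screen = ((0.0, 0.0), (2.0, 0.0))          # laptop screen along the x-axis
objects = {"pen": (1.0, 1.0), "mug": (4.0, 0.5)}
human, raspie = (1.0, -2.0), (1.0, 3.0)    # hypothetical positions
```

Here the human, sitting in front of the screen, sees only the mug, while Raspie, on the other side, also sees the pen; facts of this kind are what the reasoner turns into per-entity beliefs.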

The new belief states are then sent to the frontend, which groups beliefs of the same type together and displays them in dedicated panels (Figure 3). Apart from Raspie's beliefs about the environment (1st-order belief statements) and about the human user (2nd-order belief statements), the frontend also displays the actual world state and the human's beliefs, based on separate axiomatizations provided through a different channel. These latter world views are not directly accessible to Raspie, but help us better understand the epistemic inferences when sensing or communication actions take place.

4 DISCUSSION

In this section, we briefly discuss different scenarios that highlight both the expressive power and the impact that such a ToM-enabled robot can have in supporting complex, real-world situations. For the purpose of the current position paper, we omit most of the technical details. The goal is to showcase situations that cannot easily be implemented without a rich cognitive model, or cases where ToM can provide important leverage to intelligent systems. While most of the modeling requirements described next are already known to the research community working on classical AI, the fact that the proposed framework offers a unified solution to these phenomena, while taking into consideration how to reduce the computational complexity, is, in our opinion, a step forward.

False Beliefs: Variations of the classic “Sally and Anne test” are often used to model the state of mind of an observer when modeling facets of social cognition. The office desk example can offer an adaptation of such a setting: imagine that the human believes that, from her point of view, a pen is located behind the screen:

$$\mathit{holdsAt}(\mathit{believes}(\mathit{human}, \mathit{loc}(\mathit{human}, \mathit{behindOf}(\mathit{pen}, \mathit{laptop}))), 0)$$

Raspie, on the other hand, from its current position, has no knowledge about objects located there:

$$\neg\mathit{holdsAt}(\mathit{believes}(\mathit{raspie}, \mathit{loc}(\mathit{raspie}, \mathit{leftOf}(\mathit{Object}, \mathit{laptop}))), 0)$$

$$\neg\mathit{holdsAt}(\mathit{believesNot}(\mathit{raspie}, \mathit{loc}(\mathit{raspie}, \mathit{leftOf}(\mathit{Object}, \mathit{laptop}))), 0)$$

Yet, it also believes that the human does not believe there is a pen behind the screen (a 2nd-order statement):

$$\mathit{holdsAt}(\mathit{believes}(\mathit{raspie}, \mathit{believesNot}(\mathit{human}, \mathit{loc}(\mathit{human}, \mathit{behindOf}(\mathit{Object}, \mathit{laptop})))), 0)$$

Such a representation can capture the subjectivity of each entity, as well as the ability of agents to engage in perspective-taking, ascribing to another a mental state that they themselves believe to be false.


The fact that, in the actual state of the world, there may be no pen placed there makes things even more interesting: considering the above belief states, as well as the position of all observing entities around the table, one can see that the result of a sense action may differ significantly from the result of a communication action. In general, the proper handling of ontic actions, such as move, pick up and grab, and of epistemic actions that only change one's perspective about the state of the world, e.g., sense, announce, ask and distract, is an essential ingredient for any cognitive entity operating in causal domains.
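The nesting of belief modalities in the formulas above can be made concrete with a small, hypothetical Python sketch: epistemic fluents are nested tuples, belief_order computes their nesting depth (0 for ontic fluents, 1 for 1st-order and 2 for 2nd-order statements), and false_beliefs flags 1st-order beliefs that the actual world state contradicts, here with the world read as closed. The representation is our own, and the 2nd-order example uses the ground instance for pen rather than the variable Object.

```python
from typing import Set, Tuple, Union

Term = Union[str, Tuple]  # a constant, or (functor, arg1, arg2, ...)
MODALITIES = {"believes", "believesNot"}


def belief_order(t: Term) -> int:
    """Nesting depth of belief modalities: 0 for ontic fluents."""
    if isinstance(t, tuple) and t[0] in MODALITIES:
        return 1 + belief_order(t[2])
    return 0


def render(t: Term) -> str:
    """Print a term in the notation used in the text."""
    if isinstance(t, str):
        return t
    functor, *args = t
    return f"{functor}({', '.join(render(a) for a in args)})"


def false_beliefs(beliefs: Set[Tuple], world: Set[Term]) -> Set[Tuple]:
    """1st-order beliefs contradicted by the actual world state, reading the
    world as closed (fluents absent from it do not hold)."""
    wrong = set()
    for modality, agent, content in beliefs:
        if belief_order(content) != 0:
            continue
        holds = content in world
        if (modality == "believes" and not holds) or (modality == "believesNot" and holds):
            wrong.add((modality, agent, content))
    return wrong


pen_behind = ("loc", "human", ("behindOf", "pen", "laptop"))
human_belief = ("believes", "human", pen_behind)
raspie_belief = ("believes", "raspie", ("believesNot", "human", pen_behind))
```

In a world with no pen behind the screen, false_beliefs({human_belief}, set()) reports the human's belief as false, which is the situation described above.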

Intuitive Communication and Explainability: When two humans engage in a dialogue, a lot of information is left out because it is considered too obvious to be shared (in rhetorical syllogism, such statements are called enthymemes). This is a cognitive ability that is particularly difficult for an intelligent agent to master, as it requires both a wealth of background knowledge and a good understanding of what can be considered common knowledge between the discussing parties. For social robots, deciding when to ask the human user for information or to provide guidance, as well as how to express an utterance, can make the difference between providing assistance and becoming an obstruction. A rich cognitive model, enhanced with ToM capabilities, can lead the agent to ask questions only if it believes that the human may know the answer, based on her current or past activity. It can also help the agent become more elaborate (“You can use the blue pen behind the carton box on your right”) or more abstract (“You can use the blue pen”), based on the amount of information it believes the two entities share.
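Both decisions can be sketched in Python, assuming the robot's 2nd-order beliefs about the user are available as simple lookup structures; this representation, the function names and the fluent strings are hypothetical and do not reflect the reasoner's actual output format.

```python
from typing import Dict, Optional, Set


def should_ask(fluent: str, user_model: Dict[str, Optional[bool]]) -> bool:
    """Ask only if the robot believes the user holds a belief about the fluent
    (True or False), i.e. she may know the answer; None or missing = no belief."""
    return user_model.get(fluent) is not None


def describe(obj: str, detail: str, user_knows_location: Set[str]) -> str:
    """Abstract utterance if the robot believes the user already knows where
    obj is; otherwise an elaborate one that adds the spatial detail."""
    base = f"You can use the {obj}"
    return base if obj in user_knows_location else f"{base} {detail}"


hint = describe("blue pen", "behind the carton box on your right", set())
```

The same object thus yields the elaborate utterance when the robot believes the user does not know where it is, and the abstract one otherwise.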

More importantly, the ability of AI agents to explain their actions and decision-making processes has become increasingly urgent lately. The transparency and provability of formal methods, and the scrutiny of beliefs grounded not only on the perspective of the different observers but also on the type of beliefs, as discussed next, can significantly improve the trustworthiness of a system interacting with non-expert users.

Revision based on Types of Beliefs: The example so far has revealed three types of belief: beliefs coming from observation (sense actions), beliefs communicated by other entities (announce actions) and beliefs derived through logical inference. Additionally, theories such as the EC allow defaults to be modeled, e.g., agents may typically believe that pencils can be found in a pencil box, if one is located on the desk. Defaults constitute a big part of human intuition and reflect the experiences and background knowledge of humans when they operate in environments familiar to them. Naturally, an observation may invalidate such beliefs. The point is that, in certain cases, some types of knowledge or beliefs can be considered more trustworthy than others. This proves helpful when the agent's beliefs contradict each other; although statistical methods try to find quantitative measures in order to assign confidence values to contradicting sources of information, a qualitative approach that takes into consideration the type of knowledge being manipulated can lead to more intuitive and efficient revision schemes. For instance, preference-based models are often used in the relevant literature, and have recently been applied to action formalisms, such as the EC (Tsampanaki et al., 2021).
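As a hypothetical illustration of a qualitative, type-based revision scheme (not the preference-based model of Tsampanaki et al., 2021), the Python sketch below keeps, for each fluent, the value supported by the most trusted source type and breaks ties by recency. The ranking observed > announced > inferred > default is an assumption made for illustration only.

```python
from typing import Dict, List, Tuple

# Hypothetical trust ranking of belief types (higher wins).
RANK: Dict[str, int] = {"default": 0, "inferred": 1, "announced": 2, "observed": 3}

Belief = Tuple[str, bool, str, int]  # (fluent, value, source type, timepoint)


def revise(beliefs: List[Belief]) -> Dict[str, bool]:
    """Resolve contradictions: most trusted source first, then most recent."""
    best: Dict[str, Belief] = {}
    for b in beliefs:
        fluent, _value, source, time = b
        current = best.get(fluent)
        if current is None or (RANK[source], time) > (RANK[current[2]], current[3]):
            best[fluent] = b
    return {fluent: b[1] for fluent, b in best.items()}


beliefs = [("in(pencil, pencilBox)", True, "default", 0),
           ("in(pencil, pencilBox)", False, "observed", 3),
           ("behindOf(pen, laptop)", True, "announced", 2),
           ("behindOf(pen, laptop)", False, "inferred", 4)]
revised = revise(beliefs)
```

In this example, the observation overrides the pencil-box default, while an announcement outranks a later inference; a different ranking would change only the RANK table.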

Action Prediction: Inferences such as the ones discussed so far constitute the first step towards accomplishing complex reasoning tasks. By relying on a rich cognitive model of human beliefs, along with past interactions with objects in a given domain, an intelligent system can go one step further and try to anticipate human needs and intentions, predict future actions and, in general, provide timely assistance, rather than just respond to commands.

Consider the following statement: “Typically, a human will a) look for an object she needs, based on her currently committed intentions, b) reach for the object that is closer to her/easier to reach, and c) choose the object that is working properly (not broken)/is clean/is fresh etc.”. Template statements such as this are generic enough to capture typical user behavior and can easily be adapted to particular domain-specific requirements (part (c) of the statement). Endowing social agents with generic human behavior prescriptions can help in interpreting scenes, predicting the human's next actions, and ultimately identifying opportunities for offering assistance (“There is a pencil behind the screen, in case you haven't noticed it”) or for informing the user about false beliefs (“While your attention was on your mobile, the cat ran away with the laptop mouse”).
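The template statement can be turned into a small, hypothetical Python sketch: parts (a) to (c) become a filter on the needed kind, a usability filter and a distance-based choice, restricted to objects the human believes are there. The gap between her predicted choice and the best object the robot knows about becomes an opportunity for assistance. Distances, object names and the hint wording are invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Candidate:
    name: str
    kind: str          # e.g. "pen"
    distance: float    # reach distance from the human (hypothetical metric)
    working: bool      # part (c): domain-specific usability test


def predict_reach(needed: str, candidates: List[Candidate],
                  known_to_human: Set[str]) -> Optional[Candidate]:
    """Object the human is predicted to reach for: of the needed kind (a),
    usable (c), one she believes is there, nearest first (b)."""
    usable = [c for c in candidates
              if c.kind == needed and c.working and c.name in known_to_human]
    return min(usable, key=lambda c: c.distance, default=None)


def assistance_hint(needed: str, candidates: List[Candidate],
                    known_to_human: Set[str]) -> Optional[str]:
    """Suggest a usable object the human does not know about, if it beats her
    predicted choice (or she has no option at all)."""
    predicted = predict_reach(needed, candidates, known_to_human)
    hidden = [c for c in candidates
              if c.kind == needed and c.working and c.name not in known_to_human]
    best = min(hidden, key=lambda c: c.distance, default=None)
    if best is not None and (predicted is None or best.distance < predicted.distance):
        return f"There is a {best.kind} ({best.name}) you may not have noticed."
    return None


pens = [Candidate("pen1", "pen", 0.8, True),
        Candidate("pen2", "pen", 0.3, True),
        Candidate("pen3", "pen", 0.1, False)]
```

With known_to_human = {"pen1", "pen3"}, the human is predicted to reach for pen1, and the robot can point out pen2, which is closer but unknown to her.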

5 CONCLUSIONS

In this paper, we discussed work in progress towards developing a formal framework for intelligent agents capable of exhibiting ToM. We argued for the importance of such cognitive skills for autonomous entities operating close to humans, and we further provided initial implementation directions that build on existing research in epistemic action languages.

This initial work lays the ground for both theoretical and practical advancement. To begin with, given that we introduced new features to DECKT (new epistemic actions, nested epistemic statements, etc.), we also need to update the formal proofs regarding its equivalence with possible-worlds-based theories. We also identified numerous ways of extending the expressive power of DECKT to account for more complex cases, such as belief revision (recall that DECKT only supports knowledge, i.e., in the presence of contradicting statements the theory collapses), potential action occurrences, and beliefs of diverse types, among others.

From a practical standpoint, our main goal is to evaluate how ToM can improve typical prediction tasks of interest in the field of Computer Vision. Recent studies (Ji et al., 2021) already try to take advantage of past human-object interactions, including where the user looked, in order to predict future actions in videos. Datasets such as Action Genome, which provide annotations about attentional relationships (whether a person is looking at something) in addition to spatial and contact relationships, can help build cognitive models of the users' mental state. Beyond such experiments, we also plan to evaluate the proposed formalism in terms of scalability and to further explore efficient means of implementing HCDs, the main component that introduces exponential complexity to the epistemic reasoner.
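To indicate how attentional annotations might feed such a cognitive model, the following sketch tracks, frame by frame, where a person believes each object to be: a belief is updated only in frames where the person is annotated as looking at the object, so a relocation that happens outside her attention yields a false belief, as in the laptop-mouse example discussed earlier. The `Frame` record below is a hypothetical simplification and not the actual Action Genome annotation schema; `track_beliefs` and `false_beliefs` are likewise illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    """Hypothetical per-frame annotation (not the Action Genome schema)."""
    locations: dict[str, str]   # ground-truth location of each object
    looking_at: frozenset[str]  # objects the person attends to in this frame


def track_beliefs(frames: list[Frame]) -> dict[str, str]:
    """Return, for each object, the location where the person last saw it."""
    beliefs: dict[str, str] = {}
    for frame in frames:
        for obj in frame.looking_at:
            # Attending to an object reveals its current location.
            beliefs[obj] = frame.locations[obj]
    return beliefs


def false_beliefs(frames: list[Frame]) -> dict[str, tuple[str, str]]:
    """Map each object whose believed location differs from its location in
    the last frame to the pair (believed location, actual location)."""
    if not frames:
        return {}
    believed = track_beliefs(frames)
    actual = frames[-1].locations
    return {obj: (loc, actual.get(obj)) for obj, loc in believed.items()
            if actual.get(obj) != loc}


frames = [
    Frame({"mouse": "desk"}, frozenset({"mouse"})),  # she sees the mouse on the desk
    Frame({"mouse": "desk"}, frozenset()),           # her attention is on her mobile
    Frame({"mouse": "hallway"}, frozenset()),        # the cat carries the mouse away
]
print(false_beliefs(frames))  # {'mouse': ('desk', 'hallway')}
```

Objects the person never attended to are treated as unknown rather than as falsely believed; distinguishing ignorance from false belief in this way is the kind of distinction an epistemic formalism makes explicit.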

ACKNOWLEDGEMENTS

This project has received funding from the Hellenic Foundation for Research and Innovation (HFRI) and the General Secretariat for Research and Technology (GSRT), under grant agreement No 188.

REFERENCES

Apperly, I. A. (2012). What is “theory of mind”? Concepts, cognitive processes and individual differences. Quarterly Journal of Experimental Psychology, 65(5):825–839.

Chen, B., Vondrick, C., and Lipson, H. (2021). Visual behavior modelling for robotic theory of mind. Scientific Reports, 11(1):424.

D’Asaro, F. A., Bikakis, A., Dickens, L., and Miller, R. (2020). Probabilistic reasoning about epistemic action narratives. Artificial Intelligence, 287:103352.

Davis, E. and Morgenstern, L. (2005). A First-order Theory of Communication and Multi-agent Plans. Journal of Logic and Computation, 15(5):701–749.

Ditmarsch, H. v., van der Hoek, W., and Kooi, B. (2007). Dynamic Epistemic Logic. Springer Publishing Company, Incorporated, 1st edition.

Gelfond, M. and Lifschitz, V. (1988). The stable model semantics for logic programming. In Proc. 5th International Joint Conference and Symposium on Logic Programming, IJCSLP 1988, pages 1070–1080.

Ji, J., Desai, R., and Niebles, J. C. (2021). Detecting human-object relationships in videos. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), pages 8106–8116.

Kowalski, R. and Sergot, M. (1986). A logic-based calculus of events. New Generation Computing, 4(1):67–95.

Liu, Y. and Levesque, H. (2005). Tractable reasoning with incomplete first-order knowledge in dynamic systems with context-dependent actions. In IJCAI-05, pages 522–527.

Ma, J., Miller, R., Morgenstern, L., and Patkos, T. (2013). An epistemic event calculus for ASP-based reasoning about knowledge of the past, present and future. In LPAR 2013, 19th International Conference on Logic for Programming, Artificial Intelligence and Reasoning, volume 26, pages 75–87.

Marcus, G. and Davis, E. (2019). Rebooting AI: Building Artificial Intelligence We Can Trust. Pantheon Books, USA.

Marek, V. W. and Truszczynski, M. (1999). Stable models and an alternative logic programming paradigm. In The Logic Programming Paradigm: A 25-Year Perspective, pages 375–398. Springer Berlin Heidelberg.

Miller, R. and Shanahan, M. (2002). Some alternative formulations of the event calculus. In Computational Logic: Logic Programming and Beyond, pages 452–490. Springer.

Mueller, E. (2015). Commonsense Reasoning: An Event Calculus Based Approach. Morgan Kaufmann Publishers Inc., San Francisco, CA, USA, 2nd edition.

Patkos, T. and Plexousakis, D. (2009). Reasoning with knowledge, action and time in dynamic and uncertain domains. In IJCAI-09.

Patkos, T., Plexousakis, D., Chibani, A., and Amirat, Y. (2016). An event calculus production rule system for reasoning in dynamic and uncertain domains. Theory Pract. Log. Program., 16(3):325–352.

Scherl, R. (2003). Reasoning about the interaction of knowledge, time and concurrent actions in the situation calculus. In IJCAI-03, pages 1091–1096.

Schwering, C., Lakemeyer, G., and Pagnucco, M. (2015). Belief revision and progression of knowledge bases in the epistemic situation calculus. In IJCAI-15.

Shapiro, S., Pagnucco, M., Lespérance, Y., and Levesque, H. (2011). Iterated belief change in the situation calculus. Artificial Intelligence, 175(1):165–192.

Suchan, J., Bhatt, M., Wałega, P., and Schultz, C. (2018). Visual explanation by high-level abduction: On answer-set programming driven reasoning about moving objects. In AAAI Conference on Artificial Intelligence, pages 1965–1972.

Tsampanaki, N., Patkos, T., Flouris, G., and Plexousakis, D. (2021). Revising event calculus theories to recover from unexpected observations. Annals of Mathematics and Artificial Intelligence, 89(1-2):209–236.

ICAART 2022 - 14th International Conference on Agents and Artificial Intelligence
