Impact of Error-making Peer Agent Behaviours in a Multi-agent Shared Learning Interaction for Self-Regulated Learning

Sooraj Krishna (1, a) and Catherine Pelachaud (2, b)

1 ISIR, Sorbonne University, Paris, France

2 CNRS-ISIR, Sorbonne University, Paris, France

Keywords:

Pedagogical Agents, Collaborative Learning, Self-Regulated Learning, Human-agent Interaction.

Abstract:

Agents in a learning environment can have various roles and social behaviours that can influence the goals and motivation of learners in distinct ways. Self-regulated learning (SRL) is a comprehensive conceptual framework that encapsulates the cognitive, metacognitive, behavioural, motivational and affective aspects of learning and entails the processes of goal setting, progress monitoring, feedback analysis, and the adjustment of goals and actions by the learner. This study aims to understand how error-making behaviours in the peer agent role influence the learner's perceptions of agent roles, related behaviours and self-regulation. We present a multi-agent learning interaction involving the pedagogical agent roles of tutor and peer learner, defined by their social attitudes and competence characteristics, which deliver specific regulation scaffolding strategies to the learner. The results suggest that error-making behaviours in the peer agent are effective for clearly establishing the pedagogical roles in a multi-agent learning interaction, and that they significantly influence the learner's self-regulation and perceptions of agent competency.

1 INTRODUCTION

Computer-supported collaborative learning environments have enabled interventions in the social processes of learning by means of artificial pedagogical agents, such as virtual agents or social robots, and their roles and behaviours towards the learner. Pedagogical agents can be described as intelligent artificial learning partners that support the student's learning and use various strategies in an interactive learning environment. According to Stone and Lester (1996), a pedagogical agent should exhibit the properties of contextuality (providing explanations and responding appropriately in the social and problem-solving context), continuity (maintaining pedagogical, verbal and behavioural coherence in actions and utterances) and temporality (intervening in learning at the right time to communicate concepts and relationships) to be effective in a learning interaction. Socially shared regulation in learning (SSRL) (Järvelä et al., 2013) involves multiple learning partners regulating themselves as a collective unit through negotiation, decision making and knowledge sharing.

a ORCID: https://orcid.org/0000-0002-6171-1573

b ORCID: https://orcid.org/0000-0002-7257-0761

Such a shared learning environment would involve entities of different social attitudes and competencies, which makes the learning interaction interesting in terms of the types of regulation behaviours emerging from each learning partner. The shared regulation of learning may involve distinct types of regulation scaffolding, such as:

• External regulation: facilitated by more capable or knowledgeable learning partners, such as an expert or tutor, who provide instructions, feedback or prompting strategies that can enhance the regulation of the learner.

• Co-regulation: when a peer learner influences the regulation behaviours of the learner through jointly constructed goals and decisions.

Artificial pedagogical agents have great potential to be used for learning interactions with specific regulation goals and behaviours.

Accurate perception of the pedagogical role associated with each agent in the learning interaction is essential to ensure that the learner receives and processes the regulation strategies as intended by the design of the learning interaction (Baylor and Kim, 2005). To date, multi-agent learning interactions involving different pedagogical agent roles in the SSRL context remain largely unexplored (Panadero, 2017). In this research, we aim to address this gap by orchestrating shared regulation interactions with agents of various roles and related regulation scaffolding strategies.

Krishna, S. and Pelachaud, C.

Impact of Error-making Peer Agent Behaviours in a Multi-agent Shared Learning Interaction for Self-Regulated Learning.

DOI: 10.5220/0010881400003116

In Proceedings of the 14th International Conference on Agents and Artificial Intelligence (ICAART 2022) - Volume 1, pages 337-344

ISBN: 978-989-758-547-0; ISSN: 2184-433X

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In general, a learning interaction with pedagogical agent(s) in a socially shared regulation context can be broken down into three elements: (i) a human learner, (ii) pedagogical agent learning partner(s) and (iii) a collaborative learning activity. For our research, we design the shared learning interaction with two pedagogical agents: one agent assumes the role of a tutor, the more knowledgeable other (MKO), providing external regulation support, and the other is presented in the role of a peer learner facilitating co-regulation functions. We have operationalized the roles of tutor and peer collaborator to represent sources of external regulation and co-regulation, respectively. Hence, the proposed learning interaction involves a human learner and two agents with distinct regulation behaviours engaging in a collaborative learning task, as represented in Figure 1.

Figure 1: Triadic learning interaction elements constituting SSRL.

2 RELATED WORK

2.1 Error-making Peer Agents

In the self-regulation context, the peer is considered a source of co-regulation strategies, which involve learners supporting and influencing each other's regulation of learning, typically in an independent and reciprocal manner, through behaviours such as thinking aloud, seeking help and suggesting alternatives. Prior research on pedagogical agents has shown that learners apply social judgements to agent behaviours and respond to their social cues in accordance with the attributed roles (Kory-Westlund and Breazeal, 2019; Breazeal et al., 2016; Kim et al., 2006). For instance, Weiss et al. (2010) found a significant drop in the credibility of the peer agent when it provided an incorrect hint to the learner during the game. Yadollahi et al. (2018) designed an interaction in which a robot peer read to a child and sometimes made mistakes that the learner was supposed to correct. The mistakes committed by the peer were contextual, representational, or concerned the pronunciation or syntax of the reading material. The results suggested that the agent's use of pointing gestures helped the learners to identify and respond to the peer's mistakes. In another study on interaction with teachable agents, Ogan et al. (2012) instructed learners to narrate their experiences in teaching the virtual agent Stacy and found that the agent's task errors were a significant predictor of learner engagement. The authors also suggested making the errors more realistic and having the agents acknowledge their errors socially to correctly convey the agent's competency level. Concerning trust, Geiskkovitch et al. (2019) explored the effect of informational errors by a peer in a learning task and observed that the error behaviours do impact the learner's trust in the agent, although the effect was limited to the task phase involving the mistake.

Regarding the effect of agents' error behaviours on the regulation of the learner, Coppola and Pontrello (2014) observed that learning from errors can be an explicit teaching strategy and can promote self-monitoring and reflection. Okita (2014) examined self-training and self-other training in a computer-supported learning environment designed to help learners assess and correct their own learning. Self-training involved learners solving problems on their own, while self-other training involved working with a virtual character, taking turns to solve problems and monitoring each other's mistakes. The results suggested that self-other training with a peer agent that made task errors helped the learners engage in the metacognitive activities of self-monitoring and correction. Thus, error-making behaviours by a peer agent can complement the regulation aspects as well as signal the associated competency level of the peer.

3 RESEARCH QUESTIONS

The proposed multi-agent learning interaction in the SSRL context involves a virtual tutor agent responsible for external regulation and a virtual robot peer exhibiting co-regulation behaviours. The results from our previous perceptive studies on learner perceptions of agent roles and associated behaviours suggested the potential of a multi-agent learning interaction in promoting self-regulation. However, some participants who held a wrong perception of the agent roles were reported to be confused about the peer agent's role. According to the design of the learning interaction, the learner must associate the roles of tutor and peer with the intended agents in order to avoid misinterpreting the regulation strategies and behaviours. Hence, the objective of this study is to introduce error-making behaviours in the peer agent during the learning activity and to understand how they influence the learner's agent role perception and self-regulation.

3.1 Hypotheses

• H1: Error-making behaviour of the peer agent would promote the correct perception of agent roles and associated qualities.

• H1a: The peer agent will be perceived to be less intelligent than the tutor agent after the activity.

• H1b: The learner's role perception of the peer agent will improve after the activity.

• H2: Error-making behaviours of the peer agent would promote better regulation in learners.

4 METHODOLOGY

We conceived a perceptive study with a within-subjects design to understand the effect of introducing error-making behaviours in the peer agent on the perception of agent roles and associated qualities, and on the regulation of the learner. The participants were asked to watch videos of the tutor and peer agents presenting, performing and regulating themselves or each other during the learning task, and to answer questionnaires about their perception of both agents, the learning activity and their own regulation behaviours.

4.1 System Design

The learning interaction for the study was based on the FRACTOS learning task, which involves building fractions using virtual LEGO blocks (Figure 2). The virtual agent (named Alice) is presented as the tutor and the virtual robot (named Bot) is introduced in the role of a peer learner. The tutor agent is modelled in the GRETA platform (Pelachaud, 2015) and animated in the Unity3D environment. It is characterised by behaviours of moderate dominance and friendliness, which define its social attitude, and by external regulation strategies that present it as a more knowledgeable entity. The peer agent is characterised by moderate levels of dominance and friendliness, expressed through multimodal behaviours such as informal, emotional and inquisitive speech, expressive gestures, and mutual and joint gaze. The speech of the virtual peer agent was driven by the IBM Watson services platform, and relevant gestures and animations, selected based on sentiment and semantic analysis of the agent's intended dialogues, were realised in the Unity3D game environment.

Figure 2: FRACTOS learning task interface.

4.2 Questionnaires

Before the agents were introduced, the participants were briefed on the context of the study and asked to answer the NARS (Negative Attitudes towards Robots Scale) questionnaire (Nomura et al., 2006) on their attitudes and prejudices towards virtual characters. The participants rated 6 items on a 5-point Likert scale, from 1 = "I completely disagree" to 5 = "I completely agree".

4.2.1 Pre-activity Questionnaire

The pre-activity questionnaire consisted of 17 items in total: selected items from the Godspeed questionnaire (Bartneck et al., 2009) on agent perception (12 items), questions on agent role perception (2 items) and a knowledge test on fractions (3 items). The Godspeed items addressed the perceived intelligence (3 items) and likeability (3 items) of the tutor and peer agents separately, and the participants rated the items on a 5-point Likert scale.

4.2.2 Post-activity Questionnaire

After the activity, the participants were asked to provide basic demographic information (gender, age group, education level, first language and ethnic identity) and were given a 35-item questionnaire measuring agent perception, activity perception, the learner's self-regulation, role perception and knowledge of the learning topic. The questionnaire contained selected items from the Godspeed questionnaire on agent perception (12 items on the perceived intelligence and likeability of both agents), the Intrinsic Motivation Inventory (IMI) scale (6 items on activity interest and value) (Ryan and Deci, 2000), the Academic Self-Regulation Questionnaire (SRQ-A) (12 items on identified regulation, intrinsic motivation, introjected regulation and external regulation) (Ryan and Connell, 1989; Deci et al., 1992), role perception (2 items) and a knowledge test (3 items). After answering the questionnaire, the participants could optionally provide general feedback on the activity experience in a separate text field.

4.3 Procedure

The study aimed to improve the learner's perception of the agents' roles in the learning interaction, as well as the learner's regulation, by introducing an episode of error making by the peer agent during the learning task (Figure 3). The study was conducted online: the participants watched videos of both agents introducing themselves and explaining the game activity at the beginning, and later observed the virtual peer agent performing in the game while being instructed by the tutor agent. The participants were instructed to actively observe the interaction and attend to the questions and tasks emerging in the game. The tutor agent often addressed the participant and the peer agent together as a team to emphasize the peer learning scenario. The learning interaction can be broken down into three stages: introduction, activity and wrap-up.

Figure 3: Peer agent making an error in the FRACTOS learning task.

S1 Introduction: The participants were shown a video of the tutor and peer agents introducing themselves and engaging in social talk. The tutor agent then explains the fundamentals of fractions and introduces the game elements to the participant and the peer agent. This is followed by an example video of building a fraction using the virtual LEGO blocks present in the game. At the end of the introduction session, the participants are asked to answer the pre-activity questionnaire.

S2 Activity: In the activity session, the participants watch a video of the tutor agent presenting three distinct fractions and asking the peer agent to build them using virtual LEGO blocks of values 1/2, 1/4 and 1/8. The peer agent attends to the tutor's instructions and suggestions during the activity and demonstrates co-regulation traits such as thinking aloud and seeking help. The peer agent does not follow all the suggestions and hints given by the tutor and thus acts in a more self-directed way in its actions and choices. The error-making episode occurs in the turn of building the second fraction, 3/4.

Error Making Episode: The tutor agent presents the task of building the fraction 3/4 and asks the peer agent to try it. In the planning phase, the peer agent makes the wrong choice of using 1/2 blocks to build the fraction and goes ahead with it, even though the tutor suggests using the 1/4 blocks. After the fraction is built, the tutor tells the peer agent and the learner that the solution was wrong and shows both of them the correct solution. The tutor agent then asks the peer agent and the learner to observe the number of 1/4 blocks required to build the solution and thus reflect on the mistake.

Alice: Do you know how to build this fraction?

Bot: I think we can use the 1/2 blocks for this. I mean the red ones.

Alice: Are you sure? Why don't you try using the 1/4 blocks instead?

Bot: I think three blocks of red should make this fraction. Let me try it.

Alice: Okay. Let's see if it is the correct solution.

[Correct solution appears]

Alice: That was not correct. Three blocks of 1/4 make the 3/4 fraction. I hope you understood the mistake.

Bot: Yes, I understand now.
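The arithmetic behind the peer's error can be checked with exact rational numbers. The sketch below is illustrative only and is not part of the FRACTOS implementation; the helper name `blocks_needed` is hypothetical. It tests whether a target fraction can be built from identical blocks of a single value (1/2, 1/4 or 1/8, the block values used in the task) and, if so, how many blocks are needed.

```python
from fractions import Fraction

# Block values available in the FRACTOS task (from the activity description).
BLOCK_VALUES = [Fraction(1, 2), Fraction(1, 4), Fraction(1, 8)]

def blocks_needed(target, block):
    """Return how many identical blocks of value `block` sum exactly to `target`,
    or None if the target cannot be built from that block alone.
    (Hypothetical helper for illustration.)"""
    count = target / block              # exact rational division
    return int(count) if count.denominator == 1 else None

target = Fraction(3, 4)

# The peer's plan: three red 1/2 blocks give 3/2, not 3/4.
peer_attempt = 3 * Fraction(1, 2)
print(peer_attempt, peer_attempt == target)      # 3/2 False

# The tutor's correction: three 1/4 blocks give exactly 3/4.
print(3 * Fraction(1, 4) == target)              # True

# One line per block value: 1/2 None, then 1/4 3, then 1/8 6.
for block in BLOCK_VALUES:
    print(block, blocks_needed(target, block))
```

Using `Fraction` rather than floating point keeps the comparison exact, which matters when checking whether a block count divides the target evenly.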


S3 Wrap-up: The learning interaction concludes with both agents thanking the participants and bidding farewell, reminding them to continue learning about fractions. After the wrap-up session, the participant is given the post-activity questionnaire on perceptions of agent roles, agent features, the learning task and self-regulation, along with a knowledge test on fractions.

5 ANALYSIS AND RESULTS

5.1 Participants

The study involved 30 adult participants (19 female, 10 male, 1 not disclosed) recruited online through the survey hosting platform Prolific. Since the interaction takes place in English, we recruited participants whose first language was English. The demographic information shows that most participants belonged to the age groups of 21-30 years (43.3%) and 31-40 years (36.7%). Regarding education level, the largest group of participants (43.3%) held an undergraduate degree.

5.2 Attitude towards Agents

The NARS scale on attitudes toward situations and

interactions with the agents gave a Cronbach’s alpha

value of 0.82 on the test for reliability, indicating the

good reliability of the measure. We computed the

means of the item scores to obtain the overall mean

NARS score for each participant and divided them

into two groups, ”Positive” and ”Negative”, according

to the overall mean (M = 2.31, SD= 0.80). The ”Posi-

tive” attitude group had 20 participants and the ”Neg-

ative” attitude group had 10 participants. On perform-

ing an independent t-test between these two groups,

we found significantly higher scores for the factors

of perceived intelligence (p(Tutor) = 0.01, p(Peer)

= 0.029) and likeability (p(Tutor) = 0.032, p(Peer)

= 0.001) of both agents prior to the activity. Also

the activity interest was higher for the ”Positive” at-

titude group (M = 3.72, SD = 0.59) as compared to

the ”Negative” attitude group (M = 3.2, SD = 0.34).

The results suggest that the positive a-priori attitude

towards the agents can promote a better perception of

the agent qualities as well as the interest in the learn-

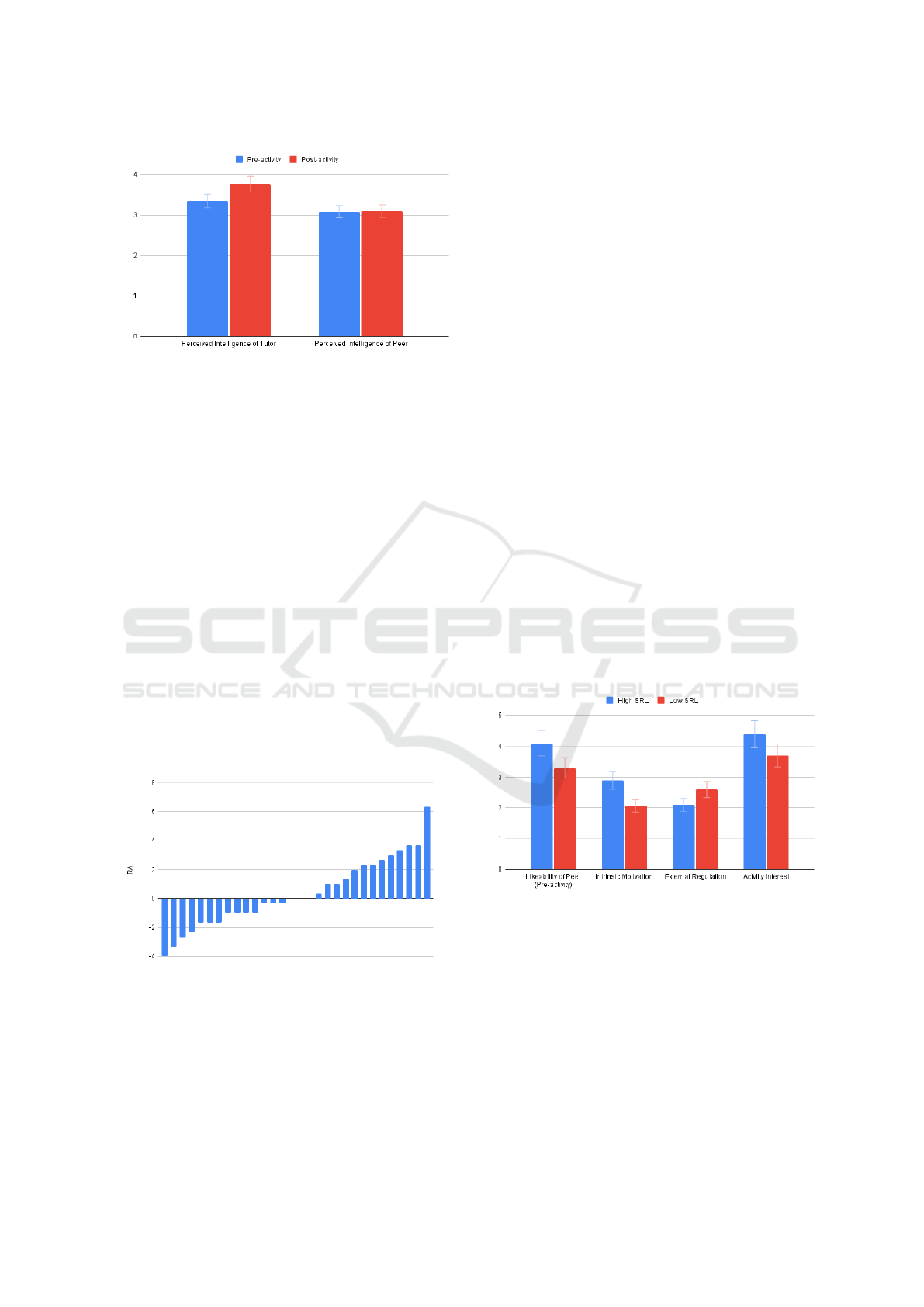

ing activity (Figure 4).
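A minimal sketch of the grouping and comparison described above, using only the Python standard library. It assumes that a participant whose mean NARS score lies strictly below the overall mean is placed in the "Positive" group (lower NARS scores indicate fewer negative attitudes). The paper does not state how ties were handled or which t-test variant was used, so the pooled-variance (Student's) version shown here is an assumption, and the function names are hypothetical.

```python
from math import sqrt
from statistics import mean, variance

def nars_groups(item_scores):
    """Split participants into 'Positive'/'Negative' attitude groups.

    item_scores: one list of NARS item ratings (1-5) per participant.
    Assumption: a mean score strictly below the overall mean -> 'Positive'.
    """
    per_participant = [mean(items) for items in item_scores]
    overall = mean(per_participant)
    return ["Positive" if s < overall else "Negative" for s in per_participant]

def independent_t(a, b):
    """Student's independent-samples t statistic (pooled variance) and its df."""
    n1, n2 = len(a), len(b)
    pooled = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    t = (mean(a) - mean(b)) / sqrt(pooled * (1 / n1 + 1 / n2))
    return t, n1 + n2 - 2

# Toy ratings, invented only to exercise the code.
print(nars_groups([[1, 2, 1, 2, 1, 2], [4, 4, 5, 4, 3, 4], [2] * 6, [5, 5, 4, 4, 5, 5]]))
# ['Positive', 'Negative', 'Positive', 'Negative']
```

`scipy.stats.ttest_ind(a, b)`, with its default `equal_var=True`, returns the same t statistic together with a two-sided p-value.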

5.3 Activity Perception

The IMI scale on activity perception showed good reliability only for the activity interest factor (α = 0.874); hence, the activity value factor was not considered in further analysis. Across all participants, the FRACTOS learning task based interaction received a high activity interest score (M = 3.55, SD = 0.57), indicating that the proposed learning interaction was generally engaging for the learners.

Figure 4: Comparing the groups of positive and negative attitudes towards agents.
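Cronbach's alpha, used throughout this section to judge scale reliability, compares the sum of the individual item variances with the variance of the participants' total scores: α = k / (k − 1) × (1 − Σ σ²_i / σ²_total) for k items. A minimal standard-library sketch follows; the function name is hypothetical and the toy responses are invented purely to exercise the code.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for a list of participants' item ratings.

    responses: one list per participant, each holding k item scores.
    Sample variances are used for both items and totals; the ratio is the
    same as with population variances because both scale identically.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_columns = list(zip(*responses))                   # transpose: one tuple per item
    item_var_sum = sum(variance(col) for col in item_columns)
    total_var = variance([sum(row) for row in responses])  # variance of summed scores
    return k / (k - 1) * (1 - item_var_sum / total_var)

# Toy data: three participants answering two items.
print(cronbach_alpha([[1, 1], [2, 2], [3, 3]]))            # perfectly consistent items: 1.0
print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3]]), 3))  # 0.667
```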

5.4 Agent Perception

The Godspeed items on agent perception showed good reliability before the activity (Tutor = 0.92, Peer = 0.87) and after it (Tutor = 0.94, Peer = 0.92), as well as for the individual factors of perceived intelligence (Tutor = 0.87, Peer = 0.75) and likeability (Tutor = 0.91, Peer = 0.91). Paired samples t-tests on the agent perception variables of both agents showed that only the perceived intelligence of the tutor agent improved significantly (p = 0.003), while the perceived intelligence of the peer agent remained relatively unchanged (Figure 5). The post-activity perceived intelligence of the tutor agent (M = 3.76, SD = 0.9) was higher than before the activity (M = 3.34, SD = 0.80). The difference in the perceived intelligence of the two agents can be attributed to the peer's error-making behaviours, since the perceived intelligence of the peer agent did not improve after the activity. Thus, our hypothesis H1a, that the peer agent with error-making behaviour will be perceived as less intelligent than the tutor agent, as intended in the design of their roles, is supported by the study results.
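The pre/post comparison above is a paired samples t-test: each participant contributes a difference score (post minus pre), and t is the mean difference divided by its standard error, with n − 1 degrees of freedom. A minimal standard-library sketch; the function name and the toy ratings are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(pre, post):
    """Paired-samples t statistic and df for ratings from the same participants."""
    if len(pre) != len(post):
        raise ValueError("pre and post must come from the same participants")
    diffs = [after - before for before, after in zip(pre, post)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))   # mean difference / standard error
    return t, n - 1

# Toy perceived-intelligence ratings for four participants.
t, df = paired_t([3, 3, 4, 2], [4, 5, 5, 2])
print(round(t, 3), df)   # 2.449 3
```

`scipy.stats.ttest_rel(post, pre)` computes the same statistic and adds a two-sided p-value.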

5.5 Role Perception

The analysis of the role perception questions given before the learning activity shows that only one participant wrongly perceived the virtual tutor agent in the role of a peer, while five participants wrongly perceived the virtual robot agent as a tutor and three participants remained undecided about the roles. All other 21 participants reported the roles of the tutor and peer agents as intended by the design of the learning interaction. After the learning activity, all participants who had an initially wrong perception of the agent roles except one improved by correctly assigning the role of peer to the virtual robot agent. The only participant who maintained the wrong perception after the activity still considered the virtual robot agent to be a tutor. Since 88% of the participants with an initially wrong role perception (7 of 8) gained the correct perception of agent roles and behaviours, we conclude that hypothesis H1b, which expects the error-making behaviours of the peer agent to improve the learner's agent role perception, is sufficiently supported by the results.

Figure 5: Perceived intelligence factor means of tutor and peer agents along the interaction.
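The 88% figure is the share of participants with an initially wrong or undecided role perception who assigned the peer role to the robot after the activity. A small sketch of that tally, assuming each participant's answer about the robot agent is recorded as one of the hypothetical labels "peer", "tutor" or "undecided"; the function name and the toy labels are also hypothetical.

```python
def role_correction(pre, post, intended="peer"):
    """Count participants whose pre-activity label for the robot differed from
    the intended role, and how many of them gave the intended role afterwards."""
    initially_wrong = [i for i, label in enumerate(pre) if label != intended]
    corrected = [i for i in initially_wrong if post[i] == intended]
    return len(corrected), len(initially_wrong)

# Toy labels: 5 "tutor" and 3 "undecided" answers before the activity, all but
# one of which become "peer" afterwards; 4 participants are correct throughout.
pre = ["tutor"] * 5 + ["undecided"] * 3 + ["peer"] * 4
post = ["peer"] * 4 + ["tutor"] + ["peer"] * 7

fixed, wrong = role_correction(pre, post)
print(fixed, wrong, f"{fixed / wrong:.0%}")   # 7 8 88%
```

Note that 7/8 is exactly 87.5%, which the paper reports rounded to 88%.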

5.6 Self-regulation Behaviour

Figure 6: Distribution of RAI scores of participants in the study.

The subscales of the SRQ-A showed good reliability for identified regulation (α = 0.80), intrinsic motivation (α = 0.74), introjected regulation (α = 0.70) and external regulation (α = 0.67). The Relative Autonomy Index (RAI) was calculated from these subscales for each participant, and based on the RAI scores, the participants were divided into two groups, "High" (N = 13) and "Low" (N = 17), indicating their self-regulation potential (Figure 6). An independent t-test between these two groups showed statistically significant differences in the pre-activity likeability of the peer agent (p = 0.049), intrinsic motivation (p = 0.003), external regulation (p = 0.048) and activity interest (p = 0.045). The perceived likeability of the peer agent was higher in the "High" self-regulation group (M = 4.10, SD = 0.47). The "High" self-regulation group was also associated with higher intrinsic motivation (M = 2.89, SD = 0.51), higher activity interest (M = 4.46, SD = 0.90) and lower external regulation (M = 2.10, SD = 0.67) compared with the "Low" self-regulation group (Figure 7). This suggests that the peer agent had a positive influence on the learners' regulation and facilitated higher engagement and motivation for the learning activity.
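The paper does not list the weights it used for the RAI. A commonly used SRQ-A weighting, assumed here, is RAI = 2 × intrinsic + identified − introjected − 2 × external, so that autonomous forms of regulation raise the index and controlled forms lower it. The sketch below also assumes, hypothetically, that the "High"/"Low" split was made at the sample mean, as was done for the NARS groups; the function names and toy values are illustrative only.

```python
from statistics import mean

def relative_autonomy_index(intrinsic, identified, introjected, external):
    """RAI from SRQ-A subscale means, using the assumed weights +2, +1, -1, -2."""
    return 2 * intrinsic + identified - introjected - 2 * external

def split_high_low(rai_scores):
    """Hypothetical split: scores above the sample mean are 'High', the rest 'Low'."""
    cutoff = mean(rai_scores)
    return ["High" if s > cutoff else "Low" for s in rai_scores]

# Toy subscale means (intrinsic, identified, introjected, external) for three learners.
learners = [(3.0, 3.5, 2.0, 1.5), (2.0, 2.5, 2.5, 3.0), (2.5, 3.0, 2.0, 2.0)]
scores = [relative_autonomy_index(*subscales) for subscales in learners]
print(scores)                  # [4.5, -2.0, 2.0]
print(split_high_low(scores))  # ['High', 'Low', 'High'] (cutoff is the mean, 1.5)
```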

A multiple linear regression analysis was performed with the RAI score as the outcome and the pre-activity likeability of the peer agent and activity interest as predictors. The model significantly predicted the RAI score (F(2, 27) = 8.456, p = .019, adjusted R² = 0.253), with both variables adding significantly to the prediction. Thus, the results support hypothesis H2, which states that the error-making behaviours of the peer agent can promote better regulation in learners.
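The regression above has an intercept and two predictors, so with n = 30 participants the F statistic has (2, 27) degrees of freedom. A minimal NumPy sketch of ordinary least squares reporting R², adjusted R² and the overall F statistic; the function name and toy data are hypothetical, and this is not the authors' analysis script.

```python
import numpy as np

def ols_two_predictors(x1, x2, y):
    """Fit y = b0 + b1*x1 + b2*x2 by least squares and report model fit."""
    x1, x2, y = (np.asarray(v, dtype=float) for v in (x1, x2, y))
    X = np.column_stack([np.ones_like(y), x1, x2])     # intercept + two predictors
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ coef
    n, k = len(y), 2                                    # k = number of predictors
    ss_res = float(residuals @ residuals)
    ss_tot = float(((y - y.mean()) ** 2).sum())
    r2 = 1.0 - ss_res / ss_tot
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)
    f_stat = (r2 / k) / ((1.0 - r2) / (n - k - 1))      # F with (k, n - k - 1) df
    return coef, r2, adj_r2, f_stat

# Toy data standing in for (peer likeability, activity interest) -> RAI,
# generated from y = 1 + 2*x1 + 3*x2 plus a small fixed perturbation.
x1 = [1, 2, 3, 4, 5, 6]
x2 = [2, 1, 4, 3, 6, 5]
y = [1 + 2 * a + 3 * b + e for a, b, e in zip(x1, x2, [0.1, 0, -0.1, 0, 0.1, -0.1])]
coef, r2, adj_r2, f_stat = ols_two_predictors(x1, x2, y)
print(np.round(coef, 2), round(r2, 4), round(adj_r2, 4), round(f_stat, 1))
```

A p-value for the F statistic can then be obtained with `scipy.stats.f.sf(f_stat, k, n - k - 1)`; a package such as `statsmodels` reports all of these quantities from a single OLS fit.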

Figure 7: Significant differences between the Higher and Lower Self-regulation groups of learners.

5.7 Learning Gain

Comparing the knowledge test scores before and after the activity, five participants improved their scores and two participants obtained lower scores after the learning interaction, while the remaining 23 participants answered all questions correctly in both the pre-activity and post-activity tests.
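The learning-gain tally above amounts to classifying each participant's pre/post knowledge-test scores as improved, declined or unchanged. A minimal sketch with a hypothetical function name and toy scores out of 3 (the knowledge test had 3 items).

```python
def gain_summary(pre, post):
    """Count participants whose knowledge-test score rose, fell, or stayed the same."""
    if len(pre) != len(post):
        raise ValueError("pre and post must come from the same participants")
    improved = sum(after > before for before, after in zip(pre, post))
    declined = sum(after < before for before, after in zip(pre, post))
    unchanged = len(pre) - improved - declined
    return improved, declined, unchanged

# Toy scores for five participants.
print(gain_summary([2, 3, 1, 3, 3], [3, 3, 3, 2, 3]))   # (2, 1, 2)
```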


5.8 Comparison with Previous Perceptive Study

Our first perceptive study examined the learner's perceptions of the agent roles, agent qualities, the learning task and the associated self-regulation behaviours emerging from the learning interaction. The second study differs from the first in the error-making behaviours of the peer agent, which were introduced to convey the role of peer and the associated competency level more clearly.

A comparison of the results from both studies reveals some interesting observations on regulation, agent perception and activity interest. We conducted a paired samples t-test on the perceived intelligence and likeability of both agents, activity interest and regulation factors between the participant groups of both studies, and found significant differences in the perceived intelligence of the peer agent (p = 0.013) and activity interest (p = 0.039). The perceived intelligence of the peer agent was significantly lower (M = 3.1, SD = 0.88) for the participants who interacted with the peer agent that made mistakes. Similarly, activity interest was significantly lower (M = 3.55, SD = 0.57) in the second study than in the study without the error-making episode. These results suggest that the peer agent's error-making behaviour helped convey the role of the virtual robot correctly by leading the learner to perceive it as less competent than the tutor, but that activity interest dropped when mistakes were present in the learning interaction.

6 DISCUSSION

The objective of this study was to understand how error-making behaviours by the peer agent would help the learner perceive the pedagogical roles in the interaction as intended. Regarding the impact of the learners' a-priori attitudes on agent perceptions, the perceived intelligence and likeability of both agents, as well as interest in the learning activity, were higher in the group of learners with positive a-priori attitudes towards virtual agents in general. Thus, familiarising learners with virtual agents can help ensure that the learning interaction is effective and engaging. The majority of the participants found the learning activity interesting.

Regarding hypothesis H1, we observed that the error-making behaviour of the peer agent had significant effects on the perception of agent qualities as well as the associated pedagogical roles. The perceived intelligence of the tutor agent improved after the activity, while that of the peer remained almost the same. This shows that the learners perceived the peer agent as less intelligent than the tutor after seeing the error-making instances during the learning activity. Hence, hypothesis H1a is supported by the study results. Concerning the perception of agent roles, 8 participants had wrong role perceptions at the start of the study, but after the learning interaction, 7 of them correctly associated the tutor role with the virtual agent and the peer role with the virtual robot agent. This supports our hypothesis H1b that the error-making behaviour of the peer agent would help improve role perception. We conclude that the difference in the perceived intelligence of the two agents complemented the learners' role perception, since the peer agent's behaviours were designed to convey a lower competence level than the tutor's. Thus, the study results support hypothesis H1, that error-making behaviour of the peer agent would promote correct perception of agent roles and associated qualities.

Regarding the self-regulation behaviour of the participants, learners with higher self-regulation scores gave the peer agent higher likeability ratings before the activity and also showed higher intrinsic motivation and interest in the learning activity. Hypothesis H2 expected the peer's error-making episode to promote better regulation behaviours in the learner. The results suggest that the RAI score, which is an indicator of the learner's self-regulation potential, is influenced by agent perceptions as well as activity interest. The likeability of the peer agent and activity interest proved to be significant predictors of the learner's regulation, and hence hypothesis H2 is supported by the study findings.

7 CONCLUSION

This perceptual study introduced error-making behaviours in the peer agent to promote a better perception of agent roles and better overall self-regulation of the learner in a multi-agent shared learning interaction. The study hypothesised that the error-making peer would be perceived as less competent than the tutor agent, thus conveying its pedagogical role clearly to the learner. The results confirmed the hypotheses and suggest that error-making behaviours by the peer agent are an effective way of manipulating learner perceptions and, in turn, regulation. In this study, the peer agent was observed to influence the learner more than the tutor did. The findings of this study and the comparison across both user studies show the effectiveness of the proposed learning task and multi-agent interaction in promoting self-regulation in the learner. Altogether, the insights on the perceptions and influence of agent roles and related regulation behaviours in the proposed interaction will serve as the basis of our further studies on distinct regulation strategies and their relevance in the various phases of self-regulated learning.

REFERENCES

Bartneck, C., Kulić, D., Croft, E., and Zoghbi, S. (2009). Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. International journal of social robotics, 1(1):71–81.

Baylor, A. L. and Kim, Y. (2005). Simulating instructional roles through pedagogical agents. International Journal of Artificial Intelligence in Education, 15(1):95.

Breazeal, C., Harris, P. L., DeSteno, D., Kory Westlund, J. M., Dickens, L., and Jeong, S. (2016). Young children treat robots as informants. Topics in cognitive science, 8(2):481–491.

Coppola, B. P. and Pontrello, J. K. (2014). Using errors to teach through a two-staged, structured review: Peer-reviewed quizzes and "what's wrong with me?". Journal of Chemical Education, 91(12):2148–2154.

Deci, E. L., Hodges, R., Pierson, L., and Tomassone, J. (1992). Autonomy and competence as motivational factors in students with learning disabilities and emotional handicaps. Journal of learning disabilities, 25(7):457–471.

Geiskkovitch, D. Y., Thiessen, R., Young, J. E., and Glenwright, M. R. (2019). What? that's not a chair!: How robot informational errors affect children's trust towards robots. In 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), pages 48–56. IEEE.

Järvelä, S., Järvenoja, H., Malmberg, J., and Hadwin, A. F. (2013). Exploring socially shared regulation in the context of collaboration. Journal of Cognitive Education and Psychology, 12(3):267–286.

Kim, Y., Baylor, A. L., Group, P., et al. (2006). Pedagogical agents as learning companions: The role of agent competency and type of interaction. Educational technology research and development, 54(3):223–243.

Kory-Westlund, J. M. and Breazeal, C. (2019). Assessing children's perceptions and acceptance of a social robot. In Proceedings of the 18th ACM International Conference on Interaction Design and Children, pages 38–50.

Nomura, T., Kanda, T., and Suzuki, T. (2006). Experimen-

tal investigation into influence of negative attitudes to-

ward robots on human–robot interaction. Ai & Soci-

ety, 20(2):138–150.

Ogan, A., Finkelstein, S., Mayfield, E., D’Adamo, C., Mat-

suda, N., and Cassell, J. (2012). ” oh dear stacy!” so-

cial interaction, elaboration, and learning with teach-

able agents. In Proceedings of the SIGCHI conference

on human factors in computing systems, pages 39–48.

Okita, S. Y. (2014). Learning from the folly of others:

Learning to self-correct by monitoring the reasoning

of virtual characters in a computer-supported mathe-

matics learning environment. Computers & Educa-

tion, 71:257–278.

Panadero, E. (2017). A review of self-regulated learning:

Six models and four directions for research. Frontiers

in psychology, 8:422.

Pelachaud, C. (2015). Greta: an interactive expressive

embodied conversational agent. In Proceedings of

the 2015 International Conference on Autonomous

Agents and Multiagent Systems, pages 5–5.

Ryan, R. M. and Connell, J. P. (1989). Perceived locus of

causality and internalization: examining reasons for

acting in two domains. Journal of personality and so-

cial psychology, 57(5):749.

Ryan, R. M. and Deci, E. L. (2000). Self-determination the-

ory and the facilitation of intrinsic motivation, social

development, and well-being. American psychologist,

55(1):68.

Stone, B. A. and Lester, J. C. (1996). Dynamically sequenc-

ing an animated pedagogical agent. In AAAI/IAAI, Vol.

1, pages 424–431.

Weiss, A., Scherndl, T., Buchner, R., and Tscheligi, M.

(2010). A robot as persuasive social actor a field

trial on child–robot interaction. In Proceedings of

the 2nd international symposium on new frontiers in

human–robot interaction—a symposium at the AISB

2010 convention, pages 136–142.

Yadollahi, E., Johal, W., Paiva, A., and Dillenbourg, P.

(2018). When deictic gestures in a robot can harm

child-robot collaboration. In Proceedings of the 17th

ACM Conference on Interaction Design and Children,

pages 195–206.

ICAART 2022 - 14th International Conference on Agents and Artificial Intelligence
