An Efficient Contact Lens Spoofing Classification

Guilherme Silva^(1,†,a), Pedro Silva^(1,†,b), Mariana Mota^(2,c), Eduardo Luz^(1,d) and Gladston Moreira^(1,e)

1 Computing Department, Federal University of Ouro Preto (UFOP), MG, Brazil

2 Department of Control and Automation Engineering, UFOP, MG, Brazil

Keywords: Spoofing, CNN, EfficientNets.

Abstract:

Spoofing detection, which differentiates illegitimate users from genuine ones, is a major problem for biometric systems, and such techniques could benefit industry. Nowadays, iris recognition systems are very popular, since the iris is more precise for person authentication than fingerprints and other biometric modalities. Nevertheless, iris recognition systems are vulnerable to spoofing via textured cosmetic contact lenses, and techniques to prevent such attacks are imperative for correct system behavior and could be embedded in these systems. In this work, attention is centered on a three-class iris spoofing detection problem: textured/colored contact lenses, soft contact lenses, and no lenses. Our approach adapts the Inverted Bottleneck Convolution blocks from the EfficientNets to build deep image representations. Experiments are conducted in comparison with the literature on two public iris image databases for contact lens detection: Notre Dame and IIIT-Delhi. With transfer learning, we surpass previous approaches in five of the ten evaluated scenarios across both databases, with very promising results.

1 INTRODUCTION

Nowadays, the term biometry (bio, life, plus metry, measure: the measure of life) has been associated with the measurement of physical, physiological, or behavioral features of a living being. Biometric-based person identification systems have developed rapidly over the last two decades. They are commonly applied not only to distinguish but also to identify individuals based on their unique physical and biological characteristics (Prabhakar et al., 2003).

What makes biometric measurements reliable is the premise that each individual is unique, with distinctive physical and behavioral characteristics (voice, gait, etc.). Today, various parts of the human body can be used, such as the fingerprint, face, iris, and palm.

Among all body parts, the iris is considered the most promising, reliable, and accurate biometric trait, since it provides a rich texture that allows high discrimination among subjects and is unique to each individual (Flom and Safir, 1987; Daugman, 1993).

a https://orcid.org/0000-0002-5525-6121

b https://orcid.org/0000-0002-4964-5710

c https://orcid.org/0000-0001-8558-5957

d https://orcid.org/0000-0001-5249-1559

e https://orcid.org/0000-0001-7747-5926

† These authors contributed equally to this work.

Daugman (1993) proposed the first functional iris recognition method, whereas Flom and Safir (1987) presented the first patent using iris texture as a biometric modality. Since then, several iris recognition approaches have been proposed in the literature (Bowyer et al., 2008; Song et al., 2014), and the modality has become popular in commercial biometric systems. Hence, the iris modality has become a target for attacks (Sequeira et al., 2014b; Yadav et al., 2014; Bowyer and Doyle, 2014) due to its use in forensic systems (Yang et al., 2020).

There are several ways to attack an iris biometric system (Agarwal and Jalal, 2021; Morales et al., 2021), for instance, using printed iris images (Sequeira et al., 2014b) or contact lenses (Yadav et al., 2014; Bowyer and Doyle, 2014). These sorts of attacks are usually referred to in the literature as spoofing attacks (Ming et al., 2020), or, more specifically, as iris spoofing. In this context, Daugman (2003) presented a method for detecting contact lens patterns, and other works have been proposed to deal with this problem (Bowyer and Doyle, 2014; Menotti et al., 2015; Raghavendra and Busch, 2015). However, some authors use different definitions of iris spoofing detection, in which the terms liveness detection and counterfeit detection are used with different meanings and, in some cases, interchangeably (Sun et al., 2014). Works such as (Sequeira et al., 2014a; Menotti et al., 2015; Galbally et al., 2014) classify an iris image as real/live or fake, where a fake image is not a live one (e.g., a printed image). In addition, some works consider counterfeit irises with printed color contact lenses as fake images and iris images with soft/clear lenses or no lenses as real images (Wei et al., 2008; Baker et al., 2010; Zhang et al., 2010; Kohli et al., 2013; Doyle et al., 2013; Komulainen et al., 2014).

Silva, G., Silva, P., Mota, M., Luz, E. and Moreira, G.
An Efficient Contact Lens Spoofing Classification.
DOI: 10.5220/0010868500003179
In Proceedings of the 24th International Conference on Enterprise Information Systems (ICEIS 2022) - Volume 1, pages 441-448
ISBN: 978-989-758-569-2; ISSN: 2184-4992
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Several works in the literature have emerged to solve the contact lens detection issue (Zin et al., 2021), and several of them have reported accuracies over 98% (Wei et al., 2008; He et al., 2009; Zhang et al., 2010). However, since contact lens technology is under constant development, robust detection has become a difficult task (Bowyer and Doyle, 2014). In addition, some results in the literature are favored by their methodology, since they use datasets containing contact lenses from a single manufacturer in both training and test data (Bowyer and Doyle, 2014; Wei et al., 2008). According to Doyle et al. (2013), in a more realistic scenario, methods whose accuracy is close to 100% can drop below 60% when a cross-sensor evaluation is performed.

In that sense, the datasets presented in (Yadav et al., 2014) introduce a more complex three-class detection problem, in which iris images may appear with textured (colored) contact lenses, with soft (prescription) contact lenses, or without lenses. Addressing that, Raghavendra et al. (2017) proposed an approach using a Deep Convolutional Neural Network (D-CNN) for contact lens detection, based on a new fifteen-layer architecture called ContlensNet. The approach is tested in three scenarios: intra-sensor (training and testing with data from the same sensor), inter-sensor (training and testing with data from different sensors), and multi-sensor (training and testing with data from multiple iris sensors combined). The authors reported Correct Classification Rates (CCR) that surpassed the state of the art at that time on the two well-known iris spoofing datasets (both used in this work).

Choudhary et al. (2019) introduced a Densely Connected Contact Lens Detection Network (DCLNet) to detect contact lenses in iris images captured by heterogeneous sensors. The authors customized DenseNet121 and fine-tuned it with Near Infra-Red (NIR) iris images. The CCRs they report on the IIIT-D Cogent dataset are 99.10% and 92.10% for the intra-sensor and multi-sensor scenarios, respectively.

Like the work presented in (Silva et al., 2015), this work addresses a three-class problem, exploring the Mobile Inverted Bottleneck Convolution (MBConv) blocks, which were used to report state-of-the-art results on the ImageNet dataset (Tan and Le, 2019). Our approach is compared against three works in the literature (Yadav et al., 2014; Silva et al., 2015; Raghavendra et al., 2017) in three evaluation scenarios using two public datasets: the 2013 Notre Dame Contact Lens Detection (NDCL) dataset and the IIIT-Delhi Contact Lens Iris (IIIT-D) dataset. The proposed approach outperforms the current state-of-the-art approaches in five out of ten scenarios and presents comparable results in the remaining five.

The paper is organized as follows. Section 2 describes the datasets used in our experiments. The methodology proposed to handle spoofing detection is detailed in Section 3. Experiments and results are described and discussed in Section 4. Finally, conclusions and directions for future work are outlined in Section 5.

2 DATASETS

Yadav et al. (2014) created a three-class dataset to evaluate an approach for iris contact lens detection. In this section, the characteristics of the datasets used in our experiments are summarized in Table 1 and in the following subsections. Both datasets, NDCL and IIIT-D, are publicly available upon request, and all images are grayscale with 640 × 480 pixels.

2.1 Notre Dame Contact Lens Dataset

The 2013 Notre Dame Contact Lens Detection (ND-

CLD’13 or simply NDCL) is a dataset where all im-

ages were acquired under near-IR illumination using

two sensors, LG4000 and IrisGuard AD100. The

dataset is divided into two subsets according to this

two sensors: LG4000 with 3000 images for training

and 1200 for verification; AD100 with 600 for train-

ing and 300, verification. This dataset contains a total

of 5100 images (Doyle and Kevin, 2014), all of them

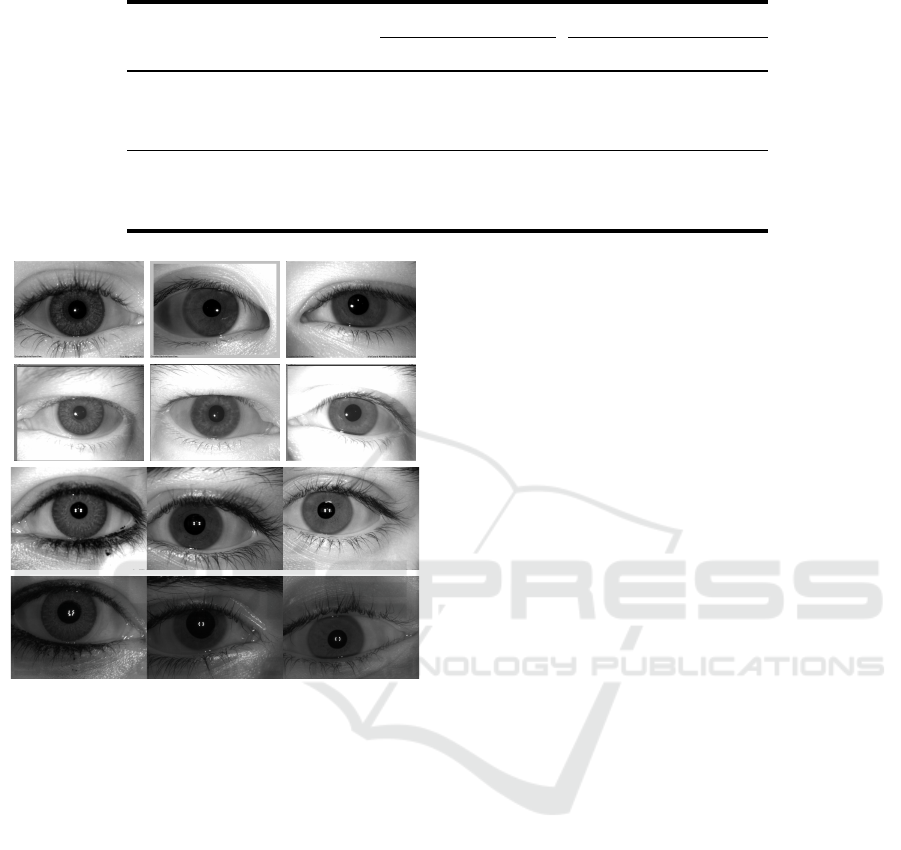

are 640×480 pixels. Some samples of the NDCL and

its cameras and classes can be found in Fig. 1.

The images are equally divided among three classes: (1) wearing cosmetic contact lenses, (2) wearing clear soft contact lenses, and (3) wearing no contact lenses. Each image is annotated with the following information: the ID of the subject it belongs to, the eye (left or right), the subject's gender and race, the type of contact lenses used, and the coordinates of the pupil and iris. More specific details of this dataset can be found in (Doyle and Kevin, 2014, Section II.B).

Table 1: Main features of the datasets considered herein and introduced in (Yadav et al., 2014). Text. = textured lenses, Soft = soft lenses, No = no lenses.

| Dataset | Sensor | Train Text. | Train Soft | Train No | Train Total | Test/Verif. Text. | Test/Verif. Soft | Test/Verif. No | Test/Verif. Total |
|---|---|---|---|---|---|---|---|---|---|
| NDCL | IrisGuard AD100 | 200 | 200 | 200 | 600 | 100 | 100 | 100 | 300 |
| NDCL | LG4000 iris camera | 1000 | 1000 | 1000 | 3000 | 400 | 400 | 400 | 1200 |
| NDCL | Multi-camera | 1200 | 1200 | 1200 | 3600 | 500 | 500 | 500 | 1500 |
| IIIT-D | Cogent Scanner | 589 | 569 | 563 | 1721 | 613 | 574 | 600 | 1787 |
| IIIT-D | Vista Scanner | 535 | 500 | 500 | 1535 | 530 | 510 | 500 | 1540 |
| IIIT-D | Multi-scanner | 1124 | 1069 | 1063 | 3256 | 1143 | 1084 | 1100 | 3327 |

Figure 1: Samples of images in the 2013 Notre Dame Contact Lens Detection (NDCL) dataset and the IIIT-Delhi Contact Lens Iris (IIIT-D) dataset. The first row presents samples from the NDCL IrisGuard AD100, the second from the NDCL LG4000 iris camera, the third from the IIIT-D Cogent Scanner, and the last from the IIIT-D Vista Scanner. The first column presents samples with textured/cosmetic contact lenses, the second column samples with soft/clear/prescription contact lenses, and the third column samples with no contact lenses.

2.2 IIIT-D Contact Lens Iris Dataset

The Indraprastha Institute of Information Technology (IIIT)-Delhi Contact Lens Iris (IIIT-D) dataset does not provide iris location information. Therefore, for this work, we manually annotated all images to assess the impact of segmentation. The dataset contains a total of 6583 iris images from 101 subjects. For each individual: (1) both the left and right eyes were captured, yielding 202 iris classes (distinct irises) in total; (2) different textured lenses (colors and manufacturers) were used; and (3) iris images were captured with soft lenses, with textured lenses, and without lenses, which are the three classes considered here. Images from this dataset are illustrated in Fig. 1. More specific details of this dataset can be found in (Doyle and Kevin, 2014, Section II.A).

3 PROPOSED APPROACH

The methodology of this work for iris contact lens detection is based on deep representations built with state-of-the-art inverted bottleneck convolutional blocks (Sandler et al., 2018) and on transfer learning (Goodfellow et al., 2016).

We adapt the EfficientNet architecture (Tan and Le, 2019) to the current problem, preserving some layers pre-trained on ImageNet. This section presents a brief explanation of EfficientNets as well as the proposed architecture. The evaluation protocol is also detailed.

3.1 EfficientNet and Proposed Architecture

EfficientNet is a family of architectures based on Mobile Inverted Bottleneck Convolution (MBConv) blocks (Sandler et al., 2018). They were first presented in (Tan and Le, 2019), where the authors proposed uniformly scaling the network's width, depth, and resolution using a compound coefficient φ that determines the dimensions of the model as follows:

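$$
\begin{aligned}
\text{depth} &= \alpha^{\phi} && (1)\\
\text{width} &= \beta^{\phi} && (2)\\
\text{resolution} &= \gamma^{\phi} && (3)\\
\text{s.t.}\quad & \alpha \cdot \beta^{2} \cdot \gamma^{2} \approx 2, \quad \alpha \ge 1,\ \beta \ge 1,\ \gamma \ge 1
\end{aligned}
$$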


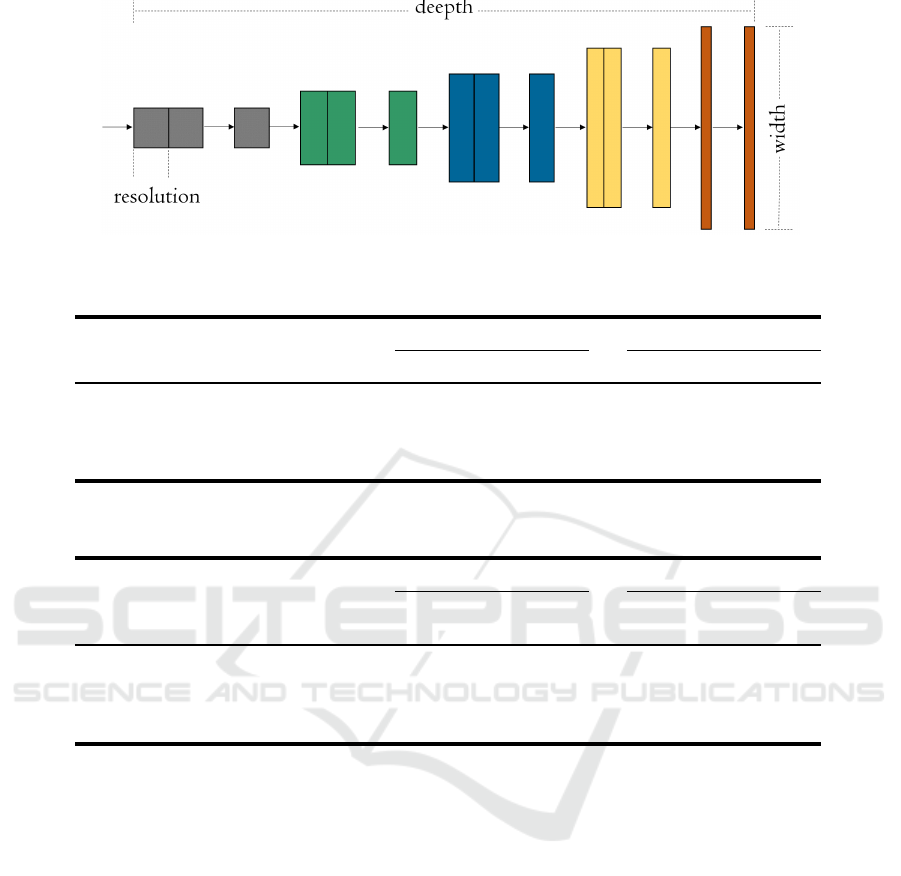

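where α, β, and γ are constants obtained with a grid search performed in (Tan and Le, 2019). Because the FLOPS of a convolutional network grow linearly with depth and quadratically with width and resolution, the constraint α · β² · γ² ≈ 2 makes the total FLOPS increase by approximately 2^φ (Tan and Le, 2019). Figure 2 illustrates the effect of the proposed scaling on the network dimensions.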
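To make the compound-scaling rule concrete, the Python sketch below evaluates Eqs. (1)-(3) for a few values of φ. It assumes the base constants α = 1.2, β = 1.1, and γ = 1.15 that Tan and Le (2019) report for scaling EfficientNet-B0, and a 224-pixel baseline resolution (B0's input size); `compound_scaling` and `flops_factor` are our own illustrative names. The released B1 to B7 models use their own rounded coefficients (B3, for instance, takes 300 × 300 inputs), so these outputs should be read as illustrative multipliers, not the exact B3 configuration.

```python
# Base-network constants assumed from the EfficientNet-B0 grid search
# reported by Tan and Le (2019); they are not restated in this paper.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scaling(phi: float, base_resolution: int = 224):
    """Return (depth multiplier, width multiplier, input resolution) for phi.

    depth = alpha**phi, width = beta**phi, resolution = gamma**phi, with the
    resolution multiplier applied to an assumed 224-pixel baseline.
    """
    depth = ALPHA ** phi
    width = BETA ** phi
    resolution = round(base_resolution * GAMMA ** phi)
    return depth, width, resolution


def flops_factor() -> float:
    """Per-unit-phi FLOPS growth: depth is linear, width and resolution quadratic."""
    return ALPHA * BETA ** 2 * GAMMA ** 2


if __name__ == "__main__":
    print(f"alpha * beta^2 * gamma^2 = {flops_factor():.3f}")  # roughly 2
    for phi in range(4):
        d, w, r = compound_scaling(phi)
        print(f"phi={phi}: depth x{d:.3f}, width x{w:.3f}, resolution {r}px")
```

The constraint only fixes the product close to 2, so each increment of φ roughly doubles the compute budget while the three dimensions grow together.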

EfficientNet is used in this work because of its superior accuracy compared to previous CNNs on ImageNet (Russakovsky et al., 2015); for example, EfficientNet-B7 is 6.1x faster at inference and 8.4x smaller than the best previous CNN (Tan and Le, 2019). It is worth mentioning that Tan and Le (2019) proposed a CNN family of eight architectures (B0 to B7) obtained with different φ values.

In this work, we empirically chose the EfficientNet-B3 model, as it demonstrated the best trade-off between CCR and network size. More details of the architecture can be found in (Tan and Le, 2019).

The EfficientNet models were originally proposed for ImageNet (Russakovsky et al., 2015). However, we propose their use in a new classification problem involving iris images and contact lenses. To better suit our specific problem, we included extra blocks at the end of the original architecture: fully connected (FC) layers adapt the final classification to the new classes, and further layers provide activation, optimization, and regularization. Among these are Dropout, a technique widely used in neural networks to avoid overfitting by randomly dropping units during training (Srivastava et al., 2014), and batch normalization (BN), which normalizes the values in intermediate layers to zero mean and unit variance before applying a learned scale and shift (Bjorck et al., 2018). The activation used in these blocks is the Swish activation function (Ramachandran et al., 2017). Table 2 summarizes the resulting model, named EfficientNet B3 Lens Detection (EB3LD).
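To illustrate the operations named above, the following plain-Python sketch runs one FC, BN, Swish, Dropout sequence (as in stage 11 of Table 2) on a toy vector. It assumes inference-mode batch normalization with given running statistics, Swish defined as x · sigmoid(x) (Ramachandran et al., 2017), and "inverted" dropout, which rescales kept units by 1/(1 − rate) during training and is the identity at inference. All weights, statistics, and function names are hypothetical and unrelated to the trained EB3LD.

```python
import math
import random
from typing import List, Optional, Sequence

Vector = List[float]


def dense(x: Sequence[float], weights: Sequence[Sequence[float]], bias: Sequence[float]) -> Vector:
    """Fully connected layer: y[j] = sum_i x[i] * weights[i][j] + bias[j]."""
    return [sum(xi * row[j] for xi, row in zip(x, weights)) + b for j, b in enumerate(bias)]


def batch_norm_inference(x, mean, var, gamma, beta, eps: float = 1e-3) -> Vector:
    """Inference-mode BN: normalize with running statistics, then scale and shift."""
    return [g * (xi - m) / math.sqrt(v + eps) + b
            for xi, m, v, g, b in zip(x, mean, var, gamma, beta)]


def _sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def swish(x: Sequence[float]) -> Vector:
    """Swish activation: x * sigmoid(x)."""
    return [xi * _sigmoid(xi) for xi in x]


def inverted_dropout(x: Sequence[float], rate: float, training: bool,
                     rng: Optional[random.Random] = None) -> Vector:
    """Training: zero each unit with probability `rate`, scale survivors by 1/(1 - rate).
    Inference: return the input unchanged."""
    if not training or rate == 0.0:
        return list(x)
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [xi / keep if rng.random() < keep else 0.0 for xi in x]


def head_block(x, weights, bias, bn_stats, rate: float = 0.5) -> Vector:
    """Hypothetical FC -> BN -> Swish -> Dropout block evaluated at inference time.

    bn_stats is (running mean, running variance, gamma, beta).
    """
    h = dense(x, weights, bias)
    h = batch_norm_inference(h, *bn_stats)
    h = swish(h)
    return inverted_dropout(h, rate, training=False)


if __name__ == "__main__":
    x = [0.5, -1.0, 2.0]
    w = [[0.1, -0.2], [0.3, 0.4], [-0.5, 0.6]]  # hypothetical 3 -> 2 weights
    b = [0.0, 0.1]
    stats = ([0.0, 0.0], [1.0, 1.0], [1.0, 1.0], [0.0, 0.0])
    print(head_block(x, w, b, stats))
```

Because the dropout is "inverted", no rescaling is needed at inference, which is why the block reduces to FC, BN, and Swish once training is over.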

Table 2: Proposed architecture, considering the EfficientNet-B3 model as the base model (NC = number of classes).

| Stage | Operator | Resolution | # channels | # layers |
|---|---|---|---|---|
| 1-9 | EfficientNet | 300 × 300 | 3 | 1 |
| 10 | BN/Dropout | 10 × 10 | 1536 | 1 |
| 11 | FC/BN/Swish/Dropout | 1 | 512 | 1 |
| 12 | FC/BN/Swish | 1 | 512 | 1 |
| 13 | FC/Softmax | 1 | NC | 1 |

The new layers are added after the average pooling layer ("avg pool") of the original EfficientNet-B3.
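The 10 × 10 × 1536 feature map in Table 2 and the size of the new head can be checked with simple arithmetic. The sketch below assumes that EfficientNet-B3 has five stride-2 stages (the stem convolution plus four MBConv stages) with "same"-style padding, so each stage maps a side of n pixels to ceil(n/2). It also counts Keras-style parameters for stages 10 to 13 applied to the pooled 1536-dimensional vector, assuming dense layers with bias and four values per channel for batch normalization (gamma and beta trainable, moving mean and variance not), with NC = 3. These per-layer counts are derived here for illustration only.

```python
import math


def feature_map_side(input_side: int = 300, num_stride2_stages: int = 5) -> int:
    """Side length after repeated stride-2 stages with 'same'-style padding (assumed)."""
    side = input_side
    for _ in range(num_stride2_stages):
        side = math.ceil(side / 2)
    return side


def dense_params(n_in: int, n_out: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def batch_norm_params(channels: int) -> int:
    """gamma and beta (trainable) plus moving mean and variance (non-trainable)."""
    return 4 * channels


def head_params(features: int = 1536, hidden: int = 512, num_classes: int = 3) -> dict:
    """Parameter count of stages 10-13 in Table 2 (Dropout, Swish, and Softmax have none)."""
    counts = {
        "stage10_bn": batch_norm_params(features),
        "stage11_fc": dense_params(features, hidden),
        "stage11_bn": batch_norm_params(hidden),
        "stage12_fc": dense_params(hidden, hidden),
        "stage12_bn": batch_norm_params(hidden),
        "stage13_fc": dense_params(hidden, num_classes),
    }
    counts["total"] = sum(counts.values())  # computed before the "total" key exists
    return counts


if __name__ == "__main__":
    print(feature_map_side())  # 300 -> 150 -> 75 -> 38 -> 19 -> 10
    for name, n in head_params().items():
        print(f"{name}: {n:,}")
```

Under these assumptions, almost all of the roughly one million new parameters sit in the first FC layer (1536 × 512 weights), which is where dropout regularization matters most.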

3.2 Pre-processing and Transfer Learning

Convolutional Neural Networks can extract relevant features from input images, so in many cases they do not require heavy pre-processing. Therefore, the only pre-processing applied to our data was cropping the iris from each image with a 10% margin around it. The NDCL dataset already includes annotations of the iris coordinates, whereas the IIIT-D images had to be annotated manually before cropping.
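A minimal sketch of the cropping step follows. It assumes the iris annotation is a circle given by its center (cx, cy) and radius, and it interprets the 10% margin as 10% of the iris diameter added on every side, clamped to the 640 × 480 image; both the annotation format and this reading of the margin are assumptions, and `iris_crop_box` and `crop` are hypothetical helpers operating on images stored as lists of pixel rows.

```python
from typing import List, Tuple

IMAGE_WIDTH, IMAGE_HEIGHT = 640, 480  # both datasets use 640 x 480 grayscale images


def iris_crop_box(cx: float, cy: float, radius: float, margin: float = 0.10,
                  width: int = IMAGE_WIDTH,
                  height: int = IMAGE_HEIGHT) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) bounds of the iris crop.

    The square around the iris circle is enlarged by `margin` times the iris
    diameter on each side (an assumed interpretation of the 10% margin) and
    clamped to the image; `right` and `bottom` are exclusive bounds.
    """
    half_side = radius + margin * 2 * radius
    left = max(0, int(round(cx - half_side)))
    top = max(0, int(round(cy - half_side)))
    right = min(width, int(round(cx + half_side)))
    bottom = min(height, int(round(cy + half_side)))
    return left, top, right, bottom


def crop(image: List[List[int]], box: Tuple[int, int, int, int]) -> List[List[int]]:
    """Crop a row-major grayscale image to the given box."""
    left, top, right, bottom = box
    return [row[left:right] for row in image[top:bottom]]


if __name__ == "__main__":
    # Hypothetical annotation: iris centered in the frame with a 100-pixel radius.
    print(iris_crop_box(320, 240, 100))
```

Clamping keeps crops valid for irises near the image border, at the cost of an asymmetric margin in those cases.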

To improve the model's performance without the need to generate new samples, we chose to use transfer learning. In transfer learning, the weights learned in one problem are used as the starting point for fine-tuning on another (Pan and Yang, 2010). This technique is based on the idea that the initial layers extract generic features from the input images; thus, weights learned from a large dataset can be reused as a starting point for training a new network. It is useful when training data is scarce and also speeds up training convergence (Pan and Yang, 2010). Once the pre-trained weights were loaded, the network was trained with the new classification layers and blocks specified in Table 2.

3.3 Evaluation

We evaluate the performance of our system in three scenarios: intra-sensor, inter-sensor, and multi-sensor. In the first case, we trained the proposed model with samples from a single sensor and tested it with different samples from the same sensor and database. Thus, we trained and tested the model with the LG4000 sensor from the NDCL database and repeated the process for the IrisGuard AD100 sensor. We also trained and tested the model with the IIIT-D Cogent Scanner and Vista Scanner separately.

The second scenario is the inter-sensor scenario, in which we used one sensor for training and a different one for testing, both from the same database. Thus, we trained with LG4000 and tested with AD100, and vice versa, and performed the analogous experiment with the IIIT-D sensors. The objective here is to evaluate a scenario closer to real applications, where the system is not always used with the same sensor that captured the training samples.

Finally, we investigated the impact of combining samples captured with different sensors for training, which characterizes the multi-sensor scenario. For this, we trained the network with images obtained with both NDCL sensors and tested it on both as well, never using the same image for training and testing. Likewise, we trained the network with the two IIIT-D sensors and evaluated the model with samples from both.
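The three protocols can be enumerated programmatically. The sketch below lists every (training sensors, test sensors) pair described above and shows that they add up to the ten scenarios reported in Tables 3 to 5: four intra-sensor, four inter-sensor, and two multi-sensor. The sensor labels follow Table 1; the function name and tuple layout are our own.

```python
from itertools import permutations
from typing import Dict, List, Tuple

SENSORS: Dict[str, List[str]] = {
    "NDCL": ["AD100", "LG4000"],
    "IIIT-D": ["Cogent", "Vista"],
}

Scenario = Tuple[str, str, Tuple[str, ...], Tuple[str, ...]]


def evaluation_scenarios() -> List[Scenario]:
    """Return (protocol, dataset, training sensors, test sensors) tuples."""
    scenarios: List[Scenario] = []
    for dataset, sensors in SENSORS.items():
        # Intra-sensor: disjoint training and test images from the same sensor.
        for s in sensors:
            scenarios.append(("intra", dataset, (s,), (s,)))
        # Inter-sensor: train on one sensor, test on the other, in both directions.
        for train, test in permutations(sensors, 2):
            scenarios.append(("inter", dataset, (train,), (test,)))
        # Multi-sensor: pool the training images of both sensors and test on both.
        scenarios.append(("multi", dataset, tuple(sensors), tuple(sensors)))
    return scenarios


if __name__ == "__main__":
    for scenario in evaluation_scenarios():
        print(scenario)
```

Inter-sensor evaluation is ordered (training on AD100 and testing on LG4000 differs from the reverse), which is why `permutations` rather than `combinations` is used.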

The metric used to evaluate our approach was the correct classification rate (CCR) (Silva et al., 2015), i.e., the percentage of test images assigned to their correct class.
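For reference, the sketch below computes CCR from lists of true and predicted labels and, equivalently, from a confusion matrix as the diagonal over the total. The class names are the three used in this work; the function names and example labels are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple

CLASSES = ("textured", "soft", "no_lens")


def ccr(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Correct classification rate (%) = correct predictions / total * 100."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label sequences must be non-empty and of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return 100.0 * correct / len(y_true)


def confusion_matrix(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[Tuple[str, str], int]:
    """Count (true class, predicted class) pairs."""
    return Counter(zip(y_true, y_pred))


def ccr_from_confusion(cm: Dict[Tuple[str, str], int]) -> float:
    """CCR as the diagonal (trace) of the confusion matrix over its total."""
    diagonal = sum(cm.get((c, c), 0) for c in CLASSES)
    return 100.0 * diagonal / sum(cm.values())


if __name__ == "__main__":
    truth = ["textured", "soft", "no_lens", "soft", "no_lens", "textured"]
    preds = ["textured", "no_lens", "no_lens", "soft", "soft", "textured"]
    print(ccr(truth, preds))  # 4 of 6 correct
    print(ccr_from_confusion(confusion_matrix(truth, preds)))
```

Keeping the confusion matrix is useful in this three-class setting, since it shows whether errors come from confusing soft lenses with no lenses, which CCR alone does not reveal.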


Figure 2: Compound scaling method and the effect of fixed ratio on the three dimensions. (Adapted from (Tan and Le, 2019)).

Table 3: CCR (%) results for the intra-sensor scenario on the NDCL and IIIT-D databases.

| Methods | NDCL AD100 | NDCL LG4000 | IIIT-D Cogent | IIIT-D Vista |
|---|---|---|---|---|
| MLBP (Yadav et al., 2014) | 77.67 | 80.04 | 73.01 | 80.04 |
| CLDnet (Silva et al., 2015) | 78.33 | 86.00 | 69.05 | 72.08 |
| ContlensNet (Raghavendra et al., 2017) | 95.00 | 96.91 | 86.73 | 87.33 |
| EB3LD | 89.67 | 94.33 | 93.11 | 96.89 |

Table 4: CCR (%) results for the inter-sensor scenario on the NDCL and IIIT-D databases. In each column, the first sensor is used for training and the second for testing.

| Methods | NDCL AD100 → LG4000 | NDCL LG4000 → AD100 | IIIT-D Cogent → Vista | IIIT-D Vista → Cogent |
|---|---|---|---|---|
| MLBP (Yadav et al., 2014) | 60.08 | 61.03 | 77.79 | 65.29 |
| CLDnet (Silva et al., 2015) | 78.00 | 75.33 | 45.54 | 43.08 |
| ContlensNet (Raghavendra et al., 2017) | 90.45 | 88.00 | 84.80 | 94.80 |
| EB3LD | 78.83 | 91.00 | 82.85 | 70.71 |

4 EXPERIMENTS AND RESULTS

EB3LD is implemented using the Keras/TensorFlow framework. The model requires all images to be resized to 300 × 300 pixels with 3 channels (RGB). Since our datasets are grayscale, the grayscale image is replicated across the three channels. The CNN models are trained on a GeForce Titan X GPU with 12 GB, an Intel(R) Core(TM) i9-10900 CPU @ 2.80GHz, and 128 GB of RAM.
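A dependency-free sketch of this input preparation is given below: the grayscale crop is resized to 300 × 300 and its single channel is copied into three identical RGB channels. Nearest-neighbour interpolation is our simplifying assumption (the paper does not state which resizing method was used), in practice an image library would perform this step, and the function names are hypothetical.

```python
from typing import List

Gray = List[List[int]]        # rows of 8-bit intensities
RGB = List[List[List[int]]]   # rows of [r, g, b] pixels


def resize_nearest(image: Gray, out_h: int = 300, out_w: int = 300) -> Gray:
    """Nearest-neighbour resize of a row-major grayscale image (assumed method)."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[(y * in_h) // out_h][(x * in_w) // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def gray_to_rgb(image: Gray) -> RGB:
    """Replicate each gray value into three channels to match the RGB input."""
    return [[[v, v, v] for v in row] for row in image]


def prepare_input(iris_crop: Gray, size: int = 300) -> RGB:
    """Resize the iris crop to size x size and replicate it to 3 channels."""
    return gray_to_rgb(resize_nearest(iris_crop, size, size))


if __name__ == "__main__":
    tiny = [[0, 255], [128, 64]]  # hypothetical 2 x 2 crop
    for row in prepare_input(tiny, size=4):
        print(row)
```

Replicating the channel adds no information, but it lets the ImageNet-pretrained first convolution be reused unchanged.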

The EB3LD model is trained for 20 epochs using the Adam optimizer with a mini-batch size of 20 and the categorical cross-entropy loss. The training data is shuffled, and 10% of it is used as validation data. The initial learning rate is set to 10⁻³, and a reduce-on-plateau callback multiplies the learning rate by 0.5 whenever the validation accuracy stops improving for 2 epochs (patience of 2). The ImageNet weights are used for transfer learning.
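The learning-rate policy described above can be simulated in a few lines. This is a simplified re-implementation, not Keras's `ReduceLROnPlateau` itself: it ignores options such as `min_delta`, `cooldown`, and `min_lr`, and counts every epoch without a strict improvement in validation accuracy as a plateau epoch. The function name and the validation accuracies in the example are hypothetical.

```python
from typing import List, Sequence


def plateau_schedule(val_accuracy: Sequence[float], initial_lr: float = 1e-3,
                     factor: float = 0.5, patience: int = 2) -> List[float]:
    """Return the learning rate used in each epoch (simplified reduce-on-plateau).

    After every epoch, the validation accuracy is compared with the best value
    seen so far; after `patience` consecutive epochs without improvement, the
    learning rate is multiplied by `factor` and the counter restarts.
    """
    best = float("-inf")
    wait = 0
    lr = initial_lr
    history = []
    for acc in val_accuracy:
        history.append(lr)          # rate used while training this epoch
        if acc > best:
            best, wait = acc, 0
        else:
            wait += 1
            if wait >= patience:
                lr *= factor        # takes effect from the next epoch
                wait = 0
    return history


if __name__ == "__main__":
    accs = [0.80, 0.85, 0.85, 0.84, 0.90, 0.90, 0.90, 0.89]  # hypothetical
    for epoch, lr in enumerate(plateau_schedule(accs), start=1):
        print(epoch, lr)
```

With a patience of 2 over only 20 epochs, the rate can be halved several times, so the schedule behaves like a data-driven step decay.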

The results obtained are compared against the literature (Yadav et al., 2014; Silva et al., 2015; Raghavendra et al., 2017). Tables 3, 4, and 5 present the CCRs for the contact lens detection problem in the intra-sensor, inter-sensor, and multi-sensor scenarios, respectively. These results are analyzed as follows.

4.1 Intra-sensor Evaluation

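According to Table 3, the proposed EB3LD approach outperforms the literature on both IIIT-D sensors, where the margin over the other methods is substantial (93.11% vs. 86.73% on Cogent and 96.89% vs. 87.33% on Vista, relative to the best competitor, ContlensNet).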

The worst performance is observed for the AD100 sensor of the NDCL dataset, which has the smallest training set (only 600 images); on this sensor, EB3LD also shows its largest intra-sensor gap with respect to the results reported by Raghavendra et al. (2017) (89.67% vs. 95.00%). A comparable performance is observed on the LG4000 (NDCL) sensor (94.33% vs. 96.91%). However, the approach proposed by Raghavendra et al. (2017) requires more pre-processing (segmentation and normalization), and each image has to be processed 32 times by the network. In contrast, the proposed approach requires a single feed-forward pass through the network.

Table 5: CCR (%) results for the multi-sensor scenario on the NDCL and IIIT-D databases.

| Methods | NDCL | IIIT-D |
|---|---|---|
| MLBP (Yadav et al., 2014) | 73.20 | 72.96 |
| CLDnet (Silva et al., 2015) | 82.80 | 69.28 |
| ContlensNet (Raghavendra et al., 2017) | 94.65 | 92.60 |
| EB3LD | 94.73 | 94.73 |

4.2 Inter-sensor Evaluation

According to Table 4, the proposed approach outperformed the literature results for the NDCL database when training with images from the LG4000 sensor. Overall, the transfer learning approach was less effective in the inter-sensor scenario. Although the EB3LD method did not surpass the literature in the IIIT-D inter-sensor experiments, the results suggest that iris localization benefits the classification of both EB3LD and ContlensNet. Our hypothesis for the lower performance in the inter-sensor evaluation is overfitting: both network architectures (ContlensNet and EB3LD) generate high-dimensional representation vectors, which could lead to overfitting due to overtraining. We hypothesize that the models captured sensor-specific details.

4.3 Multi-sensor Evaluation

According to Table 5, the EB3LD method outperforms the literature results in the multi-sensor scenario for both the NDCL and IIIT-D databases. In this case, transfer learning obtained impressive results. Our hypothesis for the low performance in the inter-sensor case and the high performance in the multi-sensor case is specialization to the sensors' signatures: models could be learning specific details of the sensor technology, causing overfitting when only one sensor is used for training. The multi-sensor scenario is the closest to real-world use, since models would be trained with data from several sensors, which would decrease specialization on a specific sensor. Thus, the multi-sensor results show the suitability of our method for the contact lens detection problem.

4.4 Discussion

The proposed approach converged for all datasets, even for the smallest one (NDCL-AD100). However, it is evident that EB3LD benefits from more data, as seen in all training runs using the NDCL-LG4000, IIIT-D Cogent, and IIIT-D Vista sensors.

In the inter-sensor scenario, the model did not generalize well for the IIIT-D sensors. Two hypotheses arise: (i) over-training on the sensors' data, or (ii) the characteristics learned from one sensor are not useful for the other. The second hypothesis is supported by the results presented in Table 5, in which the EB3LD approach achieved state-of-the-art results when trained with both sensors.
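The "five out of ten scenarios" tally can be reproduced from Tables 3 to 5 by checking, in each scenario, whether EB3LD has a strictly higher CCR than every competing method. The sketch below simply re-enters the published numbers; the scenario labels and function name are our own.

```python
from typing import Dict, List

# CCR (%) copied from Tables 3-5: scenario -> {method: CCR}.
RESULTS: Dict[str, Dict[str, float]] = {
    "intra NDCL AD100": {"MLBP": 77.67, "CLDnet": 78.33, "ContlensNet": 95.00, "EB3LD": 89.67},
    "intra NDCL LG4000": {"MLBP": 80.04, "CLDnet": 86.00, "ContlensNet": 96.91, "EB3LD": 94.33},
    "intra IIIT-D Cogent": {"MLBP": 73.01, "CLDnet": 69.05, "ContlensNet": 86.73, "EB3LD": 93.11},
    "intra IIIT-D Vista": {"MLBP": 80.04, "CLDnet": 72.08, "ContlensNet": 87.33, "EB3LD": 96.89},
    "inter AD100->LG4000": {"MLBP": 60.08, "CLDnet": 78.00, "ContlensNet": 90.45, "EB3LD": 78.83},
    "inter LG4000->AD100": {"MLBP": 61.03, "CLDnet": 75.33, "ContlensNet": 88.00, "EB3LD": 91.00},
    "inter Cogent->Vista": {"MLBP": 77.79, "CLDnet": 45.54, "ContlensNet": 84.80, "EB3LD": 82.85},
    "inter Vista->Cogent": {"MLBP": 65.29, "CLDnet": 43.08, "ContlensNet": 94.80, "EB3LD": 70.71},
    "multi NDCL": {"MLBP": 73.20, "CLDnet": 82.80, "ContlensNet": 94.65, "EB3LD": 94.73},
    "multi IIIT-D": {"MLBP": 72.96, "CLDnet": 69.28, "ContlensNet": 92.60, "EB3LD": 94.73},
}


def scenarios_won(results: Dict[str, Dict[str, float]], method: str = "EB3LD") -> List[str]:
    """Scenarios where `method` has a strictly higher CCR than every other method."""
    return [
        name for name, ccrs in results.items()
        if all(ccrs[method] > v for m, v in ccrs.items() if m != method)
    ]


if __name__ == "__main__":
    wins = scenarios_won(RESULTS)
    print(f"{len(wins)} of {len(RESULTS)} scenarios: {wins}")
```

The tally confirms that all five wins come from the IIIT-D intra-sensor, LG4000 to AD100, and multi-sensor settings, consistent with the discussion above.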

5 CONCLUSIONS AND FUTURE WORK

In this paper, we proposed deep image representations built with Inverted Bottleneck Convolution (MBConv) blocks by adapting the EfficientNet-B3 network to the contact lens detection problem. The proposed model could be embedded and work as one step of the iris recognition pipeline.

The proposed model is called EB3LD in this work. Experimental results revealed that EB3LD surpasses the literature in five out of ten scenarios on the NDCL and IIIT-D databases. The proposed method allows the use of a deeper network with a reduced number of images. The main limitation of the proposed approach is related to small training sets, such as the one observed in the NDCL-AD100 dataset.

One future research direction would be to investigate transfer learning from other domains or tasks, such as face or eye images. Other directions are to use different image representations, to apply data augmentation techniques, and to evaluate the proposed approach in an embedded scenario for use in industry.


ACKNOWLEDGEMENTS

The authors would like to thank the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brazil (CAPES) - Finance Code 001, Fundação de Amparo à Pesquisa do Estado de Minas Gerais (FAPEMIG, grants APQ-01518-21), Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq) and Universidade Federal de Ouro Preto (UFOP/PROPPI) for supporting the development of the present study. We gratefully acknowledge the support of NVIDIA Corporation with the donation of the Titan X Pascal GPU used for this research.

REFERENCES

Agarwal, R. and Jalal, A. S. (2021). Presentation attack detection system for fake iris: a review. Multimedia Tools and Applications, 80(10):15193–15214.

Baker, S. E., Hentz, A., Bowyer, K. W., and Flynn, P. J. (2010). Degradation of Iris Recognition Performance due to non-Cosmetic Prescription Contact Lenses. Computer Vision and Image Understanding, 114(9):1030–1044.

Bjorck, N., Gomes, C. P., Selman, B., and Weinberger, K. Q. (2018). Understanding batch normalization. In Advances in Neural Information Processing Systems, pages 7694–7705.

Bowyer, K. W. and Doyle, J. S. (2014). Cosmetic Contact Lenses and Iris Recognition Spoofing. Computer, 47(5):96–98.

Bowyer, K. W., Hollingsworth, K., and Flynn, P. J. (2008). Image Understanding for Iris Biometrics: A Survey. Computer Vision and Image Understanding, 110(2):281–307.

Choudhary, M., Tiwari, V., and Venkanna, U. (2019). An approach for iris contact lens detection and classification using ensemble of customized densenet and svm. Future Generation Computer Systems, 101:1259–1270.

Daugman, J. (2003). Demodulation by complex-valued wavelets for stochastic pattern recognition. International Journal of Wavelets, Multiresolution and Information Processing, 1(01):1–17.

Daugman, J. G. (1993). High Confidence Visual Recognition of Persons by a Test of Statistical Independence. IEEE Transactions on Pattern Analysis and Machine Intelligence, 15(11):1148–1161.

Doyle, J. and Kevin, B. (2014). Notre Dame Image Database for Contact Lens Detection In Iris Recognition-2013: README.

Doyle, J. S., Bowyer, K. W., and Flynn, P. J. (2013). Variation in Accuracy of Textured Contact Lens Detection based on Sensor and Lens Pattern. In IEEE International Conference on Biometrics: Theory, Applications, and Systems, pages 1–7.

Flom, L. and Safir, A. (1987). Iris recognition system. US Patent 4,641,349.

Galbally, J., Marcel, S., and Fierrez, J. (2014). Image Quality Assessment for Fake Biometric Detection: Application to Iris, Fingerprint, and Face Recognition. IEEE Transactions on Image Processing, 23(2):710–724.

Goodfellow, I., Bengio, Y., and Courville, A. (2016). Deep learning. Book in preparation for MIT Press.

He, Z., Sun, Z., Tan, T., and Wei, Z. (2009). Efficient iris spoof detection via boosted local binary patterns. In International Conference on Biometrics, pages 1080–1090. Springer.

Kohli, N., Yadav, D., Vatsa, M., and Singh, R. (2013). Revisiting Iris Recognition with Color Cosmetic Contact Lenses. In International Conference on Biometrics, pages 1–7.

Komulainen, J., Hadid, A., and Pietikainen, M. (2014). Generalized Textured Contact Lens Detection by Extracting BSIF Description from Cartesian Iris Images. In IEEE International Joint Conference on Biometrics, pages 1–7.

Menotti, D., Chiachia, G., Pinto, A., Schwartz, W., Pedrini, H., Falcão, A., and Rocha, A. (2015). Deep Representations for Iris, Face, and Fingerprint Spoofing Detection. IEEE Transactions on Information Forensics and Security, 10(4):864–879.

Ming, Z., Visani, M., Luqman, M. M., and Burie, J.-C. (2020). A survey on anti-spoofing methods for facial recognition with rgb cameras of generic consumer devices. Journal of Imaging, 6(12):139.

Morales, A., Fierrez, J., Galbally, J., and Gomez-Barrero, M. (2021). Introduction to presentation attack detection in iris biometrics and recent advances. arXiv preprint arXiv:2111.12465.

Pan, S. J. and Yang, Q. (2010). A survey on transfer learning. IEEE Transactions on knowledge and data engineering, 22(10):1345–1359.

Prabhakar, S., Pankanti, S., and Jain, A. K. (2003). Biometric recognition: Security and privacy concerns. IEEE Security & Privacy, 1(2):33–42.

Raghavendra, R. and Busch, C. (2015). Robust Scheme for Iris Presentation Attack Detection Using Multiscale Binarized Statistical Image Features. IEEE Transactions on Information Forensics and Security, 10(4):703–715.

Raghavendra, R., Raja, K. B., and Busch, C. (2017). Contlensnet: Robust iris contact lens detection using deep convolutional neural networks. In 2017 IEEE Winter Conference on Applications of Computer Vision (WACV), pages 1160–1167. IEEE.

Ramachandran, P., Zoph, B., and Le, Q. V. (2017). Searching for activation functions. arXiv preprint arXiv:1710.05941.

Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., Huang, Z., Karpathy, A., Khosla, A., Bernstein, M., et al. (2015). Imagenet large scale visual recognition challenge. International journal of computer vision, 115(3):211–252.


Sandler, M., Howard, A., Zhu, M., Zhmoginov, A., and Chen, L.-C. (2018). Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4510–4520.

Sequeira, A. F., Murari, J., and Cardoso, J. S. (2014a). Iris liveness detection methods in the mobile biometrics scenario. In Neural Networks (IJCNN), 2014 International Joint Conference on, pages 3002–3008. IEEE.

Sequeira, A. F., Oliveira, H. P., Monteiro, J. C., Monteiro, J. P., and Cardoso, J. S. (2014b). Mobilive 2014 - mobile iris liveness detection competition. In IEEE International Joint Conference on Biometrics (IJCB), pages 1–6. IEEE.

Silva, P., Luz, E., Baeta, R., Pedrini, H., Falcao, A. X., and Menotti, D. (2015). An approach to iris contact lens detection based on deep image representations. In Graphics, Patterns and Images (SIBGRAPI), 2015 28th SIBGRAPI Conference on, pages 157–164. IEEE.

Song, Y., Cao, W., and He, Z. (2014). Robust Iris Recognition using Sparse Error Correction Model and Discriminative Dictionary Learning. Neurocomputing, 137:198–204.

Srivastava, N., Hinton, G. E., Krizhevsky, A., Sutskever, I., and Salakhutdinov, R. (2014). Dropout: a simple way to prevent neural networks from overfitting. Journal of Machine Learning Research, 15(1):1929–1958.

Sun, Z., Zhang, H., Tan, T., and Wang, J. (2014). Iris Image Classification Based on Hierarchical Visual Codebook. IEEE Transactions on Pattern Analysis and Machine Intelligence, 36(6):1120–1133.

Tan, M. and Le, Q. V. (2019). Efficientnet: Rethinking model scaling for convolutional neural networks. arXiv preprint arXiv:1905.11946.

Wei, Z., Qiu, X., Sun, Z., and Tan, T. (2008). Counterfeit Iris Detection based on Texture Analysis. In International Conference on Pattern Recognition, pages 1–4. IEEE.

Yadav, D., Kohli, N., Doyle, J. S., Singh, R., Vatsa, M., and Bowyer, K. W. (2014). Unraveling the effect of textured contact lenses on iris recognition. IEEE Transactions on Information Forensics and Security, 9(5):851–862.

Yang, P., Baracchi, D., Ni, R., Zhao, Y., Argenti, F., and Piva, A. (2020). A survey of deep learning-based source image forensics. Journal of Imaging, 6(3):9.

Zhang, H., Sun, Z., and Tan, T. (2010). Contact Lens Detection based on Weighted LBP. In International Conference on Pattern Recognition, pages 4279–4282.

Zin, N. A. M., Asmuni, H., and Hamed, H. N. A. (2021). Soft lens detection in iris image using lens boundary analysis and pattern recognition approach. International Journal, 10(1).
