Supporting the Adaptation of Agents’ Behavioral Models in Changing Situations by Presentation of Continuity of the Agent’s Behavior Model

Yoshimasa Ohmoto (1, a), Junya Karasaki (2) and Toyoaki Nishida (2, 3)

1 Faculty of Informatics, Shizuoka University, Hamamatsu-shi, Shizuoka-ken, Japan

2 Department of Intelligence Science and Technology, Kyoto University, Sakyo-ku, Kyoto, Japan

3 Faculty of Informatics, University of Fukuchiyama, Fukuchiyama-shi, Kyoto, Japan

Keywords:

Human-agent Interaction, Human Factors, Shared Awareness.

Abstract:

In this study, we attempted to make participants continually estimate an agent’s behavioral model by having

the agent itself present the continuity of its behavior model during a task. By doing so, we aimed to encourage

users to pay attention to changes in the agent’s behavioral model and to make the user continuously change

the relationship between themselves and the agent. In order to make the participants continually estimate

the agent’s behavioral model, we proposed the method of “presentation of continuity of the agent’s behavior

model.” We implemented agents based on this and conducted an experiment using an animal-guiding task in

which one human and two agents cooperated. As a result, we were able to significantly increase the degree

to which participants paid attention to the agents and induce active interaction behavior. This suggests that

the proposed method contributed to maintaining a relationship between the agents and the participant even in

changing situations.

1 INTRODUCTION

In various aspects of society, complex problems arise

that cannot be handled by a single person. To deal

with such problems, plans are designed and managed,

and tasks are executed (Jennings et al., 2014). In

recent years, the importance of cooperation between

humans and agents in problem-solving has been rec-

ognized (van Wissen et al., 2012), and systems used

to support cooperation have been designed (Pacaux-

Lemoine et al., 2017). By utilizing the strengths of

humans as well as agents in cooperatively execut-

ing tasks, both entities can solve problems more ef-

ficiently than if they work separately.

To solve complex problems, Allen et al. pro-

posed a flexible interaction strategy called a “mixed-

initiative interaction” (Allen et al., 1999). In a mixed-

initiative interaction, the roles of each subject are not

predetermined, and the goal is to accomplish the task

while dynamically changing the roles and initiatives

of both. This mixed-initiative interaction is particu-

larly important in cooperative tasks where it is diffi-

cult to capture the full scope of the plan, the plan is

fluid, or where the capabilities, environment, and information obtained by each entity are different.

a https://orcid.org/0000-0003-2962-6675

Gianni et al. (Gianni et al., 2011) pointed out that

achieving a mutually initiated interaction between hu-

mans and agents involves challenges in four areas:

“allocation of responsibility for tasks between hu-

mans and agents,” “methods for switching initiative

between humans and agents,” “methods for exchang-

ing information between humans and agents,” and

“building and maintaining a shared awareness of the

state of humans and agents.” In order to “build and

maintain a shared awareness,” humans must sponta-

neously and continuously approach agents.

To build and maintain a shared awareness, a wide

range of information needs to be exchanged between

humans and agents. Awareness includes the relation-

ships among team members, goals to be achieved,

tasks required to achieve them, actions of individuals

and reasons for their actions, and changes in the sur-

rounding environment (Atkinson et al., 2014; Lyons,

2013). Cheetham and Goebel (Cheetham and Goebel, 2007)

categorized this information into “facts and beliefs,

reasoning, and conclusions.” If an agent has a certain

“reasoning,” it is likely that it should be presented in

a proactive manner.

Ohmoto, Y., Karasaki, J. and Nishida, T.

Supporting the Adaptation of Agents’ Behavioral Models in Changing Situations by Presentation of Continuity of the Agent’s Behavior Model.

DOI: 10.5220/0010846500003116

In Proceedings of the 14th International Conference on Agents and Artificial Intelligence (ICAART 2022) - Volume 1, pages 290-298

ISBN: 978-989-758-547-0; ISSN: 2184-433X

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Creating agents for mixed-initiative interactions with humans has been attempted before, with some success (Chen et al., 2019; de Souza et al., 2015).

On the other hand, to realize a two-way change in

initiative, it is necessary to encourage a mutual vol-

untary change of initiative by confirming the con-

structed shared awareness, rather than by using inter-

face functions. In order to encourage users to volun-

tarily switch initiatives, the agent’s intention model

must be predicted. Several agent design methods

have been proposed that focus on the cognitive prop-

erty that the intention model of the agent as predicted

by the user influences the interaction (Kiesler, 2005;

Matsumoto et al., 2005). It is believed that when an

agent has a certain purpose, the user will infer some

intentions toward the agent. In this study, the agents

record the events they experience and the information

they collect from other users and agents, and decide

their own actions based on these records, thereby im-

plicitly presenting the agents’ intentions during a se-

ries of tasks and making it easier for users to infer the

agent’s intention model.

There are many situations in which the construc-

tion of shared awareness is required. “Facts and be-

liefs” and “conclusions” can be shared in advance be-

fore the task occurs, but “reasoning” must be updated

in real time. However, especially when an agent or

system is the interaction partner, users often do not

assume that the partner’s “reasoning” model will be

updated by a consistent mechanism. We hypothesized

that by explicitly stating that the agent updates its be-

havioral model during the task in a consistent manner,

the user will notice that the agent’s behavioral model

is updated within a predictable range, and will change

the relationship between themselves and the agent to

accommodate the change.

The purpose of this study is to make users pay at-

tention to the changes in the agent’s behavioral model

and to make the relationship between the user and

the agents change adaptively. For this, we proposed

a method of presentation of continuity of the agent’s

behavior model that explicitly states the relevance of

past actions as the basis for the agent’s actions while

performing continuous interaction with the agent, in-

cluding chatting, and examined its effectiveness.

2 PRESENTATION OF CONTINUITY OF THE AGENT’S BEHAVIOR MODEL

Our ultimate goal is to build shared awareness among

the users and the agents in a collaborative task, which

is one of the concerns in realizing mixed-initiative in-

teraction. In this study, by encouraging the partici-

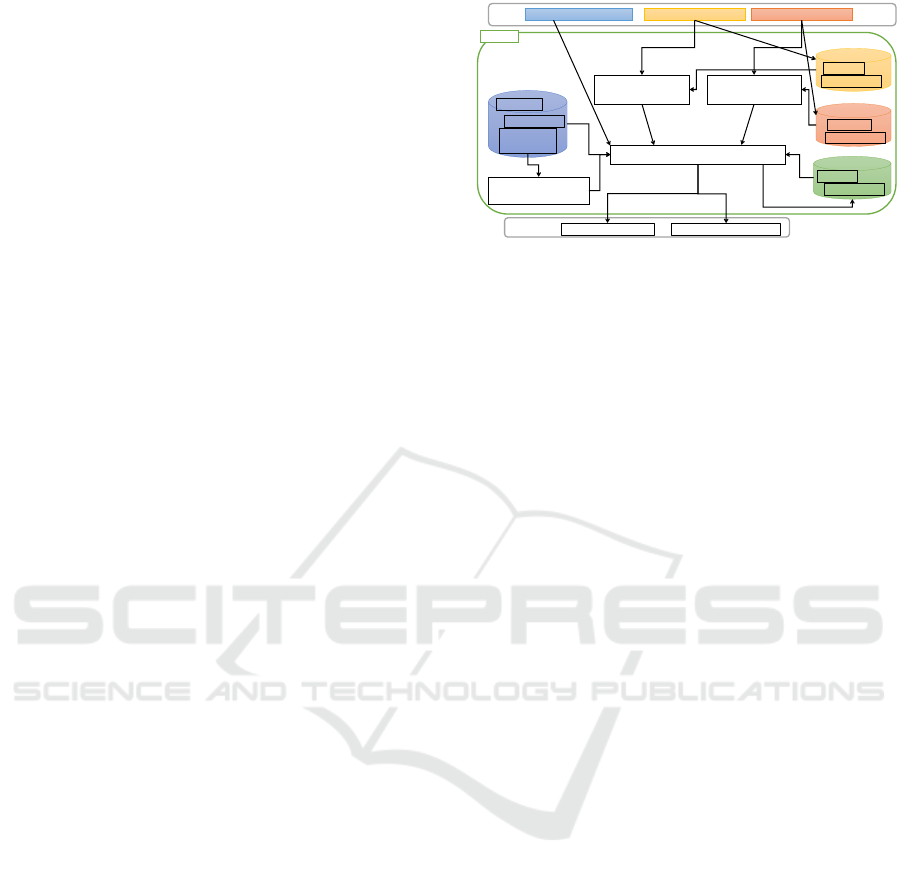

Input

Task Environment Player 1 behavior Player 2 behavior

Memory

for Player1

Memory

for Player2

Own

memory

Action

Utterance

Action

Utterance

Action

Utterance

Task database

Action

Utterance

Task

structure

Agent

Player 1

state estimation

Player 2

state estimation

Agent behavior decision

Future

state estimation

Output

Agent’s Action

Agent’s Utterance

Figure 1: The component diagram of the agent.

pants to continue to estimate the other person’s be-

havioral model forcing making them recognize the re-

lationship between the behavior expressed during the

interaction, we attempted to support users in adapting

to changes in the agent’s behavioral model in chang-

ing situations. This is referred to as “presentation of

continuity of the agent’s behavior model (PCB)”.

To realize continuous estimation of the agent’s behavioral model during interaction, we adopted “behavior selection based on temporal continuity.” Specifically, we considered 1) presenting a rationale for the agent’s actions based on information obtained in successive events that occurred during the task, and 2) conducting chats related to the agent’s personality and the actions performed during the events in scenarios with low work density.

2.1 Behavior Selection based on Information Obtained in Events

We considered a behavioral model in which an agent

records the events it experiences and the information

it collects from other users and agents, and determines

its own behavior based on information obtained in

successive events. The component diagram is shown

in Figure 1. By using this, the agent can change the

basis of the action it presents according to the sur-

rounding situation and the history of its past actions.

The agent memorizes the actions and utterances

of other users and agents, and selects the behavior

to be performed from among the predetermined op-

tions based on the information about them in the past

and the information about the current surrounding en-

vironment. When the agent decides on its own be-

havior, it also records that action in the memory and

uses it for deciding on the next behavior. In this way,

the data that can be used to make decisions increases

over time, but is also lost from the memory as time

passes. In addition, there is a future estimation com-

ponent to predict future events based on past events.

In the current implementation, this future estimation

component is maintained as a tree-structured condi-

tional branching database based on a pre-analyzed

task structure. The actions and utterances selected by

the action decision component are all selected from

those in the database.
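The behavioral model described above can be illustrated with a minimal Python sketch. Every name here (`MemoryRecord`, `AgentMemory`, `select_behavior`, the retention window, and the example conditions) is hypothetical: the paper does not specify the data structures, the forgetting rule, or how the predetermined options are matched, so a fixed time window and a first-match rule are assumed.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryRecord:
    time: float    # time at which the event was observed
    actor: str     # "player1", "player2", or "self"
    kind: str      # "action" or "utterance"
    content: str   # label of the observed action or utterance


@dataclass
class AgentMemory:
    retention: float = 60.0  # assumed forgetting window, in seconds
    records: list = field(default_factory=list)

    def remember(self, record):
        self.records.append(record)

    def recall(self, now):
        # Records older than the retention window are lost from memory.
        self.records = [r for r in self.records if now - r.time <= self.retention]
        return list(self.records)


def select_behavior(memory, now, environment, options, fallback="wait"):
    """Return the first predetermined option whose condition holds."""
    recalled = memory.recall(now)
    chosen = fallback
    for name, condition in options:
        if condition(recalled, environment):
            chosen = name
            break
    # The agent's own decision is recorded and informs later decisions.
    memory.remember(MemoryRecord(now, "self", "action", chosen))
    return chosen


def has_weapon(records, env):
    return env.get("obstacle") == "rock" and any(
        r.content == "picked_up_weapon" for r in records
    )


def rock_ahead(records, env):
    return env.get("obstacle") == "rock"


options = [("use_weapon_on_rock", has_weapon), ("detour", rock_ahead)]
memory = AgentMemory(retention=60.0)
memory.remember(MemoryRecord(0.0, "player1", "action", "picked_up_weapon"))
first = select_behavior(memory, 10.0, {"obstacle": "rock"}, options)
later = select_behavior(memory, 100.0, {"obstacle": "rock"}, options)
```

In this toy run the weapon pickup is still remembered at time 10 but has been forgotten by time 100, so the same environment leads to a different choice.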

2.1.1 Presenting Evidence based on Experience

If a user is unaware that the agent’s actions and decisions are related to the user’s actions and the events of the task, it becomes difficult for the user to form a model of the agent’s behavior. To overcome this issue, we added a mechanism to the action decision component: in each scene where the agent makes a decision, it explicitly presents, as the basis for its action, the fact that it is acting on temporally continuous past information.

In situations where an agent decides on an action,

it expresses verbally which information from past

events, the current environment, or future speculation

most strongly influenced the choice. Furthermore, in

situations where other agents are acting, when it is

possible to predict from which information the action

was chosen, the information on which the action is

based is communicated to the surroundings. In either

case, the expression itself is predetermined and con-

cise.
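One way to picture this mechanism is the following Python sketch, which picks the information source with the largest influence and fills a predetermined, concise template. The template wording, the numeric influence weights, and the function name `explain_action` are all assumptions made for illustration; the paper only states that the expressions are predetermined and concise.

```python
# Hypothetical predetermined rationale templates, one per information source.
RATIONALE_TEMPLATES = {
    "past": "Because of what happened earlier: {detail}.",
    "present": "Because of what I see now: {detail}.",
    "future": "Because of what I expect next: {detail}.",
}


def explain_action(action, influences):
    """Build an utterance that proposes an action and states its basis.

    influences maps a source ("past", "present", "future") to a
    (weight, detail) pair; the source with the largest weight is reported.
    """
    source = max(influences, key=lambda s: influences[s][0])
    detail = influences[source][1]
    return f"Let's {action}. " + RATIONALE_TEMPLATES[source].format(detail=detail)


utterance = explain_action(
    "break the fence",
    {
        "past": (0.7, "we picked up a weapon"),
        "present": (0.2, "the fence blocks the road"),
        "future": (0.1, "more sheep may be ahead"),
    },
)
```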

2.1.2 Chatting as Continuous Interaction

When users and agents collaborate on long-term

tasks, their work density is not always constant, and

it is expected that there will be a mixture of busy

and relatively relaxed situations. The interaction de-

creases when the work density is low, and the user

pays less attention to the agent. This inhibits the user

from continuously estimating the behavioral model of

the agent.

To prevent such a situation, we added a mecha-

nism to the action decision component for “chatting

to share information about user and agent’s personal

experiences” and “chatting about events performed in

the past” in situations where the work density is low.

By referring to past events, we expected to confirm

each other’s “facts and beliefs” that were implicitly

shared before. In the chat that shared information

about personal experience, we encouraged the estima-

tion of the agent’s behavioral model by talking about

episodes related to the agent’s preferences.
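The chat mechanism can be sketched as a simple trigger that fires only when the work density is low. The numeric density scale, the threshold value, and the function name `choose_chat` are hypothetical; the paper does not describe how work density was measured or how topics were chosen.

```python
import random


def choose_chat(work_density, past_events, personal_topics, threshold=0.3, rng=random):
    """Return a (kind, topic) chat candidate, or None when the agent should stay on task.

    work_density is assumed to be a value in [0, 1]; chatting is allowed
    only below the threshold.
    """
    if work_density >= threshold:
        return None
    candidates = [("past_event", e) for e in past_events]
    candidates += [("personal", t) for t in personal_topics]
    if not candidates:
        return None
    return rng.choice(candidates)


busy = choose_chat(0.8, ["rock on the route"], ["occupation"])
relaxed = choose_chat(0.1, ["rock on the route"], [], rng=random.Random(0))
```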

3 EXPERIMENT

We attempted to support the participant’s adaptation

to changes in the agents’ behavioral model even in

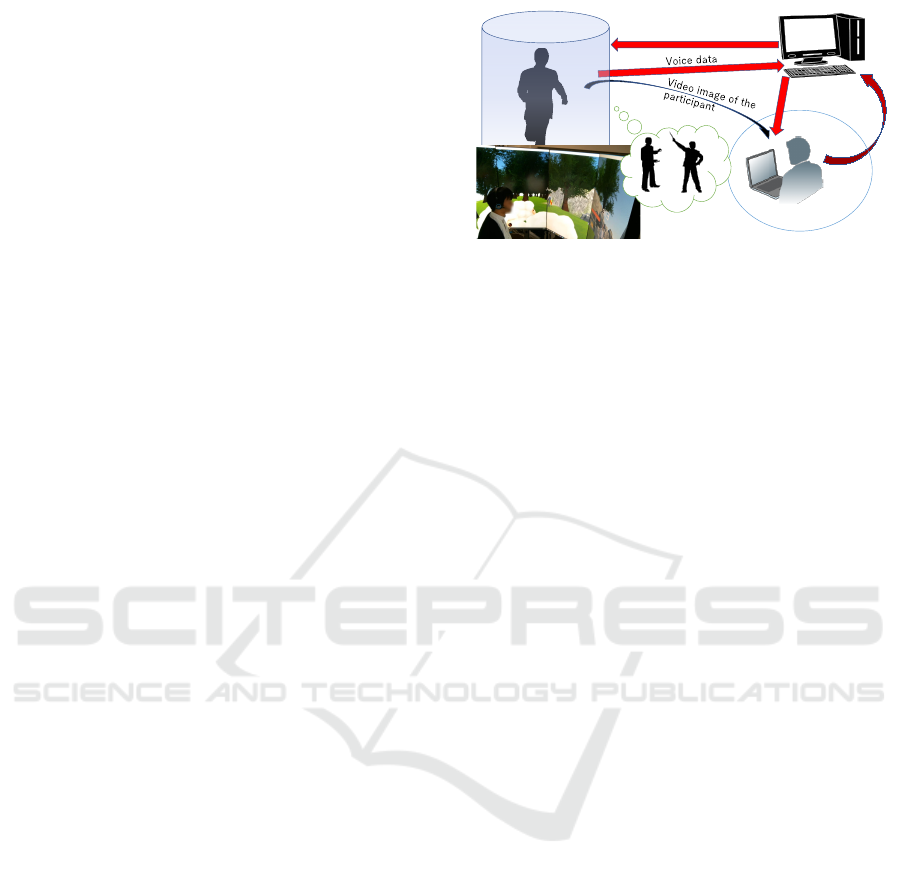

Task control PC

Agent status

Participant’s

preferences

WoZ

environment

Display the Task environment

Experimenter

Commands

Immersive display

Participant

Figure 2: The experimental setting.

changing situations by making them recognize the rel-

evance of the agent’s actions through the proposed

PCB. We implemented agents that acted based on the

model, and conducted an experiment using a task of

crowd guidance. The group with two agents that im-

plemented PCB was called the “continuity presen-

tation group (CP-group)”, and the group with two

agents that did not present the basis for their actions

or engage in chitchat, but whose behavioral model it-

self was the same, was called the “non-presentation

group (NP-group)”.

3.1 Experimental Setting

The experimental setting is shown in Figure 2. We

used a 360-degree immersive display consisting of eight 65-inch portrait-orientation LCD monitors arranged in an octagon. In this environment, participants can easily look around in the virtual space with

a low cognitive load, similar to the real world. A par-

ticipant’s virtual avatar was controlled using a game

pad. Participants wore a headset with a microphone.

In order to ensure a verbal response, the participant’s

speech was transmitted to an operator through the mi-

crophone, and the agent’s speech was output by the

operator based on predetermined rules (Wizard of Oz:

WoZ).

3.2 Procedure

First, the experiment was explained to the partici-

pants, and they were asked to practice the manipula-

tion of the task. The manipulation practice was com-

pleted when the participants felt that they could oper-

ate the task at a level that would not interfere with the

experiment.

After providing a brief explanation of the main

session, the experimenter began recording the video

and started agent interaction. The participants per-

formed the task sequentially. In the main session,

a WoZ operator partially controlled agent behavior.

The WoZ operator input a reaction command to the

agents based on predefined rules and reaction pat-

terns when the participant tried to interact with the

agents. During conversational interaction, the con-

versation between the two agents was automatically

controlled based on the predetermined scenario. Af-

ter all sessions were completed, a questionnaire was

administered to obtain a subjective evaluation of the

participants.

We conducted an experiment with 27 Japanese

university students (aged 19 to 24 years, mean = 22.7,

SD = 2.64). Sixteen were male and eleven were fe-

male. The participants were divided into two groups.

The CP-group consisted of 13 participants (eight male and five female), and the remaining participants formed the NP-group.

3.3 Description of the Task

The participants played a game in which two agents

(John and Ellie) and one participant worked together

to guide animals in a virtual space. Here, the partici-

pant and the agents are referred to as “players”. The

objective of the task was for the three players to co-

operate in guiding multiple animals (sheep) wander-

ing around the game field to the goal point in virtual

space. At first, there were only two sheep, but they

were scattered near the route to the goal point, and

the goal was to collect those sheep while heading for

the goal point. In this task, it was necessary for play-

ers to flexibly coordinate how to deal with problems

that arise periodically in the task while cooperating to

achieve a single goal.

A standard route was shown on the game field, and

the sheep might be found around the route. There

were various obstacles in the field, and the player

needed to guide the sheep to the goal point by avoid-

ing or removing these obstacles. There were also

items in the field, which could be picked up to re-

move obstacles and guide the sheep. The game score

was calculated based on the number of sheep taken to

the goal and the time taken to reach the goal.

On the way to the goal point, there were several

objects that blocked movement. Those objects could

be destroyed. Among the objects that blocked move-

ment were doors that could not be destroyed but could

be unlocked. Destroying or opening an object without

using an item (a weapon or a key) took a relatively

long time, which reduced the score obtained when the

goal was reached. This was taught to the participants.

Figure 3 shows a bird’s eye view of the field where the participants performed the animal guidance task. The orange line represents the standard route to the goal, and the circled numbers indicate the locations where events occurred in the task. The starting point is in the upper left corner, and the players took the sheep scattered around the route to the farm at the goal point. When the players used the map item, the white lines and numbers in Figure 3 were erased.

[Map labels: Start, Goal.]

Figure 3: The event map of the game field.

1. Players introduced themselves.

2. One of the agents picked up a map.

3. A flag and a weapon were placed on the floor.

4. The players experienced that destroying an object

reduced their stamina, and that it was easier for

the sheep to get lost if the same player kept hold-

ing the flag.

5. A sheep was hiding in a side street.

6. If the players chose to ignore the sheep, they

needed to break the fence on the road ahead.

7. The route was blocked by a large rock. There was

no other choice but to deviate from the route and

continue through the forest.

8. The entrance to the forest was blocked by a fence.

The players were able to open it and proceed from

the menu command.

9. The players could find a pen with sheep trapped in

it in the middle of the pond. The two agents told

the participant that they would move to the left to

find a way back to the standard route, and told the

participant to go to the right to catch the sheep.

10. The participant was able to open the fence of the

pen and catch the sheep. A sign on the side de-

scribed the traps to catch sheep further along the

route.

11. The two agents who were separated from the par-

ticipants picked up a weapon here.

12. The participant and the agents met up before here.

There was a trap to catch sheep that the partici-

pant had read about on the sign. If the participant

had not shared this information with the agents in

advance, the agents would have triggered the trap.

13. The route was blocked by a small rock. By using

an item, they were able to efficiently remove the

obstacle.

14. The route was blocked by a gate. The key to open

the gate could be found by looking around or by

looking at the map.

15. The players opened the fence and obtained the

key. If the participant spotted sheep, the partici-

pant could take them away.

3.4 Behavior of Agents in the CP-group and the NP-group

The two agents in each group had different prefer-

ences in the way they performed the task. So, each

agent gave different directional advice.

In the CP-group, two types of behavior were pre-

sented: (1) when the agents acted at a certain point

in time, they indicated that they had a consistent ba-

sis with their previous actions, and (2) when the work

density in the task execution was low, they gave their

impressions based on the previous events. The agents

in the CP-group and NP-group performed the same

actions and judgments related to task execution. The

differences in the agents of each group are shown be-

low.

Situations That Require Action, Such as Breaking an Object, Catching Sheep, or Holding a Flag.

CP-group: In situations where it was judged that the

agent should take active action, one agent pro-

posed the action while stating the basis, and the

other agent acted by indicating that he or she

agreed. In situations where the participant should

perform the action, one agent suggested the action

while stating the basis.

NP-group: In situations where it was judged that the

agents should take active action, one agent pro-

posed what to implement, and the other agent

acted by indicating his approval. In situations

where it was judged that the participant should

perform the action, one agent proposed what

should be performed.

Scene Where Players Moved with Sheep in Tow.

CP-group: Each agent engaged in chats related to

his or her own occupation and other personali-

ties, chats about their impressions of the previ-

ous events, and chats about speculating on future

scenes based on past events. In some cases, the

agents chatted with each other, but in other cases,

they asked the participant for his or her opinions.

NP-group: The players moved basically in silence.

In situations where task hints were required, the

agents muttered the hints.

Agents’ Behavior When Agents and Participant Were Acting Separately.

CP-group: In addition to the content of the chats be-

tween events, the agents also chatted about what

each other was doing during their separate activi-

ties. These chats could also be heard by the par-

ticipant via walkie-talkies. The agents did not talk

to the participant when they were working.

NP-group: The players worked in silence. If a task

hint was available on the agent side, the agents

muttered. This mumbling was also heard by the

participants via walkie-talkies.

Differences in the Behavior of the Two Agents.

Commonality: Hints that required consideration of

the task were often stated by Ellie, whereas intu-

itive actions were more often carried out by John.

John and Ellie had common criteria for how to

proceed with the task, such as breaking obstacles

or bypassing the route.

CP-group: Each agent presented actions and opin-

ions that were considered appropriate in each sit-

uation based on their own preferences. In chatting

and rationale situations, John had a weak outlook

on the future and relied on his intuition, while El-

lie preferred to see the whole picture and some-

times admonished John.

NP-group: Same as the CP-group, each agent pre-

sented actions and opinions that were considered

appropriate in each situation according to their

own preferences. However, they did not indicate

that they had a common basis with their previous

actions, and did not engage in chats that were not

directly related to task execution.

3.5 Result

3.5.1 Differences in the Attention That Participants Paid to the Agents

We attempted to determine how much attention the

participants paid to the agent’s actions and state dur-

ing the task. However, in situations where the partic-

ipant and the agent were acting together, it was diffi-

cult to determine whether the participant’s action was

the result of paying attention to the agent or the re-

sult of understanding the task situation. Therefore,

in events where the participant and the agents acted

ICAART 2022 - 14th International Conference on Agents and Artificial Intelligence

294

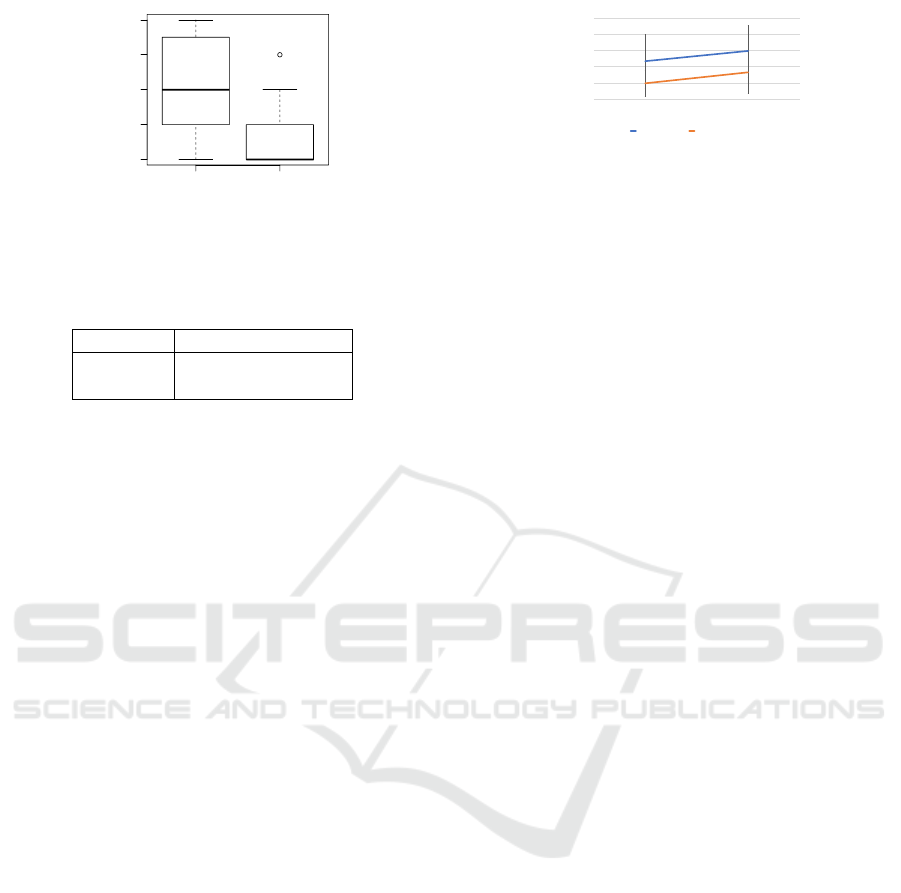

Experiment Control

0

1

2

3

4

Score

Figure 4: The number of participant’s actions that would

have occurred if the participant had paid attention to the

agent’s actions and state.

Table 1: The number of participants who actively ex-

changed information with the agent in these events.

active non-active

CP-group 9 3

NP-group 3 10

separately, we counted the participant’s actions that

would have occurred if the participant had paid at-

tention to the agent’s actions and state. The target

events were Events 9, 10, and 13: whether the par-

ticipant properly observed the agents when they went

their separate ways in Event 9, whether the partici-

pant shared information with the agents after obtain-

ing information that only the participant could know

in Event 10, and whether the participant thought of a

way to break through Event 13 after considering the

items obtained by the agent in Event 11.

Figure 4 shows the result. The mean for the CP-

group was 2.08 (SD = 1.44), and the mean for the NP-

group was 0.69 (SD = 0.95). Wilcoxon’s rank sum

test showed that participants in the CP-group paid significantly more attention to the agents (p = 0.010).

The behaviors in Events 10 and 13 required strong proactivity. Therefore, we examined the number of participants who actively exchanged information with the agent in these events. The number of participants who took any of these actions is shown in Table 1, and Fisher’s exact test showed that there were significantly more active participants in the CP-group (p = 0.017).
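As a cross-check, the two-sided p-value can be recomputed from the counts in Table 1 using only the Python standard library. The function name is hypothetical, and the definition of two-sidedness used here (summing the probabilities of all tables that are no more likely than the observed one) is an assumption, since the paper does not state which variant or software was used.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x in the top-left cell with fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / total

    observed = prob(a)
    lowest, highest = max(0, col1 - row2), min(row1, col1)
    # Sum over all tables that are no more probable than the observed table.
    return sum(
        p
        for p in (prob(x) for x in range(lowest, highest + 1))
        if p <= observed * (1 + 1e-9)
    )


# Counts from Table 1: CP-group (9 active, 3 non-active), NP-group (3, 10).
p_value = fisher_exact_two_sided(9, 3, 3, 10)
print(round(p_value, 3))  # 0.017
```

Under this definition the result rounds to 0.017, which is consistent with the value reported above.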

3.5.2 Number of Utterances

The number of utterances is considered to be a straightforward indicator of participants’ proactiveness. Therefore, we counted the number of utterances of the participant during the task. Because the CP-group and NP-group had different opportunities to talk depending on whether or not chat situations occurred, we counted only the utterances made during the execution of the events that were common to both groups. We excluded utterances without linguistic meaning, such as exclamations and affirmations.

[Plot axes: number of utterances (0 to 25) for the first half and the second half of the task; series: CP-group, NP-group.]

Figure 5: The number of utterances in the event that were common to both groups.

The results are shown in Figure 5. We performed a two-way analysis of variance (group: CP-group or NP-group × half: first or second). The number of utterances was significantly higher in the CP-group than in the NP-group (F(1, 23) = 5.99, p = 0.023). Furthermore, the number of utterances was significantly higher in the second half of the task than in the first half (F(1, 23) = 17.66, p = 0.00030). The second half of the task was more complex and required more speech than the first half, which may have led to more speech. However, it is interesting to note that there is a difference between the CP-group and the NP-group regardless of the task content.

3.5.3 Questionnaires

Participants’ subjective evaluations of the agents and the task were investigated using questionnaires. For Q01 through Q06 and for Q09 through Q14, participants responded on a 7-point Likert scale. The results are shown in Figure 6. The participants answered Q07, Q08 and Q15 with a value from 0 to 100. The results are shown in Figure 7. To examine the differences between the CP-group and the NP-group, Wilcoxon’s rank sum test was conducted for each questionnaire item, but no significant differences were found.

Q01 and Q02, Q03 and Q04, Q05 and Q06, Q07

and Q08, Q09 and Q10, Q11 and Q12, and Q13 and

Q14 of the questionnaires asked the same questions

about the impressions of “John” and “Ellie” respec-

tively. Wilcoxon’s signed rank test was conducted

to examine the difference between the CP-group and

NP-group, but no significant difference was found

here.

3.5.4 Correlation Analysis of Questionnaires

We analyzed whether there were correlations between “John” and “Ellie” on the same questions. The results showed that most of the questions had significantly high correlations (above 0.7). However, only in the case of Q07 and Q08, no significant correlation was found in the NP-group (CP-group: correlation coefficient 0.85, p=0.00042; NP-group: correlation coefficient 0.49, p=0.085). The questions were “What percentage of the hints given by John/Ellie did you find useful?”

[Plot axes: response (0 to 7) for each question; series: NP-group, CP-group.]

Q01: During the entire task, did you pay attention to the actions of John?

Q02: During the entire task, did you pay attention to the actions of Ellie?

Q03: How much did you care about what John was doing while you were separated from him?

Q04: How much did you care about what Ellie was doing while you were separated from her?

Q05: How much did you try to guess John's intentions that he did not specifically state?

Q06: How much did you try to guess Ellie's intentions that she did not specifically state?

Q09: How well did you feel John observed and understood your actions?

Q10: How well did you feel Ellie observed and understood your actions?

Q11: If the task had continued, how much do you want to continue working with John after the task?

Q12: If the task had continued, how much do you want to continue working with Ellie after the task?

Q13: If the task had continued, how trustworthy did you find John to be?

Q14: If the task had continued, how trustworthy did you find Ellie to be?

Figure 6: The results of questionnaires of Q01 to Q06 and Q09 to Q14.

[Plot axes: response (0 to 100) for each question; series: NP-group, CP-group.]

Q07: What percentage of the hints given by John did you find useful?

Q08: What percentage of the hints given by Ellie did you find useful?

Q15: What percentage of the total task did you feel you contributed to?

Figure 7: The results of questionnaires of Q07, Q08 and Q15.

This result is a bit surprising. Intuitively, one would expect that by continuing the interaction while presenting the continuity of each agent’s behavioral model, the individuality of the agents would be understood and they would be recognized as individual entities. In order to speculate on the cause of this result, we calculated the correlations between this question and the other questionnaire items for John and Ellie. The results showed that there were differences between the CP-group and the NP-group in the correlations between “Q01 and Q07” and “Q02 and Q08”. There were also differences between the CP-group and NP-group for the correlations between “Q09 and Q07” and “Q10 and Q08”.
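The per-group comparison of item-to-item correlations can be sketched as follows. This is only an illustration: the paper does not state which correlation coefficient was used, so Pearson's r is assumed, the function names are hypothetical, and the response values are made up and are not the study's data.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def correlations_by_group(responses, item_a, item_b):
    """Correlate two questionnaire items separately within each group."""
    result = {}
    for group in sorted({r["group"] for r in responses}):
        rows = [r for r in responses if r["group"] == group]
        result[group] = pearson([r[item_a] for r in rows], [r[item_b] for r in rows])
    return result


# Made-up responses for illustration only (not data from the experiment).
responses = [
    {"group": "CP", "Q01": 2, "Q07": 20},
    {"group": "CP", "Q01": 4, "Q07": 40},
    {"group": "CP", "Q01": 5, "Q07": 50},
    {"group": "CP", "Q01": 7, "Q07": 70},
    {"group": "NP", "Q01": 2, "Q07": 50},
    {"group": "NP", "Q01": 4, "Q07": 30},
    {"group": "NP", "Q01": 5, "Q07": 60},
    {"group": "NP", "Q01": 7, "Q07": 40},
]
by_group = correlations_by_group(responses, "Q01", "Q07")
```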

Between “Q01 and Q07” and “Q02 and Q08”.

Questions Q01 and Q02 were “During the entire task,

did you pay attention to the actions of John/Ellie?”

When the correlations were calculated, the correla-

tions for John’s impression were 0.75 for the CP-

group and 0.19 for the NP-group. The correlations

for Ellie’s impression were 0.76 for the CP-group and

0.46 for the NP-group. In other words, for both John

and Ellie, there were significantly high positive corre-

lations in the CP-group, but none in the NP-group.

Between “Q09 and Q07” and “Q10 and Q08”.

Questions Q09 and Q10 were “Did you feel that

John/Ellie was observing and understanding your be-

havior well?” When the correlations were calculated,

the correlations of John’s impression were 0.58 for

CP-group and 0.74 for NP-group. The correlations

for Ellie’s impression were 0.32 for the CP-group

and 0.79 for the NP-group. In other words, the NP-

group showed significantly high positive correlations

for both John and Ellie, but the CP-group showed rel-

atively weak correlations for John and no correlations

for Ellie.

These results can be summarized as follows. In the CP-group, whether the participant paid attention to the agent’s behavior was related to whether the participant found the agent’s hint useful. In the NP-group, whether the participant felt that the agent was paying attention to the participant’s behavior was related to whether the participant found the agent’s hint useful. In other words, in the CP-group, the participants who were oriented toward active interaction with the agent thought the agent’s hints were useful, while in the NP-group, the participants who were oriented toward passive interaction with the agent thought the agent’s hints were useful. These results suggest that the way participants related their attention to the agents’ actions to their evaluation of those actions differed between the CP-group and the NP-group.

Between “Q11 and Q15” and “Q12 and Q15”.

The correlations between Q11 and Q15 and between Q12 and Q15 also differed between the groups. Questions Q11 and Q12 were “If the task had continued, how much do you want to continue working with John/Ellie after the task?” Question Q15 was “What percentage of the total task did you feel you contributed to?” The correlations for John’s impression were 0.80 for the CP-group and 0.047 for the NP-group. The correlations for Ellie’s impression were 0.80 for the CP-group and -0.11 for the NP-group. In both cases, a significantly high positive correlation was found only for the CP-group. This indicates that the relationship between one’s own contribution to the task and the evaluation of the agent’s task performance differed between the CP-group and the NP-group. In other words, it suggests that in the CP-group, the participants’ own subjective degree of contribution to the task was associated with their evaluation of the agent’s behavior during the task.
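One common way to check whether two correlations obtained from independent groups differ is Fisher's r-to-z transformation. The sketch below is an assumption about how such a comparison could be made, not the procedure used in this study. The function name `compare_independent_correlations` is hypothetical, and the group size of 10 is a placeholder because the sample sizes are not restated in this section.

```python
import math
from statistics import NormalDist


def compare_independent_correlations(r1, n1, r2, n2):
    """Fisher r-to-z test for two correlations from independent groups.

    Returns (z, two-sided p-value). Requires |r| < 1 and n > 3 per group.
    """
    if min(n1, n2) <= 3:
        raise ValueError("each group needs more than 3 observations")
    z1, z2 = math.atanh(r1), math.atanh(r2)  # Fisher transformation
    standard_error = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    z = (z1 - z2) / standard_error
    p = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    return z, p


# r values: the Q11-Q15 correlations reported above for John.
# n = 10 per group is a HYPOTHETICAL placeholder, not the study's sample size.
z, p = compare_independent_correlations(0.80, 10, 0.047, 10)
print(f"z = {z:.2f}, two-sided p = {p:.3f}")
```

Because the outcome depends strongly on the group sizes, the printed values are only meaningful once the actual sample sizes are substituted.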

3.5.5 Correlation between Behavioral Indices and Questionnaires

Correlation analysis was conducted to examine the relationship between each questionnaire item, attention to the agent, and the number of utterances. In the CP-group, there were significant correlations between Q15 and attention to the agent (0.66) and between Q15 and the number of utterances in the first half (0.58) and in the second half (0.65). In the NP-group, there were no significant correlations.

This indicates that in the CP-group, there was a connection between the participants’ own actual behavior and their evaluation of their contribution to the task. In other words, in the CP-group, the participants’ own active involvement in the task and with the agents was related to their subjective evaluation of their own contribution to the task, while this was not the case in the NP-group.

4 DISCUSSIONS AND CONCLUSION

In this study, we attempted to make participants constantly estimate the agent’s behavioral model by having the agent itself present the continuity of the actions it performs in the task. By doing so, we aimed to help users continually adapt to changes in the agent’s behavioral model even as the surrounding situation changed. Specifically, we 1) presented the basis for the agent’s behavior based on information about successive events that occurred in the task, and 2) had the agent chat about its personality and the events in the task even when no events were occurring in the task. Using these, we examined the effects of “presentation of continuity of the agent’s behavior model (PCB)”, in which participants are made aware that the agent retains the same memories, personality, and behavioral model throughout the task, and can therefore estimate the behavioral models associated with the agent’s actions and opinions. We implemented agents that act based on this and conducted an evaluation experiment using a sheep-guiding task. For comparison, we implemented conventional agents that do not present the basis for their actions but use the same behavioral model.

The results of the experiment showed that the degree to which the participants in the CP-group paid attention to the agents was significantly higher than that in the NP-group. In addition, the number of participants who not only paid attention but also actively shared information was significantly higher in the CP-group. The number of utterances, which is considered to be an indicator of the participants’ active approach to the agent, was also significantly higher in the CP-group. These results suggest that the PCB encouraged participants to pay attention to the agents continuously and elicited a positive attitude toward the interaction with the agents.

The fact that the tendency of the participants’ behavior did not change until the end of the task suggests that the PCB contributed to maintaining the relationship between the agent and the participant even in dynamic situations. In previous studies, forced interaction and other methods were used to build and maintain an active attitude toward the agent (Ohmoto et al., 2016; Ohmoto et al., 2018), but interest in the agent decreased over time. In the present experiment, by contrast, the participants paid attention to the agent even in the latter half of the task, so we can affirm that presentation of continuity is one method for addressing the issue faced in previous work.

We examined participants’ subjective evaluations of each of the two agents using a questionnaire, but there was no significant difference between the CP-group and the NP-group. By contrast, when we calculated the correlations between the questionnaire items and the behavioral indices in each group, several items showed different trends. Correlation analysis suggested that, unlike in the NP-group, in the CP-group 1) participants’ attention to the agent’s behavior was related to their evaluation of the agent’s behavior; 2) participants’ degree of subjective contribution to the task was related to their evaluation of the agent’s behavior; and 3) participants’ own active involvement in the task and with the agents was related to their own subjective degree of contribution during the task. Taken together, these results suggest that presenting the continuity of the behavioral model made the participants aware of the relationship between their own actions and judgments during the task and the actions of the agent.


REFERENCES

Allen, J. E., Guinn, C. I., and Horvitz, E. (1999). Mixed-initiative interaction. IEEE Intelligent Systems and their Applications, 14(5):14–23.

Atkinson, D. J., Clancey, W. J., and Clark, M. H. (2014). Shared awareness, autonomy and trust in human-robot teamwork. In 2014 AAAI fall symposium series.

Cheetham, W. E. and Goebel, K. (2007). Appliance call center: A successful mixed-initiative case study. AI Magazine, 28(2):89–89.

Chen, T.-J., Subramanian, S. G., and Krishnamurthy, V. R. (2019). Mini-map: Mixed-initiative mind-mapping via contextual query expansion. In AIAA Scitech 2019 Forum, page 2347.

de Souza, P. E. U., Chanel, C. P. C., and Dehais, F. (2015). MOMDP-based target search mission taking into account the human operator’s cognitive state. In 2015 IEEE 27th international conference on tools with artificial intelligence (ICTAI), pages 729–736. IEEE.

Gianni, M., Papadakis, P., Pirri, F., and Pizzoli, M. (2011). Awareness in mixed initiative planning. In 2011 AAAI Fall Symposium Series.

Jennings, N. R., Moreau, L., Nicholson, D., Ramchurn, S., Roberts, S., Rodden, T., and Rogers, A. (2014). Human-agent collectives. Communications of the ACM, 57(12):80–88.

Kiesler, S. (2005). Fostering common ground in human-robot interaction. In ROMAN 2005. IEEE International Workshop on Robot and Human Interactive Communication, 2005., pages 729–734. IEEE.

Lyons, J. B. (2013). Being transparent about transparency: A model for human-robot interaction. In 2013 AAAI Spring Symposium Series.

Matsumoto, N., Fujii, H., Goan, M., and Okada, M. (2005). Minimal design strategy for embodied communication agents. In ROMAN 2005. IEEE International Workshop on Robot and Human Interactive Communication, 2005., pages 335–340. IEEE.

Ohmoto, Y., Takashi, S., and Nishida, T. (2016). Effect on the mental stance of an agent’s encouraging behavior in a virtual exercise game. In Cognitive 2016: The eighth international conference on advanced cognitive technologies and applications, pages 10–15.

Ohmoto, Y., Ueno, S., and Nishida, T. (2018). Effect of virtual agent’s contingent responses and icebreakers designed based on interaction training techniques on inducing intentional stance. In 2018 IEEE International Conference on Agents (ICA), pages 14–19. IEEE.

Pacaux-Lemoine, M.-P., Trentesaux, D., Rey, G. Z., and Millot, P. (2017). Designing intelligent manufacturing systems through human-machine cooperation principles: A human-centered approach. Computers & Industrial Engineering, 111:581–595.

van Wissen, A., Gal, Y., Kamphorst, B., and Dignum, M. (2012). Human–agent teamwork in dynamic environments. Computers in Human Behavior, 28(1):23–33.

ICAART 2022 - 14th International Conference on Agents and Artificial Intelligence
