A Natural Interaction Paradigm to Facilitate Cardiac Anatomy Education using Augmented Reality and a Surgical Metaphor

Dmitry Resnyansky (1, a), Nurullah Okumus (2, b), Mark Billinghurst (1, c), Emin Ibili (3, d), Tolga Ertekin (4, e), Düriye Öztürk (5, f) and Taha Erdogan (5, g)

1 School of Information Technology and Mathematical Sciences, University of South Australia, Mawson Lakes, Australia
2 Department of Child Health and Diseases, Afyonkarahisar Health Sciences University, Afyonkarahisar, Turkey
3 Department of Healthcare Management, Afyonkarahisar Health Sciences University, Afyonkarahisar, Turkey
4 Department of Anatomy, Afyonkarahisar Health Sciences University, Afyonkarahisar, Turkey
5 Department of Radiation Oncology, Afyonkarahisar Health Sciences University, Afyonkarahisar, Turkey

emin.ibili@afsu.edu.tr, tolga.ertekin@afsu.edu.tr, duriye.ozturk@afsu.edu.tr, taha.erdogan@afsu.edu.tr

a https://orcid.org/0000-0003-3897-7864
b https://orcid.org/0000-0001-6082-0818
c https://orcid.org/0000-0003-4172-6759
d https://orcid.org/0000-0002-6186-3710
e https://orcid.org/0000-0003-1756-4366
f https://orcid.org/0000-0002-3265-2797
g https://orcid.org/0000-0002-3559-8933

Resnyansky, D., Okumus, N., Billinghurst, M., Ibili, E., Ertekin, T., Öztürk, D. and Erdogan, T.
A Natural Interaction Paradigm to Facilitate Cardiac Anatomy Education using Augmented Reality and a Surgical Metaphor.
DOI: 10.5220/0010835400003124
In Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2022) - Volume 1: GRAPP, pages 204-211
ISBN: 978-989-758-555-5; ISSN: 2184-4321
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Keywords: Augmented Reality, Visualisation, Cardiac Anatomy Learning, Interactivity, HMD.

Abstract: This paper presents a design approach to creating a learning experience for cardiac anatomy by providing an interactive visualisation environment that uses Head Mounted Display (HMD)-based Augmented Reality (AR). Computed tomography (CT) imaging was used to obtain accurate model geometry, which was optimised in a 3D modelling software package and then given photo-realistic texture maps using 3D painting software. This method simplifies the process of modelling complex, organic geometry. Animation, rendering techniques, and AR capability were added using the Unity game engine. The system is designed to maximise immersion, support natural gesture interaction within a real-world learning setting, and represent complex learning content. Hand input was combined with a surgical-dissection metaphor to show cross-section rendering in AR in an intuitive manner. Lessons learned from the modelling process are discussed, as well as directions for future research.

1 INTRODUCTION

Understanding the anatomical composition of the heart is a difficult task for medical students due to the organ’s complex arrangement of individual elements and multi-chambered structures (Maresky et al., 2019). Traditional anatomy learning methods are based on 2D images, slides, and plastic models, and can be less effective due to a lack of interactivity and the difficulty of understanding 3D structure from 2D materials (Kurniawan et al., 2018; Rosni et al., 2020). Augmented Reality (AR) and Virtual Reality (VR) can enhance anatomy learning by addressing these problems and creating a more interesting, active, and flexible learning experience (Bork et al., 2019).

This paper is part of a wider educational project that aims to support effective knowledge transfer of cardiac anatomy and physiology for medical students through innovative technologies. A Head-Mounted Display (HMD)-based interactive Augmented Reality Learning Environment (ARLE) prototype is currently being developed with the aim of communicating the heart’s many inner and outer structures, as well as basic aspects of its circulatory system. It has been designed as a realistic, interactive, augmented reference medium that students can compare with textbooks, physical models, dissections, and surgical observation sessions.

The intended design goals of the prototype ARLE are: (1) improving the production pipeline of complex, organic models with 3D scan data extracted from Computed Tomography (CT); (2) increasing learner immersion when interacting with visualisations by facilitating user stereopsis with the aid of a HMD; (3) supporting natural interaction with AR visualisations without the need for marker-based tracking, mobile devices, or physical peripherals; and (4) representing information in a realistic and engaging manner with the aid of graphic and animated content.

This paper focuses on the design of the prototype system and highlights the importance of adopting a multi-layered approach to the creation of visual learning content and to supporting natural interaction (Piumsomboon et al., 2014). This work deals with a limited subset of the content needed to teach the anatomical structure of the human heart: in this case, the primary heart aorta, the aortic valve, and the aortic circulation system.

The rest of the paper is organised as follows. Section 2 outlines the relevant literature. Section 3 describes our prototype system, its associated interaction metaphors, and a brief rationale for the design decisions made. Section 4 describes an approach to developing visualisations from medical imaging data obtained from CT scans, including 3D asset creation and preparation inside a game engine for adding functionality. Finally, Section 5 concludes the paper by reiterating the aim and summarising the insights gained and future work.

2 RELATED WORK

The main advantages of using AR or VR in anatomy learning are that these technologies can help students with lower visual-spatial abilities (Bogomolova et al., 2021) and enable more active, exploratory, and embodied learning, which improves learning efficiency and memory retention (Cakmak et al., 2020).

There is significant research on virtual learning for anatomy students using different delivery modalities, such as highly immersive (stereoscopic 3D VR) versus less immersive (desktop) delivery. For instance, Kurul et al. (2020) suggest that a 3D immersive VR system can provide a useful alternative to conventional anatomy training methods. Wainman et al. (2020) tested VR and AR representations of pelvic anatomy against photographs and physical models. They found that VR and AR offered fewer advantages for learning than physical models, and that “true stereopsis is critical in learning anatomy” (p. 401). In a pilot study (Birbara et al., 2020), a skull anatomy virtual learning resource was developed using Unity and made available in different delivery modalities (more immersive and less immersive). The stereoscopic delivery was found to be more mentally demanding, and some participants reported a greater degree of physical discomfort and disorientation. Moro et al. (2021) compared the effectiveness of delivering human physiology content in AR through the HoloLens HMD and in non-AR form on a tablet. The study found no significant differences in test scores and provided evidence that both modes could be effective for learning, although some participants reported dizziness when using the HoloLens.

However, other studies suggest that anatomical learning could benefit from the various affordances of AR/VR, such as immersive interactivity and visualisation (Samosky et al., 2012; Venkatesan et al., 2021). For example, Gloy et al. (2021) developed an immersive anatomy atlas that enables virtual dissection as a way to interact with human anatomical structures. The application (using a HMD and bi-manual controllers) allows users to explore anatomy by modifying organ transparency, toggling overall visibility within a specific radius with an interactive manipulation tool, or revealing and concealing selected geometry with a cross-section capability. Students using the interactive atlas demonstrated a higher retention rate than those using traditional anatomical textbooks. Bakar et al. (2021) describe GAAR (Gross Anatomy Augmented Reality), a mobile AR learning tool. By interacting with 3D objects, video, and information while using the tool, users can experience the perception of operating on a “real” organ (p. 162).

In a review of mobile AR applications for learning biology and anatomy, Kalana et al. (2020) examine four interactive AR tools: APPLearn (Ba et al., 2018), to support learning biology in secondary schools; a Magic Book application for medical students to learn neuroanatomy (Küçük et al., 2016); an application that combines 3D modelling and AR for learning molecular biology (Safadel & White, 2019); and Human Anatomy in Mobile-Augmented Reality (HuMAR) (Jamali et al., 2015), which focuses on 3D visualisation of selected bones. Kalana et al. (2020) emphasise AR’s potential for visualising complex information and enabling active learning. They also review commercial AR applications for learning anatomy, highlighting features that enable interaction between the user and the 3D models, such as the “Pinch In-Pinch Out” gesture, and controllers for moving 3D models in four directions, rotating and scaling them, or “peeling off” the layers of an organ (pp. 582-583).

Romli and Wazir (2021) assess and compare six applications: Web based AR for Human Body Anatomy Learning (Layona et al., 2018); Human Anatomy Learning Systems Using AR on Mobile Application (Kurniawan et al., 2018); AR for the Study of Human Heart Anatomy (Kiourexidou et al., 2015); an AR application for smartphones and tablets for cardiac physiology learning (Gonzalez et al., 2020); a human heart teaching tool (Nuanmeesri, 2018); and a mobile AR application for teaching heart anatomy (Celik et al., 2020). Romli and Wazir (2021) maintain that marker-based mobile AR systems enable users to scan physical images in the real world and display 3D visualisations that can be acted upon with the mobile device.

To summarise, a major advantage of using immersive HMD-based technologies such as AR/VR to deliver anatomy-related learning content is that they enable unique interaction modalities innate to the hardware. Natural interaction is afforded more readily in the form of simulated dissection and transparency techniques. However, less immersive approaches (specifically, mobile AR with touch-based GUIs), which afford less natural interaction than a HMD, tend to be more widely used for teaching anatomy. One problem that needs to be addressed is the creation of the anatomy and the visualisation of cardiac blood circulation. For developers, simulating dynamic fluids to represent physiological processes involving human circulation is one specific challenge (Rosni et al., 2020). Such dynamic visualisation requires the physics processing capabilities of a game engine in order to avoid the expensive processing of pre-cached particle animations. The difficulty is compounded by the limitations of common game engines in rendering liquids in real time at an adequate level of realism.

3 SYSTEM DESCRIPTION

The prototype ARLE affords interaction in three dimensions for engaging with 3D visualisations. Compared to a 2D interaction approach, which uses contact-based touch and swipe gestures to interact with virtual objects, 3D interaction offers a method of natural interaction using depth-tracked hand gestures in 3D space (Mandalika et al., 2017).

The main features of the ARLE are: (1) markerless AR interaction supported by a HMD, providing six degrees of freedom (6DOF) of movement and vision for users in and around the anatomical visualisation; (2) hand-based manipulation of 3D anatomy models, allowing rotation and scaling of the cardiac system; and (3) hand-based interaction that uses a cross-section tool in 3D space to dissect anatomy.

A HMD can readily provide stereopsis to users, enabling immersive and engaging representations of visual information by displaying anatomy models in actual 3D space. In addition, the depth perception affordances of the HoloLens2 encourage natural interaction using the hands as well as the entire body by facilitating a “walkthrough” experience in which the user can walk around the AR view (Billinghurst & Henrysson, 2009). This gives learners the freedom to study the organic structure of complex organs through a “hands-on, feet-first” approach, exploring a heart model in physical space with their own hands as well as on foot.

The prototype ARLE uses interactive scale manipulation to represent visual information in a broader context. This contrasts with mobile AR techniques, where zoom controls (e.g. sliders, finger swiping) or (physical) device proximity allow users to observe detailed information for a specific object, but seeing “the bigger picture” becomes a challenge. Such techniques thus prioritise close-up magnification of an individual object over the ability to view and understand its global relationship to its neighbours. Object rotation can be achieved through a similar 3D interaction approach. Users can expand and contract the virtual representation by manipulating anchor points on a bounding box that surrounds the heart model. A surgical dissection tool metaphor is included in the natural interaction paradigm by enabling the user to “dissect” models in 3D space with a hand-manipulated virtual tool.
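As an illustration of bounding-box scaling, the sketch below computes a uniform scale factor from the drag of a single corner anchor point. It assumes, as is common but not stated in the paper, that scaling is uniform and pivots about the box centre; the function name and the clamping limits are hypothetical and do not correspond to a HoloLens2, MRTK, or Unity API.

```python
import math
from typing import Sequence

# Hypothetical helper (not a HoloLens2/MRTK/Unity API): uniform scaling driven by
# dragging one bounding-box corner, pivoting about the box centre (assumption).
def uniform_scale_from_corner_drag(center: Sequence[float],
                                   corner_start: Sequence[float],
                                   corner_now: Sequence[float],
                                   current_scale: float,
                                   min_scale: float = 0.1,
                                   max_scale: float = 10.0) -> float:
    """Return the new uniform scale after a corner handle is dragged."""
    d_start = math.dist(center, corner_start)  # grab distance from the pivot
    d_now = math.dist(center, corner_now)      # current distance from the pivot
    if d_start == 0.0:
        return current_scale  # degenerate grab at the pivot: leave scale unchanged
    factor = d_now / d_start
    # Clamp so the model can neither vanish nor outgrow the room (limits are illustrative).
    return max(min_scale, min(max_scale, current_scale * factor))
```

Dragging a corner from one to two units away from the centre doubles the model's scale, while dragging it halfway in halves it. Scaling about the centre keeps the heart in place while the learner resizes it, which suits the goal of relating the whole organ to its surroundings.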

4 DESIGN: APPROACH AND OBSERVATIONS

4.1 Equipment and Interaction

The application uses a Microsoft HoloLens2 see-through AR HMD that provides stereo viewing of virtual content, inside-out head tracking, and support for natural two-handed gesture input. The HMD enables viewing of virtual anatomy on top of the user’s physical environment. A 3D model of the primary heart aorta was chosen as the basis of the visualisation, along with an aortic valve animation and an animated blood flow simulation. Users can interact with the AR component through gesture-based input, which supports exploration of the anatomical model through scale and rotation controls.

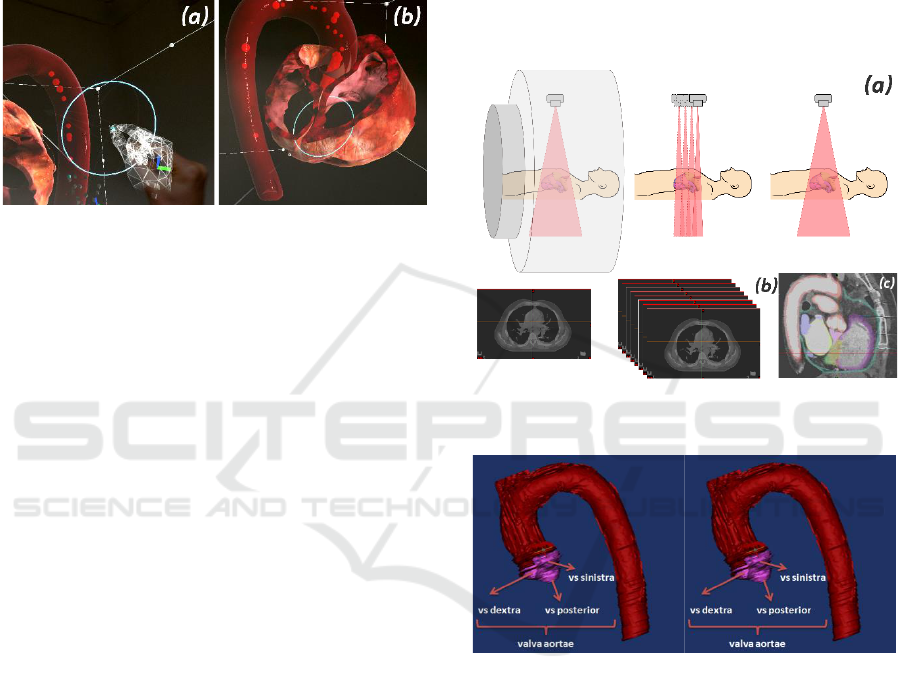

Fig. 1 shows the user-manipulated AR articulated hand controller and the gesture-based cross-section tool that reveals the inner structure of the aorta.

Figure 1: Articulated hand controller operated by the user’s hand input for operating the cross-sectioning tool (a), and the cross-section tool in a passive state of dissection over the left and right atria (b).

4.2 Production Process

The amended development process of the prototype ARLE comprises the following stages:

1. Scanning: image extraction; image layer splicing; creation of 3D point cloud data sets; and conversion of scan data to 3D geometry.

2. Modelling: conversion of individual polygon arrays to a unified 3D mesh inside a 3D modelling package; cleaning of stray polygons and vertices resulting from data point scatter; mesh repair of missing faces and topology; model optimisation and export to the game engine; additional modelling and export of aortic valve anatomy to the game engine.

3. Texturing: creation of reference textures in a 2D image editor; painting of texture maps for each organ model in 3D painting software; export of maps as Unity-compatible textures.

4. Animation: animation of the aortic valve in a 3D modelling package; path construction and particle simulation in a game engine for aorta blood flow visualisation.

5. Rendering: setup of composite materials using painted textures and a cross-section shader.

6. Interaction: reconstruction of the heart structure using imported anatomical models; addition of hand-based scale/angle manipulation; creation of a dissection tool with hand interaction.

4.2.1 Modelling, Animation & Particle Simulation

Information about a patient’s anatomy can be transferred to digital media with medical imaging techniques. The most commonly used imaging methods today are Computed Tomography (CT) and Magnetic Resonance Imaging (MRI) (Eichinger et al., 2010). CT is a radiological method that uses X-rays to create a cross-sectional image of the examined area of the body (Fig. 2(a)).

Figure 2: Obtaining a cross-sectional image from CT scan data in MIMICS.

Figure 3: Anatomical structures of the aorta resulting from the 3D scan.

After the cross-sectional images were obtained (Fig. 2(a, b)), contouring of all anatomical structures of the aorta began on transverse sections (Fig. 2(c)). These cross-sectional images were then modelled in the MIMICS image processing software for 3D design and modelling (Fig. 3). Modelling here means converting anatomical structures into three-dimensional form using CT scan data. This procedure requires substantial anatomical knowledge, because the image obtained can be quite complex due to low resolution and patient variation. The geometric mesh surfaces of the primary heart aorta and additional structures were built by converting medical scan data from a CT image to AutoCAD DXF format for import into a 3D modelling and animation package.
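The contouring step can be illustrated with intensity thresholding, one common way of starting a CT segmentation: pixels whose Hounsfield Unit (HU) value falls inside a chosen window are marked as part of a structure, and the per-slice masks are stacked into a volume. This is a simplified sketch, not the MIMICS workflow used in the paper; the HU window, the voxel spacing, and all function names are illustrative assumptions, and in practice manual contour editing guided by anatomical knowledge is still required.

```python
from typing import List, Tuple

Slice = List[List[int]]    # one CT slice as rows of Hounsfield Unit (HU) values
Mask = List[List[bool]]    # True where a pixel is assigned to the structure

def threshold_slice(hu_slice: Slice, lower: int, upper: int) -> Mask:
    """Mark pixels whose HU value lies in [lower, upper] as belonging to the structure."""
    return [[lower <= v <= upper for v in row] for row in hu_slice]

def build_mask_volume(slices: List[Slice], lower: int, upper: int) -> List[Mask]:
    """Threshold each transverse slice and stack the masks along z, indexed [z][y][x]."""
    return [threshold_slice(s, lower, upper) for s in slices]

def voxel_volume_mm3(volume: List[Mask],
                     spacing_mm: Tuple[float, float, float] = (1.0, 1.0, 1.0)) -> float:
    """Approximate the segmented volume from the voxel count and (x, y, z) spacing in mm."""
    count = sum(v for sl in volume for row in sl for v in row)  # True counts as 1
    sx, sy, sz = spacing_mm
    return count * sx * sy * sz
```

For example, with an illustrative window of 200 to 600 HU, `build_mask_volume(slices, 200, 600)` returns one boolean mask per slice. A surface-extraction step (not shown) would then turn the stacked mask into the polygon data that is exported for modelling.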

AutoDesk 3DStudio Max was used to edit

individual imported DXF polygon object arrays and

carry out a conversion, retopology, and mesh repair

process for each organ structure. Models were then

imported into Unity, where four splines were

constructed and positioned inside the aorta to serve as

paths for the blood flow simulation. This method

allowed for additional control over the paths of each

particle simulation by parenting each particle array to

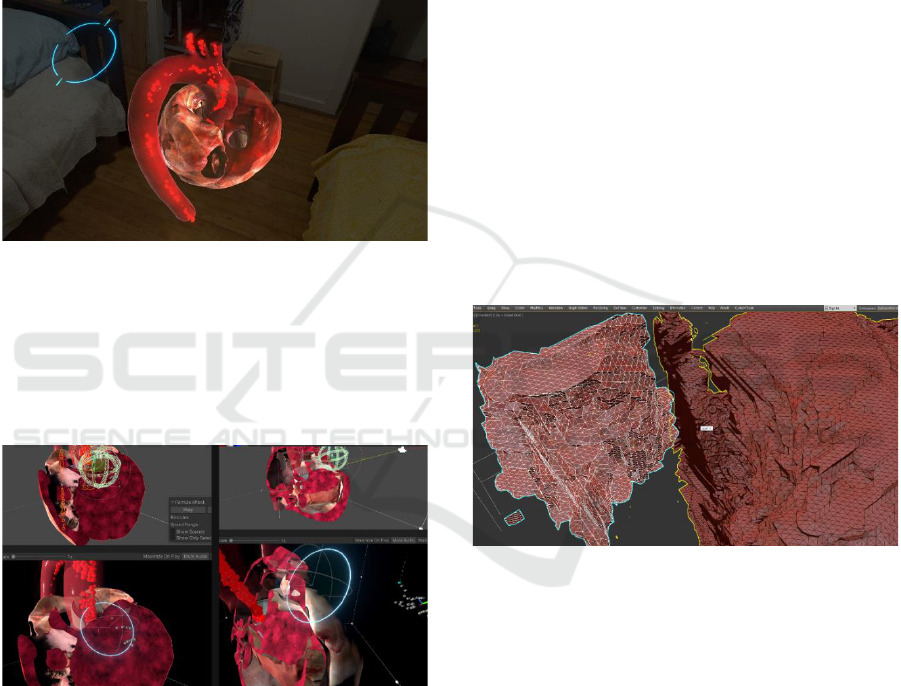

the splines (Fig. 4).
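The idea of steering particles along splines can be sketched as follows. Each particle stores a path parameter u in [0, 1) that advances over time and is mapped to a position on a Catmull-Rom curve through control points placed inside the aorta. Catmull-Rom interpolation is an assumption chosen for illustration; the paper does not state which spline type or Unity component was used, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

def catmull_rom(p0: Vec3, p1: Vec3, p2: Vec3, p3: Vec3, t: float) -> Vec3:
    """Uniform Catmull-Rom point between p1 (t=0) and p2 (t=1)."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * (2 * b + (-a + c) * t + (2 * a - 5 * b + 4 * c - d) * t2
               + (-a + 3 * b - 3 * c + d) * t3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )

def point_on_path(ctrl: Sequence[Vec3], u: float) -> Vec3:
    """Map u in [0, 1) to a point on an open Catmull-Rom path through ctrl (>= 2 points)."""
    n_seg = len(ctrl) - 1
    u = u % 1.0                          # wrap so particles loop back to the start
    s = min(int(u * n_seg), n_seg - 1)   # index of the segment containing u
    t = u * n_seg - s                    # local parameter within that segment
    p0 = ctrl[max(s - 1, 0)]             # reuse endpoints where a neighbour is missing
    p3 = ctrl[min(s + 2, n_seg)]
    return catmull_rom(p0, ctrl[s], ctrl[s + 1], p3, t)

def advance_particles(params: List[float], speed: float, dt: float) -> List[float]:
    """Move each particle's path parameter forward by speed * dt, wrapping at 1."""
    return [(u + speed * dt) % 1.0 for u in params]
```

Giving each particle a different starting parameter spreads the flow along the vessel, and using one path per spline mirrors the paper's use of four separate splines to direct the blood flow.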

Figure 4: Reconstructed particle animation in Unity’s physics engine with newly constructed splines for directing blood flow.

Figure 5: Animation process of the aortic valve using Object-Space Modifiers in 3DS Max, and the final looped animation sequence in Unity.

A new aortic valve was modelled and animated in 3DS Max (Fig. 5). A cloth modifier was applied to the valve model to allow faster simulation of its symmetrical geometry, making it possible to animate the valve without a bone-skin-Inverse Kinematics (IK) system. However, export issues arose when converting the valve animation to Unity-compatible formats, none of which presently support Object-Space Modifiers.

After experimenting with several vertex animation-baking approaches, a solution was found in a third-party mesh baker plug-in that was able to pre-cache the animation as an OBJ mesh sequence. Combined with an additional third-party plug-in to import the baked data into Unity, this reproduced the cloth simulation correctly. The animation process was finalised by looping the sequence in Unity’s Mecanim and then parenting the new valve to the aorta model, allowing the valve to transition in together with the aorta and blood flow particle animations. Unity’s particle physics engine was used to provide a more accurate representation of blood flow by using particle collision in conjunction with the pre-cached valve animation converted to a rigid body (Fig. 6).
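Looping a pre-cached mesh sequence amounts to mapping elapsed time to a wrapped frame index. The sketch below shows that computation with linear blending between neighbouring baked frames. It assumes a non-empty sequence in which every frame has the same vertex count and ordering (true when the baked mesh keeps its topology); it is not the API of the third-party plug-ins or of Mecanim, and the names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Frame = Sequence[Vec3]  # one baked frame: vertex positions in a fixed order

def looped_frame_pose(frames: Sequence[Frame], fps: float, time_s: float) -> List[Vec3]:
    """Vertex positions at time_s for a looping baked sequence, blending adjacent frames."""
    n = len(frames)
    f = (time_s * fps) % n          # fractional frame index, wrapped so playback loops
    i = int(math.floor(f))
    j = (i + 1) % n                 # the last frame blends back into the first
    w = f - i                       # blend weight towards frame j
    return [
        tuple(a + (b - a) * w for a, b in zip(va, vb))
        for va, vb in zip(frames[i], frames[j])
    ]
```

Blending the last frame back into the first hides the seam of the loop; if the baked cycle already ends on a pose identical to its first frame, that duplicate frame can be dropped so it is not shown twice.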

Figure 6: Unity’s physics engine was used to provide accurate particle simulation for blood flow animation.

4.2.2 Texture Mapping and Rendering Process

The challenges of manually unwrapping, stitching, and painting textures on complex geometry were overcome with the aid of the Substance Painter software package. It enabled instantaneous, automated UV unwrapping of geometry, including automatic stitching of individual UV maps into a single pelt map. The process was further simplified by using the same virtual map in 3D space for direct-to-surface painting.

Figure 7: Early UV mapping process in 3DS Max, compared to texture map creation in Substance Painter.

This was achieved by baking the mesh geometry into textures, which allowed automatic alignment of painted sections and a fully rendered view of the final texture at all times. This approach was used to dynamically paint and edit custom texture channels on top of a 3D object and then export them as 2D maps, reversing the traditional workflow of moving from 2D to 3D and back to 2D across separate software packages (Fig. 7).

Most traditional workflows do not allow dynamic evaluation of the final result during map editing, and instead rely on the user’s imagination, high-end, expensive 2D graphics packages, and third-party tools to produce texture channels. A variety of customised brushes and textures were created by referencing open-source anatomical material in the form of photographs and realistic CGI renders.

Figure 8: Texture maps created in Substance Painter as viewed on HoloLens2 in a physical environment.

Once the texture sets for each organ structure were imported into Unity, a collection of third-party cross-section shaders with specular shading and normal-map rendering support was configured to render the organic material properties of the structures (Fig. 8).

Figure 9: Planar cross-section gizmo configured with compatible shaders and Substance Painter textures applied to anatomy in Unity.

Finally, the cross-section manipulation tool was created in Unity using third-party cross-section shaders and a planar gizmo, following a natural surgical-dissection metaphor inside the AR environment. A simple visual cue denotes the tool’s position and facing direction to aid usability. Gesture input was configured within the tool to give users dynamic control over the dissection process (Fig. 9).
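The core of a planar cross-section shader is a signed-distance test against the cutting plane: anything on the side the plane normal points to is discarded, revealing the interior. The sketch below applies the same test to points on the CPU, with the plane taken from the virtual tool's position and facing direction. It illustrates the general technique rather than the code of the third-party shaders used here (which would typically perform the test per fragment, for example with an HLSL `clip` call); the names are hypothetical.

```python
from typing import Iterable, List, Tuple

Vec3 = Tuple[float, float, float]

def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))

def sub(a: Vec3, b: Vec3) -> Vec3:
    return tuple(x - y for x, y in zip(a, b))

def is_clipped(point: Vec3, plane_point: Vec3, plane_normal: Vec3) -> bool:
    """True if point lies strictly on the side the normal points to (cut away)."""
    return dot(sub(point, plane_point), plane_normal) > 0.0

def visible_points(points: Iterable[Vec3], tool_pos: Vec3, tool_forward: Vec3) -> List[Vec3]:
    """Keep only points on or behind the cutting plane defined by the hand-held tool."""
    return [p for p in points if not is_clipped(p, tool_pos, tool_forward)]
```

Because the plane is rebuilt from the tool pose every frame, moving or turning the hand immediately changes which part of the aorta is cut away, which is what gives the dissection metaphor its direct feel.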

4.3 Observations on Technical Practice and Workflow

One of the first challenges was the accurate conversion of CT imaging data into three-dimensional data for the visualisation assets. To accurately model the heart aorta, its chambers, and its associated anatomical structures, it was necessary to extract image slices from the CT data and then combine the slices into a 3D AutoCAD object suitable for modelling software. The scatter of physical data points resulting from the scanning process created a challenge during the modelling stage and required flexible methods for optimising model geometry for texturing and animation.

Artefacts in the initial scan data shifted the workload to optimising the complex organic objects in a modelling software package. Because converting individual polygons into a unified model follows the principle of “garbage in, garbage out”, the initial CT scan data must be captured with high enough precision to record as much of the physical geometry of fine and complex organ structures as possible and to avoid missing geometry during the conversion (Fig. 10).
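One generic way of removing the stray polygons left by data point scatter is to split the mesh into connected components and discard components below a size threshold. The sketch below does this with a union-find structure over triangle vertex indices. It assumes coincident vertices have already been welded (otherwise every triangle looks disconnected), and the threshold and function names are illustrative; the paper performed clean-up and mesh repair in 3DS Max.

```python
from collections import Counter
from typing import List, Sequence, Tuple

Tri = Tuple[int, int, int]  # triangle as three vertex indices

def _find(parent: List[int], x: int) -> int:
    """Return the representative of x's set, halving the path as it goes."""
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x

def remove_small_components(tris: Sequence[Tri], n_vertices: int,
                            min_faces: int) -> List[Tri]:
    """Drop connected pieces (triangles sharing vertices) with fewer than min_faces faces."""
    parent = list(range(n_vertices))
    for a, b, c in tris:                     # join the three vertices of each face
        for u, v in ((a, b), (b, c)):
            ru, rv = _find(parent, u), _find(parent, v)
            if ru != rv:
                parent[ru] = rv
    comp_of = [_find(parent, t[0]) for t in tris]  # component label for each face
    sizes = Counter(comp_of)
    return [t for t, c in zip(tris, comp_of) if sizes[c] >= min_faces]
```

A threshold that is too high would also delete genuine small structures, so in practice the result still needs visual inspection; the filter only removes isolated debris and does not fill the missing geometry visible in Fig. 10.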

Figure 10: 3D point cloud data during the polygon unification process, depicting multiple stray artefacts and missing geometry.

Even relatively simple and optimised geometry still required lengthy editing of UV coordinate maps for texturing complex organic structures. This was done using the Adobe Substance Painter 3D painting application, whose procedurally generated texturing and automatic UV mapping dramatically decreased the time and effort spent creating realistic anatomy assets.

In contrast to the traditional method of exporting visualisations from a 3D modelling and animation package, the full range of rendering techniques provided by Unity affords richer experiences that support active learning and the AR medium, for example by using shaders to reveal and conceal the interior contents of anatomical structures.


Particle simulation for visualising dynamic processes such as blood flow in an aorta should follow a workflow that prioritises the game engine used for building the ARLE rather than 3D animation software. Although 3DS Max can produce realistic liquids and easily synchronise blood pump sequences with other animated organs, export compatibility issues and severe memory loads, both for exported particle animation caches and for Unity’s rendering engine, make cross-package workflows a challenge. Game engines offer faster computation and rendering of particles, as well as versatility in controlling animation. Physics-based animation is achieved easily with minimal memory overhead, allowing particles to correspond accurately to imported model geometry and animation.
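The kind of physics-based particle update a game engine performs each frame can be sketched as a semi-implicit Euler step with collision response against a plane, which stands in here for a collider surface such as the valve or vessel wall. This is a simplified, hypothetical illustration of the general technique, not Unity's particle system implementation; the restitution value, the single-plane collider, and the names are assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class Particle:
    pos: Vec3
    vel: Vec3

def step(particles: List[Particle], dt: float, gravity: Vec3,
         plane_point: Vec3, plane_normal: Vec3, restitution: float = 0.5) -> None:
    """Advance particles by dt and bounce them off a plane.

    plane_normal must be unit length and point towards the side particles may occupy.
    """
    for p in particles:
        vel = tuple(v + g * dt for v, g in zip(p.vel, gravity))  # velocity first...
        pos = tuple(x + v * dt for x, v in zip(p.pos, vel))      # ...then position
        dist = sum((x - q) * n for x, q, n in zip(pos, plane_point, plane_normal))
        if dist < 0.0:
            # Penetrated the plane: project back onto it ...
            pos = tuple(x - dist * n for x, n in zip(pos, plane_normal))
            vn = sum(v * n for v, n in zip(vel, plane_normal))
            if vn < 0.0:
                # ... and reflect the inward normal velocity, scaled by restitution.
                vel = tuple(v - (1.0 + restitution) * vn * n
                            for v, n in zip(vel, plane_normal))
        p.pos, p.vel = pos, vel
```

Because each step only touches the current positions and velocities, memory use stays constant regardless of sequence length, unlike a pre-cached particle animation whose size grows with every exported frame.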

5 CONCLUSION AND FUTURE WORK

This paper has outlined techniques for creating a photo-realistic cardiac anatomy and physiology visualisation in an AR HMD. This approach enables the learner to observe anatomical content in a real-world setting while avoiding hands-on interaction with the environment.

The development of the prototype ARLE contributes to AR design practice, particularly to design and development pipelines that focus on HMD-supported AR visualisation. A range of technical challenges related to data visualisation, 3D asset creation, and AR environment design were identified and addressed. These lead to some general conclusions: (1) an AR environment that uses HMDs to present anatomical visualisations can support immersion and improved usability because models are presented in three dimensions; (2) anatomical visualisations viewed in an AR HMD, particularly those involving compound organ structures of complex shapes and configurations, may benefit from a dissection metaphor; and (3) a walkthrough modality for viewing anatomy visualisations could further shift cognitive load away from manual manipulation through hand interaction by allowing users to physically move around the heart model and interactively study its structure.

These conclusions suggest grounds for evaluation using metrics such as users’ dissection frequency and position during interaction, or the frequency of physical traversal within the AR space.
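Two of these metrics could be computed from logged interaction data, as sketched below: physical traversal as the total path length of sampled head positions, and dissection frequency as logged tool events per minute. The log format, the jitter threshold, and the function names are hypothetical; the paper does not specify how such data would be recorded.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]

def path_length(head_positions: Sequence[Vec3], min_step: float = 0.0) -> float:
    """Total distance travelled, summing steps between consecutive head samples (metres)."""
    total = 0.0
    for a, b in zip(head_positions, head_positions[1:]):
        d = math.dist(a, b)
        if d >= min_step:  # optional filter so tracking jitter is not counted as walking
            total += d
    return total

def events_per_minute(event_times_s: Sequence[float], session_s: float) -> float:
    """Rate of logged dissection events (e.g. cutting-tool activations) per minute."""
    if session_s <= 0.0:
        return 0.0
    return len(event_times_s) / (session_s / 60.0)
```

Comparing these values across learners, or against test scores, would be one way of relating walkthrough and dissection behaviour to learning outcomes in the planned user studies.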

Future plans for the system include more precise extraction of CT scans. This should address the problem of missing geometry in complex and thin sections of organic structures caused by limited scanning resolution. Once such data are obtained, the structures will be remodelled and retextured, creating a more expansive AR representation of the entire heart anatomy. Similarly, the final version of the ARLE will include improvements to animated physiological processes so that the heart circulation can be represented with greater accuracy and realism.

Finally, an evaluation will be conducted of the educational impact of the prototype ARLE on cardiac anatomy knowledge acquisition and learning experience. Once development and a study design are complete, the project will progress to empirical user studies involving selected medical student cohorts in order to observe interaction behaviour and learning efficacy while using the ARLE. There are also plans to develop AI-based evaluation methods, including modelling patient-specific heart structure from CT images with AI algorithms, detecting possible diseases from patients’ Electrocardiogram (ECG) data, and animating both the heartbeat rhythm and diseases on the 3D model to support decision making and education.

ACKNOWLEDGEMENTS

This project was supported by a grant from the Afyonkarahisar Health Sciences University Scientific Research Projects Coordinatorship (Project No: 19.TEMATIK.001).

REFERENCES

Ba, R. K. T. A., Cai, Y., and Guan, Y. (2018, December). Augmented reality simulation of cardiac circulation using APPLearn (heart). In 2018 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), pp. 241-243.

Bakar, W. A. W. A., Solehan, M. A., Man, M., and Sabri, I. A. A. (2021). GAAR: Gross Anatomy using Augmented Reality Mobile Application. International Journal of Advanced Computer Science and Applications, 12(5), 162-168.

Billinghurst, M., and Henrysson, A. (2009). Mobile architectural augmented reality. In X. Wang and M. A. Schnabel (Eds.), Mixed reality in architecture, design and construction (pp. 93-104). Springer.

Birbara, N. S., Sammut, C., and Pather, N. (2020). Virtual reality in anatomy: a pilot study evaluating different delivery modalities. Anatomical Sciences Education, 13(4), 445-457.


Bogomolova, K., Hierck, B. P., Looijen, A. E., Pilon, J. N., Putter, H., Wainman, B., and van der Hage, J. A. (2021). Stereoscopic three-dimensional visualisation technology in anatomy learning: A meta-analysis. Medical Education, 55(3), 317-327.

Bork, F., Stratmann, L., Enssle, S., Eck, U., Navab, N., Waschke, J., and Kugelmann, D. (2019). The benefits of an augmented reality magic mirror system for integrated radiology teaching in gross anatomy. Anatomical Sciences Education, 12(6), 585-598.

Cakmak, Y. O., Daniel, B. K., Hammer, N., Yilmaz, O., Irmak, E. C., and Khwaounjoo, P. (2020). The human muscular arm avatar as an interactive visualization tool in learning anatomy: Medical students’ perspectives. IEEE Transactions on Learning Technologies, 13(3), 593-603.

Celik, C., Guven, G., and Cakir, N. K. (2020). Integration of mobile augmented reality (MAR) applications into biology laboratory: Anatomic structure of the heart. Research in Learning Technology, 28.

Eichinger, M., Heussel, C. P., Kauczor, H. U., Tiddens, H., and Puderbach, M. (2010). Computed tomography and magnetic resonance imaging in cystic fibrosis lung disease. Journal of Magnetic Resonance Imaging, 32(6), 1370-1378.

Gloy, K., Weyhe, P., Nerenz, E., Kaluschke, M., Uslar, V., Zachmann, G., and Weyhe, D. (2021). Immersive Anatomy Atlas: Learning Factual Medical Knowledge in a Virtual Reality Environment. Anatomical Sciences Education.

Gonzalez, A. A., Lizana, P. A., Pino, S., Miller, B. G., and Merino, C. (2020). Augmented reality-based learning for the comprehension of cardiac physiology in undergraduate biomedical students. Advances in Physiology Education, 44(3), 314-322.

Jamali, S. S., Shiratuddin, M. F., Wong, K. W., and Oskam, C. L. (2015). Utilising mobile-augmented reality for learning human anatomy. Procedia - Social and Behavioral Sciences, 197, 659-668.

Kalana, M. H. A., Junaini, S. N., and Fauzi, A. H. (2020). Mobile augmented reality for biology learning: Review and design recommendations. Journal of Critical Reviews, 7(12), 579-585.

Kiourexidou, M., Natsis, K., Bamidis, P., Antonopoulos, N., Papathanasiou, E., Sgantzos, M., and Veglis, A. (2015). Augmented reality for the study of human heart anatomy. International Journal of Electronics Communication and Computer Engineering, 6(6), 658-663.

Küçük, S., Kapakin, S., and Göktaş, Y. (2016). Learning anatomy via mobile augmented reality: Effects on achievement and cognitive load. Anatomical Sciences Education, 9(5), 411-421.

Kurniawan, M. H., Suharjito, D., and Witjaksono, G. (2018). Human anatomy learning systems using augmented reality on mobile application. Procedia Computer Science, 135, 80-88.

Kurul, R., Ögün, M. N., Neriman Narin, A., Avci, Ş., and Yazgan, B. (2020). An alternative method for anatomy training: Immersive virtual reality. Anatomical Sciences Education, 13(5), 648-656.

Layona, R., Yulianto, B., and Tunardi, Y. (2018). Web based augmented reality for human body anatomy learning. Procedia Computer Science, 135, 457-464.

Mandalika, V. B. H., Chernoglazov, A. I., Billinghurst, M., Bartneck, C., Hurrell, M. A., Ruiter, N. de, Butler, A. P. H., and Butler, P. H. (2017). A hybrid 2D/3D user interface for radiological diagnosis. Journal of Digital Imaging, 31(1), 56-73.

Maresky, H. S., Oikonomou, A., Ali, I., Ditkofsky, N., Pakkal, M., and Ballyk, B. (2019). Virtual reality and cardiac anatomy: Exploring immersive three-dimensional cardiac imaging, a pilot study in undergraduate medical anatomy education. Clinical Anatomy, 32(2), 238-243.

Moro, C., Phelps, C., Redmond, P., and Stromberga, Z. (2021). HoloLens and mobile augmented reality in medical and health science education: A randomised controlled trial. British Journal of Educational Technology, 52(2), 680-694.

Nuanmeesri, S. (2018). The Augmented Reality for Teaching Thai Students about the Human Heart. International Journal of Emerging Technologies in Learning, 13(6), 203-213.

Piumsomboon, T., Altimira, D., Kim, H., Clark, A., Lee, G., and Billinghurst, M. (2014, September). Grasp-Shell vs gesture-speech: A comparison of direct and indirect natural interaction techniques in augmented reality. In 2014 IEEE International Symposium on Mixed and Augmented Reality (ISMAR) (pp. 73-82). IEEE.

Romli, R. and Wazir, F. N. H. B. M. (2021, July). AR Heart: A Development of Healthcare Informative Application using Augmented Reality. Journal of Physics: Conference Series, 1962(1), 012065.

Rosni, N. S., Kadir, Z. A., Mohamed Noor, M. N. M., Abdul Rahman, Z. H., and Bakar, N. A. (2020). Development of mobile markerless augmented reality for cardiovascular system in anatomy and physiology courses in physiotherapy education. In 14th International Conference on Ubiquitous Information Management and Communication (IMCOM), 2020, pp. 1-5, doi: 10.1109/IMCOM48794.2020.9001692.

Safadel, P. and White, D. (2019). Facilitating molecular biology teaching by using Augmented Reality (AR) and Protein Data Bank (PDB). TechTrends, 63(2), 188-193.

Samosky, J. T., Nelson, D. A., Wang, B., Bregman, R., Hosmer, A., Mikulis, B., and Weaver, R. (2012, February). BodyExplorerAR: enhancing a mannequin medical simulator with sensing and projective augmented reality for exploring dynamic anatomy and physiology. In Proceedings of the Sixth International Conference on Tangible, Embedded and Embodied Interaction, pp. 263-270.

Venkatesan, M., Mohan, H., Ryan, J. R., Schürch, C. M., Nolan, G. P., Frakes, D. H., and Coskun, A. F. (2021). Virtual and augmented reality for biomedical applications. Cell Reports Medicine, 2(7), 100348.

Wainman, B., Pukas, G., Wolak, L., Mohanraj, S., Lamb, J., and Norman, G. R. (2020). The critical role of stereopsis in virtual and mixed reality learning environments. Anatomical Sciences Education, 13(3), 401-412.
