Near and Far Interaction for Augmented Reality Tree Visualization Outdoors

Gergana Lilligreen (a), Nico Henkel and Alexander Wiebel (b)

UX-Vis, Hochschule Worms University of Applied Sciences, Erenburgerstr. 19, 67549 Worms, Germany

Keywords: Augmented Reality, Interaction, Mixed Reality, 3D Interaction, Nature, Outdoor.

Abstract:

Augmented reality is rarely implemented in nature or in an environmental education context. Furthermore, augmented reality is often used mainly for visualization, which puts users in a rather passive state. In this paper, we investigate how near and far interaction using a head-mounted display can be combined with visualization on a tree outdoors, and which of the interaction techniques are suitable for future use in an environmental education application. We examine interaction with virtual leaves on the ground combined with a virtual-real interaction with a real tree. Parameters such as the type of interaction, different real surroundings and task completion time, as well as the interplay and connection between them, are investigated and discussed. Additionally, visualizations of natural processes, such as clouds and rain and the growth of tree roots, are included in the augmented reality modules and evaluated in user tests followed by a questionnaire. The results indicate that both near and far interaction can be beneficial for a future educational application.

1 INTRODUCTION

Environmentally responsible behavior and its development depend on many different factors. Ernst and Theimer, for example, mention in their literature review the interplay of knowledge about the natural environment and outdoor experiences (Ernst and Theimer, 2011). With augmented reality (AR), the environment can be enriched with additional information, visualizations and interaction possibilities. Azuma defines three conditions for AR: the real environment must be combined with virtual objects, the system must be interactive in real time, and the virtual objects must be registered in 3D (Azuma, 1997). Augmented reality is often used in education, but its implementation outdoors, in nature and in an environmental education context is uncommon (Rambach et al., 2021), and more research in this area is still needed. Furthermore, augmented reality is widely used mainly for visualization, which puts users in a rather passive state.

For a more interactive setting, we examine the different interaction possibilities of a head-mounted display (HMD) in a use case scenario outdoors in nature. We discuss the advantages and disadvantages of near and far interaction techniques with regard to the special outdoor setting. The discussion is based on results we obtained from specially designed user tests with an educational background.

(a) https://orcid.org/0000-0001-7281-7069

(b) https://orcid.org/0000-0002-6583-3092

The developed AR modules can be adapted and integrated into different educational scenarios, for example in green school programs on topics such as forest knowledge, “creatively experiencing the forest”, soil, and the connection between weather, climate and trees. The modules can also be used during guided hikes, at special stations in the forest where environmental AR games are offered, or during action days featuring innovative forms of environmental education.

Our augmented reality modules (which Microsoft also calls mixed reality (Microsoft, 2021a)) run on a HoloLens 2. In particular, we compare task performance with near versus far interaction in a tidy, even-leveled environment versus a more natural one.

2 RELATED WORK

Prior research compares interaction techniques in AR and shows their value in different use cases. Nizam et al. present an empirical study of key aspects and issues in multimodal interaction in augmented reality (Nizam et al., 2018). They found that the modality receiving the most attention is gesture interaction. In another paper, Chen et al. explore combining two input modalities, gesture and speech, to enhance the user experience in AR. In their application, interaction with a virtual dog is realized using a predefined marker, a Leap Motion controller for gestures and the Google Cloud Speech API for speech interaction (Chen et al., 2017). Their results show that both gesture and speech are effective interaction modalities for performing simple tasks in AR.

Lilligreen, G., Henkel, N. and Wiebel, A.
Near and Far Interaction for Augmented Reality Tree Visualization Outdoors.
DOI: 10.5220/0010785700003124
In Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2022) - Volume 2: HUCAPP, pages 27-35
ISBN: 978-989-758-555-5; ISSN: 2184-4321
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Some works compare direct and indirect interaction, for example direct manipulation versus gesture combined with speech (Piumsomboon et al., 2014), or direct interaction versus interaction using a controller (Lin et al., 2019). Other works evaluate two-handed gesture interaction with a focus on rotation and scaling (Chaconas and Höllerer, 2018). In contrast, our work compares near and far interaction for selection and translation tasks in different environments in nature.

Whitlock et al. discuss the efficacy and usability of AR interactions depending on the distance to the virtual objects (Whitlock et al., 2018). They combined an AR HMD (Microsoft HoloLens 1) with a Nintendo Wiimote as an additional handheld input device and concluded that embodied freehand interaction was the users’ strongly preferred choice. They explored distances from 8 to 16 feet (2.44 m to 4.88 m), all of which can be considered far interaction; the near interaction that we also examine is not included.

Recent work (Williams et al., 2020) focused on understanding how users naturally manipulate virtual objects with different interaction modalities in augmented reality. These manipulations consist of translation, rotation, scaling and abstract intentions such as create, destroy and select. A Magic Leap One optical see-through AR HMD was used in this experiment. They found that direct manipulation techniques are more natural for translating virtual objects. This is also the type of interaction that we investigate.

Others compare different interaction metaphors (e.g., Worlds-in-Miniature) that can also be seen as near and far interaction (see, e.g., Kang et al., 2020). Some previous work has even attempted to fuse near and far interaction into a single interaction metaphor (Poupyrev et al., 1996). However, none of this previous work considered an outdoor environment in nature.

Recently, we conducted an outdoor study (Lilligreen et al., 2021) on rendering options and depth impression for an AR visualization in a nature context, but unlike the present work, it did not focus on interaction.

3 AR APPLICATION DESIGN AND IMPLEMENTATION

In this section, we give an overview of our AR application, the implemented interaction techniques and the visualizations developed for outdoor use on a tree in nature. A user-centered design approach (Gabbard et al., 1999) was applied throughout the development of the educational AR modules. After meetings and interviews with experts in environmental education and augmented reality, personas and user task scenarios were developed and later refined over the development cycles. The main target groups for the AR modules are:

• experts in environmental education who want to use innovative solutions for nature and the environment

• people interested in nature

• “digital natives” who prefer learning with new technologies

Depending on the target group, the AR application can bring different benefits. People interested in nature can learn in a new way and, e.g., explore hidden things in the forest. People interested in technology, on the other hand, can be motivated to learn more about nature. First user tests (Lilligreen et al., 2021) informed the underground AR visualization that is part of the module “Growing Roots” (see Section 3.2). User tests regarding interaction in outdoor scenarios are discussed in the remainder of this paper.

3.1 Interactive AR Modules Outdoors

As we want to investigate how people interact in an outdoor scenario, we developed interactive AR components centered on an example tree. These components are described below.

3.1.1 Touchable Tree

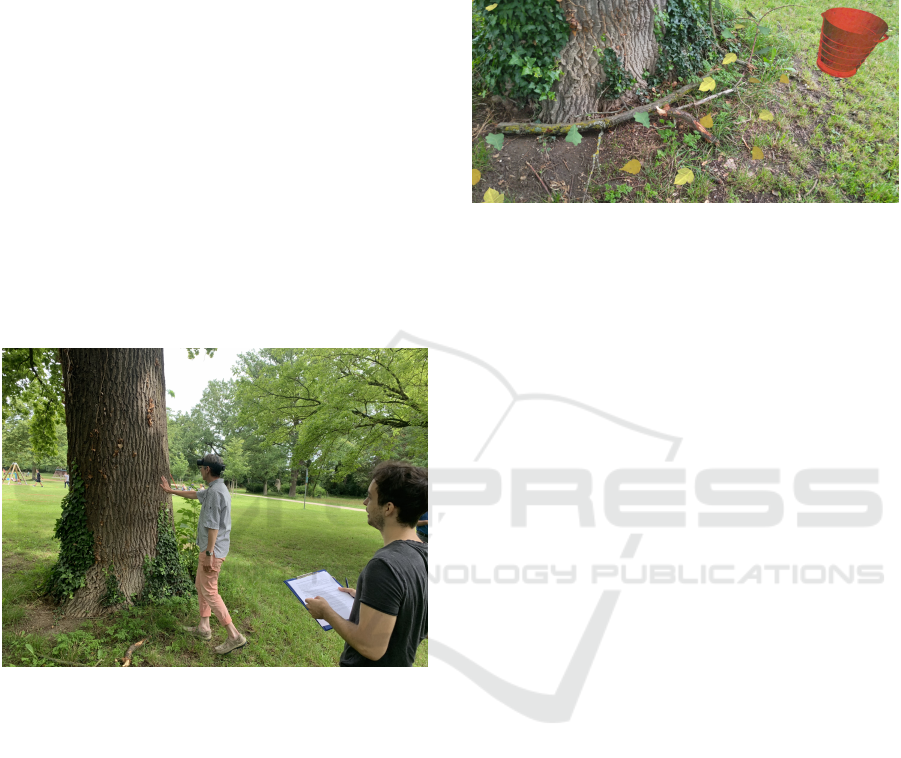

The appearance of virtual leaves is triggered by touching a real tree stem (see Figure 1): leaves from different tree species fall to the ground around the tree. This virtual-real interaction strengthens the connection between the real environment and the AR visualization, which is, by definition, one of the goals of using AR. Without any connection to the real world, virtual reality could just as well be used to visualize the objects.

HUCAPP 2022 - 6th International Conference on Human Computer Interaction Theory and Applications


We used a draggable virtual stem to decide on which real tree the visualization is shown. Our first idea was to position the visualization with the surface magnetism solver (Microsoft, 2021d). This would be a good choice for displaying objects on walls or floors, as the objects appear automatically and “stick” to those surfaces. In nature, however, most objects lack large flat surfaces, so surface magnetism does not work well. We therefore decided to let an advanced user position the virtual stem manually and thus more precisely; this could be a teacher in an educational context or an organizer of nature education excursions. After placing the virtual stem on the desired real tree, the teacher confirms, and the virtual stem “disappears”. Technically, this is done with a shader that renders the stem invisible. The virtual stem can no longer be dragged, but it can be used to show virtual leaves on the ground: the user (a student, in the educational context) can now touch the tree, and virtual leaves fall to the ground.

Figure 1: Touching the real tree makes virtual leaves fall to the ground.
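The placement-then-touch workflow described above can be summarized as a small state machine. The following Python sketch is only an illustration under stated assumptions: the class and method names are hypothetical, the actual application was built in Unity, hiding the stem is modeled by a flag rather than a shader, and the leaf count (the text only says more than 30), the ring radii and the “leaves fall only once” rule are our own choices.

```python
from __future__ import annotations

import math
import random
from dataclasses import dataclass, field
from enum import Enum, auto


class TreeState(Enum):
    PLACING = auto()    # the teacher drags the visible virtual stem onto a real tree
    CONFIRMED = auto()  # the stem is locked and hidden; touching it spawns leaves


@dataclass
class VirtualTree:
    """Hypothetical model of the touchable virtual stem (not the authors' code)."""
    position: tuple[float, float, float] = (0.0, 0.0, 0.0)
    state: TreeState = TreeState.PLACING
    stem_visible: bool = True
    leaves: list[tuple[str, float, float]] = field(default_factory=list)

    def drag_to(self, position: tuple[float, float, float]) -> None:
        # Dragging is only possible while the stem is still being placed.
        if self.state is not TreeState.PLACING:
            raise RuntimeError("the stem is locked after confirmation")
        self.position = position

    def confirm(self) -> None:
        # Lock the stem and hide it (the paper uses an invisible-rendering shader).
        self.state = TreeState.CONFIRMED
        self.stem_visible = False

    def on_touch(self, leaf_types: list[str], count: int = 32,
                 min_r: float = 0.3, max_r: float = 1.5,
                 seed: int | None = None) -> list[tuple[str, float, float]]:
        # Touches before confirmation are ignored; as an assumption, leaves
        # fall only on the first touch after confirmation.
        if self.state is not TreeState.CONFIRMED or self.leaves:
            return []
        rng = random.Random(seed)
        x0, _, z0 = self.position
        for i in range(count):
            angle = rng.uniform(0.0, 2.0 * math.pi)
            r = rng.uniform(min_r, max_r)           # distance from the trunk in metres
            kind = leaf_types[i % len(leaf_types)]  # mix the leaf types evenly
            self.leaves.append((kind, x0 + r * math.cos(angle), z0 + r * math.sin(angle)))
        return list(self.leaves)
```

Separating placement (teacher) from confirmation mirrors the two roles in the text: once `confirm()` is called, the stem can no longer be moved and only reacts to touches.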

3.1.2 Collecting AR Leaves

After the tree is touched, more than 30 virtual leaves of three different types fall to the ground (see Figure 2). The leaves are mixed and appear around the real tree. Users can grab the leaves by direct manipulation with their hands, which the HMD (HoloLens 2 (Microsoft, 2021b)) recognizes. We refer to this type of interaction as near interaction. Users can then carry the leaves to a virtual bucket, which also appears next to the tree. Putting a leaf in the bucket activates a timer and a counter, and users can see the elapsed time and the number of leaves above the bucket. The second way to collect the virtual leaves is far interaction (sometimes called “point and commit with hands”), which is also supported by the HMD (Microsoft, 2021c). Here, a ray coming from the hand is used to select a leaf, and the object can then be moved with the same grab gesture used in direct interaction. The exact scenario for the user tests is explained in detail in Section 4.1.
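The bucket behavior described above (the first leaf activates a timer and a counter) can be sketched as follows. This is a hypothetical Python model, not the authors' Unity implementation: the stop condition (five oak leaves, taken from the test task in Section 4.1), the handling of wrong leaf types and the injectable clock are our assumptions.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Bucket:
    """Hypothetical bucket with a counter and a timer shown above it."""
    target_type: str = "oak"   # assumption: the task asks for oak leaves
    target_count: int = 5      # assumption: five leaves, as in the user test
    clock: Callable[[], float] = time.monotonic
    count: int = 0             # all leaves dropped into the bucket
    correct: int = 0           # leaves of the target type
    start: Optional[float] = None
    end: Optional[float] = None

    def drop(self, leaf_type: str) -> None:
        if self.end is not None:
            return             # task already finished; ignore further leaves
        now = self.clock()
        if self.start is None:
            self.start = now   # the first leaf in the bucket starts the timer
        self.count += 1
        if leaf_type == self.target_type:
            self.correct += 1
            if self.correct == self.target_count:
                self.end = now  # stop the timer once the target is reached

    def elapsed(self) -> Optional[float]:
        # Seconds since the first drop; frozen once the task is complete.
        if self.start is None:
            return None
        stop = self.end if self.end is not None else self.clock()
        return stop - self.start
```

Injecting the clock keeps the timing logic testable. Read literally, the text implies that the timer starts with the first leaf placed in the bucket, so the time needed to fetch that first leaf would not be included; starting the clock when the leaves fall would be an alternative design.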

Figure 2: Different types of leaves lie on the ground and can be collected in a bucket.
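For far interaction, selecting a leaf amounts to casting a ray from the hand and picking the nearest leaf it hits. The sketch below is a simplified stand-in under explicit assumptions: it approximates each leaf's collider by a bounding sphere and uses an analytic ray-sphere intersection, whereas in practice the engine's own physics raycast against the actual colliders would be used. The helper names are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_sphere_distance(origin: Vec3, direction: Vec3,
                        center: Vec3, radius: float) -> Optional[float]:
    """Distance t >= 0 along a unit-length ray to a sphere, or None if missed.

    Solves |origin + t * direction - center|^2 = radius^2 for t.
    """
    oc = _sub(origin, center)
    b = _dot(oc, direction)
    c = _dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    t = -b - root        # nearer intersection
    if t < 0.0:
        t = -b + root    # the ray starts inside the sphere
    return t if t >= 0.0 else None


def pick_leaf(origin: Vec3, direction: Vec3,
              leaves: Sequence[Tuple[str, Vec3, float]]) -> Optional[str]:
    """Return the id of the closest leaf hit by the hand ray (hypothetical helper)."""
    norm = math.sqrt(_dot(direction, direction))
    unit = (direction[0] / norm, direction[1] / norm, direction[2] / norm)
    best: Optional[Tuple[float, str]] = None
    for leaf_id, center, radius in leaves:
        t = ray_sphere_distance(origin, unit, center, radius)
        if t is not None and (best is None or t < best[0]):
            best = (t, leaf_id)
    return None if best is None else best[1]
```

Choosing the nearest hit matters when leaves overlap along the ray: the leaf closest to the hand is the one the user most likely intends to grab.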

3.1.3 Clouds and Rain

Another interactive AR module in our application, inspired by nature, shows virtual clouds a couple of meters away from the tree. Users can drag these clouds (rendered in low-poly style) using near or far interaction. When the clouds are moved to the tree, virtual rain starts falling from them. To visualize the rain, we combined several particle systems in the Unity engine (Unity, 2021).
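The rain trigger can be sketched as a proximity check between the dragged cloud and the tree. In this hypothetical Python version, the trigger radius, the use of horizontal (x/z) distance and the rule that rain keeps falling once started are assumptions. The function returns True only at the moment the rain starts, which is where dependent effects such as the water particles and the root animation could be started.

```python
import math
from dataclasses import dataclass


@dataclass
class Cloud:
    x: float               # horizontal position in metres
    z: float
    raining: bool = False


def update_rain(cloud: Cloud, tree_x: float, tree_z: float,
                trigger_radius: float = 1.0) -> bool:
    """Start rain when the cloud is over the tree; return True only on start.

    trigger_radius is a hypothetical value; the paper only says that rain
    starts when the clouds are moved to the tree.
    """
    if cloud.raining:
        return False       # assumption: once started, the rain keeps falling
    distance = math.hypot(cloud.x - tree_x, cloud.z - tree_z)
    if distance <= trigger_radius:
        cloud.raining = True
        return True        # caller can now start rain, water and root effects
    return False
```

Calling `update_rain` once per frame while the cloud is dragged is sufficient; returning an edge-triggered event avoids restarting the particle systems every frame.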

3.2 Visualization of Natural Underground Processes in the Forest

To exploit AR’s ability to make the invisible visible in nature, we developed two AR visualizations that are part of the AR application used in the user tests. The modules are described next.

3.2.1 Underground Water

Once the rain has been started by dragging the clouds, underground water molecules are visualized as blue spheres (see Figure 3). The spheres are animated and shrink over time until they disappear into the soil and the tree root. A particle system is used to realize this effect.
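A shrinking, sinking particle of the kind described above can be modeled with a linear size-over-lifetime curve. The sketch is a simplified Python stand-in for a Unity particle system; the lifetime, start size and sinking speed are hypothetical values, not parameters from the paper.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class WaterParticle:
    y: float          # height in metres; negative values are below ground
    age: float = 0.0  # seconds since emission


def particle_size(p: WaterParticle, lifetime: float = 3.0,
                  start_size: float = 0.05) -> float:
    # Linear size over lifetime: full size at emission, zero at the end of life.
    return start_size * max(0.0, 1.0 - p.age / lifetime)


def step(particles: List[WaterParticle], dt: float, lifetime: float = 3.0,
         sink_speed: float = 0.2) -> List[WaterParticle]:
    """Advance all particles by dt seconds and drop those that have expired."""
    alive = []
    for p in particles:
        p.age += dt
        p.y -= sink_speed * dt  # sink towards the roots
        if p.age < lifetime:
            alive.append(p)
    return alive
```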

3.2.2 Growing Roots

The biggest hidden object in our case is the tree root. We used a 3D model that extends three meters in each direction from the tree stem. To show growth, we animated a single root strand using the computer graphics software Blender (Blender-Foundation, 2021). The animation is played in a loop so that the user can watch it more than once; it starts when the rain begins to fall. Because trees in nature come in different sizes, the size of the roots must be adjustable. We therefore added scaling functionality to the model, and users can control the size incrementally with voice commands (“bigger”/“smaller”).

Figure 3: Part of the tree root and blue water particles.
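Incremental scaling with the keywords “bigger” and “smaller” reduces to mapping a recognized keyword to a clamped scale factor. This Python sketch assumes that speech recognition has already produced a keyword string; the 10 % step and the scale limits are hypothetical, while the 3 m base extent of the root model comes from the text above.

```python
SCALE_STEP = 1.1                 # hypothetical: each command changes size by 10 %
MIN_SCALE, MAX_SCALE = 0.5, 2.0  # hypothetical limits
BASE_EXTENT_M = 3.0              # root model extends 3 m from the stem at scale 1


def apply_voice_command(scale: float, keyword: str) -> float:
    """Return the new root scale after a recognized keyword."""
    word = keyword.strip().lower()
    if word == "bigger":
        scale *= SCALE_STEP
    elif word == "smaller":
        scale /= SCALE_STEP
    else:
        return scale             # unknown keyword: leave the size unchanged
    return min(MAX_SCALE, max(MIN_SCALE, scale))


def root_extent(scale: float) -> float:
    """Horizontal reach of the scaled root model in metres."""
    return BASE_EXTENT_M * scale
```

A multiplicative step keeps each command perceptually similar regardless of the current size, and the clamp prevents the roots from being scaled to implausible dimensions.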

3.3 Indoor vs. Outdoor

During the development cycle, we conducted tests indoors and outdoors and compared them. For example, flat leaves were initially used for the collecting task. This was quite easy indoors but difficult or uncomfortable outdoors, and some users could not grab the leaves at all. To make the leaves easier to collect, including on uneven ground outdoors, we gave them (invisible) colliders that are a couple of centimeters bigger along the y axis. This way, our modules can also be used in areas covered with grass and small branches. Additionally, if the soil is wet after rain, users might find it uncomfortable to touch it when using direct manipulation. With the bigger colliders, they can still grab the virtual leaves almost naturally without getting their hands wet.
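The effect of enlarging the leaf colliders along the y axis can be illustrated with an axis-aligned box test for the fingertip. The leaf dimensions and the 3 cm enlargement below are hypothetical (the text only says “a couple of centimeters”), and the box is enlarged symmetrically around the leaf for simplicity; a real engine would perform this test with its own collider components.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Box:
    center: Vec3
    half: Vec3  # half extents along x, y, z in metres

    def contains(self, point: Vec3) -> bool:
        return all(abs(point[i] - self.center[i]) <= self.half[i] for i in range(3))


def leaf_collider(center: Vec3, half: Vec3 = (0.05, 0.005, 0.04),
                  extra_y: float = 0.0) -> Box:
    """Collider for a flat leaf, optionally enlarged only along the y axis."""
    hx, hy, hz = half
    return Box(center, (hx, hy + extra_y, hz))
```

A fingertip hovering 2.5 cm above a leaf lying in grass misses the thin original box but hits the enlarged one, which is the effect described in the text.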

Regarding depth perception and the visualization of underground objects such as tree roots, a recent work (Lilligreen et al., 2021) investigated different rendering options using virtual holes and different textures in an outdoor scenario. It found that depth perception with an HMD is already of very high quality thanks to stereo rendering. We therefore decided to display the tree root without additional masking so that it can be seen in its full size. In an indoor location, this would be less convincing, as there is usually less space and the nature context would be missing.

For the visualization of the rain outdoors, we used brighter colors, because there is more light outside and the raindrops blend into the background more than in an indoor location.

An outdoor location in nature can be more dynamic than an indoor location. Lighting can change depending on the weather or the season. Unplanned changes in the environment, such as fallen branches or overly high grass, could also hinder the use of the application on a particular tree. It may therefore be necessary to choose a tree spontaneously, for example one with sufficient shade or enough space around it. To provide more flexibility, we built functions into the AR application for scaling the tree stem and roots via speech commands. Furthermore, a teacher can choose between tree types, so that the virtual leaves can be selected according to the situation on site.

4 METHODS AND EXPERIMENTAL DESIGN

In this study, we applied a formative evaluation to examine near and far interaction in AR with small and larger objects in two different outdoor nature settings. Task-based scenarios were developed to compare the interaction techniques in the different natural surroundings. We were interested in users’ impressions of the two interaction techniques, in task performance with each technique, and in usability issues. We assumed that each technique had advantages and weaknesses.

4.1 User Tests

Weather conditions are very important when conducting user tests outdoors. We chose days without rain or snow so that the hardware could be used without problems. Although we performed the tests in summer, some appointments had to be postponed due to rain. Sessions took place in the morning or early afternoon to ensure suitable light conditions. The tests were conducted under a tree, which provided enough shade for an optical see-through device to be usable on sunny days as well.

At the beginning of each session, the HMD was calibrated for the current user, which increases the precision of the interactions. During the tests, we observed the participants and took notes when they commented on the application.

Using the module “Collecting AR Leaves” (Sec. 3.1.2), we measured user task performance. We chose task completion time as the metric, since it is commonly used to evaluate interactions in virtual and augmented reality (Samini and Palmerius, 2017). The participants stood under an oak tree and had to collect five oak leaves and put them in a virtual bucket next to the tree as fast as possible, choosing from three leaf types: birch, oak and maple. In a preparatory stage, the users tried out the different techniques, which had been explained to them before the test started. The first task was to collect the virtual oak leaves using direct manipulation with the hands (near interaction, see Figure 4, top). The second task was to collect the leaves using far interaction (see Figure 4, bottom). The time each user needed to complete each task was recorded. Collecting virtual leaves was chosen as the task for comparing the interaction possibilities, and the overall scenario was chosen for two reasons. First, an AR outdoor study focusing on depth perception (Lilligreen et al., 2021) found that context plays an important role; e.g., users rejected a grid over a virtual hole meant to enhance depth perception in the forest, because a grid is not what one would expect in nature. Leaves, by contrast, are part of the forest, and collecting them during a walk is not an unusual action. Second, a task of collecting only a specific type of tree leaf can be used in a learning scenario.

Figure 4: The two investigated interaction techniques. Top: direct manipulation with hands (near). Bottom: point and commit with hands (far).

Each user performed the described test twice. The first location was a tidy, even-leveled area in a park alley, under an oak tree. The second test took place in a more natural area with grass and branches, under another oak tree. This allowed us to also compare task completion times in different types of outdoor environments.

Another task for the participants (performed at both locations) was to move virtual clouds to the tree. Virtual rain then starts to fall, as described in Section 3.1.3, water molecules appear under the ground, and a branch of the tree root begins to grow. Similar scenarios can be used in an educational setting to show hidden processes in nature, e.g., to introduce the topic of drought caused by the climate crisis and its connection to trees and roots. The participants had to observe and comment on what they saw, and after the three tasks they filled in a questionnaire, which is described in Section 4.4.

4.2 Working Hypotheses

We defined the following working hypotheses.

H1: There will be a difference in performance between near and far interaction.

We assumed that the two interaction methods would perform differently. In initial short tests, we noticed that different people preferred different methods. To investigate this, task completion times were compared, and the questionnaire contained statements on subjective preference.

H2: Older participants (55 and older) will prefer using far interaction.

Around 1/3 of the test users were 55 or older. We thought that bending to collect items from the ground could become uncomfortable or tiring over time, especially for older people. They might therefore prefer to collect the items from a distance using the far interaction method with a ray.

H3: Users will be faster in a tidy, even-leveled area.

When comparing performance at the two locations, we assumed that in a flat area, where users can walk more easily on even ground, they would need less time to perform the task (collecting virtual leaves). Grasping the leaves should also be easier when there are no, or at least fewer, real objects on the ground.

H4: More participants will find near interaction easier for collecting items.

We predicted that near interaction for collecting objects would be more natural and easier, as it is more similar to real-world grasping and users are more familiar with it than with interaction using a ray from a distance.


Table 1: Participant characteristics: age.

| Age (years) | Count (%) |
|---|---|
| < 20 | 2 (10%) |
| 20–29 | 4 (20%) |
| 30–39 | 1 (5%) |
| 40–49 | 6 (30%) |
| 50–59 | 3 (15%) |
| > 60 | 4 (20%) |

H5: In a more natural area, far interaction will be preferred over near interaction.

In contrast to H4, we asked whether the type of environment plays a big role. We assumed that in wilder environments people would prefer to interact from a distance instead of touching real grass, leaves and branches or walking between bushes to reach an object. Furthermore, as participants become more familiar with far interaction, they might prefer it, since they do not have to move as much and could be faster.

4.3 Participants

A total of 20 people (about 2/3 male, 1/3 female) participated in our user tests. The youngest user was 10 years old; the child was accompanied by his parent, who gave permission to perform the experiment. The oldest user was 78, and the average age was 42 years. The age distribution is listed in Table 1.

The results of our test are intended to be useful for an educational module in nature. We therefore focused on two types of participants: people who work in or are very interested in nature and the environment (11 participants), and users who already have some experience with AR or study or work in computer science (9 participants). The first group provided opinions that can represent teachers or organizers of educational excursions in nature; some of these participants work in nature organizations or in the field of environment and plants. The second group can be representative of student groups or digital natives who enjoy working with technical devices.

4.4 Questionnaire

A questionnaire (see Figure 5), completed by the participants after the user tests, helped us obtain further information and capture the participants’ subjective experience. We adopted and adapted statements from the SUS (Brooke, 1995) and the NASA Task Load Index (Hart, 2006). The first part of the questionnaire was completed directly at the first testing location; the remaining questions were answered at the end of the whole user test. Questionnaire metrics that can be considered for measuring usability (Samini and Palmerius, 2017) are integrated into our statements, e.g., ease of use (S2, S6), comfort (S2, S3, S6, S7), enjoyability (S4, S8) and fatigue (S3, S7). An expected problem regarding the translation of virtual objects is the “gorilla-arm” effect (Jerald, 2015), which occurs when many objects are moved using near interaction with the arm held high. This can cause fatigue and reduce user comfort.

Figure 5: Statements from the questionnaire for the collecting leaves task (the lower rows, for the natural area, are marked with a graphic of leaves).

We also included an additional free-text question (“You triggered the falling of the leaves by touching the real tree trunk. How did you perceive this real-virtual interaction?”) to obtain more qualitative data.

5 RESULTS

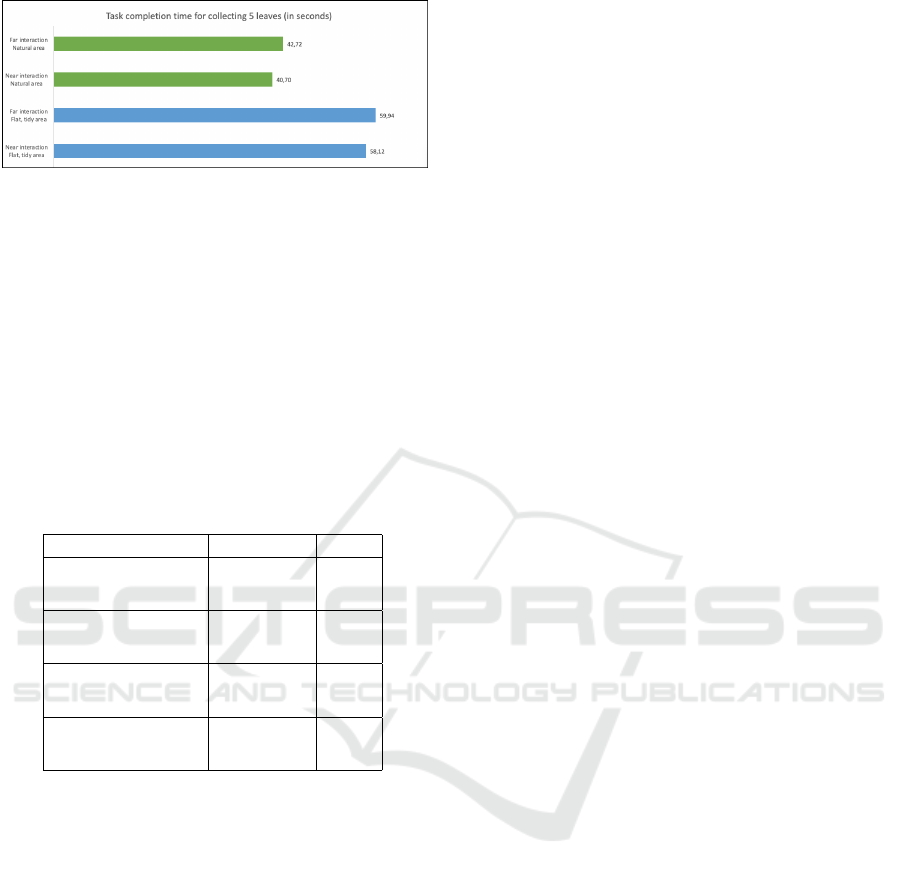

Regarding task completion time and our hypotheses H1 and H3, the average time over all finished tests was calculated for the four combinations of interaction technique and location (see Figure 6). The participants were faster at the more natural location, which was also the second location. The difference between near interaction (collecting directly with the hands) and far interaction (selecting with the ray) was approximately two seconds: the participants were slightly faster when they collected the leaves directly with their hands. It should also be mentioned that some of the older participants had problems collecting the virtual leaves and could not finish the timed tasks. These were excluded from the calculation of the results.
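The averaging over finished tests can be expressed as a group-by over (technique, location) that skips unfinished trials. The records in the example are invented for illustration and are not the study's data; the sketch also assumes that only the unfinished trial is dropped, whereas excluding the participant entirely would be an equally plausible reading of the text.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Optional, Tuple

Trial = Tuple[str, str, Optional[float]]  # (technique, location, seconds or None)


def mean_completion_times(trials: Iterable[Trial]) -> Dict[Tuple[str, str], float]:
    """Average completion time per (technique, location), ignoring unfinished trials."""
    groups: Dict[Tuple[str, str], List[float]] = defaultdict(list)
    for technique, location, seconds in trials:
        if seconds is None:
            continue  # unfinished task: excluded from the averages
        groups[(technique, location)].append(seconds)
    return {key: mean(values) for key, values in groups.items()}


# Hypothetical records, not data from the study.
example = [
    ("near", "tidy", 20.0), ("near", "tidy", None), ("near", "tidy", 24.0),
    ("far", "tidy", 26.0), ("near", "natural", 16.0), ("far", "natural", 18.0),
]
print(mean_completion_times(example))
```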

Another result that contradicts our assumption (H2) is that older participants slightly preferred collecting the leaves directly. Here, the more natural interaction seems to outweigh, to some extent, the benefit of not needing to bend and walk.

Figure 6: Results for the average task completion times (in seconds) for collecting 5 leaves with the different interaction techniques at the different locations.

Based on the questionnaire data (see Table 2) and our analysis of the observation notes, our assumption that users would find the direct interaction technique easier (H4) is not confirmed. The results for the two techniques (near and far) differ only minimally.

Table 2: Results for the statement: “Picking up the leaves was easy after a short period of familiarization.”

| Interaction | Area | Fully agree | Agree |
|---|---|---|---|
| Near | Tidy | 8 | 5 |
| Near | Natural | 10 | 6 |
| Far | Tidy | 8 | 7 |
| Far | Natural | 10 | 5 |

The results show no substantial difference between far and near interaction in the natural area (H5). For near interaction, the mean value was 4.3 (SD = 0.78); for far interaction, it was 4.05 (SD = 1.20). At least 3/4 of the users agreed or fully agreed that collecting the leaves was easy with both far (15 persons) and near (16 persons) interaction.
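Means, standard deviations and agreement shares such as those reported above can be computed directly from response counts. The sketch assumes a 5-point coding (1 = fully disagree, 5 = fully agree), which the text does not state explicitly, and exposes both the sample (n − 1) and the population (n) standard deviation, since the paper does not say which one was used. The counts in the test are hypothetical.

```python
import math
from typing import Dict, Tuple


def likert_stats(counts: Dict[int, int], sample: bool = True) -> Tuple[float, float]:
    """Mean and standard deviation from a {scale value: respondents} mapping."""
    n = sum(counts.values())
    m = sum(value * c for value, c in counts.items()) / n
    squares = sum(c * (value - m) ** 2 for value, c in counts.items())
    sd = math.sqrt(squares / (n - 1 if sample else n))
    return m, sd


def agreement_share(counts: Dict[int, int]) -> float:
    """Fraction of respondents who agree (4) or fully agree (5)."""
    n = sum(counts.values())
    return (counts.get(4, 0) + counts.get(5, 0)) / n
```

For instance, 15 of 20 respondents agreeing or fully agreeing gives a share of 0.75, i.e. the “3/4” mentioned in the text.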

Regarding enjoyability and fatigue, most users agreed or fully agreed that collecting leaves was fun with both near and far interaction and that neither interaction was tiring.

Regarding the free-text question about how users perceived the real-virtual interaction with the tree, 65 % of the participants gave positive comments, e.g., “surprisingly real”, “The interaction was intuitive as it established the connection to reality and thereby slowly introduced to the AR world”, “a great experience”. One third of the users did not see the virtual leaves falling; they only saw them already lying on the ground. The small field of view of the HMD could be a reason for this. Additional visual feedback, or leaves that fall for longer or more slowly, could improve perception.

6 DISCUSSION

With this work, we provide insights into the use of near and far interaction techniques in a nature context. In this way, we address the need for more research on the use of AR in the area of nature and the environment.

The difference between the two investigated interaction possibilities, near (directly with the hands) and far (with a ray), is not substantial regarding ease of use. Our observations suggest that the choice is subjective and depends on user preferences. In some cases, only one of the interaction techniques worked well. Some participants moved their hands too fast or too slowly while “pinching” the leaf, and it seemed as if the calibration at the beginning was not fully precise. Interaction with the ray was somewhat unusual and not intuitively understandable for some participants. For these reasons, we recommend that future applications include both interaction options.

Contrary to our expectations, the results of our user tests regarding the different environments indicate that users were faster when interacting in the natural area. The uneven ground covered with grass and branches did not slow down the collection of leaves. We observed that some participants moved grass aside with one hand to reach the virtual leaves, but the bigger colliders provided for the virtual leaves seem to be a good and efficient way of dealing with outdoor conditions. An additional explanation for this result may be that, by the second test in the natural area, the users had become used to the interaction techniques and were therefore a little faster. More research with different settings and with more AR-experienced users would be useful to obtain more comprehensive results.

Participants found the underground visualization fascinating, which increased enjoyability. However, in a future application, root growth should be shown on the whole root structure (and not only on one branch) so that it is directly visible.

7 CONCLUSION

In this paper, we investigated the use of near and far interaction with an HMD at two different types of locations in nature. We developed AR visualizations using a tree as an example and used them in interactive educational scenarios. In this way, we explored how users interact in a natural-virtual setting and gained an understanding of usability issues and preferences for particular interaction techniques in outdoor use. We investigated the interaction techniques using virtual leaves, clouds and a real tree. A slightly better task completion time was measured with the near interaction technique (see Section 5). However, further work and different cases are needed to draw more general conclusions for outdoor use. We observed that the users enjoyed the different tasks, and our results indicate that a future educational application can benefit from both near and far interaction.

ACKNOWLEDGEMENTS

This work was performed in the project SAARTE (Spatially-Aware Augmented Reality in Teaching and Education). SAARTE is supported by the European Union (EU) in the ERDF program P1-SZ2-7 and by the German federal state of Rhineland-Palatinate (application no. 84002945).

REFERENCES

Azuma, R. T. (1997). A survey of augmented reality. Pres-

ence: Teleoper. Virtual Environ., 6(4):355–385.

Blender-Foundation (last access 13 Sep 2021). Blender

– the free and open source 3d creation suite

(https://www.blender.org/).

Brooke, J. (1995). SUS: A quick and dirty usability scale. Usability Eval. Ind., 189.

Chaconas, N. and Höllerer, T. (2018). An evaluation of bimanual gestures on the Microsoft HoloLens. pages 1–8.

Chen, Z., Li, J., Hua, Y., Shen, R., and Basu, A. (2017). Multimodal interaction in augmented reality. In 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), pages 206–209.

Ernst, J. and Theimer, S. (2011). Evaluating the effects of environmental education programming on connectedness to nature. Environmental Education Research, 17(5):577–598.

Gabbard, J., Hix, D., and Swan, J. (1999). User-centered design and evaluation of virtual environments. IEEE Computer Graphics and Applications, 19(6):51–59.

Hart, S. G. (2006). NASA-Task Load Index (NASA-TLX); 20 years later. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 50(9):904–908.

Jerald, J. (2015). The VR Book: Human-Centered Design for Virtual Reality. Association for Computing Machinery and Morgan and Claypool.

Kang, H., Shin, J.-h., and Ponto, K. (2020). A comparative analysis of 3D user interaction: How to move virtual objects in mixed reality. In the Proceedings of the 2020 IEEE VR Conference.

Lilligreen, G., Marsenger, P., and Wiebel, A. (2021). Rendering tree roots outdoors: A comparison between optical see through glasses and smartphone modules for underground augmented reality visualization. In Chen, J. Y. C. and Fragomeni, G., editors, Virtual, Augmented and Mixed Reality; HCI International Conference 2021, pages 364–380, Cham. Springer International Publishing.

Lin, C. J., Caesaron, D., and Woldegiorgis, B. H. (2019). The effects of augmented reality interaction techniques on egocentric distance estimation accuracy. Applied Sciences, 9(21).

Microsoft (last access 14 Sep 2021d). Surface magnetism solver - Mixed Reality Toolkit for Unity (https://docs.microsoft.com/en-us/windows/mixed-reality/design/surface-magnetism).

Microsoft (last access 5 Aug 2021a). Microsoft HoloLens 2 description (https://www.microsoft.com/de-de/hololens).

Microsoft (last access 5 Aug 2021b). Microsoft: Mixed reality - direct manipulation with hands (https://docs.microsoft.com/en-us/windows/mixed-reality/design/direct-manipulation).

Microsoft (last access 5 Aug 2021c). Microsoft: Mixed reality - point and commit with hands (https://docs.microsoft.com/en-us/windows/mixed-reality/design/point-and-commit).

Nizam, M. S., Zainal Abidin, R., Che Hashim, N., Meng Chun, L., Arshad, H., and Majid, N. (2018). A review of multimodal interaction technique in augmented reality environment. International Journal on Advanced Science, Engineering and Information Technology, 8:1460.

Piumsomboon, T., Altimira, D., Kim, H., Clark, A., Lee, G., and Billinghurst, M. (2014). Grasp-shell vs gesture-speech: A comparison of direct and indirect natural interaction techniques in augmented reality.

Poupyrev, I., Billinghurst, M., Weghorst, S., and Ichikawa, T. (1996). The go-go interaction technique: Non-linear mapping for direct manipulation in VR. In Proceedings of the 9th Annual ACM Symposium on User Interface Software and Technology, UIST ’96, pages 79–80, New York, NY, USA. Association for Computing Machinery.

Rambach, J., Lilligreen, G., Schäfer, A., Bankanal, R., Wiebel, A., and Stricker, D. (2021). A survey on applications of augmented, mixed and virtual reality for nature and environment. In Proceedings of the HCI International Conference 2021.

Samini, A. and Palmerius, K. L. (2017). Popular performance metrics for evaluation of interaction in virtual and augmented reality. In 2017 International Conference on Cyberworlds (CW), pages 206–209.

Unity (last access 5 Aug 2021). Unity Technologies: Unity user manual - particle systems (https://docs.unity3d.com/manual/particlesystems.html).

HUCAPP 2022 - 6th International Conference on Human Computer Interaction Theory and Applications


Whitlock, M., Harnner, E., Brubaker, J., Kane, S. K., and Szafir, D. (2018). Interacting with distant objects in augmented reality. 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pages 41–48.

Williams, A., Garcia, J., and Ortega, F. (2020). Understanding multimodal user gesture and speech behavior for object manipulation in augmented reality using elicitation. IEEE Transactions on Visualization and Computer Graphics, PP.
