Exploiting EEG-extracted Eye Movements for a Hybrid SSVEP Home Automation System

Tracey Camilleri$^{1,a}$, Jeanluc Mangion$^{1,2}$ and Kenneth Camilleri$^{1,2,b}$

$^{1}$ Department of Systems and Control Engineering, University of Malta, Msida MSD2080, Malta

$^{2}$ Centre for Biomedical Cybernetics, University of Malta, Msida MSD2080, Malta

Keywords:

Hybrid BCI, SSVEP, Eye Movement Detection, EEG.

Abstract:

Detection of eye movements using standard EEG channels can allow for the development of a hybrid BCI

(hBCI) system without requiring additional hardware for eye gaze tracking. This work proposes a hierarchical

classification structure to classify eye movements into eight different classes, covering both horizontal and

vertical eye movements, at two different gaze angles in each of four directions. Results show that the highest

eye movement classification was obtained with frontal EEG channels, achieving an accuracy of 98.47% for

two directions, 74.38% with four directions and 58.31% with eight directions. Eye movements can also be

classified reliably in four directions using occipital electrodes with an accuracy of 47.60% which increases to

around 80% if three frontal channels are also included. The latter result was used to develop a hybrid SSVEP

home automation system which exploits the EEG-extracted eye movement information. Results show that a

sequential hBCI gave an average accuracy of 82.5% when compared to the 69.17% obtained with a standard

SSVEP based BCI system.

1 INTRODUCTION

Electroencephalography (EEG) is the recording of

brain signals using non-invasive electrodes, typically

used for the development of EEG-based brain com-

puter interface (BCI) systems. One of the most

promising BCI systems is that based on steady-state

visual evoked potentials (SSVEPs) which are elicited

in the occipital region of the brain when the subject

attends to a set of flickering stimuli. When making

use of such a system the user carries out a series of

eye movements to saccade from one stimulus to an-

other, selecting a sequence of command functions in

the process. Eye movements have been used in hy-

brid BCI systems aimed to improve the BCI perfor-

mance either by recording electrooculographic (EOG)

signals (Padfield, 2017) or through a vision based

eye gaze tracker (Saravanakumar, 2018). The for-

mer however requires an extra set of electrodes placed

around the subject’s eyes whereas the latter requires

additional hardware.

$^{a}$ https://orcid.org/0000-0002-4908-1863

$^{b}$ https://orcid.org/0000-0003-0436-6408

This work investigates the possibility of classifying eye movements typically carried out during the use of an SSVEP-based BCI, using standard EEG signals only, thus allowing for the possibility to design a

simple hybrid BCI requiring only the use of an EEG

cap. Some researchers (Gupta et al., 2012; Hsieh

et al., 2014) took this approach but the classification

of the eye movements was limited to horizontal eye

movements, typically distinguishing between left and

right eye movements only. Belkacem et al. (Belka-

cem et al., 2013; Belkacem et al., 2015) however

attempted to classify both horizontal (left vs right)

and vertical (up vs down) eye movements, obtaining

an accuracy of 98% for the horizontal and 46% for

the vertical eye movements in their most recent work

(Belkacem et al., 2015). Dietriech et al. (Dietriech

M. P., 2017) also considered the classification of four

extreme eye movements and the central position and

obtained a true positive rate of 96.6% for one subject

using a KNN classifier.

In (Gupta et al., 2012; Hsieh et al., 2014; Belkacem et al., 2013; Belkacem et al., 2015; Dietriech M. P., 2017), frontal and temporal electrodes were used but none of the works investigated the possible detection of the eye movements through occipital electrodes, which are the standard set of electrodes used in SSVEP-based BCIs. Furthermore, eye movements were limited to left, right, up and down movements. This work thus aims to investigate the extent to which eye movement information can be extracted from the frontal region, occipital region, or both, and whether it is possible to classify saccadic eye movements of different visual angles. These results are then exploited within an SSVEP-based home automation system where a comparative analysis is carried out to assess the performance of a hybrid BCI which fuses EEG-based eye movement information with SSVEP information and to compare it with that of a standard SSVEP-based BCI system.

Camilleri, T., Mangion, J. and Camilleri, K.

Exploiting EEG-extracted Eye Movements for a Hybrid SSVEP Home Automation System.

DOI: 10.5220/0010783800003123

In Proceedings of the 15th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2022) - Volume 4: BIOSIGNALS, pages 117-127

ISBN: 978-989-758-552-4; ISSN: 2184-4305

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

This paper is divided as follows: Section 2

presents the experimental setup, the processing of

data and the method used to classify eye movements,

Section 3 presents and discusses the results related

to the EEG-based eye movement detection, Section

4 presents the hybrid SSVEP-based home automation

system with the results obtained for both an offline

and an online study and finally Section 5 concludes

the paper.

2 METHOD

2.1 Experimental Setup and Data Acquisition

A computer system with a 22 inch monitor, a resolu-

tion of 1920 × 1080 pixels and a refresh rate of 60Hz

was used. The g.USBamp from g.tec was used for

EEG data acquisition. The visual stimuli were de-

signed using PsychoPy (Peirce et al., 2019), an open-

source Python toolbox, which permits control of the

timing of the stimuli with very high precision.

EEG data was recorded at a sampling frequency

of 256 Hz from a total of 19 channels: O1, Oz, O2,

PO7, PO3, POz, PO4, PO8, T7, FT7, F7, AF7, Fp1,

Fpz, Fp2, AF8, F8, FT8 and T8. This set of electrodes

was chosen in order to carry out a thorough analysis

of which combination of frontal channels is best to

extract eye-movement related EEG and to determine

to what extent is the occipital region capable of pro-

viding such information.

Five healthy subjects participated in this study

which was approved by the University Research

Ethics Committee (UREC) of the University of Malta.

Every participant was seated in front of an LCD mon-

itor, placed approximately at eye-level with the sub-

ject. Participants were advised to limit their physical

movement to avoid EMG artifacts. A chin rest was

provided to restrict head movements. This setup was

used to ensure that for this preliminary study the data

is not confounded by artifacts.

Figure 1: Positions considered on screen for 8 different sac-

cadic movements.

Figure 2: Timing protocol of a single trial.

EEG-based eye-gaze data was recorded for offline analysis. Five sessions were recorded and in

each session, 10 trials were allocated for each po-

sition, amounting to a total of 80 trials. In a trial,

the subject was instructed to look at the center of the

screen for 1 second, saccade to one of eight positions

on the screen as shown in Fig. 1 for another 1 second

and saccade back to the center in the next 1 second.

Subjects were instructed to blink only during rest pe-

riods which were indicated by a red stimulus at the

last 1 second of each trial. The timing protocol of a

single trial is shown in Fig. 2. A rest period of 1.5

seconds was allocated after each trial.

2.2 Data Processing

EEG data was filtered with a 4th-order infinite impulse response bandpass filter having cut-off frequencies of 0.5 Hz and 7 Hz. Common spatial patterns (CSP) (Ramoser et al., 2000) was then used as the feature extraction method. CSP applies a joint diagonalisation on the covariance matrices of two classes, resulting in a transformation $W$. This is applied to the EEG data, $X \in \mathbb{R}^{N \times T}$, where $N$ represents the number of channels and $T$ the length of the data, to project this to $Z \in \mathbb{R}^{N_1 \times T}$ with a reduced number of channels $N_1 < N$. The variance of each channel is then used to form a feature vector, i.e. $v = [\sigma_1^2, \ldots, \sigma_{N_1}^2]^T$, where $\sigma_i^2$ is the variance of channel $i$ of $Z$. A support vector machine as in (Bishop, 2006) was then applied to classify features into two classes. Since both the CSP and SVM algorithms require training, the recorded data was divided into three sets: one was used to train the CSP, another to train the SVM and the third was used as test data. A three-fold cross-validation approach was then adopted to quantify performance.

BIOSIGNALS 2022 - 15th International Conference on Bio-inspired Systems and Signal Processing
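The CSP projection and variance features described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names `fit_csp` and `csp_features`, the trace normalisation of the covariances, the choice of two retained components and the synthetic data are all assumptions made for illustration, and the band-pass filtering and SVM stages are left out.

```python
import numpy as np

def fit_csp(trials_a, trials_b, n_components=2):
    """Return a CSP projection W of shape (n_components, n_channels).

    trials_a, trials_b: arrays of shape (n_trials, n_channels, n_samples),
    assumed to be band-pass filtered already (hypothetical helper).
    """
    def mean_cov(trials):
        # Trace-normalised spatial covariance, averaged over trials.
        covs = [(x @ x.T) / np.trace(x @ x.T) for x in trials]
        return np.mean(covs, axis=0)

    cov_a, cov_b = mean_cov(trials_a), mean_cov(trials_b)

    # Whiten the composite covariance, then diagonalise class A in that space;
    # this jointly diagonalises both class covariances.
    evals, evecs = np.linalg.eigh(cov_a + cov_b)
    whitener = evecs @ np.diag(evals ** -0.5) @ evecs.T
    d, u = np.linalg.eigh(whitener @ cov_a @ whitener.T)

    # eigh sorts eigenvalues in ascending order: keep filters from both ends.
    half = n_components // 2
    picks = np.r_[0:half, len(d) - half:len(d)]
    return u[:, picks].T @ whitener

def csp_features(w, trial):
    """Project one trial X (N x T) to Z (N1 x T) and return channel variances."""
    z = w @ trial
    return np.var(z, axis=1)

# Synthetic demo: class A is strong on channel 0, class B on channel 1.
rng = np.random.default_rng(0)
scale_a = np.array([5.0, 1.0, 1.0, 1.0])[:, None]
scale_b = np.array([1.0, 5.0, 1.0, 1.0])[:, None]
trials_a = rng.standard_normal((20, 4, 256)) * scale_a
trials_b = rng.standard_normal((20, 4, 256)) * scale_b

w = fit_csp(trials_a, trials_b, n_components=2)
feats_a = np.array([csp_features(w, x) for x in trials_a])
feats_b = np.array([csp_features(w, x) for x in trials_b])
```

In this sketch the last retained filter maximises variance for class A and the first for class B, so the two feature columns separate the classes before any classifier is applied.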

2.3 Classifying Eye Movements

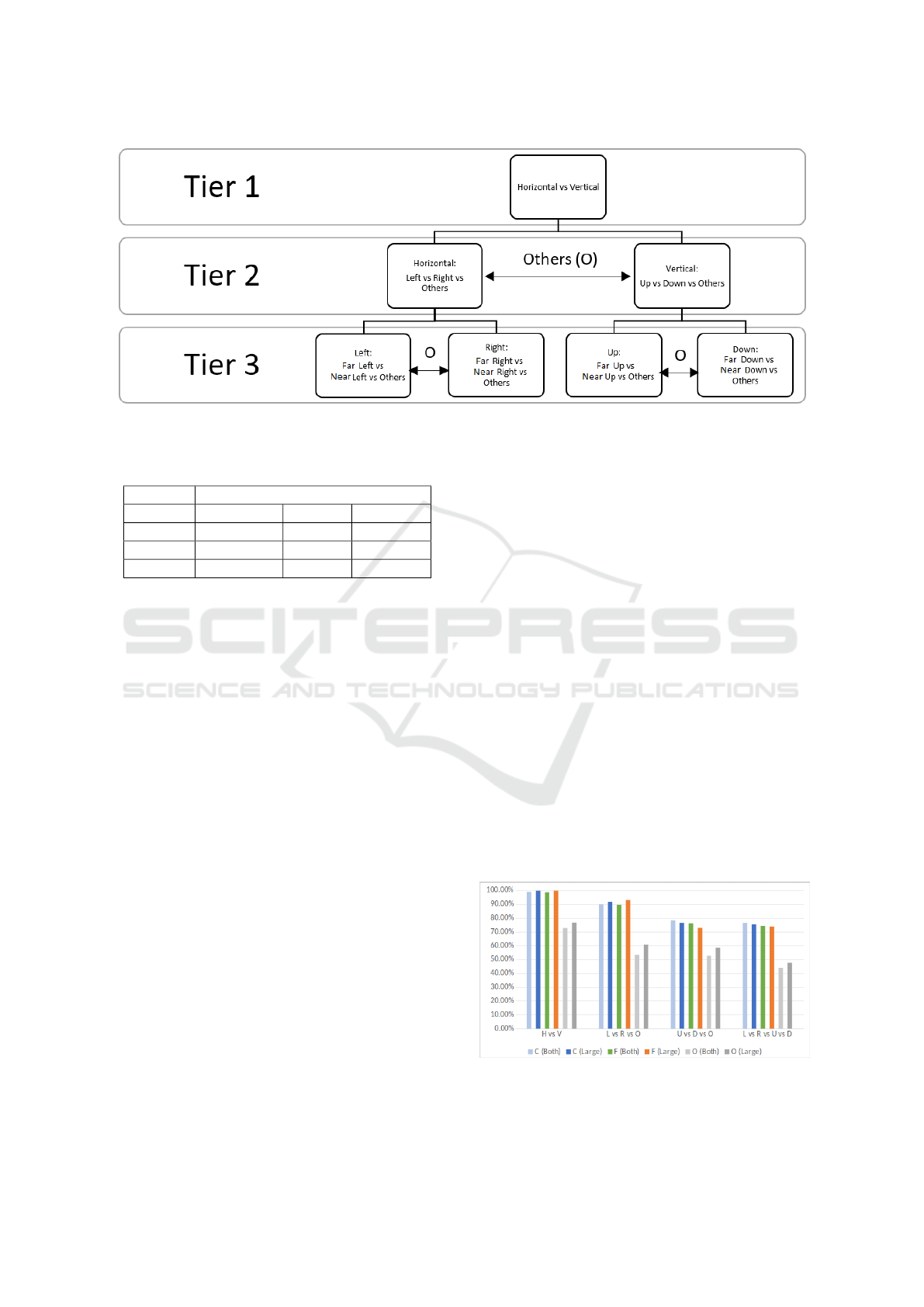

A hierarchical classification system was used to clas-

sify an eye movement into one of eight possible

classes. As shown in Fig. 3, the hierarchy consists

of three tiers. At the top tier, epochs are classified

as either horizontal (H) or vertical (V) saccadic eye

movements. Once labelled, epochs are passed down

to the second tier where the H labelled saccades are

passed to the ‘Left (L) vs Right (R) vs Others (O)’

classifier while the V labelled saccades are passed to

the ‘Up (U) vs Down (D) vs Others (O)’ classifier. It

must be noted that although three classes are present

from the second tier downwards, both the CSP algo-

rithm and the SVM classifier were executed by per-

forming independent pairwise classifications between

all pairs of classes and assigning the majority class to

the trial (Johannes M. G., 1999), known as the ‘One

vs One’ approach. Epochs labeled as ‘Others’ within

the second tier are passed on to the sibling class on

the same tier. For example, a saccade labelled as O

by the ‘L vs R vs O’ classifier is passed to its sibling

class, specifically to the ‘U vs D’ classifier within the

’U vs D vs O’ block.

Finally, at the third tier, epochs are classified according to the visual angle of the horizontal or vertical saccade where a visual angle of 23.9° from the screen centre is labelled as ‘far’ (F), while a 12.5° visual angle is labelled as ‘near’ (N). For example, trials labelled as L from the second tier are passed to a ‘Near Left (NL) vs Far Left (FL) vs O’ classifier. Similar to classifiers at the second tier, eye movements classified as O are passed on to their sibling classifier within this third tier.
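The routing through the first two tiers, including the ‘One vs One’ vote and the hand-over of ‘Others’ to the sibling classifier, can be sketched as follows. This is only an illustration of the control flow: the names `one_vs_one` and `classify_direction` are hypothetical, the pairwise classifiers are replaced by stubs, and the tie-breaking rule (first class to reach the top count) is an assumption since the text does not specify one. The third tier would follow the same pattern.

```python
from collections import Counter
from itertools import combinations

def one_vs_one(epoch, classes, pairwise):
    """Majority vote over all pairwise classifiers for the given classes.

    pairwise[(a, b)] is a callable returning either a or b for an epoch.
    """
    votes = Counter(pairwise[pair](epoch) for pair in combinations(classes, 2))
    return votes.most_common(1)[0][0]

def classify_direction(epoch, h_vs_v, pairwise):
    """Tier 1 picks H or V; tier 2 picks a direction or defers to the sibling."""
    if h_vs_v(epoch) == "H":
        own, sibling = ("L", "R"), ("U", "D")
    else:
        own, sibling = ("U", "D"), ("L", "R")
    label = one_vs_one(epoch, own + ("O",), pairwise)
    if label == "O":
        # 'Others' epochs are handed to the sibling's two-class classifier.
        label = pairwise[sibling](epoch)
    return label

def make_stub(a, b):
    """Stub classifier: the 'epoch' is simply its true label in this demo."""
    def clf(epoch):
        if epoch in (a, b):
            return epoch
        return b if b == "O" else a
    return clf

PAIRS = [("L", "R"), ("L", "O"), ("R", "O"), ("U", "D"), ("U", "O"), ("D", "O")]
pairwise = {pair: make_stub(*pair) for pair in PAIRS}

def always_horizontal(epoch):
    # Deliberately wrong tier-1 decision for vertical epochs.
    return "H"

recovered = classify_direction("U", always_horizontal, pairwise)
direct = classify_direction("L", always_horizontal, pairwise)
```

Here an upward saccade that is wrongly labelled as horizontal at the first tier is voted into ‘Others’ and then recovered by the ‘U vs D’ classifier, which is the purpose of the ‘Others’ class.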

2.4 Performance across Different Scalp Regions

Part of the analysis carried out was to investigate how the eye movement classification performance varies when considering i) frontal channels, ii) occipital channels and iii) both frontal and occipital channels combined. For the frontal channels, subsets of 11, 7, 5 and 3 channels were also considered as listed in Table 1.

Table 1: Subsets of considered frontal channels.

| Number of Frontal Channels | Frontal Channels |
|---|---|
| 11 | T7, FT7, F7, AF7, Fp1, Fpz, Fp2, AF8, F8, FT8, T8 |
| 7 | F7, AF7, Fp1, Fpz, Fp2, AF8, F8 |
| 5 | AF7, Fp1, Fpz, Fp2, AF8 |
| 3 | AF7, Fpz, AF8 |

3 RESULTS

3.1 Hierarchical Classification

Initially, the testing trials were passed through the hi-

erarchical structure and labelled into one of the 8 pos-

sible classes. Table 2 shows the classification accu-

racy of the 7 classifiers within the hierarchy while Ta-

ble 3 shows the results at each tier. Clearly, the perfor-

mance is very high at the first tier, with results of the

combined or frontal channels exceeding 98%. Hence,

classifying between horizontal and vertical eye move-

ments can be done at a very high accuracy using these

channel combinations. If occipital channels are used

instead, the performance is 71.11%.

As the trials flow through the hierarchy, the la-

belling of left vs right trials is done with a higher ac-

curacy than that involving up and down trials. Tak-

ing the frontal channels option as an example, the L

vs R vs O classifier achieved an accuracy of 89.17%

while the U vs D vs O classifier reached 73.82%.

This work further investigated the possibility of dis-

tinguishing between two visual angles, referred to as

near and far, in each of the four directions. The re-

sults of Table 2 show that left and right trials are more

accurately classified as near and far eye movements,

than up and down trials. For the former, classifica-

tion reached 70.83% (left) and 68.06% (right) while

Table 2: Classification accuracies of the 7 classifiers within the hierarchical structure at combined (C), frontal (F) and occipital (O) channels.

| Classifier | C | F | O |
|---|---|---|---|
| H vs V | 98.61% | 98.47% | 71.11% |
| L vs R vs O | 88.96% | 89.17% | 50.21% |
| U vs D vs O | 74.40% | 73.82% | 53.40% |
| FL vs NL vs O | 64.17% | 70.83% | 53.40% |
| FR vs NR vs O | 65.42% | 68.06% | 46.25% |
| FU vs NU vs O | 49.24% | 52.50% | 37.71% |
| FD vs ND vs O | 47.78% | 50.97% | 37.08% |


Figure 3: 3-Tiered hierarchical classifier used to classify EEG-based eye movement potentials into one of eight classes.

Table 3: Classification accuracies at each tier of the hierarchical classification structure at different scalp regions.

| Tier | Combined | Frontal | Occipital |
|---|---|---|---|
| 1st Tier | 98.61% | 98.47% | 71.11% |
| 2nd Tier | 76.51% | 74.38% | 43.85% |
| 3rd Tier | 54.87% | 58.31% | 28.02% |

for the latter the performance was 52.5% (up) and 50.97% (down). Table 3 also gives a clear indication of how the performance varies across each tier. For the combined channel option the classification accuracy decreases by roughly 22 percentage points at each step as the number of classes increases from 2 (tier 1), to 4 (tier 2), to 8 (tier 3).

This work also aimed to investigate, for the first time, the accuracy with which such eye movements can be classified considering only the standard occipital channels used in an SSVEP-based BCI. These results show that horizontal and vertical movements can be classified with an accuracy of over 70% but this performance decreases to 43.85% if classification is carried out between 4 classes, specifically left, right, up and down. Classifying eye movements based on

the visual angle becomes more challenging from this

set of electrodes with the resulting classification accu-

racy of the third tier going down to 28% among 8 gaze

directions. This means that if such a system is to be

used for a hybrid BCI, recordings from frontal chan-

nels would be highly desirable to boost performance.

The actual number of frontal channels required is fur-

ther analysed in Section 3.3.

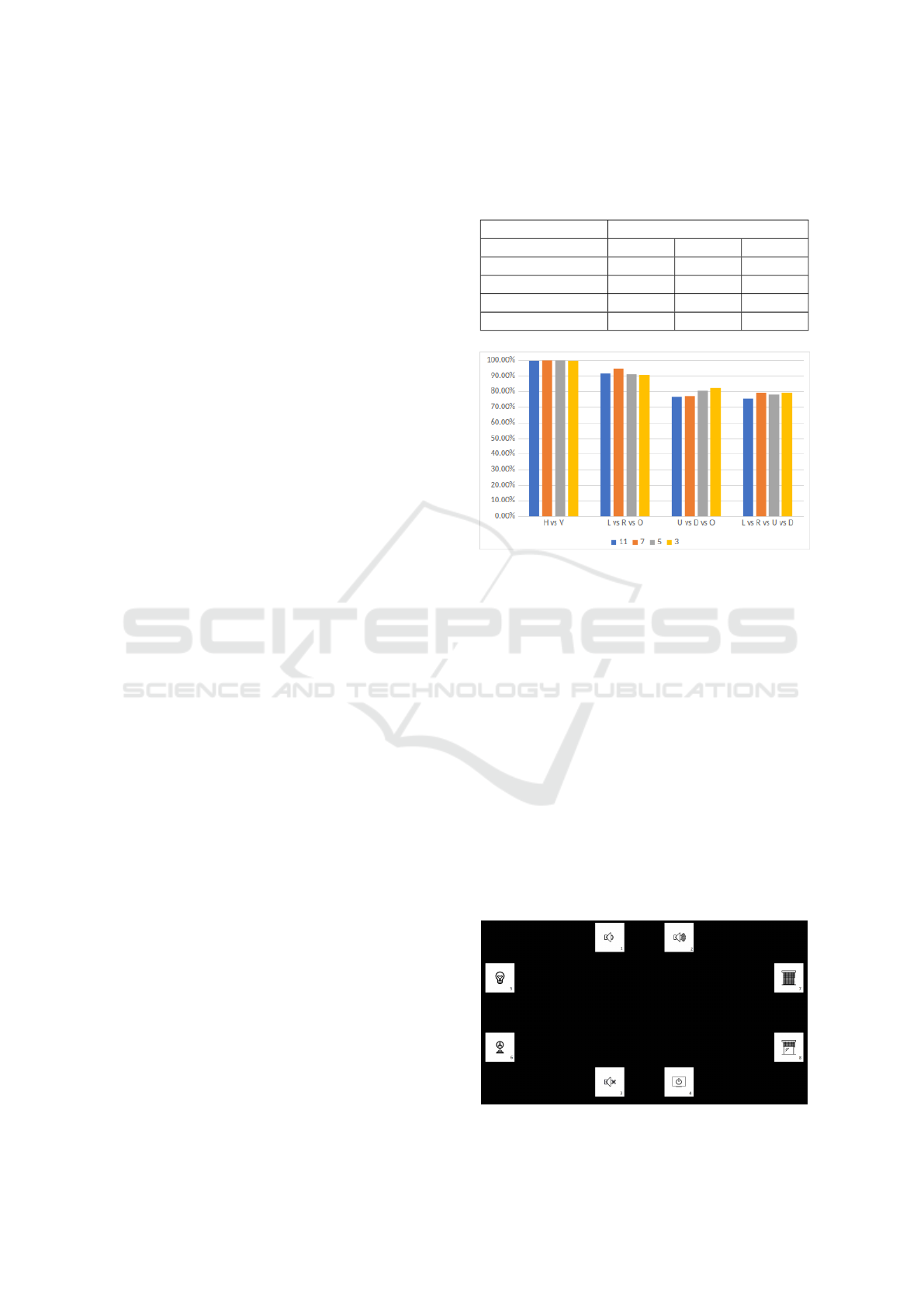

3.2 Distinction between Visual Angle

Given that eye movements corresponding to small vi-

sual angles are characterised by small saccadic dis-

placements in the EEG signals, this part of the anal-

ysis investigates whether the performance would im-

prove if trials corresponding to only large visual an-

gles are considered. The hierarchical system would

now only consist of two tiers and involve a distinc-

tion between four classes, specifically left, right, up

and down. The results of this analysis are shown in

Figure 4 and they are being compared to the classi-

fication accuracy obtained when trials of both small

and large visual angles progress through the same two

tiers in the hierarchy. It must be noted that the first

and last block of bar graphs correspond to the classi-

fication at the first and second tier respectively. The

results show that for combined or frontal channels,

the accuracies obtained are comparable for all trials,

with and without the inclusion of small visual angles.

This demonstrates that trials with small visual angles

do not adversely affect the accuracy of detection of

eye movement direction. However, if occipital channels only are used, as in a standard SSVEP-based BCI

system, the eye movement accuracies not only de-

crease substantially, but if trials with both small and

large visual angles are used, the performance is sta-

tistically significantly lower than if only trials having

large visual angles are used. These results indicate

Figure 4: Classification accuracies within a two-tier struc-

ture when using trials with both small and large visual an-

gles (‘Both’) and large visual angles only (‘Large’) for the

combined ‘C’, frontal ‘F’ and occipital ‘O’ case.


that occipital channels alone may only be useful, at

most, to distinguish between horizontal and vertical

eye-movements.

3.3 Analysis on the Amount of Frontal

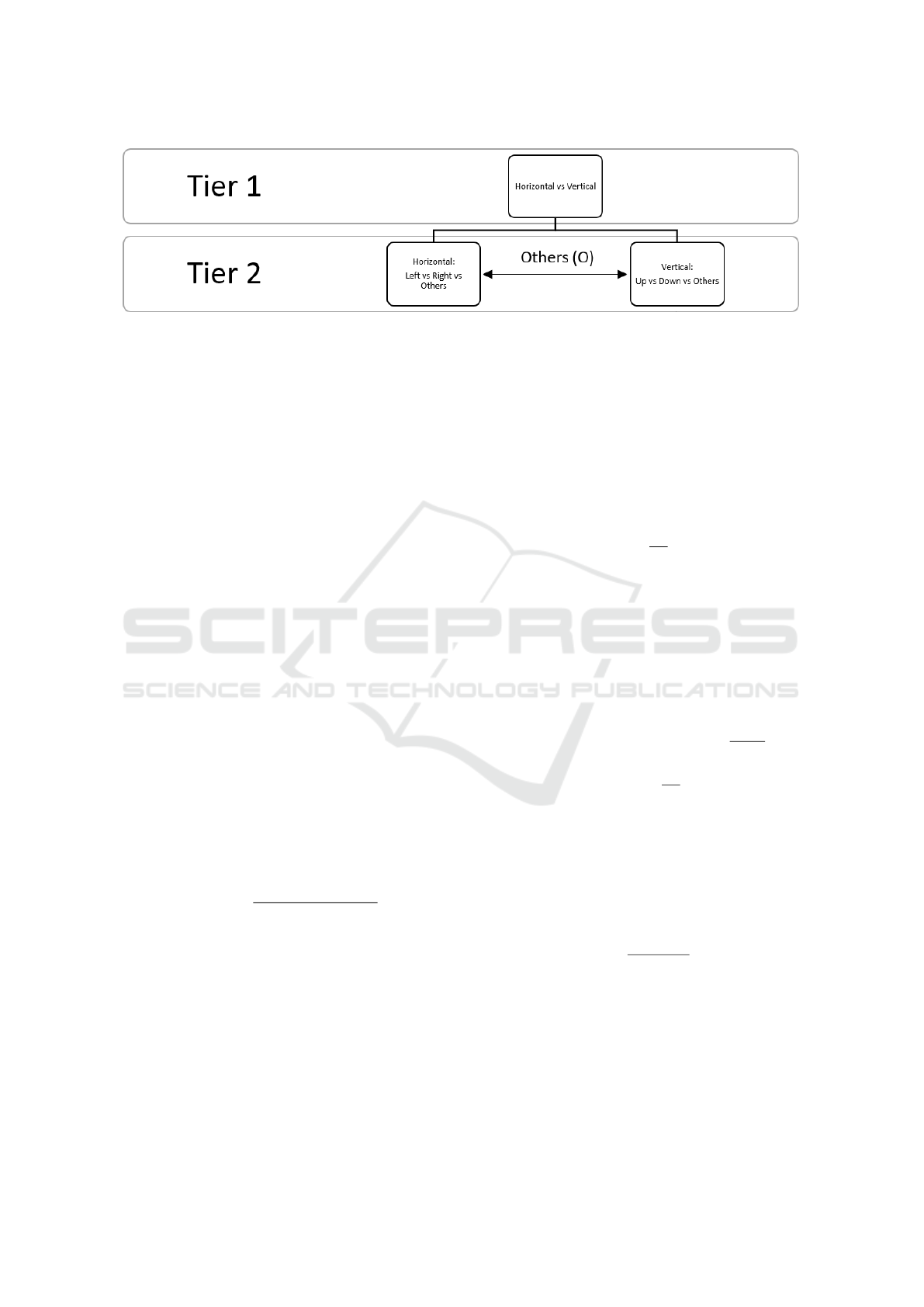

Channels

Table 4 shows the classification accuracies obtained

at the three different scalp regions considering a two-

tiered hierarchy. Using only occipital electrodes, a

classification performance of 47.60% is obtained after

the second tier but the performance of the combined

channels increased to 75.52%. This result however

was obtained by including 11 frontal channels with

the occipital channels. This analysis thus investigated

how eye movement classification performance varies

when reducing the frontal channels according to Table

1. Figure 5 shows that the classification performance

remains very stable even when reducing the number

of frontal channels down to three. This means that

if 3 frontal channels were included with the occipital

channels typical of a standard SSVEP setup, it is pos-

sible to classify eye movements into four classes with

an accuracy of close to 80%. Results using frontal

channels only indicated that a similar performance to

that of Figure 5 could be obtained, with an accuracy

around 80% when using three electrodes. This may

be useful for any type of BCI system which needs to

exploit this eye movement information in four direc-

tions with large visual angles.

4 HYBRID SSVEP HOME AUTOMATION SYSTEM

Based on the results of the previous sections, a hybrid

SSVEP based home automation system was designed

with the main menu as shown in Figure 6. The com-

mands at the top and bottom of the menu correspond

to TV controls, those on the left control the on/off

switch of a lamp and fan, and those on the right allow

for the opening and closing of the blinds. Five healthy

subjects (three male and two female) participated in

this study. EEG data was recorded at a sampling fre-

quency of 256Hz and based on the results of the previ-

ous sections, eight occipital channels and three frontal

channels were used, specifically O1, Oz, O2, PO7, PO3, POz, PO4, PO8, AF7, Fpz, and AF8.

4.1 Experimental Paradigm

A comparative analysis was carried out to compare a hybrid BCI (hBCI) which fuses EEG-based eye-movement potentials with SSVEPs, and a conventional SSVEP based BCI. Two types of hybrid BCIs were designed, specifically a sequential hBCI and a mixed hBCI. In the former, an eye movement is first detected and classified as either horizontal or vertical and the result is used such that only the side or top-bottom stimuli respectively start flickering. Hence this reduces the stimuli by half and a final selection is then made using the SSVEP detection algorithm. In the mixed hBCI, the attended icon is selected by a fusion of the SSVEP detection algorithm and the eye-movement detection algorithm. For comparison purposes, an HCI using only EEG-based eye-movement potentials was also developed.

Table 4: Classification accuracies of the two-tiered hierarchical system for the combined (C) frontal and occipital scalp regions, frontal (F) only region and occipital (O) only region.

| Classifier | C | F | O |
|---|---|---|---|
| H vs V | 99.58% | 99.58% | 76.67% |
| L vs R vs O | 91.53% | 92.92% | 60.56% |
| U vs D vs O | 76.53% | 72.92% | 58.33% |
| L vs R vs U vs D | 75.52% | 73.75% | 47.60% |

Figure 5: Classification accuracies of four different classifiers when considering 3, 5, 7, or 11 frontal electrodes only.

Prior to each session, a 72s long training session

was carried out to collect EEG-based eye-movement-

potentials pertaining to four classes (Up, Down, Left

Figure 6: Menu Layout of Smart Home BCI Application.


and Right), matching the interface shown in Figure

6. Four trials of the same length as those shown ear-

lier in Figure 2 were collected for each class and the

training data was used to construct CSP and SVM

models to classify the user’s eye movements within

the hBCI system. No training was required for the

SSVEP-recognition part as an unsupervised learning

technique was used in this case.

Following training the actual experiment was con-

ducted. For the SSVEP-based BCI, 0.75s were allo-

cated for the user to shift the gaze towards the target,

3s were allocated for the flickering of the stimuli, and

finally a 2s feedback period was provided. For the

hBCI, an additional 0.75s were allocated prior to the

gaze shift, instructing the user to focus on a central

cross. Re-centering of the user’s gaze is essential for

correctly classifying the user’s eye movement.

For the EEG-based eye movement only HCI, an

optimal cross layout as shown in Figure 7 was used.

Users were instructed to center their gaze and then

shift their gaze according to the location of the desired

target.

Both offline and online sessions were carried out

for this part of the analysis. In each case an ex-

periment for each of the SSVEP-based BCI, the hB-

CIs and the eye-movement EEG-based HCI was con-

ducted. Each exercise consisted of selecting each

possible icon three times, hence obtaining 24 trials

per experiment. In the online session however, sub-

jects were asked to execute 3 different command se-

quences, specifically:

1. Switch on TV → Close blinds → Decrease TV

volume

2. Open blinds → Switch fan → Increase TV volume

3. Switch lamp → Mute TV → Close blinds

For each command the subject was allowed three

consecutive attempts to correctly select the scheduled

icon. If the user did not manage to generate the nec-

essary SSVEP and the system thus did not succeed

in correctly detecting the target after three attempts,

the application executed the intended command and

progressed on to the following pre-defined cue.

4.2 Algorithms

Three algorithms were needed for the comparative

analysis: (i) An eye-movement classification algo-

rithm which classifies the eye-movements detected;

(ii) an SSVEP classification algorithm which pro-

cesses and classifies the SSVEP response of the sub-

ject; and (iii) a fusion algorithm for the mixed hy-

brid BCI system which fuses the output of the eye-

Figure 7: Interface for the EEG-based eye-gaze HCI.

movement classification algorithm with the SSVEP

classification algorithm.

4.2.1 Eye-movement Classification Algorithm

A similar approach to that discussed in Section 3.1

was adopted here. Specifically the EEG data was filtered with a 4th order IIR bandpass filter having cut-off frequencies of 0.5 Hz and 7 Hz. The trials were then

projected into CSP space. The natural logarithm was

applied to the variance of the resulting signals and

these were used as features to the SVM classifiers.

Seven pairs of CSP and SVM models, as listed below,

were compiled from the training data obtained from

each user prior to the experiment.

• Horizontal vs Vertical Model

• Left vs Right Model

• Left vs Other Model

• Right vs Other Model

• Up vs Down Model

• Up vs Other Model

• Down vs Other Model

The first pair classifies eye movements as either

horizontal or vertical. This is used to decrease the

number of options within the BCI menu. As shown

in Figure 8, the other six pairs are used to either i)

categorise the horizontal eye movements as leftward

or rightward eye movements ii) categorise the vertical

eye movements as upward or downward eye move-

ments or iii) through the use of the ‘Others’ class at-

tempt to recover trials which are misclassified at the

first tier. As shown in Figure 8, eye-movements labelled as ‘Others’ are passed onto the adjacent classifier within the second tier. The SVM models of these six pairs were modified with Platt scaling (Platt, 2000) such that each SVM classifier is converted into a probabilistic classifier giving an estimate of the probability that the EEG trial belongs to a specific class.

This conversion is done to aid the SSVEP detection

algorithm within the mixed hBCI.
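As a rough illustration of the Platt scaling step, the sketch below maps a raw SVM decision value to a probability through the sigmoid $1/(1 + e^{Af + B})$. The parameters `a` and `b` are normally fitted by maximum likelihood on held-out decision values; the values used here are hypothetical and the fitting step is omitted, so this shows only the mapping and not the authors' trained models.

```python
import math

def platt_probability(decision_value, a, b):
    """Map an SVM decision value f to P(positive class | f).

    Uses the sigmoid 1 / (1 + exp(a * f + b)); a is typically negative so
    that larger decision values give larger probabilities.
    """
    return 1.0 / (1.0 + math.exp(a * decision_value + b))

# Hypothetical sigmoid parameters for a 'Left vs Right' model.
A_PARAM, B_PARAM = -2.0, 0.0

p_on_boundary = platt_probability(0.0, A_PARAM, B_PARAM)   # on the margin
p_confident = platt_probability(1.5, A_PARAM, B_PARAM)     # far from the margin
p_other_class = 1.0 - p_confident                          # two-class complement
```

An epoch lying on the decision boundary gets a probability of 0.5, while one far from the boundary gets a value close to 1, which is what makes the output usable as a soft input to the fusion stage.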


Figure 8: 2-Tiered Hierarchy for Eye-Movement Classification.

4.2.2 SSVEP Classification Algorithm

The filterbank canonical correlation analysis

(FBCCA) (Chen et al., 2015) was used to process

and classify the SSVEP-related EEG obtained from

the user in the online experiments. The FBCCA

algorithm consists of three major procedures: i) fil-

terbank analysis; ii) CCA between SSVEP sub-band

components and sinusoidal reference signals; and iii)

target identification. The design of the sub-bands in

the filter bank was based upon a study by Chen et al.

(Chen et al., 2015) since the bandwidth of stimulation

frequencies used within the online experiments corre-

sponded to that used within the study. The sub-bands

covered multiple harmonic frequency bands. Each

sub-band had a different lower cut-off frequency but

they all shared the same upper cut-off frequency. The

lower cut-off frequency of the $n$-th sub-band was set at $n \times 8$ Hz while the upper one was set at 88 Hz. An additional bandwidth of 2 Hz was added to both sides of the passband for each sub-band (Chen et al., 2015).
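The sub-band layout described above can be written down as a small helper. This is a sketch under stated assumptions: the function name `subband_edges` is hypothetical, the 2 Hz margin is read as lowering the lower edge and raising the upper edge of each passband, and the number of sub-bands is not given in the text, so the value of 3 below is purely illustrative.

```python
def subband_edges(n_subbands, step_hz=8.0, upper_hz=88.0, margin_hz=2.0):
    """Return (low, high) passband edges in Hz for sub-bands n = 1..n_subbands.

    The n-th sub-band nominally spans [n * step_hz, upper_hz]; margin_hz is
    added on both sides of that passband.
    """
    return [
        (n * step_hz - margin_hz, upper_hz + margin_hz)
        for n in range(1, n_subbands + 1)
    ]

# Illustrative only: the number of sub-bands is an assumption.
edges = subband_edges(3)
```

With these defaults the first three passbands are 6 to 90 Hz, 14 to 90 Hz and 22 to 90 Hz, all below the 128 Hz Nyquist frequency implied by the 256 Hz sampling rate.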

4.2.3 Fusion Algorithm for the Mixed hBCI

In this case the extended form of Bayes Rule

(Kriegler, 2009), given by Equation 1, was used to

fuse the predictions made by the eye-movement clas-

sification algorithm and the SSVEP-target identifica-

tion algorithm for the mixed hBCI.

$$P(\omega_k \mid X) = \frac{P(X \mid \omega_k)\,P(\omega_k)}{\sum_{j=1}^{N} P(X \mid \omega_j)\,P(\omega_j)} \qquad (1)$$

where $P(\omega_k \mid X)$ is the posterior probability of a specific class $\omega_k$ given the EEG trial $X$, $P(X \mid \omega_k)$ is the class conditional distribution of $X$ for class $\omega_k$, and $P(\omega_k)$ is the prior probability of class $\omega_k$, the initial degree of belief in the class $\omega_k$. The prior probability is computed by the SVM classifier modified by Platt scaling while $P(X \mid \omega_k)$ is computed from the FBCCA algorithm by modelling the probability distribution of the correlation given the class.
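Equation 1 amounts to multiplying each class prior by its likelihood and normalising. The sketch below shows this for four illustrative classes; the function name `fuse`, the class labels and all probability values are made up for the example, and how the eye-movement probabilities are mapped onto the menu icons is not specified in the text.

```python
def fuse(priors, likelihoods):
    """Posterior P(class | X) from priors P(class) and likelihoods P(X | class)."""
    joint = {k: likelihoods[k] * priors[k] for k in priors}
    evidence = sum(joint.values())
    if evidence == 0.0:
        raise ValueError("all joint probabilities are zero")
    return {k: value / evidence for k, value in joint.items()}

# Hypothetical priors from the Platt-scaled eye-movement classifiers ...
priors = {"up": 0.5, "down": 0.3, "left": 0.1, "right": 0.1}
# ... and hypothetical likelihoods from the FBCCA correlation model.
likelihoods = {"up": 0.2, "down": 0.6, "left": 0.1, "right": 0.1}

posterior = fuse(priors, likelihoods)
selected = max(posterior, key=posterior.get)
```

In this made-up case the eye-movement prior favours "up" but the stronger SSVEP evidence for "down" wins after fusion, which illustrates how either source can overrule the other.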

4.2.4 Performance Metrics

To evaluate the performance of the different BCI sys-

tems considered in this work, the classification accu-

racy, information transfer rate (ITR) and efficiency

were used, all of which are common metrics in this

domain. The classification accuracy determines how

often a correct selection is made by the BCI and is

computed by (Stawicki et al., 2017):

$$P = \frac{N_c}{C_N} \qquad (2)$$

where $N_c$ and $C_N$ denote the number of correct classifications and the total number of classified commands, respectively.

The ITR, also known as bit rate, takes into con-

sideration the speed of the BCI system and together

with accuracy, gives a clearer picture of the system

throughput. The ITR in bits/minute is calculated as

(Stawicki et al., 2017):

$$B = \log_2 N + P \log_2 P + (1 - P) \log_2 \frac{1 - P}{N - 1} \qquad (3)$$

$$\mathrm{ITR} = B\,\frac{C_N}{T} \qquad (4)$$

where $B$ represents the number of bits per trial and $T$ denotes the length of the trial in minutes.
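A worked example may help make Equations 3 and 4 concrete. The sketch below computes the bits per trial and divides by the trial length, which corresponds to one classified command per trial. Several inputs are assumptions rather than values stated with the equations: $N$ is taken to be the number of selectable targets and set to 8 (the number of menu icons implied by 24 trials at three selections per icon), and the trial lengths of 3.75 s (gaze shift plus flicker) and 4.5 s (with the extra re-centring period) are read from Section 4.1 with the feedback period excluded.

```python
import math

def bits_per_trial(p, n_targets):
    """Bits per selection for accuracy p and n_targets classes (Equation 3)."""
    if n_targets < 2:
        raise ValueError("need at least two targets")
    bits = math.log2(n_targets)
    if p > 0.0:                      # 0 * log2(0) is taken as 0
        bits += p * math.log2(p)
    if p < 1.0:
        bits += (1.0 - p) * math.log2((1.0 - p) / (n_targets - 1))
    return bits

def itr_bpm(p, n_targets, trial_seconds):
    """Information transfer rate in bits per minute for one command per trial."""
    return bits_per_trial(p, n_targets) * 60.0 / trial_seconds

# Assumed settings: 8 targets, 3.75 s SSVEP trials, 4.5 s hybrid trials.
ssvep_itr = itr_bpm(0.875, 8, 3.75)
sequential_itr = itr_bpm(1.0, 8, 4.5)
```

Under these assumptions a perfect 4.5 s trial carries 3 bits and therefore 40 bits per minute, while an accuracy of 87.5% over 3.75 s trials gives a little under 34 bits per minute.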

Finally, the efficiency metric gives a measure of

the efficiency of the BCI system, taking into consid-

eration the cost of errors. The efficiency in terms of

the actual time taken, t, to complete a task, is calcu-

lated as (Zerafa, 2013):

$$\eta = \frac{t_{\max} - t}{t_{\max} - t_{\min}} \qquad (5)$$

The minimum and maximum times, $t_{\min}$ and $t_{\max}$, that a user could take to complete a task are computed by:

$$t_{\min/\max} = \kappa \times t_o \times \alpha \qquad (6)$$

where $\kappa$ denotes the number of commands required to complete a task, $t_o$ represents the fixed time between two consecutive commands for the system to detect an SSVEP, while $\alpha$ denotes the number of user attempts to execute the correct command.
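Equations 5 and 6 can be combined in a few lines. The sketch below assumes that $t_{\min}$ corresponds to one attempt per command and $t_{\max}$ to three attempts per command, following the three consecutive attempts allowed in the online session; the function names and the value of $t_o$ are hypothetical.

```python
def task_time(kappa, t_o, alpha):
    """Time to complete a task of kappa commands with alpha attempts each (Eq. 6)."""
    return kappa * t_o * alpha

def efficiency(t, kappa, t_o, max_attempts=3):
    """Efficiency of a task completed in time t (Eq. 5).

    Assumes t_min uses one attempt per command and t_max uses max_attempts.
    """
    t_min = task_time(kappa, t_o, 1)
    t_max = task_time(kappa, t_o, max_attempts)
    return (t_max - t) / (t_max - t_min)

# Hypothetical task: 3 commands with 5 s between consecutive commands.
KAPPA, T_O = 3, 5.0
best_case = efficiency(15.0, KAPPA, T_O)    # every command right first time
worst_case = efficiency(45.0, KAPPA, T_O)   # every command needs three attempts
halfway = efficiency(30.0, KAPPA, T_O)
```

The metric is 1 when every command succeeds at the first attempt and falls linearly to 0 when all attempts are used, so it penalises errors that accuracy alone would not capture.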


Table 5: Performance Results of Offline Analysis Across all Subjects.

| Subject | SSVEP Acc (%) | SSVEP ITR (bpm) | Sequential Hybrid Acc (%) | Sequential Hybrid ITR (bpm) | Mixed Hybrid Acc (%) | Mixed Hybrid ITR (bpm) | Eye-Gaze HCI Acc (%) | Eye-Gaze HCI ITR (bpm) |
|---|---|---|---|---|---|---|---|---|
| S01 | 83.33 | 30.11 | 91.67 | 31.36 | 75 | 19.83 | 41.67 | 15.3 |
| S02 | 41.67 | 6.12 | 62.5 | 13.24 | 75 | 19.83 | 41.67 | 15.3 |
| S03 | 87.5 | 33.69 | 100 | 40 | 70.83 | 17.47 | 41.67 | 15.3 |
| S04 | 87.5 | 33.69 | 95.83 | 35.11 | 62.5 | 13.24 | 20.83 | 1.57 |
| S05 | 45.83 | 7.75 | 62.5 | 13.24 | 37.5 | 3.88 | 16.67 | 0.42 |
| Mean | 69.16 | 16.17 | 82.5 | 19.06 | 64.16 | 11.32 | 32.5 | 9.58 |

4.3 Results for the Offline Analysis

The results of the offline analysis are quantified in

terms of the classification accuracy and ITR. The re-

sults presented in Table 5 show that the highest clas-

sification accuracy obtained within the SSVEP-based

BCI was that of 87.5% with a high ITR of 33.69

bpm. For the sequential hBCI, a high classification

accuracy of 100% was achieved with a corresponding

ITR of 40bpm, while for the mixed hBCI, the high-

est classification accuracy achieved was that of 75%

with an ITR of 19.83 bpm. As for the EEG-based

eye-gaze HCI, the highest performance obtained was

with a classification accuracy of 41.67% and an ITR

of 15.3bpm. On average, the best performance was

achieved by the sequential hBCI with an average ac-

curacy of 82.5% and an ITR of 19.06 bpm.

With the exception of Subject 2, although differ-

ences in performance were noted, in general, sub-

jects achieved their best performance for the sequen-

tial hBCI. Conversely, Subject 2 obtained poor re-

sults with an SSVEP-based BCI and achieved the best

performance when using the mixed hybrid BCI, thus

demonstrating the strength of this hBCI configuration

when the SSVEP response of a subject is weak.

A considerable drop in performance was noted for the EEG-based eye-gaze HCI, indicating that the classification of EEG-based eye-movements, according to their visual angle extent, hinders the performance of an HCI system. In addition, the drop in performance is attributable to the absence of SSVEP recognition techniques within the HCI.

Figure 9: Classification Accuracies of Different BCI Architectures against Varying Stimulating Periods.

Figures 9 and 10 show the relation between the

classification accuracy and ITR, respectively, and the

stimulating period. As the stimulus flickering time

is reduced from 3 s down to 1 s, the SSVEP-based

BCI, the sequential hBCI and the mixed hBCI all dis-

play a reduction in accuracy, with the SSVEP-based

BCI and the mixed hBCI suffering the highest and

lowest reduction, respectively. It may also be noted

that the sequential hBCI remains the best performing

BCI throughout. With a stimulus period of 0.5s, the

SSVEP is normally difficult to detect; therefore, it is

not surprising that for this stimulus period, the mixed

hBCI has the highest performance, albeit at around

35%, indicating that the strength of this hBCI config-

uration is mainly due to the separate eye-movement

detection. Similarly, for this lowest stimulus period,

the EEG-based eye-gaze HCI also outperformed the

sequential hBCI and the SSVEP-based BCI.

With regard to the ITR, as the stimulus flickering time is reduced from 3 seconds down to 1 second, the performance of the SSVEP-based BCI decreases monotonically. Conversely, the ITRs of the sequential hBCI and the mixed hBCI tend to remain steady, even exhibiting a slight increase, down to a stimulus period of 1.5 s, with a noticeable but small reduction at 1 s. Similar to the accuracy trends, the sequential hBCI has the best ITR for stimulus periods from 3 s down to 1 s. At a stimulus period of 0.5 s, both the SSVEP-based BCI and the sequential hBCI exhibit a large drop in ITR, with the mixed hBCI exhibiting the highest ITR at a mere 9 bpm. The EEG-based eye-gaze HCI exhibits an ITR of 6 bpm and, similar to the accuracy, for a stimulus period of 0.5 s, this HCI outperforms the sequential hBCI and the SSVEP-based BCI.

Figure 10: ITRs of Different BCI Architectures against Varying Stimulating Periods.

From the classification accuracy and ITR results of the offline comparative analysis, it was concluded that a stimulating period of 2 s is an adequate time window. Hence, for the online experiment, whose results are presented in the next section, the stimulating period was set to 2 s.
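The trade-off between accuracy and stimulating period discussed above can be made concrete with a small calculation. The following is a minimal sketch that assumes the widely used Wolpaw definition of ITR; this section does not restate the formula used, so the definition, the function name `wolpaw_itr_bpm`, the number of targets and the selection times below are all illustrative assumptions rather than the authors' implementation.

```python
import math


def wolpaw_itr_bpm(accuracy: float, n_targets: int, selection_time_s: float) -> float:
    """Information transfer rate in bits per minute (Wolpaw definition, assumed here).

    accuracy         -- fraction of correct selections, between 0 and 1
    n_targets        -- number of selectable targets (hypothetical value in the demo)
    selection_time_s -- total time per selection in seconds (hypothetical value)
    """
    if n_targets < 2:
        raise ValueError("n_targets must be at least 2")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    if selection_time_s <= 0:
        raise ValueError("selection_time_s must be positive")

    p = accuracy
    # At or below chance level the formula is conventionally clipped to zero.
    if p <= 1.0 / n_targets:
        return 0.0

    bits = math.log2(n_targets)
    if p < 1.0:
        # For p == 1 both correction terms vanish, so they are skipped.
        bits += p * math.log2(p) + (1.0 - p) * math.log2((1.0 - p) / (n_targets - 1))

    return bits * 60.0 / selection_time_s


if __name__ == "__main__":
    # Hypothetical (accuracy, time) pairs chosen only to illustrate the trade-off;
    # they are NOT results from the paper.
    for acc, t in [(0.90, 3.0), (0.85, 2.0), (0.40, 0.5)]:
        print(f"accuracy={acc:.2f}, time={t:.1f} s -> {wolpaw_itr_bpm(acc, 8, t):.2f} bpm")
```

Under this definition, shortening the selection time raises the ITR only as long as the accuracy does not fall too far, which is consistent with choosing an intermediate stimulating period rather than the shortest one.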

4.4 Results for the Online Analysis

An online experiment was conducted to allow the subjects to operate the smart home BCI using either an SSVEP-based BCI architecture, a sequential hBCI architecture or a mixed hBCI architecture. In contrast with the offline analysis, the performance of the three smart home BCI systems is quantified not only in terms of classification accuracy and ITR but also in terms of efficiency. Since the online experiment grants each subject a number of attempts to complete a task, the efficiency evaluation criterion was introduced to take this number into consideration.

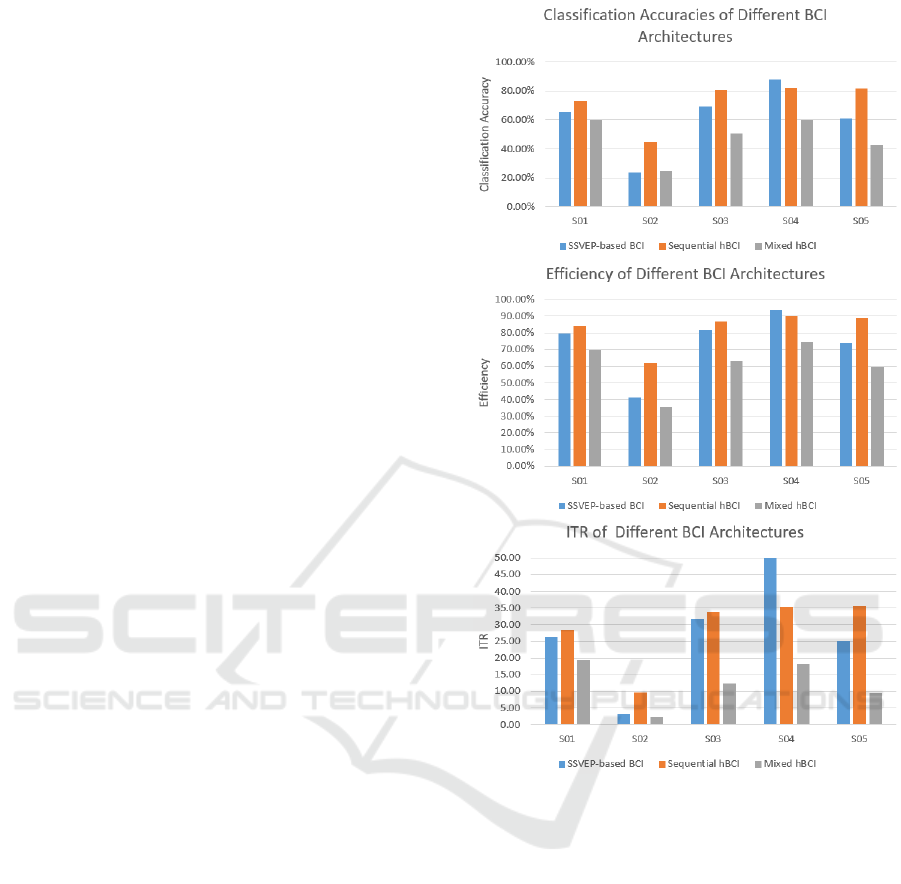

Figure 11 illustrates the performance achieved by each subject for each BCI architecture on the basis of classification accuracy, efficiency and ITR. With the exception of Subject 4, subjects achieved their best performance when using the sequential hybrid BCI. Furthermore, subjects generally achieved better results with the SSVEP-based BCI than with the mixed hybrid BCI. However, as can be seen in the top bar plot of Figure 11, Subject 2, who achieved poor results with the SSVEP-based BCI, achieved a slightly higher classification accuracy when using the mixed hybrid BCI, demonstrating once more the advantages of hBCI configurations when the SSVEP response of a subject is weak.

Figure 11: Performance Metrics for the Three Different BCI Architectures across Five Different Subjects.

When averaging the classification accuracy, ITR and efficiency across the subjects for each smart home BCI architecture, results showed that the sequential hBCI outperformed the other two systems on all performance metrics. The sequential hBCI was found to be 11.3% more accurate and 8.3% more efficient than the smart home SSVEP-based BCI. Pairwise t-tests showed that the differences between the two systems were significant (p < 0.01 for both metrics). In terms of ITR, a slight difference of 1 bpm was found between the two, in favour of the smart home sequential hBCI; however, this was not found to be statistically significant. The smart home sequential hBCI exceeded the accuracy, efficiency and ITR of the mixed hBCI by 25.1%, 21.9% and 16.21 bpm, respectively, and the differences between these two smart home hybrid BCIs were also found to be statistically significant (p < 0.01).
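The pairwise comparisons reported above can be illustrated with a paired t-test on per-subject scores. The sketch below is an assumption about the general form of such a test and not the authors' analysis code: the function name `paired_t_statistic` and the per-subject values are hypothetical placeholders, and significance is checked against the standard two-sided critical value for 4 degrees of freedom (five subjects) at the 0.01 level instead of computing an exact p-value.

```python
import math
import statistics

# Two-sided critical value of Student's t for df = 4 at alpha = 0.01 (standard table value).
T_CRIT_DF4_ALPHA_001 = 4.604


def paired_t_statistic(scores_a, scores_b):
    """Return (t, degrees_of_freedom) for a paired t-test on two matched samples."""
    if len(scores_a) != len(scores_b):
        raise ValueError("paired samples must have the same length")
    n = len(scores_a)
    if n < 2:
        raise ValueError("at least two pairs are required")

    # Per-subject differences between the two systems.
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    mean_diff = statistics.fmean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd_diff == 0:
        raise ValueError("differences have zero variance; t is undefined")

    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


if __name__ == "__main__":
    # Placeholder per-subject accuracies (percent) for two systems.
    # These numbers are invented for illustration and are NOT the paper's data.
    system_a = [82.0, 64.0, 93.0, 72.0, 84.0]
    system_b = [80.0, 60.0, 90.0, 70.0, 80.0]

    t, df = paired_t_statistic(system_a, system_b)
    significant = abs(t) > T_CRIT_DF4_ALPHA_001
    print(f"t = {t:.3f}, df = {df}, significant at 0.01: {significant}")
```

A paired test is the natural choice in this setting because every subject used each architecture, so between-subject variability cancels in the per-subject differences.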

The subjects taking part in the online study were also asked to fill in a questionnaire so that the different systems could be compared on the basis of user feedback. Overall, users found the systems easy to control, with the smart home sequential hBCI reported as being the easiest. In terms of concentration requirements, subjects in general found no difference between the three systems. Users perceived the sequential hBCI system as the least erroneous and the mixed hBCI as the most erroneous. This agrees with the quantitative results discussed earlier.

Users perceived the flickering of the stimuli in the hybrid systems to be less than that in the SSVEP-based BCI. This may be attributed to the fact that the hybrid systems make use of four flickering stimuli instead of eight. In fact, users found the hybrid systems to be less tiring than the SSVEP-based BCI. Overall, users agreed that the time taken to make a selection was appropriate for all systems. With regard to the smart home system features, all subjects stated that the menu interface was easy to get used to and that it was adequate for a smart home system.

5 CONCLUSIONS

This work concluded that eye movements can be classified reliably into horizontal or vertical eye movements, using frontal EEG channels, with an accuracy of 98.47%. For the second tier in the proposed hierarchical structure, classification into left, right, up or down eye movements reached an accuracy of 74.38%. Finally, the eye movements could be classified into the eight considered saccadic gaze angles with an accuracy of 58.31%. These results compare well with the limited literature in this field. Specifically, (Dietriech M. P., 2017) obtained a classification accuracy of 96.6% when classifying between 4 extreme eye movements (up, down, left, right) and the central location using a kNN classifier, and 58.4% when using a linear SVM classifier. This, however, was based on a single subject only, as opposed to our work, which was validated on 5 subjects.

The results presented in this paper also show that reliable eye-movement information may be extracted using only the occipital EEG channels, though with lower accuracies. Specifically, classification into horizontal or vertical eye movements reached an accuracy of 71.11%, while classification in the second and third tiers of the proposed hierarchical structure dropped to 43.85% and 28.02%, respectively. However, if three frontal channels are added to the occipital channels, vertical and horizontal eye movements at large visual angles may be distinguished with an accuracy close to 80% using the proposed hierarchical classifier.

These results were then used for the development of a smart home automation system where eye movement information occurring prior to the visually evoked potential was exploited to improve the classification performance of a hybrid BCI. An offline study showed that a sequential hBCI gave an average accuracy over 5 subjects of 82.5% and an ITR of 19.06 bpm, while the mixed hBCI and the SSVEP-based BCI gave accuracies of 64.16% and 69.16%, respectively, and ITRs of 11.32 bpm and 16.17 bpm, respectively. The results of the online automation system also confirmed that users performed better with the sequential hBCI and found it more intuitive to control. These results show that eye movement information extracted from standard EEG channels typically used in an SSVEP-based BCI can provide relevant information which improves the classification performance, especially for subjects whose SSVEP response is not very strong.

ACKNOWLEDGEMENTS

This work was partially supported through the Project

R&I-2015-032-T ‘BrainApp’ financed by the Malta

Council for Science & Technology, for and on be-

half of the Foundation for Science and Technology,

through the FUSION: R&I Technology Development

Programme.

REFERENCES

Belkacem, A., Hirose, H., Yoshimura, N., Shin, D., and Koike, Y. (2013). Classification of four eye directions from EEG signals for eye-move-based communication system. J Med Biol Eng, 34:581–588.

Belkacem, A., Saetia, S., Zintus-art, K., Shin, D., Kambara, H., Yoshimura, N., Berrached, N., and Koike, Y. (2015). Real-time control of a video game using eye-movements and two temporal EEG sensors. Computational Intelligence and Neuroscience.

Bishop, C. (2006). Pattern Recognition and Machine Learning. Springer.

Chen, X., Wang, Y., Gao, S., Jung, T., and Gao, X. (2015). Filter bank canonical correlation analysis for implementing a high-speed SSVEP-based brain-computer interface. Journal of Neural Engineering, 12(4).

Dietriech M. P., Winterfeldt G., V. M. S. (2017). Towards EEG-based eye-tracking for interaction design in head-mounted devices. 7th International Conference on Consumer Electronics (ICCE).

Gupta, S., Soman, S., Raj, P., Prakash, R., Sailaja, S., and Borgohain, R. (2012). Detecting of eye movements in EEG for controlling devices. IEEE Int. Conf. on Computational Intelligence & Cybernetics.

Hsieh, C., Chu, H., and Huang, Y. (2014). An HMM-based eye movement detection system using EEG brain-computer interface. IEEE International Symposium on Circuits and System.

Johannes M. G., Pfurtscheller G., F. H. (1999). Designing optimal spatial filters for single-trial EEG classification in a movement task. Clinical neurophysiology, 110(5):787–798.

Kriegler, E. (2009). Updating under unknown unknowns: An extension of Bayes’ rule. International Journal of Approximate Reasoning, 50(4):583–596.

Padfield, N. (2017). Development of a hybrid human computer interface system using SSVEPs and eye gaze tracking. Master’s thesis, University of Malta.

Peirce, J., Gray, J. R., Simpson, S., MacAskill, M., Höchenberger, R., Sogo, H., Kastman, E., and Lindeløv, J. K. (2019). PsychoPy2: Experiments in behavior made easy. Behavior research methods.

Platt, J. (2000). Probabilistic outputs for support vector machines and comparisons to regularized likelihood methods. Adv. Large Margin Classif., 10.

Ramoser, H., Muller-Gerking, J., and Pfurtscheller, G. (2000). Optimal spatial filtering of single trial EEG during imagined hand movement. IEEE transactions on rehabilitation engineering, 8(4):441–446.

Saravanakumar, D., R. R. M. (2018). A visual keyboard system using hybrid dual frequency SSVEP based brain computer interface with VOG integration. In International Conference on Cyberworlds.

Stawicki, P., Gembler, F., Rezeika, A., and Volosyak, I. (2017). A novel hybrid mental spelling application based on eye tracking and SSVEP-based BCI. Brain Sciences, 4.

Zerafa, R. (2013). SSVEP-based brain computer interface (BCI) system for a real-time application. Master’s thesis, University of Malta.
