Emotion State Induction for the Optimization of Immersion and User Experience in Automated Vehicle Simulator

Javier Silva[a], Juan-Manuel Belda-Lois[b], Sofía Iranzo[c], Begoña Mateo[d], Víctor de Nalda-Tárrega[e], Nicolás Palomares[f], José Laparra-Hernández[g] and José S. Solaz[h]

Instituto de Biomecánica de Valencia, Universitat Politècnica de València, València, Spain

nicolas.palomares@ibv.org, jose.laparra@ibv.org, jose.solaz@ibv.org

Keywords: Connected and Automated Vehicles, Human Factors, Emotion, Driving Simulator, Physiological Signals.

Abstract: User acceptance is one of the predominant barriers to the adoption of connected and automated vehicles (CAVs) and should be addressed with the highest priority. Loss of control, low perceived safety and the resulting lack of trust are some of the main aspects that lead to scepticism about the adoption of this technology. To address this issue, the H2020 project SUaaVE seeks to enhance the acceptance of CAVs by understanding the passengers’ state and managing corrective actions in the vehicle to enhance the trip experience. The research to understand passenger emotions is mainly based on experimental tests consisting of immersive experiences in which subjects participate in a simulated CAV specifically adapted to SUaaVE research purposes. This paper presents different strategies for obtaining realistic simulations with high levels of immersion in these tests using a dynamic platform, with the objective of studying the emotional reactions of the subjects in representative scenarios and events within the framework of CAVs.

1 INTRODUCTION

The automation of driving is changing the role of humans, so that automated vehicles at SAE levels L4 and L5 (SAE International, 2021) will take over, for specific applications, all the control and monitoring tasks performed by humans in conventional motor vehicles (Drewitz et al., 2020). However, the lack of control can lead to a lack of trust among users of fully automated vehicles (Lee & See, 2004), which has been identified as a key issue in the acceptance and adoption of this emerging technology (Bazilinskyy et al., 2015). In this regard, trust in automation is strictly related to “emotions on human-technology interaction, which is a key factor for acceptance, but is also important for safety and performance” (Lee & See, 2004). For this reason, it should be a factor considered when designing complex, high-consequence systems like Connected Automated Vehicles (CAVs) (Paddeu et al., 2020).

[a] https://orcid.org/0000-0001-5115-1392

[b] https://orcid.org/0000-0002-7648-799X

[c] https://orcid.org/0000-0003-2579-7135

[d] https://orcid.org/0000-0003-2633-8993

[e] https://orcid.org/0000-0002-0988-341X

[f] https://orcid.org/0000-0002-4523-341X

[g] https://orcid.org/0000-0002-7121-5418

[h] https://orcid.org/0000-0002-2058-9591

In this context, the H2020 SUaaVE project (SUpporting acceptance of automated VEhicle) aims to enhance the acceptance of CAVs through the formulation of ALFRED, a human-centred artificial intelligence that humanizes the actions of the CAV by understanding the passengers’ state and managing corrective actions in the vehicle to optimize the trip experience. One of the main challenges in SUaaVE, in line with recent studies on empathic vehicles (Braun et al., 2020), is recognizing the emotions of the vehicle occupants. It is also important to consider mental stress when identifying these emotions, in terms of drivers’ perception and cognition, above all because of the possible effect of burn-out syndromes (Cuzzocrea et al., 2013). The measurement of these emotions is based on estimating arousal (level of intensity) and valence (level of pleasantness). These values can be estimated from physiological signals: electrocardiogram (ECG), heart rate (HR), galvanic skin response (GSR), skin temperature (T) and facial electromyogram (EMG) from the corrugator and zygomatic muscles (Shu et al., 2018). Other approaches could also include additional behavioural parameters (facial expression according to landmarks, blinking, etc.) (Suja et al., 2014).

Silva, J., Belda-Lois, J., Iranzo, S., Mateo, B., de Nalda-Tárrega, V., Palomares, N., Laparra-Hernández, J. and Solaz, J.

Emotion State Induction for the Optimization of Immersion and User Experience in Automated Vehicle Simulator.

DOI: 10.5220/0010722700003060

In Proceedings of the 5th International Conference on Computer-Human Interaction Research and Applications (CHIRA 2021), pages 251-257

ISBN: 978-989-758-538-8; ISSN: 2184-3244

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In order to understand passenger emotions, the research is mainly based on experimental tests consisting of immersive experiences in which subjects participate in a simulated CAV specifically adapted to SUaaVE research purposes. This makes it possible to monitor the subjects’ reactions in different situations and contexts within the framework of automated vehicles.

This paper describes the experimental design for the first test of the project in a driving simulator. The aim is to provide an immersive experience to the participants, with the objective of eliciting relevant and characteristic emotions in the framework of automated vehicles while gathering their physiological reactions.

2 METHODS

2.1 Experimental Design

The main objective of the experimental tests was to analyse the subjects’ responses to different on-board situations and circumstances in an automated vehicle.

To that end, we defined a set of scenarios required to validate the emotional model. These scenarios were designed to elicit the most representative emotions that passengers can feel in the framework of automated vehicles, each of which can be represented by different values of arousal and valence. These emotions are fear, hope, pity, satisfaction, distress, anger, relief and joy. These relevant and critical scenarios were defined in a previous study within SUaaVE using people-oriented innovation techniques, with the participation of 592 subjects from different EU countries by means of online qualitative research tools and surveys (Belda et al., 2021).

A total of seven scenarios, each lasting between 3 and 5 minutes, were generated with CARLA, an open-source simulator for autonomous driving research. The first scenario consisted of manual driving. Its purpose was to let participants get to know the dynamics of the platform, the simulated dimensions of the car, the visual perspective and the sounds and, in general, become familiar with the simulation environment. Five more scenarios were designed to simulate fully automated driving (L5), whereas one more scenario simulated automated driving (L4+) with a car failure that required the participant to take over the vehicle (manual driving due to an electronic failure in the vehicle). Within this scenario, several outcomes are possible. For example, before a highway exit with the vehicle in autonomous mode, the participant is requested to take over the car to drive inside an urban area. If the participant does not take over, the vehicle stops safely after entering the new lane. Figure 1 shows a screenshot of one of these scenarios.

Figure 1: Example screenshots of some scenarios used in

the test.

The experimental session has been designed in

order not to exceed the duration of two hours.

2.2 Immersion Methods for Participants

The tests were performed in the Human Autonomous Vehicle (HAV) at IBV, shown in Figure 2, a complete dynamic driving simulator (six degrees of freedom) that can emulate the behaviour of a vehicle with different degrees of autonomy, enabling a fully immersive driving experience.

It is composed of three large screens to facilitate immersion and includes a steering wheel and pedals for simulating low levels of automation. The simulator provides surrounding sounds to the participants, representing engine sounds (emulating the sound of an electric car), the road and other cars passing by. The HAV includes an instrument panel that provides complete vehicle information to the participant, including speed, regenerative braking, battery level, trip information (expected time of arrival) and failure information. Both trip and failure information are also notified redundantly through audio messages.

SUaaVE 2021 - Special Session on Research Trends to Enhance the Acceptance of Automated Vehicles


Figure 2: Example of HAV simulating the behaviour of an

autonomous vehicle in a highway.

In order to adjust and validate the test protocol, a pre-pilot test with 6 participants was conducted. The participants included both internal IBV staff and external participants (to avoid potential bias in the results). Based on the preliminary results obtained, we designed and implemented an extra set of strategies to optimize the user experience by enhancing realism and improving the participants’ attention during the test. These strategies are described next:

Initial context: Imagination is one of the simplest emotion induction techniques, so at the beginning of each scenario we needed the participants to imagine situations that elicit emotions. At first, the technician in charge of the tests informed the participants that they were in a hurry to reach the destination. We noted that films, more than any other art form, have a way of drawing viewers into a situation that helps people empathize and identify with the characters. We therefore recorded videos of professional actors reading a script, which were later played before each scenario. With the emotions and personality conveyed in the actor’s voice and detailed storytelling, we can elicit an initial cognitive load in the participant. There are further examples in the literature in which methods that augment the construction of predictive models with domain knowledge provide support for producing understandable explanations for predictions, which is one of the future objectives of this experimentation (Holzinger & Kieseberg, 2020).

Feedback during scenario: In each scenario, visual and audible information was provided through a Human-Machine Interface (HMI) in the HAV central console, and audio messages were played as if they came from an AI virtual assistant, providing the time to destination, vehicle status and other trip information. A screenshot of the HMI is shown in Figure 3.

This information lets the participant know the driving mode (sportive, eco, etc.), weather conditions that could affect the vehicle’s route (such as cloudy, rainy or windy conditions), the status of traffic (such as free-flowing traffic or jams at several locations on the way) and other pieces of information that could affect the emotional state of the passenger of an autonomous vehicle on both urban and intercity trips. The results of a meta-analysis of 32 studies with a total of 2468 participants showed that success-failure manipulation through real-time feedback is a reliable induction technique for evoking both positive and negative affective reactions (Nummenmaa & Niemi, 2004).

Figure 3: Detail of the HMI.

Embodiment: This can be a powerful tool to elicit cognitive emotions in participants. For instance, in one of the designed scenarios we force a postural change: the participant has to pick up a ringing smartphone, and the caller asks them to finish a certain office task (while keeping eye contact with the simulated road). This obliges the user to adopt an attitude similar to the one taken in a stressful situation at work.

In order to gather the subjective assessment of the subjects, after each scenario the participants reported the emotions felt during each specific event of the journey through the Arousal and Valence scales of the Self-Assessment Manikin (SAM) (Bradley & Lang, 1994). Valence, on a scale from 1 to 9, refers to how negative or positive the emotion felt is. In the same way, arousal refers to the intensity of the emotion in terms of calmness or excitation, as seen in Figure 4. A total of 30 events were evaluated across all scenarios.


Figure 4: SAM questionnaire.

2.3 Participants

A total of 50 volunteers made up the subject sample in the test. The sample was composed of car drivers between 25 and 55 years old, balanced in age and BMI. The sample had an equal distribution of males and females.

The exclusion criteria for participants were simple. Participants could not have visual or hearing impairments (wearing glasses was allowed) and, in general, could not suffer from motion sickness in transport. In addition, they had to attend the test free from drowsiness and without having consumed alcohol or drugs in the preceding hours.

The participants’ physiological signals were

continuously monitored and synchronized with the

simulator. The synchronization is needed to associate

the scenario events with the onset of the participants’

emotional reactions.

The test was approved by the Ethical Committee

of the Polytechnic University of Valencia (UPV).

2.4 Acquisition Instrumentation

We used different equipment to gather the biosignals and behaviour of the participants during the test. The devices used for physiological data acquisition were:

Biosignalsplux©. This equipment, shown on the left in Figure 5, allows high-quality acquisition of physiological signals at a high sampling frequency by placing electrodes on the skin. The device gives accurate measurements and is very flexible for synchronizing with other devices such as, for instance, the software of driving simulators.

Empatica E4© wristband (see the right part of Figure 5). A non-invasive device and the only wearable on the market to combine Electrodermal Activity (EDA), Photoplethysmography (PPG) and Temperature sensors simultaneously, enabling the measurement of the balance between sympathetic and parasympathetic nervous system activity.

Figure 5: Left: biosignalsplux©. Right: Empatica E4©

wristband.

The aim of measuring with both devices is to have a more complete overview of the physiological changes that result from the interplay between the sympathetic and parasympathetic systems. On the one hand, biosignalsplux allows a deeper and more accurate analysis of the physiological reactions. On the other hand, the Empatica E4 measures the signals in a much less invasive way, so it could be used in later stages of testing (being easier for the test subjects to wear) once the signals are better characterized.

In general, the signals collected through these sensors are involuntary and subconscious and are therefore difficult to falsify, so they can be used to assess emotional states continuously without disrupting the performance of the task. Through different processing and analysis steps, we can obtain comparable outputs from them. The physiological signals acquired are described in the following section.

Regarding the study of the participants’ physical behaviour, a camera was placed in front of the driving simulator to record facial gestures and understand the participants’ reactions. There are several software-based tools on the market capable of analysing facial expressions, such as the Affectiva Affdex emotion recognition by iMotions© or the Rekognition service by Amazon Web Services©. These software toolboxes detect changes in key facial features (i.e., facial landmarks such as brows, eyes and lips) and generate data representing the basic emotions of the recorded face. A photo of this sensor in the HAV and the image acquired are shown in Figure 6. More information about the parameters calculated is given in the following section.

These experimental data (physiological signals, SAM questionnaires, labels with emotion type, arousal, valence and facial videos) are the input used to generate the emotional model.


Figure 6: Left: camera placed in the driving simulator.

Right: Example of facial landmarks of Affectiva.

2.5 Data Gathered

The set of physiological signals acquired and visualized in real time for each participant during the whole test is detailed next:

Electrocardiogram (ECG) sensor (in the case of biosignalsplux) and Blood Volume Pulse (BVP) sensor (in the case of Empatica) to obtain the heart rate (HR) and heart rate variability (HRV).

Skin conductance sensor to record the electrodermal activity (EDA).

Two facial electromyography (EMG) sensors to record the recruitment activity of the zygomaticus major and corrugator supercilii muscles.

Infrared thermopile sensor to gather the peripheral skin temperature (only available in the Empatica E4© wristband).
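As an illustration of how HR and HRV can be derived once heartbeats have been detected in the ECG or BVP signal, the following minimal Python sketch computes the mean heart rate and RMSSD, a common time-domain HRV index, from beat timestamps. The function names, the choice of RMSSD and the example beat times are assumptions made for illustration; the HRV metrics actually used in the test are not specified here.

```python
from math import sqrt


def inter_beat_intervals(beat_times_s):
    """Intervals (in seconds) between consecutive detected heartbeats."""
    return [b - a for a, b in zip(beat_times_s, beat_times_s[1:])]


def heart_rate_bpm(ibi_s):
    """Mean heart rate in beats per minute from inter-beat intervals."""
    return 60.0 / (sum(ibi_s) / len(ibi_s))


def rmssd_ms(ibi_s):
    """Root mean square of successive interval differences, in milliseconds."""
    diffs_ms = [(b - a) * 1000.0 for a, b in zip(ibi_s, ibi_s[1:])]
    return sqrt(sum(d * d for d in diffs_ms) / len(diffs_ms))


# Hypothetical beat timestamps (seconds), for illustration only.
beats = [0.0, 0.8, 1.7, 2.5]
ibi = inter_beat_intervals(beats)
hr = heart_rate_bpm(ibi)
hrv = rmssd_ms(ibi)
```

A higher RMSSD indicates more beat-to-beat variability; in practice the beat timestamps would come from a peak detector applied to the recorded signal.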

Regarding behavioural data, we are able to calculate the following parameters using facial landmark analysis:

Basic emotions: Calmness, Joy, Anger, Surprise, Fear, Sadness, Disgust and Contempt, with probability scores on a 0-100% scale.

Valence: A measure of how positive or negative the expression is.

Engagement: A general measure of overall engagement or expressiveness.

Attention: A measure of the subject’s point of focus based on the head position.

Interocular distance: The distance between the two outer eye corners.

Pitch, yaw and roll: The x, y and z rotation of the head.

The physiological signals and the videos are

synchronized with the time of each scenario using

UNIX timestamps.
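A minimal Python sketch of this kind of timestamp-based alignment is given below. It assumes that each signal is stored as a list of samples with a parallel, sorted list of UNIX timestamps, and it extracts the samples that fall in a window after a scenario event. The function name, the window length and the example values are hypothetical and are not taken from the SUaaVE pipeline.

```python
from bisect import bisect_left


def window_after_event(sample_times, samples, event_time, duration_s):
    """Return the samples recorded in [event_time, event_time + duration_s).

    sample_times must be sorted UNIX timestamps (seconds), one per sample.
    """
    start = bisect_left(sample_times, event_time)
    end = bisect_left(sample_times, event_time + duration_s)
    return samples[start:end]


# Hypothetical recording sampled at 2 Hz, for illustration only.
times = [1000.0, 1000.5, 1001.0, 1001.5, 1002.0]
values = [1, 2, 3, 4, 5]
segment = window_after_event(times, values, event_time=1000.5, duration_s=1.0)
```

Because every device and the simulator log against the same clock, the same event timestamp can be used to cut matching windows from the physiological signals and from the facial video.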

Besides objective measures, as mentioned before, the subjective opinion of participants was also collected. It was gathered through:

Socio-demographic profile form: Age, gender,

driving experience and preferences.

SAM questionnaire (Geethanjali et al., 2017).

Appraisal of the emotional state of the

participant (level of arousal and valence) with

regards to different events on road.

Survey to assess acceptability and acceptance

from the perspective of automated vehicles and

developed in SUaaVE by the University of

Groningen (Post et al., 2020).

Since each physiological signal has its own representation, all of them need to be brought to the same scale. As we aim to have a continuous representation of the emotional state of the participants (in the domain of valence and arousal), the physiological signals are calibrated with the self-reported emotional status at specific times.
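One simple way to picture this calibration is sketched below in Python. It is only an assumption about how such a step could work, not the procedure used in the project: a signal feature is standardized with a z-score so that different signals share a scale, and a straight line is then fitted between the standardized feature and the SAM ratings reported at known sample positions. All names and numbers are hypothetical.

```python
from statistics import mean, pstdev


def zscore(values):
    """Standardize a feature to zero mean and unit variance."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def fit_line(x, y):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    mx, my = mean(x), mean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    if sxx == 0:
        raise ValueError("anchor values must not all be identical")
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    return slope, my - slope * mx


def calibrate(feature, anchor_indices, sam_ratings):
    """Map a continuous feature onto the 1-9 SAM scale using self-report anchors."""
    z = zscore(feature)
    slope, intercept = fit_line([z[i] for i in anchor_indices], sam_ratings)
    # Clamp to the valid SAM range.
    return [min(9.0, max(1.0, slope * v + intercept)) for v in z]


# Hypothetical feature values with self-reports at the first and last sample.
calibrated = calibrate([1, 2, 3, 4, 5], anchor_indices=[0, 4], sam_ratings=[3, 7])
```

With only a few anchors per participant a linear map is about as much as can be justified; a real pipeline would likely combine several signals and validate the fit on held-out events.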

3 RESULTS

A preliminary analysis supported the hypothesis that the aforementioned strategies provide a more immersive user experience. This was observed by comparing the physiological signals of the participants performing the test with the strategies and without them (in the pre-pilot test). More specifically, in the test with the strategies we observed more variable heart rate and breathing rate intervals and a larger number of responses generated by the sympathetic nervous system (shown by the number of peaks detected in the electrodermal activity signal of the participants).
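To make the peak-counting idea concrete, the following Python sketch counts local maxima in an electrodermal activity trace that rise by at least a minimum amount above the preceding trough. This is a simplified illustration: the function name, the 0.05 threshold and the example trace are assumptions, and the detection method and parameters used in the study are not stated.

```python
def count_eda_peaks(signal, min_rise=0.05):
    """Count local maxima that rise at least min_rise above the preceding trough."""
    if len(signal) < 3:
        return 0
    peaks = 0
    trough = signal[0]
    for prev, cur, nxt in zip(signal, signal[1:], signal[2:]):
        trough = min(trough, prev)
        if cur > prev and cur >= nxt and cur - trough >= min_rise:
            peaks += 1
            trough = cur  # the next peak must rise from a new trough
    return peaks


# Hypothetical skin conductance trace (microsiemens), for illustration only.
trace = [0.10, 0.12, 0.30, 0.20, 0.15, 0.16, 0.15, 0.40, 0.25]
n_peaks = count_eda_peaks(trace)
```

In this example the small bump at 0.16 is ignored because it rises only 0.01 above the preceding trough, while the two larger responses are counted.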

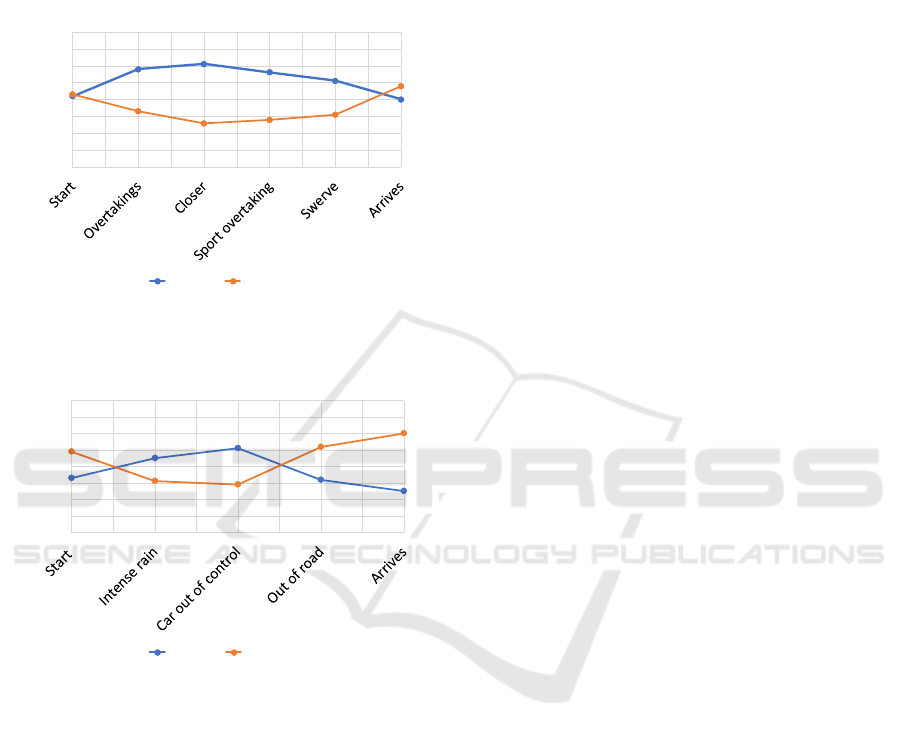

The emotional reactions and their variation are also seen in the subjective assessment reported by the subjects at the end of each scenario through the SAM questionnaire. Figure 7 and Figure 8 show, as an example, the mean values of arousal and valence reported in two selected scenarios in the test with strategies. As can be seen, there are different events in the scenarios where the range in Arousal and Valence is higher than 2.5 points on the scale, confirming the variation in the emotion felt by the subjects. In these events, this corresponds to a high-intensity emotional load with a negative feeling.
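The sketch below shows, in Python, one possible reading of this check: the SAM ratings are averaged per event and the range of those means across the events of a scenario is compared with the 2.5-point figure. The ratings are invented for illustration and are not the study data, and the helper names are hypothetical.

```python
from statistics import mean


def event_means(ratings_by_event):
    """Mean SAM rating (1-9) per event across participants."""
    return {event: mean(ratings) for event, ratings in ratings_by_event.items()}


def rating_range(means):
    """Difference between the highest and lowest event mean."""
    return max(means.values()) - min(means.values())


# Hypothetical arousal ratings for three events of one scenario.
arousal = {"event_1": [3, 4, 5], "event_2": [7, 7, 7], "event_3": [6, 8]}
means = event_means(arousal)
varies = rating_range(means) > 2.5
```

The same two functions can be applied to the valence ratings to obtain the second curve in each figure.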

Regarding arousal, the mean values reached 7 points in events where the car gets close to another vehicle travelling at high speed on the highway (3rd event in scenario 2) and 6 points when it is raining and the participant witnesses an accident involving another vehicle (3rd event in scenario 4). Bearing in mind that the complete scale runs from 1 to 9, these values in the upper third of emotion intensity indicate good immersion in the scenarios, with the events being felt with enhanced intensity.


We can observe the same behaviour in the valence values reported for these events, which nearly reach 3.5 points in both cases. Furthermore, in the final event of scenario 4, participants agreed that they arrived with a low emotional load in terms of arousal and a positive feeling in terms of valence (around 7).

Figure 7: Mean SAM results in questionnaires from

scenario 2 per event.

Figure 8: Mean SAM results in questionnaires from

scenario 4 per event.

After a deeper analysis of the data, the expected result is a database of physiological signals in the different autonomous driving situations together with the participants’ self-appraisal of the emotion felt. This database will be used to generate the dimensional emotional model. For this purpose, the most appropriate classification system will be selected. Candidates include Artificial Neural Networks (ANN), Convolutional Neural Networks (CNN), Recurrent Neural Networks such as the bidirectional Long Short-Term Memory (BLSTM), Transformers, Support Vector Machines (SVM), Relevance Vector Machines (RVM), Linear Discriminant Analysis (LDA) and others (Bong et al., 2013; Jang et al., 2012; Mohamad, 2005; Shu et al., 2018).

In these preliminary analyses, the initial results also show that the emotions felt vary between events depending on the scenario and are also gender dependent (the reported sentiment varied depending on whether the participant was female or male). However, the emotion is independent of whether the task of each scenario was achieved (on time or not).

4 DISCUSSION

SUaaVE seeks to integrate the human component in CAVs by understanding the emotional state of the occupants. In this regard, the immersion strategies defined in this study are a key aspect for studying the occupants’ reactions in a realistic way in a driving simulator.

This study is the first step towards generating a model to characterise drivers and passengers in L4+ CAVs from their physiological signals and to study the factors that might influence their emotional reactions during the trip. In fact, a better estimation of the occupants’ state can be used, together with vehicle sensors (cameras, radar and LiDAR), to identify the factors that influence their emotional state, such as the vehicle dynamics (ride comfort), the environmental conditions (traffic density, behaviour of other vehicles, presence of vulnerable road users (VRUs), etc.) or the interior ambient conditions and design. It also makes it possible to consider the human factor in the development of advanced driver assistance systems (ADAS), as well as to support the artificial intelligence of the CAV by adjusting the decision-making algorithms of vehicles in terms of dynamics and itinerary for a comfortable and safe ride. In short, the goal is to understand how we feel in a CAV and to use this information to make the system more empathic, responding to the occupants’ emotions in real time.

5 CONCLUSIONS

This paper addresses different strategies to enhance the immersion and engagement of subjects while conducting tests in a driving simulator in the framework of automated vehicles.

The results of the test performed with these strategies showed that participants felt immersed in the simulation and that they could evaluate the events in the different scenarios as if they were real, with intense emotions noted in both the objective and the subjective feedback obtained from them, that is, in the physiological signals, which were continuously monitored, and in the questionnaires, respectively.

The next step in SUaaVE, using as a basis the database obtained from the tests with subjects (physiological signals and subjective assessment), is the generation of an emotional model aimed at estimating the passengers’ state from their physiological signals.

[Plot for Figure 7: level of valence/arousal (scale from 1 to 9) per event in scenario 2; series: Arousal, Valence.]

[Plot for Figure 8: level of valence/arousal (scale from 1 to 9) per event in scenario 4; series: Arousal, Valence.]

ACKNOWLEDGEMENTS

This work was funded by the European Union’s Horizon 2020 Research and Innovation Program through the SUaaVE project (“SUpporting acceptance of automated Vehicles”) under Grant Agreement No. 814999.

REFERENCES

Bazilinskyy, P., Kyriakidis, M., & de Winter, J. (2015). An

international crowdsourcing study into people’s

statements on fully automated driving. Procedia

Manufacturing, 3, 2534–2542.

Belda, J.-M., Iranzo, S., Jimenez, V., Mateo, B., Silva, J.,

Palomares, N., Laparra-Hernández, J., & Solaz, J.

(2021). Identification of relevant scenarios in the

framework of automated vehicles to study the emotional

state of the passengers. 10th International Congress on

Transportation Research, Rhodes, Greece.

Bong, S. Z., Murugappan, M., & Yaacob, S. (2013).

Methods and approaches on inferring human emotional

stress changes through physiological signals: A review.

International Journal of Medical Engineering and

Informatics, 5(2), 152–162.

Bradley, M. M., & Lang, P. J. (1994). Measuring emotion:

The self-assessment manikin and the semantic

differential. Journal of Behavior Therapy and

Experimental Psychiatry, 25(1), 49–59.

Braun, M., Weber, F., & Alt, F. (2020). Affective

Automotive User Interfaces–Reviewing the State of

Emotion Regulation in the Car. ArXiv Preprint

ArXiv:2003.13731.

Cuzzocrea, A., Kittl, C., Simos, D. E., Weippl, E., & Xu, L. (Eds.). (2013). Availability, Reliability, and Security in Information Systems and HCI (Vol. 8127). Springer Berlin Heidelberg. https://doi.org/10.1007/978-3-642-40511-2

Drewitz, U., Ihme, K., Bahnmüller, C., Fleischer, T., La,

H., Pape, A.-A., Gräfing, D., Niermann, D., & Trende,

A. (2020). Towards user-focused vehicle automation:

The architectural approach of the AutoAkzept project.

International Conference on Human-Computer

Interaction, 15–30.

Geethanjali, B., Adalarasu, K., Hemapraba, A., Kumar, S.

P., & Rajasekeran, R. (2017). Emotion analysis using

SAM (self-assessment manikin) scale. Biomedical

Research.

Holzinger, A., Kieseberg, P., Tjoa, A. M., & Weippl, E. (Eds.). (2020). Machine Learning and Knowledge Extraction: 4th IFIP TC 5, TC 12, WG 8.4, WG 8.9, WG 12.9 International Cross-Domain Conference, CD-MAKE 2020, Dublin, Ireland, August 25–28, 2020, Proceedings (Vol. 12279). Springer International Publishing. https://doi.org/10.1007/978-3-030-57321-8

Jang, E.-H., Park, B. J., Kim, S. H., & Sohn, J. H. (2012).

Emotion classification by machine learning algorithm

using physiological signals. Proc. of Computer Science

and Information Technology. Singapore, 25, 1–5.

Lee, J. D., & See, K. A. (2004). Trust in automation:

Designing for appropriate reliance. Human Factors,

46(1), 50–80.

Mohamad, Y. (2005). Integration von emotionaler

Intelligenz in Interface-Agenten am Beispiel einer

Trainingssoftware für lernbehinderte Kinder. RWTH

Aachen University.

Nummenmaa, L., & Niemi, P. (2004). Inducing affective states with success-failure manipulations: A meta-analysis. Emotion, 4(2), 207–214. https://doi.org/10.1037/1528-3542.4.2.207

Paddeu, D., Parkhurst, G., & Shergold, I. (2020). Passenger

comfort and trust on first-time use of a shared

autonomous shuttle vehicle. Transportation Research

Part C: Emerging Technologies, 115, 102604.

Post, J. M. M., Ünal, A. B., & Veldstra, J. L. (2020).

Deliverable 1.2. Model and guidelines depicting key

psychological factors that explain and promote public

acceptability of CAV among different user groups.

H2020 SUaaVE project.

SAE International. (2021). J3016C: Taxonomy and

Definitions for Terms Related to Driving Automation

Systems for On-Road Motor Vehicles - SAE

International.

Shu, L., Xie, J., Yang, M., Li, Z., Li, Z., Liao, D., Xu, X.,

& Yang, X. (2018). A review of emotion recognition

using physiological signals. Sensors, 18(7), 2074.

Suja, P., Tripathi, S., & Deepthy, J. (2014). Emotion

Recognition from Facial Expressions Using Frequency

Domain Techniques. In S. M. Thampi, A. Gelbukh, &

J. Mukhopadhyay (Eds.), Advances in Signal

Processing and Intelligent Recognition Systems (pp.

299–310). Springer International Publishing.

https://doi.org/10.1007/978-3-319-04960-1_27
