Dual-context Identification based on Geometric Descriptors for 3D Registration Algorithm Selection

Polycarpo Souza Netoᵃ, José Marques Soaresᵇ, Michela Mulasᶜ and George André Pereira Théᵈ

Department of Teleinformatics Engineering, Federal University of Ceara, Fortaleza CEP 60455-970, Brazil

Keywords:

Point Cloud Registration, Iterative Closest Point, Generalized ICP, Eigentropy, Omnivariance.

Abstract:

In 3D reconstruction applications, matching between corresponding point clouds is commonly solved with variants of the Iterative Closest Point (ICP) algorithm. However, ICP and its variants have limitations: they work properly only in some contexts with well-behaved data distributions, whereas outdoor scenes, for example, pose many challenges. Indeed, the literature suggests that geometric disorder in the scene reduces the ability of some of these algorithms to find a match. This article presents a method based on the eigentropy and omnivariance of point clouds to indicate which ICP variant is best suited to each of the two contexts considered here, namely object and outdoor scene alignment. In addition to the context selector, we propose a partitioning step prior to alignment, which in most cases reduces the computational cost. Overall, the proposal worked satisfactorily as a multipurpose registration technique, correcting the pose of data from different contexts, and is thus useful for computer vision and robotics applications.

ᵃ https://orcid.org/0000-0001-5057-1942

ᵇ https://orcid.org/0000-0002-5111-5794

ᶜ https://orcid.org/0000-0001-9120-2465

ᵈ https://orcid.org/0000-0002-8064-8901

1 INTRODUCTION

In three-dimensional image processing, the registration of point clouds, whether of small objects or of wide outdoor environments, has been intensively studied in the last few decades because of its importance in a wide range of applications, including human recognition (Siqueira et al., 2018), agriculture (Chebrolu et al., 2018) and autonomous driving (Levinson et al., 2011). These two categories of scenarios differ in many aspects, including the 3D image size, the susceptibility to clutter and the influence of surface deformations between sequential shots, which poses different challenges to algorithms dealing with one category or the other. Indeed, the literature has consistently tried to associate the scene context with the registration technique that best suits it. For example, objects have been handled with Iterative Closest Point (ICP)-based registration algorithms; specifically, the point-to-point ICP (Besl and McKay, 1992) has been used in many contributions. Although it is a pioneering technique, its computational cost has motivated many variants; one of these is the approach presented in (Souza Neto et al., 2018), in which registration is performed in sub-cloud space after partitioning the original data. As an iterative technique, ICP is susceptible to falling into local minima, and the literature has provided important advances in that matter, such as the generalized algorithm named GICP (Segal et al., 2009). In view of this association between registration technique and scenario context, we pose the problem of automatically identifying the context as a step prior to the registration itself. The contribution of this paper lies not only in the selection itself, but also in the use of the selected registration algorithm as the alignment core of a point-cloud partitioning approach.

Neto, P., Soares, J., Mulas, M. and Thé, G.

Dual-context Identification based on Geometric Descriptors for 3D Registration Algorithm Selection.

DOI: 10.5220/0010712400003061

In Proceedings of the 2nd International Conference on Robotics, Computer Vision and Intelligent Systems (ROBOVIS 2021), pages 150-157

ISBN: 978-989-758-537-1

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 RELATED WORKS

2.1 Point Cloud Registration

Since the introduction of the ICP algorithm (Besl and McKay, 1992), a number of variants have been proposed in the literature to overcome its limitations, for example variants based on random (Zhang, 1994) or uniform (Vitter, 1984) downsampling. Although classical sampling strategies may be very useful for computational reasons when dense clouds are concerned, for sparse point clouds they might instead lead to the loss of relevant information. An efficient approach to registering 3D data from outdoor scenes is demonstrated in (Segal et al., 2009). It exploits planar patches in both point clouds, which leads to the plane-to-plane concept.
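As a concrete illustration of the two classical sampling strategies, the following is a minimal NumPy sketch. It assumes, for illustration only, that "uniform" downsampling means keeping every `step`-th point in storage order (voxel-grid filtering is another common reading); the function names are hypothetical and do not come from the cited works.

```python
import numpy as np


def random_downsample(points: np.ndarray, n: int, seed=None) -> np.ndarray:
    """Keep n points chosen uniformly at random, without replacement."""
    rng = np.random.default_rng(seed)
    idx = rng.choice(len(points), size=n, replace=False)
    return points[idx]


def uniform_downsample(points: np.ndarray, step: int) -> np.ndarray:
    """Keep every `step`-th point (assumed meaning of "uniform" sampling)."""
    return points[::step]


if __name__ == "__main__":
    cloud = np.random.default_rng(0).uniform(size=(1000, 3))
    print(random_downsample(cloud, 100, seed=1).shape)  # (100, 3)
    print(uniform_downsample(cloud, 10).shape)          # (100, 3)
```

Neither function looks at the geometry, so on a sparse cloud the discarded points may be exactly the ones that carry relevant shape information.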

For real-world applications involving 3D scene perception, time performance is essential, so it is worth looking at recent applications. In (Forte et al., 2021), the authors addressed UAV pose estimation with a point-cloud-registration-assisted estimation algorithm based on the linear Kalman filter. In that case, the solution achieved a 10% correction in the volume estimation of a large coal stockpile in a thermal power plant. Notably, the registration algorithm relied on a cloud-partitioning approach (Souza Neto et al., 2018) with GICP (Segal et al., 2009) as its alignment core.

Although all those techniques provide a self-consistent and comprehensible description of the manipulations and transformations applied to the point clouds during registration, deep learning approaches have also been proposed in the field. In that domain, it is worth mentioning the recent contribution of (Kurobe et al., 2020), which introduces CorsNet, a solution built on many intermediate convolutional layers of local and global data retrieved directly from the 3D image samples. Since point clouds are particularly dense data in essence, and deep learning methods represent a completely different paradigm regarding data representation, no further attention is paid to them in the present work, although we recognize their relevance and the recent rise of interest from the scientific community.

2.2 Eigen-features

Important works in the 3D image processing literature report on the spatial representation of surfaces from a geometric description point of view. In many of them, parameters calculated from the eigenvalues of the covariance matrix associated with the point cloud are used as features for classification or recognition purposes. For example, in (Hackel et al., 2016), the authors were able to perform efficient contour detection in 3D outdoor scenarios.

Other important papers are (Demantké et al., 2011) and (Donoso et al., 2017). In (Demantké et al., 2011), the authors introduced a vicinity-based approach for line, surface and sphere detection from entropy-like measurements of the point clouds. In (Donoso et al., 2017), eigentropy is revisited and seems to apply well to the point selection problem, but details on the normalization of the entropy-related measures are missing from the discussion.

We highlight that those papers are mostly interested in segmentation; furthermore, in the bibliographic search done so far, we have found no papers suggesting the use of entropy or covariance-matrix-derived parameters as descriptors for scene context identification. This appears to be a gap in the literature, which we intend to fill with the present contribution.

3 MATHEMATICAL FORMULATION

The technique here proposed is a registration algo-

rithm with dual-context selection alternative. It is

a cloud-partitioning approach in the sense that input

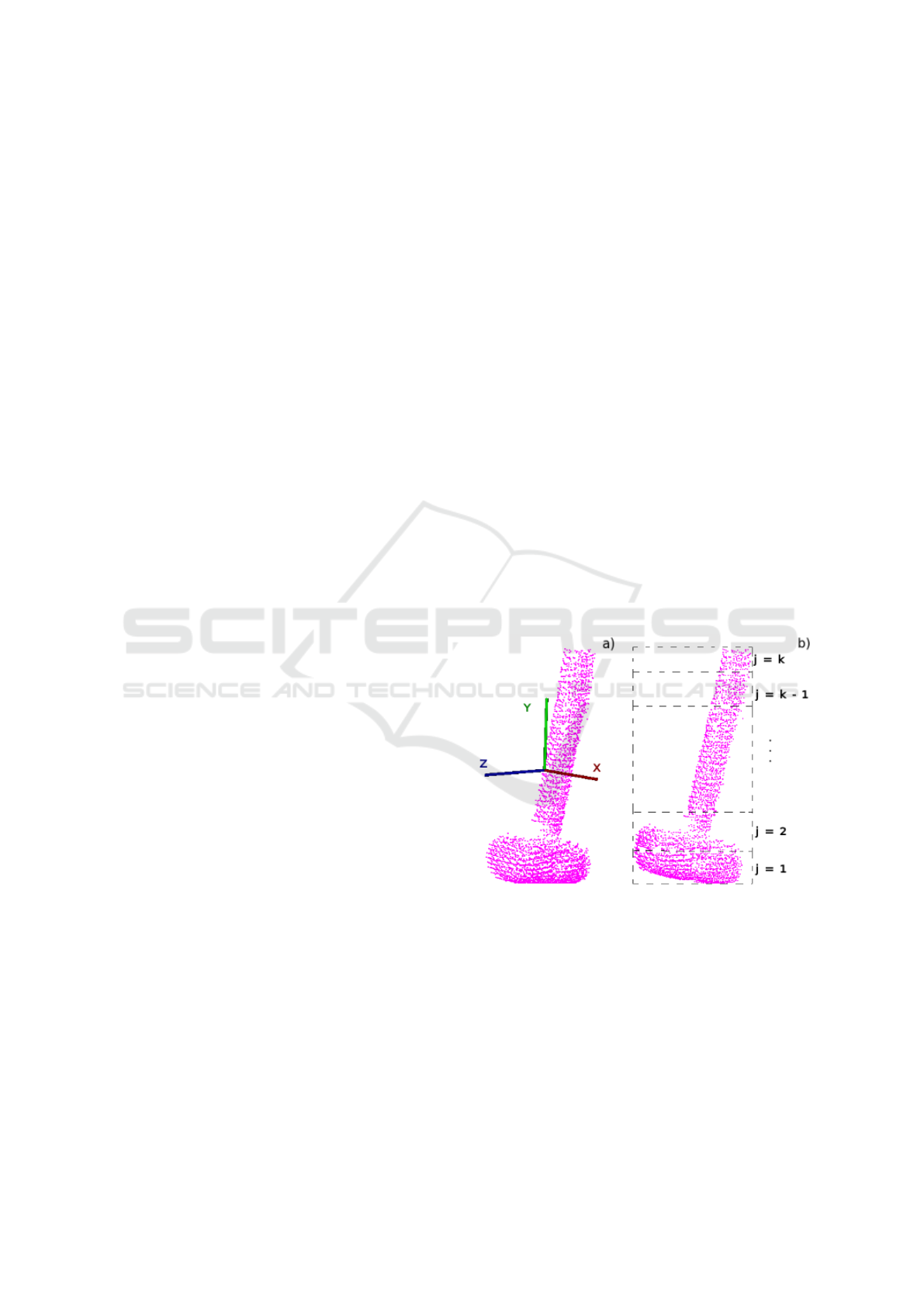

data are segmented into smaller groups of points. Fig-

ure 1 illustrates that. Sections in the following pro-

vide details on the sub-cloud grouping, on the choice

of partitioning direction and on the dual-context se-

lection procedure for the alignment core.

Figure 1: (a) The Hammer model and the representation of the respective directions; (b) an analogy to partitioning, where j is the index of each partition.

3.1 Cloud Partitioning Approach

Let S and T be the source and target models to undergo registration; partitioning is defined here as the operation of grouping the whole point sets into smaller groups, or sub-clouds, indexed by j, with $j = 1, \dots, k$. It starts with an ordering procedure, similar to a quicksort implementation, along a given ξ-axis, which can be one of the principal x̂, ŷ, ẑ-axes. Details on the choice of the partitioning axis are given in the next section. The vectors $\vec{Q}^{s}_{i}$ and $\vec{Q}^{t}_{m}$ represent the points of the source and target models, respectively, after the ordering. The sub-clouds can then be defined according to the following sets:

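$$S_j = \left\{ \vec{Q}^{s}_{i} \;\middle|\; (j-1)\cdot\frac{N_S}{k} < i \le j\cdot\frac{N_S}{k} \right\} \tag{1}$$

$$T_j = \left\{ \vec{Q}^{t}_{m} \;\middle|\; (j-1)\cdot\frac{N_T}{k} < m \le j\cdot\frac{N_T}{k} \right\} \tag{2}$$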

where $N_S$ and $N_T$ are the sizes of the source and target models, respectively. The formation law of the equations above shows that the grouping is nothing other than a collection of points taken contiguously in lots of $N_S/k$ (source) or $N_T/k$ (target) points.
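The grouping of Eqs. (1) and (2) can be sketched in a few lines of NumPy. This is a minimal illustration under assumptions, not the authors' implementation: the partitioning direction is passed in as a vector, points are ordered by their coordinate along it with NumPy's default (quicksort-based) `argsort`, and `np.array_split` cuts the ordered cloud into k contiguous lots. When N is not a multiple of k, the first lots receive one extra point, so every point lands in exactly one sub-cloud.

```python
import numpy as np


def partition_cloud(points: np.ndarray, axis: np.ndarray, k: int) -> list:
    """Split an (N, 3) cloud into k contiguous sub-clouds along `axis`.

    Sketch of Eqs. (1)-(2): order the points by their coordinate along the
    partitioning axis, then take them contiguously in lots of about N/k points.
    """
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)       # make sure the direction is unit length
    coords = points @ axis                   # coordinate of each point along the axis
    order = np.argsort(coords)               # default kind is quicksort-based
    return np.array_split(points[order], k)  # j-th element plays the role of S_j (or T_j)
```

Applying the same function to the source and to the target with the same k yields the sub-cloud pairs indexed by j that appear in the cost functions of Section 3.3.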

3.2 Choice of Partitioning Axis

In the current version of the partitioning approach, the choice of the axis along which the grouping is done relies on the spatial distribution of the points. It starts by computing the centroid of the points and subtracting it from the whole point set, making it zero-mean:

$$\mu = \frac{1}{N}\sum_{i=1}^{N} \vec{x}_i \tag{3}$$

$$\vec{x}_i \leftarrow \vec{x}_i - \mu, \quad \forall i \in [1, N] \tag{4}$$

The covariance matrix can then be calculated as

$$\Sigma_X = \frac{1}{N}\sum_{i=1}^{N} \vec{x}_i \vec{x}_i^{T} \tag{5}$$

and its eigenvalues can be sorted as

$$\lambda_3 \le \lambda_2 \le \lambda_1 \tag{6}$$

where the principal direction associated with $\lambda_1$, the largest eigenvalue, is chosen as the partitioning axis.
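Eqs. (3) to (6) amount to a principal component analysis of the cloud. The sketch below, assuming NumPy and an (N, 3) array of points, centers the cloud, forms the covariance with the 1/N factor of Eq. (5), sorts the eigenvalues in decreasing order and returns the eigenvector of λ1 as the partitioning direction. The text does not say whether this direction is used as is or snapped to the closest coordinate axis; the hypothetical helper `closest_coordinate_axis` shows the latter option.

```python
import numpy as np


def principal_axes(points: np.ndarray):
    """Return eigenvalues (lambda1 >= lambda2 >= lambda3) and matching eigenvectors."""
    mu = points.mean(axis=0)                   # Eq. (3): centroid
    centered = points - mu                     # Eq. (4): zero-mean cloud
    cov = centered.T @ centered / len(points)  # Eq. (5): 3x3 covariance, 1/N factor
    eigvals, eigvecs = np.linalg.eigh(cov)     # symmetric matrix, ascending order
    order = np.argsort(eigvals)[::-1]          # Eq. (6): lambda1 is the largest
    return eigvals[order], eigvecs[:, order]


def partitioning_axis(points: np.ndarray) -> np.ndarray:
    """Unit eigenvector associated with lambda1 (direction of largest spread)."""
    _, eigvecs = principal_axes(points)
    return eigvecs[:, 0]


def closest_coordinate_axis(direction: np.ndarray) -> int:
    """Index (0 = x, 1 = y, 2 = z) of the coordinate axis best aligned with `direction`."""
    return int(np.argmax(np.abs(direction)))
```

`np.linalg.eigh` is used because the covariance matrix is symmetric, which guarantees real eigenvalues and orthonormal eigenvectors.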

3.3 Alignment Core Selection Criterion

Besides being useful for choosing the partitioning axis, the spatial distribution of the data, as measured by the eigenvalues and eigenvectors of the covariance matrix, can be used to calculate descriptors for the point clouds. In the present work, we investigated the sum of eigenvalues, omnivariance, eigentropy, linearity, planarity, sphericity, anisotropy and surface change for a large dataset of objects and outdoor scenes. In that preliminary study, the goal was to see which of those descriptors could lead to acceptable inter-class discrimination between the object and outdoor scene contexts. The analysis relied on 2D plots of those characteristics, for a total of 64 pairwise combinations. The dataset had 100 samples equally distributed between the two classes, as summarized in Table 1.
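For reference, six of the eight descriptors listed above can be computed from the three covariance eigenvalues as in the sketch below (eigentropy and omnivariance are defined by Eqs. (7) and (8) later in this section). The text does not give formulas for these six; the ratios used here are the definitions commonly found in the eigen-feature literature and are an assumption, as is the correspondence of "flatness", "scattering" and "change of curvature" in Figure 2 to planarity, sphericity and surface change.

```python
def shape_descriptors(eigvals) -> dict:
    """Six of the eight descriptors studied, from the covariance eigenvalues.

    Ratio definitions follow common usage in the eigen-feature literature
    (an assumption: the paper does not spell them out). Eigenvalues are
    sorted so that l1 >= l2 >= l3, and l1 is assumed to be positive.
    """
    l1, l2, l3 = sorted((float(v) for v in eigvals), reverse=True)
    total = l1 + l2 + l3
    return {
        "sum_of_eigenvalues": total,
        "linearity": (l1 - l2) / l1,
        "planarity": (l2 - l3) / l1,   # assumed to be "flatness" in Figure 2
        "sphericity": l3 / l1,         # assumed to be "scattering" in Figure 2
        "anisotropy": (l1 - l3) / l1,
        "surface_change": l3 / total,  # assumed to be "change of curvature" in Figure 2
    }
```

With these definitions, linearity, planarity and sphericity share the denominator λ1 and always sum to one; for eigenvalues (3, 2, 1) each of them equals 1/3.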

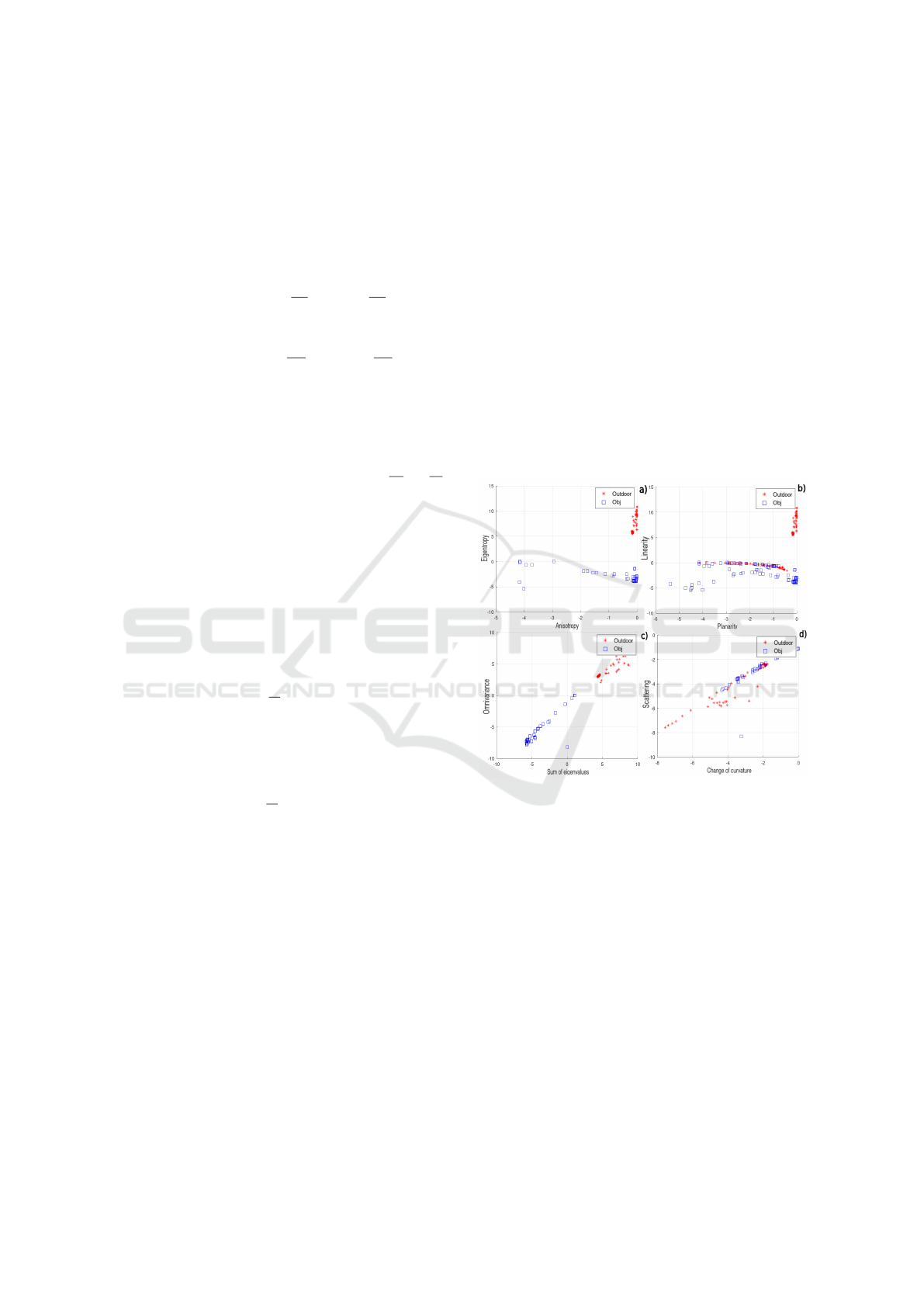

During the analysis, we obtained results such as those shown in Figure 2, in which two descriptors, namely eigentropy and omnivariance, proved promising for good discrimination in the dual-context identification. For the other pairwise combinations (including those not shown here for brevity), significant overlap in the 2D space was observed. The robustness of those two descriptors may be explained by the fact that they are less sensitive to data size and density, making them suitable for situations in which intraclass discrimination is not a goal.

Figure 2: Pairwise dispersion diagrams of geometric descriptors for the datasets of each context: (a) anisotropy × eigentropy, (b) flatness × linearity, (c) sum of eigenvalues × omnivariance and (d) scattering × change of curvature.

In the present work, the geometric descriptors named eigentropy and omnivariance are calculated directly from the covariance matrix eigenvalues, with no additional normalization on them, according to:

$$E_{\lambda} = -\sum_{i=1}^{3} \lambda_i \log(\lambda_i) \tag{7}$$

$$O_{\lambda} = \prod_{i=1}^{3} (\lambda_i)^{1/3} \tag{8}$$
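Eqs. (7) and (8) translate directly into code. The sketch below assumes NumPy and the natural logarithm (the base is not stated in the text); it clips tiny negative eigenvalues caused by round-off to zero and gives a zero eigenvalue a zero contribution to the eigentropy, which is the limit of λ log λ as λ tends to 0. The helper that extracts the eigenvalues reuses the 1/N covariance of Eq. (5).

```python
import numpy as np


def eigentropy(eigvals) -> float:
    """Eq. (7): E = -sum(l_i * log(l_i)) on raw (unnormalized) eigenvalues, natural log."""
    lam = np.clip(np.asarray(eigvals, dtype=float), 0.0, None)  # drop round-off negatives
    safe = np.where(lam > 0.0, lam, 1.0)                        # log(1) = 0 for zero eigenvalues
    return float(-np.sum(lam * np.log(safe)))


def omnivariance(eigvals) -> float:
    """Eq. (8): O = prod(l_i ** (1/3)), i.e. the geometric mean of the eigenvalues."""
    lam = np.clip(np.asarray(eigvals, dtype=float), 0.0, None)
    return float(np.cbrt(np.prod(lam)))


def covariance_eigenvalues(points: np.ndarray) -> np.ndarray:
    """Eigenvalues of the 1/N covariance of Eq. (5), largest first."""
    centered = points - points.mean(axis=0)
    cov = centered.T @ centered / len(points)
    return np.linalg.eigvalsh(cov)[::-1]
```

Because the eigenvalues are not normalized, every term −λ log λ becomes negative once λ exceeds 1, so the sign and magnitude of the eigentropy depend on the units in which the cloud is expressed.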

For what concerns the preliminary study reported

in Figure 1, the calculated geometric descriptors were

collected for a large set of samples of objects and

ROBOVIS 2021 - 2nd International Conference on Robotics, Computer Vision and Intelligent Systems

152

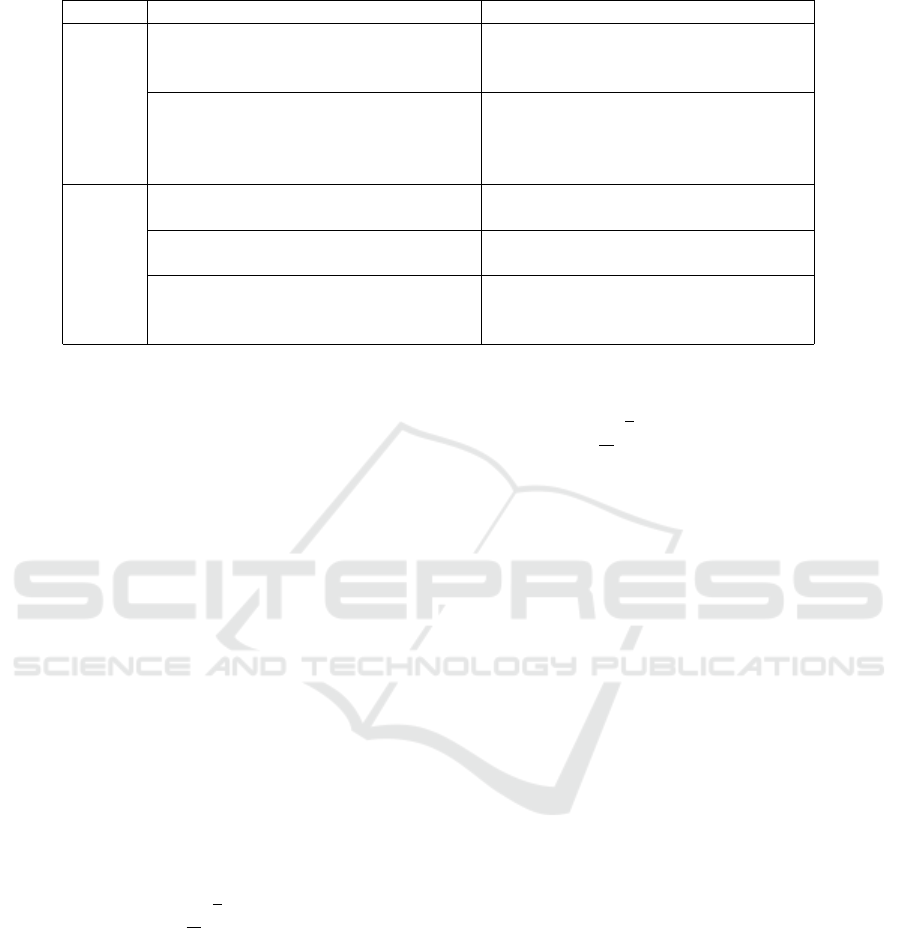

Table 1: Division of data used in the regression process.

Class Dataset Instances

Objects

Stanford (Levoy et al., 2005)

(36 samples)

Bunny: Rotations: 0 to 315 degree;

Buddha and Dragon: 0 to 336 degrees;

Armadillo: 0 to 270 degrees.

Parma (Aleotti et al., 2014)

(14 samples)

Horse: 0 and 180 degrees;

Hammer: 0 and 45 degrees;

Fustino grande: 0 to 270 degrees;

Fustino piccolo: 0 to 315 degrees.

Scenes

Bremen (Wulf, 2016)

(13 samples)

–

Hannover (Oliveira and Tavares, 2014)

(10 samples)

–

Priority dataset

(27 samples)

Department (Two samples);

Camp (Fifteen samples);

Stockpile (Ten samples);

outdoor, as well. For both measurements, when

they are taken independently, a threshold of nearly

' 0.4619 provided good separation line between the

two classes investigated. Following the reasoning

given in (Donoso et al., 2017), we associate low val-

ues of entropy-based measurements to well-behaved

points distribution like in solid and compact objects

(less disorder), whereas higher entropy suggests in-

creased disorder and, as such, points to uncontrolled

outdoor environments. This leads to the very simple

decision rule for a given input point cloud model:

$$\text{if } \log\left(E^{S}_{\lambda}, O^{S}_{\lambda}\right) \le 0.4619,\ \text{core} = \text{ICP}_{pp};\ \text{otherwise, core} = \text{GICP}. \tag{9}$$
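Eq. (9) does not state how the two descriptors are combined inside the logarithm, and with unnormalized eigenvalues the eigentropy can be negative, where the logarithm is undefined. The sketch below therefore fixes one interpretation purely for illustration: the natural log of each descriptor is compared with the threshold independently, an object (point-to-point ICP core) is declared only when both pass, and non-positive descriptor values are routed to the GICP branch. The function names and return labels are hypothetical.

```python
import math

THRESHOLD = 0.4619  # separation value reported in the text


def _log_or_inf(value: float) -> float:
    """Natural log; non-positive inputs (log undefined) map to +inf and fail the test."""
    return math.log(value) if value > 0.0 else math.inf


def select_alignment_core(eigentropy: float, omnivariance: float,
                          threshold: float = THRESHOLD) -> str:
    """One possible reading of Eq. (9) (an assumption, not the authors' code).

    Both descriptors are log-transformed and compared with the threshold
    independently; the object branch (point-to-point ICP) is chosen only
    when both fall at or below it, otherwise the outdoor branch (GICP).
    """
    if (_log_or_inf(eigentropy) <= threshold
            and _log_or_inf(omnivariance) <= threshold):
        return "ICP_pp"
    return "GICP"
```

Requiring both descriptors to agree is the conservative choice in this sketch; an "either" rule would be an equally plausible reading and would change only the `and` to `or`.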

Once the context is identified (object or outdoor scene), the registration itself is triggered. Whenever an object is identified, the point-to-point version of the ICP algorithm, adapted to the cloud-partitioning framework discussed so far, is used. One of the main differences of this adaptation concerns the cost function of the ICP registration, which in sub-cloud space is now calculated as:

$$E^{j}_{\mathrm{ICP}}(\Psi) = \frac{k}{N}\sum_{i=1}^{N/k} \left\| M^{j}_{i} - \Psi S^{j}_{i} \right\| \tag{10}$$

where the index j refers to the partitions undergoing registration in a given iterative step, N is the cloud size, index i refers to a given point lying in partition j, and $M^{j}_{i}$ and $S^{j}_{i}$ are corresponding points of the j-th target and source sub-clouds. In the equation, Ψ is the rigid transformation relating the input source and target models, which is the expected outcome of a registration algorithm. On the other hand, if an outdoor scene is identified, the GICP algorithm adapted to the same cloud-partitioning framework is used. Compared to the traditional version (Segal et al., 2009), the adaptation concerns the cost function of the GICP algorithm, which in sub-cloud space now reads:

$$E^{j}_{\mathrm{GICP}}(\Psi) = \frac{k}{N}\sum_{i=1}^{N/k} d^{(\Psi)T}_{i} \left( \Sigma^{M_j}_{i} + \Psi \Sigma^{S_j}_{i} \Psi^{T} \right)^{-1} d^{(\Psi)}_{i} \tag{11}$$

where d

(Ψ)

T

i

gives the pointo-to-point distance in the

correspondence step, and Σ

S

j

i

and Σ

M

j

i

give the covari-

ances for the vicinity of points in the j −th subclouds

of source and target models. The whole registration

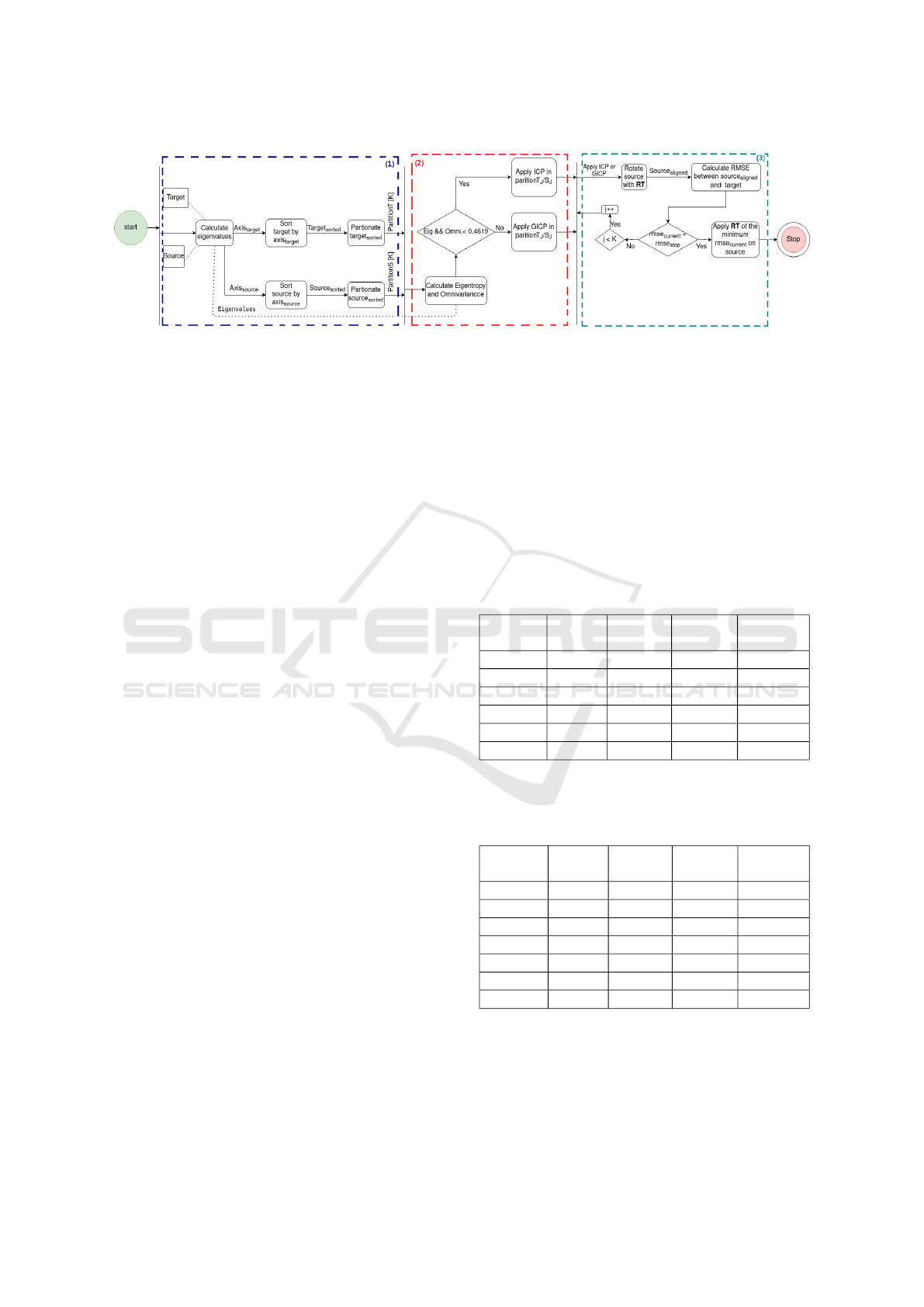

approach proposed in the present work is summarized

in the flowchart of Figure 3.
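To make Eqs. (10) and (11) concrete for a single pair of sub-clouds, the sketch below runs a plain point-to-point ICP (brute-force nearest neighbors plus the SVD-based Kabsch/Umeyama closed-form step) and evaluates a GICP-style cost for given correspondences and per-point covariances. It assumes NumPy, (n, 3) arrays, Ψ represented as a rotation R and a translation t, and small clouds (the nearest-neighbor search is quadratic in memory). How the per-partition results are combined across j is not specified in the text and is left out; all function names are illustrative.

```python
import numpy as np


def nearest_neighbors(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Index into dst of the closest point to each src point (brute force)."""
    d2 = ((src[:, None, :] - dst[None, :, :]) ** 2).sum(axis=-1)
    return d2.argmin(axis=1)


def best_rigid_transform(src: np.ndarray, dst: np.ndarray):
    """Least-squares R, t with dst ~ R @ src + t (Kabsch/Umeyama, no scaling)."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    H = (src - mu_s).T @ (dst - mu_d)  # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])  # avoid reflections
    R = Vt.T @ D @ U.T
    return R, mu_d - R @ mu_s


def icp_point_to_point(src_j, dst_j, iters=30):
    """Align source sub-cloud S_j to target sub-cloud M_j; return R, t and an Eq. (10)-style cost."""
    R_tot, t_tot = np.eye(3), np.zeros(3)
    cur = src_j.copy()
    for _ in range(iters):
        matched = dst_j[nearest_neighbors(cur, dst_j)]  # correspondence step
        R, t = best_rigid_transform(cur, matched)
        cur = cur @ R.T + t
        R_tot, t_tot = R @ R_tot, R @ t_tot + t         # compose with previous steps
    residual = dst_j[nearest_neighbors(cur, dst_j)] - cur
    return R_tot, t_tot, float(np.linalg.norm(residual, axis=1).mean())  # mean distance


def gicp_cost(R, t, src_j, dst_j, cov_src, cov_dst) -> float:
    """Eq. (11)-style cost for already-matched points and per-point 3x3 covariances."""
    d = dst_j - (src_j @ R.T + t)  # residuals d_i = m_i - (R s_i + t)
    total = 0.0
    for d_i, c_s, c_m in zip(d, cov_src, cov_dst):
        total += d_i @ np.linalg.solve(c_m + R @ c_s @ R.T, d_i)
    return float(total / len(d))   # (k/N) * sum is the mean over the sub-cloud
```

The factor k/N in both equations turns the sum over the N/k points of a partition into a mean, which is why both functions average rather than sum.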

4 RESULTS

In this section, we discuss the results of different registration experiments through a comparative analysis among algorithms. They include Go-ICP (Yang et al., 2016), CP-ICP (Souza Neto et al., 2018), CP-GICP (Forte et al., 2021), ICP variants (Besl and McKay, 1992; Chen and Medioni, 1992; Segal et al., 2009), 3D-NDT (Magnusson et al., 2007), 4PCS (Aiger et al., 2008) and, finally, SAC IA+ICP (Liu et al., 2020). Regarding the 3D models, the dataset of objects includes samples from (Levoy et al., 2005) and (Aleotti et al., 2014). The samples of outdoor scenes are those from (Wulf, 2016) and also some shots acquired through aerial imaging at a university campus (Forte et al., 2021). All algorithms were written in C++ using the PCL framework (Rusu and Cousins, 2011), except for Go-ICP, whose available executable was used instead; all experiments were run on an Intel Core i5-8265U with 12 GB of RAM.

For additional information regarding the simulations (code implementation and parameters), the reader is referred to the material available at https://github.com/pneto29/. For visualization of every result discussed in this paper, refer to https://drive.google.com/drive/folders/1Mt5tavDks5LPtBNFumdW6rhgaSXE5fxO?usp=sharing.

Figure 3: Flowchart of the proposed method: (1) the partitioning step, (2) the choice of the alignment algorithm and (3) the verification of the registration.

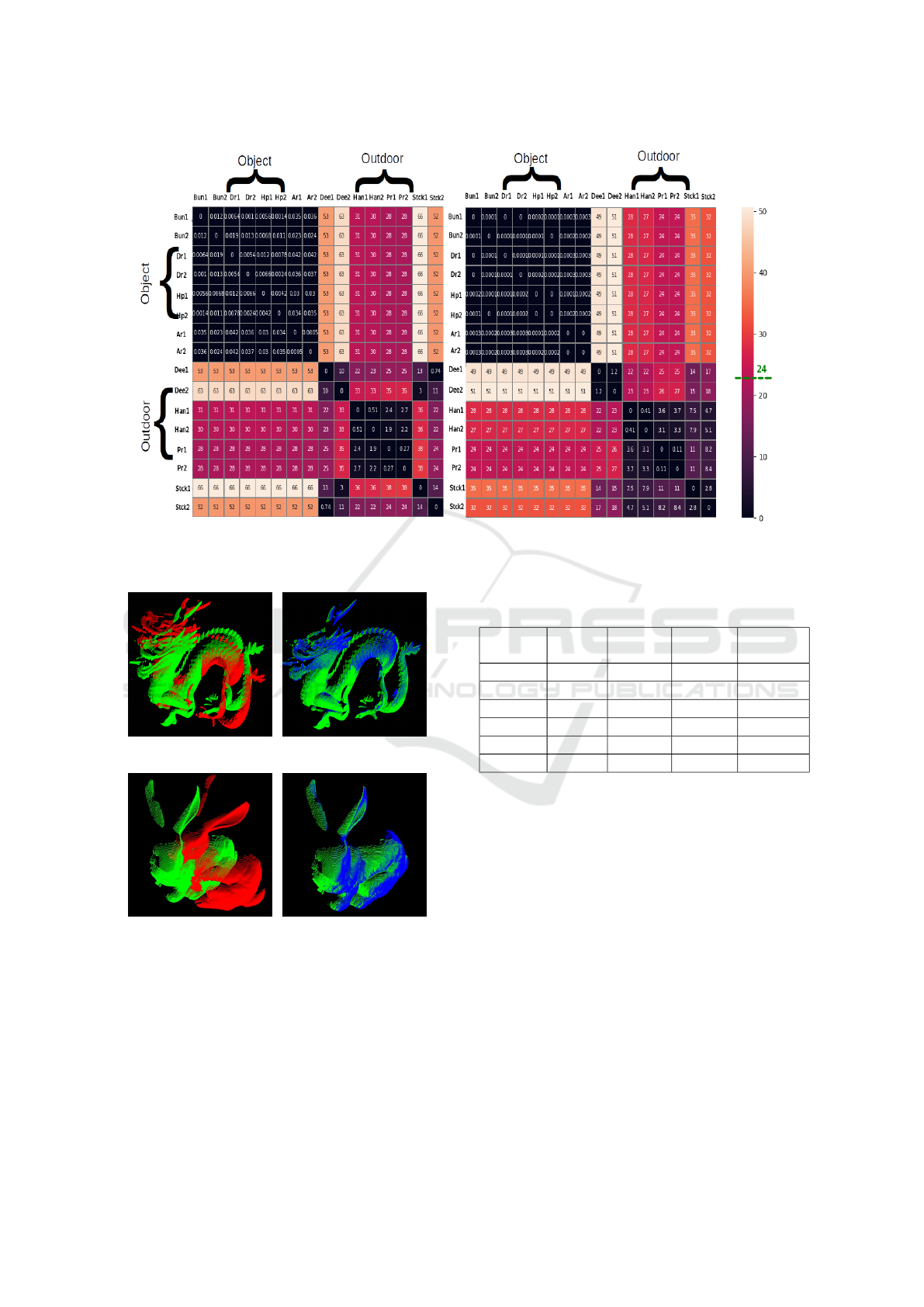

4.1 Heatmap of Geometric Descriptors

Initially, we report on the use of the geometric descriptors and their ability to identify the scene context. Figure 4 represents the pairwise similarity of the eigentropy and omnivariance measurements as heat maps, giving a simple picture of how well they distinguish between the object and outdoor scenario classes. Both measurements preserve intraclass similarity while clearly separating interclass sample pairs. To aid interpretation, the separation line between objects and outdoor scenes is marked on the color bar at the right for each geometric descriptor assessed.
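According to the caption of Figure 4, the heat maps show Euclidean distances between pairs of samples in descriptor space. A minimal sketch of that computation, assuming NumPy and one descriptor value (or descriptor vector) per sample, is:

```python
import numpy as np


def pairwise_distance_matrix(features) -> np.ndarray:
    """Euclidean distance between every pair of samples.

    `features` is either a 1-D array (one descriptor per sample, as in each
    panel of Figure 4) or an (n, d) array of descriptor vectors.
    """
    X = np.asarray(features, dtype=float)
    if X.ndim == 1:
        X = X[:, None]  # treat scalars as 1-D vectors
    diff = X[:, None, :] - X[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))
```

If the samples are ordered with all objects first and all outdoor scenes after, intraclass pairs form two diagonal blocks of small distances; rendering the matrix with, for example, matplotlib's `imshow` gives a heat map of the kind shown in Figure 4.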

4.2 Pairwise Registration of Objects

We report here on the pairwise registration of objects; it can be thought of as a validation experiment, since the ground truth (GT) is available. For a quantitative assessment of matching quality, Table 2 reports the root-mean-square error (RMSE) between source and target models after registration. To help compare the methods, Tables 3 and 4 report the achieved rotation (along with the ground truth) and the time spent by each algorithm during registration.
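The text does not define exactly how the RMSE of Table 2 or the rotations of Table 3 were obtained. A common choice, assumed here for illustration, is the RMSE of nearest-neighbor distances from the registered source to the target, and the rotation angle recovered from the rotation matrix through θ = arccos((tr R − 1)/2). The brute-force search below is only meant for small clouds; a k-d tree such as `scipy.spatial.cKDTree` would normally replace it.

```python
import numpy as np


def nearest_neighbor_rmse(source: np.ndarray, target: np.ndarray) -> float:
    """RMSE of distances from each registered source point to its closest target point."""
    d2 = ((source[:, None, :] - target[None, :, :]) ** 2).sum(axis=-1)
    return float(np.sqrt(d2.min(axis=1).mean()))


def rotation_angle_deg(R: np.ndarray) -> float:
    """Angle, in degrees, of the axis-angle form of a 3x3 rotation matrix."""
    cos_theta = (np.trace(R) - 1.0) / 2.0
    return float(np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0))))  # clip guards round-off
```

Comparing `rotation_angle_deg` of an estimated rotation with the GT* row of Table 3 gives the angular error of each method under this assumed definition.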

Overall, although our technique is not the fastest in every case, it offers a very good trade-off when matching quality is also required. In other words, ours can be slower in some cases, such as the Dragon model, for which 4PCS is faster (Table 4), but it performs the matching very well in both the qualitative (see Figures 5(a) to 5(d)) and the quantitative assessment (see Tables 2 and 3).

A few words about the 4PCS algorithm are in order. Although it is a feature-space algorithm, and thus conceptually different from point-coordinate-space approaches such as the ICP variants, its comparable matching quality and good time performance in some of the investigated cases suggest that it deserves attention, perhaps in a future investigation of a cloud-partitioning approach with the 4PCS feature representation embedded in the sub-cloud space.

Table 2: RMSE obtained from registration between pairs of objects (values ×10⁻³).

| Method | Bunny | Dragon | Happy Buddha | Hammer |
|---|---|---|---|---|
| ICP_p2p | 2.026 | 1.835 | 2.537 | 3.535 |
| 4PCS | 2.664 | 2.311 | 2.791 | 9.001 |
| SAC IA | 2.823 | 2.205 | 3.123 | 3.544 |
| Go-ICP | 89.000 | 55.000 | 32.000 | 207.000 |
| CP-ICP | 11.985 | 1.886 | 2.565 | 4.017 |
| Ours | 2.222 | 1.886 | 2.621 | 3.807 |

Table 3: Rotation (in degrees) obtained from the registration of pairs of objects. The first row (GT*) gives the ground-truth rotation available in the databases.

| Method (degrees) | Bunny | Dragon | Happy Buddha | Hammer |
|---|---|---|---|---|
| GT* | 45.000 | 24.000 | 24.000 | 45.000 |
| ICP_p2p | 41.300 | 23.862 | 21.679 | 45.577 |
| 4PCS | 42.582 | 24.423 | 23.744 | 44.673 |
| SAC IA | 39.992 | 23.491 | 20.626 | 44.691 |
| Go-ICP | 34.480 | 61.281 | 15.612 | 36.198 |
| CP-ICP | 16.498 | 24.009 | 22.543 | 44.590 |
| Ours | 43.245 | 24.009 | 24.039 | 45.042 |

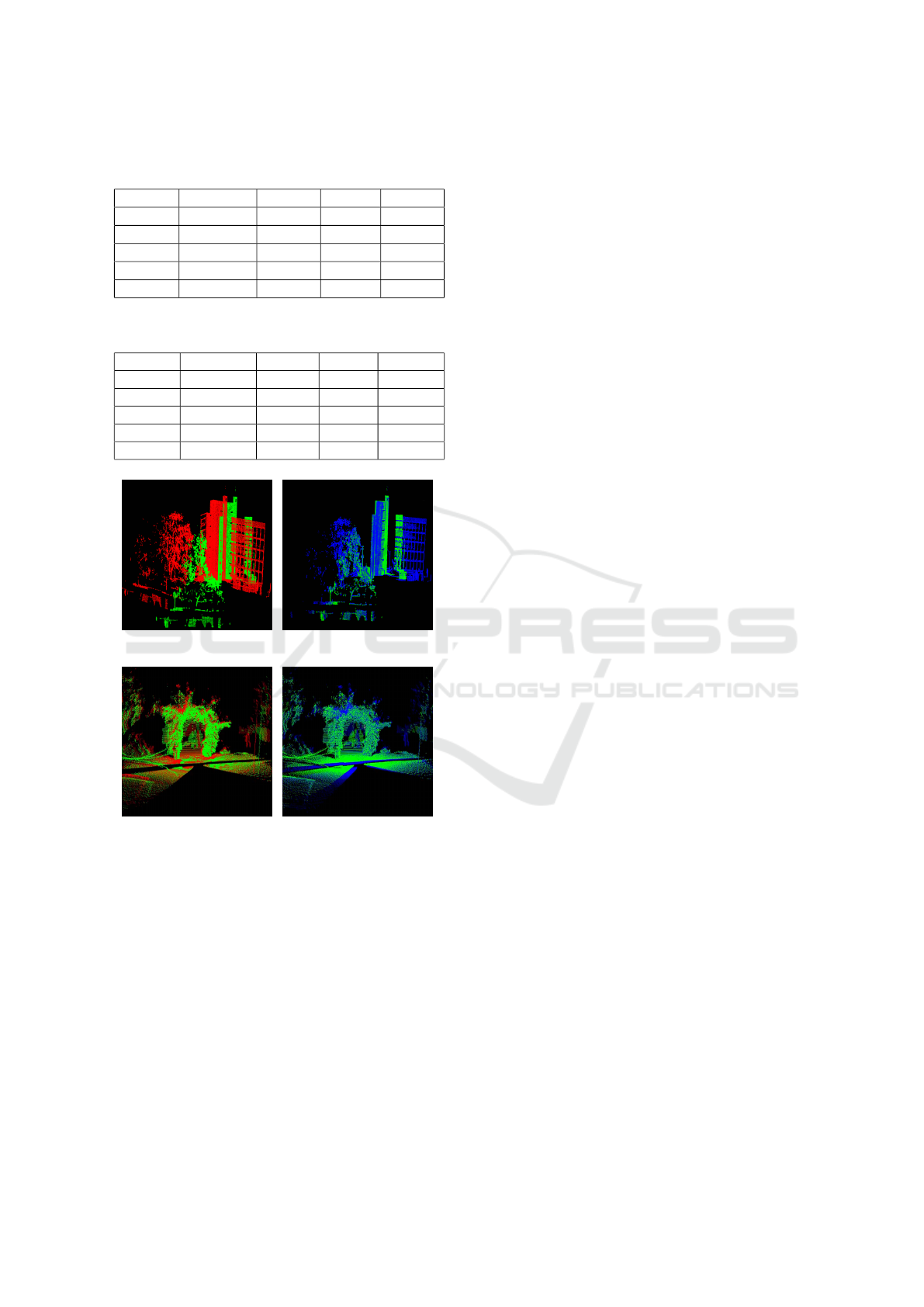

4.3 Pairwise Registration of Outdoor Scenes

This experiment poses the challenges of outdoor scene perception to the registration algorithm. Indeed, the surfaces to be matched are no longer rigid and, therefore, a rigid-transformation approach to pose correction may simply fail. To illustrate the concept, think of moving people or cars, and also of trees with moving leaves, across the different acquisition shots of the scenario; they all disturb the surface representation, which could require local transformation matrices (or another nonrigid approach) for proper pose correction.

Figure 4: Heatmap-like images of Eigentropy and Omnivariance corresponding to Euclidean distances between pairs of the investigated samples. The darker areas correspond to close samples in the given space. The line in the color bar refers to the separation threshold between the classes.

Figure 5: Alignment of the Dragon and Bunny models: (a) initial Dragon pose, (b) Dragon registration, (c) initial Bunny pose and (d) Bunny registration.

Table 4: Time in seconds to correct the pose of pairs of objects.

| Method (s) | Bunny | Dragon | Happy Buddha | Hammer |
|---|---|---|---|---|
| ICP_p2p | 9.427 | 7.527 | 17.084 | 0.356 |
| 4PCS | 7.425 | 0.646 | 51.388 | 187.40 |
| SAC IA | 342.34 | 276.90 | 245.93 | 21.999 |
| Go-ICP | 36.537 | 35.847 | 36.288 | 36.198 |
| CP-ICP | 5.916 | 3.503 | 9.297 | 0.157 |
| Ours | 4.043 | 3.301 | 3.015 | 0.294 |

Results are reported in Tables 5 and 6, which once again give the root-mean-square error between source and target models after registration and the time elapsed in the task. Compared to the other techniques, the time efficiency of the proposed algorithm is remarkable: it is the fastest method in all four scenes of Table 6. The matching quality, as measured by the RMSE metric, proved good enough for many scene 3D perception purposes, as illustrated in Figures 6(a) to 6(d).


Table 5: RMSE obtained from registration between pairs of outdoor scenes.

| Method | Hannover | Gasebo | Camp | Depart. |
|---|---|---|---|---|
| ICP_p2pl | 0.406 | 0.309 | 15.597 | 3.047 |
| GICP | 0.451 | 0.429 | 32.655 | 4.582 |
| NDT | 0.408 | 0.324 | 12.714 | 8.084 |
| CP-GICP | 0.336 | 0.201 | 13.097 | 2.708 |
| Ours | 0.341 | 0.222 | 12.484 | 3.631 |

Table 6: Time in seconds to correct the pose of pairs of outdoor scenes.

| Method (s) | Hannover | Gasebo | Camp | Depart. |
|---|---|---|---|---|
| ICP_p2pl | 4.141 | 13.014 | 198.00 | 92.291 |
| GICP | 6.479 | 27.994 | 288.64 | 318.21 |
| NDT | 5.752 | 36.111 | 197.37 | 87.797 |
| CP-GICP | 6.939 | 117.69 | 265.25 | 1968.06 |
| Ours | 0.648 | 11.582 | 86.642 | 29.345 |

Figure 6: Alignment of the Department and Gasebo models: (a) initial pose and (b) alignment of the Department model; (c) initial pose and (d) registration of the Gasebo model.

5 CONCLUSIONS AND FUTURE WORK

This paper introduced a registration algorithm for point clouds originating from two different contexts, namely objects and outdoor environments. An analysis of geometric descriptors based on the covariance matrix eigenvalues revealed that eigentropy and omnivariance can be used as features that simply and successfully separate the data samples of these two contexts, while preserving intraclass similarity across the whole dataset prepared for the present investigation.

As future work, one improvement to pursue is the ability to switch among several registration cores, opening the way to a many-context-aware registration approach. Although this strictly depends on the availability of ad hoc knowledge about the correspondence between a given technique and the context itself, which in turn relies on extensive surveys of the literature, it does pave the way towards fully automatic registration methods embedded in robots or autonomous vision systems. Such an automatic system would rely on a very good stopping criterion, which suggests a second relevant issue to address later: the search for alternatives to RMSE as a registration quality measure, since RMSE often gives a misleading or unacceptable quantitative representation of matching quality.

ACKNOWLEDGEMENTS

This work was financed in part by Fundação Cearense de Apoio ao Desenvolvimento Científico e Tecnológico (FUNCAP). This study was financed in part by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brasil (CAPES) - Finance Code 001. The authors thank colleagues from the research group for the valuable comments. The authors also thank Marcus Forte and Fabricio Gonzales for acquiring and providing priority datasets.

REFERENCES

Aiger, D., Mitra, N. J., and Cohen-Or, D. (2008). 4-points congruent sets for robust pairwise surface registration. In ACM Transactions on Graphics (TOG), volume 27, page 85. ACM.

Aleotti, J., Rizzini, D. L., and Caselli, S. (2014). Perception and grasping of object parts from active robot exploration. Journal of Intelligent & Robotic Systems, 76(3-4):401–425.

Besl, P. J. and McKay, N. D. (1992). Method for registration of 3-d shapes. IEEE Transactions on Pattern Analysis and Machine Intelligence.

Chebrolu, N., Läbe, T., and Stachniss, C. (2018). Robust long-term registration of uav images of crop fields for precision agriculture. IEEE Robotics and Automation Letters, 3(4):3097–3104.

Chen, Y. and Medioni, G. (1992). Object modelling by registration of multiple range images. Image and vision computing, 10(3):145–155.

Demantké, J., Mallet, C., David, N., and Vallet, B. (2011). Dimensionality based scale selection in 3d lidar point clouds. In Laserscanning.

Donoso, F., Austin, K. J., and McAree, P. R. (2017). Three new iterative closest point variant-methods that improve scan matching for surface mining terrain. Robotics and Autonomous Systems, 95:117–128.

Forte, M. D. N., Neto, P. S., The, G. A. P., and Nogueira, F. G. (2021). Altitude Correction of an UAV Assisted by Point Cloud Registration of LiDAR Scans. In Informatics in Control, Automation and Robotics: 18th International Conference, ICINCO 2021 Online streaming, July 6-8, 2021, volume 1.

Hackel, T., Wegner, J. D., and Schindler, K. (2016). Fast semantic segmentation of 3d point clouds with strongly varying density. ISPRS Annals of Photogrammetry, Remote Sensing & Spatial Information Sciences, 3(3).

Kurobe, A., Sekikawa, Y., Ishikawa, K., and Saito, H. (2020). Corsnet: 3d point cloud registration by deep neural network. IEEE Robotics and Automation Letters, 5(3):3960–3966.

Levinson, J., Askeland, J., Becker, J., Dolson, J., Held, D., Kammel, S., Kolter, J. Z., Langer, D., Pink, O., Pratt, V., et al. (2011). Towards fully autonomous driving: Systems and algorithms. In 2011 IEEE intelligent vehicles symposium (IV), pages 163–168. IEEE.

Levoy, M., Gerth, J., Curless, B., and Pull, K. (2005). The stanford 3d scanning repository.

Liu, L., Shi, T., Liu, B., and Yao, H. (2020). Comparison of initial registration algorithms suitable for icp algorithm. In 2020 International Conference on Computer Network, Electronic and Automation (ICCNEA), pages 106–110. IEEE.

Magnusson, M., Lilienthal, A., and Duckett, T. (2007). Scan registration for autonomous mining vehicles using 3d-ndt. Journal of Field Robotics, 24(10):803–827.

Oliveira, F. P. and Tavares, J. M. R. (2014). Medical image registration: a review. Computer methods in biomechanics and biomedical engineering, 17(2):73–93.

Rusu, R. B. and Cousins, S. (2011). 3d is here: Point cloud library (pcl). In Robotics and automation (ICRA), 2011 IEEE International Conference on, pages 1–4. IEEE.

Segal, A., Haehnel, D., and Thrun, S. (2009). Generalized-icp. In Robotics: science and systems, volume 2, page 435.

Siqueira, R. S., Alexandre, G. R., Soares, J. M., and The, G. A. P. (2018). Triaxial Slicing for 3-D Face Recognition From Adapted Rotational Invariants Spatial Moments and Minimal Keypoints Dependence. IEEE Robotics and Automation Letters, 3(4):3513–3520.

Souza Neto, P., Pereira, N. S., and Thé, G. A. P. (2018). Improved Cloud Partitioning Sampling for Iterative Closest Point: Qualitative and Quantitative Comparison Study. In 15th International Conference on Informatics in Control, Automation and Robotics.

Vitter, J. S. (1984). Faster methods for random sampling. Communications of the ACM, 27(7):703–718.

Wulf, O. (2016). Robotic 3d scan repository.

Yang, J., Li, H., Campbell, D., and Jia, Y. (2016). Go-ICP: A Globally Optimal Solution to 3D ICP Point-Set Registration. IEEE Transactions on Pattern Analysis and Machine Intelligence.

Zhang, Z. (1994). Iterative point matching for registration of free-form curves and surfaces. International journal of computer vision, 13(2):119–152.
