Testing Environment for Developing a Wireless Networking System based on Image-assisted Routing for Sports Applications

Shiho Hanashiro¹, JunFeng Xue¹, Junya Morioka¹, Ryusuke Miyamoto², Takuam Hamagami³, Kentaro Yanagihara³, Yasutaka Kawamoto³, Hiroyuki Okuhata⁴, Hiroyuki Yomo⁵ and Tomohito Takubo⁶

¹Graduate School of Science and Technology, Meiji University, Kawasaki, Japan

²School of Science and Technology, Meiji University, Kawasaki, Japan

³Oki Electric Industry Co., Ltd., Tokyo, Japan

⁴Soliton Systems K.K., Tokyo, Japan

⁵Faculty of Engineering Science, Kansai University, Suita, Japan

⁶Graduate School of Engineering, Osaka City University, Osaka, Japan

hiroyuki.okuhata@soliton.co.jp, yomo@kansai-u.ac.jp, takubo@eng.osaka-cu.ac.jp

Keywords:

Image-assisted Routing, Testing Environment, AR Marker, Wireless Sensor Network, Vital Sensing.

Abstract:

To improve the effectiveness of exercise, a novel vital sensing system is under development. For real-time sensing of vital signs by a wireless network during exercise, image-assisted routing has been proposed to enable dynamic routing of a multi-hop network according to sensor locations estimated from visual information. To develop this novel networking system, this study proposes a testing environment that enables runtime verification of dynamic routing based on image processing. Experimental results with actual sensor nodes carrying AR markers showed that the locations of sensor nodes obtained using a USB camera could be appropriately provided to the control software of the base station to manage routing information.

1 INTRODUCTION

To improve the effectiveness of exercise and prevent

sudden illness, a novel vital sensing system is cur-

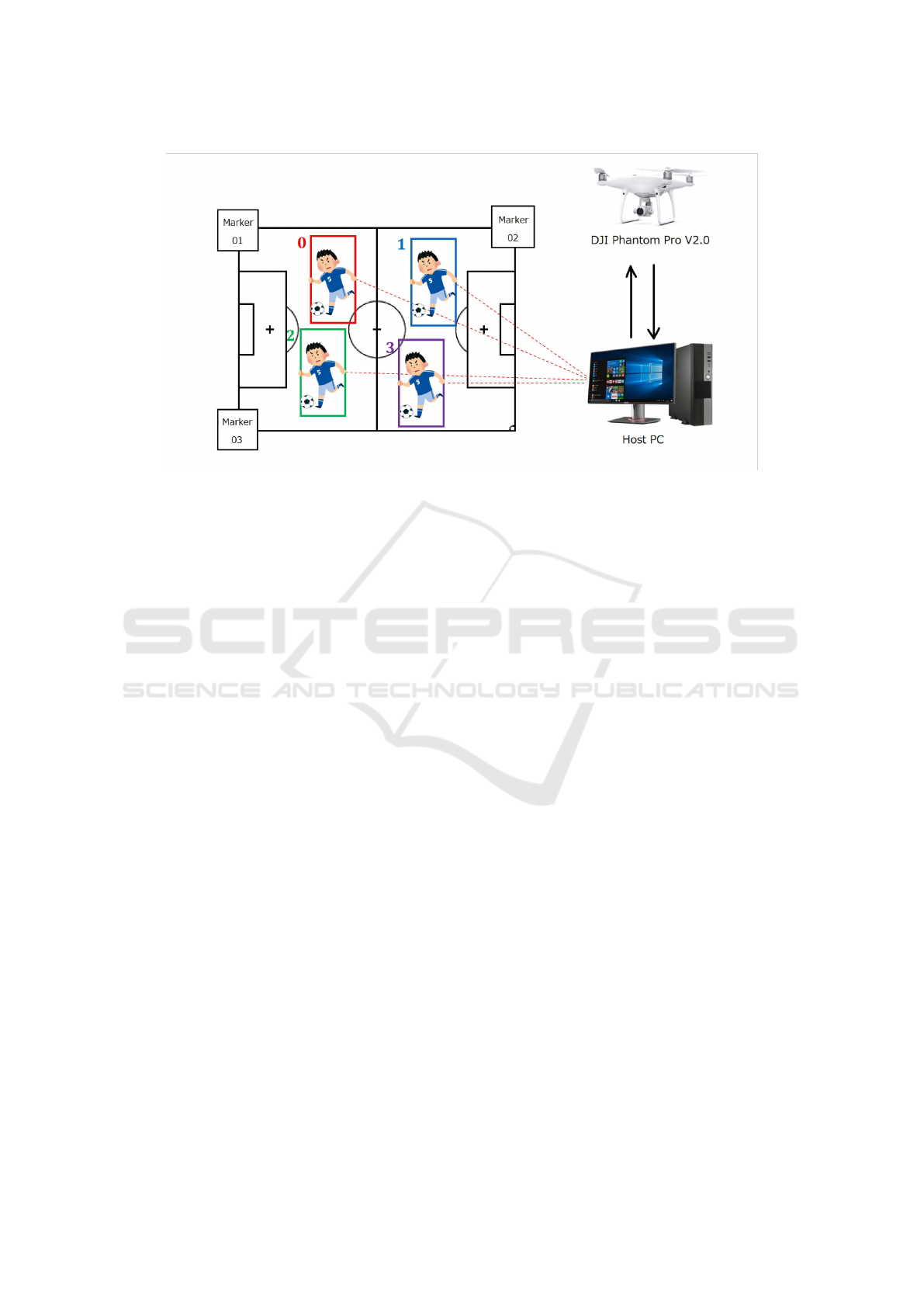

rently under development(Hara et al., 2017). Figure 1

illustrates the collection of vital signs via a multihop

wireless network. For the real-time sensing of hu-

man vitals by a wireless network during exercise, the

most significant challenge is the localization of sensor

nodes. This is owing to the inadequate operations of

conventional routing schemes based on the received

signal-strength indicator (RSSI) or global position-

ing system (GPS). To address this challenge, image-

assisted routing (IAR), which localizes sensor nodes

using visual information, was proposed(Miyamoto

and Oki, 2016). In IAR, vision-based human detec-

tion and tracking processes are applied to obtain the

location of humans wearing sensor nodes.

Figure 1: Collecting vital signs via a multi-hop wireless network.

The vision-based localization of humans wearing sensor nodes for vital monitoring comprises human detection and tracking. For the detection process, an exhaustive search based on sliding windows is adopted. Accurate detection can be achieved with this approach (Oki et al., 2019b), and its processing speed can be improved by parallel processing on specialized hardware and a graphics processing unit (GPU) (Oki and Miyamoto, 2017). Once accurate detection results are obtained by a detector, a simple tracking scheme (Yokokawa et al., 2017) that correlates detection results over several frames of input images can be effectively adopted in this application. Fundamental technologies for wireless networking have also been developed to realize real-time vital sensing of humans during exercise (Hara et al., 2020; Hamagami et al., 2020).

Hanashiro, S., Xue, J., Morioka, J., Miyamoto, R., Hamagami, T., Yanagihara, K., Kawamoto, Y., Okuhata, H., Yomo, H. and Takubo, T.

Testing Environment for Developing a Wireless Networking System based on Image-assisted Routing for Sports Applications.

DOI: 10.5220/0010690900003059

In Proceedings of the 9th International Conference on Sport Sciences Research and Technology Support (icSPORTS 2021), pages 138-143

ISBN: 978-989-758-539-5; ISSN: 2184-3201

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Figure 2: System overview.
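The exhaustive sliding-window search used in the detection process can be illustrated with the minimal Python sketch below. It enumerates every window position at a fixed stride and keeps the windows whose score exceeds a threshold. The `score` callback, window size, stride, and threshold are hypothetical placeholders rather than parameters of the informed-filters detector, and multi-scale search and non-maximum suppression are omitted.

```python
from typing import Callable, List, Tuple

import numpy as np

# A window is (x, y, width, height) in pixel coordinates.
Window = Tuple[int, int, int, int]


def sliding_windows(img_h: int, img_w: int, win_h: int, win_w: int,
                    stride: int) -> List[Window]:
    """Enumerate all window positions that fit completely inside the image."""
    return [(x, y, win_w, win_h)
            for y in range(0, img_h - win_h + 1, stride)
            for x in range(0, img_w - win_w + 1, stride)]


def exhaustive_search(image: np.ndarray, win_h: int, win_w: int, stride: int,
                      score: Callable[[np.ndarray], float],
                      threshold: float) -> List[Tuple[Window, float]]:
    """Score every window and keep those above the threshold.

    `score` stands in for a trained classifier (hypothetical here).
    """
    detections = []
    for (x, y, w, h) in sliding_windows(image.shape[0], image.shape[1],
                                         win_h, win_w, stride):
        s = score(image[y:y + h, x:x + w])
        if s > threshold:
            detections.append(((x, y, w, h), s))
    return detections


if __name__ == "__main__":
    # Toy example: a bright 4x2 blob in a dark image; the "classifier" is the mean intensity.
    img = np.zeros((10, 10))
    img[2:6, 4:6] = 1.0
    hits = exhaustive_search(img, win_h=4, win_w=2, stride=1,
                             score=lambda patch: float(patch.mean()),
                             threshold=0.9)
    print(hits)  # [((4, 2, 2, 4), 1.0)]
```

The cost grows with the number of windows times the cost of one classifier evaluation, which is why the text points to parallel processing on a GPU or specialized hardware.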

As described above, the elementary technologies indispensable for IAR have been successfully developed. However, to develop the final IAR system that combines image-based localization and wireless multi-hop networking, it is necessary to verify the operation of dynamic routing based on sensor locations estimated from visual information. Accordingly, this study proposes a novel testing environment that enables the verification of dynamic routing for multi-hop networking using the locations of sensor nodes estimated from visual information.

The organization of this final system is presented in Figure 2: the video sequence obtained from a drone flying over a sports field is used to localize humans wearing sensor nodes with computer vision technologies. However, it is impractical to use a flying drone for integration testing, in which the algorithm and implementation of dynamic routing based on image-based localization must be verified.

To efficiently support the development of the novel sensor networking system, a testing environment is required that includes vision-based localization of sensor nodes without drones, together with a wireless networking system identical to that of the final system. In the testing environment, an AR marker is attached to each sensor node, and its location is determined using a USB camera. The estimated locations are provided to the control software of the base station, which handles the routing information of the sensor nodes. This approach makes it possible to develop both the algorithms and the system for the novel wireless networking system.

2 RELATED WORK

This section explains the concept of IAR, as well as the computer vision technologies indispensable for realizing IAR: visual human detection and tracking.

2.1 Concept of Image-assisted Routing

In image-assisted routing (IAR), dynamic routing

in multi-hop networking is performed using the lo-

cations of sensor nodes estimated by computer vi-

sion technologies, using a video sequence obtained

by a flying drone; however, conventional wireless

multi-hop systems control multi-hop networking us-

ing RSSI and/or GPS. In addition, although the sensor

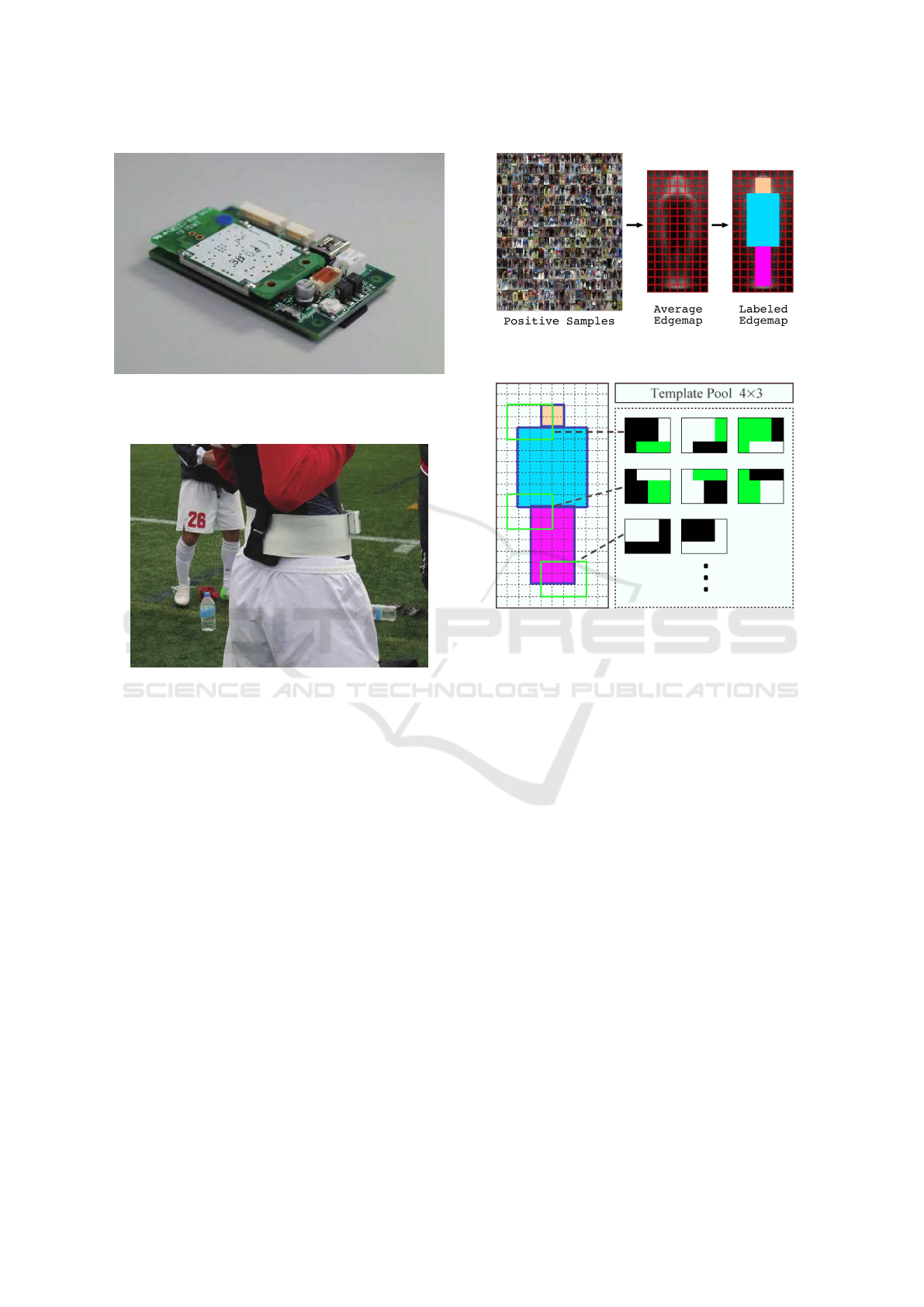

node presented in Figure 3 can sense RSSI and esti-

mate location using the GPS, IAR is implemented to

improve the robustness of multi-hop networking un-

der several conditions that may occur in sports scenes.
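To make the idea of location-driven routing concrete, the Python sketch below shows one simple way estimated locations could determine multi-hop routes: nodes within an assumed radio range are treated as neighbors, and each node forwards toward the base station along a minimum-hop path found by breadth-first search. The fixed range, the unit-disk link model, and the hop-count metric are assumptions for illustration only; the routing algorithm actually used in IAR is not specified in this paper.

```python
import math
from collections import deque
from typing import Dict, Optional, Tuple

Point = Tuple[float, float]


def next_hops(locations: Dict[int, Point], base_id: int,
              radio_range: float) -> Dict[int, Optional[int]]:
    """Return, for every node reachable from the base station, the neighbor it
    should forward to (its parent on a minimum-hop tree rooted at the base).

    A link is assumed to exist whenever two nodes are within `radio_range`
    (a unit-disk model chosen for illustration). Unreachable nodes are omitted.
    """
    def linked(a: int, b: int) -> bool:
        (xa, ya), (xb, yb) = locations[a], locations[b]
        return math.hypot(xa - xb, ya - yb) <= radio_range

    parent: Dict[int, Optional[int]] = {base_id: None}
    queue = deque([base_id])
    while queue:  # breadth-first search outward from the base station
        current = queue.popleft()
        for node in locations:
            if node not in parent and linked(current, node):
                parent[node] = current  # forward toward `current`
                queue.append(node)
    return parent


if __name__ == "__main__":
    # Base station 0 at the origin; sensor 2 is out of direct range but reachable via sensor 1.
    locs = {0: (0.0, 0.0), 1: (8.0, 0.0), 2: (16.0, 0.0)}
    print(next_hops(locs, base_id=0, radio_range=10.0))  # {0: None, 1: 0, 2: 1}
```

Rerunning such a function whenever new location estimates arrive is what "dynamic" routing amounts to in this simplified model.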

2.2 Visual Human Detection

The primary purpose of IAR is to realize real-time vital sensing of humans during exercise, where the locations of sensor nodes are estimated as the locations of the humans wearing them. Each sensor node is attached to a human as illustrated in Figure 4. The challenge of localizing target humans in an image can be addressed by adopting visual object detectors widely used in computer vision applications. The YOLO series (Redmon and Farhadi, 2017; Redmon and Farhadi, 2018), RetinaNet (Lin et al., 2017), and EfficientDet (Tan et al., 2020) are advanced schemes based on deep neural networks that exhibit excellent performance in detecting target objects in an image.

Figure 3: Sensor nodes for real-time vital sensing during exercises.

Figure 4: Attaching a sensor node to a human doing exercise.

In addition, a traditional detection scheme comprising handcrafted features and boosting also exhibits adequate accuracy for human detection in sports scenes (Oki et al., 2019b; Oki et al., 2019a), because humans in such scenes are expected to be easier to distinguish from the background than in generic cases.

We adopt informed-filters, one of the most accurate human detection schemes among those based on handcrafted features. This scheme achieves both accurate detection and fast processing by designing features according to the average edge map obtained from training samples, as illustrated in Figures 5 and 6. Its processing speed can be further improved by parallel implementation on a GPU and an FPGA. These advantages make the scheme suitable for the real-time processing required by IAR in sports scenes.

Figure 5: Edge map generation.

Figure 6: Template generation.
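The step of building a template from the average edge map of training samples (Figures 5 and 6) can be sketched in Python with NumPy. This is a simplified illustration under stated assumptions: the edge map is taken as the gradient magnitude computed with `np.gradient`, the training samples are assumed to be equally sized grayscale crops, and the later steps of informed-filters (deriving filter templates from the map and training a boosted classifier) are not shown.

```python
from typing import List

import numpy as np


def edge_map(sample: np.ndarray) -> np.ndarray:
    """Gradient-magnitude edge map of a grayscale sample (assumed edge operator)."""
    gy, gx = np.gradient(sample.astype(np.float64))  # derivatives along rows, columns
    return np.hypot(gx, gy)


def average_edge_map(samples: List[np.ndarray]) -> np.ndarray:
    """Average the edge maps of equally sized training samples and
    normalize the result to [0, 1] (left as zeros if there are no edges)."""
    stacked = np.stack([edge_map(s) for s in samples])
    mean_map = stacked.mean(axis=0)
    peak = mean_map.max()
    return mean_map / peak if peak > 0 else mean_map


if __name__ == "__main__":
    # Two toy 8x4 "pedestrian" crops with a bright vertical body region.
    a = np.zeros((8, 4))
    a[:, 1:3] = 1.0
    b = np.zeros((8, 4))
    b[1:7, 1:3] = 1.0
    template = average_edge_map([a, b])
    print(template.shape)  # (8, 4)
```

Averaging over many samples suppresses edges that vary between individuals and keeps the contours they share, such as the body outline, which is the intuition behind designing features from this map.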

2.3 Visual Human Tracking to Estimate a Target’s ID

Accurate human detection in sports scenes can be realized using a detector constructed with informed-filters. However, IAR also requires human identification when several humans are monitored by the proposed vital sensing system. Although several approaches can be applied to identify target humans, the proposed scheme uses tracking over several frames of input images for this purpose. This task is called visual target tracking or visual object tracking in the field of computer vision, where the family of Kalman and particle filters plays a significant role. KCF (Henriques et al., 2015), which relies on image correspondence over frames instead of time-series filters, has recently attracted considerable attention.

icSPORTS 2021 - 9th International Conference on Sport Sciences Research and Technology Support

Figure 7: Overview of the proposed testing environment.

Several approaches have been proposed for this task; however, we selected a simpler approach because relatively accurate detection results can be obtained by a detector in the sports scenes that we tested (Oki et al., 2019a). This simple approach only associates detection results in the current frame with those of previous frames. Notably, this approach exhibited good accuracy, and its effectiveness was further improved in some test scenes by applying error correction based on color information (Aoki et al., 2020).
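The association step described above, which matches detections in the current frame to the tracks from previous frames, can be sketched as a greedy nearest-neighbor matcher in Python. The distance gate, the greedy closest-first matching order, and the policy of opening a new ID for each unmatched detection are assumptions for illustration; the cited scheme (Yokokawa et al., 2017) and the color-based error correction (Aoki et al., 2020) may differ in detail.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


class SimpleTracker:
    """Greedy nearest-neighbor association of detections across frames."""

    def __init__(self, max_distance: float) -> None:
        self.max_distance = max_distance    # gate: larger jumps are not matched
        self.tracks: Dict[int, Point] = {}  # track ID -> last known position
        self._next_id = 0                   # IDs are never reused

    def update(self, detections: List[Point]) -> Dict[int, Point]:
        # Candidate (distance, track ID, detection index) pairs inside the gate, closest first.
        pairs = sorted(
            (math.dist(pos, det), tid, di)
            for tid, pos in self.tracks.items()
            for di, det in enumerate(detections)
            if math.dist(pos, det) <= self.max_distance
        )
        used_tracks, used_dets = set(), set()
        updated: Dict[int, Point] = {}
        for _, tid, di in pairs:
            if tid in used_tracks or di in used_dets:
                continue  # each track and each detection is matched at most once
            used_tracks.add(tid)
            used_dets.add(di)
            updated[tid] = detections[di]
        # Unmatched detections start new tracks.
        for di, det in enumerate(detections):
            if di not in used_dets:
                updated[self._next_id] = det
                self._next_id += 1
        self.tracks = updated  # tracks without a match in this frame are dropped
        return updated


if __name__ == "__main__":
    tracker = SimpleTracker(max_distance=5.0)
    print(tracker.update([(0.0, 0.0), (20.0, 0.0)]))  # {0: (0.0, 0.0), 1: (20.0, 0.0)}
    print(tracker.update([(21.0, 1.0), (1.0, 0.5)]))  # {0: (1.0, 0.5), 1: (21.0, 1.0)}
```

Because only positions are compared, this works well only when detections are accurate and targets move little between frames, which matches the reasoning given for choosing a simple approach.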

Tracking-based identification is useful only when every ID obtained through the detection and tracking processes is mapped to the ID of the corresponding sensor node used in wireless networking. A correct mapping can be obtained by having the target humans line up in the order of the IDs of the sensor nodes attached to them before vital sensing during exercise starts.
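The line-up procedure can be expressed as a small mapping step. The Python sketch below assumes, hypothetically, that the humans stand in a row ordered from left to right by the IDs of their sensor nodes; sorting the tracks by their x coordinate in the first frame then yields the correspondence between tracking IDs and sensor-node IDs.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def assign_sensor_ids(first_frame_tracks: Dict[int, Point],
                      sensor_ids: List[int]) -> Dict[int, int]:
    """Map tracking IDs to sensor-node IDs, assuming the humans line up from
    left to right in ascending order of their sensor-node IDs."""
    if len(first_frame_tracks) != len(sensor_ids):
        raise ValueError("every lined-up human must be detected exactly once")
    # Track IDs ordered by horizontal image position (left to right).
    ordered_tracks = sorted(first_frame_tracks,
                            key=lambda tid: first_frame_tracks[tid][0])
    return dict(zip(ordered_tracks, sorted(sensor_ids)))


if __name__ == "__main__":
    tracks = {5: (30.0, 2.0), 3: (10.0, 1.0), 9: (20.0, 1.5)}
    print(assign_sensor_ids(tracks, [102, 100, 101]))  # {3: 100, 9: 101, 5: 102}
```

Once established, the mapping would be applied to every later tracking result so that locations can be reported per sensor node.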

3 TESTING ENVIRONMENT FOR DEVELOPING A WIRELESS-NETWORKING SYSTEM BASED ON IMAGE-ASSISTED ROUTING FOR SPORTS APPLICATIONS

This section proposes a testing environment for multi-hop wireless networking based on IAR, in which the locations of sensor nodes are estimated from visual information.

3.1 Overview of the Testing Environment

Figure 7 presents an overview of the proposed testing environment. In this testing system, the locations of sensor nodes with AR markers are determined in real time from a video sequence captured by a USB camera and are sent to the control software of the base station at runtime. This real-time, image-based localization of sensor nodes enables the development and verification of a dynamic routing system based on IAR.

Figure 8: Example of a JSON file that contains locations of sensor nodes estimated by a USB camera.

3.2 AR Marker Detection

In an actual system based on IAR, the locations of sensor nodes are estimated via human detection and tracking using a video sequence obtained by a camera mounted on a drone. However, verifying the IAR-based networking system with video captured by a drone is expensive, because it requires several target humans on a sports field where a drone can be flown. Therefore, a more compact system is required to verify the basic functions of IAR, especially the dynamic update of routing information according to the results of image-based localization. Accordingly, we decided to attach an AR marker to each sensor node and provide the locations of the sensor nodes to the control software of the base station, which manages their dynamic routing.

To verify the basic functions of wireless networking based on IAR, AR markers are attached to the sensor nodes so that their locations can be accurately obtained from image sequences captured by a USB camera. To generate and detect AR markers, ArUco markers (J.Romero-Ramirez et al., 2018; Garrido-Jurado et al., 2016), as implemented in the OpenCV library, were adopted. This library drastically reduces the implementation cost of the AR marker detector.

3.3 System Implementation

A JSON file is adopted as the interface to the control software of the base station. The locations of the sensor nodes are written to a JSON file, and the control software of the base station reads the node locations from this file. Figure 8 presents an example of a JSON file that contains node locations obtained via image processing. Routing information can be updated dynamically because the control software of the base station reads the current locations of the sensor nodes after they are updated. In the actual system, the current locations of sensor nodes are determined by human detection and tracking using a video sequence obtained from a camera mounted on a drone.
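Because the exact contents of Figure 8 are not reproduced in the text, the field names in the Python sketch below ("timestamp", "nodes", "id", "x", "y") are hypothetical. The sketch shows one way the localization side could publish node locations as a JSON file and the control software could read them back. The file is written to a temporary file and then swapped in with `os.replace`, so that a reader never sees a half-written file; this is an implementation choice assumed here, not one described in the paper.

```python
import json
import os
import tempfile
import time
from typing import Dict, Tuple

Point = Tuple[float, float]


def write_locations(path: str, locations: Dict[int, Point]) -> None:
    """Publish node locations as JSON (hypothetical schema)."""
    payload = {
        "timestamp": time.time(),
        "nodes": [{"id": nid, "x": x, "y": y}
                  for nid, (x, y) in sorted(locations.items())],
    }
    directory = os.path.dirname(os.path.abspath(path))
    # Write to a temporary file in the same directory, then swap it in.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(payload, f)
        os.replace(tmp_path, path)  # atomic on POSIX when on the same file system
    except Exception:
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)  # do not leave stale temporary files behind
        raise


def read_locations(path: str) -> Dict[int, Point]:
    """Read the latest node locations, as the control software would."""
    with open(path) as f:
        payload = json.load(f)
    return {node["id"]: (node["x"], node["y"]) for node in payload["nodes"]}
```

A file-based interface decouples the two programs: the localizer can rewrite the file at the camera frame rate while the control software reads it at its own, possibly much lower, rate.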

To verify the combined operation of image-based localization and the dynamic update of routing information by the control software, the software interface between the AR-marker-based localization and the control software is designed to be identical to that of the actual system. Consequently, no modification to the control software is required; only the localization based on AR markers needs to be implemented with the identical interface. This organization of the testing environment enables efficient development of the novel sensor networking algorithm and system.
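The design principle that the AR-marker localizer and the drone-based localizer share one interface can be sketched with a Python `Protocol`. The names `NodeLocalizer`, `ArMarkerLocalizer`, `DroneVisionLocalizer`, and `publish_once` are hypothetical; the sketch only illustrates that code feeding the control software depends on the interface, so swapping the localizer requires no change on the control-software side.

```python
from typing import Callable, Dict, Protocol, Tuple

Point = Tuple[float, float]


class NodeLocalizer(Protocol):
    """Anything that can report the current location of each sensor node."""

    def locate(self) -> Dict[int, Point]:
        ...


class ArMarkerLocalizer:
    """Testing environment: locations come from AR markers seen by a USB camera."""

    def __init__(self, detect: Callable[[], Dict[int, Point]]) -> None:
        self._detect = detect  # e.g. a wrapper around an ArUco detector

    def locate(self) -> Dict[int, Point]:
        return self._detect()


class DroneVisionLocalizer:
    """Final system: locations come from human detection and tracking on drone video."""

    def __init__(self, track: Callable[[], Dict[int, Point]]) -> None:
        self._track = track

    def locate(self) -> Dict[int, Point]:
        return self._track()


def publish_once(localizer: NodeLocalizer,
                 write: Callable[[Dict[int, Point]], None]) -> None:
    """Hand one set of locations to the control-software interface (e.g. a JSON writer)."""
    write(localizer.locate())


if __name__ == "__main__":
    received = []
    publish_once(ArMarkerLocalizer(lambda: {1: (0.0, 1.0)}), received.append)
    publish_once(DroneVisionLocalizer(lambda: {1: (2.0, 3.0)}), received.append)
    print(received)  # [{1: (0.0, 1.0)}, {1: (2.0, 3.0)}]
```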

4 EVALUATION

This section evaluates the proposed testing environment according to the following procedures:

1. Identical ID assignment of sensor nodes in the control software and image-based localization,

2. Manual movement of sensor nodes, and

3. Update of sensor locations in the control software according to the movement.

To assign an identical ID to each sensor node in both the control software of the base station and the localization software using AR markers, we exploited the ID assignment procedure of the control software, which assigns a new ID to a sensor node when it is switched on: the sensor nodes were switched on in the order of the IDs of the AR markers attached to them. Accordingly, the same ID was assigned to each sensor node in the control software and in image processing.
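The ID-matching step can be checked with a few lines of Python. Here the control software is modeled, hypothetically, as handing out consecutive IDs in power-on order starting from a given value (assumed to equal the smallest marker ID). Switching the nodes on in ascending order of their marker IDs then makes the two ID spaces coincide, and the check flags any mismatch.

```python
from typing import Dict, List


def control_software_ids(power_on_order: List[int], first_id: int = 0) -> Dict[int, int]:
    """Model of the control software: the k-th node switched on receives
    ID first_id + k. Returns {marker ID of node: ID given by control software}.
    The starting value and the consecutive numbering are assumptions."""
    return {marker_id: first_id + k for k, marker_id in enumerate(power_on_order)}


def ids_consistent(assigned: Dict[int, int]) -> bool:
    """True if every node has the same ID in the control software and in image processing."""
    return all(marker_id == control_id for marker_id, control_id in assigned.items())


if __name__ == "__main__":
    markers = [2, 0, 1]
    print(ids_consistent(control_software_ids(sorted(markers))))  # True
    print(ids_consistent(control_software_ids(markers)))          # False
```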

After all the sensor nodes were activated, one sensor node was moved, as illustrated in Figures 9, 10, and 11. In these images, the AR markers corresponding to all the sensor nodes were accurately detected, and appropriate locations and IDs were obtained. The update rate of the base station’s control software was significantly lower than the frame rate of the USB camera. Hence, the control software updated the locations of the sensor nodes some time after the actual movement of the sensor node had finished. Figure 12 illustrates how the locations of the sensor nodes were updated according to the movement of the sensor node. These results indicate that the locations of sensor nodes determined by a USB camera can be appropriately provided to the control software of the base station to manage the routing information of the sensor nodes. This shows that the proposed testing environment supports the algorithm design and system development of dynamic routing based on sensor locations determined from visual information at runtime.

Figure 9: Before moving.

Figure 10: While moving.

Figure 11: After moving.

Figure 12: After update of locations.

5 CONCLUSION

This study proposes a testing environment for developing a novel vital sensing system based on IAR for humans exercising on an outdoor sports field. The key technology of the vital sensing system is the dynamic routing of wireless networking among sensor nodes based on their locations estimated by computer vision technologies. However, verifying the functions of the networking system at runtime with actual video sequences of several people exercising on an outdoor sports field, captured by a camera mounted on a flying drone, is difficult and too expensive during development. To address this challenge, a compact but effective system was developed to verify the combined operation of the control software and the localization software based on image processing. The proposed system adopts AR markers to determine the locations and IDs of sensor nodes and provides these locations to the control software in real time. The experimental results with actual sensor nodes carrying AR markers indicate that the locations of sensor nodes obtained using a USB camera could be appropriately provided to the control software of the base station to manage routing information.

ACKNOWLEDGEMENTS

These research results were achieved in part through “Research and development of Innovative Network Technologies to Create the Future”, the Commissioned Research of the National Institute of Information and Communications Technology (NICT), JAPAN.

REFERENCES

Aoki, R., Oki, T., and Miyamoto, R. (2020). Accuracy improvement of human tracking in aerial images using error correction based on color information. In Proceedings of the 9th International Conference on Software and Computer Applications, ICSCA 2020, Langkawi, Malaysia, February 18-21, 2020, pages 124–128. ACM.

Garrido-Jurado, S., Salinas, R. M., Madrid-Cuevas, F., and Medina-Carnicer, R. (2016). Generation of fiducial marker dictionaries using mixed integer linear programming. Pattern Recognition, 51:481–491.

Hamagami, T., Hara, S., Kawamoto, Y., Yomo, H., Miyamoto, R., and Okuhata, H. (2020). Summary of experimental results on accurate wireless sensing system for a group of exercisers spread in an outdoor ground. In 14th International Symposium on Medical Information Communication Technology, ISMICT 2020, Nara, Japan, May 20-22, 2020, pages 1–4. IEEE.

Hara, S., Hamagami, T., Kawamoto, Y., Yomo, H., Miyamoto, R., and Okuhata, H. (2020). An adaptive superframe change in a wireless vital sensor network for a group of outdoor exercisers. In 92nd IEEE Vehicular Technology Conference, VTC Fall 2020, Victoria, BC, Canada, November 18 - December 16, 2020, pages 1–6. IEEE.

Hara, S., Yomo, H., Miyamoto, R., Kawamoto, Y., Okuhata, H., Kawabata, T., and Nakamura, H. (2017). Challenges in Real-Time Vital Signs Monitoring for Persons during Exercises. International Journal of Wireless Information Networks, 24:91–108.

Henriques, J. F., Caseiro, R., Martins, P., and Batista, J. (2015). High-speed tracking with kernelized correlation filters. IEEE Transactions on Pattern Analysis and Machine Intelligence, 37:583–596.

J.Romero-Ramirez, F., Muñoz-Salinas, R., and Medina-Carnicer, R. (2018). Speeded up detection of squared fiducial markers. Image and Vision Computing, 76:38–47.

Lin, T.-Y., Goyal, P., Girshick, R., He, K., and Dollár, P. (2017). Focal loss for dense object detection. In Proc. IEEE Int. Conf. Comput. Vis., pages 2980–2988.

Miyamoto, R. and Oki, T. (2016). Soccer Player Detection with Only Color Features Selected Using Informed Haar-like Features. In Advanced Concepts for Intelligent Vision Systems, volume 10016 of Lecture Notes in Computer Science, pages 238–249.

Oki, T., Aoki, R., Kobayashi, S., Miyamoto, R., Yomo, H., and Hara, S. (2019a). Vision-based detection of humans on the ground from actual aerial images by informed filters using only color features. In Proc. International Conference on Sport Sciences Research and Technology Support, pages 84–89. ScitePress.

Oki, T. and Miyamoto, R. (2017). Efficient GPU implementation of informed-filters for fast computation. In Image and Video Technology, pages 302–313.

Oki, T., Miyamoto, R., Yomo, H., and Hara, S. (2019b). Detection accuracy of soccer players in aerial images captured from several viewpoints. Journal of Functional Morphology and Kinesiology, 4(1).

Redmon, J. and Farhadi, A. (2017). YOLO9000: better, faster, stronger. In Proc. IEEE Conf. Comput. Vis. Pattern Recognit., pages 6517–6525.

Redmon, J. and Farhadi, A. (2018). YOLOv3: An incremental improvement. CoRR, abs/1804.02767.

Tan, M., Pang, R., and Le, Q. V. (2020). EfficientDet: Scalable and efficient object detection. In Proc. IEEE Conf. Comput. Vis. Pattern Recognit.

Yokokawa, H., Oki, T., and Miyamoto, R. (2017). Feasibility study of a simple tracking scheme for multiple objects based on target motions. In Proc. International Workshop on Smart Info-Media Systems in Asia, pages 293–298.
