Netychords: An Accessible Digital Musical Instrument for Playing Chords using Gaze and Head Movements

Nicola Davanzoᵃ, Matteo De Filippisᵇ and Federico Avanziniᶜ

Dept. of Computer Science, University of Milan, 20133 Milan, Italy

Keywords: Accessible Digital Musical Instrument, Eye Tracking, Head Tracking, Chords.

Abstract: Research on Accessible Digital Musical Instruments (ADMIs) dedicated to users with motor disabilities, facilitated by the introduction of new sensors on the mass market during the last decades, has carved out an important niche within the scenario of new interfaces for musical expression. Among these instruments, Netytar, developed in 2018, is a hands-free ADMI enabling the performance of monophonic melodies, controlled using the gaze (for the selection of the notes) and the breath (for the control of the dynamics), through an eye tracker and an ad-hoc breath sensor. In this article we propose Netychords, a Netytar extension developed to allow a quadriplegic musician to play chords. The instrument is controlled using gaze and head movement. A head tracking paradigm is used to control chord strumming, through a cheap ad-hoc built head tracker. Interaction methods and mappings are discussed, along with a series of experimental chord key layouts, problems encountered and planned future developments.

1 INTRODUCTION

Besides being an engaging activity, playing a musical instrument can improve one’s cognitive abilities (Jaschke et al., 2013) and contribute to their health (Stensæth, 2013) and to the social aspects of their life (Costa-Giomi, 2004). The right to participate in the cultural and artistic activities of society is recognized by the Universal Declaration of Human Rights (United Nations, 2015). Despite developments in interfaces for musical expression and in accessibility research, people with motor disabilities often have difficulties participating in musical activities such as playing an instrument. Quadriplegia hinders the control of hands and feet, which is necessary to play most instruments and music interfaces designed for able-bodied users. According to a World Health Organization report, between 250,000 and 500,000 people worldwide suffer a spinal cord injury every year (World Health Organization, 2013). Accessible Digital Musical Instruments (ADMIs) are able to extend or transcend the creative possibilities offered by traditional musical instruments, providing interaction based on unconventional physical interaction channels. A few instruments dedicated to this niche of motor disability are found in the literature; a recent survey (Davanzo and Avanzini, 2020c) is dedicated to their design and analysis. Such instruments can exploit alternative interaction channels available from the neck upwards: eyes and eyebrows, mouth and related facial muscles, head movement and neck tension, and brain activity detected via electroencephalogram. In this article we propose Netychords, an ADMI and MIDI controller which allows the performance of chords through two of the listed channels: gaze, which is used for chord selection, and head movement, which is used to perform strumming. The instrument is inspired by Netytar, an ADMI presented and discussed in (Davanzo et al., 2018) and (Davanzo and Avanzini, 2020d), designed to perform monophonic melodies and controlled through gaze (again for note selection) and breath (which controls note dynamics and note on/off events). Netychords could complement it in collective musical performance. As we will see in Sec. 2, few of the instruments for quadriplegic users found in the literature allow chords to be played, so Netychords could fill that niche. We discuss the related state of the art in Sec. 2, the current implementation of Netychords in Sec. 3, and its main design-related aspects in Sec. 4. Finally, we discuss open problems as well as future work, testing and evaluation in Sec. 5.

ᵃ https://orcid.org/0000-0003-3073-5326

ᵇ https://orcid.org/0000-0001-8568-9983

ᶜ https://orcid.org/0000-0002-1257-5878

Davanzo, N., De Filippis, M. and Avanzini, F.

Netychords: An Accessible Digital Musical Instrument for Playing Chords using Gaze and Head Movements.

DOI: 10.5220/0010689200003060

In Proceedings of the 5th International Conference on Computer-Human Interaction Research and Applications (CHIRA 2021), pages 202-209

ISBN: 978-989-758-538-8; ISSN: 2184-3244

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

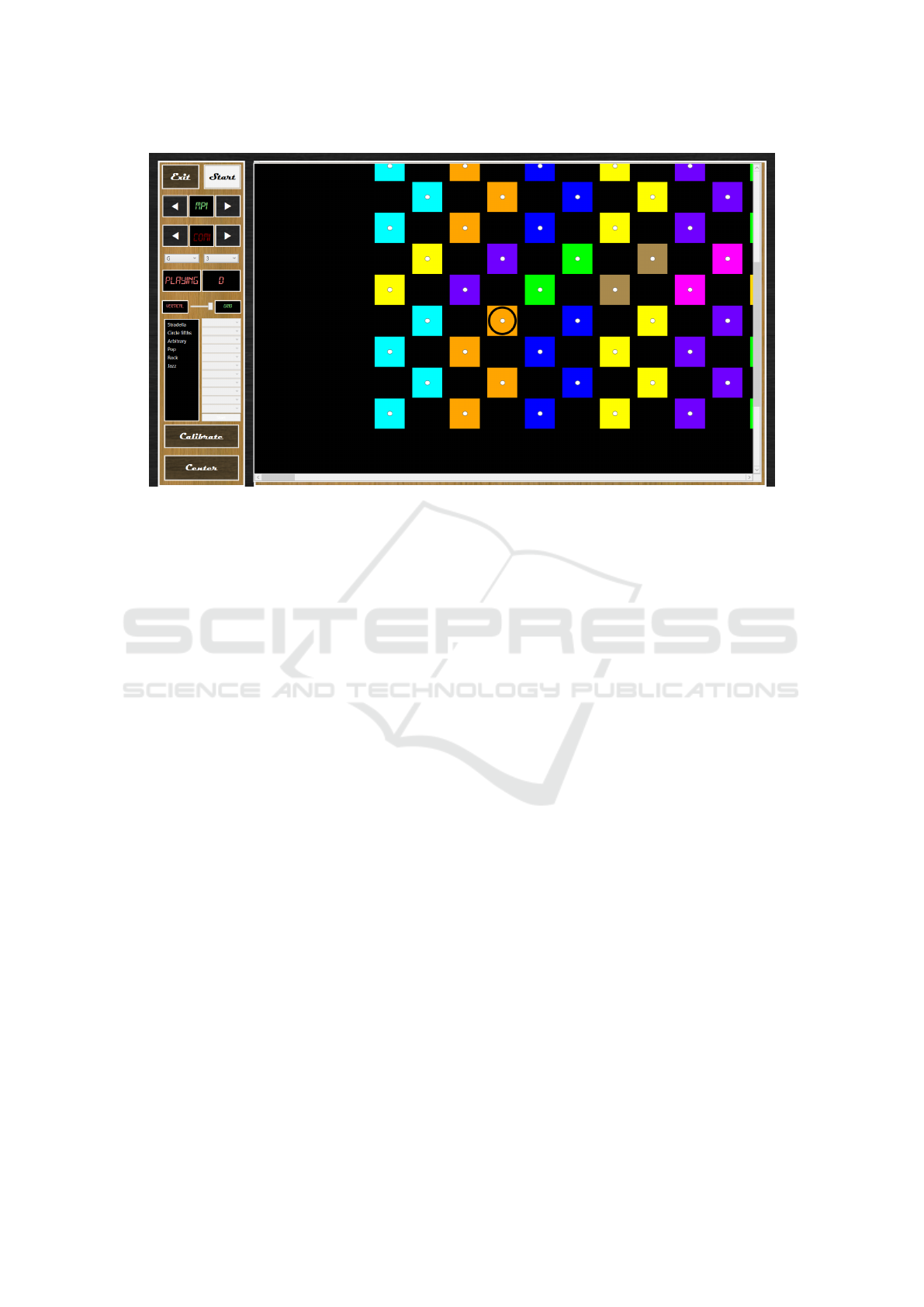

Figure 1: Netychords interface. The left panel contains options such as layout selection and customization, and sensor calibration and customization. The layout of the virtual keyboard is drawn on the right.

2 RELATED WORKS

The aforementioned work (Davanzo and Avanzini, 2020c) analyzes 15 musical instruments operable by people with quadriplegic disability. Only 6 of these seem to allow the performance of chords, with various degrees of versatility. (Frid, 2019) proposes an analysis of general accessible musical interfaces in the state of the art, dividing them into 10 categories. Of the 83 instruments analyzed, only 2 fall in the gaze-based category, suggesting that this interaction channel is still underexploited. One further survey (Larsen et al., 2016) lists 16 musical applications (performance instruments, sequencers, video games and more) which can be operated by people with different types of disabilities.

Head tracking and eye tracking are well established as interaction channels for accessible applications. Their use in selection tasks has recently been evaluated and compared through Fitts’ Law tests (Davanzo and Avanzini, 2020b), showing that gaze pointing is particularly fast and fairly stable, especially if the eye tracker data stream is filtered, while head movement is slightly slower but has excellent stability. However, it has also been shown that there is a physiological upper limit to the number of saccadic eye movements that can be performed per second (Hornof, 2014), which potentially hinders the performance of fast sequences. This limit is potentially more important if gaze is used for note selection while performing melodic lines, whereas gaze could be sufficiently fast for chord changes. While precise eye tracking requires a dedicated peripheral, head tracking has also been exploited for accessibility purposes (e.g. to navigate tablets) through integrated cameras (Roig-Maimó et al., 2016). However, for Netychords we preferred to use a wearable peripheral in order to improve tracking precision. Head tracking has also been used to control and direct wheelchairs: a review on the topic is proposed by (Leaman and La, 2017). The physiological characteristics of head motion have also been extensively studied (Sarig-Bahat, 2003).

As already stated, some ADMIs dedicated to quadriplegic people already allow chords to be played. The Tongue-Controlled Electro-Musical Instrument (Niikawa, 2004) consists of a PET board mounted on the palate. Using the tongue it is possible to press one of the buttons, arranged in a cross shape, to play the corresponding chord. Eye Play The Piano is a gaze-based interface which allows the user to control a real piano through actuators placed on the keyboard. Although the instrument is not described in any scientific publication, the material available on the official website (Fove Inc., n.d.) shows that it is possible to customize the interface to play chords. Jamboxx (Jamboxx, n.d.) is an ADMI bearing similarities to a digital harmonica: a cursor moved using the mouth along a continuous horizontal axis is mapped to pitch selection. Although, as with the previous one, no scientific publication on the instrument is available, it seems that it is possible to play chords. The EyeHarp (Vamvakousis and Ramirez, 2016) is controlled entirely by gaze. Keys are arranged in a pie shape. In the described prototype it is possible to build arpeggios through a sequencer layer, then use some of the circularly arranged keys to trigger chord changes. Those will be played continuously in the background following the defined arpeggio pattern at a fixed tempo. P300 Harmonies (Vamvakousis and Ramirez, 2014) is an electroencephalogram-based interface that allows arpeggios to be generated and edited live in a simplified way, by editing a 6-note loop.

Only two ADMIs found in the literature are controlled through head tracking. In Hi Note (Matossian and Gehlhaar, 2015), head movements are tracked to move a cursor over a virtual keyboard with a special layout. Breath is used to control note dynamics. It is unclear whether chord performance is possible. Clarion (Open Up Music, n.d.) is an ADMI whose layout can be customized according to the musical piece to be played, drawing colored keys of various sizes. It can be controlled using a touch screen, gaze point or head tracking, depending on the setup. In both Clarion and Hi Note, head movement is used to control a cursor and is mapped to note selection.

Netychords is somewhat related to a guitar: in both instruments, note selection and strumming are controlled by two different channels (the two hands in the guitar, gaze and head movement in Netychords). Some guitar-inspired ADMIs, in the form of augmented instruments or novel interfaces, are already present in the literature, though they are not operable by a quadriplegic user. The Actuated Guitar (Larsen et al., 2013; Larsen et al., 2014), for example, consists of a guitar adapted for people with hemiplegic paralysis. The able hand is placed on the neck, while a pedal controls an actuator capable of plucking the strings. Strummi (Harrison et al., 2019) is an instrument relatively similar to a digital guitar, which allows the performance of chords and is designed for partial motor disabilities.

3 NETYCHORDS IMPLEMENTATION

The Netychords interface is depicted in Fig. 1. The idea for its implementation stems from a general lack in the literature of polyphonic instruments (i.e., able to play chords) dedicated to users with severe motor disabilities. As an example, the aforementioned EyeHarp (Vamvakousis and Ramirez, 2016) allows for the performance of chords only in diatonic logic and using predefined rhythmic patterns. Netychords shares with Netytar the use of the gaze point to perform note selection. However, while Netytar exploits breath to control note dynamics, Netychords exploits head movement to perform strumming events, each of which triggers a group of notes at the same time. The instrument is operable through low-cost sensors. It has been developed using a Tobii 4C eye tracker, which features a 90 Hz image sampling rate through near-infrared illuminators (NIR 850 nm)¹. We built an ad-hoc head tracker using an MPU-6050/GY-521 accelerometer/gyroscope and an Arduino Uno microcontroller, both mounted on a headphone set, interfaced via USB port and featuring a ∼100 Hz sampling rate. The device is depicted in Fig. 2. The Netychords code is available² under the open-source GNU GPL v3 license. A demo video of the instrument is linked in the GitHub Readme.

Figure 2: Netytar running on a laptop, equipped with Tobii 4C and the ad-hoc built head tracker.

3.1 Chord Selection

The gaze point is used to navigate a virtual keyboard whose keys are colored differently to indicate different chords. Each color corresponds to a different root note, while different color shades indicate different chord types. Six different layouts have been implemented in the current iteration, allowing the user to choose the most suitable one for their performance. Each key is assigned a square-shaped gaze-sensitive area (occluder). Given the noisiness of the eye tracker signal, there is a trade-off between selection accuracy and the length of the eye movement required. For this reason, most of the implemented layouts have two display modes with different distances between keys: square grid (Fig. 2, 3, 4 and 5) or slanted (Fig. 1). The reader can use Table 1 as a reference for the chords named below.

¹ Tobii 4C eye tracker: https://help.tobii.com/hc/en-us/articles/213414285-Specifications-for-the-Tobii-Eye-Tracker-4C

² Netychords on GitHub: https://github.com/LIMUNIMI/Netychords/

The implemented layouts are the following:

• Stradella. It is inspired by the Stradella bass system (Balestrieri, 1979), used in some Italian accordions, which arranges the chords using the circle of fifths. While each column of keys corresponds to the same root note, each row corresponds to a different family of chords, as shown in Fig. 3. The original Stradella includes 4 chord families. In Netychords these have been extended to 11: major, minor, dominant 7th, diminished 7th, major 7th, minor 7th, dominant 9th, dominant 11th, suspended 2nd, suspended 4th and half-diminished 7th.

• Simplified Stradella. Since the original Stradella bass system is designed for a fingered keyboard, the distance between the keys could be disadvantageous for gaze-based interaction. In this simplified version, all the chords from Tab. 1 have been grouped into 5 families (major, minor, dominant, diminished and half-diminished). While the circle of fifths rule is maintained for horizontal movements (each key is seven semitones above the previous one), the first chord of each row differs according to the chord family. Taking the first major chord as the fundamental (for example, a C chord), the minor row starts from the VI degree of the major scale (the relative minor, in the example an A chord), the dominant seventh row starts from the V degree (in our example, a G chord), the diminished row follows the same arrangement as the major one, and the half-diminished row starts from the VII degree (in the example, a B chord). Fig. 4 shows how, with this arrangement, chords belonging to a harmonized diatonic scale are kept close together, resulting in less eye movement required to play musical pieces in a single key. Diatonic scale harmonization is summarized in Tab. 2. By removing rows from this layout, three simplified genre-specific presets have been obtained, potentially useful for playing pop, rock and jazz music. In the jazz preset, for example, the major and minor chords are replaced with major 6th and minor 6th chords, following the indications of jazz pianist Pino Jodice (Jodice, 2017), thus keeping close the degrees listed in Tab. 2 (right half). The rock preset instead contains only major, minor (thus keeping close the degrees described in the left half of Tab. 2), dominant 7th, suspended 2nd and suspended 4th chords. The user is also able to create a new custom layout by selecting which chord rows to include, and in which order.

• Flowerpot. This layout has a completely different structure from the previous ones. The keyboard is divided into groups of 5 keys arranged in a cross, called flowers. A major flower is red; the middle key is mapped to a natural major chord, while the other keys are mapped to dominant 7th, major 7th, major 6th and suspended 4th chords. A minor flower is blue; the central key is mapped to a natural minor chord, while the other keys are mapped to minor 7th, minor 6th, diminished 7th and half-diminished chords. Flowers are placed next to each other, obtaining a square grid tessellation without empty spaces. After selecting the tonal center, the central flower will correspond to the I degree of the harmonized diatonic major scale, while the adjacent flowers, arranged in a circle, will cover the other degrees (according to the scheme shown in Fig. 5). This layout could therefore be practical for playing songs without key changes.

Table 1: Intervals describing each type of chord present in Netychords. Colors reflect the chord family subdivision implemented in the Simplified Stradella layout: major (red); minor (blue); dominant (green); diminished (orange); half-diminished (gray).

| Suffix | Chord name | Intervals (degrees) | Sample C-chord |
|---|---|---|---|
| - | Major | 1, 3, 5 | C-E-G |
| min | Minor | 1, ♭3, 5 | C-E♭-G |
| maj6 | Major 6th | 1, 3, 5, 6 | C-E-G-A |
| min6 | Minor 6th | 1, ♭3, 5, 6 | C-E♭-G-A |
| maj7 | Major 7th | 1, 3, 5, 7 | C-E-G-B |
| min7 | Minor 7th | 1, ♭3, 5, ♭7 | C-E♭-G-B♭ |
| 7 | Dominant 7th | 1, 3, 5, ♭7 | C-E-G-B♭ |
| o7 | Diminished 7th | 1, ♭3, ♭5, ♭♭7 | C-E♭-G♭-A |
| ø7 | Half-dimin. 7th | 1, ♭3, ♭5, ♭7 | C-E♭-G♭-B♭ |
| sus2 | Suspended 2nd | 1, 2, 5 | C-D-G |
| sus4 | Suspended 4th | 1, 4, 5 | C-F-G |
| 9 | Dominant 9th | 1, 3, 5, ♭7, 9 | C-E-G-B♭-D |
| 11 | Dominant 11th | 1, 3, 5, ♭7, 9, 11 | C-E-G-B♭-D-F |

Table 2: Harmonized diatonic major scale pattern used in Netychords. On the left, harmonization with 3 notes per chord; on the right, harmonization with 4 or more notes per chord. Degrees are provided using jazz notation.

| Degree (3 notes / chord) | Example | Degree (4+ notes / chord) | Example |
|---|---|---|---|
| I | C | I | Cmaj7 |
| ii | Dmin | ii | Dmin7 |
| iii | Emin | iii | Emin7 |
| IV | F | IV | Fmaj7 |
| V | G | V7 | G7 |
| vi | Amin | vi | Amin7 |
| vii | Bmin | viiø7 | Bø7 |
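To make the interval notation of Tab. 1 and the row arrangement of the Simplified Stradella layout more concrete, the following Python sketch converts the chord degrees into semitone offsets and generates the root notes of one row by ascending fifths. It is only an illustration under stated assumptions: the names `CHORD_INTERVALS`, `ROW_START`, `chord_notes` and `stradella_row` are hypothetical and are not taken from the Netychords code base, and sharps are used for all accidentals for simplicity.

```python
# Semitone offsets for the chord types of Tab. 1 (scale degrees converted to semitones).
CHORD_INTERVALS = {
    "maj":  [0, 4, 7],
    "min":  [0, 3, 7],
    "maj6": [0, 4, 7, 9],
    "min6": [0, 3, 7, 9],
    "maj7": [0, 4, 7, 11],
    "min7": [0, 3, 7, 10],
    "7":    [0, 4, 7, 10],
    "o7":   [0, 3, 6, 9],
    "ø7":   [0, 3, 6, 10],
    "sus2": [0, 2, 7],
    "sus4": [0, 5, 7],
    "9":    [0, 4, 7, 10, 14],
    "11":   [0, 4, 7, 10, 14, 17],
}

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# First root of each Simplified Stradella row, in semitones above the tonal
# center: I for major and diminished, VI for minor, V for dominant,
# VII for half-diminished (as described in the text).
ROW_START = {
    "major": 0,
    "minor": 9,
    "dominant": 7,
    "diminished": 0,
    "half-diminished": 11,
}


def chord_notes(root_midi, chord_type):
    """Return the MIDI note numbers of a chord built on root_midi."""
    return [root_midi + offset for offset in CHORD_INTERVALS[chord_type]]


def stradella_row(tonic_pitch_class, family, n_keys):
    """Return the root names of one row: each key is a fifth above the previous one."""
    start = (tonic_pitch_class + ROW_START[family]) % 12
    return [NOTE_NAMES[(start + 7 * k) % 12] for k in range(n_keys)]
```

For instance, `chord_notes(60, "min7")` gives the notes C-E♭-G-B♭ starting from middle C, and `stradella_row(0, "minor", 3)` gives the roots A, E and B, i.e. the vi, iii and vii degrees of C major placed next to each other.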


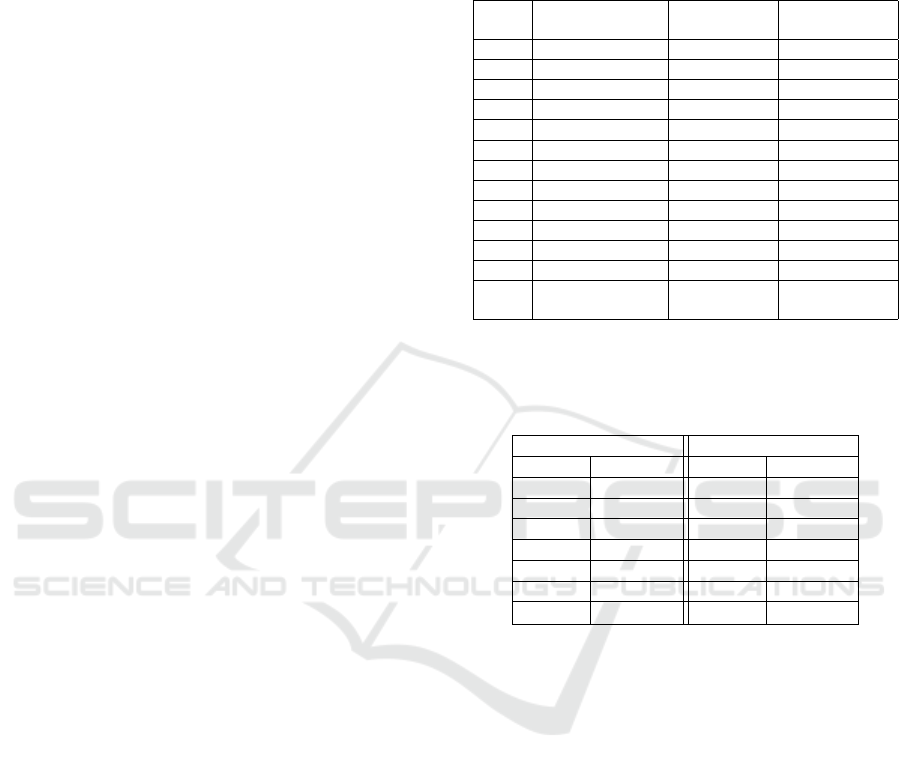

Figure 3: A four-row, six-chord detail of the Stradella layout implementation. Chord labels are not visible while playing.

Figure 4: A four-row, six-chord detail of the Simplified Stradella layout implementation. Keys belonging to the diatonic harmonization of the B major scale (labels indicate the various degrees) are enclosed in the red square. The labels are not visible while playing.

Figure 5: Current implementation of the Flowerpot layout with C as root note. Labels are not visible while playing.

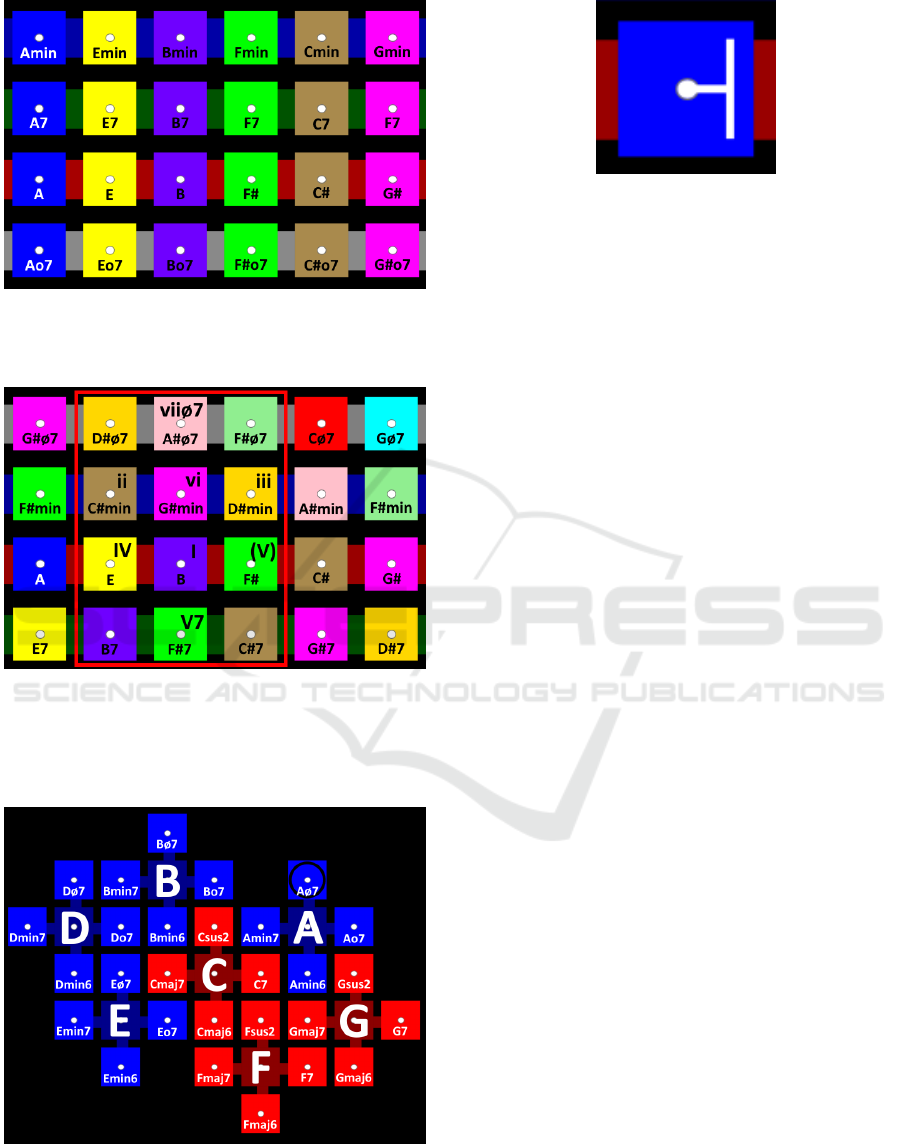

Figure 6: Head position feedback handle (in white).

For most of the layouts, the keys cannot all be shown within the application window, because of the size of the screen and because keys that are too small would be difficult to select using gaze pointing. Netychords therefore implements the same autoscrolling system as Netytar (Davanzo et al., 2018), which smoothly moves the fixated key to the center, taking advantage of the “smooth pursuit” capabilities of the eyes (Majaranta and Bulling, 2014). Octave choice is based on “reed” selectors (recalling again the Stradella accordion), to reduce the number of keys drawn on the screen.

3.2 Strumming

Chord strumming (translated into MIDI note on/off events and velocities) is controlled through head tracking. Here we discuss the strumming modality implemented for Netychords, which to our knowledge has not been previously proposed in the literature on digital musical instruments.

Head rotations on the horizontal axis (yaw) are tracked. A strum occurs when a change in rotation direction is detected. A MIDI velocity value (which in turn determines the resulting sound intensity) is generated in proportion to the angle of the head with respect to a center position (calibrated before playing), where the proportionality factor is adjustable through a slider. In order to actually trigger a new strum (i.e., to generate a MIDI note on event), it is necessary to pass through a central zone called the deadzone, defined around 0°. The deadzone has an adjustable size, and changes in direction within it are not detected.
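The strumming logic described above can be sketched in Python as follows. This is one possible reading of the description and not the actual Netychords implementation: the class name `StrumDetector`, the default deadzone size and the gain value are assumptions made for illustration. A strum fires when the yaw direction reverses outside the deadzone, its velocity is proportional to the angle at the turning point, and a further strum is only allowed after the head has passed through the center again.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StrumDetector:
    deadzone_deg: float = 5.0   # half-width of the deadzone (assumed default)
    gain: float = 4.0           # MIDI velocity units per degree (assumed default)
    _prev_yaw: Optional[float] = None
    _direction: int = 0         # +1 or -1 once the head has started moving
    _armed: bool = True         # True when a new strum is allowed

    def update(self, yaw_deg: float) -> Optional[int]:
        """Feed one yaw sample (degrees from the calibrated center).

        Returns a MIDI velocity (1-127) when a strum is detected, else None.
        """
        prev = self._prev_yaw
        self._prev_yaw = yaw_deg
        if prev is None:
            return None
        # Re-arm when the head is inside the deadzone or has crossed the center.
        if abs(yaw_deg) <= self.deadzone_deg or prev * yaw_deg < 0:
            self._armed = True
        delta = yaw_deg - prev
        direction = (delta > 0) - (delta < 0)
        if direction == 0:
            return None
        reversed_direction = self._direction != 0 and direction != self._direction
        self._direction = direction
        # Ignore reversals inside the deadzone or before passing through the center.
        if not reversed_direction or abs(prev) <= self.deadzone_deg or not self._armed:
            return None
        self._armed = False
        return max(1, min(127, round(self.gain * abs(prev))))
```

Feeding the detector the yaw sequence 0, 10, 20, 15 returns a velocity of 80 on the last sample, because the head reversed at 20° and the assumed gain is 4 velocity units per degree. Small back-and-forth jitters on one side of the center do not retrigger the chord until the head has returned through the deadzone.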

Visual feedback of head rotation is given directly on the key that is being fixated, through a white handle whose width corresponds to the head rotation angle with respect to the center, which is indicated by a white dot, as depicted in Fig. 6.

A known problem to face when designing gaze-

based interfaces is that of Midas Touch (Majaranta

and Bulling, 2014), namely the involuntary activa-

tion of interface elements (e.g. keys) when these are

crossed by gaze trace. In previous works, different

gaze controlled instruments addressed the problem in

CHIRA 2021 - 5th International Conference on Computer-Human Interaction Research and Applications

206

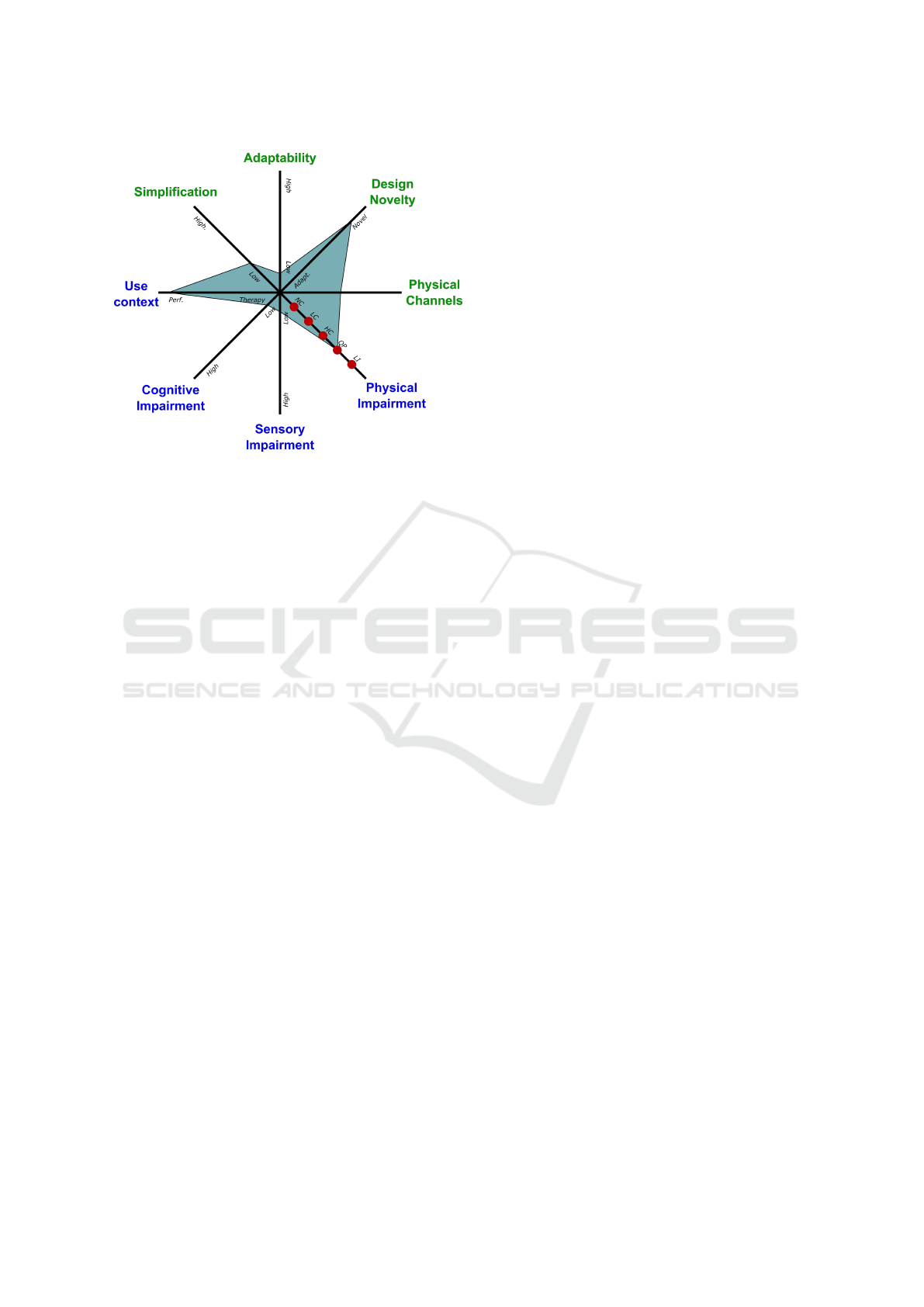

Figure 7: The main design aspects of Netychords, visual-

ized on a dimension space for the evaluation of ADMIs (Da-

vanzo and Avanzini, 2020a).

different ways. In Netychords the Midas Touch is

to an extent solved by design, as notes are triggered

by strumming and head movements, rather than fixa-

tions. Therefore, notes are let ring until a new strum

action is detected regardless of gaze moving to other

keys. In addition, neutral areas between occluders

are also placed. To trigger chord stops (pauses), eye

blinking is exploited. By closing both eyes for a suffi-

ciently long period (corresponding to 4-5 samples of

the eye tracker data stream, adjustable) the sound is

stopped.
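The blink-based chord stop can be illustrated with a short Python sketch that counts consecutive eye tracker samples in which the eyes are not detected. The class name `BlinkStopDetector` and the boolean `eyes_detected` input are assumptions for illustration and do not reflect the eye tracker API or the Netychords source. If the gaze stream runs at the 90 Hz rate mentioned in Sec. 3, 4-5 samples correspond to roughly 44-56 ms.

```python
class BlinkStopDetector:
    """Signals a chord stop after a run of consecutive 'eyes closed' samples."""

    def __init__(self, min_closed_samples: int = 5):
        self.min_closed_samples = min_closed_samples  # adjustable threshold
        self._closed_run = 0    # consecutive samples without eye detection
        self._fired = False     # avoids repeating the stop during one blink

    def update(self, eyes_detected: bool) -> bool:
        """Feed one sample; return True exactly once per sufficiently long blink."""
        if eyes_detected:
            self._closed_run = 0
            self._fired = False
            return False
        self._closed_run += 1
        if self._closed_run >= self.min_closed_samples and not self._fired:
            self._fired = True
            return True
        return False
```

Requiring several consecutive samples filters out single dropped frames and very short natural blinks, at the cost of a small delay before the sound stops.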

4 DISCUSSION

In this section we discuss some notable properties of the instrument design, as well as the rationale behind the outlined design choices. (Davanzo and Avanzini, 2020a) proposed an 8-axis “dimension space”, inspired by (Birnbaum et al., 2005), for the evaluation of the general characteristics of ADMIs. This dimension space can be useful for summarizing and visualizing Netychords features.

The Simplification axis indicates the degree of simplification introduced, through specific aids, to enable less trained musicians to play (or to make up for some deficits). In Netychords, no layout allows the selection of the single notes in a chord, unlike most polyphonic acoustic instruments. This however translates into a potential gain in ease of use and could be considered an aid. Apart from this, no other aids have been introduced. Playing aids such as temporal quantization of strumming could be introduced to facilitate the learning process.

Hence, the Use context is more oriented towards performance. According to (McPherson et al., 2019), there is a clear distinction between performance interfaces, dedicated to skilled users, and musical interfaces dedicated to music therapy, usually simplified to be usable without prior music training. Netychords is intended to belong to the performance category, as it does not offer particular performance aids. It is a complex instrument that requires training to be mastered.

The Design Novelty axis indicates whether an ADMI resembles the design of a traditional musical instrument or departs from such traditional designs. Although Netychords differs from any acoustic instrument, some similarities can be found with accordions, with particular regard to the octave management system, the reed system and the Stradella layout.

The usability and the expressivity of an instrument are largely affected by the number of Physical Channels that the user can employ in the interaction. Netychords uses two channels, namely head movement and gaze pointing. This choice is the result of a trade-off between expressivity (as an example, no continuous control of the emitted chord is possible after it has been triggered) and usability (with particular regard to the Midas Touch problem discussed earlier). Although the two interaction channels are largely independent of each other, the eye tracker can only tolerate a certain degree of head rotation. We found that a head rotation angle of about 30 degrees is sufficient to strum at different intensities without compromising gaze detection.

The dimension space clusters categories of impairments into three main axes (physical, cognitive, and sensory or perceptual) and classifies target user groups along these three categories, also considering that physical, sensory, and cognitive impairments are often intertwined due to the multidimensional character of disability. Although Netychords is potentially usable by a quadriplegic user, the head movement required makes it incompatible with the maximum degree of the discrete Physical Impairment scale (LI, or Locked-In syndrome, characterized by the ability to move only the eyes). It falls instead into the QP (Quadriplegic Paralysis) level.

Future iterations of the instrument may however include a choice of new input methods. Blink-based strumming could be tested for users who have difficulty rotating the head. This could improve the currently low Adaptability of the instrument, namely the possibility to adapt to the needs of the individual user. Sensory and Cognitive Impairments are instead not addressed by Netychords.

5 CONCLUSIONS

We have presented the first iteration in the design and implementation of a polyphonic ADMI, Netychords. Future work will mainly focus on evaluation with target users, in order to guide subsequent iterations of the instrument design.

One primary element of evaluation concerns an in-depth study of new key layouts and a comparative study of existing ones. Representing a large number of chord families on the screen requires a great number of keys. We plan to experiment with separating root note selection and chord type selection among different interaction channels. Different head rotation axes (or different angles) could for example correspond to different chord types (e.g. major, minor).

The proposed strumming modality also needs thorough evaluation, and may be further extended to allow for more expressive interaction. In the current prototype, all the notes of a chord are played at the same time while strumming. A method for sequential note strumming (arpeggio) could be implemented, in a similar fashion to guitar strings, by subdividing the head rotation interval. Strumming can be suitable for simulating plucked instruments or piano. Continuous intensity detection, suitable for playing strings, could also be implemented, for example by evaluating head rotation velocity for each sample.
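As a purely illustrative sketch of the sequential strumming idea, the Python code below divides the head rotation interval into as many equal zones as there are chord notes and emits a note whenever the yaw angle enters a new zone. Nothing here is part of the current prototype: the function names `string_index` and `arpeggiate` are hypothetical, and the default 30° range is borrowed from the rotation angle reported in Sec. 4.

```python
def string_index(yaw_deg, max_angle_deg, n_notes):
    """Map a yaw angle in [-max_angle_deg, +max_angle_deg] to one of n_notes equal zones."""
    clamped = max(-max_angle_deg, min(max_angle_deg, yaw_deg))
    fraction = (clamped + max_angle_deg) / (2 * max_angle_deg)
    return min(n_notes - 1, int(fraction * n_notes))


def arpeggiate(yaw_samples, chord, max_angle_deg=30.0):
    """Return the chord notes triggered by a sequence of yaw samples.

    A note is emitted for every zone the head enters, including zones
    skipped between two consecutive samples during a fast rotation.
    """
    notes = []
    previous = None
    for yaw in yaw_samples:
        index = string_index(yaw, max_angle_deg, len(chord))
        if previous is not None and index != previous:
            step = 1 if index > previous else -1
            for i in range(previous + step, index + step, step):
                notes.append(chord[i])
        previous = index
    return notes
```

With a four-note chord, a sweep from one side to the other plays the notes in ascending order and the return sweep plays them in descending order, much like an up-stroke and a down-stroke on guitar strings.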

Evaluating a musical instrument from an objective point of view is a complex task. (O’Modhrain, 2011) proposes a framework for the evaluation of Digital Musical Instruments from the perspective of the various stakeholders (performer/composer, designer, manufacturer and audience), consisting of a set of parameters to be evaluated. (Vamvakousis and Ramirez, 2016), for example, applied this framework to the evaluation of The EyeHarp from the point of view of the audience, through questionnaires submitted to the attendees of a concert. As O’Modhrain highlights, being able to trace a link between the performer’s motion and the perceived sound is a very important element for the audience to appreciate a live performance, and this is a concern for gaze-based interfaces, since eye movements are very subtle. Head rotation in Netychords could be a way to convey the expressive intention.

Interaction in both Netytar (Davanzo et al., 2018) and The EyeHarp (Vamvakousis and Ramirez, 2016) has been evaluated quantitatively, also in terms of precision and accuracy, through the recording of simple musical exercises in order to measure timing errors and the number of wrong notes. We feel however that a comparative evaluation between Netychords and another instrument could be difficult to carry out, since the instruments listed in Sec. 2 each offer a different degree of control over chord performance.

The COVID-19 pandemic has so far prevented testing Netychords with target users. We thus conclude by discussing a test procedure for Netychords that we intend to carry out in the future. It is similar to the one used for Netytar’s evaluation (leaving aside the comparison phase). A sample of at least 25-30 individuals, possibly with musical experience, will be recruited. A training phase of at least 20 minutes will provide a minimum of familiarity with the instrument and the proposed interaction methods. A practical test will involve the performance of musical exercises (or songs); the performance will be recorded as a MIDI track and analyzed later. We intend to measure elements such as error rates, strumming and chord change speed, as well as the flexibility of the various layouts in allowing chord changes between distant keys. A qualitative test will include a questionnaire with general questions on the usability of the system, assessing elements such as perceived fatigue, degree of naturalness and simplicity of interaction, and interface clarity. Case studies should also be carried out with musicians having physical (quadriplegic) disabilities.

Another session could be devoted solely to testing the proposed head-based interaction method. While movement precision and stability have already been discussed in other experiments (Davanzo and Avanzini, 2020b), it would be useful to measure, through recorded exercises, the head’s rhythmic capabilities, namely the relationship between precision and speed/frequency of head strums.

REFERENCES

Balestrieri, D. (1979). Accordions Worldwide. Accord

Magazine, USA.

Birnbaum, D., Fiebrink, R., Malloch, J., and Wanderley,

M. M. (2005). Towards a Dimension Space for Musi-

cal Devices. In Proc. Int. Conf. on New Interfaces for

Musical Expression (NIME’05), pages 192–195, Van-

couver, BC, Canada.

Costa-Giomi, E. (2004). Effects of Three Years of Pi-

ano Instruction on Children’s Academic Achievement,

School Performance and Self-Esteem. Psychology of

Music, 32(2):139–152.

Davanzo, N. and Avanzini, F. (2020a). A Dimension Space

for the Evaluation of Accessible Digital Musical In-

struments. In Proc. 20th Int. Conf. on New Interfaces

for Musical Expression (NIME ’20), NIME ’20.

CHIRA 2021 - 5th International Conference on Computer-Human Interaction Research and Applications

208

Davanzo, N. and Avanzini, F. (2020b). Experimental Eval-

uation of Three Interaction Channels for Accessible

Digital Musical Instruments. In Proc. ’20 Int. Conf. on

Computers Helping People With Special Needs, pages

437–445, Online Conf. Springer, Cham.

Davanzo, N. and Avanzini, F. (2020c). Hands-Free Acces-

sible Digital Musical Instruments: Conceptual Frame-

work, Challenges, and Perspectives. IEEE Access,

8:163975–163995.

Davanzo, N. and Avanzini, F. (2020d). A Method for

Learning Netytar: An Accessible Digital Musical In-

strument:. In Proceedings of the 12th International

Conference on Computer Supported Education, pages

620–628, Prague, Czech Republic. SCITEPRESS -

Science and Technology Publications.

Davanzo, N., Dondi, P., Mosconi, M., and Porta, M. (2018).

Playing music with the eyes through an isomorphic

interface. In Proc. of the Workshop on Communica-

tion by Gaze Interaction, pages 1–5, Warsaw, Poland.

ACM Press.

Fove Inc. (n.d.). Eye Play the Piano.

http://eyeplaythepiano.com/en/.

Frid, E. (2019). Accessible Digital Musical Instruments—A

Review of Musical Interfaces in Inclusive Music

Practice. Multimodal Technologies and Interaction,

3(3):57.

Harrison, J., Chamberlain, A., and McPherson, A. P. (2019).

Accessible Instruments in the Wild: Engaging with

a Community of Learning-Disabled Musicians. In

Extended Abstracts of the 2019 CHI Conference on

Human Factors in Computing Systems, CHI EA ’19,

pages 1–6, New York, NY, USA. Association for

Computing Machinery.

Hornof, A. J. (2014). The Prospects For Eye-Controlled

Musical Performance. In Proc. 14th Int. Conf. on New

Interfaces for Musical Expression (NIME’14), NIME

2014, Goldsmiths, University of London, UK.

Jamboxx (n.d.). Jamboxx. https://www.jamboxx.com/.

Jaschke, A. C., Eggermont, L. H., Honing, H., and Scherder, E. J. (2013). Music education and its effect on intellectual abilities in children: A systematic review. Reviews in the Neurosciences, 24(6):665–675.

Jodice, P. (2017). Composizione, Arrangiamento e Orchestrazione Jazz. Dalla Formazione Combo Alla Big Band - Volume I, volume 1 of Manuali e Saggi Di Musica. Morlacchi Editore.

Larsen, J. V., Overholt, D., and Moeslund, T. B. (2013). The Actuated Guitar: A platform enabling alternative interaction methods. In Proceedings of the Sound and Music Computing Conference 2013 (SMC 2013), pages 235–238. Logos Verlag Berlin.

Larsen, J. V., Overholt, D., and Moeslund, T. B. (2014). The Actuated Guitar: Implementation and User Test on Children with Hemiplegia. In Proceedings of the International Conference on New Interfaces for Musical Expression, pages 60–65. Goldsmiths, University of London.

Larsen, J. V., Overholt, D., and Moeslund, T. B. (2016). The Prospects of Musical Instruments For People with Physical Disabilities. In Proc. 16th Int. Conf. on New Interfaces for Musical Expression, NIME ’16, pages 327–331, Griffith University, Brisbane, Australia. NIME.

Leaman, J. and La, H. M. (2017). A Comprehensive Review of Smart Wheelchairs: Past, Present, and Future. IEEE Transactions on Human-Machine Systems, 47(4):486–499.

Majaranta, P. and Bulling, A. (2014). Eye Tracking and Eye-Based Human–Computer Interaction. In Fairclough, S. H. and Gilleade, K., editors, Advances in Physiological Computing, Human–Computer Interaction Series, pages 39–65. Springer, London.

Matossian, V. and Gehlhaar, R. (2015). Human Instruments: Accessible Musical Instruments for People with Varied Physical Ability. Annual Review of Cybertherapy and Telemedicine, 219:202–207.

McPherson, A., Morreale, F., and Harrison, J. (2019). Musical Instruments for Novices: Comparing NIME, HCI and Crowdfunding Approaches. In Holland, S., Mudd, T., Wilkie-McKenna, K., McPherson, A., and Wanderley, M. M., editors, New Directions in Music and Human-Computer Interaction, pages 179–212. Springer International Publishing, Cham.

Niikawa, T. (2004). Tongue-Controlled Electro-Musical Instrument. In Proc. 18th Int. Congr. on Acoustics, volume 3, pages 1905–1908, International Conference Hall, Kyoto, Japan. Acoustical Society.

O’Modhrain, S. (2011). A Framework for the Evaluation of Digital Musical Instruments. Computer Music Journal, 35(1):28–42.

Open Up Music (n.d.). Clarion.

https://www.openupmusic.org/clarion.

Roig-Maimó, M. F., Manresa-Yee, C., Varona, J., and MacKenzie, I. S. (2016). Evaluation of a Mobile Head-Tracker Interface for Accessibility. In Miesenberger, K., Bühler, C., and Penaz, P., editors, Computers Helping People with Special Needs, Lecture Notes in Computer Science, pages 449–456, Cham. Springer International Publishing.

Sarig-Bahat, H. (2003). Evidence for exercise therapy in mechanical neck disorders. Manual Therapy, 8(1):10–20.

Stensæth, K. (2013). “Musical co-creation”? Exploring health-promoting potentials on the use of musical and interactive tangibles for families with children with disabilities. International Journal of Qualitative Studies on Health and Well-being, 8(1).

United Nations (2015). Universal Declaration of Human Rights. https://www.un.org/en/universal-declaration-human-rights/index.html.

Vamvakousis, Z. and Ramirez, R. (2014). P300 Harmonies: A Brain-Computer Musical Interface. In Proc. 2014 Int. Computer Music Conf./Sound and Music Computing Conf., pages 725–729, Athens, Greece. Michigan Publishing.

Vamvakousis, Z. and Ramirez, R. (2016). The EyeHarp: A Gaze-Controlled Digital Musical Instrument. Frontiers in Psychology, 7.

World Health Organization (2013). Spinal cord injury report. Technical report, World Health Organization.
