A Comparative Study of Ego-centric and Cooperative Perception for Lane Change Prediction in Highway Driving Scenarios

Sajjad Mozaffari¹ᵃ, Eduardo Arnold¹ᵇ, Mehrdad Dianati¹ᶜ and Saber Fallah²ᵈ

¹ Warwick Manufacturing Group, University of Warwick, Coventry CV4 7AL, U.K.

² Department of Mechanical Engineering Sciences, University of Surrey, Guildford, GU2 7XH, U.K.

Keywords: Lane Change Prediction, Perception Models, Vehicle Behaviour Prediction, Intelligent Vehicles.

Abstract: Prediction of the manoeuvres of other vehicles can significantly improve the safety of automated driving systems. A manoeuvre prediction algorithm estimates the likelihood of a vehicle's next manoeuvre using the motion history of the vehicle and its surrounding traffic. Several existing studies assume full observability of the surrounding traffic by utilising trajectory datasets collected by top-down view infrastructure cameras. However, in practice, automated vehicles observe the driving environment using egocentric perception sensors (e.g., onboard lidar or camera), which have a limited sensing range and are subject to occlusions. This study first analyses the impact of these limitations on the performance of lane change prediction. To overcome these limitations, automated vehicles can cooperate in observing the environment by sharing their perception data through V2V communication. While cooperation among vehicles is intuitively expected to improve the environment perception of individual vehicles, the second contribution of this work is to quantify the potential impact of such cooperation. To this end, we propose two perception models used to generate egocentric and cooperative perception dataset variants from a set of uniform scenarios in a benchmark dataset. This study can help system designers weigh the costs and benefits of alternative perception solutions for lane change prediction.

1 INTRODUCTION

Predicting the lane change (LC) manoeuvre of nearby vehicles enables automated vehicles to make proactive decisions and reduce the risk of collisions in highway driving. A vehicle's LC manoeuvre is highly dependent on the behaviour of other vehicles in its vicinity, particularly in congested highway traffic. For example, a slow-moving vehicle motivates its following vehicles to perform an LC manoeuvre, provided there is an available gap in the side lane. Therefore, an Ego Vehicle (EV) needs to observe the states (e.g., location and velocity) of the Target Vehicle (TV), i.e., the vehicle of interest, and its Surrounding Vehicles (SVs) during a time window to predict the next manoeuvre of the TV.

Automated vehicles observe their surrounding us-

ing egocentric perception sensors (e.g., camera and

LiDAR) which have limited range and are subject to

a

https://orcid.org/0000-0001-8109-6953

b

https://orcid.org/0000-0001-7896-7252

c

https://orcid.org/0000-0001-5119-4499

d

https://orcid.org/0000-0002-1298-1040

spatial impairments such as occlusions. The limita-

tions of egocentric perception can prevent tracking

some of the SVs which might negatively impacts the

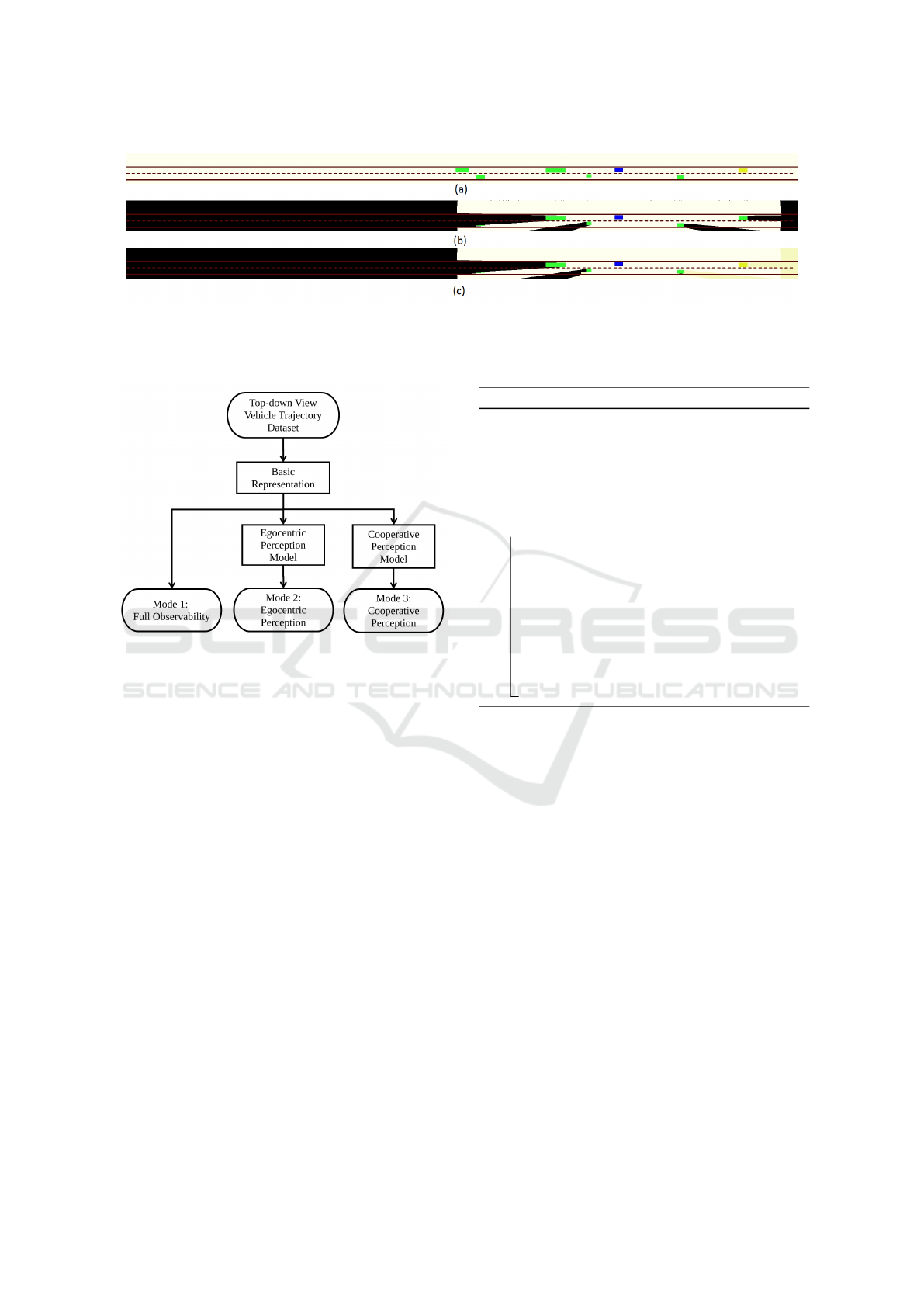

LC prediction performance. Figure 1-a illustrates an

example of a driving scenario where the EV aims to

predict the manoeuvre of a leading TV. The TV is

going to perform an LC manoeuvre shortly due to a

slow-moving vehicle in front of the TV. However, the

EV cannot observe the slow-moving vehicle since the

TV is occluding the EV’s perception sensors. Con-

sequently, the EV cannot predict the upcoming LC

manoeuvre by the TV.

The majority of the existing studies on LC predic-

tion assumes full observability of the driving environ-

ment. In such studies, the prediction model is trained

using LC scenarios extracted from trajectory datasets

such as NGSIM (Colyar and Halkias, 2007; Halkias

and Colyar, 2007) and highD (Krajewski et al., 2018).

In these datasets, the driving environment is being

observed using wide and top-down view cameras in-

stalled on infrastructure buildings or drones. Such

perception assumption is not realistic for all driving

scenarios since the large-scale deployment of infras-

Mozaffari, S., Arnold, E., Dianati, M. and Fallah, S.

A Comparative Study of Ego-centric and Cooperative Perception for Lane Change Prediction in Highway Dr iving Scenarios.

DOI: 10.5220/0010655700003061

In Proceedings of the 2nd International Conference on Robotics, Computer Vision and Intelligent Systems (ROBOVIS 2021), pages 113-121

ISBN: 978-989-758-537-1

Copyright

c

2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

113

Figure 1: An illustration of a driving environment with (a)

egocentric perception, and (b) cooperative perception. Ob-

servable areas are depicted with blue and orange colours for

egocentric sensor and shared cooperative sensors, respec-

tively. Unobservable vehicles are filled with hashed colours.

Following terminology is used in this figure. EV: Ego ve-

hicle, TV: Target vehicle, SVs: Surrounding Vehicles (they

have a considerable impact on TV’s behaviour). NVs: Non-

effective vehicles (their impact on TV’s behaviour can be

neglected). CAVs: Connected Automated Vehicles.

tructure sensors to cover all road sections incurs in

high cost. Analysing the impact of ego-centric per-

ception limitations on the performance of an LC pre-

diction model has remained a research gap in the lit-

erature.

One practical solution to the limitations of egocentric perception is the cooperation of nearby Connected Automated Vehicles (CAVs) in observing the environment through V2V communication. V2V communication has been used to improve the performance of different automated driving applications such as road geometry estimation (Sakr et al., 2017), 3D object detection (Chen et al., 2019), and trajectory planning (Kim et al., 2015). However, sharing perception data through V2V for the task of LC prediction has not been considered in the literature. To investigate the effectiveness of cooperative perception for LC prediction, a large-scale dataset recorded by the perception sensors of multiple vehicles driving simultaneously on a road section is needed to train and evaluate the prediction model. To the best of our knowledge, such a cooperative perception dataset does not currently exist. One way to address this problem is to use synthetic data. However, synthetic datasets generated with simplified driving behaviour models fail to replicate the naturalistic behaviour of human-driven vehicles. The lack of naturalistic trajectory datasets recorded with cooperative perception is a gap in the literature that prevents further research on this topic.

To address the aforementioned research gaps, this paper carries out a comparative study on the impact of two perception modes, namely egocentric perception and cooperative perception, on LC prediction performance. To do so, this paper proposes two perception models used to generate dataset variants with egocentric and cooperative perception from a naturalistic trajectory dataset with full observability (i.e., captured from a wide, top-down view camera). These perception models, which are applicable to any top-down view trajectory dataset, enable both the identification of the impact of partial observability in egocentric perception and a preliminary evaluation of cooperative perception for any vehicle behaviour prediction study. In this paper, we use the generated dataset variants to train and evaluate our baseline LC prediction model with different prediction horizons. We are specifically interested in answering the following research questions:

• What is the impact of limited range and occlusion in egocentric perception on the performance of an LC prediction model for different prediction horizons?

• What is the average gain obtained in LC prediction when using cooperative perception with variable penetration rates of automated vehicles?

2 RELATED WORKS

The vehicle behaviour prediction problem has been extensively studied in the literature (Lefèvre et al., 2014; Mozaffari et al., 2020). In this section, we review the works related to LC prediction in highway driving scenarios and highlight their observability assumptions under two categories: full observability and egocentric perception. We then give an overview of the existing studies on the application of offboard V2V data to vehicle behaviour prediction.

2.1 LC Prediction Assuming Full Observability

Several existing studies assume full observability of the TV's surrounding environment by utilizing naturalistic trajectory datasets collected by wide, top-down view sensors (Yoon and Kum, 2016; Liu et al., 2019; Ding et al., 2019; Hu et al., 2018; Scheel et al., 2019; Rehder et al., 2019a; Gallitz et al., 2020; Mänttäri et al., 2018; Deo and Trivedi, 2018). In (Yoon and Kum, 2016) and (Liu et al., 2019), the states of the TV (e.g., lateral position and velocity) are used to predict the TV's LC manoeuvre. These studies do not consider the interaction between the TV and its surrounding vehicles, which limits both their prediction horizon and their performance. In (Ding et al., 2019; Hu et al., 2018; Scheel et al., 2019; Rehder et al., 2019a; Gallitz et al., 2020), the interaction between the TV and a fixed number of vehicles around it is modelled using fully-connected neural networks (Hu et al., 2018), Bayesian networks (Rehder et al., 2019a), and recurrent neural networks (Ding et al., 2019; Scheel et al., 2019; Gallitz et al., 2020). Instead of considering a constant number of surrounding vehicles, the authors in (Mänttäri et al., 2018) and (Deo and Trivedi, 2018) use convolutional neural networks to model the spatial interaction among vehicles within a certain distance of the TV. In the aforementioned interaction-aware studies, the observability of the TV's nearby vehicles is assumed to be guaranteed, which is not practical given the limited sensor range and potential occlusions of automated vehicles' perception sensors. In this paper, we propose an egocentric perception model, which is used to identify the potential performance loss when moving from a dataset with full observability to an egocentric dataset.

2.2 LC Prediction with Egocentric Perception

Another category of the existing studies makes a more realistic assumption by using datasets collected from egocentric perception sensors. In (Rehder et al., 2019b), the LC behaviour of a TV is predicted using a hybrid Bayesian network applied to interaction-aware features such as time to collision and time headway. The authors collect data using a test vehicle equipped with LiDAR sensors and an object tracking system that provides a list of tracked vehicles within 100 meters of the ego vehicle. However, the prediction range of their model is limited to 50 meters, leaving the additional 50 meters for tracking the TV's surrounding traffic. The authors in (Krüger et al., 2019) apply a neural network to extract relevant patterns from the motion features of the TV and its SVs, followed by a Gaussian process to produce a probabilistic LC prediction. D. Lee et al. (Lee et al., 2017) apply a six-layer convolutional neural network (CNN) to a binary two-channel representation of the driving environment in front of the ego vehicle to predict the left/right cut-in manoeuvres of preceding vehicles. This representation is created using front-facing radar and camera sensors installed on the ego vehicle. The binary representation only covers a limited area in front of the EV and thus fails to consider other areas that might also influence the next manoeuvres of the preceding vehicles. The authors in (Izquierdo et al., 2019a; Fernández-Llorca et al., 2020) train and evaluate a convolutional neural network model to predict the LC manoeuvres of TVs using raw egocentric sensor images from the Prevention dataset (Izquierdo et al., 2019b). To reduce the computation cost, the raw sensor images used in these studies are cropped around a close vicinity of the TV, limiting the observation of the surrounding environment. Although the studies presented in this subsection use egocentric perception datasets, they do not quantify the performance drop caused by the partial observability intrinsic to the egocentric perception mode. This paper evaluates the impact of egocentric perception on the problem of LC prediction. Furthermore, we propose and evaluate a cooperative perception solution to mitigate the limitations of egocentric perception.

2.3 Application of Offboard V2V in Behaviour Prediction

Offboard V2V data (i.e., data from other vehicles) are used to improve the performance of a variety of autonomous driving applications such as road geometry estimation (Sakr et al., 2017), object detection (Chen et al., 2019), and planning (Kim et al., 2015). However, few studies use offboard V2V data for LC detection and prediction. The authors in (Sakr et al., 2018) assume that the TV sends its states (e.g., position and velocity) to the EV using V2V communication; the EV can therefore detect the LC manoeuvres of the TV by observing the transmitted states. N. Williams et al. (Williams et al., 2018) develop an LC warning system that extends the observable field of onboard sensors under the assumption that unobservable vehicles can transmit their states to the EV using V2V communication. We extend these works by assuming that CAVs can share processed perception data, i.e., a list of detected objects, with the EV, specifically for the task of LC prediction.

3 PROBLEM DEFINITION AND SYSTEM MODEL

The problem of LC prediction consists of estimating the probability of the LC manoeuvres of a TV during a prediction window $T_{pred}$, given the available observations of the states of the TV and its SVs during an observation window $T_{obs}$. The manoeuvre can be one of Lane Keeping (LK), Left LC (LLC) or Right LC (RLC). We assume that a time delay $T_{delay}$ separates the observation and prediction windows. The value of $T_{delay}$ controls the prediction horizon: a low value of $T_{delay}$ corresponds to short-term prediction (i.e., predicting the LC of the TV in the near future), and a large value corresponds to long-term prediction, as illustrated in Figure 2. The LC prediction problem can be formulated as estimating the following conditional probability mass function:

$$P(m = \bar{m} \mid \text{Observations}), \tag{1}$$

where

$$\bar{m} \in \mathcal{M} = \{\text{LK}, \text{LLC}, \text{RLC}\}. \tag{2}$$
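To make the timing concrete, here is a minimal sketch that computes the observation window $[t_0 - T_{obs}, t_0]$ and the prediction window that starts $T_{delay}$ seconds later, for a query at time $t_0$. The helper name `prediction_windows` and the use of plain float seconds are illustrative assumptions. The example compares a short-term query with a long-term one.

```python
from typing import NamedTuple, Tuple


class Windows(NamedTuple):
    observation: Tuple[float, float]  # [t0 - T_obs, t0]
    prediction: Tuple[float, float]   # [t0 + T_delay, t0 + T_delay + T_pred]


def prediction_windows(t0: float, t_obs: float, t_delay: float, t_pred: float) -> Windows:
    """Observation and prediction windows (in seconds) for a prediction query at t0.

    Hypothetical helper: the prediction window is assumed to start exactly
    T_delay seconds after the end of the observation window.
    """
    if min(t_obs, t_delay, t_pred) < 0:
        raise ValueError("window lengths and delay must be non-negative")
    start = t0 + t_delay
    return Windows(observation=(t0 - t_obs, t0), prediction=(start, start + t_pred))


# Short-term (T_delay = 0 s) versus long-term (T_delay = 4 s) query at t0 = 10 s,
# with T_obs = T_pred = 1 s as in the implementation details.
short_term = prediction_windows(10.0, 1.0, 0.0, 1.0)
long_term = prediction_windows(10.0, 1.0, 4.0, 1.0)
print(short_term.prediction, long_term.prediction)  # (10.0, 11.0) (14.0, 15.0)
```

Increasing $T_{delay}$ moves the prediction window away from the observations. The observation window itself does not change.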

We assume that the observations in this formulation are obtained using one of the following perception modes:

1. Full Observability: the baseline mode, where the surrounding vehicles are assumed to be fully observable, as seen from top-down view infrastructure sensors.

2. Egocentric Perception: We assume that a sensor with a 360-degree horizontal field of view (e.g., camera or lidar) and an effective range of $R_{sensor}$ meters, installed at the centre of the EV, observes the environment. Note that the sensor range is adjustable in our proposed perception modelling method, depending on the actual range of the deployed sensor.

3. Cooperative Perception: A percentage of the vehicles on the road ($P_{CAVs}$, the CAV penetration rate) is randomly selected as CAVs, which can observe their surrounding environment and share the list of detected vehicles with the EV.

In all the aforementioned perception modes, we assume that:

• Ideal object detection and tracking modules estimate the states (e.g., the (x, y) locations and bounding box sizes) of observable vehicles.

• The same perception sensor model is used in both the egocentric and cooperative perception modes.

• Ideal communication channels are used in the cooperative perception mode.

4 PROPOSED PERCEPTION MODELS

To evaluate the impact of each perception mode, de-

fined in the previous section, on the performance of

LC prediction, it is required to have a dataset cap-

tured from the corresponding perception mode. How-

ever, a public real-world trajectory datasets collected

Figure 2: An illustration of the (a) short-term, (b) long-term

LC manoeuvre prediction.

from multiple vehicles do not currently exist. There-

fore, we propose two perception models to represent

egocentric and cooperative perception modes. The

proposed models are applied to a base vehicle tra-

jectory dataset captured from top-down view sensors

(representing the full-observability mode) to generate

variants of the same underlying driving scenarios cor-

responding to egocentric and cooperative perception,

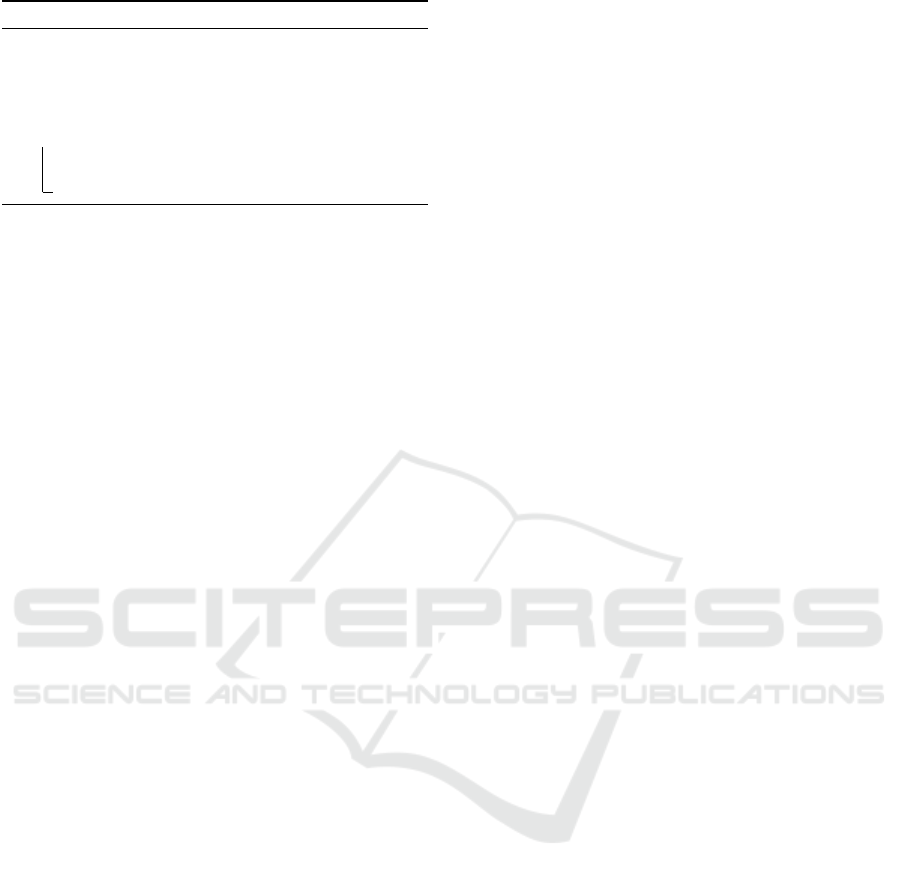

respectively. Figure 4 illustrates the data rendering

processes required for generating each dataset variant

and Figure. 3 demonstrates an example of the repre-

sentation in each dataset variant.

4.1 Basic Representation

Using the selected base vehicle trajectory dataset, we populate a binary three-channel image containing a top-down view of the covered road section at each time-step, denoted as the basic representation $I_{basic}$. The first channel of this representation depicts the 2D bounding boxes of the vehicles within the road section. The map data (e.g., lane markings) are indicated in the second channel. The third channel specifies the observability status of each pixel. This channel is initialised with zeros (i.e., all pixels are considered unobservable at first) and is populated in later stages according to the considered perception model. The data representation of the first perception mode (i.e., full observability) is identical to the basic representation, except that the observability status of all pixels is set to 1 (i.e., observable).
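A minimal sketch of this three-channel representation follows. It assumes that the bounding boxes are axis-aligned and already converted to inclusive pixel ranges, and that lane markings run along the image width as whole pixel rows. The helper `basic_representation`, the (row, column) conventions and the toy grid size are hypothetical. This is not the authors' rasteriser.

```python
from typing import List, Sequence, Tuple

Grid = List[List[int]]
Box = Tuple[int, int, int, int]  # (row_min, col_min, row_max, col_max), inclusive


def basic_representation(height: int, width: int, boxes: Sequence[Box],
                         marking_rows: Sequence[int],
                         fully_observable: bool = False) -> List[Grid]:
    """Rasterise one time-step into three binary channels (hypothetical helper).

    Channel 0: vehicle bounding boxes; channel 1: map data (lane markings);
    channel 2: observability, all 0 (unobservable) unless fully_observable is set.
    """
    vehicles = [[0] * width for _ in range(height)]
    road_map = [[0] * width for _ in range(height)]
    observed = [[int(fully_observable)] * width for _ in range(height)]
    for r0, c0, r1, c1 in boxes:
        for r in range(max(r0, 0), min(r1, height - 1) + 1):
            for c in range(max(c0, 0), min(c1, width - 1) + 1):
                vehicles[r][c] = 1
    for r in marking_rows:
        if 0 <= r < height:
            road_map[r] = [1] * width  # markings assumed to run along the image width
    return [vehicles, road_map, observed]


rep = basic_representation(6, 10, boxes=[(1, 2, 2, 4)], marking_rows=[0, 3, 5])
print(sum(map(sum, rep[0])))  # 6: a 2 x 3 pixel bounding box
full = basic_representation(6, 10, [(1, 2, 2, 4)], [0, 3, 5], fully_observable=True)
```

Only the third channel distinguishes the perception modes. The full-observability variant is the same raster with that channel filled with ones.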

4.2 Egocentric Perception Model

The egocentric perception model estimates the observability status of each area in the driving environment, assuming that one of the vehicles, considered as the EV, observes the environment with onboard perception sensors. The model takes three inputs: $I_{basic}$, the top-down view basic representation; $X_{EV}$, the location of the selected EV; and $R_{sensor}$, the range of the perception sensor. From these it generates $I_{ego}$, a top-down view representation with the modelled perception of the EV. $I_{ego}$ is an updated version of $I_{basic}$ in which the observability status of each pixel is estimated according to the perception model described in Algorithm 1. This algorithm considers both the sensor range and the occlusion effect when estimating the observability status of all pixels. To model the range of the observing vehicle's sensors, we first consider all the lines connecting the vehicle's centre point to the points on the boundary of the sensor range. Then, we use Bresenham's line algorithm (Earnshaw, 1985) to select the pixels on each line in the input representation. To model occlusion, we then keep only the segment of each line from the centre of the EV up to the first pixel occupied by another vehicle. Finally, the pixels on the resulting line segments are marked as observable by setting their value to 1 in the observation channel of the representation.

Figure 3: An example of the representation rendered in each perception mode: (a) full observability, (b) egocentric perception, and (c) cooperative perception. The representation is coloured for illustration purposes. Black represents unobservable areas. The EV and CAVs are represented with blue and yellow bounding boxes, respectively. Other vehicles are represented with green bounding boxes.

Figure 4: Data generation process for (1) full observability, (2) egocentric perception, and (3) cooperative perception modes.

Algorithm 1: Egocentric Perception Model.

Input: $I_{basic}$, $X_{EV}$, $R_{sensor}$

Output: $I_{ego}$

Initialisation:

  $I_{ego} \leftarrow I_{basic}$

  $\{B_i\}_{i=1}^{P} \leftarrow$ list of pixels in $I_{basic}$ on the border of the EV's sensor range

for $i \leftarrow 1$ to $P$ do

  $L_i \leftarrow$ list of pixels in $I_{basic}$ on the line connecting $X_{EV}$ to $B_i$, obtained using Bresenham's line algorithm (Earnshaw, 1985)

  $\hat{L}_i \leftarrow$ pixels on $L_i$ from $X_{EV}$ until the first pixel in $I_{basic}$ occupied by another vehicle is reached

  Update $I_{ego}$ by setting the $\hat{L}_i$ pixels in the third channel of $I_{ego}$ to 1
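The procedure in Algorithm 1 can be sketched in pure Python as follows. The sketch uses an integer Bresenham line and casts rays from the EV towards the border of its sensor range.

Several choices here are assumptions, not details stated by the paper:
- The circular border $\{B_i\}$ is approximated by sampling angles densely.
- Rays stop when they leave the image.
- The first pixel of another vehicle (its visible surface) is itself marked observable before the ray is cut.
- `own_pixels` holds the EV's own footprint so that the EV does not occlude itself.
- Only the observability channel is returned, rather than the full three-channel $I_{ego}$.

```python
import math
from typing import List, Set, Tuple

Pixel = Tuple[int, int]  # (x, y) = (column, row)


def bresenham(x0: int, y0: int, x1: int, y1: int) -> List[Pixel]:
    """Integer Bresenham line from (x0, y0) to (x1, y1), valid for all octants."""
    dx, sx = abs(x1 - x0), (1 if x0 < x1 else -1)
    dy, sy = -abs(y1 - y0), (1 if y0 < y1 else -1)
    err = dx + dy
    points = []
    while True:
        points.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return points
        e2 = 2 * err
        if e2 >= dy:  # step in x
            err += dy
            x0 += sx
        if e2 <= dx:  # step in y
            err += dx
            y0 += sy


def egocentric_observability(vehicles: List[List[int]], ev: Pixel,
                             own_pixels: Set[Pixel], r_sensor: float) -> List[List[int]]:
    """Sketch of Algorithm 1 returning only the observability channel (1 = observable)."""
    height, width = len(vehicles), len(vehicles[0])
    observed = [[0] * width for _ in range(height)]
    x0, y0 = ev
    n_rays = max(8, 2 * math.ceil(2 * math.pi * r_sensor))
    border = dict.fromkeys(  # border pixels B_i, de-duplicated, order preserved
        (round(x0 + r_sensor * math.cos(2 * math.pi * k / n_rays)),
         round(y0 + r_sensor * math.sin(2 * math.pi * k / n_rays)))
        for k in range(n_rays))
    for bx, by in border:
        for x, y in bresenham(x0, y0, bx, by):      # line L_i
            if not (0 <= x < width and 0 <= y < height):
                break                               # ray left the representation
            observed[y][x] = 1
            if vehicles[y][x] and (x, y) not in own_pixels:
                break                               # everything behind is occluded
    return observed


# A vertical "wall" of vehicle pixels at x = 3 hides everything to its right.
grid = [[0] * 7 for _ in range(7)]
for row in range(1, 6):
    grid[row][3] = 1
grid[3][1] = 1  # the EV itself
obs = egocentric_observability(grid, ev=(1, 3), own_pixels={(1, 3)}, r_sensor=4)
print(obs[3][2], obs[3][3], obs[3][4])  # 1 1 0
```

Ray casting from a single point reproduces the two effects the model targets: limited range, through the ray length, and occlusion, through early termination.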

4.3 Cooperative Perception Model

The cooperative perception model estimates the observability status of each pixel in the driving environment, assuming that a group of CAVs, including the EV, observe the environment with their onboard perception sensors. The cooperative perception model uses the estimated $I_{ego}$ and the locations of $N$ vehicles, selected uniformly at random as CAVs, to generate the cooperative perception mode representation, denoted as $I_{coop}$. The percentage of CAVs with respect to all vehicles is denoted as $P_{CAVs}$, or the CAV penetration rate. This perception model, described in Algorithm 2, iteratively uses Algorithm 1 to update the observability status of $I_{coop}$ based on the location of each CAV. As we assume ideal object detection and tracking, there is no misalignment error among the vehicles detected by different CAVs and the EV. Therefore, the representations are fused using a pixel-wise logical OR operation.

A Comparative Study of Ego-centric and Cooperative Perception for Lane Change Prediction in Highway Driving Scenarios

117

Algorithm 2: Cooperative Perception Model.

Input: $I_{ego}$, $\{X_{CAV\#1}, X_{CAV\#2}, \ldots, X_{CAV\#N}\}$, $R_{sensor}$

Output: $I_{coop}$

Initialisation:

  $I_{coop} \leftarrow I_{ego}$

for $i \leftarrow 1$ to $N$ do

  $I_{coop} \leftarrow I_{coop}$ logical OR Algorithm 1($I_{coop}$, $X_{CAV\#i}$, $R_{sensor}$)
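The two ingredients of Algorithm 2 can be sketched as follows: drawing CAVs uniformly at random for a given penetration rate, and fusing binary observability channels with a pixel-wise OR. The per-vehicle masks are passed in directly. In the full pipeline, each would be produced by Algorithm 1 for one CAV.

Two further details are assumptions rather than statements from the paper:
- The EV is excluded from the draw.
- The number of CAVs is `round(p_cavs * number of other vehicles)`.

Both helper names are hypothetical.

```python
import random
from typing import List, Sequence

Mask = List[List[int]]


def select_cavs(vehicle_ids: Sequence[int], ev_id: int, p_cavs: float,
                rng: random.Random) -> List[int]:
    """Pick round(p_cavs * #other vehicles) CAVs uniformly at random.

    Hypothetical helper: the EV is excluded from the draw because it always
    contributes its own egocentric view.
    """
    others = [v for v in vehicle_ids if v != ev_id]
    return rng.sample(others, round(p_cavs * len(others)))


def fuse_observability(masks: Sequence[Mask]) -> Mask:
    """Pixel-wise logical OR of binary observability channels (EV first, then CAVs)."""
    fused = [row[:] for row in masks[0]]  # copy so the EV mask is not modified
    for mask in masks[1:]:
        for y, row in enumerate(mask):
            for x, value in enumerate(row):
                fused[y][x] |= value
    return fused


rng = random.Random(0)
cavs = select_cavs(range(11), ev_id=0, p_cavs=0.2, rng=rng)  # 2 of the 10 other vehicles
ego_mask = [[1, 1, 0, 0], [1, 1, 0, 0]]
cav_mask = [[0, 0, 1, 0], [0, 1, 1, 0]]
print(fuse_observability([ego_mask, cav_mask]))  # [[1, 1, 1, 0], [1, 1, 1, 0]]
```

Because detection is assumed ideal, fusion needs no alignment step, and OR is order-independent. Any CAV that sees a pixel makes it observable.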

5 DATASET AND PREDICTION MODEL

This section describes the base vehicle trajectory dataset used to generate the three dataset variants corresponding to each perception mode. Next, the prediction model used for the comparative study of perception modes is introduced.

5.1 Vehicle Trajectory Dataset Description

The highD dataset (Krajewski et al., 2018), a publicly available naturalistic vehicle trajectory dataset, is used as the base dataset from which the three dataset variants are obtained. This dataset is recorded on German highways using a top-down view camera mounted on a drone. Compared to similar existing datasets such as NGSIM (Colyar and Halkias, 2007; Halkias and Colyar, 2007), the highD dataset contains more data samples and more accurate annotations (the typical positioning error is less than 10 cm). The data is provided in 60 spreadsheets and includes several vehicle features (e.g., x-y position, velocity, lane ID) for each time frame. In this study, we select the first 40 spreadsheets of the highD dataset as training data, the next 10 spreadsheets as validation data, and the final 10 spreadsheets as test data. After applying the data preparation process to the highD dataset, we have 14K training samples, 6K validation samples and 5K test samples in each dataset variant, where a sample is a group of frames covering the observation window $T_{obs}$.

5.2 Prediction Model

A convolutional neural network (CNN) is used to estimate the likelihood of the LC manoeuvres using the representations generated in each dataset variant. The CNN model consists of 3 layers of 2D convolution, each with 16 filters of size 3 × 3, followed by a 2 × 2 pooling layer and a ReLU activation function. These layers are followed by two fully-connected layers with 512 and 3 output neurons, the latter corresponding to the three classes of LC manoeuvres (i.e., RLC, LLC, and LK).

To feed the representations to the CNN prediction model, the TV is first selected from the observed vehicles immediately next to the EV (i.e., the EV's preceding/following vehicle in its own or adjacent lanes, or the vehicles alongside it on the right/left). Then, an $\hat{L} \times \hat{W}$ rectangular crop of the representation, centred on the TV and moving with it, is selected. A stack of cropped images for the time-steps in $[t_0 - T_{obs}, t_0]$ forms a data sample in the corresponding dataset variant, which is fed as input to the CNN for a prediction query at $t_0$.

A sample is labelled as RLC or LLC if the TV keeps its lane during $T_{obs}$ and $T_{delay}$ and its centre crosses the right or left lane marking, respectively, during the prediction window $T_{pred}$. A data sample in which the TV does not cross any lane marking during $T_{obs}$, $T_{delay}$ and $T_{pred}$ is labelled as lane keeping. We perform random undersampling on the LK class to balance the dataset.
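The labelling rule and the LK undersampling can be sketched as follows. The sketch assumes each sample is summarised by the TV's lane index at every frame of $T_{obs}$, $T_{delay}$ and $T_{pred}$, with a lane-index change standing in for the TV's centre crossing a lane marking.

The following details are hypothetical rather than given in the text:
- Lane indices increase towards the right. In highD, the lane-ID direction depends on the driving direction.
- Samples with an LC before the prediction window are discarded.
- LK is reduced to the size of the larger LC class.

```python
import random
from typing import List, Optional, Sequence, Tuple


def label_sample(lanes: Sequence[int], n_obs: int, n_delay: int, n_pred: int) -> Optional[str]:
    """Label a sample from the TV's per-frame lane index (hypothetical convention:
    the index increases towards the right). Returns None for discarded samples."""
    if len(lanes) != n_obs + n_delay + n_pred:
        raise ValueError("lanes must cover T_obs, T_delay and T_pred")
    start_lane = lanes[0]
    if any(lane != start_lane for lane in lanes[:n_obs + n_delay]):
        return None  # LC already happened during T_obs or T_delay (assumed discarded)
    new_lane = next((lane for lane in lanes[n_obs + n_delay:] if lane != start_lane), None)
    if new_lane is None:
        return "LK"
    return "RLC" if new_lane > start_lane else "LLC"


def undersample_lk(samples: List[Tuple[int, str]], rng: random.Random) -> List[Tuple[int, str]]:
    """Randomly drop LK samples so that LK is no larger than the largest LC class."""
    lk = [s for s in samples if s[1] == "LK"]
    lc = [s for s in samples if s[1] != "LK"]
    target = max(sum(1 for s in lc if s[1] == c) for c in ("LLC", "RLC"))
    return lc + rng.sample(lk, min(target, len(lk)))


print(label_sample([2, 2, 2, 2, 3, 3], 2, 2, 2))  # RLC
print(label_sample([2, 2, 2, 2, 2, 1], 2, 2, 2))  # LLC
```

Requiring a constant lane before the prediction window makes each label refer only to an LC that starts after the delay.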

6 COMPARATIVE EVALUATION

This section describes the implementation details and evaluation metrics, and discusses the experiments and results.

6.1 Implementation Details

We empirically set the lengths of the observation window ($T_{obs}$) and the prediction window ($T_{pred}$) to 1 second. In the analysis, we train and test the baseline model separately with different time delays ($T_{delay}$) from 0 to 4 seconds. The width and height of the cropped images are set to 100 and 90, respectively. The selected height ensures that all driving lanes are covered in the cropped image, regardless of the TV's current lane.
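As a worked check of the network size implied by the described CNN, the sketch below propagates a crop of height 90 and width 100 through three 3 × 3 convolutions with 16 filters, each followed by 2 × 2 pooling, and then through fully-connected layers of 512 and 3 neurons.

The following are assumptions not stated in the text:
- The crop dimensions are in pixels.
- Convolutions use stride 1 and padding 1.
- Pooling uses stride 2 and floors odd sizes.
- How the $T_{obs}$ frames are combined into input channels is left as the `in_channels` parameter.

The function name is hypothetical.

```python
from typing import Tuple


def cnn_shapes(height: int, width: int, in_channels: int, padding: int = 1,
               filters: int = 16, n_layers: int = 3) -> Tuple[Tuple[int, int], int, int]:
    """Shape and parameter arithmetic for n_layers of [3x3 conv -> 2x2 pool], then FC 512 -> 3.

    Returns ((h, w) after the last pooling, flattened feature size, total parameters).
    """
    h, w, c = height, width, in_channels
    params = 0
    for _ in range(n_layers):
        h, w = h + 2 * padding - 2, w + 2 * padding - 2  # 3x3 conv, stride 1
        params += (3 * 3 * c + 1) * filters              # weights + biases
        c = filters
        h, w = h // 2, w // 2                            # 2x2 pooling, stride 2 (floor)
    flat = h * w * c
    params += (flat + 1) * 512 + (512 + 1) * 3           # two fully-connected layers
    return (h, w), flat, params


print(cnn_shapes(90, 100, in_channels=3))  # ((11, 12), 2112, 1088483)
```

Under these assumptions, about 99.4% of the parameters sit in the first fully-connected layer, so the crop size mainly drives model size through the flattened feature map.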

We adopt the Adam optimizer (Kingma and Ba, 2017) with an initial learning rate of 0.001 and set the maximum number of epochs to 10. Using early stopping on the validation data, we stop training before over-fitting occurs. All the models are trained and tested on an NVIDIA RTX 2080 Ti using the PyTorch platform (Paszke et al., 2017).
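One common form of early stopping is sketched below. Training halts once the validation loss has not improved for `patience` consecutive epochs, and the weights from the best epoch are kept. The text states only that early stopping on validation data is used, so the patience rule, its default value and the function name are hypothetical.

```python
from typing import Sequence


def early_stopping_epoch(val_losses: Sequence[float], patience: int = 2) -> int:
    """Return the 0-based epoch whose weights would be kept under patience-based
    early stopping (hypothetical rule and default patience)."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break  # stop training; later epochs are never run
    return best_epoch


# Validation loss rises for two epochs after epoch 2, so training stops at epoch 4.
print(early_stopping_epoch([0.9, 0.7, 0.6, 0.65, 0.7, 0.5]))  # 2
```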

6.2 Evaluation Metrics

Given a balanced dataset (i.e., equal number of sam-

ples in each class), accuracy can be a trustworthy met-

ric to identify the utility of each perception mode for

the problem of vehicle behaviour prediction. The ac-

curacy (ACC) is defined as the percentage of correctly

ROBOVIS 2021 - 2nd International Conference on Robotics, Computer Vision and Intelligent Systems

118

Table 1: LC prediction accuracy and OBS metrics for different perception modes.

Perception Mode OBS

Time Delay (T

delay

)

0s 1s 2s 3s 4s

Mode 1. Full Observability 100% 98.3% 90.77% 80.33% 77.11% 73.49%

Mode 2. Egocentric Perception 63% 97.03% 88.69% 78.18% 73.35% 69.2%

Mode 3. Cooperative Perception (P

CAVs

= 20%) 85% 97.59% 90.34% 80.53% 72.69% 72.28%

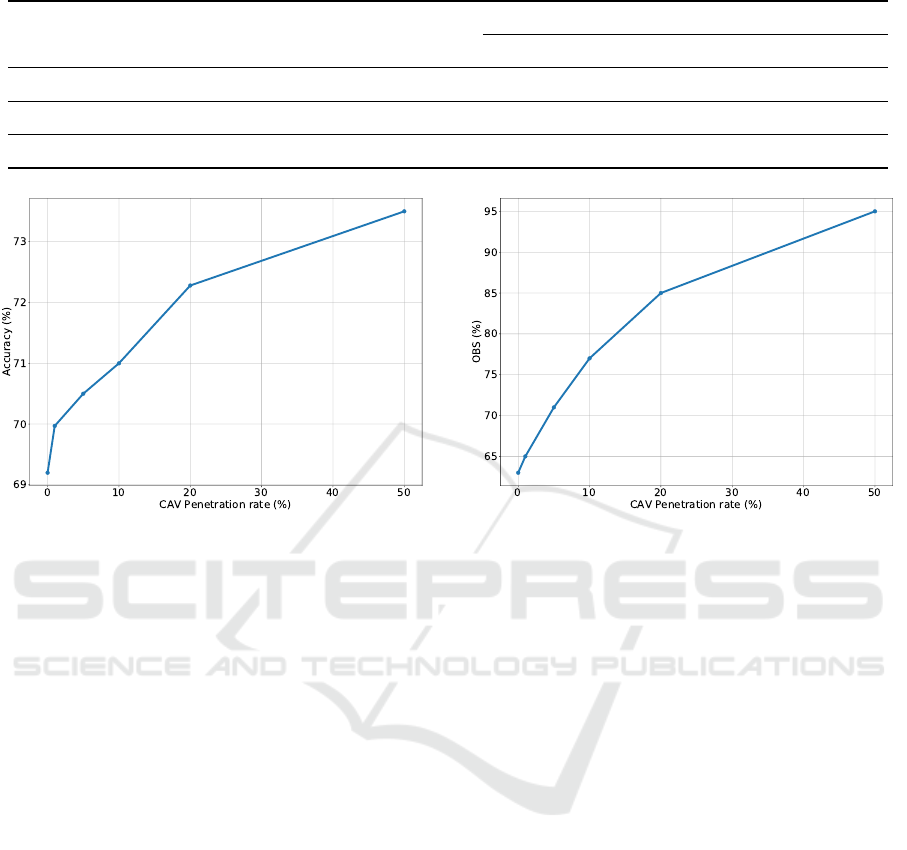

Figure 5: Long-term prediction accuracy for different pen-

etration rate of cooperative perception.

predicted LC and LK manoeuvres of the TV to the to-

tal number of LC and LK manoeuvres in a dataset

variant corresponding to a perception mode. We also

report the average percentage of observable pixels in

cropped input image for each dataset variant as the

observability (OBS) metric. This metric shows the

utility of perception mode in observing the TV’s sur-

rounding.
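The two metrics can be sketched as follows, assuming labels are stored as strings and each cropped observability channel as a binary nested list. The function names are hypothetical. Averaging per-image percentages equals a pixel-level average here because all crops share the same size.

```python
from typing import List, Sequence


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """ACC: percentage of samples whose manoeuvre (LK, LLC or RLC) is predicted correctly."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty sequences of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return 100.0 * correct / len(y_true)


def observability(obs_channels: Sequence[List[List[int]]]) -> float:
    """OBS: average percentage of observable pixels over cropped observability channels."""
    per_image = [100.0 * sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in obs_channels]
    return sum(per_image) / len(per_image)


print(accuracy(["LK", "LLC", "RLC", "LK"], ["LK", "LLC", "LK", "LK"]))  # 75.0
print(observability([[[1, 1], [0, 0]], [[1, 1], [1, 1]]]))            # 75.0
```

On a balanced test set, ACC cannot be inflated by always predicting the majority LK class. That is why the balancing step matters before using this metric.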

6.3 Model Performance for Each Perception Mode

This experiment aims to quantify the impact of each of the defined perception modes (i.e., full observability, egocentric perception, and cooperative perception) on the baseline prediction model with different prediction horizons. To this end, we train and test the baseline model separately on each dataset variant derived from the highD dataset, for time delays $T_{delay}$ of 0, 1, 2, 3, and 4 seconds. The results are reported in Table 1.

Figure 6: Observability of the TV's surroundings (OBS metric) for different penetration rates of cooperative perception.

The accuracy of short-term LC prediction with a time delay of 0 seconds is above 97% in all perception modes, and the difference between modes is negligible. Nonetheless, the accuracy gap between the prediction model applied to Mode 1 and to Mode 2 increases from 2% to 4% as the time delay increases from 1 to 4 seconds. This indicates the importance of observing the TV's surroundings for longer prediction horizons. Fusing the CAVs' perception with onboard perception closes the performance gap at a time delay of 2s, nearly closes it at 1s (a remaining gap of about 0.4%), and reduces it to about 1% at 4s. However, no improvement is observed at a time delay of 3s. In addition, augmenting onboard perception with offboard CAV perception increases the observability metric (OBS) from 63% to 85%. These results are obtained assuming a CAV penetration rate of $P_{CAVs}$ = 20%.
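The gaps quoted above can be recomputed directly from Table 1. The sketch copies the accuracy rows and subtracts them per time delay, in percentage points. The helper name `gaps` is illustrative. A negative value means the compared mode is more accurate than full observability.

```python
from typing import List, Sequence

# Accuracy (%) copied from Table 1; index k corresponds to T_delay = k seconds.
FULL = [98.3, 90.77, 80.33, 77.11, 73.49]
EGO = [97.03, 88.69, 78.18, 73.35, 69.2]
COOP_20 = [97.59, 90.34, 80.53, 72.69, 72.28]


def gaps(reference: Sequence[float], other: Sequence[float]) -> List[float]:
    """Accuracy gap (percentage points) of `other` relative to `reference`."""
    return [round(r - o, 2) for r, o in zip(reference, other)]


print(gaps(FULL, EGO))      # [1.27, 2.08, 2.15, 3.76, 4.29]
print(gaps(FULL, COOP_20))  # [0.71, 0.43, -0.2, 4.42, 1.21]
```

The egocentric gap grows from about 2 to about 4 points between 1 s and 4 s. Cooperative perception closes the gap at 2 s, leaves about 0.4 points at 1 s and about 1.2 points at 4 s, and is worse than egocentric perception at 3 s.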

Comparing the LC prediction performance of the CNN model across perception modes suggests that comprehensive perception of the driving environment is especially important for longer prediction horizons. This is mainly because long-term predictions require a deep understanding of the driving environment and of the emerging traffic status. This is where cooperative perception has the most impact, due to the increased observability of the driving environment.

6.4 Model Performance with Different CAVs Penetration Rates

In this experiment, we measure the performance of the long-term LC prediction model ($T_{delay}$ = 4s) and the OBS metric for different CAV penetration rates. The model's performance is only reported for long-term prediction because, in the previous experiment, these samples showed the largest performance drop under the egocentric perception model. To prepare the data, we model the cooperative perception mode assuming CAV percentages of 1%, 5%, 10%, 20%, and 50%. Figure 6 shows the relation between the percentage of CAVs and the OBS metric, and Figure 5 shows the relation between the percentage of CAVs and accuracy. Both figures show a logarithmic increase in performance and observability as the penetration rate increases. With a 20% penetration of CAVs, on average 85% of the TV's surroundings become observable, and the drop in performance compared to the full observability mode is reduced to 1%.

7 CONCLUSION

In this paper, we proposed two perception models, applicable to any vehicle trajectory dataset recorded by a top-down view sensor, to model egocentric and cooperative perception. Then, a comparative study was performed to quantify the impact of each perception mode on the problem of lane change prediction. The results showed a 4% performance drop in our long-term LC prediction model (i.e., $T_{delay}$ = 4s) when using egocentric perception instead of the fully observable original dataset. The results also indicated that cooperative perception with a 20% penetration of CAVs can almost compensate for the performance drop of our prediction model caused by the limitations of egocentric perception.

Future work should consider extending the data generation method to account for errors in the object detection and tracking modules. The binary representation used in this work can be extended to a probabilistic representation, which would enable encoding the error and uncertainty in vehicle state estimation. Furthermore, the 2D occlusion model used in this study can be extended to a 3D occlusion model to reduce the modelling error.

ACKNOWLEDGEMENTS

This work was supported by Jaguar Land Rover and the U.K.-EPSRC as part of the jointly funded Towards Autonomy: Smart and Connected Control (TASCC) Programme under Grant EP/N01300X/1. We would like to thank Omar Al-Jarrah for reviewing a previous version of this paper.

REFERENCES

Chen, Q., Tang, S., Yang, Q., and Fu, S. (2019). Cooper: Cooperative perception for connected autonomous vehicles based on 3D point clouds. arXiv preprint arXiv:1905.05265.

Colyar, J. and Halkias, J. (2007). US Highway 101 dataset. Technical report, U.S. Department of Transportation, Federal Highway Administration (FHWA).

Deo, N. and Trivedi, M. M. (2018). Convolutional social pooling for vehicle trajectory prediction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops.

Ding, W., Chen, J., and Shen, S. (2019). Predicting vehicle behaviors over an extended horizon using behavior interaction network. In 2019 International Conference on Robotics and Automation (ICRA), pages 8634–8640.

Earnshaw, R. A. (1985). Fundamental Algorithms for Computer Graphics: NATO Advanced Study Institute Directed by JE Bresenham, RA Earnshaw, MLV Pitteway. Springer.

Fernández-Llorca, D., Biparva, M., Izquierdo-Gonzalo, R., and Tsotsos, J. K. (2020). Two-stream networks for lane-change prediction of surrounding vehicles. In 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), pages 1–6.

Gallitz, O., De Candido, O., Botsch, M., Melz, R., and Utschick, W. (2020). Interpretable machine learning structure for an early prediction of lane changes. In Farkaš, I., Masulli, P., and Wermter, S., editors, Artificial Neural Networks and Machine Learning – ICANN 2020, pages 337–349, Cham. Springer International Publishing.

Halkias, J. and Colyar, J. (2007). US Highway 80 dataset. Technical report, U.S. Department of Transportation, Federal Highway Administration (FHWA).

Hu, Y., Zhan, W., and Tomizuka, M. (2018). Probabilistic prediction of vehicle semantic intention and motion. In 2018 IEEE Intelligent Vehicles Symposium (IV), pages 307–313.

Izquierdo, R., Quintanar, A., Parra, I., Fernández-Llorca, D., and Sotelo, M. A. (2019a). Experimental validation of lane-change intention prediction methodologies based on CNN and LSTM. In 2019 IEEE Intelligent Transportation Systems Conference (ITSC), pages 3657–3662.

Izquierdo, R., Quintanar, A., Parra, I., Fernández-Llorca, D., and Sotelo, M. A. (2019b). The PREVENTION dataset: a novel benchmark for prediction of vehicles intentions. In 2019 IEEE Intelligent Transportation Systems Conference (ITSC), pages 3114–3121.

Kim, S., Liu, W., Ang, M. H., Frazzoli, E., and Rus, D. (2015). The impact of cooperative perception on decision making and planning of autonomous vehicles. IEEE Intelligent Transportation Systems Magazine, 7(3):39–50.

Kingma, D. P. and Ba, J. (2017). Adam: A method for stochastic optimization.

ROBOVIS 2021 - 2nd International Conference on Robotics, Computer Vision and Intelligent Systems


Krajewski, R., Bock, J., Kloeker, L., and Eckstein, L. (2018). The highD dataset: A drone dataset of naturalistic vehicle trajectories on German highways for validation of highly automated driving systems. In 2018 21st International Conference on Intelligent Transportation Systems (ITSC), pages 2118–2125.

Krüger, M., Novo, A. S., Nattermann, T., and Bertram, T. (2019). Probabilistic lane change prediction using Gaussian process neural networks. In 2019 IEEE Intelligent Transportation Systems Conference (ITSC), pages 3651–3656.

Lee, D., Kwon, Y. P., McMains, S., and Hedrick, J. K. (2017). Convolution neural network-based lane change intention prediction of surrounding vehicles for ACC. In 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), pages 1–6.

Lefèvre, S., Vasquez, D., and Laugier, C. (2014). A survey on motion prediction and risk assessment for intelligent vehicles. ROBOMECH Journal, 1(1):1.

Liu, J., Luo, Y., Xiong, H., Wang, T., Huang, H., and Zhong, Z. (2019). An integrated approach to probabilistic vehicle trajectory prediction via driver characteristic and intention estimation. In 2019 IEEE Intelligent Transportation Systems Conference (ITSC), pages 3526–3532.

Mänttäri, J., Folkesson, J., and Ward, E. (2018). Learning to predict lane changes in highway scenarios using dynamic filters on a generic traffic representation. In 2018 IEEE Intelligent Vehicles Symposium (IV), pages 1385–1392.

Mozaffari, S., Al-Jarrah, O. Y., Dianati, M., Jennings, P., and Mouzakitis, A. (2020). Deep learning-based vehicle behavior prediction for autonomous driving applications: A review. IEEE Transactions on Intelligent Transportation Systems, pages 1–15.

Paszke, A., Gross, S., Chintala, S., Chanan, G., Yang, E., DeVito, Z., Lin, Z., Desmaison, A., Antiga, L., and Lerer, A. (2017). Automatic differentiation in PyTorch.

Rehder, T., Koenig, A., Goehl, M., Louis, L., and Schramm, D. (2019a). Lane change intention awareness for assisted and automated driving on highways. IEEE Transactions on Intelligent Vehicles, 4(2):265–276.

Rehder, T., Koenig, A., Goehl, M., Louis, L., and Schramm, D. (2019b). Lane change intention awareness for assisted and automated driving on highways. IEEE Transactions on Intelligent Vehicles, 4(2):265–276.

Sakr, A. H., Bansal, G., Vladimerou, V., and Johnson, M. (2018). Lane change detection using V2V safety messages. In 2018 21st International Conference on Intelligent Transportation Systems (ITSC), pages 3967–3973.

Sakr, A. H., Bansal, G., Vladimerou, V., Kusano, K., and Johnson, M. (2017). V2V and on-board sensor fusion for road geometry estimation. In 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), pages 1–8.

Scheel, O., Nagaraja, N. S., Schwarz, L., Navab, N., and Tombari, F. (2019). Attention-based lane change prediction. In 2019 International Conference on Robotics and Automation (ICRA), pages 8655–8661.

Williams, N., Wu, G., Boriboonsomsin, K., Barth, M., Rajab, S., and Bai, S. (2018). Anticipatory lane change warning using vehicle-to-vehicle communications. In 2018 21st International Conference on Intelligent Transportation Systems (ITSC), pages 117–122.

Yoon, S. and Kum, D. (2016). The multilayer perceptron approach to lateral motion prediction of surrounding vehicles for autonomous vehicles. In 2016 IEEE Intelligent Vehicles Symposium (IV), pages 1307–1312.
