Automatically Segmentation the Car Parts and Generate a Large Car Texture Images

Yan-Yu Lin, Chia-Ching Yu and Chuen-Horng Lin

Department of Computer Science and Information Engineering, National Taichung University of Science and Technology,

No. 129, Sec. 3, Sanmin Rd., Taichung, ROC, Taiwan

Keywords: Simulation System, Car Model, Parts, Segmentation.

Abstract: This study segments the car parts in a collection of car-model texture images and then uses the segmented parts to generate a large number of car texture images, to support automatic detection and classification of future 3D car models. The proposed car-part segmentation comprises a simple and a fine segmentation method. Because few texture images of car parts exist, this study varies the segmented parts to generate many car texture images: the parts are first segmented automatically from the texture images, and then their RGB channel arrangement and color are changed and they are rotated to different angles. This study also varies the background and then randomly combines the parts with the backgrounds into a large number of texture images. In the experiment, the car parts were divided into 6 categories: the left door, the right door, the roof, the front body, the rear body, and the wheels. In terms of automated segmentation performance, the simple and fine car-part segmentation methods give good results on texture images. The segmented car parts are then used to automatically generate multiple groups of car texture images. We hope these results can be applied in practice to simulation systems.

1 INTRODUCTION

With the development of autonomous vehicles, virtual reality (VR), augmented reality (AR), and mixed reality (MR), three-dimensional simulation systems have become a main research trend in computer vision. A simulation system presents real-world situations and physical feedback, and it is applied to autonomous driving, medical technology, military training, aerospace technology, disaster response, and other fields. To make simulation systems more widely usable and more realistic to the user's senses, the system should reproduce the actual scene as the user enters different situations, so that the user experiences a variety of visual impressions and can rehearse contingencies and operations in different environments as in a real scene. Based on the material properties assigned to the objects in the simulation system, the system can present effects that correspond to the real world and reflect the physical characteristics of various real-world environments.

In the real world, the parts and types of an object can be distinguished visually by its color and texture, through appearance attributes such as the refraction angle of the illumination and the color and transparency of the light source, and these attributes determine the material properties of the various parts. However, manually identifying the parts of each object, labeling the type of each part one by one, and then entering this information into the simulation system costs a great deal of labor and time.

Texture images represent an object's appearance better than shading alone and make a 3D model look more realistic in a simulation system. However, without material information, the range of situations the simulation system can offer is limited. Therefore, if texture maps are first classified by material and the classification labels are mapped onto the 3D model, labor and time are saved and the simulation system can also adapt to different environmental changes. Even so, few texture images correspond to each 3D model; Figure 1 shows the texture image of a car as an example. Therefore, in addition to material classification of texture images, this study also proposes a method to generate a large number of texture images for automatically detecting and classifying future 3D car models.

Lin, Y., Yu, C. and Lin, C.

Automatically Segmentation the Car Parts and Generate a Large Car Texture Images.

DOI: 10.5220/0010601301850190

In Proceedings of the 2nd International Conference on Deep Learning Theory and Applications (DeLTA 2021), pages 185-190

ISBN: 978-989-758-526-5

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Figure 1: Texture image of the car.

Object segmentation is the method commonly used to extract an object from an image. Accurately separating the object regions of an image from its background depends on precise image segmentation (Gonzalez and Woods, 2002; Pandey et al., 2019). Conventional image segmentation methods can be divided into three categories according to the characteristics of the image pixels. The first category, discontinuity-based methods, detects regions where the pixel intensity changes abruptly. Algorithms of this type include the gradient method (Gonzalez and Woods, 2002), Sobel edge detection (Gonzalez and Woods, 2002; Kubicek et al., 2019), Canny edge detection (Canny, 1986; Ding and Goshtasby, 2001; Xu et al., 2019), Laplacian edge detection (Gonzalez and Woods, 2002), and Laplacian of Gaussian (LoG) edge detection. The second category, similarity-based methods, divides the image into regions that are similar according to predefined criteria; it includes the threshold method (Gonzalez and Woods, 2002), region growing (Zhao et al., 2015), region splitting and merging (Gonzalez and Woods, 2002), and clustering (Zhao et al., 2015). The third category, hybrid techniques, integrates edge detection and region-based methods to obtain more accurate segmentation results (Wang et al., 2016).

This study uses traditional image processing techniques to automatically segment parts from existing two-dimensional car texture images and generates many car texture images from the segmented parts. When a car texture image is built, the car parts are laid out as separate pieces, and their placement and number depend on the level of detail of the car surface. Therefore, this study first segments the two-dimensional texture images and then generates a large number of car texture images from the segmented parts.

This study makes two contributions. First, to reduce the cost of labeling object categories, it uses traditional image processing techniques to automatically segment parts from two-dimensional texture images. Second, it varies the backgrounds of the object model's texture images, changes the shape, color, and rotation of the parts and the background color scheme, and randomly generates many texture images.

2 METHODOLOGY

This study separates the car parts from the existing

car model's texture image by automatic segmentation

method. It then generates large car texture maps from

these parts to provide the future 3D car model to

detect and classify car parts. The processing flow is

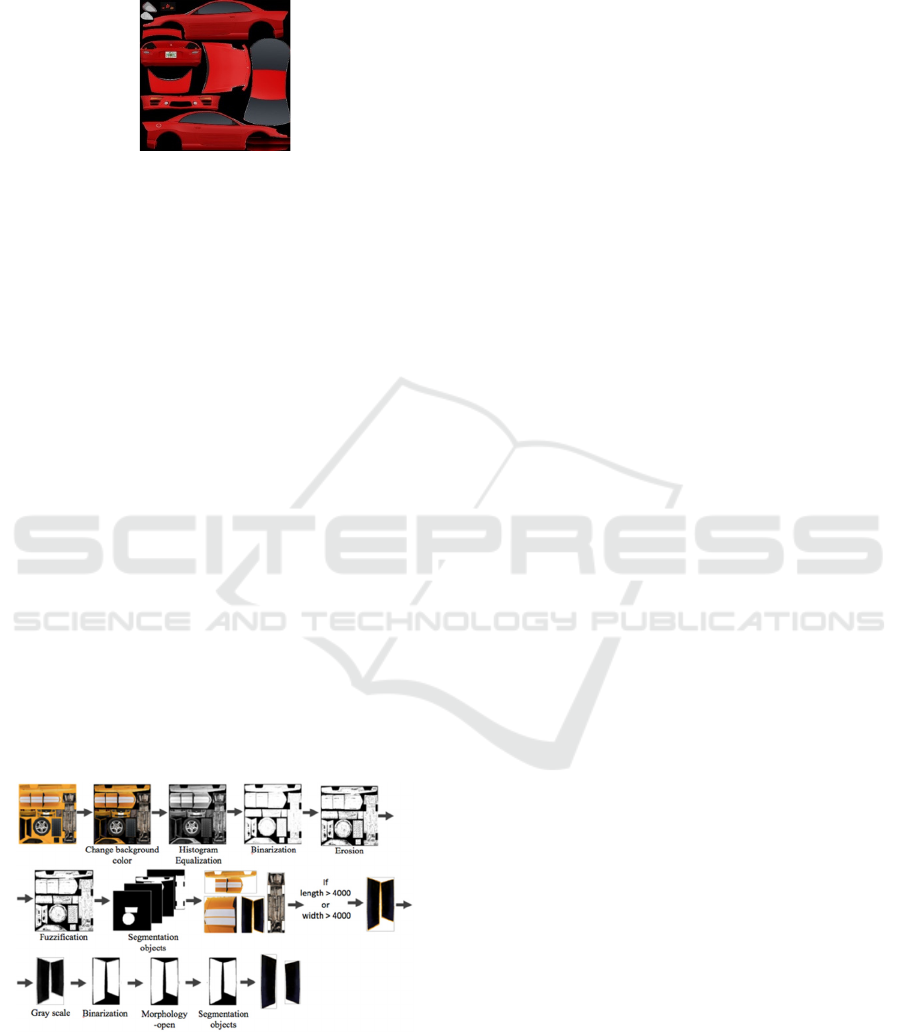

shown in Figure 2. This study is divided into two

stages. The first stage uses automated segmentation

technology to segment the parts of two-dimensional

car texture images. In the second stage, various types

of changes and different background types are added

to these parts to generate large car texture images.

Figure 2: The processing flow of this study.

2.1 Automated Segmentation of Car Parts

A two-dimensional car texture image is composed of multiple parts. The parts are closely packed and follow no fixed layout rules, so the presentation of a texture varies with its creator, and the styles of different textures also differ noticeably, as shown in Figure 3. Therefore, this study proposes a segmentation technique for car parts, with two variants for texture images with different characteristics. The first, called the simple segmentation method of car parts, is for textures with a large color difference between the parts and the background. The second, called the fine segmentation method of car parts, is for textures with a small color difference between the parts and the background. Together, the two methods are referred to as automated segmentation of car parts.

Figure 3: Texture image of the car.

Simple Segmentation Method of Car Parts: The color car texture image is first converted into a grayscale image and then binarized; finally, the connected regions are labeled to detect and segment each part.

Fine segmentation method of car parts: if a simple

segmentation method is used to the color difference

between parts and the background color in an image

is small, and the parts cannot be completely

segmented. Therefore, this study first converts the

color image into a grayscale image, then adjusts the

contrast of the image grayscale by histogram

equalization, then performs binarization, and then

uses erosion blurring to remove the impurities of the

part. Finally, the parts are labelled, and the parts can

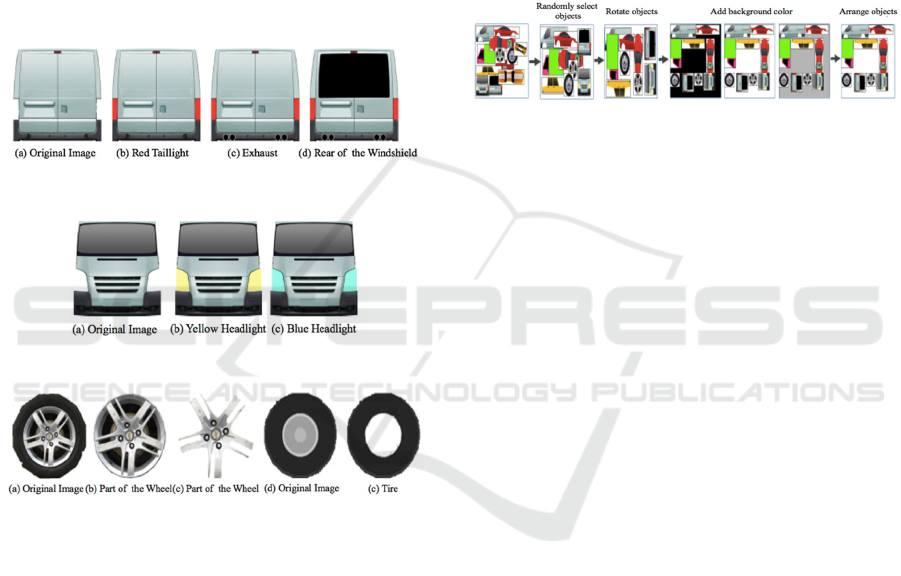

be detected and segmented. The processing flow is

shown in Figure 4. To filter the overlap of some parts,

large objects segment to more than two parts are

caused. Therefore, after calculating all parts' size in

this study, if the cut part's length or width exceeds

4000 pixels, we convert the part image to a grayscale

image. Then the grayscale image is binarized to the

opening is processed to remove the noise between the

parts, and then the parts are cut so that we can cut out

those independent parts.

Figure 4: Fine segmentation process flow of car parts.

Histogram Equalization: When the color of the parts in the texture image is similar to the background, the parts are not easily separated from the background, or their outlines are indistinct. Therefore, this study equalizes the histogram of the grayscale image, making the overall gray-level distribution more uniform; this enhances the contrast and improves the discrimination between the parts and the background.
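The equalization step maps each gray level through the normalized cumulative histogram. The NumPy sketch below uses the common form round((cdf(r) − cdf_min) / (N − cdf_min) × 255); the helper name is hypothetical, and in practice `cv2.equalizeHist` performs a comparable mapping.

```python
import numpy as np


def equalize_hist(gray):
    """Histogram equalization of an 8-bit grayscale image via its cumulative histogram.

    Gray level r maps to round((cdf(r) - cdf_min) / (N - cdf_min) * 255), where N is
    the pixel count and cdf_min is the first non-zero cumulative count.
    """
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]
    if cdf_min == gray.size:  # constant image: nothing to stretch
        return gray.copy()
    lut = np.round((cdf - cdf_min) / (gray.size - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)  # levels below cdf_min clip to 0
    return lut[gray]
```

For a low-contrast image whose values span only 100 to 119, the output spans the full range 0 to 255 while preserving the order of the gray levels.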

Gaussian Blur: The parts in the texture image are regions enclosed by closed contour lines. Because some parts in the texture have light shading and the background contains noise points, this study uses Gaussian blurring to reduce image noise.

Binarization: The color texture image is first converted into a grayscale image; Otsu's method (Otsu, 1979) is then used to obtain the binarization threshold, and finally the grayscale image is binarized.
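Otsu's method chooses the threshold t that maximizes the between-class variance w0·w1·(μ0 − μ1)² of the two gray-level classes it creates. The NumPy sketch below is illustrative (helper names are hypothetical); OpenCV exposes the same criterion through `cv2.threshold` with the `cv2.THRESH_OTSU` flag.

```python
import numpy as np


def otsu_threshold(gray):
    """Return t maximizing the between-class variance for an 8-bit image.

    Class 0 holds gray levels <= t, class 1 holds levels > t. Integer counts
    are used so that empty classes are detected exactly.
    """
    counts = np.bincount(gray.ravel(), minlength=256).astype(np.int64)
    levels = np.arange(256, dtype=np.int64)
    n0 = np.cumsum(counts)            # pixels in class 0 for each candidate t
    n1 = gray.size - n0               # pixels in class 1
    s0 = np.cumsum(counts * levels)   # sum of gray levels in class 0
    s1 = s0[-1] - s0                  # sum of gray levels in class 1
    valid = (n0 > 0) & (n1 > 0)
    sigma_b = np.zeros(256)
    mu0 = s0[valid] / n0[valid]
    mu1 = s1[valid] / n1[valid]
    # Between-class variance scaled by N^2, which does not change the argmax.
    sigma_b[valid] = n0[valid].astype(float) * n1[valid] * (mu0 - mu1) ** 2
    return int(np.argmax(sigma_b))


def binarize(gray):
    """Binarize with the Otsu threshold: pixels above t become 255, others 0."""
    t = otsu_threshold(gray)
    return t, np.where(gray > t, 255, 0).astype(np.uint8)
```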

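Erosion: A fixed-size structuring element (filter) is slid over the grayscale image, and each pixel is replaced by the minimum value under the element, which shrinks bright regions and removes small specks.

Dilation: Structuring elements of different sizes are slid over the grayscale image, and each pixel is replaced by the maximum value under the element, which expands bright regions.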

Contour: The contour of a part is obtained by subtracting the eroded part from the original part, or the original part from the dilated part.

Segmentation of Parts: After the contour mask of each part is obtained, the region of the original texture covered by the mask is extracted as the segmented part.

2.2 Automatically Generate Large Car Parts Texture Images

Because few texture images exist, few parts are available. Therefore, to increase the number and variety of training images, this study produces a large and diverse set of texture images for training. First, the automatically segmented parts are varied by rearranging their RGB channels, changing their color, and rotating them to different angles. The textured background is also varied, and the parts are then randomly combined with the backgrounds to generate a large number of texture images that can serve as training images for future deep learning models. In short, this study changes the shape, color, and rotation of the parts and the color scheme of the background, and then randomly combines them to generate many texture images.

The Shape of the Part: The appearance of a part is modified while still satisfying the conditions of its part type. This study adds three kinds of new details: (1) red rear lights and exhaust pipes on the rear body of the car and on the rear windshield, as shown in Figure 5; (2) yellow and cyan headlights on the front body of the vehicle, as shown in Figure 6; and (3) new tire frames and tire parts, as shown in Figure 7. Figure 7b removes the black tire rubber from Figure 7a and keeps only the silver tire frame; Figure 7c extracts the disc pattern at the center of Figure 7b; and Figure 7e takes the black tire rubber from Figure 7d. In this way, we add small details to the original parts and extract features from them, increasing both the variety and the number of parts.

Figure 5: The car rear body.

Figure 6: The car front body.

Figure 7: Car tires.

The Color of the Parts: Because a car's metal body can come in many colors, this study changes the order of the RGB channels to give the parts multiple colors. Only the arrangement of the channels changes; the channel values themselves are not altered. The 6 possible arrangements (RGB, RBG, GRB, GBR, BGR, BRG) yield 6 differently colored texture images.
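The six recolored versions are simply the 3! permutations of the channel axis. A minimal NumPy sketch (the helper name is hypothetical; note that OpenCV loads images in BGR order, so the letters refer to whatever order the input array uses):

```python
from itertools import permutations

import numpy as np


def channel_permutations(part):
    """Return the 6 recolored versions of an H x W x 3 part, keyed by channel order."""
    names = "RGB"
    variants = {}
    for order in permutations(range(3)):
        key = "".join(names[i] for i in order)
        variants[key] = part[..., list(order)]  # reorder channels; values unchanged
    return variants
```

For a pixel (10, 20, 30) in RGB order, the "BGR" variant holds (30, 20, 10), and every variant contains the same set of channel values.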

Rotation of Parts: Each part is rotated randomly by a multiple of 90 degrees, so the rotation angle ranges from 0 to 270 degrees.
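Rotating by multiples of 90 degrees needs no interpolation, so a part's pixels are preserved exactly. A minimal sketch with NumPy's `rot90` (counter-clockwise; the helper name and the seeded generator are assumptions):

```python
import numpy as np


def random_rotate(part, rng):
    """Rotate a part by a random multiple of 90 degrees (0, 90, 180 or 270)."""
    k = int(rng.integers(0, 4))  # number of counter-clockwise quarter turns
    return np.rot90(part, k=k).copy(), 90 * k
```

A 30 × 50 part keeps its shape at 0 and 180 degrees and becomes 50 × 30 at 90 and 270 degrees.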

Background Color: Based on the background colors observed in the original texture images, this study uses white, grey, and black as the background colors of the new texture images.

Texture Image Generation: In the original texture images, the parts are arranged irregularly, their placement angles and directions are not fixed, the number of parts of each type varies, and the background colors are quite diverse. Therefore, this study generates a large number of car texture images modeled on the design and placement of the original texture images. The production process is shown in Figure 8. First, 8 to 10 parts are randomly selected from all categories. Next, the rotated parts are arranged at non-fixed intervals, and finally the parts are randomly arranged and placed to generate the car texture images.

Figure 8: The process of generating texture images.
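The generation steps can be sketched as follows. The canvas size, the gap range, the grey level, and the row-by-row packing rule are all assumptions made for illustration; the text specifies only the 8 to 10 parts, rotation by multiples of 90 degrees, non-fixed spacing, random placement, and the white, grey, and black backgrounds.

```python
import numpy as np

# Background levels; the exact grey value (128) is an assumption.
BACKGROUNDS = {"white": 255, "grey": 128, "black": 0}


def generate_texture(parts, rng, size=(1024, 1024), n_range=(8, 10), gap_range=(5, 40)):
    """Compose one synthetic texture image from randomly chosen, rotated parts.

    Hypothetical layout rule: parts are placed left to right in rows separated by
    random gaps; a part that no longer fits on the canvas is skipped.
    Returns the canvas and a list of (x, y, w, h, part_index) boxes.
    """
    canvas_h, canvas_w = size
    level = BACKGROUNDS[str(rng.choice(list(BACKGROUNDS)))]
    canvas = np.full((canvas_h, canvas_w, 3), level, dtype=np.uint8)

    k = int(rng.integers(n_range[0], n_range[1] + 1))         # 8 to 10 parts
    chosen = rng.choice(len(parts), size=k, replace=len(parts) < k)

    x = y = row_h = 0
    boxes = []
    for i in chosen:
        part = np.rot90(parts[i], k=int(rng.integers(0, 4)))   # 0, 90, 180 or 270 degrees
        ph, pw = part.shape[:2]
        gap = int(rng.integers(gap_range[0], gap_range[1] + 1))  # non-fixed interval
        if x + gap + pw > canvas_w:                           # row full: start a new row
            x, y, row_h = 0, y + row_h, 0
        x0, y0 = x + gap, y + gap
        if x0 + pw > canvas_w or y0 + ph > canvas_h:
            continue                                          # does not fit: skip it
        canvas[y0:y0 + ph, x0:x0 + pw] = part
        boxes.append((x0, y0, pw, ph, int(i)))
        x = x0 + pw
        row_h = max(row_h, gap + ph)                          # next row starts below this part
    return canvas, boxes
```

Because each new row starts below the tallest part of the previous row and parts within a row advance strictly to the right, placed parts never overlap; the returned boxes could also serve as labels for later detection training.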

In this study, texture images are generated by varying three factors: the number of part categories, the part type, and the RGB channel ordering. Four texture training sets are generated, called "Type-1", "Type-2", "Type-3", and "Type-4". The number of part categories is the number of categories in each generated image: either "all categories" or "a single category only". The part type is either "original parts" or "newly created parts". The RGB channel setting is either "grayscale part images" or "all arrangements of the color channels". Type-1 texture images are composed of parts from all categories, original parts, and the 6 color arrangements. Type-2 texture images are composed of parts from all categories, newly generated parts, and grayscale images. Type-3 texture images are composed of parts from all categories, newly generated parts, and the 6 color arrangements. Type-4 texture images are composed of parts from a single category only, newly generated parts, and the 6 color arrangements.

3 EXPERIMENTAL RESULTS

This experiment uses a small 2D car-model dataset. In addition to verifying the effectiveness of the proposed automatic segmentation of car parts, we use the segmented car parts to generate a large number of car texture images. In this experiment, the 2D car texture images are used for automatic part segmentation, and the car-part images are then processed under different settings to produce multiple sets of image data of different types.

3.1 Experimental Environment

The experiments were run on an Intel Core i7-4790 CPU, an NVIDIA GeForce GTX 1080 Ti (11 GB) GPU, and 16 GB of memory. The host runs Windows 10, the programs are written in Python, and image pre-processing and other image processing use the OpenCV library.

3.2 Datasets

The parts in the texture images used in this experiment are all related to the car body. Five texture images containing the target part types are selected for the part segmentation experiment: 3 images in the "car" style and 2 in the "RV" style. On average, each image contains 6 different types of parts.

3.3 Car Parts Segmentation Results and Analysis

The part segmentation of the car texture images is evaluated by the number of parts obtained after segmentation and the completeness of each part's appearance. The experiment contains a total of 88 car parts. After simple segmentation, 61 parts are correctly segmented, 27 parts are lost, and 62 parts are over-segmented; because some parts have no gap between them and the color difference between the background and the parts is small, these results are unsatisfactory. After fine segmentation, 59 parts are correctly segmented, 29 parts are lost, and 19 parts are over-segmented. Fine segmentation thus yields two fewer correct parts, but it reduces the number of over-segmented parts from 62 to 19. Finally, the results of the two segmentation methods are combined to obtain the final set of segmented parts.
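The reported counts can be turned into rates with simple arithmetic. The percentages below are derived here from the counts in the text; they are not figures reported by the study.

```python
# Counts reported above (88 ground-truth parts in total).
total = 88
results = {
    "simple": {"correct": 61, "lost": 27, "over": 62},
    "fine": {"correct": 59, "lost": 29, "over": 19},
}

for r in results.values():
    assert r["correct"] + r["lost"] == total  # each part is either found or lost
    r["correct_rate"] = round(100 * r["correct"] / total, 1)

# Relative reduction in over-segmented parts from simple to fine segmentation.
over_reduction = round(100 * (results["simple"]["over"] - results["fine"]["over"])
                       / results["simple"]["over"], 1)
```

This gives correct-part rates of 69.3% (simple) and 67.0% (fine), while over-segmentation falls by about 69.4%, which illustrates the trade-off that motivates combining the two methods.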

3.4 Generate Texture Images of Car Parts

Ready-made 3D models provide few texture images, and because texture images are created by hand, their layouts follow no fixed rules. The parts are not easy to separate, and few parts remain after segmentation. Therefore, this study creates training images with diverse parts. First, the texture image parts are segmented automatically; then the arrangement of their RGB channels and their colors are changed, and the parts are rotated. Various changes are also made to the background. Parts and backgrounds are then randomly combined into a large number of texture images that serve as training images for deep learning models.

Table 1 gives the number of parts in this study. There are 50 original parts from the texture images. The front body has the fewest, with only 3 parts, and the wheels have the most, with 20. Therefore, this study modifies the colors of the original parts and attaches small details to them to increase the diversity of the parts and their applications. Finally, the 281 parts, comprising the original and the newly added parts, are used to generate a large number of car texture images.

Table 1: Statistics of the number of parts.

| Class label | Number of parts after segmentation | Added parts | Parts total |
|---|---|---|---|
| The car left body | 6 | 39 | 45 |
| The car right body | 4 | 26 | 30 |
| Roof | 12 | 4 | 16 |
| The car front body | 3 | 117 | 120 |
| The car rear body | 5 | 20 | 25 |
| Wheel | 20 | 25 | 45 |
| Parts total | 50 | 231 | 281 |

Table 2 shows the initial numbers of images and parts, together with those of the four generated training sets. Type-1 texture images are composed of parts from all categories, original parts, and the 6 color arrangements. Type-2 texture images are composed of parts from all categories, newly generated parts, and grayscale images; their content varies more than that of the Type-1 data set. Type-3 texture images are composed of parts from all categories, newly generated parts, and the 6 color arrangements. Type-4 texture images are composed of parts from a single category only, newly generated parts, and the 6 color arrangements, which makes the number of parts per category more even.


Table 2: Statistics of the number of parts and training images.

| Class label | Original image | Type-1 | Type-2 | Type-3 | Type-4 | Total |
|---|---|---|---|---|---|---|
| Left car door | 6 | 305 | 450 | 245 | 2240 | 3246 |
| Right car door | 4 | 240 | 460 | 275 | 2095 | 3074 |
| Roof | 12 | 245 | 570 | 355 | 2090 | 3272 |
| The car rear body | 5 | 285 | 450 | 300 | 2125 | 3165 |
| The car front body | 3 | 260 | 605 | 385 | 2155 | 3408 |
| Wheel | 20 | 305 | 565 | 295 | 2080 | 3265 |
| Parts total | 50 | 1905 | 3100 | 1855 | 12785 | 19695 |
| Image total | 5 | 4500 | 10500 | 5250 | 6300 | 26555 |

4 CONCLUSIONS

This study proposes a processing procedure for classifying the materials of part models in simulation systems, reducing the need to add material information to part models manually and thus saving substantial labor and time. Traditional image processing techniques are first used to segment the various parts in the texture images, and a large number of texture images are then generated from them; this addresses the small number of 2D texture images available for part classification. The texture images used in the experiment contain a total of 88 parts. In the automatic segmentation experiment, fine segmentation produced two fewer correctly segmented parts than simple segmentation, but it reduced the number of over-segmented parts from 62 to 19. Segmentation errors arise mainly because some parts have no gaps between them and the color difference between the background and the parts is small. The two segmentation methods work well on different texture images, and their results are combined to obtain the automatically segmented parts. This study then changes the arrangement of the RGB channels and the color and rotation of the parts, makes various changes to the background, and randomly combines parts and backgrounds into a large number of texture images that serve as training images for deep learning models, with the aim of improving the classification accuracy of part categories.

ACKNOWLEDGEMENTS

This work was supported in part by the Ministry of Science and Technology, Taiwan, under Grant No. MOST 109-2221-E-025-010.

REFERENCES

Gonzalez, R. C. and Woods, R. E. (2002). "Digital Image Processing", Prentice-Hall.

Kubicek, J., Timkovic, J., Penhaker, M., Oczka, D., Krestanova, A., and Augustynek, M. (2019). "Retinal blood vessels modeling based on fuzzy Sobel edge detection and morphological segmentation", Biodevices, vol. 1, pp. 121-126.

Canny, J. F. (1986). "A Computational Approach to Edge Detection", IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 8(6), pp. 679-698.

Ding, L. and Goshtasby, A. A. (2001). "On the Canny Edge Detector", Pattern Recognition, vol. 34(3), pp. 721-725.

Xu, H., Xu, X., and Xu, Y. (2019). "Applying morphology to improve Canny operator's image segmentation method", The Journal of Engineering, 2019(23).

Aslam, A., Khan, E., and Beg, M. M. S. (2015). "Improved Edge Detection Algorithm for Brain Tumor Segmentation", Second International Symposium on Computer Vision and the Internet, vol. 58, pp. 430-437.

Wang, H., Huang, T.-Z., Xu, Z., and Wang, Y. (2016). "A two-stage image segmentation via global and local region active contours", Neurocomputing, vol. 205, pp. 130-140.

Lorencin, I., Anđelić, N., Španjol, J., and Car, Z. (2020). "Using multi-layer perceptron with Laplacian edge detector for bladder cancer diagnosis", Artificial Intelligence in Medicine, vol. 102, article 101746.

Pandey, R. K., Karmakar, S., Ramakrishnan, A., and Saha, N. (2019). "Improving facial emotion recognition systems using gradient and laplacian images".

Zhao, M., Liu, H., and Wan, Y. (2015). "An improved Canny Edge Detection Algorithm", IEEE International Conference on Progress in Informatics and Computing, pp. 234-237.

Otsu, N. (1979). "A threshold selection method from gray-level histograms", IEEE Transactions on Systems, Man, and Cybernetics, vol. 9(1), pp. 62-66.
