Concept of a Robotic System for Autonomous Coarse Waste Recycling

Tim Tiedemann¹, Matthis Keppner¹, Tom Runge², Thomas Vögele², Martin Wittmaier³ and Sebastian Wolff³

¹ Department CS, University of Applied Sciences Hamburg, Berliner Tor 7, Hamburg, Germany

² German Research Center for Artificial Intelligence, Robotics Innovation Center, Bremen, Germany

³ Institute for Energy, Recycling and Environmental Protection at Bremen University of Applied Sciences, Bremen, Germany

Keywords:

Smart Recycling, Construction Waste Recycling, Multispectral Image Processing, Mobile Robotics.

Abstract:

The recycling of coarse waste such as construction and demolition waste (CDW), bulky waste, etc., is a

process that is currently performed mechanically and manually. Unlike packaging waste, commercial waste

and the like, which is usually cut or shredded into small pieces and then automatically separated and sorted on

conveyor belt-based systems, coarse waste is separated by specialized personnel using wheel loaders, cranes or

excavators. This paper presents the concept of a robotic system designed to autonomously separate recyclable

coarse materials from bulky waste, demolition and construction waste, etc. The proposed solution explicitly

uses existing heavy equipment (e.g., an excavator currently in use on-site) rather than developing a robot from

scratch. A particular focus is set on the sensory system options used to identify and classify waste objects.

1 INTRODUCTION

The waste management and recycling sector can

make a significant contribution to reducing climate-

damaging emissions. For example, the disposal of

municipal waste in a landfill is associated with emissions of approx. 400 g CO₂ equiv./kg of waste, while

high-quality energy recovery of the same waste is associated with only approx. -22 g CO₂ equiv./kg of

waste (Wittmaier et al., 2009). In Germany, the ban

on dumping untreated waste in landfills and the asso-

ciated requirement for mechanical-biological or ther-

mal waste treatment indicates that the waste- and en-

vironmental services industry already plays a signifi-

cant role in the reduction of climate-damaging emis-

sions. If waste, such as plastics, is recycled materially

rather than energetically, climate-damaging emissions

can be reduced by 1600 g to 2000 g CO₂ equiv./kg

plastic waste (HDPE, LDPE, PET) (Rudolph et al.,

2020). Material recycling is an effective form of cli-

mate protection and also, naturally, of resource con-

servation. For this reason, more and more efforts

have been made in recent decades to improve ma-

terial recycling. An essential prerequisite for mate-

rial recycling is the sorting of materials (paper, card-

board, plastics etc.). In order to obtain sorted ma-

terials from mixed waste, conveyor belt-based sort-

ing plants for small-scale waste have been developed

since the 1990s. Whereas in the beginning, sorting

was exclusively manual and mechanical, AI-assisted

robotic systems are now slowly starting to be used

for small-scale waste. The technology is continuously

improved (Zhang et al., 2019), which makes the sort-

ing process more efficient. However, although effi-

cient sorting techniques are available today specifi-

cally for small-sized waste, coarse waste is still sorted

using the same technology as in the 1970s and 1980s.

As a result, large quantities of materials that are recyclable in principle

but hidden in coarse waste are lost (coarse waste

in Germany: approx. 2.25 million Mg of bulky

waste, 197 million Mg of construction and demoli-

tion waste etc.) (Federal Statistical Office of Germany

(Destatis), 2020). For climate protection and resource

conservation purposes, more effective processes for

sorting coarse waste by type must be developed to re-

cover recyclable materials from mixed waste.

In this paper, results from investigations into the development of AI-based robotic sorting systems for

coarse waste (bulky waste, construction and demolition waste etc.) are presented. The results show strategies

that enable a more efficient sorting of coarse waste.

The partial material recycling of mixed waste offers

an active contribution to climate protection and re-

source conservation.

Tiedemann, T., Keppner, M., Runge, T., Vögele, T., Wittmaier, M. and Wolff, S.

Concept of a Robotic System for Autonomous Coarse Waste Recycling.

DOI: 10.5220/0010584004930500

In Proceedings of the 18th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2021), pages 493-500

ISBN: 978-989-758-522-7

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


2 RELATED WORK

2.1 Bulky Waste Sorting Pilot Study

In a pilot study conducted in 2011 (the R&D project

“Efficient Sorting of Bulk Wastes with Robots”

(ROSA, 2011)), a system concept for the automatic

sorting of bulky wastes was developed. The project

evaluated the feasibility of technical solutions for

the automated removal of non-recyclable items from

large piles of bulky wastes entering the recycling

plant. It also looked at options for the automatic ex-

traction of larger items of recyclable material during

the final sorting on the conveyor belt. The focus of the

project was on the evaluation of sensors and methods

for 2D and 3D object recognition. The lack of consis-

tent form features of the often deformed and damaged

objects as well as the highly heterogeneous composi-

tion of the waste conglomerate were identified as the

key challenges for both object recognition and object

manipulation.

In support of a theoretical study, lab experiments

were conducted to recognize and subsequently ma-

nipulate objects within the waste conglomerate us-

ing data fusion of different sensors (e.g., cameras, 3D

laser scanners, NIR sensors) and standard robotic ma-

nipulators (e.g., KUKA). The lab demonstrator was

able to prove the basic feasibility of the ROSA con-

cept. However, it was not possible to adapt and test

the concept under actual working conditions due to

the lack of financial resources and available state-of-

the-art equipment at the time.

Nevertheless, the outcome of the ROSA project

provides a valuable starting point for more recent ef-

forts to implement a SmartRecycling concept. As

described in this paper, new developments in AI-

based object recognition, sensor fusion and mobile

robotics are the key to solving some of the fundamental problems with the automated extraction of large

waste objects from a heterogeneous waste conglomerate that ROSA had identified at the time.

2.2 Automation of Large Hydraulic

Machines

Standard industrial robots as well as most profes-

sional service robots developed for indoor and field

applications use electric actuators to grip, hold, and

move objects. In these electric-powered systems,

several integrated sensors continuously monitor the

system-state and thus deliver the information needed

to automate the control of manipulators, grippers and

other sub-systems.

On the other hand, hydraulic-powered machines,

such as cranes and excavators, are more challenging to automate, since they are typically not equipped

with the necessary sensors, and hydraulic actuators often lack the precision of their electric counterparts.

Despite these shortcomings, large hydraulic-

powered machines are prevalent in many industries

such as construction, mining, waste sorting, agricul-

ture and forestry, etc. Due to their high performance,

robustness, and reliability, they are used in harsh en-

vironments and rugged terrain. Also, the deployment

of automated hydraulic heavy machinery in construc-

tion, mining, and agriculture is increasing. There

already exist several automated hydraulic machines,

either as commercial products or as research proto-

types.

In the ROBDEKON project (Kühn et al., 2020), DFKI

is part of a consortium that develops solutions for

the automation of large hydraulic machines. By

retrofitting an M545 excavator, built by the Swiss company Menzi Muck, with sensors and modified actuators,

DFKI developed the hydraulic robot ARTER (Automated Rough Terrain Excavator Robot).¹

With ARTER, ROBDEKON could prove that a

large hydraulic excavator can be automated to suc-

cessfully handle complex tasks, like manipulating

barrels filled with hazardous waste, if equipped with

sensors that can measure the state and pose of joints,

limbs and the like. However, the project also showed

that this retrofitting does come at a high cost, limiting

the applicability of the concept for many legacy sys-

tems. Also, since the robot has to operate in a very un-

structured and highly dynamic environment, conven-

tional methods for robot control that cannot react to

changes in the environment are only of limited value.

2.3 Sensor Data Processing in

Construction Waste Analysis

Several projects have studied the classification of waste objects based on RGB images. Kim et al.

(2019) use a modified LeNet-5 convolutional neural network (CNN) to classify objects as carton vs. plastic

from RGB images, and they also present further applications of neural networks to classify waste objects

based on visual image data. Such an RGB-image-based classification seems to be feasible once objects can be

identified within images. In an extreme case, this could

be accomplished by scanning a barcode of an ob-

ject (e.g., when supporting people sorting their waste

¹ https://robotik.dfki-bremen.de/en/research/robot-systems/arter/


(Bonino et al., 2016)). In the use case by Kim et al.

(2019) the objects are separated from others before

the classification task and solely consumer packaging

objects are used (and only two classes need to be dis-

tinguished).

Regarding the recycling of construction and de-

molition waste (CDW) two problems arise that make

a classification solely based on RGB images much

harder: 1. The objects in CDW are usually broken

into several parts and 2. they were often constructed

in a unique shape depending on the specific situa-

tion and the specific construction. Furthermore, in

this use case, objects will cover each other within the

heap. All these properties make it hard to classify ob-

jects just by RGB image data. A solution could be

the use of additional spectra for image classification.

Thereby, not just an object classification but a mate-

rial classification could be feasible.

Linß (2016) presents a thorough review of several methods used to distinguish between different

classes of construction waste. The solutions discussed use sensors of different modalities,

e.g., visual RGB cameras, near-infrared (NIR) and short-wave infrared (SWIR) imaging for hyperspectral

imaging (HSI), as well as X-ray methods. She concludes that the combination of VIS and NIR is of

particular interest and will be studied further. Other publications also support these sensor

modalities (Anding et al., 2013; Kuritcyn et al., 2015), and VIS/NIR/SWIR-based classification is

considered state of the art in consumer waste recycling.

3 THE SmartRecycling CONCEPT

This paper summarizes the results of a study con-

ducted by the authors in 2020 and 2021 (project

“SmartRecycling”), with funding from the German

Federal Ministry for the Environment, Nature Conser-

vation and Nuclear Safety. The objective of SmartRe-

cycling was to develop a general technical concept

for the sorting of coarse and bulky wastes. The im-

plementation and validation of this concept were not

within the scope of this study but may be realized in

a later project phase.

As the first step in SmartRecycling, the on-site

conditions and processes in several recycling plants in

Northern Germany were studied, and functional sys-

tem requirements were developed. A thorough anal-

ysis revealed that the process of pre-sorting holds the

best potential for automation. Pre-sorting describes

the process of removing recyclable items of higher

quality and value (e.g., wood, plastics, metals) as well

as objects that either contain hazardous materials or

are a potential obstruction to the machinery (e.g., mat-

tresses, ropes, nets) from the wastes before shredding

and further processing. The items are extracted from

the waste conglomerate manually, i.e., by skilled op-

erators with the help of large hydraulic or electro-

hydraulic cranes and excavators.

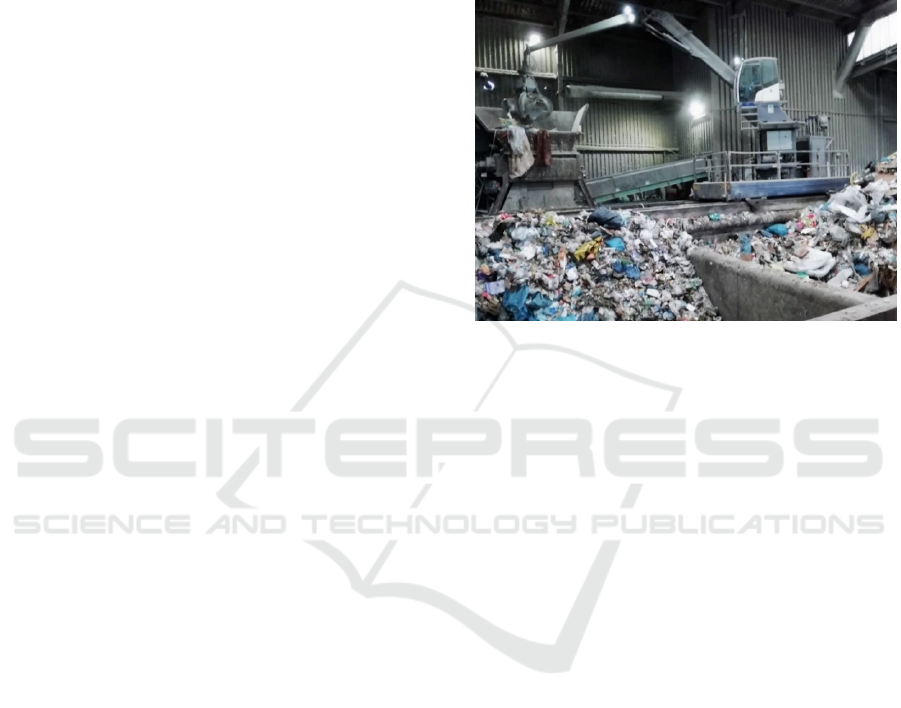

Figure 1: Rail-bound crane from BZ (at ASO, Osterholz) (Wolff and Wittmaier, 2021, under CC-BY 4.0).

Figure 1 shows a rail-mounted crane from the

manufacturer Baljer & Zembrod GmbH & Co. KG

that is used in a recycling facility operated by ASO

Abfall-Service Osterholz GmbH. The crane is oper-

ated manually by an experienced operator. Both the

manipulator and the gripper of this machine are ac-

tuated hydraulically. The machine itself moves elec-

trically on a rail parallel to the length of the rectan-

gular pit that holds the waste. Other machines typi-

cally used in recycling facilities for the pre-sorting of

coarse waste are regular diesel-powered mobile hy-

draulic excavators.

3.1 AI-driven Automation and

Actuation

Although the machines used for the pre-sorting of

coarse waste can be of different types, they have in

common that they are usually not equipped for au-

tomation and thus require significant investments in

sensors and electronics to make them fit for automated

control. The SmartRecycling study postulated the use

of state-of-the-art AI and machine learning to develop

a solution for the automation of standard off-the-shelf

hydraulic and electro-hydraulic machines without the

need for significant modifications. Desirable side ef-

fects are a reduction in investment and the automated

use of legacy machinery in recycling facilities (and

other application areas). It needs to be kept in mind


that the standard electrical off-the-shelf industry ma-

nipulators are usually not suitable for CDW recy-

cling tasks with respect to workspace and payload de-

mands.

The basic idea of this AI-driven automation is to

use AI methods such as Reinforcement Learning (RL)

to teach an artificial neural network (ANN) how to

control a machine by letting it associate observable

control inputs, issued through the machine’s standard

control interface, with the corresponding machine be-

havior. Motion sensors installed on the ceiling and

the walls of the recycling facility track the machine’s

behavior and record, for example, the movements of

the manipulator and gripper. By using markers at-

tached to the gripper, manipulator joints and other

critical parts of the machine, each trajectory through

3D space is tracked. Based on that, a 3D motion

model of the entire machine is developed. The ANN

is then trained with the motion model as output and

the corresponding control commands issued by the

human operator as input. Using this approach, the

ANN can predict the relationship between a control

command and the crane’s reaction and use this to

move the crane precisely to a target position.
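The idea of learning the command-to-motion relationship from logged data and then inverting it can be illustrated with a very small sketch. It is not the SmartRecycling implementation: a linear least-squares model stands in for the ANN, the "tracked" motions are synthetic, and all names (`fit_forward_model`, `command_for_motion`, `true_W`) are hypothetical.

```python
import numpy as np

def fit_forward_model(commands, motions):
    """Least-squares fit of motions ≈ commands @ W (linear stand-in for the ANN)."""
    W, *_ = np.linalg.lstsq(commands, motions, rcond=None)
    return W

def command_for_motion(W, desired_motion):
    """Invert the learned model: find the command u with u @ W ≈ desired_motion."""
    u, *_ = np.linalg.lstsq(W.T, desired_motion, rcond=None)
    return u

rng = np.random.default_rng(0)

# Hypothetical machine response: how each control axis moves the gripper (assumption).
true_W = np.array([[0.5, 0.0, 0.1],
                   [0.0, 0.3, 0.0],
                   [0.2, 0.0, 0.4]])

# Synthetic log: operator commands and the marker displacements tracked for them.
commands = rng.uniform(-1.0, 1.0, size=(200, 3))
motions = commands @ true_W + rng.normal(0.0, 0.01, size=(200, 3))

W = fit_forward_model(commands, motions)

# Ask the learned model which command would produce a desired gripper step.
target_step = np.array([0.10, -0.05, 0.20])
u = command_for_motion(W, target_step)
predicted_step = u @ W
```

A real hydraulic machine responds nonlinearly and with delays, which is why the concept proposes an ANN trained on tracked trajectories rather than a fixed linear map.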

Training of the ANN can happen during spe-

cific training sessions or during the standard human-

controlled operation of the machine. In principle,

such an AI-driven control should be largely inde-

pendent of the machine it is applied to (see also

www.smartrecycling-projekt.de).

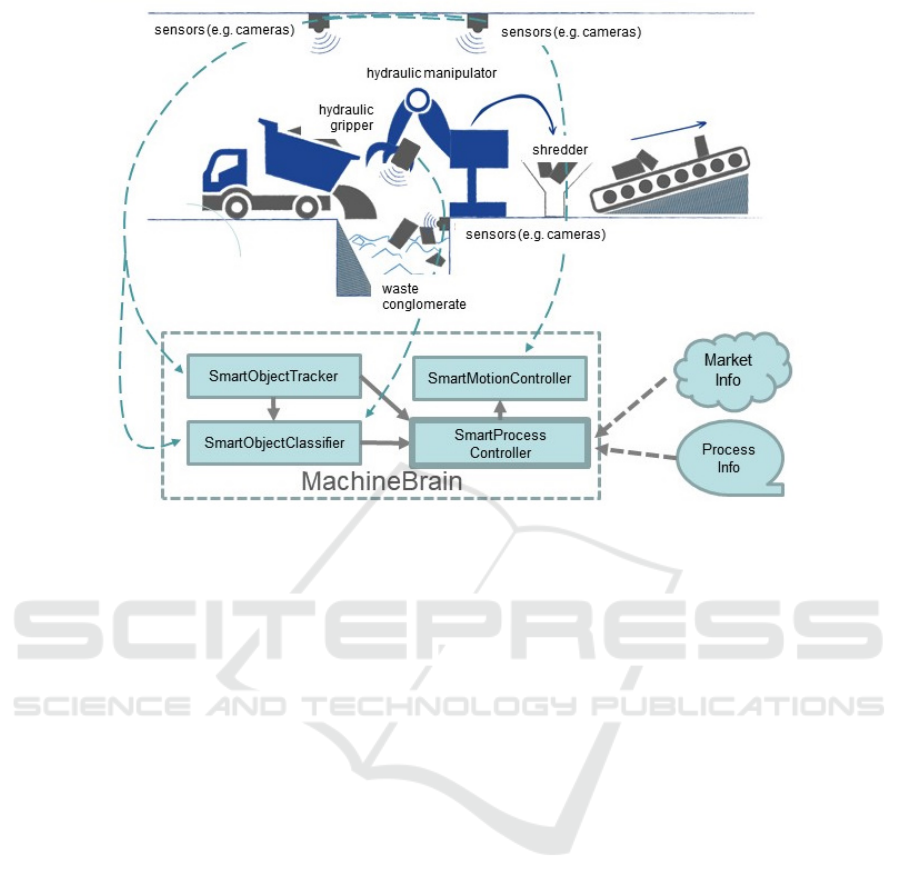

3.2 System Components

Several AI-based software modules are required to

implement the AI-based control and automation out-

lined above. The SmartRecycling approach proposes

four software modules bundled in one unit, dubbed

the ’MachineBrain’. The MachineBrain supports the

control of the hydraulic manipulator and the hydraulic

gripper as well as the detection and classification of

objects and materials in the waste conglomerate. The

four modules of the MachineBrain are:

• SmartObjectClassifier: The SmartObjectClassi-

fier enables the recognition of individual objects

in the waste conglomerate with the help of AI-

based object and material classification based on

data from infrastructure sensors installed in the fa-

cility. In a first step, an object classification and

a rough material classification is carried out (see

Section 3.3 and Section 3.4). In a second step,

the SmartObjectClassifier includes data from the

sensors installed in the gripper and the waste pit’s

walls to further improve the object and/or material

classification.

• SmartObjectTracker: This module determines the

x-y-z position of the objects recognized by the

SmartObjectClassifier. Details are given in Sec-

tion 3.5.

• SmartMotionController: As described in Sec-

tion 3.1, the SmartMotionController enables the

crane to move independently and approach a

specific target position from the SmartObject-

Tracker. To achieve this, the AI-driven control

software must have learned the relationship be-

tween control commands and manipulator and

gripper movements. The crane’s motion is calculated using inverse kinematics, considering the precise

position and orientation of each manipulator joint, hydraulic cylinder, and similar components.

• SmartProcessController: The SmartProcessController combines the data on the position and the

material class of an object (from the SmartObjectTracker and the SmartObjectClassifier) with the data

on the crane’s current position and orientation (from the SmartMotionController) and plans the crane’s

next work steps. External data on the current market situation (Which recyclable materials currently

have the highest economic value?) and the overall recycling process (When does the next transport arrive?

Which materials are potentially harmful to the environment?) can further optimize the process control

regarding ecological and economic aspects.
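How the SmartProcessController might combine object class, position, and external market data can be sketched as a simple scoring rule. This is only an illustration: the scoring weights, the `must_remove` flag, the market values, and all names are assumptions and not part of the concept as described.

```python
from dataclasses import dataclass

@dataclass
class DetectedObject:
    name: str
    material: str            # from the classifier module
    position: tuple          # (x, y, z) in metres, from the tracker module
    must_remove: bool = False  # e.g. hazardous or obstructing items (assumption)

def select_next_object(objects, market_value, crane_position):
    """Pick the object with the best score; returns None for an empty list."""
    def score(obj):
        distance = sum((a - b) ** 2 for a, b in zip(obj.position, crane_position)) ** 0.5
        value = market_value.get(obj.material, 0.0)
        priority = 100.0 if obj.must_remove else 0.0
        # Hypothetical weighting: value and priority count, travel distance costs.
        return priority + value - 0.5 * distance
    return max(objects, key=score) if objects else None

# Hypothetical market values per material class (arbitrary units).
market_value = {"wood": 20.0, "metal": 150.0, "plastic": 60.0}

heap = [
    DetectedObject("wooden beam", "wood", (2.0, 1.0, 0.0)),
    DetectedObject("metal pipe", "metal", (8.0, 3.0, 0.5)),
    DetectedObject("mattress", "textile", (5.0, 5.0, 1.0), must_remove=True),
]

chosen = select_next_object(heap, market_value, crane_position=(0.0, 0.0, 3.0))
```

With these made-up numbers the metal pipe is selected; changing the market values or the priority weight changes the order, which is the kind of external influence the module description mentions.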

3.3 Sensory System I: Object

Classification

The sensory system’s first step is the detection and classification of known objects in the waste heap.

If it recognizes a known object or part of an object, it reads an exact definition of the object’s

material(s) from a database. This is comparable to the approach by Bonino et al. (2016) for consumer

waste. While Kim et al. (2019) did not explicitly follow this approach, their modified LeNet at least

has the chance to identify objects (instead of materials). Visual object classification has been well

studied for decades. Solutions using different types of machine learning (ML), such as support vector

machines (SVM) and deep learning methods such as the well-known convolutional neural networks (CNN)

(“one-stage” and “two-stage”: e.g., Inception and YOLO variants), have been presented (for a review,

see Jiao et al., 2019). These candidate methods will be evaluated and, if necessary, further developed.

The sensors usually used for this first step are vision (RGB) cameras.


Figure 2: SmartRecycling concept.

3.4 Sensory System II: Material

Classification

For unknown and unrecognized objects in the waste heap, a second step is planned in the sensory system:

Again, machine learning (ML) methods, particularly SVM or CNN, are used to identify the class of

material. If this step succeeds, the first step can be triggered again in a second run that takes the

material classification result into account. For the application of SVM in CDW recycling to classify

materials, see Linß (2016). As presented there, the non-visual optical spectra should be taken

advantage of, too.
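The control flow of the two sensory steps (object classification, then material classification, then a second object classification run) can be written down as a short sketch. The classifiers here are toy stand-ins passed in as plain functions, and all names, including the `material_hint` parameter and the database layout, are hypothetical.

```python
def classify_heap_item(item, object_classifier, material_classifier, database):
    """Return (object_label, material) following the two-step scheme."""
    # Step 1: try to recognize a known object and look up its material.
    label = object_classifier(item, material_hint=None)
    if label in database:
        return label, database[label]

    # Step 2: fall back to material classification.
    material = material_classifier(item)
    if material is None:
        return None, None

    # Run two of step 1, now informed by the material result.
    label = object_classifier(item, material_hint=material)
    return label, database.get(label, material)

# Toy stand-ins for the real classifiers (assumptions for illustration only).
def toy_object_classifier(item, material_hint=None):
    if item.get("shape") == "pallet":
        return "euro_pallet"
    if material_hint == "wood" and item.get("shape") == "plank":
        return "wooden_plank"
    return None

def toy_material_classifier(item):
    return item.get("spectral_material")

database = {"euro_pallet": "wood"}

known = classify_heap_item({"shape": "pallet"},
                           toy_object_classifier, toy_material_classifier, database)
second_run = classify_heap_item({"shape": "plank", "spectral_material": "wood"},
                                toy_object_classifier, toy_material_classifier, database)
unknown = classify_heap_item({"shape": "blob"},
                             toy_object_classifier, toy_material_classifier, database)
```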

So far, these sensors have been used at short distances and with separated objects. Within this

project’s ongoing work, further research must show whether a material classification is possible at

a distance of 15 m to 25 m from sensors at the hall’s roof. If only a coarse classification is

possible at such distances, additional sensor equipment could be attached to the excavator for a

more detailed material classification when grasping.

3.5 Sensory System III: Determination

of 3D Position

After the classification of objects and/or materials, the

next object targeted to be grasped and sorted can be

selected. This could be done using predefined rules,

e.g., based on the type of object or its position in the

waste heap. To compute the manipulator’s path and

to perform the grasp, the 3D position of the selected

object needs to be known. For this purpose, (1) multiple cameras and triangulation, or (2) other

sensors, e.g., LiDAR or a time-of-flight (TOF) camera, could be used. As the environment and most

sensors are fixed to the infrastructure, a calibration could be carried out that maps an object

position given as an angle in one type of sensor to a 3D position in the position sensors. Here,

the solution proposed by Kim et al. (2019) seems to be a very interesting approach. However, the

large distance between sensors and objects is expected to be a problem in this use case.
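Option (1), triangulation from multiple fixed cameras, can be illustrated with the midpoint method for two viewing rays. The sketch assumes that calibration already provides each camera position and the viewing direction toward the object in a common hall coordinate frame; the camera positions and the object position below are made-up values.

```python
import numpy as np

def triangulate_midpoint(c1, d1, c2, d2):
    """Point closest to two viewing rays c + t * d (midpoint method)."""
    c1, d1, c2, d2 = (np.asarray(v, dtype=float) for v in (c1, d1, c2, d2))
    d1 = d1 / np.linalg.norm(d1)
    d2 = d2 / np.linalg.norm(d2)
    # Solve t1 * d1 - t2 * d2 = c2 - c1 in the least-squares sense.
    A = np.stack([d1, -d2], axis=1)
    t, *_ = np.linalg.lstsq(A, c2 - c1, rcond=None)
    p1 = c1 + t[0] * d1
    p2 = c2 + t[1] * d2
    return 0.5 * (p1 + p2)

# Two hypothetical roof-mounted cameras, 20 m above the pit floor.
cam_a = np.array([0.0, 0.0, 20.0])
cam_b = np.array([10.0, 0.0, 20.0])

# Hypothetical object position, used here only to generate consistent rays.
obj = np.array([4.0, 3.0, 0.5])
ray_a = obj - cam_a
ray_b = obj - cam_b

estimate = triangulate_midpoint(cam_a, ray_a, cam_b, ray_b)
```

With noisy directions the two rays no longer intersect, and the midpoint of their closest points serves as the estimate; at large distances small angular errors translate into larger position errors.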

4 FIRST SENSOR DATA

COLLECTION

In the project’s concept phase, first tests were carried out to reduce the number of potentially

usable sensor modalities to a manageable set. Radar sensors were discussed but not selected due to

their coarse spatial resolution, as were thermal imaging sensors due to the long time constants when

the objects’ temperatures change. Both sensor types could still be added to counter (temporarily)

poor viewing conditions for the other sensors (due to smoke etc.).


Figure 3: Left column: Sample RGB images in the visual spectrum. Right column: gray images of a spectrum of around 1000 nm. The resolution of the visual spectrum camera is 1920×1200 (FOV 30.4° × 19.0°) and of the SWIR camera 320×256 (FOV 22.9° × 18.3°). The images were taken at a distance of about 15 m (to the plants in the image centers). The second and third row show magnifications of the center of the original images (Kaßmann et al., 2021a, under CC-BY 4.0).

4.1 UV/VIS/NIR/SWIR Sensor Data

Multi-spectral image data in the UV, visual, and SWIR spectrum seem most promising for

distinguishing different materials. First sample images were taken in a laboratory environment as

depicted in Figure 3. As can be seen in the second-row images, the 1,000 nm spectrum (as an example)

shows differences that cannot be seen in the RGB image: comparing the two green “plants” in the

1,000 nm spectrum shows that one is a natural plant while the other is made from plastic. Also, the

blue and the black parts of the chairs, as well as the display frames and the displays, can be

distinguished more easily in infrared.

The third-row image shows the problem of the coarse resolution of the SWIR camera’s InGaAs sensor:

at a distance of 15 m, the field of view (FOV) covers a large part of a waste heap, but small

objects (like the leaves of the plants) shrink to just a few pixels. Thus, material classification

at a distance of, e.g., 15 m might work for objects of about 10 cm×10 cm but not for small

objects of the size of one leaf (≈ 3 cm×5 cm).
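The resolution argument can be checked with a short calculation using the camera data given in the caption of Figure 3. The sketch assumes a flat scene perpendicular to the optical axis and ignores lens distortion; the function name is hypothetical.

```python
import math

def pixel_footprint_m(distance_m, fov_deg, pixels):
    """Approximate scene width covered by one pixel at the given distance."""
    scene_width = 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)
    return scene_width / pixels

# Horizontal values from the Figure 3 caption, at 15 m distance.
swir_footprint = pixel_footprint_m(15.0, 22.9, 320)    # SWIR camera
rgb_footprint = pixel_footprint_m(15.0, 30.4, 1920)    # visual spectrum camera

# How many SWIR pixels span a 3 cm leaf edge and a 10 cm object edge?
leaf_pixels = 0.03 / swir_footprint
object_pixels = 0.10 / swir_footprint
```

Under these assumptions one SWIR pixel covers roughly 1.9 cm, so a 3 cm leaf edge spans fewer than two pixels while a 10 cm object edge spans about five. The visual spectrum camera resolves about 4 mm per pixel at the same distance.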

Fifty-eight objects of different materials (wood,

metal, plastic, stone, paper) were collected at CDW

recycling sites and households to generate test image

data. For each subset of these objects, images were

taken with a visual image camera, a UV camera, and


Figure 4: Left: RGB image of six sample pieces in the visual spectrum. Right: false-color image with the spectra around

880 nm, 1300 nm, and 395 nm (Kaßmann et al., 2021b, under CC-BY 4.0).

a SWIR camera. Multiple bandpass filters of differ-

ent wavelengths were used (plus one image without

filters). Altogether, 14 images of different conditions

(wavelengths) were taken for each set of test objects.

Sample images of one set of objects are shown in Figure 4. Compared with the image in the visual

spectrum, the false-color image shows a more pronounced difference between the two objects on the

left and fewer differences within the objects on the right. Both effects help to distinguish

objects of different materials without dividing a single object into several image segments.

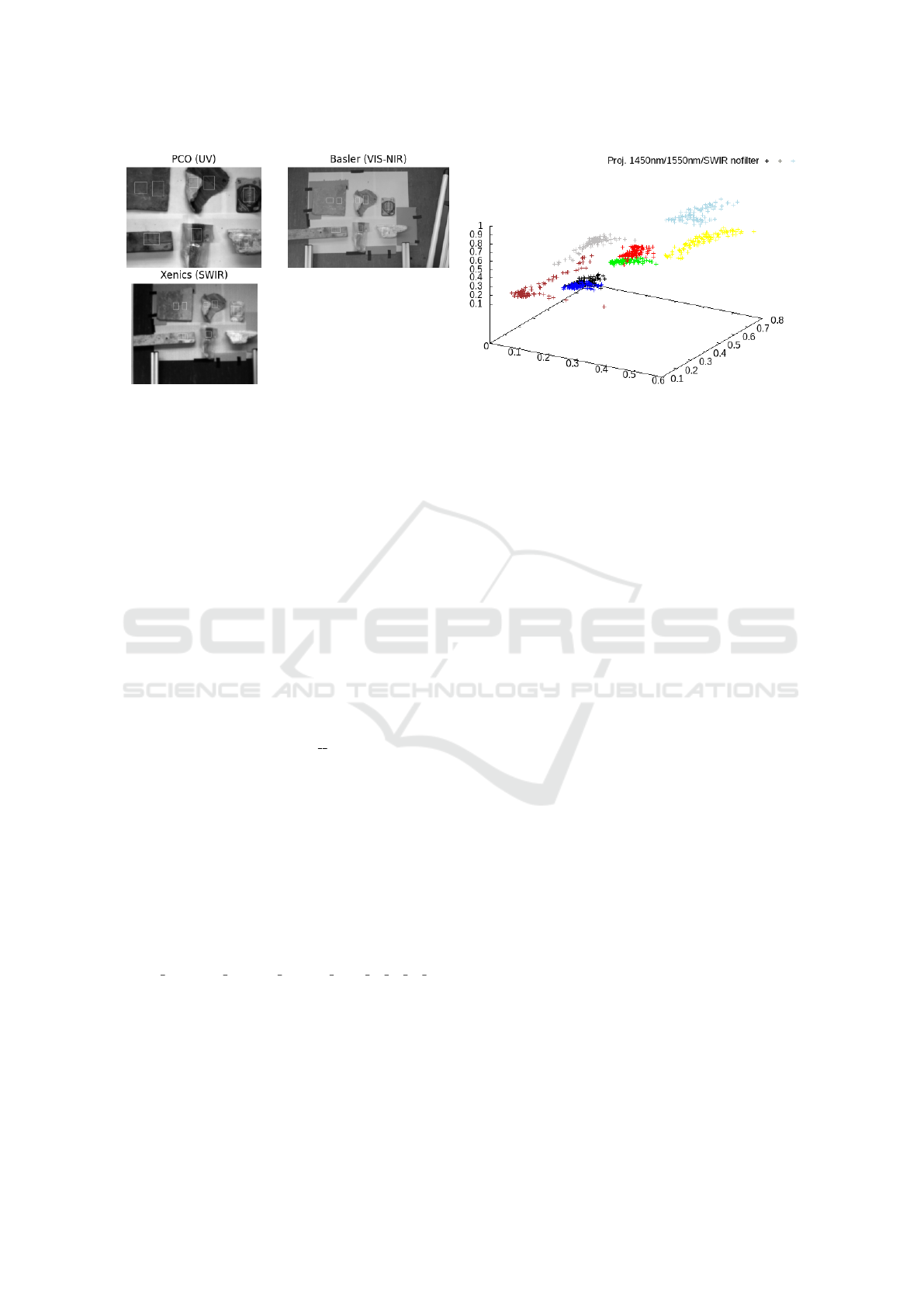

After the first manual analysis of the sample data,

we implemented small scripts to prepare data sets that

can be analyzed automatically. Figure 5 (left) shows a

tool to select rectangular areas within the test objects.

Using the script, we obtain for each test object a number of pixels in each of the three camera

images for each of the different filter conditions. Eventually, each object (rectangle) is

represented by sample points in a

14-dimensional space. Figure 5 (right) shows a pro-

jection of all selected points (within the rectangles)

from the 14-dimensional space to a three-dimensional

space.
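The text does not state which projection was used for Figure 5. As one common possibility, the following sketch projects 14-dimensional pixel samples onto their first three principal components (PCA via singular value decomposition). The data are synthetic stand-ins for the pixel values selected with the rectangles, and the function name is hypothetical.

```python
import numpy as np

def pca_project(samples, n_components=3):
    """Project samples (rows) onto their first principal components."""
    centered = samples - samples.mean(axis=0)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T

rng = np.random.default_rng(1)

# Synthetic data: 3 "rectangles" with 50 pixels each, 14 filter conditions per pixel.
object_means = rng.uniform(0.0, 255.0, size=(3, 14))
samples = np.vstack([m + rng.normal(0.0, 5.0, size=(50, 14)) for m in object_means])
labels = np.repeat(np.arange(3), 50)

projected = pca_project(samples)  # shape (150, 3), one row per pixel sample
```

Plotting `projected` colored by `labels` would give a scatter plot of the same kind as the right part of Figure 5.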

Each color in the plot represents one rectangle in

the camera images. As can be seen, even in such a

simple 3D projection, the pixels of the different colors

(i.e., different rectangles and different objects) are rel-

atively well separated. As the next step, cluster analy-

sis will be done to check if – for the collected data set

– a classification seems to be feasible and what kind

of pre-processing should be applied. Afterwards, dif-

ferent classification methods will be tested.
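A first, very simple separability check for such a data set could be a nearest-centroid assignment: each sample is assigned to the closest class mean, and the share of correct assignments is reported. This is only a sketch of one possible check, not the analysis planned by the authors; the data are synthetic and the function name is hypothetical.

```python
import numpy as np

def nearest_centroid_accuracy(samples, labels):
    """Share of samples whose nearest class centroid matches their own label."""
    classes = np.unique(labels)
    centroids = np.stack([samples[labels == c].mean(axis=0) for c in classes])
    # Distance of every sample to every centroid, shape (n_samples, n_classes).
    dists = np.linalg.norm(samples[:, None, :] - centroids[None, :, :], axis=2)
    predicted = classes[np.argmin(dists, axis=1)]
    return float(np.mean(predicted == labels))

rng = np.random.default_rng(2)

# Two synthetic, well-separated material classes in a 14-dimensional feature space.
class_a = rng.normal(0.0, 1.0, size=(40, 14))
class_b = rng.normal(10.0, 1.0, size=(40, 14))
samples = np.vstack([class_a, class_b])
labels = np.array([0] * 40 + [1] * 40)

accuracy = nearest_centroid_accuracy(samples, labels)
```

Because the centroids are computed from the same samples that are scored, this value is optimistic; a held-out split would be needed for a fair estimate.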

5 CONCLUSIONS

A concept of an autonomous robotic system for sorting construction and demolition waste (CDW), or

bulky waste in general, was proposed. It emphasizes

reusing standard presorting equipment typically used

in the bulky waste recycling industry, i.e., hydraulic

cranes or excavators. The application of a sophisti-

cated and adaptive control system that uses reinforce-

ment learning (RL) methods has been identified as a

useful and promising approach. A further advantage

of such an ML-based control system is the potential

portability to other cranes and applications.

Classifying materials at distances of 15 m to even 25 m is one of the main challenges for such a

sensory system. This concept uses a combination of different imaging sensors covering spectra in

visible and invisible wavelength ranges, with fixed sensors in the infrastructure and mobile sensors

at the crane. Now that the first manual tests have been carried out, automated analyses need to be

run on all collected data. Thereby,

the separability of different clusters and the accuracy

of the cluster–object assignments can be studied and

quantified.

ACKNOWLEDGEMENTS

The authors would like to thank Maximilian De

Muirier for his thorough review of this paper and

helpful advice.

Furthermore, the authors would like to thank Adrian

Kaßmann for his support in the data collection and

data pre-processing steps.

This work was partly funded by the Federal Re-

public of Germany, Ministry for the Environment,

Nature Conservation and Nuclear Safety, grant no.

67KI1013A/B/C.


Figure 5: Left: A tool to select sample data of the test objects from all images of a scene. With the rectangles, specific parts

(pixels) of the objects can be selected (Keppner and Kaßmann, 2021, under CC-BY 4.0). Right: Three-dimensional projection

of the 14-dimensional multi-spectral image data of the objects selected by the rectangles (compare the images on the left).

Each color represents pixel data of one rectangle in the three images i.e. of the same object (pixels in rectangle “1” in the UV

and in the VIS and in the SWIR image, pixels in rectangle “2” in the UV/VIS/SWIR images etc.).

REFERENCES

Anding, K., Garten, D., and Linß, E. (2013). Application of intelligent image processing in the construction material industry. ACTA IMEKO, 2(1):61–73.

Bonino, D., Alizo, M. T. D., Pastrone, C., and Spirito, M. (2016). Wasteapp: Smarter waste recycling for smart citizens. In 2016 International Multidisciplinary Conference on Computer and Energy Science (SpliTech), pages 1–6. IEEE.

Federal Statistical Office of Germany (Destatis) (2020). Abfallbilanz 2018. https://www.destatis.de/DE/Themen/Gesellschaft-Umwelt/Umwelt/Abfallwirtschaft/Publikationen/Downloads-Abfallwirtschaft/abfallbilanz-pdf-5321001.pdf?__blob=publicationFile. [Online; accessed 30-March-2021].

Jiao, L., Zhang, F., Liu, F., Yang, S., Li, L., Feng, Z., and Qu, R. (2019). A survey of deep learning-based object detection. IEEE Access, 7:128837–128868.

Kaßmann, A. F., Keppner, M., and Tiedemann, T. (2021a). Comparison of a vis and a 1,000 nm ir image with different magnifications. figshare. figure. doi: https://doi.org/10.6084/m9.figshare.14607777.v1.

Kaßmann, A. F., Keppner, M., and Tiedemann, T. (2021b). Comparison of vis and uv/ir false color image of different materials. figshare. figure. doi: https://doi.org/10.6084/m9.figshare.14607612.v2.

Keppner, M. and Kaßmann, A. F. (2021). rectangular pixelvalue selection example scene 21 01 20 0. figshare. figure. doi: https://doi.org/10.6084/m9.figshare.14604291.v2.

Kim, J., Nocentini, O., Scafuro, M., Limosani, R., Manzi, A., Dario, P., and Cavallo, F. (2019). An innovative automated robotic system based on deep learning approach for recycling objects. In ICINCO (2), pages 613–622.

Kühn, Heide, and Woock (2020). Robotersysteme für die dekontamination in menschenfeindlichen umgebungen. Proceeding at Leipziger Deponiefachtagung 2020.

Kuritcyn, P., Anding, K., Linß, E., and Latyev, S. (2015). Increasing the safety in recycling of construction and demolition waste by using supervised machine learning. In Journal of Physics: Conference Series, volume 588, page 012035. IOP Publishing.

Linß, E. (2016). Sensorgestützte sortierung von mineralischen bau-und abbruchabfällen. Fachtagung Recycling R, 16.

ROSA (2011). Rosa project website. https://robotik.dfki-bremen.de/en/research/projects/rosa.html. Last seen 17.05.2021.

Rudolph, N., Kiesel, R., and Aumnate, C. (2020). Understanding plastics recycling: Economic, ecological, and technical aspects of plastic waste handling. Carl Hanser Verlag GmbH Co KG.

Wittmaier, M., Langer, S., and Sawilla, B. (2009). Possibilities and limitations of life cycle assessment (lca) in the development of waste utilization systems–applied examples for a region in northern germany. Waste Management, 29(5):1732–1738.

Wolff, S. and Wittmaier, M. (2021). Treatment process of bulky waste. figshare. figure. doi: https://doi.org/10.6084/m9.figshare.14604375.v1.

Zhang, Z., Wang, H., Song, H., Zhang, S., and Zhang, J. (2019). Industrial robot sorting system for municipal solid waste. In International Conference on Intelligent Robotics and Applications, pages 342–353. Springer.
