A Deep Learning based Approach for Biometric Recognition using Hybrid Features

Mrityunjay Kumar¹, Arvind Kumar Tiwari²

¹,² Department of CSE, Sultanpur (India)

Keywords: Biometrics, Biometric Recognition, Identification Techniques, Multimodal Identification, Deep Learning, CNN.

Abstract: Biometric authentication and identification is one of the most important and challenging problems in the current era of computer technology. The goal of new technical developments is to make tasks easier and life smoother. It is therefore important to develop computational methods that recognize and identify biometrics efficiently and with minimal delay. This paper proposes a CNN based multimodal biometric identification system using feature fusion of three biometric traits: faces, fingerprints, and iris. PCA and the wavelet transform (WT) are used for feature extraction and feature fusion respectively. The accuracy of the proposed approach is about 96.67% on the fused features of the three traits. The proposed approach provides better accuracy than the existing method in the literature.

1 INTRODUCTION

Biometrics is the method of identifying an individual using physical or behavioral features such as fingerprints, face, gait, and voice. Biometric systems rest on the permanence and uniqueness of the physiological and behavioral traits of individuals. Technological development in the modern era is very fast, and it demands faster, more secure, and more reliable identification systems for a variety of settings such as airports, international border crossings, law enforcement agencies, commercial places like banks and other business applications, and the delivery of benefits from government social service schemes. Biometrics can handle large identity management systems, and for this reason biometric identification systems currently have no real competitor. Using biometrics for identification is not a completely novel idea: there is evidence that it was in use in some form thousands of years ago, although not in the same or nearly the same form as in the modern era. About 50 years ago, IBM first proposed that a remote computer system could be used to identify a human (Jain, 2007).

Evidence of biometrics goes back far more than centuries. In some ancient caves, whose paintings were created about 31,000 years ago, marks described as traces of claws were found beside the paintings, much as modern painters sign their works to prove authorship. There is also evidence of fingerprints being used to identify individuals around 500 B.C.: business transactions in the Babylonian civilization used clay tablets to record fingerprints (Maio, 2004).

Biometric recognition is the most popular tool for human identification and verification in the modern era for many reasons, the most obvious being performance, reliability, real-time computability, and security. Biometric traits are generally thought to be unique and permanent. In reality, although they are sufficiently unique, biometric systems are not sufficiently reliable with respect to the permanence of human biometric traits, both behavioral and physiological. Numerous studies have demonstrated the degradability of common biometric traits. For this reason, identification/recognition using biometrics cannot be fully trusted. In most cases the Genuine Acceptance Rate (GAR) is not 100%, and there is always some False Acceptance Rate (FAR). So a better model is always possible relative to an existing identification/verification system using biometric recognition.

Kumar, M. and Tiwari, A.
A Deep Learning based Approach for Biometric Recognition using Hybrid Features.
DOI: 10.5220/0010567900003161
In Proceedings of the 3rd International Conference on Advanced Computing and Software Engineering (ICACSE 2021), pages 273-282
ISBN: 978-989-758-544-9
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In the modern era, traits such as fingerprints, faces, iris, palm prints, hand geometry, DNA, and voice samples may be used for biometric authentication. A biometric recognition system that uses only a single trait for identification/verification has a high frequency of failure because the trait itself can change. For example, suppose we develop a system that uses fingerprints to identify a group of people from several job classes, some of whom are manual workers such as laborers. People who work in rugged conditions have a high rate of change in their fingerprints, which increases the failure rate of the system. Systems based on face and iris may similarly suffer from problems of their own.

Biometric traits may be used in different ways: a system that uses only one trait for identification is generally referred to as uni-modal, and one that uses any combination of two or more traits as multimodal. Systems using multiple biometric traits are more reliable than systems with a single trait. For instance, if a person's fingerprints have changed substantially because of working conditions, the face and iris are still available for identification; similarly, if there is a major change in the face, the fingerprints and iris remain available, and so on. A number of reasons make a multimodal biometric system more reliable; a few of them are:

1. A result obtained from a combination of multiple traits is more acceptable than one from a single-trait system.

2. If a person somehow loses one trait, the remaining traits in a multimodal system can still verify his or her identity.

3. A multimodal system provides high security against forgery, because spoofing becomes more difficult for a person who enters the system claiming a registered identity.

This paper develops a multimodal identification system that uses a feature fusion technique. The traits used are faces, fingerprints, and iris. To increase the efficiency of the system, we exploit intra-class variation by using five different poses of the face, five different fingerprints, and two iris images (one of each eye). Fig.1 presents a summary of the proposed model.

The model uses a deep learning based feature fusion technique for identification; more specifically, it uses a CNN.

2 RELATED WORKS

Jain et al. (1996) described a two-stage on-line verification system based on fingerprints. The first stage is minutia extraction and the second is minutia matching. For minutia extraction they used a fast and reliable algorithm that improves on the algorithm of Ratha et al., and for minutia matching they developed an alignment-based elastic matching algorithm. It directly correlates the stored template with the input image, avoiding an expensive search, and it can handle nonlinear deformations and inexact pose transformations between fingerprints. The method was efficient in terms of both reliability and time complexity: the average verification time was reduced to about eight seconds on a SPARC 20 workstation (Jain, 1996).

Van der Putte et al. (2000) examined how fingerprint-based biometric systems can be fooled. They divide counterfeiting into two categories: duplication with the co-operation of the fingerprint owner (as in attendance monitoring systems) and duplication without the co-operation of the owner (as in applications involving authentication, e.g. PDS systems). Fingerprint sequences can easily be stored on smart cards, and such a card can be read via a solid-state fingerprint scanner. In both categories a duplicate image of the fingerprint can be created. In the first case, a wafer-thin silicon dummy is used to take a sample of the fingerprint, which is then used when required. In the second case, duplicate copies of fingerprints can be made with the help of a storage device attached to the scanner. It is also possible to collect fingerprint samples from a surface using stamp-type materials (Van, 2000).

Liu et al. (2001) presented a face recognition method, the Enhanced Fisher Classifier (EFC), which combines shape and texture features. The shape is captured by the face geometry, while the texture is provided by shape-free normalized images. The dimensionality of the shape and texture spaces is reduced by PCA, and an enhanced Fisher linear discriminant model is used to improve generalization. The great benefit of the method is that it achieves an accuracy of 98.5% using just 25 features (Liu, 2001).

Blanz and Vetter (2003) presented a face recognition mechanism that works across varying poses and illumination. Handling a wide range of pose variation and varying illumination levels requires simulating image formation in 3D space, for which computer graphics is used. The method is evaluated on three views: front, side, and profile. The front view performed better than the other two, with a success rate of 95%, whereas the profile view had the lowest success rate, 89% (Blanz, 2003).

Daugman (2004) presented a study of how iris recognition works and how it performs. The author briefly examined the problem of locating the eye region in an image, developing the relevant concepts and equations. Later in the paper the author summarized the speed of the various operations in the process: the XOR comparison of two Iris Codes takes the least time, 10 microseconds, while demodulation and Iris Code creation take the most, 102 milliseconds (Daugman, 2004).
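The XOR comparison mentioned above can be illustrated with a minimal sketch. It assumes each iris code is a flat array of bits and computes the fraction of disagreeing bits (a normalized Hamming distance). The function name `hamming_distance`, the 2048-bit length, and the random test codes are illustrative assumptions, and the occlusion masks used in practical iris matching are deliberately left out.

```python
import numpy as np

def hamming_distance(code_a, code_b):
    """Fraction of bit positions at which two binary codes disagree."""
    code_a = np.asarray(code_a).astype(bool)
    code_b = np.asarray(code_b).astype(bool)
    if code_a.shape != code_b.shape:
        raise ValueError("codes must have the same shape")
    # XOR is True exactly where the two codes differ.
    return float(np.logical_xor(code_a, code_b).mean())

# Hypothetical data: a random 2048-bit code and a copy with 100 bits flipped.
rng = np.random.default_rng(0)
code = rng.integers(0, 2, size=2048)
noisy = code.copy()
noisy[:100] ^= 1

distance_same = hamming_distance(code, code)
distance_noisy = hamming_distance(code, noisy)
```

A small distance suggests that the two codes come from the same eye; the decision threshold is application specific and is not fixed by this sketch.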

Daugman (2006) examined the randomness and uniqueness of Iris Codes, using 200 billion iris pair comparisons. The paper is helpful for finding false matches in iris recognition over large databases. Using his own algorithm, referred to as the Daugman Algorithm, the author found that at most 1 false match occurred in over 1 million comparisons (Daugman, 2006).

Shams et al. (2016) presented experimental work on biometric identification using a multimodal system based on face, iris, and fingerprints. The work used the SDUMLA-HMT database, in which the data are stored as images. The images are preprocessed using Canny edge detection and the circular Hough transform. Local Binary Pattern with Variance (LBPV) histograms are then used for feature extraction, and the separately extracted features are fused together. Feature reduction is also accomplished with LBPV histograms. A combined Learning Vector Quantization classifier is used for classification and matching. The system achieved a GAR of 99.50% with a minimum elapsed time of 24 seconds (Shams, 2016).

Choi et al. (2015) presented a multimodal biometric authentication system based on face and gesture. A gesture is represented by the frames that lead from one pose to another, and the system can accept faces and gestures from moving video. A HOG descriptor is used to represent the gesture, and 4-fold cross validation is used for validation. The performance of the multimodal system using face and gesture is about 97.59%-99.36%. The whole work was performed on a self-made database of 80 videos from 20 different subjects (Choi, 2015).

Khoo et al. (2018) presented a multimodal biometric system based on iris and fingerprints that uses feature-level fusion for model development. Indexing-First-One (IFO) hashing and integer value mapping are used for this purpose. The CASIA-V3 iris database and the FVC 2002 fingerprint database are used in model development. The main reason for using the IFO hash function is its ability to withstand many attack methods such as SHA and ARM. The equal error rate (EER) reported in the paper is 0.3842 for iris, 0.9308 for fingerprints, and 0.8 for the IFO hash function. No elapsed time is reported (Khoo, 2018).

Ammour et al. (2017) presented a biometric identification system based on face and iris. Face recognition is performed by three methods: discrete cosine transform (DCT), PCA, and PCA in DCT. Iris recognition is also performed by three methods: Hough, Snake, and distance regularized level set (DRLS). They used the ORL and CASIA-V3-Interval datasets for their experiments, and fusion is applied at the matching score level. The face recognition rate is 91% with PCA, 94% with DCT, and 93% with PCA in DCT, with recognition times of 0.055 s, 2.623 s, and 3.012 s respectively. The iris recognition rate is 81% with Hough, 87% with Snake, and 80% with DRLS, with recognition times of 15.82 s, 15.78 s, and 16.52 s respectively. In the multimodal setting, Z-score normalization gives the highest recognition rate, 98% (Ammour, 2017).

Parkavi et al. (2017) presented a biometric identification system based on two traits, fingerprint and iris. Separate templates of the fingerprint and iris are obtained by minutiae matching and edge detection, and decision-level score fusion is applied for decision making. They achieved an accuracy of 97%, but the size of the dataset and the time complexity are not reported (Parkavi, 2017).

Sultana et al. (2017) presented a multimodal biometric system based on face, fingerprint, and a very rare trait, social behavior. The social behavioral trait is obtained from the social media platforms with which a user is associated and is combined with the traditional traits of face and fingerprint. The key idea is that two people with similar social behavior profiles have a very small chance of also having similar face and ear traits, and vice versa. The model accuracy is about 92%, while time complexity related aspects are not discussed (Sultana, 2017).

Gunasekran et al. (2019) presented multimodal biometric recognition using a deep learning approach for faces, fingerprints, and iris. Images are taken from the CASIA dataset, and the contourlet transform is used for preprocessing. Histograms are obtained and weighted rank-level fusion is applied to combine the key features. Deep learning is applied for matching. The system achieved up to 96% accuracy when the dataset size reached 500. Notably, the elapsed time is in milliseconds: the maximum time taken was 49.2 milliseconds. The key outcome of this work is that the deep learning based approach performs better as the dataset grows, while the time taken increases only slightly (Gunasekran, 2019).

Cheniti et al. (2017) presented a multimodal biometric system based on face and fingerprint that uses symmetric sum-based biometric score fusion. Score-level fusion is tested on two partitions of the NIST-BSSR1 database, the NIST-Multimodal database and the NIST-Fingerprint database. A GAR of 99.8% is obtained with the S-sum generated by the Schweizer & Sklar t-norm (Cheniti, 2017).

Zhang et al. (2017) proposed a multi-task, multivariate model for biometric recognition using low-rank and joint sparse representations. The experiments used three datasets, WVU, UMDAA-01, and Pascal-Sentence, in non-weighted and weighted categories. The work is based on multiple traits and is not specialized to particular traits: different combinations of biometric traits from the different datasets, such as fingerprint, iris, and faces, were used. The model's performance varies across datasets, by about 18%. Its recognition rate is 99.80% for both the non-weighted and weighted categories on the WVU dataset; 89.51% and 90.45% for the non-weighted and weighted categories respectively on UMDAA-01; and 81.48% and 82.72% respectively on Pascal-Sentence (Zhang, 2017).

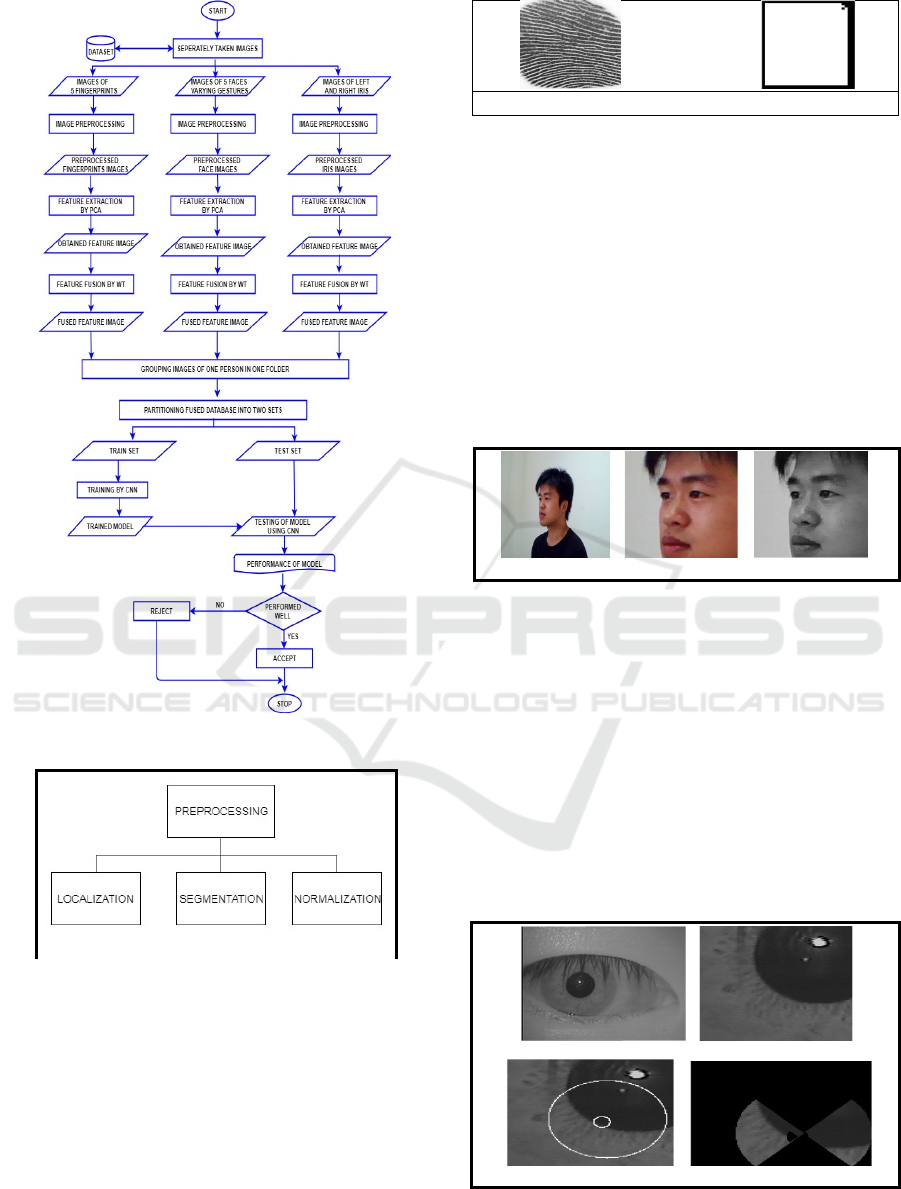

3 PROPOSED WORK

This section presents a deep learning based approach that uses a CNN for multimodal biometric identification with feature fusion. Applying deep learning to feature-fused images of multiple traits is the central idea. The method uses PCA for feature extraction, the inverse wavelet transform for feature fusion, and a CNN for classification and matching. The biometric traits used are the faces, fingerprints, and iris images of the SDUMLA-HMT database (Yin, 2011). The proposed work integrates a series of steps: preprocessing, feature extraction, feature fusion, training and testing, and decision making. A general overview is presented in Fig.1 and the corresponding flow chart in Fig.2.

Figure 1: An overview of proposed Model

3.1 Preprocessing

The images obtained from sensors cannot be used directly, for many reasons. The primary task is to identify the portion of the image on which operations are to be performed. Preprocessing generally includes localization, segmentation, and normalization. The objective of these operations is to detect the regions of interest, find the patterns in the images, and resize all images to a uniform size so that the same operations can be applied to each. Resizing is necessary when samples come from different input devices, because the image containing the extracted features may vary in size with the input image, and this causes problems in matching. The general tasks involved in preprocessing are depicted in Fig.3.


Figure 2: A flow chart of proposed Model

Figure 3: General tasks involved in Pre-processing

3.1.1 Fingerprints

If the input fingerprint image is a color image, it is first converted from RGB to gray; if it is already a black and white image, this step is not required. A mask operation is then applied to the image to expose its meaningful area. The mask basically provides the desired blocks of the image on which further operations are to be performed. The result of masking is shown in Fig.4.
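The two fingerprint preprocessing steps can be sketched as follows. This is only an illustration under stated assumptions: the paper does not say how its mask is computed, so the sketch marks a block as meaningful when its gray-level variance exceeds a threshold, which is one common way to separate ridge regions from flat background. The names `rgb_to_gray` and `block_variance_mask`, the luminance weights, the block size, and the threshold are all assumptions.

```python
import numpy as np

def rgb_to_gray(image):
    """Convert an RGB image to gray; gray input is returned unchanged."""
    image = np.asarray(image, dtype=float)
    if image.ndim == 2:
        return image
    # Standard luminance weights (an assumption; the paper does not specify them).
    weights = np.array([0.299, 0.587, 0.114])
    return image[..., :3] @ weights

def block_variance_mask(gray, block=8, threshold=100.0):
    """Mark blocks whose gray-level variance exceeds the threshold."""
    height, width = gray.shape
    mask = np.zeros((height, width), dtype=bool)
    for row in range(0, height, block):
        for col in range(0, width, block):
            tile = gray[row:row + block, col:col + block]
            mask[row:row + block, col:col + block] = tile.var() > threshold
    return mask

# Synthetic example: flat background on the left, ridge-like stripes on the right.
rgb = np.full((16, 16, 3), 128.0)
stripes = np.where(np.arange(8) % 2 == 0, 0.0, 255.0)
rgb[:, 8:, :] = stripes[None, :, None]

gray = rgb_to_gray(rgb)
mask = block_variance_mask(gray)
```

In this synthetic image only the striped half is kept by the mask, which mirrors the idea of retaining the blocks on which later operations are performed.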

Original Image Mask

Figure 4: Result of Masking on a Fingerprint Image

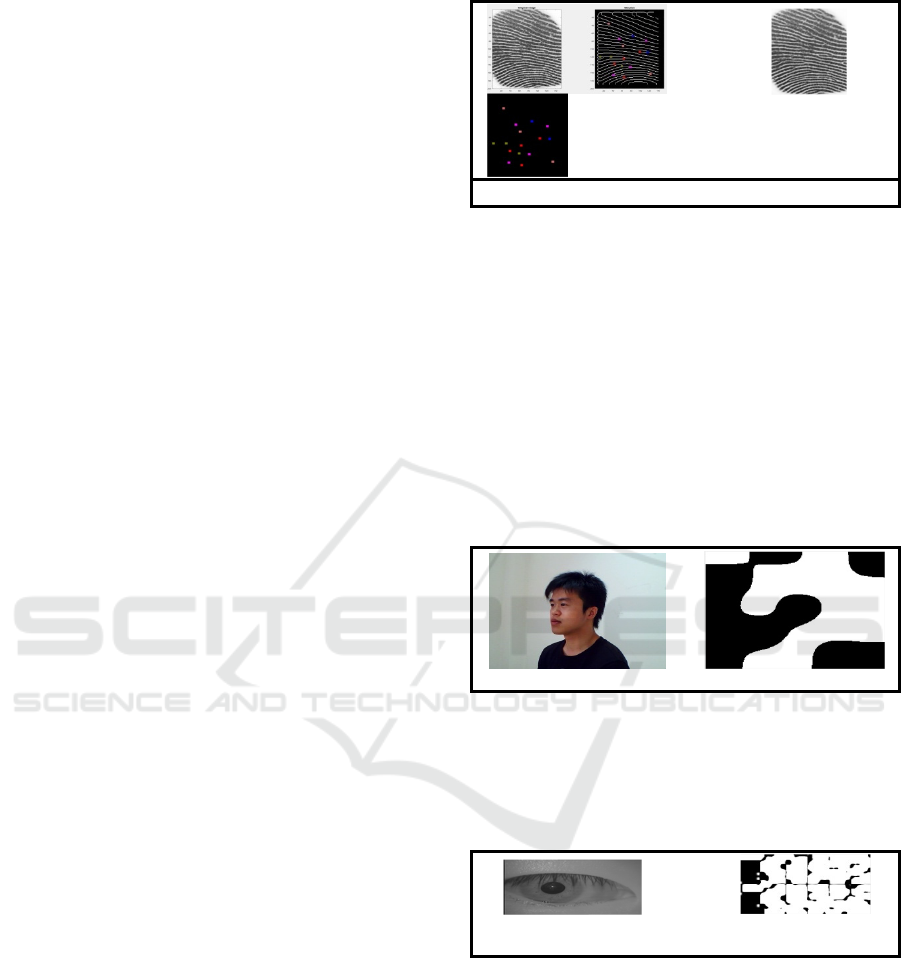

3.1.2 Faces

The desired area of the input face image is detected by the Viola-Jones algorithm. The key reason for using the Viola-Jones algorithm is that it detects faces in sub-windows of the input image, whereas a standard face detection algorithm always tries to detect faces from the whole input image, which is time consuming. RGB to gray conversion is applied to the cropped image to eliminate the hue and saturation information while preserving luminance. The effects of preprocessing on face images are depicted in Fig.5.

(a) (b) (c)

Figure 5: Effect of pre-processing on Face Images (a)

Original Image (b) Localized Image, (c) RGB to Gray

Image

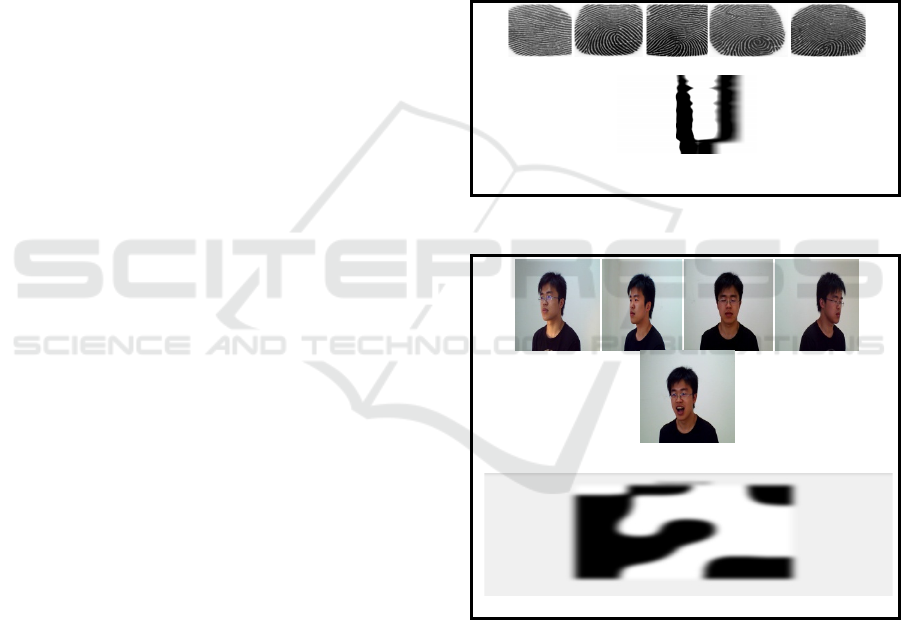

3.1.3 Iris

If the input iris images are color images, they are converted from RGB to gray; for black and white iris images this step is not required. Black hole search is used to localize the desired portion of the iris image. A masking operation is performed to spot the pupil inside the iris image. Normalization is then performed to increase the intensity of the pixels in the spotted area. The effect of pre-processing on iris images is depicted in Fig.6.

(a) (b)

(c) (d)

Figure 6: Effect of pre-processing on Iris Images (a)

Original Image, (b) Localization, (c) Masking, (d)

Normalization


3.2 Feature Extraction

After pre-processing, Principal Component Analysis (PCA) is applied to the resulting images for feature extraction. PCA is used because of its simplicity and performance. It is a statistical method that finds a new, smaller set of variables while retaining most of the information in the original ones.

Any large data set consists of many variables that are interrelated in some way, so the dimensionality of the data set is very high. PCA reduces the dimensionality of the data set while retaining as much of the variance as possible. With reduced dimensionality and maximum variance, the essential content of the data set is preserved. This is achieved by transforming to a new set of variables, referred to as principal components. These variables are uncorrelated and ordered so that the first few retain most of the variation present in all of the original variables.

Suppose we have a set of $n$ random variables and $v$ is the vector of them. If $n$ is very large, it is not feasible to analyze the $n$ variances and $\frac{1}{2}n(n-1)$ covariances. It is then wise to look for a new set of fewer than $n$ variables that preserves most of the variance represented by the original set.

To achieve this, we first look for a linear function $f_1'v$ of the vector $v$ that has maximum variance, where $f_1$ is a vector of $n$ constants $f_{11}, f_{12}, \ldots, f_{1n}$ and $'$ denotes the transpose, i.e.

$$f_1'v = f_{11}v_1 + f_{12}v_2 + \cdots + f_{1n}v_n$$

Next we look for a linear function $f_2'v$ that is uncorrelated with $f_1'v$ and has maximum variance, and so on. In this way, at the $k$th stage a linear function $f_k'v$ is obtained that has maximum variance while being uncorrelated with the previous ones; $f_k'v$ is the $k$th principal component. This can be iterated up to $n$ principal components, but in general it is observed that we never need to go as far as $n$: most of the variance in $v$ is captured by the first $i$ principal components, where $i \le n$ (Jolliffe, 2003) (Takane, 2016) (Sanguansat, 2012).
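The construction above can be sketched numerically. The following is a minimal illustration, not the implementation used in this work: it assumes the principal directions are obtained as eigenvectors of the sample covariance matrix, sorted by decreasing eigenvalue, which is the standard way to realise the successive maximum-variance, uncorrelated linear functions. The function name `pca` and the synthetic data are assumptions.

```python
import numpy as np

def pca(data, num_components):
    """Project data (rows are samples) onto its leading principal components."""
    data = np.asarray(data, dtype=float)
    centered = data - data.mean(axis=0)
    covariance = np.cov(centered, rowvar=False)
    # eigh is used because the covariance matrix is symmetric.
    eigenvalues, eigenvectors = np.linalg.eigh(covariance)
    order = np.argsort(eigenvalues)[::-1][:num_components]
    components = eigenvectors[:, order]   # columns play the role of f_1, f_2, ...
    variances = eigenvalues[order]        # variance captured by each component
    scores = centered @ components        # values of f_k'v for every sample
    return scores, components, variances

# Synthetic example: 5 observed variables driven by 2 hidden factors plus small noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 5))
samples = latent @ mixing + 0.01 * rng.normal(size=(200, 5))

scores, components, variances = pca(samples, num_components=2)
explained = variances.sum() / np.trace(np.cov(samples, rowvar=False))
```

Here two components out of five carry almost all of the variance, which illustrates why it is rarely necessary to keep all $n$ components.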

3.2.1 Fingerprint

Feature extraction from a fingerprint involves locating the minutiae points in the input image, as shown in Fig.7 (a). Once the minutiae points are located, only those points are stored and all other points in the image are ignored. The resulting feature image is depicted in Fig.7 (c).

(a) (b) (c)

Figure 7: Feature Extraction From Fingerprints (a)

Detection of Minutiae Points, (b) Original Image, (c)

Bifurcation Points

3.2.2 Faces

PCA is applied to the pre-processed face images for feature extraction. Eigen faces, which contain a small set of essential characteristics of the face image, are computed from the input image. Once the Eigen values of the face image are computed, its projection onto the new set of dimensions is found. A resulting image with extracted features is shown in Fig.8 (b).

(a) Original Image (b) Eigen Face Image

Figure 8: Representation of an Eigen Face of an Input Face Image

3.2.3 Iris

The effect of PCA on an iris image is depicted in Fig.9.

(a) Original Image (b) Feature Extracted

Image

Figure 9: Representation of an iris image to resulting

feature image.

3.3 Feature Fusion

The wavelet transform is a mechanism that cuts up data, operators, or functions into different frequency components and then analyzes each component with a resolution matched to its scale. The aim of a pattern recognition system is to obtain the best possible classification performance, and better classification is obtained if the feature set is optimal. The three prime fusion strategies are:

Information or Data Fusion: More meaningful raw data is produced by combining the data obtained from various sources.

Feature Fusion: The extracted feature set contains irrelevant and redundant features. If two features are of similar types, one of them is redundant and only one needs to be kept. A feature is irrelevant if it does not correlate strongly with the class information. The aim of feature fusion is to obtain a better feature set by fusing features, which may then be given to a classifier to obtain the final result.

Decision Fusion: A set of classifiers is used to provide an unbiased and better result. The classifiers may be the same or different.

Wavelet Transformation (WT) is used for feature fusion. WT is an efficient method of image fusion that can combine images from different sensors and sensing environments. The wavelet transform of each image is computed first. The transform contains different types of bands, such as high-high, high-low, and low-high, at various scales. To make these uniform, the average of the computed transform values is taken. The max rule is then applied to select the larger value, because a larger absolute transform coefficient corresponds to a sharper brightness change in the image, which is a salient feature of the image (Li, 1995) (Andra, 2002) (Mangai, 2010) (Hubbard, 1998).
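One plausible reading of this fusion procedure is sketched below. It is an assumption-laden illustration rather than the paper's implementation: a single-level Haar wavelet is used for simplicity (the paper does not name its wavelet), the low-frequency bands of the inputs are averaged, the detail bands are fused by keeping the coefficient with the largest absolute value, and the fused image is recovered with the inverse transform. The helper names `haar_dwt2`, `haar_idwt2`, and `fuse_images` are hypothetical, and images are assumed to have even height and width.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def haar_dwt2(image):
    """Single-level 2D Haar transform; returns (approximation, detail bands)."""
    a = np.asarray(image, dtype=float)
    low = (a[:, 0::2] + a[:, 1::2]) / SQRT2     # filter along columns
    high = (a[:, 0::2] - a[:, 1::2]) / SQRT2
    ll = (low[0::2] + low[1::2]) / SQRT2        # then along rows
    lh = (low[0::2] - low[1::2]) / SQRT2
    hl = (high[0::2] + high[1::2]) / SQRT2
    hh = (high[0::2] - high[1::2]) / SQRT2
    return ll, (lh, hl, hh)

def haar_idwt2(ll, details):
    """Inverse of haar_dwt2."""
    lh, hl, hh = details
    rows, cols = ll.shape
    low = np.empty((2 * rows, cols))
    high = np.empty((2 * rows, cols))
    low[0::2], low[1::2] = (ll + lh) / SQRT2, (ll - lh) / SQRT2
    high[0::2], high[1::2] = (hl + hh) / SQRT2, (hl - hh) / SQRT2
    out = np.empty((2 * rows, 2 * cols))
    out[:, 0::2], out[:, 1::2] = (low + high) / SQRT2, (low - high) / SQRT2
    return out

def fuse_images(images):
    """Average the approximation bands and apply the max-abs rule to detail bands."""
    transforms = [haar_dwt2(image) for image in images]
    fused_ll = np.mean([t[0] for t in transforms], axis=0)
    fused_details = []
    for band in range(3):
        stack = np.stack([t[1][band] for t in transforms])
        winner = np.abs(stack).argmax(axis=0)
        fused_details.append(np.take_along_axis(stack, winner[None], axis=0)[0])
    return haar_idwt2(fused_ll, tuple(fused_details))

# Hypothetical inputs standing in for two feature images of the same trait.
rng = np.random.default_rng(0)
first = rng.random((8, 8))
second = rng.random((8, 8))
fused = fuse_images([first, second])
```

The same function accepts any number of equally sized inputs, so five face or fingerprint feature images could be fused in one call under the same assumptions.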

Effects of feature fusion using wavelet transform

on fingerprints, faces, and iris images are shown in

Fig.10, Fig.11, and Fig.12 respectively.

3.4 Training and Testing

The performance of a machine learning system depends on how well the system is trained. A well trained system is more sensitive to error detection. First the system is trained on the data set, and then tests are performed to measure its performance. How the system behaves on unseen data determines its performance: if it correctly predicts the class of an unseen sample, the performance improves; otherwise an error is generated. Deep learning is applied here for training and testing of the proposed model; more specifically, a Convolution Neural Network (CNN) is used. The reason for using a deep learning method is that neural networks are a powerful technology for classifying visual inputs. The most important practice is to get as large a training set as possible (Simard, 2003).

In the proposed work, recognition is performed using a Convolution Neural Network (CNN). A CNN is a very powerful tool for character, speech, and visual recognition, the last including both images and video. A CNN is composed of many processing layers, which give it the power to examine an object in fine detail. It uses a hierarchy of layers to extract features, where the output of one layer becomes the input of the next layer in the hierarchy. There are four key concepts behind convolution neural networks: use of multiple layers, local connections, shared weights, and pooling.

(a) Input Fingerprint Images

(b) Image Representing Fused Features for all 5 fingerprint

samples

Figure 10: Fingerprint Feature Fusion

(a) Input Face Images of Varying Gestures

(b) Image Representation of Eigen Faces of all Face Inputs

Figure 11: Representation of Fused Feature Eigen Image

of Input Face Images.


(a) (b)

(c) Image Representing Fused Features of Both Eye Images

Figure 12: Representation of Fused Feature Image of Input

Eye Images: (a) Input Image of Left Eye, (b) Input Image

of Right Eye

Many natural signals are compositional hierarchies, and deep neural networks benefit from this property. In these hierarchies, higher-level features are built from lower-level ones. In images, local combinations of edges form specific patterns, patterns assemble into parts, and parts form objects. With the help of pooling, the representation in the next layer varies very little when elements in the previous layer vary in position and appearance.

Visual neuroscience distinguishes simple cells from complex cells. In a CNN the convolution layer is inspired by simple cells and the pooling layer by complex cells. Convolution and pooling layers make up the first few layers of a CNN. The units of a convolution layer are organized in feature maps, and each unit is connected to a local patch of the feature maps in the previous layer. The pooling layer is used to merge features whose semantics are the same.
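The roles of the convolution and pooling layers can be illustrated with a small NumPy sketch. It is a toy example under stated assumptions, not the network used in this work: one hand-written edge filter is slid over the image (shared weights, local connections), and non-overlapping max pooling then merges neighbouring responses. The names `conv2d_valid` and `max_pool`, the filter, and the test images are illustrative.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide one shared kernel over the image (cross-correlation, no padding)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for row in range(out_h):
        for col in range(out_w):
            # Each output unit sees only a local patch of the input.
            out[row, col] = np.sum(image[row:row + kh, col:col + kw] * kernel)
    return out

def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; trailing rows/columns that do not fit are dropped."""
    h = feature_map.shape[0] // size * size
    w = feature_map.shape[1] // size * size
    trimmed = feature_map[:h, :w]
    return trimmed.reshape(h // size, size, w // size, size).max(axis=(1, 3))

# Two images containing the same vertical edge, shifted by one pixel.
image_a = np.zeros((6, 6))
image_a[:, 3:] = 1.0
image_b = np.zeros((6, 6))
image_b[:, 4:] = 1.0

edge_kernel = np.array([[-1.0, 1.0]])   # responds to a dark-to-bright step

map_a = conv2d_valid(image_a, edge_kernel)
map_b = conv2d_valid(image_b, edge_kernel)
pooled_a = max_pool(map_a)
pooled_b = max_pool(map_b)
```

The two feature maps differ because the edge has moved, but the pooled outputs are identical, which shows how pooling makes the next layer less sensitive to small changes in position.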

The method used by a CNN is very similar to the way an animal's visual cortex works. The image is processed in independent small portions, generally termed visual fields. Each visual field is processed by separate neurons, which are stacked in layers. Some of the most used layers are the Convolution Layer, Pooling Layer, Locally Connected Layer, and Fully Connected Layer (Le, 2015) (Krizhevsky, 2012) (Dũng, 2014).

4 RESULTS AND DISCUSSION

Experimental work is performed using the SDUMLA-HMT database. This database contains biometric samples of 106 persons with 5 traits per person: face, fingerprint, iris, finger vein, and gait. It includes 61 males and 45 females aged between 17 and 31. Of the 5 traits present in the database, only 3 are of interest here: face, fingerprint, and iris. From the database, a separate subset of these 3 traits is created for all 106 persons, comprising 5 random face samples with different gestures, 5 fingerprints, and 2 iris images (left and right) per person. Hence this subset contains a total of 1272 images.

Of the 106 persons in the database, 100 are registered and 3 fused feature images are stored for each: 1 fused image of all 5 face gestures, 1 fused image of all 5 fingerprint samples, and 1 fused image of the two iris samples. Of the 100 sets, 80 are used for training and 20 for testing. When the CNN is trained and tested, its accuracy is 96.67% and its error 3.33%. The elapsed time is approximately 0.77 seconds for testing, where 1 epoch is used, and 0.55 and 0.33 seconds for training, where 2 epochs are used.
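The dataset sizes quoted in this section follow from simple arithmetic, reproduced in the short sketch below. All numbers come from the text; only the variable names are illustrative.

```python
# Samples used per person for each of the three traits.
persons_in_database = 106
samples_per_person = {"face": 5, "fingerprint": 5, "iris": 2}

images_per_person = sum(samples_per_person.values())
total_images = persons_in_database * images_per_person

# After fusion, one image per trait is stored for each registered person.
fused_per_person = len(samples_per_person)
retained_fraction = fused_per_person / images_per_person

registered = 100
train_sets, test_sets = 80, 20
fused_images_stored = registered * fused_per_person
```

The retained fraction of one quarter means that feature fusion shrinks the number of images presented to the CNN by 75%.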

The CNN loss is plotted against the number of epochs multiplied by the number of batches. Since the number of epochs in testing is 1, the graph reduces to CNN loss against the number of batches. The vertical axis represents the CNN loss and the horizontal axis the number of batches. The graph is shown in Fig.13.

Figure 13: Plot CNN Loss (Vertical) Vs No. of Batches

(Horizontal)

Table-I shows some result comparisons with

existing latest Multimodal systems available. This

table contains 5 columns as depicted. Here four key

parameters are selected for result comparison, which

are: Traits, Method, Accuracy and Elapsed time.

Most multimodals use three traits, but few with 2

traits also exist. Use of only two traits may perform

better in terms of time and recognition rates, but

reliability may get affected in some cases. It is easily

observed that accuracy of some existing modals is

slightly better than accuracy achieved in this work.

This is because of two reasons. The first reason is

that, use of different datasets. It is observed in

literature survey that recognition rate of a particular

method varies large with the change of datasets. The

second reason is the kind of methods being used. A


method with a high recognition rate may take much longer than a deep learning method. The deep learning method is much faster than the other methods, as can be seen in Table 1. The main limitation of deep learning is that it requires large datasets to perform well, and a fused model leaves it with a much smaller dataset than the original one. Here we have used a large dataset, but because of feature fusion the size of the dataset is reduced by up to 75%, so deep learning is applied to only 25% of the actual dataset. Nevertheless, the proposed work is able to perform better than existing models that are based on deep learning methods. The elapsed time of the deep learning approach is far better than that of the other methods.
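The 75% reduction quoted above follows from the counts given earlier: the 12 raw images per person (5 faces, 5 fingerprints, 2 irises) are fused into 3. A minimal sketch of that arithmetic, with variable names chosen here purely for illustration:

```python
# Raw images per registered person: 5 faces + 5 fingerprints + 2 irises.
RAW_PER_PERSON = 5 + 5 + 2
FUSED_PER_PERSON = 3       # one fused image per trait
REGISTERED_PERSONS = 100

raw_images = REGISTERED_PERSONS * RAW_PER_PERSON       # images before fusion
fused_images = REGISTERED_PERSONS * FUSED_PER_PERSON   # images after fusion

kept_fraction = fused_images / raw_images   # share of the data the CNN sees
reduction = 1.0 - kept_fraction             # share removed by fusion
print(f"kept {kept_fraction:.0%}, reduced by {reduction:.0%}")
```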

Table 1: Comparison of the proposed approach with existing multimodal systems.

Reference                           Traits                   Method                   Accuracy        Elapsed time (s)
----------------------------------  -----------------------  -----------------------  --------------  ----------------
Shams, Tolba & Sarhan (2016)        Face, Fingerprint, Iris  LBPV                     99.50%          24
Choi & Park (2015)                  Face, Gesture            4-fold cross validation  97.59%-99.36%   --
Khoo et al. (2018)                  Iris, Fingerprint        IFO-Hashing              99.2%           --
Ammour, Bouden & Amira-Biad (2017)  Face, Iris               Snake, DCT, Z-Score      87%, 94%, 98%   2.623, 15.82, --
This work                           Face, Fingerprint, Iris  PCA, WT, Deep Learning   96.67%          0.77

5 CONCLUSIONS

This paper proposed a multimodal biometric identification system based on a feature fusion technique. Identifying a person using only a single trait is not always optimal because of problems such as physical loss of the trait, medical reasons, or other causes. A multimodal system reduces that risk in comparison with unimodal systems by providing an extra set of information. Here, three of the most frequently used human traits, namely faces, fingerprints, and iris, have been used for biometric recognition. PCA and WT were used for feature extraction and feature fusion, respectively. The paper proposed a CNN-based model that uses the fused features of the three biometric traits for recognition. The proposed approach achieved an accuracy of up to 96.67%.
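To make the fusion step concrete, the following is a minimal sketch of wavelet-based image fusion, not the authors' implementation. It assumes a single-level, averaging (unnormalized) Haar transform, averaging of the approximation band, and a maximum-absolute-value rule for the detail bands; this section does not specify the wavelet or the fusion rule, and the function names `haar2d`, `ihaar2d`, and `fuse` are hypothetical. Images are nested lists of identical shape with even height and width.

```python
def haar2d(img):
    """Single-level 2D Haar transform (averaging variant).

    Returns four sub-bands (approximation, column difference, row
    difference, diagonal difference), each half the input size.
    """
    bands = ([], [], [], [])
    for r in range(0, len(img), 2):
        rows = ([], [], [], [])
        for c in range(0, len(img[0]), 2):
            a, b = img[r][c], img[r][c + 1]
            d, e = img[r + 1][c], img[r + 1][c + 1]
            rows[0].append((a + b + d + e) / 4.0)  # local average
            rows[1].append((a - b + d - e) / 4.0)  # left minus right column
            rows[2].append((a + b - d - e) / 4.0)  # top minus bottom row
            rows[3].append((a - b - d + e) / 4.0)  # diagonal difference
        for band, row in zip(bands, rows):
            band.append(row)
    return bands


def ihaar2d(bands):
    """Invert haar2d, rebuilding the full-resolution image."""
    approx, dcol, drow, ddiag = bands
    img = []
    for i in range(len(approx)):
        top, bottom = [], []
        for j in range(len(approx[0])):
            s, x, y, z = approx[i][j], dcol[i][j], drow[i][j], ddiag[i][j]
            top += [s + x + y + z, s - x + y - z]
            bottom += [s + x - y - z, s - x - y + z]
        img += [top, bottom]
    return img


def fuse(images):
    """Fuse same-sized images in the wavelet domain (assumed fusion rule)."""
    coeffs = [haar2d(img) for img in images]
    n = len(coeffs)
    rows, cols = len(coeffs[0][0]), len(coeffs[0][0][0])
    fused = []
    for band in range(4):
        out = []
        for i in range(rows):
            row = []
            for j in range(cols):
                values = [c[band][i][j] for c in coeffs]
                if band == 0:
                    row.append(sum(values) / n)        # average approximations
                else:
                    row.append(max(values, key=abs))   # keep strongest detail
            out.append(row)
        fused.append(out)
    return ihaar2d(fused)


# Toy 2x2 "images" standing in for two samples of the same trait.
sample_a = [[10, 12], [14, 16]]
sample_b = [[10, 10], [10, 10]]
fused_image = fuse([sample_a, sample_b])
```

In practice a wavelet library such as PyWavelets would normally replace the hand-written transform; it is avoided here only to keep the sketch free of dependencies.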

REFERENCES

Ammour B, Bouden T, & Amira-Biad S (2017, October).

Multimodal biometric identification system based on

the face and iris. In 2017 5th International Conference

on Electrical Engineering-Boumerdes (ICEE-B) (pp.

1-6). IEEE.

Andra K, Chakrabarti C, & Acharya T (2002). A VLSI

architecture for lifting-based forward and inverse

wavelet transform. IEEE transactions on signal

processing, 50(4), 966-977.

Blanz, V, & Vetter T. (2003). Face recognition based on

fitting a 3D morphable model. IEEE Transactions on

pattern analysis and machine intelligence, 25(9), 1063-

1074.

Cheniti M., Boukezzoula N. E. & Akhtar Z. (2017).

Symmetric sum-based biometric score fusion. IET

Biometrics, 7(5), 391-395.

Choi H. & Park H. (2015). A multimodal user

authentication system using faces and gestures.

BioMed research international, 2015.

Daugman J (2006). Probing the uniqueness and

randomness of Iris Codes: Results from 200 billion iris

pair comparisons. Proceedings of the IEEE, 94(11),

1927-1935.

Daugman J (2004). How iris recognition works. University of

Cambridge.

Dũng PV (2014). Multiple Convolution Neural Networks

for an Online Handwriting Recognition System.

Gunasekaran K., Raja J., & Pitchai R. (2019). Deep

multimodal biometric recognition using contourlet

derivative weighted rank fusion with human face,

fingerprint and iris images. Automatika, 60(3), 253-

265.

Hubbard BB (1998). The world according to wavelets: the

story of a mathematical technique in the making. AK

Peters/CRC Press.

Jain A, & Hong L (1996, August). On-line fingerprint

verification. In Proceedings of the 13th International

Conference on Pattern Recognition

(Vol. 3, pp. 596-600). IEEE.

Jain AK, Flynn P, & Ross AA (Eds.). (2007). Handbook

of biometrics. Springer Science & Business Media.

Jolliffe IT (2003). Principal component analysis.

Technometrics, 45(3), 276.

Khoo Y. H., Goi B. M., Chai T. Y., Lai Y. L., & Jin Z.

(2018, June). Multimodal biometrics system using

feature-level fusion of iris and fingerprint. In

Proceedings of the 2nd International Conference on

Advances in Image Processing (pp. 6-10).

Krizhevsky A, Sutskever I, & Hinton GE (2012).

Imagenet classification with deep convolutional neural

networks. In Advances in neural information

processing systems (pp. 1097-1105).


Le QV (2015). A tutorial on deep learning part 2:

Autoencoders, convolutional neural networks and

recurrent neural networks. Google Brain, 1-20.

Li H, Manjunath BS, & Mitra SK (1995). Multisensor

image fusion using the wavelet transform. Graphical

models and image processing, 57(3), 235-245.

Liu C, & Wechsler H (2001). A shape-and texture-based

enhanced Fisher classifier for face recognition. IEEE

transactions on image processing, 10(4), 598-608.

Maio D, Maltoni D, Cappelli R, Wayman JL, & Jain AK

(2004, July). FVC2004: Third fingerprint verification

competition. In International Conference on Biometric

Authentication (pp. 1-7). Springer, Berlin,

Heidelberg.

Mangai UG, Samanta S, Das S, & Chowdhury PR (2010).

A survey of decision fusion and feature fusion

strategies for pattern classification. IETE Technical

review, 27(4), 293-307.

Parkavi R, Babu K C, & Kumar J A (2017, January).

Multimodal biometrics for user authentication. In 2017

11th International Conference on Intelligent Systems

and Control (ISCO) (pp. 501-505). IEEE.

Sanguansat P (Ed.) (2012). Principal Component Analysis:

Multidisciplinary Applications. BoD–Books on

Demand.

Shams M., Tolba A, & Sarhan S (2016). Face, iris, and

fingerprint multimodal identification system based on

local binary pattern with variance histogram and

combined learning vector quantization. Journal of

Theoretical & Applied Information Technology, 89(1).

Simard P. Y., Steinkraus D. & Platt J. C. (2003, August).

Best practices for convolutional neural networks

applied to visual document analysis. In ICDAR (Vol. 3,

No. 2003).

Sultana M, Paul P P, & Gavrilova M L (2017). Social

behavioral information fusion in multimodal

biometrics. IEEE Transactions on Systems, Man, and

Cybernetics: Systems, 48(12), 2176-2187.

Takane Y (2016). Constrained principal component

analysis and related techniques. Chapman and

Hall/CRC.

Van der Putte T, & Keuning J. (2000). Biometrical

fingerprint recognition: don’t get your fingers burned.

In Smart Card Research and Advanced Applications

(pp. 289-303). Springer, Boston, MA.

Yin Y, Liu L, & Sun X (2011, December). SDUMLA-

HMT: a multimodal biometric database. In Chinese

Conference on Biometric Recognition (pp. 260-268).

Springer, Berlin, Heidelberg.

Zhang H., Patel V. M. & Chellappa R. (2017). Low-rank

and joint sparse representations for multi-modal

recognition. IEEE Transactions on Image Processing,

26(10), 4741-4752.
