Simulating Live Cloud Adaptations Prior to a Production Deployment using a Models at Runtime Approach

Johannes Erbel, Alexander Trautsch and Jens Grabowski

University of Goettingen, Institute of Computer Science, Goldschmidtstraße 7, Goettingen, Germany

Keywords:

Cloud, Simulation, Runtime Model, Adaptation, DevOps.

Abstract:

The utilization of distributed resources, especially in the cloud, has become a best practice in research and

industry. However, orchestrating and adapting running cloud infrastructures and applications is still a tedious

and error-prone task. Especially live adaptation changes need to be well tested before they can be applied

to production systems. Meanwhile, a multitude of approaches exist that support the development of cloud

applications, granting developers a lot of insight on possible issues. Nonetheless, not all issues can be dis-

covered without performing an actual deployment. In this paper, we propose a model-driven concept that

allows developers to assemble, test, and simulate the deployment and adaptation of cloud compositions with-

out affecting the production system. In our concept, we reflect the production system in a runtime model and

simulate all adaptive changes on a locally deployed duplicate of the model. We show the feasibility of the

approach by performing a case study which simulates a reconfiguration of a computation cluster deployment.

Using the presented approach, developers can easily assess how the planned adaptive steps and the execution

of configuration management scripts affect the running system resulting in an early detection of deployment

issues.

1 INTRODUCTION

Due to the rise of scalable, elastic, and on-demand resources, many different tools have emerged that allow the description of infrastructure and application deployments using software artifacts (Brikman, 2019). As a result, the once separate teams responsible for the development of the software (Dev) and the management of hardware (Ops) merged into one known as DevOps (Humble and Molesky, 2011). Even though many tools have been developed to support DevOps in provisioning and configuring infrastructure, increased knowledge is required as systems become more complex due to the large number of different resources that need to be managed. Therefore, developing, deploying, and orchestrating large and dynamic cloud infrastructures remains a difficult and error-prone task. As a result, changes made to the production system need to be well tested and inspected to assess their impact. However, not all issues can be discovered without performing an actual deployment, which requires a test environment that can be difficult to set up and maintain (Guerriero et al., 2019).

In this paper, we present a model-driven con-

cept that allows developers to assemble, test, and as-

sess the impact of cloud adaptations and deployments

without affecting the production system using a run-

time model. This runtime model provides an abstract

representation of the system and maintains a direct

connection to it. The use of runtime models can be

found in many approaches as they allow to easily de-

sign, validate, and manage cloud applications (Ko-

rte et al., 2018; Achilleos et al., 2019; Ferry et al.,

2018). Similar to the concept presented in (Ferry

et al., 2015), we reflect the production system in a

runtime model and duplicate it for testing purposes.

In our approach, we further annotate each individual

resource in the duplicated runtime model with differ-

ent simulation details. Additionally, we reduce the

required infrastructure to a minimum so that a local

and offline environment is sufficient to develop adap-

tive steps for distributed systems. Furthermore, this

enables the user to investigate the extent to which the

developed changes cope with scaling rules attached to

the runtime model and thus the production cloud de-

ployment. Our approach focuses on the simulation

and reflection of Infrastructure as a Service (IaaS)

clouds providing full access to infrastructure compris-

ing compute, storage, and network resources. We

demonstrate the feasibility of the approach by performing a case study in which we duplicate the production runtime model of a deployed computation cluster and extend its capabilities with additional features offline. We show that the approach helps developers to assess how the planned adaptive steps and the execution of configuration management scripts affect relevant parts of the cloud deployment. This results in an early detection of deployment and performance issues using an abstract model of the system.

Erbel, J., Trautsch, A. and Grabowski, J.

Simulating Live Cloud Adaptations Prior to a Production Deployment using a Models at Runtime Approach.

DOI: 10.5220/0010552003350343

In Proceedings of the 11th International Conference on Simulation and Modeling Methodologies, Technologies and Applications (SIMULTECH 2021), pages 335-343

ISBN: 978-989-758-528-9

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The remainder of this paper is structured as fol-

lows. Section 2 provides the paper's foundations. Sec-

tion 3 discusses related work. Section 4 presents our

simulation approach. In Section 5, we describe the ex-

ecution of our case study and discuss it in Section 6.

Finally, in Section 7 an overall conclusion is given as

well as an outlook into future work.

2 FOUNDATIONS

Cloud Computing is an umbrella term, usually de-

scribing a service in which consumers can dynami-

cally rent virtualized resources from a provider pool-

ing large amounts of resources together (Mell and

Grance, 2011). This in turn creates an illusion of

infinite resources available to the consumer that can

be scaled up and down on demand (Armbrust et al.,

2009). Cloud orchestration refers to the creation,

manipulation and management of rented cloud re-

sources. This includes not only the infrastructure,

but also applications deployed on top of them (Liu

et al., 2011). To reach this goal the concept of In-

frastructure as Code (IAC) was introduced, which al-

lows to describe infrastructure via software artifacts.

IAC tools are utilized to provision infrastructural re-

sources and manage the configuration and deploy-

ment of applications on top of it. The latter is usually

done either via configuration management perform-

ing a sequence of configuration steps or containeriza-

tion providing access to templated resource configu-

rations (Wurster et al., 2020). Configuration manage-

ment directly affects the state of the system by inter-

preting management scripts. When executed, these

scripts perform the required actions to bring the re-

source to configure in to the described state. Often

these tools provide idempotence mechanisms which

ensure that even when an operation is executed mul-

tiple times the result stays the same. Containeriza-

tion tools, like docker, on the other hand compress all

the information required within an isolated part of the

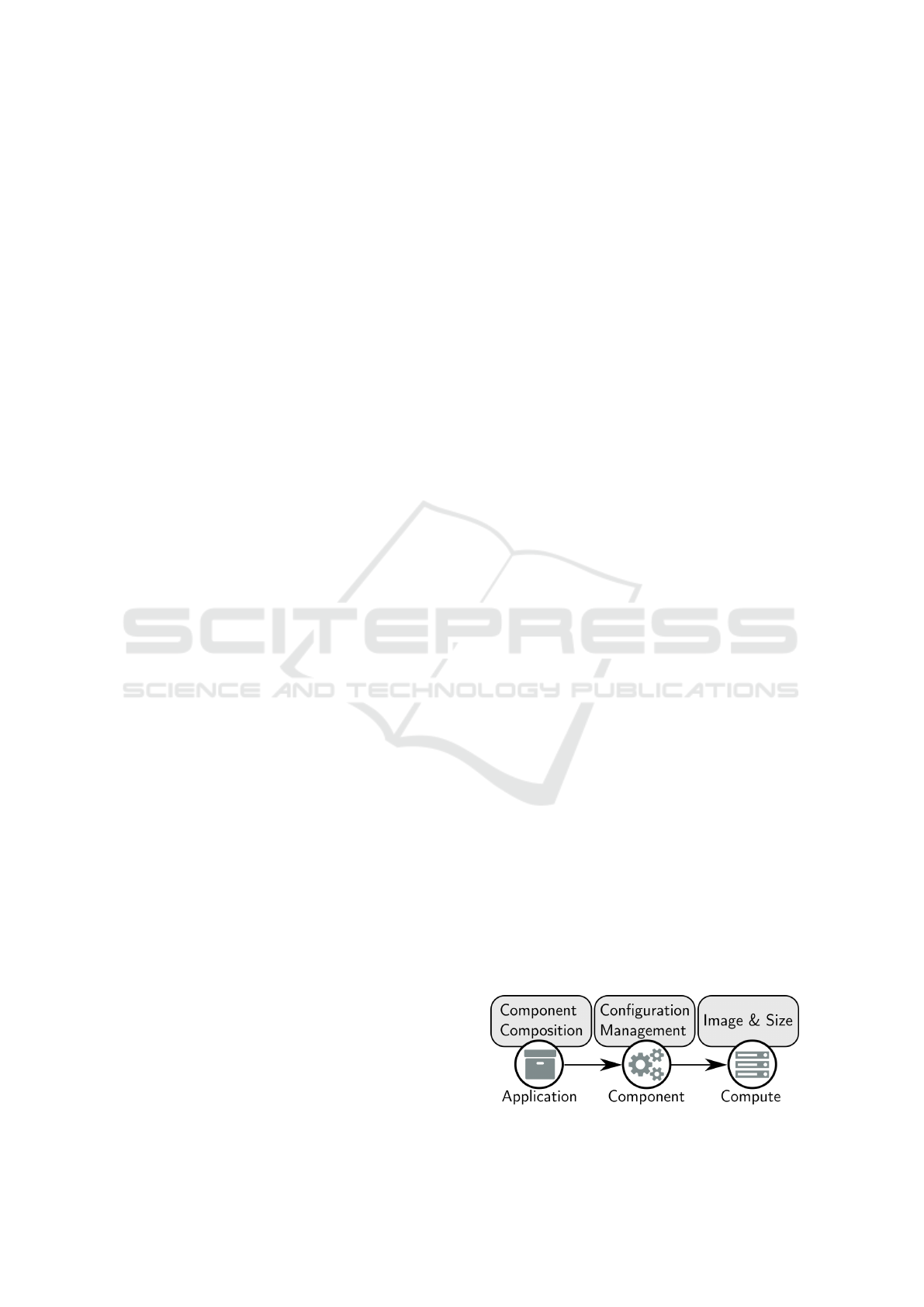

compute resource. A generic composition of a cloud

deployment and its accompanying artifacts is depicted

in Figure 1. A Compute node represents, e.g., a Vir-

tual Machine (VM) or container node which resides

on the infrastructure layer. On this node a Component

node is deployed. This node describes, e.g., a run-

ning service. Finally, an Application node groups

up related component instances. Each of these ele-

ments, possesses different attributes. For example the

Component is linked to a Configuration Management

script. The Compute node on the other hand is of a set

Size and represents a defined Image, e.g., an ubuntu

image for a VM or a docker container image hosting

already deployed components.
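The deployment stack described above can be sketched as a small data structure. The following is only an illustration under our own assumptions: the class and field names (`Compute`, `Component`, `Application`, `size`, `image`, `config_script`) and all example values are hypothetical and are not taken from a concrete metamodel.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Compute:
    """Infrastructure-layer node, e.g., a VM or a container."""
    name: str
    size: str   # hypothetical size label, e.g., "small"
    image: str  # image the node is created from, e.g., "ubuntu"


@dataclass
class Component:
    """A deployed part of an application, e.g., a running service."""
    name: str
    host: Compute        # compute node the component is placed on
    config_script: str   # hypothetical path to a configuration management script


@dataclass
class Application:
    """Groups related component instances."""
    name: str
    components: List[Component] = field(default_factory=list)


# Example values are placeholders chosen for illustration only.
vm = Compute(name="vm-1", size="small", image="ubuntu")
service = Component(name="service", host=vm, config_script="service.yml")
app = Application(name="app", components=[service])
```

Navigating from the application over its components to the hosting compute node, e.g., `app.components[0].host.image`, mirrors the layering shown in Figure 1.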

Models at Runtime (M@R) is a subcategory of

Model Driven Engineering (MDE) that promotes the

utilization of models as a central artifact of software

or its development. In M@R, however, these mod-

els are not only used as a static representation of a

system, but rather as a live representation of it. To un-

derstand the concept of M@R, the term model and

its relationship to a metamodel is fundamental. In

the following, we refer to the term model as defined

by (Kühne, 2006): “A model is an abstraction of a

(real or language based) system allowing predictions

or inferences to be made”. Each “model is an instance

of a metamodel” (OMG, 2011) whereby the meta-

model specifies elements, e.g., entities, resources,

links and attributes, that can be used to create a model.

In general, a metamodel is a model of models (OMG,

2003) forming a language of models (Favre, 2004).

The formality introduced by a metamodel allows to

validate its instances to check whether its “language”

is correctly used. To allow for such a validation de-

velopers can infuse their metamodel with constraints

that need to hold using declarative languages for rules

and expressions like the Object Constraint Language

(OCL) (OMG, 2016). This in turn supports an early

detection of errors in a model. Designing cloud de-

ployments within a model is a common approach as it

grants an abstracted view on the overall system. Com-

pared to typical models, a runtime model additionally

possesses a causal connection to the abstracted sys-

tem (Bencomo et al., 2013; Szvetits and Zdun, 2016).

Hereby, effector components propagate changes in the

runtime model directly to the system and vice versa.

Using this strong connection to the system, a runtime

model forms a monitorable runtime state of the sys-

tem that allows to control and reason about it (Blair

et al., 2009).
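To illustrate how constraints attached to a metamodel support an early detection of errors, the following sketch checks a single rule on a toy model. It is not OCL; plain Python predicates stand in for the constraint language, and the element kinds, the `host` attribute, and the rule itself are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Element:
    kind: str                   # "compute" or "component" (assumed kinds)
    name: str
    host: Optional[str] = None  # name of the hosting compute node, if any


# A constraint is a message plus a predicate over an element and the model.
Constraint = Tuple[str, Callable[[Element, Dict[str, Element]], bool]]

CONSTRAINTS: List[Constraint] = [
    (
        "component must be placed on an existing compute node",
        lambda e, model: e.kind != "component"
        or (e.host in model and model[e.host].kind == "compute"),
    ),
]


def validate(model: List[Element]) -> List[str]:
    """Return one message per violated constraint; an empty list means valid."""
    by_name = {e.name: e for e in model}
    return [
        f"{e.name}: {message}"
        for e in model
        for message, holds in CONSTRAINTS
        if not holds(e, by_name)
    ]
```

A model in which a component refers to a compute node that does not exist is rejected before any deployment is attempted.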

Figure 1: Generic cloud deployment stack.


3 RELATED WORK

Creating a domain specific language is a com-

mon approach to reduce the complexity of design-

ing cloud deployments. Some of these languages

support the utilization of a runtime model like

CAMEL (Achilleos et al., 2019) and CloudML (Ferry

et al., 2018). Also, approaches around the Open Cloud Computing Interface (OCCI) cloud standard exist which provide runtime model functionalities (Zalila et al., 2017; Korte et al., 2018). Furthermore, an extension for OCCI is described by (Ahmed-Nacer et al., 2020) which is built around CloudSim (Calheiros et al., 2011). Among others, this simulation allows modeling data centers and simulating resource utilization and pricing strategies for cloud deployments.

Still, testing IAC and configuration management

scripts is challenging because of missing information

in form of a real world scenario, e.g., the current state

of the system to be configured and possible state de-

pendencies. A recent mapping study (Rahman et al.,

2019) found that most research concerning infrastruc-

ture as code is concerned with frameworks and tools

to support developers. In (Hanappi et al., 2016) an

automated model-based test framework is proposed

to determine whether a system configuration specifi-

cation converges to a desired state. In contrast to their

work our concept not only encompasses testing but

also allows practitioners a high level overview and di-

rect interactions for debugging purposes via a local

deployment.

The approach most similar to our concept is pre-

sented in (Ferry et al., 2015), which describes the uti-

lization of a models at runtime test environment next

to a production one to continuously deploy a multi

cloud system. In contrast to their work we consider

the local replication of a production cloud deploy-

ment. Furthermore, our approach enables the devel-

oper to replay workloads on the local duplicate to as-

sess the impact of planned adaptations to the produc-

tion environment.

4 APPROACH

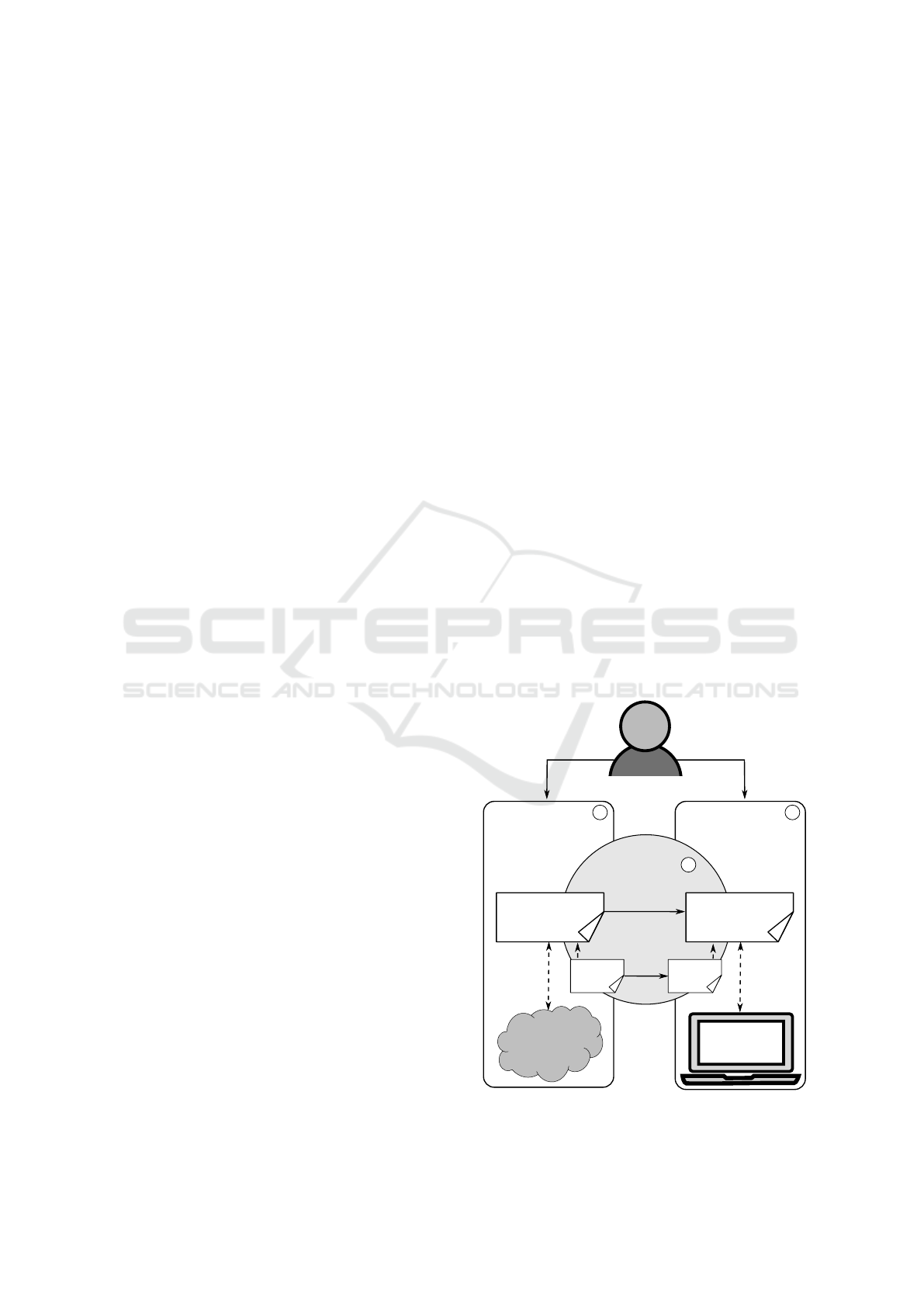

To ease the replication of production environments

and to easily assess the impact of adaptation changes,

we utilize a local runtime model duplicate as shown in

Figure 2. We separate the Production Environment (1) from the Local Environment (2) and use the Simulation Configurator (3) to create a duplicate

version of the production runtime model to locally

assess the impact of adaptation changes.

The Production Environment (1) consists of a

Runtime Model (Prod) that is causally connected over

a dedicated effector to a Cloud system. Within this

model all provisioned infrastructure resources and de-

ployed applications are present. This runtime model

is used by the DevOps to operate the system which

is an approach that can be often found in related

work (Achilleos et al., 2019; Korte et al., 2018). One

of the benefits of this model is that the DevOps have

access to an abstracted representation of the system

that can be easily adjusted to adapt to the needs of

changing requirements.

The Local Environment (2) is used to adjust, develop, and simulate desired adaptive actions, instead

of applying these live adaptation changes directly.

Within this environment a Runtime Model (Loc) is

maintained that is causally connected to a local en-

vironment, e.g., on a local Workstation or a server

instance. Hereby, the effector accompanying the run-

time model implements the simulation behaviour pro-

viding a simulation environment in which, e.g., con-

figuration management scripts can be applied to lo-

cally spawned compute resources. Depending on the

used environment, the developer has access to differ-

ent amounts of computing resources and therefore can

simulate the deployment on different levels of detail.

Figure 2: Overview of utilized environments.

The Simulation Configurator (3) configures the runtime model for two successive simulations: a deployment simulation and an orchestration simulation. For the deployment simulation, we duplicate and shrink the Runtime Model (Prod) from the

Production Environment into the Runtime Model (Loc)

so that it fits into the Local Environment. Hereby,

the transformation prunes the runtime model in terms

of assigned resources so that parts of the deployment are actually deployed in a simulation environment. The remaining parts of the model are simulated on a model-based level only, performing state transitions using a stub implementation of the element's state machine. To simulate runtime behaviour, we perform an orchestration simulation. For this simulation, we create a copy of the Scaling Rules applied to the Production Environment and apply them to our local Runtime Model (Loc). This allows us to investigate the extent to which developed changes cope with the utilized orchestration processes. Hereby,

the runtime model supports the investigation of run-

time states being changed by the orchestration pro-

cess while the simulation environment, e.g., allows

to define automated tests. Later on, the DevOps

can propagate the offline developed cloud applica-

tion components, states, and scaling rules to the

Production Environment.

4.1 Deployment Simulation

To locally work on cloud deployments, our approach

combines an actual local provisioning of resources of

interest while simulating the rest of the deployment.

Therefore, we reduce the size of the production en-

vironment's resources for our local replication. We

aim to mimic as many resources as possible without

breaking the limit of resources available for the devel-

opment of new cloud deployment states. Addition-

ally, we allow the developer to select different sim-

ulation levels for individual elements of the current

cloud deployment. Depending on the chosen simula-

tion level, the developer can work on new configura-

tion management scripts and test actual deployments.

To ease this process, we perform a transformation on the runtime model under investigation, e.g., the one of the production environment. We use this transformation to shrink the size of selected resources to fit to

the size of the new environment and add annotations

to specify simulation behaviour. This process is de-

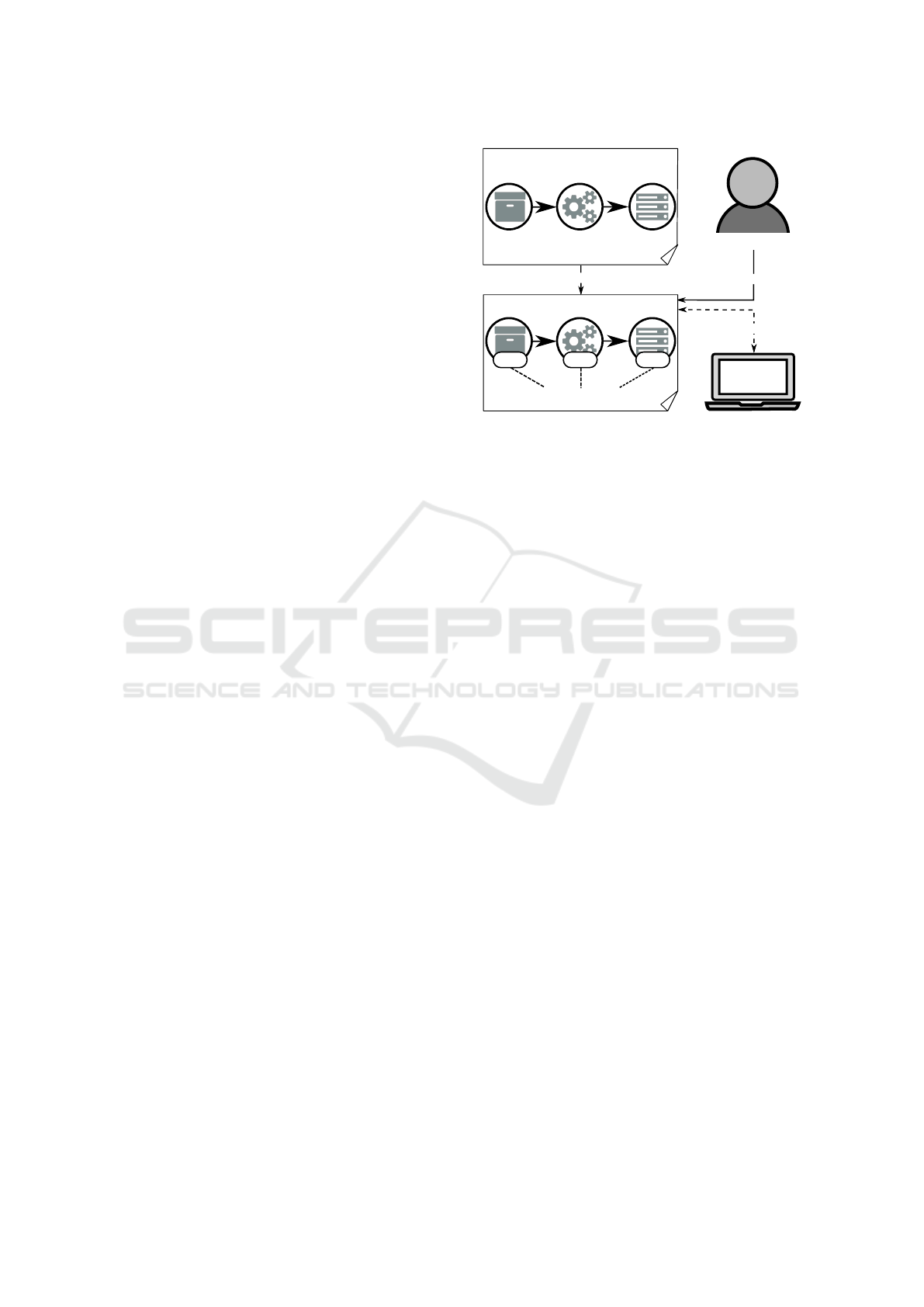

picted in Figure 3 and described in the following.

Figure 3: Runtime model simulation annotation.

As a first step in the deployment simulation process, we transform and duplicate the Runtime Model (Prod) representing the production environment into a Runtime Model (Loc) used for the local deployment. Within this transformation both models are instances of the same metamodel and therefore refer to the same language to describe the cloud deployment. During the transformation a Simulation Tag is added to each resource. This simulation tag

serves as an indicator to be recognized by the effector

of the runtime model. This effector ensures the causal

connection to the system. While the effector of the

production runtime model forwards requests to an ac-

tual cloud, the effector of the local runtime model han-

dles the simulation behaviour. The transformation au-

tomatically adds the simulation tag with a default sim-

ulation level of zero which describes that only man-

agement operations should be simulated on a model-

based level. Furthermore, this simulation level includes the simulation of lifecycle actions. Among others, these actions trigger state changes on a resource. Such actions, e.g., start or stop a VM, which corresponds to a transition between the inactive and active state of the compute node representing it.
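The transformation and the model-based simulation of lifecycle actions can be sketched as follows. This is a minimal illustration and not the implementation used in our tooling: the `Resource` class, the `tags` dictionary, and the `SimulationEffector` with its two transitions are hypothetical simplifications.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Resource:
    name: str
    state: str = "inactive"
    tags: Dict[str, object] = field(default_factory=dict)


def to_local_model(prod_model: List[Resource]) -> List[Resource]:
    """Duplicate the production model and tag every resource with level zero."""
    local_model = copy.deepcopy(prod_model)  # the production model stays untouched
    for resource in local_model:
        resource.tags["simulation.level"] = 0
    return local_model


class SimulationEffector:
    """Stub effector: at level zero only the modeled state is changed."""

    TRANSITIONS = {"start": "active", "stop": "inactive"}

    def apply(self, resource: Resource, action: str) -> None:
        if resource.tags.get("simulation.level", 0) == 0:
            resource.state = self.TRANSITIONS[action]
        else:
            # Higher levels would provision or deploy locally; omitted here.
            raise NotImplementedError("only level zero is sketched")
```

Triggering `start` on a resource of the local duplicate changes its modeled state to active, while the corresponding production resource is not affected.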

After the transformation, the DevOps can adjust

the transformed Runtime Model (Loc) with desired

simulation levels (L) and specializations. Therefore,

the automatically added tag can be infused with ad-

ditional information that can be used by the effec-

tor handling the simulation logic. A subset of used

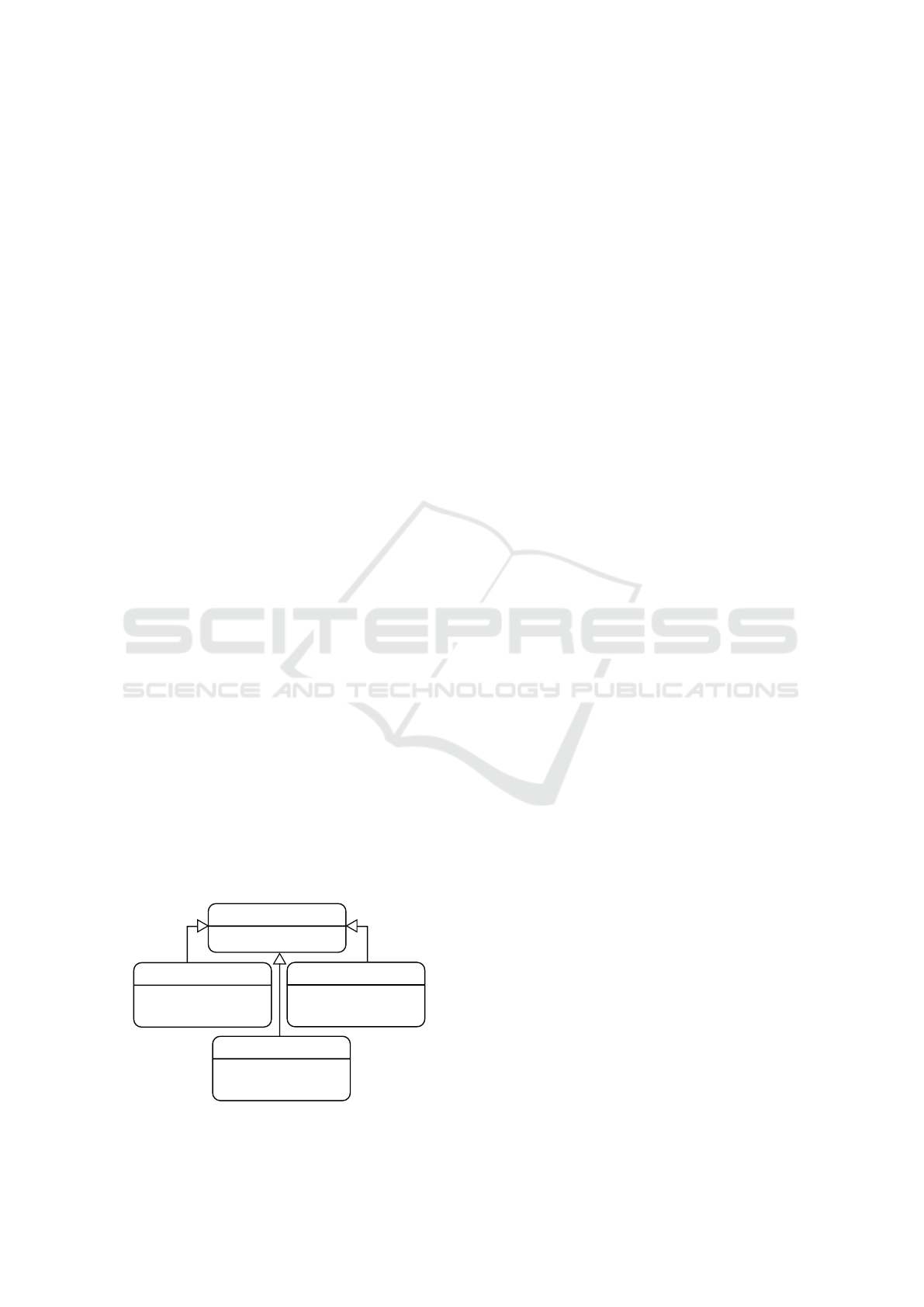

SimulationTag specializations is shown in Figure 4. In

this figure, the simulation.level attribute is shown with

a default value of zero that can be increased by the de-

veloper to set the desired simulation level of each re-

source in the model. As different simulation levels for

different entity types may require different attributes,

we specify a specialization to be used for individual

resource types.

The ComputeSim is used for compute re-

sources like VM or container instances. Here, a

simulation.level greater than zero is used to actually

provision the compute resource on the local envi-

ronment. To choose the kind of virtualization to be

used for the provisioning, the tag introduces multiple

attributes. Among others the virtualization.type and

compute.image can be set, allowing the developer to


define the desired configuration of the compute in-

stance. Depending on the effort made by the devel-

oper, exact images may be extracted from the pro-

duction environment and assigned to the simulation

tag. Herewith, all information of the environment is

replicated granting the most information of how the

adaptive changes affect the system. The simulation

tag is important for the local replication of the cloud

deployment for development purposes, as virtualized

resources are provisioned taking space from the local

workstation. To cope with the space limitations, fur-

ther attributes in the simulation tag may be used to

control the assigned sizes of the resource in relation

to its production environment counterpart.
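How a resource size could be reduced in relation to its production counterpart is sketched below. The attribute names (`cores`, `memory_mb`), the scaling factor, and the lower bounds are assumptions made for illustration; the simulation tag may control sizes differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Size:
    cores: int
    memory_mb: int


def shrink(prod: Size, factor: float,
           min_cores: int = 1, min_memory_mb: int = 512) -> Size:
    """Scale a production size down by `factor`, never below the given minimums."""
    if not 0 < factor <= 1:
        raise ValueError("factor must be in (0, 1]")
    return Size(
        cores=max(min_cores, int(prod.cores * factor)),
        memory_mb=max(min_memory_mb, int(prod.memory_mb * factor)),
    )
```

For example, shrinking a node with 8 cores and 16384 MB by a factor of 0.25 yields 2 cores and 4096 MB, while very small nodes are clamped to the minimum so that they can still be provisioned on a workstation.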

The ComponentSim shares the same behaviour for

the lowest simulation level, i.e., a simulation.level of

zero results in a simulation of state changes. For

components, this comprises, e.g., changing the state

of it from undeployed to deployed once a deploy ac-

tion is triggered. For the simulation of components

multiple simulation levels can be defined. For ex-

ample, a simulation.level of one can additionally sim-

ulate the time required to perform lifecycle actions

without actually executing them. Among others, this

simulation is useful as it allows to more easily ob-

serve shifting runtime states enforced by orchestra-

tion processes. To define the timing of the actions

the attributes action.timing.min and action.timing.max

can be set describing the timing's lower and upper

boundaries. This tag can even be further specialized

to handle higher levels of simulations. For example,

a simulation.level of two may add artificial workload

to be enforced on the compute node once the compo-

nent’s lifecycle actions are triggered. To generate the

workload we directly coupled the ComponentSim tag

with a configuration management script that allows to

stress the compute resource. Finally, when setting the

simulation.level to three an actual deployment is performed on the attached compute host. This simulation level helps to develop new configuration management scripts using the context provided by the runtime model or adjust existing ones. It should be noted that for an actual deployment the attached compute host must have a simulation level greater than zero, which can be checked by model validation.

Figure 4: A subset of utilized simulation tags. SimulationTag (simulation.level=0) is specialized by ComputeSim (virtualization.type, compute.image), ComponentSim (action.timing.min, action.timing.max), and SensorSim (change.rate, monitoring.results).
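The component simulation levels described above can be summarized in a small dispatch function. The sketch makes two assumptions that the text does not fix: level two is treated as additionally including the timing of level one, and the effector is reduced to a function that only returns a plan of what would happen. All names are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class ComputeSim:
    level: int = 0


@dataclass
class ComponentSim:
    level: int = 0
    timing_min: float = 0.0  # stands for action.timing.min
    timing_max: float = 0.0  # stands for action.timing.max


def plan_action(component: ComponentSim, host: ComputeSim,
                action: str, rng=random) -> dict:
    """Describe what a simulation effector would do for one lifecycle action."""
    plan = {"action": action, "delay": 0.0,
            "stress_host": False, "execute_script": False}
    if component.level >= 3:
        # An actual deployment needs a host that is really provisioned.
        if host.level < 1:
            raise ValueError("host must have a simulation level greater than zero")
        plan["execute_script"] = True
        return plan
    if component.level >= 1:
        # Simulate the duration of the action without executing it.
        plan["delay"] = rng.uniform(component.timing_min, component.timing_max)
    if component.level >= 2:
        # Assumed to be cumulative: artificial workload on top of the timing.
        plan["stress_host"] = True
    return plan
```

The `ValueError` raised for level three on an unprovisioned host corresponds to the validation rule mentioned in the text; in a model-driven setting this check would be expressed as a constraint on the model rather than at execution time.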

The specialized simulation tags provided in Fig-

ure 4 are by no means exhaustive. For example, a tag

for network simulations may be added that provides

actions that inject, e.g., http faults. In the following,

we discuss how locally deployed cloud applications

can be simulated in a more dynamic environment by

paying special attention to the SensorSim tag.

4.2 Orchestration Simulation

While the deployment simulation represents a rather

static simulation, cloud applications need to be tested

in dynamic scenarios to investigate their behavior un-

der different load scenarios. As already mentioned,

many runtime models provide scalability features al-

lowing to define rules when to add or remove compute

resources. Moreover, often orchestration engines are

used which manage modeled resources and directly

affect the runtime state. The partial deployment of the

runtime model coupled with the simulated part allows

to investigate how developed adaptive changes and

new components cope with the activities performed

by utilized orchestration engines. While we affect the

effectors of the runtime model to simulate behaviour,

the interface to the runtime model itself remains the

same. Therefore, orchestration engines need next to

no adjustments allowing to test the same scaling rules

applied to the production environment in the local

simulation environment.

To allow for a simulation of the orchestration pro-

cess, we artificially generate workload by making use

of monitoring information reflected within the run-

time model (Erbel et al., 2019). Therefore, we in-

troduce the SensorSim tag, see Figure 4, which al-

lows to generate monitoring information over differ-

ent means. Either the simulation mechanisms added

by the tag can be used to generate monitoring in-

formation or the modeled sensor can be actually de-

ployed and artificial workload can be enforced on

the system. To generate monitoring information, the monitoring.results and change.rate can be specified by the developer to define the values the sensor can observe and how often they change. To enforce the workload, the load simulation configuration management script coupled with the ComponentSim tag may be used. As a result, the developer can not only simulate how orchestration processes behave under generated monitoring results, but also under artificial workload.

This in turn allows to locally test the scalability and

robustness of designed cloud applications.
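A possible reading of the SensorSim attributes is sketched below. We assume here that change.rate denotes the number of observation steps for which a value is held before the next one is reported; the exact semantics, the result labels, and the simple scaling rule are assumptions for illustration and do not reproduce the orchestration engine used in the case study.

```python
import itertools
from dataclasses import dataclass
from typing import Iterator, List


@dataclass
class SensorSim:
    monitoring_results: List[str]  # values the simulated sensor may report
    change_rate: int               # assumed: steps each value is held

    def __post_init__(self) -> None:
        if self.change_rate < 1:
            raise ValueError("change_rate must be at least 1")

    def observations(self) -> Iterator[str]:
        """Cycle through the configured results, holding each for change_rate steps."""
        for value in itertools.cycle(self.monitoring_results):
            for _ in range(self.change_rate):
                yield value


def scaling_decision(cpu_state: str) -> str:
    """Hypothetical scaling rule reacting to the reported CPU state."""
    if cpu_state == "Critical":
        return "upscale"
    if cpu_state == "None":
        return "downscale"
    return "keep"
```

Feeding the generated observations into the rule, e.g., `[scaling_decision(o) for o in itertools.islice(sensor.observations(), 4)]`, shows how the same scaling logic can be exercised locally without producing real load.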


5 CASE STUDY

In our case study the production environment is a pri-

vate OpenStack cloud that currently hosts an Apache

Hadoop cluster which needs to be enhanced with the

functionalities provided by Apache Spark. To not

compromise the functionality offered by the produc-

tion environment, the required deployment and adap-

tation scripts need to be developed apart from it,

which using our approach can be done offline and

without the need of a dedicated staging environment.

In this study, we completely develop the new desired

component and its accompanying script offline. Fur-

thermore, we investigate how the newly developed

management scripts cope with scaling rules applied

to the deployed cluster. In the following, the setup,

execution and results of the case study are presented.

5.1 Case Study Setup

We implemented our approach using the OCCI

cloud standard, as it provides an extensible data model

accompanied by a uniform interface supporting the

runtime model management. The OCCIWare ecosys-

tem (Zalila et al., 2017) implements an Eclipse Mod-

eling Framework (EMF) metamodel for this standard

and provides editors and generators, as well as a

server maintaining an OCCI runtime model. Within

our case study, OCCI extensions and effectors to sup-

port sensor (Erbel et al., 2019), container (Paraiso

et al., 2016) and configuration management (Korte

et al., 2018) are used. We enhanced these effectors

with simulation behaviour for use in the local envi-

ronment. For the deployment of the runtime model,

a model-driven adaptation process is used that is al-

ready presented in previous work (Erbel et al., 2018).

While a complete introduction of the standard is out

of scope of this paper, its general structure fits to the

one shown in Figure 1.

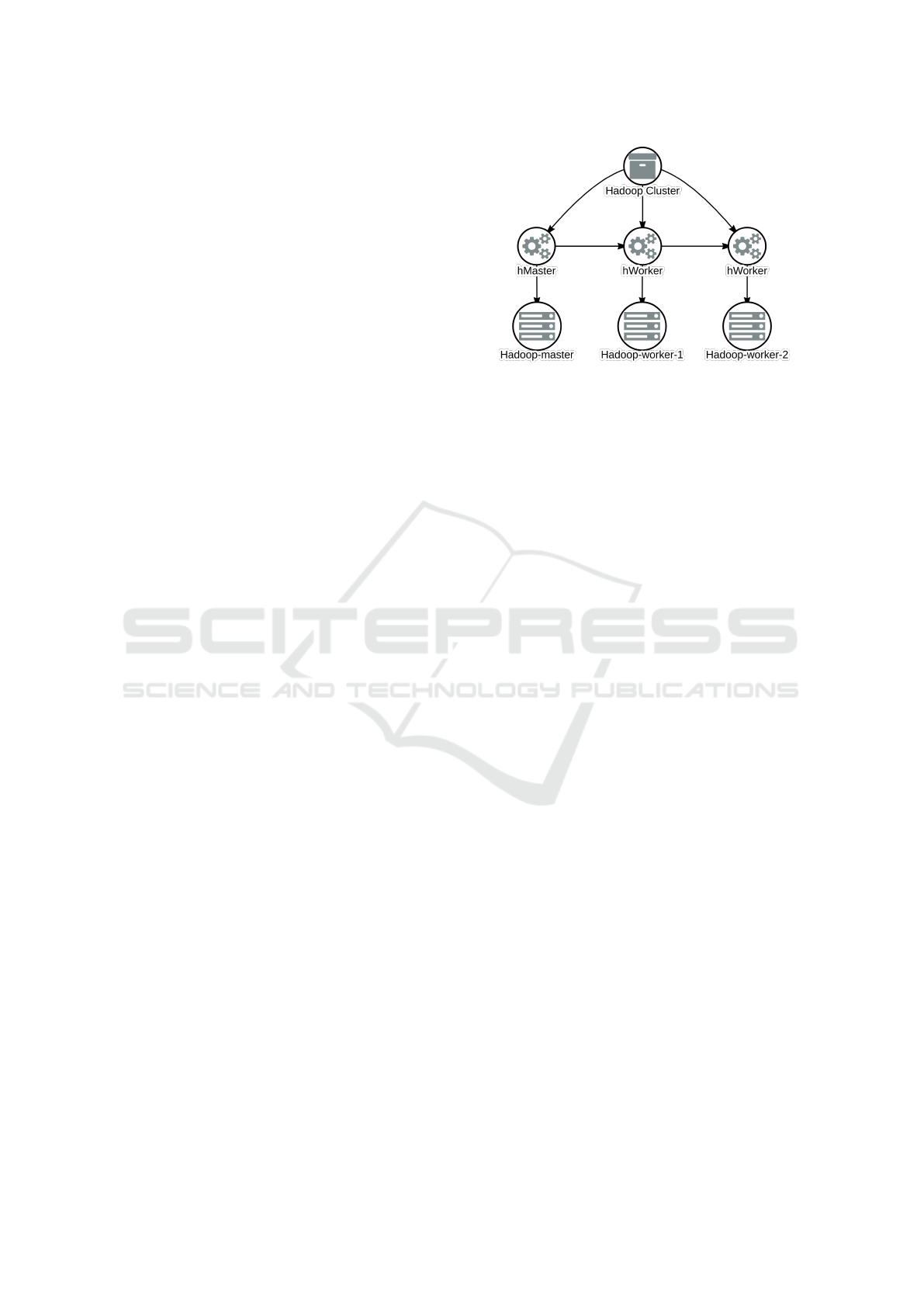

Figure 5 shows the runtime model describing the deployed Hadoop cluster. While the production environment consists of multiple VMs spanning the cluster, we only depict three machines for clarity. These three VMs host the fundamental components to deploy a Hadoop Cluster application. In this case, one VM hosts an hMaster component which describes the cluster's NameNode and ResourceManager. Furthermore, the application consists of two VMs hosting an hWorker component, representing the cluster's DataNodes. Additionally, each VM in the model is connected over a network present in the runtime model. We omitted this network from the visualisation for clarity.

Figure 5: Production environment runtime model.

5.2 Case Study Execution

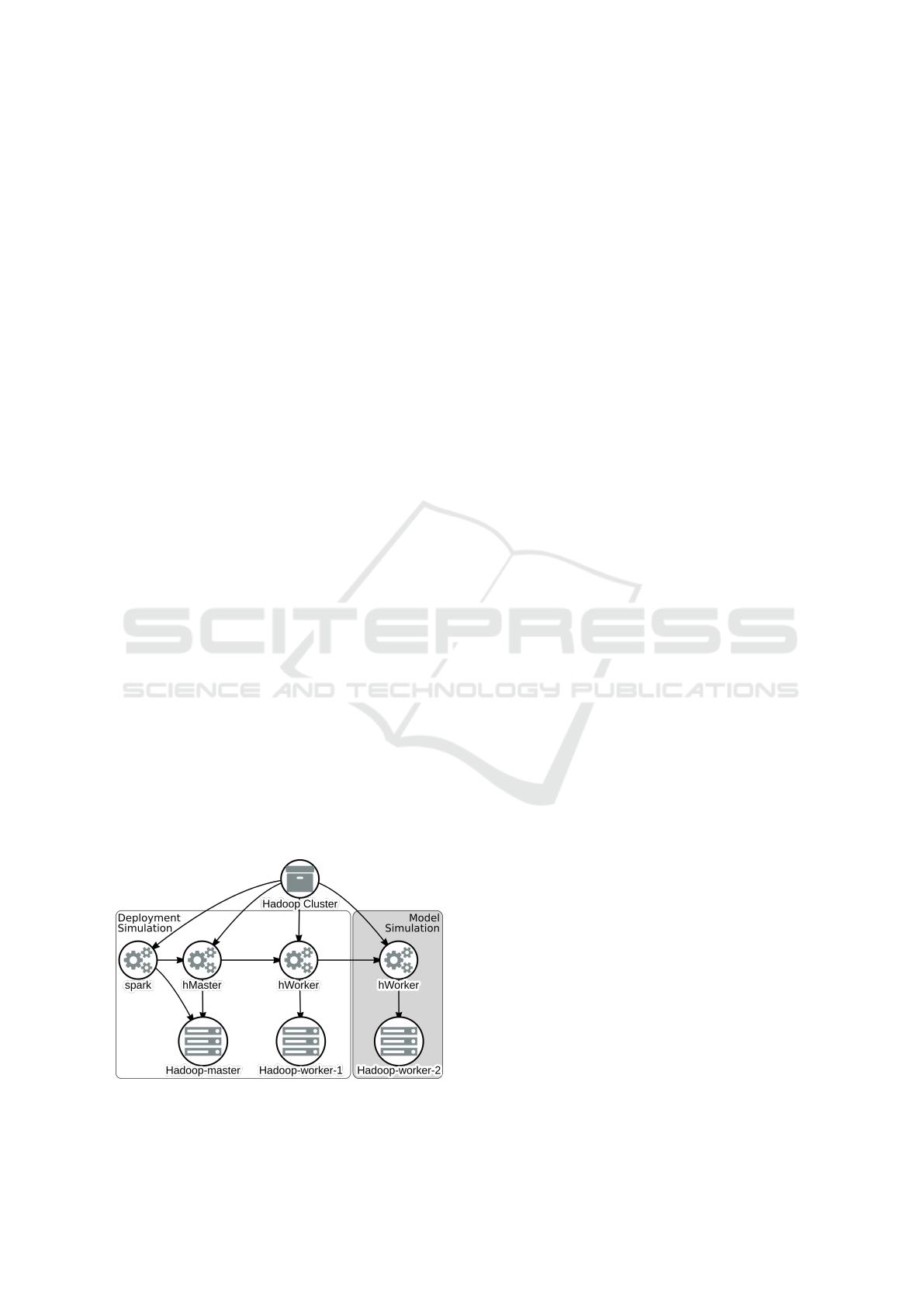

To perform our deployment simulation, we first re-

trieved the runtime model from the production environ-

ment. Thereafter, we performed the simulation trans-

formation on it annotating each resource with a simu-

lation tag. As shown in Figure 6, we then adjusted the

simulation level of one worker and one master node

in such a manner that an actual provisioning and de-

ployment is performed. To enhance the cluster with

the capabilities of Spark, we developed a new compo-

nent spark and attached it to the Hadoop Cluster ap-

plication. To not strain our local resources, we tagged

the Hadoop-master and Hadoop-worker-1 so that they

are provisioned in form of containers on which their

components can be deployed. Furthermore, we ad-

justed the tags so that the Hadoop-worker-2 and its

hosted component are only simulated on a model-

based level, i.e., its state changes. We tag the runtime

model in such a manner, as we assume that when the

configuration management script to be developed op-

erates correctly on one worker and master node, that it

also performs correctly on each other node in the clus-

ter. It should be noted that the simulation levels can

also be adjusted at runtime. Finally, we deployed

the tagged runtime model in our local simulation en-

vironment. This process resulted in the provision-

ing of two local container instances representing the

Hadoop-master and Hadoop-worker-1 compute node,

while the Hadoop-worker-2 is simulated on a model-

based level and thus is only present in the runtime

model in state active.
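The tagging used in this setup can be written down compactly. The dictionary below is only an illustrative encoding: the concrete level values and the `"container"` value for virtualization.type are assumptions, while the node names are those of the case study.

```python
# Hypothetical encoding of the simulation tags chosen for the compute nodes.
simulation_tags = {
    "Hadoop-master": {"simulation.level": 1, "virtualization.type": "container"},
    "Hadoop-worker-1": {"simulation.level": 1, "virtualization.type": "container"},
    "Hadoop-worker-2": {"simulation.level": 0},
}


def partition(tags):
    """Split node names into locally provisioned and purely simulated ones."""
    provisioned = sorted(n for n, t in tags.items() if t["simulation.level"] > 0)
    simulated = sorted(n for n, t in tags.items() if t["simulation.level"] == 0)
    return provisioned, simulated


provisioned, simulated = partition(simulation_tags)
```

With this tagging, two nodes are provisioned as local containers and one node exists only in the runtime model, which matches the outcome described above.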

After checking the functionality of the deployed

cluster, we enhanced the management scripts to ad-

ditionally provision Spark capabilities. During this

development process, we used the functionalities pro-

vided by the runtime model to manually trigger indi-

vidual lifecycle actions of the components which al-

lowed to develop individual parts of the configuration

management scripts step by step. At first, we adjusted


the deploy step responsible to simply download the

Spark binaries and triggered the deploy lifecycle ac-

tion. Thereafter, we adapted the configuration step

of the component which among others utilizes infor-

mation stored in connected components. In case of

the hMaster component, e.g., the hostnames of connected worker nodes are registered. This step is of particular interest as it utilizes the information provided by the runtime model and thus also the simulated Hadoop-worker-2 compute node. Even though the compute node is not started, we could observe that the configuration registered the node. It should be noted that this configuration setup could lead to failing startups for non-dynamic and non-fault-tolerant cloud applications. Finally, for the start lifecycle

action no adjustments had to be performed, as Spark

requires the same services as the Hadoop cluster. To

check whether the designed adaptations can be ap-

plied to the production environment, we stored our per-

formed changes. Thereafter, we freshly deployed the

first version of the runtime model, i.e., the original

duplicate of the production environment, and infused

it with our newly developed components.

After we ensured that the adaptation of the Hadoop

cluster is applicable, we tested how our orchestration

processes handle the deployment under specific work-

load. Therefore, we enhanced our runtime model

with sensor elements and generated monitoring infor-

mation for the CPU load of each worker and master

node. To scale the deployment, we reused a previously developed engine, which monitors the gathered monitoring results in the runtime model and adjusts the deployment accordingly (Erbel et al., 2019). To quickly get an overview of the engine's scaling mechanisms, we used the capabilities of the runtime model to directly set up and affect the results to be observed by the sensor. For example, to investigate the upscale behaviour we set the CPU sensor to only monitor critical load.

Figure 6: Local deployment simulation runtime model.

5.3 Results and Observations

In the deployment simulation, we chose to provision

the production compute nodes locally as containers

rather than as VMs. This decision resulted in faster

local deployment times and less strain on our local

system compared to the utilization of hardware vir-

tualization. However, to spawn container nodes we

needed to create a specialized image which mimics

the VM of the production environment. Depending on

the scenario, the change of virtualization techniques

can apply some constraints such as denying access to

specific configurations. After the creation of the im-

age, the actual execution of the component on a local

basis allowed for short cycles to create, improve and

test the respective configuration management script.

By simulating parts of the deployment model, we

could utilize the information stored within the runtime

model without an actual provisioning of the compute

nodes resulting in less resources required. Among

others, this simulation supported the development of

queries used to retrieve the address and port of asso-

ciated worker and master nodes.
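A query of this kind can be sketched as follows. The data structures `Compute` and `Component`, the function `endpoints`, the attribute key `port`, and all addresses and port values are hypothetical; the sketch only shows that such a query needs the model, not provisioned nodes.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    name: str
    attributes: dict = field(default_factory=dict)


@dataclass
class Compute:
    name: str
    address: str
    components: list = field(default_factory=list)


def endpoints(model, component_name, port_key="port"):
    """Return (address, port) for every node hosting the named component."""
    return [
        (node.address, comp.attributes[port_key])
        for node in model
        for comp in node.components
        if comp.name == component_name and port_key in comp.attributes
    ]


# Illustrative model content; names, addresses, and ports are made up.
model = [
    Compute("master", "10.0.0.1", [Component("spark-master", {"port": 7077})]),
    Compute("worker-1", "10.0.0.2", [Component("spark-worker", {"port": 7078})]),
]
print(endpoints(model, "spark-master"))
```

Since the lookup reads only model attributes, it can be developed and tested while the compute nodes themselves are merely simulated.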

While the orchestration simulation did not support
the development of the cloud deployment directly, it
allowed us to test the robustness of the developed adjustments.
Furthermore, by applying already existing
scaling rules, we observed that some adjustments
were required. The downscaling rule worked as intended,
as resources only needed to be removed from
the model. The upscaling rule, however, needed to
be adjusted to incorporate the newly developed component.
In the following, we discuss the extent to which
the described simulation processes support DevOps
in developing and integrating
adaptive changes to running deployments.

6 DISCUSSION

For practitioners, access to a local environment for
cloud applications can support the development process,
as it allows multiple developers to work simultaneously
using their own hardware resources. Furthermore,
it allows them to develop and test adaptations offline,
without requiring access to a staging environment.
However, to create such a local environment,
the size of the modeled compute resources had to be
reduced while focusing on areas of interest. Therefore,
to create a meaningful environment, the person
or team holding the DevOps role needs to know what
must be observed and adjust the simulation levels
of the system accordingly. Furthermore, attention
must be paid to the available resources including
their restrictions. To cope with this issue, defining
a range of resources of interest could be beneficial
to automatically adjust the partial deployment to
local resources. Moreover, the described simulation
capabilities helped to develop and test orchestration
engines built around the runtime model, especially
as a pure model-based simulation allows automated
tests to be executed. Additionally, when trying to
identify bugs, the timing of the lifecycle actions can be
slowed, providing a clearer picture of transitioning
runtime states. Test cases can thereby be built around
individual runtime model states, which can be easily
extracted. The research community may also
benefit from a runtime model-based simulation environment,
not only because it allows cloud deployments to be
developed and tested quickly, but also because it
provides an environment that may serve as
a replication kit for performed studies. The
simulation environment thereby eases the replication
of a study, as no cloud access is required.
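How slowed lifecycle actions and extracted states can feed automated tests is sketched below. The lifecycle in `TRANSITIONS`, the class `SimulatedComponent`, and its `delay` and `history` fields are assumptions for illustration and do not reproduce the simulation implementation of the case study.

```python
import time
from dataclasses import dataclass, field

# Hypothetical lifecycle: action -> (required state, resulting state)
TRANSITIONS = {
    "deploy": ("undeployed", "deployed"),
    "configure": ("deployed", "inactive"),
    "start": ("inactive", "active"),
}


@dataclass
class SimulatedComponent:
    name: str
    state: str = "undeployed"
    delay: float = 0.0  # seconds each simulated action takes
    history: list = field(default_factory=list)

    def execute(self, action):
        """Simulate one lifecycle action without touching any real resource."""
        required, result = TRANSITIONS[action]
        if self.state != required:
            raise ValueError(f"{action} not allowed in state {self.state}")
        time.sleep(self.delay)  # increase delay to observe transitions
        self.state = result
        self.history.append((action, result))  # extractable state trace
        return self.state


component = SimulatedComponent("spark-worker", delay=0.0)
for action in ("deploy", "configure", "start"):
    component.execute(action)
print(component.history)
```

Raising `delay` stretches each transition for manual inspection, while `history` provides the sequence of states that a test case can assert against.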

Overall, having a local simulation environment for

distributed systems proved to be useful for the de-

velopment of cloud applications while assessing the

impact of changes prior to an actual deployment in a

production environment.

6.1 Threats to Validity

Even though we only provided one case study to show
the feasibility of our concept, the study revealed the
possibilities of the individual simulation levels of our
runtime model-based approach. Furthermore, the study
allowed us to demonstrate how the environment supports
the development process for cloud deployments and
connected orchestration tools. Our approach depends
on the utilization of runtime models, for which many
approaches can be found in the literature. We chose
to evaluate the simulation approach using OCCI, as
it is standardized and has an existing ecosystem built
around it. Even though only one example cloud runtime
modelling language was investigated, the concept
of the approach is applicable to a wide variety
of runtime models, as the tag can be implemented by
different means, e.g., as a simple annotation. To perform
our study, only a single production cloud environment
was used. However, due to the abstract nature
of the model-driven approach, the resulting model and
thus our process is applicable to different providers
as long as the implementation supports them. Furthermore,
in terms of virtualization techniques, our case
study focused on the utilization of locally spawned
container instances. While only one kind of compute
resource was discussed, there is conceptually no
difference to provisioning VMs locally.

7 CONCLUSION

In this paper, we presented a simulation concept utilizing
a runtime model to assess the impact of live
cloud adaptations prior to a production deployment.
We demonstrated how a production environment can
be locally recreated by annotating individual model
elements with the desired simulation behaviour. Based on
an example case study, we discussed how the partial
simulation supports the development process of adaptive
changes on a local workstation. Furthermore, we
presented how the runtime model helps to assess
the impact of developed changes in a dynamic environment.
To this end, we used orchestration engines that adapt
the cloud deployment based on simulated monitoring
results. Overall, the approach supports
the development process for cloud applications
by providing access to a local deployment and simulation
environment, which allows the impact of developed
adaptations to be assessed early.

In future work, we aim at extending the simulation
capabilities of the individual cloud components
to exhaustively investigate the possibilities for a local
replication. Additionally, we plan to extend the
number of cloud application scenarios in which the simulation
environment is tested. Furthermore, we will investigate
to what extent the simulation environment can
be linked to the production environment to directly
stream successful adaptive actions.

AVAILABILITY

The implementation and videos demonstrating the
case study are available at:
https://gitlab.gwdg.de/rwm/de.ugoe.cs.rwm.domain.workload.

REFERENCES

Achilleos, A. P., Kritikos, K., Rossini, A., Kapitsaki,

G. M., Domaschka, J., Orzechowski, M., Seybold,

D., Griesinger, F., Nikolov, N., Romero, D., and Pa-

padopoulos, G. A. (2019). The cloud application mod-

elling and execution language. Journal of Cloud Com-

puting, 8(1):20.

Ahmed-Nacer, M., Kallel, S., Zalila, F., Merle, P., and

Gaaloul, W. (2020). Model-Driven Simulation of

Elastic OCCI Cloud Resources. The Computer Jour-

nal.

Armbrust, M., Fox, A., Griffith, R., Joseph, A. D., Katz,
R. H., Konwinski, A., Lee, G., Patterson, D. A.,
Rabkin, A., Stoica, I., and Zaharia, M. (2009). Above
the Clouds: A Berkeley View of Cloud Computing.
Electrical Engineering and Computer Sciences, University
of California at Berkeley.

SIMULTECH 2021 - 11th International Conference on Simulation and Modeling Methodologies, Technologies and Applications

Bencomo, N., Blair, G., Götz, S., Morin, B., and Rumpe,
B. (2013). Report on the 7th International Workshop
on Models@run.time. ACM SIGSOFT Software Engineering
Notes, 38(1):27–30.

Blair, G., Bencomo, N., and France, R. B. (2009).
Models@run.time. Computer.

Brikman, Y. (2019). Terraform: Up & Running: Writing

Infrastructure as Code. O’Reilly Media.

Calheiros, R. N., Ranjan, R., Beloglazov, A., De Rose, C.

A. F., and Buyya, R. (2011). Cloudsim: A toolkit for

modeling and simulation of cloud computing environ-

ments and evaluation of resource provisioning algo-

rithms. Softw. Pract. Exper., 41(1):23–50.

Erbel, J., Brand, T., Giese, H., and Grabowski, J. (2019).

OCCI-compliant, fully causal-connected architecture

runtime models supporting sensor management. In

Proceedings of the 14th Symposium on Software En-

gineering for Adaptive and Self-Managing Systems

(SEAMS).

Erbel, J., Korte, F., and Grabowski, J. (2018). Comparison

and runtime adaptation of cloud application topolo-

gies based on occi. In Proceedings of the 8th Interna-

tional Conference on Cloud Computing and Services

Science (CLOSER).

Favre, J.-M. (2004). Towards a Basic Theory to Model

Model Driven Engineering. In Proceedings of the

3rd UML Workshop in Software Model Engineering

(WiSME).

Ferry, N., Chauvel, F., Song, H., Rossini, A., Lushpenko,

M., and Solberg, A. (2018). Cloudmf: Model-driven

management of multi-cloud applications. ACM Trans-

actions on Internet Technology (TOIT), 18(2):1–24.

Ferry, N., Chauvel, F., Song, H., and Solberg, A. (2015).

Continous deployment of multi-cloud systems. In

Proceedings of the 1st International Workshop on

Quality-Aware DevOps (QUDOS).

Guerriero, M., Garriga, M., Tamburri, D. A., and Palomba,

F. (2019). Adoption, support, and challenges of

infrastructure-as-code: Insights from industry. In Pro-

ceedings of the 2019 IEEE International Conference

on Software Maintenance and Evolution (ICSME).

Hanappi, O., Hummer, W., and Dustdar, S. (2016). Assert-

ing reliable convergence for configuration manage-

ment scripts. In Proceedings of the 2016 ACM SIG-

PLAN International Conference on Object-Oriented

Programming, Systems, Languages, and Applications

(OOPSLA).

Humble, J. and Molesky, J. (2011). Why enterprises must

adopt devops to enable continuous delivery. Cutter IT

Journal, 24(8):6.

Korte, F., Challita, S., Zalila, F., Merle, P., and Grabowski,

J. (2018). Model-driven configuration management

of cloud applications with occi. In Proceedings of

the 8th International Conference on Cloud Comput-

ing and Services Science (CLOSER).

Kühne, T. (2006). Matters of (meta-) modeling. Software
& Systems Modeling, 5(4):369–385.

Liu, C., Mao, Y., Van der Merwe, J., and Fernandez, M.

(2011). Cloud resource orchestration: A data-centric

approach. In Proceedings of the biennial Conference

on Innovative Data Systems Research (CIDR).

Mell, P. and Grance, T. (2011). The NIST Definition of
Cloud Computing. Available online:
https://nvlpubs.nist.gov/nistpubs/Legacy/SP/nistspecialpublication800-145.pdf,
last retrieved: 04/22/2021.

OMG (2003). MDA Guide Version 1.0.1. Available online:
http://www.omg.org/news/meetings/workshops/UML_2003_Manual/00-2_MDA_Guide_v1.0.1.pdf,
last retrieved: 04/22/2021.

OMG (2011). Unified Modeling Language Infrastructure
Specification. Available online:
http://www.omg.org/spec/UML/2.4.1/Infrastructure/PDF,
last retrieved: 04/22/2021.

OMG (2016). Object Constraint Language. Available on-

line: http://www.omg.org/spec/OCL/2.4/PDF/, last

retrieved: 04/22/2021.

Paraiso, F., Challita, S., Al-Dhuraibi, Y., and Merle, P.

(2016). Model-Driven Management of Docker Con-

tainers. In Proceedings of the 9th IEEE International

Conference on Cloud Computing (CLOUD).

Rahman, A., Mahdavi-Hezaveh, R., and Williams, L.

(2019). A systematic mapping study of infrastructure

as code research. Information and Software Technology,
108:65–77.

Szvetits, M. and Zdun, U. (2016). Systematic literature re-

view of the objectives, techniques, kinds, and archi-

tectures of models at runtime. Software & Systems

Modeling.

Wurster, M., Breitenbücher, U., Falkenthal, M., Krieger,
C., Leymann, F., Saatkamp, K., and Soldani, J.
(2020). The essential deployment metamodel: a systematic
review of deployment automation technologies.
SICS Software-Intensive Cyber-Physical Systems,
35(1):63–75.

Zalila, F., Challita, S., and Merle, P. (2017). A model-driven

tool chain for OCCI. In Proceedings of the 25th Inter-

national Conference on Cooperative Information Sys-

tems (CoopIS).
