Detection of Security Vulnerabilities Induced by Integer Errors

Salim Yahia Kissi¹ᵃ, Yassamine Seladji¹ᵇ and Rabéa Ameur-Boulifa²ᶜ

¹ LRIT, University of Abou Bekr Belkaid, Tlemcen, Algeria

² LTCI, Télécom Paris, Institut Polytechnique de Paris, France

Keywords:

Security Vulnerability, Memory Errors, Software Analysis, Satisfiability Analysis, Integer Overflow.

Abstract:

The computing platforms used to execute software programs (e.g. storage devices, compilers, operating systems) sometimes make these programs misbehave, and attackers can exploit this type of issue to access sensitive data and compromise the system. This paper presents an automatable approach for detecting such security vulnerabilities caused by an improper execution environment. Specifically, the advocated approach targets the detection of security vulnerabilities in software caused by memory overflows such as integer overflow. Based on an analysis of the source code and on a knowledge base gathering common execution platform issues and known restrictions, the paper proposes a framework able to infer the required assertions, without manual code annotation or rewriting, and to generate logical formulas that can be used to reveal potential code weaknesses.

1 INTRODUCTION

With the aim of classifying software weaknesses (types of vulnerabilities), organizations define a huge number of different data sources to be used by software developers to avoid particular attacks. These data sources are generally descriptions and sets of characteristics, which require extra effort to interpret, to assess, and to demonstrate potential risks. In practice, companies carry out this kind of task through review processes and audit sessions comprising a wide range of experiments. However, manual reviews can be time-consuming and costly in terms of resources, and they can fall short in detecting security vulnerabilities. From a software engineering perspective, finding security vulnerabilities is not a trivial task for several reasons, including that software weaknesses are often written in an informal style, use various technical information (concepts), and require domain expertise to interpret.

In this work we consider a well known threat to

systems security: integers errors. These errors result

from integer operations, including arithmetic over-

flow, oversized shift, division-by-zero, lossy trunca-

tion and sign misinterpretation, that can be manipu-

a

https://orcid.org/0000-0002-9222-0291

b

https://orcid.org/0000-0003-2778-7555

c

https://orcid.org/0000-0002-2471-8012

lated by malicious users. Our focus here is on arith-

metic overflow within the C standard. One reason

why such errors remain serious source of problems

is that it is difficult for programmers to reason about

integers semantics (Dietz et al., 2015). Importantly,

there are unintentional numerical bugs that are caused

by the execution environment, including the widely-

used applications and libraries, but they can also be

caused by the features of the execution platforms (e.g.

compilers, operating systems). This is not merely a

fringe case, but it is observable already on small pro-

grams. To illustrate the problem, consider the code

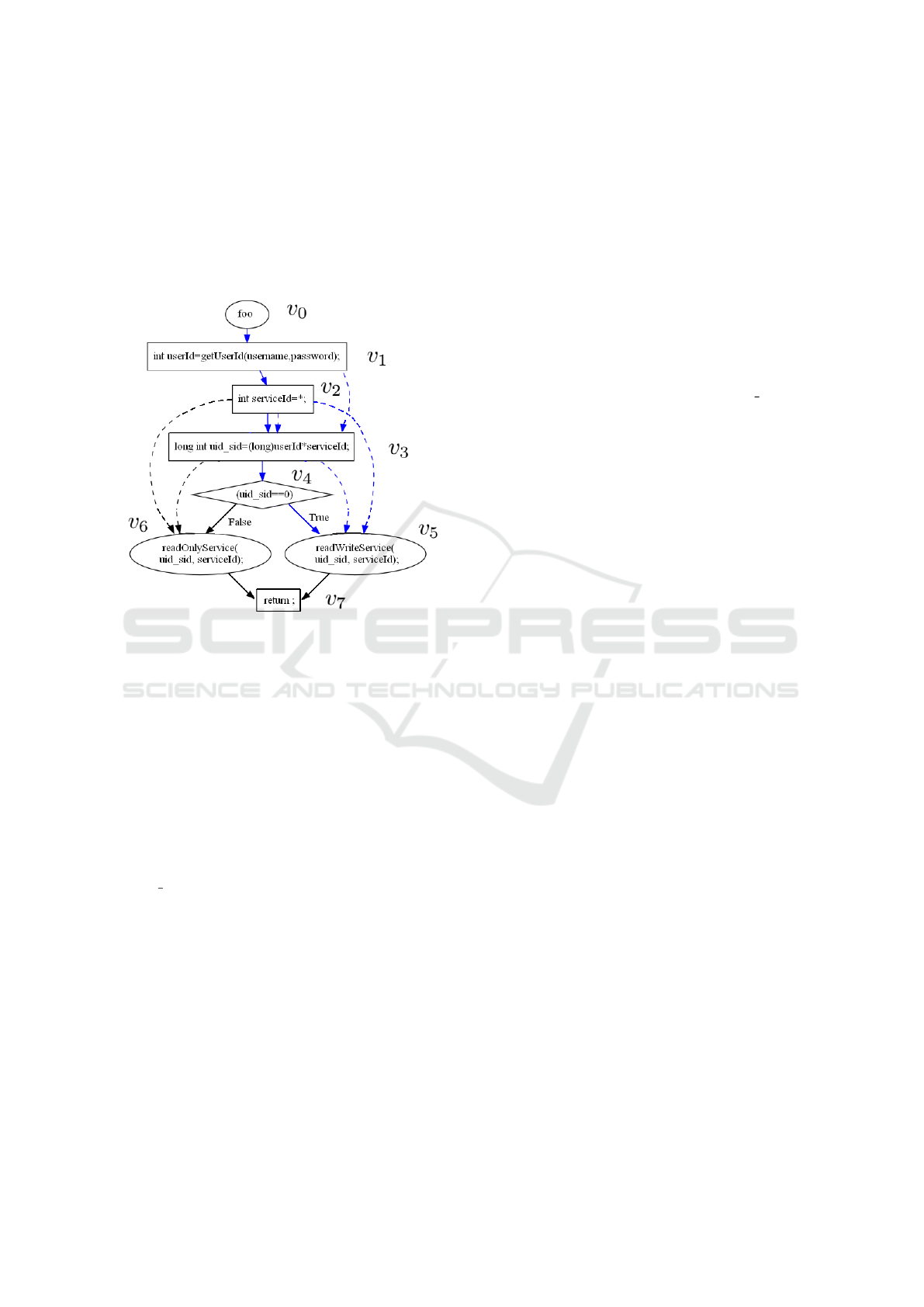

snippet shown in Figure 1. The code shows a method

Figure 1: Access Control Sample Code.

which computes the access rights of an user to ser-

vices from his credentials (username and password)

provided as inputs. It specifies that read and write

access rights to services are granted only to a spe-

Kissi, S., Seladji, Y. and Ameur-Boulifa, R.

Detection of Security Vulnerabilities Induced by Integer Errors.

DOI: 10.5220/0010551301770184

In Proceedings of the 16th International Conference on Software Technologies (ICSOFT 2021), pages 177-184

ISBN: 978-989-758-523-4

Copyright

c

2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

177

cific user the administrator (its userId has a value of

0) and other users (their userId are different from 0)

should access with reading rights only. Note that the

access right is obtained by multiplying the user iden-

tity and the service identity (line 6). Compiling and

running this code with gcc on Windows operating sys-

tem 32/64 bits using 64 bits CPU (x86 64 such Intel

and AMD processors) produces a program that grants

read and write permissions to an unauthorized user.

This security problem stems from the arithmetic op-

eration, the multiplication overflows resulting in un-

defined behavior.

Detecting computer security vulnerabilities that are inadvertently generated by runtime platforms is still an open problem (Hohnka et al., 2019). A number of unknown (numerical) bugs in widely used open source software packages (and even in safe integer libraries) inadvertently create vulnerabilities in the resulting code. This work presents an approach to formally detect misbehaviour that can lead to security concerns. We are able to detect exploits of C programs induced by an unintentional arithmetic overflow caused by the execution platform. To allow an analysis of programs that takes the execution platform into account, we suggest enhancing symbolic execution models with characteristics of the computer system, so that the same model supports both software-specification and hardware-specification analyses. The model gives a fully formal and analyzable semantics for C code in terms of a logical formula, and SMT solvers are used to decide whether this formula is satisfiable.

Contribution. This article presents our approach, which provides the means to formally specify software weaknesses and to evaluate their potential technical impact using formal proofs. The approach, based on static analysis, is designed to evaluate the security impact of integer errors, in particular integer overflows, by analysing memory error exploitations over programs. Formally speaking, we use symbolic execution to generate a program constraint (PC), and we derive a security constraint (SC) from predefined security requirements. In addition, based on precise knowledge of the execution context of the analysed program (EC), we propose to solve the statement $EC \vdash PC \wedge \neg SC$: we seek to find out whether there is an assignment of values to the program inputs, executed in a certain context, which satisfies PC but violates SC.

Although we focus on integer errors and on programs written in the C language, we believe that our approach is quite general. It can be applied to analyze other sources of problems (e.g. software/hardware exceptions, pointer aliasing) and also other programming languages.

The paper is organized as follows: Section 2 presents a high-level view of memory errors, in particular buffer overflows and integer errors. This section is followed by an overview of the proposed end-to-end approach for the specification and verification of their potential security impact (Section 3). In Section 4, we present existing approaches that deal with security vulnerability detection. Section 5 concludes the paper and discusses possible directions for this work.

2 MEMORY ERRORS

Memory errors in C and C++ programs are probably the best-known software vulnerabilities (Van der Veen et al., 2012). These errors include buffer overflows, use of pointer references, format string vulnerabilities and arithmetic vulnerabilities. This paper focuses on a subclass of these vulnerabilities, buffer overflows and integer errors, which mainly concern memory corruption.

Buffer Overflow. Buffers are areas of memory expected to hold data. When a variable is declared in a program, space is reserved for it, possibly dynamically at run time. A buffer overflow may occur when the size of the data is larger than the buffer size: while writing the data into the buffer, the program overruns the buffer's boundary and overwrites adjacent memory, which results in unpredictable program behaviour, including crashes or incorrect results.
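The overwrite of adjacent memory described above can be mimicked in a few lines. Since Python bounds-checks its own containers, the following sketch (ours, not from the paper) models a flat memory region as a `bytearray` with a hypothetical layout, an 8-byte buffer immediately followed by a one-byte `is_admin` flag, and a hypothetical `unchecked_copy` routine that, like an unsafe C copy, never compares the length of the data with the buffer size.

```python
# Hypothetical memory layout, for illustration only:
# bytes 0..7 hold an 8-byte buffer, byte 8 holds an "is_admin" flag.
BUF_OFFSET, BUF_SIZE = 0, 8
FLAG_OFFSET = BUF_OFFSET + BUF_SIZE


def make_memory() -> bytearray:
    """Fresh simulated memory, all zero (so is_admin = 0)."""
    return bytearray(BUF_SIZE + 1)


def unchecked_copy(mem: bytearray, offset: int, data: bytes) -> None:
    """Copy data into mem at offset without comparing len(data) to BUF_SIZE,
    mimicking an unsafe C copy routine. (Writing beyond byte 8 raises
    IndexError only because the simulated region ends there.)"""
    for i, byte in enumerate(data):
        mem[offset + i] = byte


def flag_after_copy(data: bytes) -> int:
    """Value of the adjacent flag after copying data into the buffer."""
    mem = make_memory()
    unchecked_copy(mem, BUF_OFFSET, data)
    return mem[FLAG_OFFSET]


print(flag_after_copy(b"AAAAAAAA"))      # 0: data fits, flag untouched
print(flag_after_copy(b"AAAAAAAA\x01"))  # 1: the ninth byte overwrites the flag
```

The point of the layout is that nothing in the copy itself signals an error: the flag changes silently, which is exactly what makes such overflows exploitable.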

Integer Errors. Most typed programming languages use a fixed memory size for simple data types, which fits the word size of the underlying machine. Two kinds of integer errors can lead to exploitable vulnerabilities: arithmetic overflows and sign conversion errors. The first occurs when the result of an arithmetic operation is a numeric value that is greater in magnitude than its storage location can represent. The second occurs when an integer that the programmer assumes to be signed is converted to an unsigned integer.
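Both kinds of errors can be reproduced numerically. The sketch below illustrates them under stated assumptions: `wrap_signed` models the wraparound typically observed on two's complement hardware (the C standard itself leaves signed overflow undefined), while `to_unsigned` follows C's well-defined rule of converting a value to an n-bit unsigned type by reducing it modulo 2^n. Both helper names are our own.

```python
def wrap_signed(value: int, n: int = 32) -> int:
    """Keep the low n bits of value and read them as n-bit two's complement."""
    value &= (1 << n) - 1
    return value - (1 << n) if value >= 1 << (n - 1) else value


def to_unsigned(value: int, n: int = 32) -> int:
    """Convert value to an n-bit unsigned integer (C's modulo 2**n rule)."""
    return value % (1 << n)


INT_MAX = (1 << 31) - 1

# Arithmetic overflow: the exact result no longer fits in 32 bits.
print(wrap_signed(INT_MAX + 1))  # -2147483648

# Sign conversion error: a negative "length" reinterpreted as unsigned.
length = -1
print(to_unsigned(length))       # 4294967295
```

In the second case a small negative value turns into a huge positive one, which is why a signed length that reaches an unsigned size parameter is a classic source of exploitable bugs.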

Basically, this kind of memory-safety issue can yield results far from those expected when the impacted memory space is accessed. Even worse, such issues can become security exploits (security vulnerabilities) (Younan et al., 2004) if the result of the memory access is used to perform unauthorized actions and gain unauthorized access to privileged areas. Erroneous programs can allow a malicious actor to run code, install malware, and steal, destroy or modify sensitive data.

ICSOFT 2021 - 16th International Conference on Software Technologies


Nowadays, more and more software companies, organizations and developers, such as the Source Code Analysis Laboratory (SCALe) (Seacord et al., 2012) and CERN Computer Security (CER, ), share good and bad programming practices in order to develop higher-quality software and avoid bugs. These practices are generic coding conventions and recommendations which may or may not apply to software written in a particular programming language. However, such coding standards are presented in an informal style and are not located in one single place. Our work proposes a proof-based approach that takes advantage of these coding conventions and of developers' knowledge to discover potential vulnerabilities hidden in code. We transform some safety-related practices that can lead to security exploits from their informal specification into exploitable safety properties that can be automatically verified over a program. For example, integer overflow is ranked among the "Top 25 Most Dangerous Software Errors" (CWE, ). On hardware platforms, the range of the two's complement representation of an n-bit signed integer is $-2^{n-1} \dots 2^{n-1}-1$, which is represented in the computer as depicted in Figure 2.

Figure 2: Signed Magnitude Representation.

where $x_i \in \{0, 1\}$. If the most significant digit $x_{n-1}$ is 0, the number is evaluated as a non-negative integer; otherwise the number is negative. The value of the integer can then be calculated by the following formula:

$$-x_{n-1} 2^{n-1} + \sum_{i=0}^{n-2} x_i 2^i$$
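The formula above translates directly into code. In this sketch (helper names are ours), `bits[i]` stands for $x_i$, so the last list element is the most significant digit $x_{n-1}$:

```python
def twos_complement_value(bits: list[int]) -> int:
    """Value of an n-bit two's complement word, where bits[i] is x_i.

    Implements -x_{n-1} * 2**(n-1) + sum_{i=0}^{n-2} x_i * 2**i.
    """
    n = len(bits)
    return -bits[n - 1] * 2 ** (n - 1) + sum(bits[i] * 2 ** i for i in range(n - 1))


def signed_range(n: int) -> tuple[int, int]:
    """Smallest and largest values representable on n bits."""
    return -(2 ** (n - 1)), 2 ** (n - 1) - 1


print(twos_complement_value([1, 0, 1, 0, 0, 0, 0, 0]))  # x_0 = x_2 = 1 -> 5
print(twos_complement_value([1, 1, 1, 1, 1, 1, 1, 1]))  # all ones -> -1
print(signed_range(8))                                   # (-128, 127)
```

The all-ones word evaluating to -1 shows why the most significant digit carries a negative weight.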

Detecting integer overflows is non-trivial because overflow behaviours are not always bugs. In particular, the C99 standard imposes no requirements on signed integer overflow: evaluating an expression whose result overflows a signed type is undefined behaviour, whereas unsigned arithmetic is defined to wrap around modulo $2^n$.

3 APPROACH

We propose an approach that enables the detection

of potential security vulnerability and the formaliza-

tion of security weaknesses. Our approach relies on

formal proofs for the detection of security exploits

caused by intentional or unintentional safety bugs.

By taking an interest in the knowledge about unde-

fined behaviour in programs that can result in poten-

tial security exploits, we focus mainly on verifying

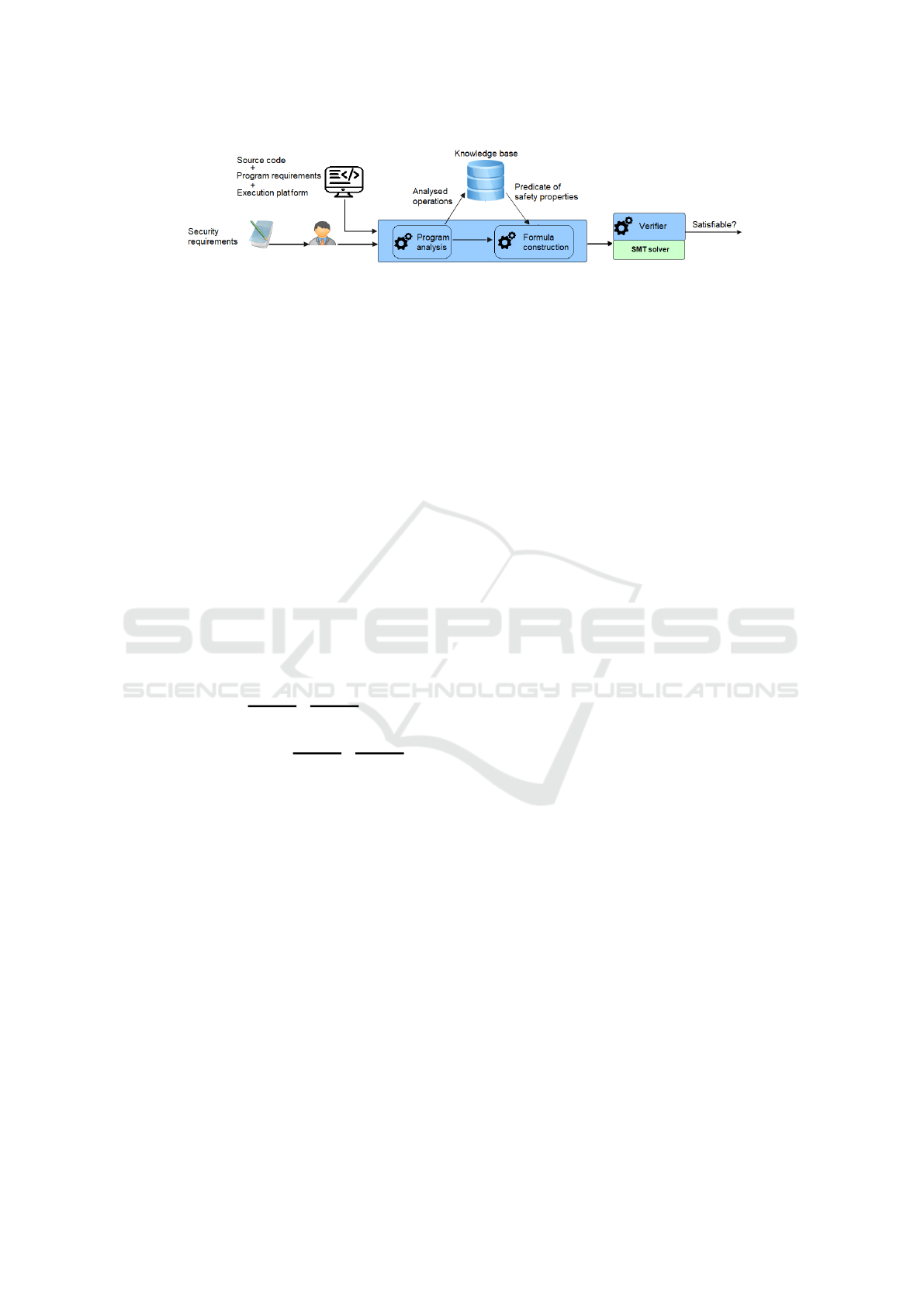

whether those security exploits can occur or not. Fig-

ure 3 illustrates our approach and highlights the rel-

evant phases for the verification of security exploits

over the program to analyze. We aim at separating

the duties and make the distinction between the main

actors in our approach; the specification expert(s) and

the developer(s). In a preliminary phase, the spec-

ification expert(s) carries out the extraction of logi-

cal formulas from software vulnerabilities directories.

This task consists of translating undefined behaviour

into exploitable formulas. It results in a set of generic

logical formulas saved in a database (we refer to as

Safety Knowledge Base) that could be used in differ-

ent analyses.

3.1 Safety Knowledge Base

The key idea of our approach is to build a Safety Knowledge Base as a centralized repository gathering formal specifications, which are logical formulas called safety properties. The database will be created from errors and recommendations reported in software (vulnerability) directories. Regarding integer errors, as reported in the CERT directory entry "INT32-C. Ensure that operations on signed integers do not result in overflow"¹, the developers have listed 15 operations, among 36, that could lead to overflow. Based on this report, we can distinguish several patterns of binary and unary operations on different data types: signed char, short, int, long, long long, and also the types of the stdint library. For each operation with a given type, a logical formula characterizing its overflow can be defined, in the style of what has been done in Frama-C (Kosmatov and Signoles, 2013).

The advocated approach relies on logical formulas (assertions) to uncover security vulnerabilities due to overflow errors on two signed integers. An example of such a formula is the one used in (Dietz et al., 2015) to evaluate whether an n-bit addition of two's complement integers $s_1$ and $s_2$ overflows:

$$((s_2 > 0) \wedge (s_1 + s_2 > \mathrm{INT\_MAX})) \vee ((s_2 < 0) \wedge (s_1 + s_2 < \mathrm{INT\_MIN}))$$

meaning that a signed addition overflows if and only if this expression is true, where $s_1 + s_2$ denotes the exact (infinite-precision) sum.
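As an illustration, the condition can be written as a Python predicate; Python integers have unbounded precision, so `s1 + s2` is the exact sum the formula requires. The exhaustive comparison with a plain range test over all 8-bit operands is our own sanity check, not part of the cited work.

```python
def int_limits(n: int) -> tuple[int, int]:
    """INT_MIN and INT_MAX for an n-bit two's complement integer."""
    return -(2 ** (n - 1)), 2 ** (n - 1) - 1


def add_overflows(s1: int, s2: int, n: int = 32) -> bool:
    """Overflow condition for n-bit signed addition (formula above).

    Python ints have unbounded precision, so s1 + s2 is the exact sum.
    """
    int_min, int_max = int_limits(n)
    return (s2 > 0 and s1 + s2 > int_max) or (s2 < 0 and s1 + s2 < int_min)


def add_overflows_by_range(s1: int, s2: int, n: int = 32) -> bool:
    """Reference check: the exact sum falls outside [INT_MIN, INT_MAX]."""
    int_min, int_max = int_limits(n)
    return not int_min <= s1 + s2 <= int_max


# Exhaustive cross-check over all pairs of 8-bit operands.
lo, hi = int_limits(8)
assert all(
    add_overflows(a, b, 8) == add_overflows_by_range(a, b, 8)
    for a in range(lo, hi + 1)
    for b in range(lo, hi + 1)
)

print(add_overflows(2 ** 31 - 1, 1))  # True: INT_MAX + 1
print(add_overflows(-5, 3))           # False
```

The sign test on $s_2$ only selects which bound can be crossed: adding a positive value can never go below INT_MIN, and adding a negative one can never exceed INT_MAX.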

Regarding the example given in Figure 1, if we consider the conditional statement (line 7), an appropriate property to analyze this statement would be a formula that deals with the multiplication of two's complement n-bit signed integers, and also with the comparison with 0. The useful assertion that we propose is:

$$s_1 \times s_2 = 2^n \times k \wedge k > 0 \Rightarrow s_1 \times s_2 = 0$$

¹ https://wiki.sei.cmu.edu/confluence/display/c

Figure 3: End-to-end approach.

As pointed out in (Dietz et al., 2015), the result of an integer multiplication can wrap around many times. Consequently, a processor typically places the result of an n-bit multiplication into a location that is 2n bits wide. If we suppose that the result $s_1 \times s_2$ occupies only the high-order n bits, the integer is then evaluated by:

$$s_1 \times s_2 = \sum_{i=n}^{2n} x_i 2^i = x_n 2^n + x_{n+1} 2^{n+1} + \dots + x_{2n} 2^{2n}$$

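Let m be the index of the least significant non-zero bit in this expression ($x_m = 1$), so the result can be written as:

$$s_1 \times s_2 = 2^m + \dots + x_{2n} 2^{2n}$$

Naturally, if $m \ge n$, the n low-order bits of the product are all zero, so the value kept in an n-bit location is $s_1 \times s_2 = 0$. Moreover, the result can be rewritten as: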

$$s_1 \times s_2 = 2^m \times (1 + \dots + x_{2n} 2^{2n-m}) = 2^n \times \underbrace{2^{m-n} \times (1 + \dots + x_{2n} 2^{2n-m})}_{k}$$

such that $k > 0$. More simply, the result of the multiplication becomes:

$$s_1 \times s_2 = 2^n \times k$$

with $k > 0$. Therefore, we get the proposed formula:

$$s_1 \times s_2 = 2^n \times k \wedge k > 0 \Rightarrow s_1 \times s_2 = 0$$
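The formula can be sanity-checked exhaustively for a small width. The sketch below assumes, as in the derivation, that the value kept in an n-bit location is the low-order n bits of the exact product, i.e. the product modulo $2^n$; `wrapped_product` and `premise_holds` are hypothetical helper names, and $n = 8$ is chosen only to keep the enumeration small.

```python
def wrapped_product(s1: int, s2: int, n: int) -> int:
    """Low-order n bits of the exact product: what an n-bit location keeps."""
    return (s1 * s2) % (2 ** n)


def premise_holds(s1: int, s2: int, n: int) -> bool:
    """s1 * s2 = 2**n * k for some integer k > 0 (exact arithmetic)."""
    product = s1 * s2
    return product > 0 and product % (2 ** n) == 0


N = 8
lo, hi = -(2 ** (N - 1)), 2 ** (N - 1) - 1
pairs = [(a, b) for a in range(lo, hi + 1) for b in range(lo, hi + 1)]

# The proposed assertion: whenever the premise holds, the n-bit result is 0.
assert all(wrapped_product(a, b, N) == 0 for a, b in pairs if premise_holds(a, b, N))

# Non-zero 8-bit operands whose stored product is nevertheless 0.
zero = [(a, b) for a, b in pairs if a != 0 and b != 0 and wrapped_product(a, b, N) == 0]
print((16, 16) in zero, (3, 5) in zero)  # True False
```

The pair (16, 16) is the 8-bit analogue of the running example: $16 \times 16 = 2^8 \times 1$, so two non-zero operands produce a stored product of 0.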

New entries will be added throughout the lifetime of the database, which will gradually be populated with logical formulas extracted from software vulnerability directories and coding conventions. Basically, this task requires an expert in logic to build such formulas.
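One possible shape for such a knowledge base is sketched below, under simplifying assumptions of our own: each entry records the operator it concerns, its informal source and, instead of a logical formula handed to an SMT solver, an executable predicate over $(s_1, s_2, n)$. The class, function and entry names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class SafetyProperty:
    operation: str                           # operator concerned, e.g. "*"
    description: str                         # informal source of the entry
    holds: Callable[[int, int, int], bool]   # predicate over (s1, s2, n)


def add_overflows(s1: int, s2: int, n: int) -> bool:
    """Signed addition overflow condition (exact arithmetic)."""
    lo, hi = -(1 << (n - 1)), (1 << (n - 1)) - 1
    return (s2 > 0 and s1 + s2 > hi) or (s2 < 0 and s1 + s2 < lo)


def mul_wraps_to_zero(s1: int, s2: int, n: int) -> bool:
    """Premise s1 * s2 = 2**n * k with k > 0, whose n-bit result is 0."""
    product = s1 * s2
    return product > 0 and product % (1 << n) == 0


KNOWLEDGE_BASE = [
    SafetyProperty("+", "signed addition overflow", add_overflows),
    SafetyProperty("*", "multiplication wraps to zero", mul_wraps_to_zero),
]


def lookup(operation: str) -> list[SafetyProperty]:
    """Entries relevant to an operation found in the analysed program."""
    return [p for p in KNOWLEDGE_BASE if p.operation == operation]


for prop in lookup("*"):
    print(prop.description, prop.holds(2 ** 27, 64, 32))  # multiplication wraps to zero True
```

Keying entries by operator mirrors the way the analysis later retrieves formulas: it looks for the operation at the hotspot of the program constraint and then instantiates n for the target.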

3.2 Model Construction

– The developer(s) provide the source code of the program to be analyzed. The program is written in a specific programming language; in this work we focus on programs written in C.

– The developer also provides the features of the execution environment and of the platform on which the program will be compiled, in other words, all information about the targeted execution environment, e.g. operating system, 32/64-bit architecture and compiler. This information should guide the search for suitable formulas to pick from the Knowledge Base.

– The developer can also provide other specific requirements, such as constraints on the domains of variables and on the data structures appearing in the program. Referring to our example (given in Figure 1), in addition to the specification saying that "the value of the userId of the administrator is equal to 0", we also add two constraints defining the variable domains: $userId \in [0, \dots, 150 \times 10^6]$ and $serviceId \in [1, \dots, 64]$.

– The developer(s) or the security expert(s) specify a security requirement, which is a statement of required security functionality that the program should satisfy. Security requirements are often presented in an informal style, so their interpretation and implementation require some expertise. We aim at reducing the security expert's burden to a minimum. Static analysis can be used to identify the program points (data/instructions) that can be affected by the security requirement. Consider the guideline stating "Only the administrator user will access services with read/write privileges". It represents a typical security requirement and means that access to the secure areas is granted only to the administrator. In our example, it can easily be noticed that the statement at line 8 is concerned by this requirement.
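The execution-context information supplied by the developer ultimately fixes the width n used to instantiate the knowledge-base formulas. The sketch below is hypothetical: it assumes the multiplication of Figure 1 is carried out on a C `long`, which the paper does not state, and maps a target to the width of `long` under the common data models (ILP32 for 32-bit targets, LLP64 for native 64-bit Windows toolchains, LP64 for 64-bit Linux and macOS). `ExecutionContext` and `long_width` are our own names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExecutionContext:
    os: str            # e.g. "windows", "linux", "macos"
    pointer_bits: int  # 32 or 64: the target the binary is compiled for


def long_width(ctx: ExecutionContext) -> int:
    """Width in bits of C `long` under common data models.

    ILP32 (any 32-bit target, e.g. gcc -m32): 32
    LLP64 (native 64-bit Windows):            32
    LP64  (64-bit Linux, macOS):              64
    """
    if ctx.pointer_bits == 32:
        return 32
    return 32 if ctx.os == "windows" else 64


for ctx in (ExecutionContext("windows", 64), ExecutionContext("linux", 64),
            ExecutionContext("linux", 32)):
    print(ctx.os, ctx.pointer_bits, "-> n =", long_width(ctx))
# windows 64 -> n = 32
# linux 64 -> n = 64
# linux 32 -> n = 32
```

If the relevant type were `int` instead, n would be 32 on all of these targets, so the type of the operands is itself part of the context the analysis needs.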

We aim at constructing a model including all the parties involved in the analysis: the program and its context. The outcome of this modelling step is a logical formula that specifies the program, the requirements and the execution environment.

– For this, an intermediate representation is built, namely the Program Dependence Graph (PDG), augmented with information and details obtained from a deep dependency analysis of the program (as shown in Figure 4). Static analysis tools (such as Frama-C for C programs (Cuoq et al., 2012) and JOANA (Graf et al., 2013) for Java programs) can be used to construct such a structure, which captures control and (explicit/implicit) data dependencies between program instructions and constitutes a strong basis for a precise analysis. Figure 4 shows the PDG for the sample code given in Figure 1. Solid edges represent control flows; dashed edges represent explicit and implicit data flows.

Figure 4: PDG model for the sample code given in Figure 1.

– Based on the security requirement and with the assistance of the security expert(s), we obtain the sensitive data. By exploring the PDG and tracking all (explicit/implicit) dependency flows, we compute the set of all sensitive information in the program. From a developer's perspective, this task is hard to fulfil without an automatic tool, as its complexity grows with the complexity and size of the program. In our example (Figure 1), the sensitive data are clearly password and username. The complete exploration and computation expand this set into a broader one: {uid_sid, serviceId, userId, password, username}.

– Based on this complete set of sensitive data, we identify on the PDG the set of critical statements (nodes). These nodes represent all the program points that may potentially be impacted by a security problem with regard to the requirement under consideration. In the intermediate representation of our example (Figure 4), we can clearly see that vertex $v_5$ corresponds to a critical statement node. Indeed, it is the only program point where administrators can have read/write access. From this node, we generate path conditions through symbolic execution, considering the set of all paths leading to the execution of the statement at this node. More specifically, given all possible paths $\pi_1, \dots, \pi_n$ leading to a node $v_k$, the aim is to build a formula that is the disjunction of all path conditions: $\varphi(v_k) = \varphi(\pi_1) \vee \dots \vee \varphi(\pi_n)$. A path in the PDG is a sequence of nodes from the entry node to a given node (i.e. $\pi = v_0 \dots v_k$). Let $C(v_j)$ denote the necessary condition for the execution of $v_j$, meaning that the instruction at node $v_j$ can be executed only if $C(v_j)$ is satisfied. The path condition can then be rewritten as:

$$\varphi(v_k) = \bigvee_{1 \le i \le n} \bigwedge_{v_j \in \pi_i} C(v_j)$$

Let us turn to our example: the condition for executing the statement at vertex $v_5$ is $C(v_5) \triangleq uid\_sid = 0$. As the reader can notice, there is only one execution path leading to this node (naturally, one should also consider the control flow). So we get $\varphi(v_5) \triangleq (userId \times serviceId = 0)$. Afterwards, we formulate the program constraint, made up of the path condition formula plus the program requirements. We get:

$$PC \triangleq (userId \times serviceId = 0) \wedge (serviceId \ge 1 \wedge serviceId \le 64) \wedge (userId \ge 0 \wedge userId \le 150 \times 10^6)$$

– Considering the path condition, the next step involves seeking out the execution context formula (EC) in the Safety Knowledge Base, which contains a set of formulas that map software constructs to execution configurations, including operating systems, compiler settings, build process tools, etc. The question is how to identify the relevant formula to pick. This is guided by the program constraint and the target architecture. For instance, in our example we clearly identified that the hotspot is the multiplication operation whose result is 0. By seeking out formulas that include the multiplication operation (and possibly concern the targeted architecture), we find the formula already inserted:

$$EC \triangleq s_1 \times s_2 = 2^n \times k \wedge k > 0 \Rightarrow s_1 \times s_2 = 0$$

Actually, this formula should be instantiated with the target architecture settings, i.e. n is substituted by 32 or 64 depending on the target architecture.

– It now only remains to formulate the security constraint. Without going into a thorough examination of the given security requirement, it can be reformulated as follows: can a user who is not an administrator obtain a privileged service? We formulate the fact that a user is not an administrator as $userId \neq 0$. So we get:

$$SC \triangleq userId = 0$$

The security problem to be solved is thus formulated as:

$$(s_1 \times s_2 = 2^n \times k \wedge k > 0 \Rightarrow s_1 \times s_2 = 0) \vdash (userId \times serviceId = 0) \wedge (serviceId \ge 1 \wedge serviceId \le 64) \wedge (userId \ge 0 \wedge userId \le 150 \times 10^6) \wedge (userId \neq 0)$$
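The two graph computations of this step, the sensitive-data closure and the disjunction of path conditions $\varphi(v_k)$, can be prototyped on a toy example. Everything below is hypothetical: the dependence table and control-flow graph are loosely modelled on the running example but are not the PDG of Figure 4, sensitivity is propagated along data dependences in both directions (an assumption that happens to reproduce the set of sensitive data given above), and a naive textual substitution stands in for symbolic execution.

```python
# Hypothetical toy data, loosely modelled on the running example.
# It is NOT the PDG of Figure 4.
DATA_DEPS = {  # variable -> variables it is computed from
    "userId": {"username", "password"},
    "uid_sid": {"userId", "serviceId"},
}
CFG = {  # control-flow successors
    "v0": ["v1"], "v1": ["v2"], "v2": ["v3"], "v3": ["v4"], "v4": ["v5", "v6"],
}
COND = {"v5": "uid_sid == 0", "v6": "uid_sid != 0"}  # C(v); missing means true


def sensitive_closure(seeds: set[str]) -> set[str]:
    """Propagate sensitivity along data dependences, in both directions."""
    edges = [(src, dst) for dst, srcs in DATA_DEPS.items() for src in srcs]
    result = set(seeds)
    changed = True
    while changed:  # iterate to a fixed point
        changed = False
        for src, dst in edges:
            if (src in result) != (dst in result):
                result |= {src, dst}
                changed = True
    return result


def paths(node: str, target: str, prefix: tuple = ()):
    """Yield every acyclic control-flow path from node to target."""
    prefix = prefix + (node,)
    if node == target:
        yield prefix
        return
    for succ in CFG.get(node, []):
        if succ not in prefix:
            yield from paths(succ, target, prefix)


def path_condition(target: str, defs: dict[str, str]) -> str:
    """phi(target): OR over paths of the AND of C(v_j) along each path.

    defs maps a variable to its defining expression; the textual
    substitution only stands in for symbolic execution in this toy.
    """
    disjuncts = []
    for path in paths("v0", target):
        conds = [COND[v] for v in path if v in COND] or ["true"]
        disjuncts.append(" and ".join(conds))
    formula = " or ".join(f"({d})" for d in disjuncts) or "false"
    for var, expr in defs.items():
        formula = formula.replace(var, expr)
    return formula


print(sorted(sensitive_closure({"username", "password"})))
# ['password', 'serviceId', 'uid_sid', 'userId', 'username']
print(path_condition("v5", {"uid_sid": "userId * serviceId"}))
# (userId * serviceId == 0)
```

With a single path to the critical node the disjunction degenerates to one conjunct, as in the example; a graph with branches that rejoin before the critical node would produce several parenthesized disjuncts.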

3.3 Checking Satisfiability

This step aims at verifying the satisfiability of the constructed model. We used an SMT (Satisfiability Modulo Theories) solver, a powerful tool for checking satisfiability that supports arithmetic and other decidable theories: the Z3 prover developed by Microsoft Research (as shown in Figure 5).

Figure 5: Specification Formula encoded in Z3.

By instantiating our formula for a 32-bit architecture, we get the following model (a valuation that satisfies the formula):

sat (model

(define-fun k () Int 2)

(define-fun userId () Int 134217728)

(define-fun serviceId () Int 64))

meaning that the execution of our program on this architecture can violate the security requirement: if a user whose identifier (userId) equals 134217728 requests a service whose identifier (serviceId) equals 64, we may have a security problem (the user takes on the role of administrator).
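As a quick arithmetic check of this model (our own, independent of the encoding in Figure 5), both values are powers of two:

$$134217728 \times 64 = 2^{27} \times 2^{6} = 2^{33} = 2^{32} \times 2$$

so for $n = 32$ the premise of the assertion holds with $k = 2$, and the product stored in a 32-bit location is 0 even though $userId \neq 0$.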

In order to get all possible models, we used a Python script in which the formula was updated by negating each model found, until the formula became unsatisfiable. We thus found all problem cases:

sat [serviceId=32, userId=134217728, k=1]

sat [serviceId=64, userId=67108864, k=1]

sat [serviceId=64, userId=134217728, k=2]
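The same three cases can be cross-checked without an SMT solver. Rather than negating each model in a Z3 loop as described above, this sketch (our own alternative) enumerates candidates directly: any violating input must make the exact product a positive multiple of $2^n$, so it suffices to try $k = 1, 2, \dots$ while $2^n k$ does not exceed the largest possible product $150 \times 10^6 \times 64$, and to solve for userId. The function name `violating_inputs` is hypothetical.

```python
def violating_inputs(n: int, user_max: int = 150 * 10**6,
                     service_max: int = 64) -> list[tuple[int, int, int]]:
    """All (serviceId, userId, k) with 1 <= userId <= user_max and
    1 <= serviceId <= service_max such that userId * serviceId = 2**n * k, k > 0.

    Such inputs make the n-bit product 0 although userId != 0.
    """
    found = []
    k = 1
    while (1 << n) * k <= user_max * service_max:  # larger k is out of reach
        product = (1 << n) * k
        for service_id in range(1, service_max + 1):
            if product % service_id == 0:
                user_id = product // service_id
                if 1 <= user_id <= user_max:
                    found.append((service_id, user_id, k))
        k += 1
    return sorted(found)


for service_id, user_id, k in violating_inputs(32):
    print(f"serviceId={service_id}, userId={user_id}, k={k}")
# serviceId=32, userId=134217728, k=1
# serviceId=64, userId=67108864, k=1
# serviceId=64, userId=134217728, k=2
print(violating_inputs(64))  # []
```

For $n = 64$, $2^{64}$ already exceeds the largest reachable product, so no violating input exists; this is consistent with the absence of violation reported below for builds without -m32 on Ubuntu 18.04 and OS X.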

For practical validation of these results, we executed our example on Ubuntu 18.04, 64-bit: we ran binaries compiled for both the 32-bit and the 64-bit architecture. The binary for the 32-bit architecture is obtained by adding the flag "-m32" when compiling the C source; otherwise the binary targets the 64-bit architecture. Figure 6 shows the compilation mode and the output of the execution of the resulting program.
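What the two builds should print can be anticipated with a simulation. The sketch assumes (hypothetically) that the product is held in a type that is 32 bits wide with -m32 and 64 bits wide otherwise, as a `long` is under Linux's LP64 model, and it models the overflow as reduction modulo $2^n$, which matches what is typically observed even though signed overflow is undefined in C. `grants_read_write` is our own stand-in for the access check of Figure 1, not its actual code.

```python
def grants_read_write(user_id: int, service_id: int, n: int) -> bool:
    """Stand-in for the access check: read/write is granted when the
    product userId * serviceId, reduced to n bits, equals 0."""
    return (user_id * service_id) % (1 << n) == 0


# (userId, serviceId) pairs from the satisfiability analysis; none is the admin.
cases = [(134217728, 32), (67108864, 64), (134217728, 64)]
for n in (32, 64):  # -m32 build vs default 64-bit build (assumed widths)
    granted = [c for c in cases if grants_read_write(*c, n)]
    print(f"n={n}: read/write granted for {len(granted)} of {len(cases)} non-admin inputs")
# n=32: read/write granted for 3 of 3 non-admin inputs
# n=64: read/write granted for 0 of 3 non-admin inputs
```

The administrator (userId = 0) is granted read/write access at both widths, so only the 32-bit build exhibits the violation.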

So there are no surprises there, experimentation

reinforces the theoretical results. Consequently, we

focused our efforts on studying the effects of vari-

ous architectures (operating systems and compiler op-

tions) on integer error classes, more specifically, on

arithmetic overflow caused by the multiplication op-

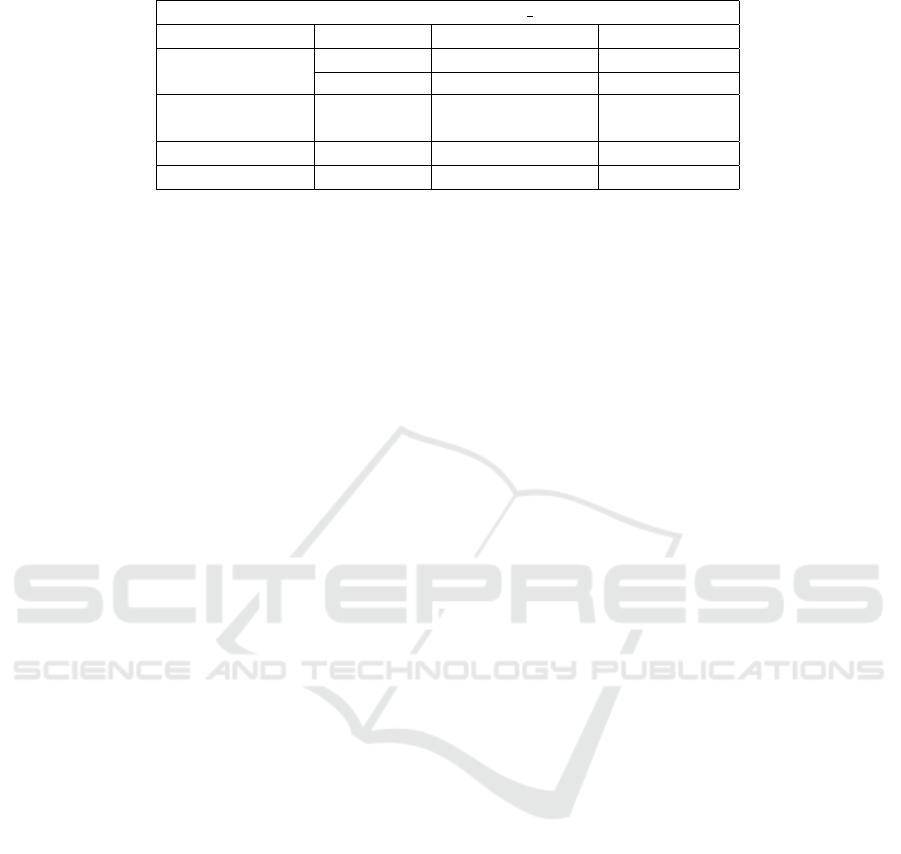

eration. The results of the evaluation is summarized

in Table 1. We report the occurrence of security vio-

lation (3) and its absence (7) for each configuration.

Figure 6: Practical validation of theoretical results.

Discussion. Naturally, in the early stages of this work we attempted to carry out the analysis using Frama-C (Cuoq et al., 2012), a publicly available and well-known toolset for the analysis of C programs, which furthermore supports variation domains for variables by means of the Eva plugin. To encode the function getUserId, we used the function random, returning a value within the domain $[0, \dots, 150 \times 10^6]$. We encoded the function read in a similar way. To check whether the targeted security property is satisfied or not, we inserted the following assertion in the header (requires clauses):

//@ assert userId==0;

indicating that only an authorized user (an administrator) can execute the first branch. We faced an issue: Frama-C uses a constant, __FC_RAND_MAX = 32767, that bounds the values returned by calls to the random function. To circumvent this problem, we encoded the range of values of the domain in an array. Although the tool inserts a clause in the program, the latter is not relevant, since it is not linked to a particular architecture. Indeed, in most existing tools, software security analysis is performed regardless of any particular execution architecture. Nowadays, it is known that vulnerabilities can be inadvertently introduced by the execution environment for various reasons; typically, they can be induced by compiler settings².

² https://software.intel.com/content/www/us/en/develop/articles/size-of-long-integer-type-on-different-architecture-and-os.html

Table 1: Effects of Various Architectures on Arithmetic Overflow (target architecture: x86_64; ✓ = security violation, ✗ = no violation, – = no result reported).

| Target OS | Compiler | without flag -m32 | with flag -m32 |
|---|---|---|---|
| Windows 10, 64-bit | gcc 10.2.0 | ✓ | – |
| Windows 10, 64-bit | clang 11.0.0 | ✓ | ✓ |
| Ubuntu 18.04, 64-bit | gcc 7.5.0 | ✗ | ✓ |
| Ubuntu 18.04, 64-bit | clang 6.0.0 | ✗ | ✓ |
| Ubuntu 14.04, 32-bit | gcc 4.8.2 | ✓ | ✓ |
| OS X, 64-bit | clang 11.0.0 | ✗ | ✓ |

4 RELATED WORKS

Assessing security properties of software components using formal approaches is an active area of research. In contrast to model-driven engineering (or security-by-design) approaches, which offer methodologies for designing secure systems, e.g. (Ameur-Boulifa et al., 2018), in this paper we focus on the security-by-certification approach: we described how security vulnerabilities and architectural features can be captured by security experts and verified formally by developers. We focus on errors induced by integer overflow.

Several works, e.g. (Wagner et al., 2000; Dietz et al., 2015), aim at a better understanding of integer overflow in programming languages and extract assertions from source code. In (Dietz et al., 2015), the authors demonstrated that the analysis of integers can be driven by logical expressions used as precondition tests included in the C/C++ source code, and by checking, after the code executes, whether postcondition CPU flags are set appropriately. Active research is being done to improve software security by identifying potential vulnerabilities. Some approaches are based on static analysis (Han et al., 2019; Aggarwal and Jalote, 2006), dynamic analysis (Aggarwal and Jalote, 2006) and symbolic execution (Zhang et al., 2010; Li et al., 2013; Boudjema et al., 2019).

In (Zhang et al., 2010), the authors present a security testing approach based on symbolic execution. The efficiency of this approach depends on the relevance of the set of test cases given as input. An improved approach that does not rely on test cases is proposed in (Li et al., 2013). Forward and backward analyses are performed to detect vulnerable instructions and to construct data flow trees based on sensitive data. The path explosion problem is controlled by considering only execution paths related to vulnerabilities. The approach presented in that paper is the closest to ours. However, it does not consider vulnerabilities that may be induced by the execution environment.

Some works focus on binary code. Specifically, in (Boudjema et al., 2019), the authors detect exploits by combining concrete and symbolic execution with an analysis of sensitive memory zones. Fixed-length execution traces annotated with sensitive memory zones are considered, based on a limited execution time of the given code, so vulnerabilities that arise after a long execution time are not detected. The approach proposed in (Wang et al., 2008) to detect vulnerabilities caused by buffer overflows in binary code relies on symbolic execution and satisfiability analysis. It also uses a technique for automatically bypassing some security protections. In (Han et al., 2019), only vulnerabilities matching given behaviour patterns are checked. A control flow graph is computed, then the corresponding set of vulnerable executable paths is defined by considering only paths containing vulnerability nodes. This method strongly depends on the relevance of the patterns used, whereas in our approach all predicates saved in the knowledge base can be used to catch vulnerabilities.

From the perspective of tools, several have been developed to detect vulnerabilities in source or binary code, e.g. (Wang et al., 2008; Boudjema et al., 2019; Han et al., 2019; Ognawala et al., 2016). For example, the tool developed in (Ognawala et al., 2016) combines symbolic execution and path exploration to detect exploits in binary code. It is also able to assign severity levels to the reported vulnerabilities.

5 CONCLUSION AND FUTURE WORK

The security of critical programs can be improved by eliminating vulnerability bugs, which increases the system's robustness against attacks. In this paper, we proposed a formal approach to detect security vulnerabilities induced by integer errors. One major advantage of our approach is that it relies on models describing both a system's architecture and its software in integrated formulas. Furthermore, the security analysis combines symbolic execution and satisfiability analysis to check vulnerability bugs caused by unsafe programs, and even those introduced inadvertently by the execution environment. We analyzed program source code to identify the critical instructions or operations that can be exploited to cause unsafe memory behaviour. For that purpose, a safety knowledge base is constructed using a formal representation of error and recommendation reports, together with requirements that can be provided by users. The safety knowledge base is used to enrich the obtained formulas with safety constraints, which are then checked by an SMT solver to detect vulnerability bugs. We illustrated our approach through an example. Clearly, the efficiency of our approach depends strongly on the relevance of the safety knowledge base used. The current work addresses the problem of extracting and using knowledge from attack and software vulnerability directories to build safety property patterns. An alternative solution would be to use interactive annotation through a tool interface, as has been done in (Thomas, 2015). The aim of our ongoing and future work is to formalize more unsafe predicates, including pointer references, cast operations and more. By expanding the knowledge base, we can treat a broader class of bugs and thus identify more vulnerabilities.

REFERENCES

2019 CWE Top 25 Most Dangerous Software Errors. https://cwe.mitre.org/top25/archive/2019/2019_cwe_top25.html.

CERN Computer Security. https://security.web.cern.ch.

Aggarwal, A. and Jalote, P. (2006). Integrating static and dynamic analysis for detecting vulnerabilities. In 30th Annual International Computer Software and Applications Conference, volume 01, pages 343–350, USA. IEEE Computer Society.

Ameur-Boulifa, R., Lugou, F., and Apvrille, L. (2018). SysML model transformation for safety and security analysis. In ISSA 2018: International workshop on Interplay of Security, Safety and System/Software, Spain. ACM IPCS.

Boudjema, E. H., Verlan, S., Mokdad, L., and Faure, C. (2019). VYPER: Vulnerability detection in binary code. volume 3. Wiley.

Cuoq, P., Kirchner, F., Kosmatov, N., Prevosto, V., Signoles, J., and Yakobowski, B. (2012). Frama-C - A software analysis perspective. In 10th International Conference, SEFM 2012, Greece, October 1-5, 2012. Proceedings, volume 7504 of Lecture Notes in Computer Science, pages 233–247. Springer.

Dietz, W., Li, P., Regehr, J., and Adve, V. (2015). Understanding Integer Overflow in C/C++. ACM Trans. Softw. Eng. Methodol., 25(1).

Graf, J., Hecker, M., and Mohr, M. (2013). Using JOANA for Information Flow Control in Java Programs - A Practical Guide. In Software Engineering 2013, Aachen, volume P-215 of LNI, pages 123–138.

Han, L., Zhou, M., Qian, Y., Fu, C., and Zou, D. (2019). An optimized static propositional function model to detect software vulnerability. IEEE Access, 7:143499–143510.

Hohnka, M. J., Miller, J. A., Dacumos, K. M., Fritton, T. J., Erdley, J. D., and Long, L. N. (2019). Evaluation of compiler-induced vulnerabilities. Journal of Aerospace Information Systems, 16(10):409–426.

Kosmatov, N. and Signoles, J. (2013). A lesson on runtime assertion checking with Frama-C. In 4th International Conference Runtime Verification RV 2013, France, September 24-27, 2013. Proceedings, volume 8174 of Lecture Notes in Computer Science, pages 386–399. Springer.

Li, H., Kim, T., Bat-Erdene, M., and Lee, H. (2013). Software vulnerability detection using backward trace analysis and symbolic execution. In International Conference on Availability, Reliability and Security, ARES 2013, Germany, September 2-6, 2013, pages 446–454. IEEE Computer Society.

Ognawala, S., Ochoa, M., Pretschner, A., and Limmer, T. (2016). Macke: Compositional analysis of low-level vulnerabilities with symbolic execution. In 31st IEEE/ACM International Conference on Automated Software Engineering, ASE 2016, pages 780–785, USA. Association for Computing Machinery.

Seacord, R., Dormann, W., McCurley, J., Miller, P., Stoddard, R., Svoboda, D., and Welch, J. (2012). Source Code Analysis Laboratory (SCALe). Technical Report CMU/SEI-2012-TN-013, Software Engineering Institute, Carnegie Mellon University, Pittsburgh, PA.

Thomas, T. (2015). Exploring the usability and effectiveness of interactive annotation and code review for the detection of security vulnerabilities. In 2015 IEEE Symposium on Visual Languages and Human-Centric Computing, VL/HCC 2015, USA, October 18-22, 2015, pages 295–296. IEEE Computer Society.

Van der Veen, V., Dutt-Sharma, N., Cavallaro, L., and Bos, H. (2012). Memory Errors: The Past, the Present, and the Future. In Research in Attacks, Intrusions, and Defenses, pages 86–106, Berlin, Heidelberg. Springer Berlin Heidelberg.

Wagner, D., Foster, J. S., Brewer, E. A., and Aiken, A. (2000). A first step towards automated detection of buffer overrun vulnerabilities. In Network and Distributed System Security Symposium, pages 3–17.

Wang, L., Zhang, Q., and Zhao, P. (2008). Automated detection of code vulnerabilities based on program analysis and model checking. In 8th International Working Conference on Source Code Analysis and Manipulation (SCAM 2008), 28-29 September 2008, China, pages 165–173. IEEE Computer Society.

Younan, Y., Joosen, W., and Piessens, F. (2004). Code injection in C and C++: A survey of vulnerabilities and countermeasures. Technical report, Department Computer Wetenschappen, Katholieke Universiteit NI Leuven.

Zhang, D., Liu, D., Lei, Y., Kung, D. C., Csallner, C., and Wang, W. (2010). Detecting vulnerabilities in C programs using trace-based testing. In IEEE/IFIP International Conference on Dependable Systems and Networks, DSN 2010, USA, June 28 - July 1 2010, pages 241–250. IEEE Computer Society.

ICSOFT 2021 - 16th International Conference on Software Technologies
