PrendoSim: Proxy-Hand-Based Robot Grasp Generator

Diar Abdlkarim¹,³, Valerio Ortenzi², Tommaso Pardi¹, Maija Filipovica¹, Alan M. Wing¹, Katherine J. Kuchenbecker² and Massimiliano Di Luca¹

¹ University of Birmingham, U.K.

² Max Planck Institute for Intelligent Systems, Stuttgart, Germany

³ Obi Robotics Ltd, U.K.

www.obirobotics.com

Keywords:

Robot Grasping, Simulation, Virtual Environment, Virtual Reality.

Abstract:

The synthesis of realistic robot grasps in a simulated environment is pivotal in generating datasets that support

sim-to-real transfer learning. In a step toward achieving this goal, we propose PrendoSim, an open-source

grasp generator based on a proxy-hand simulation that employs NVIDIA’s physics engine (PhysX) and the

recently released articulated-body objects developed by Unity (https://prendosim.github.io). We present the

implementation details, the method used to generate grasps, the approach to operationally evaluate stability of

the generated grasps, and examples of grasps obtained with two different grippers (a parallel jaw gripper and

a three-finger hand) grasping three objects selected from the YCB dataset (a pair of scissors, a hammer, and a

screwdriver). Compared to simulators proposed in the literature, PrendoSim balances grasp realism and ease

of use, displaying an intuitive interface and enabling the user to produce a large and varied dataset of stable

grasps.

1 INTRODUCTION

Robots exhibit good manipulation skills in structured

environments, where objects are in controlled posi-

tions, information about the environment is complete

and accurate, and actions are repetitive. However,

grasping and manipulation become challenging in un-

structured environments, such as households and hos-

pitals. Here, perception can be noisy and unreliable,

so each action requires reasoning on imperfect sen-

sory data. Despite these problems, robots are in-

creasingly used in industry settings that require com-

plicated dexterous manipulation, such as grasping a

hammer to pound a nail. Sometimes, robots are used

in collaborative, joint-manipulation tasks with human

partners, e.g., during the assembly of a piece of furni-

ture, where the robot could pass tools to the human

partner (Ortenzi et al., 2020b) or directly tighten a

screw on a wood panel held by the human partner.

Collaboration adds another level of complication, as

there are two interacting agents sharing the space and

the tools (Ajoudani et al., 2017).

To be useful in these scenarios, robots need to be able to grasp objects. Robot grasping has been studied for over three decades, and enormous advances have been achieved in perception, planning, and grasp synthesis. However, some of the assumptions on which most of the work is based are still difficult to relax: objects are usually assumed to be rigid, friction is generally ignored or assumed to be uniform for the entire object, functional parts are usually not accounted for, and the task to be performed with the object is not considered (Ortenzi et al., 2019).

Figure 1: Spherical object grasped using PrendoSim’s BarrettHand gripper (solid rendering) and depiction of the kinematic gripper employed in the proxy-hand method (mesh lines).


Abdlkarim, D., Ortenzi, V., Pardi, T., Filipovica, M., Wing, A., Kuchenbecker, K. and Di Luca, M.

PrendoSim: Proxy-Hand-Based Robot Grasp Generator.

DOI: 10.5220/0010549800600068

In Proceedings of the 18th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2021), pages 60-68

ISBN: 978-989-758-522-7

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Many current approaches to grasping are looking

into robot learning as a way of overcoming these limi-

tations (Levine et al., 2018). However, learning grasp-

ing policies applicable to a wide range of situations

requires large datasets of grasps, i.e., employing dif-

ferent objects, different poses for the objects, differ-

ent grippers, and different tasks. It is generally im-

practical to obtain a high number of successful grasps

with real robots; thus, simulators are commonly used

instead. However, the main challenges with simula-

tors are (i) to generate an extensive range of grasps

and (ii) to ensure that the grasps sufficiently replicate

the characteristics of real robots grasping real objects,

minimising sim-to-real differences.

Here, we present PrendoSim, an open-source

grasp generator based on the popular Unity game en-

gine (Unity Technologies, San Francisco, CA), which

allows one to create visually and physically realistic

grasping by using advanced physics simulation of dy-

namic properties, such as friction, weight, weight dis-

tribution, and inertia (Fig. 1). As opposed to most

other grasp generators, PrendoSim has been designed

to require only minimal knowledge of mechanics and

can be controlled with an intuitive user interface with

no programming requirements. The generated grasp

configurations are stored both as an image (PNG for-

mat) and in a standard JSON file format (JavaScript

Object Notation) to enable visual and numerical anal-

ysis and sharing to hardware systems. The version of

PrendoSim presented in this paper can be downloaded

for free from the following URL: https://prendosim.github.io

2 RELATED WORK

The synthesis and evaluation of grasp candidates in-

volve several aspects that make selecting a suitable

grasp challenging, e.g., the object’s geometry, the ob-

ject’s friction parameters, and the mechanical charac-

teristics of the gripper. Some approaches capitalise

on the design of the hardware to ensure stable grasp-

ing and simplify control. This is the case for in-

dustrial applications where either suction or parallel

jaw grippers are generally used (Honarpardaz et al.,

2017). When more sophisticated grippers are em-

ployed, e.g., multi-fingered hands inspired by the hu-

man hand, the increase in dexterity comes at the cost

of higher complexity and control effort (Berceanu and

Tarnita, 2012).

Given a gripper and an object to grasp, there are

usually a number of possible grasps to choose from.

Force- and form-closure methods select grasp candi-

dates based on stability. For example, force-closure

is based on a mathematical formula that analytically

determines whether the gripper and the object form

a system resistant to external wrenches (Nguyen,

1988). For this, physical quantities like the geometric

configuration of the grasp and of the object, the forces

applied, gravity, and friction all need to be considered

to compute whether the grasp is stable (Ferrari and

Canny, 1992; Bicchi and Kumar, 2000; Ding et al.,

2001). However, there is no consensus on how to de-

fine stability across the community. More operational

definitions consider grasps to be stable whenever the

object is held by the robot gripper for more than a

certain amount of time, or after shaking (Bekiroglu

et al., 2020). PrendoSim similarly adopts an opera-

tional definition of stability, which we will cover in

detail in the following sections.
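To make the analytic notion of force closure concrete, the following is a minimal sketch of the planar two-finger case, where the condition is commonly stated as: the segment joining two frictional point contacts must lie strictly inside both friction cones. It only illustrates the kind of test discussed above and is not part of PrendoSim; the function name and argument layout are assumptions made for this example.

```python
import math


def two_finger_force_closure(p1, n1, p2, n2, mu):
    """Planar antipodal test for two frictional point contacts.

    p1, p2: contact points (x, y); n1, n2: inward-pointing normals;
    mu: Coulomb friction coefficient shared by both contacts.
    Returns True if the line joining the contacts lies strictly inside
    both friction cones.
    """
    if p1 == p2:
        raise ValueError("contact points must be distinct")
    half_angle = math.atan(mu)  # half-aperture of each friction cone

    def angle_between(u, v):
        dot = u[0] * v[0] + u[1] * v[1]
        norm = math.hypot(*u) * math.hypot(*v)
        # Clamp to guard against rounding slightly outside [-1, 1].
        return math.acos(max(-1.0, min(1.0, dot / norm)))

    d12 = (p2[0] - p1[0], p2[1] - p1[1])  # from contact 1 towards contact 2
    d21 = (-d12[0], -d12[1])              # from contact 2 towards contact 1
    return (angle_between(n1, d12) < half_angle
            and angle_between(n2, d21) < half_angle)


# Directly opposed contacts on a 2-unit-wide object.
opposed = two_finger_force_closure((-1, 0), (1, 0), (1, 0), (-1, 0), mu=0.5)
# Offset contacts with low friction.
offset = two_finger_force_closure((-1, 0), (1, 0), (1, 1), (-1, 0), mu=0.3)
print(opposed, offset)
```

A test of this kind needs contact locations, normals and a friction coefficient but says nothing about object mass or dynamics, which is one motivation for the operational definition adopted here.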

There are a number of different approaches for

grasp selection. Gualtieri et al. (2016) proposed using artificial neural networks to generate and evaluate grasps for parallel grippers. First,

the network learns the key features for grasping ob-

jects from a dataset. Then, it picks the grasp config-

uration that is similar to the ones that had a greater

degree of success. Such simulation-based approaches

can be successful in simulated environments and with

known objects, but they do not usually allow for suc-

cessful sim-to-real transfer learning. Other methods

propose employing local features to make the grasp

more robust (Adjigble et al., 2018). In this case an

algorithm scans the object surface to identify areas

where the local object curvature matches the finger’s

curvature. This method yields more robust results as

it is based on real point clouds and on the kinematics

of the gripper, and it could extend to more complex

grippers with more than two fingers. However, sev-

eral factors (dynamics, forces, friction and the task

context) are not considered in selecting and evaluat-

ing the grasps.

Although researchers are attempting to improve

grasping generation strategies to include new ele-

ments such as friction coefficients (Nguyenle et al.,

2021), only a few of these new grasp generators are

open source and available for download. GraspIt!

(Miller and Allen, 2004) is among the most frequently

used simulators. It provides a framework for test-

ing grasp strategies on a variety of hands. It has a

3D graphical interface, and both robots and obsta-

cles can be loaded using Python scripts. URDF and

XML configuration files allow the user to simulate

complex scenes with more than one robot and ob-

ject, e.g., a kitchen with a service robot. The soft-

ware generates a set of grasps that satisfy the force-

closure metric and are feasible considering the robot

kinematics. The physics engine of GraspIt! allows


the user to pick up objects and check the dynamic be-

haviour of both robot and object. Because of its ver-

satility, researchers have developed several packages

that integrate GraspIt! with the Robot Operating Sys-

tem (ROS) and Gazebo, a popular visualization and

development tool in the robotics community. How-

ever, the main branch of GraspIt! has been discontin-

ued, and only a few volunteers are maintaining the

software for specific applications. Also, GraspIt! is

platform dependent and only available for the Linux

operating system.

Another very common simulator is Simox

(Vahrenkamp et al., 2012). Simox is a robotic tool-

box for motion and grasp planning, and it allows the

import of complex kinematic chains, like humanoids

and mobile robots. Simox is developed in C++, which

affords efficiency, and it can be used by either script or

interface. An additional strength is that it is platform

independent. The user may load the CAD model of an

object and a hand using an XML file. Then, the algo-

rithm generates a sequence of robust grasp points on

the object surface. A collection of metrics are already

available in the software. Additionally, users may de-

fine their own metric. By default, Simox produces

grasp locations according to the force-closure metric.

This software considers friction by defining friction

cones at the contact points between hand and object.

However, it discounts the effects of an object’s weight

and distribution of mass while grasping. Thus, grasps

produced by Simox may fail to keep the object in-

hand in a real scenario due to mismatches between the

simulated and real interactions. Moreover, Simox in-

stallation requires advanced programming skills to set

up the environment correctly. Our PrendoSim eases

the burden for the user with a friendly installation pro-

cess suitable also for programming-naive users.

Besides software specifically targeted at grasping, the robotics community also resorts to more general simulators, e.g., CoppeliaSim¹, PyBullet², MoveIt³ and MuJoCo⁴. These software packages offer well-rounded physics engines and allow the simulation of complex scenes, so that they can be employed to test grasps. However, they are not designed to generate grasps and do not implement any grasp generator by default. Therefore, users must code a generator that is tailored to the application at hand. Only a few programmers have released grasp generators for these software packages, e.g., multi-contact-grasping⁵ is a grasp generator for CoppeliaSim, and OpenAI developed a Python package for PyBullet.

¹ https://www.coppeliarobotics.com/

² https://pybullet.org/wordpress/

³ https://moveit.ros.org/

⁴ http://www.mujoco.org/

⁵ https://github.com/mveres01/multi-contact-grasping

In addition to the custom grasp generators and

more generic simulators mentioned so far, there is

another class of simulation engines offered through

game engines, such as Unity. Until recently, how-

ever, it was challenging to create realistic simulators

in this class of software, because engines emphasised

smooth game performance rather than physical real-

ism. In other words, modelling kinematic chains or a

system of connected hinges, as often seen in robotics,

would have resulted in unstable and unrealistic mo-

tion, unfit for translating to a real robot. With the

latest release of Unity v2020.1 beta, this situation has

now changed. Major game engines like Unity are now

incorporating state-of-the-art, hardware-accelerated,

real-world physics simulators with the aim to support

a growing robotics community in research and proto-

type development.

In the following sections we describe how our

grasp generator takes advantage of Unity’s new and

powerful physics simulator, our proxy-hand-based

grasping method, and the performance test to evalu-

ate generated grasps. We report a number of resulting

grasps and describe the output of PrendoSim.

3 PrendoSim

Our simulator takes advantage of Unity’s articulated

bodies to define a set of connected objects organized

in a hierarchical parent-child tree. This framework

can be used to define mutually constrained parts, such

as joints, digits and rotational limits in a robot gripper.

The root of the hierarchy tree is the base of the robot

gripper, and the furthest components in the tree are the

tips of the gripper’s digits. Moreover, we implement a

new method of contact force estimation for simulated

robotic object grasping. The estimated grip forces are

checked in our grasp stability test, where we gradually

increase the loading on the object until it slips.

Our grasp generator also takes advantage of

Unity’s physics materials to define friction. To com-

pute the friction coefficient, which determines the ra-

tio between lateral force and normal force, our ap-

proach combines material-dependent parameters of

the gripper and object and a Coulomb friction model

with separate static and kinetic coefficients. We de-

scribe the implementation details in Sect. 3.3. Finally,

physics materials also define a term for elasticity, but

in our simulations we have set this term to zero. In

other words, we simulated only rigid surfaces even

though elasticity could be varied to extend the scope

of the project.
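As an illustration of the friction computation, the sketch below averages the gripper and object friction parameters, as stated in the captions of Table 1 and Table 2, and applies the Coulomb limit. The class and function names are assumptions introduced for this example and do not come from the PrendoSim source code; the numerical values are the BarrettHand entry of Table 1 and the scissors handle entry of Table 2.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FrictionParams:
    static: float   # pi_s, static friction parameter of one surface
    kinetic: float  # pi_k, kinetic friction parameter of one surface


def combined_coefficients(gripper, surface):
    """Average the two surfaces' parameters to obtain (mu_s, mu_k)."""
    mu_s = (gripper.static + surface.static) / 2.0
    mu_k = (gripper.kinetic + surface.kinetic) / 2.0
    return mu_s, mu_k


def max_lateral_force(mu_s, normal_force):
    """Coulomb limit: largest lateral force (N) sustained before slipping."""
    return mu_s * normal_force


barrett = FrictionParams(static=0.70, kinetic=0.25)          # Table 1
scissors_handle = FrictionParams(static=0.78, kinetic=0.25)  # Table 2

mu_s, mu_k = combined_coefficients(barrett, scissors_handle)
print(f"mu_s={mu_s:.2f}, mu_k={mu_k:.2f}")
print(f"lateral limit at 5.5 N normal force: {max_lateral_force(mu_s, 5.5):.2f} N")
```

Keeping separate static and kinetic values means that an object which has started to slip is held less firmly than one at rest whenever mu_k is below mu_s.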


3.1 Proxy-Hand Method

We adopted a method that is commonly employed in

haptic rendering and virtual reality simulation. The

method can take several names depending on the spe-

cific implementation such as proxy, avatar, virtual

tool, or god-object. Because it is under direct user

control, the proxy is not amenable to exact predic-

tion (Dworkin and Zeltzer, 1993). Its interactions

with objects cannot be anticipated and thus need to

be simulated. An algorithm was proposed where such

a user-controlled object retains information about its

contacts with objects to improve force rendering by

considering the history of the interaction (Zilles and

Salisbury, 1995).

The proxy-hand method (Borst and Indugula,

2005) applies similar principles to simulate grip-

force-based object grasping without attempting to

predict the action of the robot gripper. We use a

kinetic gripper that physically interacts with the vir-

tual objects and is driven by a kinematic copy, which

does not interact with the virtual object. We apply

this method using Unity’s “Articulated Body” compo-

nent. The articulated component of each of the grip-

per’s joints defines the associated stiffness and damp-

ing parameters, the maximum force or torque a joint

can exert, and the joint’s positional or angular lim-

its, all according to the manufacturer’s specifications.

The opening and closing motions of the kinetic grip-

per are driven gradually by updating the target angle

or position of the kinematic gripper. Without a gras-

pable object, all joints are driven to their angular or

positional limit, reaching the gripper’s closed state at

the same time.

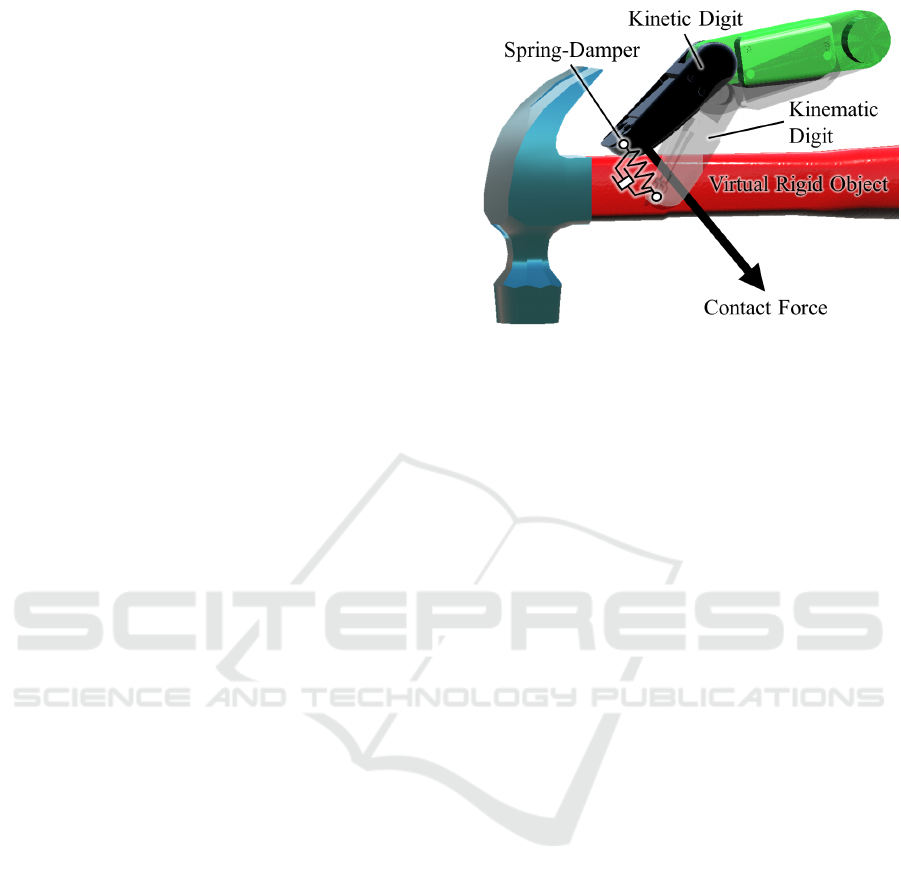

The tips of each of the kinematic and kinetic gripper’s digits are coupled with a spring and a damper (Fig. 2). Because of this coupling, the configuration of the kinetic gripper closely follows that of the kinematic gripper unless there are contacts with objects. Unity’s collision-detection algorithm prevents interpenetration between the kinetic gripper and the object, while the kinematic gripper penetrates into the object. If the kinetic gripper is in contact with the object, the configuration of the two simulated grippers differs and loads the springs that connect the fingertips, thus creating a contact force applied by the kinetic gripper. The contact force is proportional to the distance between the tips of the kinematic and kinetic grippers (penetration distance) (Höll et al., 2018). To achieve a grasp that does not overly squeeze the object, we set a force threshold to stop the squeeze based on the known object mass. For example, we set a 5.5 N contact force threshold for an object with 500 g mass, which is about 10% larger than the minimum 5 N required to hold the object. This contact force is divided evenly between the digits in contact with the object so that the set total grip force is exerted on the object.

Figure 2: Contact force generation using the proxy-hand method. The positional discrepancy between the kinematic and kinetic digits results in a force (F) computed from the spring-damper linking the two.
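The coupling and the stopping rule can be sketched in a few lines. This is a simplified, one-dimensional illustration under stated assumptions, not the PrendoSim implementation: the stiffness, damping and step size are made-up values, the function names are hypothetical, and the threshold is computed as the object weight plus a 10% margin. With g = 9.81 m/s² a 500 g object gives about 5.4 N, close to the 5.5 N quoted above, which rounds the weight up to 5 N.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def grip_force_threshold(mass_kg, margin=0.10):
    """Total contact force (N) at which closing stops: weight plus a margin."""
    return mass_kg * G * (1.0 + margin)


def coupling_force(kinematic_tip, kinetic_tip, relative_speed, stiffness, damping):
    """Spring-damper force magnitude (N) between the two copies of a fingertip."""
    separation = math.dist(kinematic_tip, kinetic_tip)  # penetration distance, m
    return stiffness * separation + damping * relative_speed


def close_until_threshold(mass_kg, n_digits, stiffness=500.0, step=1e-4,
                          max_steps=10_000):
    """Push the kinematic tip into the object until the force limit is reached.

    The kinetic tip is assumed to rest on the object surface at the origin.
    Returns (penetration in m, total grip force in N).
    """
    per_digit_limit = grip_force_threshold(mass_kg) / n_digits  # shared evenly
    penetration = 0.0
    for _ in range(max_steps):
        force = coupling_force((penetration, 0.0, 0.0), (0.0, 0.0, 0.0),
                               relative_speed=0.0, stiffness=stiffness,
                               damping=0.0)
        if force >= per_digit_limit:
            return penetration, force * n_digits
        penetration += step
    raise RuntimeError("force threshold not reached")


penetration, total_force = close_until_threshold(mass_kg=0.5, n_digits=2)
print(f"stopped at {penetration * 1000:.1f} mm penetration, {total_force:.2f} N total")
```

In this sketch a stiffer spring reaches the threshold with less penetration of the kinematic copy, so the stiffness mainly sets how finely the grip force can be resolved per closing step.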

3.2 Grasp Generation

Our simulator synthesises gripper configurations

based on a grasp quality index computed through

a dynamically changing load force, which also ac-

counts for friction. The following steps estimate the

performance of a randomly generated object grasp

under friction and dynamics. This sequence of ac-

tions forms the core strategy of PrendoSim; it allows

derivation and validation of every grasp candidate for

any given target object.

1. The gripper starts in a fully open configuration. Any degree of freedom (DOF) available for changing the type of grasp is set to a random joint value.

2. One of the provided target objects is randomly chosen and instantiated in front of the gripper, with a random pose held statically for one second.

3. Within the one-second window the gripper proceeds to close as described in section 3.1.

4. The target object is then released to allow physical interaction with the gripper’s digits.

5. The object will then either fall due to gravity or reach a stable state (i.e., no motion for two seconds) within the gripper. In the former case, we discard the grasp. In the latter, we consider the grasp successful.

6. To further evaluate grasp stability, we gradually increase the mass of the object at a rate of 1.2 kg/s to measure the point at which the object starts to fall out of the gripper. An empirically selected velocity threshold of 2.5 cm/s is used to detect when the object starts to fall.

7. The object mass at this point is defined as the critical mass.

8. Optionally, this value, together with the gripper’s joint configuration and the applied contact forces, is stored in a JSON file, and a screenshot of the scene is stored as a PNG image.

Table 1: Static (π_s) and kinetic (π_k) friction parameters for the two grippers used in PrendoSim. Friction coefficients µ_s and µ_k are calculated by averaging the friction parameter value of the gripper and the value of the object surface that it is touching (shown in Table 2).

| Gripper | π_s | π_k |
|---|---|---|
| Franka Emika | 0.90 | 0.50 |
| BarrettHand | 0.70 | 0.25 |

By repeatedly following this procedure, Pren-

doSim obtains a collection of successful grasps and

provides a metric for grip stability based on the crit-

ical mass. This approach is agnostic to the gripper

type and target object.
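The mass-ramp test in steps 6 and 7 can be sketched with a one-dimensional toy model. PrendoSim relies on the physics engine to decide when the object moves; here, as a loudly simplifying assumption, the object is held only by Coulomb friction proportional to a fixed grip force and falls along a single axis. The function name, the time step and the friction model are illustrative choices, while the ramp rate (1.2 kg/s) and velocity threshold (2.5 cm/s) are the values given above.

```python
G = 9.81  # gravitational acceleration, m/s^2


def critical_mass(initial_mass, grip_force, mu_s, mu_k, ramp_rate=1.2,
                  speed_threshold=0.025, dt=0.002, max_time=60.0):
    """Ramp the object mass until its downward speed exceeds the threshold.

    Toy model: static friction holds the object while its weight is at most
    mu_s * grip_force; once it slips, kinetic friction mu_k * grip_force
    opposes gravity. Returns the mass (kg) at detection, or None on timeout.
    """
    mass, speed, elapsed = initial_mass, 0.0, 0.0
    while elapsed < max_time:
        weight = mass * G
        holding = speed == 0.0 and weight <= mu_s * grip_force
        if not holding:
            net_force = max(0.0, weight - mu_k * grip_force)
            speed += (net_force / mass) * dt  # explicit Euler integration
        if speed > speed_threshold:
            return mass
        mass += ramp_rate * dt
        elapsed += dt
    return None


# Hypothetical grasp: 5.5 N grip force with mu_s = 0.74 and mu_k = 0.25.
result = critical_mass(initial_mass=0.1, grip_force=5.5, mu_s=0.74, mu_k=0.25)
print(f"critical mass: {result:.3f} kg")
```

In this toy model the critical mass sits just above mu_s * grip_force / g, the mass at which static friction gives way; in the simulator, contact geometry and weight distribution also influence the result.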

3.3 Grippers and Objects

PrendoSim provides two types of grippers and a set of

three target objects with different shapes.

3.3.1 Grippers

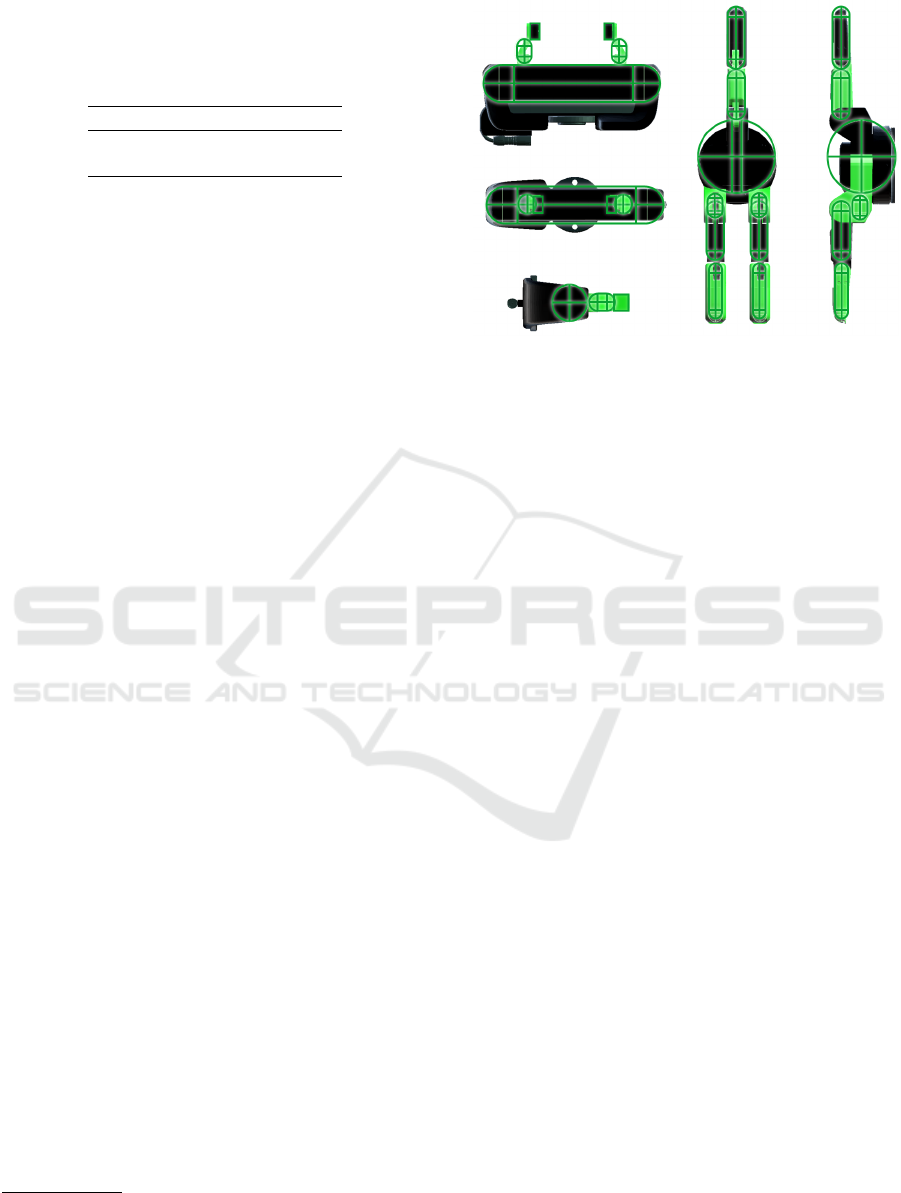

The two built-in grippers consist of a two-finger, parallel jaw gripper (Franka Emika gripper⁶), and a three-digit gripper (BarrettHand BH8-series from Barrett Technology⁷); both appear in Fig. 3. Each gripper has a set of primitive shape colliders to define its physical shape. Unlike other simulators, we use primitive shape colliders for improved computational efficiency as our simulator runs in real time (up to 500 frames per second). Finally, we equip each gripper with one physics material to describe the gripper’s contact-point friction parameters (Table 1).

The choice of these two grippers allows for a large range of variability in the types of grasps that can be generated. Parallel jaw grippers are particularly favoured in industry as they offer a high degree of robustness, and only two opposite grasping points must be selected to pick up an object. However, more sophisticated behaviours, such as the distinction between precision and power grasps, are not possible. In contrast, a multi-finger hand allows for more flexibility in the choice of grasp. This higher complexity comes at a cost of generally lower grasp robustness and higher control effort.

⁶ https://github.com/frankaemika

⁷ https://advanced.barrett.com/barretthand

Figure 3: Franka Emika (left) and BarrettHand grippers (right) with highlighted primitive-shapes colliders (green lines).

The BarrettHand is a multi-fingered gripper with

a higher degree of dexterity that can grasp objects of

various sizes and shapes and at different orientations.

The three articulated fingers are composed of two independently moving joints with ranges of 140° (finger base joint) and 48° (fingertip). They can each apply a maximum force of 20 N at the fingertip. Two of the fingers have a 180° spread (mirrored joint) that allows a change in grasp configuration, e.g., from cylindrical grasp to spherical grasp. We obtain the open-hand

configuration from the kinematic description of the

BarrettHand when every finger is fully open. As for

the closed-hand configuration, we first load the grip-

per into Unity. Then, we set a capsule collider for

every digit of the gripper. The BarrettHand has three

fingers with two phalanges for each finger; therefore,

we obtain a total of six capsule colliders. Moreover,

we set a collider for the base of the gripper.

PrendoSim contains two built-in grippers, but it

is possible to include a new gripper by defining the

open-hand configuration and the colliders for each

of its digits, which will drive the kinematic gripper

throughout the closure movement.

3.3.2 Objects

There are presently three built-in objects in Pren-

doSim: a pair of scissors, a hammer, and a screw-

driver. We selected these models from the YCB

dataset (Calli et al., 2017), because they are familiar to most people and offer different degrees of constraint difficulty for their intended use (Ortenzi et al.,

2020a). All objects are considered to be rigid, i.e.,

the blades of the scissors cannot open and close. We

divided each object into two functional areas: a tool

interface (grey area) and a grasp interface (red area)

(Fig. 4) (Osiurak et al., 2017). We accounted for ma-

terials, weight distribution, and friction separately on

both parts of each object (Table 2).

The pair of scissors presents the most stringent constraints among the three tools. Although they have a

handle like a hammer and a screwdriver, this handle

requires the user to insert the fingers through the rings

to achieve the correct cutting motion. The blades must

enclose the material while an opening-and-closing

motion of the fingers performs the cut.

A hammer, by comparison, is designed to be grasped

by the handle (graspable side) and used by the head

(tool side). The handle is quite large, with respect to

the entire body, and can be grasped at any point. The

only constraint to its use is the head orientation with

respect to the object to pound. Thus, a hammer offers

loose constraints on the grasp.

Finally, the screwdriver is generally smaller than a

hammer, but the two structures are similar. The body

can be divided into two main functional areas: the

handle and the metallic rod. The handle is the gras-

pable part, and the metallic rod is the interface be-

tween the tool and the object to be tightened or loos-

ened with the screwdriver. A screwdriver presents

tighter constraints with respect to the hammer, as the

orientation of the rod has to match the approaching

direction of the screw, and the tip must be slightly in-

serted into the head of the screw.

Each object has a set of two specifically chosen

colliders that conform to the object’s shape as closely

as possible (Fig. 4). For the scissors, we have chosen

a concave mesh collider for the graspable side, which

more accurately wraps around the handle, leaving the

two holes as empty space. For the tool side of the

scissors, we have chosen a mesh collider. As for the

other two objects, a capsule collider was sufficient to

define the objects for accurate grasping.

PrendoSim additionally considers two, often ne-

glected, physical characteristics: weight distribution

and friction, for which appropriate values were se-

lected based on the material that made up each part

of the objects. These values are unique for each

target object, and they physically match their real-

world equivalents as closely as possible; however, a

few physics properties were globally set for all ob-

jects, i.e., angular and translational drag coefficients,

which were set to 0.05, Unity’s default setting. Unity provides several collision detection algorithms, from which we selected the “Continuous Dynamics” option, because it optimises the simulation while preventing objects from intersecting one another.

Figure 4: Geometry of the object colliders. Top: pair of scissors in closed fixed position with a concave collider applied to the handle (graspable side) and a mesh collider applied to its tool side. Middle and bottom: Hammer and screwdriver with two capsule colliders each; a capsule for the tool side and another one for the graspable side. All colliders are shown as green lines.

Table 2 presents details about the physical char-

acteristics of each object deployed in the simulator.

Static and dynamic friction parameters match the real-

world equivalents based on the materials of each part

(tool or graspable interface) of the objects. We obtained these coefficients from The Engineering Toolbox⁸. The bounce coefficient was set to 0 for all objects to simulate perfectly plastic contacts, with no

rebound speed. Simulating grasps where the object

bounces around within the hand after contact is be-

yond the scope of PrendoSim.

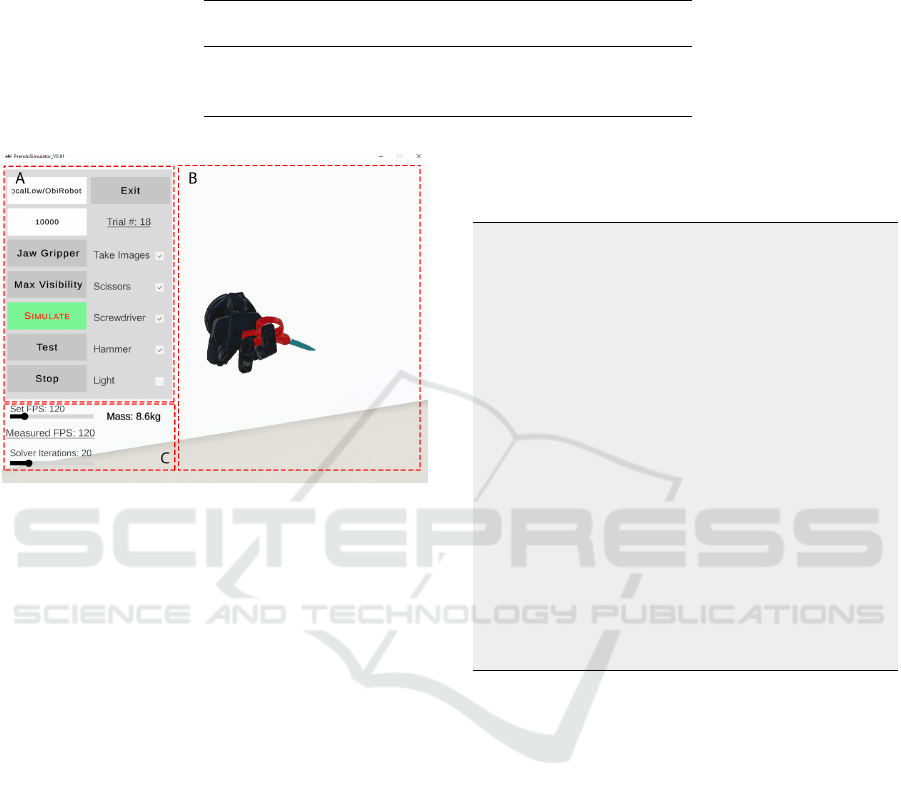

3.4 User Interface

The user interface (UI) is a critical point of our grasp

generator. PrendoSim provides an easy-to-use UI,

which allows an effortless selection of the various op-

tions and parameters for the simulations. The UI has

three sections: Section A with major inputs (Fig. 5A);

section C with minor inputs (Fig. 5C); and section

B with the simulation screen (Fig. 5B). In section A,

users are prompted to type the key parameters for the

simulation, i.e., number of simulations, gripper type,

target object, and the directory path where the data

will be stored after the simulation. Each trial is shown

in the simulation screen in section B. This section ren-

ders the 3D model of both gripper and object, and it

shows the current grasp in real time. The user can visually confirm the gripper’s configuration and qualitatively evaluate the input parameters. The viewpoint can be regulated via mouse. In section C the user can further adjust simulation-related parameters such as sampling rate and the number of physics solver iterations. Moreover, a display shows the current weight of the object when the algorithm executes point 6 of our strategy, as described in Sec. 3.2.

⁸ https://www.engineeringtoolbox.com/friction-coefficients-d_778.html

Table 2: Simulated mass (m), static friction parameter (π_s), and kinetic friction parameter (π_k) of the two functional areas (Osiurak et al., 2017) of the three objects in PrendoSim. Friction coefficients µ_s and µ_k are calculated by averaging the friction parameters of the gripper (shown in Table 1) and the object surface it is touching.

| Object | Handle side m (g) | Handle side π_s | Handle side π_k | Tool side m (g) | Tool side π_s | Tool side π_k |
|---|---|---|---|---|---|---|
| Scissors | 25 | 0.78 | 0.25 | 80 | 1.05 | 0.47 |
| Hammer | 70 | 0.40 | 0.65 | 280 | 0.50 | 0.30 |
| Screwdriver | 50 | 1.13 | 1.40 | 85 | 0.35 | 0.80 |

Figure 5: PrendoSim user interface, with A: user input fields, B: 3D visual rendering of the simulation scene, and C: physics engine adjustment fields.

3.5 Output

PrendoSim outputs the object category, camera pose,

and the gripper’s pose in a JSON file. We record

the condition as the target object involved in the sim-

ulation, for example “Screwdriver”. We record the

camera’s view angle onto the scene in world coordi-

nates (position and rotation in quaternions). Then we

record the gripper’s digit positions and rotation an-

gles in both local (relative to the gripper’s root) and

world coordinates. The same data is also recorded for

the target object. Finally we take note of the target

object’s categorical orientation, defined in four cate-

gories: up, down, pointing away from the hand, and

turned with respect to the tool interface. For example

in Fig. 6, the tool interface of the screwdriver is point-

ing away from the gripper; hence it has been labelled

as “pointing” in the output file, whose format is shown below:

{
  "conditionInfo": "Screwdriver",
  "cameraPose": ["posX, posY, posZ, rotX, rotY, rotZ, rotW"],
  "jointData": [
    "Digit_1_joint_1, posX, posY, posZ, rotX, rotY, rotZ, rotW",
    "Digit_1_joint_2, posX, posY, posZ, rotX, rotY, rotZ, rotW",
    "Digit_n_joint_n, posX, posY, posZ, rotX, rotY, rotZ, rotW"],
  "root": ["posX, posY, posZ, rotX, rotY, rotZ, rotW"],
  "objectPose": ["screwd(Clone), posX, posY, posZ, rotX, rotY, rotZ, rotW"],
  "categoricalDir": ["pointing, rotX, rotY, rotZ, rotW"]
}
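A record of this shape could be read back with a few lines of Python. The sketch below assumes that, in an actual output file, the placeholders posX through rotW are replaced by decimal numbers and that each entry is a single comma-separated string, as the template above suggests. The sample record uses made-up numbers purely for illustration, and the helper names are hypothetical.

```python
import json


def parse_pose(entry, has_name):
    """Parse 'posX, posY, posZ, rotX, rotY, rotZ, rotW', optionally named."""
    fields = [field.strip() for field in entry.split(",")]
    name = fields.pop(0) if has_name else None
    if len(fields) != 7:
        raise ValueError(f"expected 7 numeric fields, got {len(fields)}: {entry!r}")
    values = [float(field) for field in fields]
    return {"name": name, "position": values[:3], "rotation": values[3:]}


def parse_grasp_record(text):
    """Turn one JSON record into nested dictionaries with numeric poses."""
    record = json.loads(text)
    label, *quaternion = [f.strip() for f in record["categoricalDir"][0].split(",")]
    return {
        "object": record["conditionInfo"],
        "camera": parse_pose(record["cameraPose"][0], has_name=False),
        "joints": [parse_pose(e, has_name=True) for e in record["jointData"]],
        "root": parse_pose(record["root"][0], has_name=False),
        "object_pose": parse_pose(record["objectPose"][0], has_name=True),
        "direction": label,
        "direction_rotation": [float(q) for q in quaternion],
    }


# Hypothetical record with made-up numbers, shaped like the template above.
SAMPLE = """
{
  "conditionInfo": "Screwdriver",
  "cameraPose": ["0.0, 1.2, -0.8, 0.0, 0.0, 0.0, 1.0"],
  "jointData": [
    "Digit_1_joint_1, 0.01, 0.10, 0.02, 0.0, 0.0, 0.0, 1.0",
    "Digit_1_joint_2, 0.01, 0.14, 0.03, 0.0, 0.0, 0.0, 1.0"
  ],
  "root": ["0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0"],
  "objectPose": ["screwd(Clone), 0.02, 0.12, 0.05, 0.0, 0.7071, 0.0, 0.7071"],
  "categoricalDir": ["pointing, 0.0, 0.7071, 0.0, 0.7071"]
}
"""

grasp = parse_grasp_record(SAMPLE)
print(grasp["object"], grasp["direction"], len(grasp["joints"]))
```

Splitting each string into a name, a position triple and a rotation quaternion makes the records straightforward to load into a table or to replay on hardware.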

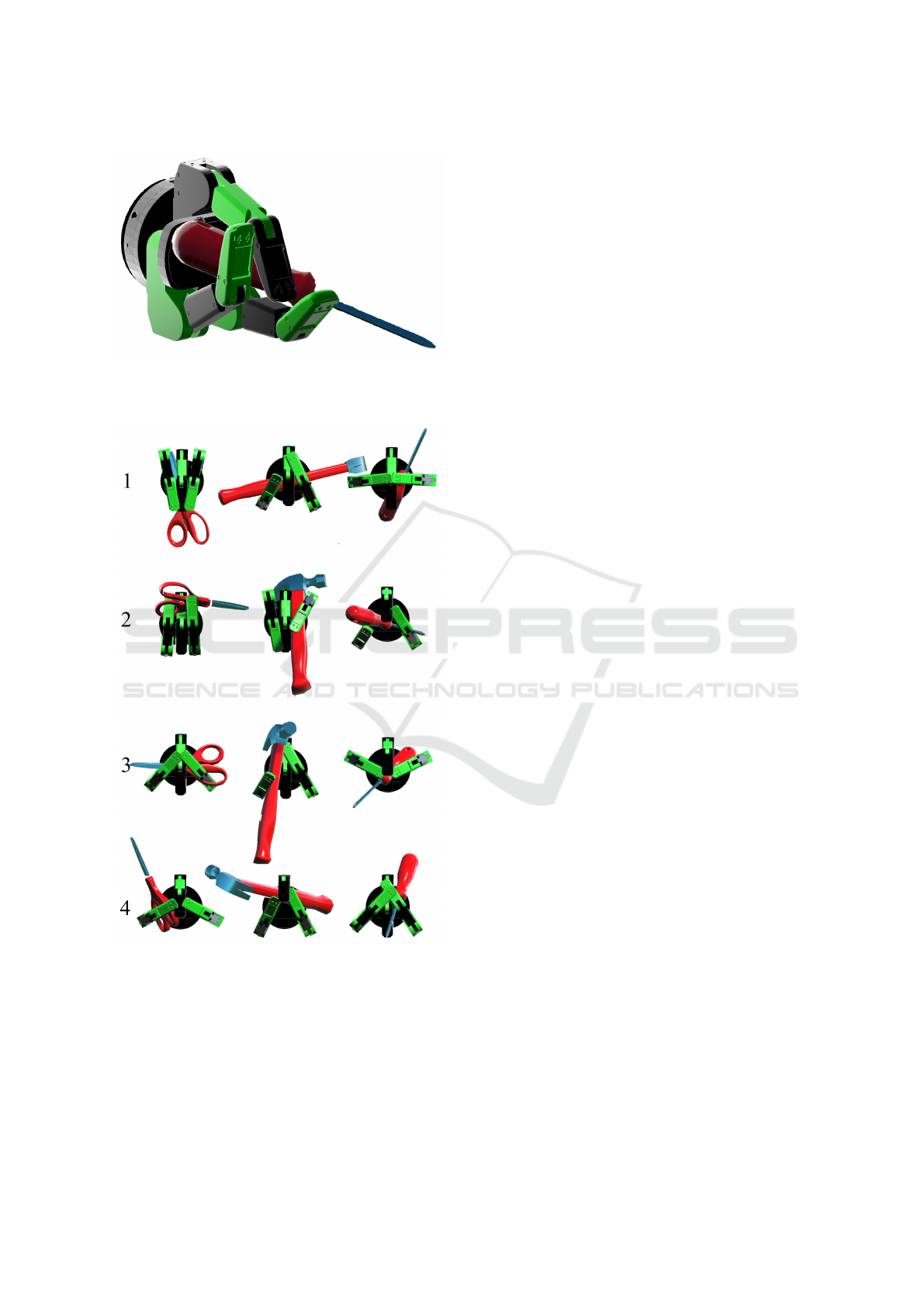

Finally, in Fig. 7 we present a series of stable grasps

generated by PrendoSim. We report four grasps

per object to show qualitatively the variety of stable

grasps generated by PrendoSim. Interestingly, some

of the grasps are not human-like (see example grasp

1 for the scissors in Fig. 7) and could not have been

generated by following a human-inspired generation

process based on learning from demonstration.

4 DISCUSSION AND

CONCLUSION

We present a novel grasp generator that accounts for

dynamic properties of the gripper and the grasped ob-

ject, including static and dynamic friction, weight,

and weight distribution. PrendoSim is built on Unity’s

latest physics articulated-body component, and it

presents two built-in grippers (a parallel jaw gripper

and a three-finger hand) and three built-in objects (a pair of scissors, a hammer, and a screwdriver). PrendoSim utilises the proxy-hand method to compute forces at the contacts and provide an operational definition of grasp stability. The intuitive interface allows the user to easily set up the simulations.

Figure 6: BarrettHand successfully grasping a screwdriver pointing away from the gripper.

Figure 7: Four examples of grasps for each of the target objects (a pair of scissors, a hammer, a screwdriver) arranged in columns.

PrendoSim has the great advantage of describing objects realistically, allowing non-uniform materials by defining areas with different dynamic properties. Moreover, it is easy to use, open source, and free to download from https://prendosim.github.io. We believe that it can be extended to VR simulation with haptic rendering in which the hand is treated as a god-object (Dworkin and Zeltzer, 1993) that retains information about contact with objects (Zilles and Salisbury, 1995).

PrendoSim has a few limitations and room for im-

provement. At the moment, a gripper configuration

is considered successful if the object remains in the

grasp after the hand first closes on it with a moder-

ate grasp force. We are planning to add more grasp

stability measures, such as force closure, in order to

enable a wider validation of the results. The addi-

tion of further objects and grippers would give an even

wider range of variation to the simulations. Currently,

such additions are possible by directly working on the

files in the open-source project of PrendoSim. While

the consideration of parameters such as friction and

weight distribution has reduced the gap between sim-

ulation and the real world, we are also planning to

replicate the grasps generated by PrendoSim on a real

robot in order to more thoroughly verify its output.
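To make the current success criterion concrete, the sketch below shows one hypothetical way to phrase "the object remains in the grasp" as a check on logged positions. It is not PrendoSim's implementation: the function name `remains_in_grasp`, the drift tolerance of 0.02 (assumed to be in metres), and the use of positions only (rotations are ignored) are all assumptions made for illustration.

```python
import math


def remains_in_grasp(hand_positions, object_positions, max_drift=0.02):
    """Return True if the object keeps its initial offset from the hand.

    hand_positions, object_positions: equally long sequences of (x, y, z)
    tuples sampled after the hand has closed (hypothetical log format).
    max_drift: assumed tolerance on how far the hand-to-object offset may
    move away from its initial value.
    """
    if not hand_positions or len(hand_positions) != len(object_positions):
        raise ValueError("need two non-empty sequences of equal length")

    def offset(hand, obj):
        # Object position expressed relative to the hand position.
        return tuple(o - h for h, o in zip(hand, obj))

    start = offset(hand_positions[0], object_positions[0])
    for hand, obj in zip(hand_positions, object_positions):
        if math.dist(offset(hand, obj), start) > max_drift:
            return False  # the object slipped or fell relative to the hand
    return True


if __name__ == "__main__":
    hand = [(0.0, 0.0, 0.0)] * 3
    held = [(0.0, 0.0, 0.100), (0.0, 0.0, 0.101), (0.0, 0.0, 0.099)]
    dropped = [(0.0, 0.0, 0.100), (0.0, 0.0, 0.050), (0.0, 0.0, -0.300)]
    print(remains_in_grasp(hand, held), remains_in_grasp(hand, dropped))
```

A binary check of this kind only says whether the object was retained; it carries no information about how robust the grasp is to disturbances, which is why additional measures such as force closure would complement it.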

To enable more advanced image analysis, such as

3D image reconstruction or deep learning, our simu-

lator could be extended to include depth-imaging or

multi-camera functionalities by adding more cameras

in the scene in Unity, which is currently possible with

the provided open-source project.

One of the problems with the proxy-hand method,

which has been highlighted in haptic rendering, is that

the simulated objects are rigid; thus, they have only

point contacts. This is a problem especially in hu-

man hand simulations, where contact areas are gen-

erally wider considering the soft deformations of the

fingertips. Contact area simulations can be added to

improve realism of contact dynamics, for example to

add point torques as in (Talvas et al., 2013).

We believe that PrendoSim is a tool that can bene-

fit multiple communities, as its applications span from

the development of grasp algorithms, to the recording

of grasp datasets, to the development of VR applica-

tions and human-subject experiments.

ACKNOWLEDGMENTS

This work was supported by the UK Engineering and

Physical Sciences Research Council (EPSRC) grant

EP/L016516/1 and by the Biotechnology and Bio-

logical Sciences Research Council (BBSRC) grant

BB/R003971/1 for the University of Birmingham.

This work was partially supported by the Deutsche

Forschungsgemeinschaft (DFG, German Research

Foundation) under Germany’s Excellence Strategy –

EXC 2120/1 – 390831618.

REFERENCES

Adjigble, M., Marturi, N., Ortenzi, V., Rajasekaran, V.,

Corke, P., and Stolkin, R. (2018). Model-free

and learning-free grasping by local contact moment

matching. In Proceedings of the 2018 IEEE/RSJ In-

ternational Conference on Intelligent Robots and Sys-

tems (IROS), pages 2933–2940, Madrid, Spain.

Ajoudani, A., Zanchettin, A. M., Ivaldi, S., Albu-Schäffer, A., Kosuge, K., and Khatib, O. (2017). Progress and prospects of the human-robot collaboration. Autonomous Robots.

Bekiroglu, Y., Marturi, N., Roa, M. A., Adjigble, K. J. M.,

Pardi, T., Grimm, C., Balasubramanian, R., Hang, K.,

and Stolkin, R. (2020). Benchmarking protocol for

grasp planning algorithms. IEEE Robotics and Au-

tomation Letters, 5(2):315–322.

Berceanu, C. and Tarnita, D. (2012). Mechanical design and

control issues of a dexterous robotic hand. Advanced

Materials Research, 463-464:1268–1271.

Bicchi, A. and Kumar, V. (2000). Robotic grasping and

contact: a review. In Proceedings of the IEEE In-

ternational Conference on Robotics and Automation

(ICRA), volume 1, pages 348–353.

Borst, C. and Indugula, A. (2005). Realistic virtual grasp-

ing. In Proceedings of the IEEE Conference on Virtual

Reality (VR), pages 91–320, Bonn, Germany.

Calli, B., Singh, A., Bruce, J., Walsman, A., Konolige, K.,

Srinivasa, S., Abbeel, P., and Dollar, A. M. (2017).

Yale-CMU-Berkeley dataset for robotic manipulation

research. The International Journal of Robotics Re-

search, 36(3):261–268.

Ding, D., Lee, Y.-H., and Wang, S. (2001). Computation

of 3-D form-closure grasps. IEEE Transactions on

Robotics and Automation, 17(4):515–522.

Dworkin, P. and Zeltzer, D. (1993). A new model for ef-

ficient dynamic simulation. In Proceedings of the

Fourth Eurographics Workshop on Animation and

Simulation, pages 135–147.

Ferrari, C. and Canny, J. (1992). Planning optimal grasps.

In Proceedings of the IEEE International Conference

on Robotics and Automation, volume 3, pages 2290–

2295.

Gualtieri, M., ten Pas, A., Saenko, K., and Platt, R. (2016).

High precision grasp pose detection in dense clutter.

In Proceedings of the IEEE/RSJ International Confer-

ence on Intelligent Robots and Systems (IROS), pages

598–605.

Höll, M., Oberweger, M., Arth, C., and Lepetit, V. (2018). Efficient physics-based implementation for realistic hand-object interaction in virtual reality. In Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pages 175–182.

Honarpardaz, M., Tarkian, M., Ölvander, J., and Feng, X. (2017). Finger design automation for industrial robot grippers: A review. Robotics and Autonomous Systems, 87:104–119.

Levine, S., Pastor, P., Krizhevsky, A., Ibarz, J., and Quillen,

D. (2018). Learning hand-eye coordination for robotic

grasping with deep learning and large-scale data col-

lection. The International Journal of Robotics Re-

search, 37(4-5):421–436.

Miller, A. T. and Allen, P. K. (2004). GraspIt! A versatile simulator for robotic grasping. IEEE Robotics & Automation Magazine, 11(4):110–122.

Nguyen, V.-D. (1988). Constructing force-closure grasps.

The International Journal of Robotics Research,

7(3):3–16.

Nguyenle, T., Verdoja, F., Abu-Dakka, F., and Kyrki, V.

(2021). Probabilistic surface friction estimation based

on visual and haptic measurements. IEEE Robotics

and Automation Letters, pages 1–8.

Ortenzi, V., Cini, F., Pardi, T., Marturi, N., Stolkin, R.,

Corke, P., and Controzzi, M. (2020a). The grasp strat-

egy of a robot passer influences performance and qual-

ity of the robot-human object handover. Frontiers in

Robotics and AI, 7:138.

Ortenzi, V., Controzzi, M., Cini, F., Leitner, J., Bianchi, M.,

Roa, M. A., and Corke, P. (2019). Robotic manipula-

tion and the role of the task in the metric of success.

Nature Machine Intelligence, 1(8):340–346.

Ortenzi, V., Cosgun, A., Pardi, T., Chan, W., Croft, E., and

Kulic, D. (2020b). Object handovers: a review for

robotics. arXiv.

Osiurak, F., Rossetti, Y., and Badets, A. (2017). What is an

affordance? 40 years later. Neuroscience & Biobehav-

ioral Reviews, 77:403–417.

Talvas, A., Marchal, M., and Lécuyer, A. (2013). The god-finger method for improving 3D interaction with virtual objects through simulation of contact area. In Proceedings of the IEEE Symposium on 3D User Interfaces (3DUI), pages 111–114.

Vahrenkamp, N., Kröhnert, M., Ulbrich, S., Asfour, T., Metta, G., Dillmann, R., and Sandini, G. (2012). Simox: A robotics toolbox for simulation, motion and grasp planning. In International Conference on Intelligent Autonomous Systems (IAS), pages 585–594.

Zilles, C. B. and Salisbury, J. K. (1995). A constraint-based

god-object method for haptic display. In Proceedings

of the IEEE/RSJ International Conference on Intel-

ligent Robots and Systems (IROS), volume 3, pages

146–151.
