Anomaly Detection in Beehives using Deep Recurrent Autoencoders

Padraig Davidson, Michael Steininger, Florian Lautenschlager, Konstantin Kobs, Anna Krause and Andreas Hotho

Institute of Computer Science, Chair of Computer Science X, University of Würzburg, Am Hubland, Würzburg, Germany

Keywords:

Precision Beekeeping, Anomaly Detection, Deep Learning, Autoencoder, Swarming.

Abstract:

Precision beekeeping makes it possible to monitor the living conditions of bees by equipping beehives with sensors. The data recorded by these hives can be analyzed by machine learning models to learn the behavioral patterns of bee colonies or to search for unusual events in them. One typical target is the early detection of swarming, which apiarists want to avoid for economic reasons. Advanced methods should also be able to detect any other unusual or abnormal behavior, whether it arises from illness of the bees or from technical causes, e.g. sensor failure.

In this position paper we present an autoencoder, a deep learning model, which detects any type of anomaly in the data, independent of its origin. Our model reveals the same swarms as a simple rule-based swarm detection algorithm, but is also triggered by any other anomaly. We evaluated our model on real-world datasets that were collected on different hives and with different sensor setups.

1 INTRODUCTION

Precision apiculture, also known as precision beekeeping, aims to support beekeepers in their care decisions to maximize efficiency. For that, sensor data is collected on 1) apiary level (e.g. meteorological parameters), 2) colony level (e.g. beehive temperature), or 3) individual bee level (e.g. bee counter) (Zacepins et al., 2015). To gather data on colony level, beehives are equipped with environmental sensors that continuously monitor and quantify the beehive's state. Occasionally, sensor readings deviate substantially from the norm. We refer to these events as anomalies. They can be categorized as behavioral anomalies, sensor anomalies, and external interferences. The first type describes irregular behavior within the monitored subject, the second describes any abnormal measurements of the sensors used, and the last subsumes any external force acting on the hive.

A prominent behavioral anomaly in apiculture is swarming. Swarming is the event of a colony's queen leaving the hive with a party of worker bees to start a new colony at a distant location. It is a naturally occurring, albeit highly stochastic, reproduction process of a beehive. During the prime swarm, the current queen departs with many of the workers from the old colony. Subsequent afterswarms can occur with fewer workers leaving the hive. Swarming events can recur until the original colony is totally depleted (Winston, 1980). Swarming diminishes a beehive's production and requires the beekeeper's immediate attention if the new colonies are to be recollected. Therefore, beekeepers try to prevent swarming events in their beehives.

A second notable behavioral anomaly in beehives is mite infestation (Varroa destructor) (Navajas et al., 2008). Mites weaken colonies and make bees more susceptible to additional diseases. Over time, they lead to bee deaths and thus have severe consequences for the environment, as they reduce the pollination power of bees in general. Just like swarming, mite infestations and diseases require immediate attention by beekeepers.

Sensor anomalies and external interferences are non-bee-related anomalies that can arise in any sensor network. Any technical defects, including faulty sensors, can be summarized as sensor anomalies; they require maintenance of the beehive and the sensor network. In apiaries, external interference is any physical interaction of beekeepers, or of other external forces, with their hives, e.g. opening the beehive to harvest honey.

Finding anomalies in large datasets requires specialized methods that go beyond manual evaluation. Autoencoders (AEs) are a popular choice for anomaly detection. They are a deep neural network architecture designed to reconstruct normal behavior with minimal loss of information. In contrast, an AE's reconstruction of anomalous behavior shows a significant loss, so such behavior can be identified. AEs are purely data-driven and need no beehive-specific knowledge.

Davidson, P., Steininger, M., Lautenschlager, F., Kobs, K., Krause, A. and Hotho, A.
Anomaly Detection in Beehives using Deep Recurrent Autoencoders.
DOI: 10.5220/0009161201420149
In Proceedings of the 9th International Conference on Sensor Networks (SENSORNETS 2020), pages 142-149
ISBN: 978-989-758-403-9; ISSN: 2184-4380
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In this paper, we present an autoencoder that can detect all three types of anomalies in beehive data in a data-driven fashion. Our contribution is twofold: First, we explore the possibility of using an autoencoder for anomaly detection on beehive data. Second, we show that, because it is data-driven, this architecture can be applied to different types of beehives without the need for additional fine-tuning.

We evaluate our approach on three datasets: one long-term dataset of four years provided by the HOBOS (https://hobos.de/) project, one short-term dataset obtained from (Zacepins et al., 2016), and another short-term dataset from we4bee (https://we4bee.org/). For this preliminary study, we focus on time spans in which swarming can occur, to show that our approach works in general.

The remainder of this paper is structured as follows: Section 2 presents related research. Section 3 describes the datasets in detail, whereas Section 4 gives a comprehensive characterization of our autoencoder network structure. In Section 5 we describe our experiments, and we list the results on swarming data in Section 6. Section 7 contrasts normal behavior with selected anomalies, examines the sensor basis for detecting them with our model, and discusses the results. We conclude with a summary and possible directions for future work in Section 8.

2 RELATED WORK

To learn how to distinguish normal from anomalous behavior, we use anomaly detection techniques based on neural networks. In particular, we use recurrent autoencoders, since they have been shown to work in many anomaly detection settings with sequential data (Filonov et al., 2016; Malhotra et al., 2016; Shipmon et al., 2017; Chalapathy and Chawla, 2019). Prior work on anomaly detection in bee data has focused mainly on swarm detection, for which several techniques have been published.

(Ferrari et al., 2008) monitored sound, temperature and humidity of three beehives to investigate changes of these variables during swarming. The beehives experienced nine swarming activities during the monitoring period, for which they analyzed the collected data. They concluded that the shift in sound frequency and the change in temperature might be used to predict swarming.

(Kridi et al., 2014) proposed an approach to identify pre-swarming behavior by clustering temperatures into typical daily patterns. An anomaly is detected if the measurements do not fit into the typical clusters for multiple hours.

(Zacepins et al., 2016) used a customized swarming detection algorithm based on single-point temperature monitoring. They assumed a base temperature of 34.5 °C within the hive, which is allowed to fluctuate within ±1 °C. If an increase of ≥ 1 °C lasted between two and twenty minutes, they reported the timestamp of the peak temperature as the swarming time.
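The rule can be sketched in a few lines. This is a minimal illustration, not the published implementation: it assumes one reading per minute and interprets "an increase of ≥ 1 °C" as a run of consecutive readings at least 1 °C above the 34.5 °C base (the ±1 °C fluctuation band is thereby absorbed into the threshold). The function name `rba_swarm_times` is hypothetical.

```python
from typing import List


def rba_swarm_times(temps: List[float], base: float = 34.5, rise: float = 1.0,
                    min_len: int = 2, max_len: int = 20) -> List[int]:
    """Hypothetical sketch of a single-point temperature swarm rule.

    temps: one reading per minute (degrees Celsius).
    Returns the minute index of the peak reading for every run of
    consecutive readings >= base + rise lasting min_len..max_len minutes.
    """
    peaks: List[int] = []
    start = None  # index where the current elevated run began
    # A -inf sentinel guarantees that a run reaching the end is closed.
    for i, t in enumerate(temps + [float("-inf")]):
        if t >= base + rise:
            if start is None:
                start = i
        elif start is not None:
            run = temps[start:i]
            if min_len <= len(run) <= max_len:
                # Report the timestamp (index) of the peak temperature.
                peaks.append(start + run.index(max(run)))
            start = None
    return peaks


# Example: a 3-minute excursion up to 36.2 °C peaks at minute 11.
readings = [34.5] * 10 + [35.6, 36.2, 35.9] + [34.6] * 10
print(rba_swarm_times(readings))  # [11]
```

Runs shorter than two or longer than twenty minutes are ignored, which is what separates a swarm-like spike from a single outlier or a long warm period under this interpretation.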

(Zhu et al., 2019) found that a linear temperature increase can be observed before swarming. They proposed measuring the temperature between the wall of the hive and the first frame near the bottom, which provides the most apparent temperature increase.
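One way to make "a linear temperature increase" measurable is to fit an ordinary least-squares line to the most recent readings and inspect its slope. The sketch below is an illustration under assumptions: the helper names, the 30-minute window and the slope threshold of 0.01 °C per minute are hypothetical values, not ones reported by Zhu et al.

```python
from typing import List


def ols_slope(values: List[float]) -> float:
    """Least-squares slope of values against their index (units per step)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    cov = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(values))
    var = sum((i - mean_x) ** 2 for i in range(n))
    return cov / var


def rising(values: List[float], window: int = 30, min_slope: float = 0.01) -> bool:
    """Hypothetical check: is the trend of the last `window` readings steep enough?"""
    return ols_slope(values[-window:]) >= min_slope


# A steady rise of 0.02 °C per minute over 30 minutes.
trend = [34.5 + 0.02 * i for i in range(30)]
print(round(ols_slope(trend), 4), rising(trend))  # 0.02 True
```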

While swarming is an important type of anomaly, we believe that other exceptional events should also be detected.

3 DATASETS

In our work, we use sensor measurements from HOBOS, a subset of the data from we4bee, and the data used by (Zacepins et al., 2016) (referred to as Jelgava). All datasets are referenced by the location of the beehives.

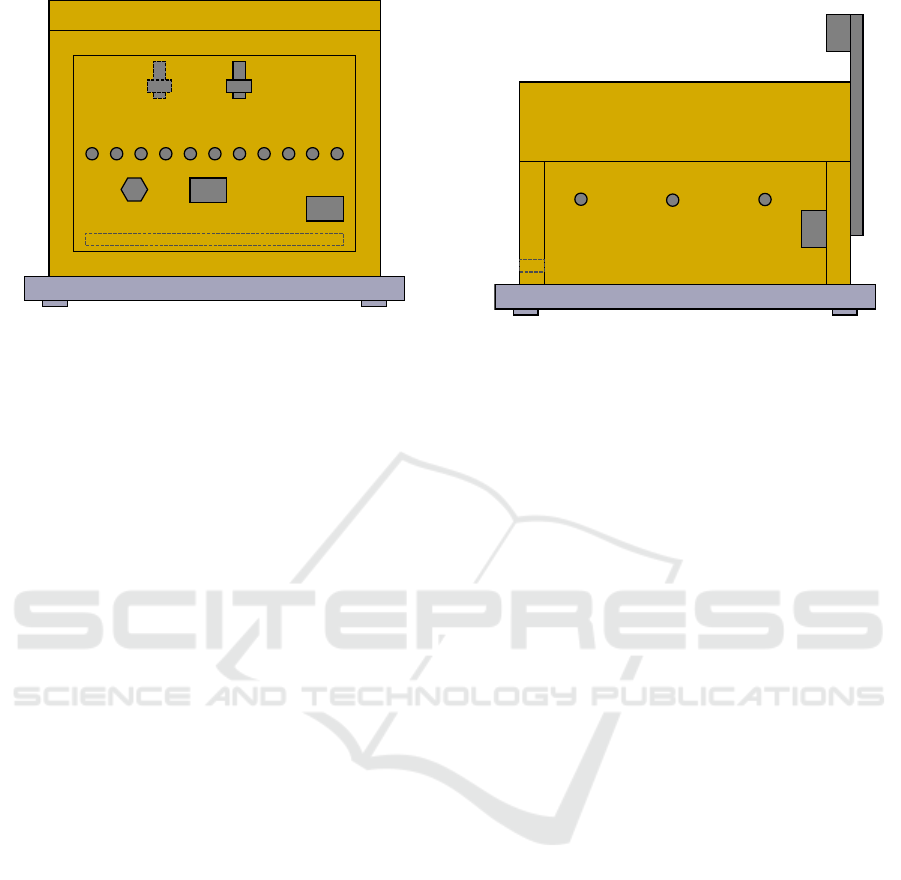

3.1 Würzburg & Bad Schwartau

HOBOS equipped five beehives (species: Apis mellifera; beehive type: Zander beehive) with several environmental sensors. We use data from two of these beehives, located in Bad Schwartau and Würzburg. While there are three verified swarming events at Bad Schwartau, the Würzburg beehive data is completely unlabeled. We use this beehive to assess the cross-beehive applicability of our model. Figure 1 shows the back of a HOBOS beehive with all 13 temperature sensors. The beehive in Bad Schwartau is not equipped with $T_2$, $T_3$, $T_9$, $T_{10}$ and $T_{12}$, while the one in Würzburg has all sensors except $T_2$ and $T_3$. Additionally, weight, humidity and carbon dioxide (CO$_2$) are measured in the beehives. Measurements are taken once a minute at every sensor. Data was collected from May 2016 through September 2019. As we are mainly interested in swarming events, we only used the data from the typical swarming period, May to September of each year, for this preliminary study (Fell et al., 1977). HOBOS granted us access to their complete dataset.


Figure 1: Back of a HOBOS beehive. Temperature sensors $T_1$–$T_{11}$ are mounted between honeycombs; temperature sensors $T_{12}$ and $T_{13}$ are mounted on the back and the front of the hive, respectively. E denotes the hive's entrance on the front of the beehive.

3.2 Jelgava

Ten colonies (Apis mellifera mellifera; Norwegian-type hive bodies) were monitored by a single temperature sensor placed above the hive body. Measurements were recorded once every minute over the time span of May through August 2015. The authors granted us access to the nine days in their dataset that contain swarming events, one on each day.

3.3 Markt Indersdorf

The colony (Apis mellifera; top bar hive) in Markt Indersdorf is monitored by five temperature sensors: one on the outside and four on the inside of the beehive. Three of the inner sensors are mounted laterally to the orientation of the top bars; the remaining inner sensor is placed in parallel at the back. Figure 2 shows a cutaway view of a we4bee hive. Additionally, other environmental influences are monitored with sensors for air pressure, weight, fine dust, humidity, rain and wind. Measurements are taken once every second (fine dust: every three minutes). Data ranges from June (start of the colony) through September 2019.

4 AUTOENCODER

Since autoencoders (AEs) have proven to be successful for anomaly detection, we use such a model for our task (Goodfellow et al., 2016; Sakurada and Yairi, 2014; Chalapathy and Chawla, 2019). Especially for analyzing anomalies within sequential data, e.g. time series, deep recurrent autoencoders using long short-term memory networks (LSTMs) (Goodfellow et al., 2016) have been more successful than conventional methods such as SVMs (Ergen et al., 2017). We adapt this model for this work.

Figure 2: Cutaway view of a we4bee beehive. $T_l$, $T_m$, $T_r$, and $T_i$ are mounted on the inside, laterally to the honeycombs. $T_o$ is placed outside at the pylon. E denotes the entrance on the front of the beehive.

An autoencoder is a pair of neural networks: an encoder $\phi: \mathcal{X} \to \mathcal{F}$ and a decoder $\psi: \mathcal{F} \to \mathcal{X}$, where $\mathcal{X}$ is the input space and $\mathcal{F}$ is the feature space or latent space. In the case of deep recurrent autoencoders, both encoder and decoder are LSTMs. The training objective of the autoencoder is to reconstruct the input: $\bar{x} = \psi(\phi(x)) \approx x$. Usually, the latent space is smaller than the input space, producing a bottleneck that forces the autoencoder to encode patterns of the input data distribution in the encoder's and decoder's weights. During the training phase, the autoencoder is provided with data of normal behavior and learns to reconstruct such data well. When it encounters abnormal data during inference, i.e. input data that does not fit the learned patterns, it cannot reconstruct the input properly. Large deviations between model output and input data therefore indicate anomalies.

More formally, the two networks are tuned to minimize a given reconstruction loss function $L$:

$$\phi, \psi = \arg\min_{\phi, \psi} L(x - \psi(\phi(x))).$$

Commonly, the $\ell_2$ norm (Zhou and Paffenroth, 2017) or the mean squared error (MSE) (Shipmon et al., 2017) is chosen as $L$. If this loss reaches or exceeds a given threshold $\alpha$, the input is considered an anomaly:

$$L(x - \psi(\phi(x))) = L(x - \bar{x}) \geq \alpha.$$

$\alpha$ can be set manually or based on a validation dataset containing anomalies, depending on the desired sensitivity of the model. Ideally, it is chosen such that all validation anomalies are detected and no normal behavior is misclassified.
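The decision rule can be written directly. A minimal sketch, assuming the MSE as $L$ and treating the reconstruction $\bar{x} = \psi(\phi(x))$ as already computed; in practice $\bar{x}$ would come from the trained LSTM encoder and decoder, whereas `close` and `far` below are hypothetical stand-ins, and the function names are illustrative.

```python
from typing import Sequence


def mse(x: Sequence[float], x_hat: Sequence[float]) -> float:
    """Mean squared error between a window x and its reconstruction x_hat."""
    if len(x) != len(x_hat) or not x:
        raise ValueError("x and x_hat must be non-empty and equally long")
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def is_anomaly(x: Sequence[float], x_hat: Sequence[float], alpha: float) -> bool:
    """Decision rule L(x - x_hat) >= alpha, with L = MSE."""
    return mse(x, x_hat) >= alpha


window = [0.1, 0.2, 0.3, 0.4]
close = [0.1, 0.2, 0.3, 0.5]  # small reconstruction error (about 0.0025)
far = [0.1, 0.2, 0.3, 1.4]    # large reconstruction error (about 0.25)
print(is_anomaly(window, close, alpha=0.1), is_anomaly(window, far, alpha=0.1))  # False True
```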

SENSORNETS 2020 - 9th International Conference on Sensor Networks


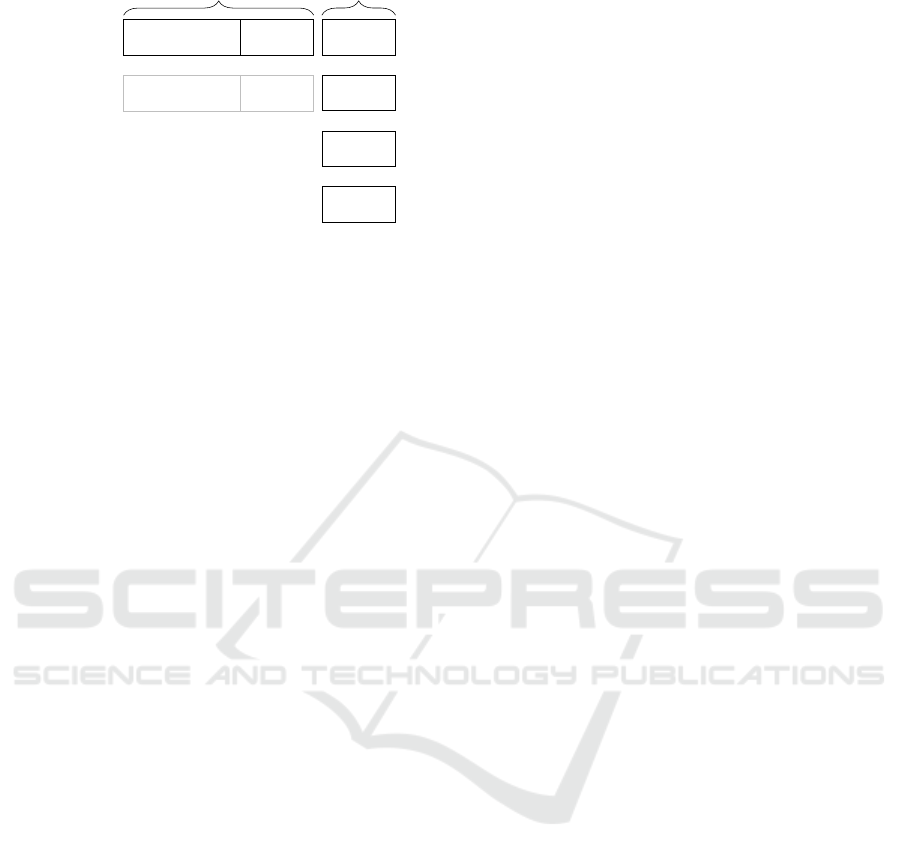

Holdout

69132 min

Validation

75314 min

Training

677822 min

Bad Schwartau

Test

72013 min

Validation

46082 min

Training

42134 min

Würzburg

Test

12960 min

Jelgava

Test

100021 min

Markt Indersdorf

Normal Behavior

Anomalous Behavior

Figure 3: The data splits used for Bad Schwartau. The au-

toencoder is trained on Bad Schwartau’s ‘Training’. The

hyperparameters and α are tuned using its ‘Validation’ and

‘Holdout’, respectively. The model is then tested on all

‘Test’. For Würzburg, the splits are set accordingly using

its ‘Training’, ‘Validation’, and ‘Test’ as ‘Holdout’. We

provide the recording time for all splits.

5 EXPERIMENTAL SETUP

We evaluate our AE model on beehive data from the four locations described in Section 3.

Data Splitting. Both HOBOS hives, Bad Schwartau and Würzburg, were used for training purposes. Through visual analysis of the data, we labeled each day of the dataset as either normal or anomalous. According to (Zacepins et al., 2016; Ferrari et al., 2008), a fully grown colony maintains a constant core temperature of 34.5 °C. All days with much higher or lower temperature readings were considered to contain anomalies. The training and validation sets are sampled from the normal portion of the dataset. The holdout set contains all days with abnormal behavior from the beehive used for training, while the test set contains all days with anomalies from any other beehive. Figure 3 illustrates the procedure for training on the Bad Schwartau hive. Days labeled as abnormal typically also contain fragments of normal behavior; test and holdout sets are therefore a mixture of normal and anomalous behavior.

Input Data. We use the centrally located temperature sensor $T_6$ for the locations Würzburg and Bad Schwartau. In Markt Indersdorf we use $T_m$, downsampling its measurements to a one-minute resolution. In Jelgava, the only available temperature sensor is used. In a further experiment, we also tried $T_r$ for Markt Indersdorf and $T_8$ for Würzburg and Bad Schwartau, to analyze differences in the predictions when using other sensors.
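The text does not state how the per-second $T_m$ readings were aggregated to one-minute resolution. The sketch below assumes a simple mean per minute bucket, with samples given as (Unix timestamp in seconds, value) pairs; both the aggregation and the helper name `downsample_to_minutes` are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def downsample_to_minutes(samples: Iterable[Tuple[int, float]]) -> List[Tuple[int, float]]:
    """Average (unix_seconds, value) samples into one value per minute.

    Returns (minute_start_seconds, mean_value) pairs sorted by time.
    Minutes without any sample are simply absent (no gap filling).
    """
    buckets: Dict[int, List[float]] = defaultdict(list)
    for ts, value in samples:
        buckets[ts - ts % 60].append(value)  # floor to the start of the minute
    return [(minute, sum(vals) / len(vals)) for minute, vals in sorted(buckets.items())]


# Two minutes of 1 Hz data: 34.0 °C, then 35.0 °C.
raw = [(t, 34.0) for t in range(0, 60)] + [(t, 35.0) for t in range(60, 120)]
print(downsample_to_minutes(raw))  # [(0, 34.0), (60, 35.0)]
```

A median instead of a mean would be more robust to single faulty readings; which one suits the data is an open choice.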

The temperature data is provided to the model in windows containing a fixed number of consecutive measurements. We used a window size of 60 min, i.e. 60 measurements, since a swarming event usually lasts from 20 min to 60 min (Zhu et al., 2019; Zacepins et al., 2016; Ferrari et al., 2008). For augmentation purposes, we built all possible windows of consecutive measurements. All time series were normalized by their z-score.
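A minimal sketch of this preprocessing, assuming the z-score is computed once over each whole time series (population standard deviation) before windowing, and that "all possible windows" means a stride of one minute. The helpers `zscore` and `sliding_windows` are hypothetical names.

```python
import statistics
from typing import List


def zscore(series: List[float]) -> List[float]:
    """Normalize a whole series to zero mean and unit (population) std."""
    mu = statistics.fmean(series)
    sigma = statistics.pstdev(series)
    if sigma == 0:
        return [0.0 for _ in series]  # constant series: nothing to scale
    return [(v - mu) / sigma for v in series]


def sliding_windows(series: List[float], size: int = 60) -> List[List[float]]:
    """All windows of `size` consecutive measurements (stride one)."""
    return [series[i:i + size] for i in range(len(series) - size + 1)]


# 90 minutes of readings yield 90 - 60 + 1 = 31 overlapping windows.
minutes = [34.5 + 0.1 * (i % 10) for i in range(90)]
windows = sliding_windows(zscore(minutes))
print(len(windows), len(windows[0]))  # 31 60
```

With stride one, every minute appears in up to 60 windows, which is what multiplies the number of training examples.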

Model Training. We performed a random grid search (Bergstra and Bengio, 2012) to find the best hyperparameters, i.e. the hidden size $hs \in \{2, \dots, 64\}$ and the number of layers $n \in \{1, \dots, 4\}$ for the encoder and decoder LSTMs.
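Sampling configurations from this grid can be sketched as follows. The number of trials, the seed and the `evaluate` callback (which would train an LSTM autoencoder with the given $hs$ and $n$ and return its validation loss) are assumptions; `toy_loss` is a stand-in objective, not a real training run.

```python
import random
from typing import Callable, Dict, Tuple

# The grid from the text: hidden size hs in {2, ..., 64}, layers n in {1, ..., 4}.
GRID = [(hs, n) for hs in range(2, 65) for n in range(1, 5)]


def random_grid_search(evaluate: Callable[[int, int], float], trials: int = 20,
                       seed: int = 0) -> Tuple[Dict[str, int], float]:
    """Evaluate `trials` distinct grid points drawn at random; keep the best.

    `evaluate(hs, n)` is a hypothetical callback that would train an LSTM
    autoencoder with these settings and return its validation loss.
    """
    rng = random.Random(seed)
    best_cfg: Dict[str, int] = {}
    best_loss = float("inf")
    for hs, n in rng.sample(GRID, min(trials, len(GRID))):
        loss = evaluate(hs, n)
        if loss < best_loss:
            best_cfg, best_loss = {"hs": hs, "n": n}, loss
    return best_cfg, best_loss


def toy_loss(hs: int, n: int) -> float:
    """Toy objective whose optimum is hs=32, n=2."""
    return abs(hs - 32) / 32 + abs(n - 2)


cfg, loss = random_grid_search(toy_loss, trials=len(GRID))
print(cfg, loss)  # {'hs': 32, 'n': 2} 0.0
```

With `trials=len(GRID)` the search degenerates to an exhaustive grid search; smaller budgets trade coverage for training time.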

For training we used the Adam optimizer (Kingma and Ba, 2014) with the default parameters (learning rate $10^{-3}$) and MSE as the loss function. To prevent overfitting, we employed early stopping with a patience of five epochs and used a ten percent split of the training data for validation.
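Early stopping with a patience of five epochs can be sketched independently of any deep learning framework. `train_epoch` and `val_loss` are hypothetical callbacks standing in for one Adam pass over the training windows and the MSE on the held-out ten percent split.

```python
from typing import Callable


def train_with_early_stopping(train_epoch: Callable[[], None],
                              val_loss: Callable[[], float],
                              patience: int = 5,
                              max_epochs: int = 1000) -> int:
    """Stop once the validation loss has not improved for `patience` epochs.

    Returns the 1-based epoch with the lowest validation loss; a real setup
    would also restore the model weights saved at that epoch.
    """
    best_loss, best_epoch, epochs_without_improvement = float("inf"), 0, 0
    for epoch in range(1, max_epochs + 1):
        train_epoch()          # e.g. one Adam pass over the training windows
        loss = val_loss()      # e.g. MSE on the 10 % validation split
        if loss < best_loss:
            best_loss, best_epoch, epochs_without_improvement = loss, epoch, 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break
    return best_epoch


# Simulated validation losses: the minimum at epoch 3 is followed by five
# non-improving epochs, so training stops before the later 0.6 is seen.
simulated = iter([1.0, 0.8, 0.7, 0.75, 0.72, 0.71, 0.73, 0.74, 0.6])
print(train_with_early_stopping(lambda: None, lambda: next(simulated)))  # 3
```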

As described in Section 4, any time series window with a reconstruction error of at least $\alpha$ is considered an anomaly. We selected the threshold manually by examining plots of the detected anomalies and gradually decreased its value such that no false positive was detected in the holdout set. As the holdout set, we used the test set from the same beehive the autoencoder was trained on (see Figure 3).
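If the holdout windows carried normal/anomalous labels, the end point of this manual procedure (the lowest $\alpha$ that still produces no false positive) could be computed directly. This sketch assumes such per-window labels exist (the paper labels whole days) and adds a small margin `eps` because the rule uses $\geq$; the error values are toy numbers, not measurements, and the function names are hypothetical.

```python
from typing import List


def lowest_safe_threshold(errors: List[float], is_normal: List[bool],
                          eps: float = 1e-6) -> float:
    """Smallest alpha (up to eps) for which no normal window satisfies L >= alpha."""
    normal_errors = [e for e, normal in zip(errors, is_normal) if normal]
    if not normal_errors:
        raise ValueError("need at least one window labeled normal")
    return max(normal_errors) + eps


def recall_at(alpha: float, errors: List[float], is_normal: List[bool]) -> float:
    """Fraction of anomalous windows whose error reaches alpha."""
    anomalous = [e for e, normal in zip(errors, is_normal) if not normal]
    if not anomalous:
        return 0.0
    return sum(e >= alpha for e in anomalous) / len(anomalous)


# Toy reconstruction errors on a holdout set (not measured values).
errors = [0.02, 0.05, 0.04, 0.30, 0.03, 0.60]
is_normal = [True, True, True, False, False, False]
alpha = lowest_safe_threshold(errors, is_normal)
# The anomalous window with error 0.03 stays below alpha and is missed.
print(alpha, recall_at(alpha, errors, is_normal))
```

The example shows the trade-off discussed later: requiring zero false positives can leave weak anomalies undetected.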

Predictions. The rule-based algorithm described in (Zacepins et al., 2016) (referred to as RBA) was applied to all subsets, i.e. to the training and test data from the colonies in Bad Schwartau, Würzburg and Markt Indersdorf, as well as to the data from Jelgava itself. With this method, we found no swarming events, neither false nor true positives, in any training set, confirming our manual selection of training data. Where possible, we tested it with several temperature sensors.

We trained the AE on both HOBOS hives independently and used their respective holdout sets to set the anomaly threshold. We then used this threshold and the trained model as an inference model to predict anomalies in all other anomaly sets, e.g. the model trained on Bad Schwartau was used to predict anomalies in Würzburg, Jelgava and Markt Indersdorf. Jelgava and Markt Indersdorf were not used for training, since the former only contains anomalous data and the latter was installed only recently.

6 RESULTS

Table 1 lists all known or found swarming events using the temperature sensor $T_6$ or $T_m$. It only lists true positive swarming events, and false positives for comparison. Swarms detected by apiarists on site are marked with *. Figure 4a displays sensor traces of a typical swarming event. All other swarming events were found by a combination of RBA and our approach: we ran RBA and examined the respective sensor readings to verify a swarming event. Then we applied our AE model to the data and verified that it also found all events detected by RBA. Additionally, we used our approach to find other anomalies or missed swarms.

Table 1: Detected anomalies. The first column shows the name of the test (anomaly) set; (S) signifies that the set contains swarms, while (O) stands for other anomalies. The next column displays the date of the event and, where suitable, a reference to subfigures in Figures 4, 6 and 7. The last two columns indicate whether RBA or our method (AE) detected the anomaly (X: detected, -: not detected). Predictions on HOBOS hives are based on sensor $T_6$, and on $T_m$ for we4bee. We used the model trained on Bad Schwartau to predict the swarms in every beehive except Bad Schwartau itself.

Dataset               Timestamp          Fig.  RBA  AE
Bad Schwartau (S)     2016-05-11 11:05   4a    X    X
                      2016-05-22 07:30         X    X
                      2017-06-06 15:02         X    X
                      2019-05-13 09:30*        X    X
                      2019-05-21 09:15*        X    X
                      2019-05-25 12:00*        X    X
Bad Schwartau (O)     2016-08-03 17:24         X    X
Würzburg (S)          2019-05-01 09:15   6c    -    X
                      2019-05-10 11:15   6b    X    X
Würzburg (O)          2019-04-17 16:22   6a    X    X
Jelgava (S)           2015-05-06 18:02*        X    X
                      2016-06-02 13:48*        X    X
                      2016-05-30 10:03*        X    X
                      2016-06-16 15:50*        X    X
                      2016-06-01 13:20*        X    X
                      2016-06-03 09:11*        X    X
                      2016-06-13 03:30         X    X
                      2016-06-16 10:52*        X    X
                      2016-06-13 13:32*        X    X
Markt Indersdorf (O)  2019-07-26 08:10         X    X
                      2019-08-31 17:08   7b    X    -

Table 1 shows that we found all true positive swarming events, as can be seen in the groups marked (S). Our AE found one additional swarm in Würzburg with both $T_6$ and $T_8$, which RBA only finds with $T_8$. Furthermore, the groups marked (O) list all anomalies that RBA detected as swarms with $T_6$ (or $T_m$ for we4bee), but which are false positive swarming events.

Other anomalies are manifold and inherently hard to categorize, such as excited bees due to outside influences, and are thus not included in the table. Three exemplary non-behavioral anomalies are depicted in Figure 7.

(a) (Prototypical) Swarm as indicated by $T_6$ and $T_8$, detected by RBA and AE.
(b) Normal behavior of all three sensors.
Figure 4: Exemplary data. (4a) Expected variations of all sensors for a swarm. (4b) Expected variations of all sensors for normal behavior.

7 DISCUSSION

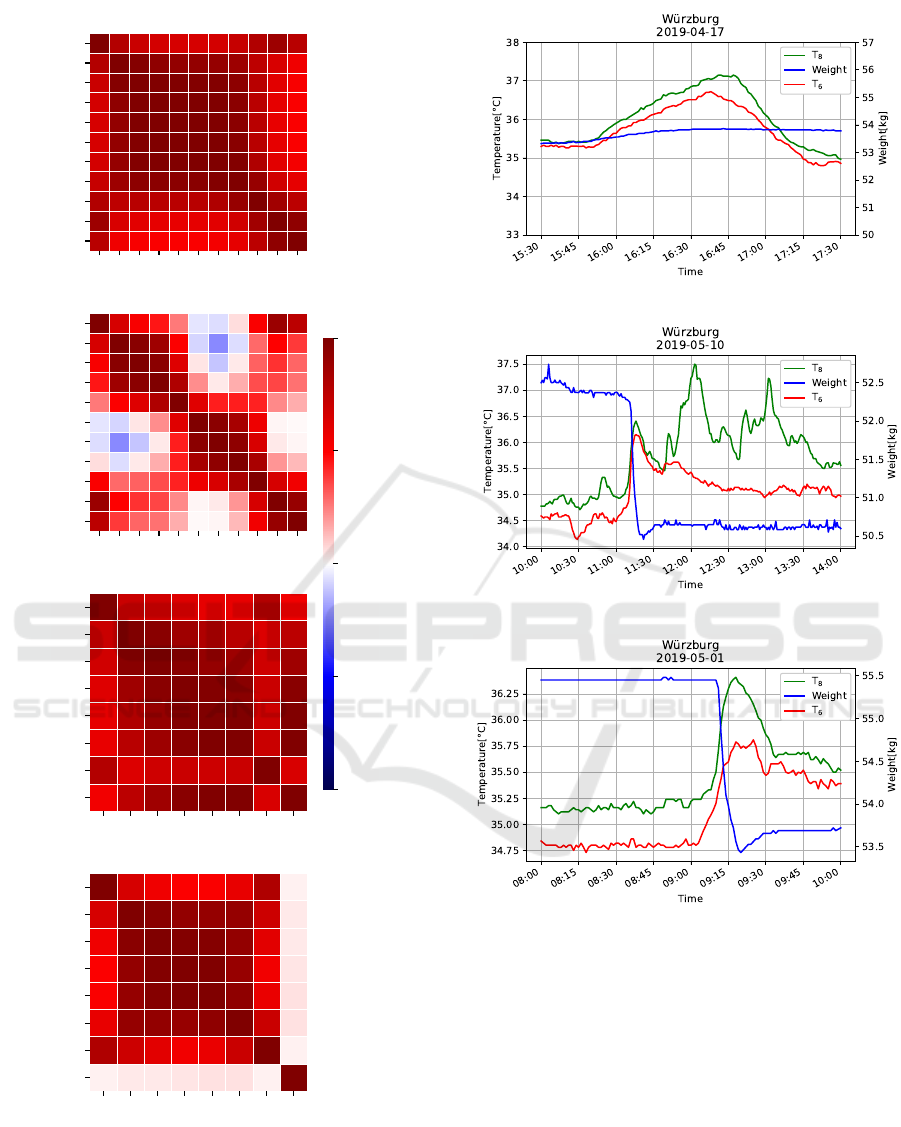

Analysis. Figure 5 displays the inter-sensor correlation using the Pearson correlation coefficient. During normal behavior, adjacent sensors correlate highly and positively. In particular, the sensors $T_4$–$T_{10}$, located centrally inside the hive, show high correlations with each other. Sensors closer to the edges tend to correlate more with the outside temperature sensors ($T_{12}$ and $T_{13}$). Correlations during the anomalies are weaker, except between neighbors. This confirms the finding of (Zhu et al., 2019) that certain sensor placements capture swarms better than others.
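The correlation matrices behind Figure 5 can be computed with a plain Pearson coefficient. A minimal sketch using the standard library only; the sensor series are toy values chosen for illustration, not measured data, and the function names are hypothetical.

```python
import math
from typing import Dict, List


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation coefficient of two equally long series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long series with at least 2 points")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (sx * sy)


def correlation_matrix(sensors: Dict[str, List[float]]) -> Dict[str, Dict[str, float]]:
    """Pairwise Pearson correlations between all named sensor series."""
    return {a: {b: pearson(sensors[a], sensors[b]) for b in sensors} for a in sensors}


# Toy readings (not measured data): T8 follows T6, the outside sensor T13 does not.
toy = {
    "T6": [34.4, 34.5, 34.6, 34.5, 34.7],
    "T8": [34.6, 34.7, 34.8, 34.7, 34.9],
    "T13": [20.0, 19.0, 18.0, 19.0, 17.0],
}
matrix = correlation_matrix(toy)
print(round(matrix["T6"]["T8"], 3), matrix["T6"]["T13"] < 0)  # 1.0 True
```

Computing one matrix on normal days and one on anomalous days gives the (N) and (A) panels of Figure 5.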

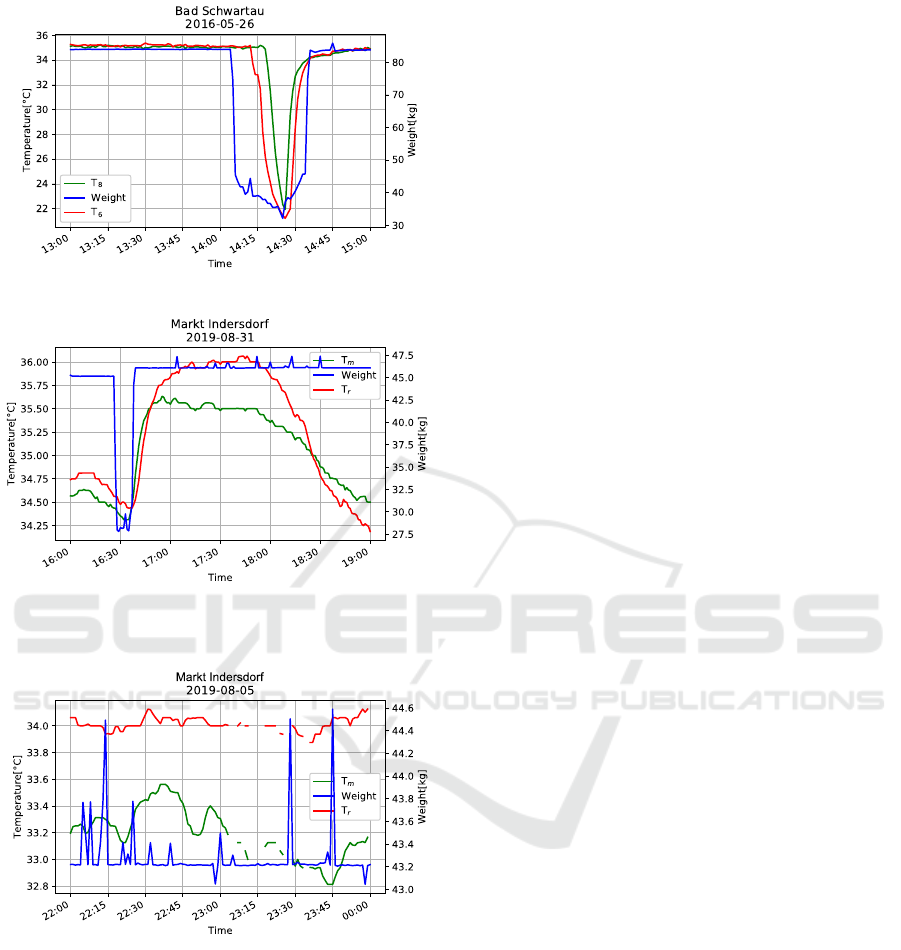

Figure 6 shows swarm-like anomalies, and Figure 7 depicts sensor anomalies and external interferences. All plots contain two temperature sensors (HOBOS: $T_6$, $T_8$; we4bee: $T_r$, $T_m$) as well as the measured weight. As already mentioned in Section 6, Figure 4a shows a prototypical swarm, as indicated by all three sensors and detected by both methods.

Figure 6a shows an anomaly that is falsely detected as a swarm when only the temperature sensors are considered. The weight readings show normal behavior, thus contradicting the event trigger initiated by the temperature values.

Figure 5 panels: Würzburg (N), Würzburg (A), Bad Schwartau (N), Bad Schwartau (A); each shows the pairwise correlations between the available temperature sensors (Würzburg: $T_1$, $T_4$–$T_{13}$; Bad Schwartau: $T_1$, $T_4$–$T_8$, $T_{11}$, $T_{13}$) on a color scale from −1.0 to 1.0.

Figure 5: Sensor correlations. All figures display the Pearson correlation between temperature sensors within a given beehive. (N) stands for the dataset containing normal behavior and (A) for the dataset with anomalous behavior.

(a) Swarm-like anomaly in sensors $T_6$ and $T_8$, but not in the measured weight.
(b) Swarm anomaly indicated by both $T_6$ and $T_8$, with additional swarms indicated in $T_8$. The swarm anomaly is also visible in the weight.
(c) Swarm detected by RBA with $T_8$, but not with $T_6$; AE detects an anomaly with both sensors. The swarm anomaly is also visible in the weight.
Figure 6: Examples of behavioral anomalies from swarm-like events.

On the other hand, Figure 6b shows a colony swarm, as indicated by all three sensors. If we were to use only one temperature sensor ($T_8$), both methods would detect three swarms in this window.

In contrast to RBA, AE detects the swarm displayed in Figure 6c with temperature sensor $T_6$. RBA only finds this swarm with temperature sensor $T_8$.


(a) External interference from an opened beehive. The influx of outside air leads to the temperature drop.
(b) External interference by a possible varroa treatment. The beehive was opened and weight was added, which excited the bees and increased the temperature. In contrast to our AE with $T_m$, RBA detected a swarm with $T_r$ and $T_m$.
(c) Sensor anomaly with missing values in $T_r$ and $T_m$, but not in the measured weights.
Figure 7: Examples of external interferences (7a, 7b) and sensor anomalies (7c) present in the datasets.

Figure 7a shows a window in which the beehive was opened, explaining the steep and quick drop in weight. The temperature sensors trail this pattern with different delays until the hive temperature has cooled to ambient temperature. They quickly return to their initial readings as soon as the hive is closed again.

Figure 7b shows a varroa treatment with formic acid (hence the gain in weight). RBA detects swarms in this window for both temperature sensors, whereas our method only detects an anomaly in $T_r$. This is possibly because the readings of $T_m$ lie within one standard deviation of the training data.

Table 1 shows only swarm-like anomalies, but our method finds many more anomalies. The majority of the anomalies found are temperature readings well below 30 °C. Other monitoring anomalies, such as the one in Figure 7a, are detected as well.

Methodology. As described in Section 5, the definition of normal behavior is vague, and the visual division into normal and anomalous days is error-prone.

We selected the error threshold α introduced in Section 4 manually, such that no normal behavior is detected as an anomaly in the validation set. This approach allows us to control the sensitivity of the AE. It is a trade-off between fine-tuning for swarming detection and suppressing previously unknown anomalies, as seen in Figure 7b. Methods that determine α automatically also require labeled data.

In Table 1, we listed all known swarms and their respective time of occurrence. Due to the windowing technique described in Section 5, we detect swarms at any position in their respective window. That is, anomalies are detected both at the end of the window (predictive estimation) and at the beginning (historical estimation). This prediction quality is highly dependent on the threshold, but it enables apiarists to react in time to an ongoing or imminent swarm.
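With stride-one windows of 60 minutes, an event at minute $t$ appears in every window starting between $t - 59$ and $t$. The hypothetical helper below lists those windows together with the event's position inside each, making the distinction between the predictive view (event at the window's end) and the historical view (event at its start) concrete. It assumes windows over a single contiguous series of `n_minutes` readings.

```python
from typing import List, Tuple


def windows_containing(t: int, n_minutes: int, size: int = 60) -> List[Tuple[int, int]]:
    """(window_start, offset_of_t) for every stride-one window covering minute t.

    offset == size - 1: event at the window's end (earliest, "predictive" view).
    offset == 0: event at the window's start (latest, "historical" view).
    """
    first = max(0, t - size + 1)
    last = min(t, n_minutes - size)
    return [(s, t - s) for s in range(first, last + 1)]


# An event at minute 200 of a day (1440 minutes) lies in 60 windows.
cover = windows_containing(t=200, n_minutes=1440)
print(len(cover), cover[0], cover[-1])  # 60 (141, 59) (200, 0)
```

The first covering window ends exactly at the event, so a sufficiently low threshold can flag a swarm as soon as its first anomalous minute is recorded.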

8 CONCLUSION/FUTURE WORK

In this work we analyzed the possibilities of AEs in a new environment: bee colonies and their habitat. Our model found more swarming events than RBA, a rule-based method specifically designed for swarming detection. Additionally, AEs detected not only swarming events but also other anomalies. There are, however, several aspects with potential for improvement:

Multivariate Anomaly Detection. The HOBOS and we4bee datasets enable us to use multivariate time series instead of the presented univariate temperature time series. This would allow us to refine the predictions even further, as not only anomalies within sensors but also anomalies between sensors become detectable, i.e. inter- and intra-sensor anomalies of any type. This could also reduce the overall error if only a subset of sensors shows abnormalities (e.g. in Figure 6b).

Method Tweaking. Instead of simply using the reconstruction error, we can adapt the loss to make different types of anomalies distinguishable. For example, we could integrate knowledge of temperature or weight patterns during swarming.


Another possibility to enhance anomaly detection, especially swarming detection, is to include a second training process that introduces α as a trainable parameter. This requires a labeled dataset and is therefore subject to future analysis.

In future work we will experiment with other types of networks, e.g. generative models such as generative adversarial networks or variational autoencoders. This has two key advantages: A) they allow anomalies to be contained in the training set, and B) classification is based on probability rather than reconstruction error (An and Cho, 2015). The parameter α would then be more interpretable.

Hibernation Period. We excluded the months October through March in every dataset (cf. Section 3). Detecting anomalies during this hibernation time is subject to future work, as the assumption of a nearly constant temperature within the colony (34.5 °C) does not hold. Especially in Bad Schwartau, sea wind is an environmental influence that causes very large deviations from the mentioned normal behavior, which also increases the chances of sensor anomalies. Additionally, internal temperature sensors start to mimic the patterns of the outside sensors.

Dataset Generation. Because it is data-driven, our method can be improved continuously by integrating the information collected in we4bee. This project comprises a broad spatial distribution of apiaries, enabling us to collect a large amount of data quickly. Participating apiarists can further improve our model by labeling the events presented to them. Furthermore, we can use our model as an alert system to warn beekeepers predictively about ongoing anomalies, and their feedback can again improve our predictions.

ACKNOWLEDGEMENTS

This research was conducted in the we4bee project sponsored by the Audi Environmental Foundation.

REFERENCES

An, J. and Cho, S. (2015). Variational autoencoder based anomaly detection using reconstruction probability. Special Lecture on IE, 2(1).

Bergstra, J. and Bengio, Y. (2012). Random search for hyper-parameter optimization. JMLR, 13:281–305.

Chalapathy, R. and Chawla, S. (2019). Deep learning for anomaly detection: A survey. CoRR, abs/1901.03407.

Ergen, T., Mirza, A. H., and Kozat, S. S. (2017). Unsupervised and semi-supervised anomaly detection with LSTM neural networks. arXiv preprint arXiv:1710.09207.

Fell, R. D., Ambrose, J. T., Burgett, D. M., De Jong, D., Morse, R. A., and Seeley, T. D. (1977). The seasonal cycle of swarming in honeybees. Journal of Apicultural Research, 16(4):170–173.

Ferrari, S., Silva, M., Guarino, M., and Berckmans, D. (2008). Monitoring of swarming sounds in bee hives for early detection of the swarming period. Computers and Electronics in Agriculture, 64(1):72–77.

Filonov, P., Lavrentyev, A., and Vorontsov, A. (2016). Multivariate industrial time series with cyber-attack simulation: Fault detection using an LSTM-based predictive data model. NIPS Time Series Workshop 2016.

Goodfellow, I., Bengio, Y., and Courville, A. (2016). Deep Learning. MIT Press.

Kingma, D. P. and Ba, J. (2014). Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

Kridi, D. S., Carvalho, C. G. N. d., and Gomes, D. G. (2014). A predictive algorithm for mitigate swarming bees through proactive monitoring via wireless sensor networks. In Proceedings of the 11th ACM Symposium on PE-WASUN, pages 41–47. ACM.

Malhotra, P., Tv, V., Ramakrishnan, A., Anand, G., Vig, L., Agarwal, P., and Shroff, G. (2016). Multi-sensor prognostics using an unsupervised health index based on LSTM encoder-decoder. 1st SIGKDD Workshop on ML for PHM.

Navajas, M., Migeon, A., Alaux, C., Martin-Magniette, M.-L., Robinson, G., Evans, J. D., Cros-Arteil, S., Crauser, D., and Le Conte, Y. (2008). Differential gene expression of the honey bee Apis mellifera associated with Varroa destructor infection. BMC Genomics, 9(1):301.

Sakurada, M. and Yairi, T. (2014). Anomaly detection using autoencoders with nonlinear dimensionality reduction. In Proceedings of the MLSDA 2014 2nd Workshop on Machine Learning for Sensory Data Analysis, MLSDA'14, pages 4:4–4:11. ACM.

Shipmon, D. T., Gurevitch, J. M., Piselli, P. M., and Edwards, S. T. (2017). Time series anomaly detection; detection of anomalous drops with limited features and sparse examples in noisy highly periodic data. arXiv preprint arXiv:1708.03665.

Winston, M. (1980). Swarming, afterswarming, and reproductive rate of unmanaged honeybee colonies (Apis mellifera). Insectes Sociaux, 27(4):391–398.

Zacepins, A., Brusbardis, V., Meitalovs, J., and Stalidzans, E. (2015). Challenges in the development of precision beekeeping. Biosystems Engineering, 130:60–71.

Zacepins, A., Kviesis, A., Stalidzans, E., Liepniece, M., and Meitalovs, J. (2016). Remote detection of the swarming of honey bee colonies by single-point temperature monitoring. Biosystems Engineering, 148:76–80.

Zhou, C. and Paffenroth, R. C. (2017). Anomaly detection with robust deep autoencoders. In Proceedings of the 23rd ACM SIGKDD, pages 665–674. ACM.

Zhu, X., Wen, X., Zhou, S., Xu, X., Zhou, L., and Zhou, B. (2019). The temperature increase at one position in the colony can predict honey bee swarming (Apis cerana). Journal of Apicultural Research, 58(4):489–491.
