DaDiDroid: An Obfuscation Resilient Tool for Detecting Android Malware via Weighted Directed Call Graph Modelling

Muhammad Ikram (1,3), Pierrick Beaume (2) and Mohamed Ali Kaafar (1,2)

(1) Macquarie University, Australia

(2) Data61 CSIRO, Australia

(3) University of Michigan, U.S.A.

Keywords:

Malware, Obfuscation, Machine Learning, Android, Mobile Apps.

Abstract:

With the number of new mobile malware instances increasing by over 50% annually since 2012 (McAfee,

2017), malware embedding in mobile apps is arguably one of the most serious security issues mobile platforms

are exposed to. While obfuscation techniques are successfully used to protect the intellectual property of

apps’ developers, they are unfortunately also often used by cybercriminals to hide malicious content inside

mobile apps and to deceive malware detection tools. As a consequence, most mobile malware detection

approaches fail to differentiate between benign and obfuscated malicious apps. We examine the graph

features of mobile apps code by building weighted directed graphs of the API calls, and verify that malicious

apps often share structural similarities that can be used to differentiate them from benign apps, even under

a heavily “polluted” training set where a large majority of the apps are obfuscated. We present DaDiDroid,

an Android malware detection tool that leverages features of the weighted directed graphs of API calls

to detect the presence of malware code in (obfuscated) Android apps. We show that DaDiDroid significantly

outperforms MaMaDroid (Mariconti et al., 2017), a recently proposed malware detection tool that has been

proven very efficient in detecting malware in a clean non-obfuscated environment. We evaluate DaDiDroid’s

accuracy and robustness against several evasion techniques using various datasets for a total of 43,262 benign

and 20,431 malware apps. We show that DaDiDroid correctly labels up to 96% of Android malware samples,

while achieving 91% accuracy with an exclusive use of a training set of obfuscated apps.

1 INTRODUCTION

In recent years, Android OS and mobile applica-

tions (apps in short) have experienced an exponen-

tial growth with over 3 Million apps on Google

Play (Google, 2019a) in 2017. This popularity nat-

urally attracted malware developers leading to a con-

tinuous 50% yearly increase of the number of mal-

ware apps for over five years (McAfee, 2017). Sev-

eral research studies (e.g., (Ikram et al., 2016; Horny-

ack et al., 2011; Enck et al., 2014)) focused on dy-

namic runtime-network analysis to detect potential

malicious behaviour of Android apps. Another line

of research (e.g., (Mann and Starostin, 2012; Arp

et al., 2014; Chen et al., 2016; Aafer et al., 2013))

aimed to reduce the resources required to perform dy-

namic analysis by adopting static code analysis ap-

proaches. DroidAPIMiner (Aafer et al., 2013), for in-

stance, uses the frequency of API calls to classify mal-

ware apps. Recently, MaMaDroid (Mariconti et al.,

2017) leverages the similarity in the code of apps

as extracted from the transition probabilities between

different API calls to build an efficient Android mal-

ware detection system. However, the increased code

complexity and the use of obfuscation techniques as

a way to protect apps from code theft, reverse engi-

neering and code inspection, pose several challenges

to static analysis of malware apps.

In this paper, we set the objective of building

an effective mobile malware detection tool that is

resistant to code obfuscation techniques. We pro-

pose DaDiDroid, a tool that runs static code analysis,

leveraging apps’ API call graphs to characterize the

functional behaviour of apps and filter out malicious

behaviour, even in the presence of obfuscated code.

Specifically, DaDiDroid builds, for each Android app,

the API families calls graph (e.g., API packages such

as android.util.log are abstracted to android) and ex-

tracts the weighted directed graphs features (we use

23 unique graph features) to model the Android app

Ikram, M., Beaume, P. and Kaafar, M.

DaDiDroid: An Obfuscation Resilient Tool for Detecting Android Malware via Weighted Directed Call Graph Modelling.

DOI: 10.5220/0007834602110219

In Proceedings of the 16th International Joint Conference on e-Business and Telecommunications (ICETE 2019), pages 211-219

ISBN: 978-989-758-378-0

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


behaviour. Using the graph features, we then rely on

a classifier to establish whether or not the directed

weighted graph belongs to a benign app.

This paper makes the following contributions:

Open Source Malware Detection Tool: We present our open source¹ malware detection tool, DaDiDroid, that leverages features from the weighted directed graph representation of apps’ API calls (§ 3).

Efficacy of Graph Features: We empirically

evaluate our proposed system and demonstrate the ef-

ficacy of the graph features as peculiar features dis-

tinguishing between benign and malicious apps. We

use a combination of six publicly available datasets

to evaluate the effectiveness of DaDiDroid and deter-

mine that, in a clean environment (i.e., no obfuscation

used by apps) it detects malware apps with an accu-

racy of 96.5%. We present the distributions of some

selected graph features to show how they could be

used as discriminating features of (obfuscated) mal-

ware and benign apps.

Comparison against MaMaDroid: We empir-

ically show (cf. § 5 and § 5.3) that the accuracy of

MaMaDroid, a recent static analysis mobile malware

detection tool, is heavily dependent on the balance of the training dataset, as captured by the ratio of benign to malicious apps. In addition, we show that MaMaDroid is vulnerable to obfuscation techniques, with a false negative rate as high as 86.3%.

DaDiDroid’s Robustness against Obfuscation:

We show that DaDiDroid is robust against several

code obfuscation techniques. With no obfuscated

apps present in the training set, DaDiDroid accurately classifies 91.2% of all obfuscated apps², a success rate that is on average 17.7% higher than that of MaMaDroid.

2 OVERVIEW OF OBFUSCATION

TECHNIQUES

An Android app package (i.e., APK) comes as a compressed file containing all of the app’s modules and content. Generally, the compressed APK includes four directories (res, assets, lib, and META-INF) and three files (AndroidManifest.xml, classes.dex, and resources.arsc). Among these components, classes.dex contains the Java classes of an app, organized in a way the Dalvik virtual machine can interpret and execute.

¹ For further research, we plan to release DaDiDroid to the research community.

² Android apps that use several code obfuscation techniques, further reviewed in § 2 and discussed in § 5.3.

In general, obfuscation attempts to obscure a program and make its source code³ more difficult for humans to understand, to evade malware detection tools, or both. Developers can deliberately obfuscate code (i.e., Java classes in classes.dex) to protect an app’s (malicious) logic or purpose, prevent tampering, or deter reverse engineering efforts. Common obfuscation techniques used by apps include:

Call Indirection: This technique manipulates an app’s call graph by routing method calls through intermediate methods. In essence, a method invocation is moved into a new method which, in turn, is invoked in place of the original method.
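To make the effect on the call graph concrete, the following minimal sketch rewrites a weighted edge list so that every call to a target method goes through a new wrapper. The function name, the method names, and the choice of a single call site inside the wrapper are illustrative assumptions, not part of any obfuscation tool.

```python
from collections import Counter

def apply_call_indirection(edges, target, wrapper):
    """Reroute every call to `target` through a new `wrapper` method.

    `edges` maps (caller, callee) pairs to static call counts.
    """
    rewritten = Counter()
    for (caller, callee), count in edges.items():
        if callee == target:
            # The original call site now invokes the wrapper instead.
            rewritten[(caller, wrapper)] += count
        else:
            rewritten[(caller, callee)] += count
    # Assumption: the wrapper contains exactly one call site for the target.
    rewritten[(wrapper, target)] += 1
    return rewritten

# Hypothetical method names, for illustration only.
calls = Counter({("A.run", "B.send"): 3, ("C.init", "B.send"): 1})
obfuscated = apply_call_indirection(calls, "B.send", "X.proxy")
```

After the rewrite, no original caller points at the target directly, which is why detectors that rely on exact caller-to-callee transitions can be affected.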

String Encoding and Encryption: Strings are very commonly used data structures in software development. In an obfuscated app, strings can be encrypted to prevent information leakage. Using cryptographic functions, the original plain texts are replaced by random-looking strings and restored at runtime. As a result, string encryption can effectively hinder static scanning for hard-coded strings.

Packing: With this obfuscation technique, an APK file is composed of an original APK and a wrapper APK. Generally, the wrapper APK loads the original APK into memory and executes it on the Android device. A wrapper APK may employ encryption to hide (or limit access to) the original APK, which is decrypted at runtime and thus often evades static code analysis.

Identifier Renaming: In software development, code identifiers usually have meaningful names for readability, though developers may follow different naming rules. However, these meaningful names also help reverse engineers understand the code logic and locate target functions rapidly. Therefore, to reduce potential information leakage, an identifier’s name can be replaced by a meaningless string. Generally, with this obfuscation technique, a given API’s name such as myApp.net.myPackage is transformed into words of at most three letters, such as a.bc.def.

3 THE DaDiDroid SYSTEM

Figure 1 depicts an overview of the four steps of DaDiDroid’s malware detection system. By leveraging source code analysis and graph theory, we extract the API call graphs of Android apps (step 1) and then

model the relationships among APIs (step 2). We use

various graph features (step 3) and machine learning

³ Some obfuscation techniques may also attempt to transform machine code; however, in this work we consider source code obfuscation techniques.

SECRYPT 2019 - 16th International Conference on Security and Cryptography


algorithms (step 4) to classify apps. We provide de-

tails of these steps in the following.

APIs Call Graph Extraction: We aim to model the behaviour of apps (benign and malware) from the API calls in the source code. To this end, the first step in DaDiDroid is to extract the apps’ call⁴ graphs by performing static analysis on the apps’ APKs (Android app packages). We use Soot (Vallée-Rai et al., 1999), a Java optimization and analysis framework, to extract API call graphs and then instrument FlowDroid (v2.5) (Arzt et al., 2014) to extract dependencies among the APIs.

Our intuition is that malware may use calls for various operations in a graph structure that differs from that of benign apps (further elaborated in Section 5.1). To ensure that benign graph structures are sufficiently different from malicious ones, we abstract API call packages to their respective families.

The API call abstraction covers all possible calls among the APIs present in the code. That is, for each app in our dataset (see Table 1), we determine all possible calls amongst the APIs. For instance, as depicted in Figure 1 (step 1), we find that the API call com.fac.ex:Execute() invokes the functions android.util.log:d() and java.lang.Throwable:getMessage(). For the API family abstraction, we first derive the family names of the APIs and then construct the family call graphs. For example, from the android.net.http and java.sql APIs we obtain the API family names android and java, respectively, and build the graph of calls based solely on the family names of the APIs.

The abstraction provides resilience to API changes in the Android framework, as families are added and deprecated less frequently than single API calls. At the same time, it does not abstract away the behavior of an app. As families include classes as well as interfaces used to perform similar operations on sets of similar objects, we can model the types of operations from the family names independently of modifications, if any, in the underlying classes and interfaces. For instance, we know that the java.sql package is used for database input, output, and update operations even though the package provides different classes and interfaces for such operations.

Developers may extend the functionality of their apps by importing self-defined APIs. As mentioned earlier, developers also often resort to obfuscating the

⁴ An Android app often requires user interaction to switch back to the app’s main GUI. In programming terms, this switching among an app’s GUIs is termed a method callback or simply a callback. For ease of presentation, we use the generic term “call” to also denote a callback.

APIs in the source code. To capture self-defined and obfuscated APIs during our call graph extraction process, we rely on previous work (Mariconti et al., 2017) and introduce two types of family names in our API call abstraction: self-defined and obfuscated. We rely on previous works (Schulz, 2012; Mariconti et al., 2017) to further determine the difference between these two types of APIs. In essence, we consider an API obfuscated if either at least 50% of its functions or methods have names of at most 3 characters (see § 5.3 for further details) or if we cannot tell what its class implements, extends, or inherits, due to identifier mangling (Schulz, 2012). When operating at the API abstraction level, we abstract an API call to its package name using the list of Android packages, which as of Android OS v8.0 (Google, 2019b) includes 243 Android and 101 Google APIs.
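The name-length criterion can be sketched as a short predicate. This is only an illustration of the first condition (at least 50% of method names with at most 3 characters); the function name and its parameters are our own assumptions, and the second condition (an unresolvable class hierarchy due to identifier mangling) is not modelled here.

```python
def looks_obfuscated(method_names, max_len=3, threshold=0.5):
    """Return True if at least `threshold` of the names have <= `max_len` characters."""
    if not method_names:
        # Assumption: an API with no known methods is not flagged.
        return False
    short = sum(1 for name in method_names if len(name) <= max_len)
    return short / len(method_names) >= threshold

# Hypothetical method lists, for illustration only.
minified = looks_obfuscated(["a", "bc", "getMessage", "def"])   # 3 of 4 names are short
readable = looks_obfuscated(["execute", "getMessage", "d"])     # 1 of 3 names is short
```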

DaDiDroid detects attempts by malware developers to disguise their self-defined APIs, such as com.google.MyMalware, under legitimate API names such as com.google (or family name com). The derived lists of Android and Google APIs and classes ensure the detection of any attempt by malware developers to evade DaDiDroid by naming their self-defined APIs after legitimate Android and Google APIs: without the whitelist of API classes, com.google.MyMalware would have been abstracted as com.google.

Overall, for Android API Level 26 (Google, 2019b), we obtain 387 distinct APIs and 12 different families (Android, dalvik, java, javax, junit, apache, json, com, xml, google, self-defined, and obfuscated). We evaluated DaDiDroid in both family and API abstraction modes and observed that its results in family abstraction mode were similar to those in API abstraction mode. To ease presentation, in § 5, we present our analysis of DaDiDroid operating in family abstraction mode.
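A minimal sketch of the family abstraction with a whitelist lookup is given below. The tiny `PACKAGE_TO_FAMILY` table is a hypothetical excerpt that stands in for the full lists of Android and Google packages, and the `obfuscated` flag is assumed to come from a separate check; neither reflects DaDiDroid's actual data structures. Unlike the class-level whitelist described above, this sketch matches on package prefixes only.

```python
# Hypothetical excerpt of a package whitelist; the real lists are much larger.
PACKAGE_TO_FAMILY = {
    "android.util": "android",
    "android.net.http": "android",
    "java.lang": "java",
    "java.sql": "java",
    "com.google.android.gms": "google",
}

def family_of(class_name, obfuscated=False):
    """Map a fully qualified class name to an API family name."""
    parts = class_name.split(".")
    # Longest-prefix match against the whitelist of known packages.
    for end in range(len(parts), 0, -1):
        prefix = ".".join(parts[:end])
        if prefix in PACKAGE_TO_FAMILY:
            return PACKAGE_TO_FAMILY[prefix]
    # Anything outside the whitelist is self-defined or obfuscated.
    return "obfuscated" if obfuscated else "self-defined"

known = family_of("java.sql.Connection")
disguised = family_of("com.google.MyMalware")
mangled = family_of("a.cb.r", obfuscated=True)
```

Because com.google.MyMalware matches no whitelisted package, it falls back to self-defined rather than inheriting a legitimate family name.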

Graph Generation: Next, we model the relationships among API calls (resp. API families) in the Android apps as a weighted, directed graph G(V, E, W). As DaDiDroid uses static analysis, the graph obtained from Soot and FlowDroid represents the flow of functions that are potentially called by the app. Each node in V corresponds to a unique API call (resp. API family name) in the Android app. A directed link (u, v) ∈ E is present in the graph if and only if an API, u ∈ V, calls a method in another API, v ∈ V. A weight w ∈ W represents the number of times u calls a method in v. Note that the links (u, v) and (v, u) may both exist, each with its own weight, if the app’s APIs call each other’s methods reciprocally.

[Figure 1 (diagram): four-step pipeline consisting of Call Graph Extraction, Graph Generation, Features Extraction, and Classification. The example call graph contains com.fac.ex:execute(), android.util.log:d(), a.cb.r:abcd(), and java.lang.Throwable:getMessage(); the example family graph contains the nodes self-defined, obfuscated, java, XML, and android, connected by weighted edges.]

Figure 1: Overview of DaDiDroid: The dashed-arrows represent calls to other functions that are not shown in the figure.

For each Android app, we represent the relationships among API calls (resp. API families) as an adjacency matrix containing the number of occurrences of possible transitions from $v_i$ to $v_j$.
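The graph and its adjacency matrix can be sketched in a few lines. The helper name `build_call_graph`, the alphabetical node ordering, and the sample calls are assumptions made for illustration; in DaDiDroid the call pairs come from Soot and FlowDroid.

```python
from collections import Counter

def build_call_graph(calls):
    """Build (nodes, adjacency matrix) from (caller, callee) family pairs.

    matrix[i][j] holds the number of calls from nodes[i] to nodes[j].
    """
    weights = Counter(calls)                      # (u, v) -> number of calls
    nodes = sorted({n for edge in weights for n in edge})
    index = {name: i for i, name in enumerate(nodes)}
    matrix = [[0] * len(nodes) for _ in nodes]
    for (u, v), w in weights.items():
        matrix[index[u]][index[v]] = w
    return nodes, matrix

# Hypothetical family-level calls extracted from one app.
calls = [
    ("self-defined", "android"),
    ("self-defined", "android"),
    ("self-defined", "java"),
    ("java", "android"),
]
nodes, matrix = build_call_graph(calls)
```

Here the row for self-defined records two calls into android and one into java, while the android row stays empty because android makes no outgoing calls in this sample.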

Features Extraction: Next, we leverage graph metrics (or features, listed in Table 4) to quantify the relationships among API calls. As some graph metrics, such as the clustering coefficient, are defined only for undirected graphs, we first convert our directed graphs into weighted, undirected graphs and then extract the corresponding graph metrics.
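The conversion and one of the undirected metrics can be sketched as follows. Summing the two directed weights and dropping self-loops are assumptions of this sketch, since the text does not state how weights are merged; the clustering coefficient shown is the standard unweighted variant.

```python
def to_undirected(matrix):
    """Symmetrize a directed weight matrix; self-loops are dropped (assumption)."""
    n = len(matrix)
    und = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                # Assumption: the undirected weight is the sum of both directions.
                und[i][j] = matrix[i][j] + matrix[j][i]
    return und

def average_clustering(und):
    """Average unweighted local clustering coefficient over all nodes."""
    n = len(und)
    total = 0.0
    for i in range(n):
        nbrs = [j for j in range(n) if und[i][j] > 0]
        k = len(nbrs)
        if k < 2:
            continue  # nodes with fewer than two neighbours contribute 0
        links = sum(
            1
            for a in range(k)
            for b in range(a + 1, k)
            if und[nbrs[a]][nbrs[b]] > 0
        )
        total += 2.0 * links / (k * (k - 1))
    return total / n if n else 0.0

# Hypothetical directed family graph with three mutually connected nodes.
directed = [[0, 0, 0], [1, 0, 0], [2, 1, 0]]
undirected = to_undirected(directed)
clustering = average_clustering(undirected)
```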

Classification: The last step in DaDiDroid is to perform classification, i.e., labelling apps as either benign or malware. To this end, we use a supervised two-class Support Vector Machine (SVM) classifier (Müller et al., 2001), k-Nearest Neighbours (k-NN) (Andoni and Indyk, 2008), and Random Forest (Breiman, 2001), all implemented using Weka (Hall et al., 2009), an open source machine learning library written in Java.

We form two classes by labelling malware and benign apps’ graph features (cf. Table 4) as positives and negatives, respectively. We use 80% of the instances for training and 20% for testing. For the two-class SVM, appropriate values for the parameters γ (radial basis function kernel parameter (Schölkopf et al., 2001)) and υ (SVM parameter) are set empirically by performing a greedy grid search on the ranges $2^{-10} \leq \gamma \leq 2^{0}$ and $2^{-10} \leq \upsilon \leq 2^{0}$, respectively, on each training dataset. Likewise, for the other classifiers, we perform empirical tests to set their parameters.
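The parameter search can be illustrated with a simple exhaustive variant over powers of two. This sketch is not the greedy procedure used with Weka: the helper names are hypothetical, and `toy_score` is a stand-in for the validation accuracy of an SVM trained with the given parameters.

```python
import itertools
import math

def log2_grid(low_exp=-10, high_exp=0):
    """Candidate values 2^low_exp, ..., 2^high_exp."""
    return [2.0 ** e for e in range(low_exp, high_exp + 1)]

def grid_search(score, gammas, nus):
    """Return the (gamma, nu) pair with the highest score."""
    return max(itertools.product(gammas, nus), key=lambda pair: score(*pair))

def toy_score(gamma, nu):
    # Placeholder objective peaking at gamma = 2^-3 and nu = 2^-1 (assumption).
    return -(abs(math.log2(gamma) + 3) + abs(math.log2(nu) + 1))

grid = log2_grid()
best_gamma, best_nu = grid_search(toy_score, grid, grid)
```

In practice the score function would train and validate a classifier on each training dataset, which makes the 11 × 11 grid the dominant cost of this step.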

4 DATASET IN USE

Dataset: We use previously published datasets which include 63,693 Android apps (APK files) consisting of 43,262 benign and 20,431 malware samples. Table 1 summarises the datasets in use. We aim to verify that DaDiDroid is robust to a variety of Android malware samples as well as to heterogeneous APIs. We therefore consider a dataset that includes a mix of obfuscated and non-obfuscated apps as well as newer and (relatively) older apps, with publication dates ranging from August 2010 to June 2018. Our dataset consists of samples from the following data sources:

Marvin (Lindorfer et al., 2015). This source consists of a total of 38,740 apps, comprising 28,181 benign apps and 10,559 malware samples.

Drebin (Arp et al., 2014). It consists of 5,525 mal-

ware samples collected from August 2010 to October

2012.

Old Benign. This dataset includes a sample of 5,909 benign apps collected by PlayDrone (Viennot, 2014) between April and November 2013 and published on the Internet Archive (Archive, 2019).

New Benign. We use a customised crawler and an unofficial Google Play API (Girault, 2019) to download a sample of 1,788 free apps, belonging to 29 different categories, from Google Play. We use VirusTotal to determine whether or not the apps embed malware in their source code.

ObData I. We obtained this dataset from Garcia et al. (Garcia et al., 2015); it consists of obfuscated apps employing various code transformation (i.e., obfuscation) techniques such as Encoding and Call Indirection (explained later in § 5.3).

ObData II. App developers often minify apps’ source code by mapping API names such as myApp.net.myPackage to names with at most three letters, such as a.b.c or a.bc.def (cf. § 5.3). By parsing the API names that correspond to a given Android app in the Marvin dataset, we construct a sample of 7,975 apps that employ source code minification.

Table 1: Overview of the datasets used in our analysis. We obtain two samples of obfuscated apps: we acquire “ObData I” from the authors of (Garcia et al., 2015) and use the Marvin dataset for obfuscated apps (cf. Section 5.3) to construct “ObData II”.

| Dataset | # Apps | # Benign | # Malicious | Date Range |
|---|---|---|---|---|
| Marvin (Lindorfer et al., 2015) | 38,740 | 28,181 | 10,559 | 06/12–05/14 |
| Drebin (Arp et al., 2014) | 5,525 | - | 5,525 | 08/10–10/12 |
| OldBenign | 5,909 | 5,909 | - | 04/13–11/13 |
| NewBenign | 1,788 | 1,788 | - | 06/17–06/17 |
| ObData I (Garcia et al., 2015) | 883 | - | 883 | 06/12–05/14 |
| ObData II (Lindorfer et al., 2015) | 7,975 | 5,884 | 2,091 | 05/13–03/14 |
| PackedApps (Dong et al., 2018) | 2,873 | 1,500 | 1,373 | 07/16–02/17 |
| Total | 63,693 | 43,262 | 20,431 | 08/10–06/18 |

PackedApps. We obtained this dataset from Dong et al. (Dong et al., 2018); it consists of 1,500 benign and 1,373 malicious packed APKs, i.e., original APKs wrapped in another APK.

Datasets Annotation: To analyze the clas-

sification results of DaDiDroid, we annotate our

datasets considering reports from VirusTotal (Virus-

Total, 2019). VirusTotal is an online service that ag-

gregates the scanning capabilities provided by more

than 68 antivirus (AV) tools, scanning engines and

datasets. It has been widely used in research litera-

ture to detect malicious apps, executables, software

and domains (Ikram et al., 2016). After completing

the scanning process for a given app, VirusTotal gen-

erates a report that indicates which of the participat-

ing AV scanning tools detected any malware activity

in the app under consideration and the corresponding

malware signature (if any). By parsing VirusTotal re-

ports, we extract the number of affiliated AV tools that

identified any malware activity and annotate each app

in our dataset with the corresponding results.

5 EVALUATION OF DaDiDroid

In this section, we present a detailed experimental

evaluation of DaDiDroid.

5.1 The Intuition

We first conduct preliminary experiments in order to

verify our main intuition that graph features are valu-

able to differentiate benign and malicious apps.

To further analyze the importance of our fea-

tures in classifying malware and benign apps, we use

an information-theoretic metric, information gain ra-

tio (Cover and Thomas, 2012). This metric is used to

quantify the differentiation power of features i.e., our

graph metrics or features. In this context, information gain is the mutual information between a given graph metric $X_i$ and the class label $C \in \{\text{Benign}, \text{Malware}\}$. Formally, for a given graph feature $X_i$ and the class label $C$, the information gain ratio of $X_i$ with respect to $C$ is defined as:

$$\mathrm{GainRatio}(X_i, C) = \frac{H(C) - H(C \mid X_i)}{H(X_i)} \qquad (1)$$

where $H(X_i) = -\sum_{i \in N} p(x_i)\log(p(x_i))$ denotes the marginal entropy of the graph feature $X_i$ and $H(C \mid X_i)$ represents the conditional entropy of class label $C$ given graph feature $X_i$. In other words, information gain ratio quantifies the reduction in the uncertainty of the class label $C$ given that we have complete knowledge of feature $X_i$. Figure 2 shows the

information gain ratio of our graph features extracted

from the Marvin dataset and the Drebin dataset (cf.

Table 1). We observe that “algebraic connectivity” and “assortativity” are the most discriminative features between benign and malicious apps. This analysis also reveals that the minimum betweenness centrality and the number of weakly connected components (see Figure 2) are insignificant in classifying malware and benign apps from the Marvin and Drebin datasets.
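Equation (1) can be evaluated directly for a discrete feature, as in the sketch below. The function names and the toy data are assumptions; continuous graph metrics would first need to be binned into discrete values, and the base-2 logarithm is our choice (the ratio itself does not depend on the base).

```python
import math
from collections import Counter

def entropy(values):
    """Shannon entropy (base 2) of a sequence of discrete values."""
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in Counter(values).values())

def gain_ratio(feature, labels):
    """(H(C) - H(C|X)) / H(X) for a discrete feature X and class labels C."""
    n = len(labels)
    h_c = entropy(labels)
    h_c_given_x = 0.0
    for value, count in Counter(feature).items():
        subset = [c for f, c in zip(feature, labels) if f == value]
        h_c_given_x += (count / n) * entropy(subset)
    h_x = entropy(feature)
    # A constant feature carries no information; report 0 instead of dividing by 0.
    return (h_c - h_c_given_x) / h_x if h_x > 0 else 0.0

labels = ["benign", "benign", "malware", "malware"]
informative = gain_ratio(["low", "low", "high", "high"], labels)
uninformative = gain_ratio(["low", "high", "low", "high"], labels)
```

A feature that splits the classes perfectly reaches a gain ratio of 1, while a feature that is independent of the label yields 0.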

Figure 2: Information gain ratio of our graph features for the (a) Marvin dataset and (b) Drebin+OldBenign dataset.

5.2 Effectiveness of DaDiDroid

Using the datasets summarised in Table 1, we perform experiments to analyze the effectiveness of DaDiDroid’s classification on benign and malicious samples. We also study the effect of unbalanced training data on DaDiDroid’s performance. We chose to compare against MaMaDroid, the most recent work in this space, since MaMaDroid also leverages API calls, extracting probabilistic features by building a Markov chain model of transitions between API calls.

To assess the accuracy of the classification, we use the standard F-measure $= 2 \times \frac{\text{precision} \times \text{recall}}{\text{precision} + \text{recall}}$, where $\text{precision} = \frac{TP}{TP + FP}$ and $\text{recall} = \frac{TP}{TP + FN}$. TP means true positives, denoting the number of apps correctly classified as malicious. Likewise, FN (false

negatives) and FP (false positives) indicate the num-

ber of samples mistakenly identified as benign and

malicious, respectively. For all experiments, we per-

form 10-fold cross validation using at least one mali-

cious and one benign dataset from Table 1.
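As a worked example of these definitions, the sketch below computes the three metrics from raw counts. The counts are hypothetical and chosen only to make the arithmetic easy to follow; they are not taken from our experiments.

```python
def classification_metrics(tp, fp, fn):
    """Return (precision, recall, F-measure) from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f_measure = 2 * precision * recall / (precision + recall)
    return precision, recall, f_measure

# Hypothetical counts: 90 malware apps caught, 10 benign apps flagged, 30 malware apps missed.
precision, recall, f_measure = classification_metrics(tp=90, fp=10, fn=30)
```

With these counts, precision is 90/100 and recall is 90/120, so the F-measure falls between the two, closer to the lower value.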

Effectiveness of Classifiers: In order to gener-

alize the classification of DaDiDroid, we use three

machine learning classification algorithms: two-class

SVM, k-NN, and Random Forest.

Table 2 summarizes the performance of the classifiers. Our results show that the Random Forest classifier achieves the highest accuracy, with 90% and 96% in classifying malware and benign apps drawn from the Drebin+OldBenign and the Marvin datasets, respectively. Perhaps due to an over-fitting effect in our dataset, two-class SVM showed


poor results and may require further pruning and de-

tailed analysis of parameters, as well as a selection

of the optimal set of prominent features to improve

the classification performances (Kohavi and Sommer-

field, 1995; Breiman, 2001). We resort to the Random

Forest classifier as our best classifier and use it next

to compare against MaMaDroid and in our analysis

of the effect of unbalanced training sets as well as the

study of the resilience against the obfuscation tech-

niques.

Table 2: Performance of our classifiers in classifying malware and benign apps drawn from the Marvin (Lindorfer et al., 2015) and Drebin+OldBenign (OB) datasets. Here RF means Random Forest, while FM, TP, and FP represent F1-Measure, True Positives rate, and False Positives rate, respectively.

| Dataset | Classifier | TP | FP | Precision | Recall | FM | Accuracy |
|---|---|---|---|---|---|---|---|
| Drebin+OB | RF | 90.3% | 9.7% | 90.3% | 90.3% | 90.3% | 90.1% |
| Drebin+OB | SVM | 70.5% | 29.2% | 71% | 70.5% | 70.4% | 71.4% |
| Drebin+OB | k-NN | 86.8% | 13.2% | 86.8% | 86.8% | 86.8% | 87.3% |
| Marvin | RF | 95.7% | 6.6% | 95.7% | 95.7% | 95.7% | 96.5% |
| Marvin | SVM | 81.8% | 32.4% | 81.1% | 81.8% | 81.1% | 82.7% |
| Marvin | k-NN | 94.1% | 9.6% | 94% | 94.1% | 94.1% | 94.3% |

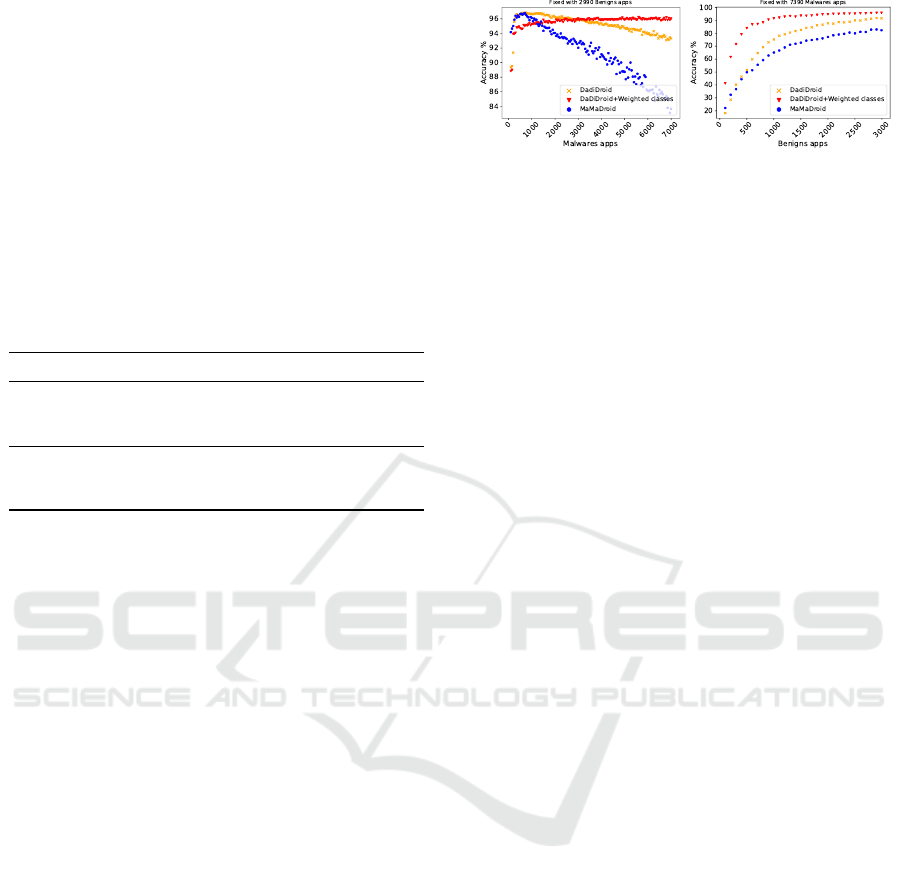

Effectiveness of DaDiDroid with Unbalanced Training Dataset: In order to determine the minimum number of apps required to train DaDiDroid, we analyze several training and testing sets drawn from the Marvin dataset (see Table 1). Figure 3 depicts the effect of an unbalanced dataset on the performance of DaDiDroid (with Random Forest). When training with a fixed number of benign apps and varying sets of malware apps (Figure 3a), we observe that DaDiDroid outperforms MaMaDroid with on average 12% higher accuracy.

Moreover, the results also show that DaDiDroid achieves higher accuracy (96%) with a limited number (i.e., 1,500) of benign apps in the training phase. In contrast, MaMaDroid (Mariconti et al., 2017) is vulnerable to unbalanced training datasets. In Figure 3b, when training with a fixed number of malicious apps and a set of 1,500 benign instances, we observe that DaDiDroid outperforms MaMaDroid with on average 26% higher accuracy.

5.3 Robustness against Obfuscation

Techniques

The main goal of obfuscation is to either secure apps’

source codes or to hide apps’ malware components.

In this section, we evaluate the robustness of our

proposed system against several types of obfuscation

techniques.

Whitespace: To reduce the readability of source code, this (basic) obfuscation technique adds or removes whitespace in an app’s source code. As DaDiDroid leverages API calls (and API family names) only, this obfuscation technique should have no effect on the accuracy of DaDiDroid.

Figure 3: Analyzing the effect of an unbalanced dataset on the performance of DaDiDroid (with Random Forest in the classification phase) and MaMaDroid: in (a) we use 2,990 benign apps and vary the set of malware apps to measure the accuracy, while in (b) we use a fixed number of malware apps (7,390) and vary the set of benign apps.

Call Indirection (CI): This involves the manipulation of apps’ call graphs by routing method calls through intermediate methods. To measure the robustness of DaDiDroid to Call Indirection, we use the Drebin and OldBenign datasets (cf. Table 1) for training our classifiers. We use NewBenign and 210 malware apps employing Call Indirection, drawn from ObData I (Garcia et al., 2015), for testing. Table 3 shows the robustness of DaDiDroid against this type of obfuscation. We observe that 15 (7%) of the apps with Call Indirection obfuscation were classified as benign. In contrast, MaMaDroid (Mariconti et al., 2017) misclassified 63 (30%) of the apps that employ Call Indirection.

String Encoding and Encryption (SEE): With this obfuscation technique, an app’s developer encodes the APIs used by the app into unintelligible ones. To evaluate the robustness of DaDiDroid to String Encoding and Encryption, we use the Drebin and OldBenign datasets (cf. Table 1) for training our classifiers. We use NewBenign and 673 malware apps with this obfuscation technique, drawn from ObData I (Garcia et al., 2015), for testing. Table 3 shows the robustness of DaDiDroid against String Encoding and Encryption obfuscation. We found that 20% of the apps with String Encoding and Encryption obfuscation were classified as benign. In contrast, MaMaDroid (Mariconti et al., 2017) yields 86% false negatives.

Packing: With this obfuscation technique, an app’s developer wraps the original APK inside another APK. To evaluate the robustness of DaDiDroid to Packing obfuscation, we use 80% and 20% of the PackedApps dataset (cf. Table 1) for training and testing our classifiers, respectively, and use 10-fold cross validation in our experiments. Table 3 shows the robustness of DaDiDroid against Packing obfuscation.


Table 3: Comparison of our proposed system, DaDiDroid, with MaMaDroid on obfuscated datasets (cf. Section 4). Here RM means Random Forest while P, R, and A represent precision, recall, and accuracy, respectively.

| Obf. Tech. | Scheme | TP | FP | P | R | FM | A |
|---|---|---|---|---|---|---|---|
| CI | DaDiDroid | 92.9% | 4.5% | 70.7% | 92.9% | 80.2% | 95.2% |
| CI | MaMaDroid (Mariconti et al., 2017) | 70.0% | 3.5% | 70.0% | 70.0% | 70.0% | 93.7% |
| SEE | DaDiDroid | 79.5% | 4.5% | 86.9% | 79.5% | 83.1% | 91.1% |
| SEE | MaMaDroid (Mariconti et al., 2017) | 13.7% | 23.2% | 59.4% | 13.7% | 22.2% | 73.8% |
| Packing | DaDiDroid | 98.4% | 0.9% | 97.9% | 98.4% | 98.2% | 98.8% |
| Packing | MaMaDroid (Mariconti et al., 2017) | 98.4% | 1.2% | 97.5% | 98.4% | 97.9% | 98.7% |
| IR | DaDiDroid | 77.1% | 7.1% | 91.6% | 77.1% | 83.7% | 95.2% |
| IR | MaMaDroid (Mariconti et al., 2017) | 65.2% | 2.2% | 70.0% | 96.8.0% | 77.8% | 65.2% |
| CI+SEE+Packing+IR | DaDiDroid | 82.7% | 4.5% | 90.1% | 82.7% | 86.2% | 91.2% |
| CI+SEE+Packing+IR | MaMaDroid (Mariconti et al., 2017) | 27.1% | 3.5% | 79.1% | 27.1% | 40.3% | 73.5% |

We found that 1.6% of the apps with Packing obfuscation were classified as benign. In contrast, MaMaDroid (Mariconti et al., 2017) results in 1.2% false positives.

Identifier Renaming: With Identifier Renaming, a given API’s name such as myApp.net.myPackage is transformed into words of at most three letters, such as a.bc.def. By parsing the API names that correspond to a given Android app in the Marvin dataset, we identify this type of obfuscation in our datasets. In essence, leveraging previous works (Schulz, 2012; Mariconti et al., 2017), we consider an API obfuscated if either at least 50% of its functions or methods have names of at most 3 characters or if we cannot tell what its class implements, extends or inherits due to identifier mangling (Schulz, 2012).

We draw apps with no Identifier Renaming from the Marvin dataset to train MaMaDroid and DaDiDroid and use ObData II (cf. Table 1) to measure their robustness against minification. Once again, we use 80% and 20% of the data for training and testing, respectively, and use 10-fold cross validation in our experiments.

Figure 4: Robustness of DaDiDroid against Identifier Renaming (or minification): (a) F1-Measure and accuracy; and (b) false negatives and false positives.

Table 3 summarizes, and Figure 4 further illustrates, the robustness of DaDiDroid against Identifier Renaming. In Figure 4a, we observe that the performance of both DaDiDroid and MaMaDroid decreases as the proportion of apps that employ Identifier Renaming increases. When tested with 100% of ObData II, DaDiDroid outperforms MaMaDroid: the F1-Measure of MaMaDroid drops by 9 percentage points (from 87% to 78%), whereas that of DaDiDroid drops by merely 5 percentage points (from 88% to 83%). In Figure 4b, we notice that minification mainly affects the number of false negatives. With an F1-Measure of 83%, DaDiDroid misclassifies only 20% of the malware apps in the testing dataset as benign. In contrast, besides its lower F1-Measure (78%), MaMaDroid yields 35% false negatives. Overall, for its high F1-Measure and low false negative rate, DaDiDroid pays a reasonable cost: a false positive rate of 7%.
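The metrics quoted in this discussion are tied together by standard definitions, which the short sketch below makes explicit. It recomputes the F1-Measure from the precision and recall reported for DaDiDroid under Identifier Renaming in Table 3; the function names are illustrative and not taken from the authors' code.

```python
def f1_measure(precision, recall):
    """Harmonic mean of precision and recall (both in [0, 1])."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def false_negative_rate(true_positive_rate):
    """Share of malware labelled as benign: FNR = 1 - TPR."""
    return 1.0 - true_positive_rate


# DaDiDroid under Identifier Renaming, values as reported in Table 3.
precision, recall = 0.916, 0.771
print(f"F1  = {f1_measure(precision, recall):.3f}")  # F1  = 0.837
print(f"FNR = {false_negative_rate(recall):.3f}")    # FNR = 0.229
```

The recomputed F1-Measure of 83.7% agrees with the value listed in Table 3.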

Overall, when tested on samples of benign and malware apps that involve four types of obfuscation techniques, we observe (cf. Table 3) that DaDiDroid outperforms MaMaDroid, with an F1-Measure and an accuracy that are on average 46.2% and 17.7% higher, respectively, at the cost of a false positive rate that is 1% higher than that of MaMaDroid.

6 RELATED WORK

A number of studies have characterised and detected malicious activities in Android apps. Several works (Ikram et al., 2016; Hornyack et al., 2011; Enck et al., 2014; Continella et al., 2017) rely on dynamic runtime and network analysis to detect potentially malicious behaviour of Android apps. To reduce the resources required for dynamic analysis, a large body of work (Mann and Starostin, 2012; Arp et al., 2014; Chen et al., 2016) performs static code analysis to detect malware components in Android apps. However, due to recent developments in obfuscation techniques and malware developers’ tendency to obfuscate their apps (Dong et al., 2018), static analysis of Android malware apps remains an active research topic. CrowDroid (Burguera et al., 2011) performed dynamic analysis of apps’ network traces to detect malicious activities of Android apps. By collecting the sequences of requests sent to remote servers, it built feature vectors consisting of the number of calls to a specific API to differentiate malware apps from benign apps. Drebin (Arp et al., 2014) performed static analysis of API calls and permission requests. It also analyzed hard-coded URLs in apps’ source code to determine whether the apps’ activities are benign or malicious. DroidAPIMiner (Aafer et al., 2013) proposed a new approach based on the frequencies of calls to sensitive APIs. StormDroid (Chen et al., 2016) improved on the detection of DroidAPIMiner by analyzing API call sequences along with API call frequencies.

Similarly, MaMaDroid (Mariconti et al., 2017) addressed the inefficiency of both DroidAPIMiner


Table 4: List of our graph metrics (or features) to quantify the relationship among API calls as well as API families.

Feature: Description

Graph Size: Denotes the number of edges (i.e., relationships) among the nodes (i.e., APIs or families) of a graph.

Graph Order: Corresponds to the number of nodes in a graph.

Number of Cycles: A cycle is a path of edges and vertices wherein a vertex is reachable from itself.

Degree Assortativity Coefficient: In a directed graph, in-assortativity and out-assortativity measure the tendencies of nodes to connect with other nodes that have similar in-degrees and out-degrees as themselves, respectively. To compute this feature we use the Pearson correlation approach, which is considerably faster and provides representative results. Positive values of the assortativity coefficient, $r$, indicate a correlation between nodes of similar degree, while negative values indicate relationships between nodes of different degree. In general, $r$ lies between $-1$ and $1$. When $r = 1$, the network is said to have perfect assortative mixing patterns; when $r = 0$, the network is non-assortative; and at $r = -1$, the network is completely disassortative.

Strongly Connected Components: The number of strongly connected components. A vertex $v_i$ is said to be strongly connected if it is reachable from every other vertex $v_k$.

Weakly Connected Components: A weakly connected component is a maximal subgraph of a directed graph such that for every pair of vertices $(u, v)$ in the subgraph, there is a path between $u$ and $v$ when edge directions are ignored.

Node Connectivity: The node connectivity is the minimum number of nodes that must be removed to disconnect the graph $G$.

Avg. Shortest Path Length: Average shortest path length is a concept in network topology that is defined as the average number of steps along the shortest paths for all possible pairs of network nodes.

Degree Centrality: The degree centrality of a vertex $v_i$ is the fraction of nodes it is connected to.

Betweenness Centrality: Measures the fraction of all-pairs shortest paths, except those originating or terminating at the vertex, that pass through it: $\frac{2\, I(P_{jk}, i)}{|V|(|V|-1)}$, where $P_{jk}$ denotes the shortest path from vertex $v_j$ to vertex $v_k$, $P_{jk} = (v_j, v_l, v_m, v_n, \ldots, v_k)$, and $I(P_{jk}, i) = 1$ if $v_i \in P_{jk}$, otherwise $I(P_{jk}, i) = 0$ when $v_i \notin P_{jk}$.

Vitality: Closeness vitality of a node is the change in the sum of distances between all node pairs when excluding that node.

Attracting Components: An attracting component in a directed graph $G$ is a strongly connected component with the property that a random walker on the graph will never leave the component once it enters the component.

Graph Density: It measures how close the number of edges of the graph is to the maximum possible number of edges.

Clustering Coefficient: It is a measure of the degree to which nodes in a graph tend to cluster together.

Algebraic Connectivity: The algebraic connectivity (also known as the Fiedler value or Fiedler eigenvalue) of a graph $G$ is the second-smallest eigenvalue of the Laplacian matrix of $G$. This eigenvalue is greater than 0 if and only if $G$ is a connected graph. This is a corollary to the fact that the number of times 0 appears as an eigenvalue of the Laplacian is the number of connected components in the graph.

Clique Number: It corresponds to the size (number of vertices) of the largest clique of the graph. A clique $C$ in an undirected graph is a subset of the vertices such that every two distinct vertices are adjacent.

Biconnected Components: They are maximal subgraphs such that the removal of a node (and all edges incident on that node) will not disconnect the subgraph.

Diameter: The diameter of a graph is the maximum eccentricity of any vertex in the graph. The eccentricity $\epsilon(v_i)$ of a graph vertex $v_i$ in a connected graph $G$ is the maximum graph distance between $v_i$ and any other vertex $v_j$ of $G$.

Radius: The radius $r$ of a graph $G$ is the minimum eccentricity of any vertex.

Center Number: The center is the set of nodes with eccentricity equal to the radius, $r$.

Periphery Number: The periphery is the set of nodes with eccentricity equal to the diameter.

and StormDroid (Chen et al., 2016) by using the transition probabilities among API calls as features. However, our empirical analysis shows that MaMaDroid depends on the type of data used for training (i.e., app categories, the ratio of benign to malicious apps, and app release dates) and is vulnerable to the obfuscation techniques (see Section 5.3) employed by malware apps. Overall, these techniques focus on sensitive API calls and privileged permissions, which often leads to high false positive rates: a large number of benign apps use sensitive API calls and request permissions for certain functionalities, and are thus wrongly classified as malware. Moreover, these solutions seldom detect obfuscated malware components in apps, resulting in poor performance. Zhang et al. (2014) used weighted graph models, extracted from the code semantics of Android apps, to quantify the similarities among malware apps. In contrast, we use metrics derived from weighted directed graphs to differentiate between benign and malware apps. To analyze malicious software on desktop computing platforms, GZero (Shafiq and Liu, 2017) collected the call sequences of software executables to build graph-theoretic features. In the same spirit, in this work, we extracted an extended set of graph features and showed the efficacy of graph-theoretic metrics in classifying benign and malware apps. Moreover, we evaluated the robustness of our proposed scheme against unbalanced datasets and showed its resilience against several types of obfuscation techniques.
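To make the notion of graph-derived features concrete, the following dependency-free sketch builds a small weighted directed graph from caller/callee pairs and computes a handful of the metrics listed in Table 4. It is an illustration only and not the DaDiDroid implementation: the function names, the example call pairs, the use of call counts as edge weights, and the degree centrality convention (in-degree plus out-degree, divided by n − 1) are all assumptions.

```python
from collections import Counter, defaultdict


def build_call_graph(calls):
    """Map each (caller, callee) pair to the number of times it occurs."""
    return Counter(calls)


def graph_features(edges):
    """Compute a few Table 4 style metrics; `edges` maps (source, target) -> weight."""
    nodes = {node for edge in edges for node in edge}
    order = len(nodes)  # Graph Order: number of nodes
    size = len(edges)   # Graph Size: number of distinct directed edges
    # Density of a directed graph without self-loops: m / (n * (n - 1)).
    density = size / (order * (order - 1)) if order > 1 else 0.0

    degree = Counter()
    undirected = defaultdict(set)
    for source, target in edges:
        degree[source] += 1  # out-degree contribution
        degree[target] += 1  # in-degree contribution
        undirected[source].add(target)
        undirected[target].add(source)
    centrality = {n: degree[n] / (order - 1) if order > 1 else 0.0 for n in nodes}

    # Weakly connected components: traverse while ignoring edge direction.
    seen, components = set(), 0
    for start in nodes:
        if start in seen:
            continue
        components += 1
        stack = [start]
        while stack:
            node = stack.pop()
            if node not in seen:
                seen.add(node)
                stack.extend(undirected[node] - seen)

    return {
        "order": order,
        "size": size,
        "density": density,
        "degree_centrality": centrality,
        "weakly_connected_components": components,
    }


# Hypothetical calls between API families.
calls = [
    ("self-defined", "android"), ("self-defined", "android"),
    ("android", "java"), ("java", "android"),
    ("self-defined", "java"), ("xml", "dom"),
]
features = graph_features(build_call_graph(calls))
print(features["order"], features["size"], features["density"])  # 5 5 0.25
print(features["weakly_connected_components"])                   # 2
```

Each app is thus reduced to a short vector of numbers, which is what allows a standard classifier to be trained on graph structure rather than on identifier names.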

7 CONCLUSION

We presented DaDiDroid, an obfuscation-resilient tool to detect Android malware based on modeling API calls as a weighted, directed graph. We evaluated the effectiveness of DaDiDroid with several datasets and tested its robustness against different types of obfuscation. We showed that DaDiDroid correctly classifies up to 96.5% of benign and malicious apps. In the worst case, when the training set contains no obfuscated apps and the testing set contains only obfuscated apps (i.e., apps using the “Encoding” obfuscation technique), DaDiDroid achieved an accuracy of 91.2% with a false negative rate of only 17.3% and a false positive rate of 4.5%. Moreover, DaDiDroid uses small feature vectors, which can be customized with


more or fewer features from graph theory, as can the extraction process, depending on the context of the classification. We compared DaDiDroid to MaMaDroid (Mariconti et al., 2017), a Markov chain modeling tool for Android apps, showing that, besides achieving similarly high results on common datasets, DaDiDroid is much more resilient to changes in the datasets and especially to obfuscation techniques. In the future, we plan to improve DaDiDroid by complementing it with features derived from dynamic runtime analysis of apps.

REFERENCES

Aafer, Y., Du, W., and Yin, H. (2013). Droidapiminer: Min-

ing api-level features for robust malware detection in

android. In ICSPCS. Springer.

Andoni, A. and Indyk, P. (2008). Near-optimal hashing al-

gorithms for approximate nearest neighbor in high di-

mensions. Communications of the ACM.

Archive (2019). Playdrone apk’s : Free software : Free

download, borrow and streaming : Internet archive.

https://archive.org/details/playdrone-apks.

Arp, D., Spreitzenbarth, M., Hubner, M., Gascon, H.,

Rieck, K., and Siemens, C. (2014). Drebin: Effec-

tive and explainable detection of android malware in

your pocket. In NDSS.

Arzt, S., Rasthofer, S., Fritz, C., Bodden, E., Bartel, A.,

Klein, J., Le Traon, Y., Octeau, D., and McDaniel, P.

(2014). Flowdroid: Precise context, flow, field, object-

sensitive and lifecycle-aware taint analysis for android

apps. In PLDI. ACM.

Breiman, L. (2001). Random forests. Machine Learning.

Burguera, I., Zurutuza, U., and Nadjm-Tehrani, S. (2011).

Crowdroid: behavior-based malware detection system

for android. In WSPSMD, pages 15–26. ACM.

Chen, S., Xue, M., Tang, Z., Xu, L., and Zhu, H. (2016).

Stormdroid: A streaminglized machine learning-

based system for detecting android malware. In CCS.

Continella, A., Fratantonio, Y., Lindorfer, M., Puccetti,

A., Zand, A., Kruegel, C., and Vigna, G. (2017).

Obfuscation-resilient privacy leak detection for mo-

bile apps through differential analysis. In NDSS.

Cover, T. M. and Thomas, J. A. (2012). Elements of infor-

mation theory. John Wiley & Sons.

Dong, S., Li, M., Diao, W., Liu, X., Liu, J., Li, Z.,

Xu, F., Chen, K., Wang, X., and Zhang, K. (2018).

Understanding android obfuscation techniques: A

large-scale investigation in the wild. arXiv preprint

arXiv:1801.01633.

Enck, W., Gilbert, P., Han, S., Tendulkar, V., Chun, B.-G.,

Cox, L. P., Jung, J., McDaniel, P., and Sheth, A. N.

(2014). Taintdroid: an information-flow tracking sys-

tem for realtime privacy monitoring on smartphones.

In TOCS.

Garcia, J., Hammad, M., Pedrood, B., Bagheri-Khaligh,

A., and Malek, S. (2015). Obfuscation-resilient, ef-

ficient, and accurate detection and family identifica-

tion of android malware. Department of Computer

Science, George Mason University, Tech. Rep.

Girault, E. (2019). Google Play Unofficial Python API.

https://github.com/egirault/googleplay-api.

Google (2019a). Google Play Store. https://

play.google.com.

Google (2019b). Package Index (APIv26) — Android

Developers. https://developer.android.com/reference/

packages.html.

Hall, M., Frank, E., Holmes, G., Pfahringer, B., Reutemann,

P., and Witten, I. H. (2009). The weka data mining

software: an update. ACM SIGKDD Explorations.

Hornyack, P., Han, S., Jung, J., Schechter, S., and Wether-

all, D. (2011). These aren’t the droids you’re looking

for: Retrofitting android to protect data from imperi-

ous applications. In CCS.

Ikram, M., Vallina-Rodriguez, N., Seneviratne, S., Kaafar,

M. A., and Paxson, V. (2016). An analysis of the

privacy and security risks of android vpn permission-

enabled apps. In IMC.

Kohavi, R. and Sommerfield, D. (1995). Feature Subset

Selection Using the Wrapper Method: Overfitting and

Dynamic Search Space Topology. In KDD.

Lindorfer, M., Neugschwandtner, M., and Platzer, C.

(2015). Marvin: Efficient and comprehensive mobile

app classification through static and dynamic analysis.

In COMPSAC.

Mann, C. and Starostin, A. (2012). A framework for static

detection of privacy leaks in android applications. In

ASAC.

Mariconti, E., Onwuzurike, L., Andriotis, P., De Cristo-

faro, E., Ross, G., and Stringhini, G. (2017). Ma-

MaDroid: Detecting Android Malware by Building

Markov Chains of Behavioral Models. In NDSS.

McAfee (2017). McAfee Labs Threat Report.

https://www.mcafee.com/au/resources/reports/rp-

quarterly-threats-sept-2017.pdf.

Müller, K.-R., Mika, S., Rätsch, G., Tsuda, K., and Schölkopf, B. (2001). An Introduction to Kernel-based Learning Algorithms. IEEE ToNE, 12(2).

Schölkopf, B., Platt, J. C., Shawe-Taylor, J. C., Smola, A. J., and Williamson, R. C. (2001). Estimating the support of a high-dimensional distribution. Neural Computation.

Schulz, P. (2012). Code protection in android. In Rheinische

Friedrich-Wilhelms-Universitat Bonn, Germany.

Shafiq, Z. and Liu, A. (2017). A graph theoretic approach

to fast and accurate malware detection. In IFIP Net-

working.

Vallée-Rai, R., Co, P., Gagnon, E., Hendren, L., Lam, P., and Sundaresan, V. (1999). Soot - a java bytecode optimization framework. In CASCON.

Viennot, N. (2014). Github-nviennot/playdrone: Google

play crawler.

VirusTotal (2019). VirusTotal. https://www.virustotal.com.

Zhang, M., Duan, Y., Yin, H., and Zhao, Z. (2014).

Semantics-aware android malware classification using

weighted contextual api dependency graphs. In CCS.
