Feedback on a Self-education Module for AutoCAD: Development of a Self-education Module for Civil Engineering

Loreline Faugier¹, Patrick Pizette¹, Gaëlle Guigon² and Mathieu Vermeulen²

¹ Département Génie Civil & Environnemental, IMT Lille Douai, 764 Boulevard Lahure, Douai, France

² IMT Lille Douai, 764 Boulevard Lahure, Douai, France

Keywords: Design-based Research, Participatory Design, User-centered Design, Iterative Design, TEL, ITS, Utility, Usability, Acceptability.

Abstract: This paper presents the work carried out to improve a self-education module for AutoCAD intended for a class of civil engineering students. Both the development of the module and the research on an evaluation method are led by students with the help of researchers, in accordance with the principles of participatory design and user-centered design. After identifying objective evaluation criteria such as utility, usability and acceptability, we translate them into subcriteria and questions applied to the module. Very few students took the survey, which is a major obstacle to drawing conclusions and identifying lines of improvement for the module. Thus, throughout the paper, we emphasize the setup of the survey and focus on measuring the efficiency of the evaluation method itself.

1 INTRODUCTION

With the recent development of all kinds of online learning structures, more and more people have access to free educational resources. In higher education, the digital environment occupies a growing place, as it is cheaper than a regular course and easily adaptable to one's schedule. However, as a new education method, it deserves to be thought through and analysed in order to ensure its efficiency (Miller, 2017).

Beyond the module's content, its design should accommodate the students' needs, capabilities and ways of behaving (Norman, 1988), to ensure that the module enhances learning and does not cause frustration.

This project started after a class of civil engineering students from IMT Lille Douai expressed their need to be trained in AutoCAD (Autodesk software: www.autodesk.com), which is widely used for drawing and editing plans in many construction domains. As there is no vacant slot in the course schedule, researchers from the school asked two students to develop a module available online. The next year, two other students worked on the module, rearranged it and added some content.

Two years after the beginning of the project, this paper aims to create a feedback methodology involving the students in all the steps, to discuss the hypotheses made to analyse the results, and finally to improve this self-training module.

2 RESEARCH APPROACH AND CONTEXT

Letting the students design, develop, analyse and improve the module (and lead this research project as well) is a deliberate decision (Abras, 2004), in accordance with the principles of participatory design (Muller and Kuhn, 1993). In addition to being delivered online rather than in a classroom, the course is non-compulsory and not graded. Given this, the students are the most appropriate people to design the module, for two main reasons:

- They know what it is like to be a beginner and can sense where the main misunderstandings lie;

- They know what is disheartening and how to keep up interest.

In the field of applied mechanics, e-learning technologies seem traditionally to be created and designed by teachers only (Boucard, 2015; Mouton, 2015). Here, a student conducts this research, from collecting the bibliography to elaborating the evaluation method and writing this paper, which gives the research its originality.

Faugier, L., Pizette, P., Guigon, G. and Vermeulen, M.
Feedback on a Self-education Module for AutoCAD: Development of a Self-education Module for Civil Engineering.
DOI: 10.5220/0007761705050510
In Proceedings of the 11th International Conference on Computer Supported Education (CSEDU 2019), pages 505-510
ISBN: 978-989-758-367-4
Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Another guiding principle of our project is design-based research (Wang and Hannafin, 2005), which implies conducting the project in situ, in contact with the users, rather than in a lab or an office. This choice makes sense, since the module is developed at the students' request, for the students and by the students.

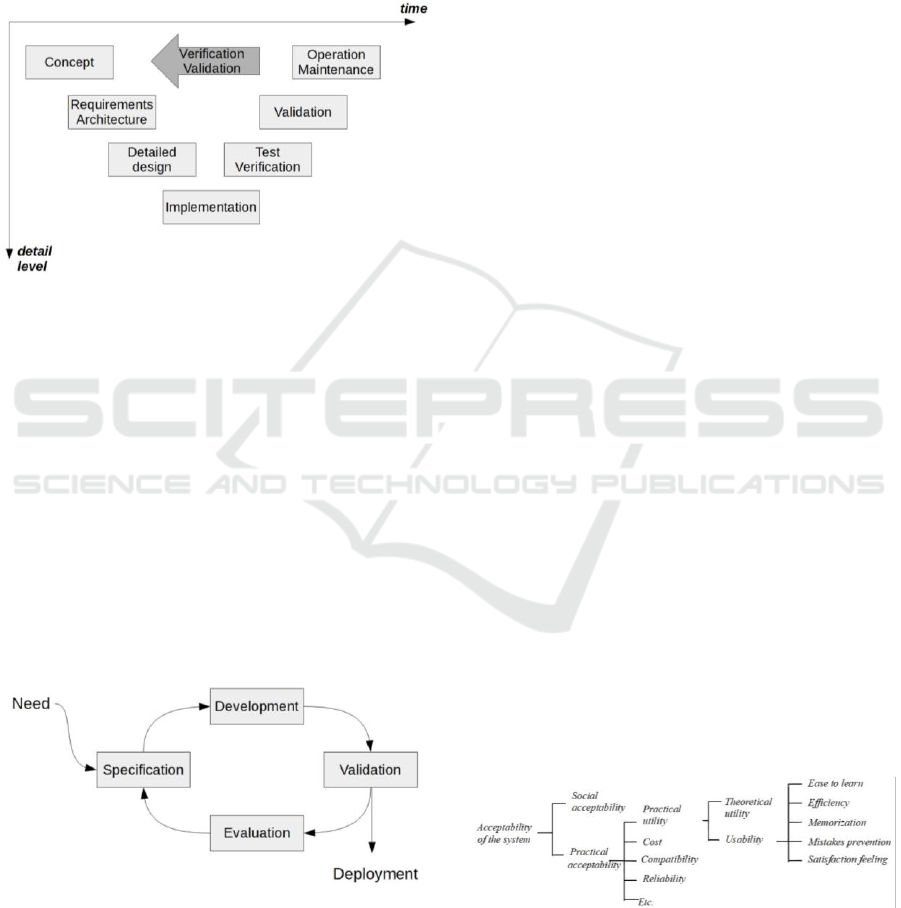

Figure 1: V-Model (Turner, 2007).

The project started two years ago, led by students from the class. Following the V-model shown in Figure 1, they identified the need by conducting a survey in the class. Their challenge was to find suitable content and a suitable medium for the module. An introduction, training on simple drawing tools and small exercises (from drawing a line to copying a floor plan) were designed as interactive slideshows, using Opale for SCENARIchain (Quelennec, 2010; Gebers and Crozat, 2010). The module also contains instructions on how to install the software, along with screenshots and video recordings of the software. The learner can navigate through the module either linearly or by clicking on the different parts of the outline to skip some parts.

Figure 2: Iterative life-cycle (Nielsen, 1993).

After this first step, the module existed but still left room for improvement. To offer a good-quality educational tool, it appeared necessary to engage the module in a continuous improvement process, namely the iterative process described in Figure 2. During the second year of the project, the project leaders asked one student to test the module and give them oral feedback. Afterwards, they added some content and reformulated some parts of the module.

The objective of this research project is therefore to define an evaluation method that provides strong guidelines for improving the module. This method could then be reused over several cycles, even after the module changes (after adding content or after a re-engineering process).

3 DEFINING AN EVALUATION METHOD

Many parameters influence the performance of the module. Not only the amount of content memorized or the difficulty of the exercises, but also the ease of navigation between contents and the aesthetics of the module affect the user experience and, consequently, how efficiently users learn. Three dimensions can be identified for the evaluation of a self-learning module (Tricot, 1999):

- Utility refers to the traditional pedagogical goals: increasing the student's skills and knowledge. It measures how well the teachers' objectives match what the students have actually learned: this is what is evaluated in a classical course.

- Usability is the ease with which the module can be used, and is related to the interface, the navigation and the coherence of the scenario.

- Acceptability expresses the way the user perceives the module (positive or negative opinions and attitudes). It can be individual as well as collective, and is influenced by factors such as culture, job, motivation, social organization, or the practices that the module affects.

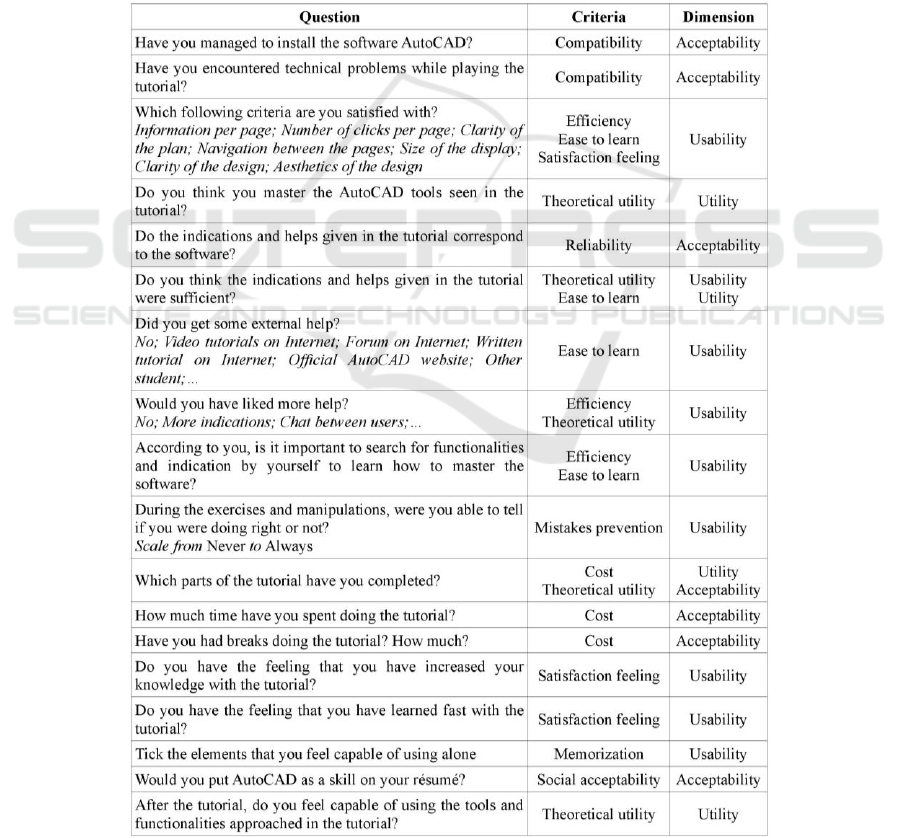

These dimensions can be translated into a list of criteria stated by Nielsen in his work on usability (Nielsen, 1993), as shown in Figure 3.

Figure 3: Nielsen’s model (Tricot, 2003).

Although this graph shows a path, we consider that the different dimensions are not chained but can be evaluated separately. These criteria are the ones we try to validate, or invalidate, through the evaluation.

The evaluation medium must meet two main requirements that we identified:

- It must not affect the user experience while he or she is working through the module;

- It must provide reliable results by avoiding imprecise questions and focusing on the skills developed through the module.

We considered different methods, such as interviews (free or guided by questions), screen recordings, forms, analysis of user traces (time, path…), comparison between experienced users and beginners, and so on. One issue is to determine what kind of data is available (Choquet, 2007). If we wish to deploy the evaluation method in various schools and universities, the indicators should not require complicated programming or software installation from either the teachers or the students. In our case, we are also working on a very short cycle (six months) for the evaluation and improvement of the module, with new students conducting the project every year. Because it is non-invasive, simple to design and simple to use, and provides readable and objective results, a carefully written form turns out to be the best way to get feedback on the user experience. This type of evaluation is in accordance with the data collection protocol advocated in the THEDRE (Traceable Human Experiment Design Research) flowchart (Mandran, 2018). In our form, each question refers to one or several criteria, as detailed in Figure 4.

Figure 4: List of questions, corresponding criteria and dimensions.


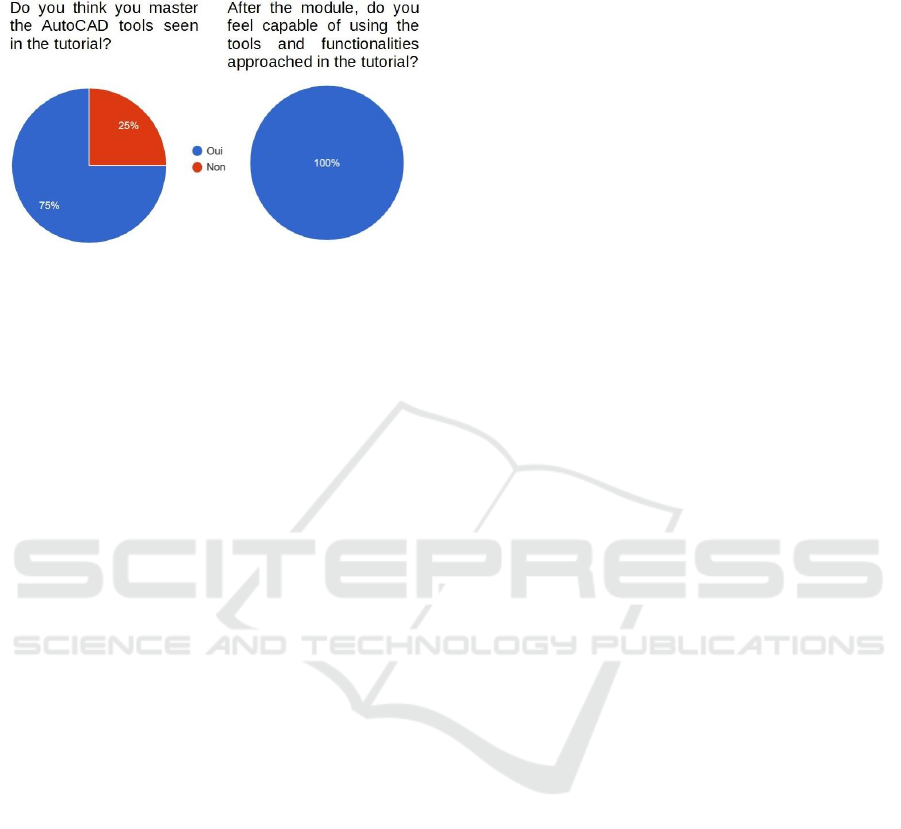
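The question-to-criterion table of Figure 4 can be held in a small lookup structure, which makes it easy to check which questions inform each dimension and whether any criterion is left unevaluated. The Python sketch below is illustrative only: Figure 4 is not reproduced in this text, so the question identifiers (Q1 to Q5) and the criterion names (loosely based on Nielsen's usability attributes) are hypothetical placeholders, not the actual content of our form.

```python
from collections import defaultdict

# Hypothetical criterion -> dimension table (NOT the real Figure 4 content).
CRITERION_TO_DIMENSION = {
    "skills_acquired": "utility",
    "easy_to_learn": "usability",
    "efficient_to_use": "usability",
    "easy_to_remember": "usability",
    "few_errors": "usability",
    "subjectively_pleasing": "usability",
    "positive_opinion": "acceptability",
}

# Hypothetical question ids; each question refers to one or several criteria.
QUESTION_TO_CRITERIA = {
    "Q1": ["skills_acquired"],
    "Q2": ["easy_to_learn", "efficient_to_use"],
    "Q3": ["few_errors"],
    "Q4": ["easy_to_remember", "skills_acquired"],
    "Q5": ["positive_opinion"],
}


def questions_by_dimension(q_to_c, c_to_d):
    """Invert the mapping: list the questions that inform each dimension."""
    result = defaultdict(set)
    for question, criteria in q_to_c.items():
        for criterion in criteria:
            result[c_to_d[criterion]].add(question)
    # Sort question ids so the output is stable and readable.
    return {dim: sorted(qs) for dim, qs in result.items()}


def uncovered_criteria(q_to_c, c_to_d):
    """Criteria that no question evaluates, i.e. a gap in the form."""
    covered = {c for criteria in q_to_c.values() for c in criteria}
    return sorted(set(c_to_d) - covered)


if __name__ == "__main__":
    print(questions_by_dimension(QUESTION_TO_CRITERIA, CRITERION_TO_DIMENSION))
    print(uncovered_criteria(QUESTION_TO_CRITERIA, CRITERION_TO_DIMENSION))
```

With these placeholder tables, the second call flags `subjectively_pleasing` as a criterion that no question covers, which is the kind of gap such a check is meant to reveal before the form is sent out.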

A question about the whole module is deliberately asked twice in the form, at the beginning and at the end of the module (Do you think you master the AutoCAD tools seen in the tutorial? / After the module, do you feel capable of using the tools and functionalities approached in the tutorial?). These questions are meant to verify two aspects. Firstly, as a very general question evaluating utility, it checks whether the pedagogical content of the module is as satisfying as in a regular course. Secondly, the repetition acts as an evaluation of the form itself, checking that the form does not change the user's opinion. To meet its objective, the form should not affect the user's answers; hence we expect the answers to the two questions to be the same.
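The consistency check on the repeated question amounts to comparing, for each respondent, the two answers given. A minimal Python sketch follows, assuming answers are stored as comparable values (for example Likert scores) keyed by a respondent identifier; the function names and the example values are hypothetical and are not the survey data.

```python
def inconsistent_respondents(first, second):
    """Return the respondents whose two answers to the repeated question differ.

    `first` and `second` map a respondent id to the answer given at each
    occurrence of the repeated question (any comparable value).
    """
    # Every respondent must have answered both occurrences of the question.
    missing = set(first) ^ set(second)
    if missing:
        raise ValueError(f"respondents without both answers: {sorted(missing)}")
    return sorted(r for r in first if first[r] != second[r])


def consistency_rate(first, second):
    """Share of respondents whose answer did not change (1.0 = no visible effect of the form)."""
    if not first:
        raise ValueError("no respondents")
    changed = inconsistent_respondents(first, second)
    return 1 - len(changed) / len(first)


if __name__ == "__main__":
    # Made-up answers on a 1-5 scale, for illustration only.
    first = {"S1": 3, "S2": 4, "S3": 2, "S4": 4}
    second = {"S1": 3, "S2": 4, "S3": 3, "S4": 4}
    print(inconsistent_respondents(first, second))  # ['S3']
    print(consistency_rate(first, second))          # 0.75
```

Returning the identifiers, rather than only a rate, matters with a small sample: it lets the analyst cross-check the flagged respondent against his or her other answers, as done in Section 4.4.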

4 EVALUATION PHASE

4.1 Presentation of the Sample

The first sample of students who tried the module is very small: it consists of four fourth-year engineering students who had been specializing in civil engineering for one semester. All of them are beginners in AutoCAD. They volunteered to test the module in their free time and were asked to record the time spent on the module and to fill in the form.

4.2 Presentation of Answers

The form is built with Google Forms, which allows the answers to be viewed either collectively (percentages, charts and diagrams) or person by person. As the sample is very small, it is both possible and necessary to examine each question and each individual very carefully.

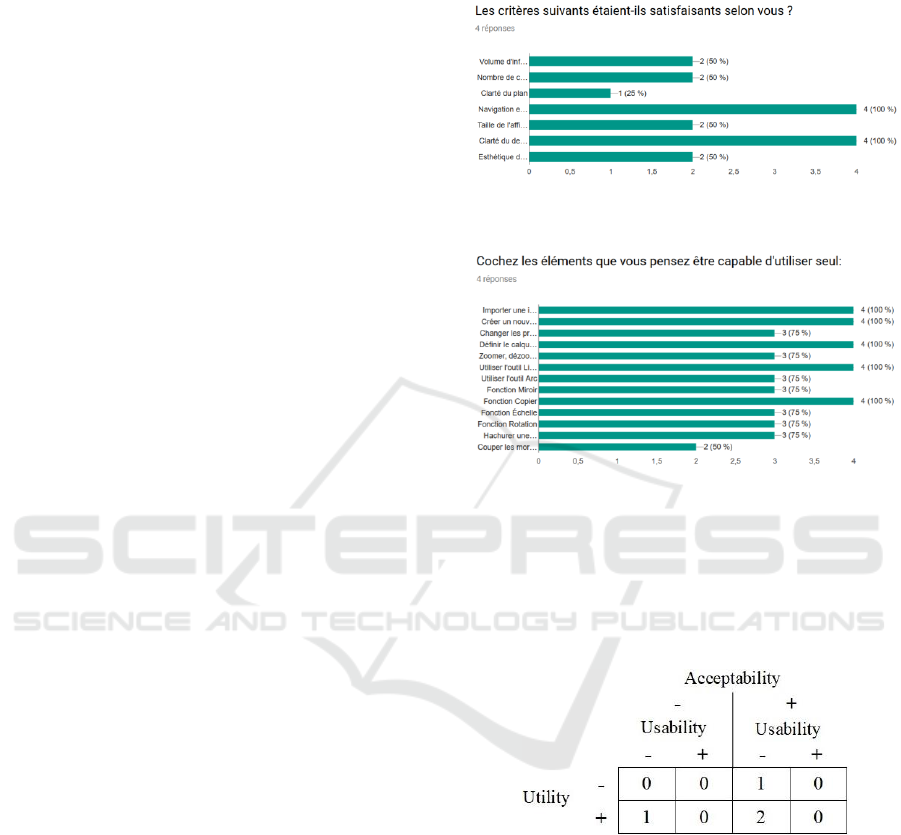

4.3 Analysis of the Results: Feedback on the Module

We want to identify which points of the module need to be improved. For example, the bar chart in Figure 5 shows a critical lack of usability: only two criteria are validated by all the students, and most students are dissatisfied with the remaining criteria.

The analysis of the memorization results in Figure 6 allows us to target the weakest parts of the module, that is, the parts least memorized by the students.
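Targeting the least memorized parts reduces to computing, for each feature listed in the memorization question, the share of students who ticked it, and sorting in ascending order. The sketch below assumes each student's answer is a set of feature labels; the labels, the example answers and the 0.5 cut-off are hypothetical and are not taken from Figure 6.

```python
from collections import Counter


def memorization_rates(ticked_by_student, features):
    """Fraction of students who ticked each feature, weakest first.

    `ticked_by_student` maps a student id to the set of features that
    student feels able to use on his or her own. `features` lists every
    feature asked about, so a feature nobody ticked still appears with 0.0.
    """
    n = len(ticked_by_student)
    counts = Counter(f for ticked in ticked_by_student.values() for f in ticked)
    rates = {f: counts[f] / n for f in features}
    # Ascending by rate, then alphabetically to break ties deterministically.
    return sorted(rates.items(), key=lambda item: (item[1], item[0]))


def weakest_features(ticked_by_student, features, threshold=0.5):
    """Features ticked by fewer than `threshold` of the students (hypothetical cut-off)."""
    return [f for f, rate in memorization_rates(ticked_by_student, features) if rate < threshold]


if __name__ == "__main__":
    # Made-up labels and answers, for illustration only.
    features = ["line", "trim", "snap", "ortho", "angle", "zoom"]
    ticked = {
        "S1": {"line", "zoom", "snap"},
        "S2": {"line", "zoom"},
        "S3": {"line", "trim", "zoom"},
        "S4": {"line", "zoom", "ortho"},
    }
    print(weakest_features(ticked, features))  # ['angle', 'ortho', 'snap', 'trim']
```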

The table in Figure 4 is used to determine whether each criterion is validated for each user; based on that, we can tell whether, on average, a dimension is validated. The results are presented as a contingency table (Tricot, 2003) in Figure 7.

Figure 5: Result of the form (usability): In your opinion, were the following criteria satisfied?

Figure 6: Result of the form (memorization): Tick the features that you feel able to use on your own.

For instance, for two people, acceptability and utility are validated, but usability is not. From the contingency table and Figure 5, we deduce that the dimension to improve first is usability.

Figure 7: Contingency table (Tricot, 2003).
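The aggregation from criteria to the contingency table can be sketched as follows. We assume here that each criterion result is a boolean per user and read "validated on average" as "at least half of the dimension's criteria are validated for that user"; this threshold, the data layout and the example users are assumptions made for illustration, not the exact rule or data behind Figure 7.

```python
from collections import Counter

DIMENSIONS = ("utility", "usability", "acceptability")


def dimension_validated(criteria_results, threshold=0.5):
    """A dimension counts as validated for one user when the share of its
    validated criteria reaches `threshold` (assumed reading of "on average")."""
    if not criteria_results:
        raise ValueError("a dimension needs at least one criterion")
    return sum(criteria_results) / len(criteria_results) >= threshold


def contingency_table(users):
    """Count users per combination of validated / non-validated dimensions.

    `users` maps a user id to {dimension: [bool, ...]}, one boolean per
    criterion. Keys of the result are (utility, usability, acceptability)
    tuples of booleans.
    """
    table = Counter()
    for per_dimension in users.values():
        pattern = tuple(dimension_validated(per_dimension[d]) for d in DIMENSIONS)
        table[pattern] += 1
    return table


if __name__ == "__main__":
    # Made-up users, for illustration only.
    users = {
        "S1": {"utility": [True, True], "usability": [True, False, False], "acceptability": [True]},
        "S2": {"utility": [True, False], "usability": [False, False, True], "acceptability": [True]},
        "S3": {"utility": [True, True], "usability": [True, True, False], "acceptability": [True]},
        "S4": {"utility": [False, False], "usability": [False, False, False], "acceptability": [False]},
    }
    for pattern, count in contingency_table(users).items():
        print(dict(zip(DIMENSIONS, pattern)), count)
```

Keeping the full (utility, usability, acceptability) pattern as the key, rather than three separate tallies, preserves the joint information a contingency table is meant to show, such as users who accept the module and learn from it while still finding it hard to use.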

4.4 Analysis of the Results: Control of the Evaluation Method

Finally, we want to check whether our evaluation method meets its goals. The results obtained from the students' answers are precise enough to target several parts of the module that must be improved.

Only one student gave different answers to the two similar questions. This is the same student who ticked the fewest items for usability in Figure 5. As the first of the repeated questions comes right after the usability question, we can presume that his answer was influenced by the previous question.

Figure 8: Result of the repeated question.

5 IMPROVEMENTS OF THE MODULE

For the tools least mastered by the students (trimming excess lines, snap tool, orthogonality and angles), detailed explanations are added, sometimes including screenshots of the AutoCAD software or screen video recordings showing exactly how to perform an operation (Mariais, 2017).

Also, as the lack of clarity of the outline is one of the major flaws pointed out by the students, the module outline is simplified. Indeed, the module was divided into many small parts, some of them containing only one sentence, which hampered smooth navigation between parts and could cause confusion, loss of attention or annoyance. Hence, small parts are regrouped ("tips" and "hints" are grouped together, and the conclusion or introduction is often merged with an adjacent part), while new parts are created for bigger topics (Autodesk account creation, zooming…).

Such modifications aim to improve usability by making the module easier to learn and more pleasant to use.

6 FURTHER RESEARCH LINES

As stated previously, the results obtained with our survey and evaluation are limited by the very small number of students who tried the module. To get precise feedback on the module, we have to focus less on statistics and more on individual reactions and suggestions. This first survey can be considered a pilot test; to validate the method, it is necessary to conduct this research with a larger number of users.

However, we are still able to identify the weakest dimension and the points not fully mastered by the students, and hence to know what to change, and on which content, to make the module more efficient. The study indicates that both the content and the form of the module matter for the user experience, and both are improved in the new module.

In keeping with the continuous improvement process, the next step of this project is to offer the improved module to the new class of students at our school and to collect answers from a larger sample of students by asking them to fill in the same form. Analysing the answers would show whether the modifications implemented have a real impact on the evaluated dimensions, and on usability in particular.

In addition, during the evaluation, we considered utility, usability and acceptability to be independent dimensions in order to keep the evaluation method simple. However, improving one of the dimensions is likely to affect the other two, positively or negatively.

ACKNOWLEDGEMENTS

Special thanks to all the researchers and teachers from IMT Lille Douai for kindly sharing their knowledge and experience.

Thanks also to Laure FRIGOUT and Romain PROKSA, François MORVAN and Antoine THIERION, the two pairs of students who first led the project and paved the way for this research.

REFERENCES

Abras, C., Maloney-Krichmar, D., Preece, J., 2004. User-centered design. In: Bainbridge, W., Berkshire Encyclopedia of Human-Computer Interaction, Berkshire Publishing Group, Great Barrington, Massachusetts, USA, pp. 763-768.

Boucard, P.A., Chamoin, L., Guidault, P.A., Louf, F., Mella, P., Rey, V., 2015. Retour d’expérience sur le MOOC Pratique du Dimensionnement en Mécanique. In: 22ème Congrès Français de Mécanique, Lyon, France.

Choquet, C., Iksal, S., 2007. Modélisation et construction de traces d'utilisation d'une activité d'apprentissage : une approche langage pour la réingénierie d'un EIAH. Sciences et Techniques de l'Information et de la Communication pour l'Education et la Formation (STICEF), Volume 14, Special issue: Analyses des traces d’utilisation dans les EIAH. Available from: sticef.org [Accessed 10 May 2017].


Gebers, E., Crozat, S., 2010. Chaînes éditoriales Scenari et unité ICS. Distances et savoirs 7.3, pp. 421-442.

Labat, J.-M., 2002. EIAH : Quel retour d’informations pour le tuteur ? In: Technologies de l’Information et de la Communication dans les Enseignements d’ingénieurs et dans l’industrie, Villeurbanne, France.

Mandran, N., 2018. Méthode traçable de conduite de la recherche en informatique centrée humain. Modèle théorique et guide pratique. ISTE Editions.

Mariais, C., Bayle, A., Comte, M.-H., Hasenfratz, J.-M., Rey, I., 2017. Retour d’expérience sur deux années de MOOC Inria. Sciences et Techniques de l'Information et de la Communication pour l'Education et la Formation (STICEF), Volume 24, Issue 2, Special issue: Recherches actuelles sur les MOOC. Available from: sticef.org [Accessed 10 May 2017].

Miller, M., 2017. Les MOOC font pschitt, Le Monde [online]. Available at: http://abonnes.lemonde.fr/idees/article/2017/10/22/les-mooc-font-pschitt_5204379_3232.html [Accessed 15 Oct. 2017].

Mouton, S., Clet, T., Blanchard, A., Ballu, A., Niandou, H., Guerchet, E., 2015. Dynamiser l’enseignement avec Moodle : QCM et travaux pratiques virtuels. In: 22ème Congrès Français de Mécanique, Lyon, France.

Muller, M., Kuhn, S., 1993. Participatory design, Communications of the ACM - Special issue Participatory Design, Volume 36, Issue 4, pp. 24-28.

Nielsen, J., 1993. Iterative User-Interface Design, Computer, Volume 26, Issue 11, pp. 32-41.

Nielsen, J., 1993. Usability Engineering, Academic Press, Boston.

Norman, D., 1988. The Psychology of Everyday Things, Doubleday, New York.

Quelennec, K., Vermeulen, M., Narce, C., Baillon, F., 2010. De l'industrialisation à l'innovation pédagogique avec une chaîne éditoriale. In: TICE 2010.

Tricot, A., Plegat-Soutjis, F., Camps, J.-F., Amiel, A., Lutz, G., Morcillo, A., 2003. Utilité, utilisabilité, acceptabilité : interpréter les relations entre trois dimensions de l'évaluation des EIAH. In: Environnements Informatiques pour l'Apprentissage Humain 2003, Strasbourg, France.

Turner, R., 2007. Toward Agile Systems Engineering Processes, CrossTalk, The Journal of Defense Software Engineering, April 2007, pp. 11-15.

Wang, F., Hannafin, M., 2005. Design-based research and technology-enhanced learning environments, Educational Technology Research and Development, Volume 53, Issue 4, pp. 5-23.

Wilensky, U., Stroup, W., 1999. Learning through participatory simulations: network-based design for systems learning in classrooms. In: The International Society of the Learning Sciences, Proceedings of the 1999 conference on Computer support for collaborative learning, Palo Alto, California, USA.
