Using the CGAN Model Extend Encounter Targets Image Training Set

Ruolan Zhang (ORCID: https://orcid.org/0000-0001-6636-3734) and Masao Furusho

Graduate School of Maritime Sciences, Kobe University, 5-1-1 Fukae-Minami, Higashinada, Kobe, Japan

Keywords: Data Generation, Target Ships, Training Set, Unmanned Navigation, Autonomous Decision.

Abstract: Fully capable unmanned ship navigation requires fully autonomous decision-making, and large-scale training data for the decision model are essential to meet this requirement. However, it is difficult to obtain enough training scenes from a real sea navigation environment. To respond to possible emergency situations even without shore-station support, this paper proposes a method that uses conditional generative adversarial networks (CGAN) to generate a large-scale, practically usable target ship image set, which can be used to train autonomous decision-making models for various sea conditions. In practice, most current research on unmanned ships is based on onshore remote control or monitoring. Nonetheless, in some extreme circumstances, such as a communication interruption, or when the ship cannot be guided or remotely controlled from shore in real time, the unmanned ship must make appropriate decisions and form new plans according to the encountered targets and the overall current situation. The CGAN model is a novel means of generating target ships to construct complete encounter scenes at sea. The generated target training image set can be used to train decision models and opens a new approach to large-scale, fully autonomous navigation decisions.

1 INTRODUCTION

The equipment used in modern ocean-going vessels can be roughly divided into two types: navigation aids that help the crew make the right decisions, and control equipment operated by the seafarers. To reduce running costs and the human factors behind accidents, unmanned vessels with autonomous perception and decision-making are the future direction of development. This requires a model or system that receives data from the navigation aids, makes appropriate decisions based on those data, and executes them through the control equipment to navigate autonomously. Heavy seas may interrupt satellite communication and cause a loss of remote-control capability. To achieve long-distance, completely autonomous unmanned merchant ship transportation, the system must be able to make its own decisions at any time in response to emergencies, change the established strategy, and eliminate the danger.

As shown in Figure 1, the own ship is sailing along the coast; the target ships are denoted T1 to T3, and T* denotes a group of fishing boats operating in one area. Short-distance path replanning may be necessary, especially in extreme navigation environments, and without remote assistance from shore the unmanned ship must rely on limited information to make appropriate decisions (Liu and Bucknall, 2015). From the bridge, the target ships and their trajectories are not easily confirmed and presented in three dimensions. Furthermore, the relative position of each target ship with respect to the own ship is critical for training decision-making neural networks, so it is important to construct enough encounter scenarios to train large-scale unsupervised decision models. Another problem for unsupervised learning is determining how to generate a new path when no human participates in decision-making, which places high demands on the model.
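The relative positional relationship between the own ship and each target can be reduced to simple per-target features such as range and relative bearing. The following is a minimal sketch, not the authors' method, assuming positions are already expressed in a flat local east/north frame in metres (an approximation valid only over short distances); the `Target` class, the `relative_position` function, and the example coordinates for T1 to T3 are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Target:
    """Hypothetical target record in a local east/north frame (metres)."""
    name: str
    east_m: float
    north_m: float


def relative_position(own_east, own_north, own_heading_deg, target):
    """Return (range in metres, relative bearing in degrees) from own ship to target.

    True bearing is measured clockwise from north; relative bearing is measured
    clockwise from the own ship's heading. Assumes a flat local frame.
    """
    dx = target.east_m - own_east
    dy = target.north_m - own_north
    rng = math.hypot(dx, dy)
    true_bearing = math.degrees(math.atan2(dx, dy)) % 360.0
    relative_bearing = (true_bearing - own_heading_deg) % 360.0
    return rng, relative_bearing


if __name__ == "__main__":
    # Hypothetical positions for T1 to T3; own ship at the origin, heading east (090).
    targets = [Target("T1", 0.0, 1000.0), Target("T2", 1000.0, 0.0), Target("T3", -500.0, -500.0)]
    for t in targets:
        r, b = relative_position(0.0, 0.0, 90.0, t)
        print(f"{t.name}: range {r:.0f} m, relative bearing {b:.0f} deg")
```

Features like these, stacked for every target in a scene, are one possible numeric counterpart to the image data discussed in the rest of the paper.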

First, we review the available models built using unsupervised methods. The most straightforward idea is to estimate the sample distribution p(x) from the training set and then sample from p(x) to generate new samples “similar to the training set”.
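For a low-dimensional quantity, this estimate-then-sample idea can be as simple as fitting a Gaussian by maximum likelihood and drawing new values from it. The sketch assumes a hypothetical one-dimensional training set (for example, observed target-ship speeds in knots); it only illustrates the idea and does not scale to high-dimensional data such as images.

```python
import random
import statistics


def fit_gaussian(samples):
    """Maximum-likelihood estimates (mean, standard deviation) of a 1-D Gaussian."""
    mu = statistics.fmean(samples)
    sigma = statistics.pstdev(samples, mu)  # population std. dev. is the ML estimate
    return mu, sigma


def sample_gaussian(mu, sigma, n, seed=0):
    """Draw n new samples from N(mu, sigma^2) with a reproducible generator."""
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(n)]


if __name__ == "__main__":
    speeds_kn = [11.8, 12.4, 12.1, 11.5, 12.9, 12.3]  # hypothetical training set
    mu, sigma = fit_gaussian(speeds_kn)
    new_speeds = sample_gaussian(mu, sigma, 5)
    print(f"mu={mu:.2f}, sigma={sigma:.2f}, samples={[round(s, 2) for s in new_speeds]}")
```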

Zhang, R. and Furusho, M.

Using the CGAN Model Extend Encounter Targets Image Training Set.

DOI: 10.5220/0007676803270332

In Proceedings of the 5th International Conference on Vehicle Technology and Intelligent Transport Systems (VEHITS 2019), pages 327-332

ISBN: 978-989-758-374-2

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Figure 1: Real sea encounter environment.

For low-dimensional samples, a simple probabilistic model with only a few parameters (such as a Gaussian) can be fitted to p(x), whereas this is difficult for high-dimensional samples such as images. In an extreme navigation environment, the sensor inputs may be encoded low-dimensional information or high-dimensional information such as images. A classic approach is the Restricted Boltzmann Machine (RBM), an undirected graphical model in which the probability of a configuration of nodes is an exponential function of its energy (Nair and Hinton, 2010). The training set is used to fit the coefficients of the nodes and edges of the graph so that they express the relationships between individual elements of x and the elements connected to them. This approach is cumbersome and computationally expensive, and the Markov chains used for sampling mix very slowly (Neal, 2000). Deep belief networks (DBNs), which combine a single RBM with several directed layers, suffer from the same computational cost (Hinton et al., 2006).
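The exponential relationship between energy and probability, and the reason the approach is computationally heavy, can be seen in a toy binary RBM. This sketch assumes the usual textbook energy E(v, h) = -a·v - b·h - vᵀWh with arbitrary parameter values. The normalizing constant Z is computed here by brute-force enumeration over 2^(n_v + n_h) states, which is only feasible for a handful of units; practical RBM training avoids computing Z exactly and relies on sampling-based approximations instead.

```python
import itertools
import math


def energy(v, h, a, b, W):
    """Binary RBM energy E(v, h) = -a.v - b.h - v^T W h."""
    e = -sum(ai * vi for ai, vi in zip(a, v))
    e -= sum(bj * hj for bj, hj in zip(b, h))
    e -= sum(v[i] * W[i][j] * h[j] for i in range(len(v)) for j in range(len(h)))
    return e


def partition_function(a, b, W):
    """Z = sum over all binary (v, h) of exp(-E(v, h)); cost grows as 2**(len(a) + len(b))."""
    return sum(
        math.exp(-energy(v, h, a, b, W))
        for v in itertools.product((0, 1), repeat=len(a))
        for h in itertools.product((0, 1), repeat=len(b))
    )


def joint_probability(v, h, a, b, W):
    """p(v, h) = exp(-E(v, h)) / Z: probability is exponential in the negative energy."""
    return math.exp(-energy(v, h, a, b, W)) / partition_function(a, b, W)


if __name__ == "__main__":
    a, b = [0.1, -0.2, 0.3], [0.0, 0.5]          # arbitrary visible / hidden biases
    W = [[0.4, -0.1], [0.2, 0.3], [-0.5, 0.6]]    # arbitrary 3x2 weight matrix
    print("p(v=101, h=01) =", joint_probability((1, 0, 1), (0, 1), a, b, W))
```

Adding one visible or hidden unit doubles the number of terms in Z, which is the scaling problem that motivates the alternatives discussed next.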

Another popular method is the use of convolutional neural networks (CNNs). Although CNNs achieve strong results in supervised learning tasks, including classification and segmentation, how to use them for unsupervised learning has long been an open problem (Wang and Gupta, 2015). Generative adversarial nets (GANs) offer a systematic solution to this problem.

2 PREVIOUS WORK

2.1 Generative Adversarial Nets (GAN)

GAN is a method for training generative models proposed by Goodfellow et al. (2014). It consists of two “adversarial” models: a generative model (G) that captures the data distribution, and a discriminative model (D) that estimates the probability that a sample comes from the real data rather than from the generator. Both the generator and the discriminator are typically convolutional or fully connected networks. The generator produces a sample from a random vector, and the discriminator distinguishes between generated samples and training-set samples. The two are trained simultaneously on the value function in equation (1):

$$\min_G \max_D V(D,G) = \mathbb{E}_{x\sim p_{data}(x)}\left[\log D(x)\right] + \mathbb{E}_{z\sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right] \tag{1}$$

When training the generator, the discriminator D is fixed, while the parameters of G are adjusted to minimize the expectation of $\log(1 - D(G(z)))$, as shown in equation (2):

$$\min_G \mathbb{E}_{z\sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right] \tag{2}$$

When training the discriminator, the generator G is fixed, while the parameters of D are adjusted to maximize the expectation of $\log D(x) + \log(1 - D(G(z)))$, as shown in equation (3):

$$\max_D \mathbb{E}_{x\sim p_{data}(x)}\left[\log D(x)\right] + \mathbb{E}_{z\sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right] \tag{3}$$
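In practice, the expectations in equations (1) to (3) are estimated by averages over a minibatch. The sketch below assumes the discriminator outputs are already available as probabilities in (0, 1); the function names and the clipping constant `eps` are illustrative choices, not part of the original formulation.

```python
import math


def _mean_log(values, eps=1e-12):
    # Clip away from zero so log() never receives 0.
    return sum(math.log(max(v, eps)) for v in values) / len(values)


def gan_value(d_real, d_fake):
    """Minibatch estimate of V(D, G) in equation (1).

    d_real: D(x) on real samples x ~ p_data; d_fake: D(G(z)) on generated samples.
    """
    return _mean_log(d_real) + _mean_log([1.0 - p for p in d_fake])


def discriminator_loss(d_real, d_fake):
    """D maximizes V (equation (3)); expressed as a loss to minimize, V is negated."""
    return -gan_value(d_real, d_fake)


def generator_loss(d_fake):
    """G minimizes E[log(1 - D(G(z)))] (equation (2)); the real-data term does not depend on G."""
    return _mean_log([1.0 - p for p in d_fake])
```

At the global optimum of the game, D outputs 1/2 everywhere and V equals -log 4 (about -1.386), which `gan_value([0.5], [0.5])` reproduces. Goodfellow et al. (2014) also note that G is often trained to maximize log D(G(z)) instead, because equation (2) saturates early in training when D rejects generated samples with high confidence.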

This optimization can be framed as a “two-player minimax game”, and both objectives can be optimized by backpropagation. A well-trained generator can transform any noise vector into a sample similar to the training set; the noise vector can be regarded as an encoding of the sample in a low-dimensional space. The generator produces meaningful data from random vectors, while the discriminator learns to distinguish real from generated data and passes this learning experience back to the generator through its gradients, enabling the generator to produce more realistic data from random vectors. Such a trained generator has many uses, one of which is environment generation for automatic navigation. This paper examines the feasibility of this method and applies it to image set generation so that unmanned ships can achieve fully autonomous decision-making.

2.2 Generated Image Set Data

Acquiring real data on critical sea conditions is difficult, so acquiring data similar to real scenes is important, especially when data are scarce. Following the concept used in this study, when acquiring data for training an automatic driving system, GANs can be used to replace real images with virtual images produced by the generator. Yang et al. (2018) took the opposite approach: scene images acquired directly through real driving were translated, by unsupervised learning, into a simplified canonical representation in the virtual domain that removes details irrelevant to predicting driving behavior. Driving commands were then predicted from this representation, yielding a training procedure that is more efficient and accurate.

Unmanned surface navigation is a form of driverless navigation. The deep Q-network (DQN) algorithm can be used to train an unmanned ship navigation model (Mnih et al., 2015), and this is where GANs can find wider application. Training data derived from sensor data collected during real navigation, as in Goodwin (1975), reflect the fact that seafarers in real navigation invariably maintain a sufficient safety distance. Therefore, when an unmanned ship encounters a dangerous situation, the experience available for replay is insufficient: when the ship meets a sea state that differs from typical conditions, it is difficult to make appropriate decisions and behavioral judgments based on previous training results (Schaul et al., 2015).
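Prioritized experience replay (Schaul et al., 2015) is one standard way to make rare transitions count more. In its proportional variant, transition i is sampled with probability P(i) = p_i^α / Σ_k p_k^α, where p_i = |δ_i| + ε and δ_i is the TD error. The sketch below uses hypothetical TD errors for 999 routine transitions and one near-collision transition; it shows that reweighting raises the share of the critical case, but it can only replay situations that were actually recorded. The importance-sampling correction of the original method is omitted for brevity.

```python
import random


def replay_probabilities(td_errors, alpha=0.6, eps=1e-6):
    """Proportional prioritization: P(i) = p_i**alpha / sum_k p_k**alpha, p_i = |delta_i| + eps."""
    scaled = [(abs(d) + eps) ** alpha for d in td_errors]
    total = sum(scaled)
    return [s / total for s in scaled]


def sample_batch(probs, batch_size, seed=0):
    """Sample transition indices (with replacement) according to probs."""
    rng = random.Random(seed)
    return rng.choices(range(len(probs)), weights=probs, k=batch_size)


if __name__ == "__main__":
    # Hypothetical TD errors: 999 routine transitions and one rare near-collision transition.
    td_errors = [0.01] * 999 + [5.0]
    probs = replay_probabilities(td_errors)
    print(f"uniform P = {1 / len(td_errors):.4f}, prioritized P(critical) = {probs[-1]:.4f}")
    batch = sample_batch(probs, 64)
    print("critical transition drawn", batch.count(999), "times in a batch of 64")
```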

The purpose of the present study is to use a GAN model to generate additional data on critical sea conditions from a small amount of real maritime navigation data recorded in critical situations. Such data may correspond to pre-collision scenes between two ships (including pictures, Automatic Identification System (AIS) data, and radar data). They may also correspond to the scene before a ship runs aground in waters of insufficient depth, where the most important factor is the water-depth data. The GAN algorithm is used to solve the problem that the DQN algorithm cannot learn from experience when such data are sparse.

3 VIRTUAL TRAINING IMAGE SET GENERATION MODEL

3.1 Conditional GANs

The conditional GAN (CGAN) is an extension of the original GAN in which both the generator and the discriminator are conditioned on additional information y. Here, y can be any kind of information, such as category labels or data from other modalities, as shown in equation (4) (Mirza and Osindero, 2014). If the condition variable y is a category label, CGAN can be regarded as a supervised improvement on the purely unsupervised GAN. This simple and straightforward improvement has proven very effective and has been widely used in subsequent related work (Denton et al., 2015; Radford et al., 2015).

The conditional GAN is implemented by feeding the additional information y to both the discriminator and the generator as part of the input layer. In the generator, the prior input noise z ~ p_z(z) and the condition information y are combined to form a joint hidden-layer representation. The adversarial training framework is quite flexible in how this hidden representation is composed. Similarly, the objective function of the conditional GAN is a “conditional two-player minimax game”, as shown in equation (4):

$$\min_G \max_D V(D,G) = \mathbb{E}_{x\sim p_{data}(x)}\left[\log D(x \mid y)\right] + \mathbb{E}_{z\sim p_z(z)}\left[\log\left(1 - D(G(z \mid y))\right)\right] \tag{4}$$
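Feeding y into both networks as part of the input layer can be sketched with plain lists. This assumes y is a class label encoded one-hot and uses the simplest composition, concatenating it directly with z (for G) or with the flattened sample x (for D); Mirza and Osindero (2014) instead first map z and y to separate hidden layers before combining them, which the framework equally allows. The class names are hypothetical.

```python
import random

# Hypothetical condition classes for target ships.
CLASSES = ["lifeboat", "ocean liner", "fishing boat"]


def one_hot(label_index, num_classes):
    """Encode a class index as a one-hot condition vector y."""
    y = [0.0] * num_classes
    y[label_index] = 1.0
    return y


def generator_input(z, label_index, num_classes=len(CLASSES)):
    """Joint input [z, y] for G(z | y)."""
    return list(z) + one_hot(label_index, num_classes)


def discriminator_input(x_flat, label_index, num_classes=len(CLASSES)):
    """Joint input [x, y] for D(x | y); the same kind of y accompanies real and generated x."""
    return list(x_flat) + one_hot(label_index, num_classes)


if __name__ == "__main__":
    rng = random.Random(0)
    z = [rng.gauss(0.0, 1.0) for _ in range(100)]   # prior noise z ~ N(0, I)
    g_in = generator_input(z, CLASSES.index("lifeboat"))
    print(len(g_in), g_in[-3:])
```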

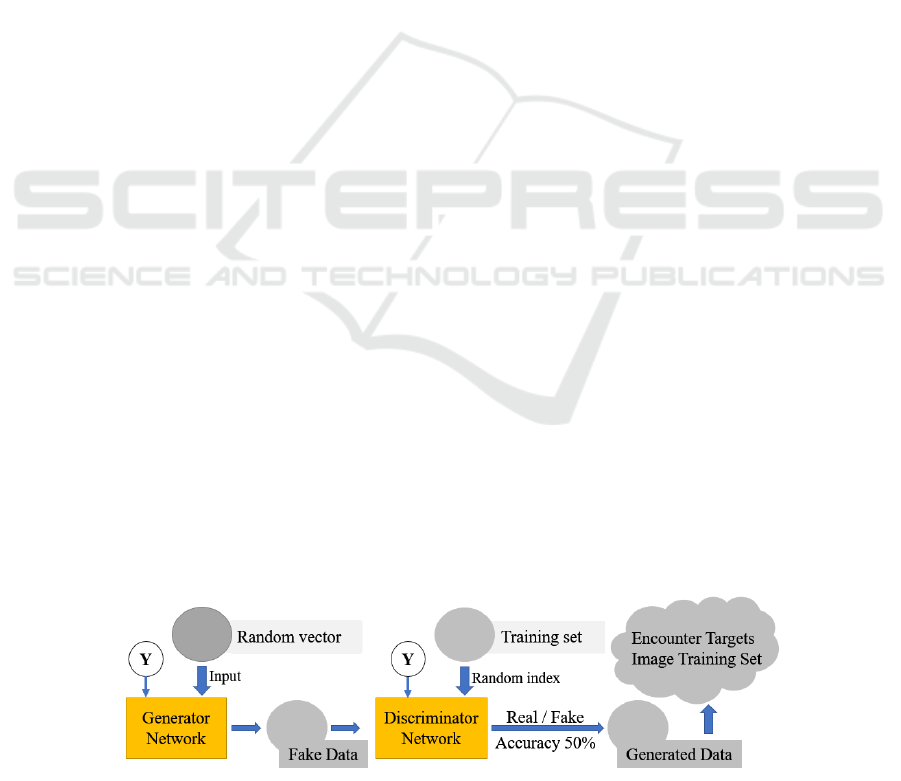

3.2 The Process of CGAN in Sea-Scene-Environment Construction

Figure 2: Applying CGAN algorithm to generate encounter targets image training set.

Figure 3: Generated lifeboat from submarine by Big-GAN.

The process for obtaining various sea-condition scenarios is shown in Figure 2. First, condition information y is fed into both the generator and the discriminator. Random vectors are then input to the generator network, which produces fake data. These fake data can be ship-state pictures or other navigation data, such as AIS data of nearby ships or path-planning data after the ship's route is updated. The fake data are then input to the discriminator, which compares them with randomly drawn real data and judges whether each input is real or produced by the generator. As the data produced by the generator become progressively more similar to the real data, greater discriminating ability is required of the discriminator; the generator and discriminator thus share a mutually competitive, adversarial relationship. The generated data are considered to mirror the real data sufficiently when the generator's output appears realistic enough that the discriminator's accuracy is approximately 50%, that is, no better than chance. Such data correspond to the sea-scene data required for critical sea situations.
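The 50% criterion has a theoretical basis in Goodfellow et al. (2014): for a fixed generator with density p_g, the best possible discriminator is D*(x) = p_data(x) / (p_data(x) + p_g(x)), so once p_g matches p_data, D*(x) = 1/2 everywhere and no discriminator can beat chance. A minimal sketch, assuming one-dimensional Gaussian densities as stand-ins for the real and generated data (for illustration only):

```python
import math


def normal_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2) at x."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def optimal_discriminator(x, p_data, p_g):
    """D*(x) = p_data(x) / (p_data(x) + p_g(x)) for a fixed generator density p_g."""
    pd, pg = p_data(x), p_g(x)
    return pd / (pd + pg)


def p_data(x):
    return normal_pdf(x, 0.0, 1.0)  # stand-in for the real-data distribution


def poor_generator_density(x):
    return normal_pdf(x, 2.0, 1.0)  # generator far from the data


def matched_generator_density(x):
    return normal_pdf(x, 0.0, 1.0)  # generator that matches the data


if __name__ == "__main__":
    for x in (-1.0, 0.0, 1.0):
        print(x,
              round(optimal_discriminator(x, p_data, poor_generator_density), 3),
              optimal_discriminator(x, p_data, matched_generator_density))
```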

3.3 A Case Study for Generated Image

An example of CGAN image generation is shown in Figure 3. This section demonstrates how the Big-GAN model can generate lifeboat images from images of submarines taken from different angles. Big-GAN was proposed by Andrew Brock of Heriot-Watt University and colleagues (Brock et al., 2018). Their modifications focus on three aspects: (a) conditioning is improved by applying orthogonal regularization to the generator; (b) this orthogonal regularization makes the model amenable to the “truncation trick”, so that the trade-off between fidelity and variety can be finely controlled by truncating the latent space; and (c) training stability, which is critical for large-scale image generation, is analyzed and addressed.
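Both ideas from Brock et al. (2018) are small enough to sketch. The truncation trick draws z from a standard normal and resamples any component whose magnitude exceeds a threshold, so smaller thresholds trade variety for fidelity. The orthogonal regularization they use is R_β(W) = β‖WᵀW ⊙ (1 − I)‖²_F, which penalizes only the off-diagonal entries of WᵀW. The pure-Python versions below are illustrative only: `beta` defaults to 1e-4 here as an example value, and real implementations operate on tensors inside a deep learning framework.

```python
import random


def truncated_noise(dim, threshold=0.5, seed=0):
    """Truncation trick: sample z ~ N(0, I), resampling components with |z_i| > threshold."""
    rng = random.Random(seed)
    z = []
    for _ in range(dim):
        value = rng.gauss(0.0, 1.0)
        while abs(value) > threshold:
            value = rng.gauss(0.0, 1.0)
        z.append(value)
    return z


def orthogonal_penalty(W, beta=1e-4):
    """R_beta(W) = beta * ||W^T W * (1 - I)||_F^2, i.e. only off-diagonal Gram entries count.

    W is a list of rows; (W^T W)[i][j] is the dot product of columns i and j.
    """
    n_cols = len(W[0])
    penalty = 0.0
    for i in range(n_cols):
        for j in range(n_cols):
            if i != j:
                gram_ij = sum(row[i] * row[j] for row in W)
                penalty += gram_ij ** 2
    return beta * penalty


if __name__ == "__main__":
    z = truncated_noise(128, threshold=0.5)
    print("max |z_i| =", max(abs(v) for v in z))
    print("penalty:", orthogonal_penalty([[1.0, 1.0], [0.0, 1.0]], beta=1.0))
```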

Figure 4: Generate lifeboat images by Big-GAN model.

Figure 5: A portion of the generated lifeboat image set.

Figure 6: A portion of the generated ocean liner image set.

By inputting different random vectors and combining them with the real data fed to the discriminator, we can obtain large, sufficiently realistic image data sets. In this study, the input vectors correspond to submarines. As shown in Figure 4, we obtain three different types of lifeboats; more importantly, the background environments of the lifeboats also vary. This can provide a large amount of training data for our unsupervised decision model, much richer than the target ship data collected from the real sea environment.

4 APPLICATION

4.1 Generated Target Image Set

The most important goal of this study is to obtain a target ship data set of sufficient quality and quantity. Figure 5 shows a portion of the large-scale lifeboat target image data set. These images were not taken by a camera; they were generated entirely by our GAN model. Using the model, we can generate various sea scenes and the various forms of target that the own ship may encounter, including various types of accidents such as collisions, stranding, fire, and loss of cargo. Beyond the lifeboat training set, the model can produce the ocean liner data set shown in Figure 6, as well as data sets for various other types of marine moving targets.
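Assembling such per-class data sets from a trained conditional generator amounts to looping over condition labels and noise draws. In this sketch the `generator(z, label)` callable is a hypothetical stand-in for a trained CGAN or Big-GAN generator, and `stub_generator` returns a tiny array filled from the noise rather than a real ship image, only so that the example runs.

```python
import random


def build_conditional_dataset(generator, labels, n_per_label, z_dim, seed=0):
    """Collect (image, label) pairs by querying a conditional generator once per noise draw.

    `generator(z, label)` is a hypothetical callable standing in for a trained CGAN.
    """
    rng = random.Random(seed)
    dataset = []
    for label in labels:
        for _ in range(n_per_label):
            z = [rng.gauss(0.0, 1.0) for _ in range(z_dim)]
            dataset.append((generator(z, label), label))
    return dataset


def stub_generator(z, label):
    """Placeholder generator: a 4x4 array filled from z, NOT a real ship image (label unused)."""
    return [[z[(r * 4 + c) % len(z)] for c in range(4)] for r in range(4)]


if __name__ == "__main__":
    data = build_conditional_dataset(stub_generator, ["lifeboat", "ocean liner"], n_per_label=3, z_dim=16)
    print(len(data), [label for _, label in data])
```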

4.2 Generated Data for Unsupervised Decision Model Training

GANs are easy to embed in a reinforcement learning framework. For example, when a Deep Q-Network is used to solve collision avoidance problems, a GAN can be used to learn a probability distribution conditioned on the action, and the agent can then select reasonable images according to how the generative model responds to different actions.
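One simple way, not necessarily the authors' approach, to let a DQN-style learner benefit from generated scenes is to draw each training minibatch partly from a buffer of GAN-generated transitions. The buffers, the transition format, and the `synthetic_fraction` value below are hypothetical.

```python
import random


def mixed_minibatch(real_buffer, synthetic_buffer, batch_size, synthetic_fraction=0.25, seed=0):
    """Draw a minibatch in which a fixed share comes from GAN-generated transitions.

    Items are sampled with replacement from each buffer and the result is shuffled.
    If the synthetic buffer is empty, the whole batch comes from real data.
    """
    rng = random.Random(seed)
    n_syn = round(batch_size * synthetic_fraction) if synthetic_buffer else 0
    n_real = batch_size - n_syn
    synthetic = rng.choices(synthetic_buffer, k=n_syn) if n_syn else []
    real = rng.choices(real_buffer, k=n_real)
    batch = real + synthetic
    rng.shuffle(batch)
    return batch


if __name__ == "__main__":
    # Hypothetical transitions: (state_id, action, reward, next_state_id)
    real = [(f"s{i}", 0, 0.0, f"s{i + 1}") for i in range(100)]
    generated = [(f"g{i}", 1, -10.0, f"g{i + 1}") for i in range(20)]
    batch = mixed_minibatch(real, generated, 32)
    print(sum(1 for t in batch if t[0].startswith("g")), "of", len(batch), "transitions are generated")
```

A fixed mixing ratio keeps rare, generated critical encounters present in every update without letting them dominate the real experience; the appropriate ratio would have to be found empirically.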

When training CNN image recognition models, and decision models such as deep reinforcement learning agents, the quality of the input data strongly affects the training results. The images in the target data set generated by CGAN all share the same size and resolution, which avoids the problem of inconsistent input data during training. In addition, the CGAN model supplies many scenes that are difficult to obtain in a real navigation environment, making large-scale data input for deep learning possible.
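A consistency check of this kind is cheap to run before training. The sketch assumes images are nested lists of pixel values and that the generator ends in a tanh layer, so outputs lie in [-1, 1] and are rescaled to [0, 1]; both are common conventions but are assumptions here, and the function names are hypothetical.

```python
def common_shape(images):
    """Return the shared (height, width) of 2-D images, or raise ValueError on a mismatch."""
    if not images:
        raise ValueError("no images supplied")
    height, width = len(images[0]), len(images[0][0])
    for k, img in enumerate(images):
        if len(img) != height or any(len(row) != width for row in img):
            raise ValueError(f"image {k} does not match {height}x{width}")
    return height, width


def tanh_to_unit(img):
    """Rescale pixel values from [-1, 1] (tanh output) to [0, 1]."""
    return [[(v + 1.0) / 2.0 for v in row] for row in img]


if __name__ == "__main__":
    batch = [[[-1.0, 0.0], [0.5, 1.0]] for _ in range(4)]  # hypothetical 2x2 generated images
    print(common_shape(batch), tanh_to_unit(batch[0]))
```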

5 CONCLUSIONS

This paper uses conditional generative adversarial networks to generate image sets of target ships, improving the quantity and quality of training data. The data on the own ship's surroundings obtained by its sensors mainly include AIS data, radar data, and image data. Small vessels, especially in some areas, do not transmit AIS data, and radar data are strongly affected by the weather. Training autonomous unmanned ships therefore depends on the support of image data sets, especially images of small ships in various states. This paper takes target ship images obtained from the perspective of the ship's bridge as samples and uses the CGAN algorithm to generate more target ship images of the same type to support model training. Given different condition information, the CGAN model can generate images of different environmental states, such as different near-shore backgrounds, different levels of urban light pollution, different weather conditions, different seasons, and different ocean wave levels. This method can greatly expand the quantity and quality of the training data set, making it easier to build a better autonomous unsupervised decision model.

REFERENCES

Brock, A., Donahue, J., and Simonyan, K. (2018): Large scale GAN training for high fidelity natural image synthesis. arXiv preprint arXiv:1809.11096.

Denton, E. L., Chintala, S., and Fergus, R. (2015): Deep

generative image models using a Laplacian pyramid of

adversarial networks. In Advances in neural

information processing systems.

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B.,

Warde-Farley, D., Ozair, S., ... and Bengio, Y. (2014):

Generative adversarial nets. In Advances in neural

information processing systems.

Goodwin, E. (1975): A statistical study of ship domains.

The Journal of Navigation.

Hinton, G. E., Osindero, S., and Teh, Y. W. (2006): A fast

learning algorithm for deep belief nets. Neural

computation.

Liu, Y. and Bucknall, R. (2015): Path planning algorithm

for unmanned surface vehicle formations in a practical

maritime environment. Ocean Engineering.

Mirza, M., and Osindero, S. (2014): Conditional generative

adversarial nets. arXiv preprint arXiv:1411.1784.

Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A.,

Veness, J., Bellemare, M. G., ... and Petersen, S. (2015):

Human-level control through deep reinforcement

learning. Nature.

Nair, V. and Hinton, G. E. (2010): Rectified linear units

improve restricted Boltzmann machines. In

Proceedings of the 27th international conference on

machine learning (ICML-10).

Neal, R. M. (2000): Markov chain sampling methods for

Dirichlet process mixture models. Journal of

computational and graphical statistics.

Radford, A., Metz, L., and Chintala, S. (2015): Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434.

Schaul, T., Quan, J., Antonoglou, I., and Silver, D. (2015):

Prioritized experience replay. arXiv preprint

arXiv:1511.05952.

Wang, X. and Gupta, A. (2015): Unsupervised learning of

visual representations using videos. In Proceedings of

the IEEE International Conference on Computer

Vision.

Yang, L., Liang, X., and Xing, E. (2018): Unsupervised

Real-to-Virtual Domain Unification for End-to-End

Highway Driving. arXiv preprint arXiv:1801.03458.
