A Hybrid Neural Network and Hidden Markov Model for Time-aware Recommender Systems

Hanxuan Chen and Zuoquan Lin*

Department of Information Science, School of Mathematical Sciences, Peking University, Beijing 100871, China

Keywords:

Hidden Markov Model, Neural Network, Collaborative Filtering, Recommender Systems.

Abstract:

In this paper, we propose a hybrid model that combines a neural network with a hidden Markov model for time-aware recommender systems. We use a higher-order hidden Markov model to capture the temporal information of users and items in collaborative filtering systems. Because the transition matrix of a higher-order hidden Markov model is expensive to compute, we compute the transitions with deep neural networks instead. We implement algorithms for the hybrid model for both offline batch learning and online updating. Experiments on real datasets demonstrate that the hybrid model outperforms existing recommender systems.

1 INTRODUCTION

Recommender systems help users find interesting items among a large number of products. Time-aware recommender systems (TARS) (Campos et al., 2014) exploit temporal information and track the evolution of users and items, which is beneficial for giving satisfactory recommendations. Hidden Markov models (HMMs) and neural networks (NNs) are two major approaches to TARS.

As a probabilistic approach, HMMs use hidden states to describe the dynamics of users and items (Sahoo et al., 2012). In the literature, recommender systems based on HMMs commonly use first-order HMMs, i.e., each hidden state depends only on the immediately preceding state. In real-world recommendation, however, users' interests have long-term dependencies. For example, on an online shopping website, customers who buy maternity dresses are likely to look for baby articles several months later. If the transitions of the hidden states carry no long-term effect, this propagation of interest will be masked by the more frequent purchases of daily necessities. Higher-order HMMs (HOHMMs) are a natural way to model long-term dependencies. However, recommender systems that depend on HOHMMs are impractical, since the cost of computing the state transitions is exponential in the length of the dependencies.

* Corresponding author

With the development of deep learning, NNs have attracted much attention in recommender systems in recent years (Zhang et al., 2017). Recurrent neural networks (RNNs) are suitable for sequential data with long-term dependencies and have been successful in natural language processing (Hochreiter and Schmidhuber, 1997; Cho et al., 2014). There are a number of recommender systems based on RNNs (Hidasi et al., 2016a; Hidasi et al., 2016b; Jannach and Ludewig, 2017; Chatzis et al., 2017; Wu et al., 2017; Soh et al., 2017; Devooght and Bersini, 2016; Chen et al., 2018). They concentrate on sessions or on the behavior sequences of users, and use RNNs to model this sequential data. Although RNNs can handle sequences of user behavior with long-term dependencies, they have several shortcomings compared with HMMs. Firstly, most RNNs use the order of the users' behaviors but neglect the time span between behaviors. Secondly, RNNs have no overall time axis that indicates the actual time point of each behavior, so they cannot describe the temporal relations between the behaviors of multiple users. Thirdly, using a single RNN to model the sequences of all users makes the model lack personalization. Finally, the hidden states of HMMs have a meaning that supports the analysis of user types, while those of RNNs do not.

In this paper, we propose a hybrid model, NHM, that combines an NN and an HMM for time-aware recommender systems. We use an HOHMM to capture the


Chen, H. and Lin, Z.

A Hybrid Neural Network and Hidden Markov Model for Time-aware Recommender Systems.

DOI: 10.5220/0007380402040213

In Proceedings of the 11th International Conference on Agents and Artificial Intelligence (ICAART 2019), pages 204-213

ISBN: 978-989-758-350-6

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

temporal information of both users and items in collaborative filtering. Because the transition matrix of an HOHMM is expensive to compute, we replace the transition matrix with an NN. The hybrid model takes advantage of both NNs and HOHMMs, and improves the efficiency and precision of recommendations. We implement algorithms for NHM for both offline batch learning and online updating. Experiments on real datasets show that the hybrid approach outperforms existing recommender systems.

The rest of this paper is organized as follows. In Section 2, we present the hybrid model NHM. In Section 3, we apply NHM to collaborative filtering and provide algorithms for the recommendation routines. In Section 4, we report the experimental performance of our algorithms. In Section 5, we discuss related work. Finally, we draw conclusions in Section 6.

2 THE HYBRID MODEL

2.1 Model

In what follows, we present the hybrid model NHM. We introduce the temporal state random variable $X_t$ and the temporal evidence random variable $E_t$, whose possible values are in $\{1, \ldots, N\}$ and $\{1, \ldots, K\}$ respectively. Let these variables follow the HOHMM assumptions:

$$
\begin{aligned}
P(X_t \mid X_{t-1}, X_{t-2}, \ldots, E_{t-1}, E_{t-2}, \ldots)
&= P(X_t \mid X_{t-1}, X_{t-2}, \ldots, X_{t-L}) \\
&= P(X_0 \mid X_{-1}, X_{-2}, \ldots, X_{-L}),
\end{aligned} \tag{1}
$$

$$
\begin{aligned}
P(E_t \mid X_t, X_{t-1}, X_{t-2}, \ldots, E_{t-1}, E_{t-2}, \ldots)
&= P(E_t \mid X_t) \\
&= P(E_0 \mid X_0),
\end{aligned} \tag{2}
$$

where $L$ is the order. In an HOHMM, a transition matrix with $N^L$ rows is needed to describe $P(X_t \mid X_{t-1}, X_{t-2}, \ldots, X_{t-L})$ in (1), one row for each of the $N^L$ possible value combinations of $X_{t-1}, X_{t-2}, \ldots, X_{t-L}$.

We use a neural network to replace the transition matrix in order to reduce the computational cost. Suppose the marginal distributions of $X$ at the previous $L$ time points are given as

$$P(X_{t-i}) = \vec{x}_{t-i}, \quad i = 1, 2, \ldots, L. \tag{3}$$

Then $P(X_t)$ is defined as

$$P(X_t) = \varphi(\vec{x}_{t-1}, \vec{x}_{t-2}, \ldots, \vec{x}_{t-L}), \tag{4}$$

where $\varphi(\cdot)$ is a neural network whose input and output are vectors of dimension $LN$ and $N$ respectively. The output satisfies $0 \le \varphi_j \le 1$ and $\sum_{j=1}^{N} \varphi_j = 1$.

Note that we do not specify the type or structure of the neural network $\varphi(\cdot)$. It can be a multilayer perceptron, an RNN, or any NN that can handle such input and output. For instance, $\varphi$ can be implemented with a GRU (Cho et al., 2014) as follows:

$$
\begin{aligned}
h_{t-L} &= \mathrm{GRU}(\vec{x}_{t-L}, \vec{0}), \\
h_i &= \mathrm{GRU}(\vec{x}_i, h_{i-1}), \quad t - L < i \le t, \\
\varphi &= \mathrm{softmax}(\vec{h}_t).
\end{aligned} \tag{5}
$$
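The contract that (4) places on $\varphi$ (an input of dimension $LN$, an output of dimension $N$ that is a probability vector) can be illustrated with a minimal NumPy sketch. This is an illustration under assumptions, not the implementation used in the paper: the one-hidden-layer perceptron, the hidden width, the random untrained weights, and the names `make_phi` and `table_rows` are all hypothetical.

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax; the result is non-negative and sums to 1.
    e = np.exp(z - np.max(z))
    return e / e.sum()

def make_phi(N, L, hidden=16, seed=0):
    """Return an untrained stand-in for phi: R^{L*N} -> probability vector in R^N."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(scale=0.1, size=(hidden, L * N))  # hypothetical hidden layer
    W2 = rng.normal(scale=0.1, size=(N, hidden))      # hypothetical output layer

    def phi(*xs):
        v = np.concatenate(xs)                 # stack the L previous distributions
        return softmax(W2 @ np.tanh(W1 @ v))   # softmax enforces the constraints on phi_j

    return phi

N, L = 4, 3
table_rows = N ** L                            # rows an explicit HOHMM transition table would need
phi = make_phi(N, L)
prev = [np.full(N, 1.0 / N) for _ in range(L)]  # x_{t-1}, ..., x_{t-L}
out = phi(*prev)
```

With $N = 4$ and $L = 3$ the explicit table already needs $4^3 = 64$ rows, while the size of the network input grows only linearly in $L$.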

The observation and initial-state distributions of NHM are defined with a matrix and a vector, as usual in HMMs:

$$P(E_t = k \mid X_t = j) = B_{j,k}, \tag{6}$$

$$P(X_t = i) = \pi_i, \quad -L \le t < 0. \tag{7}$$

2.2 Inference

The inference task is to find the conditional distribution $P(X_t \mid E_{0:T-1} = \vec{e})$, given an evidence sequence $\vec{e} = [e_0, e_1, \ldots, e_{T-1}]$. To do this, we use a neural-network-approximated forward-backward algorithm. It imitates the procedure of the forward-backward algorithm of HMMs (Rabiner, 1989), and calculates an approximate $\gamma(t) = P(X_t \mid E_{0:T-1} = \vec{e})$.

Firstly, we take the forward steps:

$$
\alpha(t) =
\begin{cases}
\pi, & -L \le t < 0, \\
\varphi(\alpha'(t-1), \ldots, \alpha'(t-L)), & 0 \le t;
\end{cases} \tag{8}
$$

where

$$\alpha'(t) = \mathrm{normalize}(\alpha(t) \odot B_{:,e_t}). \tag{9}$$

The symbol $\odot$ denotes the element-wise product of two vectors, and $B_{:,e_t}$ denotes the $e_t$-th column of the matrix $B$. If there is no evidence at $t$ (for $t < 0$ or $t \ge T$), $B_{:,e_t}$ is replaced by $\mathrm{normalize}(\vec{1})$. The function $\mathrm{normalize}(\cdot)$ is defined as follows:

$$\mathrm{normalize}(\vec{v}) = \frac{\vec{v}}{\sum_{j=1}^{N} |v_j|}. \tag{10}$$

Then, we take the backward steps:

$$
\beta(t) =
\begin{cases}
\mathrm{normalize}(\vec{1}), & T \le t < T + L, \\
\psi(\beta'(t+1), \ldots, \beta'(t+L)), & t < T;
\end{cases} \tag{11}
$$

where

$$\beta'(t) = \mathrm{normalize}(\beta(t) \odot B_{:,e_t}). \tag{12}$$

$\psi(\cdot)$ is another neural network whose input and output have the same dimensions as those of $\varphi(\cdot)$. We call it the reverse-sequence neural network of $\varphi$. Intuitively, if $\varphi$ is the function $(\vec{v}_L, \vec{v}_{L-1}, \ldots, \vec{v}_1) \to \vec{v}_{L+1}$, then $\psi$ is the function $(\vec{v}_2, \vec{v}_3, \ldots, \vec{v}_{L+1}) \to \vec{v}_1$.

Finally, we obtain $\gamma$ as follows:

$$\gamma(t) = \mathrm{normalize}(\alpha'(t) \odot \beta(t)). \tag{13}$$

Another inference task is to find the distribution at the next time point:

$$\gamma(T) = P(X_T \mid E_{0:T-1} = \vec{e}) = \varphi(\gamma(T-1), \ldots, \gamma(T-L)). \tag{14}$$

By the above equation, we can find $\gamma(T+1)$ from $\gamma(T), \ldots, \gamma(T-L+1)$, and hence any $\gamma(t)$ for $t > T$.
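The forward, backward and combination steps (8) to (13) can be sketched in a few lines of NumPy. This is a minimal sketch under assumptions: `phi` and `psi` are passed in as arbitrary callables, and the averaging function `mean_net` used in the demo is only a hypothetical stand-in for trained networks, chosen because it maps distributions to a distribution.

```python
import numpy as np

def normalize(v):
    # Equation (10): divide by the sum of absolute values.
    return v / np.sum(np.abs(v))

def forward_backward(phi, psi, pi, B, e, L):
    """Approximate gamma(t) for 0 <= t < T, following (8)-(13)."""
    N, T = len(pi), len(e)
    uniform = np.full(N, 1.0 / N)               # normalize(1), used where no evidence exists
    # Forward pass: alpha'(t) for -L <= t < T.
    a_p = {t: normalize(pi * uniform) for t in range(-L, 0)}
    for t in range(T):
        a = phi(*[a_p[t - l] for l in range(1, L + 1)])
        a_p[t] = normalize(a * B[:, e[t]])
    # Backward pass: beta(t) and beta'(t) for 0 <= t < T + L.
    b = {t: uniform for t in range(T, T + L)}
    b_p = {t: normalize(uniform * uniform) for t in range(T, T + L)}
    for t in range(T - 1, -1, -1):
        b[t] = psi(*[b_p[t + l] for l in range(1, L + 1)])
        b_p[t] = normalize(b[t] * B[:, e[t]])
    # Combination step (13).
    return np.array([normalize(a_p[t] * b[t]) for t in range(T)])

# Hypothetical stand-in for phi and psi: average the L input distributions.
mean_net = lambda *xs: np.mean(xs, axis=0)

B = np.array([[0.7, 0.2, 0.1],
              [0.1, 0.3, 0.6]])                 # N = 2 states, K = 3 evidence values
pi = np.array([0.5, 0.5])
gamma = forward_backward(mean_net, mean_net, pi, B, e=[0, 2, 2], L=2)
```

Each row of `gamma` is a distribution over the $N$ states at one time point. Replacing `mean_net` with trained networks leaves the rest of the procedure unchanged.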

2.3 Learning

We now provide the learning algorithm of NHM. Suppose that we have a set of evidence sequences $ES = \{\vec{e}^{(1)}, \vec{e}^{(2)}, \ldots, \vec{e}^{(R)}\}$, where $\vec{e}^{(r)} = [e^{(r)}_0, e^{(r)}_1, \ldots, e^{(r)}_{T^{(r)}-1}]$. We need to learn the parameters $\theta = \{\varphi, \psi, \pi, B\}$.

The learning algorithm alternates between inference and updating. At first, we use some initial parameters $\theta$ in the inference steps and calculate $z = \{\alpha, \alpha', \beta, \beta', \gamma\}$ for every evidence sequence. Then we use $z$ in the updating steps to find better parameters $\theta^*$. Finally, we use $\theta^*$ in the inference steps again, and repeat until we find satisfactory parameters.

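The updating steps of $\pi$ and $B$ follow the Baum-Welch algorithm of HMMs (Rabiner, 1989):

$$\pi^* = \mathrm{normalize}\Big(\sum_{r=1}^{R} \sum_{t=-L}^{-1} \gamma^{(r)}(t)\Big), \tag{15}$$

$$B^*_{j,k} = \frac{\sum_{r=1}^{R} \sum_{t=0}^{T^{(r)}-1} \mathbb{1}_{e^{(r)}_t = k}\, \gamma^{(r)}_j(t)}{\sum_{r=1}^{R} \sum_{t=0}^{T^{(r)}-1} \gamma^{(r)}_j(t)}; \tag{16}$$

where

$$
\mathbb{1}_{e^{(r)}_t = k} =
\begin{cases}
1, & e^{(r)}_t = k, \\
0, & \text{else.}
\end{cases} \tag{17}
$$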
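A minimal NumPy sketch of the updates (15) and (16) is given below. The array layout is an assumption made for illustration: `gammas[r][t]` holds $\gamma^{(r)}(t)$ for $0 \le t < T^{(r)}$, and `gamma_pre[r]` holds the $L$ vectors $\gamma^{(r)}(t)$ for $-L \le t < 0$. The function names are hypothetical.

```python
import numpy as np

def update_B(gammas, seqs, K):
    """Equation (16): expected counts of evidence k in state j, divided by state occupancy."""
    N = gammas[0].shape[1]
    num = np.zeros((N, K))
    den = np.zeros(N)
    for g, e in zip(gammas, seqs):
        for t, k in enumerate(e):
            num[:, k] += g[t]      # the indicator in (17) selects column k
            den += g[t]
    return num / den[:, None]

def update_pi(gamma_pre):
    """Equation (15): normalized sum of gamma over the L time points before t = 0."""
    total = sum(g.sum(axis=0) for g in gamma_pre)
    return total / total.sum()

gammas = [np.array([[0.9, 0.1],
                    [0.2, 0.8]])]   # one sequence, T = 2, N = 2
seqs = [[0, 1]]                     # evidence e_0 = 0, e_1 = 1
B_new = update_B(gammas, seqs, K=2)
pi_new = update_pi([np.array([[0.6, 0.4], [0.4, 0.6]])])
```

Each row of `B_new` sums to one by construction, because the numerator of (16) summed over $k$ equals its denominator.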

To update the neural networks $\varphi$ and $\psi$, we need to build training sets for them. The sampling method is as follows: we first select a random $\vec{e}^{(r)} \in ES$, then select a random $t$ such that $0 \le t < T^{(r)}$. According to the input and expected output of $\varphi$ and $\psi$, we add the following two examples to their training sets, respectively:

$$(\alpha'^{(r)}(t-1), \alpha'^{(r)}(t-2), \ldots, \alpha'^{(r)}(t-L)) \to \gamma^{(r)}(t), \tag{18}$$

$$(\beta'^{(r)}(t+1), \beta'^{(r)}(t+2), \ldots, \beta'^{(r)}(t+L)) \to \gamma^{(r)}(t). \tag{19}$$

After building the training sets, we call a standard training algorithm for these neural networks.
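The sampling of one training pair for each network, following (18) and (19), might look as follows. The storage convention is an assumption for this sketch: `alpha_p[t + L]` holds $\alpha'(t)$ for $-L \le t < T$, and `beta_p[t]` holds $\beta'(t)$ for $0 \le t < T + L$. The function name `sample_examples` is hypothetical.

```python
import random
import numpy as np

def sample_examples(alpha_p, beta_p, gamma, L, rng):
    """Draw one random t and return the (input, target) pairs for phi and psi."""
    T = len(gamma)
    t = rng.randrange(T)                                   # 0 <= t < T
    # (18): alpha'(t-1), ..., alpha'(t-L) -> gamma(t); the index is shifted by L.
    phi_in = np.concatenate([alpha_p[t - l + L] for l in range(1, L + 1)])
    # (19): beta'(t+1), ..., beta'(t+L) -> gamma(t).
    psi_in = np.concatenate([beta_p[t + l] for l in range(1, L + 1)])
    return (phi_in, gamma[t]), (psi_in, gamma[t])

T, L, N = 3, 2, 2
alpha_p = np.full((T + L, N), 0.5)   # placeholder values standing in for inference results
beta_p = np.full((T + L, N), 0.5)
gamma = np.full((T, N), 0.5)
phi_example, psi_example = sample_examples(alpha_p, beta_p, gamma, L, random.Random(0))
```

Both networks share the same target $\gamma(t)$; only the direction from which the $L$ context vectors are taken differs.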

3 RECOMMENDER SYSTEM

3.1 Model

In what follows, we use NHM to build a recommender system model based on collaborative filtering. Let $RS = \langle User, Item, Time, Level, Rating \rangle$ be a recommender system, where

• $User$ is the set of users, and $user$ (or $u$) $\in User$ is a user.

• $Item$ is the set of items, and $item$ (or $i$) $\in Item$ is an item.

• $Time = \mathbb{Z}$ is the set of time points, where $\mathbb{Z}$ is the set of integers.

• $Level = \{1, 2, \ldots, N\}$ is the set of rating levels, where $N$ is a given integer.

• $Rating$ is the set of ratings, where $rating = (user, item, time, level)$ (or $r = (u, i, t, l)$) $\in Rating$ is a rating, which means that $user$ gives $item$ the rating $level$ at $time$.

We make recommendations by analyzing the similarity of both users and items. We introduce user types and item types to describe the common properties of users and items respectively. Users of the same type have similar tastes. There are $J$ user types and $K$ item types, where $J$ and $K$ are given integers. Every user (or item) has a type at a specific time. Users and items change over time, and so do their types. We use the temporal random variables $X_{user,t}$ and $Y_{item,t}$ to represent their types at time $t$ respectively. For ratings, we define a random variable $R_{user,item,t}$ for each triplet $(user, item, t)$. Given some known ratings, the variables $R_{user,item,t}$ are (partially) observed, while $X_{user,t}$ and $Y_{item,t}$ are hidden states. Namely, we have the following definitions:

• $UserType = \{1, 2, \ldots, J\}$ is the set of user types.

• $ItemType = \{1, 2, \ldots, K\}$ is the set of item types.

• $X_{user,t} \in UserType$ is the random variable for the user's type at $t$.

• $Y_{item,t} \in ItemType$ is the random variable for the item's type at $t$.

• $R_{user,item,t} \in Level$ is the random variable for the rating that the user gives the item at $t$.

For example, consider a recommender system where $User = \{u_1, u_2, u_3\}$, $Item = \{i_1, i_2\}$, $N = 5$, $Rating = \{(u_1, i_1, 1, 5), (u_2, i_2, 3, 3), (u_1, i_2, 5, 1), (u_3, i_1, 6, 4)\}$, $J = 2$ and $K = 3$. There are three user random variables $X_{u_1,t}$, $X_{u_2,t}$ and $X_{u_3,t}$ whose possible values are in $UserType = \{1, 2\}$. The two item random variables $Y_{i_1,t}$ and $Y_{i_2,t}$ take values in $ItemType = \{1, 2, 3\}$. There are four observed rating random variables: $R_{u_1,i_1,1} = 5$, $R_{u_2,i_2,3} = 3$, $R_{u_1,i_2,5} = 1$ and $R_{u_3,i_1,6} = 4$.

We now consider how users and items generate ratings. The probability $p_{j,k}$ is associated with a user of the $j$-th type meeting an item of the $k$-th type. We use the binomial distribution $B(N-1, p_{j,k})$ to convert $p_{j,k}$ into discrete ratings:

$$
\begin{aligned}
P(R_{u,i,t} = n \mid X_{u,t} = j, Y_{i,t} = k)
&= \Pr(n-1;\, N-1,\, p_{j,k}) \\
&= \binom{N-1}{n-1} (p_{j,k})^{n-1} (1 - p_{j,k})^{N-n}.
\end{aligned} \tag{20}
$$
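As a worked instance of (20), the short sketch below evaluates the rating distribution for $N = 5$ levels and an illustrative value $p_{j,k} = 0.75$ (the value is an assumption chosen for the example, and `rating_pmf` is a hypothetical helper name).

```python
from math import comb

def rating_pmf(N, p):
    """Equation (20): P(R = n) for n = 1..N, a binomial B(N-1, p) shifted by one."""
    return [comb(N - 1, n - 1) * p ** (n - 1) * (1 - p) ** (N - n)
            for n in range(1, N + 1)]

pmf = rating_pmf(5, 0.75)
most_likely = max(range(1, 6), key=lambda n: pmf[n - 1])    # rating level with highest probability
expected = sum(n * q for n, q in zip(range(1, 6), pmf))     # equals 1 + (N - 1) * p
```

Since the mean of $B(N-1, p)$ is $(N-1)p$, the expected rating is $1 + (N-1)p_{j,k}$, so a larger $p_{j,k}$ shifts the whole distribution towards higher rating levels.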

The transitions of $X_{u,t}$ and $Y_{i,t}$ are described by two $L$-order NHMs whose parameters are $\theta = \{\varphi, \psi, \pi\}$ and $\tilde{\theta} = \{\tilde{\varphi}, \tilde{\psi}, \tilde{\pi}\}$ respectively, where $L$ is a given integer, $\theta$ is shared by all users and $\tilde{\theta}$ is shared by all items. There is no matrix $B$ in $\theta$ because $p_{j,k}$ plays the role of generating evidence.

For users, we assume that user $u$ has ratings at $M(u)$ time points $t_1 < t_2 < \ldots < t_{M(u)}$. For $1 \le m \le M(u)$, if we know $P(X_{u,t_{m-l}}) = \vec{x}_{u,t_{m-l}}$, $l = 1, 2, \ldots, L$, then $P(X_{u,t_m})$ is given by

$$P(X_{u,t_m}) = \varphi(t_m - t_{m-1}, \vec{x}_{u,t_{m-1}}, \ldots, t_m - t_{m-L}, \vec{x}_{u,t_{m-L}}). \tag{21}$$

Compared with (4), we make an adjustment here. We only consider the time points at which the user has ratings, since in a recommender system there are many time points at which the user has none. To indicate the actual time span between $t_m$ and $t_{m-l}$, we add $L$ dimensions to the input of $\varphi$. For $t_l$ with index $l \le 0$, we set $t_l = t_1 - \tau$ and $\vec{x}_{u,t_l} = \pi$, where $\tau$ is a small given time span.

Similarly, for $Y_{i,t}$, if item $i$ is rated at $M(i)$ time points $t_1 < t_2 < \ldots < t_{M(i)}$ and we know $P(Y_{i,t_{m-l}}) = \vec{y}_{i,t_{m-l}}$, $l = 1, 2, \ldots, L$, for $1 \le m \le M(i)$, then $P(Y_{i,t_m})$ is

$$P(Y_{i,t_m}) = \tilde{\varphi}(t_m - t_{m-1}, \vec{y}_{i,t_{m-1}}, \ldots, t_m - t_{m-L}, \vec{y}_{i,t_{m-L}}). \tag{22}$$

3.2 Inference

Consider a user $u$ who has ratings at $M(u)$ time points $t_1 < t_2 < \ldots < t_{M(u)}$. At time point $t_m$, the user gives $S(u, t_m)$ ratings. These ratings are given to items $i_1, i_2, \ldots, i_{S(u,t_m)}$ with levels $n_1, n_2, \ldots, n_{S(u,t_m)}$, respectively.

Firstly, we calculate the conditional probability that a type-$j$ user gives these $S(u, t_m)$ ratings at $t_m$, which is denoted as $b_{u,t_m,j}$. By (20), we have

$$
\begin{aligned}
b_{u,t_m,j}
&= P(R_{u,i_1,t_m} = n_1, \ldots, R_{u,i_{S(u,t_m)},t_m} = n_{S(u,t_m)} \mid X_{u,t_m} = j) \\
&= \prod_{s=1}^{S(u,t_m)} P(R_{u,i_s,t_m} = n_s \mid X_{u,t_m} = j) \\
&= \prod_{s=1}^{S(u,t_m)} \sum_{k=1}^{K} \Pr(n_s - 1;\, N-1,\, p_{j,k})\, P(Y_{i_s,t_m} = k).
\end{aligned} \tag{23}
$$

The vector $b_{u,t_m,:} = (b_{u,t_m,1}, \ldots, b_{u,t_m,J})$ gives, for each user type, the probability that the user generates these ratings at $t_m$. It plays the role of $B_{:,e_t}$ in Section 2.2.
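The evidence vector $b_{u,t_m,:}$ of (23) can be computed as a product over the ratings at $t_m$ of a matrix-vector product over item types. The sketch below assumes that the item-type distributions $P(Y_{i_s,t_m} = k)$ are supplied as vectors; the names `user_evidence` and `binom_pmf` and the example values of $p_{j,k}$ are hypothetical.

```python
import numpy as np
from math import comb

def binom_pmf(n, N, p):
    # Pr(n - 1; N - 1, p) as in equation (20).
    return comb(N - 1, n - 1) * p ** (n - 1) * (1 - p) ** (N - n)

def user_evidence(levels, item_type_probs, P, N):
    """Equation (23): b[j] = prod_s sum_k Pr(n_s - 1; N - 1, P[j, k]) * P(Y_{i_s} = k)."""
    J, K = P.shape
    b = np.ones(J)
    for n, y in zip(levels, item_type_probs):
        lik = np.array([[binom_pmf(n, N, P[j, k]) for k in range(K)]
                        for j in range(J)])    # J x K likelihood of rating n
        b *= lik @ y                           # marginalize over the item type
    return b

P = np.array([[0.9, 0.5],
              [0.1, 0.5]])                     # illustrative p_{j,k}: J = 2, K = 2
b = user_evidence(levels=[5], item_type_probs=[np.array([1.0, 0.0])], P=P, N=5)
```

In this example a single top rating of 5 on an item known to be of type 1 is far more likely under user type 1 ($0.9^4$) than under user type 2 ($0.1^4$), which is what drives the posterior over user types in the forward-backward steps.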

Algorithm 1 is the forward-backward algorithm for users. When we refer to $t_l$ with index $l < 1$, we set $t_l = t_1 - \tau$ and $\alpha'_u(t_l) = \pi$. For $t_l$ with index $l > M(u)$, we set $t_l = t_{M(u)} + \tau$ and $\beta'_u(t_l) = \mathrm{normalize}(\vec{1})$.

Algorithm 1: Forward-backward algorithm for users.

function Inference_User($u$)
    for $m = 1$ to $M(u)$ do
        $\alpha_u(t_m) \leftarrow \varphi(t_m - t_{m-1}, \alpha'_u(t_{m-1}), \ldots, t_m - t_{m-L}, \alpha'_u(t_{m-L}))$
        $\alpha'_u(t_m) \leftarrow \mathrm{normalize}(\alpha_u(t_m) \odot b_{u,t_m,:})$
    for $m = M(u)$ to $1$ do
        $\beta_u(t_m) \leftarrow \psi(t_{m+1} - t_m, \beta'_u(t_{m+1}), \ldots, t_{m+L} - t_m, \beta'_u(t_{m+L}))$
        $\beta'_u(t_m) \leftarrow \mathrm{normalize}(\beta_u(t_m) \odot b_{u,t_m,:})$
    for $m = 1$ to $M(u)$ do
        $\gamma_u(t_m) \leftarrow \mathrm{normalize}(\alpha'_u(t_m) \odot \beta_u(t_m))$

The inference steps for items are similar to those for users. Consider an item $i$ that is rated at $M(i)$ time points and has $S(i, t_m)$ ratings at $t_m$. These ratings come from users $u_1, \ldots, u_{S(i,t_m)}$ with levels $n_1, \ldots, n_{S(i,t_m)}$. Then, we have

$$b_{i,t_m,k} = \prod_{s=1}^{S(i,t_m)} \sum_{j=1}^{J} \Pr(n_s - 1;\, N-1,\, p_{j,k})\, P(X_{u_s,t_m} = j). \tag{24}$$

The forward-backward algorithm Inference_Item($i$) for items is the same as Algorithm 1 except for the function name, the function input and the indexes.


The inference steps are taken separately for each user and each item. When we run them for user $u$, we assume that the probability $P(Y_{i_s,t_m} = k)$ in (23) is known. Similarly, $P(X_{u_s,t_m} = j)$ in (24) is assumed to be known in the inference steps of item $i$. In practice, we use $P(X_{u,t} = j) = (\gamma_u(t))_j$ and $P(Y_{i,t} = k) = (\gamma_i(t))_k$, where $(\cdot)_j$ denotes the $j$-th element of a vector. We first initialize $\gamma_u$ and $\gamma_i$, then take the inference steps for $u$ and $i$ alternately to update them.

3.3 Learning

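We update the parameters $\theta = \{\varphi, \psi, \pi\}$, $\tilde{\theta} = \{\tilde{\varphi}, \tilde{\psi}, \tilde{\pi}\}$ and $p_{j,k}$ with the $\alpha$, $\beta$ and $\gamma$ that we calculate in the inference steps. The parameter $p_{j,k}$ is updated according to the parameter estimation of the binomial distribution, where each rating is weighted by the probability that the user has type $j$ and the item has type $k$:

$$p^*_{j,k} = \frac{\sum_{(u,i,t,l) \in Rating} (l-1)\, (\gamma_u(t))_j\, (\gamma_i(t))_k}{\sum_{(u,i,t,l) \in Rating} (N-1)\, (\gamma_u(t))_j\, (\gamma_i(t))_k}. \tag{25}$$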
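The update (25) is a weighted binomial estimate and can be sketched with an outer product per rating. The sketch reads the weights in (25) as the $j$-th element of $\gamma_u(t)$ times the $k$-th element of $\gamma_i(t)$, which is an interpretation of the formula; the dictionary layout keyed by `(user, time)` and `(item, time)` and the function name `update_p` are assumptions.

```python
import numpy as np

def update_p(ratings, gamma_u, gamma_i, N):
    """Equation (25): weighted ratio of observed (l - 1) to the maximum (N - 1)."""
    num, den = 0.0, 0.0
    for u, i, t, l in ratings:
        w = np.outer(gamma_u[(u, t)], gamma_i[(i, t)])   # J x K weights for this rating
        num = num + (l - 1) * w
        den = den + (N - 1) * w
    return num / den

uniform2 = np.array([0.5, 0.5])
ratings = [("u1", "i1", 1, 5), ("u1", "i1", 2, 1)]       # one top rating, one bottom rating
gamma_u = {("u1", 1): uniform2, ("u1", 2): uniform2}
gamma_i = {("i1", 1): uniform2, ("i1", 2): uniform2}
p_new = update_p(ratings, gamma_u, gamma_i, N=5)
```

With equal weights, one rating of 5 and one rating of 1 average to $p_{j,k} = 0.5$ for every type pair, matching the binomial mean $1 + (N-1)p = 3$.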

The prior $\pi$ is updated as the normalized sum of the distributions of all users at $t_{-L+1}, \ldots, t_0$. To find $\gamma(t_l)$ with $l \le 0$, we take several additional backward steps to find $\beta$ and then $\gamma$:

$$\pi^* = \mathrm{normalize}\Big(\sum_{u \in User} \sum_{l=-L+1}^{0} \gamma_u(t_l)\Big). \tag{26}$$

In practice, a fixed $\pi = \mathrm{normalize}(\vec{1})$ often performs well, because $\varphi$ can generate the first $L$ values of $\alpha(t_l)$ for $l > 0$ from $\mathrm{normalize}(\vec{1})$.

The networks $\varphi$ and $\psi$ are trained with a standard training algorithm for neural networks, as usual in deep learning. We only need to build the training sets for $\varphi$ and $\psi$. To sample examples, we first select a random user $u \in User$, then select a random $t_m$ from the $M(u)$ time points at which the user generates ratings. The following two examples are added to the training sets of $\varphi$ and $\psi$, respectively:

$$(\alpha'_u(t_{m-1}), \ldots, \alpha'_u(t_{m-L})) \to \gamma_u(t_m), \tag{27}$$

$$(\beta'_u(t_{m+1}), \ldots, \beta'_u(t_{m+L})) \to \gamma_u(t_m). \tag{28}$$

The updating of $\tilde{\pi}$ and the sampling of training examples for $\tilde{\varphi}$ and $\tilde{\psi}$ are the same as those for $\pi$, $\varphi$ and $\psi$ except for the indexes.

3.4 Algorithms

We provide the algorithms for the routines of the model NHM, including batch learning, online updating and prediction; see Algorithm 2.

The batch learning algorithm learns the model from a set of training ratings. It first initializes $\theta$, $\tilde{\theta}$, $p_{j,k}$, $\gamma_u(t)$, $\gamma_i(t)$ and an empty training set for each neural network. Then it runs the inference-learning iterations for a given number of loops. In the inference steps, we randomly select a $u \in User$ or an $i \in Item$, and call the Inference_User or Inference_Item function to update $\gamma$. In the learning steps, we calculate $p_{j,k}$, $\pi$ and $\tilde{\pi}$, build the training set for each neural network and train the networks with their standard training algorithm.

The online updating algorithm updates the model when it receives a rating $r = (u, i, t, l)$. We take the inference steps only for the user and the item that are related to this rating. Then we update $p_{j,k}$, $\pi$ and $\tilde{\pi}$ with (25) and (26). The first equation is a fraction of two sums over $Rating$, and the second is a sum over $User$ (or $Item$). We only need to subtract the previous contributions of the updated rating, user or item from these sums and add their new contributions; the sums do not need to be recalculated. For the neural networks, we sample some examples from $u$ and $i$, and update $\varphi$, $\psi$, $\tilde{\varphi}$ and $\tilde{\psi}$ with these examples, i.e., we run only several steps of the training algorithm of the neural networks on them.

The prediction algorithm predicts the rating that a user $u$ will give to an item $i$ at time $t$. We first calculate $\gamma_u(t)$ and $\gamma_i(t)$ from the $\gamma$ stored in the model:

$$\gamma_u(t) = \varphi(t - t_{M(u)}, \gamma_u(t_{M(u)}), \ldots, t - t_{M(u)-L+1}, \gamma_u(t_{M(u)-L+1})), \tag{29}$$

$$\gamma_i(t) = \tilde{\varphi}(t - t_{M(i)}, \gamma_i(t_{M(i)}), \ldots, t - t_{M(i)-L+1}, \gamma_i(t_{M(i)-L+1})). \tag{30}$$

Then we use $\gamma_u(t)$ and $\gamma_i(t)$ to calculate $P(R_{u,i,t} = n)$, which is denoted as $q_{u,i,t,n}$. The algorithm returns the vector $q_{u,i,t,:} = (q_{u,i,t,1}, \ldots, q_{u,i,t,N})$ as follows:

$$q_{u,i,t,n} = \sum_{j=1}^{J} \sum_{k=1}^{K} (\gamma_u(t))_j\, (\gamma_i(t))_k\, \Pr(n-1;\, N-1,\, p_{j,k}). \tag{31}$$
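Equation (31) is a bilinear form in $\gamma_u(t)$ and $\gamma_i(t)$ for each rating level, which the following sketch evaluates with NumPy. Turning the returned distribution into a single predicted rating by taking its expectation is an assumption made here for illustration; the text only specifies the vector $q_{u,i,t,:}$. The function name `predict_distribution` is hypothetical.

```python
import numpy as np
from math import comb

def predict_distribution(gu, gi, P, N):
    """Equation (31): q[n-1] = sum_j sum_k gu[j] * gi[k] * Pr(n - 1; N - 1, P[j, k])."""
    q = np.zeros(N)
    for n in range(1, N + 1):
        pmf = comb(N - 1, n - 1) * P ** (n - 1) * (1 - P) ** (N - n)   # J x K
        q[n - 1] = gu @ pmf @ gi
    return q

P = np.array([[0.75, 0.25],
              [0.25, 0.75]])                   # illustrative p_{j,k}
gu = np.array([1.0, 0.0])                      # user known to be of type 1
gi = np.array([1.0, 0.0])                      # item known to be of type 1
q = predict_distribution(gu, gi, P, N=5)
expected_rating = float(np.arange(1, 6) @ q)   # assumed point prediction
```

Because each $\Pr(\cdot\,;\, N-1,\, p_{j,k})$ sums to one over $n$ and the two $\gamma$ vectors are distributions, `q` is itself a distribution over the $N$ rating levels.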

4 EXPERIMENTS

4.1 Setup

We conduct three kinds of experiments to test the performance in different environments.

Classical experiment (CL): The ratings in the datasets are randomly divided into a training set (80%) and a test set (20%). The algorithms are trained on the training set and then predict the ratings in the test set.


Algorithm 2: Model routines.

function BatchLearning($Rating$)
    initialize $\theta = \{\varphi, \psi, \pi\}$, $\tilde{\theta} = \{\tilde{\varphi}, \tilde{\psi}, \tilde{\pi}\}$, $p_{j,k}$
    initialize $\gamma_u(t)$, $\gamma_i(t)$
    initialize empty training sets for $\varphi$, $\psi$, $\tilde{\varphi}$, $\tilde{\psi}$
    for $l_1 = 1, 2, \ldots, LoopNum1$ do
        for $l_2 = 1, 2, \ldots, LoopNum2$ do
            select a random $u \in User$
            Inference_User($u$)
            select a random $i \in Item$
            Inference_Item($i$)
        calculate $p_{j,k}$, $\pi$, $\tilde{\pi}$ with (25) and (26)
        build each training set with (27) and (28)
        train $\varphi$, $\psi$, $\tilde{\varphi}$, $\tilde{\psi}$ with these training sets

function OnlineUpdating($u, i, t, l$)
    Inference_User($u$)
    Inference_Item($i$)
    update $p_{j,k}$, $\pi$, $\tilde{\pi}$ with (25) and (26)
    sample examples from $u$ and $i$ with (27) and (28)
    update $\varphi$, $\psi$, $\tilde{\varphi}$, $\tilde{\psi}$ with these examples

function Prediction($u, i, t$)
    calculate $\gamma_u(t)$ with (29)
    calculate $\gamma_i(t)$ with (30)
    for $n = 1, 2, \ldots, N$ do
        calculate $q_{u,i,t,n}$ with (31)
    return $q_{u,i,t,:}$

Time-order Experiment (TO): The ratings in the datasets are sorted by the time at which they were generated. We take the first 80% as the training set and the last 20% as the test set. This is a reasonable setup for time-related recommender systems, because it ensures that the algorithms predict the future from the past.
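The time-order split can be sketched as a sort followed by a cut. The tuple layout `(user, item, time, level)` follows the definition of a rating in Section 3.1; the function name `time_order_split` and the rounding of the cut index are assumptions.

```python
def time_order_split(ratings, train_frac=0.8):
    """Sort ratings by timestamp, then use the earliest part for training."""
    ordered = sorted(ratings, key=lambda r: r[2])   # r = (user, item, time, level)
    cut = int(len(ordered) * train_frac)
    return ordered[:cut], ordered[cut:]

ratings = [("u1", "i1", 1, 5), ("u3", "i1", 6, 4), ("u2", "i2", 3, 3),
           ("u1", "i2", 5, 1), ("u2", "i1", 9, 2)]
train, test = time_order_split(ratings)
```

Unlike the random split of the classical experiment, every test rating here is at least as late as every training rating.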

Time-order Online Experiment (TOO): The ratings in the datasets are sorted by the time at which they were generated and fed to the algorithms one by one. For every rating, the algorithms are first required to give their predicted rating, and then update their parameters with the newly received rating. This method simulates real-world online recommendation applications and is suitable for evaluating time-aware and online algorithms.

The algorithms are evaluated by the RMSE (root mean square error) and MAE (mean absolute error) of their predictions for the ratings in the test sets.
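For reference, the two scores can be computed as in the following sketch, which uses the standard definitions of RMSE and MAE; the toy predictions are made up for illustration only.

```python
import numpy as np

def rmse(pred, true):
    # Root mean square error: penalizes large errors more strongly.
    d = np.asarray(pred, dtype=float) - np.asarray(true, dtype=float)
    return float(np.sqrt(np.mean(d ** 2)))

def mae(pred, true):
    # Mean absolute error: average size of the error.
    d = np.asarray(pred, dtype=float) - np.asarray(true, dtype=float)
    return float(np.mean(np.abs(d)))

pred = [4, 3, 5]
true = [5, 3, 3]
scores = (rmse(pred, true), mae(pred, true))
```

For any set of predictions the MAE is never larger than the RMSE, so the two scores together indicate whether the error is dominated by a few large deviations.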

Test ratings that involve users or items with no ratings in the training set are not counted in the evaluation scores. In this case, the parameters of some algorithms for these users or items are undefined, or are only initialized with small random values, so the algorithms cannot provide reasonable predictions. For the same reason, in the time-order online experiments, the very first rating of every user and item (that is, when the user or item has not appeared before) is not counted in the scores.

4.2 Datasets

The experiments use the MovieLens100k dataset and the Epinions dataset, as summarized in Table 1.

MovieLens100k (ML) (Harper and Konstan, 2015) is a well-known movie dataset collected through the MovieLens website from 1997 to 1998. It consists of 100,000 ratings from 943 users on 1,682 movies, with rating levels from 1 to 5. The dataset has been cleaned up by its provider to ensure that each user has rated at least 20 movies.

Epinions (EP) (Tang et al., 2012a; Tang et al., 2012b) is an e-commerce dataset collected through the Epinions website from 1999 to 2011. It consists of users' opinions on items in 27 categories, with rating levels from 1 to 5. We preprocessed the data to produce a 20-core dataset (each user rated at least 20 items, and each item was rated by at least 20 users), which has 2,874 users, 2,624 items and 122,361 ratings. The additional information in this dataset, such as item categories or users' trust relationships, is not used in our experiments.

Table 1: Datasets.

| Dataset | Users | Items | Ratings |
|---|---|---|---|
| MovieLens100k | 943 | 1,682 | 100,000 |
| Epinions | 2,874 | 2,624 | 122,361 |

4.3 Results

We compare our algorithms for the model NHM with several representative algorithms, covering matrix factorization, neighborhood methods, hidden Markov models and neural networks.

Probabilistic Matrix Factorization (PMF) (Salakhutdinov and Mnih, 2007): a classical matrix factorization model for recommender systems. The model is batch-learning and time-independent. To apply the batch-learning model in the online experiment setting, we retrain the whole model after every 1% of the experiment process.

Time Weight Collaborative Filtering (TWCF) (Ding and Li, 2005): a time-related item-based neighborhood method. It first uses the Pearson correlation coefficient to calculate the similarity of items, and then uses an exponential time weight function together with the item similarity to make predictions. Like other neighborhood methods, the algorithm can naturally run in online experiments.

Collaborative Kalman Filter (CKF) (Gultekin and Paisley, 2014): a hidden Markov model that uses the Kalman filter to make recommendations. The model has a continuous time axis and continuous random variables for users, items and ratings. It has an online updating algorithm, and each update step uses only the most recent rating.

Recurrent Recommender Networks (RRN) (Wu et al., 2017): a recurrent neural network model. It uses two separate RNNs to model the temporal changes of users and items, and uses their inner product as the interaction between them. An additional matrix factorization model is included as the stationary component. As with PMF, we retrain this batch-learning model after every 1% of the online experiment process.

The hyperparameters of the tested algorithms are determined by grid search. Each hyperparameter is selected from a set of candidates to produce the best performance. In detail, the learning rate and regularization coefficient of PMF, RRN and NHM, and the decay rate $\lambda$ of TWCF, are selected from $\{1, 0.1, 0.01, 0.001, 0.0001\}$. Because the authors of CKF used a hyperparameter $\sigma = 1.76$ in their experiments, we select $\sigma$ from $\{0.5, 0.6, 0.7, \ldots, 2.5\}$ for CKF. We use LSTM to implement the neural networks in NHM and RRN. Another hyperparameter is the latent vector length (for PMF, CKF, RRN and NHM). Because it is directly related to the time and space cost, we set it to 10 for every algorithm for the sake of fairness.

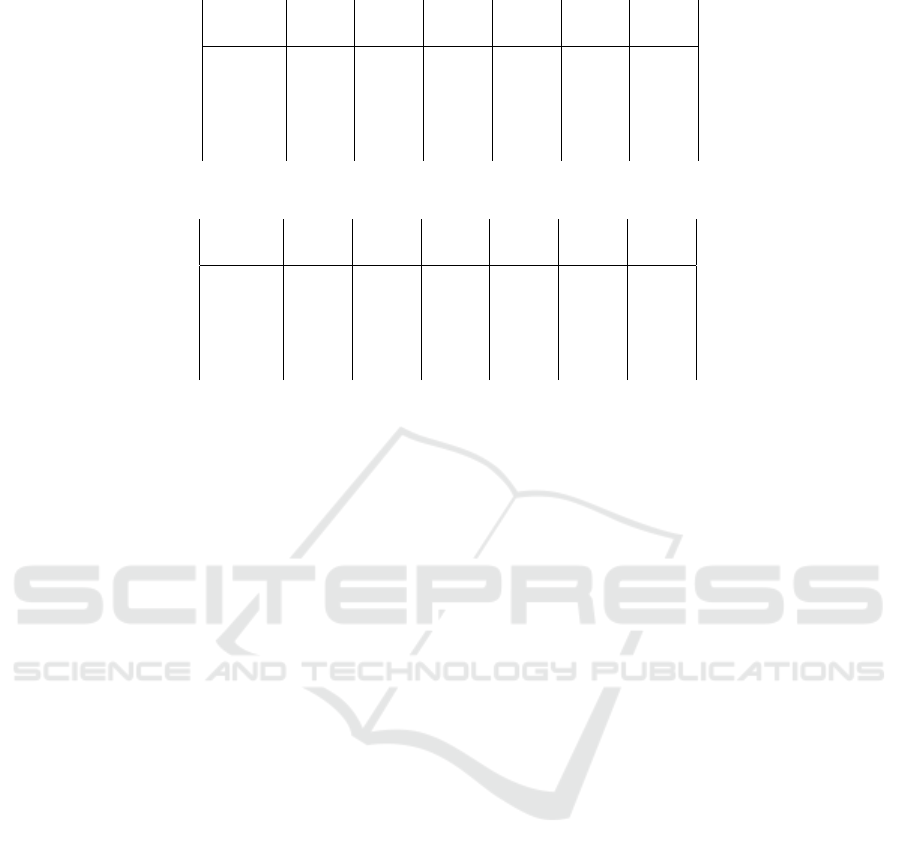

Tables 2 and 3 show the results of the experiments. The experiment settings CL, TO and TOO stand for classical experiments, time-order experiments and time-order online experiments, respectively. NHM has the best performance in most experiments. In the two experiments with classical settings, our model performs slightly better than the classical algorithm PMF, while many other time-related models fail to exceed PMF in this setting. This shows that our algorithm performs stably even in a setting that is difficult for time-aware algorithms. In the time-order experiments and time-order online experiments, our algorithm has the best performance. In the last three experiments (TO on EP, and TOO on ML and EP), our algorithm clearly improves over the other algorithms, with an RMSE roughly 0.05 to 0.13 lower than that of the best competing algorithm. Compared with the overall performance of the hidden Markov model CKF and the neural network model RRN, our model shows the advantage of integrating NNs into an HOHMM for time-aware recommendation.

5 RELATED WORK

The first-order HMM was introduced for recommender systems in (Sahoo et al., 2012). It has many applications, such as people-to-people recommendation (Alanazi and Bain, 2013; Alanazi and Bain, 2016), sport videos (Sannchez et al., 2012) and sequence pattern mining (Gu et al., 2014; Le et al., 2016). In (Zhang et al., 2016b; Zhang et al., 2016a), the authors proposed a hidden semi-Markov model for recommender systems. Their model can capture the duration for which a user stays in a state. This extends the dependency length of the first-order HMM from one time point to one staying state. However, it cannot describe long-term effects in which the user turns to another interest halfway and finally comes back. Another kind of HMM that has been applied in recommender systems is the Kalman filter (Lu et al., 2009; Paisley et al., 2010; Chang et al., 2017; Gultekin and Paisley, 2014; Sun et al., 2012; Sun et al., 2014). This approach has a continuous state space and a continuous time axis. The dependency length in this model extends to the last time point at which the user has ratings. However, it has the same problem that a long-term interest is masked by other purchases.

There is increasing interest in applying
deep learning to recommender systems, including
AE (Wang et al., 2015; Liang and Baldwin, 2015),
RBM (Salakhutdinov et al., 2007), CNN (Ding et al.,
2017) and MLP (He et al., 2017; Xue et al., 2017). We
focus on RNNs because they model time-aware recommender
systems. The sequential models (Soh et al.,
2017; Devooght and Bersini, 2016; Chen et al., 2018)
regard the user behavior history as a sequence and apply
an RNN to it. These approaches use the sequential
order of the behavior generated by users but neglect
the time span between the records. The session-based
models (Hidasi et al., 2016a; Hidasi et al., 2016b; Jannach
and Ludewig, 2017; Chatzis et al., 2017) make
recommendations on the session data generated by
users. Similar to sequential models, they use the order
of the user behavior sequence to make recommendations.
Because there is no global time axis in these two
kinds of approaches, they cannot find temporal relationships
between different users' records and cannot
describe the changes of multiple users at the same
time. In (Wu et al., 2017), a time-aware model with two
separate RNNs for users and items was introduced,
which is similar to our approach. Because RNNs do
not assign a meaning to the hidden states, it is difficult
to choose the function for the interaction between users
and items. As a result, the RNN time-aware model
has to add some stationary components, i.e., an additional
matrix factorization model, to undertake the
recommendation task.
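To illustrate the limitation of order-only models noted above, the following minimal sketch shows two users whose histories differ greatly in timing yet collapse to the same item sequence. The record layout, the helper names as_sequence and time_gaps, and the toy data are assumptions made for illustration only.

```python
from typing import List, Tuple

# Hypothetical record layout: (timestamp in seconds, item id).
Record = Tuple[int, str]

DAY = 86400  # seconds per day


def as_sequence(records: List[Record]) -> List[str]:
    """View used by sequential/session models: item order only."""
    return [item for _, item in sorted(records)]


def time_gaps(records: List[Record]) -> List[int]:
    """Elapsed time between consecutive records, which order-only models drop."""
    stamps = sorted(t for t, _ in records)
    return [later - earlier for earlier, later in zip(stamps, stamps[1:])]


# Two toy users: one rates three items within days, the other over a year.
fast_user = [(0, "a"), (1 * DAY, "b"), (2 * DAY, "c")]
slow_user = [(0, "a"), (90 * DAY, "b"), (400 * DAY, "c")]

same_order = as_sequence(fast_user) == as_sequence(slow_user)
same_gaps = time_gaps(fast_user) == time_gaps(slow_user)
print(same_order, same_gaps)
```

Both users yield the same sequence, so a model that consumes only the order cannot tell them apart, whereas a model indexed by a shared time axis can.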

ICAART 2019 - 11th International Conference on Agents and Artificial Intelligence

Table 2: RMSE values of experiments.

Setting   CL      CL      TO      TO      TOO     TOO
Dataset   ML      EP      ML      EP      ML      EP
PMF       0.925   1.043   1.023   1.149   1.062   1.206
TWCF      0.959   1.219   1.141   1.324   1.017   1.495
CKF       1.101   1.133   1.081   1.151   1.041   1.276
RRN       0.924   1.112   1.021   1.118   1.065   1.199
NHM       0.923   1.043   1.020   1.066   0.952   1.065

Table 3: MAE values of experiments.

Setting   CL      CL      TO      TO      TOO     TOO
Dataset   ML      EP      ML      EP      ML      EP
PMF       0.733   0.808   0.816   0.885   0.830   0.923
TWCF      0.756   0.958   0.891   1.004   0.794   1.108
CKF       0.865   0.911   0.833   0.895   0.816   0.995
RRN       0.936   0.862   0.829   0.848   0.837   0.929
NHM       0.728   0.803   0.808   0.812   0.756   0.823
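As a reminder of what Tables 2 and 3 measure, the sketch below computes RMSE and MAE under their standard definitions. The function names and the toy ratings are illustrative assumptions; this is not the evaluation code or data used in the experiments.

```python
import math


def rmse(predicted, actual):
    """Root mean squared error between two equal-length rating lists."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared) / len(squared))


def mae(predicted, actual):
    """Mean absolute error between two equal-length rating lists."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


# Hypothetical ratings on a 1-5 scale (not taken from the datasets).
actual = [4.0, 3.0, 5.0, 2.0]
predicted = [3.5, 3.0, 4.0, 3.0]

print(rmse(predicted, actual))  # 0.75
print(mae(predicted, actual))   # 0.625
```

Lower values are better for both metrics. RMSE squares each error before averaging, so it penalizes large errors more heavily than MAE does.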

There are works that integrate NNs and HMMs in
other research fields. In speech recognition (Bourlard
and Wellekens, 1990), the authors proposed a widely
used hybrid NN-HMM model that uses an NN to improve
the discriminating power of the HMM. In their
work, a neural network was considered as a general
form of Markov model and used to capture contextual
input information. However, their objective and model
structure are quite different from ours. In molecular
biology (Baldi and Chauvin, 1995), the authors applied
an NN to reduce the number of parameters of an HMM.
They handled the problem in which the HMM has a huge
number of hidden states and used an NN to
reduce the parameter size. They discussed the problem of
long-range dependencies, but chose multiple first-order
HMMs rather than a higher-order HMM.

6 CONCLUSIONS

We proposed NHM, a hybrid NN and HMM model
that takes advantage of both NNs and HOHMMs for
time-aware recommender systems. It can describe
long-term dependencies of both users and items, and it
gives an explainable account of the interactions between
users and items in collaborative filtering. We provided
algorithms for NHM for offline batch learning and online
updating that compare favorably with the existing
recommender systems in our experiments.

We did not specify the type or structure of the NNs in
our model, for the sake of generality. If an RNN
is used in our model to handle input sequences of
variable length, the model can be extended to
hidden Markov models of unfixed order. That
is, the order of the hidden Markov model does not
need to be assigned in advance. Other hyperparameters, such as
the numbers of user types and item types, may
likewise be adjusted automatically. We consider the
NHM approach of combining NNs and HOHMMs
to be general enough to apply to various recommender systems.
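The idea of an unfixed-order model can be sketched as follows: a recurrent network reads a history of hidden states of any length and emits a probability distribution over the next hidden state. This is a minimal illustration only. The class name RnnTransition, the one-hot encoding of states, the Elman-style cell, and the random untrained weights are all assumptions, not the architecture or training procedure of NHM.

```python
import math
import random


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class RnnTransition:
    """Hypothetical RNN that maps a state history to next-state probabilities."""

    def __init__(self, n_states, n_hidden, seed=0):
        rng = random.Random(seed)

        def rand_matrix(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)]
                    for _ in range(rows)]

        self.n_states = n_states
        self.n_hidden = n_hidden
        self.w_in = rand_matrix(n_hidden, n_states)    # input -> hidden
        self.w_rec = rand_matrix(n_hidden, n_hidden)   # hidden -> hidden
        self.w_out = rand_matrix(n_states, n_hidden)   # hidden -> output

    def next_state_distribution(self, history):
        """Consume a history of state indices of any length."""
        hidden = [0.0] * self.n_hidden
        for state in history:
            one_hot = [1.0 if i == state else 0.0
                       for i in range(self.n_states)]
            from_input = matvec(self.w_in, one_hot)
            from_past = matvec(self.w_rec, hidden)
            hidden = [math.tanh(a + b)
                      for a, b in zip(from_input, from_past)]
        return softmax(matvec(self.w_out, hidden))


model = RnnTransition(n_states=4, n_hidden=8)
short = model.next_state_distribution([2])              # order-1 history
long = model.next_state_distribution([0, 3, 3, 1, 2])   # order-5 history
print(short)
print(long)
```

The same weights serve histories of length one and length five, so no order has to be fixed beforehand. With untrained weights the outputs are arbitrary; they only demonstrate that each call returns a valid distribution over the next state.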

ACKNOWLEDGMENTS

This work is supported by the Natural Science Fund of
China under grant numbers 61672049 and 61732001.

REFERENCES

Alanazi, A. and Bain, M. (2013). A people-to-people

content-based reciprocal recommender using hidden

markov models. In Proceedings of the 7th ACM con-

ference on Recommender systems, pages 303–306.

Alanazi, A. and Bain, M. (2016). A Scalable People-to-

People Hybrid Reciprocal Recommender Using Hid-

den Markov Models. The 2nd International Workshop

on Machine Learning Methods for Recommender Sys-

tems.

Baldi, P. and Chauvin, Y. (1995). Protein modeling with

hybrid hidden markov model/neural network architec-

tures. In International Conference on Intelligent Sys-

tems for Molecular Biology.

Bourlard, H. and Wellekens, C. J. (1990). Links between

markov models and multilayer perceptrons. IEEE

Transactions on Pattern Analysis and Machine Intel-

ligence, 12(12):1167–1178.

Campos, P. G., Díez, F., and Cantador, I. (2014). Time-aware
recommender systems: A comprehensive survey
and analysis of existing evaluation protocols. User
Modeling and User-Adapted Interaction, 24(1–2):67–119.

Chang, S., Yin, D., Chang, Y., Hasegawa-Johnson, M., and

Huang, T. S. (2017). Streaming Recommender Sys-

tems. In International Conference on World Wide

Web, pages 381–389.

Chatzis, S., Christodoulou, P., and Andreou, A. S. (2017).

Recurrent latent variable networks for session-based

recommendation. In the 2nd Workshop on Deep

Learning for Recommender Systems.

Chen, X., Xu, H., Zhang, Y., Tang, J., Cao, Y., Qin, Z., and

Zha, H. (2018). Sequential recommendation with user

memory networks. In ACM International Conference

on Web Search and Data Mining, pages 108–116.

Cho, K., Van Merrienboer, B., Gulcehre, C., Bahdanau, D.,

Bougares, F., Schwenk, H., and Bengio, Y. (2014).

Learning phrase representations using rnn encoder-

decoder for statistical machine translation. Computer

Science.

Devooght, R. and Bersini, H. (2016). Collaborative filtering

with recurrent neural networks.

Ding, D., Zhang, M., Li, S. Y., Tang, J., Chen, X., and Zhou,

Z. H. (2017). Baydnn: Friend recommendation with

bayesian personalized ranking deep neural network.

In Conference on Information and Knowledge Man-

agement, pages 1479–1488.

Ding, Y. and Li, X. (2005). Time Weight Collaborative Fil-

tering. In Proceedings of the ACM International Con-

ference on Information and Knowledge Management,

pages 485–492.

Gu, W., Dong, S., and Zeng, Z. (2014). Increasing rec-

ommended effectiveness with markov chains and pur-

chase intervals. Neural Computing & Applications,

pages 1153–1162.

Gultekin, S. and Paisley, J. (2014). A Collaborative Kalman

Filter for Time-Evolving Dyadic Processes. In IEEE

International Conference on Data Mining.

Harper, F. M. and Konstan, J. A. (2015). The MovieLens

Datasets: History and Context. ACM Transactions on

Interactive Intelligent Systems.

He, X., Liao, L., Zhang, H., Nie, L., Hu, X., and Chua, T.-S.

(2017). Neural collaborative filtering. In Proceedings

of the 26th International Conference on World Wide

Web.

Hidasi, B., Karatzoglou, A., Baltrunas, L., and Tikk, D.

(2016a). Session-based recommendations with recur-

rent neural networks. In the International Conference

on Learning Representations.

Hidasi, B., Quadrana, M., Karatzoglou, A., and Tikk, D.

(2016b). Parallel recurrent neural network architec-

tures for feature-rich session-based recommendations.

In ACM Conference on Recommender Systems, pages

241–248.

Hochreiter, S. and Schmidhuber, J. (1997). Long short-term

memory. Neural Computation, 9(8):1735–1780.

Jannach, D. and Ludewig, M. (2017). When recurrent

neural networks meet the neighborhood for session-

based recommendation. In ACM Conference on Rec-

ommender Systems, pages 306–310.

Le, D. T., Fang, Y., and Lauw, H. W. (2016). Modeling

sequential preferences with dynamic user and context

factors. In Joint European Conference on Machine

Learning and Knowledge Discovery in Databases.

Liang, H. and Baldwin, T. (2015). A probabilistic rating

auto-encoder for personalized recommender systems.

In ACM International Conference on Information

and Knowledge Management, pages 1863–1866.

Lu, Z., Agarwal, D., and Dhillon, I. S. (2009). A Spatio-

temporal Approach to Collaborative Filtering. In Pro-

ceedings of the Third ACM Conference on Recom-

mender Systems, pages 13–20.

Paisley, J., Gerrish, S., and Blei, D. (2010). Dynamic mod-

eling with the collaborative kalman filter. In NYAS 5th

Annual Machine Learning Symposium.

Rabiner, L. R. (1989). A Tutorial on Hidden Markov Mod-

els and Selected Applications in Speech Recognition.

Readings in Speech Recognition, pages 267–296.

Sahoo, N., Singh, P. V., and Mukhopadhyay, T. (2012).

A Hidden Markov Model for Collaborative Filtering.

MIS Quarterly, 36(4):1329–1356.

Salakhutdinov, R. and Mnih, A. (2007). Probabilistic Ma-

trix Factorization. In International Conference on

Neural Information Processing Systems, pages 1257–

1264.

Salakhutdinov, R., Mnih, A., and Hinton, G. (2007). Re-

stricted boltzmann machines for collaborative filter-

ing. In International Conference on Machine Learn-

ing, pages 791–798.

Sanchez, F., Alduan, M., Alvarez, F., Menendez, J. M.,

and Baez, O. (2012). Recommender system for sport

videos based on user audiovisual consumption. IEEE

Transactions on Multimedia, 14(6):1546–1557.

Soh, H., Sanner, S., White, M., and Jamieson, G. (2017).

Deep sequential recommendation for personalized

adaptive user interfaces. In International Conference

on Intelligent User Interfaces.

Sun, J. Z., Parthasarathy, D., and Varshney, K. R. (2014).

Collaborative kalman filtering for dynamic matrix fac-

torization. IEEE Transactions on Signal Processing.

Sun, J. Z., Varshney, K. R., and Subbian, K. (2012). Dy-

namic matrix factorization: A state space approach. In

IEEE International Conference on Acoustics, Speech

and Signal Processing.

Tang, J., Gao, H., and Liu, H. (2012a). mtrust: discerning

multi-faceted trust in a connected world. In Proceed-

ings of the fifth ACM international conference on Web

search and data mining, pages 93–102. ACM.

Tang, J., Liu, H., Gao, H., and Das Sarmas, A. (2012b).

etrust: Understanding trust evolution in an online

world. In Proceedings of the 18th ACM SIGKDD in-

ternational conference on Knowledge discovery and

data mining, pages 253–261. ACM.

Wang, H., Wang, N., and Yeung, D. Y. (2015). Collab-

orative deep learning for recommender systems. In

ACM SIGKDD International Conference on Knowl-

edge Discovery and Data Mining, pages 1235–1244.

Wu, C. Y., Ahmed, A., Beutel, A., Smola, A. J., and Jing,

H. (2017). Recurrent recommender networks. pages

495–503.

Xue, H. J., Dai, X. Y., Zhang, J., Huang, S., and Chen, J.

(2017). Deep matrix factorization models for recom-

mender systems. In International Joint Conference on

Artificial Intelligence, pages 3203–3209.

Zhang, H., Ni, W., Li, X., and Yang, Y. (2016a). A Hidden

Semi-Markov Approach for Time-Dependent Recom-

mendation. In Pacific Asia Conference on Information

Systems.

Zhang, H., Ni, W., Li, X., and Yang, Y. (2016b). Mod-

eling the Heterogeneous Duration of User Interest in

Time-Dependent Recommendation: A Hidden Semi-

Markov Approach. IEEE Transactions on Systems,

Man and Cybernetics Systems, pages 2168–2216.

Zhang, S., Yao, L., and Sun, A. (2017). Deep learning based

recommender system: A survey and new perspectives.

ArXiv e-prints.
