Neural Explainable Collective Non-negative Matrix Factorization for Recommender Systems

Felipe Costa and Peter Dolog

Aalborg University, Selma Lagerløfs Vej 300, 9220, Aalborg, Denmark

Keywords:

Explainability, Recommender Systems, Matrix Factorization.

Abstract:

Explainable recommender systems aim to generate explanations for users according to their predicted scores, the user's history, and their similarity to other users. Recently, researchers have proposed explainable recommender models using topic models and sentiment analysis methods, providing explanations based on users' reviews. However, such methods have neglected improvements in natural language processing, even though these methods are known to improve user satisfaction. In this paper, we propose a neural explainable collective non-negative matrix factorization (NECoNMF) to predict ratings based on users' feedback, for example, ratings and reviews. To do so, we use collective non-negative matrix factorization to predict user preferences according to different features, and a natural language model to explain the prediction. Empirical experiments were conducted on two datasets, showing the model's effectiveness for predicting ratings and generating explanations. The results show that NECoNMF improves the accuracy of explainable recommendations in comparison with the state-of-the-art method by 18.3% for NDCG@5, 12.2% for HitRatio@5, 17.1% for NDCG@10, and 12.2% for HitRatio@10 on the Yelp dataset. A similar improvement was observed on the Amazon dataset: 7.6% for NDCG@5, 1.3% for HitRatio@5, 7.9% for NDCG@10, and 3.9% for HitRatio@10.

1 INTRODUCTION

Recommender systems have become an important method in online services, aiming to help users filter items according to their preferences, such as movies, places, or products. The most well-known model to recommend top-N items is collaborative filtering (CF), which recommends items according to the user's similarities with other users and/or items. However, CF methods traditionally focus only on users, items, and their interactions to recommend a sorted list of N items.

Among CF techniques, matrix factorization (MF) has been widely applied in recommender systems because of its accuracy in providing personalized recommendations based on user-item interactions, for example, overall ratings. However, users may have different preferences about specific features of the same item, as seen in Figure 1. Hence, it is challenging to infer from an overall rating whether a user prefers a specific feature of an item. For example, in the restaurant domain, one user may rate a restaurant 3 stars because of the service, while another user may give the same rating because of the food. This example shows the importance of modeling different features when developing a recommendation method, since those features may help to explain why a CF model recommends a specific list of items to a user.

Figure 1: Example of Review-aware Recommendation. [Figure: item features (food, service, location, ambiance, family friendly, music) and the resulting recommendation.]

Online services provide other explicit feedback besides overall ratings, such as items' content features, contextual information, sentiments, and reviews. Items' content features describe attributes of an item, for example, which food is served in a restaurant. Contextual information defines the situation experienced by a user, for example, whether a user visited a restaurant for business or leisure. Sentiments describe the positive or negative experience of a user regarding an item. Reviews are a user's personal assessment of an item, which may describe the item's content features according to her/his preferences. These kinds of explicit feedback have been applied separately as features to predict user preferences; however, as mentioned above, a user may like or dislike an item's content feature in different contexts.

Costa, F. and Dolog, P.

Neural Explainable Collective Non-negative Matrix Factorization for Recommender Systems.

DOI: 10.5220/0006893700350045

In Proceedings of the 14th International Conference on Web Information Systems and Technologies (WEBIST 2018), pages 35-45

ISBN: 978-989-758-324-7

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

We propose to align those features using a collective non-negative matrix factorization model, called NECoNMF. NECoNMF factorizes four matrices (ratings, items' content features, contextual information, and sentiments) into non-negative low-rank factors represented in a common latent space. The hypothesis is that the joint decomposition of different matrices into the same factor space (for example, users' preferences, items' content features, contextual information, and sentiment) improves top-N recommendation.

Moreover, reviews represent an additional source of information for collaborative filtering. Recent research has modeled reviews to generate explanations for recommender systems, known as explainable recommendations. The explicit factor model (EFM) developed by (Zhang et al., 2014) extracts features and user opinions through phrase-level sentiment analysis of user-generated reviews, and explains the recommendation based on the extracted information. TriRank, proposed by (He et al., 2015), uses a tripartite graph to enrich the user-item binary relation into a user-item-aspect ternary relation. Both works extract aspects from reviews to generate explainable recommendations, but they do not consider influence maximization in social relations as a source of explanation. Recently, (Ren et al., 2017) proposed the social collaborative viewpoint regression model (sCVR), which detects viewpoints and uses social relations in a latent variable model. In sCVR, a viewpoint is represented as a concept-topic-sentiment tuple drawn from both user reviews and trusted social relations.

The aforementioned techniques have shown improvements in explaining recommendations; however, they neglected how to present textual explanations while keeping their accuracy in top-N recommendation. Recently, natural language processing has adopted deep learning methods such as the recurrent neural network (RNN), which has demonstrated significant improvements in character-level language generation due to its ability to learn latent information. However, a plain RNN struggles to capture long-range dependencies among characters, since it suffers from the vanishing gradient problem (Bengio et al., 1994). To solve this issue, long short-term memory (LSTM) was introduced (Hochreiter and Schmidhuber, 1997; El Hihi and Bengio, 1995), showing good results in generating text on different datasets (Karpathy et al., 2015) and in machine translation (Sutskever et al., 2014).

In this paper, we propose a neural explainable collective non-negative matrix factorization based on two steps: (1) prediction of the user's preferences by collectively factorizing ratings, items' content features, contextual information, and sentiments; and (2) generation of text reviews, given a vector of ratings, that express specific opinions about different item features.

The experiments were performed on two real-world datasets, Yelp and Amazon, where our method outperforms the state of the art in terms of Hit Ratio and NDCG.

This paper presents the following contributions:

• Neural Explainable Collective Non-negative Matrix Factorization;

• Collective factorization of four matrices: ratings, items' content features, contextual information, and sentiments;

• Text generation model using neural networks;

• Results of empirical experiments on two real-world datasets.

2 RELATED WORKS

Related work can be divided into two groups according to task: top-N recommendations and explainable recommendations.

Top-N Recommendations. The most well-known methods are Popular Items (ItemPop) (Cremonesi et al., 2010) and PageRank (Haveliwala, 2002), since they present reasonable results and are simple to implement. Another widely applied method is matrix factorization (MF), for example SVD (Koren et al., 2009) and NMF (Lee and Seung, 2000). SVD gained prominence on the Netflix dataset, where it showed good prediction accuracy. Likewise, NMF has received attention due to the easy interpretability of its matrix decomposition.
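To make the NMF decomposition referred to above concrete, the following is a minimal NumPy sketch of the classic multiplicative updates for the squared Frobenius loss (Lee and Seung, 2000). The function name, random initialization, iteration count, and the small constant `eps` are illustrative choices, not settings used in this paper.

```python
import numpy as np


def nmf_multiplicative(X, k, n_iter=200, eps=1e-10, seed=0):
    """Approximate a non-negative X (m x n) by W @ H with W (m x k) >= 0 and H (k x n) >= 0,
    using Lee and Seung's multiplicative updates for the squared Frobenius loss."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    W = rng.random((m, k))
    H = rng.random((k, n))
    for _ in range(n_iter):
        # Each update multiplies by a ratio of non-negative terms, so the factors
        # never become negative; eps guards against division by zero.
        H *= (W.T @ X) / (W.T @ W @ H + eps)
        W *= (X @ H.T) / (W @ H @ H.T + eps)
    return W, H


X = np.random.default_rng(1).random((6, 5))
W, H = nmf_multiplicative(X, k=3)
print(np.linalg.norm(X - W @ H, "fro"))  # reconstruction error
```

Because the updates are multiplicative, non-negativity is preserved without an explicit projection, which is one reason NMF factors are easy to interpret.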

Matrix factorization has been extended over the years to improve its effectiveness, and collective matrix factorization has shown good performance. This technique was applied in multi-view clustering, which splits objects into clusters based on multiple representations of the objects, as shown by (Liu et al., 2013; He et al., 2014). (Liu et al., 2013) proposed MultiNMF, using a connection between NMF and PLSA, and (He et al., 2014) proposed a co-regularized NMF (CoNMF), where comment-based clustering is formalized as a multi-view problem using pair-wise and cluster-wise CoNMF.

Local collective embeddings (LCE) is a collective matrix factorization method proposed by (Saveski and Mantrach, 2014), which exploits user-document and document-term matrices, identifying a common latent space for both item features and the rating matrix. LCE has shown effectiveness on the cold-start problem for news recommendation; however, it has some limitations. The method does not perform well in our domain, which covers online services such as restaurants and e-commerce, because it uses only two matrices as input and multiplicative update rules as its learning model. An extension of LCE, named CHNMF, was proposed by (Costa and Dolog, 2018), where collective factorization is applied to three different matrices using a hybrid regularization term. In this paper, we extend the CHNMF approach by collectively factorizing four matrices: ratings, items' content features, contextual information, and sentiments. Content features are data from each item's metadata, ratings represent the user's preferences, contextual information is the situation in which the user rates an item, and sentiment is the positive (1) or negative (−1) preference given by a user to an item's feature.

Explainable Recommendations. Explainable recommendation aims to improve transparency, effectiveness, scrutability, and user trust (Zhang et al., 2014). These criteria can be achieved by different ways of explaining the recommendation to a user, such as graphs or tables; however, for this project we selected two baselines that use textual information to justify their predictions. (Zhang et al., 2014) proposed the explicit factor model based on sentiment lexicon construction, and (He et al., 2015) proposed the TriRank algorithm to improve the ranking of items for review-aware recommendation.

Our proposal differs from these models in two aspects: we collectively factorize ratings, items' content features, contextual information, and sentiments; and we apply a character-level explanation model for recommendation using LSTM for text generation (Costa et al., 2018).

3 PROBLEM FORMULATION

The research problem investigated in this paper is defined as follows: explain the recommended list of items to each user based on ratings, items' content features, contextual information, and sentiments from user-item interactions. We model the rating data $X^u$ from a set of users $U$ to a set of items $I$, together with the content types $X^a$, the context types $X^c$, and the explicit sentiments $X^s$, as four two-dimensional matrices. Formally, we define the user-item matrix $X^u = \{x^u \in \mathbb{R}^{u \times i} \mid 1 \le x^u \le 5\}$; the user-content feature matrix $X^a = \{x^a \in \mathbb{Z}^{u \times a} \mid 0 \le x^a \le 1\}$; the user-context matrix $X^c = \{x^c \in \mathbb{Z}^{u \times c} \mid 0 \le x^c \le 1\}$; and the user-sentiment matrix $X^s = \{x^s \in \mathbb{Z}^{u \times s} \mid |x^s| = 1\}$, where $u$ is the number of users, $i$ is the number of items, $a$ is the content size, $c$ is the context size, and $s$ is the number of sentiment entries regarding item features.

Factor models aim to decompose the original user-item interaction matrix into two low-rank approximation matrices. Collective non-negative matrix factorization is a generalization of classic matrix factorization, where the latent features are stored in four low-rank products: ratings as $W H^u$; content features as $W H^a$; context as $W H^c$; and sentiment as $W H^s$. Here, $H^u$ holds the latent features of the ratings, $H^a$ the latent features of the content $a$, $H^c$ the latent features of the context $c$, and $H^s$ the latent features of the sentiments. Finally, $W$ represents the common latent space shared by the four factorizations.

Matrix factorization by itself does not provide human-oriented explanations. To address this issue, our model also targets the problem of generating natural language, review-oriented explanations.

We formulate the explanation for rating prediction as follows: given an input rating vector $r_{ui} = (r_1, \dots, r_{|r_{ui}|})$, we aim to generate an item explanation $e_i = (w_1, \dots, w_{|t_i|})$ by maximizing the conditional probability $p(e \mid r)$. Note that the rating $r_{ui}$ is a vector of the user's overall and specific ratings of different features of a target item $i$, while the review $t_i$ is considered a sequence of characters of variable length. For the Yelp dataset, we set $|r|$ to 5, representing 5 different features: food, service, ambiance, location, and family friendly.

The model learns to compute the likelihood of generated reviews given a set of input ratings. This conditional probability $p(e \mid r)$ is given in Equation 1:

$$p(e \mid r) = \prod_{s=1}^{|e|} p(w_s \mid w_{<s}, r) \tag{1}$$

where $w_{<s} = (w_1, \dots, w_{s-1})$.
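Equation 1 is usually evaluated in log space, where the product becomes a sum over characters. The sketch below shows that chain-rule computation; `toy_next_char_probs` is a hypothetical uniform placeholder for the trained conditional model $p(w_s \mid w_{<s}, r)$, so only the factorization, not the model, is illustrated.

```python
import math

VOCAB = ["g", "r", "e", "a", "t", "<EOF>"]


def toy_next_char_probs(prefix, ratings):
    """Hypothetical stand-in for p(w_s | w_<s, r). It ignores its inputs and is uniform;
    a trained generator would condition on both the prefix and the ratings."""
    return {ch: 1.0 / len(VOCAB) for ch in VOCAB}


def log_p_explanation(e, ratings, next_char_probs=toy_next_char_probs):
    """log p(e | r) = sum_s log p(w_s | w_<s, r), i.e. Equation 1 in log space."""
    total = 0.0
    for s, w_s in enumerate(e):
        probs = next_char_probs(e[:s], ratings)   # condition on w_<s = e[:s] and r
        total += math.log(probs[w_s])
    return total


e = ["g", "r", "e", "a", "t", "<EOF>"]
print(log_p_explanation(e, ratings=[3, 4]))  # 6 * log(1/6), about -10.75
```

Working with log-probabilities avoids numerical underflow for long character sequences, where the product of many small factors would vanish.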

4 METHODOLOGY

In this section, we describe NECoNMF for rating prediction and the natural language generation model.

4.1 Collective Matrix Factorization

We propose collective non-negative matrix factorization applied to domains with specific available information, such as ratings, content features, context, and sentiment. This information is decomposed into four matrices in a common latent space, i.e., each user-item interaction can be related to the item's content features, the contextual situation, the sentiment, and the ratings.

Figure 2: Collective Non-Negative Matrix Factorization. [Figure: explicit features $X^u$, $X^a$, $X^s$, $X^c$ (example input: [3, 4, food, 3, service, 2, ambiance, 3, location, positive, weekend]) are mapped to hidden features $H^u$, $H^a$, $H^s$, $H^c$ and to a predicted rating $\hat{r}$.]

Consider an online restaurant service, as in Figure 2, where a user may rate a specific restaurant (here defined as an item) according to different criteria, such as food, service, ambiance, and location. Furthermore, the user may write a review and give an overall rating for the item. From this example, we may retrieve the content-feature matrix $X^a$, the user-item matrix $X^u$, the user-context matrix $X^c$, and the user-sentiment matrix $X^s$. If we factorize $X^a$ into two low-dimensional matrices, we observe which content features belong to an item. Factorizing $X^u$ reveals items according to the users' preferences, while factorizing $X^c$ allows us to identify the hidden contextual factors related to the item. Likewise, factorizing $X^s$ highlights the positive and negative sentiments a user gives to an item's feature. If each matrix is factorized independently, each factorization lives in a different latent space, and there is no correlation between the content feature, context, sentiment, and rating matrices. The idea of collective non-negative matrix factorization is that every entity (users, items, content features, contexts, and sentiments) should be represented in a common latent space, meaning that each item can be described by a set of sentiments (for example, positive) and by a set of explicit feedback (for example, ratings). We therefore collectively factorize $X^u$, $X^a$, $X^c$, and $X^s$ into a low-dimensional representation in a common latent space. Formally, this is the following optimization problem:

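$$
\begin{aligned}
\min \; f(W) = \frac{1}{2}\Big[\, & \alpha \lVert X^u - W H^u \rVert_F^2 + \beta \lVert X^a - W H^a \rVert_F^2 + \gamma \lVert X^c - W H^c \rVert_F^2 + \omega \lVert X^s - W H^s \rVert_F^2 \\
& + \lambda \big( \lVert W \rVert_F^2 + \lVert H^u \rVert_F^2 + \lVert H^a \rVert_F^2 + \lVert H^c \rVert_F^2 + \lVert H^s \rVert_F^2 \big) \Big] \\
\text{s.t.}\quad & W \ge 0,\; H^u \ge 0,\; H^a \ge 0,\; H^c \ge 0,\; H^s \ge 0
\end{aligned}
\tag{2}
$$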

where the first four terms correspond to the factorization of the matrices $X^u$, $X^a$, $X^c$, and $X^s$. The matrix $W$ represents the common latent space, and $H^u$, $H^a$, $H^c$, and $H^s$ are matrices representing the hidden factors of each user-item interaction feature. The hyper-parameters $\alpha$, $\beta$, $\gamma$, and $\omega$ control the importance of each factorization and take values between 0 and 1. Setting all of them to 0.25 gives equal importance to the four decompositions, while setting $\alpha$, $\beta$, $\gamma$, or $\omega$ above (or below) 0.25 gives more (or less) importance to the factorization of $X^u$, $X^a$, $X^c$, or $X^s$, respectively. The Tikhonov regularization of $W$ and of the hidden factor matrices is controlled by the hyper-parameter $\lambda \ge 0$, which enforces the smoothness of the solution and helps avoid overfitting.

4.1.1 Optimization

NECoNMF minimizes the objective function by alternating optimization: the objective is not jointly convex in $W$ and the hidden factor matrices, but it is convex in each block when the others are held fixed. Performing the collective factorization amounts to finding a common low-dimensional space that is optimal for the linear approximation of the user and item data points. We assume that the user and item data share a common distribution from which a better low-dimensional space can be learned, i.e., two data points $u_i$ and $v_j$ are close to each other in the low-dimensional space if they are geometrically close in the distribution. This is known as the manifold assumption and is applied in algorithms for dimensionality reduction and semi-supervised learning (Saveski and Mantrach, 2014).

We model the local geometric structure through a nearest neighbour graph on a scatter of data points, as in (Saveski and Mantrach, 2014). In this graph, each data point is represented as a node $n$. We find the $k$ nearest neighbours of each node and connect them in the graph. The edges may have (1) a binary representation, where 1 marks one of the nearest neighbours and 0 otherwise; or (2) a weighted representation, for example, the Pearson correlation. The result of this process is an adjacency matrix $A$, which may be used to find the local closeness of two data points $u_i$ and $v_j$.
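A minimal NumPy sketch of the binary variant of this graph construction, followed by the Laplacian used later in this section. It assumes the data points are the rows of a dense matrix and that an edge is kept when either endpoint selects the other as a neighbour; both choices, and the helper names, are illustrative assumptions.

```python
import numpy as np


def knn_adjacency(P, k):
    """Binary k-nearest-neighbour adjacency matrix A over the rows (data points) of P."""
    n = P.shape[0]
    sq = np.sum(P ** 2, axis=1)
    dist = sq[:, None] + sq[None, :] - 2.0 * P @ P.T   # squared Euclidean distances
    np.fill_diagonal(dist, np.inf)                      # a node is not its own neighbour
    nearest = np.argsort(dist, axis=1)[:, :k]           # k closest nodes for each node
    A = np.zeros((n, n))
    A[np.repeat(np.arange(n), k), nearest.ravel()] = 1.0
    return np.maximum(A, A.T)   # symmetrize: keep an edge if either endpoint selected it


def graph_laplacian(A):
    """L = D - A with D_ii = sum_j A_ij."""
    return np.diag(A.sum(axis=1)) - A


P = np.random.default_rng(0).random((8, 3))   # 8 toy data points in a 3-d latent space
A = knn_adjacency(P, k=2)
L = graph_laplacian(A)
```

Symmetrizing keeps $A$ symmetric, which the trace derivation below relies on; a weighted variant would store, for example, a correlation value instead of 1.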

Based on this assumption, the collective factorization maps a data point $u_i$ of a matrix $X$ into the common latent space $W$ as $w_i$. The Euclidean distance $\lVert w_i - w_j \rVert^2$ then gives the distance between two low-dimensional data points, which is weighted by the corresponding entry of the adjacency matrix $A$. We repeat the process until reaching a stationary point or the established maximum number of iterations, as follows:

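$$
\begin{aligned}
M &= \frac{1}{2} \sum_{i,j=1}^{n} \lVert w_i - w_j \rVert^2 A_{ij} \\
&= \sum_{i=1}^{n} w_i^\top w_i \, D_{ii} - \sum_{i,j=1}^{n} w_i^\top w_j \, A_{ij} \\
&= \operatorname{Tr}(W^\top D W) - \operatorname{Tr}(W^\top A W) = \operatorname{Tr}(W^\top L W)
\end{aligned}
\tag{3}
$$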

where $\operatorname{Tr}(\cdot)$ is the trace function and $D$ is the diagonal matrix whose entries are the row sums of $A$ (or column sums, as $A$ is symmetric), i.e., $D_{ii} = \sum_j A_{ij}$; the graph Laplacian matrix is then $L = D - A$.
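The identity in Equation 3 can be checked numerically. The sketch below, assuming a random symmetric binary adjacency matrix and treating the rows of $W$ as the points $w_i$, compares the weighted sum of squared distances with $\operatorname{Tr}(W^\top L W)$.

```python
import numpy as np

rng = np.random.default_rng(0)
n, k = 6, 3
W = rng.random((n, k))                       # row i is the latent point w_i
A = rng.integers(0, 2, size=(n, n)).astype(float)
A = np.maximum(A, A.T)                        # symmetric adjacency
np.fill_diagonal(A, 0.0)
D = np.diag(A.sum(axis=1))                    # D_ii = sum_j A_ij
L = D - A                                     # graph Laplacian

lhs = 0.5 * sum(A[i, j] * np.sum((W[i] - W[j]) ** 2) for i in range(n) for j in range(n))
rhs = np.trace(W.T @ L @ W)
print(np.isclose(lhs, rhs))  # True
```

Since the left-hand side is a non-negative weighted sum of distances, the check also illustrates why $\operatorname{Tr}(W^\top L W) \ge 0$ and why penalizing it pulls neighbouring points together in the latent space.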

We can then rewrite the optimization problem $f(W)$ as:

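$$
\begin{aligned}
\min \; f(W) = \frac{1}{2}\Big[\, & \alpha \lVert X^u - W H^u \rVert_F^2 + \beta \lVert X^a - W H^a \rVert_F^2 + \gamma \lVert X^c - W H^c \rVert_F^2 + \omega \lVert X^s - W H^s \rVert_F^2 \\
& + \phi \operatorname{Tr}(W^\top L W) + \lambda \big( \lVert W \rVert_F^2 + \lVert H^u \rVert_F^2 + \lVert H^a \rVert_F^2 + \lVert H^c \rVert_F^2 + \lVert H^s \rVert_F^2 \big) \Big] \\
\text{s.t.}\quad & W \ge 0,\; H^u \ge 0,\; H^a \ge 0,\; H^c \ge 0,\; H^s \ge 0
\end{aligned}
\tag{4}
$$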

where $\phi$ is a hyper-parameter controlling the weight of the graph-regularization (manifold) term in the objective function, and the hyper-parameters $\alpha$, $\beta$, $\gamma$, $\omega$, and $\lambda$ have the same semantics as in Equation 2.
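As an illustration, the objective in Equation 4 (and Equation 2 when $\phi = 0$) can be evaluated as below. This is a sketch assuming dense NumPy arrays; the dictionary keys `'u'`, `'a'`, `'c'`, `'s'` and the function name are hypothetical conventions for the four matrices and their weights $\alpha$, $\beta$, $\gamma$, $\omega$.

```python
import numpy as np


def collective_objective(W, Xs, Hs, weights, lam, phi=0.0, L=None):
    """Value of Equation 4; with phi = 0 (or L = None) it reduces to Equation 2.

    Xs, Hs, weights are dicts keyed by 'u', 'a', 'c', 's' (ratings, content, context,
    sentiment); weights holds alpha, beta, gamma, omega under those keys.
    """
    fit = sum(weights[key] * np.linalg.norm(Xs[key] - W @ Hs[key], "fro") ** 2 for key in Xs)
    graph = phi * np.trace(W.T @ L @ W) if L is not None else 0.0
    reg = lam * (np.linalg.norm(W, "fro") ** 2
                 + sum(np.linalg.norm(H, "fro") ** 2 for H in Hs.values()))
    return 0.5 * (fit + graph + reg)


rng = np.random.default_rng(0)
k = 2
W = rng.random((5, k))                                     # 5 users
Hs = {"u": rng.random((k, 4)), "a": rng.random((k, 3)),
      "c": rng.random((k, 2)), "s": rng.random((k, 2))}
Xs = {key: W @ H for key, H in Hs.items()}                 # exactly factorizable toy data
weights = {"u": 0.25, "a": 0.25, "c": 0.25, "s": 0.25}     # equal importance
print(collective_objective(W, Xs, Hs, weights, lam=0.0))    # 0.0
```

Because all four reconstruction terms share the same $W$, a change in any one input matrix moves the common latent space for all of them, which is the coupling that collective factorization relies on.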

4.2 Multiplicative Update Rule

The multiplicative update rule (MUR) is applied in the model to update $W$: in each iteration, $W$ is updated toward a stationary point while the hidden factor matrices $H^u$, $H^a$, $H^c$, and $H^s$ are held fixed. We give the partial derivative of $f$ with respect to $W$ in Equation 5 before calculating the update rules.

$$
\nabla f(W) = W\big(\alpha H^u H^{u\top} + \beta H^a H^{a\top} + \gamma H^c H^{c\top} + \omega H^s H^{s\top} + \lambda I_k\big) - \big(\alpha Y^u H^{u\top} + \beta Y^a H^{a\top} + \gamma Y^c H^{c\top} + \omega Y^s H^{s\top}\big)
\tag{5}
$$

where $k$ is the number of factors and $I_k$ is the $k \times k$ identity matrix.

The update rules are formalized in Equation 6, after calculating the derivatives of $f(W)$, $f(H^u)$, $f(H^a)$, $f(H^c)$, and $f(H^s)$ from Equation 5.

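$$
W = \big[\alpha Y^u H^{u\top} + \beta Y^a H^{a\top} + \gamma Y^c H^{c\top} + \omega Y^s H^{s\top}\big]\,\big[\alpha H^u H^{u\top} + \beta H^a H^{a\top} + \gamma H^c H^{c\top} + \omega H^s H^{s\top} + \lambda I_k\big]^{-1}
\tag{6}
$$

where the product with the inverse is computed as a matrix (right) division, i.e., $W$ is obtained by solving the linear system $W M = N$, with $N$ and $M$ the first and second bracketed terms, respectively.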

Each iteration thus gives the solution of this matrix division; the objective function and the change between successive iterates (delta) decrease with each application of the update rule, which drives the procedure toward a stationary point. Note that we map any negative values in $W$ to zero after each update, so that $W$ remains non-negative.
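A minimal sketch of the $W$ step in Equation 6 followed by the projection onto non-negative values. It assumes the $Y$ matrices in Equations 5 and 6 are the observed matrices $X^u$, $X^a$, $X^c$, $X^s$, and it omits the graph term $\phi \operatorname{Tr}(W^\top L W)$, which Equations 5 and 6 do not include; the function name and argument layout are illustrative.

```python
import numpy as np


def update_W(Xs, Hs, weights, lam):
    """W update of Equation 6 followed by projection onto W >= 0.

    Assumes the Y matrices of Equations 5-6 are the observed matrices X^u, X^a, X^c, X^s.
    """
    k = next(iter(Hs.values())).shape[0]
    N = sum(weights[key] * Xs[key] @ Hs[key].T for key in Xs)                    # (users, k)
    M = sum(weights[key] * Hs[key] @ Hs[key].T for key in Hs) + lam * np.eye(k)  # (k, k)
    W = np.linalg.solve(M, N.T).T     # solves W M = N (M is symmetric)
    return np.maximum(W, 0.0)         # map negative entries to zero


rng = np.random.default_rng(0)
k = 2
W_true = rng.random((5, k))
Hs = {"u": rng.random((k, 4)), "a": rng.random((k, 3)),
      "c": rng.random((k, 2)), "s": rng.random((k, 2))}
Xs = {key: W_true @ H for key, H in Hs.items()}
weights = {"u": 0.25, "a": 0.25, "c": 0.25, "s": 0.25}
W = update_W(Xs, Hs, weights, lam=0.0)
```

On exactly factorizable, noise-free data with $\lambda = 0$, the update returns the generating $W$, which is a quick sanity check of the linear-system formulation. Solving the $k \times k$ system is cheap because $k$ is small compared with the number of users.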

4.3 Barzilai-Borwein

The Barzilai-Borwein method is used to optimize the hidden factor matrices $H^u$, $H^a$, $H^c$, and $H^s$. We denote each hidden factor matrix by $H$ and the corresponding input matrix ($X^u$, $X^a$, $X^c$, or $X^s$) by $X$, since all of them lead to the same subproblem, shown in Equation 7:

$$\min_{H \ge 0} \; f(H) = \frac{1}{2} \lVert X - W H \rVert_F^2 \tag{7}$$

We map all negative values to zero through the projection $P(\cdot)$, as we defined for $W$ in the previous section. A point $H$ is stationary if, for any step size $\alpha > 0$,

$$\lVert P[H - \alpha \nabla f(H)] - H \rVert_F = 0. \tag{8}$$

Relaxing Equation 8 with a tolerance $\varepsilon_H$ leads to the stopping condition $\lVert P[H - \alpha \nabla f(H)] - H \rVert_F \le \varepsilon_H$, where $\varepsilon_H = \max(10^{-3}, \varepsilon)\, \lVert P[H - \alpha \nabla f(H)] - H \rVert_F$. If the Barzilai-Borwein algorithm defined in (Costa and Dolog, 2018) solves Equation 7 without any iterations, we decrease the stopping tolerance to $\varepsilon = 0.1\,\varepsilon_H$.
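The subproblem in Equation 7 can be sketched with projected gradient steps whose length follows the Barzilai-Borwein rule $\langle s, s\rangle / \langle s, y\rangle$. This is not the authors' implementation (Costa and Dolog, 2018): the residual uses a unit step in place of $\alpha$, the tolerance reduction $\varepsilon = 0.1\,\varepsilon_H$ is omitted, and a fallback to the step $1/L$ whenever the objective increases is added as a safeguard.

```python
import numpy as np


def nnls_projected_bb(X, W, H0, eps=1e-3, max_iter=500):
    """Approximately solve min_{H >= 0} 0.5 * ||X - W H||_F^2 (Equation 7)."""
    WtW, WtX = W.T @ W, W.T @ X
    lip = np.linalg.norm(WtW, 2)                 # Lipschitz constant of the gradient

    def f(H):
        return 0.5 * np.linalg.norm(X - W @ H, "fro") ** 2

    def grad(H):
        return WtW @ H - WtX                     # W^T (W H - X)

    def proj(H):
        return np.maximum(H, 0.0)                # P(.): map negative values to zero

    def residual(H, g):
        return np.linalg.norm(proj(H - g) - H, "fro")   # Equation 8 with a unit step

    H = proj(H0)
    g = grad(H)
    eps_H = max(1e-3, eps) * residual(H, g)      # tolerance relative to the start point
    step = 1.0 / lip
    for _ in range(max_iter):
        if residual(H, g) <= eps_H:
            break
        H_new = proj(H - step * g)
        if f(H_new) > f(H):                      # safeguard: fall back to the step 1/L
            H_new = proj(H - g / lip)
        g_new = grad(H_new)
        s, y = H_new - H, g_new - g
        sy = np.sum(s * y)
        step = np.sum(s * s) / sy if sy > 0 else 1.0 / lip   # Barzilai-Borwein step
        H, g = H_new, g_new
    return H


rng = np.random.default_rng(0)
W = rng.random((20, 3))
H_true = rng.random((3, 4))
X = W @ H_true                                   # noise-free toy data
H = nnls_projected_bb(X, W, np.zeros((3, 4)))
```

The Barzilai-Borwein step adapts to the local curvature without a line search in most iterations, while the $1/L$ fallback keeps every accepted step non-increasing in $f(H)$.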

The gradient $\nabla f(H) = W^\top (W H - X)$ of $f(H)$ is Lipschitz continuous with constant $L = \lVert W^\top W \rVert_2$. $L$ is inexpensive to obtain, since $W^\top W$ has dimensions $k \times k$ and $k \ll \min\{m, n\}$. For a given $H^0 \ge 0$, the level set is

$$\mathcal{L}(H^0) = \{ H \mid f(H) \le f(H^0),\; H \ge 0 \}. \tag{9}$$

Equation 9 defines the level set within which the Barzilai-Borwein method seeks a stationary point.
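The Lipschitz bound stated above can be checked numerically, as in the sketch below (random data, illustrative sizes). It relies on $\nabla f(H_1) - \nabla f(H_2) = W^\top W (H_1 - H_2)$ and $\lVert AB \rVert_F \le \lVert A \rVert_2 \lVert B \rVert_F$.

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.random((30, 4))                      # k = 4 latent factors
X = rng.random((30, 7))


def grad(H):
    """Gradient of f(H) = 0.5 * ||X - W H||_F^2."""
    return W.T @ (W @ H - X)


lip = np.linalg.norm(W.T @ W, 2)             # spectral norm of the k x k matrix W^T W
H1, H2 = rng.random((4, 7)), rng.random((4, 7))
lhs = np.linalg.norm(grad(H1) - grad(H2), "fro")
rhs = lip * np.linalg.norm(H1 - H2, "fro")
print(lhs <= rhs)  # True
```

Only the $k \times k$ matrix $W^\top W$ is needed to compute the constant, which is why it is cheap for small $k$.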

4.4 Top-N Recommendation Process

Factorizing the input matrices returns the trained matrices $W$, $H^u$, $H^a$, $H^c$, and $H^s$, which allows us to use the hidden factors for prediction. Given a new items vector $v_i$, we can predict the most preferable items according to the user's interest $v_u$. To do so, we project the items vector $v_i$ onto the common latent space by solving the overdetermined system $v_i = w H^u$ with the least squares method. The vector $w$ captures the factors, in the common latent space, that explain the preferable items $v_i$. Then, using the low-dimensional vector $w$, we infer the missing part of the query, $v_u \leftarrow w H^t$, where $H^t$ is the concatenation of the content feature, context, and sentiment matrices, $H^t = H^a \,\Vert\, H^c \,\Vert\, H^s$. Each element of $v_u$ represents a score of how likely the user is to like a new item. Given these scores, we can sort the list of items.
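A minimal NumPy sketch of this projection-and-scoring step, assuming each hidden factor matrix has $k$ rows and that the concatenation $H^t$ is taken column-wise. The function name and the `top_n` cut-off are illustrative; the sketch simply ranks the entries of $v_u$ as described above.

```python
import numpy as np


def project_and_score(v_i, H_u, H_a, H_c, H_s, top_n=5):
    """Least-squares projection of v_i onto the latent space, then v_u <- w H^t."""
    # v_i = w H^u is equivalent to (H^u)^T w^T = v_i^T; overdetermined when H^u has
    # more columns than the k latent factors.
    w, *_ = np.linalg.lstsq(H_u.T, v_i, rcond=None)
    H_t = np.hstack([H_a, H_c, H_s])      # H^t = H^a || H^c || H^s (column-wise)
    v_u = w @ H_t                         # one score per column of H^t
    ranking = np.argsort(-v_u)[:top_n]    # indices of the highest scores first
    return w, v_u, ranking


rng = np.random.default_rng(0)
k = 3
H_u, H_a, H_c, H_s = (rng.random((k, m)) for m in (10, 6, 4, 2))
w_true = rng.random(k)
v_i = w_true @ H_u                        # consistent toy query
w, v_u, ranking = project_and_score(v_i, H_u, H_a, H_c, H_s)
```

Because the query is projected with ordinary least squares, $w$ may contain negative entries on real data; the text does not say whether a non-negative solver is used instead.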

Moreover, given the sorted list of items and their rating scores, we explain the predicted ratings using the natural language generation model. The explanation model uses the ratings as inputs to its attention layer to generate personalized sentences according to the user's writing style. During training, the model learns to identify positive and negative sentences from the user's previous reviews.

4.5 Natural Language Explanation

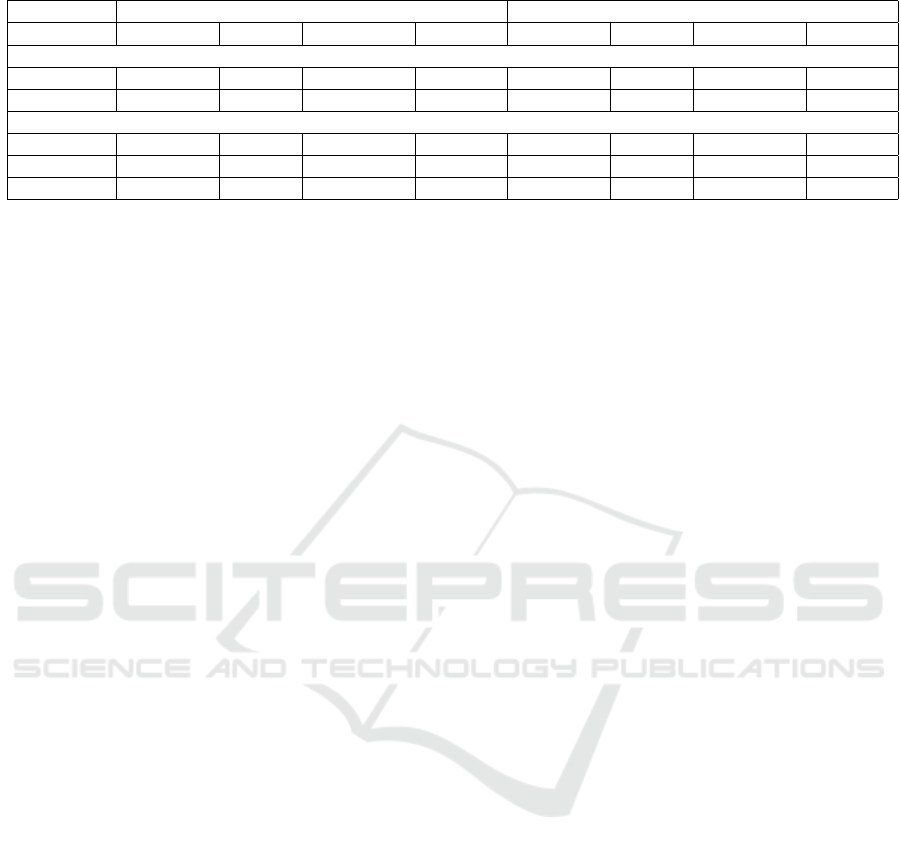

The natural language generation model is composed of (1) four sub-models: a context encoder, an LSTM network, an attention layer, and a generator model; and (2) two input sources: the review text and the concatenated embeddings of the user, the item, and the rating vector, as presented in Figure 3. First, user and item embeddings are learned with the doc2vec model (Le and Mikolov, 2014), and the characters of the reviews are converted to one-hot vectors, corresponding to the input time steps of the LSTM network. Second, the embeddings are concatenated with the outputs of the LSTM, which are the inputs of the attention layer. Finally, the generator model produces sentences using the outputs of the attention layer.

Figure 3: Natural Language Explainability model. [Figure: components doc2vec, LSTM, Attention, and Generator; example input sequence <STR> g r e a t and output explanation g r e a t <EOF>, each character paired with the rating vector [3,4]; predicted rating $\hat{r}$.]

Context Encoder. It encodes each input character as a one-hot vector and concatenates the ratings given by a user to the item's different content features. This data later becomes the input of our LSTM network, as seen in Fig. 3. To do so, we created a dictionary of the characters in the corpus that defines their positions. The dictionary is used to encode the characters during training and to decode them during generation. A one-hot encoding vector is generated for each character in the reviews based on its position in the dictionary. Furthermore, the vector is concatenated with a set of ratings ranging from 0 to 1, as shown in Equation 10.

$$X'_t = [\operatorname{onehot}(x_{\text{char}}); x_{\text{aux}}] \tag{10}$$
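The encoding of Equation 10 can be sketched as follows. The character dictionary is built from a toy corpus, and the min-max scaling of 1-5 star ratings into $[0, 1]$ is an assumption, since the text only states that the ratings vary from 0 to 1; helper names are hypothetical.

```python
import numpy as np


def build_vocab(corpus):
    """Character dictionary: position of each character (encode) and its inverse (decode)."""
    chars = sorted(set("".join(corpus)))
    return {ch: i for i, ch in enumerate(chars)}, chars


def scale_ratings(ratings, low=1.0, high=5.0):
    """Hypothetical min-max scaling of 1-5 star ratings into [0, 1]."""
    return (np.asarray(ratings, dtype=float) - low) / (high - low)


def encode_step(ch, ratings, char_to_idx):
    """X'_t = [onehot(x_char); x_aux] (Equation 10)."""
    onehot = np.zeros(len(char_to_idx))
    onehot[char_to_idx[ch]] = 1.0
    return np.concatenate([onehot, scale_ratings(ratings)])


char_to_idx, idx_to_char = build_vocab(["great food", "slow service"])
x_t = encode_step("g", [3, 4], char_to_idx)   # one-hot for 'g' followed by [0.5, 0.75]
```

The same dictionary, read in reverse through `idx_to_char`, turns predicted indices back into characters at generation time.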

LSTM Network. LSTM is an extension of the recurrent neural network (RNN) in which information is passed between neurons across time steps and layers, as presented in Figure 3. An LSTM cell is built upon forget, input, and output gates. The forget gate decides which old information should be forgotten; the input gate updates the current cell state; and the output gate selects the information passed to the next layer and cell. First, the LSTM network receives the input data $x_t$ at time $t$ and the cell state $C_{t-1}$ from the previous time step $t-1$. Second, the input data feeds the forget gate, which chooses which information will be discarded. In Equation 11, $f_t$ defines the forget gate at time $t$, where $W_f$ and $b_f$ refer to its weight matrix and bias, respectively. The third step is to define, through the input gate $i_t$, which information should be stored in the cell state. In the fourth step, the cell creates a candidate state $C'_t$ through a tanh layer, and we update the current state $C_t$ according to the candidate state, the previous cell state, the forget gate, and the input gate. In the final step, the data goes to the output gate, which uses a sigmoid layer to compute the output $o_t$ and multiplies it by the tanh of the current cell state $C_t$ to produce the hidden state $h_t$, from which the next character is predicted.

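$$
\begin{aligned}
f_t &= \sigma([x_t, C_{t-1}]\, W_f + b_f) \\
i_t &= \sigma([x_t, C_{t-1}]\, W_i + b_i) \\
C'_t &= \tanh([x_t, C_{t-1}]\, W_c + b_c) \\
C_t &= f_t \odot C_{t-1} + i_t \odot C'_t \\
o_t &= \sigma([x_t, C_{t-1}]\, W_o + b_o) \\
h_t &= o_t \odot \tanh(C_t)
\end{aligned}
\tag{11}
$$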
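A single step of Equation 11 can be written in NumPy as below. It follows the equations as printed, where every gate reads $[x_t, C_{t-1}]$ (the common LSTM formulation feeds the previous hidden state $h_{t-1}$ instead); weight shapes, the initializer, and function names are illustrative assumptions.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_params(input_dim, hidden_dim, seed=0):
    """Random weights for the four gates; rows cover the concatenation [x_t, C_{t-1}]."""
    rng = np.random.default_rng(seed)
    params = {}
    for gate in "fico":                              # forget, input, candidate, output
        params[f"W_{gate}"] = rng.normal(0.0, 0.1, size=(input_dim + hidden_dim, hidden_dim))
        params[f"b_{gate}"] = np.zeros(hidden_dim)
    return params


def lstm_step(x_t, C_prev, params):
    """One time step of Equation 11; * is the element-wise product."""
    z = np.concatenate([x_t, C_prev])                # [x_t, C_{t-1}]
    f_t = sigmoid(z @ params["W_f"] + params["b_f"])
    i_t = sigmoid(z @ params["W_i"] + params["b_i"])
    C_cand = np.tanh(z @ params["W_c"] + params["b_c"])   # candidate state C'_t
    C_t = f_t * C_prev + i_t * C_cand
    o_t = sigmoid(z @ params["W_o"] + params["b_o"])
    h_t = o_t * np.tanh(C_t)
    return h_t, C_t


params = init_params(input_dim=17, hidden_dim=8)
h_t, C_t = lstm_step(np.ones(17), np.zeros(8), params)
```

Running the step over a sequence means feeding each encoded input $X'_t$ from Equation 10 and carrying $C_t$ forward to the next call.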

Attention Layer. The attention layer adaptively learns soft alignments between the LSTM output $h_t$ and the attention inputs $\text{attention}_i$, yielding a context vector $c_t$ that captures character dependencies. Equation 12 formally describes the attention layer output $h_t^{\text{attention}}$, as explained by (Dong et al., 2017).

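$$
\begin{aligned}
c_t &= \sum_{i} \frac{\exp\big(\tanh(W_s [h_t, \text{attention}_i])\big)}{\sum_{i'} \exp\big(\tanh(W_s [h_t, \text{attention}_{i'}])\big)}\, \text{attention}_i \\
h_t^{\text{attention}} &= \tanh(W_1 c_t + W_2 h_t)
\end{aligned}
\tag{12}
$$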
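A sketch of Equation 12 for one time step. The shape of $W_s$ (a vector giving one scalar score per attention input) and the random inputs are assumptions; in the model, the attention inputs would be derived from the user, item, and rating information described earlier.

```python
import numpy as np


def attention_layer(h_t, att_inputs, W_s, W_1, W_2):
    """Equation 12 for one time step.

    att_inputs: (n, d_a) array whose rows are attention_i; W_s: vector of length d_h + d_a
    (an assumed shape that yields one scalar score per attention input).
    """
    scores = np.array([np.tanh(W_s @ np.concatenate([h_t, a])) for a in att_inputs])
    alphas = np.exp(scores) / np.exp(scores).sum()   # softmax over i
    c_t = alphas @ att_inputs                        # weighted sum of attention_i
    h_att = np.tanh(W_1 @ c_t + W_2 @ h_t)
    return h_att, alphas


rng = np.random.default_rng(0)
d_h, d_a, d_out, n = 8, 4, 8, 3
h_att, alphas = attention_layer(rng.normal(size=d_h), rng.normal(size=(n, d_a)),
                                rng.normal(size=d_h + d_a),
                                rng.normal(size=(d_out, d_a)), rng.normal(size=(d_out, d_h)))
```

The weights `alphas` sum to one, so $c_t$ is a convex combination of the attention inputs; the tanh inside the exponent keeps the scores bounded in $[-1, 1]$.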

Generator Model. The generator model works at the character level. The network feeds its output to a softmax layer that computes the conditional probability $p$ over the characters, and the character with the highest probability is selected, as presented in Equation 13. For text generation, an initial prime text is concatenated as the start symbol of each generated review-based explanation according to different item content features.

$$p = \operatorname{softmax}(h_t^{\text{attention}} W + b), \qquad \text{char} = \arg\max p \tag{13}$$

where $h_t^{\text{attention}}$ is the attention-layer output (Equation 12) computed from the LSTM cell output, and $W$ and $b$ are the weight and bias of the softmax layer, respectively.

This procedure recursively produces a character $\text{char}$ at each time step until it finds the pre-defined end symbol.
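The decoding loop of Equation 13 can be sketched as greedy generation: apply the softmax, take the argmax character, and stop at the end symbol. Here `step_fn` is a hypothetical stand-in for the encoder, LSTM, and attention layers, and the toy version used in the example simply points to the next character of the word "great".

```python
import numpy as np


def softmax(z):
    z = z - np.max(z)                      # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()


def generate(step_fn, W_out, b_out, idx_to_char, start="<STR>", end="<EOF>", max_len=200):
    """Greedy character generation with p = softmax(h W + b), char = argmax p."""
    out, prev = [], start
    for _ in range(max_len):
        h = step_fn(prev)                  # attention output for the current time step
        p = softmax(h @ W_out + b_out)
        char = idx_to_char[int(np.argmax(p))]
        if char == end:                    # stop at the pre-defined end symbol
            break
        out.append(char)
        prev = char
    return "".join(out)


vocab = ["g", "r", "e", "a", "t", "<EOF>"]
following = {"<STR>": "g", "g": "r", "r": "e", "e": "a", "a": "t", "t": "<EOF>"}


def toy_step(prev):
    """Hypothetical step function: a scaled one-hot vector for the character after prev."""
    return 5.0 * np.eye(len(vocab))[vocab.index(following[prev])]


print(generate(toy_step, np.eye(len(vocab)), np.zeros(len(vocab)), vocab))  # great
```

The `max_len` cap guards against a model that never emits the end symbol; taking the argmax makes decoding deterministic for a given input.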

5 EXPERIMENTS

In this section, we describe our experimental setup. We start by detailing the datasets, the evaluation metrics, and the baselines.

5.1 Datasets

We use two datasets to conduct our experiments: Yelp and Amazon.

The original Yelp dataset was published in April 2013 and has been used by the recommender systems community because of its user reviews. The dataset has 45,981 users, 11,537 items, and 22,907 reviews; however, it is sparse, since 49% of the users have only one review, which makes top-N recommendation difficult to evaluate. Following (Zhang et al., 2014; He et al., 2015), we kept only the users with more than 10 reviews. The Amazon dataset contains more than 800k users, 80k items, and 11.3M reviews; however, it is even sparser than Yelp, so we adopted the same filtering strategy. Table 1 summarizes the dataset information.

Table 1: Dataset Summarization.

Yelp Amazon

#users 3,835 2,933

#items 4,043 14,370

#reviews 114,316 55,677

#density 0.74% 0.13%
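The density row of Table 1 follows from the other rows if density is taken as #reviews / (#users × #items), i.e., assuming at most one review per user-item pair:

```python
# Counts from Table 1: (#users, #items, #reviews).
stats = {"Yelp": (3835, 4043, 114316), "Amazon": (2933, 14370, 55677)}
densities = {name: reviews / (users * items) for name, (users, items, reviews) in stats.items()}
for name, d in densities.items():
    print(f"{name}: {d:.2%}")   # Yelp: 0.74%, Amazon: 0.13%
```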

The experiments were performed on a Unix server with 32GB of RAM and an 8-core Intel Xeon CPU at 2.80GHz. The parameters of the collective matrix factorization were set as follows: learning rate = 0.001; $k = 45$; iterations = 50 (to reach convergence); and $\lambda = 0.5$. The LSTM network has two hidden layers with 1024 LSTM cells per layer; the input data was split into 100 batches of size 128, each with a sequence length of 280. We applied 5-fold cross-validation on each dataset to reduce the risk of overfitting to a single train/test split.

5.2 Evaluation Metrics

The output of the algorithms is a ranked list of items for each user based on the user's feedback. The effectiveness of this top-N list is measured with information retrieval ranking metrics: NDCG and Hit Ratio (HR). We apply NDCG to measure ranking quality, as defined by Equation 14; the metric gives higher scores to relevant items at the top of the ranking and lower scores to relevant items further down.

$$\mathrm{NDCG@}N = Z_N \sum_{i=1}^{N} \frac{2^{r_i} - 1}{\log_2(i + 1)} \tag{14}$$

where $Z_N$ is a normalization constant that guarantees that the perfect ranking has a value of 1, and $r_i$ is the graded relevance of the item at position $i$. We define $r_i = 1$ if the item is in the test dataset, and $r_i = 0$ otherwise.
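A minimal sketch of Equation 14 with the binary relevance defined above, computing $Z_N$ as the inverse of the ideal DCG (all test items ranked first). The function name and the toy lists are illustrative.

```python
import numpy as np


def ndcg_at_n(ranked_items, test_items, n):
    """NDCG@N (Equation 14) with binary relevance r_i = 1 if the i-th item is in the test set."""
    test_items = set(test_items)
    rel = np.array([1.0 if item in test_items else 0.0 for item in ranked_items[:n]])
    dcg = np.sum((2.0 ** rel - 1.0) / np.log2(np.arange(2, len(rel) + 2)))
    # Z_N = 1 / IDCG: the DCG of an ideal list with all relevant items ranked first.
    n_ideal = min(len(test_items), n)
    idcg = np.sum(1.0 / np.log2(np.arange(2, n_ideal + 2)))
    return float(dcg / idcg) if idcg > 0 else 0.0


print(ndcg_at_n([10, 3, 7, 1, 9], test_items=[3, 9], n=5))  # about 0.624
```

With binary relevance, $2^{r_i} - 1$ is either 0 or 1, so NDCG@N reduces to the sum of the discounts $1/\log_2(i+1)$ at the positions of the hits, normalized by the best achievable sum.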

Hit Ratio is commonly used in the top-N evaluation of recommender systems to assess the ranked list against the ground-truth test dataset (GT). A hit is counted when an item from the test dataset appears in the recommended list. We calculate it as:

$$\mathrm{Hit@}N = \frac{\text{Number of Hits@}N}{|GT|} \tag{15}$$

N denotes the number of items in the recommended list. We set N = 5 and N = 10, because large values of N would force the user to filter through a long list of recommended items. Higher values of NDCG or HR indicate better performance.
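Equation 15 for a single user can be sketched as follows. This assumes the recommended list contains no duplicates and that per-user scores are then averaged over users; the averaging step is an assumption, since the text does not specify it. The function name `hit_at_n` is hypothetical.

```python
from typing import Sequence, Set


def hit_at_n(ranked: Sequence[str], ground_truth: Set[str], n: int) -> float:
    """Hit@N: number of test items appearing in the top-N list, divided by |GT|."""
    if not ground_truth:
        return 0.0
    hits = sum(1 for item in ranked[:n] if item in ground_truth)
    return hits / len(ground_truth)


ranked = ["b", "q", "r", "s", "t", "f"]
gt = {"b", "f", "x"}
print(hit_at_n(ranked, gt, 5))   # only "b" is in the top 5: 1 hit out of |GT| = 3
print(hit_at_n(ranked, gt, 10))  # "b" and "f" are in the top 10: 2 hits out of 3
```

Unlike NDCG, Hit@N ignores where in the list a hit occurs. It can only grow or stay the same as N increases.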

5.3 Comparison Baselines

Section 2 reviewed related work grouped by recommendation task: top-N and explainable recommendation. In this section we describe four baselines: two popular techniques for top-N recommendation and two recent techniques that provide review-based explanations.


Table 2: NDCG and Hit Ratio results for NECoNMF and compared methods at rank 5 and 10.

                              Yelp                                  Amazon
Method        NDCG@5  HIT@5   NDCG@10  HIT@10      NDCG@5  HIT@5   NDCG@10  HIT@10
Top-N Algorithms
ItemPop       0.0110  0.0136  0.0185   0.0306      0.0077  0.0082  0.0136   0.0238
PageRank      0.0235  0.0278  0.0313   0.0452      0.0978  0.1070  0.1029   0.1200
Explainable Recommendation
TriRank       0.0258  0.0313  0.0353   0.0527      0.1033  0.1127  0.1086   0.1266
EFM           0.2840  0.0448  0.2955   0.0678      0.3615  0.1284  0.3670   0.1429
NECoNMF       0.3366  0.0503  0.3461   0.0763      0.3892  0.1301  0.3962   0.1486

Top-N Recommendation

• ItemPop. The method ranks items by their popularity, measured by the number of ratings. It is usually a reasonably strong baseline, because users tend to consume popular items.

• PageRank. Personalized PageRank is a widely used method for top-N recommendation. We applied the configuration proposed by He et al. (2015) on the user–item graph, with the damping parameter tuned to 0.9 for Yelp and 0.3 for Amazon.
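The personalized PageRank baseline can be illustrated with a minimal power-iteration sketch. Two assumptions apply here, and neither reproduces the exact configuration of He et al. (2015): the user–item graph is unweighted and undirected, and "damping" is the probability of continuing the walk, so the walk restarts at the target user with probability 1 − damping. The function name and toy node names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def personalized_pagerank(edges: List[Tuple[str, str]], source: str,
                          damping: float = 0.9, iters: int = 100) -> Dict[str, float]:
    """Power iteration for personalized PageRank on an undirected user-item graph."""
    neighbours: Dict[str, List[str]] = defaultdict(list)
    for user, item in edges:
        neighbours[user].append(item)
        neighbours[item].append(user)
    nodes = list(neighbours)
    score = {v: (1.0 if v == source else 0.0) for v in nodes}
    for _ in range(iters):
        # Restart mass goes back to the target user; the rest flows along edges.
        nxt = {v: (1.0 - damping if v == source else 0.0) for v in nodes}
        for v in nodes:
            share = damping * score[v] / len(neighbours[v])
            for w in neighbours[v]:
                nxt[w] += share
        score = nxt
    return score


edges = [("u1", "i1"), ("u1", "i2"), ("u2", "i2"),
         ("u2", "i3"), ("u3", "i3"), ("u3", "i4")]
scores = personalized_pagerank(edges, source="u1", damping=0.9)
seen = {item for user, item in edges if user == "u1"}
# Recommend unseen item nodes (here named "i...") ordered by their score.
recs = sorted((v for v in scores if v.startswith("i") and v not in seen),
              key=scores.get, reverse=True)
print(recs)
```

In this toy graph, i3 is reachable from u1 through two users (u2 and u3), whereas i4 is reachable only through u3. As a result, i3 ranks above i4. A lower damping value, such as the 0.3 used for Amazon, keeps more mass close to the target user.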

Explainable Recommendation

• EFM. The model applies phrase-level sentiment analysis of user reviews for personalized recommendation. It extracts explicit product features and user opinions from reviews, then incorporates user–feature and item–feature relations, as well as user–item ratings, into a hybrid matrix factorization framework. The method has a hyperparameter k that defines the number of most-cared features; we set k = 45, which Zhang et al. (2014) report as the best-performing setting on the Yelp dataset.

• TriRank. The model ranks the vertices of a user–item–aspect tripartite graph by regularizing smoothness and fitting constraints. TriRank targets review-aware recommendation, where the ranking constraints directly model collaborative and aspect filtering. He et al. (2015) report an experiment on the parameters of the TriRank algorithm, in which setting the item–aspect, user–aspect, and aspect–query parameters to 0 results in poor performance. For the experiments in this paper we use the default settings α = 9, β = 6, and γ = 1.

6 RESULTS AND DISCUSSIONS

In this section we present the rating prediction results for top-N recommendation and discuss the explanations generated for the predicted ratings.

6.1 Overall Performance

This section describes the overall performance of

NECoNMF regarding the rating prediction for top-N

recommendation in comparison with other methods.

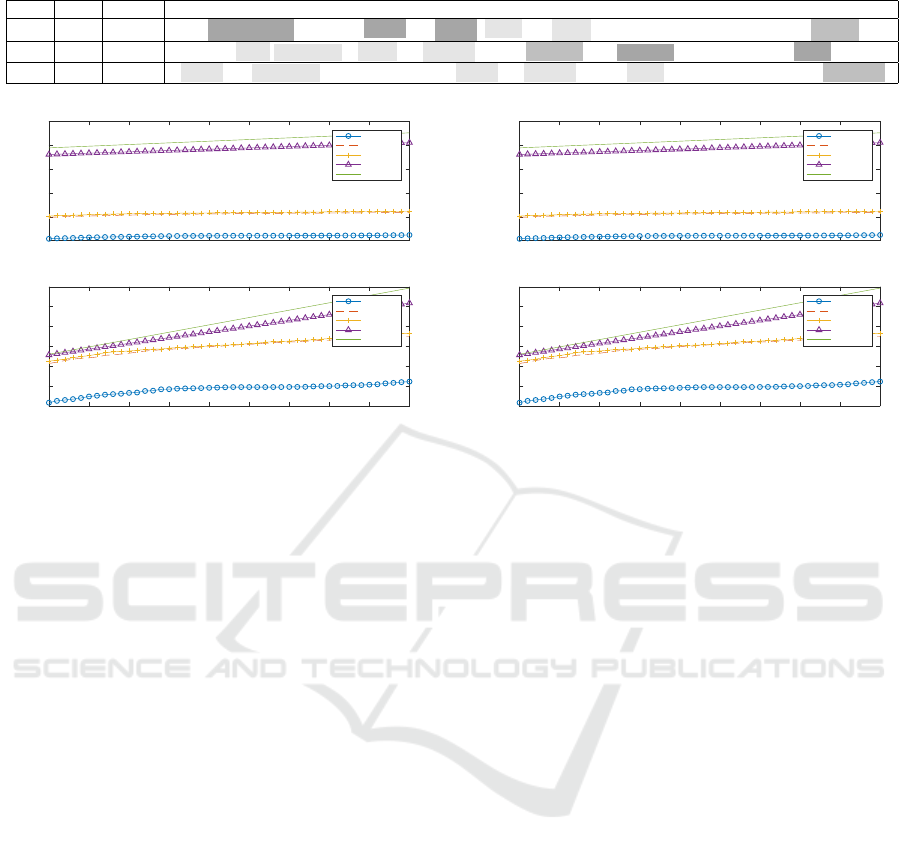

Comparing the NDCG@5 score of NECoNMF with that of ItemPop in Table 2, we observe an improvement of about 30× on the Yelp dataset and 50× on the Amazon dataset. HIT@5 is about 4× higher on Yelp and 16× higher on Amazon. ItemPop shows the poorest overall performance because it does not personalize its predictions.

Personalized PageRank, on the other hand, performs reasonably well because it uses the user's historical information to predict the user's preferences. Despite this, PageRank does not use latent information to infer recommendations, such as item content features, context, sentiment, and ratings. This may explain its lower performance compared with NECoNMF. On the Yelp dataset, NECoNMF improves on PageRank by 14.4× for NDCG@5 and about 2× for HIT@5. The Amazon dataset yields a similar result: NECoNMF performs 3.9× better for NDCG@5 and 21% better for HIT@5.

TriRank performs worse than NECoNMF because it relies on a tripartite graph to predict users' preferences, which leaves it unable to identify hidden features. On the Yelp dataset, NECoNMF improves accuracy by 13× for NDCG@5 and 60% for HIT@5. Likewise, on the Amazon dataset we observe an improvement of 3.7× for NDCG@5 and 15.4% for HIT@5. The Hit Ratio scores in Table 2 show that PageRank and TriRank are close. TriRank nevertheless performs better, because its user–item–aspect tripartite graph allows it to incorporate one more feature into the recommendation process.

WEBIST 2018 - 14th International Conference on Web Information Systems and Technologies

Table 3: Example explanations produced by NECoNMF on the Yelp dataset.

User  Item  Rating  Generated Explanation
A     X     1       i was disappointed . it was too small and noisy . food was good , but i just can’t come back with my family .
A     Y     3       this was a nice restaurant . i liked the service and the location . i’m not sure i’d recommend the food there.
B     X     5       i loved this restaurant . i could not leave it. i loved the service and the food . i will be back again next weekend .

Figure 4: Empirical evaluation on the Yelp dataset for N from 5 to 50. (Plots of NDCG@N and Hit@N against N for ItemPop, PageRank, TriRank, EFM, and NECoNMF.)
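The comparisons above mix two kinds of figures. Some are ratios of scores ("13×"), and others are relative gains ("60%"). The small helper below recomputes both kinds from the rounded NDCG@5 and HIT@5 values printed in Table 2. Because it uses rounded values, the last digit may differ slightly from figures derived from unrounded scores. For example, the Amazon HIT@5 of NECoNMF versus TriRank (0.1301 vs 0.1127) is a ratio of about 1.15, that is, a gain of about 15.4%. The names `ratio` and `relative_gain` are hypothetical.

```python
# NDCG@5 and HIT@5 values copied from Table 2 (rounded to four decimals).
table2 = {
    "Yelp": {"ItemPop": (0.0110, 0.0136), "PageRank": (0.0235, 0.0278),
             "TriRank": (0.0258, 0.0313), "NECoNMF": (0.3366, 0.0503)},
    "Amazon": {"ItemPop": (0.0077, 0.0082), "PageRank": (0.0978, 0.1070),
               "TriRank": (0.1033, 0.1127), "NECoNMF": (0.3892, 0.1301)},
}


def ratio(ours: float, baseline: float) -> float:
    """How many times larger our score is (13.0 reads as '13x')."""
    return ours / baseline


def relative_gain(ours: float, baseline: float) -> float:
    """Relative improvement in percent (15.4 reads as '15.4% better')."""
    return 100.0 * (ours - baseline) / baseline


for dataset, rows in table2.items():
    ours_ndcg, ours_hit = rows["NECoNMF"]
    for name in ("ItemPop", "PageRank", "TriRank"):
        base_ndcg, base_hit = rows[name]
        print(f"{dataset} vs {name}: NDCG@5 x{ratio(ours_ndcg, base_ndcg):.1f}, "
              f"HIT@5 +{relative_gain(ours_hit, base_hit):.1f}%")
```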

Comparing NECoNMF and EFM, we observe that their performance is close, although NECoNMF shows an improvement for top-N recommendation. Based on the results in Table 2, we observe an improvement of 18.3% in NDCG@5, 12.2% in HIT@5, 17.1% in NDCG@10, and 12.2% in HIT@10 on the Yelp dataset. A similar improvement was observed on the Amazon dataset: 7.6% in NDCG@5, 1.3% in HIT@5, 7.9% in NDCG@10, and 3.9% in HIT@10. NECoNMF factorizes item content features and contextual information as additional features, which allows better user modeling in the latent vector space and improves personalized recommendation. EFM, on the other hand, models the user's behaviour based only on ratings and aspects. Content and contextual features play an important role in achieving a better NDCG score, since they capture users' interests in specific scenarios, for example, brunch at a restaurant on a Sunday. Furthermore, EFM factorizes its input matrices into four different latent spaces, whereas NECoNMF collectively factorizes the matrices into one latent space shared among them. Jointly factorizing the matrices may therefore identify hidden latent features that the EFM model does not capture during the recommendation process.
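The idea of factorizing several matrices into one shared latent space can be illustrated with a small collective NMF sketch. Each non-negative matrix $X_j$ is approximated as $W H_j$ with a single shared factor $W$, so that $\sum_j \lVert X_j - W H_j \rVert_F^2$ is minimized with the multiplicative updates of Lee and Seung (2000). This is an assumption-laden illustration, not the authors' method. The paper's exact matrices, the shared dimension, the regularization λ, and the solver are not reproduced here; the reported learning rate suggests a gradient-based optimizer. The matrix names in the example are hypothetical toy data.

```python
import numpy as np


def collective_nmf(mats, k, iters=200, seed=0, eps=1e-9):
    """Jointly factorize X_j ~ W @ H_j (all non-negative) with one shared factor W.

    mats: list of non-negative arrays that share the same number of rows.
    Minimizes sum_j ||X_j - W H_j||_F^2 with multiplicative updates.
    """
    rng = np.random.default_rng(seed)
    W = rng.random((mats[0].shape[0], k))
    Hs = [rng.random((k, X.shape[1])) for X in mats]
    for _ in range(iters):
        # Update each matrix-specific factor H_j with W fixed.
        for j, X in enumerate(mats):
            Hs[j] *= (W.T @ X) / (W.T @ W @ Hs[j] + eps)
        # Update the shared factor using all matrices at once.
        num = sum(X @ H.T for X, H in zip(mats, Hs))
        den = W @ sum(H @ H.T for H in Hs) + eps
        W *= num / den
    return W, Hs


def loss(mats, W, Hs):
    """Total squared reconstruction error over all matrices."""
    return sum(np.linalg.norm(X - W @ H) ** 2 for X, H in zip(mats, Hs))


rng = np.random.default_rng(1)
ratings = rng.random((6, 5))   # toy user x item matrix (hypothetical)
context = rng.random((6, 3))   # toy user x context matrix (hypothetical)
W, (H_ratings, H_context) = collective_nmf([ratings, context], k=2)
print(loss([ratings, context], W, [H_ratings, H_context]))
```

The shared $W$ receives gradient signal from every matrix at once. For this reason, a latent dimension that helps explain context data can also shape the user representation used for ratings, which is the intuition behind jointly factorizing the matrices.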

Moreover, all models perform better for top-10 than for top-5 recommendation, likely because a longer list has more opportunities to include items from the test dataset. Figures 4 and 5 show that NECoNMF achieves higher accuracy for top-N recommendation than the baselines as N varies from 5 to 50.

Figure 5: Empirical evaluation on the Amazon dataset for N from 5 to 50. (Plots of NDCG@N and Hit@N against N for ItemPop, PageRank, TriRank, EFM, and NECoNMF.)

6.2 Explainability

NECoNMF can provide explainable recommendations; however, explainability is not easy to evaluate. We apply the evaluation method described by Zhang et al. (2014), which assesses the quality of explanations through examples generated by the explainable model.

Table 3 shows three example explanations for different predicted user ratings. NECoNMF generates an explanation in the user's writing style for each user–item–rating tuple. Dark grey marks negative sentiment and light grey marks positive sentiment about different item features. Mid-grey marks contextual features such as family, location, and weekend. Note that the generated explanation is presented in the style of a personalized item review, because reviews strongly influence users' decisions. Compared with the explanations of Zhang et al. (2014), the review-based explanations in Table 3 are more readable, owing to natural language text generation.


7 CONCLUSIONS

We proposed a neural explainable collective non-negative matrix factorization (NECoNMF) for recommender systems that combines ratings, content features, sentiment, and contextual information in a common latent space. Furthermore, we introduced a neural explainable model to interpret the predicted top-N recommendations. Finally, we presented the results of experiments on two datasets, in which NECoNMF outperforms the state-of-the-art methods.

The top-N recommendation task was addressed using four different matrices as input for collective non-negative matrix factorization. The combination of content features, contexts, ratings, and sentiment plays an important role in explaining the recommended list of items to a user.

The explainable model proved effective for the review-oriented explanation task. The generated explanations may help users decide based on specific item features, as users tend to trust review-based explanations. Moreover, character-level text generation has the benefit of producing readable, personalized text.

In future work, we would like to improve the readability of the explanations produced by the explainable model and extend the project into a general explainable recommender system able to explain any recommendation method. We also plan to investigate technical improvements related to the cold-start problem.

ACKNOWLEDGEMENTS

The authors wish to acknowledge the financial support and the scholarship given to this research by the Conselho Nacional de Desenvolvimento Científico e Tecnológico - CNPq (grant# 206065/2014-0).

REFERENCES

Bengio, Y., Simard, P., and Frasconi, P. (1994). Learning long-term dependencies with gradient descent is difficult. IEEE Transactions on Neural Networks, 5(2):157–166.

Costa, F. and Dolog, P. (2018). Hybrid learning model with Barzilai-Borwein optimization for context-aware recommendations. In Proceedings of the Thirty-First International Florida Artificial Intelligence Research Society Conference, FLAIRS 2018, Melbourne, Florida, USA, May 21–23, 2018, pages 456–461.

Costa, F., Ouyang, S., Dolog, P., and Lawlor, A. (2018). Automatic generation of natural language explanations. In Proceedings of the 23rd International Conference on Intelligent User Interfaces Companion, IUI'18, pages 57:1–57:2. ACM.

Cremonesi, P., Koren, Y., and Turrin, R. (2010). Performance of recommender algorithms on top-n recommendation tasks. In Proceedings of the Fourth ACM Conference on Recommender Systems, RecSys '10, pages 39–46, New York, NY, USA. ACM.

Dong, L., Huang, S., Wei, F., Lapata, M., Zhou, M., and Xu, K. (2017). Learning to generate product reviews from attributes. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics, EACL'17, pages 623–632. Association for Computational Linguistics.

El Hihi, S. and Bengio, Y. (1995). Hierarchical recurrent neural networks for long-term dependencies. In Proceedings of the 8th International Conference on Neural Information Processing Systems, NIPS'95, pages 493–499, Cambridge, MA, USA. MIT Press.

Haveliwala, T. H. (2002). Topic-sensitive PageRank. In Proceedings of the 11th International Conference on World Wide Web, WWW '02, pages 517–526, New York, NY, USA. ACM.

He, X., Chen, T., Kan, M.-Y., and Chen, X. (2015). TriRank: Review-aware explainable recommendation by modeling aspects. In Proceedings of the 24th ACM International on Conference on Information and Knowledge Management, CIKM '15, pages 1661–1670, New York, NY, USA. ACM.

He, X., Kan, M.-Y., Xie, P., and Chen, X. (2014). Comment-based multi-view clustering of web 2.0 items. In Proceedings of the 23rd International Conference on World Wide Web, WWW '14, pages 771–782, New York, NY, USA. ACM.

Hochreiter, S. and Schmidhuber, J. (1997). Long short-term memory. Neural Computation, 9(8):1735–1780.

Karpathy, A., Johnson, J., and Li, F. (2015). Visualizing and understanding recurrent networks. CoRR, abs/1506.02078. International Conference on Learning Representations.

Koren, Y., Bell, R., and Volinsky, C. (2009). Matrix factorization techniques for recommender systems. Computer, 42(8):30–37.

Le, Q. and Mikolov, T. (2014). Distributed representations of sentences and documents. In Proceedings of the 31st International Conference on International Conference on Machine Learning - Volume 32, ICML'14, pages II–1188–II–1196. JMLR.org.

Lee, D. D. and Seung, H. S. (2000). Algorithms for non-negative matrix factorization. In Proceedings of the 13th International Conference on Neural Information Processing Systems, NIPS'00, pages 535–541, Cambridge, MA, USA. MIT Press.

Liu, J., Wang, C., Gao, J., and Han, J. (2013). Multi-view clustering via joint nonnegative matrix factorization. In Proceedings of the 2013 SIAM International Conference on Data Mining, pages 252–260. SIAM.


Ren, Z., Liang, S., Li, P., Wang, S., and de Rijke, M. (2017). Social collaborative viewpoint regression with explainable recommendations. In Proceedings of the Tenth ACM International Conference on Web Search and Data Mining, WSDM '17, pages 485–494, New York, NY, USA. ACM.

Saveski, M. and Mantrach, A. (2014). Item cold-start recommendations: Learning local collective embeddings. In Proceedings of the 8th ACM Conference on Recommender Systems, RecSys '14, pages 89–96, New York, NY, USA. ACM.

Sutskever, I., Vinyals, O., and Le, Q. V. (2014). Sequence to sequence learning with neural networks. In Advances in Neural Information Processing Systems, pages 3104–3112.

Zhang, Y., Lai, G., Zhang, M., Zhang, Y., Liu, Y., and Ma, S. (2014). Explicit factor models for explainable recommendation based on phrase-level sentiment analysis. In Proceedings of the 37th International ACM SIGIR Conference on Research & Development in Information Retrieval, SIGIR '14, pages 83–92, New York, NY, USA. ACM.
