Usability Heuristics for Mobile Applications

A Systematic Review

Marcos Antonio Durães Dourado¹ and Edna Dias Canedo²

¹ Faculty of Gama (FGA), University of Brasília (UnB), Brasília-DF, Box 8114, CEP 72.444-240, Brazil

² Department of Computer Science, Building CIC/EST, University of Brasília (UnB), Brasília-DF, P.O. Box 4466, CEP 70910-900, Brazil

Keywords:

Usability, Usability Heuristics, Heuristic Evaluation, Mobile, Smartphone, Systematic Review, Human-Computer Interaction.

Abstract:

Usability is one of the factors that most affect software quality. The increasing adoption of mobile devices brings new usability challenges, as well as a need for standards specific to this type of product. This paper conducts a systematic literature review, complemented by manual and snowballing searches, to obtain usability heuristics and heuristic evaluations for mobile applications. The result of the study is a set of thirteen usability heuristics specific to smartphones and related to Nielsen's ten heuristics. In addition, five possible ways of evaluating the usability of mobile applications are described. The specification of the heuristics shows that they can be used both to evaluate existing applications and to prototype new ones, which helps developers achieve their product-quality goals. The main contribution of this work is the compilation of desktop usability heuristics into a new, more specific set of heuristics adapted to the mobile paradigm.

1 INTRODUCTION

The use of smartphones has been growing substantially in the market, together with the development and use of applications for these devices (Biel et al., 2010). As the use of mobile devices and their applications grows, new challenges appear, and some aspects, such as usability, need to be studied and developed further (de Lima Salgado and Freire, 2014).

Usability is defined as the "capacity to be used", that is, the capacity of the device to be used (Quiñones and Rusu, 2017). In practice, usability depends on what users want to do, on their goals, and on the context in which they are acting (Inostroza et al., 2016).

Usability can be built into the product and evaluated through usability inspections or usability testing. The method most commonly used to evaluate this requirement is heuristic evaluation (Quiñones and Rusu, 2017).

Several researchers have been developing usability heuristics for specific contexts. The purpose of this study is to identify the usability heuristics specific to mobile applications and to determine how the usability of these applications can be evaluated.

In this paper we conducted a systematic literature review, as suggested by Kitchenham (Kitchenham, 2004), to identify usability heuristics and heuristic evaluations focused on mobile applications. In addition, a manual search and the snowballing practice proposed by (Wohlin and Prikladniki, 2013) were applied. The goal was to list approaches that help develop applications whose usability meets the needs of the end user.

This paper is organized in seven sections. Section 2 presents the theoretical basis of this work. Section 3 presents the systematic review planning. Section 4 describes how the study selection procedures were conducted. Section 5 presents the results of the research, answering the research questions. Section 6 describes a case study. Finally, Section 7 presents the conclusions of this work.

2 CONTEXTUALIZATION

This Section presents the concepts of usability, heuristic evaluation, and usability heuristics.

Durães Dourado, M. and Dias Canedo, E.

Usability Heuristics for Mobile Applications.

DOI: 10.5220/0006781404830494

In Proceedings of the 20th International Conference on Enterprise Information Systems (ICEIS 2018), pages 483-494

ISBN: 978-989-758-298-1

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


2.1 Usability

Usability principles have been applied to a variety of contexts, such as mobile devices, computers, mobile apps, websites accessed on mobile devices, websites accessed on computers, interfaces, software, PDAs, tablets, and so forth.

In the mobile context, the correlation between usability perception and information is greater than the correlations between usability perception and any other factor potentially affecting it. The correlation between usability perception and application design is, in turn, greater than the correlations with all the other factors except information.

The same pattern holds in the computer website context: information has the strongest correlation with usability perception, followed by application design.

Usability is a quality attribute that assesses how easy user interfaces are to use. The word "usability" also refers to methods for improving ease of use during the design process. Usability is defined by five quality components (Nielsen, 2003):

• Learnability. How easy it is to learn the main system functionality and gain enough proficiency to complete the job. We usually assess this by measuring how long a user must work with the system before being able to complete certain tasks in the time an expert would take to complete the same tasks. This attribute is very important for novice users.

• Efficiency. The number of tasks per unit of time that the user can perform using the system. We look for the maximum speed of user task performance: the higher the system's usability, the faster the user can perform tasks and complete the job.

• Memorability. When users return to the design after a period of not using it, how easily can they reestablish proficiency? It is critical for intermittent users to be able to use the system without having to climb the learning curve again. This attribute reflects how well the user remembers how the system works after a period of non-usage.

• Errors. This attribute contributes negatively to usability. It does not refer to system errors; instead, it addresses the number of errors the user makes while performing a task. Good usability implies a low error rate. Errors reduce efficiency and user satisfaction, and they can be seen as a failure to communicate to the user the right way of doing things.

• Satisfaction. How pleasant is it to use the design?

One problem concerning usability is that these attributes sometimes conflict. For example, learnability and efficiency often influence each other negatively. A system that requires both high learnability and high efficiency must be carefully designed; for example, using accelerators (key combinations that perform a frequent task) usually resolves this conflict. The point is that a system's usability is not merely the sum of its attributes' values; it is defined as reaching a certain level for each attribute (Ferré et al., 2001).
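The difference between summing attribute values and requiring a level for each attribute can be made concrete with a small check. The sketch below is illustrative only: it assumes each of Nielsen's five components has been normalized to a score between 0 and 1 where higher is better (so "errors" is expressed as error avoidance), and the target levels and scores are hypothetical values, not measurements from any study.

```python
from typing import Dict

# Nielsen's five quality components (Nielsen, 2003).
ATTRIBUTES = ("learnability", "efficiency", "memorability", "errors", "satisfaction")


def meets_usability_targets(scores: Dict[str, float], targets: Dict[str, float]) -> bool:
    """Return True only if every attribute reaches its own target level.

    Assumption: scores are normalized to [0, 1] with higher meaning better,
    so "errors" is expressed as error avoidance rather than error rate.
    """
    return all(scores[name] >= targets[name] for name in ATTRIBUTES)


# Hypothetical targets and scores, for illustration only.
targets = dict.fromkeys(ATTRIBUTES, 0.6)
unbalanced = {"learnability": 1.0, "efficiency": 1.0, "memorability": 1.0,
              "errors": 1.0, "satisfaction": 0.2}
balanced = dict.fromkeys(ATTRIBUTES, 0.7)

print(meets_usability_targets(unbalanced, targets))
print(meets_usability_targets(balanced, targets))
```

The unbalanced profile has the larger total (4.2 against 3.5) yet fails the check, because satisfaction falls below its target; this is the sense in which usability is a level per attribute rather than a sum.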

There are many other important quality attributes. A key one is utility, which refers to the design's functionality: does it do what users need?

Usability and utility are equally important and together determine whether something is useful. It matters little that something is easy if it is not what you want, and it is also no good if the system can hypothetically do what you want but you cannot make it happen because the user interface is too difficult. To study a design's utility, you can use the same user research methods that improve usability (Nielsen, 2003).

ISO/IEC 9126-1 (for Standardization and Commission, 2001), which addresses software engineering and product quality, describes usability as the capability of the software product to be understood, learned, operated and found attractive by the user. In addition, it describes six categories of application quality that should be addressed during application development.

ISO/IEC 25000 (Suryn et al., 2003) was developed to replace and extend ISO/IEC 9126 and ISO/IEC 14598. This standard, also known as SQuaRE (Software Product Quality Requirements and Evaluation), organizes the concepts related to two main processes, software quality requirements specification and software quality assessment, both supported by a software quality measurement process.

There is no clear and generally accepted definition of usability (Inostroza et al., 2016). Measuring usability is complex because usability is not a specific property of a person or of a product; one cannot measure usability with a simple usability thermometer (Lewis, 2014). Given the human and product factors that influence usability, it is remarkably difficult to measure, and several papers address this difficulty (Quiñones and Rusu, 2017).

ICEIS 2018 - 20th International Conference on Enterprise Information Systems


2.2 Heuristic Evaluation

Heuristic evaluation, proposed by Nielsen and Molich (Nielsen, 1990), evaluates a product against usability principles, or heuristics: three to five specialists inspect the application and point out what is correct or incorrect (Scholtz, 2004).
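As an illustration of how the findings of several evaluators might be consolidated, the sketch below records each reported problem as an (evaluator, heuristic, problem) triple, checks that the team has three to five members as described above, and groups identical problems so that the number of evaluators who found each one is visible. Matching problems by identical description is a simplifying assumption; in practice evaluators usually consolidate their lists by discussion. The function name, evaluator names and problems are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

# (evaluator, heuristic, problem description)
Finding = Tuple[str, str, str]


def merge_findings(team: Set[str], findings: Iterable[Finding],
                   min_size: int = 3, max_size: int = 5) -> Dict[Tuple[str, str], Set[str]]:
    """Group identical (heuristic, problem) pairs and record who reported each one."""
    if not min_size <= len(team) <= max_size:
        raise ValueError(f"expected {min_size} to {max_size} evaluators, got {len(team)}")
    merged: Dict[Tuple[str, str], Set[str]] = defaultdict(set)
    for evaluator, heuristic, problem in findings:
        if evaluator not in team:
            raise ValueError(f"unknown evaluator: {evaluator}")
        merged[(heuristic, problem)].add(evaluator)
    return dict(merged)


team = {"E1", "E2", "E3"}
report = merge_findings(team, [
    ("E1", "Visibility of System Status", "No progress indicator during upload"),
    ("E2", "Visibility of System Status", "No progress indicator during upload"),
    ("E3", "Consistency and Standards", "Back button label differs between screens"),
])
for (heuristic, problem), who in sorted(report.items()):
    print(f"{heuristic}: {problem} (reported by {len(who)} evaluator(s))")
```

Problems reported by several evaluators independently stand out in the merged report, which is one reason the method relies on more than one specialist.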

To evaluate the usability of touchscreen devices, the specific aspects of these devices must be taken into account (Inostroza et al., 2012a). The design of smartphones is influenced mainly by three aspects (Inostroza et al., 2013):

1. Smartphones are mainly used while held in the user's hands.

2. They are operated wirelessly.

3. They support the addition of new applications and Internet connection.

Elements such as light, sound and form of interaction are not as well defined as in traditional applications. Another challenge is the context in which touchscreen mobile devices are used. In traditional applications, the context of use is well defined in terms of light, sound and form of interaction (mouse and keyboard) (Suryn et al., 2003). Smartphones, by contrast, can be used in many places, such as queues, hospitals and banks, and this portability is one of their advantages.

There are several other methods for evaluating the usability of an application (Quiñones and Rusu, 2017). As the points above show, heuristic evaluation of mobile devices is not simple. This study aims to obtain more specific and efficient heuristics for smartphones.

2.3 Usability Heuristics

Usability heuristics take their name from the fact that they are usability guidelines (Quiñones and Rusu, 2017). In 1982, Malone (Malone and W., 1982) proposed the first heuristics for designing a user-friendly application. However, these heuristics were limited and applicable only to high-level issues in games.

The most widely known heuristics are Nielsen's ten heuristics (Jackob Nielsen, 1995). They were written by the same authors who devised heuristic evaluation as a way to inspect the quality of a product. The guide contains ten principles (Jackob Nielsen, 1995):

1. Visibility of System Status – The system should always keep users informed about what is going on, through appropriate feedback within reasonable time.

2. Correspondence between the System and the Real World – The system should speak the users' language, with words, phrases and concepts familiar to the user, rather than system-oriented terms. Follow real-world conventions, making information appear in a natural and logical order.

3. User Control and Freedom – Users often choose system functions by mistake and will need a clearly marked "emergency exit" to leave the unwanted state without having to go through an extended dialogue. Support undo and redo.

4. Consistency and Standards – Users should not have to wonder whether different words, situations, or actions mean the same thing. Follow platform conventions.

5. Prevention of Errors – Even better than good error messages is a careful design which prevents a problem from occurring in the first place. Either eliminate error-prone conditions or check for them and present users with a confirmation option before they commit to the action.

6. Recognition and not Remembering – Minimize the user's memory load by making objects, actions, and options visible. The user should not have to remember information from one part of the dialogue to another. Instructions for use of the system should be visible or easily retrievable whenever appropriate.

7. Flexibility and Efficiency of Use – Accelerators, unseen by the novice user, may often speed up the interaction for the expert user such that the system can cater to both inexperienced and experienced users. Allow users to tailor frequent actions.

8. Aesthetic and Minimalist Design – Dialogues should not contain information which is irrelevant or rarely needed. Every extra unit of information in a dialogue competes with the relevant units of information and diminishes their relative visibility.

9. Help Users Recognize, Diagnose, and Recover from Errors – Error messages should be expressed in plain language (no codes), precisely indicate the problem, and constructively suggest a solution.

10. Help and Documentation – Even though it is better if the system can be used without documentation, it may be necessary to provide help and documentation. Any such information should be easy to search, focused on the user's task, list concrete steps to be carried out, and not be too large.
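When an evaluation is organized around the ten heuristics above, it can help to keep them as a fixed enumeration so that a report always covers all ten, including those with no violations. The following is a hypothetical tally, not part of the original method; the enumeration names are abbreviated from the headings listed above.

```python
from collections import Counter
from enum import Enum
from typing import Dict, Iterable


class NielsenHeuristic(Enum):
    VISIBILITY_OF_SYSTEM_STATUS = 1
    CORRESPONDENCE_WITH_REAL_WORLD = 2
    USER_CONTROL_AND_FREEDOM = 3
    CONSISTENCY_AND_STANDARDS = 4
    PREVENTION_OF_ERRORS = 5
    RECOGNITION_NOT_REMEMBERING = 6
    FLEXIBILITY_AND_EFFICIENCY = 7
    AESTHETIC_MINIMALIST_DESIGN = 8
    RECOGNIZE_DIAGNOSE_RECOVER = 9
    HELP_AND_DOCUMENTATION = 10


def tally_violations(violations: Iterable[NielsenHeuristic]) -> Dict[NielsenHeuristic, int]:
    """Count violations per heuristic, listing all ten even when a count is zero."""
    counts = Counter(violations)
    return {heuristic: counts[heuristic] for heuristic in NielsenHeuristic}


summary = tally_violations([
    NielsenHeuristic.VISIBILITY_OF_SYSTEM_STATUS,
    NielsenHeuristic.VISIBILITY_OF_SYSTEM_STATUS,
    NielsenHeuristic.HELP_AND_DOCUMENTATION,
])
for heuristic, count in summary.items():
    print(f"{heuristic.value:2d}. {heuristic.name}: {count}")
```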

Although these heuristics are widely used, specific heuristics are needed for each type of application (Quiñones and Rusu, 2017). With this in view, new heuristics have been created so that inspections and their results can be more efficient (Inostroza et al., 2016).

3 SYSTEMATIC REVIEW PLANNING

The systematic review uses the approach suggested by Kitchenham (Kitchenham, 2004). A systematic literature review is a means of identifying, evaluating and interpreting all available research relevant to a particular research question, topic area, or phenomenon of interest. Individual studies contributing to a systematic review are called primary studies; a systematic review is a form of secondary study.

The systematic review involves three steps:

1. Review Planning: define the need for a systematic review; formulate the research questions; and define a review protocol (data sources, search strategy and terms, study selection criteria, study quality, data extraction, and data synthesis).

2. Conducting the Review: select and analyze the studies; answer the research questions; and present the results, discussions and conclusions.

3. Reporting the Review: write up the review results and format the final document.

Inclusion and exclusion criteria were defined for the selection of papers, which had to deal with usability heuristics, or guidelines, for mobile applications only; papers specific to desktop applications or websites were therefore excluded. After the search, ambiguous or irrelevant papers were also excluded. Each of these steps is described in detail below.

In addition to the pure systematic review proposed by Kitchenham (Kitchenham, 2004), two other research techniques were applied, as described in Section 3.6: manual search (Section 3.6.1) and snowballing (Section 3.6.2).

3.1 Research Questions

Through the selected primary studies, the systematic review seeks to answer the research questions shown in Table 1.

3.2 Databases

The systematic review in (Quiñones and Rusu, 2017) points to the use of the IEEE and ACM databases in later work, in addition to ScienceDirect, which those researchers used. Therefore, these three databases are used in this systematic review.

Table 1: Research Questions.

ID     Research Question
RQ1    What heuristics are used to evaluate product quality in mobile applications?
RQ2    What metrics are used to evaluate usability heuristics for mobile applications?

3.3 Search String

The search string requires at least one of the terms "usability heuristic" or "usability heuristics" to appear together with at least one of the terms "mobile" or "smartphone", so that only mobile-related usability heuristics are selected. The terms "evaluation", "Human-Computer Interaction" and "user experience" were also added to refine the search. After planning which terms should be included, the resulting string was:

(("usability heuristic" OR "usability heuristics") AND (mobile OR smartphone)) AND (evaluation) AND ("Human-Computer Interaction") AND ("user experience")
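To make the Boolean structure of the string explicit, the sketch below applies it to a piece of text such as a title plus abstract. It assumes simple case-insensitive substring matching; the actual databases (IEEE, ACM, ScienceDirect) each have their own query syntax, field selection and tokenization, so this only approximates how they evaluate the query. The function name is hypothetical.

```python
def matches_search_string(text: str) -> bool:
    """Approximate the review's search string with case-insensitive substring matching."""
    t = text.lower()
    # Under substring matching, "usability heuristic" already matches "usability heuristics".
    heuristics = "usability heuristic" in t or "usability heuristics" in t
    platform = "mobile" in t or "smartphone" in t
    return (heuristics and platform
            and "evaluation" in t
            and "human-computer interaction" in t
            and "user experience" in t)


sample = ("Usability heuristics for smartphone apps: an evaluation grounded in "
          "Human-Computer Interaction and user experience research")
print(matches_search_string(sample))                                        # True
print(matches_search_string("Usability heuristics for desktop software"))   # False
```

Because every group is joined by AND, omitting any one of the refining terms ("evaluation", "Human-Computer Interaction", "user experience") is enough for a text to be rejected.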

3.4 Study Selection Criteria

Several criteria were used to select studies that fit the needs of this research; for this purpose, inclusion and exclusion criteria were developed. To be selected, studies had to meet the following criteria:

1. Research papers that contain proposals of usability heuristics for mobile applications;

2. Studies that propose an approach, process or methodology to establish usability heuristics;

3. Studies published between 2007 and 2017;

4. Papers written in English or Portuguese.

The following types of papers were excluded:

1. Studies that propose heuristics for other aspects (e.g., aesthetics, automation, hyper-heuristics);

2. Studies that do not explain how the usability heuristics were developed;

3. Theses (e.g., master's theses) or monographs;

4. Papers not focused primarily on the definition of usability heuristics, such as reports on usability case studies or usability tests;

5. Studies related to mobile communication infrastructure, mobile hardware or robotics;

6. Papers focused on desktop, web or game applications;

7. Studies outside the field of computer or software engineering;

8. Incomplete studies, such as abstracts only and extended abstracts;

9. Studies with fewer than four pages, published as short papers;

10. Studies that present only opinions without any supporting empirical evidence.
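A few of these criteria are mechanical and can be expressed as a filter, while the others remain reviewer judgments. The sketch below encodes only a subset: the reviewer's judgments are represented as boolean fields, only inclusion criterion 1 is modeled among the first two, and combining the inclusion criteria with AND is an assumption, since the list does not say how they are combined. The `Candidate` record and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical record of the attributes a reviewer judges for each study."""
    title: str
    year: int
    language: str
    pages: int
    kind: str                          # e.g. "journal", "conference", "thesis", "monograph"
    platform: str                      # e.g. "mobile", "desktop", "web", "game"
    proposes_mobile_heuristics: bool   # inclusion criterion 1
    explains_development: bool         # exclusion criterion 2 applies when False
    has_empirical_evidence: bool       # exclusion criterion 10 applies when False


def is_selected(c: Candidate) -> bool:
    """Apply a subset of the inclusion and exclusion criteria listed above."""
    included = (
        c.proposes_mobile_heuristics
        and 2007 <= c.year <= 2017
        and c.language in {"English", "Portuguese"}
    )
    excluded = (
        not c.explains_development
        or c.kind in {"thesis", "monograph"}
        or c.platform != "mobile"
        or c.pages < 4
        or not c.has_empirical_evidence
    )
    return included and not excluded


ok = Candidate("Heuristics for touchscreen apps", 2014, "English", 10,
               "conference", "mobile", True, True, True)
print(is_selected(ok))
```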

Applying the search string to the previously selected databases yielded an initial set of 43 papers. A qualitative analysis was then performed on these studies, and any paper without relevant information to extract could be excluded from the analysis. Taking into account the purpose of this review, four criteria were established:

1. The paper contains heuristics specific to mobile devices;

2. The paper describes the proposed usability heuristics in detail, with sufficient information to understand them;

3. The set of usability heuristics is an original proposal or an adaptation of another set of heuristics;

4. The paper presents, in detail, a way to evaluate the usability of the application.

3.5 Data Extraction

The data extraction strategy was defined mainly by the design of data extraction forms that would precisely record the information obtained from the selected studies.

After a study was included in the systematic review, the following information was identified and extracted:

1. The authors and year of the study;

2. The usability heuristics used;

3. The heuristic evaluation used to validate the usability of the application.
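One possible shape for such a form is a small record with one field per extracted item. The sketch below is an assumption about how the form could be represented, not the authors' actual form; the class and field names are hypothetical, and the values in the usage example are placeholders rather than data from any reviewed study.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ExtractionRecord:
    """One data extraction form holding the three items listed above."""
    authors: List[str]                                    # item 1
    year: int                                             # item 1
    heuristics: List[str] = field(default_factory=list)   # item 2
    evaluation: str = ""                                  # item 3

    def as_row(self) -> Tuple[str, int, str, str]:
        """Flatten the form into one row of a summary spreadsheet."""
        return ("; ".join(self.authors), self.year,
                "; ".join(self.heuristics), self.evaluation)


form = ExtractionRecord(
    authors=["Author A", "Author B"],   # placeholder values, not a real study
    year=2016,
    heuristics=["Visibility of System Status", "User Control and Freedom"],
    evaluation="Heuristic evaluation with three specialists",
)
print(form.as_row())
```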

3.6 Procedures for Selecting a Study

The systematic review technique makes it possible to identify papers in the selected databases from the research questions, the defined search string, and the inclusion and exclusion criteria. In this work, however, reading the papers also made it possible to perform a manual search in journals already known in the area and to apply the snowballing technique (Wohlin and Prikladniki, 2013), which searches for papers in the references of the papers selected by the systematic review.

The research procedures of this work in which the

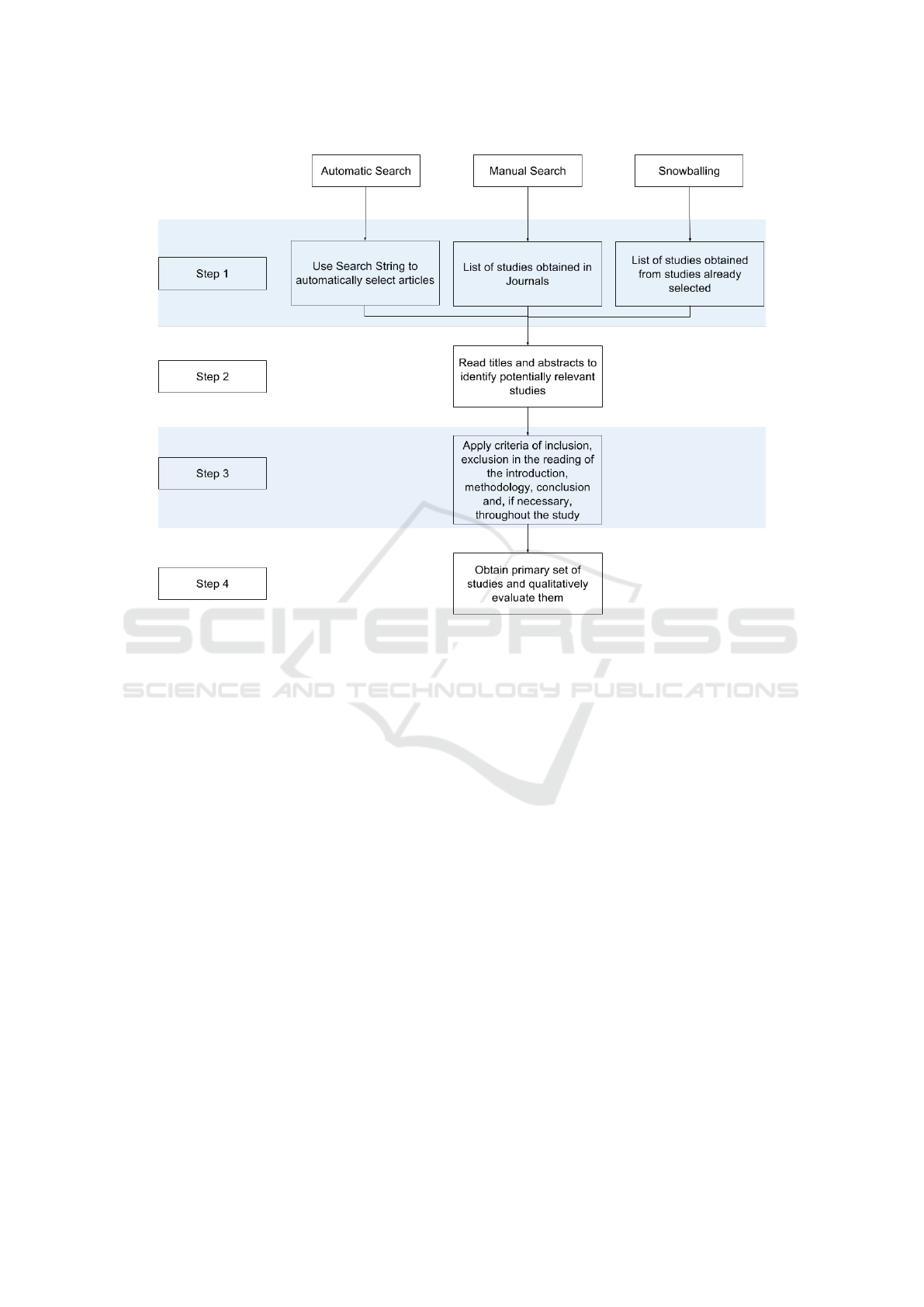

Systematic Review is used in Automatic Search, fol-

lowed by Manual Search and Snowballing. This way

of researching is similar to the one used in (Selleri

Silva et al., 2015). The steps of this research are des-

cribed on the Figure 1. All the steps are also better

described bellow:

• Step 1: Perform the automatic search, the manual search and snowballing to identify a preliminary list of studies. Duplicate studies were discarded.

• Step 2: Identify potentially relevant studies based on title and abstract analysis, discarding studies that are clearly irrelevant to the research. When there was any doubt about whether to include or exclude a study, it was passed on to the next step to check its relevance.

• Step 3: Review the studies selected in the previous steps by reading the introduction, methodology section and conclusion and applying the inclusion and exclusion criteria. If reading these sections was not enough to make a firm decision, the study was read in its entirety.

• Step 4: The resulting list of primary studies was then subjected to critical examination using the established criteria.
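The four steps can be sketched as a small pipeline in which the screening decisions are passed in as functions standing in for the reviewers' judgment. Detecting duplicates by normalized title is an assumption, and the `Study` type and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional


@dataclass(frozen=True)
class Study:
    title: str
    abstract: str = ""


def merge_sources(*sources: Iterable[Study]) -> List[Study]:
    """Step 1: combine the automatic, manual and snowballing results, discarding duplicates."""
    seen = set()
    merged = []
    for source in sources:
        for study in source:
            key = " ".join(study.title.lower().split())  # ignore case and extra whitespace
            if key not in seen:
                seen.add(key)
                merged.append(study)
    return merged


def select_primary_studies(
    studies: Iterable[Study],
    screen_title_abstract: Callable[[Study], Optional[bool]],
    screen_full_text: Callable[[Study], bool],
) -> List[Study]:
    """Steps 2 to 4: title/abstract screening, then the criteria, then the primary list."""
    primary = []
    for study in studies:
        # Step 2: False means clearly irrelevant; True or None (in doubt) moves on to Step 3.
        if screen_title_abstract(study) is False:
            continue
        # Step 3: introduction, methodology and conclusion (or the full text) vs. the criteria.
        if screen_full_text(study):
            primary.append(study)
    return primary  # Step 4: primary studies for critical examination


automatic = [Study("Usability heuristics for mobile apps"), Study("A desktop study")]
manual = [Study("usability  heuristics for MOBILE apps")]  # duplicate of the first entry
candidates = merge_sources(automatic, manual)
primary = select_primary_studies(
    candidates,
    screen_title_abstract=lambda s: False if "desktop" in s.title.lower() else None,
    screen_full_text=lambda s: "mobile" in s.title.lower(),
)
print([s.title for s in primary])
```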

3.6.1 Manual Search

The manual search was performed by analyzing the titles and, if necessary, the abstracts of studies published in journals that deal with Human-Computer Interaction. In addition, the search string was applied in Google Scholar. Studies considered potentially relevant were added to the set of selected papers.

3.6.2 Snowballing

Database searches are challenging for a variety of reasons, including selecting which databases to use, their different interfaces, different ways of constructing search strings, different search limitations across databases, and identifying databases and synonyms for the terms used [6]. This reasoning leads to two conclusions:

1. Searching databases, often chosen as the first step of the search strategy, frequently becomes the only step;

2. Given the challenges posed by the databases, important studies can be missed.

Figure 1: Procedure for Selecting a Study.

Based on the snowballing guidelines proposed by Wohlin and Prikladniki (Wohlin and Prikladniki, 2013), the following steps were used in this study:

1. Use the papers selected in the automatic and manual searches as the initial set of studies;

2. Based on the selected studies, check their references, looking in particular at works by authors already included, since these authors are likely to carry out research relevant to the review's objectives (backward snowballing);

3. Based on the resulting set of documents, check the studies that cite the selected studies (forward snowballing). Google Scholar is recommended for this step because it covers more than individual databases.
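Backward and forward snowballing can be sketched over a citation graph stored as two dictionaries: the references of each paper and the papers that cite it (in practice gathered from reference lists and Google Scholar). The sketch assumes papers are identified by simple IDs, ignores the author-based part of step 2, and repeats the process until no new relevant paper appears; the steps above do not state how many rounds were performed, so the stopping rule is an assumption. The function name is hypothetical.

```python
from typing import Callable, Dict, List, Set


def snowball(start: Set[str],
             references: Dict[str, List[str]],
             cited_by: Dict[str, List[str]],
             is_relevant: Callable[[str], bool]) -> Set[str]:
    """Backward (references) and forward (citations) snowballing from a start set."""
    selected = set(start)
    frontier = set(start)
    while frontier:
        candidates: Set[str] = set()
        for paper in frontier:
            candidates.update(references.get(paper, []))  # backward snowballing
            candidates.update(cited_by.get(paper, []))    # forward snowballing
        new = {p for p in candidates - selected if is_relevant(p)}
        selected |= new
        frontier = new  # the next round starts only from papers added in this round
    return selected


references = {"A": ["B", "C"], "B": ["D"]}
cited_by = {"A": ["E"]}
print(sorted(snowball({"A"}, references, cited_by, lambda p: p != "C")))  # ['A', 'B', 'D', 'E']
```

Since the selected set only grows and the graph is finite, the loop always terminates.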

4 CONDUCTING THE PROCEDURES FOR SELECTING A STUDY

This Section presents how the studies were selected by following the procedures described in Section 3.6.

4.1 Conduct of Systematic Review

Using the search string in the previously chosen databases, a total of 38 papers were pre-selected, completing Step 1 of the search procedure described in Section 3.6.

In Step 2, the titles and abstracts of the selected studies were read; when necessary, the introduction, methodology and conclusion were also read, which constitutes Step 3. At the end of these procedures, 5 papers were chosen.

4.2 Conduct of Manual Search

The Manual Search was carried out in parallel with the Systematic Review. The sources chosen to search for new studies were:

• Google Scholar - https://scholar.google.com.br/;

• Springer - http://www.springer.com/;

• MobileHCI - https://mobilehci.acm.org/.

A total of 4 papers were pre-selected and submitted to the steps described in Section 3.6. At the end of the Manual Search, 2 papers were added to the primary set of studies.


4.3 Conduct of Snowballing

After the 7 papers had been selected via the Manual Search and the Systematic Review, Snowballing was performed. Ten papers were pre-selected and submitted to the same selection criteria as the other papers. Only one of them was selected: the others had either already been found by the other search methods or were useful only for specific fields, such as games or maps.

5 RESULTS

This section summarizes the results of the systematic review. The analysis focuses on Table 2, which shows the studies found through the Manual Search, the Systematic Review and Snowballing. Research Questions (RQ) 1 and 2, presented in Table 1, are also answered in this section.

A total of 50 papers were analyzed while conducting the searches described in Sections 4.1, 4.2 and 4.3. Of these, 8 were selected for data extraction, as described in Section 3.5.

Table 2 shows the selected studies. Based on the results obtained, the following subsections summarize the analysis of each research question.

Table 3 shows the number of selected studies per database.
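As a quick consistency check, the counts in Table 2 (by search method) and Table 3 (by database) can be tallied side by side; both account for the same eight studies selected for data extraction. The share of each database below is simple arithmetic on Table 3.

```python
selected_by_method = {"Systematic Review": 5, "Manual Search": 2, "Snowballing": 1}  # Table 2
selected_by_database = {                                                           # Table 3
    "ACM": 1,
    "IEEE": 4,
    "ScienceDirect": 2,
    "International Journal of Human Computer Interaction": 1,
}

total = sum(selected_by_method.values())
assert total == sum(selected_by_database.values())  # both tables cover the same 8 studies

for database, count in selected_by_database.items():
    print(f"{database}: {count} ({100 * count / total:.1f}%)")
```

IEEE accounts for half of the selected studies (4 of 8).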

5.1 RQ1 What Heuristics are Used to Evaluate Product Quality in Mobile Applications?

Since its appearance, Nielsen's set of usability heuristics (Jackob Nielsen, 1995) has been widely used in research papers. However, there is now more effort to develop and provide new sets of heuristics (Jimenez et al., 2016), and Nielsen's heuristics are currently used as a basis for developing or adapting these new sets.

This study identified the use and development of different sets of usability heuristics specific to mobile applications. These heuristics are listed below:

ID - Name: MHU1 - Visibility of System Status

• Definition: The device must keep the user informed about all processes and state changes through feedback and within a reasonable time.

• Explanation: Through interaction with the device, the user must be able to perform different tasks. These actions can lead to a change in the system state, which must be communicated to the user in some way. In addition, some events are not triggered by user interaction but still require a response, e.g., phone calls and video calls.

• Studies that Justify Its Use: (Inostroza et al., 2012b), (Yáñez Gómez et al., 2014), (Motlagh Tehrani et al., 2014), (Chuan et al., 2014), (Inostroza et al., 2013), (Inostroza et al., 2016) and (Quiñones and Rusu, 2017).
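One common way to honor this heuristic in code is to route every state change, whether triggered by the user or by the system (such as an incoming call), through a single notifier that the interface observes. The sketch below is a hypothetical observer-pattern example; the class names, states and message format are illustrative and not taken from the reviewed studies.

```python
from typing import Callable, List


class StatusNotifier:
    """Observer registry: every published message reaches all subscribed listeners."""

    def __init__(self) -> None:
        self._listeners: List[Callable[[str], None]] = []

    def subscribe(self, listener: Callable[[str], None]) -> None:
        self._listeners.append(listener)

    def publish(self, message: str) -> None:
        for listener in self._listeners:
            listener(message)


class Device:
    """Hypothetical device whose state changes are always announced."""

    def __init__(self, notifier: StatusNotifier) -> None:
        self.state = "idle"
        self._notifier = notifier

    def change_state(self, new_state: str, source: str = "user") -> None:
        old_state, self.state = self.state, new_state
        # Both user actions and system events (e.g. an incoming call) are reported.
        self._notifier.publish(f"{source}: {old_state} -> {new_state}")


shown: List[str] = []            # stands in for the on-screen status area
notifier = StatusNotifier()
notifier.subscribe(shown.append)
device = Device(notifier)
device.change_state("downloading")
device.change_state("incoming call", source="system")
print(shown)
```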

ID - Name: MHU2 - Correspondence between the Application and the Real World

• Definition: The device must speak the users' language rather than use technical system terms. It must follow real-world conventions and display information in a logical and natural order.

• Explanation: Today, touchscreen mobile devices have particular features that allow the user to interact with them in innovative ways, such as the touchscreen itself, the proximity sensor, and GPS. Through these new modes of interaction, the user can perform tasks more intuitively, imitating real-world interaction rules. For example, when scrolling down a long list, if the user "slides" with a certain speed, the list keeps moving, mimicking the effect of inertia. Each interaction is expected to produce a response similar to the one expected in the real world. In addition, the language (text or icons) must relate to real-world, recognizable concepts.

• Studies that Justify Its Use: (Inostroza et al., 2012b), (Yáñez Gómez et al., 2014), (Motlagh Tehrani et al., 2014), (Chuan et al., 2014), (Inostroza et al., 2013), (Inostroza et al., 2016) and (Quiñones and Rusu, 2017).
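The inertia example can be expressed as a simple kinetic-scrolling model: after the finger lifts, the list keeps moving at the release velocity, which decays by a constant friction factor each frame until it falls below a minimum speed. The friction factor, frame rate and stopping threshold below are assumed values chosen for illustration; real platforms tune their own curves, and the function name is hypothetical.

```python
from typing import List


def inertial_scroll(release_velocity: float, friction: float = 0.95,
                    frame_dt: float = 1 / 60, min_speed: float = 1.0) -> List[float]:
    """Return the scroll offset (px) at each frame after a fling.

    release_velocity is in px/s; friction is the fraction of velocity kept per frame.
    """
    if not 0.0 < friction < 1.0:
        raise ValueError("friction must be between 0 and 1")
    offset, velocity = 0.0, release_velocity
    offsets: List[float] = []
    while abs(velocity) >= min_speed:
        offset += velocity * frame_dt  # move by this frame's velocity
        offsets.append(offset)
        velocity *= friction           # the decay mimics friction slowing the list
    return offsets


slow, fast = inertial_scroll(1000.0), inertial_scroll(3000.0)
print(round(slow[-1]), round(fast[-1]))  # a faster flick travels further
```

Because the per-frame displacements form a geometric series, the total distance stays below release_velocity × frame_dt / (1 − friction), so every fling comes to rest, much like a physical object sliding against friction.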

ID - Name: MHU3 - User Control and Freedom

• Definition: The device must allow the user to undo and redo actions and must provide clearly marked "emergency exits" for leaving unwanted states. These options should preferably be available through a physical button or equivalent.

• Explanation: When the user makes a mistake while entering text or modifying configuration options, or simply reaches an unwanted state, the system must provide appropriate "emergency exits". These exits should let the user easily move from an unwanted state to a desired one. In addition, the user should be able to undo and redo actions in a simple and intuitive way. The user must also be able to easily manage the applications running on the device and the features in use. When using the data network, the user must be able to control the amount of data being transmitted and the associated time.

• Studies that Justify Its Use: (Inostroza et al., 2012b), (Yáñez Gómez et al., 2014), (Motlagh Tehrani et al., 2014), (Inostroza et al., 2013), (Inostroza et al., 2016) and (Quiñones and Rusu, 2017).

Table 2: Selected Studies for Data Extraction.

Search Type          Studies
Systematic Review    1. (Motlagh Tehrani et al., 2014);
                     2. (Chuan et al., 2014);
                     3. (Inostroza et al., 2013);
                     4. (Inostroza et al., 2016);
                     5. (Quiñones and Rusu, 2017).
Manual Search        1. (Yáñez Gómez et al., 2014);
                     2. (Al-nuiam, 2015).
Snowballing          1. (Inostroza et al., 2012b).

Table 3: Selected Studies Related to their Database.

Database                                                Number of Studies
ACM                                                     1
IEEE                                                    4
ScienceDirect                                           2
International Journal of Human Computer Interaction     1

Table 4: Selected Studies for Data Extraction.

MHU     S1     S2     S3     Average   Standard Deviation
MH1     1.00   0.00   0.50   0.50      0.50
MH2     1.00   2.00   0.00   1.00      1.00
MH3     2.00   0.00   2.50   1.50      1.32
MH4     3.00   1.00   0.00   1.00      1.00
MH5     4.00   1.00   1.00   2.00      1.73
MH6     1.00   0.00   0.00   0.33      0.58
MH7     1.00   1.00   0.00   0.67      0.58
MH8     3.00   0.00   0.50   1.17      1.61
MH9     2.00   0.00   0.00   0.67      1.15
MH10    4.00   2.00   0.00   2.00      2.00
MH11    1.50   3.00   3.00   2.50      0.87
MH12    1.00   2.00   0.00   1.00      1.00
MH13    3.00   3.00   0.00   2.00      1.73
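The Average and Standard Deviation columns of Table 4 are consistent with the arithmetic mean and the sample (n − 1) standard deviation of the three scores S1 to S3. The sketch below recomputes both from the listed scores and flags rows that differ from the reported values by more than rounding (0.005). With the scores as printed, every row matches except MH4, whose scores (3.00, 1.00, 0.00) give a mean of 1.33 and a standard deviation of 1.53 rather than the 1.00 and 1.00 shown. The function name is hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List, Tuple

# Rows of Table 4: (S1, S2, S3, reported average, reported standard deviation).
TABLE_4: Dict[str, Tuple[float, float, float, float, float]] = {
    "MH1": (1.00, 0.00, 0.50, 0.50, 0.50),
    "MH2": (1.00, 2.00, 0.00, 1.00, 1.00),
    "MH3": (2.00, 0.00, 2.50, 1.50, 1.32),
    "MH4": (3.00, 1.00, 0.00, 1.00, 1.00),
    "MH5": (4.00, 1.00, 1.00, 2.00, 1.73),
    "MH6": (1.00, 0.00, 0.00, 0.33, 0.58),
    "MH7": (1.00, 1.00, 0.00, 0.67, 0.58),
    "MH8": (3.00, 0.00, 0.50, 1.17, 1.61),
    "MH9": (2.00, 0.00, 0.00, 0.67, 1.15),
    "MH10": (4.00, 2.00, 0.00, 2.00, 2.00),
    "MH11": (1.50, 3.00, 3.00, 2.50, 0.87),
    "MH12": (1.00, 2.00, 0.00, 1.00, 1.00),
    "MH13": (3.00, 3.00, 0.00, 2.00, 1.73),
}


def mismatched_rows(table: Dict[str, Tuple[float, ...]], tol: float = 0.005) -> List[str]:
    """Return rows whose reported mean or sample standard deviation differs beyond rounding."""
    flagged = []
    for heuristic, (*scores, avg, sd) in table.items():
        if abs(mean(scores) - avg) > tol or abs(stdev(scores) - sd) > tol:
            flagged.append(heuristic)
    return flagged


print(mismatched_rows(TABLE_4))  # ['MH4']
```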

ID - Name: MHU4 - Consistency and Standards

• Definition: The device must follow established conventions, allowing the user to do things in a familiar, standardized and consistent way.

• Explanation: Often, related parts of the system that should be similar have different designs or logic. In general, any concept presented in a way that contradicts the user's own conception produces some degree of confusion. This confusion can lead to decreased efficiency of use or poor satisfaction, among other side effects. All in all, the system is expected to follow standards and conventions to achieve an intuitive and easy-to-use interface.

• Studies that Justify Its Use: (Inostroza et al., 2012b), (Yáñez Gómez et al., 2014), (Motlagh Tehrani et al., 2014), (Chuan et al., 2014), (Inostroza et al., 2013), (Inostroza et al., 2016) and (Quiñones and Rusu, 2017).

ID - Name: MHU5 - Error Prevention

• Definition: The device must hide or disable unavailable features, warn users about critical actions, and provide access to additional information.

• Explanation: The device should be explicit about each option and feature. Given the small screen size, this can be a big challenge, so icons play a very important role. Unfortunately, a small image is sometimes not enough to describe a function in detail; to compensate, the system must provide additional information on the user's demand. This information should be displayed clearly, avoiding long dialogue sequences. In addition, the user should be warned, especially when some actions may have unwanted effects. Potentially dangerous options should be placed at deeper menu levels (so it is not recommended to assign a physical button to one of these options).

• Studies that Justify Its Use: (Inostroza et al., 2012b), (Yáñez Gómez et al., 2014), (Motlagh Tehrani et al., 2014), (Inostroza et al., 2013), (Inostroza et al., 2016) and (Quiñones and Rusu, 2017).
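As a minimal sketch of MHU5, assuming a menu built from simple descriptors, the code below hides unavailable options and asks for confirmation before a destructive one runs. `MenuAction`, `visible_labels`, and `run` are hypothetical names for illustration, not part of any mobile SDK; the `confirm` callback stands in for whatever warning dialog the platform provides.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class MenuAction:
    """Hypothetical descriptor for one menu option; not part of any mobile SDK."""

    label: str
    available: bool      # False: hide or disable the option
    destructive: bool    # True: warn the user before running it


def visible_labels(actions: list[MenuAction]) -> list[str]:
    """Only offer options that can actually be used right now."""
    return [a.label for a in actions if a.available]


def run(action: MenuAction, confirm: Callable[[str], bool]) -> str:
    """Execute an option, asking for confirmation first if it is destructive."""
    if not action.available:
        return "blocked"
    if action.destructive and not confirm(f"'{action.label}' cannot be undone. Continue?"):
        return "cancelled"
    return "done"


menu = [
    MenuAction("Share route", available=True, destructive=False),
    MenuAction("Delete account", available=True, destructive=True),
    MenuAction("Sync offline", available=False, destructive=False),
]
print(visible_labels(menu))                     # ['Share route', 'Delete account']
print(run(menu[1], confirm=lambda msg: False))  # cancelled
```

Passing `confirm` as a callback keeps the rule (warn before destructive actions) separate from how the warning is displayed.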

ID - Name: MHU6 - Minimize User Memory Load

• Definition: The device must make objects, actions, and options visible so that users do not have to memorize information from one part of the dialogue to another.

• Explanation: Short-term human memory is limited, so the user should not be forced to remember information from one part of the system to another. Instructions on how to use the system should be visible or easy to obtain. On mobile devices, the limited display size puts designers in a difficult position as to which interface elements should be hidden or minimized. It is therefore important that relevant information be placed in a visible position. Users should not have to retype text from one part of the system to another; on these devices, selecting and copying is better than typing.

• Studies that Justify Its Use: (Inostroza et al., 2012b), (Yáñez Gómez et al., 2014), (Motlagh Tehrani et al., 2014), (Inostroza et al., 2013), (Inostroza et al., 2016) and (Quiñones and Rusu, 2017).

ID - Name: MHU7 - Customization and Shortcuts

• Definition: The device must provide basic and advanced settings for configuring and customizing shortcuts to frequent actions.

• Explanation: Each user has their own needs, and trying to satisfy them all with a standard menu or interface can be challenging. Allowing users to create their own shortcuts and customize most parts of the system can therefore help. Through access to advanced configuration options, savvy users can adapt the system to their own needs, and new users can develop a deeper sense of ownership.

• Studies that Justify Its Use: (Inostroza et al., 2012b), (Yáñez Gómez et al., 2014), (Motlagh Tehrani et al., 2014), (Chuan et al., 2014) and (Inostroza et al., 2016).

ID - Name: MHU8 - Efficiency of Use and Performance

• Definition: The device must be able to load and display information in a reasonable amount of time and minimize the steps required to perform a task. Animations and transitions should be displayed seamlessly.

• Explanation: Hardware features and software needs are not always well matched. The base software is expected to be compatible with the hardware, especially its processing capabilities, to avoid black screens and long waiting times. In addition, animations, effects, and transitions should be displayed seamlessly, without interruption. Another critical point is the number of steps needed to perform a task. Complex, potentially dangerous, or infrequent tasks may include several steps to enhance security, whereas simple or frequent tasks should be short: a user who wants to set an alarm for 4 A.M. does not expect a four-step process.

• Studies that Justify Its Use: (Inostroza et al., 2012b), (Yáñez Gómez et al., 2014), (Inostroza et al., 2016) and (Quiñones and Rusu, 2017).

ID - Name: MHU9 - Aesthetic and Minimalist Design

• Definition: The device should avoid overloading the screen with unnecessary information.

• Explanation: On older devices, each additional unit of information displayed on a small screen reduces performance. Designers should be careful about how information is distributed across the screen. In addition, overloaded interfaces can cause stress to the user.

• Studies that Justify Its Use: (Inostroza et al., 2012b), (Yáñez Gómez et al., 2014), (Motlagh Tehrani et al., 2014), (Inostroza et al., 2013), (Inostroza et al., 2016) and (Quiñones and Rusu, 2017).

ID - Name: MHU10 - Helping Users Recognize, Diagnose and Recover from Errors

• Definition: The device should display error messages in a language familiar to the user, precisely indicating the problem and suggesting a constructive solution.

• Explanation: When an error occurs, the user does not need technical details or cryptic alert messages. The user needs clear feedback messages in a familiar language, with instructions on how to recover from the error.

• Studies that Justify Its Use: (Inostroza et al., 2012b), (Yáñez Gómez et al., 2014), (Motlagh Tehrani et al., 2014), (Chuan et al., 2014), (Inostroza et al., 2013), (Inostroza et al., 2016) and (Quiñones and Rusu, 2017).
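One common way to apply MHU10 is to keep a catalogue that translates internal error codes into a plain-language description plus a recovery step, with a friendly fallback for unknown codes. The sketch below assumes such a catalogue; the `FRIENDLY_ERRORS` entries and the `user_message` helper are hypothetical examples, not taken from the reviewed studies.

```python
# Hypothetical catalogue: internal error code -> (what happened, how to recover).
FRIENDLY_ERRORS: dict[str, tuple[str, str]] = {
    "ETIMEDOUT": ("The connection took too long.", "Check your signal and try again."),
    "ENOSPC": ("Your phone is out of storage.", "Free up some space, then retry."),
    "HTTP_401": ("Your session has expired.", "Sign in again to continue."),
}
FALLBACK = ("Something went wrong.", "Please try again in a moment.")


def user_message(error_code: str) -> str:
    """Return a plain-language message with a constructive next step, never the raw code."""
    problem, recovery = FRIENDLY_ERRORS.get(error_code, FALLBACK)
    return f"{problem} {recovery}"


print(user_message("ENOSPC"))     # Your phone is out of storage. Free up some space, then retry.
print(user_message("E_UNKNOWN"))  # Something went wrong. Please try again in a moment.
```

The raw code can still be logged for developers; only the translated text reaches the user.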

ID - Name: MHU11 - Help and Documentation

• Definition: The device should provide help and documentation that are easy to find, focus on the user's current task, and indicate concrete steps to follow.

• Explanation: The device must provide access to detailed information about the available features in a clear and simple way, from any part or state of the system. It is recommended that this information be included on the device; otherwise, the documentation must be available on a website or in print.

• Studies that Justify Its Use: (Inostroza et al., 2012b), (Yáñez Gómez et al., 2014), (Motlagh Tehrani et al., 2014), (Chuan et al., 2014), (Inostroza et al., 2016) and (Quiñones and Rusu, 2017).

ID - Name: MHU12 - Pleasant and Respectful Interaction with the User

• Definition: The device must provide a pleasant interaction with the user so that the user does not feel uncomfortable while using the application.

• Explanation: The system must autocomplete partial data entry in specific fields and allow the user to save the data entered on screens with many fields. Data entry fields must match the expected data type.

• Studies that Justify Its Use: (Yáñez Gómez et al., 2014), (Chuan et al., 2014) and (Inostroza et al., 2016).
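The two data-entry requirements of MHU12, autocompleting partial input and matching each field to its expected data type, can be sketched as below. The field types, their regular expressions, and the helper names are hypothetical choices for illustration; real apps would typically also select a matching on-screen keyboard through their platform's input APIs.

```python
import re

# Hypothetical field types, each with the pattern its input must match.
FIELD_PATTERNS = {
    "phone": re.compile(r"\+?\d{8,15}"),            # optional '+', then 8-15 digits
    "time": re.compile(r"([01]\d|2[0-3]):[0-5]\d"),  # 24-hour HH:MM
    "seats": re.compile(r"[1-8]"),                   # a single digit from 1 to 8
}


def is_valid(field_type: str, value: str) -> bool:
    """Accept the value only if the whole string matches the field's expected type."""
    return FIELD_PATTERNS[field_type].fullmatch(value) is not None


def autocomplete(prefix: str, history: list[str], limit: int = 3) -> list[str]:
    """Suggest earlier entries that start with what the user has typed so far."""
    p = prefix.casefold()
    return [v for v in history if v.casefold().startswith(p)][:limit]


print(is_valid("time", "04:00"), is_valid("time", "24:00"))  # True False
print(autocomplete("uni", ["Universidade de Brasília", "Rodoviária", "Unidade Gama"]))
# ['Universidade de Brasília', 'Unidade Gama']
```

Using `fullmatch` rather than `search` ensures that a value such as "04:00pm" is rejected instead of being partially accepted.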

ID - Name: MHU13 - Privacy

• Definition: The device must protect the user’s

confidential data.

• Explanation: The system should request the user's password before important data is modified, and provide information about how the user's personal data is protected and about copyrighted content.

• Studies that Justify Its Use: (Yáñez Gómez et al., 2014).
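The password requirement of MHU13 can be sketched with Python's standard library: the password is stored only as a salted PBKDF2 hash, and an important field is changed only after the re-entered password verifies with a constant-time comparison. The `update_sensitive_field` helper, the dictionary-based profile, and the iteration count are assumptions for this sketch, not a recommendation from the reviewed studies.

```python
import hashlib
import hmac
import os
from typing import Optional

ITERATIONS = 200_000  # assumed PBKDF2 work factor for this sketch


def hash_password(password: str, salt: Optional[bytes] = None) -> tuple[bytes, bytes]:
    """Derive a salted PBKDF2-HMAC-SHA256 hash; a fresh random salt is used if none is given."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def update_sensitive_field(profile: dict, field: str, value: str, password: str) -> bool:
    """Change an important field only after the user re-enters the correct password."""
    _, candidate = hash_password(password, profile["salt"])
    if not hmac.compare_digest(candidate, profile["pw_hash"]):  # constant-time comparison
        return False
    profile[field] = value
    return True


salt, pw_hash = hash_password("correct horse")
profile = {"salt": salt, "pw_hash": pw_hash, "email": "old@example.com"}
print(update_sensitive_field(profile, "email", "new@example.com", "guess"))          # False
print(update_sensitive_field(profile, "email", "new@example.com", "correct horse"))  # True
```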

5.2 RQ2 What Metrics are Used to Evaluate Usability Heuristics for Mobile Applications?

After an application has been developed using the usability heuristics listed in Section 5.1, it should be checked whether the application actually complies with them. The heuristic evaluations used by the selected studies are described below:

ID: HE1

• Explanation: Specialists classify each item of the application's evaluation into one of four categories: 1 for heuristic items, 2 for items that correspond to usability gaps, 3 for heuristic items that could not be evaluated in the actual life-cycle phase, and 4 for interface issues unrelated to usability.

• Studies that Justify Its Use: (Yáñez Gómez et al., 2014)
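A minimal sketch of how one specialist's HE1 classifications could be tallied, assuming each item receives exactly one of the four codes described above. The `ItemCategory` member names and the `tally` helper are hypothetical labels introduced here, not part of (Yáñez Gómez et al., 2014).

```python
from collections import Counter
from enum import IntEnum


class ItemCategory(IntEnum):
    """The four HE1 categories; the member names are illustrative labels only."""

    HEURISTIC_ITEM = 1
    USABILITY_GAP = 2
    NOT_EVALUABLE_IN_PHASE = 3
    NON_USABILITY_ISSUE = 4


def tally(judgments: list[int]) -> dict[str, int]:
    """Count how many items a specialist placed in each category (1-4)."""
    counts = Counter(ItemCategory(j) for j in judgments)  # raises ValueError outside 1-4
    return {cat.name: counts[cat] for cat in ItemCategory}


print(tally([1, 2, 2, 4, 1, 1]))
# {'HEURISTIC_ITEM': 3, 'USABILITY_GAP': 2, 'NOT_EVALUABLE_IN_PHASE': 0, 'NON_USABILITY_ISSUE': 1}
```

Converting each code through `ItemCategory` rejects out-of-range judgments instead of silently counting them.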

ID: HE2

• Explanation: The evaluation took place in the evaluators' environment. All six experts spent about 30 to 45 minutes examining the prototype. The procedure consisted of the following steps: identify the number of specialists needed, identify suitable evaluators, organize a consultation with the evaluators, distribute the questionnaire to the specialists, have the evaluators complete the questionnaire, obtain comments to improve the design, and redesign the application based on the experts' comments to achieve a better interactive interface.

• Studies that Justify Its Use: (Motlagh Tehrani et al., 2014)

ID: HE3

• Explanation: Fifteen users were given a series of activities, and the time each user took to complete them was measured. These activities were performed under different environmental conditions (heat, light, etc.). The average user time was compared with the time a specialist took to complete the same activities; if the average user time and the expert time were similar, the application was considered to have good usability. In addition, a questionnaire with 47 questions was administered to all participants, who rated each item from 1 to 5 for the following parameters: learnability, memorability, efficiency, and error rate.

• Studies that Justify Its Use: (Al-nuiam, 2015)
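The two HE3 measurements can be sketched as follows: the ratio between the average user completion time and the specialist's time, and the average 1-to-5 rating per questionnaire parameter. The study only states that similar times indicate good usability, so the 1.5 tolerance in `is_similar`, the function names, and the response format are assumptions for illustration.

```python
from statistics import mean

PARAMETERS = ("learnability", "memorability", "efficiency", "error_rate")


def time_ratio(user_times_s: list[float], expert_time_s: float) -> float:
    """Average user completion time divided by the specialist's time (1.0 = equal)."""
    return mean(user_times_s) / expert_time_s


def is_similar(ratio: float, tolerance: float = 1.5) -> bool:
    """Assumed rule: users are 'similar' if they take at most `tolerance` times as long."""
    return ratio <= tolerance


def parameter_scores(responses: list[dict[str, int]]) -> dict[str, float]:
    """Average the 1-5 questionnaire ratings for each usability parameter."""
    return {p: mean(r[p] for r in responses) for p in PARAMETERS}


ratio = time_ratio([40.0, 50.0, 60.0], expert_time_s=40.0)
print(ratio, is_similar(ratio))  # 1.25 True
```

Expressing the comparison as a ratio makes results from activities of different lengths comparable.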

ID: HE4

• Explanation: Two separate groups of evaluators inspected the device, each consisting of two or three evaluators. One group used the proposed heuristics, while the other group used Nielsen's heuristics.

• Studies that Justify Its Use: (Inostroza et al., 2013) and (Inostroza et al., 2012b)
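Comparing the two HE4 groups comes down to set operations on the problems each group reported: which problems both found, and which only one found. The sketch below assumes each group's findings have already been consolidated into problem identifiers; the identifiers and the `compare_groups` helper are hypothetical.

```python
def compare_groups(proposed: set[str], nielsen: set[str]) -> dict[str, set[str]]:
    """Split the problems found by the two groups into shared and group-specific sets."""
    return {
        "both": proposed & nielsen,
        "only_proposed": proposed - nielsen,
        "only_nielsen": nielsen - proposed,
    }


# Hypothetical problem identifiers, as if each group's findings had been consolidated.
group_a = {"P1", "P2", "P3", "P5"}   # group using the proposed mobile heuristics
group_b = {"P1", "P3", "P4"}         # group using Nielsen's ten heuristics
result = compare_groups(group_a, group_b)
print({k: sorted(v) for k, v in result.items()})
# {'both': ['P1', 'P3'], 'only_proposed': ['P2', 'P5'], 'only_nielsen': ['P4']}
```

A larger "only_proposed" set than "only_nielsen" set would suggest that the specific heuristics uncover problems the general ones miss.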

ID: HE5

• Explanation: The participants performed a heuristic evaluation of the app. Then, the number of problems per heuristic and per experimental group, the average severity, and the associated standard deviation were computed. Severity was rated on a scale from 0 (low) to 4 (high).

• Studies that Justify Its Use: (Inostroza et al., 2016)
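The HE5 summary (number of problems per heuristic, average severity, and standard deviation on the 0-to-4 scale) can be sketched with Python's `statistics` module. The input format, a list of (heuristic, severity) pairs, and the `summarize` helper are assumptions; the sample standard deviation is used, and it is reported as 0.0 when a heuristic has a single problem.

```python
from collections import defaultdict
from statistics import mean, stdev


def summarize(problems: list[tuple[str, float]]) -> dict[str, tuple[int, float, float]]:
    """Per heuristic: (number of problems, average severity, sample standard deviation)."""
    by_heuristic: dict[str, list[float]] = defaultdict(list)
    for heuristic, severity in problems:
        if not 0 <= severity <= 4:
            raise ValueError(f"severity {severity} is outside the 0-4 scale")
        by_heuristic[heuristic].append(float(severity))
    summary = {}
    for heuristic, severities in by_heuristic.items():
        sd = stdev(severities) if len(severities) > 1 else 0.0  # stdev needs >= 2 values
        summary[heuristic] = (len(severities), round(mean(severities), 2), round(sd, 2))
    return summary


# Hypothetical problem list: (violated heuristic, severity assigned by the evaluators).
found = [("MH5", 4), ("MH5", 1), ("MH5", 1), ("MH11", 3)]
print(summarize(found))  # {'MH5': (3, 2.0, 1.73), 'MH11': (1, 3.0, 0.0)}
```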

6 CASE STUDY

A total of three specialists performed HE5 on an Android app called Carona Phone.

The app is a platform intended to help people who want to request a ride from one place to another; it is used mostly by students who want to go from home to the university. A person offering a ride must register his or her information in the application, such as name, car, and route. Likewise, a person who wants a ride must enter his or her information in Carona Phone. The software then shows, for a specific route and time, who wants a ride and who can offer one.

Each specialist tested every feature separately and then rated it, so that no specialist was influenced by the others' judgments. Problems were rated on a scale from 0 (low) to 4 (high). The specialists used the 13 mobile usability heuristics identified in this paper as criteria, and each heuristic received a grade from 0 to 4. In this way, they could indicate which heuristics were well implemented and which needed improvement.

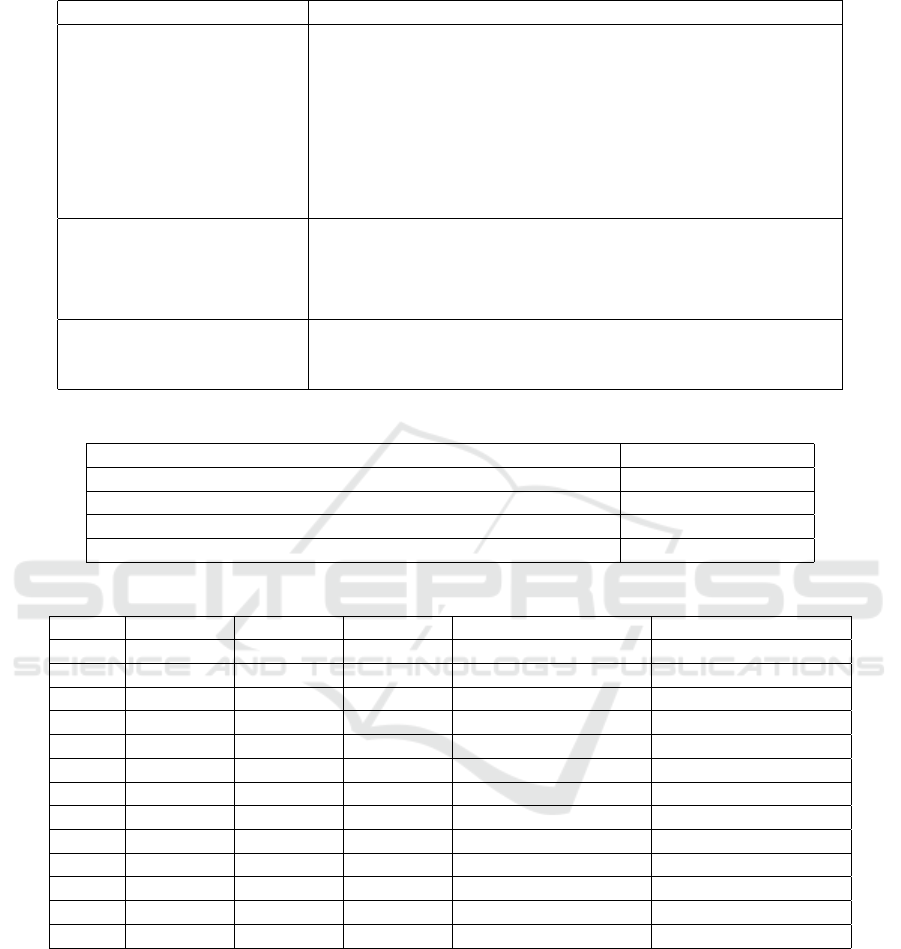

Table 4 shows the severity rating that each specialist assigned to each mobile usability heuristic, along with the average and the associated standard deviation.
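The Average and Standard Deviation columns of Table 4 can be read as the arithmetic mean and the sample standard deviation (divisor $n-1$) over the three specialists. Writing $s_{h,k}$ for the severity that specialist $k$ assigned to heuristic $h$ (notation introduced here, not the paper's), the MH5 row works out as:

```latex
\begin{aligned}
\bar{s}_h &= \frac{1}{3}\sum_{k=1}^{3} s_{h,k}, \qquad
\sigma_h = \sqrt{\frac{1}{3-1}\sum_{k=1}^{3}\left(s_{h,k}-\bar{s}_h\right)^2} \\
\bar{s}_{\mathrm{MH5}} &= \frac{4 + 1 + 1}{3} = 2.00, \qquad
\sigma_{\mathrm{MH5}} = \sqrt{\frac{(4-2)^2 + (1-2)^2 + (1-2)^2}{2}} = \sqrt{3} \approx 1.73
\end{aligned}
```

With a divisor of 3 instead of 2, MH5 would give $\sqrt{2} \approx 1.41$, which does not match the table, so the reported values correspond to the sample standard deviation.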

The lowest averages, closest to 0 and therefore indicating few problems, were those of MH6 (Minimize user memory load, 0.33) and MH1 (Visibility of system status, 0.50), showing that these heuristics were well addressed by the application's designers. On the other hand, MH5 (Error prevention), MH10 (Helping users recognize, diagnose and recover from errors), and MH13 (Privacy) averaged 2.00, and MH11 (Help and documentation) received the highest value, 2.50. These aspects are therefore strongly recommended for redesign to improve the application's user experience.
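Selecting the redesign candidates discussed above can be sketched as filtering the Average column of Table 4 against a threshold and sorting the result from worst to best. The 2.0 threshold mirrors the values discussed in the text but is an assumption here, as is the `redesign_candidates` helper; the averages are transcribed from Table 4.

```python
# Average severities transcribed from the Average column of Table 4.
TABLE4_AVERAGES = {
    "MH1": 0.50, "MH2": 1.00, "MH3": 1.50, "MH4": 1.00, "MH5": 2.00,
    "MH6": 0.33, "MH7": 0.67, "MH8": 1.17, "MH9": 0.67, "MH10": 2.00,
    "MH11": 2.50, "MH12": 1.00, "MH13": 2.00,
}


def redesign_candidates(averages: dict[str, float], threshold: float = 2.0) -> list[str]:
    """Heuristics at or above the threshold, worst first; ties keep heuristic order."""
    flagged = [h for h, avg in averages.items() if avg >= threshold]
    return sorted(flagged, key=lambda h: (-averages[h], int(h[2:])))


print(redesign_candidates(TABLE4_AVERAGES))  # ['MH11', 'MH5', 'MH10', 'MH13']
```

Sorting ties by the numeric part of the identifier keeps MH5 ahead of MH10, which a plain string sort would not do.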

The case study shows that the heuristic evaluations and the mobile usability heuristics presented in this paper can help designers find problems in applications under development and fix them.

7 CONCLUSION

In view of the growth in smartphone production, usability is a key attribute of product quality. Usability also makes the software easier for customers to use, which can help build user loyalty.

To reach the final set of usability heuristics and heuristic evaluations, four steps were taken to select studies. At the end of the study selection process, a set of 13 usability heuristics was found, along with 5 possible ways of evaluating them.

The main contribution of this work is the compilation of desktop usability heuristics into a new, more specific set of heuristics adapted to the mobile paradigm. In addition, the study shows which heuristics are currently used by researchers of usability heuristics for smartphones. The specification of the collected items shows that they can be used as a reference guide to help design more usable interfaces, and not just as a reactive assessment tool for existing prototypes. Future work should take into account that these are partial results.

Another case study using these heuristics and one of the listed evaluations is a possible direction for future work. The proposed 13 heuristics facilitate the detection of usability errors. However, usability heuristics, heuristic evaluations, and the research methodology can always be improved.


REFERENCES

Al-nuiam, H. (2015). User Interface Context of Use Guidelines for Mobile Apps.

Biel, B., Grill, T., and Gruhn, V. (2010). Exploring the benefits of the combination of a software architecture analysis and a usability evaluation of a mobile application. Journal of Systems and Software, 83(11):2031–2044.

Chuan, N. K., Sivaji, A., and Ahmad, W. F. W. (2014). Proposed Usability Heuristics for Testing Gestural Interaction. In 2014 4th International Conference on Artificial Intelligence with Applications in Engineering and Technology, pages 233–238. IEEE.

de Lima Salgado, A. and Freire, A. P. (2014). Heuristic evaluation of mobile usability: A mapping study. pages 178–188.

Ferré, X., Juristo, N., Windl, H., and Constantine, L. (2001). Usability basics for software developers. IEEE Software, 18(1):22–29.

International Organization for Standardization and International Electrotechnical Commission (2001). Software Engineering–Product Quality: Quality model, volume 1. ISO/IEC.

Inostroza, R., Roncagliolo, S., Rusu, C., and Rusu, V. (2013). Usability Heuristics for Touchscreen-based Mobile Devices: Update. pages 24–29.

Inostroza, R., Rusu, C., Roncagliolo, S., Jiménez, C., and Rusu, V. (2012a). Usability Heuristics for Touchscreen-based Mobile Devices. In 2012 Ninth International Conference on Information Technology - New Generations, pages 662–667. IEEE.

Inostroza, R., Rusu, C., Roncagliolo, S., Jiménez, C., and Rusu, V. (2012b). Usability heuristics validation through empirical evidences: a touchscreen-based mobile devices proposal. (1):60–68.

Inostroza, R., Rusu, C., Roncagliolo, S., Rusu, V., and Collazos, C. A. (2016). Developing SMASH: A set of smartphone's usability heuristics. Computer Standards & Interfaces, 43:40–52.

Jakob Nielsen (1995). 10 Usability Heuristics for User Interface Design.

Jimenez, C., Lozada, P., Rosas, P., Universidad, P., and Valparaíso, C. D. (2016). Usability Heuristics: A Systematic Review.

Kitchenham, B. (2004). Procedures for Performing Systematic Reviews.

Lewis, J. R. (2014). Usability: lessons learned and yet to be learned. International Journal of Human-Computer Interaction, 30(9):663–684.

Malone, T. W. and W., T. (1982). Heuristics for designing enjoyable user interfaces. In Proceedings of the 1982 conference on Human factors in computing systems - CHI ’82, pages 63–68, New York, USA. ACM Press.

Motlagh Tehrani, S. E., Zainuddin, N. M. M., and Takavar, T. (2014). Heuristic evaluation for Virtual Museum on smartphone. In 2014 3rd International Conference on User Science and Engineering (i-USEr), pages 227–231. IEEE.

Nielsen, J. (2003). Usability 101: Introduction to usability.

Nielsen, M. (1990). Heuristic evaluation of user interfaces. In Proceedings of the SIGCHI conference on Human factors in computing systems Empowering people - CHI ’90, pages 249–256, New York, USA. ACM Press.

Quiñones, D. and Rusu, C. (2017). How to develop usability heuristics: A systematic literature review. Computer Standards & Interfaces, 53:89–122.

Scholtz, J. (2004). Usability evaluation. National Institute

of Standards and Technology, 1.

Selleri Silva, F., Soares, F. S. F., Peres, A. L., Azevedo, I. M. D., Vasconcelos, A. P. L. F., Kamei, F. K., and Meira, S. R. D. L. (2015). Using CMMI together with agile software development: A systematic review. Information and Software Technology, 58:20–43.

Suryn, W., Abran, A., and April, A. (2003). ISO/IEC

SQuaRE. The Second Generation of Standards for

Software Product Quality.

Wohlin, C. and Prikladniki, R. (2013). Systematic literature reviews in software engineering. Information and Software Technology, 55(6):919–920.

Yáñez Gómez, R., Cascado Caballero, D., and Sevillano, J.-L. (2014). Heuristic evaluation on mobile interfaces: a new checklist. The Scientific World Journal, 2014.
