Outdoors Mobile Augmented Reality Application Visualizing 3D Reconstructed Historical Monuments

Chris Panou², Lemonia Ragia¹, Despoina Dimelli¹ and Katerina Mania²

¹ School of Architectural Engineering, Technical University of Crete, Kounoupidiana, Chania, Greece

² Department of Electrical and Computer Engineering, Technical University of Crete, Kounoupidiana, Chania, Greece

Keywords: Augmented Reality, 3D Reconstruction, Cultural Heritage, Computer Graphics.

Abstract: We present a mobile Augmented Reality (AR) tourist guide to be utilized while walking around cultural

heritage sites located in the Old town of the city of Chania, Crete, Greece. Instead of the traditional static

images or text presented by mobile, location-aware tourist guides, the main focus is to seamlessly and

transparently superimpose geo-located 3D reconstructions of historical buildings, in their past state, onto the

real world, while users hold their consumer grade mobile phones walking on-site, without markers placed

onto the buildings, offering a Mobile Augmented Reality experience. We feature three monuments: the

‘Giali Tzamisi’, an Ottoman mosque; part of the south side of a Byzantine wall; and the ‘Saint Rocco’ Venetian

chapel. Advances in mobile technology have brought AR to the public by utilizing the camera, GPS and

inertial sensors present in modern smartphones. Technical challenges such as the accurate registration of 3D content with the

real world in outdoor settings have prevented AR from becoming mainstream. We tested commercial AR

frameworks and built a mobile AR app which offers users, while visiting these monuments in the challenging

outdoors environment, a virtual reconstruction displaying the monument in its past state superimposed onto

the real world. Position tracking is based on the mobile phone’s GPS and inertial sensors. The users explore

interest areas and unlock historical information, earning points. By combining AR technologies with location-

aware, gamified and social aspects, we enhance interaction with cultural heritage sites.

1 INTRODUCTION

AR is the act of superimposing digital artefacts on

real environments. In contrast to Virtual Reality

where the user is immersed in a completely synthetic

environment, AR aims to digitally complement

reality (Azuma et al., 2001; Zhou et al., 2018). In

comparison to older systems that used a combination

of cumbersome hardware and software, recent

advances in mobile technology have led to an

integrated platform including GPS functionality,

ideal for the development of AR experiences, often

referred to as Mobile AR (MAR). Applications in the

fields of medical visualization, maintenance/repair,

annotation, robot path planning and entertainment

enrich the world with information the users cannot

directly detect with their own senses (Nagakura et al.,

2014; Niedmermair et al., 2011; Kutter et al., 2008;

Ragia et al., 2015).

In this paper, we present the design and

implementation of a MAR digital guide application

for Android devices, that provides on-site 3D

visualisation and reconstructions of historical

buildings in the Old Town of Chania, Crete, Greece.

3D imagery of how archaeological sites existed in the

past is superimposed over their real-world

equivalents, as part of a smart AR tourist guide.

Instead of the traditional static images or text

presented by mobile, location-aware tourist guides,

we aim to enrich the sightseeing experience by

providing 3D imagery visualising the past glory of

these sites in the context of their real surroundings,

seamlessly, without markers placed onto the

buildings, offering a personalized, gamified MAR

experience that showcases the city’s cultural wealth.

The mobile AR application features a multimedia

database that holds records of various monuments.

The database also stores the users’ documentation of

their visits and interactions in the areas of interest.

User requirements gathering and AR development

in the challenging outdoor

environment of a city pose significant technical as

well as user interaction challenges. Reliable position

and pose tracking is paramount so that 3D content is

accurately superimposed on real settings, at the exact

position required; achieving this is one of the major technical

problems of AR technologies.

Panou, C., Ragia, L., Dimelli, D. and Mania, K.

Outdoors Mobile Augmented Reality Application Visualizing 3D Reconstructed Historical Monuments.

DOI: 10.5220/0006701800590067

In Proceedings of the 4th International Conference on Geographical Information Systems Theory, Applications and Management (GISTAM 2018), pages 59-67

ISBN: 978-989-758-294-3

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Our system features a geo-location and sensor

approach which, compared to optical tracking

techniques, allows for free user movement throughout

the site, independent of changes in the building’s

structure. The MAR application proposed provides an

easily extendable platform for future additions of

digital content requiring a minimal amount of

development and technical expertise. The goal is to

provide a complete and operational AR experience to

the end-user by tackling AR technical challenges

efficiently, as well as offering insight for future

development in similar scenarios.

1.1 Motivation

Since the Neolithic era, the city of Chania has faced

many conquerors and the influences of many

civilizations through time. Byzantine, Arabic,

Venetian and Ottoman characteristics are evident

around the cultural center of the town clustered

towards the old Venetian Harbor. In order to provide

the tourist with a view of the past, based on cutting-

edge AR technologies, we designed a historical route

throughout the city consisting of a selection of

historical buildings, to be digitally reconstructed and

presented in their past state through an AR paradigm.

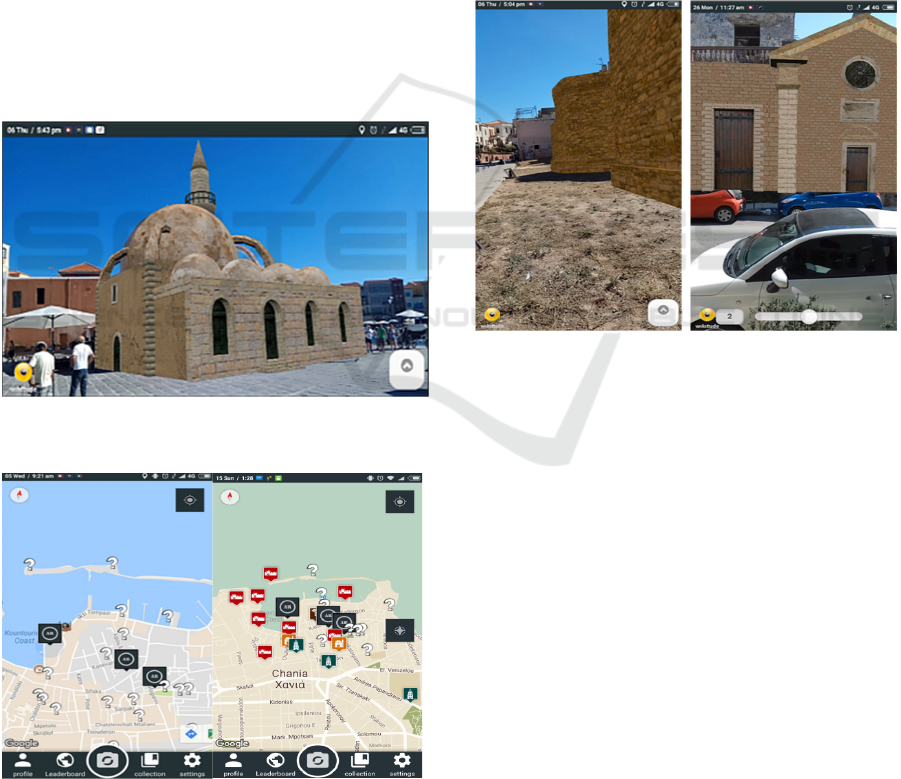

The final selection includes three monuments that

represent key historical periods of the Town of

Chania (Figure 1).

The Glass Mosque is located in the Venetian

Harbor of Chania. It is the first mosque built in

Crete and the only one surviving in the city, dating from

the second half of the 17th century. The mosque is a

jewel of Islamic art in the Renaissance and featured a

small but picturesque minaret, demolished in 1920 or

in 1939. The Saint Rocco temple is a Venetian

chapel that consists of two aisles with different forms of

vaulted roof. Although the southernmost part is

preserved in good condition, the northern and oldest

one has had its exterior painted over, covering its

stony façade, while a residential structure is built on

top. The Byzantine wall was built over the old

fortifications of the Chydonia settlement around the

6th and 7th century AD. Its outline is irregular, with a

longitudinal axis running from east to west, where its

two central gates were located. The wall consists of

rectilinear parts, interrupted by small oblong or

polygonal towers many of which are now partly or

completely demolished.

The scope of this work is to virtually restore

partially or fully damaged buildings and structures on

historic sites and enable visitors to see them

integrated with their real environment, while using a

sophisticated AR mobile tourist guide. We aim to

deliver geo-located information to the users as well as

calculate accurate registration positioning between

the real-world monument and 3D digitisations, while

users document their visits. By integrating digital

maps and a location-aware experience we aim to urge

the users to further investigate interest areas in the

city of Chania, Crete, and uncover their underlying

history by exploiting cutting-edge AR mobile

technologies.

Figure 1: The Glass Mosque (left), the Saint Rocco Temple

(right), the Byzantine wall (middle).

1.2 Previous Work

AR has been utilized for a number of applications in

cultural heritage. One of the initial MAR systems

provided on-site help and AR reconstructions of the

ruins of ancient Olympia, Greece (Vlahakis et al.,

2001). The system utilized a compass and a DGPS

receiver which, combined with live-view images from a

webcam, were used to obtain the user’s location and

orientation. Visitors carried a heavy backpack

computer and wore a see-through Head Mounted

Display (HMD) to display the digital content. The

system was a cumbersome MAR unit not acceptable

by today’s standards. MARCH (Choudary et al.,

2009) was a MAR application developed in Symbian

C++, running on a Nokia N95. The system made use

of the phone’s camera to detect images of cave

engravings and overlay them with an image

indicating ancient drawings. Although this was the

first attempt at a real-time MAR application without

the use of grey-scale markers, the system still needed

the placement of coloured patches at the corners of

2D images captured in caves and the experience was

not tested in cave environments.

With the advent of mobile devices, more

sophisticated AR experiences are made possible such


as the one for the Bergen-Belsen memorial site

(Pacheco et al., 2014), a former WWII concentration

camp in northern Germany which was burned down

after its liberation. The application integrated

database interaction, reconstruction modelling and

content presentation in a hand held device. Real time

tracking was performed with the device’s GPS and

orientation sensors and navigation was conducted

either via map or the camera. The system

superimposed the reconstructed building models on

the phone’s camera feed. Focusing more on the

promotion of cultural heritage in outdoor settings,

VisAge (Julier et al., 2016) was an application aiming

to turn users into authors of stories and cultural

histories in urban environments. The system featured

an online portal where users could create their stories

using routes through physical space. A story is a set

of spatially distributed POIs (Points of Interest). Each

POI has its own digital content consisting of images,

text or audio. A viewing tool was developed for

mobile tablets in Unity using Vuforia’s tracking

library to overlay the digital content in the real space.

The users could follow routes in the city and

experience new stories. Tracking was performed

using feature detection algorithms from the camera’s

feed. As with any optical approach, content delivery is

not guaranteed due to the lighting variations of the

outdoor setting.

Further work in 3D reconstructions was shown in

CityViewAR (Lee et al., 2012), a mobile outdoor AR

application that was developed to allow people to

explore destroyed buildings after the major

earthquakes in Christchurch, New Zealand. Besides

providing stories and pictures of the buildings, the

main feature of the application is the ability to

visualize 3D models of the buildings in AR, displayed

on a map. Finally, a practical solution presented by

Hable et al. (2012) targeted guiding groups of

visitors in noisy indoor environments, based on

design decisions such as analogue audio transmission

and reliably trackable AR markers. However,

preparation of the environment with fiducials is time

consuming and the supervision of the visits by experts

is necessary to avoid accidents and interference with

the working environment.

2 METHODOLOGY

We present a MAR application that, besides offering

geo-localised textual information concerning cultural

sites to a visitor, also superimposes location-aware

3D reconstructions of historical buildings, positioned

exactly where the real-world monuments are located,

on the display of the visitor’s Android mobile phone. The

geo-location approach used for real-time tracking is

based on sensors available to both high and low-end

mobile phones eliminating hardware restrictions and

allowing for easy integration of added historical

buildings. It also offers the opportunity to visualize

historical sites in non-intrusive ways without placing

markers or patches on their walls. It also introduces

gamified elements of cultural exploration.

The adopted geo-location approach

employs the GPS and the inertial sensors of the device

so that, when the specific location of the actual

monument is registered, its 3D reconstruction

is displayed. Locations containing latitude

and longitude information are received from the GPS

while the accelerometer and geo-magnetic sensors are

used to estimate the device pose in the earth’s frame.

A visual reconstruction is then matched to the user’s

position and viewing angle displaying the overlaid

models on the mobile phone’s screen. This

implementation offers the most reliable registration

of 3D content accurately superimposed on the real-

world site and demands fewer actions from the users,

thereby ensuring a robust and intuitive experience

(Střelák et al., 2016).
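
As a rough illustration of how a geo-located model can be placed relative to the user, the sketch below converts two GPS fixes into a local east/north offset in metres with an equirectangular approximation, which should be adequate over the short distances involved on such a site. It is not the Wikitude implementation; the function names, the spherical Earth radius and the coordinates are assumptions made only for this example.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; assumes a spherical model


def enu_offset(user_lat, user_lon, target_lat, target_lon):
    """Return the (east, north) offset in metres from the user to the target."""
    d_lat = math.radians(target_lat - user_lat)
    d_lon = math.radians(target_lon - user_lon)
    # Meridians converge towards the poles, so scale longitude by cos(latitude).
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(user_lat))
    north = EARTH_RADIUS_M * d_lat
    return east, north


def bearing_and_distance(east, north):
    """Compass bearing (degrees clockwise from north) and range in metres."""
    bearing = math.degrees(math.atan2(east, north)) % 360.0
    return bearing, math.hypot(east, north)


def relative_heading(bearing_deg, device_azimuth_deg):
    """Signed angle in [-180, 180) between the camera heading and the target."""
    return (bearing_deg - device_azimuth_deg + 180.0) % 360.0 - 180.0


# Placeholder coordinates (not surveyed monument positions).
user = (35.5170, 24.0170)
monument = (35.5180, 24.0170)  # hypothetical point 0.001 degrees further north
east, north = enu_offset(*user, *monument)
bearing, distance = bearing_and_distance(east, north)
```

The relative heading indicates whether the model falls inside the camera's field of view: a value near zero means the device is pointing at the monument.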

The preparation of the 3D models required the

acquisition of historical information and their accurate

depiction to scale with the real world. Due to

the lack of accurate plots and outlines of the

buildings, Lidar and DSM data were exported from

Open Street Map (OSM) and used to create the final models.

Designing for a mobile device means that limited

processing power and the requirements of the AR

technologies need to be taken into account. Complex

geometries can impair performance so a low-poly,

high-resolution texture approach was adopted in

order to avoid frame rate drops. The final models are

then processed through Google Sketch-up to geo-

reference and position them onto the real world while

looking at the screen of a mobile phone.

Digital maps and an AR camera displaying the

interest areas were integrated to assist in navigation

through the geo-located content. The client-server

architecture ensures that personalised experiences are

provided by storing information about user visits and

progress. Changes in the server can be conducted

without interfering with the mobile application

allowing for an easily extendable platform where new

monuments could be added as visiting areas. The

monuments’ information and assets are stored in a

database and delivered to the mobile application on a

location-request basis. The application was

developed for Android in Java, employing

the Wikitude JavaScript API for the AR views. It


features a local database based on SQLite, caching the

downloaded content.

2.1 3D Modeling and Texturing

In order to record the past state of the selected

monuments, old photographs, historical information

and estimates from experts were utilized. The 3D

models visualizing their past state are presented in

real size superimposed over the real-world monument

and must be in proportion with their surroundings.

Therefore, accurate measurements of their structure

are necessary. Due to the lack of schematics and plots,

we relied on data derived from online mapping

repositories which provide outlines and height. The

outlines of the three monuments were acquired from

Open Street Map (OSM). By selecting specific areas

of the monuments on the map, we can then export an

.osm file that contains the available information

concerning that area, including building outlines and

height, where available. This file is essentially an XML

file including OSM raw data about roads, nodes, tags

etc. The file is then imported into OSM2World,

a Java application whose aim is to produce a 3D scene

from the underlying data.

The modelling process was focused on preserving

a low vertex count as complex geometry

compromises interactive frame rate in systems of low

processing power such as mobile phones. Complete

3D reconstructions of the selected monuments were

created so that they completely overlap the real ones

based on AR viewing. The most important aspect of

this strategy is to keep the reconstructions in

proportion. The final scale and size are defined in

Google Sketch-up, while the final model is positioned

in the world coordinate system. The Byzantine wall,

being the most abstracted, resulted in 422 vertices.

The Glass Mosque counts 7,614 and the Saint Rocco

temple 4,919 vertices.

Images captured from the real monuments on site

were used as references. A diverse range of materials

are assigned to different parts of the buildings. Due to

the lack of information, the actual texture of the

reconstructed parts is unknown, so the aim is to

represent the constituent material accurately rather

than the actual surface. The AR framework supports

only a single-material texture map in .png or .jpeg format with power-of-two dimensions.

That means that bump maps, normal maps and

multi-textures are not supported. The materials that compose

the entire texture set are baked into one image that

will serve as the final texture. UV mapping is the

process of unwrapping the 3D shape of the model into

a 2D map. This map contains the coordinates of each

vertex of the model placed on an image. Taking into

account that the monuments will be displayed on a

mobile phone screen in real size, high resolution

textures were required (2048x2048 pixels). This raises the final size of the

texture files, but the process yields a high-quality

visual result.
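
The power-of-two constraint and the cost of a 2048x2048 texture can be checked with a few lines of arithmetic. The sketch below is only illustrative: the helper names are hypothetical, and the memory figure assumes an uncompressed 8-bit RGBA image once decoded, which may differ from how the AR framework actually stores textures.

```python
def is_power_of_two(n):
    """True if n is a positive power of two (1, 2, 4, ..., 2048, ...)."""
    return n > 0 and (n & (n - 1)) == 0


def next_power_of_two(n):
    """Smallest power of two that is greater than or equal to n."""
    if n < 1:
        raise ValueError("texture dimension must be positive")
    return 1 << (n - 1).bit_length()


def uncompressed_rgba_bytes(width, height):
    """Decoded size assuming 4 bytes per pixel (8-bit RGBA)."""
    return width * height * 4


baked_size = (2048, 2048)
valid = all(is_power_of_two(side) for side in baked_size)
decoded_mib = uncompressed_rgba_bytes(*baked_size) / (1024 * 1024)
```

Under these assumptions a single baked 2048x2048 map decodes to 16 MiB, which illustrates why one texture per monument is a sensible budget on a phone.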

2.2 Geo-Positioning

In order for the reconstructions to be accurately

displayed, combined with real-time viewing of the

real world, an initial transformation and rotation are

applied. The models were exported in .dae format and

imported into Google Sketch-up.

Figure 2: Final 3D models.

The area of the monuments provided by Google

Maps was projected on a ground plane. The

monument is, then, positioned on its counterpart on

the map. Given that the proportions of the monuments

are in line, the final model is scaled to fit on the

outlines. The location of the monument is then added

to the file and provided to the framework. In order

to include the model in the AR framework, we export it

to .fbx and use the provided 3D encoder to make a

packaged version of the file together with the textures

in the custom wt3 format. This file is, then,

channelled to the MAR app.

3 AR SOLUTIONS

The most significant technical challenge of AR

systems is the registration issue. The virtual objects

and the real ones must be appropriately aligned in

order to maintain the illusion of integrated presence.

Registration in an AR system relies on the accurate

computation of the user’s position and pose as well as

the location of the virtual objects. In MAR,

tracking is conducted with either sensor-based

implementations or computer vision techniques.

While computer vision approaches seem to provide

pixel perfect registration, anything that compromises

the visibility between the user and the augmented area


can cause the virtual scene to jitter or collapse. In

addition to this, adequate preparation of the

environment needs to take place. While sensor

approaches are more robust, limits in acquiring

reliable data lead to lower accuracy. The work

proposed focuses on providing a complete MAR

experience to the end-user. Commercial AR

frameworks were employed, initially evaluating both

optical and sensor-based approaches.

3.1 Optical Implementation

In computer vision implementations, the live feed

from the camera is processed in real-time to identify

points in space, known a priori to the system and

estimate the user’s position in the AR scene. These

tracking methods require that scene images contain

natural or intentionally placed features (fiducials)

whose positions are known. Since we did not want to

interfere with the monuments, placing fiducial

markers in the scenes was out of the question, so we

relied on pictures and the natural features of the

scenes. A basic application was developed in Unity

employing the Vuforia plug-in to test the registration.

The image recognition methods use “trackable

targets” to be recognized in the real environment. The

targets are images processed through natural feature

detection algorithms to produce 2D point clouds of

the detected features to later be identified by the

mobile device in the camera’s feed. When detected,

pose and position estimations are available relative to

the surfaces they correspond to. The denser the point

clouds are, the better the estimate. This means that the

initial images need to contain a large number of

detectable features. For real world buildings, these

features most often represent window and door

corners and intense changes in the texture, which do

not provide a large enough number of features for the

algorithm to track. Taking into account the lighting

variations of outdoors, the sets of features provided to

the system differed greatly from the actual scene and

pose tracking was not achievable. Outdoors

environments present very challenging conditions to

such implementations. Building façades provided a

limited number of features and, together with

variations in lighting conditions, such a system would

need a huge number of images and training to reliably

track the outdoor scene. Taking also into account the

cramped environment of a touristic site, image

recognition was not a realistic choice.

3.2 Sensor Implementation

Sensor approaches use long-range sensors and

trackers that report the locations of the user and the

surrounding objects in the environment. These

tracking techniques do not require any preparations of

the environment and their implementation relies on

cheap sensors present in every modern smart phone.

They require fewer actions by the users and ensure

that the AR experience will be delivered independent

of external conditions. The AR system is aware of the

distance to the virtual objects, because that model is

built into the system. However, the AR system may not know

where the real objects are placed in the environment

and relies on a “sensed” view with no feedback

on how closely the two align. Specifically in MAR,

modern devices are equipped with a variety of sensors

able to provide position and pose estimations. In

outdoor settings, Assisted GPS allows for position

tracking with an accuracy of up to 3 meters. For

pose estimation, the system combines data from the

accelerometer and geo-magnetic sensors. The

accelerometer provides the orientation relative to the

centre of the Earth and the geo-magnetic sensor to the

North. By combining this information, an estimate of

the device’s orientation in the world coordinate

system is provided. For this approach, we used the

Wikitude JavaScript API in combination with our

own location strategy. The Wikitude JavaScript API

was selected due to its robust results, licensing

options, large community and customer service. The

implementation includes the Android Location API

and the Google Play Services Location API. For the

latter, the application creates a request, specifying

location frequency and battery consumption priority.

After it is sent out, location events are fired including

information about latitude, longitude, altitude,

accuracy and bearing. These are then provided to the

AR activities to transform and scale the

corresponding digital content on the screen.
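
The combination of accelerometer and geo-magnetic readings described above can be sketched with a standard cross-product construction: gravity gives the "up" axis, the cross product of the magnetic field with gravity gives "east", and the two together give "north". The code below illustrates that idea only; it is not the sensor fusion used inside Wikitude or Android, the function names are hypothetical, and it assumes both vectors are expressed in the device frame (x right, y towards the top of the screen, z out of the screen) with no filtering or magnetic declination correction.

```python
import math


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(v):
    norm = math.sqrt(sum(c * c for c in v))
    if norm < 1e-9:
        # Happens in free fall or when the field is parallel to gravity.
        raise ValueError("degenerate sensor vector")
    return tuple(c / norm for c in v)


def device_orientation(accel, magnetic):
    """Return (azimuth, pitch, roll) in degrees from two device-frame vectors."""
    up = _normalize(accel)                      # accelerometer at rest points up
    east = _normalize(_cross(magnetic, accel))  # horizontal, perpendicular to north
    north = _cross(up, east)                    # completes the right-handed frame
    # Azimuth: heading of the device's y axis, clockwise from magnetic north.
    azimuth = math.degrees(math.atan2(east[1], north[1])) % 360.0
    pitch = math.degrees(math.asin(max(-1.0, min(1.0, -up[1]))))
    roll = math.degrees(math.atan2(-up[0], up[2]))
    return azimuth, pitch, roll


# Device lying flat, top edge towards magnetic north (field dips downwards).
flat_north = device_orientation((0.0, 0.0, 9.81), (0.0, 22.0, -40.0))
# Same pose rotated so that the top edge points east.
flat_east = device_orientation((0.0, 0.0, 9.81), (-22.0, 0.0, -40.0))
```

In practice raw readings are noisy, so a real implementation would normally low-pass filter both vectors before computing the angles.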

4 SYSTEM ARCHITECTURE

4.1 Client-Server Implementation

One of the main challenges we faced in designing the

proposed MAR application was the lack of

established guidelines in the application and

integration of AR technologies in outdoor heritage

sites. The overall design of the system consists of two

main parts: the mobile client and the server. The

server hosts a MySQL database

which holds the records about the monuments (name,

description, latitude, longitude etc.) and user specific

information. The information is delivered to the

mobile unit on a location request basis. The database


is exposed to the users via a RESTful web service. A

basic registration to the system is required. The

functions provided include storing data about visits,

marked places and the overall progress visualizing all

sites in a specific area.
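
One plausible reading of delivery "on a location request basis" is that the server returns only the monuments within some radius of the reported position. The sketch below shows that filter with the haversine great-circle distance over an in-memory list; the record fields follow those listed above, while the radius, the function names and the coordinates are assumptions for illustration and do not describe the actual web service or its MySQL queries.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; assumes a spherical model


@dataclass(frozen=True)
class Monument:
    name: str
    latitude: float
    longitude: float


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two WGS84 points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def monuments_near(monuments, lat, lon, radius_m):
    """Monuments within radius_m of (lat, lon), nearest first."""
    hits = [(haversine_m(lat, lon, m.latitude, m.longitude), m)
            for m in monuments]
    hits.sort(key=lambda pair: pair[0])
    return [m for distance, m in hits if distance <= radius_m]


# Placeholder coordinates, not the monuments' surveyed positions.
records = [
    Monument("Glass Mosque", 35.5180, 24.0170),
    Monument("Saint Rocco", 35.5172, 24.0170),
    Monument("Distant site", 35.5300, 24.0170),
]
nearby = monuments_near(records, 35.5170, 24.0170, radius_m=200.0)
```

Returning results sorted by distance lets the client show the closest monument first without further processing.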

4.2 Mobile AR Application

The mobile application’s architecture has been

designed to be extendable and respond easily to

changes in the underlying model of the server. It is

based on three main layers. The Views layer is where

the interactions with the users take place. Together

with the background location service they act as the

main input points to the system. The events that take

place are forwarded to the Handling layer which

consists of two modules following the singleton

pattern. The Data Handler is responsible for interaction

with the local content and communicating with the

views, while the Rest Client is responsible for

communicating with the Web Service. The Model layer

consists of basic helper modules to parse the obtained

JSON files and interact with the local database. The

actions flow from the Views layer to the lower

components. Responding to a user event or a location

update, a call is made to the Handling layer which will

access the model to return the requested data.

The Handling layer is the most important of the

three; all interactions, exchange of information and

synchronization pass through this layer. The Rest

Client provides an interface for receiving and sending

information to the remote database as requested by

the other layers. It is responsible for sending data

about user visits, saved places, updates in progress

and personal information. It also provides functions

for receiving data from the Server concerning Points

of Interest (POI) information and images. It also

allows for synchronizing and queuing the requests.

While the Rest Client is responsible for the interactions

with the server, the Data Handler manages the

communication of the local content. Information

received is parsed and stored in the local DB. The

handler responds to events from the views and

background service and handles the business logic for

the other components. It forms and serves the

available information based on the state of the

application.

The views are basic user-interface components

facilitating the possible interactions with the users.

The Map View is a fragment containing a 2D map

developed with the Google Maps API. It displays the

user’s location, as obtained by the background

service, and the POIs as markers on the map. The AR

view is based on the Wikitude JavaScript API and is

where the AR experiences take place. It is a Web view

with a transparent background overlaid on top of a

camera surface. It displays the 3D reconstructions

while it receives location updates from the

background service and orientation updates from the

underlying sensor implementation. It also contains a

navigation view, where the POIs are displayed as

labels on the real world. Interactivity is handled in

JavaScript and it is independent from the native code.

The View pagers are framework specific UI elements

that display lists of the POIs, details for each POI,

user leader-boards and user profiles. Finally, the

Notification View is used when the application is in

the background and aims to provide control over the

location service. It is a permanent notification on the

system tray where the user can change all preferences

of the location strategy and start, stop or pause the

service at will.

The aim of the standalone background service is

to allow users to roam freely in the city while receiving

notifications about nearby POIs. It is responsible for

supplying the locations obtained by the GPS to the

Map View and AR Views. The Location Provider

obtains the locations and offers the option to swap

between two location strategies: the Google Play Services API and the

Android Location API. In

order to offer control over battery life and data-usage,

the users can customize its frequency settings from

the preferences. The views requesting location

updates are registered as Listeners to the Location

Service and receive locations containing latitude,

longitude, altitude, accuracy information etc. The

Location Event Controller serves the location events

to the registered views. The user’s location is

continuously compared to that of the available POIs

and if the corresponding distance is in an acceptable

range, the user can interact with the POI. The events

sent include entering and leaving the active area of a

POI. If the application is in the background, a

notification is issued leading to the AR Views and

Map Views.
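
The enter and leave events described above amount to a small state machine: remember which POIs the user was inside on the previous location update and report only the changes to the registered listeners. The following sketch shows that logic in isolation. The class and method names are hypothetical, the active radius is an arbitrary example value, and the per-POI distances are assumed to have been computed elsewhere from the latest GPS fix.

```python
from typing import Callable, Dict, List, Set

Listener = Callable[[str, str], None]  # receives (event, poi_id)


class ProximityMonitor:
    """Emits 'enter' and 'leave' events as the user crosses a POI's active area."""

    def __init__(self, active_radius_m: float):
        self.active_radius_m = active_radius_m
        self._inside: Set[str] = set()
        self._listeners: List[Listener] = []

    def register(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def on_distances(self, distances_m: Dict[str, float]) -> None:
        """Process one location update given the distance to each POI in metres."""
        now_inside = {poi for poi, d in distances_m.items()
                      if d <= self.active_radius_m}
        # Only transitions are reported, so staying inside produces no event.
        for poi in sorted(now_inside - self._inside):
            self._emit("enter", poi)
        for poi in sorted(self._inside - now_inside):
            self._emit("leave", poi)
        self._inside = now_inside

    def _emit(self, event: str, poi: str) -> None:
        for listener in self._listeners:
            listener(event, poi)


events = []
monitor = ProximityMonitor(active_radius_m=30.0)
monitor.register(lambda event, poi: events.append((event, poi)))
monitor.on_distances({"mosque": 120.0, "wall": 25.0})  # user walks up to the wall
monitor.on_distances({"mosque": 110.0, "wall": 20.0})  # still inside, no new event
monitor.on_distances({"mosque": 10.0, "wall": 80.0})   # moves on to the mosque
```

Because GPS fixes fluctuate by a few metres, a production version would probably use a slightly larger radius for leaving than for entering to avoid rapid toggling at the boundary.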

The Model layer consists of standard storing units

and handlers to enable parsing JSON files obtained

from the server and interfaces to interact with the

local DB. It stores the historical information

concerning the current local, user specific

information and additional variables needed to ensure

the optimal flow of the application. The local assets,

including the 3D models and the HTML and JavaScript files

required by the Wikitude API, are stored in this layer

and provided to the Handling layer as requested. The

SQLite Helper is the component responsible for

updating the local storage and offers an interface to

the Data Handler containing all available interactions.
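
A minimal sketch of the parse-and-cache step performed by the Model layer is given below, using Python's built-in `sqlite3` and `json` modules instead of the Android classes. The table layout, the JSON field names and the `PoiCache` class are assumptions made for illustration; the paper only states that monument records include a name, description, latitude and longitude.

```python
import json
import sqlite3


class PoiCache:
    """Stores POI records received as JSON in a local SQLite table."""

    def __init__(self, path=":memory:"):
        self._db = sqlite3.connect(path)
        self._db.execute(
            "CREATE TABLE IF NOT EXISTS poi ("
            "id INTEGER PRIMARY KEY, name TEXT NOT NULL, description TEXT, "
            "latitude REAL NOT NULL, longitude REAL NOT NULL)"
        )

    def store_json(self, payload):
        """Parse a JSON array of POIs and insert or update each record."""
        # A missing description is stored as NULL rather than rejected.
        records = [{"description": None, **r} for r in json.loads(payload)]
        with self._db:  # commits on success, rolls back on error
            self._db.executemany(
                "INSERT OR REPLACE INTO poi "
                "(id, name, description, latitude, longitude) "
                "VALUES (:id, :name, :description, :latitude, :longitude)",
                records,
            )
        return len(records)

    def get(self, poi_id):
        return self._db.execute(
            "SELECT name, description, latitude, longitude FROM poi WHERE id = ?",
            (poi_id,),
        ).fetchone()

    def count(self):
        return self._db.execute("SELECT COUNT(*) FROM poi").fetchone()[0]


# Hypothetical server response with placeholder coordinates.
response = json.dumps([
    {"id": 1, "name": "Glass Mosque", "description": "Ottoman mosque",
     "latitude": 35.5180, "longitude": 24.0170},
    {"id": 2, "name": "Saint Rocco", "latitude": 35.5172, "longitude": 24.0170},
])
cache = PoiCache()
stored = cache.store_json(response)
```

Keying the table on the server-side id means a repeated download simply refreshes the cached row instead of duplicating it.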


5 MAR USER INTERFACE

The main technical challenge was to visualize, on-site,

the 3D reconstructions of monuments displayed

on a standard mobile phone, merged with the real

world, offering a location-aware experience. In this

section, we present the final user interface of the

application and the flow of the experience. Upon the

activation of the application, the user is welcomed by

a splash screen and is requested to create an account

or login with an existing one. After the login process,

the application checks the location and requests the

POIs and the historical information from the server

while it transfers to the main screen. The map activity

forms the main screen of our application and

facilitates the core of the functionality as shown in

Figure 4. POIs are displayed on the map in their

corresponding geo-locations. By clicking on a

marker, the user can see the info window of the POI

containing information, a thumbnail and the distance

between POIs.

Figure 3: 3D reconstruction of the Glass mosque featuring

the now demolished minaret, as seen by MAR users.

Figure 4: Map view displaying the POIs.

By interacting with a bar at the bottom of the

screen, the user navigates the app. Its entries include the user

profile and preferences, leader-boards and the library,

while a round button placed in the middle is used to

swap between map and camera navigation.

The Camera View displays the real-world content

as 2D labels, which contain basic information about

the POIs and displays them as dots on the radar to

assist in navigation. By clicking on a label, a bottom

drawer appears which holds additional information

and allows for more interactions. Users can save the

POI for later reference, access the reconstruction if

available, or return to the map. The main concept of

the experience is to reveal the available information

under controllable conditions, to facilitate a diverse

range of textual, image and 3D interactions.

Figure 5: Reconstructions of the demolished towers of the

Byzantine Wall and the restoration of the Rocco temple.

When in the initial state, the user is shown the

available 3D reconstructions on the map. After the user visits these monuments and views them in AR, the locations of the remaining POIs are unlocked and displayed on the map as question marks. The goal is to visit them and classify them into historical periods based on their architectural characteristics

and on clues obtained in the library page and from the

already visited monuments. The POIs can also be explored freely by means of the app's background service, which users can enable or disable. The aim of this approach is

to urge the visitor to observe the monuments, consult

the information available and even interact with each

other and locals to make each decision. The more

areas they visit and unlock, the more points they earn

for themselves. The overall progress in the city can be

viewed in the Leader-boards page, accessible from

the map view.
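
The unlock-and-classify flow described above can be sketched as a small state model. This is an assumption-laden illustration: the class names, the point values and the rule that a correct classification also earns points are hypothetical, since the text only states that visiting and unlocking areas earns points.

```python
from dataclasses import dataclass

VISIT_POINTS = 10      # hypothetical value
CLASSIFY_POINTS = 20   # hypothetical value


@dataclass
class Poi:
    name: str
    period: str              # the correct historical period
    visited: bool = False
    classified: bool = False


@dataclass
class Player:
    points: int = 0


def visit(player: Player, poi: Poi) -> None:
    """Mark a POI as visited; points are awarded only on the first visit."""
    if not poi.visited:
        poi.visited = True
        player.points += VISIT_POINTS


def classify(player: Player, poi: Poi, guess: str) -> bool:
    """Try to assign a visited POI to a period; return True on a correct guess."""
    if not poi.visited or poi.classified:
        return False
    if guess == poi.period:
        poi.classified = True
        player.points += CLASSIFY_POINTS
        return True
    return False


player = Player()
chapel = Poi("Saint Rocco", "Venetian")
visit(player, chapel)
classify(player, chapel, "Venetian")
print(player.points)
```

A leader-board would then be a ranking of players by `points`.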


The 3D reconstructions are the main feature we aimed to provide, as shown in Figures 3 and 5. By situating information in the context of its real surroundings, we aim to elevate the communication of cultural and historical information from static text and images to on-site 3D visualization. The 3D reconstructions are

accessed from the navigation views. When the user is

in close proximity to the monuments, the location Event Handler notifies the views to update their content and enable the AR experiences. In the AR screen, a reconstructed 3D model of the monument is overlaid on the camera image, and the GPS and inertial sensors are used to display the monument at its real-world location. The users can freely move around

the real site to view the monuments from all available

angles. They can click on the model to get

information or access the slider, available at the

bottom right, to change between the available 3D

models. The user can shift between visualizing either

the whole model or the reconstructed parts.
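
To make the geo-located placement concrete, the following is a minimal sketch under stated assumptions, not the method of the AR framework used: the GPS fixes of the user and the monument are converted to a local east/north offset with a small-distance approximation, a hypothetical trigger radius decides when AR is enabled, and the compass heading rotates the offset into the phone's frame. All function names and the 50 m radius are illustrative.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def enu_offset_m(user_lat, user_lon, poi_lat, poi_lon):
    """East and north offset (metres) from the user to the POI.

    Equirectangular approximation, adequate for distances of a few hundred metres.
    """
    lat0 = math.radians(user_lat)
    east = math.radians(poi_lon - user_lon) * math.cos(lat0) * EARTH_RADIUS_M
    north = math.radians(poi_lat - user_lat) * EARTH_RADIUS_M
    return east, north


def ar_enabled(user_lat, user_lon, poi_lat, poi_lon, trigger_radius_m=50.0):
    """True when the user is within the (hypothetical) trigger radius of the POI."""
    east, north = enu_offset_m(user_lat, user_lon, poi_lat, poi_lon)
    return math.hypot(east, north) <= trigger_radius_m


def device_frame(east, north, heading_deg):
    """Rotate an east/north offset into (right, forward) relative to the phone.

    heading_deg is the compass heading of the camera, clockwise from north.
    """
    h = math.radians(heading_deg)
    right = east * math.cos(h) - north * math.sin(h)
    forward = east * math.sin(h) + north * math.cos(h)
    return right, forward


# Hypothetical fixes: monument roughly 11 m east of the user, phone facing east.
east, north = enu_offset_m(0.0, 0.0, 0.0, 0.0001)
print(ar_enabled(0.0, 0.0, 0.0, 0.0001), device_frame(east, north, 90.0))
```

Because consumer GPS fixes can be off by several metres, a placement of this kind inherits that error, which is consistent with the registration issues reported by users in the evaluation.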

The Library page is where a collection of the

historical information is displayed (Figure 6). It

consists of a view pager containing the historical

periods in chronological order. Each page includes a

historical briefing and an image showing the active

area for that period as well as a list containing the

monuments that have been correctly classified. The

locked monuments are contained in a separate list.

The users can see the monument-specific information

by selecting the items on the list. The user can also

acquire historical information, including text and

images, mark and save monuments or get directions

to specific locations.

Figure 6: Pager View of Library, Monument details page.

6 EVALUATION

The MAR functionality as well as the generic user interface of the application were evaluated continuously from the start of technical development. Users commented that using the AR camera view instead of the map during navigation limited their movements and their perception of their surroundings. They generally refrained from using it except to locate specific sites and to classify the monuments. During the classification process, the AR camera proved useful as it helped locate specified monuments. Moreover, when the AR camera was on in conjunction with the GPS, high battery consumption was an issue. Following such comments, the AR camera was defined as a standalone activity instead of as a map replacement.

Regarding users' general impression of the AR experiences, feedback was promising. Most users had never used a similar application and were very excited to see the reconstructions superimposed on the real-world monument. Although most users commented on the registration problem, the geo-location approach was intuitive. The instant tracking method was challenging for an unaccustomed audience, but after an initial explanation and guidance, users got used to it and proceeded to experiment with placing the models in the annotated area so that they were accurately overlaid on the actual real-world building, as viewed on the mobile phone.

7 CONCLUSIONS

We presented the design of a geo-located and gamified mobile Augmented Reality application, aimed at consumer-grade mobile phones, that increases the synergy between visitors and cultural heritage sites. In addition to exploring application screens and web content, we offer a novel AR approach for visualizing historical information on-site, outdoors. By offering 3D reconstructions of cultural sites through AR, we enhance the digital guide experience while the user is visiting sites of cultural heritage and bridge the gap between digital content and the real world. Our design focused on providing an expandable platform that could accommodate additional sites, enabling future experts to display their digitized collections using cutting-edge AR technologies.

Although outdoors Mobile Augmented Reality is

still hampered by technical challenges concerning

GISTAM 2018 - 4th International Conference on Geographical Information Systems Theory, Applications and Management


localization and registration, it offers novel

experiences to a wide audience. The availability and

technological advances of modern smart phones will

allow, without a doubt, seamless and fascinating AR

experiences enhancing the understanding of historical

sites and datasets.

The field of Augmented Reality is quickly evolving, and tracking and registration in AR are far from solved. Employing a low-level AR SDK, such as ARToolKit, would provide access to low-level functionality. In its current state, the application does

not support interaction between the users, apart from

the overall rankings. Extending the platform to

include comments, likes, shares etc. and adding an

additional communication layer would increase

interest in cultural heritage. Gamification combined with scavenger and treasure hunts, location-aware storytelling etc. would make the experience even more immersive and increase visitor involvement and engagement.

