Digital Assisted Communication

Paula Escudeiro (1, 2), Nuno Escudeiro (1, 3), Marcelo Norberto (1, 2), Jorge Lopes (1, 2) and Fernando Soares (1, 2)

(1) Departamento de Engenharia Informática - Instituto Superior de Engenharia do Porto, Porto, Portugal

(2) GILT – Games, Interaction and Learning Technologies, Porto, Portugal

(3) INESC TEC - Laboratory of Artificial Intelligence and Decision Support, Porto, Portugal

Keywords: Sign Language, Blind, Deaf, Kinect, Sensor Gloves, Translator.

Abstract: Communication with the deaf community can be very challenging without the use of sign language. Sign language and written language differ considerably, in both syntax and semantics. The work described in this paper addresses the development of a bidirectional translator between several sign languages and their respective written text, as well as the methods used to evaluate these tools and the results obtained. A multiplayer game that uses the translator is also described. The translator from sign language to text employs two devices, the Microsoft Kinect and 5DT Sensor Gloves, to gather data about the motion and shape of the hands. This translator is being adapted to allow communication with the blind as well. The Quantitative Evaluation Framework (QEF) and ten-fold cross-validation were used to evaluate the project, with promising results. The product also goes through a validation process in which sign language experts and deaf users provide feedback by answering a questionnaire. The translator exhibits a precision higher than 90%, and the project's overall quality rating is close to 90% according to the QEF.

1 INTRODUCTION

Promoting equal opportunities and the social inclusion of people with disabilities is one of the main concerns of modern society and a key topic on the agenda of European Higher Education.

The emergence of new technologies, combined with the commitment and dedication of many teachers, researchers and members of the deaf community, is enabling the creation of tools that improve social inclusion and simplify communication between hearing-impaired people and the rest of society.

Despite these efforts, much remains to be done. In public services, for example, it is not unusual for a deaf citizen to need assistance to communicate with an employee, and in such circumstances establishing communication can be quite complex. Another critical area is education. Deaf children have significant difficulties in reading because they struggle to understand the meaning of vocabulary and sentences. This, together with the lack of communication via sign language in schools, severely compromises the development of linguistic, emotional and social skills in deaf students.

The VirtualSign project intends to reduce the linguistic barriers between the deaf community and people without hearing disabilities.

The project aims to improve communication accessibility for people with speech or hearing disabilities, as well as for the blind. ACE (Assisted Communication for Education), the research project that supports this work, also encourages and supports the learning of sign language.

Sign language, like any other living language, is constantly evolving and is effectively becoming a contact language with hearing people. It is increasingly seen as a language of instruction and learning in different areas, a playful language in leisure time, and a professional language in several fields of work (Morgado and Martins, 2009).

Escudeiro, P., Escudeiro, N., Norberto, M., Lopes, J. and Soares, F.

Digital Assisted Communication.

DOI: 10.5220/0006377903950402

In Proceedings of the 13th International Conference on Web Information Systems and Technologies (WEBIST 2017), pages 395-402

ISBN: 978-989-758-246-2

Copyright © 2017 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 LINGUISTIC ASPECTS

Sign language involves a set of components that make it a rich communication channel that is hard to decode. Although it is not as formal or as structured as written text, it offers a far more complex means of expression. When performing sign language, one must take into account a series of parameters that define its manual and non-manual components. The manual component includes:

Configuration of the hand. Portuguese Sign Language has a total of 57 identified hand configurations.

Orientation of the palm of the hand. Some pairs

of configurations differ only in the palm’s

orientation.

Location of articulation (gestural space).

Movement of the hands.

The non-manual component comprises:

Body movement. The body movement is

responsible for introducing a temporal context.

Facial expressions. The facial expressions add

a sense of emotion to the speech.

3 RELATED WORK

Over the last two decades, a significant number of works have been published on techniques to automate the translation of sign languages, with particular emphasis on American Sign Language (Morrissey and Way, 2005), and on the introduction of serious games in the education of people with speech and/or hearing disabilities (Gameiro et al., 2014).

Several of the methods proposed for the representation and recognition of sign language gestures apply some of the main state-of-the-art techniques, involving segmentation, tracking and feature extraction, as well as specific hardware such as depth sensors and data gloves.

In one such approach, the collected data is classified by applying a random forests algorithm (Biau, 2012), yielding an average accuracy rate of 49.5%.

Cooper et al. (2011) use linguistic concepts to identify the constituent features of a gesture, describing the motion, location and shape of the hand. These elements are combined using hidden Markov models (HMMs) for gesture recognition, with recognition rates in the order of 71.4%.

The CopyCat project (Brashear et al., 2010) is an interactive adventure and educational game with ASL recognition. Colorful gloves equipped with accelerometers are used to simplify the segmentation of the hands and to allow the estimation of motion acceleration, direction and hand rotation. The data is classified using HMMs, yielding an accuracy of 85%.

ProDeaf is an application that translates Portuguese text or speech into Brazilian Sign Language (ProDeaf, 2016). This project is very similar to one of the main components of the VirtualSign game, namely text-to-gesture translation. The objective of ProDeaf is to make communication between speech-impaired and deaf people easier by making digital content accessible in Brazilian Sign Language. The translation is performed by a 3D avatar that executes the gestures. ProDeaf already has over 130,000 users.

Showleap is a recent Spanish Sign Language translator (Showleap, 2016) that claims to translate sign language into voice and voice into sign language. Showleap currently uses the Leap Motion, a device capable of detecting hands through two monochromatic IR cameras and three infrared LEDs, together with the Myo armband. This armband detects arm motion, rotation and some hand gestures through electromyographic sensors that pick up electrical signals from the arm muscles. Showleap has not yet published precise translation results, and its creators claim that the product is 90% complete (Showleap, 2015).

Motionsavvy Uni is another sign language translator that makes use of the Leap Motion (Motionsavvy, 2016). This translator converts gestures into text and voice, and voice into text; Uni does not convert text or voice into sign language. The translator has been designed to be built into a tablet. Uni claims to have 2000 signs at launch and allows users to create their own signs.

Two students at the University of Washington won the Lemelson-MIT Student Prize by creating a prototype of a glove that can translate sign language into speech or text (University of Washington, 2016). The gloves have sensors on both the hand and the wrist, from which information about hand movement and rotation is retrieved. There are no clear results yet, as the project is a recent prototype.

4 VirtualSign TRANSLATOR

VirtualSign aims to contribute to greater social inclusion of the deaf through the creation of a bidirectional translator between sign language and text. In addition, a serious game was developed to assist in the process of learning sign language.

The project bundles three interlinked modules:

Translator of Sign Language to Text: module responsible for the capture, interpretation and translation of sign language gestures to text. A pair of sensor gloves (5DT Data Gloves) provides input about the configuration of the hands, while the Microsoft Kinect provides information about the orientation and movement of the hands. Figure 1 shows its interface.

Figure 1: Sign to text translator interface.

Translator of Text to Sign Language (Figure 2): module responsible for the translation of text to sign language. The gestures are performed by an avatar based on a defined set of parameters created with the VirtualSign Studio (VSS). The VSS provides an interface through which users create the gestures; this information is stored on the VirtualSign server and reused for translations.

Figure 2: Text to sign translator interface.

Serious Game: module responsible for the didactic aspects, which integrates the two modules described above into a serious game.

The system architecture as a whole has two main components. The main one is the game client, which includes the game module and the VirtualSign translator. The other is the web server component, which hosts all the web services needed by the game. These web services have access to the server database where the players' information is kept. The game clients communicate with each other using Unity network commands, and with the web server through HTTP requests.

5 SIGN LANGUAGE TO TEXT

Translation from gesture to text is done by combining the data received from the Kinect with the data received from the 5DT gloves.

For simplicity, we consider that a word corresponds to one gesture in sign language.

A gesture comprises a sequence of configurations of the dominant hand, each associated with a motion and orientation of both hands and, possibly, with a configuration of the support hand. Each element of the sequence is defined as an atom of the gesture. The beginning of a gesture is marked by the dominant hand adopting a configuration. When the configuration changes, two scenarios may arise: either the newly identified configuration is another atom of the gesture in progress, or the acquired atom closes the sequence of the gesture in progress and signals the beginning of a new gesture, which will start with the following atom.
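The segmentation rule above can be illustrated with a minimal Python sketch. The paper does not say how the translator decides between the two scenarios, so this sketch assumes a hypothetical lexicon that maps each word to its sequence of dominant-hand configuration labels, and uses prefix matching: an atom closes a gesture when the accumulated sequence is a complete entry that no longer entry could extend. The `Atom` fields, the lexicon and the rule that discards unknown sequences as noise are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Atom:
    """One element of a gesture (field names are hypothetical)."""
    dominant: str                     # dominant-hand configuration label
    support: Optional[str] = None     # support-hand configuration, if any
    motion: tuple = ()                # motion/orientation vector(s) for this atom


def segment(atoms, lexicon):
    """Split a stream of atoms into words by prefix matching (assumed rule).

    lexicon maps word -> tuple of dominant-hand configuration labels.
    """
    words_by_seq = {tuple(seq): word for word, seq in lexicon.items()}
    # proper prefixes: sequences that some longer gesture could still extend
    prefixes = {tuple(seq[:i]) for seq in lexicon.values() for i in range(1, len(seq))}

    words, current = [], []
    for atom in atoms:
        current.append(atom.dominant)
        key = tuple(current)
        if key in words_by_seq and key not in prefixes:
            words.append(words_by_seq[key])   # this atom closes the gesture...
            current = []                      # ...and the next atom starts a new one
        elif key not in words_by_seq and key not in prefixes:
            current = []                      # unknown sequence: treated as noise
    if tuple(current) in words_by_seq:        # stream ended on a complete gesture
        words.append(words_by_seq[tuple(current)])
    return words
```

With the hypothetical lexicon `{"hello": ("B", "5"), "sun": ("S",), "school": ("S", "B", "5")}`, the atom stream B, 5, S, B, 5 yields `["hello", "school"]`, while B, 5, S yields `["hello", "sun"]`, because "S" alone stays ambiguous until the stream ends. A real system would also compare the motion vectors, which this sketch ignores.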

5.1 Hand Configuration

Each country, and sometimes each region, has its own sign language. In Portuguese Sign Language (PSL) there are a total of 57 hand configurations, which reduce to 42 because 15 pairs differ only in orientation, as is the case of the letters M and W.

The configuration assumed by the hand is identified through classification, a machine learning setting in which one (possibly more) category from a set of predefined categories is assigned to a given object. A classification model is learned from a set of labelled samples, and this model is then used to classify new samples in real time as they are acquired.

5.1.1 Hand Configuration Inputs

To obtain the information needed to identify the configuration of each hand, 5DT data gloves (5DT, 2011) are used. Each glove has 14 sensors placed at specific joints of the hand, and data can be obtained at a rate of 100 samples per second.

To make data reading more robust and reduce the effect of noise, a set of sensor data is only retained (for further classification) if it remains stable for a predefined period of time after a significant change has been detected.
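The stability rule can be sketched as follows, assuming each frame is a tuple of the 14 sensor values of one glove sampled at 100 Hz. The distance measure (largest per-sensor difference), the three thresholds and the choice to keep the first frame of each steady run are hypothetical; the paper only states that data is kept when it is stable for a predefined time after a significant change.

```python
def dist(a, b):
    """Largest per-sensor difference between two glove readings."""
    return max(abs(x - y) for x, y in zip(a, b))


def stable_readings(frames, change_tol=0.2, stable_tol=0.05, hold_frames=30):
    """Keep one reading per steady period that follows a significant change.

    frames: sequence of 14-value glove readings sampled at 100 Hz, so
    hold_frames=30 means the hand must stay still for about 0.3 s.
    All thresholds are hypothetical.
    """
    kept = []
    reference = None        # last reading passed on for classification
    anchor, run = None, 0   # first frame of the current steady run and its length
    for frame in frames:
        if anchor is not None and dist(frame, anchor) <= stable_tol:
            run += 1
        else:
            anchor, run = frame, 1      # the hand moved: start a new steady run
        changed = reference is None or dist(anchor, reference) > change_tol
        if changed and run == hold_frames:
            kept.append(anchor)         # stable long enough after a change
            reference = anchor
    return kept
```

Frames recorded during a transition never stay still long enough to be kept. Returning to the same pose after a brief disturbance is not reported twice, because it does not differ significantly from the last kept reading.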


5.1.2 Classification

The classification model is built from labelled samples, and the program then classifies new samples in real time: once a sample is obtained, its data is passed through the classifier.

To improve the result of this classification process, the 42 hand configurations were divided into different groups. The distinguishing factor between groups is the set of fingers most relevant to each group of configurations.

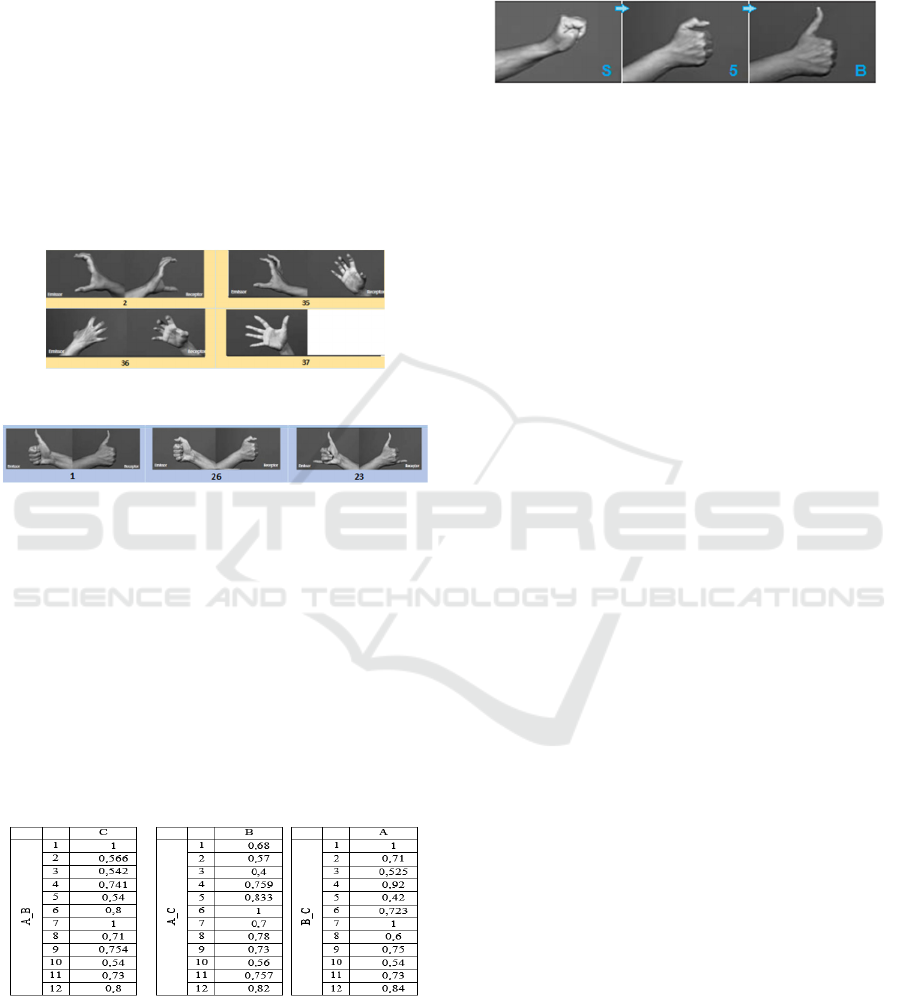

As seen in Figure 3, group 3 gathers the configurations similar to the open-hand gesture, whereas Figure 4 shows that group 2 gathers the configurations related to raising the thumb.

Figure 3: Group 3 - Open hand related configurations.

Figure 4: Group 2 - Thumb raising hand configurations.
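One plausible way to exploit the grouping is a two-stage classifier: first predict the group, then choose only among the configurations of that group. The paper does not detail how the groups are used, so this sketch is an assumption. It uses a plain k-nearest-neighbours vote (the classifier used in the tests below), and the group names, configuration labels and 2-dimensional toy vectors are hypothetical stand-ins for the real 14-sensor readings.

```python
import math
from collections import Counter


def knn_predict(train, x, k=3):
    """Majority label among the k training samples closest to x (Euclidean)."""
    nearest = sorted(train, key=lambda sample: math.dist(sample[0], x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def two_stage_predict(train, group_of, x, k=3):
    """Predict the group first, then the configuration within that group.

    train:    list of (sensor_vector, configuration_label)
    group_of: dict configuration_label -> group id (hypothetical grouping)
    """
    group = knn_predict([(v, group_of[c]) for v, c in train], x, k)
    in_group = [(v, c) for v, c in train if group_of[c] == group]
    return group, knn_predict(in_group, x, k)
```

Restricting the second stage to one group means configurations from other groups can no longer be confused with the correct one, at the cost of an unrecoverable error whenever the group itself is mispredicted.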

Three individuals, named A, B and C, performed the tests, each providing a dataset with 10 samples for each of the 42 configurations, for a total of 1260 samples. These datasets were then crossed, using two of the individuals for training and the remaining one for testing, with a k-nearest neighbours (KNN) classifier (Zhang et al., 2006). To reduce the variance of our estimates, we used 10-fold cross-validation.

Table 1 shows the results of the tests performed.

Table 1: Correct prediction rate in the group classifier test.
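The cross-subject protocol can be sketched as follows: with three individuals and 10 samples for each of the 42 configurations there are 3 × 42 × 10 = 1260 samples, and each round trains on two individuals and tests on the third. The data layout and k = 3 are assumptions, the toy 2-dimensional vectors stand in for glove readings, and the 10-fold cross-validation mentioned in the text is not shown.

```python
import math
from collections import Counter


def knn_predict(train, x, k=3):
    """Majority label among the k training samples closest to x (Euclidean)."""
    nearest = sorted(train, key=lambda sample: math.dist(sample[0], x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def cross_subject_accuracy(data, k=3):
    """Train on all subjects but one and test on the held-out subject.

    data: dict subject -> list of (sensor_vector, configuration_label)
    returns dict subject -> accuracy on that subject's samples
    """
    scores = {}
    for held_out, test in data.items():
        train = [s for subj, samples in data.items() if subj != held_out for s in samples]
        correct = sum(knn_predict(train, x, k) == y for x, y in test)
        scores[held_out] = correct / len(test)
    return scores
```

Testing on a person absent from the training data estimates how well the classifier generalizes to a new user, which a random split of pooled samples would overestimate.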

Note that intermediate (fake) configurations, which constitute only noise, may occur during the transition between two distinct configurations.

For example, Figure 5 shows the transition from the configuration corresponding to the letter "S" to the configuration corresponding to the letter "B", during which a noisy intermediate configuration is obtained that matches the PSL hand configuration for the number "5".

Figure 5: Transition from configuration S to configuration

B, through the intermediate configuration (noise) 5.

Intermediate configurations differ from the others in their time component: they have a shorter steady time. This consistent feature can be used to distinguish a valid configuration from a noisy, intermediate one. We therefore use the dwell time of each configuration as a discriminating element, setting a minimum execution (steady) time below which configurations are considered invalid.
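The dwell-time rule can be illustrated with a small function that receives the classifier output as time-stamped labels and keeps only the configurations held for at least a minimum time. The 0.3 s threshold and the input format are hypothetical; the paper does not give the value it uses.

```python
def filter_by_dwell(stream, min_dwell=0.3):
    """Drop configurations held for less than `min_dwell` seconds.

    stream: list of (timestamp_in_seconds, configuration_label) in time order.
    A run of identical labels lasts until the first different label arrives;
    the final run lasts until the last timestamp.
    """
    accepted = []
    run_label, run_start = None, None
    for t, label in stream:
        if label != run_label:
            if run_label is not None and t - run_start >= min_dwell:
                accepted.append(run_label)      # held long enough: a valid configuration
            run_label, run_start = label, t     # start timing the new configuration
    if run_label is not None and stream[-1][0] - run_start >= min_dwell:
        accepted.append(run_label)
    return accepted
```

For the S, 5, B transition of Figure 5 with a label every 0.1 s, "S" held for 0.6 s, the spurious "5" for 0.2 s and "B" for 0.5 s, the function returns `["S", "B"]`.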

5.2 Hand Motion and Orientation

To obtain information that allows characterizing the

movement and orientation of the hands we use the

Microsoft Kinect.

The Kinect skeleton feature allows tracking up to four people at the same time, extracting characteristics from 20 skeletal points of the human body at a maximum rate of 30 frames per second.

Of the 20 points available, only 6 are used, namely the points corresponding to the two hands, the two elbows, the hip centre and the head.

Motion information is only saved when a significant movement occurs, i.e., when the distance between the current position of the dominant hand (or of both hands) and the last stored position is greater than a predefined threshold.
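The significant-movement test amounts to a distance threshold against the last stored position. This sketch assumes positions in metres and a hypothetical 5 cm threshold. For two-handed gestures, the positions of both hands can be concatenated into one 6-value tuple, since `math.dist` accepts points of any dimension.

```python
import math


def significant_positions(positions, threshold=0.05):
    """Keep a hand position only if it moved more than `threshold` from the last stored one.

    positions: iterable of (x, y, z) tuples, one per Kinect frame.
    threshold: hypothetical minimum displacement, in the same units as positions.
    """
    stored = []
    for p in positions:
        if not stored or math.dist(p, stored[-1]) > threshold:
            stored.append(p)
    return stored
```

Comparing against the last *stored* position, rather than the previous frame, lets a slow but steady movement accumulate until it crosses the threshold instead of being ignored frame by frame.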

When a significant movement is detected, we save an 8-dimensional vector containing, for each hand, its normalized coordinates (x_n, y_n, z_n) and the angle that characterizes its orientation. If the gesture is performed with the dominant hand only, the coordinates and angle of the support hand are set to zero. The coordinates are normalized by subtracting the vector that defines the central hip position (x_a, y_a, z_a) from the vector that represents the hand position (x_m, y_m, z_m):

$$(x_n, y_n, z_n) = (x_m, y_m, z_m) - (x_a, y_a, z_a)$$

To obtain the orientation, the angular coefficient (slope) of the straight line passing through the hand and the elbow is computed.

In summary, for each configuration assumed by the dominant hand, a set of vectors characterizing the motion (position and orientation) of the hands is recorded.
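Putting the normalization and the orientation together, the 8-dimensional vector could be built as follows. Two points are assumptions: the orientation angle is taken in the frontal x-y plane, and `math.atan2` is used instead of the raw slope dy/dx so that a vertical forearm does not cause a division by zero. The function names are hypothetical.

```python
import math


def hand_features(hand, elbow, hip):
    """(x_n, y_n, z_n, angle) for one hand, from Kinect joint positions (x, y, z)."""
    # normalization: hand position minus the central hip position
    xn, yn, zn = (h - c for h, c in zip(hand, hip))
    # orientation of the elbow-to-hand line in the x-y plane (assumed plane);
    # atan2 gives an angle whose tangent is the slope dy/dx, but also works when dx == 0
    angle = math.atan2(hand[1] - elbow[1], hand[0] - elbow[0])
    return (xn, yn, zn, angle)


def motion_vector(dom_hand, dom_elbow, hip, sup_hand=None, sup_elbow=None):
    """8-dimensional vector: dominant-hand features followed by support-hand features."""
    dominant = hand_features(dom_hand, dom_elbow, hip)
    if sup_hand is None:
        support = (0.0, 0.0, 0.0, 0.0)   # one-handed gesture: support hand set to zero
    else:
        support = hand_features(sup_hand, sup_elbow, hip)
    return dominant + support
```

Subtracting the hip centre makes the coordinates independent of where the signer stands in front of the sensor.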

5.3 Evaluation

The evaluation focuses on the model's capacity to correctly predict the words being represented in sign language.

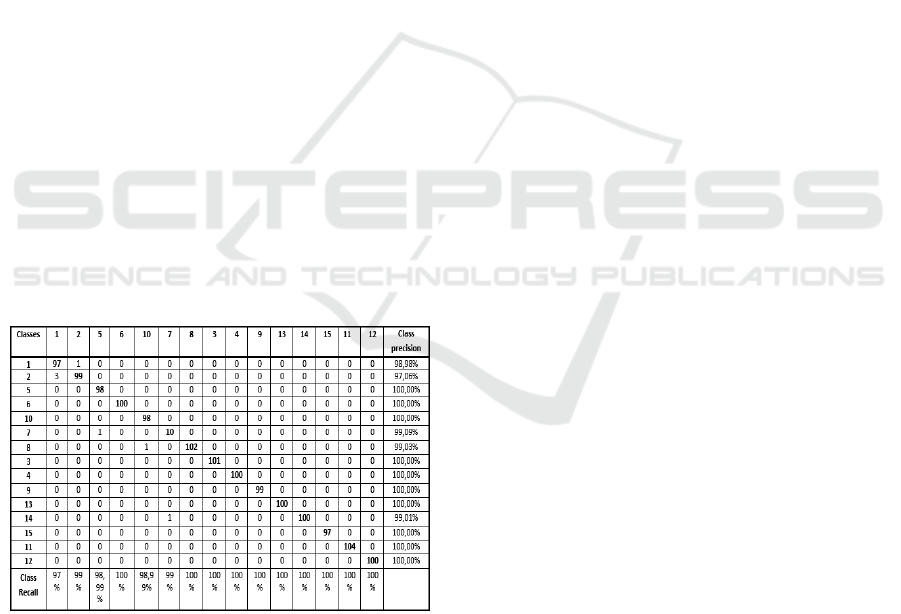

This evaluation uses 15 words, for which 2260 samples are available, but only 750 samples were used to train the SVM (Steinwart and Christmann, 2008). Each of the 15 words has 50 examples; the same number was used for all of them to ensure a uniform distribution of the classes.

These gestures were obtained from different users. Recall measures the proportion of the instances of a particular class that are correctly identified, and precision is the percentage of predictions for a particular class that are correct.

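The classes (words) used are “Olá” (hello, 1), “Adeus” (goodbye, 2), “Sorrir” (to smile, 3), “Segredo” (secret, 4), “Floresta” (forest, 5), “Sol” (sun, 6), “Flor” (flower, 7), “Aluno” (student, 8), “Escola” (school, 9), “Casa” (house, 10), “Aulas” (classes, 11), “Desenho” (drawing, 12), “Amigo” (friend, 13), “Pais” (parents, 14) and “Desporto” (sport, 15).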

The confusion matrix in Table 2 shows the effectiveness of the classifier, which achieves an average precision above 99% in this test scenario.

Table 2: Confusion Matrix.
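Precision and recall can both be read off a confusion matrix. The sketch below assumes that rows are true classes and columns are predicted classes (the layout of Table 2 is not reproduced here), and the 2-class matrix in the usage note is made up for illustration.

```python
def precision_recall(cm):
    """Per-class precision and recall from a square confusion matrix.

    cm[i][j] counts samples of true class i predicted as class j (assumed layout).
    """
    n = len(cm)
    precision, recall = [], []
    for c in range(n):
        tp = cm[c][c]                                      # correctly predicted samples of class c
        predicted_as_c = sum(cm[r][c] for r in range(n))   # column sum
        actually_c = sum(cm[c])                            # row sum
        precision.append(tp / predicted_as_c if predicted_as_c else 0.0)
        recall.append(tp / actually_c if actually_c else 0.0)
    return precision, recall


def macro_average(values):
    """Unweighted mean over classes, e.g. an average precision."""
    return sum(values) / len(values)
```

For the made-up matrix `[[48, 2], [1, 49]]`, precision is 48/49 ≈ 0.980 and 49/51 ≈ 0.961, while recall is 0.96 and 0.98. With balanced classes, as in this evaluation (50 examples per word), the macro average weights every word equally.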

6 TEXT TO SIGN LANGUAGE

Translating text into sign language is a demanding task because of the specific linguistic aspects involved. Like any other language, sign language has its own grammar, which must be taken into consideration. These details are taken into account in the VSS.

A server-based syntax and semantics analyser is being developed to improve the accuracy of the translation. Deaf people usually have a hard time reading and writing the written language of their country. The avatar used for the translations, as well as its hand animations, was created with Autodesk Maya.

Figure 2 shows the avatar, whose body has properties identical to those of a human body so that it can perform the gestures as accurately as possible.

Other aspects were also taken into consideration, such as the background, which must contrast with the avatar so that all gestures and movements can be easily understood by deaf users.

6.1 Structure

The text-to-sign-language translator module is divided into several parts, whose interconnections were carefully planned to improve the distribution and efficiency of all the project components.

The connection to the Kinect and the data gloves is based on sockets. The same mechanism is used by the PowerPoint Add-in: the text from a PowerPoint presentation is sent to the translator, and the avatar translates it into sign language. The Add-in sends each word on the slide in turn, highlighting it and waiting for a reply before continuing, so that the user knows what is being translated at each moment.
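The Add-in's word-by-word exchange can be sketched with plain sockets. The message format (one UTF-8 word per line, answered by a `DONE <word>` line) and the function names are hypothetical, since the paper does not describe the protocol; `socket.socketpair` stands in for the real network connection so that the example runs in a single process.

```python
import socket
import threading


def send_words(sock, words):
    """Add-in side (hypothetical): send one word per line, wait for each reply."""
    acks = []
    with sock.makefile("r", encoding="utf-8", newline="\n") as rf, \
         sock.makefile("w", encoding="utf-8", newline="\n") as wf:
        for word in words:
            # the real Add-in would highlight `word` on the slide at this point
            wf.write(word + "\n")
            wf.flush()
            acks.append(rf.readline().rstrip("\n"))   # blocks until the avatar is done
    return acks


def translator_loop(sock, signed):
    """Translator side (hypothetical): sign each received word, then reply."""
    with sock.makefile("r", encoding="utf-8", newline="\n") as rf, \
         sock.makefile("w", encoding="utf-8", newline="\n") as wf:
        for line in rf:                       # ends when the Add-in stops sending
            word = line.rstrip("\n")
            signed.append(word)               # stand-in for playing the avatar animation
            wf.write("DONE " + word + "\n")
            wf.flush()


def demo(words):
    addin_sock, translator_sock = socket.socketpair()
    signed = []
    worker = threading.Thread(target=translator_loop, args=(translator_sock, signed))
    worker.start()
    try:
        acks = send_words(addin_sock, words)
        addin_sock.shutdown(socket.SHUT_WR)   # tell the translator there are no more words
        worker.join()
    finally:
        addin_sock.close()
        translator_sock.close()
    return acks, signed
```

Waiting for the reply before sending the next word is what keeps the highlighted word on the slide synchronized with the avatar.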

The database contains all the gesture parameters and the corresponding text. During translation, the application searches the database for the input word. When the word is found, the parameters the avatar needs to perform it are sent to the application. If there is no match in the database, the avatar spells the word letter by letter.
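The lookup with the letter-by-letter fallback can be sketched as follows. A Python dictionary stands in for the server database, and the step format is hypothetical; in the real system the parameters stored for a word would be sent to the avatar rather than the word itself.

```python
import string


def plan_signing(text, gesture_db):
    """Turn text into avatar steps: whole-word gestures, or letters as a fallback.

    gesture_db: dict word -> gesture parameters (stand-in for the server database).
    Returns a list of ("gesture", word) and ("letter", character) steps.
    """
    steps = []
    for token in text.split():
        word = token.strip(string.punctuation).lower()   # "Casa." -> "casa"
        if not word:
            continue
        if word in gesture_db:
            steps.append(("gesture", word))                # parameters found: sign the word
        else:
            steps.extend(("letter", ch) for ch in word if ch.isalpha())  # spell it out
    return steps
```

For example, with a database containing only "olá" and "casa", the sentence "Olá, Ana casa." becomes the gesture for "olá", the letters a, n, a, and the gesture for "casa".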

6.1.1 Architecture

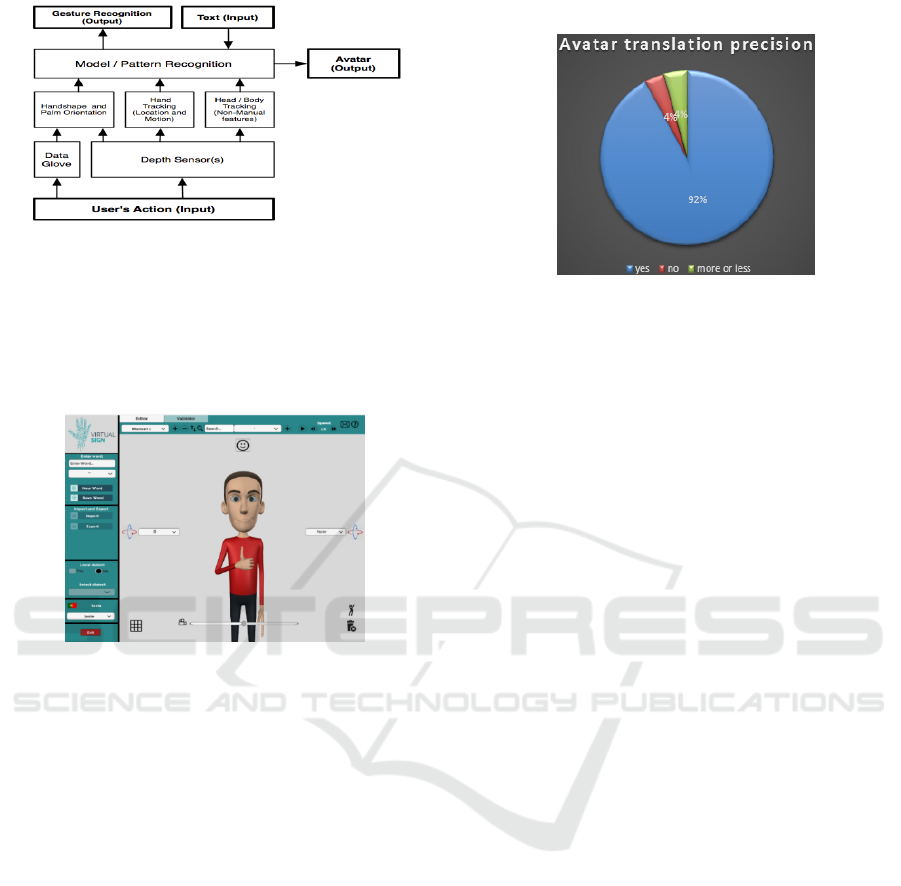

Figure 6 shows the two main modules, which carry out the steps needed in the translation process.

The first module (text recognition) converts written text into signs, which are performed by an animated character.

The second module translates sign language gestures into text. This process uses two devices: the Kinect for motion recognition and the gloves for the recognition of static hand configurations.


Figure 6: Translator architecture.

6.2 VirtualSign Studio

The VSS is the tool in which users create the gestures for each word. Its main interface is shown in Figure 7.

Figure 7: VirtualSign Studio interface.

To use this application, users only need to log in with their username and password. There are two types of users: editors and validators.

The editor can create words to be added to the database. To create words, the editor has access to all the linguistic aspects represented by the avatar. It is also possible to import a previously created word.

The validator validates words: the validator imports the words awaiting validation and uses the preview system to check whether each one is correct. If a word is correct, the validator only needs to click the validate button, and from that moment on the word is used to translate texts.

The validator can also put a word into a review state, in which the editor can fix it.
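The editor/validator workflow behaves like a small state machine. The state names, the action names and the assumption that a word can only be sent to review while awaiting validation are illustrative; the paper only states that validated words are used in translation and that words in review can be fixed by the editor.

```python
from enum import Enum


class WordState(Enum):
    PENDING = "awaiting validation"
    VALIDATED = "validated"
    REVIEW = "in review"


# (role, action) -> (required current state, resulting state); names are hypothetical
TRANSITIONS = {
    ("validator", "validate"): (WordState.PENDING, WordState.VALIDATED),
    ("validator", "send_to_review"): (WordState.PENDING, WordState.REVIEW),
    ("editor", "resubmit"): (WordState.REVIEW, WordState.PENDING),
}


class Word:
    def __init__(self, text):
        self.text = text
        self.state = WordState.PENDING   # a newly created word awaits validation

    def apply(self, role, action):
        try:
            required, result = TRANSITIONS[(role, action)]
        except KeyError:
            raise PermissionError(f"{role} cannot {action}") from None
        if self.state is not required:
            raise ValueError(f"cannot {action} a word that is {self.state.value}")
        self.state = result

    @property
    def usable_for_translation(self):
        # only validated words are used by the text-to-sign translator
        return self.state is WordState.VALIDATED
```

Keeping the allowed transitions in one table makes the role checks explicit: an editor, for example, has no entry that leads to the validated state.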

6.3 Text to Sign Results

Anonymous questionnaires were used to gather information about the quality of translation from gesture to text and from text to gesture. The text-to-gesture translator already has a large database of around 600 words.

Figure 8: Text to sign results.

Figure 8 presents the results on the precision of the 600 gestures, which were analyzed by four PSL specialists aged between 39 and 64.

7 SERIOUS GAME

Digital games provide a remarkable opportunity to overcome the lack of educational digital content available to the hearing-impaired community. As the name suggests, playing a game has a strong leisure component that cannot be found in conventional educational means. Educational game researcher James Gee (Gee, 2003) shows how good game designers manage to get new players to learn their long, complex and difficult games.

Two games were created for the project.

The first is a single-player game in which the player controls a character that interacts with various objects and non-player characters with the aim of collecting several gestures from Portuguese Sign Language (Escudeiro et al., 2014).

The first game is played from a first-person view, with the player controlling a character on the map. Each map represents a level, and each level has several objects representing signs scattered across the map for the player to interact with.

The second game is a multiplayer, first-person puzzle game that requires two players to cooperate in order to get through it.

7.1 Game Concept

VirtualSign games aim to be an educational and social integration tool that improves users' sign language skills and allows them to communicate with others using those skills.

The game is a first-person puzzle game in which the puzzles are based on simply shaped objects such as cubes and spheres. The player is motivated to solve the puzzles using the surrounding environment. The objects can be moved by the user, and there are also interactive objects such as buttons and switches. However, some of these objects are accessible to only one of the players, which creates the need for the players to cooperate in order to finish each level.

When the players complete a level, their completion time is recorded in an in-game worldwide ranking table, alongside other players' times, to encourage competition. Each player also earns a personal score, so there is competition between the two players as well.

Communication between the players is shown as gestures in real time, and there will also be a text chat where previous messages can be accessed. Figure 9 shows the input application and the avatar translating a received message.

Figure 9: VirtualSign Cooperation game interface during gameplay.

7.2 Game Results

Ten testers (8 male and 2 female), aged between 23 and 65, tested the game and answered surveys. However, only 5 of those testers were able to use the VirtualSign (VS) translator within the game and answer the question related to it. The testers were given a link to the form together with instructions, and the forms were answered anonymously. The beta-test survey had 20 questions, the last of which was an optional written answer about possible improvements to the game.

Each question rated the game on a scale from 1 to 5, with 1 being the worst and 5 the best. The final average at the end of the testing phase was 4.5 out of 5.

8 INCLUSION OF THE VISUALLY IMPAIRED

Besides promoting the social inclusion of the deaf through the VirtualSign translator, the ACE project also aims to reach visually impaired people. By using voice as input and output for the translator, ACE aims to provide the means for a deaf person to talk with a blind person and vice versa.

A game for the blind, which gives the player feedback through sound, is currently under development within ACE. Voice features are also being integrated into VirtualSign in order to achieve ACE's final goal.

9 CONCLUSIONS

The VirtualSign system is prepared to work with several distinct sign languages, making it possible for deaf people from different countries to understand each other.

The machine learning techniques used to process the inputs from the Kinect and the 5DT gloves are able to identify the signs being performed with high accuracy.

VirtualSign can be applied in places open to the public to facilitate communication between deaf and hearing people. It is generally accepted that assistance in understanding sign language in places such as fire departments, police stations, restaurants, museums and airports, among many others, is of clear added value in promoting equal opportunities and the social inclusion of the deaf and hearing impaired.

The VirtualSign translator was tested by several users, and the estimated accuracy of the conversion from gestures to text reaches 97%.

As future work on the gesture-to-text translator, a system that achieves the same precision and accuracy without gloves is being planned.

An intermediate semantic system between sign language and written text is also being created. Semantics and syntax are very important because translation between the two languages is not direct.

As mentioned before, voice recognition and synthesis for the blind are being developed, and a game for the blind is under development. We also aim to provide a way for both deaf and blind people to create digital art through an application that builds on all the progress made so far.


ACKNOWLEDGEMENTS

This work was supported by FCT - ACE - Assisted Communication for Education (ref: PTDC/IVC-COM/5869/2014) and European Union - Erasmus+ I-ACE International Assisted Communication for Education 2016-1-PT01-KA201-022812.

REFERENCES

Morgado, M., Martins, M., 2009. Língua Gestual Portuguesa. Comunicar através de gestos que falam é o nosso desafio, neste 25.º número da Diversidades, rumo à descoberta de circunstâncias propiciadoras nas quais todos se tornem protagonistas e interlocutores de um diálogo universal, 7.

Morrissey, S., Way, A., 2005. An example-based approach to translating sign language.

Gameiro, J., Cardoso, T., Rybarczyk, Y., 2014. Kinect-Sign, Teaching sign language to “listeners” through a game. Procedia Technology, 17, 384-391.

Biau, G., 2012. Analysis of a random forests model. Journal of Machine Learning Research, 13(Apr), 1063-1095.

Cooper, H., Pugeault, N., Bowden, R., 2011. Reading the signs: A video based sign dictionary. In 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), pp. 914-919. IEEE.

Steinwart, I., Christmann, A., 2008. Support vector machines. Springer Science & Business Media.

Brashear, H., Zafrulla, Z., Starner, T., Hamilton, H., Presti, P., Lee, S., 2010. CopyCat: A Corpus for Verifying American Sign Language During Game Play by Deaf Children. In 4th Workshop on the Representation and Processing of Sign Languages: Corpora and Sign Language Technologies.

ProDeaf, 2016. Solutions. [Online] Available at: http://www.prodeaf.net/en-us/Solucoes [Accessed December 2016].

Showleap, 2015. Showleap blog. [Online] Available at: http://blog.showleap.com/2015/12/2016-nuestro-ano/ [Accessed December 2016].

Showleap, 2016. Showleap. [Online] Available at: http://www.showleap.com/ [Accessed 2016].

Motionsavvy, 2016. Motionsavvy Uni. [Online] Available at: http://www.motionsavvy.com/ [Accessed December 2016].

University of Washington, 2016. UW undergraduate team wins $10,000 Lemelson-MIT Student Prize for gloves that translate sign language. [Online] Available at: http://www.washington.edu/news/2016/04/12/uw-undergraduate-team-wins-10000-lemelson-mit-student-prize-for-gloves-that-translate-sign-language/ [Accessed December 2016].

5DT, 2011. 5DT Data Gloves, Fifth Dimension Technologies. [Online] Available at: http://www.5dt.com/?page_id=34.

Zhang, H., Berg, A. C., Maire, M., Malik, J., 2006. SVM-KNN: Discriminative nearest neighbor classification for visual category recognition. In 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (Vol. 2, pp. 2126-2136). IEEE.

Gee, J. P., 2003. What video games have to teach us about learning and literacy. Computers in Entertainment (CIE), 1(1), 20-20.

Escudeiro, P., Escudeiro, N., Reis, R., Barbosa, M., Bidarra, J., Baltasar, A. B., ..., Norberto, M., 2014. Virtual sign game learning sign language. In A. Zaharim, K. Sopian (Eds.), Computers and Technology in Modern Education, ser. Proceedings of the 5th International Conference on Education and Educational Technologies, Malaysia.
