Fast Moving Object Detection from Overlapping Cameras

Mika

¨

el A. Mousse

1,2

, Cina Motamed

1

and Eug

`

ene C. Ezin

2

1

Laboratoire d’Informatique Signal et Image de la C

ˆ

ote d’Opale, Universit

´

e du Littoral C

ˆ

ote d’Opale, Calais, France

2

Unit

´

e de Recherche en Informatique et Sciences Appliqu

´

ees, Institut de Math

´

ematiques et de Sciences Physiques,

Universit

´

e d’Abomey-Calavi, Abomey Calavi, B

´

enin

Keywords:

Motion Detection, Codebook, Homography, Overlapping Camera, Information Fusion.

Abstract:

In this work, we address the problem of moving object detection from overlapping cameras. We based on

homographic transformation of the foreground information from multiple cameras to reference image. We

introduce a new algorithm based on Codebook to get each single views foreground information. This method

integrates a region based information into the original codebook algorithm and uses CIE L*a*b* color space

information. Once the foreground pixels are detected in each view, we approximate their contours with poly-

gons and project them into the ground plane (or into the reference plane). After this, we fuse polygons in

order to obtain foreground area. This fusion is based on geometric properties of the scene and on the quality

of each camera detection. Assessment of experiments using public datasets proposed for the evaluation of

single camera object detection demonstrate the performance of our codebook based method for moving object

detection in single view. Results using multi-camera open dataset also prove the efficiency of our multi-view

detection approach.

1 INTRODUCTION

In computer vision community, the use of multi-

camera takes a lot of scope. Indeed, motivations are

multiple and concern various fields as monitoring and

surveillance of significant protected sites, control and

estimation of flows (car parks, airports, ports, and mo-

torways). Because of the fast growing of data pro-

cessing, communications and instrumentation, such

applications become possible. These kind of systems

require more cameras to cover overall field-of-view.

They reduce the effects of objects dynamic occlu-

sion and improve foreground zone estimation accu-

racy. The performance of multi-camera surveillance

systems depends on the quality of each single view

object detection algorithm and on the quality of the

fusion of the single views decision.

In single camera system, many algorithms about

object detection exist with different purposes. These

algorithms are subdivide in three categories : with-

out background modeling, with background modeling

and combined approach. Algorithms based on back-

ground modeling are recommended in case of dy-

namic background observed by a static camera. Many

research works used this approach.One of these algo-

rithms is the codebook based algorithm proposed in

(Kim et al., 2005).

1.1 Object Detection using Codebook

The method proposed by Kim et al. detects in real-

time object in dynamic background. In this method,

each pixel p

t

is represented by a codebook C =

{c

1

,c

2

,...,c

L

} and each codeword c

i

, i = 1,...,L by

a RGB vector v

i

and a 6-tuples aux

i

={

ˇ

I

i

,

ˆ

I

i

, f

i

, p

i

,

λ

i

, q

i

} where

ˇ

I and

ˆ

I are the minimum and maximum

brightness of all pixels assigned to this codeword c

i

,

f

i

is the frequency at which the codeword has oc-

curred, λ

i

is the maximum negative run length defined

as the longest interval during the training period that

the codeword has not recurred, p

i

and q

i

are the first

and last access times, respectively, that the codeword

has occurred. The codebook model is created or up-

dated using two criteria. The first criterion is based

on color distortion (1) whereas the second is based on

brightness distortion (2).

q

||p

t

||

2

−C

2

p

<= ε

1

(1)

I

low

≤ I ≤ I

hi

(2)

In (1), the autocorrelation value C

2

p

is given by equa-

tion (3) and ||p

t

||

2

is given by equation (4).

C

2

p

=

(R

i

R + G

i

G + B

i

B)

2

R

2

i

+ G

2

i

+ B

2

i

(3)

296

Mousse M., Motamed C. and Ezin E..

Fast Moving Object Detection from Overlapping Cameras.

DOI: 10.5220/0005541402960303

In Proceedings of the 12th International Conference on Informatics in Control, Automation and Robotics (ICINCO-2015), pages 296-303

ISBN: 978-989-758-123-6

Copyright

c

2015 SCITEPRESS (Science and Technology Publications, Lda.)

||p

t

||

2

= R

2

+ G

2

+ B

2

(4)

In relation (2), I

low

= α

ˆ

I

i

, I

hi

= min{β

ˆ

I,

ˇ

I

α

} and

I =

√

R

2

+ G

2

+ B

2

.

After the training period, if an incoming pixel

matches with a codeword in the codebook, then

this codeword will be updated and this pixel will be

treated as a background pixel. If the pixel doesn’t

match, its information will be put in cache word and

this pixel will be treated as a foreground pixel. Due

to the performance of the codebook based method,

several researchers continue by exploring further (Li

et al., 2006; Cheng et al., 2010; Fang et al., 2013;

Mousse et al., 2014).

In this work, instead using HSV color space

(Doshi and Trivedi, 2006), HSL color space (Fang

et al., 2013) or YUV color space (Cheng et al., 2010),

we investigate a new color space. Then, we propose

a new approach by converting pixels to CIE L*a*b*

color space. We also use the Improved Simple Lin-

ear Iterative Clustering (Schick et al., 2012) to cluster

pixels. Finally, we build the codebook model on every

cluster. With each single view foreground informa-

tion, multi-camera object detection can be performed.

1.2 Multi-camera Object Detection

According to Xu et al., existing multi-camera surveil-

lance systems may be classified into three categories

(Xu et al., 2011).

• The system in the first category fuses low-level in-

formation. In this category, multi camera surveil-

lance systems detect and/or track in a single cam-

era view. They switch to another camera when the

systems predict that the current camera will not

have a good view of the scene (Cai and Aggarwal,

1998; Khan and Shah, 2003).

• In the second one, system extracts features and/or

even tracks targets in each individual camera. Af-

ter this, we integrate all features and tracks in or-

der to obtain a global estimate.These systems are

of intermediate-level information fusion (Kang

et al., 2003; Xu et al., 2005; Hu et al., 2006).

• The system in the third category fuses high-level

information. In these systems, individual cam-

eras don’t extract features but provide foreground

bitmap information to the fusion center. Detection

and/or tracking are performed by a fusion center

(Yang et al., 2003; Khan and Shah, 2006; Eshel

and Moses, 2008; Khan and Shah, 2009; Xu et al.,

2011).

This paper points out on the approaches in the third

category. In this category some algorithms have been

proposed. (Khan and Shah, 2006) proposed to use a

planar homographic occupancy constraint to combine

foreground likelihood images from different views.

It resolves occlusions and determines regions on the

ground plane that are occupied by people. (Khan and

Shah, 2009) extended the ground plane to a set of

planes parallel to it, but at some heights off the ground

plane to reduce false positives and missing detections.

The foreground intensity bitmaps from each individ-

ual camera are warped to the reference image by (Es-

hel and Moses, 2008). The set of scene planes are at

the height of people heads. The head tops are detected

by applying intensity correlation to align frames from

different cameras. This work is able to handle highly

crowded scenes. (Yang et al., 2003) detect objects

by finding visual hulls of the binary foreground im-

ages from multiple cameras. These methods use the

visual cues from multiple cameras and are robust in

coping with occlusion. However the pixel-wise ho-

mographic transformation at image level slows down

the processing speed. To overcome this drawback,

(Xu et al., 2011) proposed an object detection ap-

proach via homography mapping of foreground poly-

gons from multiple camera. They approximate the

contour of each foreground region with a polygon and

only transmit and project the vertices of the polygons.

The foreground regions are detected by using Gaus-

sian mixture model. These polygons are then rebuilt

and fused in the reference image. This method pro-

vides good results, but fails if a moving object is oc-

cluded by a static object.

In this work, we propose a new strategy based on

polygons projection. For each object, our strategy is

to detect the camera which has the best view of the ob-

ject and we only project the corresponding polygon in

the reference plane. A foreground polygon is obtained

by finding the convex hull of each single view fore-

ground region. This selection incorporates geometric

properties of the scene and the quality of each single

view detection in order to make better decisions. This

paper is subdivided in four sections. The second sec-

tion presents our approach. The experimental results

and performance evaluation are presented in the third

section. In the fourth section, we conclude this work.

2 OVERVIEW OF OUR METHOD

In this section we present our multi-view foreground

regions detection algorithm. Firstly we detect in each

single view the foreground maps and fuse them to ob-

tain a multi-view foreground object.

FastMovingObjectDetectionfromOverlappingCameras

297

This section is subdivided in four parts : the first

subsection presents the foreground pixels identifica-

tion algorithm, the second shows how foreground pix-

els are grouped, the planar homography mapping is

presented in the third subsection and the fourth sub-

section presents our multi-view fusion approach.

2.1 Foreground Pixels Segmentation

In this part, we present our single view foreground

pixel extraction based on codebook. We convert all

pixel from RGB to CIE Lab color space and in-

tegrate superpixel segmentation algorithm in back-

ground modeling step. The use of superpixels be-

comes increasingly popular for computer vision ap-

plications. In this work, we adopt the algorithm pro-

posed by (Schick et al., 2012) because they proved

the efficacy of this superpixels segmentation algo-

rithm in image segmentation. After the extraction

of superpixels, we build a codebook background

model. Let P ={s

1

,s

2

,...,s

k

} represents the K su-

perpixels obtained after superpixels segmentation.

Each superpixel s

j

, j ∈{1,2,...,k} is composed by

m pixels. With each superpixel, we buid a code-

book C ={c

1

,c

2

,...,c

L

} which contains L codewords

c

i

,i ∈{1,2,...,L}. Each codewords c

i

consists on an

vector v

i

= ( ¯a

i

,

¯

b

i

) and 6-tuples aux

i

={

ˇ

L

i

,

ˆ

L

i

, f

i

, p

i

,

λ

i

, q

i

}in which

ˇ

L

i

,

ˆ

L

i

are the minimum and maximum

of luminance value, f

i

is the frequency at which the

codeword has occurred, λ

i

is the maximum negative

run length defined as the longest interval during the

training period that the codeword has not recurred, p

i

and q

i

are the first and last access times, respectively,

that the codeword has occurred.

¯

L, ¯a,

¯

b are respec-

tively the average value of component L*, a* and b*

in a superpixel. We compute the color distortion by

replacing (5) and (6) into (1). For the brightness dis-

tortion degree we use

¯

L value as the intensity of the

superpixel. Therefore in expression (2) the value of I

is given by

¯

L.

p

t

= ¯a

2

+

¯

b

2

(5)

C

2

p

=

( ¯a

i

¯a +

¯

b

i

¯

b)

2

¯a

2

i

+

¯

b

2

i

(6)

After the learning phase, we detect foreground pixel

by subtracting the current image from the background

model. The substraction method is based on superpix-

els. The proposed algorithm is detailed in Algorithm

2.

1

BGS(p

k

) is a procedure which subtracts an incoming pixel

p

k

from the background. It’s defined as Algorithm 3.

Algorithm 1: Foreground objects segmentation.

1 l ← 0,t ← 1

2 for each frame F

t

of input sequence S do

3 Segment frame F

t

into k-superpixels

4 for each superpixels Su

k

of frame F

t

do

5 p

t

(

¯

L, ¯a,

¯

b)

6 for i = 1 to l do

7 if (colordist (p

t

,v

i

)) and

(brightness (

¯

L,

ˇ

L

i

,

ˆ

L

i

)) then

8 Select a matched codeword c

i

9 Break

10 if there is no match then

11 l ← l + 1

12 create codeword c

L

by setting

parameter v

L

← ( ¯a,

¯

b) and aux

L

←{

¯

L,

¯

L, 1, t −1, t, t}

13 else

14 update codeword c

i

by setting

v

i

← (

f

i

¯a

i

+ ¯a

f

i

+1

,

f

i

¯

b

i

+

¯

b

f

i

+1

) and

aux

i

←{min(

¯

L,

ˇ

L

i

), max(

¯

L,

ˆ

L

i

),

f

i

+ 1, max(λ

i

,t −q

i

), p

i

, t}

15 for each codeword c

i

do

16 λ

i

←max{λ

i

,((m×n×t)−q

i

+ p

i

−1)}

17 if t > N then

18 for each pixels p

k

of frame F

t

do

19 BGS(p

k

)

1

20 t ←t +1

Algorithm 2: procedure BGS(p

k

)

1 for all codewords do

2 find the codeword c

m

matching to p

k

based

on color and brightness distortions.

3

BGS(p

k

) =

foreground if there is no match

background otherwise

2.2 Foreground Pixels Grouping

After the foreground pixels in each view are detected,

these pixels need to be grouped into foreground re-

gion. The region is obtained by finding the con-

vex hull of all contours detected in threshold image.

Then, all region can be approximated by a polygon

and each polygon is convex. To use the polygon ver-

tices in the information fusion module, we have de-

cided to assign an unique identifier id to each polygon

ICINCO2015-12thInternationalConferenceonInformaticsinControl,AutomationandRobotics

298

and each polygon is characterized by a vector V

id

=

(v

1 id

,v

2 id

,...,v

k id

) in which each v

j id

, j = 1,...,k

represents a vertex of the polygon.

2.3 Planar Homography Mapping

Homographies are usually estimated between a pair

of images by finding feature correspondence in these

images. The most commonly used feature is corre-

sponded points in different images, though other fea-

tures such as lines or conics in the individual images

may be used. These features are selected and matched

manually or automatically from 2D images to com-

pute the homography between two camera views or

the homography between one camera view and the

top view. The homography transformation is a spe-

cial variation of the projective transformation. Let us

consider the point x = (x

s

,y

s

,1) in the image with-

out distortion and the point X = (X

w

,Y

w

,Z

w

,1) in the

3D world. The projection transformed from X to x is

given by equation (7).

x

s

y

s

1

=

f /s

x

s C

x

0

0 f /s

y

C

y

0

0 0 1 0

r

11

r

12

r

13

t

x

r

21

r

22

r

23

t

y

r

31

r

32

r

33

t

z

0 0 0 1

X

w

Y

w

0

1

(7)

If X is limited on the ground plane, therefore Z

w

will

be 0 and the projection transformed from X to x be-

comes:

x

s

y

s

1

=

f /s

x

s C

x

0 f /s

y

C

y

0 0 1

r

11

r

12

r

13

t

x

r

21

r

22

r

23

t

y

r

31

r

32

r

33

t

z

X

w

Y

w

1

(8)

x

s

y

s

1

=

h

11

h

12

h

13

h

21

h

22

h

23

h

31

h

32

h

33

X

w

Y

w

1

(9)

Planar homography mapping consists in finding 3×3

matrix which correspond a point pt(x,y) to an other

point pt

0

(x

0

,y

0

) on the ground plane in two different

views.

2.4 Fusion Approach

After the detection of each foreground map, we need

to fuse these regions to get a multi-view information.

The aim of our approach is to detect the camera which

provides the best view of each object in the scene. The

union of the selected objects views gives an overview

of the scene. This selection is based on geometric

properties of the scene and on the quality of each cam-

era detection. Let us consider a scene being observed

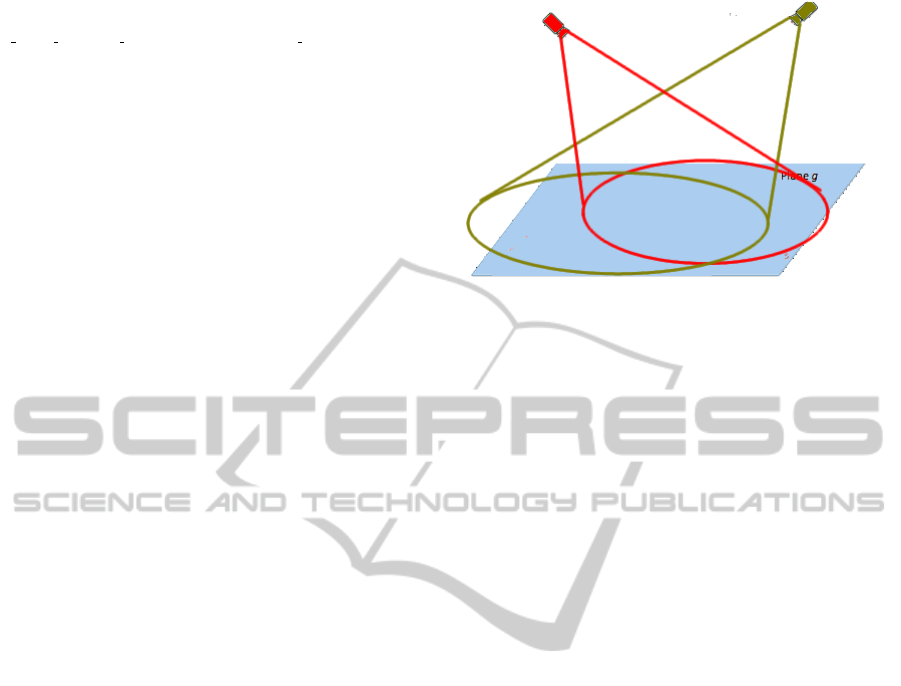

Figure 1: Illustration of scene observed by two cameras.

by cameras with overlapping views as shown in Fig-

ure 1. In Figure 1, the scene is observed by two cam-

eras.

Each camera observes the scene differently and it

is necessary to choose the camera which provides the

best view of an object.

Based on each single foreground polygons infor-

mation about the coverage area, we identify three

cases :

1. a polygon from the view of a camera is not asso-

ciated with any polygon from the view of another

camera;

2. a polygon from the view of a camera is associated

with only one polygon from the view of another

camera;

3. a polygon from the view of a camera is associated

with more than one polygon from the view of an-

other camera;

A polygon P1 will be considered associated with a

polygon P2 from another camera if the projection of

one of the P1 vertex into the plane of the second cam-

era view belongs to P2. The projection of the ver-

tex is performed by using the principles of planar ho-

mography mapping. Thus, a calibration of the stage

must be carried out for obtaining the projection ma-

trix. The ray casting algorithm proposed in (Suther-

land et al., 1974) has been used in order to resolve

point-in-polygon problem.

In the first case, we remark that only one camera

detect the object and we assume that this camera has

the best possible view of the object. Then the ver-

tices of the corresponding polygon are projected in

the ground plane (or the reference plane). The corre-

sponding multi-view foreground map is then obtained

by filling in the projected polygon.

In the second case, the object is detected by more

than one camera. For the selection of the best camera,

firstly we prioritize the cameras that detect the largest

FastMovingObjectDetectionfromOverlappingCameras

299

number of the lowest points of each polygon associ-

ated to the object as foreground pixels. For each poly-

gon the lowest point is the point nearest of the ground.

It is the vertex which has the largest value on the or-

dinate component in the camera coordinate system. If

at least two associated polygons satisfy this criterion,

then the selection is made with respect to the position

of the object relative to the camera. Indeed, this po-

sition has an influence on the rendering in the homo-

graphic plan. For performing this selection, for each

camera we calculate the distance between the projec-

tion of the highest vertex of the polygon in the ground

plane (or the reference plane) and the projection of

the lowest vertex of the polygon in the ground plane

(or the reference plane). The best camera is the cam-

era which has the smallest distance. Then the ver-

tices of the corresponding polygon are projected in

the ground plane (or the reference plane). The corre-

sponding multi-view foreground map is then obtained

by filling in the projected polygon.

In the third case,we conclude that this is dynamic

n occlusion between objects. According to this, the

best camera is the camera in which more than one

polygon is associated. Then the vertices of the corre-

sponding polygons are projected in the ground plane

(or the reference plane). The corresponding multi-

view foreground map is then obtained by filling in the

projected polygons.

3 EXPERIMENTAL RESULTS

AND PERFORMANCE

In this section, we present the performance of the pro-

posed approach by comparing it with other state of

the art method. Firstly we present the experimental

environment and results. After that we present and

analyze the performance of our system.

3.1 Experimental Results

We present experimental results at two levels. For

the validation of our single view algorithm, We have

selected two public benchmarking datasets (available

from http://www.changedetection.net) which are

covered under the work done in (Goyette et al.,

2012). They are “fall” and “boats” datasets. In order

to test our multi-view object localization algorithm,

we used video sequence which contains significant

lighting variation, dynamic occlusion and scene

activity. We selected “ Dataset 1” (available from

http://ftp.pets.rdg.ac.uk/pub/PETS2001/DATASET1/

sequence which has been used for testing several

multi view detection/tracking algorithms. These

datasets are made available to researchers for the

evaluation of moving object detection algorithms.

The experiment environment is Intel Core i7 CPU

L 640 @ 2.13GHz ×4 processor with 4GB memory

and the programming language is C++. The param-

eters of superpixels segmentation algorithm is given

by (Schick et al., 2012). In our implementation if the

size of input frame is (M ×N) then we construct

M×N

50

superpixels. The single view segmentation results are

presented in Figure 2 and Figure 3 whereas the multi-

view segmentation results are presented in Figure 4.

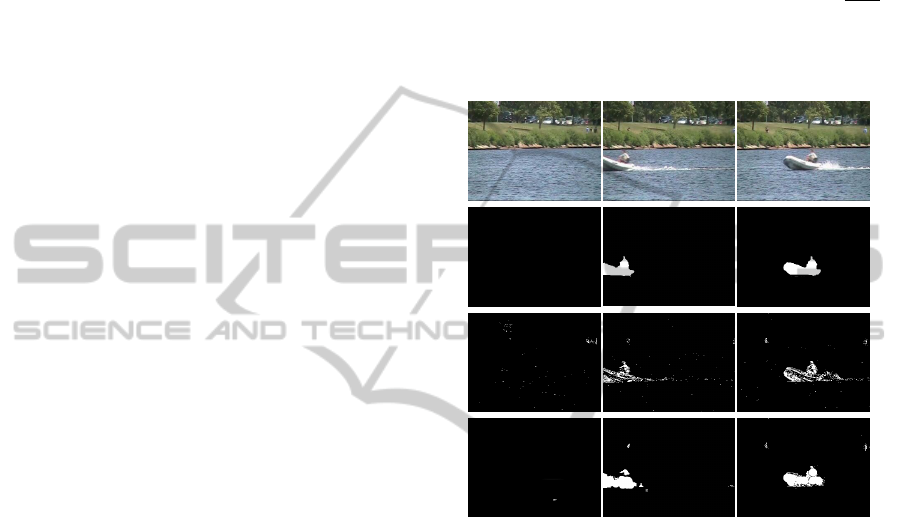

Figure 2: “boats” dataset segmentation results. The first

row shows the original images. The second row shows the

ground truth. The third row shows the detected results by

(Kim et al., 2005), and the last row shows the detected re-

sults by our proposed algorithm.

3.2 Performance Evaluation and

Discussion

Firstly, we evaluate the performance of our proposed

single view object detection and compare it to other

research works which extend the original codebook

by exploiting other color space (RGB, HSV, HSL,

YUV) information. In order to perform this compari-

son, we use an evaluation based on ground truth. The

ground truth has been obtained by manually labeling

foreground objects in the original frame. The ground

truth based metrics are : true negative (TN), true pos-

itive (TP), false negative (FN) and false positive (FP).

We use these metrics to compute other parameters

for the evaluation of the algorithm. These parame-

ters are false positive rate (FPR), true positive rate

(TPR), precision (PR) and F-measure (FM).These pa-

rameters are respectively computed using expressions

ICINCO2015-12thInternationalConferenceonInformaticsinControl,AutomationandRobotics

300

(10), (11), (12) and (13).

FPR = 1 −

T N

T N + FP

(10)

PR =

T P

T P + FP

(11)

T PR =

T P

T P + FN

(12)

FM =

2 ×PR ×T PR

PR + T PR

(13)

Results are presented in Table 1 and Table 2. In these

tables, CB refers to the method proposed by (Kim

et al., 2005), CB HSV refers to the method suggested

by (Doshi and Trivedi, 2006), CB HSL refers to the

algorithm proposed by (Fang et al., 2013), CB YUV

refers to the algorithm suggested by (Cheng et al.,

2010) and CB LAB refers to our proposed algorithm.

Table 1: Different metrics according to experiments with

“boats” dataset.

Metrics CB CB HSV CB HSL CB YUV CB LAB

FPR 0.23 0.25 0.21 0.27 0.21

PR 0.87 0.86 0.89 0.80 0.91

TPR 0.46 0.48 0.50 0.55 0.53

FM 0.60 0.62 0.64 0.65 0.66

According to results presented in Table 1 and Ta-

ble 2, we can conclude that our modified codebook

works better than standard codebook. The proposed

method reduces the percentage of false alarms and in-

creases the precision of object detection. According

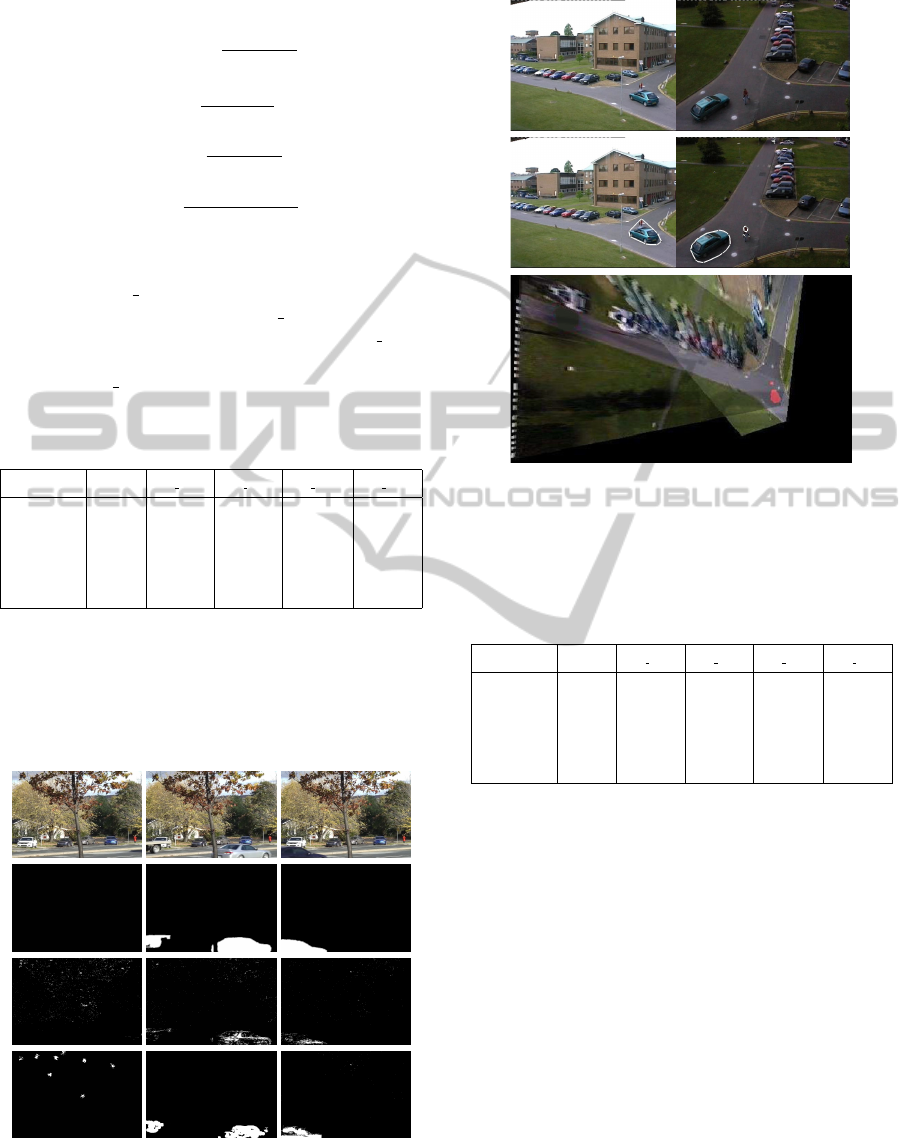

Figure 3: “fall” dataset segmentation results. The first

row shows the original images. The second row shows the

ground truth. The third row shows the detected results by

(Kim et al., 2005), and the last row shows the detected re-

sults by our proposed algorithm.

Figure 4: The first row shows the original 2 single views.

The second rows shows the foreground region detected in

single view. The third row shows the segmentation result

using a multi view informations.

Table 2: Different metrics according to experiments with

“fall” dataset.

Metrics CB CB HSV CB HSL CB YUV CB LAB

FPR 0.31 0.33 0.25 0.38 0.23

PR 0.56 0.60 0.63 0.41 0.67

TPR 0.32 0.39 0.43 0.45 0.45

FM 0.41 0.47 0.51 0.43 0.54

to F-measure values, we can claimed that the accu-

racy of object detection by using static camera is also

improved by using our proposed model. When we

compare our approach to other enhancement of code-

book based algorithm, we show that our method has

the best accuracy of object detection. With “boats”

dataset, the method proposed in (Cheng et al., 2010)

detects more true positive pixels than our proposed

method. But it also emits more false positive alarm

than our approach. The use of superpixel segmenta-

tion reduces the complexity of the modeling process

using codebook model. Secondly, we evaluate the

performance of our multi view detection approach.

The results high lightly that our approach provides

a good moving object location and provide good ac-

curacy in a dynamic occlusion case. It has similar

performance in moving object detection to other ap-

proaches of the state of the art such as (Xu et al.,

2011). However our approach detects less false pos-

FastMovingObjectDetectionfromOverlappingCameras

301

itive than the approach proposed by Xu et al. mainly

due to the fact that our single view detection algo-

rithm emits less false positive alarm than MoG which

is used in (Xu et al., 2011). Our proposed algorithm

is also faster than state of the art multi-view approach.

Indeed, comparing our method to Xu et al. algorithm

which is known as been 40 times faster than the exist-

ing algorithms, we note that our method improves the

execution time by 22%.

4 CONCLUSIONS

In this paper, we have proposed a fast algorithm

for object detection by using overlapping cameras

based on homography. In each camera, we propose

an improvement of codebook based algorithm to get

foreground pixels. The multi-view object detection

that we proposed in this work algorithm is based on

the fusion of multi camera foreground informations.

We approximate the contour of each foreground

region with a polygon and only project the vertices of

the relevant polygons. The experiments have shown

that this method can run in real time and generate

results similar to those warping foreground images.

ACKNOWLEDGEMENTS

This work is partially funded by the Association

AS2V and Fondation Jacques De Rette, France.

We also appreciate the help of Mr L

´

eonide Sinsin

and Mr Patrick A

¨

ınamon for proof-reading this ar-

ticle. Mika

¨

el A. Mousse is grateful to Service de

Coop

´

eration et d’Action Culturelle de Ambassade de

France au B

´

enin.

REFERENCES

Cai, Q. and Aggarwal, J. (1998). Automatic tracking of hu-

man motion in indoor scenes across multiple synchro-

nized video streams. In Proc. of IEEE International

Conference on Computer Vision.

Cheng, X., Zheng, T., and Renfa, L. (2010). A fast

motion detection method based on improved code-

book model. Journal of Computer and Development,

47:2149–2156.

Doshi, A. and Trivedi, M. M. (2006). Hybrid cone-

cylinder codebook model for foreground detection

with shadow and highlight suppression. In Proceed-

ings of the IEEE International Conference on Ad-

vanced Video and Signal Based Surveillance, pages

121–133.

Eshel, R. and Moses, Y. (2008). Homography based multi-

ple camera detection and tracking of people in a dense

crowd. In Proc. of 18th IEEE International Confer-

ence on Computer Vision and Pattern Recognition.

Fang, X., Liu, C., Gong, S., and Ji, Y. (2013). Object de-

tection in dynamic scenes based on codebook with su-

perpixels. In Proceedings of the Asian Conference on

Pattern Recognition, pages 430–434.

Goyette, N., Jodoin, P. M., Porikli, F., Konrad, J., and Ish-

war, P. (2012). Changedetection.net: A new change

detection benchmark dataset. In Proceedings of the

IEEE Computer Society Conference on Computer Vi-

sion and Pattern Recognition Workshops.

Hu, W., Hu, M., Zhou, X., Tan, T., Lou, J., and Maybank, S.

(2006). Principal axis-based correspondence between

multiple cameras for people tracking. In IEEE Trans.

on Pattern Analysis and Machine Intelligence, vol. 29.

Kang, J., Cohen, I., and Medioni, G. (2003). Continuous

tracking within and across camera streams. In Proc.

of International Conference on Pattern Recognition.

Khan, S. and Shah, M. (2003). Consistent labeling of

tracked objects in multiple cameras with overlapping

fields of view. In IEEE Trans. on Pattern Analysis and

Machine Intelligence, vol. 23.

Khan, S. M. and Shah, M. (2006). A multi-view approach to

tracking people in crowded scenes using a planar ho-

mography constraint. In Proc. of 9th European Con-

ference on Computer Vision.

Khan, S. M. and Shah, M. (2009). Tracking multiple oc-

cluding people by localizing on multiple scene planes.

In IEEE Transactions on Pattern Analysis and Ma-

chine Intelligence, vol. 31.

Kim, K., Chalidabhonse, T. H., Harwood, D., and Davis,

L. (2005). Real-time foreground-background segmen-

tation using codebook model. In Elsevier Real-Time

Imaging, 11(3) : 167-256.

Li, Y., Chen, F., Xu, W., and Du, Y. (2006). Gaussian-based

codebook model for video background subtraction. In

Lecture Notes in Computer Science.

Mousse, M. A., Ezin, E. C., and Motamed, C. (2014).

Foreground-background segmentation based on code-

book and edge detector. In Proc. of International Con-

ference on Signal Image Technology & Internet Based

Systems.

Schick, A., Fischer, M., and Stiefelhagen, R. (2012). Mea-

suring and evaluating the compactness of superpix-

els. In Proc. of International Conference on Pattern

Recognition, pages 930–934.

Sutherland, I. E., Sproull, R. F., and Schumacker, R. A.

(1974). A characterization of ten hidden surface al-

gorithms. In ACM Computing Surveys (CSUR).

Xu, M., Orwell, J., Lowey, L., and Thirde, D. (2005). Ar-

chitecture and algorithms for tracking football players

with multiple cameras. In IEE Proc. of Vision, Image

and Signal Processing.

Xu, M., Ren, J., Chen, D., Smith, J., and Wang, G. (2011).

Real-time detection via homography mapping of fore-

ground polygons from multiple. In Proc. of 18th IEEE

International Conference on Image Processing.

ICINCO2015-12thInternationalConferenceonInformaticsinControl,AutomationandRobotics

302

Yang, D. B., Gonzalez-Banos, H. H., and Guibas, L. J.

(2003). Counting people in crowds with a real-time

network of simple image sensors. In Proc. 9th IEEE

International Conference on Computer Vision.

FastMovingObjectDetectionfromOverlappingCameras

303